Abstract

Quantifying emotional aspects of animal behavior (e.g., anxiety, social interactions, reward, and stress responses) is a major focus of neuroscience research. Because manual scoring of emotion-related behaviors is time-consuming and subjective, classical methods rely on easily quantified measures such as lever pressing or time spent in different zones of an apparatus (e.g., open vs. closed arms of an elevated plus maze). Recent advancements have made it easier to extract pose information from videos, and multiple approaches for extracting nuanced information about behavioral states from pose estimation data have been proposed. These include supervised, unsupervised, and self-supervised approaches, employing a variety of different model types. Representations of behavioral states derived from these methods can be correlated with recordings of neural activity to increase the scope of connections that can be drawn between the brain and behavior. In this mini review, we will discuss how deep learning techniques can be used in behavioral experiments and how different model architectures and training paradigms influence the type of representation that can be obtained.

Keywords: deep learning, emotion, supervised learning, unsupervised learning, self-supervised learning, neural recording, pose estimation

Introduction

Animal behavioral studies have been used to investigate neurobiological aspects of human emotions. In this mini review, we define “emotion” as patterns of behavioral, hormonal, and autonomic responses (Dolensek et al., 2020) that explain behaviors more complex than reflexes but less complex than volitional behavior (Adolphs, 2017). The use of animal models in combination with advanced recording techniques has furthered our understanding of the biological basis of emotional behavior (Kirlic et al., 2017; Xia and Kheirbek, 2020), but precise identification of the specific neural substrates and mechanisms for emotions and emotional behavior has proved elusive (Celeghin et al., 2017).

Various brain regions have been implicated in emotional behavior, including the anterior cingulate cortex (ACC; Johnson et al., 2022), insular cortex (Dolensek et al., 2020), and subcortical deep brain structures such as the amygdala (Joëls et al., 2018; Wu et al., 2021), nucleus accumbens (NAc; Aragona and Wang, 2009), and periaqueductal gray (Buhle et al., 2013; Reis et al., 2021). Because animal models rely solely on observations of behavior to quantify emotional states, accurate and nuanced behavior quantification is required to understand the neural basis of emotional states (Xia and Kheirbek, 2020).

One approach to this problem is manual annotation of animal behavior videos by human observers, labeling behaviors of interest on a frame-by-frame basis. This process is laborious, inefficient for processing large amounts of data, error-prone, and subjective (Arac et al., 2019; van Dam et al., 2020). The other classical approach is measuring only aspects of behavior that can easily be measured by machines, e.g., by counting lever presses in an operant chamber or using simple tracking algorithms to detect the subject’s presence in defined regions of interest (ROIs). Advancements in computer vision and deep learning have opened the door to improvement in this area. Deep learning models, inspired by the way the mammalian brain processes information, are typically trained by an iterative optimization process with large quantities of data in a fast and quasi-deterministic way (Xia et al., 2018; Richards et al., 2019; Høye et al., 2021; Contreras et al., 2022). Such models, which aim to automate the process of extracting representations from data (Arel et al., 2010; Najafabadi et al., 2015), have been applied to problems in medical imaging, natural language processing, and image recognition (Najafabadi et al., 2015; Zhao et al., 2021). Deep learning promises to allow for more nuanced analysis of emotion-related behavior than classical methods. In this mini review, we will discuss ways of measuring rodent behaviors that are relevant to anxiety, social interaction, reward, and stress responses. We will describe how deep learning can be applied to studying emotional behavior in rodent models and how it continues to advance our understanding of neural activity and emotional behavior.

Paradigms for Measuring Emotional Behaviors

Researchers have studied anxiety-like behavior in rodents by using approach-avoidance conflicts. In these experiments, rewarding cues (e.g., food, drug, social interaction) elicit approach and reward-seeking behaviors (e.g., lever pressing) while aversive cues (e.g., foot shock, predatory threat) elicit avoidance and fear behaviors (e.g., freezing; Kirlic et al., 2017; Greiner et al., 2019). To assess anxiety-like behavior, researchers can present these stimuli and measure the degree to which an animal approaches or avoids them (Kirlic et al., 2017; Greiner et al., 2019). Paradigms used to measure different aspects of anxiety also include the elevated plus maze (EPM), elevated zero maze (EZM), open field test, social interaction test, hyponeophagia test, conditioned fear test, shock-probe test, Vogel conflict test, Geller-Seifter test, and the light-dark box assay (Sousa et al., 2006; Kirlic et al., 2017; Lezak et al., 2017). In conflict tests (e.g., Vogel and Geller-Seifter tests), animals seek reward (water/food) while a conflict is created by delivering punishment (shock) at a fixed ratio (e.g., every nth reward). Although deliberate avoidance is not assessed, the total number of shocks delivered is used as a measurement of anxiety-like behavior (Kirlic et al., 2017). Other tests measure stress responses in animals (novelty-induced suppression of feeding test, forced swim test; Sousa et al., 2006), depression-like behavior (e.g., tail suspension test; Xia and Kheirbek, 2020), or facial expression changes in reaction to different emotionally-salient stimuli (Dolensek et al., 2020).

Other classical methods focus on social behavior. Examples include the social interaction test, social preference-avoidance test, social approach-avoidance test, three-chambered social approach test, modified Y-maze, and tests that observe maternal behavior (Sousa et al., 2006; Kirlic et al., 2017). In the social interaction test, social and exploratory behaviors of two rodents are monitored in a familiar or unfamiliar context with different lighting conditions. The amount of time rodents spend interacting under the four test conditions is used as a measure of anxiety-like behavior. Other commonly quantified emotional behaviors include freezing, defecating, vocalizations, and self-grooming (File and Seth, 2003; Sousa et al., 2006). Conditioned place preference paradigms have been used to investigate social vs. drug reward in rodents, where the time spent in social or drug chambers is recorded (Thiel et al., 2008; Kummer et al., 2014). An operant social self-administration protocol described by Venniro and Shaham (2020) demonstrated volitional operant choice of social over drug reward, where the total number of lever presses for each reward was used as a measure of operant social choice.

Measurements such as rate, time, and number of task-related behaviors performed are convenient ways to quantify observable emotional behavior. However, many current assays rely on rudimentary measurements and generalized assumptions that reduce translational value. For example, we assume that animals in the EPM that spend less time in the open arms are more anxious than others that spend more time (Lezak et al., 2017). Measures like lever press counts and time spent in certain zones are not fully representative of complex internal emotional states. Animal movement and posture captured on video can convey much more information about behavior and internal state, but accessing that information in a systematic way is a major challenge in image processing and machine learning. Recent advances in pose estimation and emerging methods for behavioral analysis hold the potential to vastly increase the richness and variety of behavioral variables available for analysis.

Tracking and Analyzing Pose Estimation Data

The modern deep learning revolution began in 2012 when a convolutional neural network (CNN) reached human-level accuracy in visual recognition (Serre, 2019; Mathis and Mathis, 2020). Since then, many techniques have been developed to apply deep learning algorithms to animal behavior analysis, including pose estimation algorithms [e.g., DeepLabCut (DLC), SLEAP; Mathis et al., 2018; Pereira et al., 2022]. Nath et al. (2019) describe the DLC protocol, in which users manually label body parts in a subset of frames and use those labels to train a pose estimation model. The trained model is used to extract information about the subject’s pose, or geometric configuration of body parts (Mathis et al., 2018), from a large corpus of behavioral video data. DLC has since been extended to accommodate more experimental setups, including top-down, bottom-up, and multi-animal 3D tracking (Lauer et al., 2022).

The output of pose estimation workflows (such as DLC) is frame-by-frame information about the location of the labeled body parts (if detected) within the video frame. For research focused primarily on tracking kinematics or an animal’s location (e.g., presence within some ROI), this may be sufficient for the desired analyses. For example, DLC has been used in EPM, EZM, and open field tests to measure time spent in different areas, distance traveled, location, and velocity (Cui et al., 2021; Lu et al., 2021; Sun et al., 2021; Johnson et al., 2022; Sánchez-Bellot et al., 2022). However, pose estimation also offers the possibility of extracting more general information about an animal’s actions and behavioral state from moment to moment. This area of study has attracted significant attention in recent years. A number of methods have been proposed for extracting this type of information from video data, a comparison of which is the main focus of this review.
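
As a rough illustration of the kinematic measures mentioned above (time in a zone, distance traveled, velocity), the following minimal sketch summarizes the track of a single body part. It assumes the coordinates have already been read into plain Python lists, with `None` marking frames where the body part was not detected; the function name `summarize_track`, the rectangular ROI format, and the pixel units are illustrative assumptions, not part of DLC or any other tool.

```python
import math

def summarize_track(xs, ys, fps, roi):
    """Summarize one body part's track (hypothetical helper, not a DLC function).

    xs, ys: per-frame coordinates in pixels (None where detection failed).
    fps: video frame rate in frames per second.
    roi: (x_min, y_min, x_max, y_max) rectangle defining a zone of interest.
    """
    x_min, y_min, x_max, y_max = roi
    frames_in_roi = 0
    distance = 0.0
    prev = None
    for x, y in zip(xs, ys):
        if x is None or y is None:
            prev = None  # do not bridge gaps left by missed detections
            continue
        if x_min <= x <= x_max and y_min <= y <= y_max:
            frames_in_roi += 1
        if prev is not None:
            distance += math.hypot(x - prev[0], y - prev[1])
        prev = (x, y)
    duration_s = len(xs) / fps  # total recording time, including missed frames
    return {
        "time_in_roi_s": frames_in_roi / fps,
        "distance_px": distance,
        "mean_speed_px_per_s": distance / duration_s if duration_s else 0.0,
    }

# Toy track: five frames at an assumed 5 frames per second, one missed detection.
summary = summarize_track([0, 3, 3, None, 10], [0, 4, 4, None, 10],
                          fps=5, roi=(0, 0, 5, 5))
```

Converting pixels to physical units and choosing how to treat missed detections are experiment-specific decisions that this sketch leaves open.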

Machine Learning Approaches for Emotional Behavioral Analysis

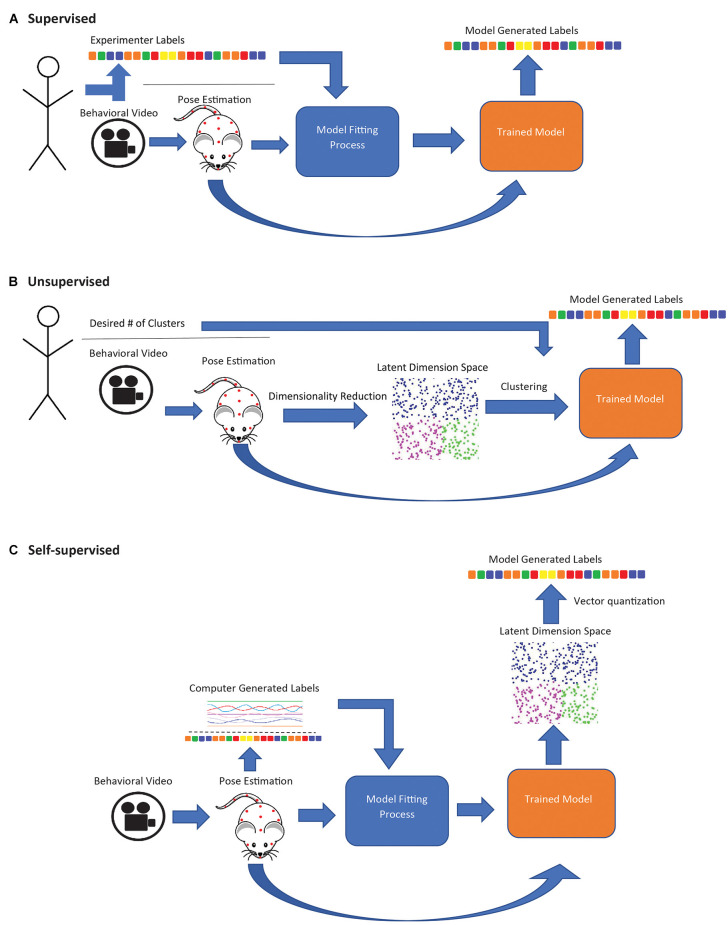

A variety of frameworks for deriving behavioral motifs and classifying behavioral states using pose estimation or related methods have been proposed (Table 1). Supervised approaches use pose data to identify experimenter-specified behaviors. Unsupervised approaches seek to identify naturally occurring behavior categories by clustering. Self-supervised approaches involve training a model to predict some variable derived from the data itself (rather than directly set by the experimenter) and the trained model is used as a feature extractor for subsequent clustering or other analyses. These three learning types affect the type of behaviors that may be identified.

Table 1.

Behavior classification algorithms.

| | Supervised | Self-Supervised | Unsupervised |
|---|---|---|---|
| Uses tracking data | SimBA, JAABA, DLC Analyzer | DBM, VAME, Selfee | B-SOiD, PyRAT |
| Built-in pose estimation | MARS | - | AlphaTracker |
| Does not use tracking data | - | BehaveNet | MoSeq, MotionMapper |

Supervised approaches employ a classification model trained on human-annotated data, which is then applied to new data (Figure 1A). SimBA (Nilsson et al., 2020) uses features extracted from DLC or SLEAP pose data as input to a random forest classifier to label mouse behaviors, such as sniffing (social behavior; Dawson et al., 2022), freezing (fear conditioning; Hon et al., 2022), and pup retrieval (Winters et al., 2022). DLCAnalyzer (Sturman et al., 2020) also includes capabilities for supervised behavior classification and has been used to track head dips in the EPM (Grimm et al., 2021) and head angle in the open-field test (von Ziegler et al., 2022). MARS combines pose estimation and behavior classification capabilities and is suitable for multi-animal social behavior analysis (e.g., attack, mounting; Segalin et al., 2021). These approaches are most suitable when researchers are interested in specific behaviors known a priori, as opposed to unsupervised or self-supervised approaches which offer no guarantee that any specific behavior will be identified as a distinct category. However, human annotations can be biased, subjective, and may not capture subtle differences in how a behavior is performed (Pereira et al., 2020). The utility of supervised approaches is limited in situations where researchers wish to uncover naturally occurring behavioral motifs. Additionally, the above frameworks all attempt to classify behavior within a narrow temporal window (e.g., features extracted in the SimBA workflow are calculated over a rolling 500 ms window; Nilsson et al., 2020), limiting their ability to identify highly contextual behavioral motifs or larger-scale patterns of behavior.
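
The general supervised workflow (windowed features computed from pose data, a classifier fit to human annotations, then prediction on new data) can be sketched as follows. This is a deliberately simplified stand-in: a one-feature nearest-centroid classifier replaces the random forest that SimBA uses, the 30 fps frame rate is assumed, and the speed values and labels are invented toy data.

```python
from statistics import mean

def rolling_mean(values, window):
    """Trailing rolling mean; early frames use the samples available so far."""
    out = []
    for i in range(len(values)):
        start = max(0, i - window + 1)
        out.append(mean(values[start:i + 1]))
    return out

def fit_centroids(features, labels):
    """Average feature value per annotated behavior (1-D nearest-centroid model)."""
    grouped = {}
    for f, lab in zip(features, labels):
        grouped.setdefault(lab, []).append(f)
    return {lab: mean(vals) for lab, vals in grouped.items()}

def predict(features, centroids):
    """Assign each frame the label whose centroid is closest to its feature."""
    return [min(centroids, key=lambda lab: abs(f - centroids[lab]))
            for f in features]

fps = 30                 # assumed frame rate
window = int(0.5 * fps)  # a 500 ms window is 15 frames at 30 fps

# Hypothetical per-frame speeds (px/frame) with human annotations.
train_speed = [0.1] * 30 + [5.0] * 30
train_labels = ["freezing"] * 30 + ["locomotion"] * 30
model = fit_centroids(rolling_mean(train_speed, window), train_labels)

# Apply the fitted model to new, unlabeled data.
new_speed = [0.2] * 20 + [4.5] * 20
predicted = predict(rolling_mean(new_speed, window), model)
```

Because each feature summarizes only the preceding half second, the predicted label lags slightly behind the true transition in this toy example, and nothing outside the window can influence the classification.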

Figure 1.

Schematic diagram of supervised, unsupervised, and self-supervised approaches for animal behavior classification. (A) In supervised approaches, a model is trained to generate behavioral classifiers that replicate human annotations. (B) Unsupervised approaches are entirely data driven; pose estimation data is compressed to the latent state representation and clustered to maximize similarities in data points. Experimenters may sometimes specify the number of desired clusters. (C) In self-supervised approaches, a model is trained to generate labels derived from the data. The trained model is used to map subject behavior to a latent space, and the space is then discretized (usually with K-means).

Unsupervised learning models, in contrast to supervised models, are entirely data driven and require minimal experimenter input (Figure 1B). These models generate behavioral clusters based on similarities and differences between data points, e.g., video frames (Goodwin et al., 2022). Unsupervised methods generally involve two steps: a dimensionality reduction step, in which a large set of input features is compressed into a low-dimensional representation, and a clustering step, in which data points (i.e., frames in a video sequence) are grouped to maximize the similarity of points within a cluster. In some cases, the clustering step requires the experimenter to specify the number of clusters. B-SOiD (Hsu and Yttri, 2021) and PyRAT (De Almeida et al., 2022) apply nonlinear dimensionality reduction, which can capture nonlinear relationships in the input data (Portnova-Fahreeva et al., 2020), while AlphaTracker (Chen et al., 2020) uses linear dimensionality reduction (principal component analysis, PCA). All three methods then group video frames into behavioral categories through hierarchical clustering. MoSeq (Wiltschko et al., 2015) takes a different approach, applying linear dimensionality reduction directly to video frames (without pose estimation) and segmenting the resulting continuous representation of behavior using an autoregressive hidden Markov model (ARHMM; Bryan and Levinson, 2015). The ARHMM is a variant of the hidden Markov model adapted to modeling continuous-valued time series as the product of an underlying sequence of discrete states, which makes MoSeq potentially better suited than simple clustering-based approaches to identifying temporal patterns over longer timescales.
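
To make the linear dimensionality reduction step concrete, the sketch below finds the first principal component of a small feature matrix by power iteration and projects each frame onto it. It is a minimal illustration only: the function name and the three toy features are assumptions, it needs at least two rows, and real pipelines would normally rely on an established numerical library and keep several components.

```python
import math

def first_principal_component(rows, iterations=100):
    """Leading PCA direction of the rows and each row's score along it."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    # Sample covariance matrix (d x d) of the mean-centered features.
    cov = [[sum(c[a] * c[b] for c in centered) / (n - 1) for b in range(d)]
           for a in range(d)]
    # Power iteration; assumes the start vector is not orthogonal to the answer.
    v = [1.0] * d
    for _ in range(iterations):
        w = [sum(cov[a][b] * v[b] for b in range(d)) for a in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0:
            break  # no variance in the data
        v = [x / norm for x in w]
    scores = [sum(c[j] * v[j] for j in range(d)) for c in centered]
    return v, scores

# Toy per-frame features: the second is twice the first, the third is constant,
# so a single component captures all of the variance.
frames = [[float(t), 2.0 * t, 1.0] for t in range(5)]
component, scores = first_principal_component(frames)
```

The one-dimensional `scores` are the compressed representation that a subsequent clustering step would operate on.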

Using unsupervised methods is advantageous when the experimenter wishes to label behaviors without choosing specific behaviors, or to uncover variations in how behaviors are performed. Approaches that use hierarchical (PyRAT, AlphaTracker) or density-based clustering methods (B-SOiD) do not even require that the user specify the number of behavioral clusters. This property is a double-edged sword, however, as hierarchical and density clustering algorithms do not attempt to ensure that all points included within a cluster are similar to each other, only that there is no clear dividing line. Thus, they are suited to detecting behaviors that occur in sustained bouts with relatively brief transitions between behaviors, but may group very different behaviors together when transitions between behaviors occur very frequently.
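
The trade-off described above can be illustrated with a toy example. The sketch uses single-linkage grouping with a fixed distance cut purely as an illustration of the general property; the cited tools may use different linkage or density criteria, and the one-dimensional "feature values" are invented.

```python
def single_linkage_clusters(points, threshold):
    """Group 1-D points: two points share a cluster whenever a chain of
    neighbors closer than `threshold` connects them."""
    parent = list(range(len(points)))  # each point starts in its own cluster

    def find(i):
        # Follow parent links to the cluster root, shortening the path as we go.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i in range(len(points)):
        for j in range(i + 1, len(points)):
            if abs(points[i] - points[j]) < threshold:
                parent[find(i)] = find(j)  # merge the two clusters
    return [find(i) for i in range(len(points))]

# Sustained bouts: two tight groups separated by a clear gap.
bouts = [0.0, 0.1, 0.2, 5.0, 5.1, 5.2]
bout_labels = single_linkage_clusters(bouts, threshold=0.5)

# Frequent transitions: intermediate frames bridge the same two extremes.
chain = [0.4 * k for k in range(14)]  # spans 0.0 to about 5.2
chain_labels = single_linkage_clusters(chain, threshold=0.5)
```

With the well-separated bouts, two clusters are recovered without specifying their number in advance. With the bridged data, every point lands in one cluster even though its endpoints are as far apart as the two bouts were.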

Self-supervised methods (Figure 1C) combine aspects of both supervised and unsupervised learning. Like supervised methods, they rely on classification or regression models (usually a deep neural network), but the model is trained to produce outputs derived from the data instead of manual annotations (in the simplest case, the autoencoder, the output is the same as the input). After training, the final classification/regression output layers of the model are discarded, and the model is used as a feature extractor (Misra and van der Maaten, 2019). Applied this way, the model performs nonlinear dimensionality reduction, mapping video frames to points in a feature space, with the choice of method for deriving the training outputs strongly influencing which aspects of the pose/video data are represented in that space. This “mapping” step may be followed by a discretization step, in which the transformed points (i.e., frames) are grouped into categories (typically using a vector quantization algorithm such as K-means). These categories are usually assigned descriptive labels by visually inspecting video clips to determine what behavior(s) each category is associated with.
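
The discretization step can be sketched with a plain K-means (Lloyd's algorithm) implementation. The two-dimensional points below stand in for the output of a trained feature extractor and are invented; the initial centers are supplied by the caller for reproducibility, whereas practical implementations typically choose them randomly or with a seeding heuristic.

```python
import math

def kmeans(points, centers, iterations=20):
    """Lloyd's algorithm on 2-D points with caller-supplied initial centers."""
    centers = [tuple(c) for c in centers]
    assignment = []
    for _ in range(iterations):
        # Assignment step: each point goes to its nearest center.
        assignment = [
            min(range(len(centers)), key=lambda k: math.dist(p, centers[k]))
            for p in points
        ]
        # Update step: each center moves to the mean of its members.
        new_centers = []
        for k in range(len(centers)):
            members = [p for p, a in zip(points, assignment) if a == k]
            if members:
                new_centers.append(
                    tuple(sum(c) / len(members) for c in zip(*members)))
            else:
                new_centers.append(centers[k])  # keep an empty cluster's center
        if new_centers == centers:
            break  # converged
        centers = new_centers
    return assignment, centers

# Hypothetical embedded frames forming two groups in feature space.
embedded = [(0.0, 0.1), (0.2, 0.0), (0.1, 0.2),
            (5.0, 5.1), (5.2, 4.9), (4.9, 5.0)]
categories, centers = kmeans(embedded, centers=[embedded[0], embedded[3]])
```

Each resulting category index would then be given a descriptive label by inspecting the video clips assigned to it.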

Deep behavior mapping (DBM; Zhang et al., 2022) is a self-supervised framework in which training labels are derived by assigning different labels to video frames according to which ROI the subject is in, in combination with time windows around experimental events (e.g., lever press). DBM uses pose data from DLC as input, employs a long short-term memory (LSTM; Hochreiter and Schmidhuber, 1997) classification network, and discretizes the extracted feature space using K-means. It has been used to capture behavioral microstates in a mouse operant task, including distinct phases of a well-learned behavior sequence (e.g., lever approach, lever interaction, shifting from lever port to food port, food port search, food consumption) and various non-task behaviors (grooming, rearing, visiting water sipper; Zhang et al., 2022). VAME (Luxem et al., 2022) uses a similar model architecture (based on gated recurrent units (Cho et al., 2014), a variant of LSTM), but trains the model using only the pose data itself. The model is trained to reproduce the input pose sequence (i.e., as an autoencoder) and to predict the pose data a short distance into the future. This approach has the benefit of not requiring the experimenter to specify rules for deriving output labels for training, at the cost of potentially making the process more sensitive to noise and occlusions (Hausmann et al., 2021; Luxem et al., 2022). Selfee (Jia et al., 2022) and BehaveNet (Batty et al., 2019), unlike VAME and DBM, operate directly on snippets of video rather than pose data from DLC/SLEAP, employing autoencoder-style training directly on video data for nonlinear dimensionality reduction. Selfee operates on short 3-frame snippets of raw video, has been used in mice to identify behaviors such as social nose contact and allogrooming in open field tests, and places its main emphasis on using the extracted feature space for a variety of downstream analyses (Jia et al., 2022). BehaveNet, unlike the other self-supervised methods, performs feature extraction on individual frames rather than sequences of frames, and does not consider the temporal structure of the data until the discretization step, which uses an autoregressive hidden Markov model (Batty et al., 2019).
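
The idea of deriving training labels from ROI occupancy combined with time windows around experimental events can be sketched as follows. This is a hypothetical labeling rule written for illustration; it does not reproduce the rule set actually used by DBM, and the ROI names, label format, and window size are assumptions.

```python
def derive_labels(rois, event_frames, window):
    """Label each frame by its ROI plus whether it lies near an event.

    rois: per-frame ROI name (e.g., derived from pose coordinates).
    event_frames: frame indices of experimental events (e.g., lever presses).
    window: half-width, in frames, of the time window around each event.
    """
    near_event = [False] * len(rois)
    for e in event_frames:
        for i in range(max(0, e - window), min(len(rois), e + window + 1)):
            near_event[i] = True
    return [f"{roi}|event" if near else f"{roi}|baseline"
            for roi, near in zip(rois, near_event)]

# Toy session: four frames at the lever, four at the food port,
# with one lever press recorded at frame 2.
rois = ["lever"] * 4 + ["food_port"] * 4
labels = derive_labels(rois, event_frames=[2], window=1)
```

Labels of this kind require no manual frame-by-frame annotation, yet they still steer the learned feature space toward task-relevant structure, which is the design trade-off contrasted with purely reconstruction-based training.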

Data with occluded views of experimental subjects present an additional challenge for pose estimation-based methods (Mathis and Mathis, 2020); for example, recording animals top-down can hide the feet of an animal, while top-down recordings of animals with headcaps or microendoscopes often suffer intermittent occlusion of parts of the head. Recording multiple animals interacting can also occlude body parts, but advances in 3D animal tracking (Marshall et al., 2022) can help minimize the effect of occlusions on tracking of animal identity. Another challenge with recording multiple animals is that pose-estimating algorithms like DLC may not be able to differentiate between similar looking animals (Lauer et al., 2022). AlphaTracker (Chen et al., 2020) does both tracking and behavior classification and could reliably track four identical-looking mice. The quality of the available data is likely to be a major determining factor in which types of methods are applicable to a problem, with unsupervised (e.g., B-SOiD, PyRAT) or autoencoder-based methods (VAME, BehaveNet, Selfee) best suited to handling relatively noise-free data, and classifier-based methods (e.g., SimBA, DBM) better suited to handling data with frequent occlusions and lower video quality.

Correlating Neural Activity with Emotional Behavior

Advances in imaging technology such as 1-photon miniscope calcium imaging allow for in vivo imaging of neuronal activity in freely behaving animals. Using techniques that record from freely moving animals allows for studying an animal’s neuronal activity in more complex behavioral paradigms (Laing et al., 2021). Recent miniscope imaging studies in freely moving animals correlate calcium activity with complex behaviors such as pain behavior (Liu et al., 2022), defensive behavior (Kennedy et al., 2020; Ponserre et al., 2022), and reward behavior (Siemian et al., 2021).

Many correlations between neural activity and animal behavior are based on measurements such as timestamps. Integrating neural imaging and machine/deep learning techniques in rodents allows for more nuanced relationships to be identified. Studies have utilized machine/deep learning techniques for head-fixed in vivo 2-photon calcium imaging in mice (Dolensek et al., 2020; Lu et al., 2021; Yue et al., 2021). However, many aspects of emotional behavior are best measured when rodents are freely behaving in the testing area (e.g., grooming, nesting, or approach/avoidance). Recent studies have utilized miniscope calcium imaging to allow recording of neural activity in freely moving animals, which can then be combined with advanced techniques for behavior analysis (Zhang et al., 2022). DLC-derived data in a rat exposure assay was used for automated classification of defensive behaviors and correlated with dorsal periaqueductal gray activity (Reis et al., 2021). DLC-derived data from mice in the EZM and open field tests was used to extract kinematics and identify correlations between the activity of interneurons in the ACC and anxiety and social behavior (Johnson et al., 2022). In a study on prosocial affiliative touch, a deep CNN and an LSTM-based RNN identified that affiliative allogrooming was controlled by the medial amygdala (Wu et al., 2021).
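
Once behavior has been labeled frame by frame, relating it to neural activity can start from very simple statistics. The sketch below computes the mean activity of one neuron in each behavioral state and the Pearson correlation between its activity and a binary indicator for one state. It assumes the neural trace has already been resampled to the video frame rate so the two lists align; the trace values and state names are invented.

```python
import math

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def mean_activity_by_state(activity, states):
    """Average activity of one neuron within each behavioral state."""
    totals = {}
    for a, s in zip(activity, states):
        total, count = totals.get(s, (0.0, 0))
        totals[s] = (total + a, count + 1)
    return {s: total / count for s, (total, count) in totals.items()}

# Hypothetical frame-aligned calcium signal and behavior labels.
states = ["rest", "rest", "groom", "groom", "rest", "groom"]
activity = [0.1, 0.2, 0.9, 1.1, 0.0, 1.0]

by_state = mean_activity_by_state(activity, states)
groom_indicator = [1.0 if s == "groom" else 0.0 for s in states]
r = pearson(activity, groom_indicator)
```

Real analyses would additionally need to account for indicator kinetics, autocorrelation in both signals, and multiple comparisons across neurons and states.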

In vivo electrophysiology can also be combined with video recording in freely moving animals. For example, the firing rate of NAc and dorsolateral striatum neurons changed with operant vertical head movement as drug intake increased (Coffey et al., 2015). Pose estimation and deep learning-based behavioral analysis can also be used with optogenetic manipulation to understand functions of neuron ensembles in behavior (Grieco et al., 2021). For example, a study of optogenetic stimulation of primary motor cortex in marmosets used DLC tracking of hand positions to identify neurons involved in forelimb movement (Ebina et al., 2019). Integrating deep learning analysis and neural recording in behavioral studies allows neuronal activity to be correlated with behavior in greater detail.

Discussion

The advent of machine learning and deep learning enables the simultaneous specialization and standardization of behavior classification processes across neuroscience research. Previous studies have utilized various deep learning techniques to look at behaviors that correlate with pain states (Bohic et al., 2021), social behavior in stressed mice (Rodriguez et al., 2020; Lee et al., 2022), depressive behavior in stressed mice (Rivet-Noor et al., 2022), and reward behavior in mouse models of food seeking (Zhang et al., 2022) or drug and alcohol abuse (Campos-Ordoñez et al., 2022; Neira et al., 2022). This allows behavioral neuroscientists to explore behavioral nuances in a more generalizable way (Goodwin et al., 2022). As deep learning algorithms continue to evolve, we predict more integration of deep learning and neural recording techniques to elucidate the neural mechanisms of behavioral motifs implicated in emotion.

Deep learning techniques can help parse connections between behavior and the brain in complicated situations. For example, various cell types, circuits, systems, and projections in the brain have roles in multiple emotional behaviors, evidenced by chronic pain and depression comorbidity (Taylor et al., 2015) or addiction and anxiety comorbidity (Greiner et al., 2019). There are many complex interactions and overlaps between neural activity that underlie emotional behaviors, and much remains to be discovered. Another example is that the mu-opioid system has been implicated not only in pain and reward, but also in modulation of social-emotional behaviors (Meier et al., 2021). Additionally, salience and affect play important roles in pain, as affective systems, motivational systems, and pain processing interact (Taylor et al., 2015). Combining neural recording techniques with behavior analyses that allow fine-grained differentiation of behavioral states and motifs can help resolve such paradoxes by providing a broader range of hypotheses to explain correlations between neural activity and behavior. This allows researchers to refine our understanding of the precise role each neural circuit plays in the larger interaction of internal state, external stimulus, and behavior. In this way, deep learning techniques advance our understanding of the neuronal mechanisms of emotional behavior in animal models, and these methods have strong translational potential for treating mood disorders, addiction, pain, and other emotional disorders in humans.

Author Contributions

JK wrote the first draft of the manuscript. AD developed DBM. All authors contributed to the article and approved the submitted version.

Funding

This research was supported by NIH NIDA IRP. JK is supported by the NIDA IRP Scientific Director’s Fellowship for Diversity in Research. NB and YZ are supported by the Post-doctoral Fellowship from the Center on Compulsive Behaviors, National Institutes of Health. YL is supported by National Institutes of Health grants 3P20GM121310-05S1, R61NS115161, and 4UH3NS115608-02.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

- Adolphs R. (2017). How should neuroscience study emotions? By distinguishing emotion states, concepts and experiences. Soc. Cogn. Affect. Neurosci. 12, 24–31. 10.1093/scan/nsw153 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Arac A., Zhao P., Dobkin B. H., Carmichael S. T., Golshani P. (2019). DeepBehavior: a deep learning toolbox for automated analysis of animal and human behavior imaging data. Front. Syst. Neurosci. 13:20. 10.3389/fnsys.2019.00020 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Aragona B., Wang Z. (2009). Dopamine regulation of social choice in a monogamous rodent species. Front. Behav. Neurosci. 3:15. 10.3389/neuro.08.015.2009 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Arel I., Rose D. C., Karnowski T. P. (2010). Deep machine learning - a new frontier in artificial intelligence research [Research Frontier]. IEEE Comput. Int. Mag. 5, 13–18. 10.1109/MCI.2010.938364 [DOI] [Google Scholar]

- Batty E., Whiteway M., Saxena S., Biderman D., Abe T., Musall S., et al. (2019). “BehaveNet: nonlinear embedding and Bayesian neural decoding of behavioral videos,” in Advances in Neural Information Processing Systems, eds Hanna M. Wallach, Hugo Larochelle, Alina Beygelzimer, Florence d’Alché-Buc, and Emily B. Fox (Red Hook, NY, USA: Curran Associates, Inc.), 15706–15717. [Google Scholar]

- Bohic M., Pattison L. A., Jhumka Z. A., Rossi H., Thackray J. K., Ricci M., et al. (2021). Mapping the signatures of inflammatory pain and its relief. bioRxiv [Preprint]. 10.1101/2021.06.16.448689 [DOI] [Google Scholar]

- Bryan J. D., Levinson S. E. (2015). Autoregressive hidden Markov model and the speech signal. Procedia Comput. Sci. 61, 328–333. 10.1016/j.procs.2015.09.151 [DOI] [Google Scholar]

- Buhle J. T., Kober H., Ochsner K. N., Mende-Siedlecki P., Weber J., Hughes B. L., et al. (2013). Common representation of pain and negative emotion in the midbrain periaqueductal gray. Soc. Cogn. Affect. Neurosci. 8, 609–616. 10.1093/scan/nss038 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Campos-Ordoñez T., Alcalá E., Ibarra-Castañeda N., Buriticá J., González-Pérez Ó. (2022). Chronic exposure to cyclohexane induces stereotypic circling, hyperlocomotion and anxiety-like behavior associated with atypical c-Fos expression in motor- and anxiety-related brain regions. Behav. Brain Res. 418:113664. 10.1016/j.bbr.2021.113664 [DOI] [PubMed] [Google Scholar]

- Celeghin A., Diano M., Bagnis A., Viola M., Tamietto M. (2017). Basic emotions in human neuroscience: neuroimaging and beyond. Front. Psychol. 8:1432. 10.3389/fpsyg.2017.01432 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chen Z., Zhang R., Zhang Y. E., Zhou H., Fang H.-S., Rock R. R., et al. (2020). AlphaTracker: a multi-animal tracking and behavioral analysis tool. bioRxiv [Preprint]. 10.1101/2020.12.04.405159 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cho K., Van Merriënboer B., Bahdanau D., Bengio Y. (2014). On the properties of neural machine translation: encoder-decoder approaches. arXiv [Preprint]. 418. 10.48550/arXiv.1409.1259 [DOI] [Google Scholar]

- Coffey K. R., Barker D. J., Gayliard N., Kulik J. M., Pawlak A. P., Stamos J. P., et al. (2015). Electrophysiological evidence of alterations to the nucleus accumbens and dorsolateral striatum during chronic cocaine self-administration. Eur. J. Neurosci. 41, 1538–1552. 10.1111/ejn.12904 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Contreras E. B., Sutherland R. J., Mohajerani M. H., Whishaw I. Q. (2022). Challenges of a small world analysis for the continuous monitoring of behavior in mice. Neurosci. Biobehav. Rev. 136:104621. 10.1016/j.neubiorev.2022.104621 [DOI] [PubMed] [Google Scholar]

- Cui Q., Pamukcu A., Cherian S., Chang I. Y. M., Berceau B. L., Xenias H. S., et al. (2021). Dissociable roles of pallidal neuron subtypes in regulating motor patterns. J. Neurosci. 41, 4036–4059. 10.1523/JNEUROSCI.2210-20.2021 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dawson M., Terstege D. J., Jamani N., Pavlov D., Tsutsui M., Bugescu R., et al. (2022). Sex-dependent role of hypocretin/orexin neurons in social behavior. bioRxiv [Preprint]. 10.1101/2022.08.19.504565 [DOI] [Google Scholar]

- De Almeida T. F., Spinelli B. G., Hypolito Lima R., Gonzalez M. C., Rodrigues A. C. (2022). PyRAT: an open-source python library for animal behavior analysis. Front. Neurosci. 16:779106. 10.3389/fnins.2022.779106 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dolensek N., Gehrlach D. A., Klein A. S., Gogolla N. (2020). Facial expressions of emotion states and their neuronal correlates in mice. Science 368, 89–94. 10.1126/science.aaz9468 [DOI] [PubMed] [Google Scholar]

- Ebina T., Obara K., Watakabe A., Masamizu Y., Terada S.-I., Matoba R., et al. (2019). Arm movements induced by noninvasive optogenetic stimulation of the motor cortex in the common marmoset. Proc. Natl. Acad. Sci. U S A 116, 22844–22850. 10.1073/pnas.1903445116 [DOI] [PMC free article] [PubMed] [Google Scholar]

- File S. E., Seth P. (2003). A review of 25 years of the social interaction test. Eur. J. Pharmacol. 463, 35–53. 10.1016/s0014-2999(03)01273-1

- Goodwin N. L., Nilsson S. R. O., Choong J. J., Golden S. A. (2022). Toward the explainability, transparency and universality of machine learning for behavioral classification in neuroscience. Curr. Opin. Neurobiol. 73:102544. 10.1016/j.conb.2022.102544

- Greiner E. M., Müller I., Norris M. R., Ng K. H., Sangha S. (2019). Sex differences in fear regulation and reward-seeking behaviors in a fear-safety-reward discrimination task. Behav. Brain Res. 368:111903. 10.1016/j.bbr.2019.111903

- Grieco F., Bernstein B. J., Biemans B., Bikovski L., Burnett C. J., Cushman J. D., et al. (2021). Measuring behavior in the home cage: study design, applications, challenges, and perspectives. Front. Behav. Neurosci. 15:735387. 10.3389/fnbeh.2021.735387

- Grimm C., Frässle S., Steger C., von Ziegler L., Sturman O., Shemesh N., et al. (2021). Optogenetic activation of striatal D1R and D2R cells differentially engages downstream connected areas beyond the basal ganglia. Cell Rep. 37:110161. 10.1016/j.celrep.2021.110161

- Hausmann S. B., Vargas A. M., Mathis A., Mathis M. W. (2021). Measuring and modeling the motor system with machine learning. Curr. Opin. Neurobiol. 70, 11–23. 10.1016/j.conb.2021.04.004

- Hochreiter S., Schmidhuber J. (1997). Long short-term memory. Neural Comput. 9, 1735–1780. 10.1162/neco.1997.9.8.1735

- Hon O. J., DiBerto J. F., Mazzone C. M., Sugam J., Bloodgood D. W., Hardaway J. A., et al. (2022). Serotonin modulates an inhibitory input to the central amygdala from the ventral periaqueductal gray. Neuropsychopharmacology 9, 1–11. 10.1038/s41386-022-01392-4 [Online ahead of print].

- Høye T. T., Ärje J., Bjerge K., Hansen O. L. P., Iosifidis A., Leese F., et al. (2021). Deep learning and computer vision will transform entomology. Proc. Natl. Acad. Sci. U S A 118:e2002545117. 10.1073/pnas.2002545117

- Hsu A. I., Yttri E. A. (2021). B-SOiD, an open-source unsupervised algorithm for identification and fast prediction of behaviors. Nat. Commun. 12:5188. 10.1038/s41467-021-25420-x

- Jia Y., Li S., Guo X., Lei B., Hu J., Xu X.-H., et al. (2022). Selfee, self-supervised features extraction of animal behaviors. eLife 11:e76218. 10.7554/eLife.76218

- Joëls M., Karst H., Sarabdjitsingh R. A. (2018). The stressed brain of humans and rodents. Acta Physiol. (Oxf) 223:e13066. 10.1111/apha.13066

- Johnson C., Kretsge L. N., Yen W. W., Sriram B., O’Connor A., Liu R. S., et al. (2022). Highly unstable heterogeneous representations in VIP interneurons of the anterior cingulate cortex. Mol. Psychiatry 27, 2602–2618. 10.1038/s41380-022-01485-y

- Kennedy A., Kunwar P. S., Li L.-Y., Stagkourakis S., Wagenaar D. A., Anderson D. J. (2020). Stimulus-specific hypothalamic encoding of a persistent defensive state. Nature 586, 730–734. 10.1038/s41586-020-2728-4

- Kirlic N., Young J., Aupperle R. L. (2017). Animal to human translational paradigms relevant for approach avoidance conflict decision making. Behav. Res. Ther. 96, 14–29. 10.1016/j.brat.2017.04.010

- Kummer K. K., Hofhansel L., Barwitz C. M., Schardl A., Prast J. M., Salti A., et al. (2014). Differences in social interaction- vs. cocaine reward in mouse vs. rat. Front. Behav. Neurosci. 8:363. 10.3389/fnbeh.2014.00363

- Laing B. T., Siemian J. N., Sarsfield S., Aponte Y. (2021). Fluorescence microendoscopy for in vivo deep-brain imaging of neuronal circuits. J. Neurosci. Methods 348:109015. 10.1016/j.jneumeth.2020.109015

- Lauer J., Zhou M., Ye S., Menegas W., Schneider S., Nath T., et al. (2022). Multi-animal pose estimation, identification and tracking with DeepLabCut. Nat. Methods 19, 496–504. 10.1038/s41592-022-01443-0

- Lee R. X., Stephens G. J., Kuhn B. (2022). Social relationship as a factor for the development of stress incubation in adult mice. Front. Behav. Neurosci. 16:854486. 10.3389/fnbeh.2022.854486

- Lezak K. R., Missig G., Carlezon W. A., Jr. (2017). Behavioral methods to study anxiety in rodents. Dialogues Clin. Neurosci. 19, 181–191. 10.31887/DCNS.2017.19.2/wcarlezon

- Liu S., Ye M., Pao G. M., Song S. M., Jhang J., Jiang H., et al. (2022). Divergent brainstem opioidergic pathways that coordinate breathing with pain and emotions. Neuron 110, 857–873.e9. 10.1016/j.neuron.2021.11.029

- Lu J., Tjia M., Mullen B., Cao B., Lukasiewicz K., Shah-Morales S., et al. (2021). An analog of psychedelics restores functional neural circuits disrupted by unpredictable stress. Mol. Psychiatry 26, 6237–6252. 10.1038/s41380-021-01159-1

- Luxem K., Mocellin P., Fuhrmann F., Kürsch J., Remy S., Bauer P. (2022). Identifying behavioral structure from deep variational embeddings of animal motion. bioRxiv [Preprint]. 10.1101/2020.05.14.095430

- Marshall J. D., Li T., Wu J. H., Dunn T. W. (2022). Leaving flatland: advances in 3D behavioral measurement. Curr. Opin. Neurobiol. 73:102522. 10.1016/j.conb.2022.02.002

- Mathis A., Mamidanna P., Cury K. M., Abe T., Murthy V. N., Mathis M. W., et al. (2018). DeepLabCut: markerless pose estimation of user-defined body parts with deep learning. Nat. Neurosci. 21, 1281–1289. 10.1038/s41593-018-0209-y

- Mathis M. W., Mathis A. (2020). Deep learning tools for the measurement of animal behavior in neuroscience. Curr. Opin. Neurobiol. 60, 1–11. 10.1016/j.conb.2019.10.008

- Meier I. M., van Honk J., Bos P. A., Terburg D. (2021). A mu-opioid feedback model of human social behavior. Neurosci. Biobehav. Rev. 121, 250–258. 10.1016/j.neubiorev.2020.12.013

- Misra I., van der Maaten L. (2019). Self-supervised learning of pretext-invariant representations. arXiv [Preprint]. 10.48550/arXiv.1912.01991

- Najafabadi M. M., Villanustre F., Khoshgoftaar T. M., Seliya N., Wald R., Muharemagic E. (2015). Deep learning applications and challenges in big data analytics. J. Big Data 2:1. 10.1186/s40537-014-0007-7

- Nath T., Mathis A., Chen A. C., Patel A., Bethge M., Mathis M. W. (2019). Using DeepLabCut for 3D markerless pose estimation across species and behaviors. Nat. Protoc. 14, 2152–2176. 10.1038/s41596-019-0176-0

- Neira S., Hassanein L. A., Stanhope C. M., Buccini M. C., D’Ambrosio S. L., Flanigan M. E., et al. (2022). Chronic alcohol consumption alters home-cage behaviors and responses to ethologically relevant predator tasks in mice. bioRxiv [Preprint]. 10.1101/2022.02.04.479122

- Nilsson S. R., Goodwin N. L., Choong J. J., Hwang S., Wright H. R., Norville Z. C., et al. (2020). Simple behavioral analysis (SimBA): an open source toolkit for computer classification of complex social behaviors in experimental animals. bioRxiv [Preprint]. 10.1101/2020.04.19.049452

- Pereira T. D., Shaevitz J. W., Murthy M. (2020). Quantifying behavior to understand the brain. Nat. Neurosci. 23, 1537–1549. 10.1038/s41593-020-00734-z

- Pereira T. D., Tabris N., Matsliah A., Turner D. M., Li J., Ravindranath S., et al. (2022). SLEAP: a deep learning system for multi-animal pose tracking. Nat. Methods 19, 486–495. 10.1038/s41592-022-01426-1

- Ponserre M., Fermani F., Gaitanos L., Klein R. (2022). Encoding of environmental cues in central amygdala neurons during foraging. J. Neurosci. 42, 3783–3796. 10.1523/JNEUROSCI.1791-21.2022

- Portnova-Fahreeva A. A., Rizzoglio F., Nisky I., Casadio M., Mussa-Ivaldi F. A., Rombokas E. (2020). Linear and non-linear dimensionality-reduction techniques on full hand kinematics. Front. Bioeng. Biotechnol. 8:429. 10.3389/fbioe.2020.00429

- Reis F. M. C. V., Liu J., Schuette P. J., Lee J. Y., Maesta-Pereira S., Chakerian M., et al. (2021). Shared dorsal periaqueductal gray activation patterns during exposure to innate and conditioned threats. J. Neurosci. 41, 5399–5420. 10.1523/JNEUROSCI.2450-20.2021

- Richards B. A., Lillicrap T. P., Beaudoin P., Bengio Y., Bogacz R., Christensen A., et al. (2019). A deep learning framework for neuroscience. Nat. Neurosci. 22, 1761–1770. 10.1038/s41593-019-0520-2

- Rivet-Noor C. R., Merchak A. R., Li S., Beiter R. M., Lee S., Thomas J. A., et al. (2022). Stress-induced despair behavior develops independently of the Ahr-RORγt axis in CD4+ cells. Sci. Rep. 12:8594. 10.1038/s41598-022-12464-2

- Rodriguez G., Moore S. J., Neff R. C., Glass E. D., Stevenson T. K., Stinnett G. S., et al. (2020). Deficits across multiple behavioral domains align with susceptibility to stress in 129S1/SvImJ mice. Neurobiol. Stress 13:100262. 10.1016/j.ynstr.2020.100262

- Sánchez-Bellot C., AlSubaie R., Mishchanchuk K., Wee R. W. S., MacAskill A. F. (2022). Two opposing hippocampus to prefrontal cortex pathways for the control of approach and avoidance behaviour. Nat. Commun. 13:339. 10.1038/s41467-022-27977-7

- Segalin C., Williams J., Karigo T., Hui M., Zelikowsky M., Sun J. J., et al. (2021). The Mouse Action Recognition System (MARS) software pipeline for automated analysis of social behaviors in mice. eLife 10:e63720. 10.7554/eLife.63720

- Serre T. (2019). Deep learning: the good, the bad and the ugly. Annu. Rev. Vis. Sci. 5, 399–426. 10.1146/annurev-vision-091718-014951

- Siemian J. N., Arenivar M. A., Sarsfield S., Borja C. B., Russell C. N., Aponte Y. (2021). Lateral hypothalamic LEPR neurons drive appetitive but not consummatory behaviors. Cell Rep. 36:109615. 10.1016/j.celrep.2021.109615

- Sousa N., Almeida O. F. X., Wotjak C. T. (2006). A hitchhiker’s guide to behavioral analysis in laboratory rodents. Genes Brain Behav. 5, 5–24. 10.1111/j.1601-183X.2006.00228.x

- Sturman O., von Ziegler L., Schläppi C., Akyol F., Privitera M., Slominski D., et al. (2020). Deep learning-based behavioral analysis reaches human accuracy and is capable of outperforming commercial solutions. Neuropsychopharmacology 45, 1942–1952. 10.1038/s41386-020-0776-y

- Sun G., Lyu C., Cai R., Yu C., Sun H., Schriver K. E., et al. (2021). DeepBhvTracking: a novel behavior tracking method for laboratory animals based on deep learning. Front. Behav. Neurosci. 15:750894. 10.3389/fnbeh.2021.750894

- Taylor A. M. W., Castonguay A., Taylor A. J., Murphy N. P., Ghogha A., Cook C., et al. (2015). Microglia disrupt mesolimbic reward circuitry in chronic pain. J. Neurosci. 35, 8442–8450. 10.1523/JNEUROSCI.4036-14.2015

- Thiel K. J., Okun A. C., Neisewander J. L. (2008). Social reward-conditioned place preference: a model revealing an interaction between cocaine and social context rewards in rats. Drug Alcohol Depend. 96, 202–212. 10.1016/j.drugalcdep.2008.02.013

- van Dam E. A., Noldus L. P. J. J., van Gerven M. A. J. (2020). Deep learning improves automated rodent behavior recognition within a specific experimental setup. J. Neurosci. Methods 332:108536. 10.1016/j.jneumeth.2019.108536

- Venniro M., Shaham Y. (2020). An operant social self-administration and choice model in rats. Nat. Protoc. 15, 1542–1559. 10.1038/s41596-020-0296-6

- von Ziegler L. M., Floriou-Servou A., Waag R., Das Gupta R. R., Sturman O., Gapp K., et al. (2022). Multiomic profiling of the acute stress response in the mouse hippocampus. Nat. Commun. 13:1824. 10.1038/s41467-022-29367-5

- Wiltschko A. B., Johnson M. J., Iurilli G., Peterson R. E., Katon J. M., Pashkovski S. L., et al. (2015). Mapping sub-second structure in mouse behavior. Neuron 88, 1121–1135. 10.1016/j.neuron.2015.11.031

- Winters C., Gorssen W., Ossorio-Salazar V. A., Nilsson S., Golden S., D’Hooge R. (2022). Automated procedure to assess pup retrieval in laboratory mice. Sci. Rep. 12:1663. 10.1038/s41598-022-05641-w

- Wu Y. E., Dang J., Kingsbury L., Zhang M., Sun F., Hu R. K., et al. (2021). Neural control of affiliative touch in prosocial interaction. Nature 599, 262–267. 10.1038/s41586-021-03962-w

- Xia D., Chen P., Wang B., Zhang J., Xie C. (2018). Insect detection and classification based on an improved convolutional neural network. Sensors (Basel) 18:4169. 10.3390/s18124169

- Xia F., Kheirbek M. A. (2020). Circuit-based biomarkers for mood and anxiety disorders. Trends Neurosci. 43, 902–915. 10.1016/j.tins.2020.08.004

- Yue Y., Xu P., Liu Z., Sun X., Su J., Du H., et al. (2021). Motor training improves coordination and anxiety in symptomatic Mecp2-null mice despite impaired functional connectivity within the motor circuit. Sci. Adv. 7:eabf7467. 10.1126/sciadv.abf7467

- Zhang Y., Denman A. J., Liang B., Werner C. T., Beacher N. J., Chen R., et al. (2022). Detailed mapping of behavior reveals the formation of prelimbic neural ensembles across operant learning. Neuron 110, 674–685.e6. 10.1016/j.neuron.2021.11.022

- Zhao X., Sun F., Shen X. (2021). “The application of deep learning in micro-expression recognition,” in 2021 3rd International Conference on Machine Learning, Big Data and Business Intelligence (MLBDBI) (Taiyuan, China). 10.1109/MLBDBI54094.2021.00041