SUMMARY

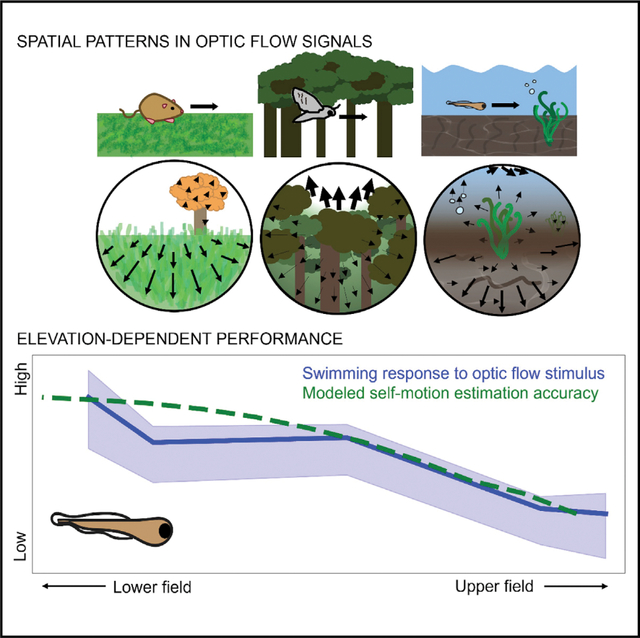

Animals benefit from knowing if and how they are moving. Across the animal kingdom, sensory information in the form of optic flow over the visual field is used to estimate self-motion. However, different species exhibit strong spatial biases in how they use optic flow. Here, we show computationally that noisy natural environments favor visual systems that extract spatially biased samples of optic flow when estimating self-motion. The performance associated with these biases, however, depends on interactions between the environment and the animal’s brain and behavior. Using the larval zebrafish as a model, we recorded natural optic flow associated with swimming trajectories in the animal’s habitat with an omnidirectional camera mounted on a mechanical arm. An analysis of these flow fields suggests that lateral regions of the lower visual field are most informative about swimming speed. This pattern is consistent with the recent findings that zebrafish optomotor responses are preferentially driven by optic flow in the lateral lower visual field, which we extend with behavioral results from a high-resolution spherical arena. Spatial biases in optic-flow sampling are likely pervasive because they are an effective strategy for determining self-motion in noisy natural environments.

In brief

Alexander et al. model natural motion statistics, neural responses, and behavior to show the benefit of spatial biases for self-motion estimation from optic flow, both in general and for the larval zebrafish. Their analysis combines a framework for self-motion estimation in noisy environments with a dataset of optic flow in zebrafish habitats.

Graphical Abstract

INTRODUCTION

Information about self-motion is potentially available from many sources. Inertia-based signals can be measured by vestibular systems, proprioception provides feedback on the forces generated by motor systems, and even haptic information is available, for example, from the wind in our hair. Among the diverse sensing modalities for self-motion, vision stands out as a key source of information for self-motion estimation across the animal kingdom. Visual cues to self-motion are well characterized by optic flow: the movement of features or brightness gradients across the visual field over time.

Laboratory experiments have shown that visual stimuli simulating optic flow during self-motion prompt robust behavioral responses in an impressive array of animals. The responses of flying insects to optic flow are well studied, from flies and bees to dragonflies and locusts.1 For example, bees have been shown to use optic flow to measure distance, maintain flight speed, control landings, and detect objects.2–4 Birds are likewise responsive to optic flow5: for example, parakeets navigate corridors by balancing lateral optic flow,6 and seagulls use optic flow to set their hovering height.7 Optic flow is not just important for animals that fly. Humans can use optic flow cues to predict collisions,8 estimate direction and distance of travel,9,10 maintain upright posture,11,12 and walk toward targets.13–15 In rodents, optic flow has been shown to affect representations of heading direction16 and to be combined with proprioceptive information during locomotion.17

In aquatic environments, optic flow is an important cue for self-motion estimation during swimming and self-stabilization against water currents.18 Like terrestrial vertebrates, different species of fish move both their eyes and their bodies in response to optic-flow cues.19–22 The larval zebrafish, Danio rerio, is of particular interest in this domain due to its use as a model organism in neuroscience for a broad set of behaviors and neural systems. The tendency of these larvae to swim along with moving visual stimuli is called the optomotor response (OMR). This behavior likely allows these fish to self-stabilize in moving currents in the wild; for example, as they swim forward in response to forward drifting stimuli, they stay “in place” visually. The amenability of larval zebrafish to whole-brain functional imaging, combined with the OMR behavior, has supported advances in understanding the circuitry that drives optic flow processing in the vertebrate brain.23,24

Although self-motion can in principle produce useful optic flow cues across the entire visual field,25,26 many animals appear to give priority to motion cues in a subset of their visual field. For example, different species of moths prefer to look either up or down to guide their behaviors,27,28 wasps maintain a specific visual angle above their nests during homing flights,29 praying mantises respond preferentially to optic flow in the central visual field,30 and humans prioritize the lower visual field to maintain posture.31 In flies, the lower field is preferred for course correction,32 and lower and upper fields are used for translation and rotation estimation, respectively.33 It was recently shown that the zebrafish OMR is driven preferentially by visual motion in regions of the lower visual field on the left and right sides of the animal.34

Optimal coding models of sensory processing predict that these spatial biases should vary lawfully in response to the statistics of the motion cues available in the visual experience or evolutionary context of each animal.35,36 Indeed, optic flow fields generated from natural environments can be sparse and noisy, putting pressure on animals to sample the most informative spatial regions. Different ecological niches likely correspond to systematic differences in spatial patterns in the availability of optic-flow cues. Previous work has used geometric models and measurements from videos to examine how natural experience may shape spatial biases in the fly26,37 and hawkmoth27 for estimating self-motion. However, given the complexity of self-motion-generated optic flow during natural behavior, the hypothesis that spatial biases are adaptive has proven challenging to test, both in general and for any specific model organism. Modeling the relationship between optic flow signals and self-motion estimates requires a combined consideration of environment geometry, signal and noise strength, motion-limiting behaviors, and neuronal receptive field structure.

We begin with an exploration of the general problem of visual self-motion estimation in natural environments. We model self-motion estimation from samples of naturalistic optic flow fields, demonstrating the effects of a set of pertinent environmental, behavioral, and neural factors. We show that a wide variety of error patterns are generated by different settings for each of these factors, suggesting that a holistic understanding of an animal’s habitat, actions, and receptive field structure is required to determine an advantageous spatial bias. We then model each of these factors for the visual experience of a specific model organism, the larval zebrafish, using omnidirectional videos captured in their native habitats during calibrated self-motion trajectories controlled by a custom-built robotic arm. Our results suggest that these animals can most reliably estimate their forward self-motion from regions in the lateral lower visual field, which is consistent with prior behavioral measures.34 Using a spherical stimulation arena covering more than 90% of the visual surround,38 we augment previous behavioral results with an extended map of the spatial biases in zebrafish OMR. These results bring together converging lines of evidence that biases in motion estimation reflect a general strategy to optimally measure behaviorally relevant information from natural environments.14,15,37,39–44

RESULTS

Determining self-motion from natural optic flow

We demonstrate the advantage of spatially biased optic flow sampling using the pipeline shown in Figure 1. Self-motion is estimated by comparing measured optic flow with idealized templates associated with each possible direction of self-motion (Figure 1A), as seen in the motion tunings of neuronal populations in several animals24,26,33,37 (for a comparison of other methods, see Tian et al.45 and Raudies and Neumann46). We consider both full field (Figure 1B) and local (Figure 1C) flow templates.

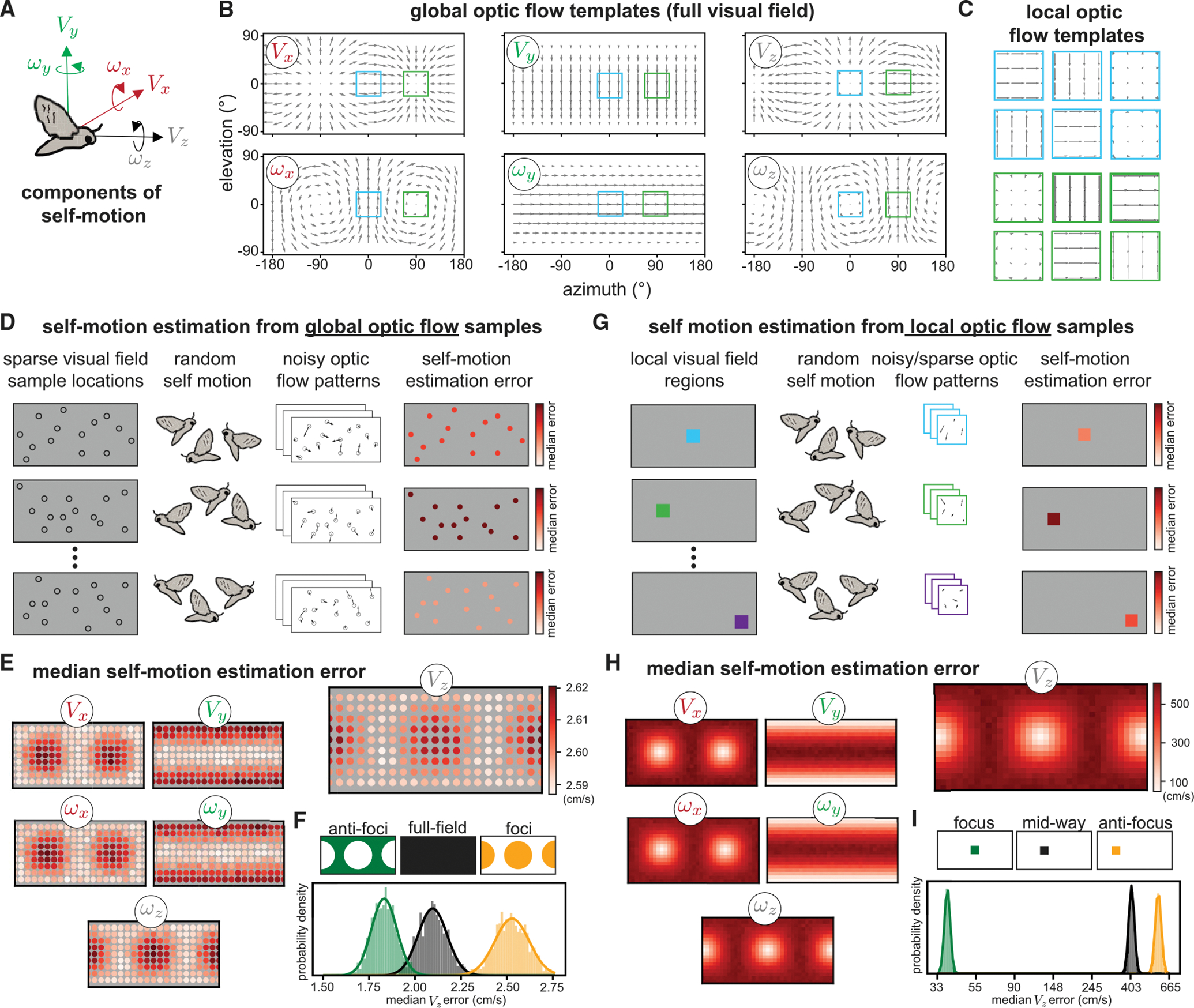

Figure 1. Spatial biases in optic flow sampling for self-motion estimation improve performance.

(A) We consider the optic flow generated by 6 components/directions of self-motion including translation and rotation, illustrated for a moth.

(B) Idealized optic flow templates for recovering each component of self-motion in a spherical environment are shown across the full visual field.

(C) For the blue and green regions highlighted in (B), we show the local flow templates for a 10° square region under a tangent projection. A small field of view can lead to a high degree of similarity between translation and rotation template patches (e.g., VX and ωY look very similar locally at these two positions but are more distinguishable with the full field of view). Arrowheads for the templates located at foci of expansion/contraction have been scaled up for visibility.

(D) To model self-motion estimation from sparse, noisy optic flow across the full visual field, we randomly sampled 60 visual field locations (of 180 predetermined possible locations) and calculated the median absolute self-motion estimation error for each component. Once a sample set was chosen, self-motion components were drawn uniformly at random from −1 to 1 (m/s for translation and rad/s for rotation), and additive Gaussian noise with a standard deviation of 0.25 (relative to a maximal per-component flow magnitude of 1) was applied to the resulting flow vectors. The environment was modeled as a 1-m radius sphere. Each row illustrates one iteration of the model: we ran 10,000 iterations with different sample locations each time, and each iteration included 10,000 random self-motions for computing the median absolute error. Color bars indicate the median self-motion estimation error associated with each sample set. We then computed the overall median absolute error (median of medians) associated with each visual field sample location across all iterations.

(E) Heatmaps show the overall median absolute errors associated with each sample location for each self-motion component, with VZ plotted larger for visibility. The heatmap ranges for the other components are: VX (2.59,2.61), VY (2.94,2.99), ωX (1.48,1.50), ωY (1.68,1.71), and ωZ (1.48,1.50).

(F) Histograms illustrate the median VZ estimation error when 50% of the sample locations were used. These 50% could be spread evenly across the visual field (black), concentrated away from the VZ foci of expansion/contraction (green) or concentrated at these foci (orange). The anti-focus spatial bias leads to better estimates. We fitted each distribution with a Gaussian and computed the effect size (the difference between the means normalized by the pooled standard deviations) between the full field and biased samples as follows: anti-foci versus full field = −3.6; foci versus full field = 5.0.

(G) To model self-motion estimation from sparse, noisy optic flow using contiguous local visual field regions similar to receptive fields, we repeated this analysis on small, spatially contiguous regions. Each contiguous region subtended a 10° square and contained up to 100 contiguous optic flow samples, each of which had a 67% chance of being deleted after noise was added, with self-motion and additive noise sampled as before.

(H) Heatmaps of the resulting median absolute errors. The heatmap ranges for the non-VZ translation components are the same as the VZ plot, and for the rotation components, they range from 20 to 350 deg/s.

(I) Histograms illustrate the median VZ estimation error (log-spaced) for the best local region (located at the VZ focus), the worst local region (located at the anti-focus), and a region mid-way between those two. Effect sizes are as follows: focus versus mid-way = −64.8; anti-focus versus mid-way = 9.2.

Self-motion can be recovered perfectly if these templates match the environmental geometry, optic flow signals are available throughout the visual field, and nothing else in the environment is moving. Real optic flow fields, however, are rarely dense or clean. Sparsity in natural optic flow fields follows from the sparsity of spatial and temporal contrast.47–49 External noise is caused by motion cues in the environment, such as particles moving through air or water, swaying vegetation, moving animals, and optical effects like caustics and shadows. To examine the effects of noise and sparsity on spatial biases, we selected self-motion velocity components uniformly at random, synthesized optic flow samples, added Gaussian noise, and sparsified (by setting both sample and template values to zero at random locations). We initially assume the animal can move with six degrees of freedom (DOF) in a spherical environment with spatially uniform noise, then consider variations on each of these conditions (see figure captions for simulation details). We show that some regions are more useful than others in each of these conditions. This observation holds whether the full visual field is used or only a small contiguous local region is visible.
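The simulation described above can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: it assumes the standard spherical optic flow equation for a static scene, random (rather than predetermined) sample directions, isotropic 3D noise, and a hypothetical 30% deletion rate. The function names are illustrative only.

```python
import numpy as np

def skew(d):
    """Cross-product matrix: skew(d) @ w equals np.cross(d, w)."""
    return np.array([[0.0, -d[2], d[1]],
                     [d[2], 0.0, -d[0]],
                     [-d[1], d[0], 0.0]])

def flow_templates(dirs, radius=1.0):
    """Stack one 3x6 block per viewing direction.

    Columns are the templates for (VX, VY, VZ, wX, wY, wZ) in a sphere of
    the given radius: flow = -(I - d d^T) v / radius - w x d.
    """
    blocks = []
    for d in dirs:
        trans = -(np.eye(3) - np.outer(d, d)) / radius
        rot = skew(d)  # -(w x d) = d x w
        blocks.append(np.hstack([trans, rot]))
    return np.vstack(blocks)

def random_directions(n, rng):
    """Unit vectors drawn uniformly on the sphere (assumed sampling scheme)."""
    x = rng.normal(size=(n, 3))
    return x / np.linalg.norm(x, axis=1, keepdims=True)

def estimate_self_motion(A, flow, keep):
    """Least squares after zeroing deleted samples in both flow and templates."""
    mask = np.repeat(keep, 3)
    sol, *_ = np.linalg.lstsq(A * mask[:, None], flow * mask, rcond=None)
    return sol

rng = np.random.default_rng(0)
dirs = random_directions(60, rng)
A = flow_templates(dirs)                      # 1-m radius sphere
motion = rng.uniform(-1, 1, size=6)           # true self-motion components
clean = A @ motion
noisy = clean + rng.normal(scale=0.25, size=clean.shape)
keep = rng.random(60) > 0.3                   # hypothetical sparsity level

est_clean = estimate_self_motion(A, clean, np.ones(60, dtype=bool))
est_noisy = estimate_self_motion(A, noisy, keep)
print("true     :", np.round(motion, 3))
print("noiseless:", np.round(est_clean, 3))
print("noisy    :", np.round(est_noisy, 3))
```

With dense, noise-free flow the estimate matches the true motion exactly; the error that appears once noise and deletion are added is the quantity whose spatial dependence is analyzed in the text.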

To evaluate self-motion estimation from the full visual field (global optic flow), we iteratively sampled optic flow across random sets of locations in the visual field and combined the self-motion estimation error across all sample sets (Figure 1D). This approach allows us to examine how each location in the visual field contributes to self-motion estimation, regardless of which other locations it is combined with. The median absolute error in all components of self-motion shows small but consistent spatial variations (Figure 1E). Regions with lower signal-to-noise ratios based on flow magnitude in the global templates (foci of expansion and contraction) are associated with larger errors, and regions with higher signal-to-noise ratios are associated with lower errors, consistent with the results derived from an internal noise model analyzed in Franz and Krapp.37 Importantly, sampling the set of low-error locations results in better self-motion estimation than the same number of samples distributed evenly across the visual field. This is demonstrated in Figure 1F for forward translation (VZ). Selecting only the high-error samples results in worse forward translation estimation (Figure 1F). We make no claims of optimality for this sampling strategy, instead noting that even naive use of error patterns can generate useful spatial biases.

Next, we restrict sampling to small contiguous regions of the visual field similar to individual receptive fields (RFs) (Figure 1G). In each region, we consider the self-motion estimation errors resulting from these isolated, contiguous samples (local optic flow). Note that the templates for these local regions are less distinct for each component than the global templates are (Figures 1B and 1C), such that the local self-motion estimation problem is generally less well conditioned. This leads directly to a reversal in the pattern of high and low error locations compared with the global sampling strategy, as local condition number has a greater impact than signal to noise (Figures 1H versus 1E). The errors are overall much higher for the local samples and the advantage of spatial biasing is amplified in this case (Figure 1I). Thus, while the visual ecology of different animals can vary widely, if natural flow cues tend to be sparse and noisy, then there will be an advantage for individuals that prioritize informative regions of the visual field.
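The conditioning argument can be illustrated by comparing the condition number of the template matrix for a full-field sample with that of a small patch. The sketch below is an assumption-laden illustration: it uses a 10° azimuth/elevation window rather than a true tangent projection, assumes Z is forward, X is lateral, and Y is up, and places the example patch on the lateral equator.

```python
import numpy as np

def templates(dirs, radius=1.0):
    """Rows: 3 flow components per direction; columns: VX, VY, VZ, wX, wY, wZ."""
    rows = []
    for d in dirs:
        trans = -(np.eye(3) - np.outer(d, d)) / radius
        rot = np.array([[0.0, -d[2], d[1]],
                        [d[2], 0.0, -d[0]],
                        [-d[1], d[0], 0.0]])
        rows.append(np.hstack([trans, rot]))
    return np.vstack(rows)

def patch_directions(az_deg, el_deg, width_deg=10.0, n=10):
    """n x n grid of unit vectors inside a small azimuth/elevation window."""
    half = width_deg / 2
    az = np.deg2rad(az_deg + np.linspace(-half, half, n))
    el = np.deg2rad(el_deg + np.linspace(-half, half, n))
    az, el = np.meshgrid(az, el)
    # Assumed axes: X lateral, Y up, Z forward.
    d = np.stack([np.cos(el) * np.sin(az),
                  np.sin(el),
                  np.cos(el) * np.cos(az)], axis=-1)
    return d.reshape(-1, 3)

rng = np.random.default_rng(1)
full = rng.normal(size=(100, 3))
full /= np.linalg.norm(full, axis=1, keepdims=True)

cond_full = np.linalg.cond(templates(full))
cond_patch = np.linalg.cond(templates(patch_directions(90.0, 0.0)))
print(f"full field condition number: {cond_full:.2f}")
print(f"10 deg patch condition number: {cond_patch:.1f}")
```

The same number of samples yields a far larger condition number when confined to a patch, which is why noise is amplified much more strongly in the local regime.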

Using this simulation and estimation framework, we will next evaluate the impact of behavioral and environmental factors on the relative accuracy of self-motion estimates derived from sampling locations across the visual field. We focus on the estimation of forward translation velocity (VZ) and heading direction (tan⁻¹(VZ/VX)), because these are particularly ecologically relevant aspects of self-motion.

Behavior: Self-stabilization improves performance and shifts local spatial biases

Organisms are not entirely in control of their motion, particularly flying or swimming animals that navigate through moving air or water currents. However, many animals stabilize in several dimensions of motion, which simplifies the problems of self-motion estimation and control. This is particularly true for visual systems that rely on localized regions that suffer from template ambiguity. Motion-limiting behaviors can eliminate these ambiguities.

Here, we consider two behavioral simplifications. First, an animal might remove all self-motion other than forward translation (VZ; Figure 2A). In this case, there is a reduction in overall error, and there is still a clear advantage for spatially biased sampling. In this highly simplified setting, many local regions provide near-optimal performance (black and green overlap, bottom). The higher error regions occur primarily at the foci of expansion/contraction. With no ambiguity between templates in the local regime, the problem is perfectly conditioned, and signal to noise alone drives estimation errors, matching the global regime. The resulting spatial pattern of errors is a complete reversal of the local estimation error pattern for an animal moving with 6 DOFs (Figure 1H). While moving in only one direction is infeasible in most natural settings, this example illustrates that the motion behaviors of an animal are highly relevant in shaping their optimal spatial biases, particularly when optic flow is sampled locally.

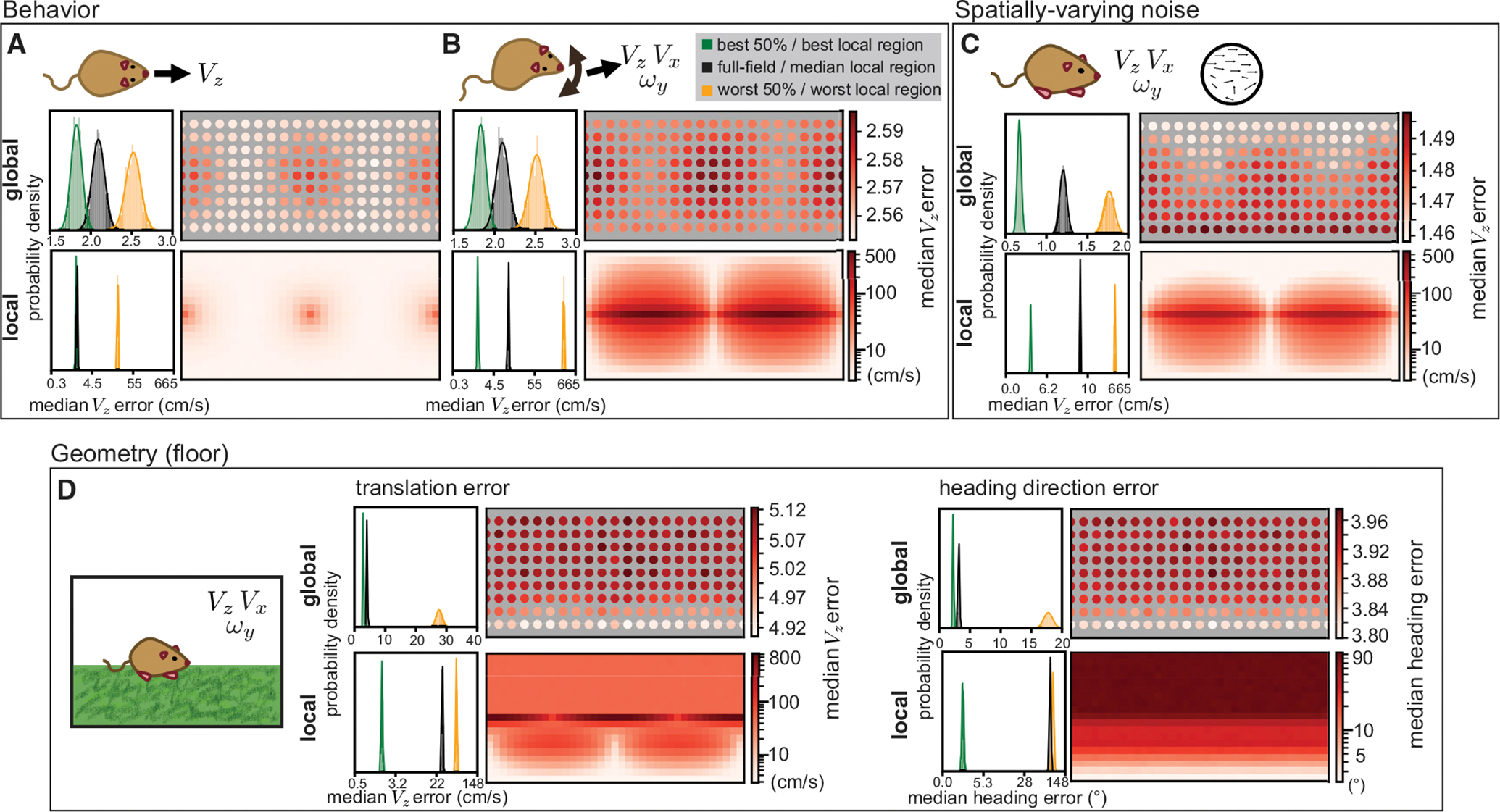

Figure 2. Animal behavior and environment shape spatial biases.

(A) An animal only moving forward removes the ambiguity in local flow templates so that both global and local errors indicate an away-from-foci advantage. Histograms on the left indicate the distribution of median absolute self-motion estimation errors for the best 50%, worst 50%, and full field global samples at matched resolution, and the best, worst, and median local regions over 1,000 simulations of 1,000 self-motion trajectories. Heatmaps indicating median errors are plotted in the same format as Figure 1. All model parameters are the same as Figure 1, except self-motion components other than VZ were all set to zero and a 1 DOF estimation applied. For the local optic flow, both the best and median regions are associated with very low error, resulting in a high degree of histogram overlap.

(B) An animal translating and rotating in a plane sees higher errors and expanded regions of ambiguity. All model parameters are the same as Figure 1, except self-motion components other than VZ, VX, and ωY were all set to zero and a 3 DOF estimation applied.

(C) When noise varies linearly from none at 90° elevation to a maximum at −90° elevation, low error regions are shifted upward. All model parameters are otherwise the same as (B), and maximum noise had a standard deviation of 0.25 (relative to a maximal per-component flow magnitude of 1).

(D) When the environment is a floor plane rather than a sphere, VZ error explodes at and above the equator. Heading estimates remove the depth ambiguity in translation component magnitude by comparing VX and VZ estimates, eliminating azimuth dependence for a pure lower field bias. All model parameters are the same as (B) except for the scene geometry, which is modeled as a flat floor 1 m below and an infinitely far away wall in the upper visual field. Note that histograms and heatmaps for local errors in this figure are all on a log scale to account for the larger variability associated with different environmental settings.

For a terrestrial animal, or a swimming or flying animal that stabilizes its motion to a plane, three degrees of freedom may be a more reasonable simplification: forward translation (VZ), lateral translation (VX), and yaw (left/right) rotation (ωY) only. These can occur simultaneously, as when forward body motion is combined with a rotation of the head. This behavior, illustrated in Figure 2B, does not affect the spatial error patterns for global optic flow, but for local sampling, it leads to high estimation error in regions to the left and right sides, particularly along the equator where yaw rotation can be confused for forward or lateral translation. Elsewhere in the visual field, self-motion can be recovered reliably from local optic flow.

Environment: Spatially varying noise and scene geometry drive spatial bias

The previous examples showed environments with spatially uniform sparsity and noise. In reality, natural environments likely feature statistical regularities in the availability and reliability of optic flow signals across space. Figure 2C shows the result for estimating 3DOF motion in a sphere where the noise increases linearly from top to bottom. This shifts the low-error regions upward while maintaining the general spatial pattern for both globally and locally derived estimates. Spatial variations in sparsity have similar effects (not shown). When noise and sparsity are not present, all regions of the visual field are equally, perfectly informative; hence, the spatial distributions of noise and sparsity in an environment are fundamental drivers of error patterns in self-motion estimation.

The previous examples also all modeled the environment as a sphere. This could be considered a reasonable simplification for the ecological niches of some animals, like insects that fly through dense foliage. However, many natural environments are better modeled as having a ground plane that creates systematic depth variation as a function of elevation in the visual field.37,50,51 Figure 2D shows the different error patterns that emerge as a result of ground plane geometry for 3DOF motion. Here, we assume that all points above the ground are too distant to provide motion parallax cues so that forward velocity estimation errors shift from an elevation-symmetric pattern in the sphere (Figure 2B) to strongly favoring the lower field (Figure 2D). The worst errors in local VZ estimates occur at the equator toward the front/rear of the animal, where the small expected optic flow magnitude can lead to very large and incorrect predictions if rotation cues are mistaken for translation. Nonetheless, broad regions of the lower field are useful for determining the speed of forward translation.

When scene geometry is only known up to a scale factor, translation magnitudes cannot be accurately recovered, as a scene point moving fast and far away will generate the same optic flow as one moving slower and closer. This issue can be mitigated by comparing translation components (for example, by taking the heading angle) for a depth-independent estimation of self-motion. In the floor geometry, errors in horizontal heading angle estimation are also lowest in the lower visual field (Figure 2D, right). Spatial biases in heading error also have less azimuth dependence, as the foci for VX and VZ, both of which are needed to recover horizontal heading, are offset by 90°.
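The scale ambiguity and its removal by the heading angle can be checked numerically. The following sketch is illustrative only: it assumes a translation-only observer over a flat floor, noise-free flow in the lower field, and hypothetical values for the true velocity and the assumed floor depths.

```python
import numpy as np

def floor_flow_templates(dirs, floor_depth):
    """Translation templates (columns VX, VZ) for a flat floor below the eye."""
    blocks = []
    for d in dirs:
        nearness = -d[1] / floor_depth      # 1/distance along d (d[1] < 0)
        proj = -(np.eye(3) - np.outer(d, d)) * nearness
        blocks.append(proj[:, [0, 2]])
    return np.vstack(blocks)

rng = np.random.default_rng(2)
dirs = rng.normal(size=(50, 3))
dirs[:, 1] = -np.abs(dirs[:, 1]) - 0.1      # keep samples in the lower field
dirs /= np.linalg.norm(dirs, axis=1, keepdims=True)

true_v = np.array([0.3, 0.8])               # hypothetical (VX, VZ) in m/s
flow = floor_flow_templates(dirs, floor_depth=1.0) @ true_v

results = {}
for assumed_depth in (0.5, 1.0, 2.0):
    T = floor_flow_templates(dirs, assumed_depth)
    est, *_ = np.linalg.lstsq(T, flow, rcond=None)
    speed = np.hypot(est[0], est[1])
    heading = np.degrees(np.arctan2(est[1], est[0]))   # tan^-1(VZ/VX)
    results[assumed_depth] = (speed, heading)
    print(f"assumed depth {assumed_depth} m: speed {speed:.3f} m/s, "
          f"heading {heading:.2f} deg")
```

The recovered speed scales with the assumed depth, whereas the heading angle is identical in all three cases.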

Simulations predict advantage to spatial biases of self-motion estimation

In summary, animal behavior, environmental noise, scene geometry, and sampling strategy all influence spatial patterns of error for an animal attempting to infer its self-motion from optic flow measurements. These error patterns can be used to easily generate a spatially biased sampling pattern that outperforms uniform sampling. The most informative regions of the visual field will differ for organisms in different niches, but with sufficient context, we should be able to quantify the advantage of a spatial bias for a particular animal. Next, we will apply this framework to the larval zebrafish and compare the predictions based on a model of these factors with direct measurements of spatial biases in the OMR.

Larval zebrafish optomotor swimming is driven by the lateral lower visual field

Larval zebrafish OMR is driven preferentially by moving stimuli in the lateral lower visual field,34 and pretectal neurons tuned for self-motion direction tend to have receptive fields in the lower visual field.24 To explore this behavior in more detail, we employed a spherical arena of over 10,000 individually controlled LEDs38 and displayed a series of moving stripe patterns to head-fixed larval zebrafish (Figures 3A, 3B, and S1A). The new arena allowed greater flexibility of stimulus patterns, larger coverage of the visual field, and higher spatial resolution for mapping of the spatial biases in the optomotor drive.

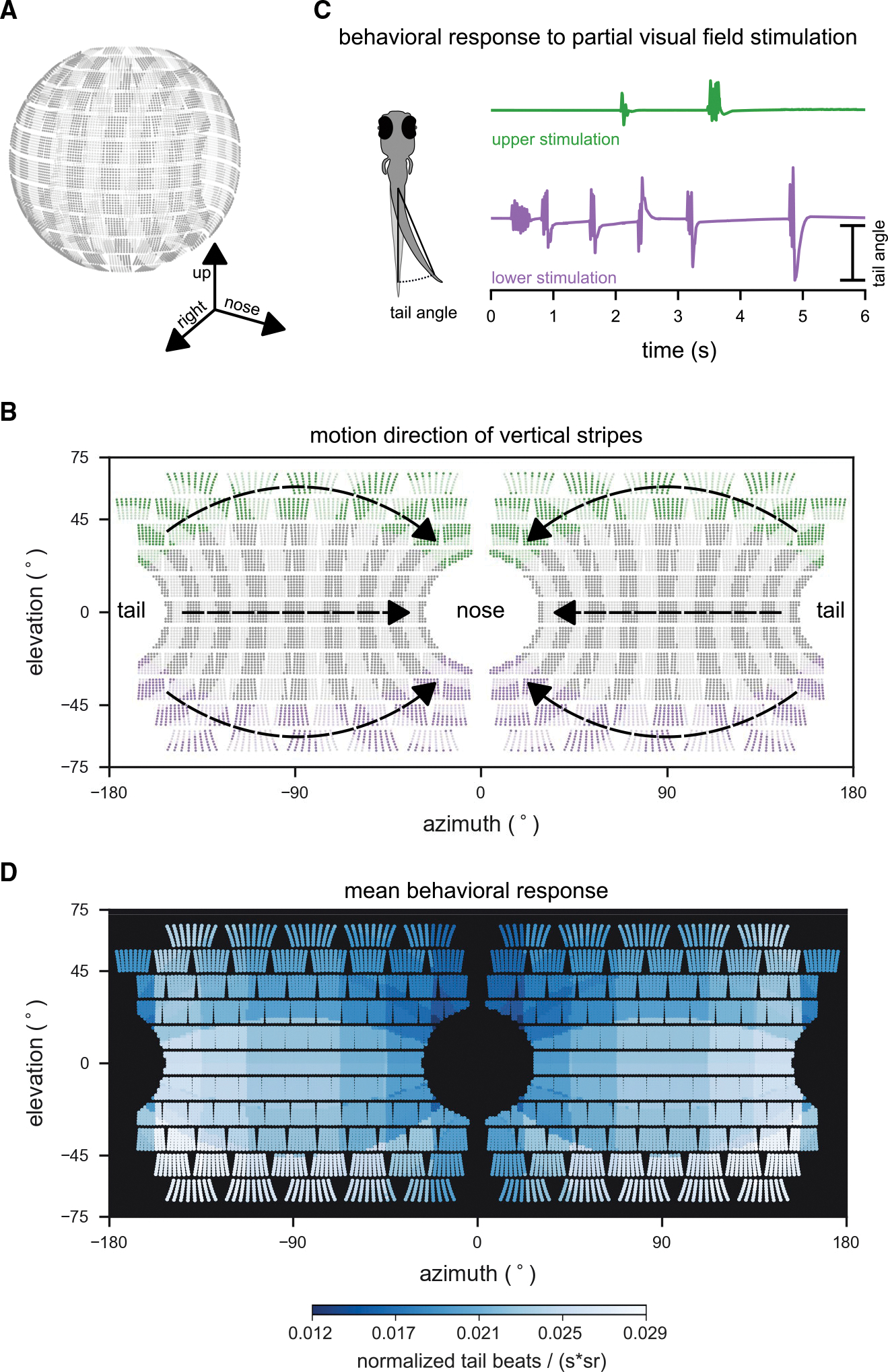

Figure 3. Larval zebrafish optomotor swimming is driven by the lateral lower visual field.

(A) Layout of individual LEDs in our spherical visual stimulation arena. The visual stimulus consisted of a pattern of vertical bars meant to simulate the fish swimming through a pipe with transverse stripes. Fish were placed in the center of the spherical arena, thus allowing for stimulation of an extended range of elevations (almost 140° × 360° of its visual field).

(B) During individual trials, stripes in different regions of the visual field were moving (example areas marked green or purple), whereas the rest remained static. Stimulus motion was from back to front, providing the percept of backward drift to the fish.

(C) Tail bend angles were measured for each recorded video frame to calculate behavioral responses to different stimulus locations. Fish generally responded more strongly to motion in the lower (purple trial) than in the upper (green trial) visual field.

(D) Heatmap color indicates the strength of the OMR to motion stimuli in different parts of the visual field. OMR drive was strongest for motion in the lateral lower visual field. To quantify the behavioral response, the number of tail beats per unit time was averaged over repeated trials for each fish, normalized by the fish’s maximum response to any of the stimuli, and scaled by the inverse of the area (in steradians) that was active (i.e., moving) for each stimulus to account for effects of stimulation area size on response strength. Responses were then combined into a single average across fish (n = 8 fish). Motion stimuli covered 19 different combinations of positions and steradian sizes in visual space.

See also Figure S1.

We consider tail beat responses to patterns that simulated backward drift in a tunnel (Figures 3B and 3C). These patterns were presented over 19 visual regions (Figure S1B), and the responses were combined to map the regions of the visual field that most effectively elicited an OMR (i.e., tail beats) (Figure 3D). These new data confirm and extend the conclusion that the fish preferentially use the lateral lower visual field to estimate self-motion signals pertinent to the forward swimming OMR (although substantial variation was observed from fish to fish; Figures S1C and S1D).
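The response quantification described in the Figure 3D caption (trial averaging, per-fish normalization, scaling by inverse active area, averaging across fish) can be sketched as follows. The array layout, function name, and synthetic data are assumptions made for illustration; the mapping of the 19 stimulus responses onto a visual field heatmap is not shown.

```python
import numpy as np

def omr_map(beat_rates, active_area_sr):
    """Area-scaled OMR strength per stimulus, averaged across fish.

    beat_rates: array (n_fish, n_stimuli, n_trials), tail beats per unit time.
    active_area_sr: array (n_stimuli,), moving stimulus area in steradians.
    """
    per_fish = beat_rates.mean(axis=2)                          # average over trials
    per_fish = per_fish / per_fish.max(axis=1, keepdims=True)   # normalize by each fish's maximum
    per_fish = per_fish / active_area_sr                        # scale by inverse active area
    return per_fish.mean(axis=0)                                # average across fish

# Synthetic example: 8 fish, 19 stimuli, 5 trials (values are made up).
rng = np.random.default_rng(3)
rates = rng.poisson(lam=4.0, size=(8, 19, 5)).astype(float)
areas = rng.uniform(0.5, 2.0, size=19)
response = omr_map(rates, areas)
print(response.shape)
```

A fish that never responds would produce a division by zero in the normalization step, so such cases would need separate handling.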

We now test the hypothesis that this spatial bias in OMR behavior reflects an adaptation to optic flow signals in the fish’s habitat. Combining knowledge of the fish’s behavior and visual system, ecological assessments of the zebrafish’s habitats, and a novel optic flow dataset, we model the factors relevant to zebrafish self-motion estimation. First, we use a natural video dataset to create a generative model of local flow regions observed in the zebrafish habitats, enabling an exploration of spatial biases in simulation. Then, we confirm simulation results by using the dataset videos directly to characterize elevation-dependent performance in self-motion recovery from natural optic flow recordings.

Dataset from the zebrafish habitat

We collected a video dataset from natural zebrafish habitats (Figures 4A and 4B; Table S1), which can be found in shallow freshwater environments throughout South Asia.52 Videos were collected using a waterproofed 360° camera mounted to a robotic arm for controlled motion trajectories: translations forward/backward, translations sideways, translations at 45°, clockwise/counterclockwise rotations, and circular turns (Table S2). A sample video frame from each site is shown in Figure 4C, with short video samples in Video S1. All frames and code used in the analyses are publicly available.53,54

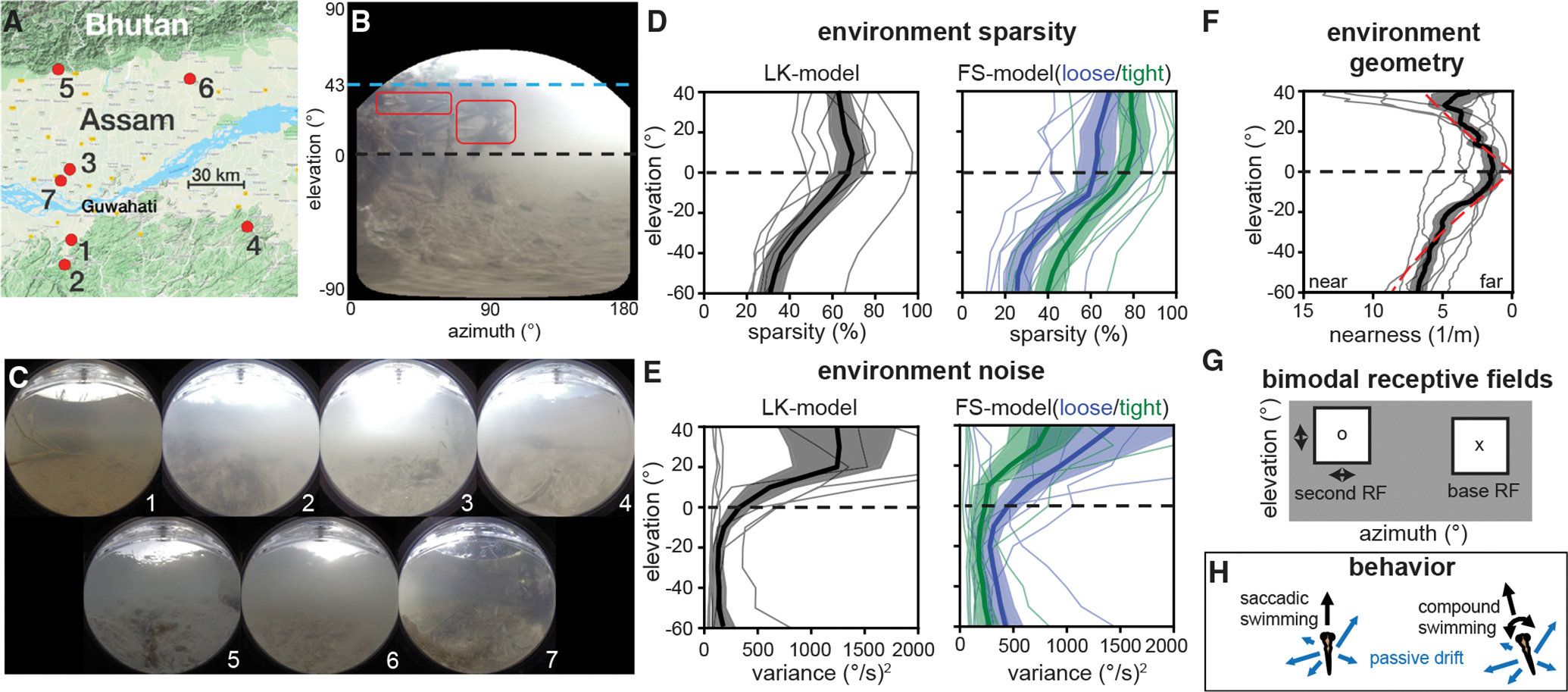

Figure 4. A model for natural optic flow statistics during zebrafish self-motion.

(A) Seven sites in the native range of the zebrafish were sampled; see Table S1 for details. Image from Google Maps.55

(B) An equirectangular projection of a sample frame shows elevation-dependent sources of signal and noise.

(C) Frames from each site summarize visual variation across the native range.

(D) Optic flow sparsity at individual sites (thin lines) and averaged across the dataset (thick lines, ±SEM in filled region) shows high sparsity near and above the equator. This pattern holds across motion measurement algorithms (black, blue, and green).

(E) Errors in optic flow, attributed to natural motion within the environment, show higher variance in the upper visual field.

(F) The geometry estimated from optic flow vector magnitudes roughly matches a floor+ceiling model (dashed red) below the water line (~40°). Individual sites, SEM, and mean are plotted in the same manner as in (D) and (E).

(G) Receptive field structure is modeled in spatially separate bimodal pairs. Base RFs were placed in a predetermined grid of locations and paired with a range of possible second RFs.

(H) Two swimming behaviors are considered: saccadic swimming, where forward motion is added to lateral drifting (VZ ~ U[0,1] m/s + (VX,VZ) ~ U[−.5, .5] m/s) and compound swimming, which additionally includes rotation (ωY ~ U[−1,1] rad/s). For more details, see Figures S1–S3 and S7, Video S1, and Tables S1–S4.

Calibrated camera parameters were used to reproject frames onto smaller planar tangent surfaces, creating image samples analogous to the local flow regions described in the previous section (Table S3). Optic flow was measured using two different algorithms: the Lucas-Kanade (LK) method56 and the Farid and Simoncelli (FS) differentiation method.57 Both methods apply the brightness constancy constraint to spatiotemporal derivatives, similar to the canonical computations thought to underlie motion processing across the animal kingdom.24,58–60 Whereas the LK method performs sparse tracking of informative flow features (e.g., corners), the FS derivative filters were applied to a pre-specified grid of points so that optic flow was computed regardless of the features present. Optic flow measurements were considered valid when features were successfully matched by the LK method and when the condition number and gradient magnitude met appropriate thresholds for the FS method. Since there is no a priori way to select a biologically meaningful threshold on gradients for the larval zebrafish, we consider two luminance gradient thresholds for the FS method, one relatively high (tight) and one relatively low (loose).
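The brightness constancy constraint and the two validity checks (gradient magnitude and condition number) can be sketched for a single image patch. This is a simplified stand-in, not the LK tracker or the FS filters: derivatives are plain finite differences, and the threshold values are hypothetical.

```python
import numpy as np

def local_flow(frame0, frame1, min_grad=1e-3, max_cond=100.0):
    """Estimate one (u, v) flow vector for a patch, with validity checks.

    Solves Ix*u + Iy*v + It = 0 by least squares over all pixels.
    Returns (flow, valid); flow is zero when the patch is rejected.
    """
    mean = 0.5 * (frame0 + frame1)
    Iy, Ix = np.gradient(mean)            # derivatives along rows (y) and columns (x)
    It = frame1 - frame0
    G = np.stack([Ix.ravel(), Iy.ravel()], axis=1)

    # Reject patches with too little luminance gradient (sparse contrast).
    if np.sqrt(np.mean(G ** 2)) < min_grad:
        return np.zeros(2), False

    # Reject patches where the two gradient directions are not both
    # represented (aperture problem), flagged by a large condition number.
    cond = np.linalg.cond(G)
    if not np.isfinite(cond) or cond > max_cond:
        return np.zeros(2), False

    flow, *_ = np.linalg.lstsq(G, -It.ravel(), rcond=None)
    return flow, True

# Synthetic textured patch shifted by (u, v) = (0.2, -0.1) pixels per frame.
y, x = np.mgrid[0:32, 0:32].astype(float)
def texture(t, u=0.2, v=-0.1):
    return np.sin(0.3 * (x - u * t)) + np.cos(0.2 * (y - v * t))

flow, valid = local_flow(texture(0.0), texture(1.0))
print(valid, np.round(flow, 3))
```

Raising `min_grad` corresponds to a tighter threshold (fewer but more reliable measurements), and lowering it corresponds to a looser one.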

Motion sparsity and noise vary over elevation in the zebrafish habitat

The sample in Figure 4B illustrates several elevation-dependent sources of signal and noise typical of shallow aquatic environments. Due to refraction at the water’s surface, light from above the waterline fills the region from +90° to approximately +43° in elevation, known as Snell’s window. This fixed waterline (dashed blue line) often provides very strong optic flow cues, due to the high contrast edge between water and sky and the frequent presence of high scene velocities, but much of this optic flow is caused by the motion of the water surface and is misleading as a self-motion cue. In the portion of the upper field between Snell’s window and the equator (dashed black line), scene texture is somewhat sparse but can be augmented by reflection off the underside of the water surface (red boxes). In a perfectly still and clear scene, the water surface would mirror the texture of the floor up to Snell’s window. In reality, scattering through turbid water and motion of the surface disrupt this reflection, so that high contrast edges are rare in the upper field. The equator generally lies in a region with very sparse contrast due to long viewing distances through turbid water. The lower field tends to provide much more visual contrast due to the texture and nearness of the floor. Optic flow in this region is not always informative about self-motion, however. For example, the visible bright lines are caustics caused by local focusing of sunlight by the water surface, and they provide a consistent but misleading optic flow field caused by water motion.

The video dataset was first used to determine an appropriate noise and sparsity model for the native habitat of the zebrafish. We focus on quantifying two features of optic flow: signal sparsity, modeled from the portion of valid flow measurements, and environmental noise, modeled from the deviation from ground truth flow fields in rotation videos. Elevation-dependent measurements were made covering a total visual area from −80° to +60°.

We found that signal sparsity was lowest in the lower visual field, increased up to the equator, and then dipped slightly with increasing elevation (Figure 4D). This trend held across optic flow calculation methods. The FS threshold can be set to match LK sparsity with more noise (loose, blue) or recover accuracy at the cost of higher sparsity (tight, green). We also found that the noise variance was larger in the upper visual field (Figure 4E). To accurately capture the high kurtosis of the flow errors, we fit the error distributions with a generalized zero-mean Gaussian at each elevation (Figure S2; Table S4). These models were used to generate optic flow simulating the sparsity and noise of the zebrafish habitat at each elevation. We report the results from the LK optic flow method in the main manuscript and include the results from the FS methods in the Supplement. The findings do not differ meaningfully between the different methods.
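A minimal sketch of how such a generative model could be sampled is given below, assuming a per-elevation valid-sample probability and generalized Gaussian parameters. The function names and all parameter values are hypothetical; the fitted values are those reported in Table S4 and are not reproduced here.

```python
import math
import random

def sample_generalized_gaussian(rng, scale, shape):
    """Draw one zero-mean generalized Gaussian sample.

    Density is proportional to exp(-(|x| / scale) ** shape); shape=2 is
    Gaussian and shape=1 is Laplacian (heavier tails, higher kurtosis).
    Uses the fact that (|x| / scale) ** shape follows Gamma(1 / shape, 1).
    """
    g = rng.gammavariate(1.0 / shape, 1.0)
    magnitude = scale * g ** (1.0 / shape)
    return magnitude if rng.random() < 0.5 else -magnitude

def simulate_flow_component(rng, ideal, p_valid, scale, shape):
    """Return a noisy flow component, or None if the sample is missing."""
    if rng.random() >= p_valid:      # sparsity: no valid measurement here
        return None
    return ideal + sample_generalized_gaussian(rng, scale, shape)

def kurtosis(xs):
    """Sample kurtosis (fourth central moment over squared variance)."""
    m = sum(xs) / len(xs)
    m2 = sum((x - m) ** 2 for x in xs) / len(xs)
    m4 = sum((x - m) ** 4 for x in xs) / len(xs)
    return m4 / (m2 * m2)
```

Shape parameters below 2 produce the heavy-tailed, high-kurtosis errors that a plain Gaussian fit would underestimate.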

Shallow aquatic scene geometry in the zebrafish habitat

We adopt a simplified and widely applicable geometric model for a shallow underwater environment: a floor below the fish and a ceiling above, with features above the waterline too distant to provide accurate translation cues. We compare this model with estimates derived from translational trajectories in Figure 4F, based on elevation-dependent nearness (1/distance).
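One possible reading of this geometric model, expressed as nearness over elevation, is sketched below. The floor depth, ceiling height, and waterline elevation are hypothetical placeholders (the waterline default follows the approximate Snell's window boundary mentioned earlier), not values fitted to the dataset.

```python
import math

def nearness(elevation_deg, floor_depth=0.1, ceiling_height=0.1,
             waterline_deg=43.0):
    """Nearness (1 / distance, in 1/m) along a viewing ray.

    All default values are hypothetical placeholders, not fitted values.
    """
    s = math.sin(math.radians(elevation_deg))
    if elevation_deg < 0:
        return -s / floor_depth       # flat floor below the eye
    if elevation_deg < waterline_deg:
        return s / ceiling_height     # flat ceiling above the eye
    return 0.0                        # above the waterline: too distant
```

Under this sketch, nearness is zero at the equator and grows toward the poles of each plane, which is why translational flow is strongest when looking steeply down at the floor.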

Larval zebrafish receptive fields for optic flow

We sampled optic flow in local regions spanning 40° × 40°, similar to larval pretectal and tectal RF sizes.34,61 RFs were placed without reference to the larval zebrafish field of view (approximately 160° per eye22) or binocular overlap. Each model RF contained a set of up to 25 flow measurements, modeling inputs from retinal ganglion cells with smaller receptive fields.62,63 RFs were considered in bimodal pairs (Figure 4G), as these bimodal pairings (i.e., two spatial regions per RF) are common in the larval zebrafish.24 In the larval zebrafish, these bimodal pairs tend to prefer a specific translation direction, with each mode offset symmetrically from the preferred translation axis and positioned away from the foci of that translation. By considering a grid of base RF locations and examining how performance varies when these are paired with a second RF across all potential locations in the visual field, our analysis predicts such symmetric equal-elevation pairs without a priori assumptions beyond the size, density, and bimodality of RFs.

Larval zebrafish swimming

Larval zebrafish display a diverse repertoire of visually driven swimming behaviors,64,65 but most of their self-generated translation is restricted to the horizontal plane.66,67 Using the vestibular system, the fish balance against roll and pitch;68,69 hence, we ignore these degrees of freedom as well. For underwater and flying animals, there is growing evidence for “saccadic locomotion” strategies, in which rotational movements (i.e., turns) are performed quickly and in temporal separation from translational movements, likely simplifying self-motion estimation.70–73 Thus, two swimming behaviors are considered here: saccadic swimming in which forward swimming is combined with slower passive horizontal drift due to water currents (Figure 4H, left) and compound swimming that additionally includes horizontal rotation (Figure 4H, right).

Simulation results show advantage for lateral lower visual field bias

Following the models described above for the environment sparsity and noise, scene geometry, RF structure, and swimming behavior, we simulated realistic local optic flow measurements across the visual field and assessed their informativeness for self-motion estimation using the framework described previously.

We found that the lowest forward translation (VZ) estimation errors were associated with RF pairs in the lateral lower visual field for saccadic swimming behavior. The lowest error occurred when the base and second RF were located at an elevation of −60° and had azimuths of 90° and −90°, respectively (marked with an x and o in Figure 5A, left). The heatmap shows the median absolute VZ error for all second RF locations paired with the best base RF location: there is a general trend of lower error in the lower visual field. This trend is not unique to the base RF shown. For each base RF, the error as a function of second RF elevation trended downward toward the bottom of the visual field (means shown in middle panel of Figure 5A, full results in Figure S4). Indeed, error was minimized by moving both the base and second RFs into the lower visual field (i.e., the green line has the lowest errors and slopes rightward). The error in heading direction as a function of RF elevation matches these elevation-dependent effects. Examining the mean VZ error over azimuth (Figure 5A, right) across all second RF elevations demonstrates the importance of lateral RF placement. Here, we show just the errors for the best base RF elevation (−60°). Overall, errors tended to be minimized for RFs placed at azimuths of +90° and −90°.

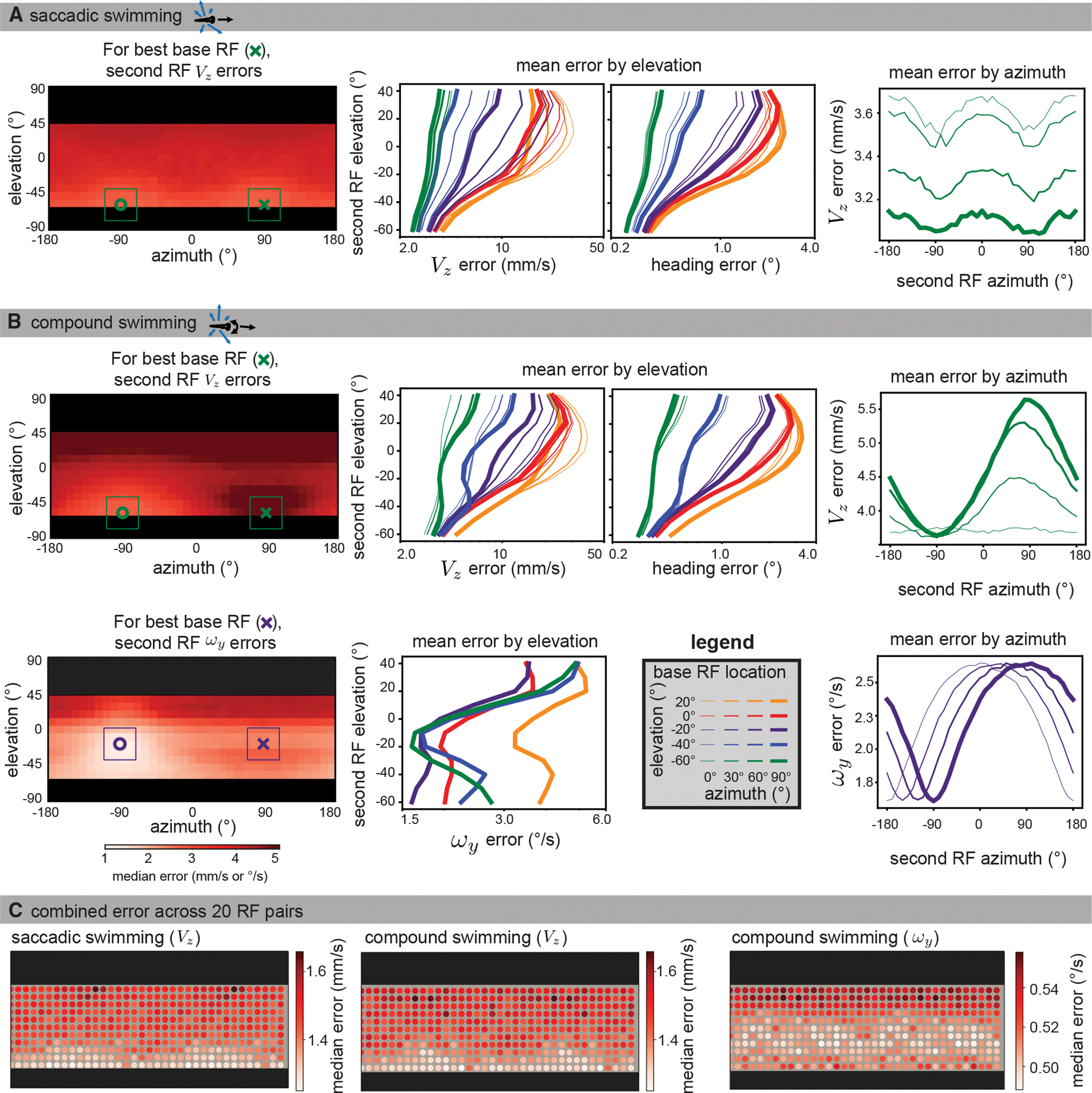

Figure 5. The visual ecology of the larval zebrafish generates lower translation estimation errors in the lateral lower field.

Data were simulated using naturalistic models of signal sparsity and noise, environment geometry, RF size and structure, and swimming behaviors. For each possible base RF location (see legend in B), median absolute errors were computed for 10,000 iterations across all second RF locations.

(A) Left: for saccadic swimming, the lowest error occurred when the base and second RF were located at an elevation of −60° and had azimuths of 90° and −90°, respectively. The plot shows a heatmap of the median absolute VZ error across all second RF locations for the best base RF, and the single best base/second pair is indicated with an x and o. Middle: for VZ and heading error, median absolute errors are averaged across azimuths to isolate elevation trends for each base RF location (colors indicate base RF elevation, line thicknesses indicate base RF azimuth). Both metrics show that translation is best recovered by RF pairs in the lower visual field. Right: when averaging across elevation for azimuth trends, the lower field base RFs tend to be best matched with a second RF at ±90° azimuth. For clarity, we only plot the results for the best base elevation (−60°).

(B) For compound swimming behavior, local translation errors (top left) show more extreme penalties for same-side sampling due to ambiguity with rotation. Elevation trends (top middle) are largely unaffected, other than an increase in average error, but azimuth trends (top right) show a strong advantage to sampling the lateral region on the other side of the body. The azimuth and elevation of the best individual pair are the same as for saccadic swimming. For rotation estimation (bottom), the lower field advantage is less strong, and the best performing bimodal RF (bottom left) appears at −20° elevation. Elevation trends (bottom middle) show strong performance throughout the lower field, whereas azimuth trends show no clear advantage to lateral regions, instead suggesting an azimuth separation of 180° minimizes error (bottom right). Full error maps can be seen in Figures S4 and S5, with a comparison across flow calculation methods shown in Figure S6.

(C) To characterize the performance across the visual field, we followed a similar method to the global method described in the previous section. Instead of a location sample corresponding to a single flow vector, we sampled sets of 20 RF pairs, with one centered at the sample location and the other 180° away in azimuth at the same elevation. The median absolute error over 1,000 estimates is shown for each of the motion directions from (A) and (B). Again, we see better performance in the extreme lower field for translation estimation (left, middle) and in the mid-lower field for rotation (right).

See also Figures S4–S6.

The results for translation estimation were similar for compound swimming (Figure 5B, top row). For RF azimuth, there was an extra boost (lower error) associated with placing the second RF on the opposite side of the nose, since this location is helpful for disambiguating optic flow caused by translation versus rotation. Rotation estimation errors were minimized with samples placed closer to the equator within the lower field (Figure 5B, bottom row) and separated by 180° in azimuth. For rotation, the elevation of the best pair was −20° (purple lines), although the error averaged across all azimuths was slightly lower for the lowest base RF (−60°). The equatorial bias for rotation reflects the tension between the higher quality optic flow signals in the lower field and the geometric advantage of the distant equator for rotation estimation (the large distance removes ambiguities with translation signals). Full error maps are in Figure S5. The results shown in Figure 5 all use the LK-based noise model; similar results are shown for the two FS-based noise models in Figure S6.

We then considered the performance of combined bimodal RFs using an analysis similar to the global optic flow sampling shown in Figures 1 and 2. Rather than randomly sampling individual flows, we randomly sampled sets of 20 bimodal RFs, enough to cover the entire visible region when evenly spaced. The heatmaps in Figure 5C show the resulting median absolute errors associated with each spatial location in the visual field. The lower errors in the lateral lower field for translation, and toward the equator for rotation, are preserved even when multiple RFs are incorporated.

Comparing translation error across the two behavior types, we see that the lowest errors are comparable, but the highest errors are worse for the fish that combines translation with rotation while swimming. In noisy natural habitats, we infer that motion-limiting behaviors like saccadic swimming may reduce the advantage of spatial biases and allow more accurate self-motion estimation from less complex visual systems or more challenging scenes.

Results with real optic flow reiterate lower visual field bias

The previous section asserted a generative model of optic flow based on separate models of the scene geometry, signal availability, and noise in the native habitat of the zebrafish. This allowed for flexibility in our analysis: we could consider arbitrary velocities and simulate different locations across the visual field without loss of resolution or distortions in the camera. However, real optic flow samples from the video dataset provide a better reflection of higher order statistics of signal and noise, such as the spatial correlation of strong contrast cues or the presence of coherently moving distractors and scene geometry, which determines the tradeoff between translation and rotation cues. Thus, we turn to an analysis of real optic flow samples. Bimodal optic flow samples were collected from the high-resolution lateral regions of omnidirectional videos during several translation and rotation trajectories (see Table S2). Each flow sample contained two 40° × 40° regions, centered at ±90° in azimuth and matching in elevation. Each bimodal sample was used to estimate self-motion, both in isolation and combined with samples from other elevations in the same video frames.

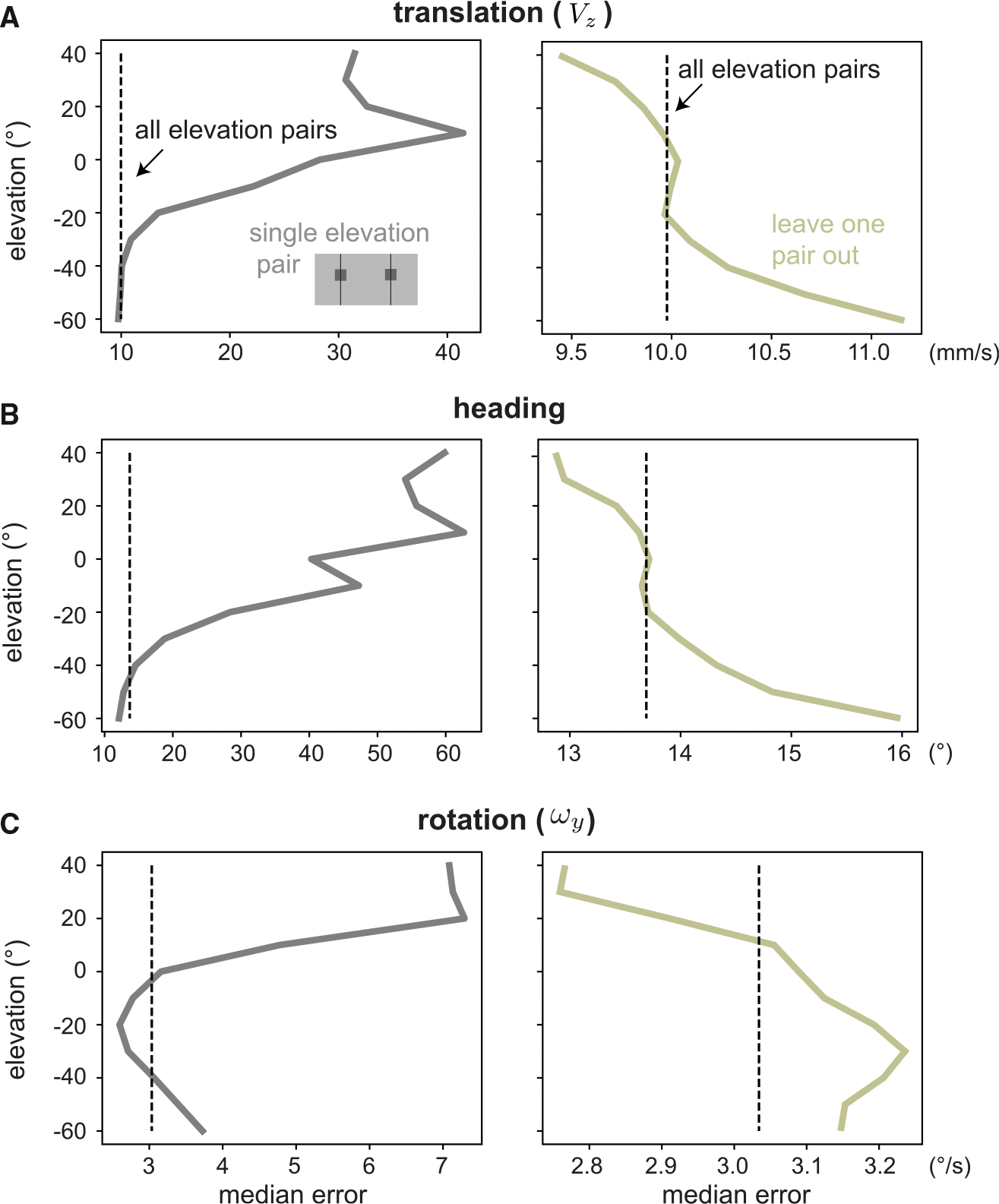

Figure 6 shows the elevation-dependent errors for forward translation (Figure 6A), heading direction (Figure 6B), and rotation speed (Figure 6C) from these data. The first column compares the estimation errors from single-sample estimates (gray line, illustrated with inset of visual field) with the error from using all elevation pairs in each frame (dashed black line). In general, single-elevation estimates perform less well than using all of the data, but in each case, there are elevations where a single sample is as effective or more effective than using the entire field. These correspond to the high-performing elevations in our simulation: −60° for translation and −20° for rotation. The rest of the visual field contains many misleading flow vectors, ultimately doing more harm than good. We also considered the effect of excluding single-elevation samples from the full field (Figure 6, second column). For both translation and rotation, ignoring data from the lower field reduces performance but ignoring data from the upper field improves it.

Figure 6. Ignoring the upper field improves motion estimates from optic flow measured in the native habitat of the zebrafish.

We demonstrate the value of spatial biases by showing an advantage to excluding elevations from self-motion estimation across our dataset of natural optic flows. For velocity distribution and dataset size, see Table S2.

(A–C) We consider forward translation (A), heading (B), and rotation (C) errors for single RF pairs, comparing with a baseline of using all elevations (dotted vertical line). When using a single elevation (first column), the best regions predicted by our simulations match or outperform the baseline. When all elevations but one are used (second column), removing lower field samples damages performance while removing upper field samples improves it.

DISCUSSION

Taken together, these results suggest that spatial biases in optic flow sampling represent an adaptation to reliably estimate self-motion in noisy natural environments. Here, we highlight how the current approach can be extended and contextualize our findings in the broader literature on motion processing in the zebrafish.

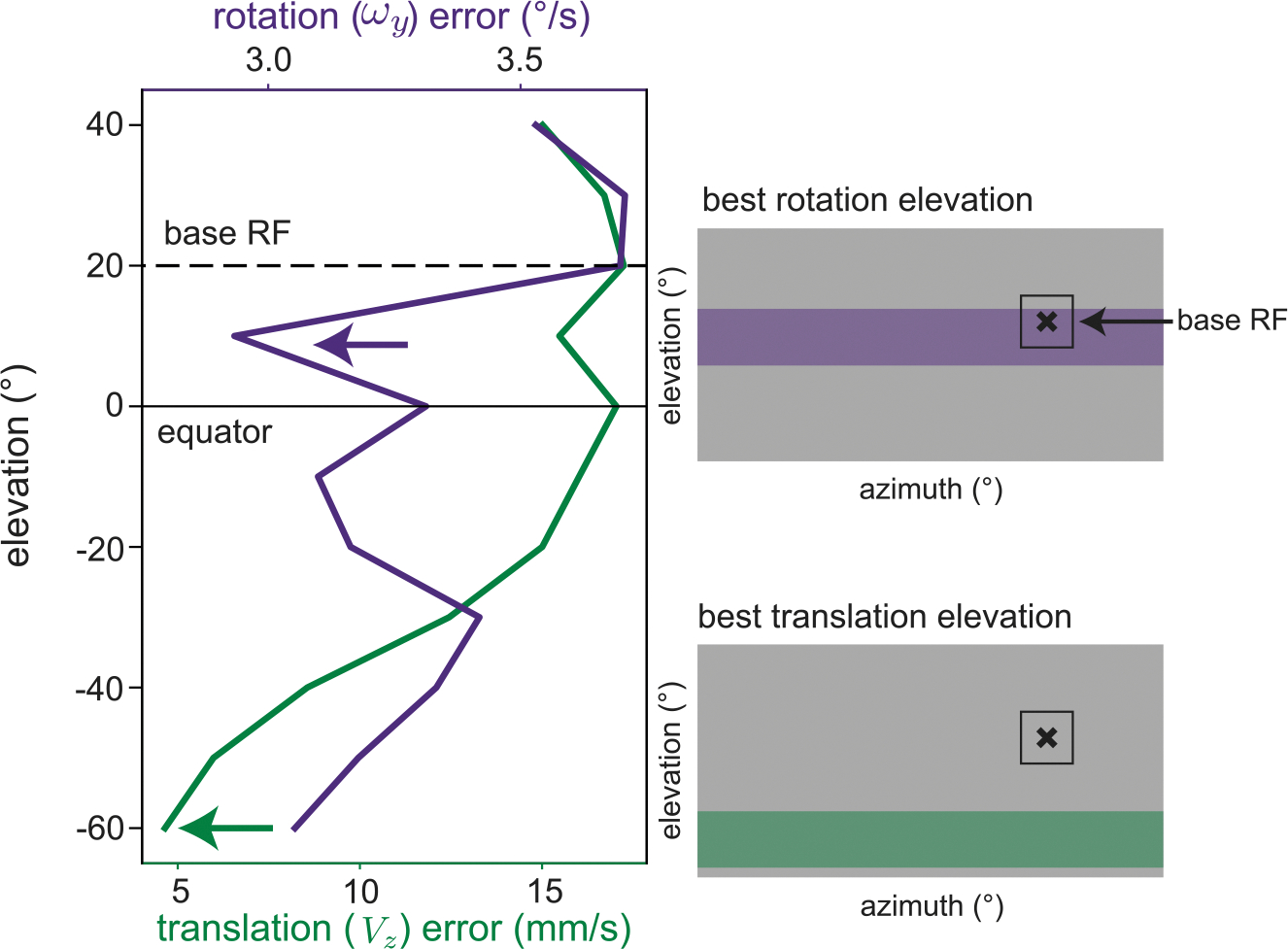

Spatial resolution across the visual field

The retinas and brains of many organisms feature anatomical and functional spatial biases that are not yet fully understood. These additional spatial biases can be readily incorporated into the current framework to explore new questions. For example, our predictions for a lower field bias in rotation estimation in larval zebrafish appear to contradict a recent study showing more robust behavioral responses to rotational optic flow in the upper visual field.38 It was hypothesized that higher cone density in the upper visual field may contribute to this rotational spatial bias. We can test the hypothesis that variation in retinal resolution is sufficient to overcome elevation-dependent noise patterns in rotation estimation. To do so, we modeled the retinal resolution bias by reducing sparsity by a factor of 4 for elevations above the equator, on the assumption that more sample locations increase the probability of detecting sparse scene points. We then considered a base RF in the upper visual field, placed laterally and at a 20° elevation to stay fully below the waterline, and examined the mean self-motion estimation error across azimuths for each possible elevation of a second RF (Figure 7). For rotation, the best second elevation moved into the upper visual field, whereas for translation, the best second RF elevation remained in the lower visual field, consistent with the spatial biases seen in both behavioral responses. This preliminary observation suggests that anatomical biases can be powerful enough to change the reliability of optic flow across space.
Although the upper visual field may not be a more reliable source of self-rotation information per se, other behavioral pressures (e.g., hunting, predator avoidance) may drive photoreceptor density in this region and affect downstream optic flow processing for self-motion.74 These interactions across different spatial biases and different anatomical/functional stages would be a fruitful direction for future work. Based on this analysis, we might predict, for example, that genetically diverse but closely related species, such as cichlids, should also have different spatial biases in optic flow processing. These biases could be predicted independent of behavioral experiments by taking similar measurements from their anatomy and environment.75

Figure 7. Spatially varying resolution of the front end of the sensory system can shift rotation bias.

Beginning with the compound swimming setting in Figure 5, we modified our sparsity model to increase sample probability by a factor of 4 in the upper visual field, as a proxy for higher retinal resolution. In this setting, a base receptive field located at 20° elevation (black dashed line) and 90° azimuth is better paired with another upper field RF for rotation (purple arrow) while still benefiting from a lower field partner for translation (green arrow). Compare with Figure 5B, in which both types of motion estimation show lower error in the lower field. Spatial variations in anatomical resolution may be responsible for a slight upper field bias observed in rotation responses in the larval zebrafish.

Behavioral adaptation and energy allocation

An important driver of sensory and motor systems throughout the animal kingdom is metabolic efficiency. In addition to the energetic demands of processing sensory information, many animals also sample the world using eye, head, and body movements. Complex energy tradeoffs determine ideal motion patterns for optimal sensing.44 It is perhaps no surprise then that spatial biases in optic flow responses emerge as being particularly important for understanding highly energy-constrained animals such as insects and simple vertebrates such as the larval zebrafish.27,29,30,32,33 In more complex-brained animals, like humans, such spatial biases are seen in low-level dynamic tasks like keeping us upright as we stand31 and walk,14 which may require faster and more efficient visual processing. Limitations on energy and response time may drive organisms to rely on heuristics and clever tricks over higher-level scene processing.

In this context, it is interesting to consider whether the larval zebrafish visual system is adapted to larval or adult swimming behavior. Since the larval brain is more computationally constrained than the adult brain (many additional pretectal neurons will be added during ontogeny), we believe that it is likely that larvae are specifically adapted to larval behavior. Indeed, larval behavior has characteristic properties, such as swimming in discrete bouts, that may facilitate self-motion estimation with simple circuits.64 For example, during quick turns, larvae turn at a speed of about 30,000°/s.76 Since retinal speeds above 100°/s are unlikely to evoke motion responses in retinal ganglion cells,77 such high speeds are likely ignored by the fish when estimating self-motion, in favor of using the slower components of motion following the initial acceleration. Bouting thus likely matters for motion processing strategies, since it structures locomotor trajectories to be (1) mostly saccadic and (2) sometimes too fast for the associated optic flow to be perceivable. However, the spatial biases explored in the current report appear largely robust to different swimming behavior models, suggesting that both larval and adult zebrafish may benefit from the same spatial biases in optic flow processing. We therefore speculate that developmental plasticity of the lateral lower field bias may be relatively low, on the basis that it need not adapt between larval and adult behavior.

Conclusions

The self-motion estimation problem has been solved across the animal kingdom with a widespread reliance on spatially biased optic-flow processing, shaped by the combination of brains, behaviors, and backdrops that determine each species’ unique visual ecology. The larval zebrafish has emerged as a model organism in neuroscience, with a body of work describing their genetics, brain structures, and single-cell responses. The use of such model organisms is a powerful technique for moving neuroscience forward, but it must be done carefully, with a full understanding of the context that has driven their neural and behavioral responses. For example, both humans and zebrafish exhibit a lower field bias in optic flow responses, but our environments, behaviors, and receptive fields are drastically different. Rather than simply concluding that organisms benefit from lower field biases in general, we must consider that they may have arrived at similar solutions for solving different self-motion estimation problems.

STAR★METHODS

RESOURCE AVAILABILITY

Lead contact

Further information and requests for resources should be directed to and will be fulfilled by the lead contact, Emma Alexander (ealexander@northwestern.edu).

Materials availability

This study did not generate new unique reagents.

Data and code availability

The video dataset and the zebrafish behavioral data have been deposited at Zenodo and are accessible via the URL in the key resources table.54 All original code is on GitHub and accessible via the URL in the key resources table.55 Any additional information required to reanalyze the data reported in this paper is available from the lead contact upon request.

KEY RESOURCES TABLE

| REAGENT or RESOURCE | SOURCE | IDENTIFIER |
|---|---|---|
| Deposited data | | |
| Custom Python code and information about field sites | This paper | https://doi.org/10.5281/zenodo.7120876 |
| Video dataset | This paper | https://doi.org/10.5281/zenodo.6604546 |
| Experimental models: Organisms/strains | | |
| Tg(elavl3:nls-GCaMP6s)mpn400 | Förster et al.78 | ZFIN ID: ZDB-ALT-170731-37 |
| Tg(elavl3:H2B-GCaMP6f)jf7 | Vladimirov et al.79 | ZDB-ALT-150916-4 |
| mitfa | Lister et al.80 | ZFIN ID: ZDB-GENE-990910-11 |
| Software and algorithms | | |
| Python 3.8 | python.org | RRID:SCR_008394 |
| Jupyter | jupyter.org | RRID:SCR_018315 |
| NumPy | numpy.org | RRID:SCR_008633 |
| SciPy | scipy.org | RRID:SCR_008058 |
| Pandas | pandas.pydata.org | RRID:SCR_018214 |
| h5py | h5py.org | https://doi.org/10.5281/zenodo.4584676 |
| OpenCV (MATLAB and Python versions) | opencv.org | RRID:SCR_015526 |
| FFmpeg | ffmpeg.org | RRID:SCR_016075 |
| MATLAB 2022a | MathWorks | RRID:SCR_001622 |

EXPERIMENTAL MODEL AND SUBJECT DETAILS

The behavioral experiments with zebrafish were approved by the government authorities (Regierungspräsidium Tübingen) and carried out in accordance with German federal and Baden-Württemberg state law. Eight animals aged between 5 and 7 days post fertilization were used in this experiment. These animals had different genotypes (e.g., broadly expressing a GCaMP calcium indicator using the transgenic lines Tg(elavl3:nls-GCaMP6s)mpn40078 or Tg(elavl3:H2B-GCaMP6f)jf779) that were likely heterozygous. The pigmentation ensured high visibility of the animal body against the background in the experiments (animals were likely heterozygous for mitfa80). The sex of zebrafish is only determined at the late larval stage, and genetic as well as environmental factors affect sex determination.81 Therefore, the larvae used in our experiments did not yet have an (identifiable) sex, and experiments were thus agnostic to sex.

METHOD DETAILS

Coordinate system

We adopt an East-North-Up, or ENU, geographic coordinate system to represent angular positions around the animal. In this system, all positions are defined relative to the animal’s head, and expressed as azimuth (horizontal angle, with positive values to the right of the animal), elevation (vertical angle, with positive values above the animal), and radius (or distance to the animal). The point directly in front of the animal (at the rostrum) is located at [0°, 0°] azimuth and elevation. Azimuth angles cover the range [−180°, 180°] and elevation angles [−90°, 90°]. Further details are described in Dehmelt et al.38
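For concreteness, the angular convention above can be mapped to unit viewing directions as in the following sketch. The (right, up, forward) axis ordering and the function names are assumptions made for illustration; only the sign conventions for azimuth and elevation are taken from the text.

```python
import math

def direction(azimuth_deg, elevation_deg):
    """Unit viewing direction as (right, up, forward) components.

    Azimuth is positive to the animal's right, elevation is positive
    upward, and (0, 0) points straight ahead.
    """
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return (math.cos(el) * math.sin(az),
            math.sin(el),
            math.cos(el) * math.cos(az))

def angles(right, up, forward):
    """Inverse mapping back to (azimuth, elevation) in degrees."""
    az = math.degrees(math.atan2(right, forward))
    el = math.degrees(math.atan2(up, math.hypot(right, forward)))
    return az, el
```

For example, `direction(90, 0)` points to the animal's right and `direction(0, -90)` points straight down.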

Estimating self-motion from optic flow

We denote the locations of physical points in the world relative to the eye as X,Y,Z, and we denote the radial distance of these points as r. At each angular location around the animal, with elevation α and azimuth θ in radians, the optic flow associated with a translation of (VX,VY,VZ) and a rotation of (ωX,ωY,ωZ) takes the form:

| (Equation 1) |

| (Equation 2) |

We refer to these as “ideal flows” because they will only occur when motion cues are dense and accurately sensed.

When examining contiguous local regions of the visual field, we consider instead a simplified pinhole camera model with focal length f and a planar sensor, so that world points are projected to pixel locations x = fX/Z, y = fY/Z (note we assume here an imaging plane in front of the pinhole, without loss of generality). In an ideal setting, optic flow on this plane takes the form:

| (Equation 3) |

| (Equation 4) |

These equations illustrate that the optic flow components in both systems can be modeled as weighted sums of optic flow templates, where the weights are translational and rotational components of self-motion and the templates are determined by a combination of location in the visual field, scene depth, and, in the tangent projection, focal length. If the depth of each point is known, we can render the library of six local optic-flow templates for each spatial region corresponding to the six components of self-motion (Figures 1B and 1C). We assume a unit focal length for these local projections, equivalent in the small angle regime to representing x and y in terms of visual angle rather than pixel location. In either setting, flattening the flow samples into a vector and stacking the flattened templates into a matrix A, we can consider the previous equations as

| $\mathbf{u} = A\,\mathbf{v}$ (Equation 5) |

where $\mathbf{u}$ is the flattened vector of flow samples and $\mathbf{v}$ is the 6-component self-motion velocity vector. Under ideal circumstances, the templates in A will be linearly independent, so that the velocity of self-motion can be recovered exactly by inverting the system. However, this recovery can degenerate in practice for a number of reasons, for example, when the measured optic flow is too sparse or impacted by other sources of motion. To model noise in optic flow available from natural environments, we initially assume additive Gaussian noise. For the noise model derived for the zebrafish habitat, we use a fitted generalized Gaussian additive noise model (see below).
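The template-based estimate can be sketched for the local pinhole setting as follows, assuming unit focal length and known depth at each sample. The template signs follow one common textbook convention (scene points moving opposite to the observer's translation and rotation) and may differ from the convention used in the equations above; the function names and the normal-equation solver are illustrative choices, not the released implementation.

```python
def flow_templates(x, y, z):
    """Rows of A for one point: six (dx/dt, dy/dt) templates, unit focal length.

    Column order: VX, VY, VZ, wX, wY, wZ. Signs follow one common
    convention and are an assumption here.
    """
    row_x = [-1 / z, 0.0, x / z, x * y, -(1 + x * x), y]
    row_y = [0.0, -1 / z, y / z, 1 + y * y, -x * y, -x]
    return row_x, row_y

def solve_least_squares(A, b):
    """Solve min ||A v - b|| via the normal equations and Gauss-Jordan."""
    n = len(A[0])
    # Augmented matrix [A^T A | A^T b].
    M = [[sum(r[i] * r[j] for r in A) for j in range(n)]
         + [sum(r[i] * bi for r, bi in zip(A, b))] for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(n):
            if r != col:
                factor = M[r][col] / M[col][col]
                M[r] = [a - factor * c for a, c in zip(M[r], M[col])]
    return [M[i][n] / M[i][i] for i in range(n)]

def estimate_self_motion(points, flows):
    """points: [(x, y, z)], flows: [(u, v)] -> six-component velocity estimate."""
    A, b = [], []
    for (x, y, z), (u, v) in zip(points, flows):
        row_x, row_y = flow_templates(x, y, z)
        A += [row_x, row_y]
        b += [u, v]
    return solve_least_squares(A, b)
```

With noise-free flow and enough well-spread samples, the estimate matches the true velocity up to numerical precision. Restricting the degrees of freedom amounts to keeping only the corresponding columns of A.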

To model sparsity in optic flow signals, we delete some percent of a signal’s samples at random. The samples must be removed from the calculation, rather than set to zero, because setting only the samples to zero indicates a signal of zero motion. While the sparsity of successfully computed optic flow samples provides a powerful metric for the quality of motion signals available in the environment, it is not clear how the early visual system of the zebrafish would detect and discard the samples we exclude. That is, we do not propose a specific model for how high contrast stationary signals are represented differently from low contrast, subthreshold signals with some motion. We simply assume that a sample with no motion signal is discarded.
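The difference between removing missing samples and zeroing them can be seen in a one-degree-of-freedom toy example. The sketch below is noise-free for clarity, and its sample count, template range, and random seed are arbitrary illustrative choices.

```python
import random

def estimate_speed(templates, flows):
    """1-DOF least squares: flows ~= templates * speed."""
    num = sum(a * f for a, f in zip(templates, flows))
    den = sum(a * a for a in templates)
    return num / den

rng = random.Random(1)
true_speed = 0.8
templates = [rng.uniform(0.2, 1.0) for _ in range(100)]
flows = [a * true_speed for a in templates]          # noise-free for clarity
keep = [rng.random() >= 0.67 for _ in templates]     # roughly 67% sparsity

# Option 1: drop missing samples (and their template entries) entirely.
removed = estimate_speed([a for a, k in zip(templates, keep) if k],
                         [f for f, k in zip(flows, keep) if k])

# Option 2: zero the missing flows but leave the templates untouched.
zeroed = estimate_speed(templates,
                        [f if k else 0.0 for f, k in zip(flows, keep)])

print(f"true={true_speed:.3f} removed={removed:.3f} zeroed={zeroed:.3f}")
```

Removal recovers the true speed, whereas zeroing the flows alone shrinks the estimate toward zero, because the missing samples are treated as evidence of no motion.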

This template-based approach for determining self-motion relies on some knowledge of scene geometry because the pattern of the translational templates depends on the distance of points from the animal. It is currently unknown how biological visual systems handle this ambiguity. We employ a plausible model of average scene geometry so that in our simulations the templates precisely match the simulated environment, but when using optic flow data from our video dataset directly the templates are only an approximate match.

General simulations: Global flow samples

To calculate the self-motion estimation errors shown in Figure 1 and Figure 2, we began by selecting a sparse, random sample of optic flow vectors from across the visual field. Sample locations were drawn uniformly at random in sets of 60 from a lattice of 20 azimuths and 9 elevations (that is, 33.3% of 180 possible locations, which we term 66.7% sparsity). For each random sample set, we then simulated the optic flow associated with random self-motion by selecting each self-motion component uniformly at random between −1 and 1 (in units of m/s for translations and rad/s for rotations). For each random self-motion trajectory, independent additive noise was applied to each flow vector, drawn from a Gaussian distribution with a standard deviation of 0.25 (relative to a maximal per-component flow magnitude of 1). We then calculated the median absolute error of the self-motion estimate for each of the 6 velocity components as well as for the heading direction in the horizontal plane. In the plotted heatmaps, we report the median of median absolute errors at each location, for 10,000 iterations containing 10,000 random trajectories each. This method provides an estimate of the performance associated with each spatial location, independent of which other locations are measured at the same time, without asserting a specific population decoder. Similar patterns of results are obtained for different noise levels and sample set sizes.
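The per-location scoring can be sketched in a reduced form as follows. This is a simplified one-degree-of-freedom illustration, not the full simulation: it estimates only forward speed, treats each flow sample as a scalar along its template direction on a unit sphere, and uses far fewer iterations than reported. The lattice spacing, function names, and the rule of crediting each iteration's error to every location in its sample set are assumptions.

```python
import math
import random
import statistics

AZIMUTHS = [-180 + 18 * i for i in range(20)]     # 20 azimuths, degrees
ELEVATIONS = [-80 + 20 * j for j in range(9)]     # 9 elevations, degrees
LATTICE = [(az, el) for az in AZIMUTHS for el in ELEVATIONS]  # 180 locations

def template_vz(az_deg, el_deg):
    """Flow magnitude per unit forward speed on a unit sphere (assumed form)."""
    az, el = math.radians(az_deg), math.radians(el_deg)
    cos_gamma = math.cos(el) * math.cos(az)       # angle from the forward axis
    return math.sqrt(max(0.0, 1.0 - cos_gamma ** 2))

def one_iteration(rng, n_samples=60, n_traj=200, noise_sd=0.25):
    """Median absolute speed error for one random set of sample locations."""
    locs = rng.sample(LATTICE, n_samples)
    a = [template_vz(az, el) for az, el in locs]
    denom = sum(t * t for t in a)
    errs = []
    for _ in range(n_traj):
        vz = rng.uniform(-1, 1)
        flow = [t * vz + rng.gauss(0, noise_sd) for t in a]
        vz_hat = sum(t * f for t, f in zip(a, flow)) / denom
        errs.append(abs(vz_hat - vz))
    return locs, statistics.median(errs)

def location_scores(n_iter=300, seed=0):
    """Median, per location, of the errors of all sets containing it."""
    rng = random.Random(seed)
    per_loc = {loc: [] for loc in LATTICE}
    for _ in range(n_iter):
        locs, err = one_iteration(rng)
        for loc in locs:
            per_loc[loc].append(err)
    return {loc: statistics.median(v) for loc, v in per_loc.items() if v}
```

Because every location appears in many different random sets, its score reflects its own contribution averaged over the company it keeps, which is the sense in which no specific population decoder is asserted.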

To summarize the effects of spatial biases and compare biased sampling to completely unbiased sampling strategies, we then defined three global sampling strategies, each using half of the possible samples (90 out of 180). In the “full-field” strategy, samples are spread evenly across the visual field (unbiased). In Figure 1, we compared this strategy to two biased strategies: concentrating the samples away from the VZ foci of expansion/contraction or concentrating these samples towards these foci. We simulated 1000 batches of 1000 random trajectories and plot histograms of the median absolute VZ estimation error across these batches for each strategy. We then fitted each distribution with a Gaussian and computed the effect size between sampling strategies (the difference between the means normalized by the pooled standard deviation). In Figure 2, this analysis was performed the same way, except the two biased strategies were determined by performing a median split on the errors and selecting the sample locations associated with the top half and the bottom half of the errors.
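The effect size described here can be computed as in the sketch below, which uses sample means and sample variances in place of an explicit Gaussian fit; that substitution and the function name are assumptions.

```python
import statistics

def effect_size(errors_a, errors_b):
    """Difference of means divided by the pooled standard deviation."""
    n_a, n_b = len(errors_a), len(errors_b)
    var_a = statistics.variance(errors_a)   # sample variance (n - 1)
    var_b = statistics.variance(errors_b)
    pooled_sd = (((n_a - 1) * var_a + (n_b - 1) * var_b)
                 / (n_a + n_b - 2)) ** 0.5
    return (statistics.mean(errors_a) - statistics.mean(errors_b)) / pooled_sd
```

A negative value indicates that the first strategy produced lower errors than the second, in units of their shared spread.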

Figure 1 shows results for 6DOF motion in a 1 m radius sphere. In Figure 2 this baseline condition from Figure 1 has been altered in several ways. In Figure 2A, all velocity components except VZ are set to zero and removed from the templates. This in fact implies two restrictions: the first is that some directions of self-motion are successfully removed from the locomotive process that generates the optic flow samples, and the second is that the animal is sufficiently successful in this restriction that they can remove irrelevant DOFs from their estimation process. That is, in our template-based framework, if rotational self-motion is absent but the system still solves for rotation components, performance will not improve. Estimation errors are caused by the combination of noise in the input data and sensitivity in the estimation method, and this sensitivity is determined by the characteristics of the template matrix (e.g., condition number, minimum mean square error). A softer version of this restriction might be achieved through a Bayesian process that uses a strong zero-centered prior on some motion directions. In this 1DOF reconstruction, the matrix of templates in Equation 5 above is 120×1 instead of 120×6 in the 6DOF case. In Figure 2B, all velocity components except VX, VZ, and ωY are similarly set to zero and a template matrix of size 120×3 is used. In Figure 2C, the same three degrees of freedom are included in the motion trajectories, but the standard deviation of the additive Gaussian noise varies with elevation. Rather than a uniform value of 0.25 across the visual field, it varies linearly in elevation: from zero at the north pole to 0.25 at the south pole. In Figure 2D, the 3DOF motion continues, but the geometry changes from a 1 m sphere (r = 1) to a floor with distant walls (r = 1/|sinα| for α < 0, r = ∞ for α ≥ 0). In addition to the VZ error, we also include errors in heading (tan⁻¹(VZ/VX)).
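The elevation-dependent noise level and the heading metric from this paragraph can be written compactly as below. The use of a quadrant-aware arctangent and the wrapping of heading differences to the smallest angle are illustrative choices, and the function names are hypothetical.

```python
import math

def noise_sd(elevation_deg, sd_south=0.25):
    """Noise SD rising linearly from 0 at +90 deg to sd_south at -90 deg."""
    return sd_south * (90.0 - elevation_deg) / 180.0

def heading_deg(vx, vz):
    """Heading in the horizontal plane, tan^-1(VZ / VX), in degrees."""
    return math.degrees(math.atan2(vz, vx))

def heading_error_deg(est, true):
    """Smallest absolute angular difference between two headings."""
    diff = (est - true + 180.0) % 360.0 - 180.0
    return abs(diff)
```

Wrapping matters near ±180°, where two nearly identical headings would otherwise appear to differ by almost a full turn.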

General simulations: Local flow samples

The self-motion estimates from localized optic flow were calculated in much the same way, with minor modifications. In each region, a number of samples were generated, noise was added, and the samples were then sparsified. For example, for a sparsification of 67%, a random number was drawn uniformly between 0 and 1 at each pixel location, and the flow at any pixel with a value below 0.67 was set to zero, with the corresponding template value also set to zero to prevent systematic underestimation of speeds. For Figure 1 and Figure 2, each region is 10°×10° and originally includes 100 flow vectors before additive Gaussian noise with standard deviation 0.25 and sparsification of 67% (corresponding to selecting 33.3% of the possible samples, as in the global flow simulation). We report the median absolute error associated with each local region and show full histograms of errors associated with the best, worst, and median locations. Similar patterns of results are obtained for different noise and sparsification levels.
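The sparsification step can be sketched as below. This is an illustrative version under stated assumptions: flow and template values are stored as flat lists of equal length, and the function name and the seeded random generator are hypothetical.

```python
import random


def sparsify(flow, template, fraction=0.67, rng=None):
    """Zero out a random fraction of flow samples and matching template values.

    A uniform random number in [0, 1) is drawn at each location. Where it
    falls below `fraction`, both the flow value and the template value are
    set to zero, so that the dropped samples do not bias the speed estimate
    towards zero.
    """
    rng = rng or random.Random()
    sparse_flow, sparse_template = [], []
    for f, t in zip(flow, template):
        if rng.random() < fraction:
            sparse_flow.append(0.0)
            sparse_template.append(0.0)
        else:
            sparse_flow.append(f)
            sparse_template.append(t)
    return sparse_flow, sparse_template


# Hypothetical 10 x 10 region flattened to 100 samples.
rng = random.Random(1)
flow = [1.0 + rng.gauss(0.0, 0.25) for _ in range(100)]
template = [1.0] * 100
sparse_flow, sparse_template = sparsify(flow, template, 0.67, rng)
```

Zeroing the template along with the flow matters: if only the flow were zeroed, the least-squares fit would treat the missing samples as evidence of zero motion.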

Natural optic flow from larval zebrafish habitat field recording sites

We conducted a survey of zebrafish habitats in the Indian state of Assam over a two-week period in October 2019. This geographic region was chosen for its history of zebrafish sampling52,82 and due to its varied geography within relatively short distances. Using Guwahati as a base, we took daily excursions to potential sampling locations using local knowledge and satellite imagery of water features as guides. All recordings were conducted in areas outside of protected lands. Videos were acquired from nine sites across central Assam. Data from two sites were excluded from the current analysis because no videos were captured with camera motion or the camera was unstable. Thus, seven different sites are used here (Table S1). These sites had a range of different qualities (e.g., still vs. flowing water; bottom substrate: silt, sand, rock; vegetated vs. non-vegetated; shaded vs. full sun). See supplemental video for brief clips from each site. Adult zebrafish were observed at all sites that were sampled. Other surface feeders were also present, most commonly Aplocheilus lineatus and Rasbora species. It is important to consider that changes in water turbidity occur throughout the year in Assam. Turbidity levels increase dramatically during the monsoon season that spans the summer months,83 which can influence underwater visual statistics. We did not experience precipitation during the period when recordings were obtained, although there was substantial rainfall in the preceding weeks.

Camera calibration

Cameras

Videos were recorded using a high-frame-rate omnidirectional camera positioned in the water using a custom boom rig attached to a programmable robot. Underwater WiFi control of the camera was achieved by affixing a coaxial cable (6 cm exposed ends) to the camera’s dive case. Two recording devices of the same make and model were used (Insta360 One X). These devices have two fisheye cameras placed back to back, each with a field of view of approximately 180°. The devices were housed in a waterproof dive case during video recording. All videos were recorded at 100 frames per second with a pixel resolution of 1504×1504 for each fisheye camera using the H.264 codec (MP4 file format). We used FFmpeg to extract 8-bit RGB frames from the compressed video and filtered the resulting frames with a Gaussian smoothing kernel (σ = 2 pixels) to reduce compression artifacts. During data acquisition, the cameras were set to a fixed ISO of 800 and a “cloudy” white balance profile. Exposure time for each video was chosen manually between 1/240 and 1/4000 seconds depending on the lighting conditions of each recording site. For each device, we performed a series of measurements to characterize the spatial distortion of the lenses, the spectral sensitivity of the color channels, the nonlinearity between pixel bit levels and world light intensity, and the spatial resolution (modulation transfer function). All calibration procedures were based on PNG frames recorded with the same settings used for in-field data collection, and with the camera housed within the dive case.

Spectral sensitivity

We characterized the sensitivity of each camera color channel as a function of the wavelength of incident light. First, we recorded a set of calibrated narrow-band light sources produced by a monochromator in steps of 10 nm from 380–790 nm. We modeled each light source as an impulse function and determined the sensitivity that best fit the camera’s pixel response at each wavelength. Sensitivity was highly similar across the two cameras for each device, so we averaged the sensitivities together. It is important that the imagery analyzed is well-matched to the spectral sensitivity of zebrafish. The zebrafish retina has four cone variants that bestow broad spectral sensitivity ranging from the UV to red (UV cone λmax ~ 365nm; blue cone λmax ~ 415nm; green cone λmax ~ 480nm; red cone λmax ~ 570nm).84,85 As such, we conducted our analyses on the camera’s central (green; G) channel, which spans red cone spectral sensitivity and the upper half of green cone sensitivity (Figure S7A). Red and green cones are known to dominate zebrafish motion vision.86

Spatial calibration

The fisheye lenses introduce substantial spatial distortions across the video frames. We characterized the spatial distortion for each camera using a standard fisheye camera model defined by a set of 10 intrinsic parameters that describe the distortion magnitude at each pixel in terms of the distance and direction from a distortion center.87 Parameters for each camera and each color channel were estimated using the Computer Vision System Toolbox in MATLAB, by finding the set of parameters that minimized the error between the measured and expected images of a set of calibration points (~1700 points on average were used for each camera). The fitted parameters for each calibration are provided in Table S3 along with the average reprojection error in pixels.

Response nonlinearity

Pixel values do not necessarily increase linearly with increases in radiometric power within the sensitivity range. To characterize these response nonlinearities, we again recorded a set of calibration images, this time captured from a broadband light source at 50 different calibrated intensity levels. This range was selected to encompass the full range and ceiling of the sensitivity at the chosen exposure duration. To confirm the floor of sensitivity, we also measured the dark response (the average pixel value returned when all illumination is absent). Using the spectral sensitivity profile described in the previous section, we created a look up table to convert the G pixel bit values (0–255) into linear light intensity within the spectral envelope of the channel. The linear bit values were used for the FS method of optic flow measurement (see below).
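One way to build such a look up table is sketched below. It assumes that the calibration yields pairs of mean pixel value and known relative intensity, that pixel values increase monotonically with intensity, and that intermediate bit values are filled in by linear interpolation. The interpolation scheme, the function name, and the calibration values are assumptions for illustration only.

```python
def build_linearization_lut(pixel_values, intensities):
    """Return a 256-entry table mapping 8-bit pixel values to intensity.

    pixel_values: mean pixel values measured at each calibrated intensity,
        in increasing order (the first entry acts as the dark response).
    intensities: the corresponding calibrated linear intensities.
    Bit values outside the measured range are clamped to the end points.
    """
    lut = []
    for bit in range(256):
        if bit <= pixel_values[0]:
            lut.append(intensities[0])
        elif bit >= pixel_values[-1]:
            lut.append(intensities[-1])
        else:
            # Find the bracketing calibration points and interpolate.
            for i in range(1, len(pixel_values)):
                if bit <= pixel_values[i]:
                    x0, x1 = pixel_values[i - 1], pixel_values[i]
                    y0, y1 = intensities[i - 1], intensities[i]
                    lut.append(y0 + (y1 - y0) * (bit - x0) / (x1 - x0))
                    break
    return lut


# Hypothetical calibration: a compressive response with three points.
lut = build_linearization_lut([0, 100, 255], [0.0, 0.2, 1.0])
linear_value = lut[50]  # convert one G pixel value to linear intensity
```

Precomputing the table makes the conversion a single indexing operation per pixel, which is convenient when linearizing many frames.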

Spatial resolution

We also characterized the quality of the camera’s optics within the range of spatial frequencies pertinent to zebrafish motion perception. Using an approach adapted from the Siemens STAR Method,88 we imaged a calibration target with radially increasing bands (a pinwheel) at a range of locations in each camera’s field of view. We used the fitted camera distortion model to correct distortion of the pinwheel images and applied the linearization look up table. We then computed the Michelson contrast as a function of distance from the pinwheel center and converted this distance into spatial frequency in cycles/degree (cpd) based on the camera pixel resolution. Initially, we found that the two cameras in each device exhibited slightly different transfer functions, with one camera always having higher resolution. To achieve consistent resolution in the spatial frequency range of interest, we increased the blur kernel applied to the higher resolution camera (σ = 3 pixels). This resulted in the sensitivities plotted in Figure S7B.
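The two quantities in this measurement can be written out in a short sketch. It assumes that contrast is computed from the linearized intensities sampled along a ring at a fixed radius around the pinwheel center, and that the local spatial frequency of a pinwheel with a known number of cycles is the number of cycles divided by the ring circumference. The function names, the number of cycles, and the pixels-per-degree value are hypothetical.

```python
import math


def michelson_contrast(intensities):
    """Michelson contrast: (Imax - Imin) / (Imax + Imin)."""
    i_max, i_min = max(intensities), min(intensities)
    return (i_max - i_min) / (i_max + i_min)


def pinwheel_cpd(n_cycles, radius_px, px_per_deg):
    """Spatial frequency (cycles/degree) of a pinwheel at a given radius.

    A ring of radius r pixels has circumference 2 * pi * r pixels and
    contains n_cycles light/dark cycles, so the frequency is
    n_cycles / (2 * pi * r) cycles per pixel, scaled by pixels per degree.
    """
    cycles_per_px = n_cycles / (2.0 * math.pi * radius_px)
    return cycles_per_px * px_per_deg


# Hypothetical ring of linearized intensities sampled around the center.
ring = [0.2, 0.5, 0.8, 0.5, 0.2, 0.5, 0.8, 0.5]
contrast = michelson_contrast(ring)
frequency = pinwheel_cpd(n_cycles=36, radius_px=50.0, px_per_deg=8.0)
```

Because spatial frequency falls with radius, a single pinwheel image samples the transfer function over a range of frequencies.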

Camera motion control

Camera trajectories were controlled using a custom robotic system built for the project. The system is supported by three expandable carbon fiber tripod legs (DragonPlate, New York) that screw into an aluminum base. An XYZ motorized gantry system (MOOG Animatics, Mountain View, California) is secured to the top of the base using a leveling screw apparatus (gantry system travel: 300 mm in X & Y, 150 mm in Z). An expandable horizontally oriented carbon fiber pole extends from the Z-axis gantry with a rotary motor affixed to the pole’s far end (Zaber Technologies, Vancouver, RSW60C-T3-MC03). The dive case for the Insta360 One X camera screws into the end of a vertically oriented expandable carbon fiber pole whose opposite end screws into the rotary motor. A variety of custom aluminum fittings for the robotic system were designed and machined by Micrometric (Toronto). The system is controlled by a Getac V200 (New Taipei City, Taiwan) rugged laptop running SMNC (geometric code software; MOOG Animatics) for gantry control and Zaber Console (Zaber Technologies) for rotary motor control. A CLICK programmable logic controller (AutomationDirect) triggered by the gantry system coordinates the timing of camera rotations relative to XYZ motion. All components of the system are powered by a small portable generator (iGen1200, Westinghouse).

We used camera trajectories that can roughly be interpreted as motion of a zebrafish relative to the visual surround (Table S2). Camera trajectories were in the horizontal plane, which is also the plane that zebrafish mostly use during swimming and presumably also mostly occupy during passive drifts. Pure rotation and pure translation were included, along with combined rotation and translation. However, these camera trajectories did not faithfully recapitulate swimming behavior: the camera is much larger (approximately 4.5 cm wide) than a larva (4 mm) and only certain speeds were accessible in the field, so scene geometry and speed magnitudes do not correspond well to zebrafish behavior. In particular, the recorded camera turns were much slower than the turns of a larva. Therefore, our camera trajectories only correspond to some aspects of the expected self-motion patterns. Another question is whether the visual system is adapted to the motion statistics of passive drifts or of locomotion. Passive drifts are often much slower than locomotion at the beginning of a bout. Passive drifts are furthermore known to evoke optomotor responses, and such responses are suppressed during an ongoing swim bout. Therefore, it seems likely that the visual system uses information from the slower periods of self-motion (passive drift, and glides after bout initiation) to estimate ego-motion. However, further investigation is needed to understand the adaptation of the visual system to the precise locomotor kinematics of zebrafish larvae, which are also known to include frequent pitch bout/swimming in the water column.67,69

Measurement of optic flow, noise, sparsity

Data selection

We analyzed a subset of collected video data. For the selected trajectories, described in Table S2, we discarded data beyond four seconds (400 frames) of video from each site, so that each velocity is represented roughly equally in our analysis. This duration was selected because at least five seconds of video were recorded for each velocity (with the exception of circular arcs, which were slightly shorter). We omitted 500 ms from the beginning and end of each trajectory to account for acceleration artifacts. Optic flow measurements that would include a key frame were skipped, leaving over 90% of the frames for each trajectory. Some trajectories were unavailable in the collected data: the 50°/s clockwise rotation at Site 2 was missing, so the 50°/s counterclockwise rotation video at that site was used instead, reversed in time. An analysis of the optic flow for the 20°/s rotation on October 17 suggested that the camera rotational control malfunctioned, so we excluded this sample from the noise model. Circular arcs were only collected at Sites 3, 4, and 7.
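The frame arithmetic implied by this selection can be sketched as follows. This is an illustration under stated assumptions: trimming is applied symmetrically before the four-second cap, the frame rate is 100 frames per second as stated for the recordings, and the function name is hypothetical. Key-frame exclusion is not modeled.

```python
def select_frames(n_frames, fps=100, trim_s=0.5, keep_s=4.0):
    """Return the range of frame indices retained for one trajectory.

    Drops `trim_s` seconds at the start and end (acceleration artifacts),
    then keeps at most `keep_s` seconds of the remaining video.
    """
    trim = round(trim_s * fps)
    keep = round(keep_s * fps)
    start = trim
    stop = min(n_frames - trim, start + keep)
    # Guard against clips too short to survive trimming.
    return range(start, max(start, stop))


# A five-second clip at 100 frames per second keeps frames 50 to 449.
frames = select_frames(500)

# A slightly shorter clip (e.g., a circular arc) keeps fewer frames.
arc_frames = select_frames(450)
```

With these defaults, a five-second recording yields exactly 400 frames, which matches the four-second limit described above.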

Optic flow algorithms

Based on the spatial calibration of the cameras, local patches were sampled from the green channel of the video frames and undistorted to a local tangent projection. Patches were 40×40 pixels and covered 40°×40° in visual angle. Two optic flow calculation pipelines were used.