Abstract

Background

Owing to the prevalence of coronavirus disease (COVID-19), coping with clinical issues at the individual level has become important to the healthcare system. Accordingly, precise initiation of treatment after a hospital visit is required to expedite processes and enable effective diagnosis of outpatients. To achieve this, artificial intelligence in medical natural language processing (NLP), such as healthcare chatbots or clinical decision support systems, can serve as suitable tools for an advanced clinical system. In particular, support for deciding on the medical specialty at the initial visit can be helpful.

Materials and methods

In this study, we propose a model that predicts the required medical specialty from patient-side medical question text based on pre-trained bidirectional encoder representations from transformers (BERT). The dataset comprised pairs of medical question texts and labeled specialties scraped from a medical question-and-answer service website. The model was fine-tuned to predict the required medical specialty among 27 labels from medical question texts. To demonstrate its feasibility, we conducted experiments on a real-world dataset and thoroughly evaluated its predictive performance against four deep learning NLP models through cross-validation and test set evaluation.

Results

The proposed model outperformed the competing models across the overall set of specialties. In addition, we demonstrated the usefulness of the proposed model through case studies of visualization applications.

Conclusion

The proposed model can benefit hospital patient management and provide reasonable specialty recommendations for patients.

Keywords: Bidirectional encoder representations from transformers, Deep learning, Medical specialty prediction, Medical question-and-answer post, Natural language processing

1. Introduction

The COVID-19 pandemic has disrupted medical care systems worldwide by affecting trends in hospital admissions [1], [2]. This has increased the need for patient management at the initial visit and for precise clinical diagnosis [3]. However, discrepancies between symptoms and the selected medical department frequently occur in the clinical process, resulting in various costs [4]. To reduce these unnecessary costs, patients' symptoms should be thoroughly considered when selecting an appropriate medical specialty.

The rapid progress of machine learning and deep learning has enabled the development of various implementations of artificial intelligence (AI) in the real world [5]. In terms of text data analysis, natural language processing (NLP) has provided word embeddings for numerical representations of text [6] and has been developed for various applications in medicine [7]. Accordingly, studies on medical AI dealing with text data have been proposed for clinical support applications, such as healthcare chatbots [8], [9], automated clinical diagnosis [10], and text-based report recognition [11].

In recent deep-learning-based NLP, bidirectional encoder representations from transformers (BERT) [12] has been considered a state-of-the-art model. By employing a self-attention mechanism and transfer learning, BERT outperformed contemporary models on several language-understanding benchmarks for NLP downstream tasks. Although several post-BERT models [13], [14] have been developed, BERT is still widely used in various fields [15], [16].

Medical specialty prediction and recommendation methods have been studied in several ways. Habib et al. proposed a medical recommendation method using text generation and evaluated it on a dataset covering five specialties [20]. Weng et al. developed a medical subdomain classification model for clinical notes based on feature-based machine learning as well as convolutional neural networks (CNN) [21]. Lee et al. presented a medical specialty recommendation method using sentences for a conversational AI chatbot [19]. These studies addressed specialty prediction as an NLP problem, but they considered only a subset of specialties, used hospital text data, or performed sentence-level analysis. Therefore, we focus on medical specialty prediction using text data scraped from a medical question-and-answer portal website.

This study proposes a medical specialty prediction model based on a domain-specific pre-trained BERT. To demonstrate its validity, we evaluated its predictive performance and compared it with that of four deep learning NLP models. The proposed model showed the best overall performance and strong performance for individual medical specialties.

2. Materials and methods

2.1. Dataset

In this study, we used healthcare counseling posts from the NAVER portal, which provides a medical question-and-answer service to general users in Korea. Each post consists of a medical question, a medical specialty label, and an answer from a doctor in the labeled specialty. We extracted the questions and their corresponding specialties through web crawling. Medical questions generally include explanations of symptoms and requests for medical guidance to obtain relevant solutions. The questions are written in colloquial rather than formal written expressions. To preserve the properties of the patient-side text and avoid contrived manipulation, we applied minimal preprocessing to remove tags and figures from the text.

2.2. Bidirectional encoder representations from transformers

BERT is a deep learning model that has shown remarkable performance in various natural language processing tasks compared with contemporary models. BERT was evaluated on the General Language Understanding Evaluation (GLUE) benchmark, the Stanford Question Answering Dataset (SQuAD), Situations With Adversarial Generations (SWAG), and CoNLL-2003 named entity recognition (NER). BERT employs transfer learning to improve language understanding: the model is first pre-trained on a large-scale unlabeled corpus and then fine-tuned for a specific downstream task. In fine-tuning, the last layer is replaced with a fully-connected layer appropriate for the target task.

BERT is composed of the encoders of the transformer model, which employs stacked self-attention layers [17]. The attention layer applies a scaled dot product followed by a softmax to its inputs as follows:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V$$

where $Q$ denotes the queries, $K$ the keys, $V$ the values, and $d_k$ the dimension of the keys. Multi-head attention computes $h$ separate attention heads in parallel and merges them as follows:

$$\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)\,W^{O}$$

where $W^{O}$ is the fully-connected layer. In the self-attention layer of BERT, each head is calculated as follows:

$$\mathrm{head}_i = \mathrm{Attention}\left(XW_i^{Q}, XW_i^{K}, XW_i^{V}\right)$$

where $X$ denotes the output of the previous layer and $W_i^{Q}$, $W_i^{K}$, and $W_i^{V}$ are the projection matrices of the $i$-th head. In the first encoder layer, $X$ is a tensor derived from the embedded inputs with positional encoding. The inputs consist of tokens produced by a tokenizer that mitigates the out-of-vocabulary problem by splitting words into smaller subword pieces. The first element of every input is the special classification token '[CLS]'.
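To make the three attention equations concrete, the following is a minimal pure-Python sketch of scaled dot-product attention and multi-head self-attention. It is illustrative only: the toy sizes (4 tokens, model width 8, 2 heads) and the random, untrained weights are assumptions and do not reflect the configuration of KM-BERT or any other model evaluated here.

```python
import math
import random
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x m) matrix by an (m x p) matrix."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def softmax_rows(a: Matrix) -> Matrix:
    """Row-wise softmax, shifted by the row maximum for numerical stability."""
    out = []
    for row in a:
        m = max(row)
        exps = [math.exp(x - m) for x in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(k[0])
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, transpose(k))]
    return matmul(softmax_rows(scores), v)


def multi_head_self_attention(x: Matrix, w_q, w_k, w_v, w_o: Matrix) -> Matrix:
    """head_i = Attention(X W_i^Q, X W_i^K, X W_i^V); heads are concatenated, then projected by W^O."""
    heads = [attention(matmul(x, wq), matmul(x, wk), matmul(x, wv))
             for wq, wk, wv in zip(w_q, w_k, w_v)]
    # Concatenate the per-token outputs of all heads along the feature axis.
    concat = [[value for head in heads for value in head[t]] for t in range(len(x))]
    return matmul(concat, w_o)


# Toy example with random (untrained) weights.
rng = random.Random(0)


def rand_matrix(rows: int, cols: int) -> Matrix:
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


n_tokens, d_model, n_heads = 4, 8, 2
d_head = d_model // n_heads
x = rand_matrix(n_tokens, d_model)
w_q = [rand_matrix(d_model, d_head) for _ in range(n_heads)]
w_k = [rand_matrix(d_model, d_head) for _ in range(n_heads)]
w_v = [rand_matrix(d_model, d_head) for _ in range(n_heads)]
w_o = rand_matrix(n_heads * d_head, d_model)
out = multi_head_self_attention(x, w_q, w_k, w_v, w_o)
print(len(out), len(out[0]))  # 4 8
```

The output keeps one vector of the model width per token, which is what allows encoder layers to be stacked; production implementations perform the same computation with batched tensor operations.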

2.3. Pre-training of BERT

Before BERT is applied to various NLP tasks, the model is pre-trained on an unlabeled corpus. At this stage, the model performs two self-supervised prediction tasks whose targets are derived from the unlabeled corpus itself: the masked language model (MLM) and next-sentence prediction (NSP), through which it learns the meaning of words and the relations between sentences. Both tasks share the same input, which is constructed from a pair of sentences with part of the tokens concealed. In MLM, the model is trained to predict the original identity of each masked token over the tokenizer's vocabulary using a categorical cross-entropy loss. Simultaneously, in NSP, the model is trained with a binary cross-entropy loss to classify whether the second sentence of the input follows the first. The model weights are then updated to minimize the sum of both losses.
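The pre-training objective can be sketched as follows. This is a simplified illustration, not the KM-BERT training code: the 15% selection rate and the 80/10/10 replacement rule follow the original BERT recipe and are assumed here, and the vocabulary layout (special token ids 0 to 4) and the `-100` "ignore" label are hypothetical conventions chosen for the example.

```python
import math
import random

# Hypothetical vocabulary layout: ids 0-4 are special tokens, the rest are subword pieces.
PAD_ID, UNK_ID, CLS_ID, SEP_ID, MASK_ID = 0, 1, 2, 3, 4
NUM_SPECIAL = 5
IGNORE = -100  # label for positions that are not predicted


def mask_tokens(token_ids, vocab_size, rng, mask_prob=0.15):
    """BERT-style masking: select ~15% of non-special tokens; of those, 80% -> [MASK],
    10% -> a random token, 10% -> unchanged. Returns (inputs, labels)."""
    inputs = list(token_ids)
    labels = [IGNORE] * len(token_ids)
    for i, tok in enumerate(token_ids):
        if tok < NUM_SPECIAL or rng.random() >= mask_prob:
            continue
        labels[i] = tok  # MLM target: the original token id
        r = rng.random()
        if r < 0.8:
            inputs[i] = MASK_ID
        elif r < 0.9:
            inputs[i] = rng.randrange(NUM_SPECIAL, vocab_size)
        # otherwise the token is left unchanged but is still predicted
    return inputs, labels


def cross_entropy(logits, target):
    """Categorical cross-entropy of one logit vector against an integer target."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def pretraining_loss(mlm_logits, labels, nsp_logit, is_next):
    """Sum of the mean MLM loss over masked positions and the binary NSP loss."""
    terms = [cross_entropy(z, y) for z, y in zip(mlm_logits, labels) if y != IGNORE]
    mlm_loss = sum(terms) / len(terms) if terms else 0.0
    p_next = 1.0 / (1.0 + math.exp(-nsp_logit))  # predicted probability that sentence B follows A
    nsp_loss = -math.log(p_next) if is_next else -math.log(1.0 - p_next)
    return mlm_loss + nsp_loss


# Usage: a sentence pair "[CLS] A [SEP] B [SEP]" with made-up token ids.
pair = [CLS_ID] + list(range(10, 40)) + [SEP_ID] + list(range(40, 60)) + [SEP_ID]
masked_inputs, mlm_labels = mask_tokens(pair, vocab_size=1000, rng=random.Random(42))
```

Keeping unselected positions at the ignore label means the MLM loss is computed only over the concealed tokens, while the NSP term is computed once per sentence pair.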

2.4. Predicting the required medical specialty based on a pre-trained BERT

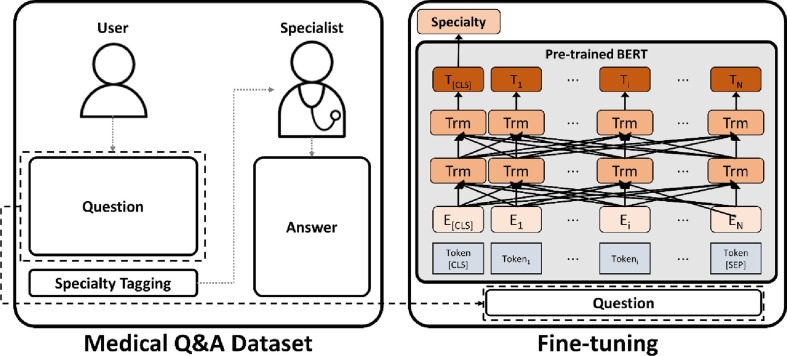

We used a pre-trained BERT and fine-tuned it to predict the required medical specialty from colloquial narrative symptom text, as shown in Fig. 1. We used the entire question text as input so that multiple sentences were considered. If the number of tokens exceeded the maximum input length of the model, the input was truncated to the maximum length, keeping the tokens from the front of the text.
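A minimal sketch of this input construction is shown below. It assumes that the 128-token limit reported in Section 3.3 includes the '[CLS]' and '[SEP]' tokens, that the tail of an overlong question is dropped, and that shorter inputs are padded with '[PAD]'; the word pieces themselves would come from the model's own tokenizer, which is not reproduced here.

```python
from typing import List, Tuple

MAX_LEN = 128  # maximum input length reported in Section 3.3


def build_input(wordpieces: List[str], max_len: int = MAX_LEN) -> Tuple[List[str], List[int]]:
    """Return (tokens, attention_mask) for a single question text.

    Assumption: [CLS] and [SEP] count toward max_len, and overlong text keeps its
    leading word pieces.
    """
    body = wordpieces[: max_len - 2]  # keep the leading tokens; drop the tail
    tokens = ["[CLS]"] + body + ["[SEP]"]
    attention_mask = [1] * len(tokens)  # 1 = real token, 0 = padding
    padding = max_len - len(tokens)
    return tokens + ["[PAD]"] * padding, attention_mask + [0] * padding


# Usage with placeholder word pieces standing in for a long question.
question = [f"piece{i}" for i in range(300)]
tokens, mask = build_input(question)
```

Because the whole question is fed as one sequence, several sentences can share the same 128-token window; only text beyond that window is lost.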

Fig. 1.

A framework for medical specialty prediction from question text using a pre-trained BERT.

In this work, we use a pre-trained Korean Medical BERT (KM-BERT) model to handle the domain-specific and language-specific dataset. KM-BERT was pre-trained on a Korean medical corpus with a bidirectional WordPiece tokenizer for Korean [18]. In fine-tuning, the last layer of the pre-trained model used for MLM and NSP is replaced with a fully-connected layer. This layer takes only the output tensor of the first token ('[CLS]') as input, and its output size equals the number of specialties to be predicted. The model is then fine-tuned to predict a single specialty using softmax activation in the last layer.
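The classification head described above can be sketched as a single fully-connected layer with softmax applied to the '[CLS]' vector. The hidden size of 768 (a BERT-base-sized encoder) is an assumption for illustration, and the random weights below are untrained placeholders; only the number of outputs (27 specialties) comes from the text.

```python
import math
import random
from typing import List

NUM_SPECIALTIES = 27
HIDDEN_SIZE = 768  # assumption: a BERT-base-sized encoder


def predict_specialty(hidden_states: List[List[float]], weight: List[List[float]],
                      bias: List[float]) -> List[float]:
    """Fully-connected layer plus softmax applied to the first ([CLS]) token only.

    hidden_states: one vector per token from the last encoder layer.
    weight: HIDDEN_SIZE x NUM_SPECIALTIES, bias: NUM_SPECIALTIES.
    """
    cls_vector = hidden_states[0]  # all other token vectors are ignored
    logits = [sum(h * weight[d][j] for d, h in enumerate(cls_vector)) + bias[j]
              for j in range(len(bias))]
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_labels(probs: List[float], k: int = 3) -> List[int]:
    """Indices of the k most probable specialties, highest first."""
    return sorted(range(len(probs)), key=lambda j: probs[j], reverse=True)[:k]


# Usage with random, untrained values for a 5-token input.
rng = random.Random(0)
hidden = [[rng.gauss(0.0, 1.0) for _ in range(HIDDEN_SIZE)] for _ in range(5)]
w = [[rng.gauss(0.0, 0.02) for _ in range(NUM_SPECIALTIES)] for _ in range(HIDDEN_SIZE)]
b = [0.0] * NUM_SPECIALTIES
probs = predict_specialty(hidden, w, b)
print(top_k_labels(probs))
```

Ranking the softmax output, as `top_k_labels` does, is also what yields the top-k candidate specialties used in the evaluation.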

3. Results

3.1. Data collection

We retrospectively collected pairs of medical question texts and specialties by scraping text from a website that provides an answering service for medical questions posted by users. Users can upload a medical question whenever they need to. Only medical experts certified by the website can review the uploaded questions and answer them with a text reply. The experts are medical professionals such as physicians and dentists, and the website provides the medical specialty of each expert.

We considered 27 specialty labels in this experiment, including anesthesiology and urology. Table 1 lists the number of questions that were collected for each specialty. We divided the dataset into training and test sets according to the collection date. The training set consisted of 50,454 questions and specialty pairs uploaded from 13/7/2020 to 13/7/2021. The test set comprised 31,858 pairs uploaded from 13/7/2021 to 13/9/2021. There was no overlap between the training and test sets.
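The date-based split can be sketched as follows. Because the text gives 13/7/2021 as both the end of the training period and the start of the test period while stating that the sets do not overlap, this sketch assumes that posts uploaded on that day belong to the test set; the tuple layout of a post is likewise a hypothetical choice for illustration.

```python
from datetime import date
from typing import Iterable, List, Tuple

Post = Tuple[date, str, str]  # (upload date, question text, specialty label)

TRAIN_START = date(2020, 7, 13)
SPLIT_DATE = date(2021, 7, 13)  # assumption: posts from this day onward go to the test set
TEST_END = date(2021, 9, 13)


def split_by_upload_date(posts: Iterable[Post]) -> Tuple[List[Post], List[Post]]:
    """Chronological split: each post falls into at most one set."""
    train, test = [], []
    for post in posts:
        uploaded = post[0]
        if TRAIN_START <= uploaded < SPLIT_DATE:
            train.append(post)
        elif SPLIT_DATE <= uploaded <= TEST_END:
            test.append(post)
        # posts outside both periods are ignored
    return train, test
```

Splitting by time rather than at random means the test set mimics questions arriving after the model was trained, which is also why its label distribution can differ from that of the training set.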

Table 1.

The number of collected medical consultation question texts for 27 medical specialty labels.

| Specialty | Training set (N) | Test set (N) |
|---|---|---|
| Anesthesiology | 1,980 | 1,980 |
| Cardiac and Thoracic Surgery | 636 | 46 |
| Cardiology | 1,090 | 184 |
| Dentistry | 1,980 | 1,980 |
| Dermatology | 1,980 | 1,980 |
| Emergency Medicine | 764 | 591 |
| Endocrinology | 718 | 169 |
| Family Medicine | 1,980 | 1,980 |
| Gastroenterology and Hepatology | 1,980 | 306 |
| General Surgery | 3,960 | 3,268 |
| Hematology and Oncology | 2,838 | 532 |
| Infectious Diseases | 716 | 146 |
| Nephrology | 481 | 67 |
| Neurology | 1,980 | 558 |
| Neurosurgery | 1,980 | 1,980 |
| Obstetrics and Gynecology | 5,537 | 2,644 |
| Ophthalmology | 1,980 | 1,980 |
| Orthopedic Surgery | 1,980 | 1,980 |
| Otolaryngology | 1,980 | 1,980 |
| Pediatrics | 1,979 | 389 |
| Plastic Surgery | 1,980 | 1,980 |
| Psychiatry | 1,980 | 500 |
| Pulmonology | 457 | 43 |
| Radiology | 1,980 | 422 |
| Rehabilitation Medicine | 1,980 | 1,980 |
| Rheumatology | 1,578 | 213 |
| Urology | 1,980 | 1,980 |

3.2. Experiment

To demonstrate the validity of the proposed model, we investigated its performance for medical specialty prediction on the dataset. We evaluated the performance in three ways: (1) validation on the training set, (2) evaluation on the test set, and (3) evaluation for each specialty. In the first evaluation, we performed 5-fold cross-validation to obtain an unbiased performance estimate on the training set, randomly splitting the training set into training and validation folds. The second evaluation used the split of the entire dataset according to the upload date. Because of its relatively shorter collection period, the test set had a more skewed distribution of specialty labels than the training set. Finally, we estimated the predictive performance of the models for each specialty.
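A minimal sketch of the random 5-fold partition is given below. The text does not state whether the folds were stratified by specialty or which random seed was used, so this sketch uses a plain shuffled split with an arbitrary seed; both are assumptions.

```python
import random
from typing import Iterator, List, Tuple


def k_fold_splits(n_samples: int, k: int = 5,
                  seed: int = 0) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_indices, validation_indices) for each of k folds.

    Indices are shuffled once; every sample lands in exactly one validation fold.
    """
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]  # fold sizes differ by at most one
    for i in range(k):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, folds[i]


# Usage: the 50,454 training pairs of Table 1.
for fold, (train_idx, val_idx) in enumerate(k_fold_splits(50454)):
    print(fold, len(train_idx), len(val_idx))
```

Each model is then trained on `train_idx` and scored on `val_idx` once per fold, and the five scores are averaged.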

In this experiment, we compared the proposed model with four competitive deep-learning-based NLP models for medical specialty prediction: KoRean-BERT (KR-BERT) [18], multilingual BERT (M-BERT) [19], a CNN model, and a bidirectional long short-term memory (LSTM) model. KR-BERT is a language-specific BERT model pre-trained on a Korean corpus. M-BERT is a model pre-trained on Wikipedia text in 104 languages. The last two are conventional deep learning models included for comparison.

In the evaluation process, we assessed each model's prediction of the specialty from the question text. To assess each model precisely, we considered the average top-k accuracy, precision, recall, and F1 score as performance measures. Top-k accuracy treats a prediction as correct when the labeled specialty is among the k specialties with the highest softmax outputs of the model. In other words, for an integer k greater than one, the performance reflects whether multiple specialty candidates contain the labeled answer, and top-1 accuracy corresponds to the conventional classification accuracy. In addition, we evaluated the precision, recall, and F1 score of the models' predictions. To capture the comprehensive performance over the targeted specialties, we used macro-averaged measures:

$$\mathrm{Precision}_{\mathrm{macro}} = \frac{1}{N}\sum_{i=1}^{N}\frac{TP_i}{TP_i + FP_i}, \qquad \mathrm{Recall}_{\mathrm{macro}} = \frac{1}{N}\sum_{i=1}^{N}\frac{TP_i}{TP_i + FN_i}, \qquad F1_{\mathrm{macro}} = \frac{1}{N}\sum_{i=1}^{N}\frac{2\,P_i R_i}{P_i + R_i}$$

where $i$ denotes a specialty label; $TP_i$, $FP_i$, and $FN_i$ are the true positives, false positives, and false negatives for specialty $i$; $P_i$ and $R_i$ are its precision and recall; and $N$ is the number of specialties, which is 27 in this experiment. We employed macro-averaging because the predictive performance for minority specialties was also considered essential.
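The metrics can be sketched as follows. This is an illustrative implementation rather than the evaluation code used for the tables: top-k accuracy ranks each softmax vector, and the macro scores average per-specialty values. Omitting specialties with zero predicted positives from the precision and F1 averages is our reading of the note on the starred entries in Tables 2 and 3, so treat that detail as an assumption.

```python
from typing import List, Optional, Sequence, Tuple


def top_k_accuracy(prob_rows: Sequence[Sequence[float]], labels: Sequence[int], k: int) -> float:
    """Fraction of samples whose label is among the k highest-probability classes."""
    hits = 0
    for probs, label in zip(prob_rows, labels):
        top_k = sorted(range(len(probs)), key=lambda j: probs[j], reverse=True)[:k]
        hits += label in top_k
    return hits / len(labels)


def _mean(values: List[float]) -> float:
    return sum(values) / len(values) if values else 0.0


def macro_scores(y_true: Sequence[int], y_pred: Sequence[int],
                 num_labels: int) -> Tuple[float, float, float]:
    """Macro-averaged precision, recall, and F1 (mean of per-class scores).

    Assumption: a class with zero predicted positives has undefined precision and is
    omitted from the precision and F1 averages (as for the starred table entries).
    """
    precisions, recalls, f1s = [], [], []
    for c in range(num_labels):
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        predicted = sum(1 for p in y_pred if p == c)
        actual = sum(1 for t in y_true if t == c)
        recall: Optional[float] = tp / actual if actual else None
        if recall is not None:
            recalls.append(recall)
        if predicted == 0:
            continue  # undefined precision: skipped
        precision = tp / predicted
        precisions.append(precision)
        if recall is not None:
            f1s.append(2 * precision * recall / (precision + recall) if precision + recall else 0.0)
    return _mean(precisions), _mean(recalls), _mean(f1s)
```

Because the F1 score is averaged per specialty rather than computed from the macro precision and recall, the macro F1 can be lower than both, as seen for several models in Tables 2 and 3.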

3.3. Experiment results

This section reports the results of the three types of evaluation for medical specialty prediction. For fine-tuning the BERT-based models, we used 2, 3, 4, and 5 training epochs; learning rates of 2e-5, 3e-5, and 5e-5; and batch sizes of 16 and 32 as hyperparameters. For the conventional deep learning models, learning rates of 2e-3, 3e-3, and 5e-3 were used. The maximum length of the input text was 128 tokens.

Table 2 summarizes the 5-fold cross-validation results on the training set. For this evaluation, each model was trained five times, once for each fold, and each trained model was evaluated on the corresponding validation set. Table 2 lists the performance averaged over the five folds. Each reported value was measured with the combination of hyperparameters that achieved the best top-1 accuracy.
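The hyperparameter selection for the BERT-based models can be sketched as an exhaustive search over the 4 × 3 × 2 = 24 combinations listed above, keeping the one with the highest fold-averaged top-1 accuracy. The `train_and_score` callback is hypothetical: it stands in for fine-tuning on one fold and scoring the held-out part, which is not shown here.

```python
import itertools
import statistics
from typing import Callable, Dict, Tuple

Config = Tuple[int, float, int]  # (epochs, learning rate, batch size)

# Grid for fine-tuning the BERT-based models, as listed in the text.
EPOCHS = (2, 3, 4, 5)
LEARNING_RATES = (2e-5, 3e-5, 5e-5)
BATCH_SIZES = (16, 32)
GRID = list(itertools.product(EPOCHS, LEARNING_RATES, BATCH_SIZES))  # 24 combinations


def select_best_config(train_and_score: Callable[[Config, int], float],
                       n_folds: int = 5) -> Tuple[Config, Dict[Config, float]]:
    """Average the top-1 validation accuracy over folds and keep the best configuration.

    train_and_score(config, fold) is a hypothetical callback that fine-tunes a model on
    the training part of `fold` and returns its top-1 accuracy on the validation part.
    """
    mean_top1 = {cfg: statistics.mean(train_and_score(cfg, fold) for fold in range(n_folds))
                 for cfg in GRID}
    best = max(mean_top1, key=mean_top1.get)
    return best, mean_top1
```

The selected configuration is then reused when each model is retrained on the entire training set before the test set evaluation.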

Table 2.

Summary of performance of medical specialty prediction via 5-fold cross-validation.

| Model | Top-1 Accuracy | Top-2 Accuracy | Top-3 Accuracy | Precision | Recall | F1 |
|---|---|---|---|---|---|---|
| KM-BERT | 0.706 | 0.830 | 0.885 | 0.664 | 0.661 | 0.658 |
| KR-BERT | 0.698 | 0.825 | 0.878 | 0.657 | 0.656 | 0.653 |
| M-BERT | 0.686 | 0.813 | 0.868 | 0.642* | 0.641 | 0.638* |
| CNN | 0.545 | 0.666 | 0.739 | 0.517* | 0.457 | 0.528* |
| LSTM | 0.601 | 0.729 | 0.795 | 0.557 | 0.535 | 0.541 |

The asterisk (*) indicates that at least one specialty had zero predicted positives.

Overall, the proposed model outperformed the competing models on all reported metrics. For single predictions (top-1), the proposed model achieved an accuracy of 0.706, whereas none of the four competing models exceeded 0.7. For top-3 accuracy, the proposed model achieved 0.885, the closest to 0.9 among the compared models. The same tendency was observed in precision, recall, and F1 score, which were 0.664, 0.661, and 0.658, respectively, for the proposed model. KR-BERT and M-BERT followed in that order with a slight performance gap. For M-BERT and CNN, precision was calculated by omitting specialties with zero predicted positives, and the F1 scores were derived in the same way.

Table 3 presents the evaluation results on the test set. Before the evaluation, each model was trained on the entire training set using the best hyperparameters selected by cross-validation, and then evaluated on the test set. Compared with the cross-validation results, top-1 accuracy decreased for all models except the CNN, whereas top-3 accuracy increased for all models. In terms of precision, KR-BERT surpassed KM-BERT by 0.008, and precision was lower than in cross-validation for all models. Accordingly, the F1 scores were also lower, whereas recall remained similar for most models.

Table 3.

Summarized evaluation results for medical specialty prediction on the test set with the best hyperparameters obtained by cross-validation.

| Model | Top-1 Accuracy | Top-2 Accuracy | Top-3 Accuracy | Precision | Recall | F1 |
|---|---|---|---|---|---|---|
| KM-BERT | 0.685 | 0.830 | 0.891 | 0.551 | 0.660 | 0.579 |
| KR-BERT | 0.678 | 0.823 | 0.889 | 0.559 | 0.644 | 0.577 |
| M-BERT | 0.666 | 0.814 | 0.877 | 0.540 | 0.641 | 0.556 |
| CNN | 0.567 | 0.712 | 0.790 | 0.489* | 0.503 | 0.505* |
| LSTM | 0.595 | 0.736 | 0.814 | 0.473 | 0.542 | 0.468 |

The asterisk (*) indicates that at least one specialty had zero predicted positives.

Fig. 2 shows the accuracy of medical specialty prediction in more detail. We investigated how the predictive performance of the three BERT-based models on the test set differed across specialties. In terms of top-2 accuracy, the proposed model achieved the best performance in 14 specialties, whereas KR-BERT and M-BERT achieved the best performance in 11 and 2 specialties, respectively.

Fig. 2.

Top-k accuracy of three BERT-based models for each medical specialty in the test set evaluation with the best hyperparameters. Specialties are grouped according to the top-1 accuracy of the proposed model: (A) over 0.75, (B) between 0.5 and 0.75, and (C) 0.5 or less.

As shown in Fig. 2A, all three models performed particularly well for several specialties, such as ophthalmology, obstetrics and gynecology, and dentistry. These specialties tend to deal with more specific body parts or symptoms than other specialties. For ophthalmology, KM-BERT and M-BERT achieved a top-1 accuracy of 0.94, and KR-BERT achieved 0.92. The top-3 accuracy of KM-BERT was 0.98, whereas that of the others was 0.97. Among these specialties, the largest performance gap between the models was observed in the top-1 accuracy for pediatrics, for which KM-BERT, KR-BERT, and M-BERT achieved 0.77, 0.70, and 0.64, respectively.

Fig. 2B shows the specialties for which the top-1 accuracy of the proposed model is between 0.5 and 0.75. In terms of top-1 accuracy, several specialties showed a substantial performance gap between the models. Notable gaps between the highest and lowest performances were observed in infectious diseases (0.21), anesthesiology (0.18), and neurology (0.11). Nonetheless, the gaps decreased for top-2 and top-3 accuracies.

Several specialties showed low accuracy, including emergency medicine, cardiac and thoracic surgery, and family medicine (Fig. 2C). This deterioration can be attributed to the association of these specialties with comprehensive treatment of similar symptoms or multidisciplinary care. For instance, questions labeled cardiac and thoracic surgery tended to receive that label only as the next-ranked prediction after pulmonology. Emergency medicine showed the lowest predictive performance for all three models. Despite this, the proposed model performed notably better than the other models for emergency medicine, with a top-3 accuracy of 0.57.

4. Applications and case studies

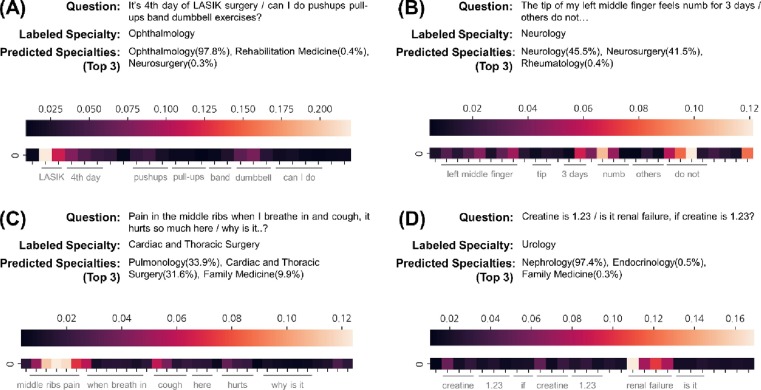

In this section, we demonstrate the application of the proposed framework based on pre-trained BERT through case studies. The application visualizes the self-attention of the model to provide evidence for the feasibility of medical specialty prediction. To interpret the model's predictions, we used a heatmap of the attention for each token, averaged over all attention heads. For interpretability, we considered only the last self-attention layer.
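The per-token heatmap values can be sketched from the last layer's attention weights, indexed as `[head][query token][key token]`. With Hugging Face Transformers, for example, calling a model with `output_attentions=True` returns one tensor per layer of shape (batch, heads, sequence, sequence), and `attentions[-1][0]` would have this layout. The exact aggregation used for Fig. 3 is not specified, so this sketch assumes the score of a token is the attention it receives, averaged over all heads and all query positions (averaging only the '[CLS]' row is a plausible alternative); the five shading levels are likewise an arbitrary display choice.

```python
from typing import List

Attention = List[List[List[float]]]  # [head][query token][key token]


def token_attention_scores(last_layer: Attention) -> List[float]:
    """Attention received by each token, averaged over all heads and all query positions."""
    n_heads = len(last_layer)
    n_tokens = len(last_layer[0])
    head_avg = [[sum(last_layer[h][i][j] for h in range(n_heads)) / n_heads
                 for j in range(n_tokens)]
                for i in range(n_tokens)]
    return [sum(head_avg[i][j] for i in range(n_tokens)) / n_tokens for j in range(n_tokens)]


def to_heatmap_levels(scores: List[float], levels: int = 5) -> List[int]:
    """Min-max scale scores into integer shading levels 0..levels-1 for display."""
    lo, hi = min(scores), max(scores)
    if hi == lo:
        return [0] * len(scores)
    return [min(levels - 1, int((s - lo) / (hi - lo) * levels)) for s in scores]
```

Because every attention row sums to one, the averaged scores also sum to one, so they can be read as the share of attention each token receives in the last layer.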

We provide four examples of medical specialty prediction from colloquial question texts translated from the original Korean. Fig. 3 presents the four examples. Fig. 3A depicts an ophthalmological question. According to the attention, the proposed model concentrated on 'LASIK' rather than on other words and appropriately predicted ophthalmology from this eye-related term. Fig. 3B presents a description of finger pain labeled neurology. The model mainly focused on 'do not' and 'numb'. As a result, neurology was correctly predicted, along with a high predictive probability for neurosurgery. Fig. 3C shows a question regarding symptoms while breathing. The model predicted pulmonology because of 'middle ribs pain' and 'cough', but the label was cardiac and thoracic surgery. Although the top-1 prediction did not match the label, pulmonology is a reasonable candidate, and the labeled specialty was included in the top-3 predictions. Fig. 3D shows a question regarding renal disease and a creatinine condition. The model considered 'renal' the most important word even though it appeared near the end of the text. This case was counted as an incorrect prediction, but the predicted result was more acceptable than the labeled specialty.

Fig. 3.

Case studies with four examples of applying medical specialty prediction based on the pre-trained BERT. The question translated from the original Korean, the corresponding specialty label, and the top-3 specialties predicted by the proposed model are listed. The heatmap under the text shows the average attention for each token. (A) Correct prediction with a high predictive probability. (B) Correct prediction with an uncertain predictive probability. (C) Incorrect prediction, but the label is among the top-3 predicted specialties. (D) Incorrect prediction.

5. Discussion

This study proposes a framework for medical specialty prediction from medical question text based on a pre-trained BERT. To demonstrate the feasibility of medical specialty prediction, we used a dataset of medical questions and their corresponding specialty labels collected from a medical question-and-answer portal. Using this dataset, we evaluated the proposed model through cross-validation, test set evaluation, and per-specialty predictive performance. To demonstrate the superiority of the proposed model, we compared it with two BERT-based models and two conventional deep learning models in terms of accuracy, precision, recall, and F1 score. In addition, we performed case studies applying the proposed model by visualizing its interpretable attention. Taken together, the results demonstrate the usefulness of the proposed medical specialty prediction model.

The main contributions of this study can be summarized as follows: (1) comprehensive specialty prediction, (2) prediction from patient-side, question-level text, and (3) the use of BERT pre-trained on the medical domain. First, the proposed model predicts across the overall medical specialties commonly covered by most general hospitals. The medical recommendation model proposed by Habib et al. generates responses through natural language generation for five of the most active specialties [20]. Unlike that study, our study covers 27 specialties regardless of demand. Second, our work reflects the properties of colloquial, unrefined text because we used minimal preprocessing and multiple sentences as inputs. Consequently, the proposed model is more suitable for implementing medical AI for real-life patients than models trained on regular clinical notes [21], [22]. Finally, we employed a BERT model pre-trained on a medical corpus. BERT models pre-trained on medical corpora have shown higher performance on medical downstream tasks than the base BERT model [16], [23]. Furthermore, the proposed model outperformed M-BERT, reflecting the benefit of pre-training specific to a non-English language.

However, our study has several limitations. In general, the performance of predictive models depends strongly on the quality of the data. The data used in this study treated the medical specialty of the expert who answered each question as the correct label, rather than the actual clinic where the questioner received treatment. Accordingly, label quality cannot be fully guaranteed. Nevertheless, such real-world data allow an evaluation closer to actual applications and reflect reality more explicitly. In addition, the difficulty of prediction differs across medical specialties. Specialties with prominent features in the question text, such as ophthalmology and obstetrics and gynecology, showed high predictive performance, whereas specialties that deal with a wide range of organ systems and symptoms, such as family medicine and general surgery, showed low predictive performance. This difference seems to be affected by the medical specificity of the text and the amount of data. In particular, emergency medicine showed markedly low performance relative to its importance, so predictions for emergency medicine must be approached cautiously. Therefore, the prediction results should be interpreted carefully in practical applications of the proposed model. In future work, we plan to address these problems and improve the predictive performance across medical specialties.

6. Conclusion

The discrepancies between the diagnostic range of the medical specialty and the symptoms of patients may be associated with enormous costs for both the patient and the hospital. This work proposes a medical specialty prediction model based on domain-specific pre-trained BERT from health question texts. The proposed model showed higher predictive performance in the experiments compared with four competitive deep-learning-based NLP models. We expect that the proposed model can relieve the discrepancy problem by suggesting suitable specialties from the text and can be utilized by hospitals or healthcare institutions.


CRediT authorship contribution statement

Yoojoong Kim: Conceptualization, Methodology, Software, Validation, Investigation, Writing – original draft, Visualization, Funding acquisition. Jong-Ho Kim: Resources. Young-Min Kim: Writing – review & editing, Supervision. Sanghoun Song: Writing – review & editing, Supervision. Hyung Joon Joo: Conceptualization, Writing – review & editing, Supervision.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgements

This research was supported by a grant of the Basic Science Research Program through the National Research Foundation of Korea (NRF), funded by the Ministry of Education (No. 2021R1I1A1A01044255).

References

1. Birkmeyer J.D., Barnato A., Birkmeyer N., Bessler R., Skinner J. The Impact Of The COVID-19 Pandemic On Hospital Admissions In The United States: Study examines trends in US hospital admissions during the COVID-19 pandemic. Health Aff. 2020;39(11):2010–2017. doi: 10.1377/hlthaff.2020.00980.

2. Kalanj K., Marshall R., Karol K., Tiljak M.K., Orešković S. The Impact of COVID-19 on Hospital Admissions in Croatia. Front. Public Health. 2021:1307. doi: 10.3389/fpubh.2021.720948.

3. Allenbach Y., et al. Development of a multivariate prediction model of intensive care unit transfer or death: A French prospective cohort study of hospitalized COVID-19 patients. PLoS One. 2020;15(10):e0240711. doi: 10.1371/journal.pone.0240711.

4. Fatima S., Shamim S., Butt A.S., Awan S., Riffat S., Tariq M. The discrepancy between admission and discharge diagnoses: Underlying factors and potential clinical outcomes in a low socioeconomic country. PLoS One. 2021;16(6):e0253316. doi: 10.1371/journal.pone.0253316.

5. Hamet P., Tremblay J. Artificial intelligence in medicine. Metabolism. 2017;69:S36–S40. doi: 10.1016/j.metabol.2017.01.011.

6. Mikolov T., Chen K., Corrado G., Dean J. Efficient estimation of word representations in vector space. arXiv preprint arXiv:1301.3781. 2013.

7. Nadkarni P.M., Ohno-Machado L., Chapman W.W. Natural language processing: an introduction. J. Am. Med. Inform. Assoc. 2011;18(5):544–551. doi: 10.1136/amiajnl-2011-000464.

8. Nadarzynski T., Miles O., Cowie A., Ridge D. Acceptability of artificial intelligence (AI)-led chatbot services in healthcare: A mixed-methods study. Digital Health. 2019;5:2055207619871808. doi: 10.1177/2055207619871808.

9. Safi Z., Abd-Alrazaq A., Khalifa M., Househ M. Technical Aspects of Developing Chatbots for Medical Applications: Scoping Review. J. Med. Internet Res. 2020;22(12):e19127. doi: 10.2196/19127.

10. Magna A.A.R., Allende-Cid H., Taramasco C., Becerra C., Figueroa R.L. Application of Machine Learning and Word Embeddings in the Classification of Cancer Diagnosis Using Patient Anamnesis. IEEE Access. 2020;8:106198–106213.

11. Ong C.J., et al. Machine learning and natural language processing methods to identify ischemic stroke, acuity and location from radiology reports. PLoS One. 2020;15(6):e0234908. doi: 10.1371/journal.pone.0234908.

12. Devlin J., Chang M.-W., Lee K., Toutanova K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805. 2018.

13. Clark K., Luong M.-T., Le Q.V., Manning C.D. ELECTRA: Pre-training text encoders as discriminators rather than generators. arXiv preprint arXiv:2003.10555. 2020.

14. Liu Y., et al. RoBERTa: A robustly optimized BERT pretraining approach. arXiv preprint arXiv:1907.11692. 2019.

15. Chalkidis I., Fergadiotis M., Malakasiotis P., Aletras N., Androutsopoulos I. LEGAL-BERT: The muppets straight out of law school. arXiv preprint arXiv:2010.02559. 2020.

16. Lee J., et al. BioBERT: a pre-trained biomedical language representation model for biomedical text mining. Bioinformatics. 2020;36(4):1234–1240. doi: 10.1093/bioinformatics/btz682.

17. Vaswani A., et al. Attention is all you need. In: Advances in Neural Information Processing Systems. 2017:5998–6008.

18. Lee S., Jang H., Baik Y., Park S., Shin H. KR-BERT: A small-scale Korean-specific language model. arXiv preprint arXiv:2008.03979. 2020.

19. Pires T., Schlinger E., Garrette D. How multilingual is multilingual BERT? arXiv preprint arXiv:1906.01502. 2019.

20. Habib M., Faris M., Qaddoura R., Alomari A., Faris H. A Predictive Text System for Medical Recommendations in Telemedicine: A Deep Learning Approach in the Arabic Context. IEEE Access. 2021.

21. Weng W.-H., Wagholikar K.B., McCray A.T., Szolovits P., Chueh H.C. Medical subdomain classification of clinical notes using a machine learning-based natural language processing approach. BMC Med. Inf. Decis. Making. 2017;17(1):1–13. doi: 10.1186/s12911-017-0556-8.

22. Yao L., Mao C., Luo Y. Clinical text classification with rule-based features and knowledge-guided convolutional neural networks. BMC Med. Inf. Decis. Making. 2019;19(3):31–39. doi: 10.1186/s12911-019-0781-4.

23. Alsentzer E., et al. Publicly available clinical BERT embeddings. arXiv preprint arXiv:1904.03323. 2019.