Abstract

Purpose

To compare performance, sample efficiency, and hidden stratification of visual transformer (ViT) and convolutional neural network (CNN) architectures for diagnosis of disease on chest radiographs and extremity radiographs using transfer learning.

Materials and Methods

In this HIPAA-compliant retrospective study, the authors fine-tuned data-efficient image transformers (DeiT) ViT and CNN classification models pretrained on ImageNet using the National Institutes of Health Chest X-ray 14 dataset (112 120 images) and MURA dataset (14 656 images) for thoracic disease and extremity abnormalities, respectively. Performance was assessed on internal test sets and 75 000 external chest radiographs (three datasets). The primary comparison was DeiT-B ViT vs DenseNet121 CNN; secondary comparisons included DeiT-Ti (Tiny), ResNet152, and EfficientNetB7. Sample efficiency was evaluated by training models on varying dataset sizes. Hidden stratification was evaluated by comparing prevalence of chest tubes in pneumothorax false-positive and false-negative predictions and specific abnormalities for MURA false-negative predictions.

Results

DeiT-B weighted area under the receiver operating characteristic curve (wAUC) was slightly lower than that for DenseNet121 on chest radiograph (0.78 vs 0.79; P < .001) and extremity (0.887 vs 0.893; P < .001) internal test sets and chest radiograph external test sets (P < .001 for each). DeiT-B and DeiT-Ti both performed slightly worse than all CNNs for chest radiograph and extremity tasks. DeiT-B and DenseNet121 showed similar sample efficiency. DeiT-B had lower chest tube prevalence in false-positive predictions than DenseNet121 (43.1% [324 of 5088] vs 47.9% [2290 of 4782]).

Conclusion

Although DeiT models had lower wAUCs than CNNs for chest radiograph and extremity domains, the differences may be negligible in clinical practice. DeiT-B had sample efficiency similar to that of DenseNet121 and may be less susceptible to certain types of hidden stratification.

Keywords: Computer-aided Diagnosis, Informatics, Neural Networks, Thorax, Skeletal-Appendicular, Convolutional Neural Network (CNN), Feature Detection, Supervised Learning, Machine Learning, Deep Learning

Supplemental material is available for this article.

© RSNA, 2022

Summary

Visual transformer models had slightly lower overall performance and similar sample efficiency for disease classification using chest and extremity radiographs compared with convolutional neural networks but may be less susceptible to certain hidden stratification.

Key Points

■ Visual transformer models achieved slightly lower overall weighted area under the receiver operating characteristic curve (wAUC) than convolutional neural networks for disease detection on chest (eg, DeiT-B vs DenseNet121: 0.78 vs 0.79) and extremity (eg, 0.887 vs 0.893) radiographs.

■ Visual transformer models had sample efficiency similar to that of convolutional neural network models in response to decreasing amounts of training data, with slight progressive reductions in wAUC for both DeiT-B and DenseNet121 in all datasets down to 10% of training data, at which point performance degraded substantially.

■ Visual transformer models had a lower rate of chest tube presence among pneumothorax false-positives than convolutional architectures (43.1% [324 of 5088] vs 47.9% [2290 of 4782]) but showed similar rates of false-negatives and prevalence of specific types of abnormalities on false-negative extremity radiographs.

Introduction

The potential for deep learning models to automate diagnosis of disease on radiographs has great clinical implications in a time when the volume of images exceeds the number of available radiologists (1). Convolutional neural networks (CNNs) have shown promising performance in diagnosing disease on chest radiographs, sometimes outperforming radiologists (2–5). However, CNNs are limited by variable generalizability under shifts in the data distribution, where a model may perform well on an internal test dataset from the same population on which it was trained but perform worse on an external dataset from a different population (6). A related limitation is hidden stratification, where CNNs perform better or worse on specific subsets; for example, CNNs trained to identify pneumothorax on chest radiographs demonstrated higher areas under the receiver operating characteristic curve (AUCs) on images with chest tubes compared with those without chest tubes, apparently learning to identify chest tubes as a proxy for pneumothorax rather than identifying pneumothorax itself (2,7,8). Thus, although CNNs show impressive radiograph-based diagnostic performance, room for improvement remains.

A recently proposed alternative is the visual transformer (ViT) architecture, first described by Dosovitskiy et al (9) and improved on by Touvron et al (10) as data-efficient image transformers (DeiT), which has shown promising performance on general image datasets. These models adapt the fully transformer-based models that have revolutionized the field of natural language processing (NLP) to computer vision tasks. Transformer-based NLP models process an entire input sentence and use a self-attention mechanism to selectively “pay attention to” specific parts of a sentence (11,12). ViT models use an analogous approach by dividing an input image into 16 × 16–pixel patches and projecting them into a linear embedding that is then passed to alternating multihead self-attention and multilayer perceptron layers and finally to a multilayer perceptron task head for classification, as with NLP applications. DeiT-B has achieved near–state-of-the-art performance for ImageNet classification and on-par performance with state-of-the-art CNNs in transfer learning to downstream tasks, even when fine-tuning on datasets as small as 2040 images (10), suggesting that DeiT models may be a viable improvement over CNNs for diagnosing disease on radiographs. However, this has not previously been well established in the literature.
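The patch-splitting and linear-projection step described above can be illustrated with a minimal pure-Python sketch. The 224 × 224 single-channel input, the function names `patchify` and `linear_embed`, and the toy pixel values are assumptions made for illustration only; they are not taken from the DeiT implementation or from this study's code.

```python
def patchify(image, patch_size=16):
    """Split an H x W image (list of rows) into flattened square patches.

    Patches are returned in row-major order; each patch is a flat list of
    patch_size * patch_size pixel values.
    """
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image sides must be multiples of patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [
                image[row][col]
                for row in range(top, top + patch_size)
                for col in range(left, left + patch_size)
            ]
            patches.append(patch)
    return patches


def linear_embed(patch, weights):
    """Project one flattened patch with a (dim x patch_len) weight matrix."""
    return [sum(w * x for w, x in zip(row, patch)) for row in weights]


# Hypothetical single-channel 224 x 224 input (the size is an assumption).
image = [[(r * 224 + c) % 256 for c in range(224)] for r in range(224)]
patches = patchify(image)  # 14 x 14 grid of patches, each with 256 values
```

In a real ViT, the projection weights are learned, position information is added to each embedded patch, and the resulting token sequence is what the self-attention layers operate on.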

The purpose of this study was to thoroughly compare the performance and sample efficiency of ViT and CNN models for diagnosis of disease on chest radiographs and abnormalities on upper extremity radiographs using transfer learning. We also evaluated whether ViT models demonstrate the same degree of hidden stratification as CNNs previously described for diagnosis of pneumothorax based on chest radiographs and diagnosis of abnormalities based on upper extremity radiographs (7).

Materials and Methods

Datasets

This Health Insurance Portability and Accountability Act–compliant, retrospective study used data from five public datasets. Because all data were in the public domain and no human patients were involved, institutional review board approval was not required. Data were analyzed from January 5, 2021, to April 10, 2022.

We evaluated two tasks: (a) diagnosis of thoracic diseases on chest radiographs and (b) diagnosis of abnormalities on upper extremity radiographs. For the chest radiograph domain, we used one training dataset (National Institutes of Health Chest X-ray 14) with a held-out test set and randomly selected 25 000 image subsets of three additional datasets (CheXpert, PadChest, and MIMIC) as external test sets (3,13,14). The Chest X-ray 14 and PadChest images were annotated for the presence of 14 abnormalities based on NLP of radiology reports and/or radiologist review of images, whereas CheXpert and MIMIC had annotations for only seven abnormalities based on NLP of radiology reports. For the extremity domain, we used the MURA (musculoskeletal radiographs) upper extremity dataset for training with an internal held-out test set, annotated for the presence or absence of any abnormality by radiologist review of images (15). Because MURA images are organized into studies and annotated at the study level, we assigned the study label to each image individually for training but evaluated at the study level by taking the mean model output across all images in a study. Appendix E1, section 1 (supplement) describes the datasets and preprocessing in detail.
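The study-level evaluation for MURA (mean model output across all images in a study) can be sketched as follows. The function name, the study identifiers, and the scores are hypothetical and serve only to illustrate the aggregation.

```python
from collections import defaultdict


def study_level_scores(image_scores):
    """Average per-image model outputs within each study.

    image_scores: iterable of (study_id, score) pairs.
    Returns a dict mapping study_id to the mean score.
    """
    grouped = defaultdict(list)
    for study_id, score in image_scores:
        grouped[study_id].append(score)
    return {sid: sum(vals) / len(vals) for sid, vals in grouped.items()}


# Hypothetical per-image outputs for two studies.
outputs = [("study_a", 0.9), ("study_a", 0.7), ("study_b", 0.2)]
scores = study_level_scores(outputs)
```

Any thresholding would then be applied to these study-level means rather than to the individual image outputs.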

Model Training and Evaluation

We used the DeiT architecture pretrained on ImageNet described by Touvron et al (10) to represent ViTs. In particular, we used DeiT-B, their largest model with 86 million parameters, for our primary analysis because it was the best-performing model in transfer learning using standard methods for fine-tuning historically used for CNNs. Although Touvron et al also evaluated the effect of knowledge distillation techniques that use a well-performing CNN as a teacher (10), we did not use distillation methods so that we could assess the performance of transformer architectures independently of CNNs and provide a more direct comparison of standard transfer learning approaches. To represent CNNs in our primary analysis, we used the DenseNet121 CNN (7 million parameters) pretrained on ImageNet (10,16) because it has demonstrated state-of-the-art performance for thoracic disease diagnosis on chest radiographs using Chest X-ray 14 and CheXpert datasets (2,3) and extremity radiograph diagnosis using the MURA dataset (15), and has served as a benchmark for CNNs trained on these datasets in public deep learning competitions (17,18). We secondarily explored DeiT-Ti (Tiny) (5 million parameters) as a ViT more similar in size to DenseNet121 and ResNet152 (60 million parameters) and EfficientNetB7 (64 million parameters) as well-known CNNs closer in size to DeiT-B that were also evaluated by Touvron et al.

All data and model acquisition, processing, training, and analysis were performed using Python 3.6 and the PyTorch framework. Final DeiT-B and DenseNet121 model training was performed using a single RTX 5000 GPU to enable comparison of total time needed to train. Code and all data splits used in this study are available at https://github.com/zachmurphy1/transformer-radiographs.

Grid search was used to optimize hyperparameters for DeiT-B and DenseNet121, with the best model considered that which yielded the maximal validation AUC. The search space (shown in Appendix E1, section 2 [supplement]) covered MixUp or CutMix augmentation (19,20), optimizer, dropout, initial learning rate, and weight decay, with 128 different hyperparameter combinations evaluated for each primary model (total number of GPU hours, 1789 [Appendix E1, section 3, supplement]). A fixed learning rate decay was used, with learning rate decaying to 10% every three epochs. Early stopping was implemented to guard against overfitting, with training stopping after five epochs without at least 1e-4 improvement in validation AUC and the model taken at the epoch with highest validation AUC. Random horizontal flip augmentation was used for all models. Hyperparameter optimization supported the use of stochastic gradient descent for DeiT-B and DenseNet121 models, dropout for DenseNet121 but not DeiT-B, and MixUp or CutMix for DenseNet121 but not DeiT-B (detailed in Appendix E1, section 4 [supplement]). A grid search was repeated for DeiT-Ti, ResNet152, and EfficientNetB7 on a subspace of the hyperparameters showing variability in the top 25% of runs from the primary DeiT and CNN models, with all other settings the same. This supported similar hyperparameters (Appendix E1, section 4 [supplement]), although MixUp or CutMix was not supported for ResNet152 on the chest radiograph task or EfficientNetB7 on either task. Final models were obtained by fine-tuning pretrained models on the combined training and validation sets using the identified optimal hyperparameters.
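The fixed learning rate decay and the early stopping rule described above can be sketched in plain Python. This is an illustrative reading of the text rather than the study's code: the function names are hypothetical, and "improvement" is assumed to be measured against the best validation AUC seen so far. In PyTorch, a comparable schedule could be obtained with `torch.optim.lr_scheduler.StepLR(optimizer, step_size=3, gamma=0.1)`.

```python
def step_decay_lr(initial_lr, epoch, step=3, factor=0.1):
    """Learning rate at a 0-indexed epoch, multiplied by `factor` every `step` epochs."""
    return initial_lr * factor ** (epoch // step)


def early_stopping_epoch(val_aucs, patience=5, min_delta=1e-4):
    """Return (best_epoch, stop_epoch) for a sequence of validation AUCs.

    Training stops once `patience` consecutive epochs pass without an
    improvement of at least `min_delta` over the best AUC so far.
    Assumption: an epoch only becomes the new best if it improves by
    at least `min_delta`.
    """
    best_auc, best_epoch, waited = float("-inf"), -1, 0
    for epoch, auc in enumerate(val_aucs):
        if auc - best_auc >= min_delta:
            best_auc, best_epoch, waited = auc, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_aucs) - 1
```

The checkpoint saved at `best_epoch` is the one that would be kept as the selected model under this reading.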

Comparison of Heatmaps between Models

One key theoretical advantage of ViT models over CNNs is use of their self-attention mechanism to gain a global understanding of an image rather than simply looking for local features (as in a CNN). Although methods of understanding and interpreting transformer-based models are an area of active development, maps of the attention layers in ViT models have been used analogously to gradient-weighted class activation mapping (Grad-CAM) visualization for CNNs (9). To explore differences in areas of radiographs that may be important to DeiT versus CNN models, we visually compared label-specific attention maps from DeiT-B and Grad-CAM++ maps from DenseNet121 on a subset of chest radiograph test images from each dataset that had one ground truth–positive label and were true-positives for that label on both DeiT-B and DenseNet121 and a subset of MURA test images that were true-positives on both models.

Statistical Analysis

All statistical analysis was performed using Python (version 3.6.9), SciPy (version 1.5.4), and scikit-learn (version 0.24.2). For all statistical analyses, statistical significance was defined as a P value less than .05.

Comparison of performance between models.—Models were evaluated on the four chest radiograph test sets and the MURA held-out test set. For each test set, we calculated the AUC for each disease label (for chest radiographs) and each body region (for MURA), as well as label-wise and extremity region–wise accuracy, precision, recall, and F1 scores at the threshold where true-positive rate was equal to 1 minus the false-positive rate. Label- and region-wise AUCs were averaged with weights by label or region prevalence, whereas other metrics were microaveraged to yield overall performance metrics. Chest radiograph models were evaluated at the image level, and MURA models were evaluated at the study level by averaging predictions for each image belonging to a study before thresholding. For the CheXpert and MIMIC datasets, which only have seven of the 14 Chest X-ray 14 labels, overall metrics were computed using only the seven labels. Bootstrapping with 1000 resamples was implemented to estimate 95% CIs for each metric. Models were compared using paired t tests on bootstrap resample measures.
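The prevalence-weighted AUC and the bootstrap CI described above can be sketched without external libraries. This is a simplified illustration under stated assumptions: the function names are hypothetical, the AUC is computed by direct pairwise comparison (adequate only for small examples), the CI uses the percentile method, and resamples in which a label loses one of its classes are skipped. The study itself used scikit-learn and SciPy.

```python
import random


def auc(labels, scores):
    """AUC as the probability that a positive outranks a negative (ties count 0.5)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def weighted_auc(label_columns, score_columns):
    """Average per-label AUCs, weighting each label by its number of positives."""
    aucs = [auc(y, s) for y, s in zip(label_columns, score_columns)]
    weights = [sum(y) for y in label_columns]
    return sum(a * w for a, w in zip(aucs, weights)) / sum(weights)


def bootstrap_ci(label_columns, score_columns, n_resamples=1000, seed=0):
    """Percentile 95% CI for the weighted AUC, resampling images with replacement."""
    rng = random.Random(seed)
    n = len(label_columns[0])
    stats = []
    while len(stats) < n_resamples:
        idx = [rng.randrange(n) for _ in range(n)]
        ys = [[col[i] for i in idx] for col in label_columns]
        ss = [[col[i] for i in idx] for col in score_columns]
        try:
            stats.append(weighted_auc(ys, ss))
        except ValueError:
            continue  # skip resamples in which a label lost one class
    stats.sort()
    return stats[int(0.025 * n_resamples)], stats[int(0.975 * n_resamples) - 1]
```

Because the same resampled indices can be applied to two models' outputs, the per-resample metrics are paired, which is what allows a paired test between models on the bootstrap measures.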

Evaluation of sample efficiency.—Because the original ViT models described by Dosovitskiy et al (9) had lower performance than CNNs with smaller amounts of training data, we evaluated the effect of dataset size on DeiT-B and DenseNet121 model performance (sample efficiency). Using the optimal hyperparameters described above, we repeated fine-tuning of the DeiT-B and DenseNet121 pretrained models, progressively decreasing training and validation dataset sizes in increments of 10%, from 100% of data to 10% with an additional evaluation of 1% of dataset size. We then evaluated all models on the full Chest X-ray 14 test set, similar to previous work for CNNs by Dunnmon et al (21). Because of increased variance in model performance observed at smaller training set sizes depending on the training data randomly selected, we repeated training and evaluation on different nonoverlapping training data samples at the 1% and 10% dataset sizes. Using the Chebyshev inequality (22), we estimated that 10 replications at 1% and four replications at 10% would be sufficient to ensure similar estimator variance. In both cases, these sample numbers were sufficient to ensure accurate estimates of model performance (see Appendix E1, section 5 [supplement]). We compared model performance at 20% dataset sizes and above using paired t tests on the bootstrap sample metrics directly, and for 1% and 10% sizes using Wilcoxon signed rank tests comparing the distribution of mean weighted AUCs (wAUCs) across replications at a given size.
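Drawing the repeated, nonoverlapping training samples at the 1% and 10% dataset sizes could look like the sketch below. The function name, the seed, and the 1000-item index range are hypothetical; the study's actual data splits are available in its code repository.

```python
import random


def disjoint_subsets(indices, fraction, n_replications, seed=0):
    """Draw `n_replications` nonoverlapping random subsets, each `fraction` of the data."""
    indices = list(indices)
    size = round(len(indices) * fraction)
    if size == 0 or size * n_replications > len(indices):
        raise ValueError("not enough data for the requested disjoint subsets")
    random.Random(seed).shuffle(indices)
    return [indices[i * size:(i + 1) * size] for i in range(n_replications)]


train_indices = range(1000)  # hypothetical training-set indices
one_percent = disjoint_subsets(train_indices, 0.01, 10)  # 10 replications at 1%
ten_percent = disjoint_subsets(train_indices, 0.10, 4)   # 4 replications at 10%
```

Shuffling once and slicing consecutive blocks guarantees that no training example appears in more than one replication at a given size.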

Evaluation of hidden stratification (failure analysis).—To evaluate for potential hidden stratification, we considered two known instances of hidden stratification in CNN models. First, CNNs have been shown to identify pneumothorax on chest radiographs better in images containing chest tubes compared with those without, presumably because chest tubes are treatments for pneumothorax and may be easier for CNNs to detect (7). Second, CNNs have shown higher diagnostic performance on extremity radiographs with orthopedic metal hardware than those with fractures and arthritis (7), presumably because the former is a less subtle finding than the latter (23). To explore whether ViTs are susceptible to these instances of hidden stratification, a board-certified radiologist (P.H.Y., with 7 years of experience interpreting radiographs) annotated chest radiographs for the presence of a chest tube in pneumothorax false-positives and false-negatives from each final DeiT-B and DenseNet121 model. The radiologist was blinded to whether images were false-positives or false-negatives from the DeiT-B or DenseNet121 model. We compared the proportion of false-positives having chest tubes between the DeiT-B and DenseNet121 and repeated this analysis for the false-negatives. The same radiologist annotated all false-negatives on the MURA test set between the DeiT-B and DenseNet121 models for the presence of arthritis, fracture or amputation, hardware or orthopedic fixation, or other abnormalities. We did not analyze MURA false-positives because these are ground truth normal.

Results

Dataset Characteristics

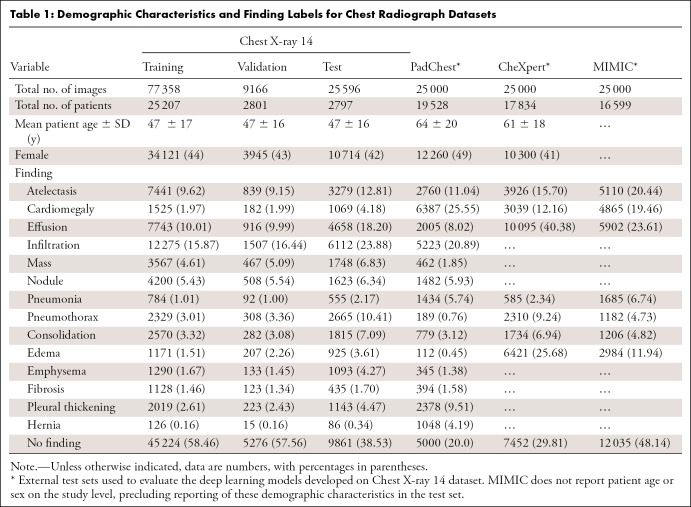

Distribution of demographic characteristics and disease labels within the different dataset splits are shown in Tables 1 and 2. The patients represented in the PadChest and CheXpert sets were older than those in the Chest X-ray 14 set. Whereas CheXpert had a sex distribution similar to that of Chest X-ray 14, the PadChest sample had a higher representation of women. Whereas the Chest X-ray 14 test set disease label distribution is reasonably similar to the training set, the external test sets have different label distributions, simulating real-world differences in disease prevalence (6).

Table 1:

Demographic Characteristics and Finding Labels for Chest Radiograph Datasets

Table 2:

Demographic Characteristics and Finding Labels for MURA Dataset

Overall Training and Performance

Table 3 shows the performance of final chest radiograph and MURA models trained using optimal hyperparameters evaluated on their respective test sets. Full metrics are available in Appendix E1, sections 6 and 7 (supplement).

Table 3:

Best Model Results for DenseNet121 and DeiT Architectures on Chest Radiograph and Extremity Radiograph Use Cases on Internal Held-out Test Sets

The chest radiograph DenseNet121 model met early stopping criteria after 12 epochs and took 136.34 minutes to train (11.36 min/epoch), whereas the DeiT-B model met stopping criteria after only four epochs and took 101.95 minutes to train (25.49 min/epoch). Likewise, for the MURA models, DenseNet121 used 15 epochs and took 74.78 minutes (4.99 min/epoch) and DeiT-B used eight epochs, taking 81.00 minutes (10.13 min/epoch).

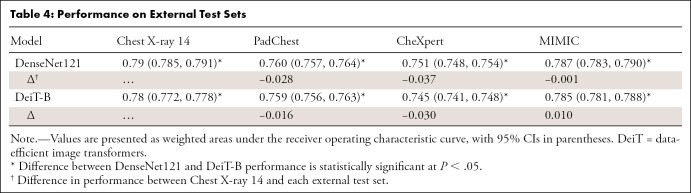

For the chest radiograph models, DeiT-B had a wAUC of 0.78 on the internal held-out test set, which was slightly lower than that of DenseNet121 at 0.79 (P < .001); likewise, DeiT-B was approximately 0.01 lower than DenseNet121 for accuracy, precision, recall, and F1 score (P < .001 for each). On external testing (Table 4), DenseNet121 slightly outperformed DeiT-B for all three test sets. However, the performance drop between internal and external held-out test sets was smaller for DeiT-B, with a mean wAUC change of −0.01 versus −0.02 for DenseNet121. Regarding the additional architectures, DeiT-Ti performed slightly worse than DeiT-B (0.76 vs 0.78; P < .001). ResNet152 performed worse than DenseNet121 (0.785 vs 0.788; P < .001) but slightly better than DeiT-B (0.79 vs 0.78; P < .001). EfficientNetB7 outperformed DenseNet121 (0.792 vs 0.788; P < .001).

Table 4:

Performance on External Test Sets

For the MURA extremity domain, DeiT-B had a wAUC of 0.887, whereas DenseNet121 had a wAUC of 0.893 (P < .001). DeiT-B was approximately 0.01 lower for accuracy, precision, recall, and F1 score (P < .001 for each). DeiT-Ti performed worse than DeiT-B (0.85 vs 0.89; P < .001), whereas ResNet152 and EfficientNetB7 performed better than DenseNet121 (0.900 and 0.898 vs 0.893; P < .001 and P < .001, respectively). For both chest radiographs and MURA, final model wAUCs all differed by less than 0.05.

Performance by Label or Body Region

Figure 1 shows AUCs by disease label or body region on the test sets for each model. For almost all chest radiograph disease labels in all four test sets, the DeiT-B model performed equally to or marginally lower than the DenseNet121 model (Fig 1A). Notable exceptions where DeiT-B outperformed DenseNet121 include hernia on Chest X-ray 14 (0.83 vs 0.81; P < .001) and PadChest (0.73 vs 0.68; P < .001), pneumonia on each external test set (PadChest, 0.68 vs 0.62; CheXpert, 0.66 vs 0.62; and MIMIC, 0.68 vs 0.67; P < .001 for each), cardiomegaly on each external test set (PadChest, 0.902 vs 0.895; CheXpert, 0.77 vs 0.76; and MIMIC, 0.73 vs 0.72; P < .001 for each), and consolidation on PadChest (0.78 vs 0.77; P < .001) and CheXpert (0.681 vs 0.676; P < .001).

Figure 1:

Chest radiograph and MURA (musculoskeletal radiograph) model performance. (A) Area under the receiver operating characteristic curve (AUC) by finding label for best DenseNet121 model and best data-efficient image transformers (DeiT-B) model on four chest radiograph test sets. The National Institutes of Health Chest X-ray 14 dataset (NIH CXR14) is internal whereas the others are external. (B) AUC by body region for best DenseNet121 model and best DeiT-B model on MURA test set. Vertical lines represent 95% CIs calculated using 1000-sample bootstrapping. Horizontal lines represent weighted average AUC by model. *Difference between DeiT-B and DenseNet121 is statistically significant at P < .05.

For the MURA models, DenseNet121 slightly outperformed DeiT-B on the shoulder (0.859 vs 0.856; P = .02), humerus (0.92 vs 0.89; P < .001), elbow (0.911 vs 0.907; P < .001), forearm (0.92 vs 0.91; P < .001), and wrist (0.93 vs 0.92; P < .001) regions. DeiT-B had slightly better performance than DenseNet121 on the hand region (0.86 vs 0.85; P < .001), whereas the two had similar performance on the finger region (0.87 vs 0.87; P = .30).

Sample Efficiency

Figure 2 shows the wAUC on the Chest X-ray 14 and MURA test sets for models trained on varying amounts of data. Using smaller amounts of data almost always resulted in slight progressive reductions in wAUC for both DeiT-B and DenseNet121 in all datasets down to 10% of training data, at which point performance degraded substantially. For chest radiographs, whereas DenseNet121 generally outperformed DeiT-B at 10% of data and greater, DeiT-B had superior performance at 1% training data on each test set except MIMIC. For MURA, DenseNet121 outperformed DeiT-B at all dataset sizes greater than 10% of training data, and the two models had similar performance at the 10% and 1% dataset sizes. Appendix E1, sections 6 and 7 (supplement) include detailed sample efficiency results.

Figure 2:

Weighted area under the receiver operating characteristic curve (AUC) by training and validation size for DenseNet121 and best data-efficient image transformers (DeiT-B) architectures for chest radiograph and MURA (musculoskeletal radiograph) models on respective test sets. Bars represent 95% CIs calculated using 1000-sample bootstrapping. *Difference between DeiT-B and DenseNet121 is statistically significant at P < .05. †Comparing distribution of means over multiple training data splits (10 splits for 1% dataset size and four splits for 10% dataset size).

Hidden Stratification

Table 5 shows contingency findings for DeiT-B and DenseNet121 for the reviewed subset of chest radiograph false-positives and false-negatives by the presence of a chest drain and MURA false-negatives by abnormality. Contingency tables for MURA by body region are included in Appendix E1, section 9 (supplement). The rate of chest drain presence in DeiT-B false-positives (43.1% [324 of 5088]) was lower than that in DenseNet121 false-positives (47.9% [2290 of 4782]) but similar for false-negatives (54.8% [324 of 591] vs 55.0% [306 of 556]). The presence of specific osseous abnormalities in MURA false-negatives was similar between the DeiT-B and DenseNet121 models overall and between anatomic regions, with arthritis more prevalent than fracture and hardware.

Table 5:

Number of False-Positives and False-Negatives for Chest X-ray 14 and MURA Datasets

Comparison of Heatmaps

Examination of attention maps and Grad-CAM++ maps showed notable differences. For chest radiographs, the label-specific attention rollout maps and Grad-CAM++ maps generally highlighted the expected area(s) of radiographic abnormality. However, the DeiT-B attention maps were more precise with smaller areas of activation, whereas the DenseNet121 Grad-CAM++ maps highlighted larger areas of the thorax (eg, highlighting large portions of the lung rather than specific parenchymal opacities) (Fig 3). Findings were similar on the MURA dataset, with both architectures highlighting general areas of abnormality, such as orthopedic hardware or areas of arthritis, but with DeiT-B activation maps having smaller, more precise areas of activation (Fig 3). A representative selection of additional attention and Grad-CAM++ maps for chest radiographs and extremity radiographs are available in Appendix E1, section 8 (supplement).

Figure 3:

Attention map (data-efficient image transformers [DeiT]) and gradient-weighted class activation (Grad-CAM++) map (DenseNet121) for true-positive prediction of mass by both models on (A) a National Institutes of Health Chest X-ray 14 test image and (B) a MURA test image. (A) The DeiT attention map is more precisely localized to the mass in the lingula compared with the DenseNet121 Grad-CAM++ map, which includes larger portions of the left lung that do not correspond to the mass. (B) The DeiT attention map more precisely localized to the fracture of the midhumeral diaphysis, whereas the Grad-CAM++ map localized to the humerus, which generally includes the fracture.

Discussion

CNNs have shown high diagnostic performance on radiographs but have proven susceptible to pitfalls, such as hidden stratification and limited generalization (6). In this study, we compared the performance of DeiT ViT models, a recently proposed alternative to CNNs, with multiple CNNs for disease diagnosis on chest radiographs and abnormalities on upper extremity radiographs. Our analysis demonstrated that ViT models achieved slightly lower overall wAUCs than CNNs for both chest and extremity radiographs. For example, the DeiT-B wAUC was 0.78 and 0.887 on the chest radiograph and extremity internal test sets, respectively, compared with 0.79 and 0.893 for DenseNet121. ViT models had sample efficiency similar to that of CNNs, with smaller amounts of data almost always resulting in progressive reductions in wAUC for both in all datasets. Finally, ViT models showed less hidden stratification, with a lower rate of chest tube presence among pneumothorax false-positives than CNNs (43.1% [324 of 5088] vs 47.9% [2290 of 4782]).

DeiT-B models had slightly lower overall performance than DenseNet121 on chest radiographs and extremity applications, although with arguably negligible differences in clinical practice (AUC differences < 0.02). Similar findings were confirmed for other ViT and CNN architectures with all final DeiT models performing lower than each final CNN model. Our findings are consistent with those of Touvron et al (10), who reported near–state-of-the-art performance for DeiT-B on ImageNet classification and on-par performance with state-of-the-art CNNs in general imaging transfer learning tasks. These results suggest that there is currently no clear performance advantage to using DeiT-B over DenseNet121 for chest radiograph and extremity classification tasks. However, the similar performance of the DeiT-B model to DenseNet121 at this early stage (DeiT was introduced in 2021 compared with DenseNet in 2016) suggests that ViT models have the potential to continue to improve in the near future, especially when considering that ViT models demonstrated similar sample efficiency and required less training time compared with CNNs (see Appendix E1, discussion [supplement]). Furthermore, although the DeiT-B chest radiograph model generally underperformed compared with DenseNet121 on three external datasets, the drop in wAUC from internal to external test sets was slightly lower for DeiT-B (0.01 vs 0.02), suggesting that DeiT may have better generalizability (see Appendix E1, discussion [supplement]).

The attention and Grad-CAM++ maps provide insight into how each architecture processes, or “sees,” an image. Whereas Grad-CAM++ maps generally highlighted radiographically relevant regions of the images, the label-specific ViT attention maps more precisely localized the radiographic abnormalities. ViT models are based on attention mechanisms localized in space, which may be inherently advantageous for providing visual explanations; however, this requires further study for confirmation. Nevertheless, the qualitative differences in these maps support the hypothesis that ViT models fundamentally represent radiographic images differently than do CNNs, thereby conferring potential advantages.

Datasets and CNNs can be susceptible to hidden stratification (7,8), including high prevalence of chest drains associated with pneumothorax chest radiographs (23). Our exploratory subanalysis demonstrated that DeiT pneumothorax false-positives had lower chest tube prevalence, suggesting less susceptibility to this hidden stratification. However, we did not find a difference in the presence of chest tubes in pneumothorax false-negatives, which is more in line with previous studies reporting that chest radiographs without chest drains were highly prevalent in false-negative pneumothorax predictions made by a CNN trained on Chest X-ray 14 images (7). Regarding extremity subanalysis, false-negatives (ground truth positive) in both DeiT and DenseNet121 models were highly enriched with cases of arthritis compared with other diagnoses. This is consistent with findings from Oakden-Rayner et al (7), which showed that CNNs trained on the MURA dataset performed worst for arthritis, followed by other diagnoses. These findings suggest that ViT models may be less susceptible to some kinds of hidden stratification, although further evaluation is required to make definitive conclusions.

Our study had several limitations. First, we evaluated two ViT architectures and three CNN architectures only, and so our results may not generalize to other architecture types. We stress that our study was designed to be an initial evaluation of ViTs compared with CNNs. Second, in our hyperparameter grid search, we could not perform multiple runs at each grid point; however, we performed a comprehensive hyperparameter search exploring grid space, ultimately evaluating 128 different combinations of hyperparameters for each model. Third, whereas original DeiT work by Touvron et al (10) showed promising results using distillation, we chose not to use distillation to evaluate ViT architectures independently of CNNs and to provide an “apples to apples” comparison to conventional CNN transfer learning. Fourth, although we performed external validation of the chest radiograph models, we could not do so for the MURA-trained models because there are no other similar large-scale datasets of upper extremity abnormalities. Finally, our sample efficiency evaluation used hyperparameters identified using the entire training datasets; ideally, this would have been performed using models pretrained on ImageNet.

In conclusion, comparison of transfer learning of DeiT ViT models with CNN models for diagnosis of disease on chest radiographs and upper extremity radiographs demonstrated that CNN models consistently outperformed ViT models, although the absolute differences in performance were small and may not be clinically significant. DeiT-B had a lower prevalence of chest tubes in pneumothorax false-positives compared with DenseNet121, suggesting it is less susceptible to this specific kind of hidden stratification known to affect CNN models. Although ViTs have shown improvements over CNNs in general imaging datasets, our results suggest that they may not yet be ready to replace CNNs in radiographic diagnosis of disease. We optimistically recommend continued evaluation of ViTs for radiology as they mature in the coming years.

Authors declared no funding for this work.

Disclosures of conflicts of interest: Z.R.M. No relevant relationships. K.V. No relevant relationships. J.S. No relevant relationships. P.H.Y. RSNA Resident Research Grant and Johns Hopkins Discovery Award (payments made to institution for both); consulting fees from FHOrtho; associate editor of Radiology: Artificial Intelligence; former trainee editorial board member of Radiology: Artificial Intelligence; stock options in Bunkerhill Health.

Abbreviations:

- AUC = area under the receiver operating characteristic curve

- CNN = convolutional neural network

- DeiT = data-efficient image transformers

- Grad-CAM = gradient-weighted class activation mapping

- MURA = musculoskeletal radiographs

- NLP = natural language processing

- ViT = visual transformer

- wAUC = weighted AUC

References

- 1. Bastawrous S, Carney B. Improving patient safety: avoiding unread imaging exams in the national VA enterprise electronic health record. J Digit Imaging 2017;30(3):309–313.

- 2. Rajpurkar P, Irvin J, Ball RL, et al. Deep learning for chest radiograph diagnosis: a retrospective comparison of the CheXNeXt algorithm to practicing radiologists. PLoS Med 2018;15(11):e1002686.

- 3. Irvin J, Rajpurkar P, Ko M, et al. CheXpert: a large chest radiograph dataset with uncertainty labels and expert comparison. arXiv 1901.07031 [preprint] https://arxiv.org/abs/1901.07031. Posted January 21, 2019. Accessed January 1, 2022.

- 4. Pan I, Cadrin-Chênevert A, Cheng PM. Tackling the Radiological Society of North America Pneumonia Detection Challenge. AJR Am J Roentgenol 2019;213(3):568–574.

- 5. Bai HX, Wang R, Xiong Z, et al. Artificial intelligence augmentation of radiologist performance in distinguishing COVID-19 from pneumonia of other origin at chest CT. Radiology 2020;296(3):E156–E165.

- 6. Zech JR, Badgeley MA, Liu M, Costa AB, Titano JJ, Oermann EK. Variable generalization performance of a deep learning model to detect pneumonia in chest radiographs: a cross-sectional study. PLoS Med 2018;15(11):e1002683.

- 7. Oakden-Rayner L, Dunnmon J, Carneiro G, Ré C. Hidden stratification causes clinically meaningful failures in machine learning for medical imaging. In: CHIL '20: Proceedings of the ACM Conference on Health, Inference, and Learning, Toronto, Canada, April 2–4, 2020. New York, NY: Association for Computing Machinery, 2020; 151–159.

- 8. Yi PH, Kim TK, Yu AC, Bennett B, Eng J, Lin CT. Can AI outperform a junior resident? Comparison of deep neural network to first-year radiology residents for identification of pneumothorax. Emerg Radiol 2020;27(4):367–375.

- 9. Dosovitskiy A, Beyer L, Kolesnikov A, et al. An image is worth 16x16 words: transformers for image recognition at scale. arXiv 2010.11929 [preprint] https://arxiv.org/abs/2010.11929. Posted October 22, 2020. Accessed January 1, 2022.

- 10. Touvron H, Cord M, Douze M, Massa F, Sablayrolles A, Jegou H. Training data-efficient image transformers & distillation through attention. arXiv 2012.12877 [preprint] https://arxiv.org/abs/2012.12877. Posted December 23, 2020. Accessed January 1, 2022.

- 11. Vaswani A, Shazeer N, Parmar N, et al. Attention is all you need. arXiv 1706.03762 [preprint] https://arxiv.org/abs/1706.03762. Posted June 12, 2017. Accessed January 1, 2022.

- 12. Brown TB, Mann B, Ryder N, et al. Language models are few-shot learners. arXiv 2005.14165 [preprint] https://arxiv.org/abs/2005.14165. Posted May 28, 2020. Accessed January 1, 2022.

- 13. Bustos A, Pertusa A, Salinas JM, de la Iglesia-Vayá M. PadChest: a large chest x-ray image dataset with multi-label annotated reports. Med Image Anal 2020;66:101797.

- 14. Johnson AEW, Pollard TJ, Berkowitz SJ, et al. MIMIC-CXR, a de-identified publicly available database of chest radiographs with free-text reports. Sci Data 2019;6(1):317.

- 15. Rajpurkar P, Irvin J, Bagul A, et al. MURA: large dataset for abnormality detection in musculoskeletal radiographs. arXiv 1712.06957 [preprint] https://arxiv.org/abs/1712.06957. Posted December 11, 2017. Accessed January 1, 2022.

- 16. torchvision.models — Torchvision 0.11.0 documentation. Web site. https://pytorch.org/vision/stable/models.html. Accessed January 1, 2022.

- 17. CheXpert: a large chest radiograph dataset with uncertainty labels and expert comparison. arXiv 1901.07031 [preprint] https://arxiv.org/abs/1901.07031. Posted January 21, 2019. Accessed January 1, 2022.

- 18. MURA bone x-ray deep learning competition. Web site. https://stanfordmlgroup.github.io/competitions/mura/. Accessed January 1, 2022.

- 19. Zhang H, Cisse M, Dauphin YN, Lopez-Paz D. mixup: beyond empirical risk minimization. arXiv 1710.09412 [preprint] https://arxiv.org/abs/1710.09412. Posted October 25, 2017. Accessed January 1, 2022.

- 20. Yun S, Han D, Oh SJ, Chun S, Choe J, Yoo Y. CutMix: regularization strategy to train strong classifiers with localizable features. arXiv 1905.04899 [preprint] https://arxiv.org/abs/1905.04899. Posted August 7, 2019. Accessed January 1, 2022.

- 21. Dunnmon JA, Yi D, Langlotz CP, Ré C, Rubin DL, Lungren MP. Assessment of convolutional neural networks for automated classification of chest radiographs. Radiology 2019;290(2):537–544.

- 22. Papoulis A, Pillai SU, Unnikrishna Pillai S. Probability, Random Variables, and Stochastic Processes. New York, NY: McGraw-Hill, 2002.

- 23. Oakden-Rayner L. Exploring large-scale public medical image datasets. Acad Radiol 2020;27(1):106–112.

- 24. Wightman R, Touvron H, Jégou H. ResNet strikes back: an improved training procedure in timm. arXiv 2110.00476 [preprint] https://arxiv.org/abs/2110.00476. Posted October 1, 2021. Accessed January 1, 2022.

- 25. Wang X, Peng Y, Lu L, Lu Z, Bagheri M, Summers RM. ChestX-Ray8: hospital-scale chest x-ray database and benchmarks on weakly-supervised classification and localization of common thorax diseases. 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, July 21–26, 2017. Piscataway, NJ: IEEE, 2017; 3462–3471.

- 26. Deng J, Dong W, Socher R, Li LJ, Li K, Li FF. ImageNet: a large-scale hierarchical image database. 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, June 20–25, 2009. Piscataway, NJ: IEEE, 2009; 248–255.

![Attention map (data-efficient image transformers [DeiT]) and gradient-weighted class activation (Grad-CAM++) map (DenseNet121) for true-positive prediction of mass by both models on (A) a National Institutes of Health Chest X-ray 14 test image and (B) a MURA test image. (A) The DeiT attention map is more precisely localized to the mass in the lingula compared with the DenseNet121 Grad-CAM++ map, which includes larger portions of the left lung that do not correspond to the mass. (B) The DeiT attention map more precisely localized to the fracture of the midhumeral diaphysis, whereas the Grad-CAM++ map localized to the humerus, which generally includes the fracture.](https://cdn.ncbi.nlm.nih.gov/pmc/blobs/56f8/9745440/8ced3d133e0f/ryai.220012.fig3.jpg)