Abstract

RNA-binding proteins (RBPs) are key co- and post-transcriptional regulators of gene expression, playing a crucial role in many biological processes. Experimental methods like CLIP-seq have enabled the identification of transcriptome-wide RNA–protein interactions for select proteins; however, the time- and resource-intensive nature of these technologies calls for the development of computational methods to complement their predictions. Here, we leverage recent, large-scale CLIP-seq experiments to construct a de novo predictor of RNA–protein interactions based on graph neural networks (GNN). We show that the GNN method allows us not only to predict missing links in an RNA–protein network, but also to predict the entire complement of targets of previously unassayed proteins, and even to reconstruct the entire network of RNA–protein interactions in different conditions based on minimal information. Our results demonstrate the potential of modern machine learning methods to extract useful information on post-transcriptional regulation from large data sets.

Keywords: RNA–protein interactions, graph neural networks, graphs, transfer learning

INTRODUCTION

RNA–protein interactions are fundamental in the regulation of gene expression. RNA-binding proteins (RBPs) are key in RNA splicing, processing, export, localization, and regulation of translation. Despite their importance, RNA–protein interactions are still relatively understudied, when compared with the DNA–protein interactions which are involved in the initiation and regulation of transcription. Many proteins with previously unsuspected RNA-binding properties are still being discovered, and more than 2000 human proteins have been experimentally determined to bind RNA (Brannan et al. 2016; Hentze et al. 2018; Liu et al. 2019). However, due to the difficulty of experimentally determining interactions between individual proteins and individual transcripts, the number of RBPs for which the identity of their interaction partners is known is much lower.

A major breakthrough in the study of RNA–protein interactions was the development of high-throughput techniques such as CLIP-seq (cross-linking and immunoprecipitation followed by next-generation sequencing) (Licatalosi et al. 2008). CLIP-seq enables the isolation of RBPs and the fragments of RNA which are bound to them, much in the way that ChIP-seq is used to determine regions of DNA bound by transcription factor proteins. Thanks to improvements in the technology such as the development of the eCLIP protocol (Van Nostrand et al. 2016), huge numbers of RBP binding sites are being verified. Despite these advances, practical and conceptual hurdles mean that we are still very far from a comprehensive mapping of the network of RNA–protein interactions. First of all, such networks are intrinsically condition dependent (for example, simply because specific transcripts might be present or absent in different conditions). Secondly, the experimentally determined interactions are inevitably noisy, meaning that both false-positive and false-negative results are likely. Thus, there is a need for computational methods to complement experimental techniques by filtering noise and predicting interactions for new conditions as well as new RBPs. Here, we consider the problem of predicting RNA–protein interaction (RPI) pairs from a machine learning perspective, where a model is trained on currently validated interactions, using RNA and protein sequences as inputs.

Most current predictive methods focus on the narrower problem of predicting binding sites for a specific protein within RNAs, often combining sequence and secondary structure of the target transcript (Kazan et al. 2010; Maticzka et al. 2014; Alipanahi et al. 2015; Uhl et al. 2021). Due to the lack of large-scale CLIP-seq data sets in the past, methods for predicting RNA–protein interaction pairs have only been trained on small data sets (Pan et al. 2019, Node features section). RPIseq (Muppirala et al. 2011) uses the sequence information of RNAs and proteins to predict interactions using SVM and random forests as classifiers. catRAPID omics (Agostini et al. 2013) uses the physicochemical properties of sequences to predict RNA–protein interactions on a genome-wide scale. Deep learning-based methods were also proposed (e.g., IPMiner [Pan et al. 2016], RPI-SAN [Yi et al. 2018], RPIFSE [Wang et al. 2019], RNAcommender [Corrado et al. 2016], and ELM∗ [Wang et al. 2018]), but due to data paucity they were not trained in an end-to-end way and usually relied on advanced feature engineering. As far as we can tell, all models for RNA–protein interaction prediction, such as RPIseq (Muppirala et al. 2011), IPMiner (Pan et al. 2016), and recent models like NPI-GNN (Shen et al. 2021), have been designed for small data sets with a handful of proteins and targets (see Supplemental Table S1). The only method we found that was developed with large data sets in mind was RNAcommender (Corrado et al. 2016) (which in the original paper was trained and tested on heterogeneous data from different experiments).

In this paper, we propose to exploit new, large-scale eCLIP data sets (Van Nostrand et al. 2020) to shift the problem of RNA–protein interaction prediction to the network level, that is, attempting to predict the whole network of RPI in an organism in a particular condition in an end-to-end way. We use graph neural networks for predicting RPI, moving away from the paradigm of predicting the targets of a single protein toward leveraging whole network information. To achieve this, we curate a data set of RNA–protein interactions in homogeneous conditions using the high-throughput CLIP-seq data generated as part of the ENCODE project (Van Nostrand et al. 2020) to train our models. We also show that the model can be used to predict the interactions for previously unseen proteins as well as transfer the knowledge to a network observed under different biological conditions. We achieve this by using the similarity between the sequence-based features of proteins to elicit the embedding for a previously unseen protein. The results show the superiority of our approach in the biologically more relevant task of predicting interactions for proteins that were not encountered during training.

RESULTS

Proposed approach

Most RPI prediction tools start by assigning a feature representation to proteins and RNAs, and then train a supervised machine learning algorithm on a set of annotated positive/negative interactions. While this has been a successful strategy by and large, it does not explicitly leverage the network information: protein/RNA nodes are simply summarized as feature vectors, and knowledge about shared targets/regulators is not directly incorporated in the prediction algorithm. With the advent of large CLIP-seq data sets, this network-level information is likely to play an increasingly relevant role in improving algorithmic performance. In this paper, we propose to adopt link prediction algorithms based on Graph Neural Networks (GNNs) to perform network-based prediction of RPIs. In recent years, GNNs have become an indispensable tool for applying neural networks to the graph domain (for recent reviews, see Wu et al. 2020b; Zhou et al. 2020). GNNs use message passing between the nodes of a graph to nonlinearly transform feature vectors and learn low-dimensional embeddings for predicting interactions between nodes. In a way, GNNs generalize the well-known convolution operation of neural networks to graphs (Wu et al. 2020b; Zhou et al. 2020). In this paper, we use a graph convolutional network (GCN) (Kipf and Welling 2017) with two convolutional layers as the GNN model (see Supplemental Table S2 for a comparison with different GNN architectures).
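To make the message-passing idea concrete, the following is a minimal sketch of a two-layer graph convolutional encoder in the style of Kipf and Welling (2017), written in plain Python on a toy bipartite graph. It is an illustration only, not the implementation used in this work: the weights are random rather than trained, the dimensions are far smaller than those used in the experiments, and the inner-product decoder in `link_score` is an assumption.

```python
import math
import random

def normalized_adjacency(n, edges):
    """Return D^{-1/2} (A + I) D^{-1/2} as a dense list-of-lists matrix."""
    a = [[0.0] * n for _ in range(n)]
    for i in range(n):
        a[i][i] = 1.0  # self-loops, so each node keeps its own features
    for u, v in edges:
        a[u][v] = 1.0
        a[v][u] = 1.0
    deg = [sum(row) for row in a]
    return [[a[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)]
            for i in range(n)]

def matmul(a, b):
    """Dense matrix product of two list-of-lists matrices."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]

def relu(m):
    return [[max(0.0, x) for x in row] for row in m]

def gcn_encode(a_hat, x, w1, w2):
    """Two graph-convolution layers: Z = A_hat ReLU(A_hat X W1) W2."""
    h = relu(matmul(a_hat, matmul(x, w1)))
    return matmul(a_hat, matmul(h, w2))

def link_score(z, u, v):
    """Sigmoid of the inner product of two embeddings (assumed decoder)."""
    dot = sum(p * q for p, q in zip(z[u], z[v]))
    return 1.0 / (1.0 + math.exp(-dot))

# Toy bipartite graph: nodes 0-1 are proteins, nodes 2-4 are RNAs.
rng = random.Random(0)
edges = [(0, 2), (0, 3), (1, 3), (1, 4)]
n, in_dim, hidden_dim, out_dim = 5, 4, 3, 2
x = [[rng.gauss(0, 1) for _ in range(in_dim)] for _ in range(n)]
w1 = [[rng.gauss(0, 1) for _ in range(hidden_dim)] for _ in range(in_dim)]
w2 = [[rng.gauss(0, 1) for _ in range(out_dim)] for _ in range(hidden_dim)]
a_hat = normalized_adjacency(n, edges)
z = gcn_encode(a_hat, x, w1, w2)  # one low-dimensional embedding per node
```

In a trained model the weight matrices would be fitted so that `link_score` is high for observed interactions and low for negative ones.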

Figure 1 illustrates the general workflow of our proposed framework. Briefly, CLIP-seq data for a specific cell line is used to identify RNA–protein interactions, thus creating an RPI network. Protein and RNA sequences are used to extract features for the nodes in the graph. RNA-seq for the cell line is used to identify abundant RNAs, which is subsequently used to identify highly likely negative interactions. The interactions and node features are then used to train a GNN (or other machine learning models), which transforms the features to learn low dimensional representations for the nodes and predicts interactions in different scenarios. In this paper, we use the graph convolutional network (GCN) of Kipf and Welling (2017) as the encoder. Further details can be found in Materials and Methods.

FIGURE 1.

Pictorial representation of the framework presented in this paper: the raw data is transformed to obtain node features, positive and negative interactions, which serve as input for the GNN. The trained model is used for making predictions as shown using the genome tracks.

Our models were trained using the large-scale eCLIP data sets for RNA–protein interactions extracted from the ENCODE project (see Data set section). We use RPIseq (Muppirala et al. 2011) and RNAcommender (Corrado et al. 2016) as the baseline models to compare against our GNN-based approach. For GCN and RPIseq, we consider variants, GCN (RNA) and RPIseq (RNA), where RNA-seq information is used to provide information about the presence and quantity of RNA in a given biological sample (see Materials and Methods for more details). Other similar methods like IPMiner (Pan et al. 2016) and NPI-GNN (Shen et al. 2021) fail to run on our data sets due to memory and computation time issues.

The models are evaluated under three different scenarios: (i) We assume that some percentage of the RNA–protein interactions are missing in the Transductive link prediction section; (ii) we perform leave-one-protein-out experiments in the Inductive link prediction section, assuming the availability of full interaction information for the remaining proteins; and (iii) transfer learning of RNA–protein interactions from a source cell line to a target cell line in the Transfer learning section. In scenarios (ii) and (iii), we use the similarity between the sequence-based features of proteins to elicit the embedding for a previously unseen protein. Using machine learning terminology, we refer to scenario (i) as transductive learning (as the set on which predictions are needed is part of the graph), scenario (ii) as inductive learning, and scenario (iii) as transfer learning.

Transductive link prediction

For the first evaluation of the models, we consider the scenario in which varying percentages of positive edges are removed from the RPI network. An equal number of negative interactions, as defined in the Negative interactions section, can be sampled for the test set either uniformly at random or proportionally to the degree of each protein. The second setting is considerably harder in practice because the network has to implicitly learn the degree information from training data. Additionally, the harder setting is likely to be more representative of true biological missing data. For GCN, we use the AUROC on the validation set to select the best model. We run all experiments 10 times and report the average results and standard deviations, highlighting in boldface the best results (determined using two-sample t-tests) in tables.
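The two ways of drawing negative test edges can be sketched as follows. This is an illustrative reading of the "easy" and "hard" settings, not the exact procedure used in the experiments: the function name `sample_negatives`, the dictionary layout of the candidate negatives, and the choice to first pick a protein with probability proportional to its degree and then pick one of its candidate RNAs uniformly are all assumptions.

```python
import random

def sample_negatives(candidates, n_samples, mode="uniform", degree=None, seed=0):
    """Sample negative (protein, rna) pairs without replacement.

    candidates: dict mapping protein -> list of highly likely unbound RNAs.
    mode="uniform": every candidate pair is equally likely ("easy" setting).
    mode="degree":  a protein is drawn with probability proportional to its
                    degree, then one of its candidate RNAs is drawn uniformly
                    ("hard" setting).
    """
    rng = random.Random(seed)
    if mode == "uniform":
        pool = [(p, r) for p, rnas in candidates.items() for r in rnas]
        return rng.sample(pool, n_samples)
    remaining = {p: list(rnas) for p, rnas in candidates.items() if rnas}
    chosen = []
    while len(chosen) < n_samples and remaining:
        proteins = list(remaining)
        weights = [degree[q] for q in proteins]
        p = rng.choices(proteins, weights=weights, k=1)[0]
        r = remaining[p].pop(rng.randrange(len(remaining[p])))
        chosen.append((p, r))
        if not remaining[p]:
            del remaining[p]  # this protein has no candidates left
    return chosen

# Toy example: P1 has many more known targets than P2.
candidates = {"P1": ["r1", "r2", "r3", "r4"], "P2": ["r1", "r5", "r6", "r7"]}
degree = {"P1": 90, "P2": 10}
easy = sample_negatives(candidates, 4, mode="uniform")
hard = sample_negatives(candidates, 4, mode="degree", degree=degree)
```

In the degree-proportional setting, high-degree proteins contribute more negatives, so a model cannot score well simply by predicting that well-connected proteins bind everything.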

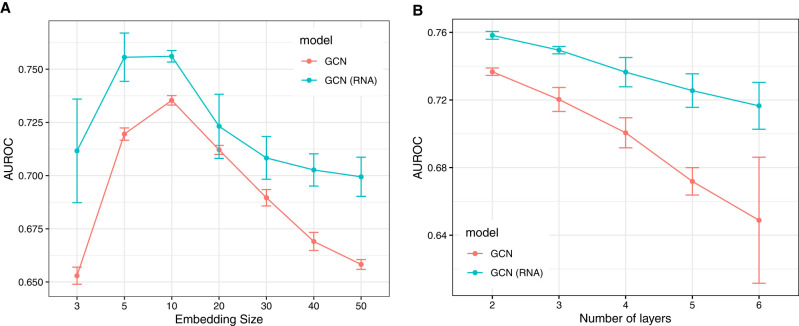

A hyperparameter that needs to be tuned for GCNs is the dimension of the final embedding of the nodes. In Figure 2, we plot the performance of three different variants of GCN as the final embedding dimension is varied (the hidden dimension is kept constant at 50). While all methods for all embedding dimensions provide results which are clearly better than random predictors, the trend shows a clear peak at dimensions between 5 and 10 for all methods; we therefore choose 10 as the final embedding size for all subsequent evaluations of GCN under different settings. We also test the impact of increasing the number of layers from two to six in GCN. The results in Figure 2 show that the performance degrades as we stack more layers in GCN, which can be attributed to the over-smoothing of the node representations (Oono and Suzuki 2020; Chen et al. 2020).

FIGURE 2.

Comparing the performance of the two GCN settings while varying the size of the final embedding (left) and the number of layers in GCN (right). The test set contains 20% edges and the hidden embedding size is set to 50. The error bars show the standard deviation on 10 independent trials.

The results in Tables 1 and 2 show the performance of different models on the transductive link prediction task for the “hard” setting of negative interactions on the RPI networks of K562 and HepG2 cells, respectively. The tests are performed varying the percentage of edges in the test set from 10% to 50%. Our results show that our GCN-based models are comparable with RPIseq and consistently outperform RNAcommender in the task of transductive link prediction by a clear margin. As expected, the performance drops as we increase the number of edges in the test set (thus decreasing the size of the training set); however, for all sizes of the training set the performance of the GCN approach remains above 70% AUROC. We observe that providing RNA-seq information consistently improves the predictive performance of both RPIseq and GCN (this effect is more pronounced when using word2vec-based node features in GCN, as seen in Supplemental Table S5).

TABLE 1.

Comparing the AUROC for transductive learning setting in K562 cell line with varying percent of edges in the test set (validation set contains 10% edges in all cases)

TABLE 2.

Comparing the AUROC for transductive learning setting in HepG2 cell line with varying percent of edges in the test set (validation set contains 10% edges in all cases)

Results for the simpler setting when negative test edges are selected at random are shown in Table 3. Here we see a significant improvement for all the approaches, with the GCN achieving test AUROC values surpassing 90% (in some cases substantially so). In this case, the GCN outperforms RPIseq as well.

TABLE 3.

Comparing the AUROC for transductive learning setting in K562 cell line in “easy” setting while varying the percent of edges in the test set (validation set contains 10% edges in all cases)

Inductive link prediction

The ability to make de novo predictions of RNA–protein interactions is one of the biggest motivations for developing computational models for this problem. This is known as the problem of inductive (or out-of-sample) link prediction in the GNN community. Such analysis is particularly valuable as the model predictions can serve as a proxy for proteins for which high-throughput data is not currently available. In this setting, we create a training network by removing interaction data for the test and validation proteins. The trained model then computes the embedding $z_v$ for a new protein $v$ using the normalized feature similarity $\mathrm{sim}(x_v, x_u)$ (based on inverse Euclidean distance or cosine similarity) to previously seen proteins:

$$z_v = \sum_{u \in \mathcal{P}_{\mathrm{train}}} \mathrm{sim}(x_v, x_u)\, z_u \tag{1}$$

where $\mathcal{P}_{\mathrm{train}}$ is the set of proteins in the training network $G_{\mathrm{train}}$, while $x_u$ and $z_u$ are the features and embeddings of a previously seen protein $u$. We perform experiments with a single protein in the test set (with all highly likely negative interactions as defined in the Negative interactions section). This can create a potential class imbalance in the positive and negative interactions in the test and validation sets. We therefore also use the average precision (AP) metric introduced in the Link prediction and evaluation metrics section, which is a better measure for an imbalanced data set. The protein with the highest feature similarity to the test protein is chosen as the validation protein. This is justifiable from a biological standpoint as it helps to reduce bias in the model predictions.
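The similarity-weighted embedding of Equation 1 can be sketched in a few lines. This is a minimal illustration under stated assumptions: the similarities are normalized to sum to one over the seen proteins, a small constant `eps` guards against division by zero in the inverse distance, and the function names are hypothetical. Cosine similarities can be negative, which this simple normalization does not handle specially.

```python
import math

def inverse_distance(a, b, eps=1e-8):
    """Inverse Euclidean distance; eps avoids division by zero (assumption)."""
    return 1.0 / (math.dist(a, b) + eps)

def cosine(a, b):
    """Cosine similarity between two non-zero feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))

def embed_unseen(x_new, seen_features, seen_embeddings, sim=inverse_distance):
    """Embedding of an unseen protein as a similarity-weighted sum of the
    embeddings of previously seen proteins (weights normalized to sum to 1)."""
    raw = {u: sim(x_new, x_u) for u, x_u in seen_features.items()}
    total = sum(raw.values())
    dim = len(next(iter(seen_embeddings.values())))
    z = [0.0] * dim
    for u, s in raw.items():
        weight = s / total
        for k in range(dim):
            z[k] += weight * seen_embeddings[u][k]
    return z

# Toy example: the new protein is equally similar to both seen proteins,
# so its embedding is the midpoint of their embeddings.
seen_features = {"A": [1.0, 0.0], "B": [0.0, 1.0]}
seen_embeddings = {"A": [1.0, 1.0], "B": [3.0, -1.0]}
x_new = [0.5, 0.5]
z_inv = embed_unseen(x_new, seen_features, seen_embeddings)
z_cos = embed_unseen(x_new, seen_features, seen_embeddings, sim=cosine)
```

Because only sequence-derived features of the new protein are needed, no interaction data for that protein enters the prediction.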

Figure 3 compares the performance of different models over the entire set of proteins in the inductive link prediction setting. Each box plots the distribution of mean AUROC/AP for proteins in the K562 cell line (10 replications for each protein). The results show that on average all variations of GCN outperform RNAcommender (labeled as RNAcom in the plots) in the K562 cell line. More specifically, GCN with inverse distance-based similarity outperforms RNAcommender on 93.33% and 87.5% of proteins on AUROC and AP, respectively. When compared with RPIseq, GCN with inverse distance-based similarity is better on 85% of proteins on both AUROC and AP. Among the different settings of GCN, we observe that the choice of similarity function has very little impact on the model performance and even appending RNA-seq to the final embeddings does not seem to substantially improve model performance (although we do see some improvement in the HepG2 cell line, see Supplemental Fig. S3).

FIGURE 3.

Comparing the performance of various models for de novo prediction in K562 cell line. Each box shows the distribution of mean AUROC (A) or average precision (B) over the entire set of proteins when the model is tested for a single protein in the test set.

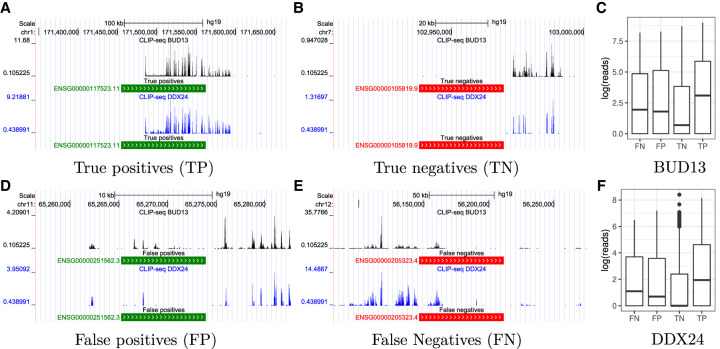

Figure 4 shows genome tracks annotated by the predictions of our model for two proteins, BUD13 and DDX24, in the inductive link prediction task for the K562 cell line. Figure 4A and B show example regions corresponding to predicted true positives and true negatives; as expected, true positives correspond to regions with a strong binding signal, while true negatives display a complete absence of signal. Figure 4D and E show examples of wrong predictions (false positives and false negatives, respectively): both examples show a modest amount of binding, likely representing regions that are borderline cases in the peak calling procedure. This suggests that the incorrect predictions by the model may correspond to potentially noisy regions. This point is illustrated globally using Figures 4C and F, which plot the distribution of reads per transcript for the four outcomes. We observe that the true positive and true negative predictions respectively have significantly higher and lower number of reads compared to the other cases, whereas the false positive and false negative predictions by the model have intermediate amounts of reads, potentially corresponding to noisy regions.

FIGURE 4.

The plots show representative genome tracks produced using the eCLIP data annotated by predictions made by our model under four different outcomes: true positives in A, true negatives in B, false positives in D, and false negatives in E. We consider two proteins, BUD13 and DDX24, in the inductive link prediction task for the K562 cell line. Positive predictions are shown in green and negative predictions in red. We also plot the distribution of reads (Fig. 4C,F) for the two proteins under the four outcomes.

Transfer learning

The ENCODE data set (Van Nostrand et al. 2020) consists of 223 eCLIP experiments for 150 proteins across two different cell lines (K562 and HepG2). This provides an opportunity to perform transfer learning, where a model learnt from the eCLIP data for a source cell line can be used to predict the interactions in a target cell line. This is potentially the most interesting aspect of our approach, as it would permit researchers to obtain a reasonable prediction of an RPI network in new conditions based on minimal information about the target condition.

To train a model for transfer learning, we split the interactions from the source cell line by assigning all interactions from a fixed percentage of randomly chosen proteins to the validation set. Splitting the data in this way allows us to choose a model that has higher predictive power on previously unseen proteins. For creating the test set using the target cell line, we only consider interactions with RNAs that already exist in the source cell line. This allows us to exclusively focus on transfer learning for proteins. Negative interactions in the test set (same number as positive interactions) are sampled uniformly at random from the highly likely negative interactions in the target cell line (“easy” setting in the Transductive link prediction section). This is a reasonable assumption because to sample negative interactions proportional to a protein's degree (“hard” setting), we need to have a priori knowledge about its interactions in the target cell line.

As in the Inductive link prediction section, we use Equation 1 to compute embeddings for the proteins in the test and validation sets. We specifically focus on the use of RNA-seq in transfer learning as it can provide information about RNA abundance in the target cell line. For transfer learning, we append the transcripts per million (TPM) counts of the source cell line to the RNA embeddings during training, but replace them with the TPM counts of the target cell line when making the final predictions. Note that RNAcommender cannot be used for transfer learning, which is why it has been omitted in Tables 4 and 5.
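The abundance swap described above can be sketched as follows. The helper name `append_tpm`, the dictionary layout, and the optional `scale` argument (for example a log transform) are assumptions made for illustration; the text only states that TPM counts are appended to the RNA embeddings.

```python
def append_tpm(rna_embeddings, tpm, scale=lambda t: t):
    """Append one abundance feature to every RNA embedding.

    rna_embeddings: dict mapping RNA id -> list of floats.
    tpm:            dict mapping RNA id -> TPM in the chosen cell line.
    scale:          optional transform of the TPM value (identity by default).
    """
    return {rna: list(z) + [scale(tpm[rna])] for rna, z in rna_embeddings.items()}

# Toy example with two RNAs shared by the source and target cell lines.
rna_embeddings = {"rna1": [0.2, -0.1], "rna2": [0.5, 0.3]}
tpm_source = {"rna1": 12.0, "rna2": 0.5}
tpm_target = {"rna1": 1.5, "rna2": 40.0}

train_inputs = append_tpm(rna_embeddings, tpm_source)    # used during training
predict_inputs = append_tpm(rna_embeddings, tpm_target)  # used for prediction
```

Only the last coordinate differs between the two sets of inputs, so any change in the predictions between cell lines is driven by the abundance feature.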

TABLE 4.

Comparing the AUROC for transfer learning from K562 to HepG2

TABLE 5.

Comparing the AUROC for transfer learning from HepG2 to K562

The results in Tables 4 and 5 show that the GCN approach provides a good predictive accuracy even in the transfer learning mode, with AUROC values over 70% in most of the cases. Further, the best GCN variant also outperforms RPIseq in the transfer learning scenario. It should be noted that these results should be compared to Table 3 as we use the “easy” setting for negative edges in this section. Additionally, there appears to be a clear advantage of using RNA abundance data in transfer learning as GCN (RNA) is the best performing model in most cases. This is intuitively appealing, as it shows that the RNA-seq data clearly conveys some information about the state of the cell which is relevant to the prediction of the RPI network. Nevertheless, it is still very surprising that, even without RNA-seq information, GCN provides good predictive performance. To contextualize this observation, in Figure 5 we compare the ROC curve for GCN (RNA) to the prediction we would obtain by just assuming the two RPI networks to be the same on the set of proteins/RNAs shared by the two eCLIP experiments (naive transfer). Remarkably, the performance of this naive transfer approach is only marginally better than random, and considerably worse than the GCN prediction at the same false positive rate. This indicates that the GCN learns primarily robust interactions that are seen in multiple different environments, which are presumably hard-wired into the protein–RNA sequence features.
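The naive transfer baseline corresponds to a single point in ROC space, which can be computed as sketched below. The function name and the toy edge lists are hypothetical; the only rule taken from the text is that a target pair is predicted positive exactly when it is an edge in the source network.

```python
def naive_transfer_rates(source_edges, target_pos, target_neg):
    """False positive and true positive rates obtained by copying the source
    network onto the target cell line."""
    tp = sum(1 for e in target_pos if e in source_edges)
    fp = sum(1 for e in target_neg if e in source_edges)
    return fp / len(target_neg), tp / len(target_pos)

# Toy example on proteins/RNAs shared by the two cell lines.
source_edges = {("P1", "r1"), ("P1", "r2"), ("P2", "r3")}
target_pos = [("P1", "r1"), ("P1", "r4"), ("P2", "r3"), ("P2", "r5")]
target_neg = [("P1", "r2"), ("P1", "r6"), ("P2", "r7"), ("P2", "r8")]
fpr, tpr = naive_transfer_rates(source_edges, target_pos, target_neg)
```

Comparing this point with the model's true positive rate at the same false positive rate gives the kind of comparison made in Figure 5.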

FIGURE 5.

ROC curve for transfer learning from K562 to HepG2 cell line for GCN (RNA). Red dot corresponds to the false positive and true positive rates if the edges from the source cell line are directly transferred to the target cell line.

We also observe that the performance of GCN degrades as the size of the validation set is increased. This implies that the model learns better by seeing more data from the source cell line instead of just overfitting to the training data. Comparing the results in Tables 4 and 5, we observe that transfer from HepG2 to K562 seems easier than in the other direction, specifically for GCN. There are obvious differences between the two data sets: more proteins were profiled within the K562, while the HepG2 data set had higher density of interactions, both of which may affect the complexity of the models learned. Additionally, the two biological systems are very different (one a leukaemia cell line, the other derived from a hepatocellular carcinoma), likely with different complexities. None of these observations, however, points to a clear reason why transfer should work better in one direction than the other. A follow-up study trying to investigate these modest differences in performance would likely need a deeper dive into the biology and novel experiments, and is thus outside the scope of this paper.

DISCUSSION

Experimental discovery of RPIs has been a major focus of research over the last 10 years. After a pioneering period where novel technologies were still being developed, the last few years have seen an effort toward scaling and standardizing the technology (Van Nostrand et al. 2016), resulting in the publication of the first large-scale compendia of RNA–RBP interactions in human cell lines (Van Nostrand et al. 2020).

These technological developments call for a change in the way RPI data are modeled. Earlier approaches (for review, see e.g., Pan et al. 2019) focused on predicting the targets (or the binding sites) of individual RBPs, treating potential target transcripts as i.i.d. observations, thus enabling the deployment of machine learning approaches such as CNNs. Even when a network of interactions was used (e.g., in RPIseq [Muppirala et al. 2011]), the data sets did not consider genome-wide targets for proteins and were hence incomplete. Now, the availability of binding data from hundreds of RBPs leads to hundreds of correlated prediction tasks, calling for methods that can effectively leverage the network of interactions. In this spirit, our GNN model transfers information between the binding data of different RBPs, translating the problem of predicting the binding targets of an RBP to predicting the whole RPI network.

Our experiments demonstrate considerable promise in this attempt. While our GNN-based approach is on par with or better than other competitors on the classical tasks of link prediction, it offers strong predictive performance in out-of-sample inductive predictions of the targets of an unseen RBP. Additionally, we show empirically that the GNN approach is also able to perform transfer learning, that is, predict the RPI network in a different (related) condition starting from RPI data from a well characterized condition, a task that, to our knowledge, has not been attempted before. Further, we found that the use of RNA-seq information enhanced the performance of the GNN model. Overall, our approach provides significant advantages and should be chosen for making de novo predictions of RNA–protein interactions, specifically when bolstered by the use of RNA-seq information.

While we believe GNNs hold much promise for the problem of RPI network prediction, a number of areas for future improvement are clearly open. First of all, proteins (and transcripts) are characterized solely by their sequence and their binding partners in our approach, making the task of predicting the full complement of binding partners for a new protein (inductive link prediction) difficult. In principle, the availability of additional node information (for example, in the form of protein-protein interactions, or of ontological annotations) could be easily incorporated in the GNN framework, potentially leading to significant improvements. Another area of great interest where improvements are certainly possible is transfer learning. Here, the question is to identify suitable covariates which can be used to measure the similarity of different domains. In this paper, we show that the use of RNA-seq data helps in the transfer learning task, presumably because it recapitulates some information on the state of the cell; nevertheless, more appropriate task descriptors might be found and integrated in the framework. While we found that a simple GNN architecture can provide strong predictive performance, there remains a need to further investigate heterogeneous GNN models and/or architectures specifically designed for link prediction. Finally, using richer features derived from structural information of proteins and RNAs is another aspect that can potentially improve performance of machine learning methods.

MATERIALS AND METHODS

Data set

CLIP-seq experiments can provide genome-wide binding sites for RBPs. To retrieve these binding sites, the CLIP-seq reads are first mapped to a reference genome, followed by identification of the peaks of reads using peak calling software. These peaks correspond to RBP binding sites based on a certain predefined cutoff, which can be used to identify the set of RNAs a protein binds to.

To construct the benchmark data sets, we use the eCLIP data set for two cell lines (HepG2 and K562) generated as part of the ENCODE project phase III (Van Nostrand et al. 2020). We use the highly reproducible peaks identified from the two replicates of the eCLIP data using the irreproducibility discovery rate (IDR) framework (Li et al. 2011) to obtain the binding regions. The gene corresponding to each binding site is obtained by using the bedtools intersect function to intersect the peaks with the human genome annotation (Gencode v19), requiring a predefined minimum overlap between genomic features (50% in this study). Repeating the procedure for each protein in the eCLIP data set, we obtain a bipartite network G = (V, E, X) of RNA–protein interactions for a particular cell line. The network G contains a set of nodes V = R ∪ P, where R is the set of RNAs and P is the set of RBPs, an edge set E ⊆ R × P of RNA–protein interactions, and a matrix X of node features (see the Node features section for further details).
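The step from peaks to edges can be illustrated with a small pure-Python stand-in for the interval intersection. This sketch does not call bedtools and is not the pipeline used here: measuring the overlap as a fraction of the peak length, ignoring strand, and using half-open coordinates are assumptions, and all identifiers and coordinates are toy values.

```python
def overlap_fraction(peak, gene):
    """Fraction of the peak covered by the gene; intervals are (start, end),
    half-open, on the same chromosome."""
    start = max(peak[0], gene[0])
    end = min(peak[1], gene[1])
    return max(0, end - start) / (peak[1] - peak[0])

def build_edges(peaks_by_protein, genes, min_overlap=0.5):
    """Return the edge set E as (rna, protein) pairs: a protein is linked to
    a gene if at least one of its peaks overlaps the gene by min_overlap."""
    edges = set()
    for protein, peaks in peaks_by_protein.items():
        for chrom, start, end in peaks:
            for gene_id, (g_chrom, g_start, g_end) in genes.items():
                if chrom != g_chrom:
                    continue
                if overlap_fraction((start, end), (g_start, g_end)) >= min_overlap:
                    edges.add((gene_id, protein))
    return edges

# Toy annotation and peaks.
genes = {"geneA": ("chr1", 100, 200), "geneB": ("chr1", 300, 400)}
peaks_by_protein = {
    "P1": [("chr1", 150, 190), ("chr1", 280, 310)],  # second peak overlaps geneB by 1/3
    "P2": [("chr1", 350, 360), ("chr2", 120, 180)],  # second peak is on another chromosome
}
edges = build_edges(peaks_by_protein, genes)
```

The nested loop is quadratic and only suitable for toy inputs; an interval index or a dedicated tool is needed at genome scale.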

The final graph for the K562 cell line consists of 14,665 nodes (120 proteins and 14,545 RNAs) with 144,527 interactions between proteins and RNAs. The mean (out) degree of proteins is 1204.39 with a standard deviation of 1304.64, while the mean and standard deviation of the RNA (in) degree are 9.94 and 10.27, respectively. For the HepG2 cell line, the graph contains 15,018 nodes (103 proteins and 14,915 RNAs) and 145,509 edges. The mean (out) degree of proteins is 1412.71 with a standard deviation of 1380.69, while the mean and standard deviation of the RNA (in) degree are 9.76 and 10.03, respectively. Supplemental Figure S1 plots the distribution of protein and RNA degrees for the RPI networks in the two cell lines. As far as we know, we are the first ones to create a graph from a homogeneous data set with hundreds of RBPs using the eCLIP data set of Van Nostrand et al. (2020).
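The node counts and mean degrees quoted above follow directly from the numbers of proteins, RNAs, and edges, as the short check below shows. The density (edges divided by the number of possible protein–RNA pairs) is an additional derived quantity not stated in the text; the dictionary layout is purely illustrative.

```python
graphs = {
    "K562": {"proteins": 120, "rnas": 14545, "edges": 144527},
    "HepG2": {"proteins": 103, "rnas": 14915, "edges": 145509},
}

def summarize(g):
    """Derive node count, mean degrees, and density of a bipartite graph."""
    return {
        "nodes": g["proteins"] + g["rnas"],
        "mean_protein_degree": round(g["edges"] / g["proteins"], 2),
        "mean_rna_degree": round(g["edges"] / g["rnas"], 2),
        "density": g["edges"] / (g["proteins"] * g["rnas"]),
    }

summary = {name: summarize(g) for name, g in graphs.items()}
```

Under this definition the HepG2 network is denser than the K562 network, even though fewer proteins were profiled in HepG2.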

Negative interactions

CLIP-seq experiments provide information about binding sites from the peaks of reads, but they do not provide any information about unbound sites. False negatives are a well-known problem in CLIP-seq data because of the absence or low concentration of transcripts in the cell line used for experiments (Maticzka et al. 2014; Uhl et al. 2017). Appropriately defining negative samples is important for training machine learning algorithms (Mikolov et al. 2013a). Negative interactions play a crucial role in computation of the loss function, and recent work by Ying et al. (2018) has shown that appropriately choosing the negative samples can boost the performance of a link predictor. This becomes even more important for bipartite networks where randomly sampling two unconnected nodes can produce edge-types that do not exist in the data (RNA–RNA, for example).

To tackle this issue, the following two strategies have been commonly used to construct negative samples from CLIP-seq data (Pan et al. 2019): (i) use the regions where no binding sites are located as negative samples, or (ii) use randomly shuffled nucleotides in the positive sequences as negative samples. We augment the first strategy by utilizing the RNA-seq transcript abundance data to identify RNAs that have transcripts per million (TPM) counts more than the median value in the cell line but still do not have any peaks with the corresponding protein to define negative samples. This strategy allows the model to learn from highly likely unbound sites of real RNA sequences and alleviates the problem of false negatives described above. Using RNA-seq, we identify reliable noninteracting RNAs for each protein and consequently use these negative samples to define the training and test sets.
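The selection of highly likely negative interactions can be sketched as a simple filter. The function name and data layout are hypothetical, and taking the median over all RNAs in the table (rather than, say, only expressed RNAs) is an assumption.

```python
from statistics import median

def likely_negatives(tpm, positives):
    """For each protein, return RNAs that are abundant (TPM above the median)
    yet have no peak with that protein.

    tpm:       dict mapping RNA id -> TPM in the cell line.
    positives: dict mapping protein -> set of RNAs with at least one peak.
    """
    cutoff = median(tpm.values())
    abundant = {rna for rna, value in tpm.items() if value > cutoff}
    return {protein: abundant - bound for protein, bound in positives.items()}

# Toy example: the median TPM is 8.0, so only r4 and r5 count as abundant.
tpm = {"r1": 0.0, "r2": 2.0, "r3": 8.0, "r4": 30.0, "r5": 100.0}
positives = {"P1": {"r4"}, "P2": {"r1", "r2"}}
negatives = likely_negatives(tpm, positives)
```

Lowly expressed RNAs such as `r1` and `r2` are never used as negatives, because the absence of a peak for them may simply reflect the absence of the transcript.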

Node features

Node features are essential for training GNNs as they allow the neighborhood aggregation process to capture the hierarchical nonlinearities in the network data. The sequences of proteins and RNAs can be encoded as numeric vectors for training machine learning models. There are two common ways of encoding RNA sequences as numeric vectors (Pan et al. 2019): (i) one-hot vector, which is a bit vector that consists of all zeros except for a single dimension, and (ii) k-mer frequency vector, which is a vector consisting of frequencies of all k-mers, similar to bag-of-words (BOW) in natural language processing. We use the following feature extraction methods, which have been successfully used by previous methods for predicting RNA–protein interactions (Muppirala et al. 2011; Pan et al. 2016):

Proteins: Conjoint triad descriptors (Shen et al. 2007) abstract the features of proteins based on the classification of amino acids according to their dipoles and volumes of the side chains. Each protein sequence is encoded using a normalized three-gram frequency distribution extracted from a seven-letter reduced alphabet representation.

RNA: k-mer frequency distribution counts the frequency of individual k-mers (AAA, AAC, …, UUU are 3-mers) in a given RNA sequence. It is the simplest feature extraction method for RNAs, where k can be used as a hyperparameter. We use k = 6 for our experiments.
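Both feature extractors reduce to counting fixed-length words over a small alphabet, which the following sketch makes explicit. The grouping of amino acids into seven classes follows Shen et al. (2007); the helper names, the handling of unknown symbols (windows containing them are skipped), and the normalization to relative frequencies are assumptions of this sketch rather than details confirmed by the text.

```python
from itertools import product

# Seven amino-acid classes of Shen et al. (2007), grouped by dipole and side-chain volume.
AA_CLASSES = ["AGV", "ILFP", "YMTS", "HNQW", "RK", "DE", "C"]
AA_TO_CLASS = {aa: str(i) for i, group in enumerate(AA_CLASSES) for aa in group}


def ngram_frequencies(symbols, alphabet, n):
    """Relative frequency of every length-n word over `alphabet` in `symbols`."""
    words = ["".join(w) for w in product(alphabet, repeat=n)]
    index = {w: i for i, w in enumerate(words)}
    counts = [0] * len(words)
    for i in range(len(symbols) - n + 1):
        pos = index.get(symbols[i:i + n])  # windows with unknown symbols are skipped
        if pos is not None:
            counts[pos] += 1
    total = sum(counts)
    # Assumption: normalize counts so that the vector sums to 1.
    return [c / total if total else 0.0 for c in counts]


def conjoint_triad(protein):
    """343-dimensional three-gram frequencies over the seven-letter reduced alphabet."""
    reduced = "".join(AA_TO_CLASS.get(aa, "?") for aa in protein.upper())
    return ngram_frequencies(reduced, "0123456", 3)


def kmer_frequencies(rna, k=6):
    """4**k-dimensional k-mer frequencies of an RNA sequence (T is read as U)."""
    return ngram_frequencies(rna.upper().replace("T", "U"), "ACGU", k)


protein_vec = conjoint_triad("MKVLAAGIVRKDEC")        # made-up peptide
rna_vec = kmer_frequencies("AUGGCUACGUAGCUAGCUAACG")  # made-up RNA
print(len(protein_vec), len(rna_vec))                 # prints: 343 4096
```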

After obtaining the protein and RNA sequences for the nodes in the network (see Supplemental Material S1 for more details), the aforementioned feature extraction methods are used to obtain $7^3 = 343$ and $4^6 = 4096$ dimensional feature vectors for proteins and RNAs, respectively. For aggregating the features in a homogeneous GNN model such as GCN, node features should have the same dimensionality. To achieve this, we use the first 100 principal components of protein and RNA features to define the node features X for the network G. To account for the heterogeneous nature of the nodes in the network (proteins and RNAs), we append 1 and 0 to the protein and RNA features, respectively. This creates a separation in the feature space of proteins and RNAs, thus allowing the GNN to distinguish between the two types of nodes.

Features can also be extracted by treating RNA and protein sequences as a special kind of language, where k-mers can be treated as words and sequences as sentences. Natural language processing techniques such as word2vec (Mikolov et al. 2013b) can then be used to learn embeddings for protein and RNA sequences (Asgari and Mofrad 2015). Results for this alternative feature extraction scenario can be found in the Supplemental Information.

Baselines

We use RPIseq (Muppirala et al. 2011) and RNAcommender (Corrado et al. 2016) as the baseline models to compare against our GNN-based approach. RNAcommender is a recommender system capable of suggesting genome-wide RNA targets for unexplored RBPs using interaction information available from high-throughput experiments performed on other proteins. In our evaluations, we use sequence-based features instead of the advanced feature engineering² (protein domain composition and the RNA predicted secondary structure) used in the original implementation of RNAcommender (Corrado et al. 2016). The sequence-based features are easier to collect and also ensure that all the methods compared in this paper use the same input features.

RPIseq (Muppirala et al. 2011) uses a family of classifiers to predict RNA–protein interactions using only sequence information. RPIseq uses 4-mer frequency distribution counts in a given RNA sequence as RNA features, while Conjoint triad descriptors (Shen et al. 2007) are used for creating protein features. In our experiments, we use a random forest with 20 trees, which was shown to outperform an SVM-based classifier in the original publication (Muppirala et al. 2011). For RPIseq, we consider a variant, RPIseq (RNA), where RNA-seq information is appended to the features in order to provide information about the presence and quantity of RNA in a given biological sample. This allows us to perform a fair comparison when both RPIseq and GCN are supplied with the additional RNA-seq information.

Graph neural networks

The ability to learn from the entire network of RNA–protein interactions enables us to build a single end-to-end model for predicting RNA–protein interactions. The GNN architecture creates a nonlinear permutation invariant transformation function on node, edge and graph features, which can be optimized for performing downstream learning tasks. The neighborhood aggregation process of GNNs allows us to capture the hierarchical nonlinearities in network data, thus learning low dimensional embeddings for the nodes of a graph. Further, the GNN architecture facilitates the aggregation of information from distant neighbors such as other proteins, thus learning better node representations. Many existing GNN architectures can be directly translated into the framework of message passing neural networks (Gilmer et al. 2017), where each node sends and receives messages [using function $M_k(\cdot)$] from its neighbors, and subsequently updates [using function $U_k(\cdot)$] its own node state:

$$h_v^{(k)} = U_k\left(h_v^{(k-1)}, \sum_{u \in N(v)} M_k\left(h_v^{(k-1)}, h_u^{(k-1)}, \ldots\right)\right) \tag{2}$$

where $h_v^{(k)}$ is the hidden representation of node $v$ in layer $k$, $M_k(\cdot)$ and $U_k(\cdot)$ are functions with learnable parameters, $N(v)$ is the set of neighbors of node $v$, and $\ldots$ represent other features (such as edge features) that can be added to the message passing process. Node features, if available, can be used as the initial hidden representation for a node.

After $K$ message passing layers, a node embedding $z_v$ is produced for each node $v$ as the final output, which can then be used for node, link, or graph level prediction tasks. The functions $M_k(\cdot)$ and $U_k(\cdot)$ share parameters across nodes, but each node is associated with an individual computation graph defined by its neighbors (Ying et al. 2019).
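The message passing scheme can be illustrated on a toy bipartite graph. This is a minimal sketch: the function name `message_passing_layer`, the particular message and update functions (which have no learnable parameters here), and the feature values are all assumptions chosen to keep the arithmetic easy to follow.

```python
def message_passing_layer(h, neighbors, message, update):
    """Apply h_v <- U(h_v, sum over u in N(v) of M(h_v, h_u)) to every node."""
    new_h = {}
    for v, h_v in h.items():
        # Assumption: messages have the same dimensionality as the node state.
        aggregated = [0.0] * len(h_v)
        for u in neighbors[v]:
            msg = message(h_v, h[u])
            aggregated = [a + m for a, m in zip(aggregated, msg)]
        new_h[v] = update(h_v, aggregated)
    return new_h


def message(h_v, h_u):
    """Toy message: forward the neighbor's state unchanged."""
    return h_u


def update(h_v, m):
    """Toy update: average the node's own state with the aggregated messages."""
    return [0.5 * (a + b) for a, b in zip(h_v, m)]


# One protein bound to two RNAs (made-up one-dimensional features).
neighbors = {"protein": ["rna_1", "rna_2"], "rna_1": ["protein"], "rna_2": ["protein"]}
h0 = {"protein": [1.0], "rna_1": [2.0], "rna_2": [4.0]}

h1 = message_passing_layer(h0, neighbors, message, update)
h2 = message_passing_layer(h1, neighbors, message, update)
print(h1, h2)
```

After the second layer the state of `rna_1` depends on the initial state of `rna_2`, although the two RNAs are not directly connected. This is the sense in which stacking layers aggregates information from distant neighbors.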

In this paper, we use a graph convolutional network (GCN) (Kipf and Welling 2017) with two convolutional layers as the GNN model. GCN bridges the gap between spectral and spatial approaches for performing convolution over graph-based data (Wu et al. 2020b). The graph convolution operation of GCN can be written as:

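$$H^{(k)} = \sigma\left(\tilde{D}^{-1/2} \tilde{A} \tilde{D}^{-1/2} H^{(k-1)} \Theta^{(k)}\right) \tag{3}$$

where $\tilde{A} = A + I$ is the adjacency matrix with self-loops and $\tilde{D}$ is the diagonal degree matrix corresponding to $\tilde{A}$, $H^{(k)}$ is the matrix containing the hidden representation of nodes at layer $k$ with $H^{(0)} = X$, $\Theta^{(k)}$ are the model parameters at layer $k$, and $\sigma(\cdot)$ is an element-wise activation function. Comparing Equations 2 and 3, for GCN the message and update functions take the following form (Gilmer et al. 2017):

$$M_k\left(h_v^{(k-1)}, h_u^{(k-1)}\right) = \frac{\tilde{A}_{vu}}{\sqrt{\tilde{D}_{vv} \tilde{D}_{uu}}}\, h_u^{(k-1)}, \qquad U_k\left(h_v^{(k-1)}, m_v^{(k)}\right) = \sigma\left(\Theta^{(k)\top} m_v^{(k)}\right)$$

where $m_v^{(k)}$ denotes the sum of the messages received by node $v$, taken over its neighbors and, through the self-loop, over $v$ itself.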
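The propagation rule of Equation 3 can be sketched in plain Python on a three-node graph. The function name `gcn_layer`, the choice of ReLU as the activation, the identity weight matrix, and the toy features are assumptions made for illustration; a practical implementation would use a tensor library and learned weights.

```python
import math


def gcn_layer(adj, h, theta):
    """One graph convolution: ReLU(D^-1/2 (A + I) D^-1/2 H Theta), using plain lists."""
    n = len(adj)
    # Add self-loops, then normalize symmetrically by the degrees of A + I.
    a_tilde = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_tilde]
    a_hat = [[a_tilde[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)]
             for i in range(n)]

    def matmul(x, y):
        return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
                for i in range(len(x))]

    z = matmul(matmul(a_hat, h), theta)
    # Assumption: ReLU is used as the element-wise activation.
    return [[max(0.0, value) for value in row] for row in z]


# Node 0 is a protein bound to RNA nodes 1 and 2 (made-up features, identity weights).
adj = [[0, 1, 1],
       [1, 0, 0],
       [1, 0, 0]]
x = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
theta = [[1.0, 0.0], [0.0, 1.0]]

h1 = gcn_layer(adj, x, theta)
print(h1)
```

Calling `gcn_layer` a second time on `h1` with a second weight matrix would correspond to a two-layer stack.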

Shchur et al. (2018) performed a comprehensive analysis of different GNN architectures and found that there is no clear winner when it comes to choosing a GNN architecture, at least on the benchmark data sets. Our results in Supplemental Table S2 also show that there is little difference between the performance of various GNN architectures. Following these observations, we decided to choose the simplest architecture with the fewest parameters for our experiments.

To add some biological context to the model, we consider using RNA-seq information for GCN. RNA-seq experiments provide high resolution information about the presence and quantity of all the RNAs in a given biological sample. RNA-seq can tell us which genes are turned on in a cell, and what their level of transcription is (Ozsolak and Milos 2011). Thus, one would assume that RNA abundance would be a good indicator of an RNA's likelihood of being bound by a protein. In GCN (RNA), we append log(1 + TPM) to the final node embedding Z of RNAs (set to −1 for all proteins) obtained after the GCN layers. The modified embeddings are then used for computing the loss and predicting interactions.
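The augmentation used in GCN (RNA) amounts to adding one extra coordinate to each final embedding. A minimal sketch follows; the function name `append_abundance`, the argument layout, and the toy numbers are hypothetical.

```python
import math


def append_abundance(embeddings, is_rna, tpm):
    """Append log(1 + TPM) to RNA embeddings and -1 to protein embeddings."""
    augmented = []
    for z, rna, value in zip(embeddings, is_rna, tpm):
        extra = math.log1p(value) if rna else -1.0
        augmented.append(list(z) + [extra])
    return augmented


# Made-up two-dimensional embeddings: one protein, then one RNA with TPM = 9.
z = [[0.1, 0.2], [0.3, 0.4]]
z_aug = append_abundance(z, is_rna=[False, True], tpm=[None, 9.0])
print(z_aug)
```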

Link prediction and evaluation metrics

The current knowledge of interactions in biological networks is often incomplete, which makes predicting missing interactions an important task (Muzio et al. 2020). Given the importance of RNA–protein interactions and the challenges associated with experimental methods, predicting potential interactions using computational models can complement our current knowledge (Corrado et al. 2016). While most existing studies focus on transductive link prediction (both nodes are in the graph), inductive (or out-of-sample) link prediction can prove immensely valuable for new proteins. Link prediction is often framed as a semisupervised learning problem, where the known links in a network are used to predict additional interactions (Al Hasan et al. 2006; Liben-Nowell and Kleinberg 2007). With the rise in the popularity of GNNs, specialized methods (Kipf and Welling 2016; Zhang and Chen 2018; Zhang et al. 2021) have been developed to deal with the link prediction task using GNNs. The link prediction task introduces additional challenges for GNNs as the method needs to learn link representations instead of node representations (Zhang et al. 2021). Link prediction is an important task for recommender systems (Wu et al. 2020a) and has been well-studied in the heterogeneous graph learning literature (Wang et al. 2020; Yang et al. 2020).

In a GNN for link prediction, the message passing procedure described in the Graph neural networks section is used to compute individual node representations $z_u$, following which a function $p_{uv} = f(z_u, z_v)$ can be used to predict the probability of the link $(u, v)$. In our implementation, we use the dot product of the final embeddings as the function $f(\cdot)$. The model can be trained to maximize the likelihood of reconstructing the true adjacency matrix $A$ using the binary cross entropy loss:

$$\mathcal{L} = -\sum_{u,v} \left[ A_{uv} \log p_{uv} + \left(1 - A_{uv}\right) \log\left(1 - p_{uv}\right) \right] \tag{4}$$
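A dot-product decoder with a binary cross entropy loss can be sketched as follows. Passing the dot product through a sigmoid to obtain a probability, and averaging the loss over the labelled pairs, are assumptions of this sketch; the function names and toy embeddings are hypothetical.

```python
import math


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def link_probability(z_u, z_v):
    """Dot-product decoder; the sigmoid squashing is an assumption of this sketch."""
    return sigmoid(sum(a * b for a, b in zip(z_u, z_v)))


def bce_loss(embeddings, pairs, labels):
    """Mean binary cross entropy over labelled (u, v) pairs."""
    eps = 1e-12  # guards against log(0)
    total = 0.0
    for (u, v), y in zip(pairs, labels):
        p = link_probability(embeddings[u], embeddings[v])
        total -= y * math.log(p + eps) + (1 - y) * math.log(1.0 - p + eps)
    return total / len(pairs)


# Made-up embeddings: rna_a points along the protein, rna_b points away from it.
emb = {"protein_x": [1.0, 0.0], "rna_a": [2.0, 0.0], "rna_b": [-2.0, 0.0]}
pairs = [("protein_x", "rna_a"), ("protein_x", "rna_b")]

loss_matching = bce_loss(emb, pairs, labels=[1, 0])
loss_flipped = bce_loss(emb, pairs, labels=[0, 1])
print(loss_matching, loss_flipped)
```

The loss is lower when the labels agree with the geometry of the embeddings, which is the signal the training procedure exploits.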

Splitting networks into training and test subnetworks is not trivial in link prediction problems. While performing the train-test split of edges, we need to make sure that every node in the training network has a non-empty set of neighbors so that the GNN can learn appropriate representations using the message passing process shown in Equation 2. To ensure this, test edges are sampled for each RNA while making sure that it stays connected in the training network.
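One way to sample test edges per RNA while keeping every RNA connected in the training network is sketched below. The function name `split_edges`, the `test_fraction` and `seed` parameters, and the toy edge list are assumptions; the sketch only guards the RNA side, so a protein with very few edges could still lose all of them and would need a separate check.

```python
import random
from collections import defaultdict


def split_edges(edges, test_fraction=0.2, seed=0):
    """Hold out edges per RNA while leaving each RNA at least one training edge.

    edges: list of (protein, rna) pairs; test_fraction and seed are illustrative.
    """
    rng = random.Random(seed)
    by_rna = defaultdict(list)
    for protein, rna in edges:
        by_rna[rna].append(protein)

    train, test = [], []
    for rna, proteins in by_rna.items():
        proteins = proteins[:]
        rng.shuffle(proteins)
        # Never hold out all edges of an RNA, so it keeps a non-empty neighborhood.
        n_test = min(round(test_fraction * len(proteins)), len(proteins) - 1)
        test += [(p, rna) for p in proteins[:n_test]]
        train += [(p, rna) for p in proteins[n_test:]]
    return train, test


# Toy network: r1 has five binding proteins, r2 has one, r3 has two.
edges = [(f"p{i}", "r1") for i in range(1, 6)] + [("p1", "r2"), ("p2", "r3"), ("p3", "r3")]
train_edges, test_edges = split_edges(edges, test_fraction=0.4)
print(len(train_edges), len(test_edges))
```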

Link prediction is a binary classification task and the performance of an algorithm can be evaluated using different metrics. These metrics can be divided into two broad categories: fixed-threshold metrics and threshold curves (Yang et al. 2015). In a research context, we generally do not have a reasonable threshold, which is why threshold curves and scalar measures summarizing them are widely used in the literature (Davis and Goadrich 2006; Clauset et al. 2008; Lichtenwalter et al. 2010). In this paper, we use the area under the receiver operating characteristic curve (AUROC) and average precision (AP) to evaluate the performance of different methods on the link prediction task. The receiver operating characteristic (ROC) curve represents the performance trade-off between true positives and false positives at different decision boundary thresholds. AUROC reflects the probability that a randomly chosen positive instance is ranked above a randomly chosen negative instance. AP summarizes the precision-recall curve, and is a better measure for a highly imbalanced data set (Davis and Goadrich 2006; Yang et al. 2015). AP can be computed using the following formula:

$$\mathrm{AP} = \sum_n \left(\alpha_n - \alpha_{n-1}\right) \beta_n$$

where $\beta_n$ and $\alpha_n$ are the precision and recall at the $n$th threshold.
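Both metrics can be computed directly from their definitions, as in the following sketch. It places one threshold at each ranked prediction and assumes distinct scores for AP; the function names and the toy labels and scores are made up for illustration.

```python
def auroc(labels, scores):
    """Probability that a random positive is scored above a random negative (ties count 0.5)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def average_precision(labels, scores):
    """AP = sum over n of (recall_n - recall_{n-1}) * precision_n, one threshold per item."""
    ranked = sorted(zip(scores, labels), key=lambda pair: -pair[0])
    n_pos = sum(labels)
    true_pos, ap, prev_recall = 0, 0.0, 0.0
    for rank, (_, y) in enumerate(ranked, start=1):
        true_pos += y
        precision, recall = true_pos / rank, true_pos / n_pos
        ap += (recall - prev_recall) * precision
        prev_recall = recall
    return ap


# Toy predictions for four candidate links (1 = true interaction).
labels = [1, 0, 1, 0]
scores = [0.9, 0.8, 0.7, 0.1]
print(auroc(labels, scores), average_precision(labels, scores))
```

For this toy input, three of the four positive-negative pairs are ordered correctly, giving an AUROC of 0.75, and the two positives are retrieved at precision 1 and 2/3, giving an AP of 5/6.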

SUPPLEMENTAL MATERIAL

Supplemental material is available for this article.

ACKNOWLEDGMENTS

The authors want to thank Gianluca Corrado for sharing the implementation details of RNAcommender.

Footnotes

Article is online at http://www.rnajournal.org/cgi/doi/10.1261/rna.079365.122.

1. This conclusion was reached after discussions with the authors of RNAcommender.

2. This was done because it was not possible to perform the feature engineering for all the RNAs and proteins in our new data set.

REFERENCES

- Agostini F, Zanzoni A, Klus P, Marchese D, Cirillo D, Tartaglia GG. 2013. catRAPID omics: a web server for large-scale prediction of protein–RNA interactions. Bioinformatics 29: 2928–2930. doi:10.1093/bioinformatics/btt495

- Al Hasan M, Chaoji V, Salem S, Zaki M. 2006. Link prediction using supervised learning. In SDM06: workshop on link analysis, counter-terrorism and security, Vol. 30, pp. 798–805. https://www.cs.rpi.edu/~zaki/PaperDir/LINK06.pdf

- Alipanahi B, Delong A, Weirauch MT, Frey BJ. 2015. Predicting the sequence specificities of DNA- and RNA-binding proteins by deep learning. Nat Biotechnol 33: 831–838. doi:10.1038/nbt.3300

- Asgari E, Mofrad MR. 2015. Continuous distributed representation of biological sequences for deep proteomics and genomics. PLoS ONE 10: e0141287. doi:10.1371/journal.pone.0141287

- Brannan KW, Jin W, Huelga SC, Banks CA, Gilmore JM, Florens L, Washburn MP, Van Nostrand EL, Pratt GA, Schwinn MK, et al. 2016. SONAR discovers RNA-binding proteins from analysis of large-scale protein-protein interactomes. Mol Cell 64: 282–293. doi:10.1016/j.molcel.2016.09.003

- Chen D, Lin Y, Li W, Li P, Zhou J, Sun X. 2020. Measuring and relieving the over-smoothing problem for graph neural networks from the topological view. In Proceedings of the AAAI conference on artificial intelligence, Vol. 34, pp. 3438–3445. doi:10.1609/aaai.v34i04.5747

- Clauset A, Moore C, Newman ME. 2008. Hierarchical structure and the prediction of missing links in networks. Nature 453: 98–101. doi:10.1038/nature06830

- Corrado G, Tebaldi T, Costa F, Frasconi P, Passerini A. 2016. RNAcommender: genome-wide recommendation of RNA–protein interactions. Bioinformatics 32: 3627–3634. doi:10.1093/bioinformatics/btw517

- Davis J, Goadrich M. 2006. The relationship between precision-recall and ROC curves. In ICML '06: Proceedings of the 23rd international conference on machine learning, June 2006, pp. 233–240. doi:10.1145/1143844.1143874

- Gilmer J, Schoenholz SS, Riley PF, Vinyals O, Dahl GE. 2017. Neural message passing for quantum chemistry. Proceedings of the 34th international conference on machine learning. Proc Mach Learn Res 70: 1263–1272. https://proceedings.mlr.press/v70/gilmer17a.html

- Hentze MW, Castello A, Schwarzl T, Preiss T. 2018. A brave new world of RNA-binding proteins. Nat Rev Mol Cell Biol 19: 327. doi:10.1038/nrm.2017.130

- Kazan H, Ray D, Chan ET, Hughes TR, Morris Q. 2010. RNAcontext: a new method for learning the sequence and structure binding preferences of RNA-binding proteins. PLoS Comput Biol 6: e1000832. doi:10.1371/journal.pcbi.1000832

- Kipf TN, Welling M. 2016. Variational graph auto-encoders. NIPS workshop on Bayesian deep learning, 2016, pp. 1–3. arXiv 10.1038/10.48550

- Kipf TN, Welling M. 2017. Semi-supervised classification with graph convolutional networks. In Proceedings of the 5th international conference on learning representations, ICLR 2017, pp. 1–14. https://openreview.net/forum?id=SJU4ayYgl

- Li Q, Brown JB, Huang H, Bickel PJ. 2011. Measuring reproducibility of high-throughput experiments. Ann Appl Stat 5: 1752–1779. doi:10.1214/11-AOAS466

- Liben-Nowell D, Kleinberg J. 2007. The link-prediction problem for social networks. J Am Soc Inform Sci Technol 58: 1019–1031. doi:10.1002/asi.20591

- Licatalosi DD, Mele A, Fak JJ, Ule J, Kayikci M, Chi SW, Clark TA, Schweitzer AC, Blume JE, Wang X, et al. 2008. HITS-CLIP yields genome-wide insights into brain alternative RNA processing. Nature 456: 464–469. doi:10.1038/nature07488

- Lichtenwalter RN, Lussier JT, Chawla NV. 2010. New perspectives and methods in link prediction. In KDD '10: Proceedings of the 16th ACM SIGKDD international conference on knowledge discovery and data mining, pp. 243–252. doi:10.1145/1835804.1835837

- Liu L, Li T, Song G, He Q, Yin Y, Lu JY, Bi X, Wang K, Luo S, Chen YS, et al. 2019. Insight into novel RNA-binding activities via large-scale analysis of lncRNA bound proteome and IDH1-bound transcriptome. Nucleic Acids Res 47: 2244–2262. doi:10.1093/nar/gkz032

- Maticzka D, Lange SJ, Costa F, Backofen R. 2014. GraphProt: modeling binding preferences of RNA-binding proteins. Genome Biol 15: R17. doi:10.1186/gb-2014-15-1-r17

- Mikolov T, Sutskever I, Chen K, Corrado G, Dean J. 2013a. Distributed representations of words and phrases and their compositionality. NIPS, pp. 3111–3119.

- Mikolov T, Chen K, Corrado G, Dean J. 2013b. Efficient estimation of word representations in vector space. In Proceedings of ICLR workshops track, 2013. arXiv:1301.3781

- Muppirala UK, Honavar VG, Dobbs D. 2011. Predicting RNA-protein interactions using only sequence information. BMC Bioinformatics 12: 489. doi:10.1186/1471-2105-12-489

- Muzio G, O'Bray L, Borgwardt K. 2020. Biological network analysis with deep learning. Brief Bioinform 22: 1515–1530. doi:10.1093/bib/bbaa257

- Oono K, Suzuki T. 2020. Graph neural networks exponentially lose expressive power for node classification. International conference on learning representations, 2020, Addis Ababa, Ethiopia. arXiv:1905.10947

- Ozsolak F, Milos PM. 2011. RNA sequencing: advances, challenges and opportunities. Nat Rev Genet 12: 87–98. doi:10.1038/nrg2934

- Pan X, Fan YX, Yan J, Shen HB. 2016. IPMiner: hidden ncRNA-protein interaction sequential pattern mining with stacked autoencoder for accurate computational prediction. BMC Genomics 17: 582. doi:10.1186/s12864-016-2931-8

- Pan X, Yang Y, Xia CQ, Mirza AH, Shen HB. 2019. Recent methodology progress of deep learning for RNA–protein interaction prediction. Wiley Interdiscip Rev RNA 10: e1544. doi:10.1002/wrna.1544

- Shchur O, Mumme M, Bojchevski A, Günnemann S. 2018. Pitfalls of graph neural network evaluation. Relational representation learning workshop, NeurIPS, 2018. arXiv:1811.05868

- Shen J, Zhang J, Luo X, Zhu W, Yu K, Chen K, Li Y, Jiang H. 2007. Predicting protein–protein interactions based only on sequences information. Proc Natl Acad Sci 104: 4337–4341. doi:10.1073/pnas.0607879104

- Shen ZA, Luo T, Zhou YK, Yu H, Du PF. 2021. NPI-GNN: predicting ncRNA–protein interactions with deep graph neural networks. Brief Bioinform 22: bbab051. doi:10.1093/bib/bbab051

- Uhl M, Houwaart T, Corrado G, Wright PR, Backofen R. 2017. Computational analysis of CLIP-seq data. Methods 118: 60–72. doi:10.1016/j.ymeth.2017.02.006

- Uhl M, Tran VD, Heyl F, Backofen R. 2021. RNAProt: an efficient and feature-rich RNA binding protein binding site predictor. Gigascience 10: giab054. doi:10.1093/gigascience/giab054

- Van Nostrand EL, Pratt GA, Shishkin AA, Gelboin-Burkhart C, Fang MY, Sundararaman B, Blue SM, Nguyen TB, Surka C, Elkins K, et al. 2016. Robust transcriptome-wide discovery of RNA-binding protein binding sites with enhanced CLIP (eCLIP). Nat Methods 13: 508–514. doi:10.1038/nmeth.3810

- Van Nostrand EL, Freese P, Pratt GA, Wang X, Wei X, Xiao R, Blue SM, Chen JY, Cody NAL, Dominguez D, et al. 2020. A large-scale binding and functional map of human RNA-binding proteins. Nature 583: 711–719. doi:10.1038/s41586-020-2077-3

- Wang L, You ZH, Huang DS, Zhou F. 2018. Combining high speed ELM learning with a deep convolutional neural network feature encoding for predicting protein-RNA interactions. IEEE/ACM Trans Comput Biol Bioinform 17: 972–980. doi:10.1109/TCBB.2018.2874267

- Wang L, Yan X, Liu ML, Song KJ, Sun XF, Pan WW. 2019. Prediction of RNA-protein interactions by combining deep convolutional neural network with feature selection ensemble method. J Theor Biol 461: 230–238. doi:10.1016/j.jtbi.2018.10.029

- Wang X, Bo D, Shi C, Fan S, Ye Y, Yu PS. 2020. A survey on heterogeneous graph embedding: methods, techniques. IEEE Transactions on Big Data. doi:10.1109/TBDATA.2022.3177455

- Wu S, Sun F, Zhang W, Xie X, Cui B. 2020a. Graph neural networks in recommender systems: a survey. ACM Comput Surv. doi:10.1145/3535101

- Wu Z, Pan S, Chen F, Long G, Zhang C, Yu PS. 2020b. A comprehensive survey on graph neural networks. IEEE Trans Neural Netw Learn Syst 32: 4–24. doi:10.1109/TNNLS.2020.2978386

- Yang Y, Lichtenwalter RN, Chawla NV. 2015. Evaluating link prediction methods. Knowledge Inform Syst 45: 751–782. doi:10.1007/s10115-014-0789-0

- Yang C, Xiao Y, Zhang Y, Sun Y, Han J. 2020. Heterogeneous network representation learning: a unified framework with survey and benchmark. IEEE Trans Knowl Data Eng 34: 4854–4873. doi:10.1109/TKDE.2020.3045924

- Yi HC, You ZH, Huang DS, Li X, Jiang TH, Li LP. 2018. A deep learning framework for robust and accurate prediction of ncRNA-protein interactions using evolutionary information. Mol Ther Nucleic Acids 11: 337–344. doi:10.1016/j.omtn.2018.03.001

- Ying R, He R, Chen K, Eksombatchai P, Hamilton WL, Leskovec J. 2018. Graph convolutional neural networks for web-scale recommender systems. In Proceedings of the 24th ACM SIGKDD international conference on knowledge discovery and data mining, London. pp. 974–983.

- Ying R, Bourgeois D, You J, Zitnik M, Leskovec J. 2019. GNNExplainer: generating explanations for graph neural networks. Adv Neural Inf Process Syst 32: 9240–9251.

- Zhang M, Chen Y. 2018. Link prediction based on graph neural networks. Adv Neural Inf Process Syst 31. Montreal, Canada. arXiv:1802.09691

- Zhang M, Li P, Xia Y, Wang K, Jin L. 2021. Labeling trick: a theory of using graph neural networks for multi-node representation learning. Adv Neural Inf Process Syst 34: 9061–9073.

- Zhou J, Cui G, Hu S, Zhang Z, Yang C, Liu Z, Wang L, Li C, Sun M. 2020. Graph neural networks: a review of methods and applications. AI Open 1: 57–81. doi:10.1016/j.aiopen.2021.01.001
