Abstract

Locomotion produces full-field optic flow that often dominates the visual motion inputs to an observer. The perception of optic flow is in turn important for animals to guide their heading and interact with moving objects. Understanding how locomotion influences optic flow processing and perception is therefore essential to understand how animals successfully interact with their environment. Here, we review research investigating how perception and neural encoding of optic flow are altered during self-motion, focusing on locomotion. Self-motion has been found to influence estimation and sensitivity for optic flow speed and direction. Nonvisual self-motion signals also increase compensation for self-driven optic flow when parsing the visual motion of moving objects. The integration of visual and nonvisual self-motion signals largely follows principles of Bayesian inference and can improve the precision and accuracy of self-motion perception. The calibration of visual and nonvisual self-motion signals is dynamic, reflecting the changing visuomotor contingencies across different environmental contexts. Throughout this review, we consider experimental research using humans, non-human primates and mice. We highlight experimental challenges and opportunities afforded by each of these species and draw parallels between experimental findings. These findings reveal a profound influence of locomotion on optic flow processing and perception across species.

This article is part of a discussion meeting issue ‘New approaches to 3D vision’.

Keywords: optic flow, mouse vision, human vision, locomotion, psychophysics

1. Introduction

Locomotion produces full-field optic flow that often dominates the visual motion inputs to an observer (figure 1) [1–7]. The perception of such visual motion is important for animals to guide their own movement within an environment and also to determine the relative movement of external objects, for example during prey capture [8,9] or predator avoidance [10]. Understanding how locomotion influences optic flow processing and perception is therefore essential to understand how animals successfully interact with their environment.

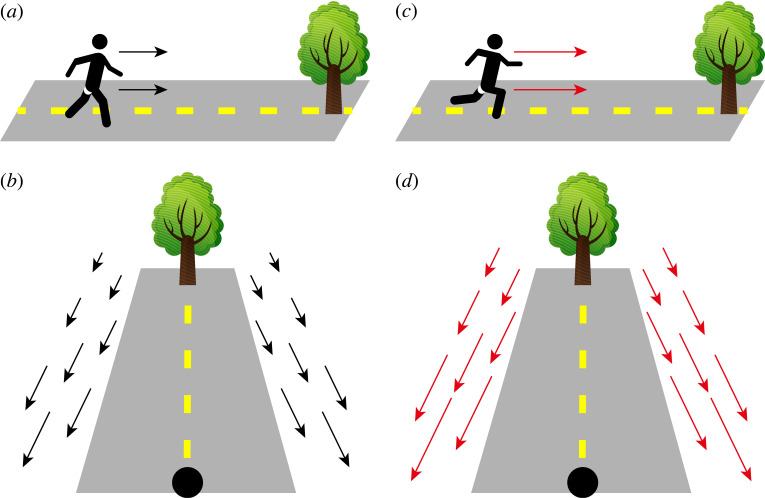

Figure 1.

Introduction to optic flow during locomotion. When a subject is walking toward a tree (a), they experience a characteristic optic flow pattern (b) that is expanding outwards from the target location. When the subject moves faster (c) they experience a correspondingly faster optic flow (d).

Perception and neural encoding of optic flow have historically been studied in stationary, fixating subjects. While these paradigms afforded the tight control over experimental conditions necessary to investigate visual processing, they also have clear ethological limitations. Recent developments in experimental methods are, however, enabling a growing focus on the influence of self-motion and active behaviour on visual processing and perception [11–18]. In this article, we review research investigating the effects of self-motion on optic flow processing and perception, with a particular focus on movements relevant to locomotion. Throughout, we consider experimental research using humans, non-human primates and mice. In doing so, we highlight the experimental challenges and opportunities afforded by each of these species and, where possible, draw parallels between experimental findings obtained from each of them.

As the review is multidisciplinary, we first provide a set of primers that give brief contextual background for the sections that follow.

[Primer A] Perceptual functions of optic flow

Movement of an observer through an environment produces relative motion of the 3D environment. This relative environmental motion is focused through the optics of the eyes onto the retinas, forming two-dimensional velocity fields, i.e. optic flow, which are influenced by both self-motion and the structure of the environment [5,18,19]. Perceiving optic flow, therefore, enables an observer to make inferences about their movement within an environment. Indeed, perception of optic flow can be used to estimate the direction [20] and speed [21,22] of self-motion, gain information about the structure and layout of objects within the environment [23,24] and parse external object motion from visual motion due to self-motion (flow parsing; [25]).
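As an illustrative sketch of how self-motion and scene structure jointly determine optic flow, the following Python snippet computes image velocities for a translating observer under a pinhole projection. The function name `translational_flow`, the unit focal length, the flat surface at a fixed depth of 5 m and the walking speeds are assumptions chosen for illustration, not values taken from the studies reviewed here; eye and head rotations are ignored.

```python
import numpy as np

def translational_flow(x, y, depth, t_xyz, focal_length=1.0):
    """Image velocity (u, v) at image points (x, y) for pure observer translation.

    Pinhole camera model. t_xyz = (Tx, Ty, Tz) is the observer's velocity,
    with Tz along the line of sight. depth is the distance of each scene
    point along the line of sight. Rotations are ignored (an assumption).
    """
    tx, ty, tz = t_xyz
    u = (x * tz - focal_length * tx) / depth
    v = (y * tz - focal_length * ty) / depth
    return u, v

# Hypothetical scene: a grid of image points on a surface 5 m away.
x, y = np.meshgrid(np.linspace(-0.5, 0.5, 5), np.linspace(-0.5, 0.5, 5))
depth = 5.0

# Forward translation at a walking speed of 1.25 m/s, then at twice that speed.
u_slow, v_slow = translational_flow(x, y, depth, (0.0, 0.0, 1.25))
u_fast, v_fast = translational_flow(x, y, depth, (0.0, 0.0, 2.5))

# Flow vectors point away from the image centre (the focus of expansion),
# and their magnitude scales with both eccentricity and self-motion speed.
speed_slow = np.hypot(u_slow, v_slow)
speed_fast = np.hypot(u_fast, v_fast)
```

In this sketch the flow is zero at the centre of the grid and expands radially outwards, and doubling the translation speed doubles every flow vector, as illustrated in figure 1. Halving `depth` would have the same effect on the flow as doubling the speed, which is why flow speed alone is ambiguous with respect to self-motion speed.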

[Primer B] Visual cues for motion-in-depth and optic flow

A number of monocular and binocular visual cues provide information about optic flow and self-motion to an observer. Monocular cues include structured velocity fields as well as changes in the size and spatial frequency of visual objects [19,26]. Binocular cues include inter-ocular velocity differences (differences in velocity produced by a moving object projected onto two spatially separated eyes), and changes in binocular disparity [5,27]. Studies investigating perceptual sensitivity have found that the use of monocular and binocular cues for motion-in-depth varies substantially both across the visual field [28–30] and with viewing distance [31]. More generally, well-known errors in the perception of motion-in-depth can be explained by Bayesian inference of noisy sensory signals [32], suggesting that the influence of different visual cues on motion perception may depend on their reliability. Intriguingly, when humans are provided with feedback on the accuracy of their performance in a 3D motion perception task they can learn to leverage different visual cues [33,34]. The usage of different visual cues for the perception of optic flow is therefore likely to be flexible and context-dependent. An important consideration is therefore how display devices used in experiments may alter their use compared to natural viewing.

[Primer C] Optics of the primate and rodent visual systems

In this article, we review studies from primates and rodents. There are many obvious differences in the optics of the rodent and primate visual systems which have implications for optic flow processing. Whereas primate eyes face forward, most rodents have sideways-facing eyes. While such lateral placement gives rodents a larger field of view [35,36], it comes at a cost: the region of visual space where the fields of view of the two eyes overlap, known as the binocular zone, is substantially smaller in mice (approx. 40° compared to approx. 120° in humans; [37,38]), resulting in a smaller region where binocular cues for depth perception are available. Nevertheless, mice can discriminate stereoscopic depths [39] and, similar to primates, have binocular disparity-sensitive neurons throughout the visual cortex [37,40,41], indicating that binocular cues for depth are used by the mouse visual system.

The mouse retina is suitable for processing optic flow despite its low acuity. While the density of photoreceptors in the mouse retina is actually similar to that of primates [42–45], the smaller size of the mouse eye coupled with a larger field of view means that the number of photoreceptors per unit area of the visual scene is smaller than in primates [46]. Moreover, the high acuity of primate vision is largely due to the concentration of approximately 99% of cone photoreceptors within the fovea, an area that takes up approximately 1% of the retina [47]. As such, mouse vision is believed to be similar to primate peripheral vision [42,46,48]. Given the importance of peripheral vision in the perception of optic flow [49–51] and the use of optic flow in human observers to perceive visual events when low visual acuity is simulated [52], the mouse provides an appropriate model species for investigating perception and neural encoding of optic flow.

[Primer D] Neural encoding of optic flow

The neural encoding of optic flow is best understood in non-human primates. In particular, neurons in the dorsal Medial Superior Temporal (MSTd) area, a higher visual area within the dorsal stream of the primate visual system, have large receptive fields often covering both ipsi- and contralateral halves of the visual field, indicating their suitability for the encoding of full-field optic flow caused by self-motion [53]. Indeed, neurons in MSTd are selective for complex visual motion patterns such as expansions, contractions, rotations and spirals [54–57] that can arise from combinations of head and eye-movements (see [58] for a recent review). Moreover, tuning for these complex visual motion patterns can be invariant to the precise form of the stimulus used [59], as well as its location within the visual field [57].

Tuning for complex optic flow patterns, as observed in primate area MSTd [54–57], has not yet been identified in the mouse. However, neurons in a number of mouse visual cortical areas are selective for specific combinations of binocularly presented drifting gratings simulating forwards and backwards translations, as well as rotations [60], indicating that selectivity for optic flow patterns may be present. In particular, it was found that higher visual areas RL/A, followed by AM and PM, were enriched with neurons selective for translation or rotation compared to V1 [60]. Interestingly, mouse visual area RL is biased to represent the lower visual field [61,62], indicating that visual motion in the lower visual field may be important for signalling self-motion in the mouse, reflecting the proximity of mouse eyes to the ground plane. In accordance with this, neurons in the mouse visual cortex with receptive fields in the lower visual field tend to respond more strongly to coherent visual motion [63]. It will be important for future work comparing the neural encoding of optic flow in mice and primates to consider their distinct ecological niches.

It is unclear to what extent an area analogous to primate MST exists in the mouse visual system. More generally, a number of studies have sought to determine whether the mouse visual system has distinct processing streams analogous to the dorsal and ventral streams in primates [61,64–70]; however, these studies have sometimes produced conflicting results. Given that both selectivity for coherent visual motion and tuning for visual speed are widespread in mouse visual areas [63,70,71], there may be a more distributed code for visual motion and optic flow in the mouse visual system.

[Primer E] Modulation of visual processing during locomotion

Many recent studies have investigated the influence of locomotion on visual processing in the mouse, often leveraging the spontaneous locomotion exhibited by mice when head-fixed on a treadmill [72,73]. In just over a decade these studies have revealed effects of locomotion throughout the mouse visual system: from the outputs of the retina [74,75], to thalamic and midbrain nuclei [76–80] and a range of cortical areas [73,80–83]. While we provide an overview of the main findings below, other reviews provide a more detailed account of the effects of locomotion and the pathways supporting them (e.g. [17]).

The effects of locomotion on visual processing are diverse and vary between visual areas and cortical layers [71,76,77,79,80,84,85] as well as genetically, physiologically and functionally defined cell types [73,78,86–90]. Modulation of visual responses during locomotion has been described at multiple spatial scales. At a cellular level: membrane potentials show bidirectional changes [86,91,92]; spontaneous and evoked firing rates are altered [73,85]; and visual response dynamics are less transient [71]. In terms of visual tuning properties: locomotion increases spatial integration [93]; is linked to additive and multiplicative tuning gain for visual features such as orientation, direction and spatial frequency [84,94]; and alters tuning preferences for visual speed [81]. Furthermore, joint tuning for optic flow speed and self-motion signals correlated with running speed has been described in a range of mouse visual areas [77,80,95], indicating that integration of self-motion and visual motion signals is widespread in the mouse visual system. At the scale of neural populations: locomotion is associated with reductions in pairwise noise correlations [76,96]; changes in LFP power spectra [73,96]; altered functional connectivity between brain areas [97]; and changes in the geometry and structure of latent population activity [71,98].

While these changes in neural activity observed during locomotion generally indicate enhanced encoding of visual inputs, perceptual studies are thus far limited and provide mixed results as to whether locomotion also improves visual perception [91,99,100], with changes in behavioural performance likely dependent on the specific task context [100].

Are equivalent changes to visual processing also present in primates? A recent preprint found that locomotion also modulates visual responses in head-restrained marmosets, a non-human primate [101]. In contrast to mouse V1, where firing rates tend to increase during locomotion, firing rates were more likely to decrease in marmosets and the magnitude of firing rate changes was weaker overall. Interestingly, the effects of locomotion in marmosets varied between neurons with receptive fields in the fovea and the periphery, with the latter more likely to increase firing rates during locomotion. More generally, the authors noted that changes in firing rates correlated with locomotion could be explained as a shared gain factor across the recorded population in both mice and marmosets, suggesting that similar principles may underlie modulation of visual systems by locomotion in mice and primates. Further comparative experimental work should provide insights into these principles.

[Primer F] Experimental methods for investigating visual perception during movement

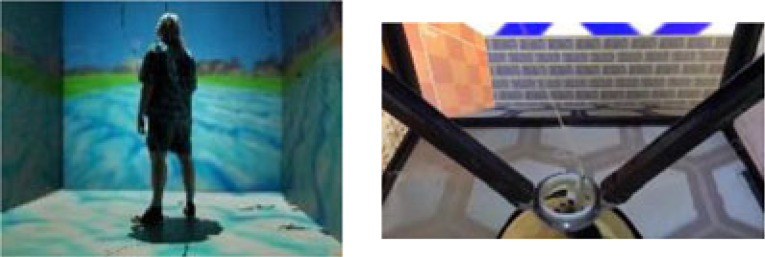

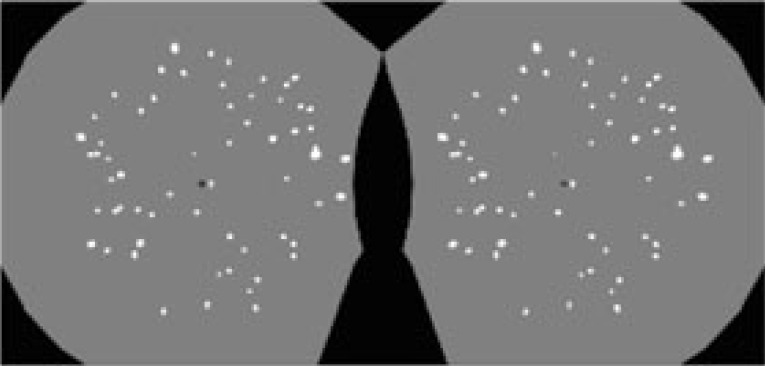

An increasing number of experimental methods are available to investigate the neural encoding and perception of optic flow by enabling the presentation of visual inputs to large areas of the visual field (table 1). These display environments can be coupled to subject self-motion (table 2) in various ways, enabling the investigation of optic flow processing and perception during subject movement.

Table 1.

An overview of the visual environments available to investigate the neural encoding and perception of optic flow. The table also summarizes available methods to couple visual displays to a subject's self-motion. VR ‘CAVE’ image from: https://commons.wikimedia.org/w/index.php?curid=868395.

| Real environments | Large display environments | Head-mounted displays |
|---|---|---|
| A photograph of a woodland. | Left: a VR ‘CAVE’. Right: two-dimensional rodent VR [102]. | Left and right eye views of a head-mounted display. |
| Natural visual inputs. World and eye cameras can be used to reconstruct observed visual scene [9,103]. Lenses [106] and prisms [107] can be used to manipulate visual inputs. | Flexible presentation of visual inputs. Curved or multiple display devices can achieve large visual field coverage. Virtual [95,108,109] / augmented [108,110,111] reality. | Flexible stereoscopic presentations of visual inputs. Virtual [104] / augmented reality [105]. |
| Coupling with movement | | |
| Natural free movement. Passive motion platforms and treadmills can be incorporated [112]. | One-dimensional/two-dimensional treadmills [49,72,102]. Passive motion platforms [113,114]. Free movement interaction is possible with motion tracking cameras (e.g. ‘VR CAVEs’) [115]. | Natural free movement using built-in tracking of rotational and translational head movements. One-dimensional/two-dimensional treadmills [104]. Passive motion platforms. |

Table 2.

An overview of the main features of different experimental methods used to investigate visual processing and perception in moving subjects.

| Type of movement | Active self-motion? | Proprioceptive cues? | Vestibular cues? |
|---|---|---|---|
| Free movement | | | |
| Treadmills | | | |
| Motion platforms | | | |

2. Key findings

In the following sections, we highlight key findings regarding how the perception and neural encoding of optic flow are affected by movement related to locomotion. We begin each section with a description of key observations made using psychophysics in human subjects. We then discuss physiological and perceptual measurements in animal models that may provide mechanistic explanations for the observed effects, focusing on findings we feel are most relevant to perceptual phenomena.

(a) Walking slows perception of optic flow speed

A striking phenomenon of human locomotion is that optic flow appears slower while walking [11,104,116–118]. Specifically, walking subjects choose faster optic flow speeds to match optic flow speeds viewed while stationary (figure 2a; [11,104]). This suggests that locomotion has a subtractive effect on the perception of optic flow speed. This subtractive effect is largely specific to walking as there is a reduced effect when self-motion is related to cycling or arm-cycling and no observable effect for an arbitrary periodic action (finger tapping; [119]). The subtractive effect can be present during treadmill walking, suggesting a role for proprioceptive and/or efference copy signals [104]. It is also observed during passive translation in a wheelchair [104], suggesting a role for vestibular signals. Normal walking approximately sums the effects of treadmill walking and passive translation in a wheelchair, indicating that nonvisual self-motion signals additively contribute to the magnitude of the effect [104]. Importantly, the effect also scales with walking speed, indicating that it goes beyond a binary behavioural state-dependent effect, and instead depends on continuous self-motion signals correlated to walking speed [104,119,120]. The effect also depends on the congruence of visual motion to self-motion [104,116] and is larger for faster visual speeds [117], demonstrating that it depends jointly on visual and nonvisual self-motion signals.

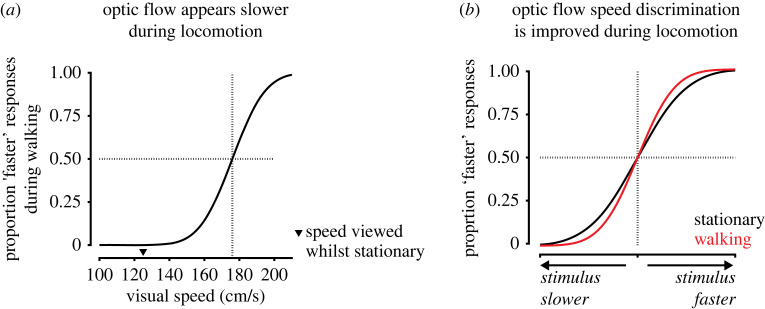

Figure 2.

Changes in optic flow speed perception during locomotion. (a) While walking, subjects perceive faster optic flow speeds to match optic flow speeds viewed when stationary, indicating that optic flow appears slower. This is illustrated by the point of subjective equality (indicated by dashed lines) being shifted to a faster visual speed compared to a reference speed (indicated by black triangle) viewed when stationary (illustration of results from [11]). (b) During walking subjects exhibit increased sensitivity to optic flow speeds faster than a threshold speed that approximately matches average walking speed (approx. 125 cm s−1 in [11]). As a result, psychometric curves for optic flow speed discrimination are slightly steeper when subjects are walking, indicating improved discrimination of optic flow speeds (illustration of results from [11]).

Is there a neural mechanism for optic flow to be perceived as being slower during locomotion? It is now well established that the encoding of visual speed by neurons throughout the mouse visual system is dependent on concurrent self-motion signals [71,77,80,81,95]. Of particular relevance, it has been reported that neurons in mouse V1 and higher visual areas AL and PM prefer faster visual speeds during locomotion [81]. If neural tuning changes between behavioural states, a downstream area trained to estimate visual speed using neural activity in one behavioural state could make errors estimating visual speed from neural activity occurring in another state. Therefore, systematic changes to tuning preferences during locomotion might result in biased predictions. Specifically, if a decoding area generates a model of visual speed tuning based on when the animal is stationary and subsequently attempts to decode visual speed when the animal is locomoting, it may underestimate visual speed since the neurons it is decoding from now prefer faster visual speeds. Thus, changes in visual speed tuning preferences between behavioural states are a potential neural mechanism underlying changes in the perceived speed of optic flow. However, it is not yet known whether visual speed tuning preferences similarly change between stationary and locomoting states in primates, and comparisons of mouse visual speed perception between stationary and locomoting states are not yet available. Future experiments comparing neural tuning for visual speed between stationary and locomoting states in head-fixed marmosets [101], alongside experiments investigating changes in perceptual estimation of visual speed in mice, would provide the means to test this.
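The decoding argument above can be made concrete with a toy simulation. The sketch below is a minimal illustration and not a model fitted to any of the cited data: it assumes noise-free neurons with Gaussian tuning on a logarithmic speed axis, a simple labelled-line readout, and a hypothetical 1.5-fold shift of preferred speeds during locomotion. All names and parameter values are illustrative assumptions.

```python
import numpy as np

def responses(speed, preferred, width=0.5):
    """Noise-free responses of neurons with Gaussian tuning on a log-speed axis."""
    return np.exp(-0.5 * ((np.log(speed) - np.log(preferred)) / width) ** 2)

def decode(rates, labels):
    """Labelled-line readout: response-weighted mean of log preferred speed."""
    return np.exp(np.sum(rates * np.log(labels)) / np.sum(rates))

# Hypothetical population: preferred speeds log-spaced between 1 and 1000 (arbitrary units).
stationary_pref = np.geomspace(1.0, 1000.0, 61)

# Assumption: locomotion multiplies every neuron's preferred speed by the same factor.
shift = 1.5
locomotion_pref = stationary_pref * shift

true_speed = 30.0

# The decoder always labels neurons with the preferences learned while stationary.
decoded_stationary = decode(responses(true_speed, stationary_pref), stationary_pref)
decoded_locomotion = decode(responses(true_speed, locomotion_pref), stationary_pref)

print(f"true speed:                 {true_speed:.1f}")
print(f"decoded (stationary state): {decoded_stationary:.1f}")
print(f"decoded (locomoting state): {decoded_locomotion:.1f}")
```

Under these assumptions the readout recovers the true speed in the stationary state but underestimates it during locomotion by roughly the factor by which preferences shifted, because the most active neurons are now those labelled with slower speeds. A decoder that tracked the behavioural state and updated its labels would show no such bias, so the sketch illustrates one possible mechanism and does not establish that it operates.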

(b) Walking improves optic flow speed discrimination

What is the function of the slowing down of perceived optic flow speed during locomotion? One hypothesis is that it enables increased sensitivity to optic flow speed [11]. This hypothesis is based on the framework for a perceptual coordinate system for correlated variables proposed by Barlow [121,122]. The framework is based on an assumption that a subject can discriminate a fixed number of divisions of perceived optic flow speeds. Therefore, when walking reduces the perceived speed of optic flow it also reduces the range of perceived speeds that need to be encoded by a subject's visual system. This then enables the discrimination of finer differences of speed [11,104,121,122]. In agreement with this hypothesis, locomotion can improve the discrimination of optic flow speed (figure 2b; [11]). A similar result has been demonstrated using an adaptation paradigm [123]. Subjects who viewed an initial adapting stimulus that was moving were subsequently biased to perceive a test stimulus as moving with a slower speed. Interestingly, the adapting stimulus also improved subjects’ discrimination of the speed of the test stimulus, with improvements in discrimination proportional to the magnitude of perceptual bias. Thus, it may be a general principle that adaptation can increase sensitivity to visual motion at the cost of biasing perception, prioritizing sensitivity over accuracy [124].
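One way to state the fixed-divisions argument compactly is given below. The symbols are our own shorthand rather than notation from the cited work: $N$ is the assumed fixed number of discriminable divisions, $R$ is the range of perceived optic flow speeds that must be encoded while stationary, and $R_{\text{walk}}$ is the reduced range during walking.

```latex
\Delta v = \frac{R}{N},
\qquad
\Delta v_{\text{walk}} = \frac{R_{\text{walk}}}{N} < \Delta v
\quad \text{whenever } R_{\text{walk}} < R .
```

Here $\Delta v$ is the smallest discriminable speed difference under the simplifying assumption that the divisions are equally spaced. With $N$ fixed, any reduction in the encoded range translates directly into a finer discrimination step.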

We recently showed that the visual speed of moving dot fields can be better decoded from neural activity in locomoting mice [71], raising the possibility that perceptual sensitivity for visual speed is also improved in mice during locomotion, similar to humans [11]. We also found that visual speed could be decoded earlier following stimulus onset in locomoting mice [71], potentially reflecting an adaptive neural mechanism enabling mice to respond more rapidly to changing visual motion inputs. Improvements in the neural encoding of visual speed during locomotion vary between visual areas and are strongest in V1 and medial higher visual areas AM and PM [71]. Interestingly, areas AM and PM are biased to respond to the peripheral visual field [62] where changes in optic flow during locomotion are largest and may be best processed [125]. Mouse higher visual areas AM and PM may therefore be specialized for the neural encoding of optic flow during locomotion. Perceptual experiments will be required to determine whether locomotion also improves perceptual sensitivity for visual speed in mice, as well as providing a means to investigate the neural mechanisms underlying any changes in perception.

(c) Self-motion alters flow parsing and heading perception

The subtractive effect of locomotion on the perceived speed of optic flow may also reflect perceptual stabilization of the visual environment [126], enabling the detection of motion within the environment by parsing visual motion caused by external motion from optic flow caused by self-motion. Consistent with this hypothesis, when visual object motion is presented simultaneously with optic flow simulating self-motion, humans and non-human primates can infer object motion by subtracting a visual estimate of self-motion based on the global optic flow pattern (‘flow parsing’; [25,127]). While flow parsing has primarily been investigated as a visual process [128], the subtractive effect of self-motion on optic flow speed perception indicates that nonvisual signals may also contribute. Moreover, congruent vestibular stimulation by passive translation promotes the perception of pattern motion when viewing bistable plaid stimuli [113], indicating that, when available, nonvisual self-motion signals contribute to the perception of global motion patterns such as optic flow. Indeed, in primates (both human and non-human) the inclusion of congruent vestibular signals via passive translation increases the compensation for visually simulated self-motion when judging object motion trajectories (figure 3; [129,131]). Furthermore, by shifting visual heading relative to walking direction within a virtual environment, it has been demonstrated that humans can use a combination of visual and nonvisual cues for self-motion to plan future interactions with objects [130,132]. Thus, when nonvisual self-motion signals are available they can contribute to the perceptual parsing of visual motion due to object motion from optic flow generated by self-motion.

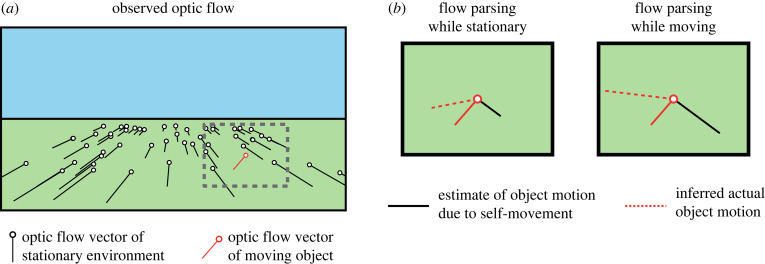

Figure 3.

Changes in flow parsing during self-motion. (a) Forwards translation over a simple ground plane produces a pattern of expanding optic flow (black lines). Observed visual motion of an object within the environment depends on a combination of simulated self-motion and object motion. (b) Shown is a zoomed-in view of the dashed grey box in (a). The flow parsing hypothesis posits that observers infer actual object motion (dashed red lines) by a vector subtraction of the estimated visual object motion due to self-motion (solid black lines) from observed object motion (solid red lines). The estimate of visual motion due to self-motion is larger in magnitude when an observer is moving (right panel) compared to while stationary (left panel), resulting in changes to inferred actual object motion (illustration of results from [129] based on schematic from [130]).
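The vector subtraction at the heart of the flow parsing hypothesis can be sketched in a few lines of Python. The compensation gains used here (0.5 with visual cues alone, 0.9 with added nonvisual self-motion signals) and the example velocities are hypothetical values chosen only to illustrate the direction of the effect; they are not estimates from [129] or any other study.

```python
import numpy as np

def parse_object_motion(observed, self_flow_estimate, gain=1.0):
    """Infer scene-relative object motion by subtracting estimated self-motion flow.

    gain < 1 models incomplete compensation for self-generated optic flow.
    """
    observed = np.asarray(observed, dtype=float)
    self_flow_estimate = np.asarray(self_flow_estimate, dtype=float)
    return observed - gain * self_flow_estimate

# Hypothetical retinal velocities (deg/s) at the object's location.
true_object_motion = np.array([0.0, 1.0])   # object moves straight up in the scene
self_motion_flow = np.array([2.0, 0.0])     # local optic flow caused by self-motion
observed_motion = true_object_motion + self_motion_flow

# Assumed gains: partial compensation from vision alone,
# fuller compensation when nonvisual self-motion signals are available.
estimate_visual_only = parse_object_motion(observed_motion, self_motion_flow, gain=0.5)
estimate_with_nonvisual = parse_object_motion(observed_motion, self_motion_flow, gain=0.9)

error_visual_only = np.linalg.norm(estimate_visual_only - true_object_motion)
error_with_nonvisual = np.linalg.norm(estimate_with_nonvisual - true_object_motion)
```

With these illustrative numbers, the inferred object motion is closer to the true scene-relative motion when the compensation gain is higher, mirroring the increased compensation reported when congruent vestibular signals accompany visually simulated self-motion.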

Self-motion signals can also improve the accuracy of heading direction perception from optic flow in the presence of interfering object motion. While humans and non-human primates can accurately judge self-motion heading from optic flow in stationary environments, the presence of object motion can bias estimates of heading (figure 4; [134,135]), particularly when optic flow is unreliable [133]. Thus, perceptual estimation of heading direction on the basis of visual cues alone is prone to errors in visually ambiguous settings. However, the inclusion of congruent vestibular stimulation via passive self-motion significantly reduces object motion-induced biases in heading perception (figure 4; [133]). Congruent vestibular inputs can therefore improve the accuracy of heading perception when visual object motion is present within the environment.

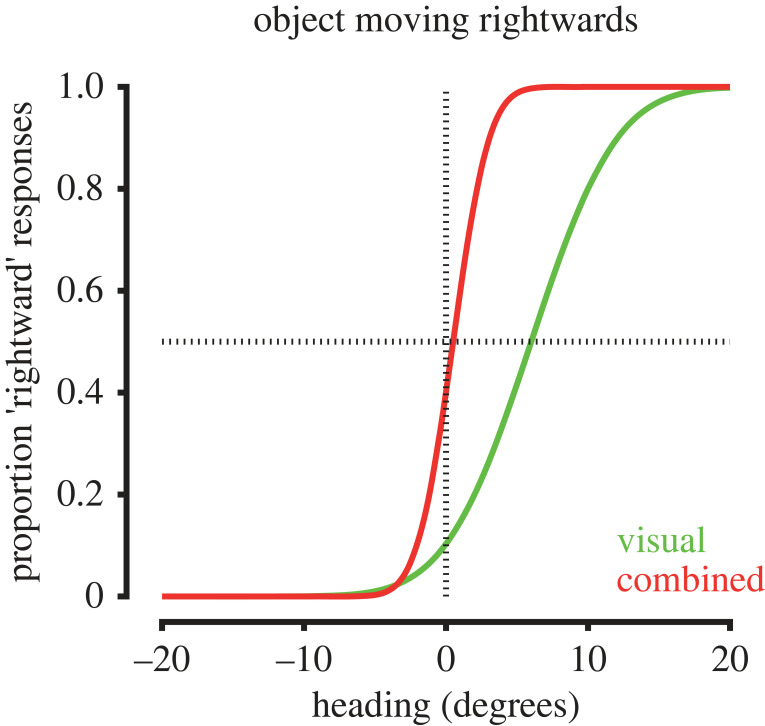

Figure 4.

Changes in estimates of heading in the presence of object motion during self-motion. The presence of moving objects can bias estimates of heading from optic flow. For example, the presence of a rightward moving object can bias estimates of visual heading to the left (green trace). The addition of congruent vestibular signals reduces these biases resulting in more accurate estimates of heading (red trace). Illustration of results from [133].

The neural mechanisms underlying flow parsing in primates are not well established. Candidate areas which may play a role in the primate visual system are the Middle Temporal (MT) area and MSTd [129], which are reciprocally connected [136]. Neurons in MSTd often have receptive fields that cover the majority of the visual field [137] and can encode heading direction from mixtures of visual and vestibular inputs [114,138]. Neurons in area MT have smaller receptive fields, are commonly tuned for visual motion direction and speed and exhibit anatomical clustering based on motion direction and speed preferences [139,140], making them suitable for the encoding of local object motion. As such, heading estimates based on activity in MSTd may be fed back to influence the representation of object motion in area MT (and vice versa). Neurons in MSTd that selectively respond to incongruent combinations of visual and vestibular motion direction may also play a role in assigning visual motion due to object motion during self-motion [141].

In the mouse, the higher-order visual thalamic Lateral Posterior (LP) nucleus (analogous to the primate pulvinar) contains neurons tuned for negative correlations between visual speed and running speed [77,80]. Projections from LP also appear to be the major driver of joint tuning for visual speed and running speed in higher visual cortical area AL, which also contains neurons that primarily encode negative correlations between visual speed and running speed [80]. These neurons are reminiscent of cells found in primate MSTd that encode incongruent combinations of visual and vestibular motion direction [141] and suggest a possible role for LP and AL in detecting object motion during self-motion. Interestingly, anatomical subregions of LP with distinct functional connectivity to other visual areas appear specialized for the encoding of either full-field or object motion [142], further indicating that LP may play a central role in flow parsing within the mouse visual system. The emergence of a flow parsing task for non-human primates [129] and the development of appropriate behavioural tasks for mice should provide more opportunities to investigate the neural mechanisms underlying the perceptual parsing of visual motion due to object motion from self-motion generated optic flow.

(d) Visual and nonvisual signals are integrated for perception of self-motion

The perception of self-motion is a multisensory experience, combining visual, proprioceptive and vestibular signals with a range of other cues. Humans and non-human primates can integrate visual and nonvisual cues to estimate heading direction [143,144] and distance travelled [145–148], which can in turn increase perceptual precision (figure 5a; [133,149–151]). Integration of visual and nonvisual self-motion signals is largely consistent with Bayesian cue integration, whereby perceptual weights for each cue are proportional to their reliability, albeit with a tendency to overweight body-based vestibular (figure 5b; [149–151]) and proprioceptive cues [146–148]. Notably, inclusion of stereoscopic visual information results in more optimal cue integration in humans [152], indicating that the richness of sensory information available may be important for how cues are integrated. More generally this suggests that the integrative process is flexible and context-dependent.

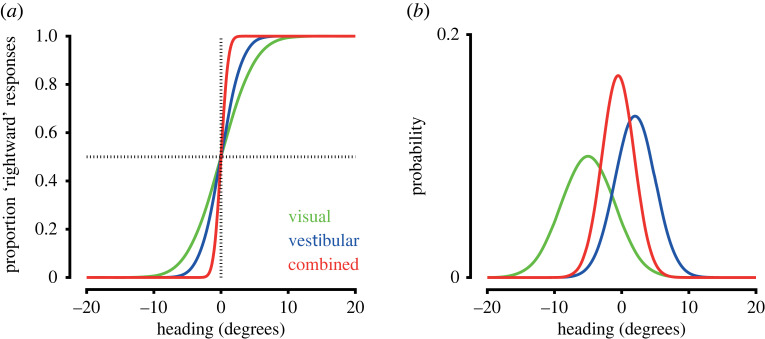

Figure 5.

Integration of visual and nonvisual self-motion signals. (a) Perceptual estimates of heading are more precise when both visual and nonvisual self-motion signals are present compared to unisensory conditions, resulting in steeper psychometric curves (red trace versus green and blue traces). (Illustration of results from [133].) (b) The integration of visual and nonvisual signals for estimating self-motion is largely consistent with Bayesian cue integration. Illustrated here for heading direction, the mean of the multisensory estimate (red trace) is a weighted sum of unisensory estimates from visual (green trace) and vestibular (blue trace) cues, with cue weights proportional to their reliability. The uncertainty of the multisensory estimate is reduced compared to unisensory estimates.
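The reliability-weighted combination described above follows the standard formulation for two independent Gaussian cues, which can be written as a short Python function. The function name and the example heading estimates and standard deviations are hypothetical and serve only to illustrate the arithmetic; reliability is taken to be the inverse variance of each cue.

```python
def integrate_cues(mu_visual, sigma_visual, mu_vestibular, sigma_vestibular):
    """Combine two independent Gaussian cues with reliability (inverse-variance) weights.

    Returns the combined mean, the combined standard deviation and the visual weight.
    """
    reliability_visual = 1.0 / sigma_visual ** 2
    reliability_vestibular = 1.0 / sigma_vestibular ** 2
    total = reliability_visual + reliability_vestibular

    weight_visual = reliability_visual / total
    mu_combined = weight_visual * mu_visual + (1.0 - weight_visual) * mu_vestibular
    sigma_combined = (1.0 / total) ** 0.5
    return mu_combined, sigma_combined, weight_visual

# Hypothetical heading estimates (deg): a reliable visual cue and a noisier vestibular cue.
mu, sigma, w_visual = integrate_cues(mu_visual=4.0, sigma_visual=2.0,
                                     mu_vestibular=0.0, sigma_vestibular=4.0)
print(f"combined heading: {mu:.2f} deg, sd: {sigma:.2f} deg, visual weight: {w_visual:.2f}")
```

In this example the combined estimate lies closer to the more reliable visual cue, and its standard deviation is smaller than that of either cue alone. The overweighting of vestibular and proprioceptive cues noted in the text would correspond to an observed visual weight lower than the value this function predicts.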

In primates, multisensory perception of heading direction has been strongly linked to activity of neurons in MSTd which respond to combinations of optic flow and vestibular input using a reliability-dependent weighted sum [153,154]. In addition, causally manipulating MSTd activity can alter behavioural reports of heading perception [155,156], indicating that it plays an important role in primate multisensory heading perception.

Recent experimental findings demonstrate that mice can also combine vestibular and visual cues to improve perceptual discrimination of angular velocity [157], as has been demonstrated in humans [158]. Mouse perceptual performance could be accounted for by linear decoding of neurons tuned to both visual and vestibular cues in the retrosplenial cortex [157], an area that may be important for integrating visual, vestibular and motor signals associated with self-motion in rodents [17] and also conveys vestibular information and influences turning-related signals in mouse V1 [103,159–161]. Mice also integrate visual and nonvisual signals to estimate distance travelled [162]. Mice trained to lick for reward at a given visual location within a virtual corridor exhibited biased licking responses when the visual gain of the environment was altered [162], indicating that they combined visual and nonvisual signals to estimate distance travelled. Simultaneously measured spatial position tuning of neurons in primary visual cortex and hippocampal CA1 shifted during gain changes, with the resulting decoded spatial position from these neurons corresponding well with the animals' behavioural performance [162,163]. Additionally, a range of mouse visual areas contain neurons tuned to combinations of visual speed and locomotion speed [77,80,95] which may play a role in the integration of visual and nonvisual self-motion signals for perceptual inference of self-motion speed. Thus, in both primates and mice, perception of self-motion is based on integration of optic flow with nonvisual signals.
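The logic of the visual gain manipulation can be made concrete with a toy calculation. The sketch below assumes, purely for illustration, that the animal's position estimate is a fixed weighted average of a visual estimate (scaled by the visual gain) and a nonvisual estimate (equal to the physical distance run). The function name, the weight and the numerical values are hypothetical and are not taken from [162].

```python
def predicted_lick_position(reward_pos_cm, visual_gain, w_visual):
    """Predict where licking occurs under a weighted-average position estimate.

    Assumed model (hypothetical):
      visual estimate    = visual_gain * physical distance run
      nonvisual estimate = physical distance run
      combined estimate  = w_visual * visual + (1 - w_visual) * nonvisual
    Licking is assumed to occur when the combined estimate reaches the
    learned reward position.
    """
    if not 0.0 <= w_visual <= 1.0:
        raise ValueError("w_visual must lie between 0 and 1")
    combined_per_cm = w_visual * visual_gain + (1.0 - w_visual)
    physical_cm = reward_pos_cm / combined_per_cm
    visual_cm = visual_gain * physical_cm
    return physical_cm, visual_cm


# Reward learned at 100 cm under normal gain; gain is then raised to 1.25.
vision_only = predicted_lick_position(100.0, 1.25, w_visual=1.0)
nonvisual_only = predicted_lick_position(100.0, 1.25, w_visual=0.0)
combined = predicted_lick_position(100.0, 1.25, w_visual=0.5)
```

Under this assumed model, an animal relying on vision alone would still lick at the visual reward location, whereas an animal relying on nonvisual signals alone would lick after running the original physical distance. Any intermediate weighting places licks between these two positions in visual coordinates, which is the kind of biased response that indicates cue combination.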

(e) . Visual and nonvisual self-motion signals are dynamically calibrated

Multisensory integration leverages the relationships between different senses to enable more precise perceptual judgements [133,164]. However, the relationship between the visual and nonvisual signals associated with locomotion is contingent upon environmental context. For example, the visual speed of a textured surface is inversely proportional to its viewing distance. As such, a dynamic, context-dependent calibration process is necessary to maintain a valid model of the relationship between nonvisual locomotion signals and optic flow (multisensory cue calibration). Such dynamic calibration of visual and nonvisual signals has been observed in both humans and non-human primates using a number of experimental paradigms [112,132,165–170]. Many of these studies involved exposing subjects to a recalibrating context, with the after-effects of a potential recalibration assessed in a short time period following exposure. In one set of experiments, the recalibrating context was walking on a treadmill [166]. After spending approximately 10 min walking on a treadmill, subjects reported an accelerated perception of self-motion speed while walking normally in stationary surroundings. This effect was quantified by the time taken to walk a 5 m lap following treadmill exposure—despite subjects being instructed to walk at a constant, pre-specified speed, they gradually sped up over a duration of 2–3 min. This result can be interpreted as a series of recalibrations between walking speed and optic flow. During treadmill walking subjects recalibrated a slower optic flow speed to be associated with a given walking speed due to the lack of normal optic flow on a treadmill. When subjects were subsequently re-exposed to the normal contingency between optic flow and walking, the addition of normal optic flow then produced an accelerated sense of self-motion. 
As subjects then recalibrated to the normal contingency between optic flow and walking, the effect of treadmill walking wore off and subjects increased their walking speed to maintain a constant perceived self-motion speed.
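This interpretation of the treadmill after-effect can be illustrated with a toy simulation. The sketch below is not a model from [166]; it assumes a single internal estimate of the optic flow expected per unit walking speed, updated by a simple delta rule, and assumes that perceived self-motion speed equals optic flow divided by that expected gain. The learning rates and the one-second time step are hypothetical.

```python
def simulate_treadmill_aftereffect(
    target_speed=1.0,      # instructed walking speed (arbitrary units)
    exposure_steps=600,    # about 10 min of treadmill walking at 1 s per step
    test_steps=180,        # about 3 min of normal walking afterwards
    exposure_rate=0.001,   # hypothetical recalibration rate on the treadmill
    recovery_rate=0.02,    # hypothetical recalibration rate during normal walking
):
    """Return the expected gain after exposure and the walking speed over time."""
    gain_hat = 1.0  # expected optic flow per unit walking speed (1 = normal)

    # Exposure: walking on a treadmill produces no optic flow (observed gain 0),
    # so the expected gain drifts downwards.
    for _ in range(exposure_steps):
        gain_hat += exposure_rate * (0.0 - gain_hat)
    gain_after_exposure = gain_hat

    # Test: normal walking, where optic flow equals walking speed (true gain 1).
    # Perceived speed = flow / gain_hat, so holding perceived speed at the
    # target requires walking at target_speed * gain_hat.
    walking_speeds = []
    for _ in range(test_steps):
        walking_speeds.append(target_speed * gain_hat)
        gain_hat += recovery_rate * (1.0 - gain_hat)
    return gain_after_exposure, walking_speeds


gain_after_exposure, walking_speeds = simulate_treadmill_aftereffect()
```

In this sketch the simulated subject initially walks more slowly than instructed, because normal optic flow exceeds what the recalibrated gain predicts, and then speeds up as the expected gain returns towards its normal value. This reproduces the qualitative pattern described above under the stated assumptions only.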

In another set of experiments subjects walked on a treadmill while being pulled by a tractor moving either faster or slower than treadmill speed so as to alter the gain of optic flow associated with locomotion and induce visuomotor recalibration [112]. Afterward, subjects were shown targets at a distance and, subsequently, asked to walk to them while blindfolded. Subjects who had been pulled by the tractor slower than their walking speed (low visual gain) overestimated distance to the targets, suggesting that they had calibrated a longer walking distance to be necessary to travel a set visual distance. By contrast, subjects pulled faster than their walking speed (high visual gain) underestimated distance to targets. Interestingly, a related study found that prolonged walking on a rotating circular disc in a visually stationary room caused subjects to subsequently walk in a circular trajectory when trying to walk in place while blindfolded [165], indicating that subjects had recalibrated a circular walking trajectory to be required to remain visually stationary. Thus, humans dynamically recalibrate nonvisual locomotion signals with optic flow, even when the contingency between them is unusual.

A common perceptual bias in virtual reality environments is the underestimation of distances. However, experience of closed-loop feedback can significantly improve these perceptual judgements [171–174], indicating that a calibration process between self-motion and corresponding optic flow can reduce naively occurring biases in virtual environments. The mechanisms underlying this recalibration remain poorly understood, although experiments manipulating the availability of specific visual cues may provide useful insights [34].

It has been proposed that multisensory cue calibration consists of two distinct processes—‘unsupervised’ and ‘supervised’ calibration [175,176]. Unsupervised cue calibration acts on cues that signal discrepant information by shifting perceptual estimates based on each cue toward each other. Unsupervised cue calibration, therefore, aims to achieve ‘internal consistency’ and does not depend on external feedback. By contrast, supervised cue calibration uses external feedback to shift perceptual estimates based on each cue with the aim of achieving accurate perception of the environment.

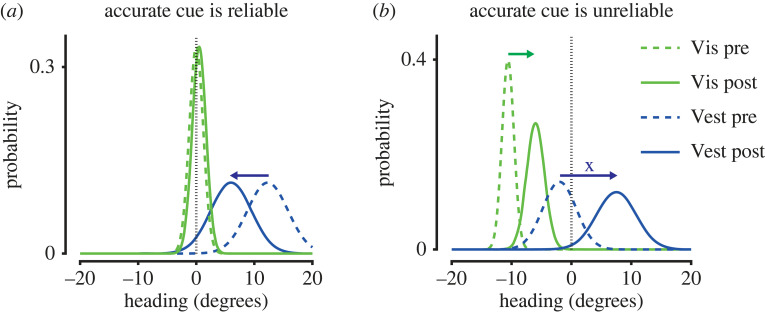

Multisensory cue calibration underlying the perception of heading direction has been investigated using an adaptation paradigm [175,176]. In a series of experiments, unisensory heading direction perception of humans and non-human primates was tested following adaptation to a visual-vestibular contingency whereby visual heading was offset by 10° from the heading signalled by vestibular stimulation during passive self-motion [175,176]. In contrast to multisensory integration, unsupervised cue calibration was independent of the relative reliability of cues (controlled by the motion coherence of the optic flow stimulus). Instead, vestibular adaptation was approximately twice as strong as visual adaptation, as assessed by reports of heading direction in unisensory trials following adaptation [175]. However, during supervised calibration, which was controlled using reward as external feedback for cue accuracy, calibration depended on both cue reliability and accuracy. When the less reliable cue was also inaccurate, it was calibrated alone (figure 6a), but when the more reliable cue was inaccurate, both cues were calibrated together as a combined percept (figure 6b). As a result, the reliable, inaccurate cue became more accurate; however, the less reliable, initially accurate cue became less accurate [176]. A recent study investigating the neural correlates of supervised multisensory calibration found that neurons in the ventral intraparietal (VIP) area, but not MSTd, exhibited shifts in tuning for vestibular and visual heading following adaptation that were correlated with behavioural performance in the task [177], in line with increased choice-related activity in VIP [178].

Figure 6.

Supervised calibration of visual (Vis) and nonvisual (Vest) self-motion signals. Supervised cue calibration depends on both the accuracy and reliability of cues. This is illustrated here for heading direction. The actual heading is indicated by the vertical dashed line and the reliability of cues is indicated by the relative variance of their distributions. (a) When the accurate cue is the more reliable, the inaccurate cue is calibrated alone. (b) When the accurate cue is the less reliable, both cues are calibrated together. (Illustration of results from [176]).

The neural mechanisms underlying cue calibration of visual and nonvisual self-motion signals have not been explicitly investigated in the mouse. A subset of V1 neurons has been identified that may play a role in detecting discrepancies between optic flow and nonvisual self-motion signals, as would occur during unsupervised calibration [109]. These neurons selectively respond to halts or perturbations of optic flow during locomotion [109,179,180]. While these responses are partly influenced by inputs from secondary motor and anterior cingulate cortex [181,182], the precise mechanisms driving responses to optic flow perturbations are debated [180]. Regardless of their mechanism, the responses of such neurons may be suited to trigger recalibration of optic flow and nonvisual self-motion signals by signalling when these signals disagree. Experiments combining perception and neurophysiology, alongside modelling approaches, would provide a means of investigating self-motion-related multisensory cue calibration in mice and of determining whether principles similar to those observed in primates apply.

3. Some considerations

(a) . Dissociating the effects of self-motion from changes in behavioural state

A key challenge for future research is dissociating effects of self-motion from co-occurring changes in behavioural and brain state. The mouse, in particular, has provided a number of insights into the effects of behavioural state on visual processing and perception [13,15,96,183,184]. For example, orofacial movements including whisking strongly correlate with locomotion and can drive large changes in neural activity [98,184,185], which if not carefully accounted for can confound inferences about neural activity underlying perception and behaviour [186]. Comparing the effects of different types of active and passive self-motion [104,187], alongside manipulation of visual inputs and their relationship to self-motion [82,104,187], can be used to carefully dissect and dissociate the various factors that may contribute to the influence of self-motion on visual processing and perception. A further consideration is that both mice and humans make distinct sets of eye movements during unrestrained active self-motion in order to stabilize visual flow [9,18,103,188], indicating that locomotion is associated with distinct perceptual strategies for sampling the visual scene. Moving forward, careful quantification of brain and behavioural state information [184,186,189–191] will be essential to dissociate the various factors that co-occur with locomotion.

(b) . Conscious awareness

To what extent does the integration of visual and nonvisual cues for self-motion occur without conscious awareness? Humans automatically adjust their locomotion speed to changes in optic flow patterns [21,192–194] and these adjustments are present in subjects with cortical blindsight [118], suggesting that visual control of locomotion can occur without explicit conscious awareness in humans. Changes in locomotion speed also bias human subjects' ability to discriminate between visual speeds in a 2-interval forced choice task [117], indicating that there is a degree of mandatory perceptual fusion of optic flow with nonvisual self-motion signals that prevents conscious access to isolated optic flow during locomotion. Evidence for the mandatory fusion of vestibular and visual cues has also been observed for the perception of heading direction [158], suggesting that this may be a general phenomenon in the multisensory perception of self-motion.

4. Conclusion and future directions

In this review, we brought together findings from distinct fields of research. While the influence of self-motion on optic flow perception has been most studied in humans and non-human primates, the effects of locomotion on neural activity are best characterized in mice. We believe that each of these species affords unique insights into the influence of self-motion on optic flow processing and perception, and that a multi-species approach therefore provides the best way forward. To this end, we hope this review provides a useful starting point by highlighting existing cross-over in these areas of research as well as the distinct experimental opportunities afforded by each of these species.

Experimental approaches to investigate vision during movement have had various constraints in available methodologies for each species. For example, non-human primate studies have generally investigated the influence of vestibular signals on optic flow processing and perception during passive movement using motion platforms. However, developing technologies are expanding experimental opportunities (see [195] for a useful review of recording methodologies available in moving subjects). For example, recordings of head-restrained marmosets free to locomote on a treadmill [101] should enable the investigation of optic flow processing and perception during active locomotion in a non-human primate and therefore enable more direct comparisons with findings from mice using similar experimental assays.

The recent development of lightweight eye tracking cameras for mice [9,103,188,196–198] enables simultaneous monitoring of head and eye movements during free movement, making it possible to reconstruct on a moment-by-moment basis the visual scene as viewed by a subject. Continued development of this technology alongside appropriate analysis methods will enable experimental investigation of visual encoding and perception in unrestrained, naturally behaving subjects, for example during prey capture [8,9] or predator avoidance [10]. This can be further enhanced by the ability to present interactive augmented reality environments to animals [110,111].

In humans, wireless head-mounted displays (HMDs) allow for precise control of binocular visual stimulation to a subject during active unrestrained movement. The continued development of this technology should allow for more comfortable, realistic and immersive visual experiences. Moreover, a number of groups have begun to combine head-mounted displays with non-invasive recording methods such as electroencephalography [199] and functional near-infrared spectroscopy [200], enabling simultaneous recording of neural activity during visual behavioural tasks.

As the availability of experimental technologies increases, so does the capacity for analogous experiments across species. This in turn allows for more direct comparisons of the effects of self-motion on optic flow processing and perception. Indeed, advances in training protocols mean that mice can now be routinely trained to perform a range of visual psychophysics tasks [100,157,159,201–204] similar to those successfully used to investigate visual perception in humans and non-human primates. Using approaches across animal species and with different brain recording techniques, we are now in a strong position to investigate the influence of self-motion on optic flow processing and perception. These approaches will be essential to understand how animals successfully interact with dynamic environments and should, moreover, provide insights into principles of sensorimotor coding in mammalian species.

Contributor Information

Edward A. B. Horrocks, Email: edward.horrocks.17@ucl.ac.uk.

Aman B. Saleem, Email: aman.saleem@ucl.ac.uk.

Data accessibility

This article has no additional data.

Authors' contributions

E.H.: conceptualization, formal analysis, investigation, writing—original draft, writing—review and editing; I.M.: conceptualization, funding acquisition, investigation, project administration, supervision, writing—original draft, writing—review and editing; A.B.S.: conceptualization, funding acquisition, investigation, project administration, supervision, writing—original draft, writing—review and editing.

All authors gave final approval for publication and agreed to be held accountable for the work performed therein.

Conflict of interest declaration

We declare we have no competing interests.

Funding

This work was supported by The Sir Henry Dale Fellowship from the Wellcome Trust and Royal Society (grant no. 200501); the Human Frontier in Science Program (grant no. RGY0076/2018) to A.B.S.; a Biotechnology and Biological Sciences Research Council studentship to E.H.

References

- 1.Helmholtz H, Southall J (eds). 1925. Treatise on physiological optics III. The perceptions of vision. New York, NY: Optical Society of America; [cited 17 May 2022]. See https://psycnet.apa.org/record/1925-10311-000. [Google Scholar]

- 2.Gibson JJ. 1966. The senses considered as perceptual systems. J. Aesthetic Educ. 3, 142. [Google Scholar]

- 3.Lee DN. 1980. The optic flow field: the foundation of vision. Phil. Trans. R. Soc. Lond. B. 290, 169-179. [DOI] [PubMed] [Google Scholar]

- 4.Longuet-Higgins HC, Prazdny K. 1980. The interpretation of a moving retinal image. Proc. R. Soc. Lond. B 208, 385-397. [DOI] [PubMed] [Google Scholar]

- 5.Cormack LK, Czuba TB, Knöll J, Huk AC. 2017. Binocular mechanisms of 3D motion processing. Annu. Rev. Vis. Sci. 3, 297-318. ( 10.1146/annurev-vision-102016-061259) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Rogers B. 2021. Optic flow: perceiving and acting in a 3-D world. i-Perception 12, 2041669520987257. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Niehorster DC. 2021. Optic flow: a history. i-Perception 12, 204166952110557. ( 10.1177/20416695211055766) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Hoy JL, Yavorska I, Wehr M, Niell CM. 2016. Vision drives accurate approach behavior during prey capture in laboratory mice. Curr. Biol. 26, 3046-3052. ( 10.1016/j.cub.2016.09.009) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Holmgren CD, Stahr P, Wallace DJ, Voit KM, Matheson EJ, Sawinski J, Bassetto G, Kerr JN. 2021. Visual pursuit behavior in mice maintains the pursued prey on the retinal region with least optic flow. Elife 10, e70838. ( 10.7554/eLife.70838) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.De Franceschi G, Vivattanasarn T, Saleem AB, Solomon SG. 2016. Vision guides selection of freeze or flight defense strategies in mice. Curr. Biol. 26, 2150-2154. ( 10.1016/j.cub.2016.06.006) [DOI] [PubMed] [Google Scholar]

- 11.Durgin FH, Gigone K. 2007. Enhanced optic flow speed discrimination while walking: contextual tuning of visual coding. Perception 36, 1465-1475. ( 10.1068/p5845) [DOI] [PubMed] [Google Scholar]

- 12.Britten KH. 2008. Mechanisms of self-motion perception. Annu. Rev. Neurosci. 31, 389-410. ( 10.1146/annurev.neuro.29.051605.112953) [DOI] [PubMed] [Google Scholar]

- 13.Busse L, Cardin JA, Chiappe ME, Halassa MM, McGinley MJ, Yamashita T, Saleem AB. 2017. Sensation during active behaviors. J. Neurosci. 37, 10 826-10 834. ( 10.1523/JNEUROSCI.1828-17.2017) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Mestre D. 2017. CAVE versus head-mounted displays: ongoing thoughts. Electron Imaging 2017, 31-35. ( 10.2352/ISSN.2470-1173.2017.3.ERVR-094) [DOI] [Google Scholar]

- 15.Froudarakis E, Fahey PG, Reimer J, Smirnakis SM, Tehovnik EJ, Tolias AS. 2019. The visual cortex in context. Annu. Rev. Vis. Sci. 5, 317-339. ( 10.1146/annurev-vision-091517-034407) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Parker PRL, Brown MA, Smear MC, Niell CM. 2020. Movement-related signals in sensory areas: roles in natural behavior. Trends Neurosci. 43, 581-595. ( 10.1016/j.tins.2020.05.005) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Chaplin TA, Margrie TW. 2020. Cortical circuits for integration of self-motion and visual-motion signals. Curr. Opin Neurobiol. 60, 122-128. ( 10.1016/j.conb.2019.11.013) [DOI] [PubMed] [Google Scholar]

- 18.Matthis JS, Muller KS, Bonnen KL, Hayhoe MM. 2022. Retinal optic flow during natural locomotion. PLoS Comput. Biol. 18, e1009575. ( 10.1371/journal.pcbi.1009575) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Gibson JJ. 1957. Optimal motions and transformations as stimuli for visual perception. Psychol. Rev. 64, 288-295. ( 10.1037/h0044277) [DOI] [PubMed] [Google Scholar]

- 20.Warren WH, Hannon DJ. 1988. Direction of self-motion is perceived from optical flow. Nature 336, 162-163. ( 10.1038/336162a0) [DOI] [Google Scholar]

- 21.Prokop T, Schubert M, Berger W. 1997. Visual influence on human locomotion. Modulation to changes in optic flow. Exp. Brain Res. 114, 63-70. ( 10.1007/PL00005624) [DOI] [PubMed] [Google Scholar]

- 22.Campbell MG, Ocko SA, Mallory CS, Low IIC, Ganguli S, Giocomo LM. 2018. Principles governing the integration of landmark and self-motion cues in entorhinal cortical codes for navigation. Nat. Neurosci. 21, 1096-1106. ( 10.1038/s41593-018-0189-y) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Helmholtz H. 1910. Handbook of physiological optics, 3rd edn. (eds A Gullstrand, J von Kries, W Nagel), vol. 1. Voss; [cited 17 May 2022]. See Https://scholar.google.com/scholar?hl=en&q=Helmholtz+H.+%281910%29.+Handbuch+der+physiologischen+Optik+%5BHandbook+of+physiological+optics%5D.+%28Vol.+3.+3rd+ed.%29+A.+Gullstrand%2C+J.+von+Kries+and+W.+Nagel+%28Eds.%29.+Voss.+

- 24.Koenderink JJ, van Doorn AJ. 1976. Local structure of movement parallax of the plane. J Opt Soc Am 66, 717-723. ( 10.1364/JOSA.66.000717) [DOI] [Google Scholar]

- 25.Warren PA, Rushton SK. 2008. Evidence for flow-parsing in radial flow displays. Vision Res. 48, 655-663. ( 10.1016/j.visres.2007.10.023) [DOI] [PubMed] [Google Scholar]

- 26.Howard IP, Fujii Y, Allison RS. 2014. Interactions between cues to visual motion in depth. J. Vis. 14, 14. ( 10.1167/14.2.14) [DOI] [PubMed] [Google Scholar]

- 27.Whritner JA, Czuba TB, Cormack LK, Huk AC. 2021. Spatiotemporal integration of isolated binocular three-dimensional motion cues. J. Vis. 21, 2. ( 10.1167/jov.21.10.2) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Richards W, Regan D. 1973. A stereo field map with implications for disparity processing. Investig. Ophthalmol. Vis. Sci. 12, 904-909. [PubMed] [Google Scholar]

- 29.Czuba TB, Rokers B, Huk AC, Cormack LK. 2010. Speed and eccentricity tuning reveal a central role for the velocity-based cue to 3D visual motion. J. Neurophysiol. 104, 2886-2899. ( 10.1152/jn.00585.2009) [DOI] [PubMed] [Google Scholar]

- 30.Thompson L, Ji M, Rokers B, Rosenberg A. 2019. Contributions of binocular and monocular cues to motion-in-depth perception. J. Vis. 19, 1-16. ( 10.1167/19.3.2) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Cutting JE, Vishton PM. 1995. Perceiving Layout and Knowing Distances. In Perception of space and motion, pp. 69-117. Amsterdam, The Netherlands: Elsevier. [Google Scholar]

- 32.Rokers B, Fulvio JM, Pillow JW, Cooper EA. 2018. Systematic misperceptions of 3-D motion explained by Bayesian inference. J. Vis. 18, 23. ( 10.1167/jov.18.3.23) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Fulvio JM, Rokers B. 2017. Use of cues in virtual reality depends on visual feedback. Sci. Rep. 7, 1-13. ( 10.1038/s41598-017-16161-3) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Fulvio JM, Ji M, Thompson L, Rosenberg A, Rokers B. 2020. Cue-dependent effects of VR experience on motion-in-depth sensitivity. PLoS ONE 15, e0229929. ( 10.1371/journal.pone.0229929) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Hughes A. 1979. A schematic eye for the rat. Vision Res. 19, 569-588. ( 10.1016/0042-6989(79)90143-3) [DOI] [PubMed] [Google Scholar]

- 36.Wallace DJ, Greenberg DS, Sawinski J, Rulla S, Notaro G, Kerr JND. 2013. Rats maintain an overhead binocular field at the expense of constant fusion. Nature 498, 65-69. ( 10.1038/nature12153) [DOI] [PubMed] [Google Scholar]

- 37.Scholl B, Burge J, Priebe NJ. 2013. Binocular integration and disparity selectivity in mouse primary visual cortex. J. Neurophysiol. 109, 3013-3024. ( 10.1152/jn.01021.2012) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Henson DB. 1993. Visual fields. Oxford, UK: Oxford University Press. [Google Scholar]

- 39.Samonds JM, Choi V, Priebe NJ. 2019. Mice discriminate stereoscopic surfaces without fixating in depth. J. Neurosci. 39, 8024-8037. ( 10.1523/JNEUROSCI.0895-19.2019) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.La Chioma A, Bonhoeffer T, Hübener M. 2019. Area-specific mapping of binocular disparity across mouse visual cortex. Curr. Biol. 29, 2954-2960.e5. ( 10.1016/j.cub.2019.07.037) [DOI] [PubMed] [Google Scholar]

- 41.la Chioma A, Bonhoeffer T, Hübener M. 2020. Disparity sensitivity and binocular integration in mouse visual cortex areas. J. Neurosci. 40, 8883-8899. ( 10.1523/JNEUROSCI.1060-20.2020) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Jeon CJ, Strettoi E, Masland RH. 1998. The major cell populations of the mouse retina. J. Neurosci. 18, 8936-8946. ( 10.1523/JNEUROSCI.18-21-08936.1998) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.Wikler KC, Williams RW, Rakic P. 1990. Photoreceptor mosaic: number and distribution of rods and cones in the rhesus monkey retina. J. Comp. Neurol. 297, 499-508. ( 10.1002/cne.902970404) [DOI] [PubMed] [Google Scholar]

- 44.Wikler KC, Rakic P. 1990. Distribution of photoreceptor subtypes in the retina of diurnal and nocturnal primates. J. Neurosci. 10, 3390-3401. ( 10.1523/JNEUROSCI.10-10-03390.1990) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45.Packer O, Hendrickson AE, Curcio CA. 1989. Photoreceptor topography of the retina in the adult pigtail macaque (Macaca nemestrina). J. Comp. Neurol. 288, 165-183. ( 10.1002/cne.902880113) [DOI] [PubMed] [Google Scholar]

- 46.Huberman AD, Niell CM. 2011. What can mice tell us about how vision works? Trends Neurosci. 34, 464-473. ( 10.1016/j.tins.2011.07.002) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47.Perry VH, Cowey A. 1985. The ganglion cell and cone distributions in the monkey's retina: implications for central magnification factors. Vision Res. 25, 1795-1810. ( 10.1016/0042-6989(85)90004-5) [DOI] [PubMed] [Google Scholar]

- 48.Naarendorp F, Esdaille TM, Banden SM, Andrews-Labenski J, Gross OP, Pugh EN. 2010. Dark light, rod saturation, and the absolute and incremental sensitivity of mouse cone vision. J. Neurosci. 30, 12 495-12 507. ( 10.1523/JNEUROSCI.2186-10.2010) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49.Caramenti M, Pretto P, Lafortuna CL, Bresciani JP, Dubois A. 2019. Influence of the size of the field of view on visual perception while running in a treadmill-mediated virtual environment. Front. Psychol. 10, 2344. ( 10.3389/fpsyg.2019.02344) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50.McManus M, D'Amour S, Harris LR. 2017. Using optic flow in the far peripheral field. J. Vis. 17, 3. [DOI] [PubMed] [Google Scholar]

- 51.Rogers C, Rushton SK, Warren PA. 2017. Peripheral visual cues contribute to the perception of object movement during self-movement. i-Perception 8, 204166951773607. ( 10.1177/2041669517736072) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 52.Pan JS, Bingham GP. 2013. With an eye to low vision: optic flow enables perception despite image blur. Optom. Vis. Sci. 90, 1119-1127. ( 10.1097/opx.0000000000000027) [DOI] [PubMed] [Google Scholar]

- 53.Van Essen DC, Gallant JL. 1994. Neural mechanisms of form and motion processing in the primate visual system. Neuron 13, 1-10. ( 10.1016/0896-6273(94)90455-3) [DOI] [PubMed] [Google Scholar]

- 54.Tanaka K, Saito HA. 1989. Analysis of motion of the visual field by direction, expansion/contraction, and rotation cells clustered in the dorsal part of the medial superior temporal area of the macaque monkey. J. Neurophysiol. 62, 626-641. ( 10.1152/jn.1989.62.3.626) [DOI] [PubMed] [Google Scholar]

- 55.Duffy CJ, Wurtz RH. 1991. Sensitivity of MST neurons to optic flow stimuli. I. A continuum of response selectivity to large-field stimuli. J. Neurophysiol. 65, 1329-1345. ( 10.1152/jn.1991.65.6.1329) [DOI] [PubMed] [Google Scholar]

- 56.Duffy CJ, Wurtz RH. 1991. Sensitivity of MST neurons to optic flow stimuli. II. Mechanisms of response selectivity revealed by small-field stimuli. J. Neurophysiol. 65, 1346-1359. ( 10.1152/jn.1991.65.6.1346) [DOI] [PubMed] [Google Scholar]

- 57.Graziano MSA, Andersen RA, Snowden RJ. 1994. Tuning of MST neurons to spiral motions. J. Neurosci. 14, 54-67. ( 10.1523/JNEUROSCI.14-01-00054.1994) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 58.Wild B, Treue S. 2021. Primate extrastriate cortical area MST: a gateway between sensation and cognition. J. Neurophysiol. 125, 1851-1882. ( 10.1152/jn.00384.2020) [DOI] [PubMed] [Google Scholar]

- 59.Geesaman BJ, Andersen RA. 1996. The analysis of complex motion patterns by form/cue invariant MSTd neurons. J. Neurosci. 16, 4716-4732. ( 10.1523/JNEUROSCI.16-15-04716.1996) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 60.Rasmussen RN, Matsumoto A, Arvin S, Yonehara K. 2021. Binocular integration of retinal motion information underlies optic flow processing by the cortex. Curr. Biol. 31, 1165-1174.e6. ( 10.1016/j.cub.2020.12.034) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 61.Garrett ME, Nauhaus I, Marshel JH, Callaway EM. 2014. Topography and areal organization of mouse visual cortex. J. Neurosci. 34, 12 587-12 600. ( 10.1523/JNEUROSCI.1124-14.2014) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 62.Zhuang J, Ng L, Williams D, Valley M, Li Y, Garrett M, Waters J. 2017. An extended retinotopic map of mouse cortex. Elife 6, e18372. ( 10.7554/eLife.18372) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 63.Sit KK, Goard MJ. 2020. Distributed and retinotopically asymmetric processing of coherent motion in mouse visual cortex. Nat. Commun. 11, 1-4. ( 10.1038/s41467-019-13993-7) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 64.Wang Q, Sporns O, Burkhalter A. 2012. Network analysis of corticocortical connections reveals ventral and dorsal processing streams in mouse visual cortex. J. Neurosci. 32, 4386-4399. ( 10.1523/JNEUROSCI.6063-11.2012) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 65.Smith IT, Townsend LB, Huh R, Zhu H, Smith SL. 2017. Stream-dependent development of higher visual cortical areas. Nat. Neurosci. 20, 200. ( 10.1038/nn.4469) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 66.Juavinett AL, Callaway EM. 2015. Pattern and component motion responses in mouse visual cortical areas. Curr. Biol. 25, 1759-1764. ( 10.1016/j.cub.2015.05.028) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 67.Murakami T, Matsui T, Ohki K. 2017. Functional segregation and development of mouse higher visual areas. J. Neurosci. 37, 9424-9437. ( 10.1523/JNEUROSCI.0731-17.2017) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 68.Nishio N, Tsukano H, Hishida R, Abe M, Nakai J, Kawamura M, Aiba A, Sakimura K, Shibuki K. 2018. Higher visual responses in the temporal cortex of mice. Sci. Rep. 8, 1-12. ( 10.1038/s41598-018-29530-3) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 69.Yu Y, Stirman JN, Dorsett CR, Smith SL. 2021. Selective representations of texture and motion in mouse higher visual areas. bioRxiv. 2021.12.05.471337. ( 10.1101/2021.12.05.471337v1). [DOI]

- 70.Han X, Vermaercke B, Bonin V. 2022. Diversity of spatiotemporal coding reveals specialized visual processing streams in the mouse cortex. Nat. Commun. 13, 1-18. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 71.Horrocks EAB, Saleem AB. 2021. Distinct neural dynamics underlie the encoding of visual speed in stationary and running mice. bioRxiv. 2021.06.11.447904. ( 10.1101/2021.06.11.447904v1) [DOI]

- 72.Dombeck DA, Khabbaz AN, Collman F, Adelman TL, Tank DW. 2007. Imaging large-scale neural activity with cellular resolution in awake, mobile mice. Neuron 56, 43-57. ( 10.1016/j.neuron.2007.08.003) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 73.Niell CM, Stryker MP. 2010. Modulation of visual responses by behavioral state in mouse visual cortex. Neuron 65, 472-479. ( 10.1016/j.neuron.2010.01.033) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 74.Schröder S, Steinmetz NA, Krumin M, Pachitariu M, Rizzi M, Lagnado L, Harris KD, Carandini M. 2020. Arousal modulates retinal output. Neuron 107, 487-495.e9. ( 10.1016/j.neuron.2020.04.026) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 75.Liang L, Fratzl A, Reggiani JDS, El Mansour O, Chen C, Andermann ML. 2020. Retinal inputs to the thalamus are selectively gated by arousal. Curr. Biol. 30, 3923-3934.e9. ( 10.1016/j.cub.2020.07.065) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 76.Erisken S, Vaiceliunaite A, Jurjut O, Fiorini M, Katzner S, Busse L. 2014. Effects of locomotion extend throughout the mouse early visual system. Curr. Biol. 24, 2899-2907. ( 10.1016/j.cub.2014.10.045) [DOI] [PubMed] [Google Scholar]

- 77.Roth MM, Dahmen JC, Muir DR, Imhof F, Martini FJ, Hofer SB. 2016. Thalamic nuclei convey diverse contextual information to layer 1 of visual cortex. Nat. Neurosci. 19, 299-307. ( 10.1038/nn.4197) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 78.Aydın Ç, Couto J, Giugliano M, Farrow K, Bonin V. 2018. Locomotion modulates specific functional cell types in the mouse visual thalamus. Nat. Commun. 9, 1-12. ( 10.1038/s41467-018-06780-3) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 79.Savier EL, Chen H, Cang J. 2019. Effects of locomotion on visual responses in the mouse superior colliculus. J. Neurosci. 39, 9360-9368. ( 10.1523/JNEUROSCI.1854-19.2019) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 80.Blot A, Roth MM, Gasler I, Javadzadeh M, Imhof F, Hofer SB. 2021. Visual intracortical and transthalamic pathways carry distinct information to cortical areas. Neuron 109, 1996-2008.e6. ( 10.1016/j.neuron.2021.04.017) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 81.Andermann ML, Kerlin AM, Roumis DK, Glickfeld LL, Reid RC. 2011. Functional specialization of mouse higher visual cortical areas. Neuron 72, 1025-1039. ( 10.1016/j.neuron.2011.11.013) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 82.Diamanti EM, Reddy CB, Schröder S, Muzzu T, Harris KD, Saleem AB, Carandini M. 2021. Spatial modulation of visual responses arises in cortex with active navigation. Elife 10, 1-15. ( 10.7554/eLife.63705) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 83.Christensen AJ, Pillow JW. 2022. Reduced neural activity but improved coding in rodent higher-order visual cortex during locomotion. Nat. Commun. 13, 1-8. ( 10.1038/s41467-022-29200-z) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 84.Dadarlat MC, Stryker MP. 2017. Locomotion enhances neural encoding of visual stimuli in mouse V1. J. Neurosci. 37, 3764-3775. ( 10.1523/JNEUROSCI.2728-16.2017) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 85.Christensen AJ, Pillow JW. 2017. Running reduces firing but improves coding in rodent higher-order visual cortex. bioRxiv. 214007. ( 10.1101/214007) [DOI]

- 86.Polack P-O, Friedman J, Golshani P. 2013. Cellular mechanisms of brain state-dependent gain modulation in visual cortex. Nat. Neurosci. 16, 1331-1339. ( 10.1038/nn.3464) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 87.Pakan JMP, Lowe SC, Dylda E, Keemink SW, Currie SP, Coutts CA, Rochefort NL. 2016. Behavioral-state modulation of inhibition is context-dependent and cell type specific in mouse visual cortex. Elife 5, e14985. ( 10.7554/eLife.14985) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 88.Dipoppa M, Ranson A, Krumin M, Pachitariu M, Carandini M, Harris KD. 2018. Vision and locomotion shape the interactions between neuron types in mouse visual cortex. Neuron 98, 602-615.e8. ( 10.1016/j.neuron.2018.03.037) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 89.Millman DJ, et al. 2020. VIP interneurons in mouse primary visual cortex selectively enhance responses to weak but specific stimuli. Elife 9, 1-22. ( 10.7554/eLife.55130) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 90.Bugeon S, Duffield J, et al. 2022. A transcriptomic axis predicts state modulation of cortical interneurons. Nature 607, 330-338. ( 10.1038/s41586-022-04915-7) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 91.Bennett C, Arroyo S, Hestrin S. 2013. Subthreshold mechanisms underlying state-dependent modulation of visual responses. Neuron 80, 350-357. ( 10.1016/j.neuron.2013.08.007) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 92.Shimaoka D, Harris KD, Carandini M. 2018. Effects of arousal on mouse sensory cortex depend on modality. Cell Rep. 22, 3160-3167. ( 10.1016/j.celrep.2018.02.092) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 93.Ayaz A, Saleem AB, Schölvinck ML, Carandini M. 2013. Locomotion controls spatial integration in mouse visual cortex. Curr. Biol. 23, 890-894. ( 10.1016/j.cub.2013.04.012) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 94.Mineault PJ, Tring E, Trachtenberg JT, Ringach DL. 2016. Enhanced spatial resolution during locomotion and heightened attention in mouse primary visual cortex. J. Neurosci. 36, 6382-6392. ( 10.1523/JNEUROSCI.0430-16.2016) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 95.Saleem AB, Ayaz A, Jeffery KJ, Harris KD, Carandini M. 2013. Integration of visual motion and locomotion in mouse visual cortex. Nat. Neurosci. 16, 1864-1869. ( 10.1038/nn.3567) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 96.Vinck M, Batista-Brito R, Knoblich U, Cardin JA. 2015. Arousal and locomotion make distinct contributions to cortical activity patterns and visual encoding. Neuron 86, 740-754. ( 10.1016/j.neuron.2015.03.028) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 97.Clancy KB, Orsolic I, Mrsic-Flogel TD. 2019. Locomotion-dependent remapping of distributed cortical networks. Nat. Neurosci. 22, 778-786. ( 10.1038/s41593-019-0357-8) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 98.Stringer C, Pachitariu M, Steinmetz N, Reddy CB, Carandini M, Harris KD. 2019. Spontaneous behaviors drive multidimensional, brainwide activity. Science 364, eaav7893. ( 10.1126/science.aav7893) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 99.Neske GT, Nestvogel D, Steffan PJ, McCormick DA. 2019. Distinct waking states for strong evoked responses in primary visual cortex and optimal visual detection performance. J. Neurosci. 39, 10 044-10 059. ( 10.1523/JNEUROSCI.1226-18.2019) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 100.McBride EG, Lee SYJ, Callaway EM. 2019. Local and global influences of visual spatial selection and locomotion in mouse primary visual cortex. Curr. Biol. 29, 1592-1605.e5. ( 10.1016/j.cub.2019.03.065) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 101.Liska JP, Rowley DP, Nguyen TTK, Muthmann J-O, Butts DA, Yates JL, Huk AC. 2022. Running modulates primate and rodent visual cortex via common mechanism but quantitatively distinct implementation. bioRxiv. 2022.06.13.495712. ( 10.1101/2022.06.13.495712v1) [DOI]

- 102.Chen G, King JA, Lu Y, Cacucci F, Burgess N. 2018. Spatial cell firing during virtual navigation of open arenas by head-restrained mice. Elife 7, e34789. ( 10.7554/eLife.34789) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 103.Parker PRL, Abe ETT, Leonard ESP, Martins DM, Niell CM. 2022. Joint coding of visual input and eye/head position in V1 of freely moving mice. bioRxiv. 2022.02.01.478733. ( 10.1101/2022.02.01.478733v1) [DOI] [PMC free article] [PubMed]

- 104.Durgin FH, Gigone K, Scott R. 2005. Perception of visual speed while moving. J. Exp. Psychol. Hum. Percept. Perform. 31, 339-353. ( 10.1037/0096-1523.31.2.339) [DOI] [PubMed] [Google Scholar]

- 105.Itoh Y, Langlotz T, Sutton J, Plopski A. 2021. Towards indistinguishable augmented reality. ACM Comput. Surv. 54, 1-36. ( 10.1145/3453157) [DOI] [Google Scholar]

- 106.Rand KM, Barhorst-Cates EM, Kiris E, Thompson WB, Creem-Regehr SH. 2019. Going the distance and beyond: simulated low vision increases perception of distance traveled during locomotion. Psychol. Res. 83, 1349. ( 10.1007/s00426-018-1019-2) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 107.Herlihey TA, Rushton SK. 2012. The role of discrepant retinal motion during walking in the realignment of egocentric space. J. Vis. 12, 4. ( 10.1167/12.3.4) [DOI] [PubMed] [Google Scholar]

- 108.Del Grosso NA, Graboski JJ, Chen W, Blanco-Hernández E, Sirota A. 2017. Virtual reality system for freely-moving rodents. bioRxiv. 161232. ( 10.1101/161232v1) [DOI]

- 109.Keller GB, Bonhoeffer T, Hübener M. 2012. Sensorimotor mismatch signals in primary visual cortex of the behaving mouse. Neuron 74, 809-815. ( 10.1016/j.neuron.2012.03.040) [DOI] [PubMed] [Google Scholar]

- 110.Stowers JR, et al. 2017. Virtual reality for freely moving animals. Nat. Methods 14, 995-1002. ( 10.1038/nmeth.4399) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 111.Lopes G, et al. 2021. Creating and controlling visual environments using BonVision. Elife 10, e65541. ( 10.7554/eLife.65541) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 112.Rieser JJ, Pick HL, Ashmead DH, Garing AE. 1995. Calibration of human locomotion and models of perceptual-motor organization. J. Exp. Psychol. Hum. Percept. Perform. 21, 480-497. ( 10.1037/0096-1523.21.3.480) [DOI] [PubMed] [Google Scholar]

- 113.Hogendoorn H, Verstraten FAJ, MacDougall H, Alais D. 2017. Vestibular signals of self-motion modulate global motion perception. Vision Res. 130, 22-30. ( 10.1016/j.visres.2016.11.002) [DOI] [PubMed] [Google Scholar]

- 114.Gu Y, Watkins PV, Angelaki DE, DeAngelis GC. 2006. Visual and nonvisual contributions to three-dimensional heading selectivity in the medial superior temporal area. J. Neurosci. 26, 73-85. ( 10.1523/JNEUROSCI.2356-05.2006) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 115.Cruz-Neira C, Sandin DJ, DeFanti TA. 1993. Surround-screen projection-based virtual reality: the design and implementation of the CAVE. In Proceedings of the 20th Annual Conference on Computer Graphics and Interactive Techniques (SIGGRAPH '93), Anaheim, CA, 2–6 August 1993, pp. 135–142. New York, NY: ACM. ( 10.1145/166117.166134) [DOI]

- 116.Thurrell AEI, Pelah A. 2002. Reduction of perceived visual speed during walking: effect dependent upon stimulus similarity to the visual consequences of locomotion. J. Vis. 2, 628. ( 10.1167/2.7.628) [DOI] [Google Scholar]

- 117.Souman JL, Freeman TCA, Eikmeier V, Ernst MO. 2010. Humans do not have direct access to retinal flow during walking. J. Vis. 10, 14. ( 10.1167/10.11.14) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 118.Pelah A, Barbur J, Thurrell A, Hock HS. 2015. The coupling of vision with locomotion in cortical blindness. Vision Res. 110(PB), 286-294. ( 10.1016/j.visres.2014.04.015) [DOI] [PubMed] [Google Scholar]

- 119.Pelah A, Thurrell AEI. 2001. Reduction of perceived visual speed during locomotion: evidence for quadrupedal perceptual pathways in human? J. Vis. 1, 307. ( 10.1167/1.3.307) [DOI] [Google Scholar]

- 120.Caramenti M, Lafortuna CL, Mugellini E, Khaled OA, Bresciani JP, Dubois A. 2018. Matching optical flow to motor speed in virtual reality while running on a treadmill. PLoS ONE 13, e0195781. ( 10.1371/journal.pone.0195781) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 121.Barlow HB, Földiák P. 1989. Adaptation and decorrelation in the cortex. In The computing neuron, pp. 54-72. [Google Scholar]

- 122.Barlow HB. 1990. A theory about the functional role and synaptic mechanism of visual after-effects. In Vision: coding and efficiency (ed. Blakemore C), Cambridge, UK: Cambridge University Press. [Google Scholar]

- 123.Clifford CWG, Wenderoth P. 1999. Adaptation to temporal modulation can enhance differential speed sensitivity. Vision Res. 39, 4324-4331. ( 10.1016/S0042-6989(99)00151-0) [DOI] [PubMed] [Google Scholar]

- 124.Durgin FH. 2009. When walking makes perception better. Curr. Dir. Psychol. Sci. 18, 43-47. ( 10.1111/j.1467-8721.2009.01603.x) [DOI] [Google Scholar]

- 125.Saleem AB. 2020. Two stream hypothesis of visual processing for navigation in mouse. Curr. Opin. Neurobiol. 64, 70-78. ( 10.1016/j.conb.2020.03.009) [DOI] [PubMed] [Google Scholar]

- 126.Wallach H. 1987. Perceiving a stable environment when one moves. Annu. Rev. Psychol. 38, 1-27. ( 10.1146/annurev.ps.38.020187.000245) [DOI] [PubMed] [Google Scholar]

- 127.Rushton SK, Niehorster DC, Warren PA, Li L. 2018. The primary role of flow processing in the identification of scene-relative object movement. J. Neurosci. 38, 1737-1743. ( 10.1523/JNEUROSCI.3530-16.2017) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 128.Rushton SK, Warren PA. 2005. Moving observers, relative retinal motion and the detection of object movement. Curr. Biol. 15, R542-R543. ( 10.1016/j.cub.2005.07.020) [DOI] [PubMed] [Google Scholar]

- 129.Peltier NE, Angelaki DE, DeAngelis GC. 2020. Optic flow parsing in the macaque monkey. J. Vis. 20, 8. ( 10.1167/jov.20.10.8) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 130.Fajen BR, Matthis JS. 2013. Visual and non-visual contributions to the perception of object motion during self-motion. PLoS ONE 8, e55446. ( 10.1371/journal.pone.0055446) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 131.Dokka K, MacNeilage PR, DeAngelis GC, Angelaki DE. 2015. Multisensory self-motion compensation during object trajectory judgments. Cereb. Cortex 25, 619-630. ( 10.1093/cercor/bht247) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 132.Fajen BR, Parade MS, Matthis JS. 2013. Humans perceive object motion in world coordinates during obstacle avoidance. J. Vis. 13, 25. ( 10.1167/13.8.25) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 133.Dokka K, DeAngelis GC, Angelaki DE. 2015. Multisensory integration of visual and vestibular signals improves heading discrimination in the presence of a moving object. J. Neurosci. 35, 13 599-13 607. ( 10.1523/JNEUROSCI.2267-15.2015) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 134.Royden CS, Hildreth EC. 1996. Human heading judgments in the presence of moving objects. Percept. Psychophys. 58, 836-856. ( 10.3758/BF03205487) [DOI] [PubMed] [Google Scholar]

- 135.Warren WH, Saunders JA. 1995. Perceiving heading in the presence of moving objects. Perception 24, 315-331. ( 10.1068/p240315) [DOI] [PubMed] [Google Scholar]