Artificial Intelligence (AI) is an emerging technology that uses complex algorithms to arrive at an outcome over a range of circumstances, leveraging the ability of computer systems to perform tasks that would usually require human levels of intelligence.1-3 The use of AI in cancer care is rapidly expanding: a May 2022 PubMed search of the term cross-referenced with cancer revealed approximately 26,000 citations, with more than 60% published in the past five years. Ethical considerations for AI in oncology include patient equity, privacy, and autonomy; the roles of human- and machine-based judgment; and the patient-oncologist relationship.3-5 Relative to other parts of medicine, the implications of oncology AI are outsized, and some are idiosyncratic. Oncology AI tools apply to not one but two genomes (germline and somatic); can greatly complicate the existing weight of bias, discrimination, and structural racism in cancer care; and can subtly undermine patient and physician autonomy, leading to cancer care that is algorithmic rather than patient-centered. These diverse concerns, in the context of unreserved enthusiasm for AI, challenge a future where oncology AI is both widely implemented and ethically acceptable. We propose that adapting a process-focused approach for deploying AI in cancer care, such as the accountability for reasonableness framework (A4R),6 can address these concerns and realize a future where oncology AI is ethically deployed.

Support and Skepticism of Oncology AI

Public support for implementing AI in cancer care is broad but may be overenthusiastic. This can be seen in lay press articles such as “AI Took a Test to Detect Lung Cancer. It Got an A” in The New York Times7 and studies demonstrating that a cancer survival prediction tool is among the most anticipated uses of health care AI.8 Patients have high levels of interest and trust in using AI to detect breast9 and skin cancer.2 Oncologists' support for AI, however, appears less robust. Although a Japanese survey found favorable oncologist perceptions of AI's clinical significance,1 US-based clinicians are unconvinced,3 with two thirds reporting that AI will have limited or no impact in the coming years.10 Skepticism may vary by the technology's proximity to clinical decisions: a study of UK physicians found that AI-based imaging diagnostics were acceptable to almost twice as many respondents as AI-based clinical management.11 Suspicions also arise when oncologists recall past enthusiasm for promising treatments12 that have yet to deliver (eg, cancer vaccination)13 or led to adverse outcomes (eg, stem-cell transplantation for breast cancer).14

Positive results from AI tools have been matched by concerning outcomes, such as data from a study assessing cervical cancer treatment recommendation concordance between oncologists and IBM's Watson for Oncology, a clinical decision-support system.15 Among 300 Chinese patients, 27% of recommendations were discordant, with most disagreements stemming from patient preference. This highlights a central issue in AI development: as models are trained to maximize specific outcomes, such as overall survival, they may disregard patients' preferences and, as a result, patients' autonomy. Not every patient seeks the same goal.16,17

Ethical Concerns for Oncology AI

Concerns regarding autonomy in cancer care are inter-related with equity and privacy. All three stem from the increasing divide between patients, how their data are used for developing AI tools, and how tools are reapplied during their care. At present, the lack of defined processes for evaluating and disclosing how a tool's data set represents a given cancer population—and what extrapolation is necessary for deriving an outcome or applying it—can make seemingly small biases lead to discrimination during care delivery.4,18 Given the many inequities of oncology care, there is reason to believe that prejudicial algorithms may exacerbate them.17,19,20 Indeed, models built on biased data cannot predict accurately,5,21 but an environment of hype will engender such models' use.22 Alternatively, if the oncology community develops standards and processes for AI's ethical development and monitoring, it can help remediate care inequities rather than extend them.5,17,23

The ability to create cancer care AI that minimizes bias while maximizing privacy and autonomy rests upon whose data are intentionally included or excluded, when patients consent to data use, and when that use is disclosed. Data selection presents situations where algorithms maximize an outcome beneficial to the majority but not for minoritized groups.5,24 This is especially important for a field where there are two inter-related genomes, the germline and somatic, and non-Hispanic Whites are over-represented in oncology biobanks.25 These worries are compounded for AI tools used outside clinical interactions, where patients (and sometimes oncologists) may be less likely to recognize care biases or autonomy infringements. Examples include radiology, pathology, and clinical pathway decision-support tools, where few patients are aware of their existence or impact.26
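One way to make the question of data set representativeness concrete is to compare each group's share of a development data set with its share of the population the tool is meant to serve. The following is a minimal sketch under stated assumptions: the function name `representation_ratios`, the group labels, and all numbers are hypothetical and illustrative, and a simple ratio is only one of many possible measures.

```python
def representation_ratios(dataset_counts, population_shares):
    """Ratio of each group's share of the data set to its share of the target population.

    A ratio above 1 suggests over-representation; below 1, under-representation.
    """
    total = sum(dataset_counts.values())
    ratios = {}
    for group, share in population_shares.items():
        dataset_share = dataset_counts.get(group, 0) / total
        ratios[group] = dataset_share / share
    return ratios


# Illustrative numbers only; they are not drawn from any real biobank.
dataset_counts = {"group_a": 80, "group_b": 20}
population_shares = {"group_a": 0.6, "group_b": 0.4}
ratios = representation_ratios(dataset_counts, population_shares)
```

In this hypothetical case, `group_a` is over-represented and `group_b` under-represented relative to the target population. Deciding what ratio is acceptable remains a judgment for stakeholders, not for the code.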

Beyond the bias of data sets, ethical concerns regarding machine-based judgment center on the trustworthiness of black box AI predictions that cannot be entirely understood by researchers, much less oncologists or patients. This issue is one of inexplicability, where stakeholders cannot evaluate how AI tools reach a prediction, such as whether a biopsy is cancerous.2,18,27-29 Some developers have recognized this issue, including post hoc interpretable models that attempt to illuminate their AI's black box.18 Nonetheless, such attempts are approximations, and this lack of intrinsic interpretability may explain some of the mistrust oncologists have in AI-based predictions. Beneath the hype, these concerns have been insufficiently addressed through an ethical framework.

A4R-OAI: An Ethical Process Framework for Oncology AI

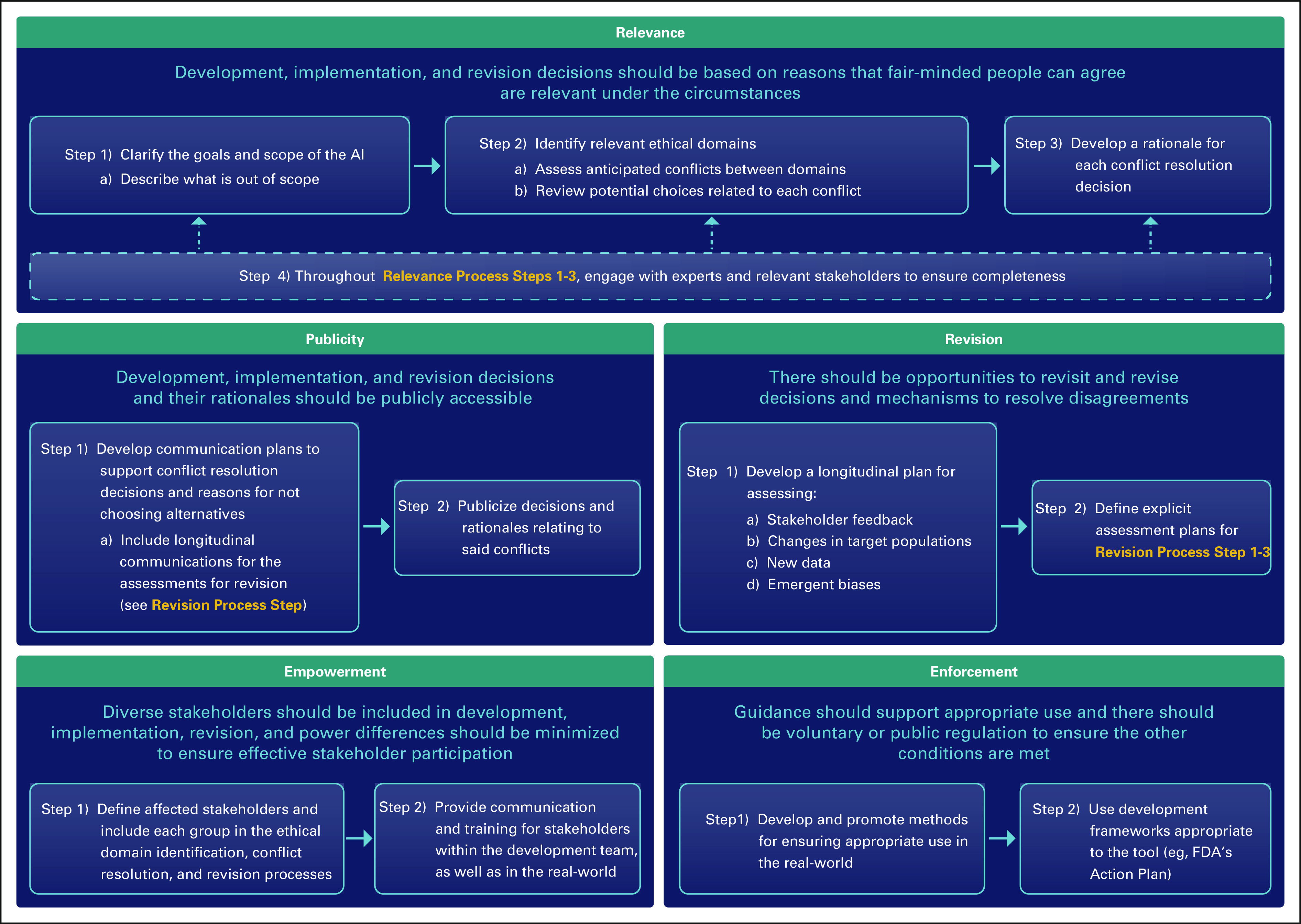

Process-focused ethical decision frameworks are useful structures for addressing challenging issues in biomedicine when it is difficult to reach agreement regarding acceptable outcomes. They are frequently used for priority-setting during scarce resource allocation—such as chemotherapy shortages30—where there are competing ethically acceptable outcomes such as treating the youngest or sickest first. The most widely used process framework is A4R,6 which outlines five principles to establish the legitimacy of decisions for stakeholders. They are (1) relevance: decisions should be based on reasons that fair-minded people can agree are relevant under the circumstances; (2) publicity: decisions and their rationales should be publicly accessible; (3) revision: there should be opportunities to revisit and revise decisions and mechanisms to resolve disagreements; (4) empowerment: power differences should be minimized to ensure effective stakeholder participation; and (5) enforcement: there should be voluntary or public regulation to ensure the other conditions are met.

An A4R-based framework for oncology AI is especially useful now, when AI implementation is beginning and ethical domains for the use of AI in oncology have been outlined. A widely cited outline from Australia and New Zealand establishes ethical AI domains as safety, privacy and protection of data, avoidance of bias, transparency and explainability, application of human values, decision making on diagnosis and treatment, teamwork, responsibility for decisions made, and governance.4 An A4R-based approach defines how to apply these domains in real-world oncology practice, recognizing that some will need to be prioritized at the expense of others, and there will be disagreements over which should take precedence.

For instance, most agree that AI tools should be transparent, representative of, and proven effective within their target population4,17,18; however, at what point does transparency encroach too much on privacy and data protection, what constitutes sufficient representativeness, and where should the balance between representativeness and extrapolation lie? The balancing required for developing, validating, and implementing AI tools that are ethically acceptable to stakeholders necessitates an implementation structure. Adapting A4R to Oncology AI (A4R-OAI; Fig 1)31 leverages a broadly acceptable approach for introducing individual AI tools and addressing multiple ethical domains.

FIG 1.

A4R-OAI, an oncology AI-adapted accountability for reasonableness process framework. AI, artificial intelligence; FDA, US Food and Drug Administration.

The A4R principle of Relevance in AI requires deciding on the proposed scope of the tool being developed, how each relevant ethical domain applies, which domains may conflict, and which stakeholder groups should be engaged. Relevance implicitly addresses patient and oncologist AI skepticism by including them in the development process, thereby promoting the patient-oncologist relationship and keeping respect for autonomy at the center of clinical decision making.5,16,17 Patient autonomy is best respected in AI when individual values are built into the decision-making process.17,20 Relevance upholds this strategy, which McDougall has termed value-flexible design.16 By ensuring that respect for patients and their values remains at the center of care, remediating oncology care disparities through AI may also be possible.4,16,17

Publicity addresses multiple concerns over transparency in AI: data set accessibility, how data are used in development, and how tools are deployed and monitored. The concrete processes of Publicity in A4R consist of developing formal communications plans regarding the who, what, where, when, and why of decisions, and which communication methods are most appropriate. There is ongoing debate about whether transparency can truly exist for predictions that are unexplainable, and to what extent inexplicability, or computational reliabilism,32 is acceptable. Publicity in oncology AI must balance explicability and reliabilism such that both are considered during development and subject to public acceptance during implementation.

A middle ground that may surface, and is balanced across multiple ethical domains, is defining communication strategies specific to each stakeholder group (eg, data scientists, oncologists, and patients). Understanding that clinical oncologists may not have the expertise to explain an AI tool, one team defined and explained the metrics used in their tool's algorithms.33 Another developed a post hoc interpretation model where the algorithm computes an outcome, an explanation for how it arrived at that outcome for different stakeholder types, and a confidence interval. This respects transparency and the inherent imperfection of prediction while inviting stakeholders to understand some aspects of the decision process.29 On the larger scale, publicity fosters connections to dissemination and implementation science and promotes the role of data scientists in the communication of comprehensible AI to oncologists and patients.

Revision addresses feedback received from publicity while guarding against concerns over emergent biases and new data that may make AI tools discriminatory or irrelevant over time. This portion of the process framework establishes a formal plan for longitudinal data monitoring and decision review to ensure high quality in both how and where the AI is implemented.18,20 Revision also allows for a rebalancing of priorities if, for instance, acceptance of computational reliabilism changes over time, or new data prompt a potential expansion of the tool into a new population.
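The longitudinal monitoring described under Revision could, in one simple form, track a tool's performance within each patient subgroup and flag groups whose performance has drifted from an agreed baseline. The sketch below is illustrative only: the function names, the use of accuracy as the metric, the 0.05 tolerance, and the sample records are all assumptions, and a real monitoring plan would be defined by the stakeholders involved.

```python
from collections import defaultdict


def subgroup_accuracy(records):
    """Compute accuracy per subgroup from (subgroup, predicted, observed) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for subgroup, predicted, observed in records:
        total[subgroup] += 1
        correct[subgroup] += int(predicted == observed)
    return {group: correct[group] / total[group] for group in total}


def flag_for_review(baseline, current, tolerance=0.05):
    """Return subgroups that are new or whose accuracy fell more than `tolerance` below baseline."""
    flagged = []
    for group, accuracy in current.items():
        if group not in baseline or baseline[group] - accuracy > tolerance:
            flagged.append(group)
    return sorted(flagged)


# Hypothetical baseline agreed at deployment and hypothetical follow-up records.
baseline = {"group_a": 0.90, "group_b": 0.88}
records = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 0),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 1), ("group_b", 0, 1),
]
current = subgroup_accuracy(records)
flagged = flag_for_review(baseline, current)
```

Here only `group_b` is flagged, which would trigger the decision review that Revision calls for rather than any automatic change to the tool.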

Empowerment facilitates effective participation and training of stakeholders during the development and implementation process. Pertinent to all ethical domains and other A4R principles, empowerment ensures minority opinions are included, publicized, and their concerns monitored for, even if final decisions in development or implementation do not reflect their viewpoints. Empowerment is not restricted to those involved in development. It includes empowering oncologists and patients who will use the tool by ensuring accessible communication tools, training for users, and procedures for voicing concerns about the tool after initial implementation. Finally, empowerment involves explicit characterization of power imbalances among the stakeholders involved in tool development, as well as consideration of how the tool may influence power dynamics in the real world. Technologic advancements can accentuate power imbalances between groups,34 and empowerment requires prospective consideration and planning to minimize this effect.

Finally, enforcement in the context of oncology AI development and implementation centers on providing guidance on appropriate use of the AI, supporting patients and oncologists, and ensuring deployment of the above processes. Guidance may include asking oncologists to input information regarding the clinical situation and displaying a warning if it differs from the situations in which the tool was developed; supports include educational materials for each stakeholder group. Enforceable policies on AI development are nascent, such as the Food and Drug Administration Action Plan,35 but they represent promising steps that bolster the other A4R principles.
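The guidance mechanism described above, in which a tool warns when a clinical situation differs from those in which it was developed, might look something like the following minimal sketch. The `DevelopmentCohort` fields, the `applicability_warnings` function, and the example values are hypothetical; an actual tool would need clinically validated criteria chosen with stakeholder input.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DevelopmentCohort:
    """Hypothetical summary of the population in which a tool was developed."""
    cancer_types: frozenset
    min_age: int
    max_age: int
    stages: frozenset


def applicability_warnings(cohort, cancer_type, age, stage):
    """Return human-readable warnings when a case falls outside the development cohort."""
    warnings = []
    if cancer_type not in cohort.cancer_types:
        warnings.append(f"Cancer type '{cancer_type}' was not represented in development data.")
    if not (cohort.min_age <= age <= cohort.max_age):
        warnings.append(f"Age {age} is outside the development range {cohort.min_age}-{cohort.max_age}.")
    if stage not in cohort.stages:
        warnings.append(f"Stage '{stage}' was not represented in development data.")
    return warnings


# Illustrative cohort and cases only.
cohort = DevelopmentCohort(frozenset({"cervical"}), 18, 75, frozenset({"I", "II", "III"}))
in_scope = applicability_warnings(cohort, "cervical", 52, "II")
out_of_scope = applicability_warnings(cohort, "cervical", 82, "IV")
```

The first case yields no warnings, whereas the second yields two (age and stage). The sketch returns warnings rather than blocking use, which leaves the final judgment with the oncologist and patient.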

There is increasing support for the implementation of AI into oncology. Although there are profound ethical considerations, particularly with respect to bias, inexplicability, and autonomy, A4R-OAI provides a process by which to address these challenges. Its flexibility allows for evaluation of numerous ethical domains and their greater or lesser applicability to individual AI tools. Its design fosters norms of inclusion, diversity, and accountability that cancer care continues to strive toward. Moreover, it promotes the necessary expansion of cancer health communication strategies to understand AI technology and assists in maintaining the patient-oncologist relationship, specifically leveraging the role of technology creators. If this occurs, AI may be implemented into oncology both ethically and with stakeholder support.

SUPPORT

Supported by the McGraw/Patterson Fund to A.H. and G.A.A.; NCI R00CA245899 to K.L.K.; Doris Duke Charitable Foundation 2020080 to K.L.K.

AUTHOR CONTRIBUTIONS

Conception and design: All authors

Administrative support: Gregory A. Abel

Data analysis and interpretation: All authors

Manuscript writing: All authors

Final approval of manuscript: All authors

Accountable for all aspects of the work: All authors

AUTHORS' DISCLOSURES OF POTENTIAL CONFLICTS OF INTEREST

A Process Framework for Ethically Deploying Artificial Intelligence in Oncology

The following represents disclosure information provided by authors of this manuscript. All relationships are considered compensated unless otherwise noted. Relationships are self-held unless noted. I = Immediate Family Member, Inst = My Institution. Relationships may not relate to the subject matter of this manuscript. For more information about ASCO's conflict of interest policy, please refer to www.asco.org/rwc or ascopubs.org/jco/authors/author-center.

Open Payments is a public database containing information reported by companies about payments made to US-licensed physicians (Open Payments).

Kenneth L. Kehl

Employment: Change Healthcare (I)

Honoraria: Roche, IBM (Inst)

Jonathan M. Marron

Honoraria: Genzyme

Consulting or Advisory Role: Partner Therapeutics

Open Payments Link: https://openpaymentsdata.cms.gov/physician/802634/summary

Eliezer M. Van Allen

Stock and Other Ownership Interests: Syapse, Tango Therapeutics, Genome Medical, Microsoft, ervaxx, Monte Rosa Therapeutics, Manifold Bio

Consulting or Advisory Role: Syapse, Roche, Third Rock Ventures, Takeda, Novartis, Genome Medical, InVitae, Illumina, Tango Therapeutics, ervaxx, Janssen, Monte Rosa Therapeutics, Manifold Bio, Genomic Life

Speakers' Bureau: Illumina

Research Funding: Bristol Myers Squibb, Novartis, Sanofi (Inst)

Patents, Royalties, Other Intellectual Property: Patent on discovery of retained intron as source of cancer neoantigens (Inst), Patent on discovery of chromatin regulators as biomarkers of response to cancer immunotherapy (Inst), Patent on clinical interpretation algorithms using cancer molecular data (Inst)

Travel, Accommodations, Expenses: Roche/Genentech

No other potential conflicts of interest were reported.

REFERENCES

1. Oh S, Kim JH, Choi SW, et al: Physician confidence in artificial intelligence: An online mobile survey. J Med Internet Res 21:e12422, 2019

2. Nelson CA, Perez-Chada LM, Creadore A, et al: Patient perspectives on the use of artificial intelligence for skin cancer screening: A qualitative study. JAMA Dermatol 156:501-512, 2020

3. McCradden MD, Baba A, Saha A, et al: Ethical concerns around use of artificial intelligence in health care research from the perspective of patients with meningioma, caregivers and health care providers: A qualitative study. CMAJ Open 8:E90-E95, 2020

4. Kenny LM, Nevin M, Fitzpatrick K: Ethics and standards in the use of artificial intelligence in medicine on behalf of the Royal Australian and New Zealand College of Radiologists. J Med Imaging Radiat Oncol 65:486-494, 2021

5. Char DS, Shah NH, Magnus D: Implementing machine learning in health care—Addressing ethical challenges. N Engl J Med 378:981-983, 2018

6. Daniels N, Sabin JE: Accountability for reasonableness: An update. BMJ 337:a1850, 2008

7. Grady D: A.I. Took a Test to Detect Lung Cancer. It Got an A. New York, NY, The New York Times, 2019, pp 17

8. Antes AL, Burrous S, Sisk BA, et al: Exploring perceptions of healthcare technologies enabled by artificial intelligence: An online, scenario-based survey. BMC Med Inform Decis Mak 21:221, 2021

9. Jonmarker O, Strand F, Brandberg Y, et al: The future of breast cancer screening: What do participants in a breast cancer screening program think about automation using artificial intelligence? Acta Radiol Open 8:2058460119880315, 2019

10. Cardinal Health Specialty Solutions, Oncology Insights: Views on the impact of emerging technologies from specialty physicians nationwide, 2019. https://www.cardinalhealth.com/content/dam/corp/web/documents/publication/CardinalHealth-Oncology-Insights-June-2019.pdf

11. Layard Horsfall H, Palmisciano P, Khan DZ, et al: Attitudes of the surgical team toward artificial intelligence in neurosurgery: International 2-stage cross-sectional survey. World Neurosurg 146:e724-e730, 2021

12. Emanuel EJ, Wachter RM: Artificial intelligence in health care. JAMA 321:2281-2282, 2019

13. Misguided cancer goal. Nature 491:637-637, 2012

14. Mello MM, Brennan TA: The controversy over high-dose chemotherapy with autologous bone marrow transplant for breast cancer. Health Aff (Millwood) 20:101-117, 2001

15. Zou FW, Tang YF, Liu CY, et al: Concordance study between IBM Watson for Oncology and real clinical practice for cervical cancer patients in China: A retrospective analysis. Front Genet 11:200, 2020

16. McDougall RJ: Computer knows best? The need for value-flexibility in medical AI. J Med Ethics 45:156-160, 2019

17. Lindvall C, Cassel CK, Pantilat SZ, et al: Ethical considerations in the use of AI mortality predictions in the care of people with serious illness. Health Affairs Forefront, September 16, 2020. 10.1377/forefront.20200911.401376

18. Wang F, Kaushal R, Khullar D: Should health care demand interpretable Artificial intelligence or accept “black box” medicine? Ann Intern Med 172:59-60, 2020

19. Kiener M: Artificial intelligence in medicine and the disclosure of risks. AI Soc 36:705-713, 2021

20. DeCamp M, Lindvall C: Latent bias and the implementation of artificial intelligence in medicine. J Am Med Inform Assoc 27:2020-2023, 2020

21. Gijsberts CM, Groenewegen KA, Hoefer IE, et al: Race/ethnic differences in the associations of the Framingham risk factors with carotid IMT and cardiovascular events. PLoS One 10:e0132321, 2015

22. London AJ: Artificial intelligence in medicine: Overcoming or recapitulating structural challenges to improving patient care? Cell Rep Med 3:100622, 2022

23. Di Nucci E: Should we be afraid of medical AI? J Med Ethics 45:556-558, 2019

24. McDougall RJ: No we shouldn't be afraid of medical AI; it involves risks and opportunities. J Med Ethics 45:559, 2019

25. Hantel A, Kohlschmidt J, Eisfeld AK, et al: Inequities in alliance acute leukemia clinical trial and biobank participation: Defining targets for intervention. J Clin Oncol 10.1200/JCO.22.00307 [epub ahead of print on June 13, 2022]

26. Wind A, van der Linden C, Hartman E, et al: Patient involvement in clinical pathway development, implementation and evaluation—A scoping review of international literature. Patient Educ Couns 105:1441-1448, 2021

27. Anderson M, Anderson SL: How should AI Be developed, validated, and implemented in patient care? AMA J Ethics 21:E125-E130, 2019

28. Nagy M, Sisk B: How will artificial intelligence affect patient-clinician relationships? AMA J Ethics 22:E395-E400, 2020

29. Poon AIF, Sung JJY: Opening the black box of AI-Medicine. J Gastroenterol Hepatol 36:581-584, 2021

30. Hantel A: A protocol and ethical framework for the distribution of rationed chemotherapy. J Clin Ethics 25:102-115, 2014

31. Joint Centre for Bioethics, University of Toronto. Ethical decision-making about scarce resources: A guide for managers and governors, 2012. https://jcb.utoronto.ca/wp-content/uploads/2021/03/A4R_Implementation_Guide2019.pdf

32. Durán JM, Jongsma KR: Who is afraid of black box algorithms? On the epistemological and ethical basis of trust in medical AI. J Med Ethics 47:329-335, 2021

33. Handelman GS, Kok HK, Chandra RV, et al: Peering into the black box of artificial intelligence: Evaluation metrics of machine learning methods. AJR Am J Roentgenol 212:38-43, 2019

34. Weiss D, Rydland HT, Oversveen E, et al: Innovative technologies and social inequalities in health: A scoping review of the literature. PLoS One 13:e0195447, 2018

35. US Food and Drug Administration: Artificial intelligence and machine learning (AI/ML) software as a medical device action plan, 2021. https://www.fda.gov/medical-devices/software-medical-device-samd/artificial-intelligence-and-machine-learning-software-medical-device