Abstract

This panel study in Austria in 2020 (NW1 = 912, NW2 = 511) explores distinct audience segments regarding beliefs in misinformation, conspiracy, and evidence statements on COVID-19. I find that citizens fall into seven segments, three of which endorse unsupported claims: The threat skeptics selectively accept misinformation and evidence; the approvers tend to accept all types of information; and the misinformed believe in misinformation and conspiracy statements while rejecting evidence. Further analyses suggest that the misinformed increasingly sought out COVID-19 threat-negating information from scientific sources, while continuing to attend to threat-confirming information overall. These patterns have practical implications for correcting misperceptions.

Keywords: disinformation, information-seeking, mass media, misinformation, quantitative analysis, public understanding of science, survey methods

Rarely has the daily life of citizens been as dependent on emerging scientific evidence as at the onset of the COVID-19 pandemic. The majority of citizens heeded scientists’ converging consensus to take the public health threat of COVID-19 seriously and to counteract it with social distancing, masks, and vaccinations (Druckman et al., 2021). Simultaneously, citizens were confronted with narratives that contradicted the best available evidence (Brennen et al., 2020; Enders et al., 2020). Believing in unsubstantiated claims around COVID-19 can be consequential, as it might dissuade citizens from following health measures that protect the well-being and aid the economic recovery of societies (Druckman et al., 2021). Scholars have warned that once individuals form misperceptions, they become resistant to attempts to correct their beliefs (Kuklinski et al., 1998) or may even be further polarized by such attempts, which might increase animosities between societal groups (Dan & Dixon, 2021).

Yet, the so-called “misinformed” share of citizens might be marked by substantial heterogeneity. While some hold misperceptions because they believe an alleged expert who shares pseudo-scientific claims (Stecula et al., 2022), others might have a general tendency to endorse conspiracy theories suggesting that powerful actors secretly steer events (Uscinski et al., 2020). Believing in one type of claim does not necessarily lead to accepting the other (Enders et al., 2020). Furthermore, holding misperceptions might not automatically result in rejecting evidence. Instead, some individuals might be selective about both supported and unsupported facts or even find both types of claims equally plausible (Agley & Xiao, 2021). Thus, unidimensional indices might fail to reveal the diversity within those holding misperceptions.

Taking this heterogeneity as a point of departure, the main goals of this study are twofold: First, using latent profile analysis (LPA), I empirically explore constellations of beliefs in misinformation, conspiracy claims, and evidence on COVID-19. LPA infers homogeneous, latent subpopulations from individuals’ response patterns on a certain set of indicators (see, for example, Masyn, 2013). In this study, LPA is used to categorize respondents into different groups—also called profiles—that share similar beliefs based on their acceptance or rejection of evidence, misinformation, or conspiracy statements on COVID-19. This allows for a more nuanced investigation into the diversity of citizens’ beliefs regarding COVID-19.

Second, I aim to generate insights into whether the most extreme segment (those who endorse misinformation and reject evidence) is unique in how its information-seeking patterns develop. Recent studies have revealed that COVID-19 misperceptions are linked to media diets that contain fewer news media or public broadcasters (Bridgman et al., 2020; Heiss et al., 2021; Lee et al., 2022), more partisan media sources (Romer & Jamieson, 2020), and more social media and alternative online sources (Agley & Xiao, 2021; Eberl & Lebernegg, 2022; but also see Lee et al., 2022). However, going beyond platform-specific findings, we know less about which exact content misinformed individuals are seeking out. In line with the concept of selective exposure, I differentiate between seeking information that confirms and that negates the threat of COVID-19. In doing so, I build on and go beyond prior studies that have examined information-seeking behaviors for different audience segments in other contexts (Detenber et al., 2016; M. S. Schäfer et al., 2022). Specifically, this study contributes to existing research by testing not only where the misinformed segment searches for information, but also which type of information they seek out most frequently. Learning about information-seeking behavior is highly relevant since selective information-seeking and avoidance can further polarize audiences, affect compliance with preventive health measures (Zheng et al., 2022), and expose them to new unsupported claims. Furthermore, knowing which information sources different audiences turn to is an important first step for designing audience-specific interventions to counter misperceptions.

To this end, I analyze data from a two-wave panel study conducted in August and October 2020 in Austria. The results call for a more fine-grained investigation into the different groups in the population that hold misperceptions.

Misperceptions

Changing recommendations on COVID-19 health-protective measures have illustrated that the generation of knowledge is a dynamic process, leading to constant changes in what constitutes misperceptions and accurate perceptions (Kuklinski et al., 1998; Vraga & Bode, 2020). Yet, in many areas, evidence coming from scientific experts on COVID-19 consolidated over time, supporting some beliefs more than others. Early on in the pandemic, researchers established that COVID-19 poses a threat to health systems and is transmissible via the air (Cucinotta & Vanelli, 2020). However, not all citizens placed trust in these findings (Bridgman et al., 2020; Roozenbeek et al., 2020).

A sizable body of scientific literature has dealt with the discrepancy between public beliefs and accessible evidence. Scholars have leveraged theories on information processing (Pennycook & Rand, 2019), motivations (van Stekelenburg et al., 2020), and the rapid spread of low-quality information (Vosoughi et al., 2018) to shed light on the emergence of misperceptions. Following the definition of Nyhan and Reifler (2010), I define misperceptions “as cases in which people’s beliefs about factual matters are not supported by clear evidence and expert opinion” (p. 305). Due to the dynamic nature of evidence, I consider individuals’ misalignment with the contemporaneous expert consensus as a hallmark of misperceptions (Vraga & Bode, 2020). Specifically, I evaluate individuals’ beliefs regarding COVID-19 against the evidence generated by scientists in the field, or the recommendations issued by regulatory bodies such as the World Health Organization (WHO), at the time of the study.

Belief in Misinformation, Conspiracy Theories, and Evidence

Misperceptions might manifest in different ways. Citizens might hold misperceptions because they believe verifiably false claims that are disseminated intentionally (i.e., disinformation) or unintentionally (i.e., misinformation) in their information environment (Nyhan, 2020). During the COVID-19 pandemic, such claims commonly concerned public policies, false information about the spread of the virus, or the characteristics of the infection (Brennen et al., 2020). Thus, misperceptions can form when individuals accept such false claims (hereafter referred to as “misinformation” as an umbrella term) as true.

Furthermore, misperceptions can take the form of unsupported beliefs that are neither verifiable nor falsifiable. Accordingly, conspiratorial beliefs fall into the category of misperceptions (Nyhan, 2020). Conspiracy theories are described as “unique epistemological accounts that refute official accounts and instead propose alternative explanations of events or practices by referring to individuals or groups acting in secret” (Mahl et al., 2022, p. 17). This element of secrecy makes it notoriously difficult to prove conspiracy claims wrong. To give an example, a conspiracy theorist might find it unsurprising that no evidence exists for the fabrication of COVID-19 as a bio-weapon, since they might expect secret groups to cover up the traces of their actions.

Recent studies suggest that misinformation beliefs and conspiracy beliefs should not be treated interchangeably. Enders et al. (2020) show that belief in misinformation is not automatically linked to greater susceptibility to conspiracy theories. Specific parts of the population are predisposed toward adopting conspiracy beliefs, due to several “epistemic, existential, and social motives” (Douglas & Sutton, 2017). Vice versa, believing in a conspiracy theory is not a necessary precondition for being open to misinformation. For instance, citizens might adopt misinformation because it is propagated by elite sources. Scholars found that in discourses in the U.S., political leaders and partisan news outlets spread conflicting messages about the threat of COVID-19 and the efficacy of countermeasures (Bolsen & Palm, 2022; P. S. Hart et al., 2020). Against this backdrop, some individuals have adopted specific false beliefs because they were disseminated by politicians they support and trust (Druckman et al., 2021; Enders et al., 2020). Furthermore, according to the theory of the cultural cognition of risk, individuals’ acceptance of information is determined by how well it aligns with their pre-existing worldview and values (Kahan et al., 2011). In line with this reasoning, previous studies support the notion that COVID-19 beliefs, attitudes, and behaviors are linked to individuals’ perceptions, for instance, their interpersonal trust (Siegrist & Bearth, 2021). Thus, individuals might selectively endorse some conspiracy or misinformation statements, without showing a general susceptibility to all false claims on COVID-19.

Moreover, we lack knowledge of whether those who endorse misinformation and conspiracy theories fully reject evidence on COVID-19. Some authors hold the view that citizens’ belief in false or unsupported information “leads them to resist accepting and using the correct facts even if these are made available” (Kuklinski et al., 1998, p. 146). There is tentative evidence that this relationship, by and large, also holds true in the context of COVID-19. In their cross-sectional analysis, Druckman et al. (2021) found that groups that accept COVID-19 misinformation also tend to reject accurate information. Alternatively, individuals may adopt a critical stance against mainstream information while being attracted to various alternative narratives, including misinformation and conspiracy claims. Newman et al. (2022) found evidence of this so-called “distrust mindset” among individuals who rejected COVID-19 vaccines. Those holding a distrust mindset demonstrated a smaller gap in discernment between beliefs in accurate and inaccurate information. Consequently, their responses gravitated more strongly toward the mid-categories on the belief scales for both accurate and inaccurate statements. Furthermore, Agley and Xiao (2021) found that certain parts of the population find both inaccurate statements and an official explanation of the emergence of COVID-19 equally believable, suggesting a co-existence of contradictory beliefs in their minds. These inconsistencies show that more research is needed to shed light on citizens’ beliefs in misinformation, conspiracy theories, and evidence, and how those beliefs relate to each other.

Profiles of COVID-19 Beliefs

In light of differences in individuals’ COVID-19 beliefs, the question arises whether these variations follow systematic patterns. Segmentation analyses allow researchers to “divide the general public into relatively homogeneous, mutually exclusive subgroupings” (Hine et al., 2014, p. 442). Regarding beliefs, audience segmentation is a useful tool to empirically identify interpretative communities on certain issues (Hine et al., 2014; Metag & Schäfer, 2018). Such homogeneous groups are often referred to as audience segments or profiles. LPA is one of the most common approaches to quantitatively investigate different subpopulations (Metag & Schäfer, 2018).

In this study, I am specifically interested in groups that form distinct beliefs concerning misinformation, conspiracy theories, and evidence for three main reasons: First, by contrasting beliefs in misinformation and conspiracy theories with beliefs in evidence, I investigate the outer ends of a belief spectrum ranging from clearly unsubstantiated to substantiated beliefs. Because these beliefs lie at the extremes, their endorsement is also especially influential in affecting subsequent behaviors, such as the adoption of health-protective measures (Merkley & Loewen, 2021; Roozenbeek et al., 2020; Stecula & Pickup, 2021). Second, these beliefs can be clearly conceptually distinguished by (a) their (mis-)alignment with current evidence (i.e., factuality, see Kapantai et al., 2021) and (b) the presence or absence of an intentional act led by secret groups (Mahl et al., 2022). Such “theoretically related, yet distinct” (Spurk et al., 2020, p. 4) concepts are an important precondition for using LPA. Third, as outlined above, there is tentative evidence that these beliefs can form different constellations (see Agley & Xiao, 2021; Enders et al., 2020).

To shed light on different empirical constellations of beliefs about COVID-19, I pose the following research question:

Research Question 1 (RQ1): Which latent profiles of belief in misinformation, belief in conspiracy theories, and belief in evidence statements can be identified?

Information-Seeking Among Citizens Holding Misperceptions

One central question in the study of misperceptions is how individuals can uphold their misperceptions in the face of opposing evidence. In this context, information-seeking can be a critical strategy not only to form new opinions, but also to bolster existing ones (Fransen et al., 2015). By selecting information that supports existing attitudes, or avoiding information that conflicts with one’s views, individuals might be better able to shield their beliefs from unwanted influences (W. Hart et al., 2009). Therefore, information-seeking patterns of misinformed and evidence-resistant citizens might systematically differ from other individuals. Moreover, research suggests that information-seeking can have important downstream effects on individuals’ intentions and behaviors during the COVID-19 pandemic (Zheng et al., 2022).

There is mounting evidence that different sources of information have played a vital role in the formation of misperceptions about COVID-19. In this study, I will focus on the role of legacy media, alternative media, and scientific sources due to their high salience in the COVID-19 debate. Furthermore, it is important to know if misinformed individuals can still be reached by mainstream or elite sources (i.e., legacy media and scientific sources) or if they fully retreat to alternative media environments.

Research so far suggests a negative relationship between COVID-19 misperceptions and legacy media use: The more individuals endorse misinformation or conspiracy beliefs, the less they turn to legacy media as a source of information (Borah et al., 2022; Bridgman et al., 2020; Heiss et al., 2021; Lee et al., 2022). However, there is a high degree of variability in how different outlets report on COVID-19. Findings from the U.S. context, for instance, suggest that conservative media use is linked to holding misperceptions (Borah et al., 2022; Romer & Jamieson, 2020; Stecula & Pickup, 2021). Similarly, in the Austrian context, the commercial TV program Servus TV played an important role in catering to audiences that were critical of the mainstream interpretation of events (Eberl & Lebernegg, 2021).

Next to legacy media, scientists and health agencies directly transmitted up-to-date information on COVID-19 to the public. On the one hand, reliance on scientists and medical professionals as information sources is associated with less acceptance of misinformation claims (Borah et al., 2022). On the other hand, by selectively using scientific evidence, those that endorse misinformation and conspiracy theories can legitimize their claims (Oliveira et al., 2021). For instance, the most extreme endorsers of conspiracy theories more frequently read scientific magazines than other parts of the population (M. S. Schäfer et al., 2022).

When individuals do not see their position reflected in legacy media and elite sources, they might also turn to alternative media. I understand alternative media as media that provide “access to alternative voices, alternative arguments, alternative sets of ‘facts,’ and alternative ways of seeing” (Harcup, 2003, p. 371). Alternative media formats range from websites to groups and pages on social media and TV shows (Stecula et al., 2022). While some alternative media have come under scrutiny for harboring harmful content, such as disinformation, Stecula et al. (2022) provide an example in which alternative media aligned with official recommendations by promoting vaccinations as a safe and effective way to combat COVID-19.

In summary, there are notable variations in the information provided on COVID-19 between and within different types of sources that might affect misperceptions. Therefore, to get a fuller picture of citizens’ information-seeking, we need to understand not only where individuals search for information, but also which information specifically they are searching for within these diverse sources.

Selective Exposure to COVID-19 Threat-Negating Information

Early works in communication theory recognized that individuals’ attention to information might be guided by their pre-existing attitudes. In their study on the effects of political campaigning, Lazarsfeld et al. (1948) found that people “selected political material in accord with their own taste and bias” (p. 80). With the emergence of a high-choice media environment, the paradigm of selective exposure—the “motivated selection of messages matching one’s beliefs” (Stroud, 2014, p. 1)—received renewed attention in communication science (Stroud, 2008). Overall, meta-analytical evidence supports that there is a tendency for individuals to select congenial information over information that challenges prior beliefs (W. Hart et al., 2009). While the effect is primarily studied in regard to political partisanship, it was also replicated for issue-specific information selection (Knobloch-Westerwick & Meng, 2009).

Since misinformation on the threat of COVID-19 and the effectiveness of COVID-19 policies is most common (Brennen et al., 2020), individuals that strongly endorse those misperceptions might also be more likely to further select information that reflects those beliefs. Such information might also serve to counterargue evidence that runs counter to those beliefs. Therefore, I hypothesize that across different sources, misinformed and evidence-resistant individuals are more likely to increasingly seek information that negates the threat of COVID-19:

Hypothesis 1 (H1): Profiles with high levels of belief in misinformation and conspiracy statements and low levels of belief in evidence statements at W1 seek out more COVID-19 threat-negating information in (a) legacy media, (b) scientific sources, and (c) alternative media sources from W1 to W2 as compared to other profiles.

Selective Avoidance of COVID-19 Threat-Confirming Information

While individuals tend to select more belief-congruent information, there is less evidence that they also purposefully avoid belief-challenging information (Garrett, 2009; Merkley & Loewen, 2021). First, news consumption is highly habitual and therefore unlikely to change within a short timeframe (Schnauber & Wolf, 2016). Second, information such as current infection rates or policy changes is useful for individuals, even if they endorse misperceptions. When incongruent information is useful, people are less likely to avoid it even though it might challenge their beliefs (Stroud, 2014).

Nevertheless, studies so far suggest that avoidance of COVID-19 information and news was a common phenomenon early in the pandemic. S. Schäfer et al. (2022) reported that three of four participants in their representative survey study in Austria actively avoided news about the COVID-19 crisis. Their study further suggests that citizens seek to avoid COVID-19 information to shield themselves from news’ negative impacts on their psychological well-being, and also due to low trust in media (S. Schäfer et al., 2022). Tentative results also hint at a link between misperceptions and avoidance. A comparative study found that exposure to misinformation increases avoidance of COVID-19 information across different national contexts (Kim et al., 2020). Furthermore, a longitudinal study from Singapore revealed that when individuals actively avoid the news and are frequently confronted with false claims, they are more likely to endorse COVID-19 misinformation (Tandoc & Kim, 2022). However, it is unclear whether this relationship is reciprocal.

Since it is unclear whether those holding misperceptions are also less likely to seek out information that confirms the threat of COVID-19, I pose a research question:

Research Question 2 (RQ2): Do profiles with high levels of belief in misinformation and conspiracy statements and low levels of belief in evidence statements at W1 seek out less COVID-19 threat-confirming information in (a) legacy media, (b) scientific sources, and (c) alternative media sources from W1 to W2 as compared to other profiles?

Method

Data come from a two-wave panel study conducted between the first and second epidemic waves of the COVID-19 pandemic, in August and October 2020, in Austria. The study was part of a larger survey on misinformation, political communication, and green advertising. The online survey sample provider Dynata recruited participants based on nationally representative quotas for age (MW1 = 45.95, SDW1 = 14.32) and gender (53.0% female) in the first wave of data collection. Although the sample was not representative in terms of education, respondents came from diverse educational backgrounds (low education: 34.3%; intermediate education: 44.4%; higher education: 21.3%). The Institutional Review Board of the Department of Communication at the University of Vienna approved the study (ID: 20200724_018).

Nine hundred ninety respondents participated in the first wave (W1) of data collection between August 10 and 21, 2020. To ensure data quality, respondents taking less than 10 minutes to complete the 25-minute-long survey were deemed invalid cases.1 Only valid cases from W1 (NW1 = 912) were eligible to participate in the second wave (W2), which was fielded between October 19 and October 27, 2020. Five hundred fifteen respondents finished the questionnaire, out of which a final sample of NW2 = 511 could be matched with responses in W1.

Panel attrition (44.0%) was independent of individuals’ information-seeking behaviors in W1. The attrition rate, however, was partly dependent on demographic factors. The share of female respondents was higher among those who completed only W1 (57.0% females) than among those who completed both waves (48.9% females), χ2(1, N = 731) = 7.24, p = .007. Participants who completed both waves were also slightly older (M = 47.45) than those who completed only W1 (M = 44.04), t(910) = −3.60, p < .001, and included a higher share of highly educated respondents (W1 only: 18.2%; both waves: 24.1%), χ2(2, N = 912) = 6.30, p = .043. To minimize potential attrition biases, I use full information maximum likelihood (FIML) estimation.
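The attrition figures above rest on simple arithmetic and standard two-group comparisons. As a rough illustration, the sketch below recomputes the attrition rate from the reported wave sizes and implements a Pearson chi-square statistic for a contingency table, without continuity correction. The helper names `attrition_rate` and `pearson_chi2` are hypothetical, and the 2 × 2 counts in the example are invented for illustration; they are not the study's data.

```python
def attrition_rate(n_first_wave: int, n_retained: int) -> float:
    """Share of first-wave respondents who were not retained in the second wave."""
    return 1.0 - n_retained / n_first_wave


def pearson_chi2(table: list[list[float]]) -> tuple[float, int]:
    """Pearson chi-square statistic and degrees of freedom for an r x c table.

    No continuity (Yates) correction is applied.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of rows and columns.
            expected = row_totals[i] * col_totals[j] / grand_total
            stat += (observed - expected) ** 2 / expected
    dof = (len(row_totals) - 1) * (len(col_totals) - 1)
    return stat, dof


# Reported wave sizes: 912 valid W1 cases, 511 matched W2 cases.
print(f"Attrition: {attrition_rate(912, 511):.1%}")  # Attrition: 44.0%

# Hypothetical 2 x 2 table (rows: W1 only / both waves; columns: female / male).
stat, dof = pearson_chi2([[10, 20], [20, 10]])
print(f"chi2({dof}) = {stat:.2f}")  # chi2(1) = 6.67
```

For 2 × 2 tables, `scipy.stats.chi2_contingency` applies Yates' continuity correction by default (`correction=True`), so its statistic would be smaller than this uncorrected value.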

Measures

All independent variables and controls were measured in Wave 1, except for political ideology. If not indicated otherwise, items were measured on scales from 1—lowest level to 7—highest level. All items, instructions, and confirmatory factor analysis (CFA) factor loadings are reported in Supplemental Appendix A, Table A1.

Diagnostic Items for Belief Segments

To measure belief in misinformation, conspiracy, and evidence statements, I selected a total of 14 statements (see Table 1). Respondents rated the perceived veracity of these statements on a scale from 1—completely false to 7—completely true. I selected statements that might be impactful based on their high circulation and for which contemporaneous expert consensus could be identified (Vraga & Bode, 2020). For more information on the contemporaneous expert consensus and circulation, see Supplemental Appendix B, Table B1.

Table 1.

Item Wordings of Latent Profile Indicators.

| Item | M | SD |
|---|---|---|
| Misinformation statements | | |
| People have been infected with COVID-19 long before 2019 [mis1] | 3.64 | 1.95 |
| Only about 0.1 percent of infected people die from COVID-19 [mis2] | 3.99 | 1.91 |
| Individuals without symptoms do not spread COVID-19 [mis3] | 2.32 | 1.92 |
| RNA vaccines being developed against the novel coronavirus alter the human genome in the long term [mis4] | 3.35 | 1.66 |
| Wearing a mask causes one to inhale harmful amounts of CO2 [mis5] | 3.34 | 2.11 |
| Among those carrying the coronavirus, less than 1% experienced the disease [mis6] | 3.44 | 1.77 |
| Evidence statements | | |
| The substance hydroxychloroquine showed no positive influence on the course of infections with COVID-19 in clinical studies [evi1] | 4.00 | 1.64 |
| The World Health Organization (WHO) recommends the use of face masks if physical distance cannot be maintained [evi2] | 5.35 | 1.95 |
| COVID-19 can damage other organs besides the lungs, such as the kidney, liver, and pancreas [evi3] | 4.87 | 1.81 |
| In Austria, there was slight excess mortality during the first COVID-19 wave. That is, more people died than would have been expected based on data from previous years [evi4] | 4.04 | 2.01 |
| It is possible to use the Stopp Corona-app provided by the Red Cross without disclosing any personal data [evi5] | 3.74 | 2.11 |
| Conspiracy statements | | |
| 5G technology is being used deliberately to spread the coronavirus [con1] | 2.04 | 1.68 |
| The novel coronavirus was intentionally created in a lab [con2] | 3.14 | 1.99 |
| Bill Gates wants to use vaccines to reduce population growth [con3] | 2.39 | 1.85 |

Note. N = 912.

Dependent Variables

To measure threat-confirming and threat-negating information-seeking, a separate index was constructed for each source (legacy media, alternative media, and scientific sources). A CFA using robust maximum likelihood estimation supported the distinctness of the constructs, χ2(510) = 679.05, p < .001, comparative fit index (CFI) = .99, Tucker-Lewis index (TLI) = .99, root mean square error of approximation (RMSEA) = .02. The measures were modeled after studies that investigate safe and unsafe discussion (see, for example, Eveland & Shah, 2003) and asked how often respondents had searched for threat-confirming and threat-negating information within the past 2 months, from 1—never to 7—very often. For the index on threat-confirming information-seeking, respondents indicated how often they sought out information suggesting that the coronavirus posed a strong threat, using three items (legacy media: MW1 = 4.94, SDW1 = 1.56, MW2 = 4.86, SDW2 = 1.58; alternative media: MW1 = 3.08, SDW1 = 1.94, MW2 = 3.09, SDW2 = 1.90; scientific sources: MW1 = 4.43, SDW1 = 1.70, MW2 = 4.31, SDW2 = 1.65). For threat-negating information-seeking, respondents indicated how often they sought out information disputing that the coronavirus posed a strong threat, again using three items (legacy media: MW1 = 2.68, SDW1 = 1.42, MW2 = 2.78, SDW2 = 1.39; alternative media: MW1 = 2.54, SDW1 = 1.67, MW2 = 2.59, SDW2 = 1.61; scientific sources: MW1 = 2.85, SDW1 = 1.50, MW2 = 2.99, SDW2 = 1.53). Definitions and examples of the different sources were presented directly before the question (see Supplemental Appendix A).
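As a sanity check on the reported fit, the RMSEA point estimate can be approximated from the model chi-square and its degrees of freedom with the standard maximum likelihood formula, RMSEA = sqrt(max(χ2 − df, 0) / (df × (N − 1))). The sketch below assumes N = 912 (the W1 sample); the effective sample size of the CFA is not stated here, and robust (scaled) estimators like the one used in the study compute RMSEA somewhat differently, so this is only an approximation. The function name `rmsea` is hypothetical.

```python
import math


def rmsea(chi2: float, df: int, n: int) -> float:
    """Point estimate of RMSEA from an ML chi-square (non-robust formula)."""
    # Misfit beyond what is expected by chance (chi2 close to df), floored at 0.
    excess = max(chi2 - df, 0.0)
    return math.sqrt(excess / (df * (n - 1)))


# Reported CFA: chi2(510) = 679.05; N = 912 is an assumption.
print(round(rmsea(679.05, 510, 912), 3))  # 0.019
```

Under these assumptions the value rounds to the reported .02.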

Controls

I measured threat perceptions of COVID-19 (MW1 = 4.74, SDW1 = 1.67) using three items adapted from the work of Kittel et al. (2020). Populist attitudes (MW1 = 4.78, SDW1 = 1.07) were assessed using five items taken from the work of Müller et al. (2017). Furthermore, I measured trust in scientists (MW1 = 4.75, SDW1 = 1.40) using three items developed by McCright et al. (2013). The four items for media trust (MW1 = 4.45, SDW1 = 1.38) were based on the validated scale by Kohring and Matthes (2007). Finally, I controlled for individuals’ political ideology on a scale from 0—left to 10—right (MW2 = 4.76, SDW2 = 1.95) and for respondents’ age, gender (dummy coded for 1 = female), and educational background, using two dummy variables for high and intermediate educational levels.

Analytical Strategy

I turned to LPA to identify different constellations of beliefs in misinformation, evidence, and conspiracy statements. LPA is related to other latent variable approaches, such as structural equation modeling (SEM). However, as opposed to SEM, this method seeks to identify different latent groups within a population that are similar in their responses to a set of variables and therefore results in a categorical latent variable. For each individual, LPA then estimates a probability of belonging to a specific latent group. Researchers have argued that LPA is a more favorable analytical strategy for identifying subpopulations than cluster analysis (Hine et al., 2014), as it allows researchers to fit different models to the data and compare their fit using several indices.
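To make the estimation logic concrete, the sketch below fits a minimal LPA by expectation-maximization (EM), treating it as a Gaussian mixture with diagonal covariance: indicators are assumed independent within a profile (local independence), and each profile has its own means and variances. This is an illustrative NumPy implementation under those assumptions, not the LatentGold 6.0 routine used in the study; the function name `fit_lpa`, the farthest-point initialization, and the variance floor are choices made here for illustration. The returned `posterior` matrix holds each respondent's estimated probability of belonging to each profile.

```python
import numpy as np


def fit_lpa(X, k, n_iter=500, tol=1e-6, seed=0):
    """Minimal latent profile analysis via EM on a diagonal Gaussian mixture.

    X: (n, p) array of continuous indicators; k: number of profiles.
    Returns (weights, means, variances, posterior, loglik).
    """
    X = np.asarray(X, dtype=float)
    n, p = X.shape
    rng = np.random.default_rng(seed)
    # Farthest-point initialization: start at a random respondent, then add
    # the respondent farthest from all starting profiles chosen so far.
    idx = [int(rng.integers(n))]
    for _ in range(1, k):
        dist = ((X[:, None, :] - X[idx][None, :, :]) ** 2).sum(axis=2).min(axis=1)
        idx.append(int(dist.argmax()))
    means = X[idx].copy()
    variances = np.tile(X.var(axis=0) + 1e-6, (k, 1))
    weights = np.full(k, 1.0 / k)
    prev_ll = -np.inf
    for _ in range(n_iter):
        # E-step: log density of each respondent under each profile, summed
        # over indicators because they are independent within a profile.
        log_dens = -0.5 * (
            np.log(2 * np.pi * variances)[None, :, :]
            + (X[:, None, :] - means[None, :, :]) ** 2 / variances[None, :, :]
        ).sum(axis=2)
        log_joint = log_dens + np.log(weights)[None, :]
        log_norm = np.logaddexp.reduce(log_joint, axis=1)
        posterior = np.exp(log_joint - log_norm[:, None])
        ll = float(log_norm.sum())
        if ll - prev_ll < tol:
            break
        prev_ll = ll
        # M-step: update profile sizes, means, and (floored) variances.
        nk = posterior.sum(axis=0) + 1e-12
        weights = nk / n
        means = (posterior.T @ X) / nk[:, None]
        variances = np.maximum((posterior.T @ X**2) / nk[:, None] - means**2, 1e-6)
    return weights, means, variances, posterior, ll
```

In practice, and as the study does with 5,000 starting values, one would rerun such a routine from many random starts and keep the solution with the highest log-likelihood to avoid local maxima.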

I estimated one- to nine-class solutions in LatentGold 6.0. To avoid local maxima, the number of starting values was increased to 5,000. For model selection, I relied on the Bayesian information criterion (BIC), the sample-adjusted Bayesian information criterion (SABIC), the Vuong-Lo-Mendell-Rubin likelihood ratio test (VLMR-LRT), and the bootstrapped likelihood ratio test (BLRT). In line with prior studies, I also weighed the profiles by their theoretical plausibility and evaluated whether “an additional profile adds a substantial new variable formation” (Spurk et al., 2020, p. 13), that is, a qualitatively new constellation of answers.
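The information criteria used for model selection penalize the log-likelihood (LL) by the number of free parameters m: BIC = −2LL + m ln N, and the sample-size-adjusted BIC replaces N with (N + 2)/24 in the penalty. The sketch below assumes a diagonal-covariance LPA in which m counts the profile means, the variances (either equal across profiles or profile-specific), and K − 1 free profile proportions; the exact parameterization used in LatentGold for this study is not reported here, and all helper names are hypothetical.

```python
import math


def lpa_n_params(k: int, p: int, equal_variances: bool = True) -> int:
    """Free parameters of a diagonal-covariance LPA with k profiles, p indicators."""
    means = k * p
    variances = p if equal_variances else k * p
    proportions = k - 1  # profile sizes must sum to 1
    return means + variances + proportions


def bic(loglik: float, n_params: int, n: int) -> float:
    return -2.0 * loglik + n_params * math.log(n)


def sabic(loglik: float, n_params: int, n: int) -> float:
    # Sclove's adjustment: replace n with (n + 2) / 24 in the penalty term.
    return -2.0 * loglik + n_params * math.log((n + 2) / 24)


def best_k_by_bic(logliks: dict[int, float], p: int, n: int,
                  equal_variances: bool = True) -> int:
    """Number of profiles with the lowest BIC among the fitted solutions."""
    scores = {k: bic(ll, lpa_n_params(k, p, equal_variances), n)
              for k, ll in logliks.items()}
    return min(scores, key=scores.get)


# 14 indicators and 7 profiles with equal variances -> 118 free parameters.
print(lpa_n_params(7, 14))  # 118
# Hypothetical log-likelihoods for 1- to 3-profile solutions (2 indicators, N = 100).
print(best_k_by_bic({1: -1000.0, 2: -900.0, 3: -895.0}, p=2, n=100))  # 2
```

Because the SABIC penalty grows more slowly with N, it tends to keep decreasing for larger solutions than the BIC does, which is consistent with the pattern reported for the SABIC in the results.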

The data underlying the analyses can be accessed via the Open Science Framework (doi:10.17605/OSF.IO/6Q9Y3).

Results

Model Selection

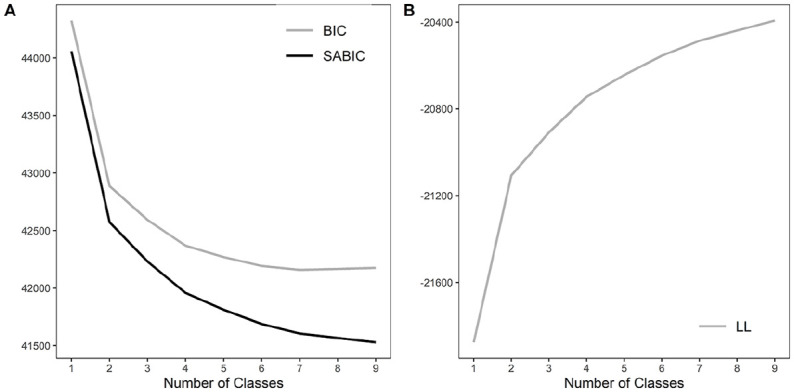

In the first step, I analyzed how many latent belief profiles can be identified. The seven-class model achieved the lowest BIC value, indicating that it was the best-fitting model after accounting for model complexity (see Supplemental Table C1, Appendix C1). While the SABIC continued to decrease with each additional class, the gains in model fit diminished more strongly after the five-profile solution (see Figure 1A). Similarly, the Lo-Mendell-Rubin adjusted likelihood ratio test (LMR-LRT) and the BLRT did not indicate a preferred model on the basis of nonsignificant p values. A plot of log-likelihood (LL) values shows that extracting an additional profile beyond the seven-class solution achieves only small improvements in fit (see Figure 1B).

Figure 1.

Values of (A) BIC and SABIC as well as (B) LL for Different Class Solutions.

Note. BIC = Bayesian information criterion; SABIC = sample-adjusted Bayesian information criterion; LL = log-likelihood.

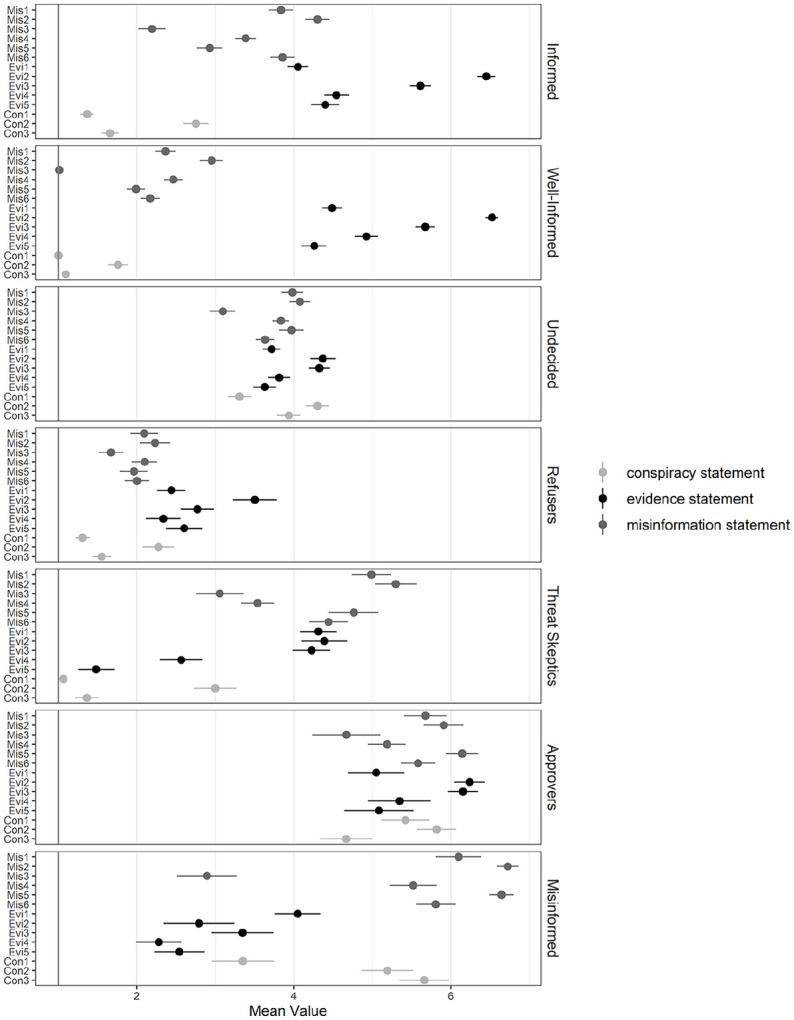

Next, I evaluated the models based on their interpretability and content value. Up to Model 7, each additional class yielded a qualitatively new profile that clearly differed from the others in its constellation of answers to the belief questions. In contrast, Solutions 8 and 9 only provided quantitatively different profiles, but no qualitatively new ones. Based on these content considerations and the BIC, I therefore selected the seven-class model for further investigation. The entropy value (.79) suggested that respondents could be classified into latent profiles with an acceptable degree of certainty. In the following, I describe each of the latent classes (see also Figure 2).
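The entropy statistic summarizes how sharply respondents' posterior profile probabilities concentrate on a single profile. One common normalized definition is E = 1 − Σi Σk (−pik ln pik) / (N ln K), where pik is respondent i's posterior probability for profile k; it equals 1 under perfectly certain classification and 0 when every respondent is equally likely to belong to each profile. The sketch below implements that definition under the assumption that it is the one of interest; LatentGold reports its own entropy-based statistics, which may be computed differently, so this is not a reproduction of the reported .79. The function name `relative_entropy` is hypothetical.

```python
import numpy as np


def relative_entropy(posterior) -> float:
    """Normalized entropy of an (n, k) matrix of posterior profile probabilities.

    Returns 1.0 for perfectly certain classification and 0.0 when every row is
    uniform across the k profiles. Requires k >= 2.
    """
    post = np.asarray(posterior, dtype=float)
    n, k = post.shape
    # Clip only inside the log, so that 0 * log(0) contributes 0.
    total_entropy = -(post * np.log(np.clip(post, 1e-300, 1.0))).sum()
    return 1.0 - total_entropy / (n * np.log(k))


print(relative_entropy(np.eye(3)))             # 1.0
print(relative_entropy(np.full((4, 2), 0.5)))  # approximately 0.0
```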

Figure 2.

Distribution of Belief in Misinformation, Evidence, and Conspiracy Statements Across Latent Belief Profiles.

Note. Vertical lines represent ±1 SD. Higher values indicate higher levels of belief.

The informed (n = 214, 23.4%) tended toward higher acceptance of evidence overall. They agreed with the statements that the WHO recommends masks and that COVID-19 affects multiple organs, but neither rejected nor accepted the remaining evidence statements. They were also characterized by lower acceptance of misinformation, although their ratings showed some variability. Specifically, they chose mid-category ratings for misinformation on the mortality rate, the first occurrence of COVID-19 infections, and the percentage of infected individuals who develop symptoms. Finally, they showed low levels of belief in conspiracy statements.

In contrast, the well-informed (n = 232, 25.4%) rejected all misinformation statements as false, while rating evidence statements as moderately to completely true. They expressed strong disbelief in conspiracy statements. Therefore, the group showed the highest discernment between evidence-congruent and evidence-incongruent claims.

The undecided (n = 222, 24.3%) chose mid-categories on most items and showed little discernment between evidence, misinformation, and conspiracy statements. Only the statements on the asymptomatic spread of COVID-19 and the intentional use of 5G technology showed a slight tendency toward rejection.

The refusers (n = 85, 9.3%), in contrast, rated all statements as false or completely false. They were slightly less dismissive of the statement that the WHO issued a mask recommendation, but clearly rejected most other statements regardless of whether these aligned with contemporaneous evidence.

The threat skeptics (n = 70, 7.7%) rated statements as true or false depending on the specific issue in question. While they tended to disbelieve conspiracy statements, their ratings of misinformation statements varied considerably. They specifically endorsed misinformation that questions the threat posed by COVID-19, for example, the claims that COVID-19 had already existed before 2019, that mortality rates are below 0.1%, and that only 1% of those who carry the virus develop symptoms. They also expressed concerns about some policies: They agreed with the statement that masks might trap harmful levels of CO2 under the fabric, strongly disbelieved that none of their personal data are stored by the official contact-tracing app, and questioned the official statistics on excess mortality. Overall, the pattern suggests that threat skeptics questioned the effectiveness and safety of governmental measures, as well as the official interpretation of COVID-19 as a health threat.

The approvers (n = 49, 5.4%) mirrored the refusers in giving similar ratings to all statements, but differed in that they tended to agree with claims regardless of the evidence underlying them. In other words, they agreed with statements whether these contained misinformation, conspiracy claims, or evidence. Consequently, their discernment between different claims was low.

The misinformed (n = 40, 4.4%) were the smallest segment in the sample. Notably, they rated misinformation statements as true, with only one exception: They showed low levels of belief in the statement that COVID-19 cannot be transmitted by individuals without symptoms. They rejected the majority of the evidence statements as false. The statement on the ineffectiveness of hydroxychloroquine as a COVID-19 cure was the exception; in this case, the misinformed tended to choose the middle category. Along with the approvers, they also showed higher levels of belief in conspiracy theories than the other segments.

To test the robustness of the profiles, I examined their criterion-related validity (for full reporting of all tests, see Supplemental Appendix D). In addition, I tested whether the profiles were robust to low-effort responding. Repeating the LPA without low-effort responders showed that insufficient-effort responding did not affect the number or overall quality of the belief profiles. However, when cases were excluded based on intra-individual response variability, an indicator of straight-lining, the relative sizes of the segments shifted. Specifically, the undecided shrank to 17.1%, while the informed segment grew to 29.6%. Thus, segment sizes should be interpreted with caution.
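The two careless-responding screens mentioned in this article can be sketched as simple per-respondent flags. This minimal sketch assumes (1) the 10-minute speeding cut-off described in the notes and (2) the intra-individual response variability (IRV) cut-off of 1.26 used in the robustness check, with IRV computed as the sample standard deviation of a respondent's answers to the belief items. The article does not report which items entered the IRV or whether a sample or population SD was used, so both choices are assumptions. The helper names and example ratings are hypothetical.

```python
import statistics

SPEEDING_CUTOFF_MIN = 10.0  # completion time below 10 minutes counts as speeding
IRV_CUTOFF = 1.26           # IRV below this value flags possible straight-lining

def irv(responses):
    """Intra-individual response variability: SD of one respondent's item answers."""
    return statistics.stdev(responses)  # sample SD (assumption)

def flag_low_effort(duration_min, responses):
    """Return the careless-responding flags for a single respondent."""
    return {
        "speeder": duration_min < SPEEDING_CUTOFF_MIN,
        "straight_liner": irv(responses) < IRV_CUTOFF,
    }

# Hypothetical respondents with hypothetical belief ratings.
print(flag_low_effort(8, [3, 3, 3, 3, 3, 3]))   # both flags True
print(flag_low_effort(25, [1, 5, 2, 4, 3, 5]))  # neither flag
```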

Predicting Information-Seeking

Next, I analyzed the information-seeking patterns of the misinformed segment compared to other segments using an autoregressive latent variable panel model (i.e., predicting the dependent variable in W2 while controlling for its W1 level). Membership in the different W1 profiles was dummy coded and entered as predictors into the model, with the misinformed as the reference category. I allowed the error terms of identical items to covary² and used robust ML estimation. First, I evaluated the measurement model of all latent variables (including controls) in a CFA, which showed satisfactory fit based on robust estimates, χ²(1,086) = 1,546.07, p < .001, CFI = .98, TLI = .98, RMSEA = .02, with factor loadings λ > .70. Only the index for populist attitudes had factor loadings below .70. However, since its items describe different dimensions of populist attitudes, I retained all items to avoid losing substantive information; thus, no items were excluded from the analysis. The structural model demonstrated sufficient fit, χ²(1,611) = 2,819.25, p < .001, CFI = .96, TLI = .95, RMSEA = .03. For detailed results on the structural paths, including control variables, see Supplemental Appendix C, Tables C2 and C3.
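As a rough consistency check on the reported fit indices, the conventional RMSEA point estimate can be computed from χ², df, and N. This sketch assumes N = 912 (the W1 sample), because the article does not state the N used in the SEM. It also uses the standard, non-robust formula, whereas the article reports robust estimates, so exact agreement is not guaranteed.

```python
import math

def rmsea(chi2, df, n):
    """Conventional RMSEA point estimate: sqrt(max(chi2 - df, 0) / (df * (n - 1)))."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))

N = 912  # assumption: W1 sample size
print(round(rmsea(1546.07, 1086, N), 2))  # measurement model: 0.02
print(round(rmsea(2819.25, 1611, N), 2))  # structural model: 0.03
```

Under these assumptions, both values match the reported RMSEAs of .02 and .03.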

Seeking Out Threat-Negating Information

Hypothesis 1 stated that, from W1 to W2, individuals in the misinformed segment are more likely to seek out information that questions the threat of COVID-19 in (a) the legacy media, (b) scientific sources, and (c) alternative media. Results showed that the well-informed, b = −0.89, SE = 0.42, β = −.31, p = .035, and the refusers, b = −0.87, SE = 0.43, β = −.20, p = .046, were significantly less likely than the misinformed to seek out threat-negating information in the legacy media. Although the coefficients were also negative, the misinformed did not differ significantly from the undecided, b = −0.53, SE = 0.40, β = −.18, p = .194; the informed, b = −0.48, SE = 0.42, β = −.16, p = .246; the threat skeptics, b = −0.78, SE = 0.43, β = −.17, p = .068; or the approvers, b = −0.66, SE = 0.48, β = −.12, p = .168. Thus, H1a is only partly confirmed.
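Because each coefficient compares a segment with the misinformed reference category, its p value tests whether that difference is zero. The software's exact computation is not reported. Assuming a two-sided Wald test with a standard normal reference distribution, the p values can be approximately recovered from the rounded b and SE values, as in this sketch:

```python
import math

def wald_p(b, se):
    """Two-sided p value for H0: b = 0 from z = b / SE under a standard normal."""
    z = b / se
    return math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))

# Well-informed vs. misinformed, threat-negating seeking in legacy media
print(round(wald_p(-0.89, 0.42), 3))  # 0.034 (reported: p = .035)
```

The small discrepancy is expected, because b and SE are rounded to two decimals in the text.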

Regarding scientific sources, the data showed a uniform pattern: The undecided, b = −0.83, SE = 0.34, β = −.26, p = .014; informed, b = −0.99, SE = 0.37, β = −.30, p = .007; well-informed, b = −1.16, SE = 0.38, β = −.36, p = .002; refusers, b = −1.28, SE = 0.40, β = −.27, p = .001; threat skeptics, b = −0.93, SE = 0.38, β = −.18, p = .014; and approvers, b = −1.11, SE = 0.45, β = −.18, p = .014, showed significantly lower levels of threat-negating information-seeking from scientific sources from W1 to W2 as compared to the misinformed. These results support H1b.

The analysis of threat-negating information-seeking in alternative media yielded mixed results. The data revealed significant differences between the misinformed and the informed, b = −0.91, SE = 0.44, β = −.26, p = .039; the well-informed, b = −0.99, SE = 0.45, β = −.29, p = .028; and the threat skeptics, b = −1.29, SE = 0.45, β = −.23, p = .004. However, the misinformed did not differ significantly from the undecided, b = −0.65, SE = 0.42, β = −.19, p = .120; the refusers, b = −0.90, SE = 0.47, β = −.18, p = .054; or the approvers, b = −0.92, SE = 0.50, β = −.14, p = .065. Thus, there is only partial evidence for H1c.

Seeking Out Threat-Confirming Information

Next, I tested whether the misinformed also differed from other segments in their seeking of threat-confirming information from W1 to W2 (RQ2). The findings suggested that the misinformed significantly increased threat-confirming information-seeking in the legacy media compared with the undecided, b = −1.46, SE = 0.40, β = −.41, p < .001; the informed, b = −1.15, SE = 0.41, β = −.32, p = .005; the well-informed, b = −0.98, SE = 0.42, β = −.28, p = .019; the refusers, b = −1.21, SE = 0.44, β = −.23, p = .006; the threat skeptics, b = −0.99, SE = 0.44, β = −.17, p = .024; and the approvers, b = −1.24, SE = 0.48, β = −.18, p = .011.
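The sign of these coefficients follows from the dummy coding. The following equation is a simplified observed-score form. The article's model uses latent variables and a set of controls, which are summarized here as a vector x. Under this simplification, the model for each outcome can be written as follows, where D_ik = 1 if respondent i was assigned to profile k in W1:

$$
y_{i,\mathrm{W2}} = \alpha + \beta\, y_{i,\mathrm{W1}} + \sum_{k \neq \mathrm{misinformed}} b_k D_{ik} + \boldsymbol{\gamma}^{\top}\mathbf{x}_i + \varepsilon_i
$$

Each b_k is therefore the expected W2 difference between profile k and the misinformed at equal W1 levels and equal controls. A negative b_k, such as b = −1.46 for the undecided, means that the misinformed reported more threat-confirming information-seeking in W2 than undecided respondents with the same W1 level. This is what the text describes as a larger increase among the misinformed.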

I found no evidence that the misinformed differ from the other groups in their threat-confirming information-seeking from scientific sources: The coefficients were non-significant for the undecided, b = 0.10, SE = 0.32, β = .03, p = .757; the informed, b = 0.11, SE = 0.35, β = .03, p = .759; the well-informed, b = 0.32, SE = 0.35, β = .09, p = .365; the refusers, b = 0.24, SE = 0.40, β = .05, p = .546; the threat skeptics, b = −0.19, SE = 0.37, β = −.03, p = .610; and the approvers, b = 0.41, SE = 0.42, β = .06, p = .325.

Finally, the misinformed also indicated higher levels of threat-confirming information-seeking in alternative media from W1 to W2 as compared to the undecided, b = −0.97, SE = 0.45, β = −.23, p = .031; the informed, b = −1.01, SE = 0.48, β = −.24, p = .034; the well-informed, b = −1.35, SE = 0.48, β = −.32, p = .005; and the threat skeptics, b = −1.42, SE = 0.48, β = −.21, p = .003. I found no differences between the misinformed and the refusers, b = −0.97, SE = 0.50, β = −.15, p = .056; or the approvers, b = −0.48, SE = 0.57, β = −.06, p = .398.

To check the robustness of the findings, I repeated the analysis excluding individuals who scored low (below 1.26) on intra-individual response variability, an indicator of straight-lining. The difference between the refusers and the misinformed in threat-negating information-seeking in legacy media became nonsignificant, b = −0.85, SE = 0.44, β = −.20, p = .051, while their difference in threat-confirming information-seeking in alternative media became significant, b = −1.02, SE = 0.52, β = −.16, p = .049. In addition, the difference between the approvers and the misinformed in threat-negating information-seeking in alternative media became significant, b = −1.12, SE = 0.54, β = −.16, p = .038. Thus, differences involving the smaller profiles in the sample should be interpreted cautiously, given their sensitivity to case exclusion. Nevertheless, the overall direction of the findings remained unchanged.

To gain further insight into the overall trends in information-seeking, I also examined the baseline levels of information-seeking for each profile. As can be seen in Supplemental Table C4, Appendix C, the baseline levels of threat-confirming information-seeking in legacy media were significantly lower among the misinformed than in the other segments.

Discussion

Misperceptions can affect individual and public decision-making on consequential issues. Yet, to fully understand the individuals holding these misperceptions, we need a comprehensive view that takes the diversity of this group into account. The present study adds nuance to our understanding of misperceptions about COVID-19 by identifying seven latent profiles based on citizens’ beliefs in misinformation, conspiracy theories, and evidence. Furthermore, I assess whether information-seeking about COVID-19 among the misinformed segment might serve to bolster pre-existing attitudes, as suggested by selective exposure theories.

The findings confirm that only a small proportion of citizens form a homogeneous group that is misinformed in the strictest sense of the word. This group shows high levels of belief in both misinformation and conspiracy statements while rejecting evidence statements on COVID-19. Importantly, the results preliminarily dispel the fear that the misinformed make up a large share of the public. In the sample of this study, only around 5% fall into this category, supporting prior studies that found the misinformed to be a minority (Druckman et al., 2021; Roozenbeek et al., 2020).

Furthermore, the LPA identified two related groups that deserve further attention. The threat skeptics varied considerably in their assessments of both misinformation and evidence statements. They were skeptical of the effectiveness of governmental measures and more open toward statements that question the threat of COVID-19. Yet, they rejected conspiracy theories and selectively accepted some of the evidence. Their assessments mirror the politicized nature of COVID-19 information (Bolsen & Palm, 2022; P. S. Hart et al., 2020) and might express reservations about the government’s handling of the pandemic. In addition, the approvers showed high levels of acceptance of both misinformation and conspiracy statements. Unlike the misinformed, however, they also rated evidence statements as true. Both groups might be classified as “misinformed” by conventional indices of misperceptions, but they form distinct groups in the LPA.

The majority of respondents fell into the four remaining categories, which predominantly rated misinformation or conspiracy statements as false. The informed expressed uncertainty about some statements but overall discerned supported from unsupported statements. This uncertainty might reflect the constant changes in the emerging evidence on COVID-19, and thus a rational position, but could also indicate a lack of knowledge. The well-informed showed few misperceptions and high evidence acceptance. The undecided chose mid-categories across all statements, which might indicate uncertainty but also disinterest or low-effort responding. Finally, the refusers rated all statements as false. While this group might appear to score low on conventional misperception scales, their generalized skepticism might be detrimental to democratic decision-making processes and deserves further scholarly attention. Taken together, the results demonstrate that individuals’ beliefs around (mis-)information about COVID-19 vary substantially.

Another key finding of this study concerns the information-seeking patterns of the misinformed segment as opposed to other segments. Based on selective exposure studies (W. Hart et al., 2009), I theorized that individuals who hold misperceptions about COVID-19 might increase their threat-negating information-seeking from W1 to W2 to further bolster their views. Results show that increasing levels of threat-negating information-seeking in scientific sources set the misinformed apart from other segments. In contrast, regarding legacy media, the misinformed showed levels of threat-negating information-seeking similar to those of most segments. For alternative media, the findings are mixed.

These findings highlight the importance of scientific sources for bolstering attitudes among the misinformed. The misinformed might use expert voices and evidence selectively to support their own views and to collect arguments against other positions. This aligns with prior studies showing that conspiracy theorists often turn to scientific sources (M. S. Schäfer et al., 2022), for instance, to legitimize their views (Oliveira et al., 2021). Whether the sought-out scientific information is accurate cannot be inferred from the findings of this study: Misinformed individuals might use alleged scientific sources that do not follow scientific standards, but they might also selectively choose high-quality evidence.

Interestingly, this study found that, within its timeframe, the misinformed significantly increased their threat-confirming information-seeking in the legacy media compared to all other groups. This finding conflicts with the concept of selective avoidance, which suggests that citizens avoid counter-attitudinal information to shield their attitudes (Garrett, 2009; Stroud, 2014). A possible explanation is a catch-up effect: Cross-sectional data revealed that the misinformed initially reported lower levels of threat-confirming information-seeking in legacy media than other segments. With rising COVID-19 case numbers in the autumn of 2020, the misinformed might have increased their legacy media use to get information on local COVID-19 outbreaks and policy changes. This is in line with the notion that information utility might drive information selection regardless of whether the information is congruent with one’s pre-existing beliefs (Stroud, 2014).

With regard to alternative media, the findings were mixed but overall also pointed toward an increase in threat-confirming information-seeking. However, the misinformed did not differ from other segments in their use of scientific sources for seeking out threat-confirming information. Again, these differences between sources might be explained by the fact that legacy media, as opposed to alternative media and scientific sources, offered greater informational utility. Overall, these findings parallel other studies that found that individuals might selectively use information that bolsters their views without necessarily avoiding counter-attitudinal content altogether (Garrett, 2009; Merkley & Loewen, 2021).

Practical Implications

The observed heterogeneity in COVID-19 misperceptions suggests that there is a need for diverse and targeted intervention strategies. Threat skeptics appear to be receptive to at least some messages communicating evidence. As noted earlier, their selective rejection might be linked to pre-existing attitudes and the politicization and polarization of COVID-19 communication. Based on prior studies, it could be fruitful to develop interventions that use value-congruent messages (Kahan et al., 2011), scientific consensus messages (Kerr & Linden, 2022), or specifically warn about the politicization of science (Bolsen & Druckman, 2015). For approvers, interventions should aim at increasing discernment between substantiated and unsubstantiated claims. Thus, this group might profit from interventions that encourage critical deliberation of encountered information, such as inoculation (Roozenbeek et al., 2022) and accuracy reminders (Pennycook & Rand, 2019).

Based on their belief and information-seeking patterns, the misinformed might be easy to reach but difficult to persuade. They frequently engage with threat-negating scientific sources, where they can find counterarguments to refute other evidence. Thus, the misinformed are likely to process fact-checks and other corrections of misinformation defensively. Prior literature has proposed using visual elements and storytelling, which are more face-preserving for recipients than directly challenging their beliefs (Dan & Dixon, 2021). However, more research is needed to determine whether these correction strategies are effective in real-life contexts.

Limitations

This study has a number of limitations that deserve special mention. First, three of the groups identified in the LPA (the refusers, the undecided, and the approvers) show little variation on the response scale, resembling extreme or acquiescent response styles or straight-lining. When speeders and potential straight-liners were excluded, the same latent groups formed, which supports the robustness of the findings. The category of main interest, the misinformed, was not affected by the exclusion of careless responders. Nevertheless, further research is needed to corroborate that the refusers, undecided, and approvers are meaningful beyond response styles.

Second, the findings cannot simply be generalized to other topical, national, or temporal contexts. Additional studies are needed to see whether similar profiles form under different circumstances. On a positive note, LPA opens up an interesting avenue for misinformation researchers that might add nuance to the investigation of misperceptions across different topics.

Third, self-reported measures of information-seeking are prone to bias. Individuals tend to over-report news use (Scharkow, 2016), which is why the baseline levels of media consumption should be interpreted with caution. Behavioral measures, such as browser history data, would provide a more precise picture of the exact sources that individuals used. Moreover, future studies could test whether different segments attend to a range of diverse sources or simply seek out a small number of favored outlets.

Fourth, panel models with autoregressive effects do not permit causal inferences. That is, it is uncertain whether individuals change their information-seeking patterns because they belong to a certain misinformation profile or because of third factors they have in common that were not controlled for in the model. I included a range of control variables to address this problem, but I cannot rule out that unobserved third variables explain the proposed relationships. The analysis is also limited by the use of only two waves of data, which does not allow for separating within- and between-person effects.

Conclusion

Based on LPA, this study shows that not everyone who scores high on misperception indices about COVID-19 sweepingly rejects evidence. Some individuals might consider misinformation, conspiracy theories, and evidence equally plausible, while others might be highly selective in what they believe, regardless of whether the presented information aligns with the scientific consensus. Only a minority of participants in this study gravitated toward both misinformation and conspiracy theories while clearly rejecting the scientific evidence. Interestingly, in their information-seeking, this group might rely especially on scientific sources that legitimize the view that COVID-19 does not pose a threat to individuals and society. Yet, the findings of this study suggest that they do not isolate themselves within echo chambers: They also increasingly sought out legacy media information at a time when COVID-19 cases were on the rise. This study is only a first step: There is still a need to better understand which factors predict these belief profiles, whether similar profiles emerge in different contexts, and how they respond to different interventions aimed at reducing misperceptions.

Supplemental Material

Supplemental material, sj-docx-1-scx-10.1177_10755470221142304 for Investigating the Heterogeneity of Misperceptions: A Latent Profile Analysis of COVID-19 Beliefs and Their Consequences for Information-Seeking by Marlis Stubenvoll in Science Communication

Acknowledgments

I would like to thank Prof. Mike Schäfer for providing highly valuable and detailed feedback on the method, results, and structure of the paper. Furthermore, members of the IKMZ Science Communication Division at the University of Zurich, most of all Daniela Mahl and Dr. Sabrina Heike Kessler, provided important feedback that helped improve the paper methodologically and conceptually.

Author Biography

Marlis Stubenvoll (MA, Aarhus University/University of Amsterdam) is a PhD candidate at the University of Vienna. Her research interests include media effects, misinformation and resistance to evidence, climate change communication, and targeted political advertising.

Since the median respondent took 29 minutes to finish the survey, the cut-off point of 10 minutes indicates that respondents took less than half of the time needed by the typical respondent. Thus, a substantial proportion of items and instructions were at best superficially read by the respondent.

Instead of correlating error terms, scholars often recommend using method factors (Podsakoff et al., 2003). Since this approach resulted in estimation problems, specifically negative variances for some indicators, this study refrained from using it. Estimating the model without correlated error terms did not change the results of this study.

Footnotes

The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding: The author(s) disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This work was supported by the University of Vienna via the uni:docs fellowship.

ORCID iD: Marlis Stubenvoll https://orcid.org/0000-0003-1870-0403

Supplemental Material: Supplemental material for this article is available online at http://journals.sagepub.com/doi/suppl/10.1177/10755470221142304.

References

- Agley J., Xiao Y. (2021). Misinformation about COVID-19: Evidence for differential latent profiles and a strong association with trust in science. BMC Public Health, 21(1), 1–12. https://doi.org/10.1186/s12889-020-10103-x

- Bolsen T., Druckman J. N. (2015). Counteracting the politicization of science. Journal of Communication, 65(5), 745–769. https://doi.org/10.1111/jcom.12171

- Bolsen T., Palm R. (2022). Politicization and COVID-19 vaccine resistance in the U.S. Progress in Molecular Biology and Translational Science, 188(1), 81–100. https://doi.org/10.1016/bs.pmbts.2021.10.002

- Borah P., Austin E., Su Y. (2022). Injecting disinfectants to kill the virus: Media literacy, information gathering sources, and the moderating role of political ideology on misperceptions about COVID-19. Mass Communication and Society. Advance online publication. https://doi.org/10.1080/15205436.2022.2045324

- Brennen J. S., Simon F. M., Howard P. N., Nielsen R. K. (2020, April 7). Types, sources, and claims of COVID-19 misinformation. Reuters Institute for the Study of Journalism. https://reutersinstitute.politics.ox.ac.uk/types-sources-and-claims-covid-19-misinformation

- Bridgman A., Merkley E., Loewen P. J., Owen T., Ruths D., Teichmann L., Zhilin O. (2020). The causes and consequences of COVID-19 misperceptions: Understanding the role of news and social media. Harvard Kennedy School Misinformation Review, 1, 1–18. https://doi.org/10.37016/mr-2020-028

- Cucinotta D., Vanelli M. (2020). WHO declares COVID-19 a pandemic. Acta Bio-Medica, 91(1), 157–160. https://doi.org/10.23750/abm.v91i1.9397

- Dan V., Dixon G. N. (2021). Fighting the infodemic on two fronts: Reducing false beliefs without increasing polarization. Science Communication, 43(5), 674–682. https://doi.org/10.1177/10755470211020411

- Detenber B. H., Rosenthal S., Liao Y., Ho S. S. (2016). Audience segmentation for campaign design: Addressing climate change in Singapore. International Journal of Communication, 10, 4736–4758. https://ijoc.org/index.php/ijoc/article/view/4696/1797

- Douglas K. M., Sutton R. M. (2017). The psychology of conspiracy theories. Current Directions in Psychological Science, 26(6), 538–542. https://doi.org/10.1177/0963721417718261

- Druckman J. N., Ognyanova K., Baum M. A., Lazer D., Perlis R. H., Volpe J., Della Santillana M., Chwe H., Quintana A., Simonson M. (2021). The role of race, religion, and partisanship in misperceptions about COVID-19. Group Processes and Intergroup Relations, 24(4), 638–657. https://doi.org/10.1177/1368430220985912

- Eberl J.-M., Lebernegg N. S. (2021, August 6). Die alternativen COVID-Realitäten des österreichischen TV-Publikums [The alternative COVID realities of Austrian TV audiences]. Austrian Corona Panel Project: Corona-Blog. https://viecer.univie.ac.at/en/projects-and-cooperations/austrian-corona-panel-project/corona-blog/corona-blog-beitraege/blog125/

- Eberl J.-M., Lebernegg N. S. (2022). The pandemic through the social media lens. MedienJournal, 45(3), 5–15. https://doi.org/10.24989/medienjournal.v45i3.2037

- Enders A. M., Uscinski J. E., Klofstad C., Stoler J. (2020). The different forms of COVID-19 misinformation and their consequences. Harvard Kennedy School Misinformation Review, 1(8), 1–21. https://doi.org/10.37016/mr-2020-48

- Eveland W. P., Shah D. V. (2003). The impact of individual and interpersonal factors on perceived news media bias. Political Psychology, 24(1), 101–117. https://doi.org/10.1111/0162-895X.00318

- Fransen M. L., Smit E. G., Verlegh P. W. J. (2015). Strategies and motives for resistance to persuasion: An integrative framework. Frontiers in Psychology, 6(August), 1–12. https://doi.org/10.3389/fpsyg.2015.01201

- Garrett R. K. (2009). Politically motivated reinforcement seeking: Reframing the selective exposure debate. Journal of Communication, 59(4), 676–699. https://doi.org/10.1111/j.1460-2466.2009.01452.x

- Harcup T. (2003). “The unspoken—said.” Journalism, 4(3), 356–376. 10.1177/14648849030043006 [DOI] [Google Scholar]

- Hart P. S., Chinn S., Soroka S. (2020). Politicization and polarization in COVID-19 news coverage. Science Communication, 42(5), 679–697. 10.1177/1075547020950735 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hart W., Albarracín D., Eagly A. H., Brechan I., Lindberg M. J., Merrill L. (2009). Feeling validated versus being correct: A meta-analysis of selective exposure to information. Psychological Bulletin, 135(4), 555–588. 10.1037/a0015701 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Heiss R., Gell S., Röthlingshöfer E., Zoller C. (2021). How threat perceptions relate to learning and conspiracy beliefs about COVID-19: Evidence from a panel study. Personality and Individual Differences, 175, Article 110672. 10.1016/j.paid.2021.110672 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hine D. W., Reser J. P., Morrison M., Phillips W. J., Nunn P., Cooksey R. (2014). Audience segmentation and climate change communication: Conceptual and methodological considerations. Wiley Interdisciplinary Reviews: Climate Change, 5(4), 441–459. 10.1002/wcc.279 [DOI] [Google Scholar]

- Kahan D. M., Jenkins-Smith H., Braman D. (2011). Cultural cognition of scientific consensus. Journal of Risk Research, 14(2), 147–174. 10.1080/13669877.2010.511246 [DOI] [Google Scholar]

- Kapantai E., Christopoulou A., Berberidis C., Peristeras V. (2021). A systematic literature review on disinformation: Toward a unified taxonomical framework. New Media & Society, 23(5), 1301–1326. 10.1177/1461444820959296 [DOI] [Google Scholar]

- Kerr J. R., Linden S. (2022). Communicating expert consensus increases personal support for COVID-19 mitigation policies. Journal of Applied Social Psychology, 52(1), 15–29. 10.1111/jasp.12827 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kittel B., Kritzinger S., Boomgaarden H., Prainsack B., Eberl J.-M., Kalleitner F., Lebernegg N. S., Partheymüller J., Plescia C., Schiestl D. W., Schlogl L. (2020). Austrian Corona Panel Project (SUF edition). 10.11587/28KQNS [DOI]

- Kim H. K., Ahn J., Atkinson L., Kahlor L. A. (2020). Effects of COVID-19 misinformation on information seeking, avoidance, and processing: A multicountry comparative study. Science Communication, 42(5), 586–615. 10.1177/1075547020959670 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Knobloch-Westerwick S., Meng J. (2009). Looking the other way: Selective exposure to attitude-consistent and counterattitudinal political information. Communication Research, 36(3), 426–448. 10.1177/0093650209333030 [DOI] [Google Scholar]

- Kohring M., Matthes J. (2007). Trust in news media. Development and validation of a multidimensional scale. Communication Research, 34(2), 231–252. 10.1177/0093650206298071 [DOI] [Google Scholar]

- Kuklinski J. H., Quirk P. J., Schwieder D. W., Rich R. F. (1998). Just the facts, Ma’am: Political facts and public opinion. The Annals of the American Academy of Political and Social Science, 560, 143–154. 10.1177/0002716298560001011

- Lazarsfeld P. F., Berelson B., Gaudet H. (1948). The people’s choice. How the voter makes up his mind in a presidential campaign (2nd ed.). Columbia University Press.

- Lee T., Johnson T. J., Weaver D. H. (2022). Navigating the coronavirus infodemic: Exploring the impact of need for orientation, epistemic beliefs and type of media use on knowledge and misperception about COVID-19. Mass Communication and Society. Advance online publication. 10.1080/15205436.2022.2046103

- Mahl D., Schäfer M. S., Zeng J. (2022). Conspiracy theories in online environments: An interdisciplinary literature review and agenda for future research. New Media & Society. Advance online publication. 10.1177/14614448221075759

- Masyn K. E. (2013). Latent class analysis and finite mixture modeling. In Little T. D. (Ed.), The Oxford handbook of quantitative methods (Vol. 2, pp. 551–611). Oxford University Press.

- McCright A. M., Dentzman K., Charters M., Dietz T. (2013). The influence of political ideology on trust in science. Environmental Research Letters, 8(4), 044029. 10.1088/1748-9326/8/4/044029

- Merkley E., Loewen P. J. (2021). Anti-intellectualism and the mass public’s response to the COVID-19 pandemic. Nature Human Behaviour, 5(6), 706–715. 10.1038/s41562-021-01112-w

- Metag J., Schäfer M. S. (2018). Audience segments in environmental and science communication: Recent findings and future perspectives. Environmental Communication, 12(8), 995–1004. 10.1080/17524032.2018.1521542

- Müller P., Schemer C., Wettstein M., Schulz A., Wirz D. S., Engesser S., Wirth W. (2017). The polarizing impact of news coverage on populist attitudes in the public: Evidence from a panel study in four European democracies. Journal of Communication, 67(6), 968–992. 10.1111/jcom.12337

- Newman D., Lewandowsky S., Mayo R. (2022). Believing in nothing and believing in everything: The underlying cognitive paradox of anti-COVID-19 vaccine attitudes. Personality and Individual Differences, 189, Article 111522. 10.1016/j.paid.2022.111522

- Nyhan B. (2020). Facts and myths about misperceptions. Journal of Economic Perspectives, 34(3), 220–236. 10.1257/jep.34.3.220

- Nyhan B., Reifler J. (2010). When corrections fail: The persistence of political misperceptions. Political Behavior, 32(2), 303–330. 10.1007/s11109-010-9112-2

- Oliveira T., Evangelista S., Alves M., Quinan R. (2021). “Those on the right take chloroquine”: The illiberal instrumentalisation of scientific debates during the COVID-19 pandemic in Brasil. Javnost, 28(2), 165–184. 10.1080/13183222.2021.1921521

- Pennycook G., Rand D. G. (2019). Lazy, not biased: Susceptibility to partisan fake news is better explained by lack of reasoning than by motivated reasoning. Cognition, 188, 39–50. 10.1016/j.cognition.2018.06.011

- Podsakoff P. M., MacKenzie S. B., Lee J. Y., Podsakoff N. P. (2003). Common method biases in behavioral research: A critical review of the literature and recommended remedies. Journal of Applied Psychology, 88(5), 879–903. 10.1037/0021-9010.88.5.879

- Romer D., Jamieson K. H. (2020). Conspiracy theories as barriers to controlling the spread of COVID-19 in the U.S. Social Science and Medicine, 263, 113356. 10.1016/j.socscimed.2020.113356

- Roozenbeek J., Schneider C. R., Dryhurst S., Kerr J., Freeman A. L. J., Recchia G., van der Bles A. M., van der Linden S. (2020). Susceptibility to misinformation about COVID-19 around the world. Royal Society Open Science, 7(10), 201199. 10.1098/rsos.201199

- Roozenbeek J., van der Linden S., Goldberg B., Rathje S., Lewandowsky S. (2022). Psychological inoculation improves resilience against misinformation on social media. Science Advances, 8(34), 1–12. 10.1126/sciadv.abo6254

- Schäfer M. S., Mahl D., Füchslin T., Metag J., Zeng J. (2022). From hype cynics to extreme believers. Typologizing the Swiss population’s COVID-19-related conspiracy beliefs, their corresponding information behavior and social media use. International Journal of Communication, 16, 2885–2910. https://ijoc.org/index.php/ijoc/article/view/18863

- Schäfer S., Aaldering L., Lecheler S. (2022). “Give me a break!” Prevalence and predictors of intentional news avoidance during the COVID-19 pandemic. Mass Communication and Society. Advance online publication. 10.1080/15205436.2022.2125406

- Scharkow M. (2016). The accuracy of self-reported internet use—A validation study using client log data. Communication Methods and Measures, 10(1), 13–27. 10.1080/19312458.2015.1118446

- Schnauber A., Wolf C. (2016). Media habits and their impact on media platform selection for information use. Studies in Communication—Media, 1, 105–127. 10.5771/2192-4007-2016-1-105

- Siegrist M., Bearth A. (2021). Worldviews, trust, and risk perceptions shape public acceptance of COVID-19 public health measures. Proceedings of the National Academy of Sciences of the United States of America, 118(24), 1–6. 10.1073/pnas.2100411118

- Spurk D., Hirschi A., Wang M., Valero D., Kauffeld S. (2020). Latent profile analysis: A review and “how to” guide of its application within vocational behavior research. Journal of Vocational Behavior, 120, 1–29. 10.1016/j.jvb.2020.103445

- Stecula D. A., Motta M., Kuru O., Jamieson K. H. (2022). The great and powerful Dr. Oz? Alternative health media consumption and vaccine views in the United States. Journal of Communication, 72(3), 374–400. 10.1093/joc/jqac011

- Stecula D. A., Pickup M. (2021). How populism and conservative media fuel conspiracy beliefs about COVID-19 and what it means for COVID-19 behaviors. Research and Politics, 8(1), 1–9. 10.1177/2053168021993979

- Stroud N. J. (2008). Media use and political predispositions: Revisiting the concept of selective exposure. Political Behavior, 30(3), 341–366. 10.1007/s11109-007-9050-9

- Stroud N. J. (2014). Selective exposure theories. In Kenski K., Jamieson K. H. (Eds.), The Oxford handbook of political communication (pp. 531–548). Oxford University Press. 10.1093/oxfordhb/9780199793471.013.009

- Tandoc E. C., Jr., Kim H. K. (2022). Avoiding real news, believing in fake news? Investigating pathways from information overload to misbelief. Journalism. Advance online publication. 10.1177/14648849221090744

- Uscinski J. E., Enders A. M., Klofstad C., Seelig M., Funchion J., Everett C., Wuchty S., Premaratne K., Murthi M. (2020). Why do people believe COVID-19 conspiracy theories? Harvard Kennedy School Misinformation Review, 1, 1–12. 10.37016/mr-2020-015

- van Stekelenburg A., Schaap G., Veling H., Buijzen M. (2020). Correcting misperceptions: The causal role of motivation in corrective science communication about vaccine and food safety. Science Communication, 42(1), 31–60. 10.1177/1075547019898256

- Vosoughi S., Roy D., Aral S. (2018). The spread of true and false news online. Science, 359(6380), 1146–1151. 10.1126/science.aap9559

- Vraga E. K., Bode L. (2020). Defining misinformation and understanding its bounded nature: Using expertise and evidence for describing misinformation. Political Communication, 37(1), 136–144. 10.1080/10584609.2020.1716500

- Zheng H., Jiang S., Rosenthal S. (2022). Linking online vaccine information seeking to vaccination intention in the context of the COVID-19 pandemic. Science Communication, 44(3), 320–346. 10.1177/10755470221101067

Supplementary Materials

Supplemental material, sj-docx-1-scx-10.1177_10755470221142304 for Investigating the Heterogeneity of Misperceptions: A Latent Profile Analysis of COVID-19 Beliefs and Their Consequences for Information-Seeking by Marlis Stubenvoll in Science Communication