Abstract

A panel sponsored by the American College of Medical Informatics (ACMI) at the 2021 AMIA Symposium addressed the provocative question: “Are Electronic Health Records dumbing down clinicians?” After reviewing electronic health record (EHR) development and evolution, the panel discussed how EHR use can impair care delivery. Both suboptimal functionality during EHR use and longer-term effects outside of EHR use can reduce clinicians’ efficiencies, reasoning abilities, and knowledge. Panel members explored potential solutions to problems discussed. Progress will require significant engagement from clinician-users, educators, health systems, commercial vendors, regulators, and policy makers. Future EHR systems must become more user-focused and scalable and enable providers to work smarter to deliver improved care.

Keywords: electronic health records; burnout, professional; burnout, psychological; documentation; HITECH act (American Recovery and Reinvestment Act); cognition

INTRODUCTION

Overview

At the 2021 AMIA Annual Symposium in San Diego, California, the American College of Medical Informatics (ACMI) sponsored a panel discussion entitled “Are Electronic Health Records dumbing down clinicians?” The current authors were the panel participants; GBM, as ACMI President, moderated the panel. While panelists do not believe that current trainees and practitioners are less intelligent or less engaged in critical thinking than previous generations, use of electronic health record (EHR) systems can diminish user abilities both acutely and chronically. Aspects of EHR use can impact clinicians’ skills, medical knowledge, and reasoning, reducing clinicians’ overall ability to render optimal care.

Background history

From 1965 to 2005, roughly a dozen academic clinical informatics groups pioneered de novo institutional EHRs.1 Commercial EHRs also emerged, often derived from academic systems.1 Academic home-grown EHRs were noteworthy for being highly responsive to local users’ needs, largely because their development teams included, or had collegial relationships with, local end users. These systems rapidly evolved in response to recognized problems and suggested optimizations. Compared to earlier paper-based care delivery methods, these pioneering EHRs improved clinical practice to be safer, more efficient, and more compliant with national guidelines (eg, References2–7).

Despite these positive outcomes, several critical perspectives were lost. Most evidence had been generated by a relatively small group of institutions. Studies reporting EHRs’ impacts on patient outcomes, as opposed to care delivery processes, were scarce. Early systems were highly diverse in initial designs, technical foundations, functional needs, and idiosyncratic methods of revision. System portability across sites was extremely difficult.

Nevertheless, by the early 2000s, enthusiastic advocacy by individuals, institutions, and national informatics organizations for wide-scale adoption of EHRs convinced the White House and Congress to incentivize widespread “meaningful use” of EHRs in hospitals and clinics. As a stimulus bill (ie, not infrastructure), the HITECH Act of 2009 used a combination of early financial incentives and subsequent penalties.8,9 Its goal was to improve clinical care through a nationwide, multi-billion-dollar EHR rollout program.

What had not been foreseen was that most US healthcare institutions did not have the capacity or skills required to implement, maintain, and optimize an EHR locally. The great majority of US healthcare delivery sites are smaller in size than the pioneering academic health centers and lacked informatics expertise. Most hospitals and clinics saw installation of commercial vendor-developed EHRs as the only viable path forward, despite the many drawbacks of such systems. The main advantage of the commercial systems was the support EHR vendors provided. However, commercial EHRs typically had more cumbersome user interfaces, were far less amenable to local control and evolution, and were very expensive to install.10

Inadvertently, HITECH sponsored adoption of often incompletely developed, inflexible, and poorly vetted commercial EHRs designed as “one size fits all” solutions.11 Compounding this, installation sites often had minimal sociotechnical readiness. Many EHR installations violated Bates’ “ten commandments” for clinical system effectiveness, including: “simple interventions work best,” “fit into the user’s workflow,” “anticipate needs and deliver in real time,” “speed is everything,” “ask for information only when you really need it,” “monitor impact, get feedback, and respond,” and “manage and maintain your knowledge-based systems.”12 The emphasis on billing functionality contained in commercial EHRs added to care providers’ burdens.

Adverse effects of HITECH-motivated installations are widely documented (eg, References8,13–16). While Halamka and Tripathi noted “…at a high level, the 2009 HITECH Act accomplished something miraculous: the vast majority of U.S. hospitals and physicians became active users of EHRs,”9 conversely, “…We lost the hearts and minds of clinicians … We tried to drive cultural change with legislation. In a sense, we gave clinicians suboptimal cars, didn’t build roads, and then blamed them for not driving.”9 Colicchio and Cimino constructed a list of unintended consequences of nationwide EHR adoption13: failed expectations due to unmet hype; inability to de-install expensive dysfunctional systems due to the penalties in time and money of doing so; widespread adoption of poorly tested systems; clinician burnout due to diminished patient contact time as a result of nonclinical activities involved in EHR use; and data obfuscation in uninformative, redundant, bloated clinical records accompanied by overwhelming burden of unnecessary alerts and reminders. They concluded that these unintended consequences have caused “potential safety hazards and led to delayed or incorrect decisions at the point of care.”13

HOW EHRS IMPAIR CLINICIANS

The panel provided examples of how EHR use can impair clinicians’ performance, along with important benefits. Many advantages of EHR use documented by early EHR developers2–6 exist in some form in today’s EHRs. Examples include drug allergy alerting, evidence-based order sets, availability of previous patient visit information, and care improvement analytics. Nevertheless, as described in this manuscript, today’s EHRs can diminish the net effectiveness of such benefits, ultimately impeding optimal clinician performance.

Situational clinician impairment during active use of EHRs

One clinician-panelist noted that end-users are reluctant to question advice (or lack thereof) from EHRs. Too many false positive alerts can de-condition clinicians’ abilities to respond to rare critical alerts. At one institution, up to 15% of drug orders triggered a drug–drug interaction (DDI) alert. Clinicians overrode 97% of these DDI alerts; of the remaining 3%, approximately half of the clinician-made changes were found to be harmful. The conclusion was that no measurable safety benefits from the DDI alert had accrued, and the institution retired the vendor-provided DDI solution and shifted to “home-grown” alerts that fire less frequently.

Another panelist detailed adverse effects of EHR use on nurses. A critical concern is that EHR use obscures the overall nursing narrative about the patient.17 Previously, nurses would serially chronicle the patient’s status and the care delivered in narrative nursing notes. These notes provided a “gestalt” (an organized whole that is perceived as more than the sum of its parts), allowing others to develop a mental model of the patient’s problems and an understandable account of what happened, particularly at the end of a shift. Current EHRs encourage nurses to enter data via flowsheets that contain hundreds of data entry cells. Flowsheets are time-consuming to complete, require significant scrolling, may not be relevant to the current patient, and do not easily summarize the overall, coherent story of what happened,17 ultimately making it difficult to discern what clinically worked and what did not. Another bothersome aspect of nursing EHR documentation is the excessive number of reminders, which stem from the concern that nurses (especially less experienced ones) will miss important parts of care delivery. All nurses then experience a dehumanizing and mentally exhausting panoply of reminders, many of which are unnecessary. At one large institution, of an astounding 739 000 interruptive EHR-generated alerts (stopping workflow until a response was entered) over 3 months, only 4% were acted upon as valid. Of another 1.3 million non-interruptive alerts (declarative statements about the patient’s condition that might merit attention), only 2.5% resulted in an action. The process of admitting a new inpatient requires a nurse to perform 400–500 clicks,18 illustrative of the overburden of work taking nurses away from more personalized bedside care. Nationally, there are 4 million registered nurses and 1 million licensed practical nurses. Freeing the 60–90 min per day that nurses now spend on cumbersome, unproductive EHR chores would create sufficient time to transform healthcare delivery locally and nationally, enabling more value-added activities.
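The alert figures reported above can be restated as absolute counts to show how few alerts led to action. The following is a minimal sketch that uses only the totals and percentages quoted in the text; the function and variable names are hypothetical, and rounding to whole alerts is an assumption.

```python
def alerts_acted_on(total_alerts: int, action_rate: float) -> int:
    """Return the number of alerts that led to an action, rounded to whole alerts."""
    return round(total_alerts * action_rate)


# Figures quoted in the text for one large institution over 3 months.
interruptive_total = 739_000
interruptive_acted = alerts_acted_on(interruptive_total, 0.04)

non_interruptive_total = 1_300_000
non_interruptive_acted = alerts_acted_on(non_interruptive_total, 0.025)

# Alerts that interrupted or informed the nurse without resulting in any action.
interruptive_unused = interruptive_total - interruptive_acted
non_interruptive_unused = non_interruptive_total - non_interruptive_acted

print(f"Interruptive: {interruptive_acted:,} acted on, {interruptive_unused:,} not")
print(f"Non-interruptive: {non_interruptive_acted:,} acted on, {non_interruptive_unused:,} not")
```

On these figures, roughly 29 560 interruptive alerts were acted upon, while about 709 440 stopped a nurse's workflow without producing an action.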

Two clinician-panelists described how EHRs often obscure important patient-related information. For example, chart review can be like “flying blind” while trying to locate particular notes or specific information in a myriad of confusing, poorly categorized EHR entries and menus. Especially during face-to-face patient interactions, this can make clinicians seem less competent and erode trust. Even worse, it can potentially result in patient harm. As part of a quality control activity, the panelist who was an administrative leader would audit charts of inpatient mortalities to determine whether the cause of death was expected, or unexpected and potentially preventable. The auditor observed that, per previous reports,19 clinicians using EHRs frequently copied and pasted previous days’ notes and examinations with minimal changes each day, ultimately resulting in confusing and confounding documentation.

For example, admission notes provide early clinical impressions regarding potential diagnoses and treatment. Those impressions can be wrong. As actual diagnoses become clearer, the plan of action becomes more refined. When aspects of the admission note are carried forward with minimal changes, diagnosis refinement and revised therapeutic planning may be lost. When a chart mentioned heart failure as a past or ongoing diagnosis, clinicians frequently assumed that the patient had left-sided failure and treated the patient accordingly, despite half of echocardiogram reports in the “heart failure” patients indicating normal left ventricular function.20 Those patients had chronic obstructive pulmonary disease with right heart failure and required different therapies. Yet, the diagnosis of chronic heart failure persisted in the notes and, often, in patient treatments.

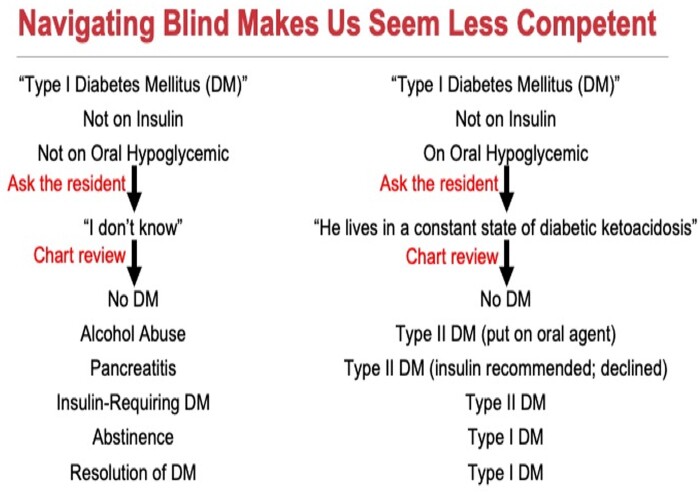

Another panelist described how 2 patients at their institution had been labeled in the EHR as having Type I (insulin-dependent) diabetes mellitus over serial hospital admissions. Despite the diagnostic labels, one patient was not on insulin or oral hypoglycemic agents (treatments for Type I and Type II diabetes, respectively) and the other patient was on oral hypoglycemic agents alone (the wrong therapy for Type I diabetes) (Figure 1). The first patient had previously suffered a severe bout of pancreatitis which transiently induced Type I diabetes, which resolved. The diagnosis of Type I diabetes carried forward over the years. The other patient long ago was correctly diagnosed with Type II diabetes and started on oral hypoglycemic agents appropriately. However, a transcription error changed the past history of “Type II diabetes” to “Type I diabetes.” The incorrect diagnosis remained for many years, even though the patient was on the correct therapy for Type II diabetes. Both panelists noted that physician-users of EHRs experience problems and distractions, including excess, burdensome, unhelpful reminders, that foster these types of errors.

Figure 1.

Lack of attention to EHR details can obfuscate patients’ histories. EHR: electronic health record.

EHR use can lead to errors of commission (users accepting bad or inappropriate system-generated advice) and errors of omission (users assuming nothing is wrong if the system does not issue a warning). One panelist described clinical decision support that was not up to date on the latest preventive guidelines, where clinicians did not question the recommendations. Conversely, inadequate algorithms for detecting and preventing drug toxicity can fail to warn the clinician and harm patients. Some EHR systems fail to detect potential acetaminophen overconsumption when acetaminophen and other combination medications containing acetaminophen are prescribed. Thus, the system may not block concurrent administration of potentially toxic doses of acetaminophen.
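The acetaminophen example can be made concrete with a minimal sketch of a cumulative-dose check. It sums a single ingredient across all active orders, including combination products, before comparing the total against a limit. The `MedicationOrder` structure, the order values, and the 4000 mg daily limit are illustrative assumptions, not clinical guidance and not any vendor's implementation.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class MedicationOrder:
    """Hypothetical order record; field names are assumptions for illustration."""
    name: str
    ingredients_mg_per_dose: Dict[str, float]  # ingredient name -> mg in one dose
    doses_per_day: float


def total_daily_mg(orders: List[MedicationOrder], ingredient: str) -> float:
    """Sum the daily amount of one ingredient across every order that contains it."""
    return sum(
        order.ingredients_mg_per_dose.get(ingredient, 0.0) * order.doses_per_day
        for order in orders
    )


def exceeds_daily_limit(orders: List[MedicationOrder], ingredient: str, limit_mg: float) -> bool:
    """True when the combined daily amount of the ingredient is above the limit."""
    return total_daily_mg(orders, ingredient) > limit_mg


# Assumed limit for illustration only; a real system would take this from curated knowledge.
ILLUSTRATIVE_LIMIT_MG = 4000.0

orders = [
    MedicationOrder("acetaminophen", {"acetaminophen": 1000.0}, 3),
    MedicationOrder(
        "hydrocodone-acetaminophen",
        {"hydrocodone": 5.0, "acetaminophen": 325.0},
        6,
    ),
]

combined = total_daily_mg(orders, "acetaminophen")
flagged = exceeds_daily_limit(orders, "acetaminophen", ILLUSTRATIVE_LIMIT_MG)
print(f"Combined acetaminophen: {combined:.0f} mg/day, flagged: {flagged}")
```

In this sketch neither order exceeds the assumed limit on its own, but the combined total does, which is the situation a per-order check would miss.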

Long-lasting adverse EHR effects on clinicians (beyond EHR use)

Two authors noted that a decades-old, standalone diagnostic aid used previously at their institution contained 1800 possible descriptors for history and physical examination (H&P) findings. A 2017 analysis of the medical service admission H&P note templates at the same hospital found that the templates facilitated entry of a maximum of 360 possible descriptors, suggesting that exam results were less detailed (and potentially less thorough). Although clinicians could painstakingly add information, those additions would be hidden from subsequent routine view by default and would require extra effort to uncover or reuse.

Using a “gold standard” set of 20 000 possible patient H&P-related descriptors from various sources, including local sources, the National Library of Medicine’s Unified Medical Language System, WordNet synonyms (consortium led by Princeton University), and clinical knowledge from anonymized EHR notes, the 2 authors found that from 1996 to 2009 the average number of “gold standard” non-negated meaningful clinical terms used in an admission H&P note steadily decreased by 25% (430 [1996–1998] to 320 [2007–2009]). They also analyzed adult patient clinical descriptions in New England Journal of Medicine Clinicopathological Conference notes, using the same “gold standard” descriptors. Again, there was a 25% decrease in the number of non-negated descriptors used per case (670 descriptors [1965–1978] to 500 [2001–2014]). This trend produces clinical documents with less information granularity, potentially lacking the richness necessary for clinician-users to fully understand what is happening with their patients.

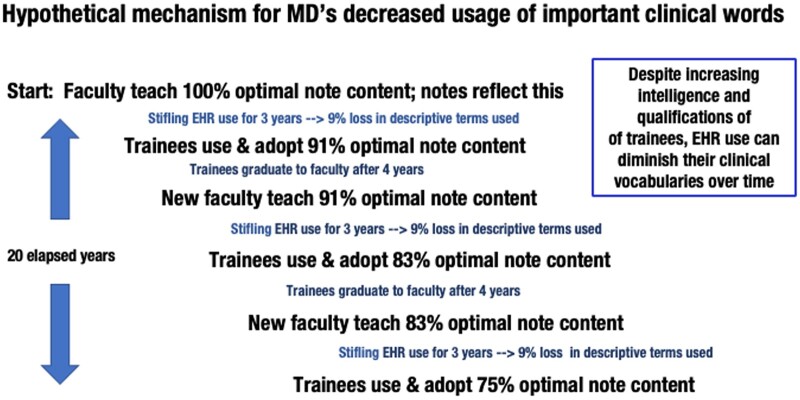

The study authors posited that medical trainees’ clinical vocabularies could have diminished in the manner observed. If, 20 years ago, faculty clinicians used a 100% complete set of clinical descriptors in their handwritten notes and after EHR adoption patient descriptions had 9% fewer descriptors, the limited 91% subset of terms becomes the new norm for the next generation of trainees. As seen in Figure 2, the cyclic reduction in clinicians’ descriptors repeats every 4 years as trainees become new faculty. Diminished patient descriptors could adversely impact clinicians’ ability to recognize disease presentations and reason diagnostically.

Figure 2.

Postulated mechanism for narrowing of clinicians’ descriptive vocabularies over time.
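The postulated mechanism can be illustrated with a short calculation. The sketch below assumes, purely for illustration, that each 4-year training cycle retains 91% of the previous cycle's descriptors (the 9% figure from the example above) and that the losses compound from cycle to cycle. The function name and the fixed per-cycle loss are assumptions, not part of the panel's analysis.

```python
CYCLE_YEARS = 4  # length of one trainee-to-faculty cycle, as described in the text


def remaining_fraction(cycles: int, loss_per_cycle: float = 0.09) -> float:
    """Fraction of the original descriptor vocabulary left after the given number of cycles.

    Assumes the same proportional loss in every cycle (an illustrative simplification).
    """
    if cycles < 0:
        raise ValueError("cycles must be non-negative")
    return (1.0 - loss_per_cycle) ** cycles


for cycles in range(6):
    years = cycles * CYCLE_YEARS
    print(f"after {years:2d} years: {remaining_fraction(cycles):.1%} of descriptors remain")
```

Under these assumptions, three cycles (12 years) leave about 75% of the original vocabulary, which is of the same order as the roughly 25% decline in admission H&P descriptors reported between 1996–1998 and 2007–2009.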

One clinician-panelist noted that individuals who are trained in critical care procedures, such as emergency intubation of patients, lose those abilities over time if they are not practiced regularly. Similarly, manual routine ordering of total parenteral nutrition (TPN) was replaced by an automated, error-correcting TPN EHR ordering system, which reduced TPN ordering errors by 90% compared to paper-based TPN ordering. One day, the clinician used a paper-based TPN ordering form but was unable to complete manual TPN ordering without the assistance of ancillary staff. Thus, even use of exemplary EHR solutions can lead to loss of clinicians’ manual skills previously required in the paper world. This can become dangerous during system downtimes or when clinicians see patients in unfamiliar hospitals or clinic settings.

Several clinician-panelists gave examples of how current EHR configurations affect trainees, decreasing their ability to participate in care. In teaching hospitals prior to EHR implementation, medical students could write orders in patient charts. Students’ orders were annotated not to be acted upon until countersigned by a qualified physician. When EHRs were introduced into teaching hospitals, many sites forbade medical students from entering orders without mechanisms to ensure that a supervisory physician would sign off on the orders in a timely manner. (While unsigned orders had been a problem prior to EHR implementation, it was now a problem that was no longer hidden in paper stacks in medical record departments but could be queried simply in the EHR, increasing compliance risks for the Joint Commission.) Medical students thus lost the valuable educational experience of writing orders that could be discussed and corrected by their supervisors.

When a care team makes rounds with supervisors and trainees glued to individual computer screens, fewer spontaneous learning opportunities occur than when everyone faces one another in a group discussion. Opportunities to discuss with trainees the underlying reasons for the EHR-generated advice may also be lost. Overall, trainees lose valuable experiences in synthesizing information in complex settings.

POTENTIAL SOLUTIONS

Panelists discussed important opportunities and directions for next-generation EHRs. Proposed improvements broadly fell into 4 categories: (1) institutional and end-user readiness and competency, (2) EHR design and capabilities, (3) regulatory policies and closer healthcare system-vendor partnerships, and (4) decoupling clinical documentation from billing and regulatory requirements so that clinical notes contain only that information necessary to care for the patient. A range of stakeholders must design and revise future EHR systems for success: healthcare providers, informaticians, educators, health system operations, EHR vendors, and policy makers including the federal Office of the National Coordinator for Health Information Technology (ONC).

Greater readiness and competency for future EHR users includes better EHR training. Simulations can play a key role. For example, teaching about EHR downtimes using simulations could be critical in preparing for actual events, especially as hospital ransomware attacks increase and prolonged downtimes become more frequent.21 Downtime readiness activities should be complemented by well-built policies, procedures, and alternative methods for record-keeping and order transmission.

Undergraduate and post-graduate medical education also has an important role in teaching clinicians about EHR deficiencies and encouraging trainees to be part of the solution for making EHR systems better. Some healthcare systems are implementing programs to improve functionality using crowd-sourcing approaches—so-called “Getting Rid of Stupid Stuff in the EHR” initiatives.22 Educational approaches can help by re-emphasizing the scope of information obtainable during medical school physical diagnosis courses and introducing a broad range of descriptors that expand trainees’ considerations. Coupled with this is a need for improved documentation tools. Academic investigators could also develop metrics to demonstrate the efficiency and breadth of patient-related information capture.

Ultimately, EHRs must have more efficient mechanisms for data capture (eg, ambient technology for clinical record capture) and extraction (eg, natural language processing). Several prototypes now exist whereby patient records are created unobtrusively as a byproduct of care delivery. One panelist also recognized the opportunity to use the data captured during care delivery as an important resource to improve the design of EHRs and to optimize system navigation with more useful workflows.

Panelists noted that ONC and certified health information technology processes play critical roles in enacting broad improvements to present-day EHRs. Current EHR certification processes do not adequately measure important real-time human–computer interaction factors. Future criteria should ideally evaluate dynamic user interface qualities, efficiency of use, and user satisfaction. Measures should include the number of clicks required to accomplish a given task, time to document, time to order, and time to detect critically important clinical information stored within EHRs. System certification should measure EHRs’ abilities to minimize redundant or less valuable documentation and maximize the presentation of information. Similarly, a national-standard grading scale that could be applied to measure the urgency and criticality of a given alert might help to reduce alert fatigue by diminishing interruptive alerting. Such a scale might look like: 5 = patient will die if alert not heeded; 4 = some patients will experience significant morbidity or mortality if alert not heeded; 3 = non-life-threatening adverse consequences likely if alert not heeded, … etc.
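One way such a scale could be represented in software is sketched below. Only levels 5, 4, and 3 are defined in the text, so the sketch encodes only those. The enum member names, the `should_interrupt` function, and the default threshold of 4 are hypothetical choices for illustration, not a proposed standard.

```python
from enum import IntEnum


class AlertSeverity(IntEnum):
    """Levels taken from the example scale in the text; lower levels are left undefined."""
    ADVERSE_CONSEQUENCES_LIKELY = 3          # non-life-threatening adverse consequences likely
    SIGNIFICANT_MORBIDITY_OR_MORTALITY = 4   # some patients will experience significant harm
    PATIENT_WILL_DIE = 5                     # patient will die if alert not heeded


def should_interrupt(
    severity: AlertSeverity,
    threshold: AlertSeverity = AlertSeverity.SIGNIFICANT_MORBIDITY_OR_MORTALITY,
) -> bool:
    """Interrupt the workflow only for alerts at or above the threshold (assumed policy)."""
    return severity >= threshold


for level in sorted(AlertSeverity, reverse=True):
    mode = "interruptive" if should_interrupt(level) else "non-interruptive"
    print(f"{int(level)} {level.name}: {mode}")
```

With a shared numeric scale, each institution could set its own interruption threshold while alerts remain comparable across sites and vendors.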

These proposed changes are a necessary part of EHR evolution. They are the beginning components of a call to action that will transform EHRs. Future EHRs must embed a greater degree of intelligence and support team-based care and new care models. They must support clinicians’ abilities to record and find pertinent information quickly, so that care providers can make better decisions more efficiently.23 Informaticians are uniquely positioned to partner with policymakers, commercial vendors, and clinician users to develop, implement, and optimize these solutions.

AUTHOR CONTRIBUTIONS

All authors made substantial contributions to the conception and design of the work, drafting the work and critically revising it, final approval of the version published, and agreed to be accountable for all aspects of the work.

ACKNOWLEDGMENTS

The authors would like to acknowledge the American Medical Informatics Association for the opportunity to present these perspectives as a panel at the 2021 Annual Symposium of the American Medical Informatics Association in San Diego, California as well as Elizabeth Lindemann, MHA, for assistance in audio-recording the panel presentation.

CONFLICT OF INTEREST STATEMENT

None declared.

Contributor Information

Genevieve B Melton, Department of Surgery, University of Minnesota, Minneapolis, Minnesota, USA; Center for Learning Health System Sciences, University of Minnesota, Minneapolis, Minnesota, USA; Institute for Health Informatics, University of Minnesota, Minneapolis, Minnesota, USA.

James J Cimino, Department of Medicine, University of Alabama at Birmingham, Birmingham, Alabama, USA; Informatics Institute, University of Alabama at Birmingham, Birmingham, Alabama, USA; Center for Clinical and Translational Science, University of Alabama at Birmingham, Birmingham, Alabama, USA.

Christoph U Lehmann, Department of Pediatrics, University of Texas Southwestern Medical Center, Dallas, Texas, USA; Department of Population & Data Sciences, University of Texas Southwestern Medical Center, Dallas, Texas, USA; Lyda Hill Department of Bioinformatics, University of Texas Southwestern Medical Center, Dallas, Texas, USA; Clinical Informatics Center, University of Texas Southwestern Medical Center, Dallas, Texas, USA.

Patricia R Sengstack, School of Nursing, Vanderbilt University, Nashville, Tennessee, USA; Frist Nursing Informatics Center, Vanderbilt University, Nashville, Tennessee, USA.

Joshua C Smith, Department of Biomedical Informatics, Vanderbilt University, Nashville, Tennessee, USA.

William M Tierney, Richard M. Fairbanks School of Public Health, Indiana University, Indianapolis, Indiana, USA; Department of Population Health, University of Texas at Austin Dell Medical School, Austin, Texas, USA.

Randolph A Miller, Department of Biomedical Informatics, Vanderbilt University, Nashville, Tennessee, USA.

Data Availability

There is no primary dataset.

REFERENCES

- 1. Collen MF, Miller RA. The early history of hospital information systems for inpatient care in the United States. In: Collen MF, Ball MJ, eds. The History of Medical Informatics in the United States. London: Springer-Verlag; 2015.

- 2. Gardner RM, Pryor TA, Warner HR. The HELP hospital information system: update 1998. Int J Med Inform 1999; 54 (3): 169–82.

- 3. Teich JM, Glaser JP, Beckley RF, et al. The Brigham integrated computing system (BICS): advanced clinical systems in an academic hospital environment. Int J Med Inform 1999; 54 (3): 197–208.

- 4. McDonald CJ, Overhage JM, Tierney WM, et al. The Regenstrief Medical Record System: a quarter century experience. Int J Med Inform 1999; 54 (3): 225–53.

- 5. Brown SH, Lincoln MJ, Groen PJ, et al. VistA–U.S. Department of Veterans Affairs national-scale HIS. Int J Med Inform 2003; 69 (2–3): 135–56.

- 6. Bates DW, Leape LL, Cullen DJ, et al. Effect of computerized physician order entry and a team intervention on prevention of serious medication errors. JAMA 1998; 280 (15): 1311–6.

- 7. Chaudhry B, Wang J, Wu S, et al. Systematic review: impact of health information technology on quality, efficiency, and costs of medical care. Ann Intern Med 2006; 144 (10): 742–52.

- 8. HITECH Act of 2009, 42 USC sec 139w-4(0)(2) (February 2009), sec 13301, subtitle B: Incentives for the Use of Health Information Technology.

- 9. Halamka J, Tripathi M. The HITECH era in retrospect. N Engl J Med 2017; 377 (10): 907–9.

- 10. Boonstra A, Broekhuis M. Barriers to the acceptance of electronic medical records by physicians from systematic review to taxonomy and interventions. BMC Health Serv Res 2010; 10: 231.

- 11. Koppel R, Lehmann CU. Implications of an emerging EHR monoculture for hospitals and healthcare systems. J Am Med Inform Assoc 2015; 22 (2): 465–71.

- 12. Bates DW, Kuperman GJ, Wang S, et al. Ten commandments for effective clinical decision support: making the practice of evidence-based medicine a reality. J Am Med Inform Assoc 2003; 10 (6): 523–30.

- 13. Colicchio TK, Cimino JJ, Del Fiol G. Unintended consequences of nationwide electronic health record adoption. J Med Internet Res 2019; 21 (6): e13313.

- 14. Ash JS, Gorman PN, Seshadri V, et al. Computerized physician order entry in U.S. hospitals: results of a 2002 survey. J Am Med Inform Assoc 2004; 11 (2): 95–9.

- 15. Campbell EM, Sittig DF, Ash JS, et al. Types of unintended consequences related to computerized provider order entry. J Am Med Inform Assoc 2006; 13 (5): 547–56.

- 16. Massaro TA. Introducing physician order entry at a major academic medical center: II. Impact on medical education. Acad Med 1993; 68 (1): 25–30.

- 17. Varpio L, Rashotte J, Day K, King J, Kuziemsky C, Parush A. The EHR and building the patient’s story: a qualitative investigation of how EHR use obstructs a vital clinical activity. Int J Med Inform 2015; 84 (12): 1019–28.

- 18. Swietlik M, Sengstack P. An evaluation of nursing admission assessment documentation to identify opportunities for burden reduction. J Inform Nurs 2020; 5 (3): 6–11.

- 19. Tsou AY, Lehmann CU, Michel J, Solomon R, Possanza L, Gandhi T. Safe practices for copy and paste in the EHR. Systematic review, recommendations, and novel model for health IT collaboration. Appl Clin Inform 2017; 26 (01): 12–34.

- 20. Rosenman M, He J, Martin J, et al. Database queries for hospitalizations for acute congestive heart failure: flexible methods and validation based on set theory. J Am Med Inform Assoc 2014; 21 (2): 345–52.

- 21. Slayton TB. Ransomware: the virus attacking the healthcare industry. J Leg Med 2018; 38 (2): 287–311.

- 22. Ashton M. Getting rid of stupid stuff. N Engl J Med 2018; 379 (19): 1789–91.

- 23. Glaser J. It’s Time for a New Kind of Electronic Health Record. Boston, MA: Harvard Business Review; 2020.
