PURPOSE:

Despite evidence of clinical benefits, widespread implementation of remote symptom monitoring has been limited. We describe a process of adapting a remote symptom monitoring intervention developed in a research setting to a real-world clinical setting at two cancer centers.

METHODS:

This formative evaluation assessed core components and adaptations to improve acceptability and fit of remote symptom monitoring using Stirman's Framework for Modifications and Adaptations. Implementation outcomes were evaluated in pilot studies at the two cancer centers testing technology (phase I) and workflow (phase II and III) using electronic health data; qualitative evaluation with semistructured interviews of clinical team members; and capture of field notes from clinical teams and administrators regarding barriers and recommended adaptations for future implementation.

RESULTS:

Core components of remote symptom monitoring included electronic delivery of surveys with actionable symptoms, patient education on the intervention, a system to monitor survey compliance in real time, the capacity to generate alerts, training nurses to manage alerts, and identification of personnel responsible for managing symptoms. In the pilot studies, while most patients completed > 50% of expected surveys, adaptations were identified to address barriers related to workflow challenges, patient and clinician access to technology, digital health literacy, survey fatigue, alert fatigue, and data visibility.

CONCLUSION:

Using an implementation science approach, we facilitated adaptation of remote symptom monitoring interventions from the research setting to clinical practice and identified key areas to promote effective uptake and sustainability.

INTRODUCTION

Remote symptom monitoring facilitated by electronic patient-reported outcomes (ePROs) has demonstrated clinical benefits in multiple randomized clinical efficacy trials.1-3 In the pivotal trial by Basch et al, patients with metastatic solid tumors receiving remote symptom monitoring experienced significantly improved symptom control, quality of life, length of time on treatment, hospitalization rates, and overall survival when compared with standard of care.1,2 Similarly, Denis et al conducted a randomized clinical trial of patients with lung cancer randomly assigned to ePRO remote symptom monitoring versus standard of care, observing a substantial and significant median survival benefit with remote symptom monitoring.3 These studies led to the development of the PRO-TECT trial, which implemented ePROs in 26 community oncology practices.4 The intervention was well received: 79% of nurses reported information was helpful in documentation, 84% noted increased efficiency of patient discussions, and 75% reported the information was useful for patient care. Furthermore, 91% of oncologists found the ePRO information useful and 65% used ePROs in decision making.4 On the basis of this encouraging evidence, incorporation of ePROs, such as remote symptom monitoring, is included as a practice transformation activity for Medicare's Enhancing Oncology Model5 and in the Oncology Medical Home standards released jointly by the American Society of Clinical Oncology and the Community Oncology Alliance.6

However, widespread implementation of remote symptom monitoring with ePROs has been limited, and the intervention remains underutilized outside of highly controlled research trials. In real-world settings, barriers exist for implementation at technical and workflow levels (eg, time constraints and interpretation of data).7 It is important to maintain core components of interventions, which are the functions or principles necessary to provide the desired outcomes.8 At the same time, adaptations are inevitably needed for integration into standard of care. Adaptations are specific, planned, and purposeful changes to the intervention that enhance acceptability and fit to a local context.8 Documentation of core components and adaptations for successful implementation is essential to support scale-up and spread efforts. The objective of this study is to describe the core components of our remote symptom monitoring intervention, the process of adapting this intervention for uptake in real-world settings, and key adaptations made to facilitate acceptability and fit for use in routine clinical practice.

METHODS

Design

This formative evaluation assessed core components and adaptations to improve acceptability and fit of remote symptom monitoring using Stirman's Framework for Modifications and Adaptations. Formative evaluation includes strategic modification of interventions before implementation into practice.9 Our formative evaluation included three phases. In phase I, the primary goal was to assess barriers to use of the technology platform (Carevive, Boston, MA) used to deliver the remote symptom monitoring intervention. In phase II, the primary goal was to identify workflow barriers and adaptations. Phase III included early implementation across all cancer types, during which additional adaptations were made. The duration of patient engagement was 6 weeks, 3-6 months, and 6 months for phase I, II, and III, respectively. This work was approved by the University of Alabama at Birmingham (UAB) Institutional Review Board for phases I-III and the University of South Alabama for phase III. Staff informed consent was implied by participation in interviews. All ePROs were implemented as standard of care; thus, patients did not provide informed consent. Adaptations were made and tracked as part of a quality improvement initiative.

Setting

This study was conducted at UAB and at the University of South Alabama Mitchell Cancer Institute (MCI). UAB and MCI are located in Alabama, which has a large number of census block groups with high area deprivation index scores, reflecting the low socioeconomic status of the state.10 These institutions serve a population that includes 25% Black patients, 30% rural residents, and 10% of people who are socioeconomically disadvantaged (dual eligible for Medicare and Medicaid). UAB and MCI are the only two academic medical centers in Alabama.

Remote Symptom Monitoring Intervention

The remote symptom monitoring intervention implemented at UAB and MCI is consistent with the evidence-based intervention shown to be effective by Basch et al1,2 and with the European Society of Medical Oncology clinical practice guidelines.11 Our process includes a navigator or care coordinator explaining the rationale for remote symptom monitoring to patients and guiding them through the technical aspects of enrollment and participation (eg, setting up an account and how to complete surveys). Patients complete a weekly remote symptom assessment using the ePRO version of the Common Terminology Criteria for Adverse Events (PRO-CTCAE; Fig 1).12 If a patient reports one or more symptoms meeting a predefined threshold or reports a change in symptoms, an automated alert is sent to a nurse who calls the patient, coordinates care, and communicates with the physician as needed. Data from remote symptom monitoring are available for clinicians in clinic as part of a dashboard in the electronic health record (EHR) for treatment decisions.
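The alert rule described above (a symptom meeting a predefined threshold, or a change in symptoms) can be sketched as follows. This is only an illustration under stated assumptions: the manuscript does not report its threshold values, so `SEVERITY_THRESHOLD`, `WORSENING_DELTA`, and the function name are hypothetical, and item scores are assumed to be integers on a 0-4 scale.

```python
from typing import Dict, List, Optional

# Hypothetical values: the manuscript does not state its alert thresholds.
SEVERITY_THRESHOLD = 3  # assumed cut-off on a 0-4 item score
WORSENING_DELTA = 2     # assumed week-over-week increase treated as a "change"


def symptoms_to_alert(
    current: Dict[str, int],
    previous: Optional[Dict[str, int]] = None,
) -> List[str]:
    """Return the symptoms that would trigger a nurse alert under the assumed rules."""
    flagged = []
    for symptom, score in current.items():
        meets_threshold = score >= SEVERITY_THRESHOLD
        prior = previous.get(symptom) if previous else None
        worsened = prior is not None and score - prior >= WORSENING_DELTA
        if meets_threshold or worsened:
            flagged.append(symptom)
    return sorted(flagged)


if __name__ == "__main__":
    this_week = {"pain": 3, "nausea": 2, "fatigue": 1}
    last_week = {"pain": 3, "nausea": 0, "fatigue": 1}
    print(symptoms_to_alert(this_week, last_week))
```

In this sketch, a symptom that stays at the same high score every week keeps alerting, which is the kind of behavior the nurses later described as a source of non-actionable alerts for chronic symptoms.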

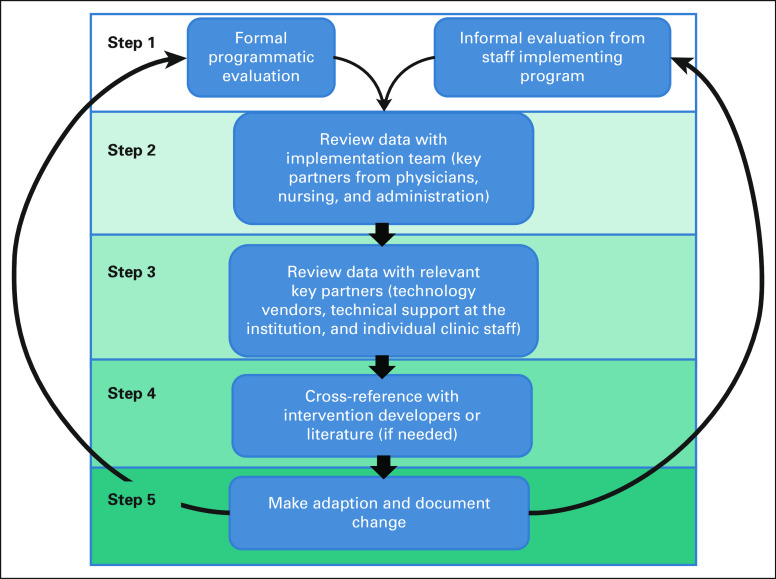

FIG 1.

Process for identifying and implementing intervention adaptations.

Integration Into the Electronic Medical Record

To facilitate workflow, remote symptom monitoring was increasingly integrated into the EHR over the course of the three phases. In phase I, a link to the technology platform was included in the EHR. In phase II, the dashboard was integrated into the patient's individual record, and alert features were modified so that each alert appeared as a result in the message center inbox of the nurse designated to respond to alerts for that patient. In phase III, additional minor modifications were made to data visibility and alert closure options.

Process for Identifying Core Components

The team that conducted the randomized clinical trials (E.M.B. and A.M.S.) reviewed the detailed protocols from the trials1,2 and the intervention deployed as part of this implementation. The intervention developers identified core components necessary for fidelity, which were reviewed with the implementation team and documented.

Implementation Science Frameworks

The updated Framework for Modifications and Adaptations (FRAME) by Stirman et al provides a robust mechanism for reporting on adaptations to the intervention that occur in the implementation process. This framework includes eight components, which are used to characterize recommended adaptations: (1) when and how in the implementation process the modification was made, (2) whether the modification was planned, (3) who determined the modification should be made, (4) what was modified, (5) at what level of delivery the modification was made, (6) type or nature of context- or content-level modifications, (7) whether the modification was fidelity-consistent, and (8) reasons for the modification.13 We tracked these modifications in real time.
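For teams that want to log adaptations in a structured way, the eight FRAME components listed above map naturally onto a single record type. The sketch below is one possible representation, not the tracking tool used in this study; the class name, field names, and helper are hypothetical. The example entry uses only details stated in this manuscript (the 6-month survey duration decision) and leaves components that the text does not specify as `None` rather than guessing.

```python
from dataclasses import dataclass, fields
from typing import List, Optional


@dataclass(frozen=True)
class FrameRecord:
    """One adaptation, described by the eight FRAME components (hypothetical field names)."""
    when_and_how: str                     # (1) when and how the modification was made
    planned: Optional[bool]               # (2) whether it was planned
    decided_by: str                       # (3) who determined it should be made
    what_modified: str                    # (4) what was modified
    delivery_level: Optional[str]         # (5) level of delivery
    nature: Optional[str]                 # (6) type or nature of the modification
    fidelity_consistent: Optional[bool]   # (7) fidelity-consistent or not
    reasons: str                          # (8) reasons for the modification


def missing_components(record: FrameRecord) -> List[str]:
    """List the components that still need to be documented for this record."""
    return [f.name for f in fields(record) if getattr(record, f.name) is None]


# Illustrative entry; None marks components not specified in the text.
survey_duration = FrameRecord(
    when_and_how="before phase III rollout",
    planned=None,
    decided_by="cancer center administration",
    what_modified="survey duration (6 months instead of 12)",
    delivery_level=None,
    nature=None,
    fidelity_consistent=None,
    reasons="extended treatment duration; alignment with 6-month payment episodes",
)

if __name__ == "__main__":
    print(missing_components(survey_duration))
```

Keeping undocumented components explicit makes it easy to flag incomplete records during a periodic review.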

Process for Adaptation

The adaptation process is shown in Figure 1. Step 1 included both formal and informal evaluation of remote symptom monitoring implementation. Step 2 involved reviewing feedback with the implementation team. Any potential modifications were reviewed with relevant key partners (step 3) and cross-referenced with the intervention developers (Principal Investigator of the randomized trials) when needed (step 4). When consensus was reached, the adaptation was made and documented (step 5). Finally, the implementation team regrouped with key partners to assess whether the adaptation addressed the intended barrier (step 6). Key adaptations from the pilots and early standard-of-care implementation are described using FRAME.

Formative Evaluation

Quantitative evaluation.

Quantitative evaluation conducted during pilot studies (phase I and phase II) included review of patient demographics and implementation outcomes.14 These outcomes included service penetrance (% of patients agreeing to participate in the pilot studies), compliance (% of surveys completed), completion (% of questions completed within opened surveys), and provider adoption (% alert closure).15 Implementation outcomes were calculated from data abstracted from the EHR and focused on feasibility and acceptability.
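The four implementation outcomes defined above are simple proportions, and a short sketch makes the numerators and denominators explicit. The class and function names are hypothetical. In the example, the approached and enrolled counts (23 and 20) are the phase I figures reported in this manuscript; every other count is made up purely to exercise the calculation.

```python
from dataclasses import dataclass
from typing import Dict


def pct(part: int, whole: int) -> float:
    """Percentage rounded to one decimal place; rejects a non-positive denominator."""
    if whole <= 0:
        raise ValueError("denominator must be positive")
    return round(100.0 * part / whole, 1)


@dataclass
class PilotCounts:
    approached: int           # patients approached for participation
    enrolled: int             # patients who agreed to participate
    surveys_expected: int     # surveys scheduled for enrolled patients
    surveys_completed: int    # surveys actually completed
    questions_in_opened: int  # questions contained in opened surveys
    questions_answered: int   # questions answered within opened surveys
    alerts_generated: int     # alerts sent to the clinical team
    alerts_closed: int        # alerts closed by the clinical team


def implementation_outcomes(c: PilotCounts) -> Dict[str, float]:
    """Compute the four outcomes as defined in the Methods."""
    return {
        "service_penetrance": pct(c.enrolled, c.approached),
        "compliance": pct(c.surveys_completed, c.surveys_expected),
        "completion": pct(c.questions_answered, c.questions_in_opened),
        "provider_adoption": pct(c.alerts_closed, c.alerts_generated),
    }


if __name__ == "__main__":
    # 23 and 20 come from phase I; all remaining counts are hypothetical.
    example = PilotCounts(23, 20, 120, 102, 1000, 940, 50, 50)
    print(implementation_outcomes(example))
```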

Qualitative evaluation.

Qualitative evaluation was conducted using semistructured interviews with nurse navigators (ie, care coordinators), physicians, and administrative leaders between phase I and phase II. All participants provided informed consent. Interviews were audio-recorded, transcribed verbatim, and deidentified. Using content analysis,16 the study Principal Investigator (G.R.) and two medical students (H.B.T. and F.R.L.) independently coded the transcripts in NVivo using an inductive approach to identify barriers, facilitators, and recommended adaptations to remote symptom monitoring that would increase acceptability or fit of the intervention. All three coders reviewed and discussed themes and exemplary quotes until consensus was reached.

In addition to the formal evaluation, the implementation team captured clinical team and administrator feedback on barriers and suggested adaptations as informal field notes throughout the course of all three implementation phases. Field notes included discussions occurring during and between clinical team meetings in the form of verbal feedback. Field notes were reviewed for key themes by the investigative team.

RESULTS

Identification of Core Components

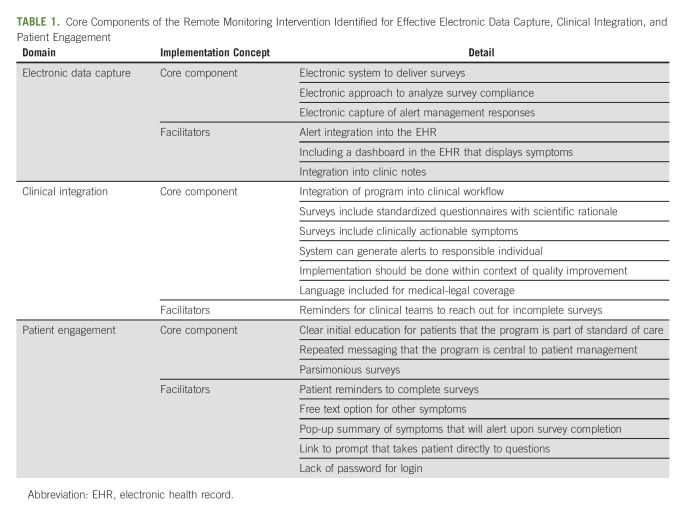

The results of the analysis identifying core components of remote symptom monitoring with ePROs in original efficacy and effectiveness research evaluation are shown in Table 1. Although a flexible system that allows for multiple modalities to collect surveys is ideal, the surveys should be able to be completed at home. Remote symptom monitoring should be delivered electronically to enable efficient survey administration and prompt reporting to the clinical team. Furthermore, ePRO symptom monitoring that is integrated into the EHR optimizes the workflow and visibility of the data. Another core component to implementation is the patient's introduction and orientation to the ePRO system as a routine standard of care, which is reinforced on follow-up encounters as needed. The symptoms evaluated in remote symptom monitoring should be actionable, with available clinical responses to manage symptoms. Although some systems can only deliver surveys in clinic, which still has potential for improving communication about symptoms, maximal benefit is observed when remote delivery is available to capture symptoms between clinic visits. Given the nature of home-based interventions, a system must be in place to monitor survey compliance in real time for incomplete surveys and provide outreach to those patients. This typically includes automated reminders followed by a phone call with the option for patients to complete assessments over the phone. Finally, the system must have the capacity to generate automated alerts, and a designated clinician must assume responsibility for monitoring alerts and engaging with the patient to provide appropriate support.

TABLE 1.

Core Components of the Remote Monitoring Intervention Identified for Effective Electronic Data Capture, Clinical Integration, and Patient Engagement

Adaptations

Formal quantitative evaluation (phase I).

Phase I was conducted from September 2019 to November 2019, during which 23 patients with lymphoma, breast, gastrointestinal, and genitourinary cancers were approached for participation; of these, 40% self-reported an Eastern Cooperative Oncology Group performance status of ≥ 3 (confined to bed or chair ≥ 50% of waking hours). Three patients declined participation, yielding a service penetrance of 87%. Of the 20 participants, 70% completed all surveys and 15% completed 50%-99% of surveys during a 6-week period. Only 15% of patients completed < 50% of surveys (compliance). Of 368 symptoms reported, 20% included a severe symptom alert.

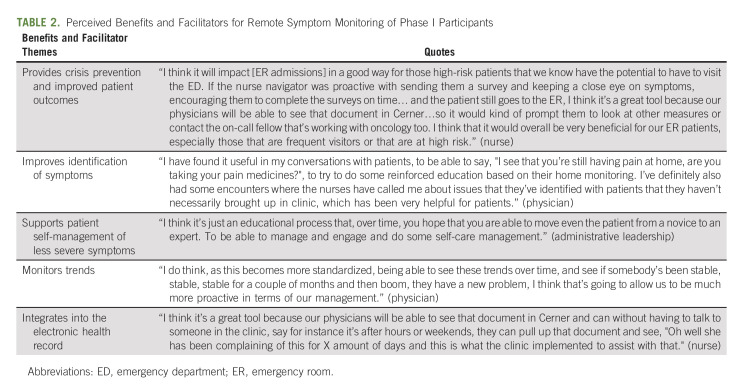

Qualitative evaluation (between phase I and II).

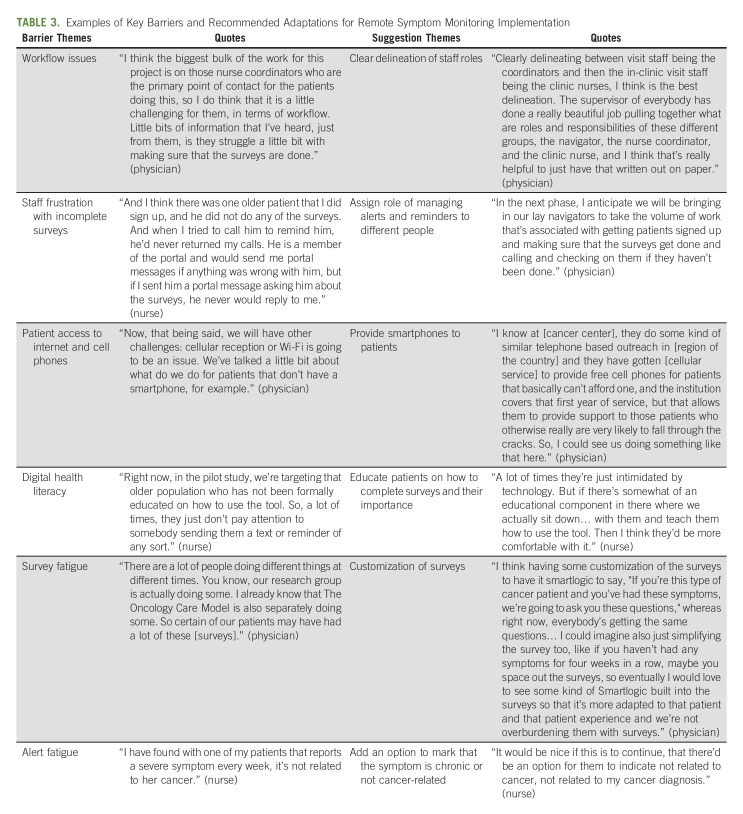

Physicians (n = 5), nurses/nurse navigators (n = 5), and administrative leaders (n = 2) participated in interviews about barriers, facilitators, and recommended adaptations after phase I. The FRAME was used to document specific components of the adaptation process garnered from interviews.13 No substantial technical difficulties were reported (eg, surveys not reaching patients or nurses being unable to electronically close alerts). The clinical teams identified key facilitators regarding perceived benefit and supports for the intervention, including recognition that the intervention provided crisis prevention and enhanced patient outcomes, improved identification of symptoms, supported patient self-management of less severe symptoms, monitored trends over time, and was integrated into the EHR (Table 2). At the same time, the clinical teams identified barriers, with associated suggestions for improvement (Table 3). The nurses expressed frustration about having to call patients about completing surveys, as this was felt to be practicing below their nursing license. They were also concerned about the number of surveys the institution administered to patients in general (eg, satisfaction surveys and surveys about handwashing). The nurses also reported concerns about alerts that were not felt to be actionable. For example, fatigue was common (25% of surveys), yet the nurses did not feel they had available interventions for fatigue. Additionally, they noted that some patients reported chronic pain as a severe symptom, yet when the nurses called, the patient stated the pain was chronic and managed with medications or was not related to their cancer. Finally, physicians commented that they were not adequately engaged because the alerts did not come to them, and they requested more visibility of the data and integration into the EHR.

TABLE 2.

Perceived Benefits and Facilitators for Remote Symptom Monitoring of Phase I Participants

TABLE 3.

Examples of Key Barriers and Recommended Adaptations for Remote Symptom Monitoring Implementation

Formal quantitative evaluation (phase II).

In the myeloma and acute leukemia phase II pilots, 47 eligible patients were approached by research assistants who function as lay navigators; 35 patients agreed to participate in a 3-month remote symptom monitoring program (74% service penetrance). Patients included both new patients and patients already on treatment. Of all surveys administered, 89% were opened by patients with acute leukemia and 82% by patients with myeloma. The majority of participants were age 60-74 years (80%); 20% were age 75 years or older. The average completion rate for opened surveys was 94% for patients with acute leukemia and 92% for patients with myeloma. Of 294 symptoms reported, 70% included a severe symptom alert. All alerts were closed at study completion, although time to closure was not captured (provider adoption).

Field notes-clinical teams (phase II-III).

All additional feedback provided by the clinical teams was captured as field notes in real time and integrated into the FRAME table for recommended adaptations. The research assistants expressed concerns over variability in patient education about the program and in completion of the intake process needed to start surveys, given the multiple steps in patient enrollment. They requested a more automated, streamlined approach, such as a video that walked patients through the enrollment process. Among the nurses, there were concerns about alert fatigue and about who was the most appropriate person to respond to alerts. For example, many patients with leukemia completed surveys initially in the inpatient setting, but the outpatient nurse identified as responsible for managing alerts did not believe she was the appropriate person to receive this information when she was not managing inpatient care. The clinic nurses also shared concerns about chronic symptoms: when called, some patients stated that they had wanted to report the symptom but did not want a callback about it.

Field notes-administrators (before phase III).

When considering plans for health system-wide rollout, the cancer center administration felt that limiting remote symptom monitoring to only those with advanced-stage cancer would be challenging because of difficulty discerning who had advanced-stage versus early-stage cancer and because this approach may not capture all those in need of the intervention. Instead, cancer center administration elected to move forward with all patients on active chemotherapy, immunotherapy, or targeted therapies. Given that patients could be on treatment for an extended duration, the decision was made to administer surveys for 6 months only for each patient if they remained on the same treatment or transitioned to survivorship, instead of the 12 months used in prior randomized controlled trials.4 If they started a new therapy, they reinitiated surveys for another 6 months. This approach aligned with the 6-month episodes in Medicare's episode-based payment approach of the Oncology Care Model.17
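The survey duration rule described above (surveys for 6 months, restarting when a new therapy begins) can be expressed as a small eligibility check. This is a sketch under stated assumptions, not the logic of the technology platform: the function name is hypothetical, and "6 months" is approximated as 26 weeks to keep the date arithmetic within the standard library.

```python
from datetime import date, timedelta
from typing import List

# Assumption: "6 months" is approximated as 26 weeks of weekly surveys.
SURVEY_WINDOW = timedelta(weeks=26)


def in_survey_window(therapy_starts: List[date], today: date) -> bool:
    """True if `today` falls within the assumed window after the most recent therapy start."""
    past_starts = [d for d in therapy_starts if d <= today]
    if not past_starts:
        return False  # no therapy has started yet, so no surveys are due
    latest_start = max(past_starts)
    return today - latest_start < SURVEY_WINDOW


if __name__ == "__main__":
    first_line = date(2021, 1, 4)
    second_line = date(2021, 7, 19)
    print(in_survey_window([first_line], date(2021, 3, 1)))
    print(in_survey_window([first_line], date(2021, 8, 2)))
    print(in_survey_window([first_line, second_line], date(2021, 8, 2)))
```

Because the window is anchored to the most recent therapy start, a patient who changes treatment re-enters monitoring automatically, which mirrors the reinitiation rule in the text.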

Summary of adaptations.

Using this formative evaluation, the implementation team made adaptations to the program to prepare for and address challenges encountered across UAB and MCI. These adaptations, categorized using FRAME, addressed barriers related to workflow challenges, alert fatigue, and data visibility (Table 4).

TABLE 4.

Identified Adaptations for Effective Implementation of Remote Symptom Monitoring Using the Framework for Modifications and Adaptations

DISCUSSION

This study identified adaptations to overcome barriers to implementing research-tested, evidence-based remote symptom monitoring with ePROs into real-world clinical settings. The barriers targeted by these adaptations are similar to barriers encountered in other remote symptom monitoring programs. For example, in describing the broad rollout within the Texas Oncology community oncology multisite practice, Patt et al18 highlighted challenges related to ePRO completion compliance and lack of reminder text or e-mail prompts for patients not completing surveys. In another evaluation of home symptom monitoring for patients with head and neck cancers, key barriers included the energy, time, and motivation needed from team members for implementation, the needed workplace adjustments, and the need for technical support.19 Although these studies suggested barriers that are similar to those noted in our evaluation, they did not specifically include planned or actual adaptations to address these challenges as is done in this manuscript. Given the call to implement remote symptom monitoring broadly in oncology,5,6 it is warranted not only to describe barriers, but also to show how institutions can approach adaptation of the intervention developed in randomized clinical efficacy trials, while maintaining fidelity to the intervention.

Although the adaptations themselves are anticipated to be beneficial for future implementation, this manuscript also provides an approach to adaptation that can be modeled when implementing complex, multilevel interventions in general. It is beneficial to capture both formal programmatic evaluations, as well as field notes, because both influence decisions for modifying and implementing the intervention.

Often, the ongoing dialogue among the implementation team and the rationale for change between formal evaluations are not well captured, which limits the understanding of the adaptation process. We recommend assigning responsibility for field-note-taking to a member of the implementation team during both formal meetings and ad hoc communication. In addition, use of a more structured approach to decision making, as noted in Figure 1, can ensure that relevant key partners are included in the process and that adaptations to the intervention are communicated effectively. Finally, health care systems must recognize the iterative nature of adaptation and that this process requires continued maintenance to prevent unwanted programmatic drift that affects fidelity. As such, we recommend reviewing modifications at least quarterly to ensure a standard approach across disease sites. We acknowledge that both initial data capture and review of materials take time and resources, but this work is critical for understanding the evolution of changes to interventions and implementation processes. We therefore recommend at least one project manager to support the implementation, facilitate key partner engagement, and enact needed changes.

This study has several limitations. We describe the adaptation process and key adaptations for two centers located in the Southern United States. Other sites may differ in their institutional culture, staffing expectations, implementation readiness, and individual barriers experienced. However, on the basis of the joint experience of the two participating sites and commonality with prior literature on barriers, we do anticipate that barriers encountered in these initial three phases are not unique to these practices. This study included a limited number of providers, thus additional insights may be gained with more participants. Additionally, this study focused on early adaptation of remote symptom monitoring and does not capture adaptations that are made to maintain a well-established program. We did not explicitly capture the time spent on reviewing the intervention, selecting adaptations, or implementing changes. Future work will be required to assess adaptations for sustainability. In addition, ongoing evaluation is being conducted to formally capture patient perspectives and describe implementation strategies (eg, use of physician champions, audit, and feedback).

In conclusion, adaptations to the intervention of remote symptom monitoring with ePROs developed in efficacy clinical trials are needed to facilitate effective standard of care implementation. The use of formative evaluation to guide the adaptation process provides a mechanism for selecting appropriate key intervention components and adaptations to enhance fit and acceptability.

SUPPORT

This work was supported by the National Institute of Nursing Research (R01NR019058).

AUTHOR CONTRIBUTIONS

Conception and design: All authors

Financial support: Gabrielle B. Rocque

Administrative support: Gabrielle B. Rocque, D’Ambra N. Dent, Stacey A. Ingram, Jennifer Young Pierce, Chelsea L. McGowen

Provision of study materials or patients: Gabrielle B. Rocque, Stacey A. Ingram, Omer Jamy, Smith Giri, Jennifer Young Pierce

Collection and assembly of data: Gabrielle B. Rocque, Stacey A. Ingram, Nicole E. Caston, Haley B. Thigpen, Fallon R. Lalor, Jennifer Young Pierce, Chelsea L. McGowen, Chao-Hui Sylvia Huang

Data analysis and interpretation: All authors

Manuscript writing: All authors

Final approval of manuscript: All authors

Accountable for all aspects of the work: All authors

AUTHORS' DISCLOSURES OF POTENTIAL CONFLICTS OF INTEREST

Adaptation of Remote Symptom Monitoring Using Electronic Patient-Reported Outcomes for Implementation in Real-World Settings

The following represents disclosure information provided by authors of this manuscript. All relationships are considered compensated unless otherwise noted. Relationships are self-held unless noted. I = Immediate Family Member, Inst = My Institution. Relationships may not relate to the subject matter of this manuscript. For more information about ASCO's conflict of interest policy, please refer to www.asco.org/rwc or ascopubs.org/op/authors/author-center.

Open Payments is a public database containing information reported by companies about payments made to US-licensed physicians (Open Payments).

Gabrielle B. Rocque

This author is an Associate Editor for JCO Oncology Practice. Journal policy recused the author from having any role in the peer review of this manuscript.

Consulting or Advisory Role: Pfizer, Flatiron Health, Gilead Sciences

Research Funding: Carevive Systems, Genentech, Pfizer

Travel, Accommodations, Expenses: Gilead Sciences

Smith Giri

Honoraria: CareVive, OncLive, Sanofi

Research Funding: Carevive Systems, Pack Health, Sanofi (Inst)

Doris Howell

Leadership: Carevive Systems

Consulting or Advisory Role: Carevive

Research Funding: AstraZeneca (Inst)

Ethan M. Basch

Consulting or Advisory Role: SIVAN Innovation, Carevive Systems, Navigating Cancer, AstraZeneca, N-Power Medicine, Resilience Care

Other Relationship: Centers for Medicare and Medicaid Services, National Cancer Institute, American Society of Clinical Oncology, JAMA-Journal of the American Medical Association, Patient-Centered Outcomes Research Institute (PCORI)

Open Payments Link: https://openpaymentsdata.cms.gov/physician/427875/summary

Angela M. Stover

Honoraria: Pfizer, Association of Community Cancer Centers (ACCC), Henry Ford Health System, Purchaser Business Group on Health

Consulting or Advisory Role: Navigating Cancer

Research Funding: Urogen pharma (Inst), SIVAN Innovation (Inst)

No other potential conflicts of interest were reported.

REFERENCES

- 1. Basch E, Deal AM, Kris MG, et al. Symptom monitoring with patient-reported outcomes during routine cancer treatment: A randomized controlled trial. J Clin Oncol. 2016;34:557–565. doi: 10.1200/JCO.2015.63.0830.

- 2. Basch E, Deal AM, Dueck AC, et al. Overall survival results of a trial assessing patient-reported outcomes for symptom monitoring during routine cancer treatment. JAMA. 2017;318:197–198. doi: 10.1001/jama.2017.7156.

- 3. Denis F, Basch E, Septans AL, et al. Two-year survival comparing web-based symptom monitoring vs routine surveillance following treatment for lung cancer. JAMA. 2019;321:306–307. doi: 10.1001/jama.2018.18085.

- 4. Basch E, Stover AM, Schrag D, et al. Clinical utility and user perceptions of a digital system for electronic patient-reported symptom monitoring during routine cancer care: Findings from the PRO-TECT trial. JCO Clin Cancer Inform. 2020;4:947–957. doi: 10.1200/CCI.20.00081.

- 5. Center for Medicare and Medicaid Services. Enhancing Oncology Care Model. https://innovation.cms.gov/innovation-models/enhancing-oncology-model

- 6. Woofter K, Kennedy EB, Adelson K, et al. Oncology Medical Home: ASCO and COA standards. JCO Oncol Pract. 2021;17:475–492. doi: 10.1200/OP.21.00167.

- 7. Howell D, Molloy S, Wilkinson K, et al. Patient-reported outcomes in routine cancer clinical practice: A scoping review of use, impact on health outcomes, and implementation factors. Ann Oncol. 2015;26:1846–1858. doi: 10.1093/annonc/mdv181.

- 8. Chambers DA, Vinson CA, Norton WE. Advancing the Science of Implementation across the Cancer Continuum. New York, NY: Oxford University Press; 2018.

- 9. Elwy AR, Wasan AD, Gillman AG, et al. Using formative evaluation methods to improve clinical implementation efforts: Description and an example. Psychiatry Res. 2020;283:112532. doi: 10.1016/j.psychres.2019.112532.

- 10. Kind AJH, Buckingham WR. Making neighborhood-disadvantage metrics accessible—The neighborhood atlas. N Engl J Med. 2018;378:2456–2458. doi: 10.1056/NEJMp1802313.

- 11. Di Maio M, Basch E, Denis F, Fallowfield LJ, et al. The role of patient-reported outcome measures in the continuum of cancer clinical care: ESMO clinical practice guidelines. Ann Oncol. 2022;33:878–892. doi: 10.1016/j.annonc.2022.04.007.

- 12. National Cancer Institute. Patient-Reported Outcomes Version of the Common Terminology Criteria for Adverse Events (PRO-CTCAE™) 2016. http://healthcaredelivery.cancer.gov/pro-ctcae/

- 13. Wiltsey Stirman S, Baumann AA, Miller CJ. The FRAME: An expanded framework for reporting adaptations and modifications to evidence-based interventions. Implement Sci. 2019;14:58. doi: 10.1186/s13012-019-0898-y.

- 14. Proctor E, Silmere H, Raghavan R, et al. Outcomes for implementation research: Conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health. 2011;38:65–76. doi: 10.1007/s10488-010-0319-7.

- 15. Stover AM, Haverman L, van Oers HA, et al. Using an implementation science approach to implement and evaluate patient-reported outcome measures (PROM) initiatives in routine care settings. Qual Life Res. 2021;30:3015–3033. doi: 10.1007/s11136-020-02564-9.

- 16. Vaismoradi M, Turunen H, Bondas T. Content analysis and thematic analysis: Implications for conducting a qualitative descriptive study. Nurs Health Sci. 2013;15:398–405. doi: 10.1111/nhs.12048.

- 17. Kline R, Adelson K, Kirshner JJ, et al. The Oncology Care Model: Perspectives from the Centers for Medicare & Medicaid Services and participating oncology practices in academia and the community. Am Soc Clin Oncol Ed Book. 2017;37:460–466. doi: 10.1200/EDBK_174909.

- 18. Patt D, Wilfong L, Hudson KE, et al. Implementation of electronic patient-reported outcomes for symptom monitoring in a large multisite community oncology practice: Dancing the Texas two-step through a pandemic. JCO Clin Cancer Inform. 2021;5:615–621. doi: 10.1200/CCI.21.00063.

- 19. Dronkers EAC, Baatenburg de Jong RJ, van der Poel EF, et al. Keys to successful implementation of routine symptom monitoring in head and neck oncology with “Healthcare Monitor” and patients' perspectives of quality of care. Head Neck. 2020;42:3590–3600. doi: 10.1002/hed.26425.