Abstract

Objectives

Differentiation between COVID-19 and community-acquired pneumonia (CAP) on computed tomography (CT) is a task that can be performed by human radiologists and artificial intelligence (AI). The present study aims to (1) develop an AI algorithm for differentiating COVID-19 from CAP, (2) evaluate its performance, and (3) evaluate the benefit of using the AI result to assist radiological diagnosis and its impact on relevant parameters such as diagnostic accuracy, diagnostic time, and confidence.

Methods

We included n = 1591 multicenter, multivendor chest CT scans. An AI training and validation dataset from three centers in China (n = 991 CT scans; n = 462 COVID-19 and n = 529 CAP) was used to develop the AI algorithm. An independent Chinese and German test dataset of n = 600 CT scans from six centers (COVID-19/CAP; n = 300 each) was used to test the performance of eight blinded radiologists and the AI algorithm. A subtest dataset (n = 180 CT scans; n = 90 each) was used to evaluate the radiologists’ performance without and with AI assistance to quantify changes in diagnostic accuracy, reporting time, and diagnostic confidence.

Results

The diagnostic accuracy of the AI algorithm in the Chinese-German test dataset was 76.5%. Without AI assistance, the eight radiologists’ diagnostic accuracy was 79.1%; with AI assistance, it increased to 81.5%, accompanied by significantly shorter decision times and higher diagnostic confidence.

Conclusion

This large multicenter study demonstrates that AI assistance in the CT-based differentiation of COVID-19 and CAP improves radiological performance, with higher accuracy and specificity, shorter diagnostic time, and greater diagnostic confidence.

Key Points

• AI can help radiologists achieve higher diagnostic accuracy, make faster decisions, and improve diagnostic confidence.

• This Chinese-German multicenter study demonstrates the advantages of human-machine interaction using AI in clinical radiology for the diagnostic differentiation between COVID-19 and CAP on CT scans.

Supplementary Information

The online version contains supplementary material available at 10.1007/s00330-022-09335-9.

Keywords: COVID-19, Artificial intelligence, Computed tomography, Radiologists

Introduction

Coronavirus disease 2019 (COVID-19) has caused an ongoing pandemic and raised global public health concern. More than 220 million cases had been confirmed worldwide as of September 2021 [1]. The volume of COVID-19 imaging data continues to grow disproportionately to the number of available trained radiologists, contributing to a dramatic increase in radiologists’ workload. Therefore, an effective method for the accurate and rapid diagnosis of COVID-19 is urgently needed.

The World Health Organization (WHO) recommends a reverse transcription-polymerase chain reaction (RT-PCR) test as the gold standard to confirm a suspected COVID-19 infection [2]. Some studies have shown that chest computed tomography (CT) is widely available and rapid and, under certain conditions, more sensitive than antibody or RT-PCR tests at various stages of infection [3, 4]. Therefore, the WHO recommends the additional use of chest CT to diagnose COVID-19. However, a meta-analysis suggests low specificity in differentiating pneumonia-related lung changes because of overlapping imaging characteristics between COVID-19 and non-COVID-19 pneumonia on chest CT [5]. Artificial intelligence (AI) is an emerging technology that can automatically extract quantitative imaging features to support radiological decision-making, increase workflow efficiency, and improve clinical management [6]. Previous studies have applied AI to COVID-19 detection, diagnosis, and prognosis using multicenter CT scans [7–18]. These studies showed good performance in detecting COVID-19 but revealed several limitations. First, the data came almost exclusively from China or the United States, without larger amounts of European data [7–12, 14, 18]. Second, previous studies mostly included the initial chest CT of COVID-19 cases without specific consideration of the CT-based early, progressive, peak, and absorption stages [8, 10–12, 15]. As a result, predominantly similar, morphologically typical, and thus easily recognizable COVID-19 stages might have been preferentially or exclusively included in the analysis and in AI algorithm development. Finally, most studies focused on the AI system’s performance and compared it with that of radiologists [12, 16, 17]. Although some studies used the AI result to assist radiologists, the benefit was quantified only in terms of increased accuracy [8, 10, 11]. To our knowledge, diagnostic confidence and individual time to diagnosis, as further criteria for the clinical benefit of an AI algorithm, have not been evaluated in an international multicenter dataset including all COVID-19 stages.

Thus, the aims of this study were (1) to develop an AI algorithm to distinguish between COVID-19 and CAP using a multicenter, multivendor CT imaging dataset, (2) to compare the diagnostic accuracy of the AI algorithm with that of human radiologists, and (3) to identify potential improvements in the radiologists’ diagnostic performance in terms of reporting time, confidence, and accuracy when using the developed AI algorithm as additional assistance, compared with their performance without AI assistance. Finally, the potential advantages of AI assistance for COVID-19 detection in everyday radiology are discussed.

Materials and methods

Patient population

This retrospective study received ethical approval, and informed consent was waived at all participating hospitals (Jilin: 2020-595, Wuhan: [2020]17, Ningbo: PJ-NBEY-KY-2020-194-01, Cologne: 20-1676, Frankfurt: 20-719, and Heidelberg: S-293/2020).

Inclusion criteria were as follows: (1) chest CT with inflammatory infiltrations; (2) for the COVID-19 group, a positive RT-PCR test within 48 h before the CT examination; and (3) for the CAP group, a CT scan acquired either before the first COVID-19 outbreak (February 23, 2016, to December 31, 2019) or after it (since January 7, 2020); in addition, a microbiological diagnosis of bacterial or viral pneumonia and a negative COVID-19 RT-PCR test were required.

Chest CTs had to show inflammatory infiltrations; CTs with lung tumors, tuberculosis, trauma, or postoperative scarring were excluded. Additional lung changes without potential overlap with acute inflammation were classified and recorded according to Fleischner Society guidelines [19, 20] (see Table S2 in the supplementary material).

Following the COVID-19 guideline [21], and based on the CT characteristics and the interval between symptom onset and CT, the COVID-19 group was divided into early (0–3 days), progressive (4–7 days), peak (8–14 days), and absorption (≥ 15 days) stages [22]. To reflect a balanced clinical distribution of COVID-19 (50%) and CAP (50%), the test dataset was composed homogeneously of the different stages and severities per stage: earlier COVID-19 stages (early and progressive) accounted for 30.5%, later stages (peak and absorption) for 19.5%, and CAP of mild, moderate, and extensive severity for 50%, comprising 25% viral and 25% bacterial pneumonia (Table 1).
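The time component of this staging rule can be written as a simple lookup. The following Python sketch is illustrative only: it assumes the symptom-onset-to-CT interval is available in whole days, the helper name `covid_stage` is hypothetical, and the CT-morphology criterion that also entered the staging is not modelled.

```python
def covid_stage(days_since_onset: int) -> str:
    """Return the COVID-19 stage implied by the symptom-onset-to-CT interval.

    Hypothetical helper: only the time windows are encoded (0-3, 4-7,
    8-14, >= 15 days); the CT characteristics that were also used for
    staging are not modelled here.
    """
    if days_since_onset < 0:
        raise ValueError("negative intervals are not covered by the staging scheme")
    if days_since_onset <= 3:
        return "early"
    if days_since_onset <= 7:
        return "progressive"
    if days_since_onset <= 14:
        return "peak"
    return "absorption"


print([covid_stage(d) for d in (0, 4, 10, 21)])
# ['early', 'progressive', 'peak', 'absorption']
```

The upper bounds are inclusive, so day 3 is still "early" and day 15 is the first "absorption" day, matching the ranges given above.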

Table 1.

Imaging stages of COVID-19 and etiological classification of CAP

| Disease | Stage/etiology | Training and validation dataset | Chinese test dataset | German test dataset | % of Chinese-German test dataset |
|---|---|---|---|---|---|
| COVID-19 | Early | 53 | 51 | 50 | 16.8% |
| | Progressive | 292 | 57 | 25 | 13.7% |
| | Peak | 48 | 18 | 50 | 11.3% |
| | Absorption | 69 | 24 | 25 | 8.2% |
| CAP | Bacterial | 197 | 75 | 75 | 25.0% |
| | Viral | 135 | 75 | 75 | 25.0% |
| | N/A | 197 | 0 | 0 | 0.0% |

N/A, not available; refers to CAP patients with clinical symptoms of infection but without positive pathogen test results
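As a quick arithmetic check, the percentage column of Table 1 follows from the Chinese and German test counts (600 CT scans in total). The sketch below hard-codes those counts; `TEST_COUNTS` and `share_of_test_set` are hypothetical names introduced for illustration.

```python
# Chinese and German test-set counts per stratum, copied from Table 1
# (the N/A stratum occurs only in the training data and is omitted).
TEST_COUNTS = {
    "COVID-19 early": (51, 50),
    "COVID-19 progressive": (57, 25),
    "COVID-19 peak": (18, 50),
    "COVID-19 absorption": (24, 25),
    "CAP bacterial": (75, 75),
    "CAP viral": (75, 75),
}


def share_of_test_set(strata, counts=TEST_COUNTS):
    """Percentage (rounded to 0.1) of the combined test set covered by `strata`."""
    total = sum(chinese + german for chinese, german in counts.values())
    selected = sum(sum(counts[name]) for name in strata)
    return round(100 * selected / total, 1)


for name in TEST_COUNTS:
    print(name, share_of_test_set([name]))
print("earlier stages", share_of_test_set(["COVID-19 early", "COVID-19 progressive"]))
print("later stages", share_of_test_set(["COVID-19 peak", "COVID-19 absorption"]))
```

Running it reproduces the last column of Table 1 (16.8%, 13.7%, 11.3%, 8.2%, 25.0%, 25.0%) as well as the 30.5% and 19.5% shares of earlier and later COVID-19 stages.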

Data workflow for AI training and imaging analysis without and with AI support

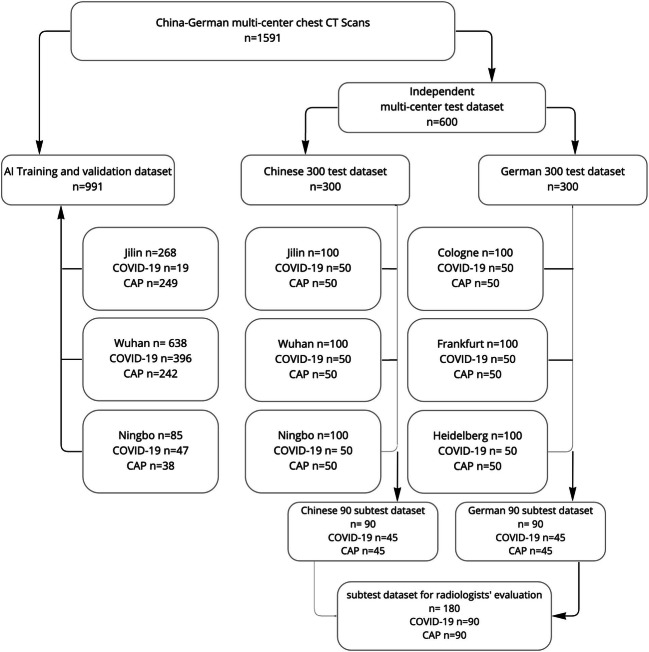

For AI training and validation and for the unassisted and AI-assisted CT analyses, a total of 1591 chest CT scans were divided into three independent datasets (Fig. 1): (1) an AI training and validation dataset of 991 CT scans from 989 patients (462 COVID-19 and 529 CAP) from three centers in China; (2) an independent Chinese test dataset of 300 CTs from 290 patients, with 100 CTs (50 COVID-19 and 50 CAP) from each Chinese center and no duplicates from the training dataset; and (3) an independent German test dataset of 300 CTs from 300 patients, with 100 CTs (50 COVID-19 and 50 CAP) from each German center. From the Chinese-German test datasets, a balanced subtest dataset of 180 CTs (90 each from China and Germany) was selected for the subsequent international intrareader evaluation without and with AI support.

Fig. 1.

Distribution of the Chinese-German multicenter, multivendor dataset for AI classification model development and its composition for unassisted and AI-assisted imaging analysis

Development of 3D AI algorithm

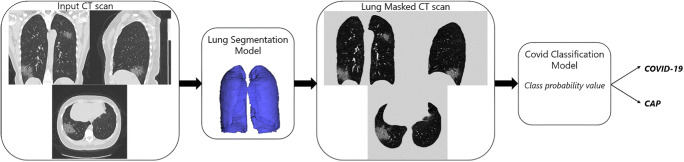

An AI algorithm that differentiates COVID-19 from CAP on chest CT was developed using the IntelliSpace Discovery platform (ISD, v3.0.6, Philips Healthcare, Best, The Netherlands). The classification algorithm is based on two deep learning models, a segmentation model and a classification model (Fig. 2). The lung segmentation model (LSM) is based on an encoder-decoder convolutional neural network (CNN) architecture called SegNet [23]. During training, the network is fed with individual 2D slices and the corresponding manual ground-truth lung segmentations. The input CT scans to the LSM were resampled to an in-plane resolution of 1 × 1 mm, resized to 512 × 512, and intensity-clipped to a range of −1000 to 500 Hounsfield units (HU). During inference, the LSM accepts the entire CT volume of each subject and generates a 3D lung segmentation (Figure S1 in the supplementary material shows a schematic of the CNN during inference). The LSM was trained on 35,000 CT slices and validated on 9,500 CT slices, reaching a validation Dice index of 0.94 ± 0.03. The 3D COVID-19 classification model (CCM) is based on COVNet, a ResNet50-based architecture [7, 24]. During training, the network is supplied with the masked lung region and the corresponding class label (i.e., COVID-19 or CAP). The input CT scans to the CCM were resized to an in-plane size of 224 × 224, down-sampled by a factor of 5 in the z-direction, intensity-clipped to a range of −1250 to 250 HU, and normalized to between 0 and 1. Data augmentation (random rotation, flipping, and additive Gaussian noise) was applied during training. All features computed from the input CT slices were pooled using a max-pooling operation and passed to a fully connected layer with a softmax activation function to produce class probabilities.
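The preprocessing and pooling steps described above can be sketched in NumPy. This is a minimal illustration under stated assumptions, not the ISD implementation: nearest-neighbour resizing stands in for whatever interpolation the platform uses, the input is assumed to be already resampled to 1 × 1 mm in-plane with the slice axis first, voxels outside the lung mask are assumed to be set to the lower clipping bound, and all function names as well as the linear-layer weights are hypothetical.

```python
import numpy as np


def resize_nearest(volume: np.ndarray, out_shape: tuple) -> np.ndarray:
    """Nearest-neighbour resize of an N-D array (stand-in for proper interpolation)."""
    index_arrays = [
        np.minimum((np.arange(n_out) * n_in / n_out).astype(int), n_in - 1)
        for n_in, n_out in zip(volume.shape, out_shape)
    ]
    return volume[np.ix_(*index_arrays)]


def preprocess_for_lsm(ct_hu: np.ndarray) -> np.ndarray:
    """Segmentation input: 512 x 512 slices clipped to [-1000, 500] HU."""
    resized = resize_nearest(ct_hu, (ct_hu.shape[0], 512, 512))
    return np.clip(resized, -1000, 500)


def preprocess_for_ccm(ct_hu: np.ndarray, lung_mask: np.ndarray) -> np.ndarray:
    """Classification input: masked lungs, 224 x 224 in-plane, z down-sampled
    by a factor of 5, clipped to [-1250, 250] HU and scaled to [0, 1]."""
    masked = np.where(lung_mask, ct_hu, -1250)  # assumption: background -> lower bound
    n_slices = max(1, ct_hu.shape[0] // 5)
    resized = resize_nearest(masked, (n_slices, 224, 224))
    clipped = np.clip(resized, -1250, 250).astype(np.float32)
    return (clipped + 1250.0) / 1500.0


def pooled_softmax_head(slice_features: np.ndarray, weights: np.ndarray,
                        bias: np.ndarray) -> np.ndarray:
    """Max-pool per-slice feature vectors over slices, apply a fully
    connected layer, and return softmax class probabilities."""
    pooled = slice_features.max(axis=0)      # (n_features,)
    logits = pooled @ weights + bias         # (n_classes,)
    exp = np.exp(logits - logits.max())      # numerically stable softmax
    return exp / exp.sum()
```

For example, a (20, 64, 64) input yields a (20, 512, 512) array from `preprocess_for_lsm` and a (4, 224, 224) array from `preprocess_for_ccm`. Max-pooling over the slice axis makes the classifier head independent of the number of slices, which is what allows an entire CT volume to be passed in at inference.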
During inference on the independent Chinese-German test dataset, the CCM accepts the entire CT volume of each subject and outputs the class label (Figure S2 in the supplementary material shows a schematic of the CCM). The CCM was trained and validated using 5-fold cross-validation: first, the training and validation dataset was randomly divided into five folds without overlapping samples; second, five different models (models 1–5) were trained and evaluated; lastly, the five models were applied simultaneously to each CT volume of the independent Chinese-German test dataset, and their class probabilities were ensembled to obtain the final class label.
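The cross-validation and ensembling steps can be sketched as follows. This is a minimal NumPy illustration, not the ISD implementation: the random seed, the label order in `CLASS_NAMES`, and the choice of averaging the five models' probabilities (the text only states that they were ensembled) are assumptions, and the function names are hypothetical.

```python
import numpy as np

CLASS_NAMES = ("CAP", "COVID-19")  # hypothetical label order


def five_fold_splits(n_samples: int, seed: int = 0):
    """Yield (train_idx, val_idx) pairs from a random, non-overlapping 5-fold partition."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_samples), 5)
    for k, val_idx in enumerate(folds):
        # All folds except the k-th form the training set of model k + 1.
        train_idx = np.concatenate([f for j, f in enumerate(folds) if j != k])
        yield train_idx, val_idx


def ensemble_predict(fold_probabilities) -> tuple:
    """Combine the five models' class probabilities for one CT volume.

    Assumption: probabilities are averaged before taking the argmax.
    """
    mean_probs = np.asarray(fold_probabilities, dtype=float).mean(axis=0)
    return CLASS_NAMES[int(np.argmax(mean_probs))], mean_probs
```

Each `(train_idx, val_idx)` pair from `five_fold_splits(991)` would be used to train one of models 1–5; at test time, the five probability vectors for a CT volume are passed together to `ensemble_predict`.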

Fig. 2.

Overview of the proposed CT classification workflow. A CT scan is used as input. A deep learning-based lung segmentation model segments the lungs, which are used to mask out the lung regions. A COVID-19 classification model takes as input the masked lungs and provides a classification either as COVID-19 or as CAP

Radiologist performance evaluation without and with AI assistance

Radiological image interpretation was performed in three steps. (I) The Chinese and German 300-CT test datasets were evaluated, each independently of the other. (II) A subtest dataset of 90 CTs per country (45 COVID-19 and 45 CAP cases each), 180 cases in total, was evaluated online simultaneously by four Chinese radiologists in Jilin and four German radiologists in Cologne. For a detailed analysis of image evaluation performance, diagnostic accuracy, evaluation time, and diagnostic confidence were tracked within the online evaluation session (see below). The entire evaluation of the 180 CTs was performed twice, first without (IIa) and then with AI assistance (IIb). In the second session (IIb), the CTs were displayed in a different order, and the interval between the sessions was more than three months to avoid recall of the images (May 17, 2021, without AI assistance; September 15, 2021, with AI assistance).

In a quiet environment, the eight radiologists evaluated the CT images simultaneously on the ISD platform via remote online access. D.L. and L.W. (Jilin, China) and P.F. and J.K. (Cologne, Germany) participated as experienced radiologists, and S.Y. and N.M. (Jilin, China) and M.W. and M.R. (Cologne, Germany) as inexperienced radiologists. Experienced radiologists were defined as having ≥ 5 years of radiological training and having read more than 100 COVID-19 cases; inexperienced radiologists had < 5 years of radiological training and had read fewer than 50 COVID-19 cases. All radiologists reviewed the images in a lung window (window width 1600 HU, window level −600 HU) and were given the patients’ sex and age. The radiologists made their diagnostic decisions independently, not by consensus, and could take as much time as desired for evaluation and diagnosis. They were allowed to zoom in on or rotate the images. The subtest dataset was anonymized and stored on the ISD platform. Two project executors (M.F. from Jilin, China, and R.S. from Cologne, Germany) loaded and presented the images and did not participate in the evaluation.

Radiologists classified each case as COVID-19 or CAP, and their self-rated confidence and time to decision were recorded. Diagnostic confidence was scored on a 5-point Likert scale (1 = very certain diagnosis, 2 = certain diagnosis, 3 = likely diagnosis, 4 = uncertain diagnosis, 5 = very uncertain “ambiguous” diagnosis), and the time from the start of evaluation to the final decision was measured with a digital stopwatch. The overall accuracy, sensitivity, and specificity of the diagnoses were assessed.
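The three performance measures follow from the 2 × 2 table of reader decisions versus reference diagnoses. The sketch below assumes COVID-19 is the positive class, so sensitivity is the share of COVID-19 cases read as COVID-19 and specificity the share of CAP cases read as CAP; the function name is hypothetical.

```python
def diagnostic_metrics(y_true, y_pred, positive="COVID-19"):
    """Accuracy, sensitivity and specificity for a binary COVID-19 vs. CAP reading.

    Assumption: COVID-19 is treated as the positive class.
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # COVID-19 read as COVID-19
    tn = sum(t != positive and p != positive for t, p in pairs)  # CAP read as CAP
    fp = sum(t != positive and p == positive for t, p in pairs)  # CAP read as COVID-19
    fn = sum(t == positive and p != positive for t, p in pairs)  # COVID-19 read as CAP
    return {
        "accuracy": (tp + tn) / len(pairs),
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
    }
```

For instance, with four COVID-19 and four CAP cases, of which two COVID-19 and three CAP cases are read correctly, the function returns an accuracy of 0.625, a sensitivity of 0.5, and a specificity of 0.75. Pooling all readers' decisions into one list would give pooled figures across readers.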

Statistical analysis

Demographic characteristics were compared between the COVID-19 and CAP groups using the χ² test for categorical variables and the t-test for continuous variables. Statistical analysis was performed using R version 3.6.2 in RStudio version 1.2.5033.35 (https://cran.r-project.org/). Figures were plotted using the ggplot2 package [25]. Continuous variables are reported as mean and standard deviation (SD). Paired dichotomous performance data were compared from 2 × 2 contingency tables using McNemar's test, ordinal data using the Wilcoxon signed-rank test, and parametric data using Student's t-test. Intra- and interreader reliability of the dichotomous performance data was assessed with Fleiss' kappa (< .4 fair, < .6 moderate, < .8 substantial, > .8 excellent) using the irr package. Statistical significance was defined as p < .05.
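McNemar's test compares paired reads (the same cases without and with AI) using only the discordant pairs of the 2 × 2 table. A stdlib-only sketch of the continuity-corrected version, intended to mirror the default (`correct = TRUE`) of R's `mcnemar.test`, is shown below; the function name and the assignment of `b` and `c` to specific cells are illustrative assumptions.

```python
import math


def mcnemar_test(b: int, c: int) -> tuple:
    """McNemar's chi-square test with continuity correction for paired binary data.

    b: cases correct without AI but incorrect with AI (discordant cell 1)
    c: cases incorrect without AI but correct with AI (discordant cell 2)
    Returns (statistic, p_value).
    """
    if b + c == 0:
        # No discordant pairs; R would return NaN here, this sketch reports no difference.
        return 0.0, 1.0
    statistic = (abs(b - c) - 1) ** 2 / (b + c)
    # Survival function of the chi-square distribution with 1 degree of freedom.
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value
```

With 10 versus 2 discordant cases, for example, the statistic is 49/12 ≈ 4.08 and p ≈ 0.04; concordant pairs (correct or incorrect in both rounds) do not enter the calculation.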

Results

Patient characteristics

In total, 1591 CT scans acquired at six centers in China and Germany between February 2016 and May 2021 were included: 762 CT scans of 753 patients with COVID-19 pneumonia and 829 CT scans of 826 patients with CAP. The 1279 patients from China comprised data from the University Hospital of Jilin (n = 358), Wuhan (n = 736), and Ningbo (n = 185). The 300 patients from Germany comprised data from the University Hospitals Cologne (n = 100), Heidelberg (n = 100), and Frankfurt (n = 100). For a detailed presentation of the individual groups, see Fig. 1.

The mean age of patients in the COVID-19 group was 54.2 ± 14.3 years (369 male and 384 female patients), and that of patients in the CAP group was 60.3 ± 15.9 years (478 male and 348 female patients; see Table 2). The mean age of patients in the 180-case subtest dataset (90 cases from each country) was 54.9 ± 14.4 years (94 male and 86 female patients). In the Chinese 300-case test dataset, patients in the CAP group were older than those in the COVID-19 group; otherwise, there were no significant differences in age or sex distribution between the groups. Additional lung changes without potential overlap with acute inflammation were classified according to Fleischner Society guidelines [19, 20] (see Table S2) and did not differ significantly in frequency between the COVID-19 and CAP groups.

Table 2.

Demographic values of the patients in the COVID-19 and CAP groups

| Country | Center | COVID-19: N | COVID-19: sex | COVID-19: age (years) | CAP: N | CAP: sex | CAP: age (years) |
|---|---|---|---|---|---|---|---|
| China | Jilin | 69 scans, 61 patients | 35 male, 26 female | 41.8 ± 12.9 (16, 62) | 299 scans, 297 patients | 167 male, 130 female | 57.1 ± 16.0 (18, 95) |
| | Wuhan | 446 scans, 445 patients | 196 male, 249 female | 57.8 ± 15.1 (15, 98) | 292 scans, 291 patients | 148 male, 143 female | 57.4 ± 18.1 (4, 89) |
| | Ningbo | 97 scans, 97 patients | 36 male, 61 female | 50.4 ± 14.3 (17, 86) | 88 scans, 88 patients | 69 male, 19 female | 69.0 ± 13.6 (32, 90) |
| Germany | Cologne | 50 scans, 50 patients | 26 male, 24 female | 59.7 ± 14.1 (29, 88) | 50 scans, 50 patients | 28 male, 22 female | 59.6 ± 18.9 (18, 89) |
| | Frankfurt | 50 scans, 50 patients | 42 male, 8 female | 58.5 ± 13.9 (36, 85) | 50 scans, 50 patients | 32 male, 18 female | 59.9 ± 13.3 (21, 90) |
| | Heidelberg | 50 scans, 50 patients | 34 male, 16 female | 56.9 ± 15.6 (20, 85) | 50 scans, 50 patients | 34 male, 16 female | 58.9 ± 15.4 (19, 86) |
| Total | | 762 scans, 753 patients | 369 male, 384 female | 54.2 ± 14.3 (15, 98) | 829 scans, 826 patients | 478 male, 348 female | 60.3 ± 15.9 (4, 95) |

COVID-19, coronavirus disease 2019; CAP, community-acquired pneumonia; N, number. Ages are reported as means ± standard deviations, with ranges (minimum, maximum) in parentheses

AI-based diagnosis

The diagnostic performance of the AI model in the Chinese-German test datasets (Table 3) was 76.5%, 67.9%, and 85.7% (accuracy, sensitivity, specificity). The AI model's performance did not differ significantly between the individual datasets. It was marginally below that of the radiologists without AI assistance but, on overall average, did not differ significantly from it (p > .05).

Table 3.

Performance of the AI algorithm at the international datasets

| Performance of AI | Chinese 300 test dataset | German 300 test dataset | Chinese 90 subtest dataset | German 90 subtest dataset |
|---|---|---|---|---|
| Accuracy (%) | 77.5 | 75.6 | 83.9 | 75.3 |
| Sensitivity (%) | 68.8 | 66.9 | 81.0 | 63.6 |
| Specificity (%) | 86.8 | 84.7 | 86.7 | 87.8 |

AI, artificial intelligence; Values are the percentage

Within the same 180-case subtest dataset, the AI algorithm achieved 80.0%, 72.1%, and 87.2% (accuracy, sensitivity, specificity). In the subgroup of cases with earlier COVID-19 stages (early and progressive), its performance was 78.6%, 68.3%, and 87.2% (accuracy, sensitivity, specificity), compared with 82.0%, 76.9%, and 87.1% in the advanced stages (peak and absorption) [22]. The AI algorithm thus differentiated later COVID-19 stages significantly better than earlier stages (p < .05).

Radiologists’ diagnosis in the test datasets

In the combined test dataset, the average performance of the Chinese and German radiologists in classifying the two types of pneumonia (COVID-19 vs. CAP) was 78.9%, 72.7%, and 84.1% (accuracy, sensitivity, specificity). In the German 300 test dataset, the German radiologists achieved 86.0%, 84.4%, and 85.1% (accuracy, sensitivity, specificity); only in this part of the dataset did the radiologists perform significantly better than the AI (p < .05). In the Chinese 300 test dataset, the Chinese radiologists achieved 71.9%, 60.9%, and 83.0% (accuracy, sensitivity, specificity).

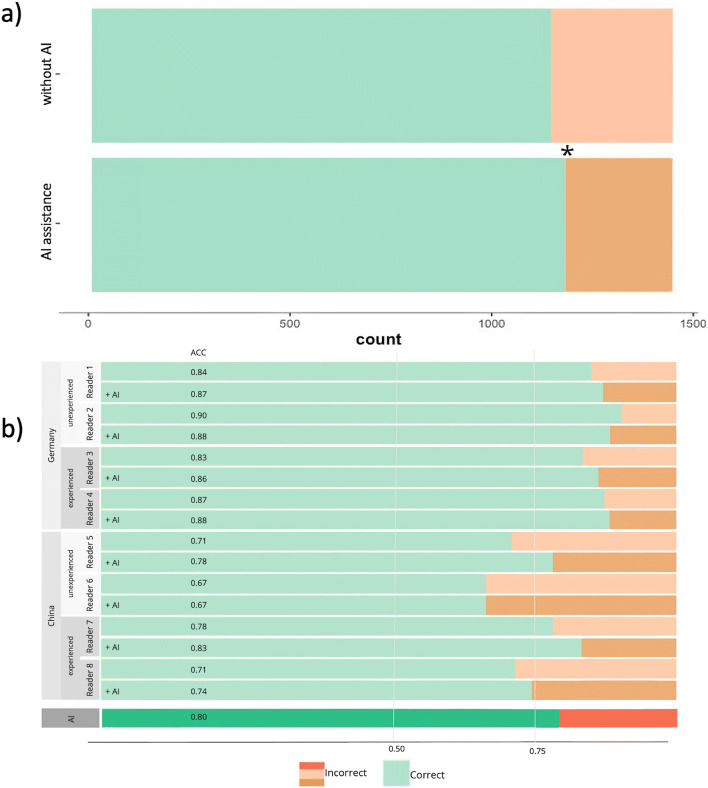

Radiologists’ diagnosis without AI assistance in the subtest dataset

Without AI assistance, the eight radiologists made 1440 decisions (COVID-19 or CAP) on the 180-case Chinese-German subtest dataset, with an accuracy, sensitivity, and specificity of 79.1%, 79.0%, and 79.2%. The radiologists from Germany performed better (86.3%, 88.9%, 83.6%) than their colleagues from China (71.9%, 69.2%, 74.7%). The mean evaluation time without AI assistance was 36.2 ± 22.1 s, and the mean confidence score was 2.53 (see Table 4). There was no significant overall difference in diagnostic performance between experienced and inexperienced radiologists (p > .05; see Fig. 3b).

Table 4.

Results of the evaluation regarding the decision CAP or COVID-19 pneumonia

| Parameter | Without AI assistance | With AI assistance |
|---|---|---|
| Accuracy (%) | 79.1 | 81.5* |
| Sensitivity (%) | 79.0 | 71.4* |
| Specificity (%) | 79.2 | 91.5* |
| Time, mean ± SD | 36.2 ± 22.1 sec | 26.9 ± 10.8 sec** |
| Mean confidence score | 2.53 | 2.20 |
| Confidence (n): very certain diagnosis | 229 | 349* |
| Confidence (n): certain diagnosis | 535 | 550 |
| Confidence (n): likely diagnosis | 422 | 445 |
| Confidence (n): uncertain diagnosis | 217 | 83** |
| Confidence (n): very uncertain “ambiguous” diagnosis | 37 | 13** |

COVID-19, coronavirus disease 2019; CAP, community-acquired pneumonia; AI, artificial intelligence; sec, second; n, number; *sig. p < .05; **sig. p < .01

Fig. 3.

a Comparison of correct diagnoses with and without AI assistance in the two reading rounds. b Performance of each radiologist, split by country and level of experience, and the AI result in the corresponding dataset (2 × 90 cases)

Without AI assistance, in the subgroup of cases with earlier COVID-19 stages (early and progressive), the eight radiologists achieved 79.5%, 81.9%, and 77.5% (accuracy, sensitivity, specificity), compared with 75.2%, 74.9%, and 75.5% in the advanced stages (peak and absorption). Without AI assistance, the radiologists thus differentiated early stages significantly better than late stages (p < .05).

Radiologists’ diagnosis with AI assistance in the subtest dataset

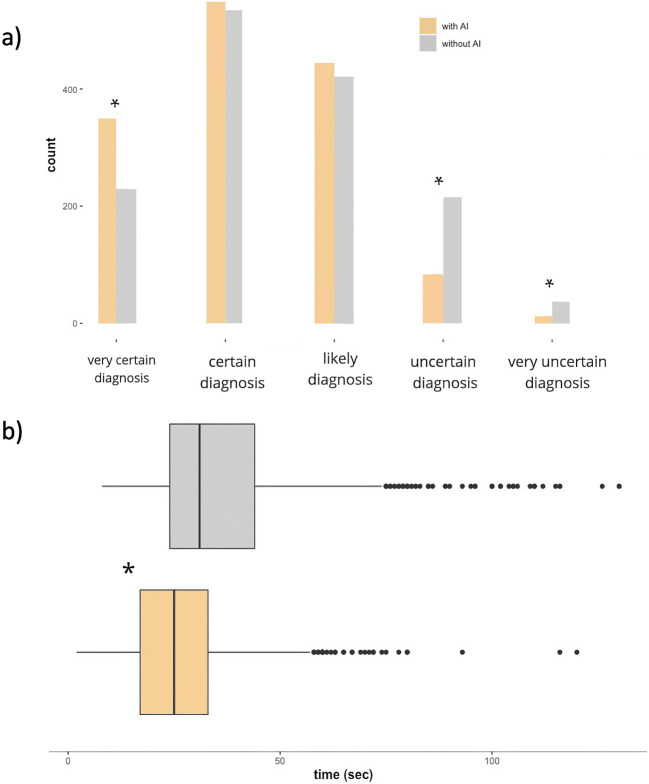

With the AI result presented before the individual diagnosis (AI assistance), the radiologists achieved on average 81.5%, 71.4%, and 91.5% (accuracy, sensitivity, specificity), i.e., higher accuracy and specificity but lower sensitivity than without AI assistance. Both the radiologists from Germany (87.2%, 83.1%, 91.4%) and those from China (75.7%, 59.7%, 91.7%) achieved significantly higher accuracy (p < .05; see Fig. 3). The mean time to decision with AI assistance was 26.9 ± 10.8 s, a significantly shorter interval to diagnosis (p < .05; see Table 4, Fig. 4b). Interreader reliability among the eight radiologists in the round without AI assistance was relatively low (kappa 0.34, fair), reflecting a relatively heterogeneous assessment of the cases. In the round with AI assistance, kappa increased markedly to 0.58 (moderate), indicating more homogeneous diagnostic decisions.
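Fleiss' kappa, as reported above, can be computed from a table in which each row is a case and each column counts how many of the eight readers chose COVID-19 or CAP. The following stdlib sketch is an illustrative implementation of the standard formula, not the irr package's code; the function name is hypothetical.

```python
def fleiss_kappa(counts):
    """Fleiss' kappa from a table where counts[i][j] is the number of raters
    assigning case i to category j; every row must sum to the same rater count."""
    n_cases = len(counts)
    n_raters = sum(counts[0])
    if any(sum(row) != n_raters for row in counts):
        raise ValueError("every case must be rated by the same number of raters")
    # Observed agreement per case, averaged over all cases.
    p_bar = sum(
        (sum(x * x for x in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ) / n_cases
    # Chance agreement from the marginal category proportions.
    n_categories = len(counts[0])
    p_j = [sum(row[j] for row in counts) / (n_cases * n_raters) for j in range(n_categories)]
    p_e = sum(p * p for p in p_j)
    return (p_bar - p_e) / (1 - p_e)
```

A case read as COVID-19 by six readers and as CAP by two would contribute the row `[6, 2]`. With complete agreement on every case and both categories in use, the function returns 1.0; if all ratings fall into a single category, chance agreement equals 1 and kappa is undefined (division by zero).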

Fig. 4.

a Different levels of radiologists' confidence in diagnoses using the 5-point Likert scale with and without AI. b Mean time to diagnosis (seconds)

The mean confidence score also decreased significantly to 2.20 (lower scores indicate higher confidence), with a significantly (p < .05) higher number of “very certain” diagnoses in the AI-assisted round (n = 349 vs. n = 229). Similarly, the numbers of “uncertain” and “very uncertain” diagnoses were significantly reduced with AI assistance (n = 83 vs. n = 217 and n = 13 vs. n = 37, respectively; see Table 4, Fig. 4a).

With AI assistance, the eight radiologists achieved 81.9%, 70.5%, and 91.5% (accuracy, sensitivity, specificity) in the subgroup of cases with earlier COVID-19 stages (early and progressive), compared with 81.8%, 72.0%, and 91.7% in the advanced stages (peak and absorption). In both the early and the later COVID-19 subgroup, AI assistance significantly improved the radiologists’ performance (p < .05).

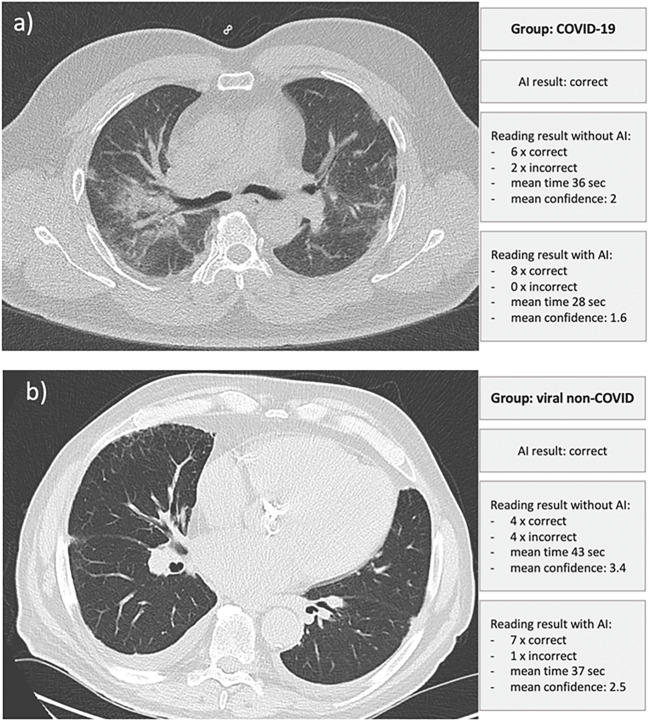

Based on the decisions of the eight radiologists in the first evaluation round without AI assistance, each case could receive at most eight correct decisions (COVID-19 vs. CAP). Cases with six or more correct ratings were classified as “clear cases”, cases with fewer than six as “difficult cases”, and cases with fewer than four correct ratings (less than 50%) as “ambiguous cases”. This analysis by case difficulty showed a small but significant difference (p < .05) in the cases classified as “clear”, in which more errors were made with AI assistance. In the cases classified as “difficult”, the number of incorrect diagnoses decreased significantly (p < .05) with AI assistance. In the cases classified as “ambiguous”, there were numerically fewer errors with AI assistance, but this difference was not significant (p > .05). Figure 5 shows examples of cases correctly classified by the AI, together with the accuracy, confidence, and diagnostic time of the eight radiologists using AI assistance.
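The grouping by case difficulty can be expressed as a small rule on the number of correct unassisted reads per case. The sketch assumes the three groups are mutually exclusive (clear: 6–8, difficult: 4–5, ambiguous: 0–3 correct decisions), which the text implies but does not state explicitly; the thresholds are fixed to the eight-reader design, and the function name is hypothetical.

```python
def case_difficulty(n_correct: int, n_readers: int = 8) -> str:
    """Classify a case by the number of correct reader decisions in the unassisted round.

    Assumption: the groups are mutually exclusive, i.e. 'difficult' covers
    4-5 correct decisions and 'ambiguous' 0-3 (fewer than 50% of eight readers).
    n_readers is only used to validate the input.
    """
    if not 0 <= n_correct <= n_readers:
        raise ValueError("n_correct must lie between 0 and the number of readers")
    if n_correct >= 6:
        return "clear"
    if n_correct >= 4:
        return "difficult"
    return "ambiguous"
```

Under this assumption, the case in Fig. 5b, correctly classified by four of eight radiologists without AI, would fall into the “difficult” group.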

Fig. 5.

Examples of the included cases, each with the correct AI result and the corresponding performance data of the radiologists' readings. a Correct AI classification. Using this result, all eight radiologists chose the correct diagnosis, whereas without AI assistance, two were incorrect. Overall, this resulted in a shorter reporting time and a higher degree of confidence. The centrally located ground-glass opacities and incipient consolidations in the right lung may have caused the partially incorrect results of the radiologists without AI assistance. b Only four of eight radiologists in the initial round without AI correctly classified these images of non-COVID-19 viral pneumonia, which could be due to the discrete, predominantly peripheral infiltrates. The AI classified the images correctly, resulting in a mainly correct diagnosis with a higher degree of confidence in the AI-assisted round (seven of eight radiologists now made the correct decision)

Discussion

Since the onset of the global COVID-19 pandemic in March 2020, it has been widely recognized that, in addition to conventional laboratory tests, radiological identification is a robust option for diagnosing and following up COVID-19 pneumonia. CT scans of COVID-19 patients present characteristic morphological features, which several research groups have exploited to develop AI-based networks for the automated differentiation between COVID-19 pneumonia and pneumonia caused by other pathogens. To the best of our knowledge, this is the first study to analyze the potential improvement in radiologists' decision-making through AI assistance on an international multicenter, multivendor CT dataset from China and Germany. This study provides detailed insights into the advantages of AI-supported diagnosis in terms of accuracy, diagnostic confidence, and time to diagnosis as a benefit for clinical implementation in daily routine.

Our classifier achieved an accuracy comparable to both similar published algorithms and the performance of human experts. Other international multicenter studies using publicly available data reported AI algorithms with accuracies of up to 92% [8, 11–13, 18]. Some of these studies achieved better accuracy, specificity, and sensitivity than our AI model, which may partly be due to the heterogeneity of the included pathogen groups.

COVID-19 pneumonia exhibits different radiological morphologies during the stages of infection and may therefore overlap in appearance with other types of pneumonia, mainly non-COVID-19 viral infections [26, 27]. Previous studies did not consider the established CT-morphology-based definition of the time course, stage, and severity of the disease, which introduces a selection bias by including only early stages of COVID-19 pneumonia with findings typical of COVID-19 [12]. In our study, COVID-19 CT scans were acquired before, during, and after hospitalization and covered all stages of COVID-19, including difficult and overlapping findings, especially at the later stages of pulmonary infection. The training and validation dataset included a heterogeneous set of images from early to absorption stages with mild to extensive severity, which might have favored misclassification in the test dataset because of substantial morphological overlap with other viral pneumonia [26, 27]. This is in line with Harmon et al [13], who examined different disease stages and reported an overall sensitivity of 75.1%, comparable to our study. Moreover, the studies by Zhang et al [11] and Li et al [7] included non-pneumonia CT scans in the control cohort, whose imaging features can be assumed to differ substantially from those of COVID-19, thus improving the accuracy score. The dependence of the AI algorithm's performance on the COVID-19 stage was demonstrated in the present study by comparing the subtest subgroups with earlier (early and progressive) and later (peak and absorption) stages: the algorithm distinguished later COVID-19 stages from other types of pneumonia better.

In addition to developing an AI-based algorithm, our study incorporated the evaluations of eight radiologists on the Chinese-German 180-case subtest dataset with and without AI assistance. The radiologists' final diagnoses improved significantly in accuracy, time, and self-rated confidence when AI assistance was used. These findings are consistent with the results of Bai et al [8] in a larger international cohort; in addition, our data demonstrate the benefit of AI assistance in terms of diagnostic time and confidence. A further subanalysis showed that AI assistance is useful in both early and late COVID-19 stages. It appears particularly useful in the later-stage group, given the poorer differentiation performance of radiologists and the superior performance of the AI algorithm in the later stages. Moreover, an additional analysis demonstrated that AI assistance was most valuable in difficult-to-categorize cases, in which it improved the radiologists’ diagnoses.

In routine clinical work, it is difficult for radiologists to make a dichotomous diagnosis of COVID-19 or CAP based on CT features alone, and their confidence in such diagnoses varies [28, 29]. In our study, AI-assisted diagnosis improved both the speed and the confidence of the diagnostic process. The presented AI model could therefore enhance clinical efficiency, which is crucial when the healthcare system is overloaded during the current pandemic. Routine AI-assisted reporting could be particularly useful during sudden surges of COVID-19 cases, for example in the winter months. An AI assistance system may also be an important tool for supporting younger radiologists who lack experience from the first major pandemic waves. In this sense, AI assistance is also a way to preserve the image-based knowledge gathered in earlier stages of the global pandemic and make it available for potential future COVID-19 waves.

This study found no significant difference between inexperienced and experienced readers in the measured performance metrics, consistent with Giannakis et al [30]. This suggests that both experienced and inexperienced readers can benefit from AI assistance through significantly faster reporting, higher diagnostic accuracy, and greater confidence.

To summarize the strengths of our study, we developed a deep learning model using data from three Chinese centers comprising nearly 1000 patients. We tested the algorithm on an independent Chinese-German test dataset of 600 patients. To the best of our knowledge, the use of a combined Chinese-German test dataset is unique among comparable studies. In addition, we used a large, balanced, and clinically relevant CT dataset of various pneumonias that included all stages of COVID-19. Finally, this is the first large international multicenter study to demonstrate the benefits of AI-based assistance in daily radiology practice during the global COVID-19 pandemic.

Limitations of the present study include its retrospective design and the exclusive inclusion of patients with "CT-positive" pneumonia. This introduces a selection bias, because only patients with visible acute or subacute pulmonary inflammation were included, whereas patients with a negative CT but a positive RT-PCR were not. However, this design reflects the intended use case, since radiologists would request AI support only for CT scans showing pneumonic infiltrates. Furthermore, although we assigned COVID-19 cases to stages based on the time since symptom onset and the CT manifestations, we did not further categorize them into typical, atypical, and indeterminate types. Moreover, both our AI algorithm and the subsequent reader experiment involve only dichotomous decisions between COVID-19 and CAP. Other possible differential diagnoses, e.g., malignant diseases, were not considered, so our method is limited to the clinical context of infectious lung disease. Future AI algorithms might incorporate the clinical setting and laboratory findings to improve classifier performance. Follow-up studies should also examine the types of cases identified herein in which AI assistance is particularly useful, clarifying to what extent the benefit of AI assistance depends on the causative pathogen, the extent of affected lung volume in combination with the detailed COVID-19 stage, or the individual confidence level of the radiologist. In addition, a corresponding AI should be integrated into clinical routine, and its benefit in everyday practice should be assessed in a prospective clinical evaluation. In this context, a large-scale study of user satisfaction with AI assistance in daily radiology practice would be useful.

In conclusion, we developed an AI-based diagnostic tool to classify CT datasets as COVID-19 or CAP. On the independent Chinese-German test dataset, the classifier achieved an accuracy of 76.5%. We demonstrated that using this AI as an assistance system leads to higher diagnostic accuracy, with faster and more confident decisions, than a radiologist-only evaluation. The present study shows for the first time that AI algorithms used as an assistance system can significantly improve radiologists' performance in the diagnostic evaluation of COVID-19 CT scans during an ongoing global pandemic.

Supplementary Information

(DOCX 3894 kb)

Acknowledgments

This work is supported by the Sino-German Center for Research Promotion (SGC) through the project entitled CT-based Deep Learning Algorithm in Diagnosis and evaluation of COVID-19: An International Multi-center Study (C-0007).

Abbreviations

- AI: Artificial intelligence
- CAP: Community-acquired pneumonia
- CCM: COVID-19 classification model
- CNN: Convolutional neural network
- COVID-19: Coronavirus disease 2019
- CT: Computed tomography
- HU: Hounsfield unit
- ISD: IntelliSpace Discovery platform
- LSM: Lung segmentation model
- RT-PCR: Reverse transcription polymerase chain reaction

Funding

This study received funding from the Sino-German Center for Research Promotion (SGC) through the project entitled CT-based Deep Learning Algorithm in Diagnosis and evaluation of COVID-19: An International Multi-center Study (C-0007). Further funding was provided by the Jilin Provincial Key Laboratory of Medical Imaging & Big Data (20200601003JC), the Radiology and Technology Innovation Center of Jilin Province (20190902016TC), and the China International Medical Foundation, Imaging Research, SKY (Z-2014-07-2003-03). This work was also supported by RACOON (NUM) under BMBF grant number 01KX2021.

Declarations

Guarantors

The scientific guarantors of this publication are Huimao ZHANG (Department of Radiology, The First Hospital of Jilin University, Changchun, China) and Thorsten Persigehl (Diagnostic and Interventional Radiology, University Hospital Cologne, Germany).

Conflict of interest

Rahil Shahzad, Frank Thiele, Michael Perkuhn, and Xiaochen Huai are employees of Philips Healthcare. The other authors declare no conflicts of interest, had full control over all data, and guarantee their correctness.

Statistics and biometry

Two of the authors (Jonathan Kottlors and Rahil Shahzad) have significant statistical expertise.

Informed consent

This study involved human subjects. Written informed consent was waived by the Institutional Review Board.

Ethical approval

This retrospective study received ethical approval and informed consent was waived at all participating hospitals (Jilin: 2020-595, Wuhan: [2020]17, Ningbo: PJ-NBEY-KY-2020-194-01, Cologne: 20-1676, Frankfurt: 20-719, and Heidelberg: S-293/2020).

Methodology

• retrospective

• diagnostic or prognostic study

• multicenter study

Footnotes

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Fanyang Meng, Jonathan Kottlors, Huimao Zhang and Thorsten Persigehl contributed equally.

References

- 1. World Health Organization. WHO Coronavirus (COVID-19) Dashboard. Geneva: World Health Organization. Available via https://covid19.who.int. Accessed Sept 2021

- 2. Akl EA, Blažić I, Yaacoub S, et al. Use of chest imaging in the diagnosis and management of COVID-19: a WHO rapid advice guide. Radiology. 2021;298(2):E63–E69. doi: 10.1148/radiol.2020203173

- 3. Fang Y, Zhang H, Xie J, et al. Sensitivity of chest CT for COVID-19: comparison to RT-PCR. Radiology. 2020;296(2):E115–E117. doi: 10.1148/radiol.2020200432

- 4. Ai T, Yang Z, Hou H, et al. Correlation of chest CT and RT-PCR testing for coronavirus disease 2019 (COVID-19) in China: a report of 1014 cases. Radiology. 2020;296(2):E32–E40. doi: 10.1148/radiol.2020200642

- 5. Sun Z, Zhang N, Li Y, Xu X. A systematic review of chest imaging findings in COVID-19. Quant Imaging Med Surg. 2020;10:1058–1079. doi: 10.21037/qims-20-564

- 6. Hosny A, Parmar C, Quackenbush J, Schwartz LH, Aerts HJWL. Artificial intelligence in radiology. Nat Rev Cancer. 2018;18(8):500–510. doi: 10.1038/s41568-018-0016-5

- 7. Li L, Qin L, Xu Z, et al. Using artificial intelligence to detect COVID-19 and community-acquired pneumonia based on pulmonary CT: evaluation of the diagnostic accuracy. Radiology. 2020;296(2):E65–E71. doi: 10.1148/radiol.2020200905

- 8. Bai HX, Wang R, Xiong Z, et al. Artificial intelligence augmentation of radiologist performance in distinguishing COVID-19 from pneumonia of other origin at chest CT. Radiology. 2020;296(3):E156–E165. doi: 10.1148/radiol.2020201491

- 9. Yu Q, Wang Y, Huang S, et al. Multicenter cohort study demonstrates more consolidation in upper lungs on initial CT increases the risk of adverse clinical outcome in COVID-19 patients. Theranostics. 2020;10(12):5641–5648. doi: 10.7150/thno.46465

- 10. Ni Q, Sun ZY, Qi L, et al. A deep learning approach to characterize 2019 coronavirus disease (COVID-19) pneumonia in chest CT images. Eur Radiol. 2020;30(12):6517–6527. doi: 10.1007/s00330-020-07044-9

- 11. Zhang K, Liu X, Shen J, et al. Clinically applicable AI system for accurate diagnosis, quantitative measurements, and prognosis of COVID-19 pneumonia using computed tomography. Cell. 2020;181(6):1423–1433.e11. doi: 10.1016/j.cell.2020.04.045

- 12. Mei X, Lee H-C, Diao K-Y, et al. Artificial intelligence-enabled rapid diagnosis of patients with COVID-19. Nat Med. 2020;26(8):1224–1228. doi: 10.1038/s41591-020-0931-3

- 13. Harmon SA, Sanford TH, Xu S, et al. Artificial intelligence for the detection of COVID-19 pneumonia on chest CT using multinational datasets. Nat Commun. 2020;11(1):4080. doi: 10.1038/s41467-020-17971-2

- 14. Wang S, Zha Y, Li W, et al. A fully automatic deep learning system for COVID-19 diagnostic and prognostic analysis. Eur Respir J. 2020;56(2):2000775. doi: 10.1183/13993003.00775-2020

- 15. Wu G, Yang P, Xie Y, et al. Development of a clinical decision support system for severity risk prediction and triage of COVID-19 patients at hospital admission: an international multicentre study. Eur Respir J. 2020;56(2):2001104. doi: 10.1183/13993003.01104-2020

- 16. Jin C, Chen W, Cao Y, et al. Development and evaluation of an artificial intelligence system for COVID-19 diagnosis. Nat Commun. 2020;11(1):5088. doi: 10.1038/s41467-020-18685-1

- 17. Chassagnon G, Vakalopoulou M, Battistella E, et al. AI-driven quantification, staging and outcome prediction of COVID-19 pneumonia. Med Image Anal. 2021;67:101860. doi: 10.1016/j.media.2020.101860

- 18. Lee EH, Zheng J, Colak E, et al. Deep COVID DeteCT: an international experience on COVID-19 lung detection and prognosis using chest CT. NPJ Digit Med. 2021;4(1):11. doi: 10.1038/s41746-020-00369-1

- 19. Lynch DA, Austin JHM, Hogg JC, et al. CT-definable subtypes of chronic obstructive pulmonary disease: a statement of the Fleischner Society. Radiology. 2015;277(1):192–205. doi: 10.1148/radiol.2015141579

- 20. Shih AR, Nitiwarangkul C, Little BP, et al. Practical application and validation of the 2018 ATS/ERS/JRS/ALAT and Fleischner Society guidelines for the diagnosis of idiopathic pulmonary fibrosis. Respir Res. 2021;22(1):124. doi: 10.1186/s12931-021-01670-7

- 21. Jin Y-H, Cai L, Cheng Z-S, et al. A rapid advice guideline for the diagnosis and treatment of 2019 novel coronavirus (2019-nCoV) infected pneumonia (standard version). Mil Med Res. 2020;7(1):4. doi: 10.1186/s40779-020-0233-6

- 22. Pan F, Ye T, Sun P, et al. Time course of lung changes at chest CT during recovery from coronavirus disease 2019 (COVID-19). Radiology. 2020;295(3):715–721. doi: 10.1148/radiol.2020200370

- 23. Badrinarayanan V, Kendall A, Cipolla R. SegNet: a deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans Pattern Anal Mach Intell. 2017;39(12):2481–2495. doi: 10.1109/TPAMI.2016.2644615

- 24. He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. In: Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition. 2016. Available via https://arxiv.org/pdf/1512.03385.pdf. Accessed 15 Oct 2021

- 25. R Core Team. R: a language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing; 2019.

- 26. Bai HX, Hsieh B, Xiong Z, et al. Performance of radiologists in differentiating COVID-19 from non-COVID-19 viral pneumonia at chest CT. Radiology. 2020;296(2):E46–E54. doi: 10.1148/radiol.2020200823

- 27. Li B, Li X, Wang Y, et al. Diagnostic value and key features of computed tomography in Coronavirus Disease 2019. Emerg Microbes Infect. 2020;9(1):787–793. doi: 10.1080/22221751.2020.1750307

- 28. Prokop M, van Everdingen W, van Rees VT, et al. CO-RADS: a categorical CT assessment scheme for patients suspected of having COVID-19: definition and evaluation. Radiology. 2020;296(2):E97–E104. doi: 10.1148/radiol.2020201473

- 29. Simpson S, Kay FU, Abbara S, et al. Radiological Society of North America Expert Consensus Statement on Reporting Chest CT Findings Related to COVID-19. Endorsed by the Society of Thoracic Radiology, the American College of Radiology, and RSNA. Secondary Publication. J Thorac Imaging. 2020;35(4):219–227. doi: 10.1097/RTI.0000000000000524

- 30. Giannakis A, Móré D, Erdmann S, et al. COVID-19 pneumonia and its lookalikes: how radiologists perform in differentiating atypical pneumonias. Eur J Radiol. 2021;144:110002. doi: 10.1016/j.ejrad.2021.110002
