Abstract

Due to the COVID-19 pandemic, millions of people around the world have been wearing masks, which has negatively affected the reading of facial emotions. In the current study, participants' ability to recognize emotions from whole faces and from the eye region alone (similar to viewing masked faces) was analyzed in relation to psychological factors such as their capacity to empathize and systemize and their degree of autistic traits. Data from 403 healthy adults between 18 and 40 years of age revealed significant differences between the face and eyes-only conditions in both emotion recognition accuracy and emotion intensity ratings, indicating a reduced capacity to recognize the emotions of individuals wearing masks and to perceive their intensity. As expected, more empathetic participants were better at recognizing both ‘facial’ and ‘eyes-only’ emotions, suggesting that empathizers might have an advantage in recognizing the emotions of masked faces. The degree of autistic traits was negatively correlated with emotion recognition in both the face and eyes-only conditions, suggesting that individuals with higher levels of autistic traits may have greater difficulty recognizing emotions in faces both with and without masks. None of the psychological factors had a significant relationship with emotion intensity ratings. Finally, systemizing tendencies were not correlated with either emotion recognition or emotion intensity ratings.

Keywords: COVID-19, Empathizing, Systemizing, Autistic traits, Emotion recognition, Masks

1. Introduction

The naturalist Charles Darwin was one of the first scientists to suggest that humans share a set of basic emotions (Darwin, 2005). In the 1970s, Paul Ekman concluded, based on extensive experiments in different cultures across the world, that there are six universal basic emotions expressed through the face: anger, surprise, disgust, joy, fear, and sadness (Ekman & Friesen, 1971).

Emotion recognition is an important part of human communication. The emotions expressed by one communication partner can affect the behavior and feelings of the other, whose reactions can in turn shape the emotions the first partner expresses. According to the emotions as social information (EASI) model, the emotions expressed by a person can affect the observer by activating inferential processes and affective reactions. With respect to inferential processes, when a person expresses an emotion such as ‘anger,’ the observer can infer that they might have done something wrong and may change their behavior or apologize. With respect to affective reactions, emotions can spread from the expresser to the observer, and this may also influence the likeability of the expresser. For example, expressing happiness may increase the expresser's likeability, and the observer may start feeling happy themselves (Van Kleef, 2009).

Another automatic process which is an important aspect of emotional communication is overt and covert mimicry of facial expressions of emotions (usually measured using facial electromyography) (Bavelas, Black, Lemery, & Mullett, 1986; Dimberg, Andréasson, & Thunberg, 2011; Hsee, Hatfield, Carlson, & Chemtob, 1990). There is also evidence of cross-modal mimicry where facial emotions can alter the vocal pitch of the receivers (see Karthikeyan & Ramachandra, 2017). Finally, recognition of emotions also plays an important role in perceptions of trustworthiness (Oosterhof & Todorov, 2009).

Many studies have shown that the ‘eye’ and the ‘mouth’ regions of the face provide important cues for the recognition of emotions (see Boucher & Ekman, 1975; Eisenbarth & Alpers, 2011; Guarnera, Hilchy, Cascio, Carubba, & Buccheri, 2017; Wegrzyn, Vogt, Kireclioglu, Schneider, & Kissler, 2017). Due to the COVID-19 pandemic, millions of people around the world have been wearing masks. Consequently, an automatic and important nonverbal communication task (reading facial emotions) has been negatively affected (Carbon, 2020; Grundmann, Epstude, & Scheibe, 2021; Marini, Ansani, Paglieri, Caruana, & Viola, 2021; Noyes, Davis, Petrov, Gray, & Ritchie, 2021).

Although perceivers can gain a great deal of information by observing the eye region of their communication partners, losing the information from the mouth region, which masks cover completely, can negatively impact emotion recognition. This can impede both inferential processing and affective reactions: failing to recognize facial emotions accurately may lead to inappropriate behavioral responses by the observer and may also affect the likeability of the person expressing the emotions. Mimicry of facial expressions of emotions, vocal responses to facial emotions, and perceptions of trustworthiness can also be hampered. All of these effects can hinder the formation of friendships and other relationships in social as well as work environments. Furthermore, they can compound the communication difficulties faced by people with neurocognitive disorders (Schroeter, Kynast, Villringer, & Baron-Cohen, 2021).

1.1. Emotion recognition: the potential role of empathizing, systemizing, and autistic traits

Empathy can be divided into cognitive and affective empathy. Cognitive empathy, closely related to theory of mind (ToM), refers to understanding and predicting the emotions of others; affective empathy refers to experiencing the emotions expressed by others (Baron-Cohen & Wheelwright, 2004). Research has shown a positive correlation between emotion recognition ability and empathy (Besel & Yuille, 2010). Studies have also shown that individuals with disorders that affect emotion recognition, such as autism, psychopathy, and antisocial behavior, have an underlying deficit in empathy (Blair, 2005; Hunnikin, Wells, Ash, & Van Goozen, 2019).

Evidence from a large-scale study of 3345 participants from the general population also indicates that autistic traits are positively correlated with the ability to systemize (to analyze rule-governed systems and understand how they work) and negatively correlated with the capacity to empathize (Svedholm-Häkkinen, Halme, & Lindeman, 2018). Given that emotion recognition is related to empathy, and that autistic traits are related to the capacity to empathize (negatively) and to systemize (positively), it is reasonable to hypothesize that recognizing the basic emotions of people wearing masks, by looking only at the eye region, is positively correlated with empathizing but negatively correlated with systemizing and autistic traits. Therefore, the primary aim of the current study was to examine the relationship between the ability to recognize the emotions of people wearing masks (relying mainly on cues from the eye region) and empathizing, systemizing, and autistic traits. Because Guo (2012) has shown that emotion intensity positively influences the accuracy of emotion recognition, we also examined the relationship between emotion intensity ratings and empathizing, systemizing, and autistic traits.

2. Methods

The study was conducted using a web-based survey platform, Qualtrics. Participants were recruited through the authors' university and Qualtrics survey panels; panel participants received compensation from Qualtrics. Four hundred and three participants (males = 218, females = 185) aged 18 to 40 years took part. All were generally healthy, with no reported history of neurological or psychological issues, learning disability, or hearing or visual problems (vision was normal or corrected-to-normal). Participants had to identify the emotion expressed by a woman by selecting one of two labels: the target and a foil of the same valence. For example, the target and foil pairs included HAPPY vs. Surprise, AFRAID vs. Angry, DISGUST vs. Sad, and DISTRESS vs. Sad (capitalized words are the targets). This method has been used successfully by Baron-Cohen, Wheelwright, and Jolliffe (1997). Participants were also shown pictures of only the upper part of the face of the same woman expressing the same emotions (similar to viewing the emotions of a person wearing a mask). Although the whole upper part of the face was shown, participants had to rely largely on the eye region to decode the emotions: a study by Wegrzyn et al. (2017), using fine-grained masking of different regions of faces expressing basic emotions, revealed the ‘eyes’ and ‘mouth’ regions to be the most diagnostic regions of the upper and lower face, respectively, for emotion recognition. Therefore, upper-face stimuli are hereafter referred to as eye stimuli or eye trials.
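Because each item offered only two labels, a participant responding at random would be expected to get about half the items right, so accuracy is best read against a 50% baseline rather than 0%. The Python sketch below (standard library only) makes this concrete for one 10-item condition, assuming independent guesses with probability 0.5; it is an illustrative aid, not an analysis reported in the study.

```python
from math import comb


def p_at_least(k: int, n: int = 10, p_guess: float = 0.5) -> float:
    """Binomial probability of k or more correct out of n items by guessing alone."""
    return sum(comb(n, i) * p_guess**i * (1 - p_guess) ** (n - i) for i in range(k, n + 1))


N_ITEMS = 10  # pictures per condition (face or eyes-only)
expected_by_chance = N_ITEMS * 0.5
print(expected_by_chance)        # 5.0
print(round(p_at_least(8), 4))   # 0.0547, i.e. 56/1024
```

The group means reported in Section 3.3 (7.65 to 8.95 items correct out of 10) sit well above this guessing baseline.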

Participants also rated the intensity of each emotion on a 100-point scale for both the face and the eye stimuli. Seven emotions (happy, sad, angry, afraid, surprise, disgust, and distress), taken from Baron-Cohen et al. (1997), were used in both the ‘face’ and ‘eyes’ trials; surprise, happy, and angry were each presented twice, with different poses. In total, there were 10 pictures for the ‘face’ trials and 10 pictures for the ‘eyes’ trials (see Fig. 1 for an example). The order of the ‘face’ and ‘eyes’ trials was counterbalanced across participants: half of the participants saw the ‘face’ trials first and the other half saw the ‘eyes’ trials first. The order of stimuli within each set of trials was randomized. Finally, following the emotion recognition task, participants completed three questionnaires: the Empathy Quotient (EQ; Baron-Cohen & Wheelwright, 2004) for ‘empathizing,’ the Systemizing Quotient (SQ; Baron-Cohen, Richler, Bisarya, Gurunathan, & Wheelwright, 2003) for ‘systemizing,’ and the Autism Quotient (AQ; Baron-Cohen, Wheelwright, Skinner, Martin, & Clubley, 2001) for ‘autistic traits.’ The EQ had 40 questions with a maximum possible score of 80, the SQ had 75 questions with a maximum possible score of 150, and the AQ had 50 questions with a maximum possible score of 50. Example EQ statements are “I can easily tell if someone else wants to enter a conversation” and “I can pick up quickly if someone says one thing but means another.” The response choices are strongly agree, slightly agree, slightly disagree, and strongly disagree. Example AQ statements are “I prefer to do things with others rather than on my own” and “I frequently find that I don't know how to keep a conversation going.” The response choices are definitely agree, slightly agree, slightly disagree, and definitely disagree.
Example SQ statements are “I find it very easy to use train timetables, even if this involves several connections” and “When I look at a building, I am curious about the precise way it was constructed.” The response choices are strongly agree, slightly agree, slightly disagree, and strongly disagree. Although low empathy and high systemizing tendencies are part of autistic traits, the AQ mainly focuses on the “broader phenotype” and contains questions related to social skills, attention, communication, and imagination (Baron-Cohen et al., 2001).
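The maximum scores above are consistent with the conventional scoring of these questionnaires, in which each EQ and SQ item contributes 0, 1, or 2 points (2 for a strong response in the scored direction, 1 for a slight one) and each AQ item contributes 0 or 1 point. The Methods do not spell out the scoring rule, so the Python sketch below assumes it; which items are agree-keyed or disagree-keyed is a hypothetical placeholder, not the published scoring key. It also checks the arithmetic behind the maximum scores and the 165-question total mentioned in the Limitations.

```python
# Response codes, ordered from the "agree" end to the "disagree" end of the scale.
# (EQ/SQ: strongly agree ... strongly disagree; AQ: definitely agree ... definitely disagree.)
STRONG_AGREE, SLIGHT_AGREE, SLIGHT_DISAGREE, STRONG_DISAGREE = 0, 1, 2, 3


def score_eq_sq_item(response: int, agree_keyed: bool) -> int:
    """Assumed 0/1/2 scoring: 2 for the strong answer on the keyed side, 1 for the slight one."""
    if not agree_keyed:
        response = 3 - response  # disagree-keyed item: mirror the scale
    return {STRONG_AGREE: 2, SLIGHT_AGREE: 1}.get(response, 0)


def score_aq_item(response: int, agree_keyed: bool) -> int:
    """Assumed 0/1 scoring: 1 point for either answer on the keyed side of the scale."""
    if not agree_keyed:
        response = 3 - response
    return 1 if response in (STRONG_AGREE, SLIGHT_AGREE) else 0


def total_score(responses, keys, scorer) -> int:
    """Sum item scores; `keys` holds True for agree-keyed items, False for disagree-keyed."""
    return sum(scorer(r, k) for r, k in zip(responses, keys))


# Item counts reported in the Methods and the maximum totals they imply.
N_ITEMS = {"EQ": 40, "SQ": 75, "AQ": 50}
POINTS_PER_ITEM = {"EQ": 2, "SQ": 2, "AQ": 1}
MAX_SCORE = {q: N_ITEMS[q] * POINTS_PER_ITEM[q] for q in N_ITEMS}
print(MAX_SCORE)               # {'EQ': 80, 'SQ': 150, 'AQ': 50}
print(sum(N_ITEMS.values()))   # 165

# Hypothetical 3-item example: the keys below are placeholders, not the published keys.
responses = [STRONG_AGREE, SLIGHT_AGREE, STRONG_DISAGREE]
keys = [True, True, False]
print(total_score(responses, keys, score_eq_sq_item))  # 2 + 1 + 2 = 5
print(total_score(responses, keys, score_aq_item))     # 1 + 1 + 1 = 3
```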

Fig. 1.

Example of face and eyes-only stimuli used for the study. The pictures above represent the emotion ‘happy’ (stimuli from Baron-Cohen et al., 1997).

3. Results

3.1. Recognition of face vs eyes-only emotions

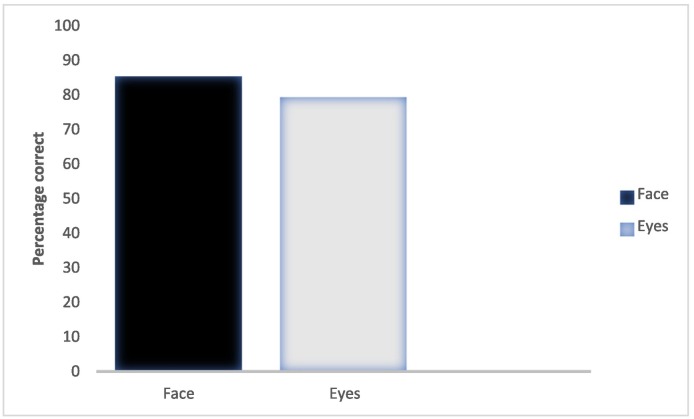

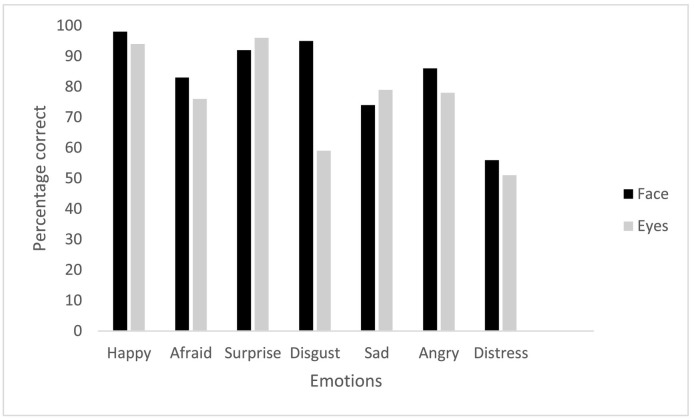

Overall, a paired-samples t-test revealed a significant difference between the face and eyes-only conditions in emotion recognition accuracy [t(2820) = 8.67, p < 0.001] (see Fig. 2 for the mean percentage of correct recognition responses). Paired-samples t-tests conducted separately for happy [t(402) = 3.51, p < 0.001], afraid [t(402) = 2.53, p = 0.012], surprise [t(402) = −2.96, p = 0.003], disgust [t(402) = 13.63, p < 0.001], sad [t(402) = −1.86, p = 0.064], anger [t(402) = 6.11, p < 0.001], and distress [t(402) = 1.59, p = 0.113] revealed a significant difference between face and eyes-only recognition for all emotions except sadness and distress (see Fig. 3 for the mean percentage of correct recognition responses for individual emotions). As seen in Fig. 3, mean recognition accuracy was highest for happy in the face condition and for surprise in the eyes-only condition, and lowest for distress in both conditions. Surprise and sadness were the only emotions recognized better in the eyes-only condition (surprise: faces = 92%, eyes = 96%; sad: faces = 74%, eyes = 79%).
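A paired-samples t-test works on each participant's difference between the two conditions: t is the mean difference divided by its standard error, with n − 1 degrees of freedom. The Python sketch below uses made-up accuracies for six hypothetical participants, not data from this study. The reported overall df of 2820 is consistent with pooling 403 participants × 7 emotions = 2821 participant-by-emotion pairs, although the text does not state exactly how the overall test was set up.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(x, y):
    """Paired-samples t statistic and degrees of freedom for matched lists x and y."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 pairs")
    diffs = [a - b for a, b in zip(x, y)]      # one difference per participant
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / sqrt(n))  # mean difference / standard error
    return t, n - 1


# Hypothetical per-participant accuracies (proportion correct): face vs. eyes-only.
face = [0.9, 0.8, 1.0, 0.7, 0.9, 0.8]
eyes = [0.8, 0.8, 0.9, 0.6, 0.7, 0.8]
t, df = paired_t(face, eyes)
print(f"t({df}) = {t:.2f}")

# df if every participant-by-emotion pair is treated as one observation.
pooled_df = 403 * 7 - 1
print(pooled_df)  # 2820
```

With SciPy installed, `scipy.stats.ttest_rel(face, eyes)` returns the same t statistic together with a two-sided p-value.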

Fig. 2.

Mean percentage of correct recognition of emotions for faces (black) and eyes-only conditions (white).

Fig. 3.

Mean percentage of correct recognition of 7 basic emotions for faces (black) and eyes-only conditions (white).

Overall, there was also a significant difference between face and eyes-only emotion intensity ratings [t(2820) = 6.44, p < 0.001]. The means and standard deviations for the emotion intensities are provided in Table 1. Paired-samples t-tests conducted separately for the intensities of happy [t(402) = 8.492, p < 0.001], afraid [t(402) = −8.540, p < 0.001], surprise [t(402) = −1.576, p = 0.116], disgust [t(402) = 16.773, p < 0.001], sad [t(402) = −1.483, p = 0.139], anger [t(402) = 0.895, p = 0.371], and distress [t(402) = 1.680, p = 0.094] revealed a significant difference between face and eyes-only intensity ratings only for happy, afraid, and disgust.

Table 1.

Means and standard deviations (SD) for face and eyes-only emotion intensities (100-point scale) for various emotions.

| Emotion | Faces, M (SD) | Eyes-only, M (SD) |
|---|---|---|
| Happy | 77.3 (15.5) | 70.9 (17.3) |
| Afraid | 51.9 (22.9) | 68.0 (21.0) |
| Surprise | 72.1 (16.2) | 73.3 (16.3) |
| Disgust | 75.4 (18.2) | 54.5 (24.2) |
| Sad | 55.7 (27.0) | 57.4 (23.7) |
| Anger | 65.7 (19.6) | 64.9 (18.5) |
| Distress | 78.3 (17.4) | 76.8 (17.3) |
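Table 1 does not report the variability of the paired differences, so effect sizes cannot be recovered from it, but the direction of each face minus eyes-only difference can be checked against the sign of the paired t values reported in Section 3.1 (a positive t corresponds to higher ratings for faces). A small Python sketch using the Table 1 means:

```python
# Mean intensity ratings from Table 1: (faces, eyes-only).
TABLE_1 = {
    "Happy": (77.3, 70.9),
    "Afraid": (51.9, 68.0),
    "Surprise": (72.1, 73.3),
    "Disgust": (75.4, 54.5),
    "Sad": (55.7, 57.4),
    "Anger": (65.7, 64.9),
    "Distress": (78.3, 76.8),
}
# Paired t values for the intensity ratings, as reported in Section 3.1.
REPORTED_T = {"Happy": 8.492, "Afraid": -8.540, "Surprise": -1.576, "Disgust": 16.773,
              "Sad": -1.483, "Anger": 0.895, "Distress": 1.680}

# Positive difference = faces rated as more intense; rounding removes float noise.
diffs = {emotion: round(faces - eyes, 1) for emotion, (faces, eyes) in TABLE_1.items()}

for emotion, diff in diffs.items():
    agrees = (diff > 0) == (REPORTED_T[emotion] > 0)
    print(f"{emotion:<9} {diff:+5.1f}  sign matches t: {agrees}")
```

All seven signs agree, and the three largest mean differences (disgust, afraid, happy) are also the three that reached significance.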

3.2. Correlation between face and eyes-only emotions with EQ, SQ, and AQ

The means and standard deviations for EQ, SQ, and AQ are provided in Table 2. Pearson product-moment correlations revealed a significant positive correlation between EQ and recognition of both facial emotions (r = 0.217, n = 403, p < 0.001) and eyes-only emotions (r = 0.176, n = 403, p < 0.001). Significant negative correlations were seen between AQ and recognition of both facial (r = −0.221, n = 403, p < 0.001) and eyes-only emotions (r = −0.179, n = 403, p < 0.001). SQ was not significantly correlated with recognition in either the face condition (r = −0.023, n = 403, p = 0.650) or the eyes-only condition (r = −0.056, n = 403, p = 0.259). There was no significant correlation between EQ, SQ, or AQ and the emotion intensity ratings (p > 0.05). Finally, there was a significant negative correlation between AQ and EQ (r = −0.525, n = 403, p < 0.001) and a significant positive correlation between SQ and EQ (r = 0.399, n = 403, p < 0.001). No significant correlation was seen between SQ and AQ (r = −0.065, p = 0.191).

Table 2.

Means and standard deviations (SD) for Empathy Quotient (EQ), Systemizing Quotient (SQ) and Autism Quotient (AQ).

| Variable | Mean | SD |
|---|---|---|
| EQ (max = 80) | 42.5 | 12.35 |
| SQ (max = 150) | 63.6 | 25.19 |
| AQ (max = 50) | 20.6 | 5.56 |

3.3. Gender differences on key measures

The mean percentage of correct face-emotion recognition was 83.07% for males and 89.51% for females. The mean percentage of correct eyes-only recognition was 76.47% for males and 84.27% for females. An independent-samples t-test revealed that females (M = 8.95, SD = 1.09, items correct out of 10) recognized facial emotions significantly better than males (M = 8.30, SD = 1.31), t(401) = −5.37, p = 0.005. The same pattern emerged for eyes-only emotions: females (M = 8.43, SD = 1.09) were significantly more accurate than males (M = 7.65, SD = 1.37), t(399) = 6.38, p < 0.001. The mean EQ score for females (M = 47.32, SD = 11.50) was significantly higher than that for males (M = 38.32, SD = 11.50), t(403) = −7.819, p < 0.001. The mean SQ score for males (M = 69.55, SD = 26.22) was significantly higher than that for females (M = 56.55, SD = 22.01), t(403) = 5.290, p < 0.001. Finally, the mean AQ score for males (M = 21.94, SD = 4.870) was significantly higher than that for females (M = 19.01, SD = 5.915), t(403) = 5.454, p < 0.001.
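The independent-samples t values above can be approximately reproduced from the group means, SDs, and sizes (185 females, 218 males). The Python sketch below assumes a pooled-variance (Student's) test, which the text does not state explicitly; for this design that test has n₁ + n₂ − 2 = 401 degrees of freedom. Small discrepancies are expected because the published means and SDs are rounded, and the recognition means appear to be items correct out of 10, matching the reported percentages.

```python
from math import sqrt


def pooled_t(m1, sd1, n1, m2, sd2, n2):
    """Student's independent-samples t (pooled variance) from summary statistics."""
    df = n1 + n2 - 2
    sp2 = ((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / df  # pooled variance
    se = sqrt(sp2 * (1 / n1 + 1 / n2))                  # SE of the mean difference
    return (m1 - m2) / se, df


# Face-emotion recognition, females vs. males (summary statistics from Section 3.3).
t_face, df = pooled_t(8.95, 1.09, 185, 8.30, 1.31, 218)
# EQ totals, females vs. males.
t_eq, _ = pooled_t(47.32, 11.50, 185, 38.32, 11.50, 218)
print(df, round(t_face, 2), round(t_eq, 2))
```

This yields |t| ≈ 5.36 for face recognition and ≈ 7.83 for EQ, close to the reported 5.37 and 7.819; the sign simply depends on which group is entered first.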

3.4. Validity and internal consistency

Convergent validity of the eyes test was assessed by correlating it with the face test; the Pearson correlation was significant (r = 0.723, p < 0.001). Among the psychological measures, AQ and EQ were significantly negatively correlated (r = −0.525, n = 403, p < 0.001) and SQ and EQ were significantly positively correlated (r = 0.399, n = 403, p < 0.001), whereas AQ and SQ were not significantly correlated (r = −0.065, p = 0.191; see Section 3.2). Internal consistency, measured by the item-rest correlation, was 0.723 for the face and eyes-only emotion tasks. The item-rest correlations for EQ, SQ, and AQ were 0.496, 0.331, and 0.253, respectively.

4. Discussion

The results of this online study showed that people were better at recognizing emotions expressed by whole faces than emotions expressed by the eye region alone. The whole face is important for recognizing basic emotions, and the results point to a downside of mask wearing in social situations. This is in line with recent studies that have shown negative effects of mask wearing on the accuracy of emotion recognition (Carbon, 2020; Grundmann et al., 2021; Marini et al., 2021; Noyes et al., 2021). However, recognition of sadness (accuracy for ‘sad faces’ = 74%; ‘sad eyes’ = 79%) and distress (accuracy for ‘distress faces’ = 56%; ‘distress eyes’ = 51%) was not significantly affected when only the eye region was available. In fact, recognition accuracy for sadness increased when only the eye region was shown, although this increase was not statistically significant. This agrees with previous studies that have shown the importance of the eyes in detecting sadness (Boucher & Ekman, 1975; Eisenbarth & Alpers, 2011; Guarnera et al., 2017; Guo, 2012). However, it contrasts with a recent study by Marini et al. (2021), which showed a drop in accuracy for detecting sadness from 93% in the no-mask condition to 70% in the mask condition; they attributed this to a ceiling effect in the no-mask condition. To our knowledge, this is the first study with a large sample to show the saliency of eye cues in detecting distress; accuracy was lower for eyes than for whole faces, but not significantly so. This finding is similar to that of Baron-Cohen et al. (1997), in which 46% of participants passed the distress trial when viewing the whole face compared with 44% when viewing the eye region alone; that study, however, had only 50 participants.

In addition, a paired t-test revealed that ‘surprise’ was recognized significantly better in the eyes-only condition. This finding contrasts with other studies that have shown the importance of the eyes for fear, sadness, and anger but not surprise (Eisenbarth & Alpers, 2011; Guarnera et al., 2017). It is, however, consistent with Baron-Cohen et al. (1997), who found an increase in the pass rate for surprise from 82.4% (faces) to 88.2% (eyes) in a small sample of just 17 participants. The current findings are also supported by a study showing that people gazed more frequently at the eye region for angry, fearful, and surprised faces than for other emotions (Guo, 2012). In summary, recognition of sadness, distress, and surprise may not be affected by mask wearing; people might even be somewhat better at recognizing surprise, and possibly sadness, in people wearing masks.

Overall, participants also rated facial emotions as more ‘intense’ than eyes-only emotions. However, an analysis of individual emotions showed that this was true only for happy and disgust; for ‘afraid’, participants in fact rated eyes-only emotions as more intense. This suggests that, for at least some basic emotions, people may not have difficulty gauging the intensity of emotions of people wearing masks.

Overall, EQ and AQ, but not SQ, were associated with recognition of both face and eyes-only emotions. As expected, people who were more empathetic were better at recognizing both ‘facial’ and ‘eyes-only’ emotions than people with less empathy (Besel & Yuille, 2010). This indicates that the capacity to empathize might be a benefit in recognizing the emotions of masked faces. The significant negative correlations between AQ and recognition of facial and eyes-only emotions indicate that people with higher levels of autistic traits had greater difficulty recognizing emotions in both conditions, and thus may have difficulty recognizing the emotions of people both wearing and not wearing masks. The correlation was somewhat stronger for facial emotions (r = −0.221) than for eyes-only emotions (r = −0.179). People with higher levels of autistic traits already have difficulty reading facial emotions (Uljarevic & Hamilton, 2013), and mask wearing may not add further difficulty. It is also important to note that empathizing and autistic traits were significantly correlated only with emotion recognition, not with emotion intensity ratings; this finding needs to be explored further using emotions of different intensity levels. Systemizing was the only variable that had no significant relationship with either emotion recognition or emotion intensity ratings (for both faces and eyes).
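Whether one correlation is reliably stronger than another can be tested formally. Because both AQ correlations are computed in the same sample and share the AQ variable, they are dependent; one standard option, swapped in here and not used in the paper, is the Z test for correlated correlations of Meng, Rosenthal, and Rubin (1992). The Python sketch below assumes that the face and eyes-only accuracy correlation of r = 0.723 reported in Section 3.4 is the appropriate correlation between the two accuracy measures. Under that assumption the reported values give |Z| of about 1.16 (two-sided p of about 0.25), below the conventional 1.96 threshold.

```python
from math import atanh, sqrt
from statistics import NormalDist


def compare_dependent_rs(r1, r2, r12, n):
    """Meng-Rosenthal-Rubin Z test for two correlations that share one variable.

    r1: corr(AQ, face accuracy); r2: corr(AQ, eyes accuracy);
    r12: corr(face accuracy, eyes accuracy); n: sample size.
    """
    rbar2 = (r1**2 + r2**2) / 2                    # mean of the squared correlations
    f = min((1 - r12) / (2 * (1 - rbar2)), 1.0)    # f is capped at 1
    h = (1 - f * rbar2) / (1 - rbar2)
    z = (atanh(r1) - atanh(r2)) * sqrt((n - 3) / (2 * (1 - r12) * h))
    p = 2 * (1 - NormalDist().cdf(abs(z)))         # two-sided p-value
    return z, p


z, p = compare_dependent_rs(-0.221, -0.179, 0.723, 403)
print(round(z, 2), round(p, 2))
```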

Interestingly, EQ and SQ had a significant positive correlation. This was unexpected and inconsistent with previous studies (see Greenberg, Warrier, Allison, & Baron-Cohen, 2018). However, it is consistent with a recent study by Sindermann, Cooper, and Montag (2019), who attributed this relationship to cognitive components shared by the two measures. Although the relationship between these psychological measures was not the focus of this study, this interesting finding should be explored further in future investigations.

Overall, there were gender differences on all the key measures. Females were significantly better than males at both face and eyes-only emotion recognition, consistent with other studies showing a female advantage in decoding emotions (Olderbak, Wilhelm, Hildebrandt, & Quoidbach, 2018; Sullivan, Campbell, Hutton, & Ruffman, 2017; Thompson & Voyer, 2014). Females also scored significantly higher on the EQ, whereas males scored significantly higher on the SQ and AQ. These findings agree with a recent large-scale study of 671,606 participants (Greenberg et al., 2018).

Given the downside of mask wearing for emotion recognition, people may need to pay more attention to nonverbal communication, such as body language, gestures, and vocal emotions. Replacing surgical and other standard masks with transparent masks or face shields could also help (Carbon, 2020; Marini et al., 2021; Mheidly, Fares, Zalzale, & Fares, 2020). Schroeter et al. (2021) have recommended “teleconsultation”, which can include non-medical services such as counseling, speech therapy, and psychotherapy, for people with various neurocognitive disorders. This would give clients and caregivers an effective way of communicating with professionals in a mask-free setting.

4.1. Limitations and future research

To the best of our knowledge, this is the first study to explore, indirectly, the effects of mask wearing on emotion recognition in relation to psychological factors such as empathizing, systemizing, and autistic traits. Although showing participants pictures of the upper face was similar to showing pictures of people wearing masks, there may have been qualitative differences that affected the results. However, the face stimuli used in the current study were developed by Baron-Cohen et al. (1997): an actress posed various facial expressions, and the emotions were selected by two independent judges. We believe that using the same stimuli both for whole faces and to represent masked faces (by cropping out the lower half of the faces) may offer more validity than merely presenting photographs of masked faces expressing various emotions.

Moreover, emotion recognition in social situations is dynamic and usually does not involve reading the emotions of static faces, so the still pictures used in the current study could have affected the findings. Because the study was conducted online and included several questionnaires (165 questions in total), we wanted to keep the emotion recognition task as simple as possible. We therefore used only the pictures from Baron-Cohen et al. (1997), which feature a single female actor. Using pictures of multiple actors of both genders would add more information to such studies, although Baron-Cohen et al. (1997) showed that these effects are robust and not affected by the gender of the stimuli. Finally, because the study was conducted online, some participants may have completed it on smartphones, where the stimuli appear much smaller than on desktop computers.

As people all around the world are affected by COVID-19 and are wearing masks, their unconscious communicative skill of emotion recognition may be negatively affected. Because this study was limited to American participants, administering it to individuals from other cultures and countries would greatly increase the validity and applicability of the findings.

4.2. Conclusion

The results from this study shed light on the relationship between basic emotion recognition and psychological factors such as empathizing, systemizing, and autistic traits. Overall, whole faces are important for emotion recognition. Empathizing and autistic traits were significantly correlated with emotion recognition, albeit in opposite directions. None of the psychological factors had a significant correlation with emotion intensity ratings. Systemizing was the only factor that had no significant correlation with either emotion recognition or intensity ratings. Despite the disadvantages of mask wearing for emotion recognition, it is reassuring that at least some basic emotions, such as sadness, distress, and surprise, may not be affected by masks. Alternative means of communication and the use of teleconsultation may mitigate some of the negative effects of mask wearing.

CRediT authorship contribution statement

Vijayachandra Ramachandra: Conceptualization, Methodology, Writing-original draft, Writing-review and editing, Data Analysis, Project Administration. Hannah Longacre: Writing-review and editing, data organization.

Funding

This work was supported by Marywood University Faculty Development Funds.

Acknowledgments

We would like to thank the Qualtrics panel for help with data collection. We also wish to thank the anonymous reviewers for their valuable feedback.

References

- Baron-Cohen S., Richler J., Bisarya D., Gurunathan N., Wheelwright S. The systemizing quotient: An investigation of adults with Asperger syndrome or high-functioning autism, and normal sex differences. Philosophical Transactions of the Royal Society of London B: Biological Sciences. 2003;358(1430):361–374. doi: 10.1098/rstb.2002.1206.
- Baron-Cohen S., Wheelwright S. The empathy quotient: An investigation of adults with Asperger syndrome or high functioning autism, and normal sex differences. Journal of Autism and Developmental Disorders. 2004;34:163–175. doi: 10.1023/B:JADD.0000022607.19833.00.
- Baron-Cohen S., Wheelwright S., Jolliffe T. Is there a “language of the eyes”? Evidence from normal adults and adults with autism or Asperger syndrome. Visual Cognition. 1997;4:311–331.
- Baron-Cohen S., Wheelwright S., Skinner R., Martin J., Clubley E. The autism-spectrum quotient (AQ): Evidence from Asperger syndrome/high-functioning autism, males and females, scientists and mathematicians. Journal of Autism and Developmental Disorders. 2001;31(1):5–17. doi: 10.1023/A:1005653411471.
- Bavelas J.B., Black A., Lemery C.R., Mullett J. “I show how you feel”: Motor mimicry as a communicative act. Journal of Personality and Social Psychology. 1986;50:322–329.
- Besel L.D.S., Yuille J.C. Individual differences in empathy: The role of facial expression recognition. Personality and Individual Differences. 2010;49(2):107–112. doi: 10.1016/j.paid.2010.03.013.
- Blair R.J.R. Responding to the emotions of others: Dissociating forms of empathy through the study of typical and psychiatric populations. Consciousness and Cognition. 2005;14(4):698–718. doi: 10.1016/j.concog.2005.06.004.
- Boucher J.D., Ekman P. Facial areas and emotional information. Journal of Communication. 1975;25(2):21–29. doi: 10.1111/j.1460-2466.1975.tb00577.x.
- Carbon C.C. Wearing face masks strongly confuses counterparts in reading emotions. Frontiers in Psychology. 2020;11. doi: 10.3389/fpsyg.2020.566886.
- Darwin C. The expression of the emotions in man and animals. New York, NY: Appleton; 2005 (original work published 1872).
- Dimberg U., Andréasson P., Thunberg M. Emotional empathy and facial reactions to facial expressions of emotions. Journal of Psychophysiology. 2011;25:26–31.
- Eisenbarth H., Alpers G.W. Happy mouth and sad eyes: Scanning emotional facial expressions. Emotion. 2011;11(4):860–865. doi: 10.1037/a0022758.
- Ekman P., Friesen W.V. Constants across cultures in the face and emotion. Journal of Personality and Social Psychology. 1971;17(2):124–129. doi: 10.1037/h0030377.
- Greenberg D.M., Warrier V., Allison C., Baron-Cohen S. Testing the empathizing-systemizing theory of sex differences and the extreme male brain theory of autism in half a million people. Proceedings of the National Academy of Sciences of the United States of America. 2018;115(48):12152–12157. doi: 10.1073/pnas.1811032115.
- Grundmann F., Epstude K., Scheibe S. Face masks reduce emotion-recognition accuracy and perceived closeness. PLoS ONE. 2021;16(4). doi: 10.1371/journal.pone.0249792.
- Guarnera M., Hilchy Z., Cascio M., Carubba S., Buccheri S. Facial expressions and the ability to express emotions from the eyes or mouth: A comparison between children and adults. Journal of Genetic Psychology. 2017;178(6):309–318. doi: 10.1080/00221325.2017.1361377.
- Guo K. Holistic gaze strategy to categorize facial expression of varying intensities. PLoS ONE. 2012;7(8). doi: 10.1371/journal.pone.0042585.
- Hsee C.K., Hatfield E., Carlson J.G., Chemtob C. The effect of power on susceptibility to emotional contagion. Cognition & Emotion. 1990;4:327–340.
- Hunnikin L.M., Wells A.E., Ash D.P., Van Goozen S.H. The nature and extent of emotion recognition and empathy impairments in children showing disruptive behaviour referred into a crime prevention programme. European Child & Adolescent Psychiatry. 2019:1–9. doi: 10.1007/s00787-019-01358-w.
- Karthikeyan S., Ramachandra V. Are vocal pitch changes in response to facial expressions of emotions potential cues of empathy? A preliminary report. Journal of Psycholinguistic Research. 2017;46(2):457–468. doi: 10.1007/s10936-016-9446-y.
- Marini M., Ansani A., Paglieri F., Caruana F., Viola M. The impact of facemasks on emotion recognition, trust attribution and re-identification. Scientific Reports. 2021;11:5577. doi: 10.1038/s41598-021-84806-5.
- Mheidly N., Fares M.Y., Zalzale H., Fares J. Effect of face masks on interpersonal communication during the COVID-19 pandemic. Frontiers in Public Health. 2020;8. doi: 10.3389/fpubh.2020.582191.
- Noyes E., Davis J.P., Petrov N., Gray K.L.H., Ritchie K.L. The effect of face masks and sunglasses on identity and expression recognition with super-recognizers and typical observers. Royal Society Open Science. 2021.
- Olderbak S., Wilhelm O., Hildebrandt A., Quoidbach J. Sex differences in facial emotion perception ability across the lifespan. Cognition & Emotion. 2018;22:1–10. doi: 10.1080/02699931.2018.1454403.
- Oosterhof N.N., Todorov A. Shared perceptual basis of emotional expressions and trustworthiness impressions from faces. Emotion. 2009;9(1):128–133. doi: 10.1037/a0014520.
- Schroeter M.L., Kynast J., Villringer A., Baron-Cohen S. Face masks protect from infection but may impair social cognition in older adults and people with dementia. Frontiers in Psychology. 2021;12. doi: 10.3389/fpsyg.2021.640548.
- Sindermann C., Cooper A., Montag C. Empathy, autistic tendencies, and systemizing tendencies: Relationships between standard self-report measures. Frontiers in Psychiatry. 2019;10:307. doi: 10.3389/fpsyt.2019.00307.
- Sullivan S., Campbell A., Hutton S.B., Ruffman T. What's good for the goose is not good for the gander: Age and gender differences in scanning emotion faces. The Journals of Gerontology, Series B: Psychological Sciences & Social Sciences. 2017;72:441–447. doi: 10.1093/geronb/gbv033.
- Svedholm-Häkkinen A.M., Halme S., Lindeman M. Empathizing and systemizing are differentially related to dimensions of autistic traits in the general population. International Journal of Clinical and Health Psychology. 2018;18(1):35–42. doi: 10.1016/j.ijchp.2017.11.001.
- Thompson A.E., Voyer D. Sex differences in the ability to recognise non-verbal displays of emotion: A meta-analysis. Cognition & Emotion. 2014;28:1164–1195. doi: 10.1080/02699931.2013.875889.
- Uljarevic M., Hamilton A. Recognition of emotions in autism: A formal meta-analysis. Journal of Autism and Developmental Disorders. 2013;43:1517–1526. doi: 10.1007/s10803-012-1695-5.
- Van Kleef G.A. How emotions regulate social life: The emotions as social information (EASI) model. Current Directions in Psychological Science. 2009;18:184–188. doi: 10.1111/j.1467-8721.2009.01633.x.
- Wegrzyn M., Vogt M., Kireclioglu B., Schneider J., Kissler J. Mapping the emotional face. How individual face parts contribute to successful emotion recognition. PLoS ONE. 2017;12(5). doi: 10.1371/journal.pone.0177239.