Abstract

This paper proposes a new deep learning approach to better understand how optimistic and pessimistic feelings are conveyed in Twitter conversations about COVID-19. A pre-trained transformer embedding is used to extract the semantic features, and several network architectures are compared. Model performance is evaluated on two new, publicly available Twitter corpora of crisis-related posts. The best performing pessimism and optimism detection models are based on bidirectional long short-term memory networks.

Experimental results on four periods of the COVID-19 pandemic show how the proposed approach can model optimism and pessimism in the context of a health crisis. The analysed data comprise a total of 150,503 tweets and 51,319 unique users. Conversations are characterised in terms of emotional signals and shifts to unravel empathy and support mechanisms. Conversations with stronger pessimistic signals showed little emotional shift (i.e. 62.21% of these conversations experienced almost no change in emotion). In turn, only 10.42% of the conversations leaning towards the optimistic side maintained the mood. User emotional volatility is further linked with social influence.

Keywords: COVID-19 pandemic, Sociome, Conversation, Emotion classification, Emotion shift

1. Introduction

1.1. Background

Optimism and pessimism can strongly impact the psychological and physical health of individuals. These are distinct modes of thinking that are best conceptualized as a continuum with many degrees of emotion. Some people tend to incline their opinions, feelings and behaviour toward one of the extremes of this continuum (Brownlie & Shaw, 2019; Hecht, 2013).

Optimism is defined as a personality trait that reflects the extent to which people hold generalized favourable expectancies for their future (Carver & Scheier, 2014; Carver, Scheier & Segerstrom, 2010). Conversely, pessimism is understood as a personality trait that is characterised by a customary expectation of negative outcomes. Pessimism and negative attitudes are often associated with depression and anxiety, while optimism tends to reduce stress and promote better physical health and overall well-being (Scheier & Carver, 1985).

At the same time, an individual can be optimistic regarding a specific area of life but pessimistic regarding other aspects. Moreover, individuals may shift positions on the optimism-pessimism continuum as the timeline unfolds and, most notably, depending on the surrounding social context. Specifically, social media interactions can trigger emotional mimicry, a process often considered a basic mechanism of emotion contagion (Herrando & Constantinides, 2021; Wróbel & Imbir, 2019). Research on the moderating role of social factors in emotional contagion and emotional mimicry on social platforms highlights the idea that digital emotion contagion does not occur blindly, but rather in response to a variety of emotional stimuli (Goldenberg & Gross, 2020; Ferrara & Yang, 2015).

Within this context, understanding the optimism and pessimism flowing in social media during a global pandemic, such as COVID-19, is of timely practical interest for the management of present and future health crises. The behaviour of individuals and their cooperation with necessary social restrictions is largely dependent on their emotional response to, and their perception of, the risk presented by the pandemic (Soroya, Farooq, Mahmood, Isoaho & Zara, 2021; Abbas, Wang, Su & Ziapour, 2021; Crocamo et al., 2021). Often, the fear of infection (e.g. its sources and consequences) and the constant checking of information (including fear-evoking information, fake news and conspiracy theories about the dangers of contagion) lead to interacting forms of worry, avoidance, and coping responses, manifested by various traumatic stress symptoms (e.g. nightmares and intrusive thoughts) (Taylor et al., 2020). Recent studies show the importance of observing depressive symptoms and psychosocial stressors on social media (Danielle, Smith et al., 2017; Danny, ten, Bathina, Rutter & Bollen, 2020) as well as public fear and hesitancy (Chen, Han & Luli, 2021).

Modelling optimism and pessimism in the context of social media thus has important applications to personal health and social wellness.

1.2. Motivation and contributions of this paper

This work proposes a new sociome-based approach to better understand how optimistic and pessimistic feelings are conveyed in online conversations during health crises, namely the COVID-19 pandemic. The goal is to explore the conversational contexts where optimism and pessimism emerge and develop. Instead of classifying users as optimists or pessimists, the exploration of emotional conversation patterns within and across periods is helpful to detect and interpret shifts in emotion, namely social influence. This exploration is also relevant to depict emotional responses to meaningful events during the crisis.

These insights are of practical relevance because the development of emotions in response to health crises remains uncertain and social media offers a unique opportunity to analyse voluntary and public expressions of emotional states and, most notably, how these are perceived in and affected by social media.

The bidirectional encoder representation from transformers (BERT) is used to extract the semantic features from the posts (Devlin, Chang, Lee & Toutanova, 2018) and various neural network architectures are applied for feature extraction and classification. The reconstruction of social conversations enables the contextualisation of emotions and the evaluation of emotional frequency and volatility. The main contributions of this work are as follows:

- novel optimism and pessimism detection models that combine the pre-trained BERT model and a neural network to better understand sentence semantics and process information from social media posts;
- a method combining emotion detection and conversation reconstruction to analyse the influence of social interactions in emotional shifts;
- two new, public, semantically annotated corpora to evaluate optimism and pessimism predictive models over social media posts;
- new insights into the flows and shifts of feelings happening in Twitter conversations about COVID-19 during four relevant periods of the pandemic.

To the best of the authors’ knowledge, this work is the first to reconstruct emotional flows in social media conversations about the COVID-19 pandemic.

The rest of this paper is structured as follows. Section 2 presents related work, i.e. a review of sociome-based analyses in the scope of health crises and the state-of-the-art for modelling optimism and pessimism in social media. Section 3 details the proposed methodology based on the Transformer Encoder architecture and the reconstruction of social conversational contexts, including the corpora, the pre-processing, and the experimental setup. Section 4 describes the evolution of optimistic and pessimistic feelings in four periods of the pandemic as a proof-of-concept of the practical abilities of the approach. Section 5 discusses the individual and social implications of emotion sharing on social media and pinpoints some future work. Finally, Section 6 summarises the most important takeaways from this work.

2. Related work

Recent works have portrayed the propagation of situational information on social media and, most notably, the impact of emotions in the propagation scale of information (Huang, Cao, Yang, Luo & Chao, 2021). Some studies looked into the sociome for insights into the public response to the COVID-19 pandemic, notably the psychological burden of lockdown and other social restrictions (Benzel, 2020; van Bavel et al., 2020; Xue et al., 2020). Information overload was linked to a set of negative psychological and behavioural responses called information anxiety (Soroya et al., 2021). In turn, another study reported an increasing state of psychophysical numbing, i.e. Twitter users are increasingly fixated on mortality, but in a decreasingly emotional and increasingly analytic tone (Dyer & Kolic, 2020). The extraction of the emotions and sentiments of people from social media was also proposed as a valuable means to help the authorities to identify people with depression or prone to suicidal tendencies (Sharma & Sharma, 2020). Other infoveillance studies aimed to identify the main topics posted by social media users related to the COVID-19 pandemic and applied sentiment analysis to detect the spread of racism, hate, anxiety and depression as a result of the outbreaks, e.g. (Abd-Alrazaq, Alhuwail, Househ, Hamdi & Shah, 2020; Brindha, Jayaseelan & Kadeswara, 2020; Saha et al., 2019; Schild et al., 2020). Also, a new web portal is tracking mood in India (Venigalla, Vagavolu & Chimalakonda, 2020).

Beyond the pandemic, the present work is related to a growing body of research on the use of machine learning in understanding human emotion and risk perception for a wider range of practical applications of situational awareness, health crisis management and health risk prevention. Optimism and pessimism are associated with various personality factors and are the main subjects of interest of several physiological and sociological studies, e.g. (Brownlie & Shaw, 2019; Carver et al., 2010; Scheier et al., 2020). Amongst other findings, these studies showed that language use is an independent and meaningful way of exploring personality, and personality is correlated with optimism. Therefore, given a large enough text corpus, computational models hold great potential to recognise expressions of optimism automatically.

One of the earliest efforts in developing an emotion model was made by Shaver and colleagues (Shaver, Schwartz, Kirson & O'Connor, 1987). They selected a group of words as emotion words, which were then annotated based on their similarity and grouped into categories (i.e. minimising inter-category similarity and maximising intra-category similarity). At the bottom of their abstract-to-concrete emotion hierarchy lay six basic emotions, i.e. joy, love, surprise, sadness, anger, and fear. Three main paths have been pursued to evolve the techniques of emotion sensing based on this hierarchy. First, the association of events and objects with specific emotions via a “commonsense dictionary”. Second, the use of an ontology to recognise and derive the overall emotions of texts based on syntax and semantics. Last, the identification of the necessary elements that arouse each emotion and the training of recognition models to match these elements in the text. Moreover, most emotions are classified as having either positive or negative valence. For example, anger and sadness are always negative emotions and joy and happiness are always positive emotions. However, some emotions, such as surprise, can be neutral/moderate, positive, or negative, depending on the context. Emotions can also occur in varying levels of intensity that may elicit a more or less intense response to the stimuli. Reviews of current emotion categorization models and algorithms can be found in Poria, Majumder, Mihalcea and Hovy (2019) and Wang, Ho and Cambria (2020).

As a further research step, social media data have been successfully used to build models of people's psychological traits as well as to predict aspects of personality. In particular, deep learning approaches have been proposed to analyse sentiments (Naseem, Razzak, Musial & Imran, 2020; Pota, Ventura & Catelli, 2021), to identify personality traits (Ren, Shen, Diao & Xu, 2021) or to detect irony (González, Hurtado & Pla, 2020). However, computational analyses that explore optimism and pessimism are fairly recent (Saha et al., 2019; Harb, Ebeling & Becker, 2020).

One of the first works focusing on these aspects aimed to predict the most optimistic and pessimistic users on Twitter. The classifiers were built based on a simple bag of words representation and using traditional machine learning algorithms, i.e. Naïve Bayes, Nearest Neighbour, Decision Tree, Random Forest, Gradient boosting and Stochastic Gradient Descent (Ruan, Wilson & Mihalcea, 2016). Currently, more emphasis is put on incorporating textual semantic similarities and the individual's intentions to detect the characteristics of optimistic and pessimistic feelings correctly.

Caragea et al. investigated the performance of deep learning models to detect optimistic and pessimistic tweets at both the tweet and user levels, including bidirectional long short-term memory networks (BiLSTMs), convolutional neural networks (CNNs), and Stacked Gated Recurrent Neural Networks (RNNs) (Caragea, Dinu & Dumitru, 2018). In addition, these authors compared the performance of such specialised models to common sentiment analysers and showed that the latter are unable to capture the expression of optimism and pessimism in posts adequately. Another work proposed a method based on the XLNet language model, i.e. an auto-regressive language model, and the deep consensus algorithm for the detection of optimistic and pessimistic tweets (Alshahrani, Ghaffari, Amirizirtol & Liu, 2020). With similar purposes, other studies have demonstrated that word vector embeddings, namely word2vec and GloVe, are well equipped to capture the semantic and contextual similarities of tweets posted during health outbreaks (Alorini, Rawat & Alorini, 2021; Khatua, Khatua & Cambria, 2019; Naseem, Razzak, Khushi, Eklund & Kim, 2021; Sciandra, 2020). Fine-grained sentiment analysis is pursued by aspect-based sentiment methods. Notably, recent works leverage graph neural models by either learning the dependency information from contextual words to aspect words based on the dependency tree of the sentence or using the affective dependencies of the sentence according to the specific aspect (Liang, Su, Gui, Cambria & Xu, 2022; Phan, Nguyen & Hwang, 2022; Trisna & Jie, 2022; Xiao et al., 2022). Overall, the rationale of all these works was to make the models more sensitive to the contexts of the words and thus to better handle polysemy and syntactic relationships such as negation.

The present work aligns with these previous deep learning works and investigates for the first time the training and tuning of BERT to detect optimistic and pessimistic tweets. No comparison with previous results is provided since the aim here is to study the flow of emotions in social media conversations about health topics, namely health crises. Hence, a new collection of tweets was curated based on the relevance of their contents to health issues (i.e. COVID-19 discussion), and without taking into account any knowledge of the predisposition of the posting user to pessimism or optimism. Also noteworthy, the detection models proposed here are coupled with a conversation reconstruction mechanism that enables the analysis of emotion flows throughout and across conversations.

3. Materials and methods

This section introduces the proposed methodology for the detection and analysis of optimistic and pessimistic feelings in COVID-19 conversations on Twitter. As illustrated in Fig. 1, the methodology entails the preparation of corpora, the training of optimism and pessimism classifiers, the reconstruction of conversations, and the analysis of emotion flows and shifts. The analysis is centred on four relevant periods in the COVID-19 pandemic. The main objectives are to observe the flow of emotions throughout the conversations as well as how individuals may be affected by the conversational context.

Fig. 1.

The workflow implemented to retrieve, process and analyse optimistic and pessimistic emotions flowing in Twitter conversations about COVID-19.

The next sections detail the main steps of the methodology.

3.1. Data collection

The retrieval of the tweets discussing the COVID-19 pandemic and the metadata associated with the corresponding user accounts was performed using the Tweepy Python library, which complies with the public Twitter REST API (Roesslein, 2020). Public tweets in English were collected from April 1st, 2020 until September 26th, 2020, using main keywords about the pandemic, such as “COVID-19”, “#coronavirusoutbreak”, “coronavirus” or “#COVID19”. The rationale was to observe how different events throughout the pandemic affected users and thus to observe fluctuations in mood and how these are motivated by the social interplay.

The analysis focused on periods of particular relevance during the timeline of the pandemic as follows:

- 8th-20th April (P1): China reopens Wuhan after a 76-day lockdown. Several states of the USA and countries in Europe were already in lockdown or discussing lockdown strategies (Ruktanonchai et al., 2020).
- 18th-30th May (P2): the 73rd World Health Assembly achieved a global commitment to fight the COVID-19 pandemic. The USA reported 100,000 deaths due to COVID-19. In Europe, the number of confirmed cases in the United Kingdom, Russia, France, Spain, and Italy kept growing rapidly (COVID-19 Dashboard by the Center for Systems Science and Engineering (CSSE) at Johns Hopkins University (JHU), 2021).
- 15th-27th July (P3): the COVAX Facility is created to guarantee rapid, fair and equitable access to COVID-19 vaccines. It secured engagement from more than 150 countries while several reports predicted the start of a second wave (Cacciapaglia, Cot & Sannino, 2020).
- 14th-26th September (P4): announcement of large-scale, multi-country Phase 3 clinical trials for several COVID-19 vaccine candidates. The political campaign for the presidency of the USA had started and Europe was entering the second wave of the pandemic (Bontempi, 2021; Johnson, Pollock & Rauhaus, 2020).
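The assignment of a collected tweet to one of these four periods can be sketched as follows. This is a minimal illustration, assuming that each tweet carries a posting date and that period boundaries are inclusive; the names `PERIODS` and `assign_period` are hypothetical and not taken from the authors' code.

```python
from datetime import date

# Period boundaries as listed in the text (assumed inclusive), all in 2020.
PERIODS = {
    "P1": (date(2020, 4, 8), date(2020, 4, 20)),
    "P2": (date(2020, 5, 18), date(2020, 5, 30)),
    "P3": (date(2020, 7, 15), date(2020, 7, 27)),
    "P4": (date(2020, 9, 14), date(2020, 9, 26)),
}


def assign_period(posted_on):
    """Return the period label for a posting date, or None if it falls outside all four."""
    for label, (start, end) in PERIODS.items():
        if start <= posted_on <= end:
            return label
    return None
```

Tweets posted between periods (for instance in June) map to `None` and would simply be left out of the period-based analysis.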

3.2. Corpora preparation

Two semantically annotated corpora were prepared to assist in predictive modelling. The rationale for the construction of two datasets instead of a single one was to portray expressions of optimism and pessimism separately, since the absence of one does not necessarily imply the presence of the other (Gasper, 2018; Cambria, Poria, Gelbukh & Thelwall, 2017). Thus, models could be trained to classify tweets that convey different feelings as well as those that do not show any feeling.

The process of annotation of the two corpora involved similar annotation guidelines and identical post-processing to ensure the high quality of the final annotations. In particular, two annotators with curation experience were recruited. Tweets were randomly selected from the retrieved dataset and each dataset was annotated independently by the two annotators. Annotation guidelines dictated that all the tweets labelled as pessimistic or optimistic should clearly show the mood of the user. Tweets expressing a different mood, multiple moods, or no emotion should be annotated as non-pessimistic or non-optimistic. At each round of annotation, annotators and authors gathered to discuss annotation differences in each corpus. Whenever possible, differences were resolved and annotation guidelines were amended (if need be). Considering time and cost limitations, each corpus was submitted to two rounds of annotation and a final consensus session. Tweets with unresolved conflicts were discarded.

The first corpus has 1110 tweets labelled as optimistic and 1274 tweets labelled as non-optimistic. The second corpus has 1004 tweets labelled as pessimistic and 1133 tweets labelled as non-pessimistic. The corpora and the annotation guidelines are available in Supplementary Material 1 and Supplementary Material 2, respectively.

3.3. Machine learning optimism and pessimism classification

As a baseline, three commonly used machine learning algorithms, including Support Vector Machines (Cortes, Vapnik & Saitta, 1995), Random Forest (Breiman, 2001) and Naïve Bayes (McCallum & Nigam, 1998), were tested. Data pre-processing ensured the elimination of stopwords as well as other unnecessary data, such as HTML tags, mentions, special characters, unnecessary spaces, and non-ASCII characters. Tokens were then lemmatised and the TF-IDF vectorization technique was applied to obtain the feature vectors.
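To make the TF-IDF step concrete, the following is a minimal pure-Python sketch of the textbook weighting, tf × log(N / df), applied to already cleaned and lemmatised token lists. It is an illustration only: the function name `tfidf_vectors` and the toy documents are hypothetical, and scikit-learn's `TfidfVectorizer` uses a smoothed IDF and L2 normalisation by default, so its values would differ from the ones computed here.

```python
import math
from collections import Counter


def tfidf_vectors(tokenised_docs):
    """Return (vocabulary, vectors) using tf * log(N / df) weighting."""
    n_docs = len(tokenised_docs)
    # Document frequency: in how many documents each token appears.
    doc_freq = Counter(tok for doc in tokenised_docs for tok in set(doc))
    vocabulary = sorted(doc_freq)
    vectors = []
    for doc in tokenised_docs:
        counts = Counter(doc)
        length = len(doc) or 1  # guard against empty documents
        vectors.append([
            (counts[tok] / length) * math.log(n_docs / doc_freq[tok])
            for tok in vocabulary
        ])
    return vocabulary, vectors


# Toy, already pre-processed documents (hypothetical).
docs = [["stay", "safe", "everyone"], ["stay", "home"], ["vaccine", "hope"]]
vocab, vecs = tfidf_vectors(docs)
```

In this toy example, "stay" appears in two of the three documents and therefore receives a lower weight in the first document than "safe", which appears only once in the collection.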

The Scikit-learn library supported all experiments (Pedregosa et al., 2011). The evaluation was implemented using 10-fold cross-validation and the best model setup was chosen based on the weighted F-score value. Table 1 shows the hyper-parameters used in the machine learning models.

Table 1.

Hyper-parameters of the machine learning models.

| Model | Parameter | Range |
|---|---|---|
| Support Vector Machines | C | 0.1, 1, 10, 100, 200, 300, 400, 500, 600, 700, 800, 900, 1000 |
| | gamma | 1, 0.1, 0.01, 0.001, 0.0001 |
| | kernel | "linear", "rbf", "poly", "sigmoid" |
| Naive Bayes | Alpha | logspace(0, −9, num=100) |
| Random Forest | Number of estimators | 100, 300, 500, 800, 1200 |
| | Maximum depth | 5, 8, 15, 25, 30 |
| | Minimum samples split | 2, 5, 10, 15, 100 |
| | Minimum samples leaf | 1, 2, 5, 10 |

3.4. Transformer-based optimism and pessimism classification

The modelling of the optimism and pessimism classifiers was based on two embeddings, i.e. the GloVe embedding (Pennington, Socher & Manning, 2014) and the pre-trained BERT Base uncased model (Devlin et al., 2018). The torchtext and the Hugging Face transformers libraries for PyTorch support the corresponding implementations (Paszke et al., 2019; Wolf et al., 2020).

Basic corpora pre-processing entailed the elimination of mentions, URLs and non-ASCII characters. For the GloVe-based models, the text was tokenized word by word and resulted in a 300-dimensional vector representing each input sequence. For the BERT-based models, the BERT tokenizer was applied to transform the pre-processed text and this representation was fed into the BERT's transformer encoder. As output, the pre-trained BERT model produced a 768-dimensional vector representing each input sequence. In both strategies, due to the short length of the tweets, the maximum sequence length was set to 128, and cases of shorter or longer length were padded with zero values or truncated to the maximum length, respectively.
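The basic cleaning and the length normalisation described above can be sketched as follows. This is a simplified illustration under stated assumptions: the regular expressions, the right-padding choice and the function names `clean_tweet` and `pad_or_truncate` are hypothetical, and a real BERT tokenizer additionally inserts special tokens that this sketch does not model.

```python
import re

MAX_LEN = 128  # maximum sequence length stated in the text
PAD_ID = 0     # padding with zero values, as stated in the text

# Assumed patterns for mentions and URLs.
MENTION_RE = re.compile(r"@\w+")
URL_RE = re.compile(r"https?://\S+|www\.\S+")


def clean_tweet(text):
    """Remove mentions, URLs and non-ASCII characters, then collapse whitespace."""
    text = URL_RE.sub(" ", text)
    text = MENTION_RE.sub(" ", text)
    text = text.encode("ascii", errors="ignore").decode("ascii")
    return " ".join(text.split())


def pad_or_truncate(token_ids, max_len=MAX_LEN, pad_id=PAD_ID):
    """Right-pad with pad_id or cut to max_len so every sequence has equal length."""
    clipped = list(token_ids[:max_len])
    return clipped + [pad_id] * (max_len - len(clipped))
```

For example, `pad_or_truncate([5, 6, 7], max_len=5)` yields a five-element list ending in two padding values, while a 200-token input is cut to 128 tokens.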

The model evaluation considered three deep learning architectures for each of these embeddings. Fig. 2 describes these deep learning architectures, namely the base classification layer (i.e. a linear neural network), a convolutional neural network and a bidirectional long short-term memory (Bi-LSTM) recurrent neural network.

Fig. 2.

The implemented deep learning architectures.

The models were then fine-tuned using an Adam optimizer that minimised the cross-entropy loss function as follows (see details in Table 2):

- Linear neural network (LNN): this architecture connects the BERT or GloVe output to a simple linear neural network. The input layer (a 768- or 300-dimensional vector for BERT or GloVe, respectively) is fully connected to a hidden layer of 50 units. The hidden layer uses a Rectified Linear Unit (ReLU). Finally, the output layer contains the results of classification.
- CNN: the embedding vectors are concatenated, creating a matrix that connects to a convolutional neural network (Kim, 2014). The BERT matrix [n x m] is of size 32 × 768 and the GloVe matrix [n x m] is of size 32 × 300. The convolution operations were applied in different window sizes, i.e. [2 x m], [3 x m] and [4 x m] regions, using the ReLU activation function and 1-max pooling to down-sample the input representation. As shown in Fig. 2, results are then concatenated in a single vector. A dropout layer was added to deal with overfitting, and a softmax function accounts for the distribution of the probability between classes.
- Bi-LSTM: this architecture has a hidden layer with 150 units in the case of GloVe and 384 units in the case of BERT. The output is fully connected to a softmax function to generate the final classification.
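The convolution and 1-max pooling step of the CNN architecture can be illustrated with a small pure-Python sketch. It is not the authors' PyTorch implementation: the toy matrix, the all-ones kernels and the function names are hypothetical, and a trained model would learn many filters per window size and follow them with dropout and a softmax layer.

```python
def relu(x):
    """Rectified Linear Unit."""
    return x if x > 0.0 else 0.0


def conv_1max(matrix, kernel, bias=0.0):
    """Slide an [h x m] kernel over an [n x m] matrix, apply ReLU, keep the maximum."""
    h = len(kernel)
    activations = []
    for start in range(len(matrix) - h + 1):
        window = matrix[start:start + h]
        total = bias + sum(
            w * x
            for k_row, x_row in zip(kernel, window)
            for w, x in zip(k_row, x_row)
        )
        activations.append(relu(total))
    return max(activations)


def extract_features(matrix, kernels):
    """Concatenate the 1-max pooled output of every kernel into one vector."""
    return [conv_1max(matrix, kernel) for kernel in kernels]


# Toy input: n = 5 tokens, m = 2 embedding dimensions (hypothetical values).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0], [-1.0, 2.0]]
kernels = [
    [[1.0, 1.0]] * 2,  # [2 x m] window
    [[1.0, 1.0]] * 3,  # [3 x m] window
    [[1.0, 1.0]] * 4,  # [4 x m] window
]
features = extract_features(tokens, kernels)
```

Each kernel contributes exactly one value to `features`, regardless of the input length, which is what allows the pooled outputs of the three window sizes to be concatenated into a fixed-size vector.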

As shown in Table 2, all architectures used ReLU activation during hyper-parameter tuning. To avoid overfitting, a dropout layer was used; the dropout rate was tuned independently for each model and set to 0.3. Optimization was done through Adam with a cyclical learning rate, and the learning rates explored are listed in Table 2.

Table 2.

Hyper-parameters of the fine-tuned BERT Base uncased model and GloVe.

| Parameter | BERT values | GloVe values |
|---|---|---|
| Activation function | ReLU | ReLU |
| Batch size | 16, 32 | 16, 32 |
| Dropout | 0.3 | 0.3 |
| Epochs | 1–4 | 1–15 |
| Learning rate | 5e-5, 3e-5, 2e-5 | 5e-5, 3e-5, 2e-5 |
| Loss function | Cross Entropy | Cross Entropy |
| Max Length | 128 | 128 |
| Optimizer | Adam | Adam |

The results of the fine-tuning of the proposed architectures (i.e. LNN, CNN and Bi-LSTM) are fully disclosed in Supplementary Material 3. The performance of the models was evaluated based on 10-fold cross-validation and using the macro-averaged F-score metric as reference. For each of the two classification tasks, both the validation set and the test set encompassed 10% of randomly selected (stratified) records in the corpus. Fine-tuning was based on the performance over the validation set and model performance was assessed over the test set.
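For reference, the macro-averaged F-score used as the evaluation metric is the unweighted mean of the per-class F1 scores. The sketch below is a plain-Python illustration of that definition; the function name `macro_f1` and the toy labels are hypothetical and not part of the reported experiments.

```python
def macro_f1(y_true, y_pred):
    """Unweighted mean of the per-class F1 scores."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for label in labels:
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); defined as 0 when the class never occurs.
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Toy labels (hypothetical): 1 = optimistic, 0 = non-optimistic.
y_true = [1, 1, 1, 0, 0, 0]
y_pred = [1, 1, 0, 0, 0, 1]
score = macro_f1(y_true, y_pred)
```

Because every class contributes equally to the average, this metric does not favour the slightly larger negative class in either corpus.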

The BERTBASE-Linear and BERTBASE-Bi-LSTM models showed better performance than the GloVe-Bi-LSTM model and the traditional machine learning models. In particular, the BERTBASE-Linear and the BERTBASE-Bi-LSTM models had similar performance for optimism prediction, but the BERTBASE-Bi-LSTM model outperformed the other models for pessimism detection. The BERTBASE-CNN model presented lower performance than the GloVe-CNN model.

Therefore, the implementation of the proposed method used the BERTBASE-Bi-LSTM models. The source code is available on GitHub (https://github.com/gbgonzalez/optimism_pessimist_twitter). Supplementary Material 3 provides a comprehensive description of the experiments.

3.5. Conversation reconstruction and emotion characterization

The reconstruction of conversations started by collecting the tweets linked to the previously retrieved COVID-19 tweets, i.e. those tweets that replied to these tweets and the tweets that motivated these tweets as a reply. Such reconstruction was primarily based on the information contained in the in_reply_to_status_id field of the tweets. The tweets about COVID-19 could have started the conversation or been in the middle or end of the conversation. Conversational paths missing conversational partners due to tweet or account deletion were excluded.

Conversation depth was calculated as the largest number of edges from a leaf to the root of the tree, i.e. the largest number of replies linked in a conversational path. Conversely, conversation width was defined as the total of leaf nodes, i.e. number of interaction paths in the conversation. Only conversations of depth equal to or above 5 levels were considered in the further analysis because very short conversations would not enable a proper analysis of the emotional flows. The final dataset contained a total of 14,063 conversations.
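The depth and width definitions above can be sketched on a reply tree as follows. This is a minimal illustration, assuming each conversation is available as a mapping from tweet id to the id of the tweet it replies to; the toy conversation and the function names are hypothetical.

```python
from collections import defaultdict


def build_children(reply_to):
    """Map each tweet id to the ids of its direct replies."""
    children = defaultdict(list)
    for tweet_id, parent_id in reply_to.items():
        if parent_id is not None:
            children[parent_id].append(tweet_id)
    return children


def depth_and_width(root_id, children):
    """Return (depth, width): longest root-to-leaf path in edges, and number of leaves."""
    depth, width = 0, 0
    stack = [(root_id, 0)]
    while stack:
        node, level = stack.pop()
        replies = children.get(node, [])
        if not replies:
            # A tweet without replies closes one interaction path.
            width += 1
            depth = max(depth, level)
        for reply in replies:
            stack.append((reply, level + 1))
    return depth, width


# Hypothetical conversation: tweet id -> id of the tweet it replies to (None for the root).
reply_to = {1: None, 2: 1, 3: 2, 4: 3, 5: 4, 6: 5, 7: 2}
children = build_children(reply_to)
depth, width = depth_and_width(1, children)
keep = depth >= 5  # depth filter stated in the text
```

In this toy tree the longest path (1 to 6) has five edges and there are two leaf tweets (6 and 7), so the conversation passes the depth filter.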

The BERTBASE-Bi-LSTM classifiers (named Bi-LSTMOP and Bi-LSTMPE for simplicity) were applied to characterise the emotions flowing during these conversations. The prediction thresholds of the Bi-LSTMOP and the Bi-LSTMPE models were 0.87 and 0.92, respectively. Supplementary Material 3 details how these thresholds were calculated. Tweets that were predicted as both optimistic and pessimistic (i.e. 0.18% of the total of tweets) or for which no emotion was detected (i.e. 26.22% of the total of tweets) were labelled as indeterminate.
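The combination of the two binary classifiers into a single tweet label can be sketched as below. The thresholds come from the text; the inclusive comparison (`>=`), the function name `label_tweet` and the probability arguments are assumptions made for illustration.

```python
OPTIMISM_THRESHOLD = 0.87   # Bi-LSTMOP threshold reported in the text
PESSIMISM_THRESHOLD = 0.92  # Bi-LSTMPE threshold reported in the text


def label_tweet(p_optimistic, p_pessimistic):
    """Combine the outputs of the two binary classifiers into one tweet label."""
    is_opt = p_optimistic >= OPTIMISM_THRESHOLD    # assumed inclusive comparison
    is_pes = p_pessimistic >= PESSIMISM_THRESHOLD  # assumed inclusive comparison
    if is_opt == is_pes:
        # Either both models fired or neither did.
        return "indeterminate"
    return "optimistic" if is_opt else "pessimistic"
```

A tweet scored 0.95 by the optimism model and 0.10 by the pessimism model would be labelled optimistic, whereas a tweet scored high (or low) by both models would be labelled indeterminate.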

Conversations were then analysed in terms of the emotions expressed in their tweets, namely the prevalence of optimism/pessimism and how social interaction confirms or overpowers initial feelings. The emotion frequency of optimism or pessimism in conversation i was calculated as

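$$\mathrm{emotion\_frequency}_{i} = \frac{\mathrm{tweets}_{e,i}}{\mathrm{tweets}_{i}} \tag{2}$$

where $\mathrm{tweets}_{e,i}$ is the number of tweets in conversation i labelled with the emotion e (optimism or pessimism) and $\mathrm{tweets}_{i}$ is the total number of tweets in conversation i.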

Likewise, the ratio of shift in the conversation i was calculated as

| (3) |

where shift_score is the number of replies that denote an emotion different to that of the tweet they reply to.
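A small sketch may help to make the two conversation-level metrics concrete. The emotion frequency follows the definition given for the metric; for the shift ratio, the sketch assumes that shift_score is divided by the number of replies in the conversation, which is an assumption of this illustration rather than a detail confirmed by the text. The function names and the toy conversation are hypothetical.

```python
def emotion_frequency(labels, emotion):
    """Share of tweets in a conversation that carry the given emotion label."""
    return sum(label == emotion for label in labels.values()) / len(labels)


def shift_ratio(labels, reply_to):
    """Share of replies whose label differs from the tweet they reply to.

    Assumption: the denominator is the number of replies in the conversation.
    """
    replies = [(t, p) for t, p in reply_to.items() if p is not None]
    shift_score = sum(labels[t] != labels[p] for t, p in replies)
    return shift_score / len(replies) if replies else 0.0


# Hypothetical conversation: tweet id -> parent id, and tweet id -> predicted label.
reply_to = {1: None, 2: 1, 3: 2, 4: 3, 5: 4}
labels = {1: "pessimistic", 2: "pessimistic", 3: "optimistic",
          4: "pessimistic", 5: "pessimistic"}
```

In this toy conversation four of the five tweets are pessimistic, and two of the four replies (tweets 3 and 4) differ in emotion from the tweet they answer.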

The conversations were clustered based on the structural and emotional patterns exhibited. The k-means method supported this exploration and the grid search optimization technique was applied to find the optimal number of clusters based on the minimization of the Davies-Bouldin index, i.e. the ratio between the cluster scatter and the cluster's separation.
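The Davies-Bouldin index used to select the number of clusters can be sketched in plain Python as follows. This illustrates the index only (Euclidean distances, scatter measured as the mean distance to the centroid); the k-means step is not shown, and the toy points and function names are hypothetical. In a grid search over candidate numbers of clusters, the candidate with the lowest index would be retained.

```python
import math


def centroid(points):
    """Coordinate-wise mean of a list of points."""
    return tuple(sum(coords) / len(points) for coords in zip(*points))


def davies_bouldin(points, labels):
    """Mean over clusters of the worst (scatter_i + scatter_j) / separation_ij ratio."""
    clusters = {}
    for point, label in zip(points, labels):
        clusters.setdefault(label, []).append(point)
    centres = {k: centroid(v) for k, v in clusters.items()}
    # Scatter: mean distance of the members of a cluster to its centroid.
    scatter = {
        k: sum(math.dist(p, centres[k]) for p in v) / len(v)
        for k, v in clusters.items()
    }
    total = 0.0
    for i in clusters:
        total += max(
            (scatter[i] + scatter[j]) / math.dist(centres[i], centres[j])
            for j in clusters if j != i
        )
    return total / len(clusters)


points = [(0.0, 0.0), (0.0, 2.0), (10.0, 0.0), (10.0, 2.0)]
good = davies_bouldin(points, [0, 0, 1, 1])  # compact, well separated clusters
bad = davies_bouldin(points, [0, 1, 0, 1])   # each cluster spans both groups
```

The compact, well separated partition obtains a much lower index than the partition that mixes the two groups, which is the behaviour the minimisation relies on. The sketch requires at least two clusters with distinct centroids.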

As a complementary perspective of analysis, users were similarly clustered to explore their patterns of optimism and pessimism across conversations. Some additional metrics about the emotions of the user were introduced for this purpose.

The influence of the user j was measured in terms of the number of followers (i.e. users that follow user j) and followees (i.e. users that user j follows) as a means to account for possible spammers (i.e. users with many followees and few followers) (Riquelme & González-Cantergiani, 2016):

| (4) |

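The participation of the user j in a conversation c is measured as the number of tweets posted by the user in the conversation (i.e. $\mathrm{tweet}_{c,j}$) divided by the number of tweets in the conversation (i.e. $\mathrm{tweet}_{c}$):

$$\mathrm{participation}_{c,j} = \frac{\mathrm{tweet}_{c,j}}{\mathrm{tweet}_{c}} \tag{5}$$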

The average user participation is measured as the sum of all user contributions to conversations divided by the total number of participated conversations:

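$$\mathrm{average\_participation}_{j} = \frac{\sum_{c \in C_{j}} \mathrm{participation}_{c,j}}{|C_{j}|} \tag{6}$$

where $C_{j}$ is the set of conversations in which user j participated.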

Similarly to the metric emotion_frequency of a conversation, the emotion_frequency of user j was calculated as the number of tweets of user j of a given emotion divided by the total number of tweets posted by this user.

Likewise, the volatility of the feelings of user j across conversations is measured in terms of the number of times the feelings of user j changed in a conversation (i.e. volatility_score) and the total of conversations the user participated in, i.e.

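$$\mathrm{volatility}_{j} = \frac{\mathrm{volatility\_score}_{j}}{\mathrm{conversations}_{j}} \tag{7}$$

where $\mathrm{conversations}_{j}$ is the total number of conversations in which user j participated.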
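The user-level participation and volatility metrics described above reduce to simple ratios, as the following sketch shows. The function names and the toy figures are hypothetical; the sketch assumes the per-conversation tweet counts and the volatility score have already been computed.

```python
def participation(tweets_by_user, total_tweets):
    """Fraction of the tweets in one conversation that were posted by the user."""
    return tweets_by_user / total_tweets


def average_participation(conversations):
    """Mean participation over the conversations the user took part in.

    `conversations` is a list of (tweets_by_user, total_tweets) pairs.
    """
    shares = [participation(own, total) for own, total in conversations]
    return sum(shares) / len(shares)


def volatility(volatility_score, n_conversations):
    """Number of emotion changes of the user divided by the conversations joined."""
    return volatility_score / n_conversations


# Hypothetical user who joined three conversations.
user_conversations = [(2, 10), (5, 10), (1, 5)]
avg = average_participation(user_conversations)
```

For this hypothetical user, the per-conversation shares are 0.2, 0.5 and 0.2, giving an average participation of roughly 0.3.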

In the analysis of conversational emotion patterns, namely emotion shifts, user account verification and user influence are cross-inspected. The top 25 most influential users in each of the four periods were manually inspected in support of the metric-guided observations. All data are available in Supplementary Material 4.

4. Results

4.1. Characterisation of COVID-related conversations

Table 3 describes the general characteristics of the conversations reconstructed for the four periods under analysis. The largest number of conversations was reconstructed for the first period, i.e. April 8th-20th. In turn, the last period, i.e. September 14th-26th, had the fewest conversations, but these conversations had the highest average number of tweets per conversation. The number of unique users per conversation was similar for all periods and relatively low, i.e. 3 or 4 users per conversation.

Table 3.

Summary of the results for the four periods of the COVID-19 pandemic.

| | P1 | P2 | P3 | P4 |
|---|---|---|---|---|
| Total of conversations | 4507 | 3262 | 3868 | 2426 |
| Total of tweets | 47,593 | 32,077 | 43,221 | 27,612 |
| Total of unique users | 17,237 | 10,754 | 14,645 | 8683 |
| Average of tweets in conversation | 10.56 | 9.83 | 11.17 | 11.38 |
| Average of users in conversation | 3.82 | 3.3 | 3.78 | 3.58 |
| Conversation width | 2.02 | 2.07 | 2.25 | 2 |
| Conversation depth | 8.71 | 8.1 | 8.89 | 9.63 |
| Pessimism frequency | 0.68 | 0.63 | 0.66 | 0.65 |
| Optimism frequency | 0.07 | 0.08 | 0.07 | 0.07 |
| Indeterminate frequency | 0.24 | 0.29 | 0.29 | 0.28 |
| Emotion shift | 0.39 | 0.41 | 0.4 | 0.4 |

Looking into the average values of conversation width and depth, one may observe that conversations had few paths of interaction, but the depth of existing paths reflects a significant interest in keeping the interaction (i.e. above 8 levels of reply between those 3 or 4 users). In general, pessimism is more pronounced than optimism, whilst emotional shift is typically above 30%. This denotes more, and perhaps more explicit, expressions of pessimistic feelings in COVID-19 discussions and the existence of many posts with undetermined or non-existent expression of feelings.

4.2. Emotion shifting in conversations

When looking into possible relations between emotion shifts and the dynamics of the conversations, it is relevant to analyse whether the involvement of more participants makes a shift in optimistic or pessimistic feelings more likely, or whether more intimate conversations are more prone to these changes. Similarly, it is relevant to study whether the number of tweets affects these shifts as well.

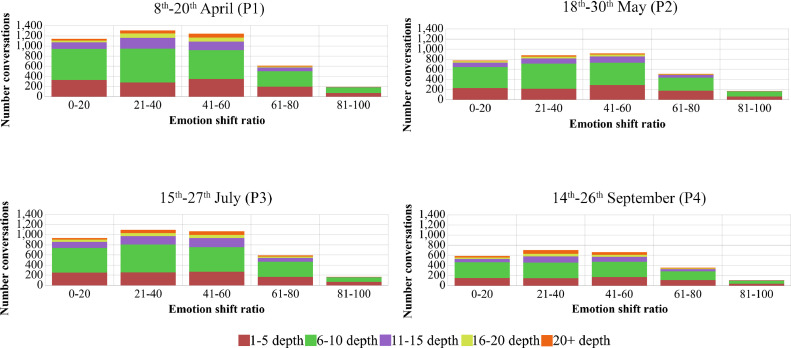

Figs. 3, 4 and 5 show the conversation distribution in terms of the number of users involved, the number of tweets posted and the depth of the conversation, respectively. As a general observation, in all the periods, many conversations showed a shift ratio of around 40–60% (approximately 27–28% of the total of conversations) or a low shift of around 21–40% (approximately 26–29% of the total of conversations). As stated earlier, there were on average 3 or 4 users per conversation, but it is interesting to observe that those conversations with a higher number of participants (i.e. the bars coloured in orange, yellow and lilac) did not denote a higher value of emotion shift (Fig. 3). That is, although more users participated, it seems that they were not emotionally influenced by one another in a significant way. It is uncommon to find conversations with many participants in the bins denoting a shift equal to or higher than 60%. Conversations limited to a small number of users (i.e. 5 or fewer, and bars coloured in red), and a small fraction of those having 6 up to 10 users (i.e. bars coloured in green), denote more propensity to change in feelings to some extent.

Fig. 3.

Comparative analysis of the ratio of emotion shift and the number of users in the conversation in the four periods.

Fig. 4.

Comparative analysis of the ratio of emotion shift and the number of tweets in the conversation in the four periods.

Fig. 5.

Comparative analysis of the ratio of emotion shift and the depth of the conversation in the four periods.
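The kind of binning behind Figs. 3 to 5 can be sketched as follows. This is only an illustrative approximation: it assumes that the emotion shift ratio of a conversation is the fraction of consecutive tweets whose predicted label differs, that the bins are 20 percentage points wide, and that conversations are grouped by user count as "5 or fewer", "6 to 10" and "more than 10". All function names, bin labels and the toy data are hypothetical.

```python
from collections import Counter

# Assumed bin edges (upper bound, label); not taken from the original code.
SHIFT_BINS = [(0.2, "0-20%"), (0.4, "21-40%"), (0.6, "41-60%"),
              (0.8, "61-80%"), (1.0, "81-100%")]


def emotion_shift_ratio(labels):
    """Fraction of consecutive tweet pairs whose label changes (assumed definition)."""
    if len(labels) < 2:
        return 0.0
    changes = sum(1 for prev, cur in zip(labels, labels[1:]) if prev != cur)
    return changes / (len(labels) - 1)


def shift_bin(ratio):
    """Map a ratio in [0, 1] to its bin label."""
    for upper, name in SHIFT_BINS:
        if ratio <= upper:
            return name
    raise ValueError("ratio must lie in [0, 1]")


def user_group(n_users):
    """Group conversations by number of participants (assumed grouping)."""
    if n_users <= 5:
        return "<=5 users"
    if n_users <= 10:
        return "6-10 users"
    return ">10 users"


# Toy data: (chronological tweet labels, number of unique users).
P, O, I = "pessimistic", "optimistic", "indeterminate"
conversations = [
    ([P, P, P, P, O, P, P], 3),   # 2 changes in 6 pairs
    ([P, O, I, P], 2),            # every pair changes
    ([P] * 8, 8),                 # no change
    ([I, P, P, I, I], 12),        # 2 changes in 4 pairs
]

histogram = Counter(
    (shift_bin(emotion_shift_ratio(labels)), user_group(n_users))
    for labels, n_users in conversations
)
```

Each key of `histogram` corresponds to one coloured bar segment in a given shift bin; the same pattern applies when grouping by number of tweets or by depth instead of by number of users.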

During P1, i.e. the reopening of Wuhan, conversations involved many users showing shifts in emotion (i.e. bars coloured in green, orange and yellow in bins with ratios between 20% and 60%). In period P4, i.e. the period corresponding to vaccine trial announcements, conversations involved fewer participants and large shifts in emotion were less common, particularly in conversations with many participants.

The number of tweets of a conversation is not necessarily proportional to the number of users, since the same user may reply several times. Fig. 4 shows the relation between the number of tweets and the emotion shifts detected in conversations. One may observe a similar relation of the number of tweets with the ratio of emotion shift across the four periods. That is, both larger and smaller conversations are spread across the emotion shift bins. The highest values of shifting occur for smaller conversations (i.e. green and red coloured bars), even though most of such conversations are in bins equal to or below 60%. Larger conversations (i.e. orange and yellow coloured bars) are typically below that threshold as well.

The short conversations with little shift in emotion (i.e. 0–20%) included tweets mostly from non-verified accounts, which talked about the COVID-19 situation in specific countries/areas and personal issues. For example, tweets discussing school closing such as “Asking for myself and a few friends Questions for School Openings: • If a teacher tests positive for COVID-19 are they required to quarantine for 2–3 weeks? Is their sick leave covered, paid? • If that teacher has 5 classes a day with 30 students each, do all 150 of 1/5” and tweets expressing a personal opinion about death such as “Death is very constant but surprising. #deeperlife #coronavirus”.

Larger conversations were more frequent in the P3 period, i.e. the launching of the COVAX Facility. Interestingly, those conversations in the range of 21–40% of shift developed around US COVID-19 related politics with tweets like "PELOSI IMPLODES: Nancy Claims the ‘Trump Virus’ is Killing Thousands of Americans." In turn, P3 conversations experiencing 40% up to 60% of emotion shift were more focused on protective measures against COVID-19, notably discussing whether or not to wear a mask. For example, conversations with tweets like "Don't be selfish - Wear a mask. We can crush this curve, but we have to do it TOGETHER."

Finally, the analysis of conversation depth versus emotion shift shows to what extent the development of a conversation tends to involve some change in terms of optimistic and pessimistic feelings (Fig. 5). Although contributing to the smallest set of conversations (i.e. approximately 17% of the conversations), the fourth period had more polarised and longer conversations. P4 conversations with lower emotion shift (i.e. below 20%) were still focused on protective measures or political topics. However, there was also an increasing number of conversations around the COVID-19 vaccines and the anticipated next peaks of the pandemic, which showed a higher ratio of shift (i.e. 21–40%).

For example, vaccine debates included tweets like: “Inventing a #COVID19 vaccine is just a first step. For it to make a material difference in the pandemic, we must also manufacture and distribute it, and people must take it (with confidence that it's safe). Let's talk about the often-overlooked, unsexy problem of DISTRIBUTION. 1/” and conversations about crisis management contained tweets such as “Imagine if UK had locked down harder and faster during the first peak of Covid. How many lives would have been saved? How much more quickly could we have relaxed measures? Of course, hindsight is easy. But making the same mistake twice in a row is dumb.”

As an inter-period observation, conversations with the highest shift ratios were located in the periods when more conversations occurred, that is, the 8th-20th April period and the 15th-27th July period. Notably, conversations with more emotion changes and greater depth fell into P3, i.e. at the time the COVAX Facility was launched. Regardless of the period, most conversations discussed the evolution of the pandemic, crisis management at a national level (i.e. in countries with higher incidence), and protective measures. Conversations denoting a higher shift in emotion typically entailed challenging opinions. For example, the use of protective masks generated many debates in P3, as did the efficacy of the vaccines in the last period.

4.3. Optimistic and pessimistic patterns within conversations

COVID-19 conversations were clustered to explore the most distinctive similarities. As shown in Table 4, in the first period, there was a division into 3 clusters, but in the rest of the periods, the division was into 4 clusters. In all periods, there was a common pattern, i.e. the first cluster contains more contrasting emotions (higher shift ratio) and is the cluster with the largest number of optimistic tweets. Cluster 2 contains the highest number of conversations with indeterminate emotions. Finally, cluster 3 and cluster 4 present the highest frequencies of pessimism. The conversations in cluster 3 were quite polarised while the conversations in cluster 4 show some emotional shift.

Table 4.

Clustering of the COVID-19 conversations.

During the first period, conversations denoting more pessimism formed the biggest cluster, i.e. cluster 3, and were not prone to shift. These conversations included statements such as “Can you stop being so wilfully ignorant before you get yourself, your family and friends killed?”, “health system cant hold on” or “how covid-19 will seize your rights and destroy our economy”. In turn, P1 conversations lying more on the optimistic side, i.e. located in cluster 2, involved fewer participants and showed little emotion shift. For example, these conversations included cheerful statements such as “Varied activities can keep one's spirits up!”, “Ok! Thanks”, “Happy!”, “unsung heroes!” or “be a part of the solution!”. The last cluster contains conversations with a weaker emotion signal, but still more on the optimistic side, and a higher ratio of shifts in emotion. That is, this cluster contains conversations where participants presented a broader spectrum of emotions and the flow of conversation did not converge to a given side of the spectrum.

In the second and third periods, the cluster with the highest number of conversations was the one that denotes the most change in emotions but with the highest number of pessimistic tweets (i.e. cluster 4). This new cluster showed the greatest interaction and depth in its conversations. During P2, topics such as the possible benefits of hydroxychloroquine or the United Kingdom's strategy against COVID in the middle of Brexit were discussed. In P3, the topics of interest were the wearing of masks, city closures and school closings, and updates of the COVID-19 statistics (i.e. the number of deaths, infected and recovered).

The model obtained for the last period (that is, P4) was quite similar. Nonetheless, on average, the conversations in clusters 3 and 4 were more similar in terms of the number of tweets, depth and width. In the conversations showing greater pessimism, the users expressed concerns about the evolution of the pandemic, e.g. “continues to go the wrong way”, “the death rate per cases is very high”, “Disastrous news”, “Now I fear start of October”, “serious problems”, “Stop the lies.” or “shameful, dangerous and irresponsible”.

4.4. Optimism and pessimism across conversations

By clustering the users participating in COVID-19 conversations throughout the four periods, it is possible to further explore the consistency of certain aspects of users’ emotions, notably the empathy with one another's feelings (Table 5).

Table 5.

Clustering of the users discussing COVID-19 by period.

Although forming the smallest of all the clusters, the users participating the most and changing feelings most often were found in the last period, i.e. cluster 3 in P4. Furthermore, looking into the users' influence and, in particular, the top 25 most influential users per period, it was interesting to notice that not all of these users had verified accounts (see details in Supplementary material 4). In particular, some of the top 25 influential users in P2 and P3 did not have verified accounts (2 users and 1 user, respectively). The verified accounts typically relate to news outlets (such as Cable News Network (CNN), BBC and New Delhi Television (NDTV)), politicians (such as Joe Biden, Donald Trump and Muhammadu Buhari), and well-known public figures (such as Neil deGrasse Tyson and Bill Gates).

As a general observation regarding the role of influential users in COVID-19 conversations, results show that users were more participatory, and displayed a wider range of emotional states, when influential users or verified accounts initiated the conversation. As illustrated in Table 4, one of the clusters gathers together the most influential users and exposes the great emotional volatility they exhibited in each of the four periods. Another immediate observation is that the active participators showing optimistic feelings are more prone to emotional shifts. Also, there is a great contrast between conversation starters, who are grouped in a particular cluster (i.e. cluster 3 and sometimes cluster 4), and socialisers, who typically show high response rates across all clusters and periods.

5. Discussion

5.1. Results of research

The difference between the present work and related research on the computational analysis of optimism and pessimism in social media lies in three main aspects. First, the use of the BERT embeddings, which are trained utilising transformers, a coalition of encoders and attention mechanisms, to capture the intricate emotional semantics of the messages. Transformer-based neural networks have been successfully applied to several text classification tasks, including sentiment analysis (González et al., 2020) (Ren et al., 2021). Second, different neural network architectures are compared to build binary classification models. Since the absence of optimism does not imply the expression of pessimism, and vice-versa, the ensemble of independent binary models is well suited to evaluate probabilities on both sides of the continuum and improve overall performance. Notably, it allows the detection of messages void of emotion or expressing conflicting emotions.

Third, the combination of mood detection at the tweet level with conversation reconstruction, i.e. optimism and pessimism are depicted within conversational flows to study emotional shifts and signal frequency. Compared to previous approaches, the focus is not set on delving into detailed traits of optimism or pessimism (e.g. anxiety or depression), but rather on examining the social influence of the participants and the prevalence of a given emotion signal in changing or reinforcing others' feelings.
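The second aspect, i.e. the ensemble of two independent binary models, can be illustrated with a minimal sketch of how their outputs might be combined into a single tweet label. The decision rule, the 0.5 threshold and the function name `combine_binary_predictions` are assumptions made for this example; in particular, mapping both "no emotion detected" and "conflicting emotions detected" to an indeterminate label is one possible reading rather than a documented implementation detail.

```python
def combine_binary_predictions(p_optimism, p_pessimism, threshold=0.5):
    """Combine two independent binary classifiers into one label.

    p_optimism:  probability output by the optimism detector.
    p_pessimism: probability output by the pessimism detector.
    threshold:   assumed decision threshold (hypothetical value).
    """
    is_optimistic = p_optimism >= threshold
    is_pessimistic = p_pessimism >= threshold
    if is_optimistic and not is_pessimistic:
        return "optimistic"
    if is_pessimistic and not is_optimistic:
        return "pessimistic"
    # Neither detector fires (void of emotion) or both fire (conflicting).
    return "indeterminate"


labels = [
    combine_binary_predictions(0.91, 0.08),  # clear optimism
    combine_binary_predictions(0.12, 0.87),  # clear pessimism
    combine_binary_predictions(0.10, 0.15),  # no emotion detected
    combine_binary_predictions(0.75, 0.80),  # conflicting signals
]
```

Keeping the two detectors separate, rather than training a single two-class model, is what allows the last two cases to be told apart from clearly optimistic or pessimistic messages.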

Overall, optimistic conversations were typically less common and had fewer participants, which was somewhat expected given the stress and trauma experienced at the time. Previous studies reported increasing use of social platforms during the pandemic as a coping mechanism for social and physical distancing. At the same time, these studies warned of the exacerbation of long-term negative feelings (Danny et al., 2020)(Ferrara & Yang, 2015). In the current corpora, the pessimistic mood of the users is not strongly noticed across conversations, but these feelings are unlikely to subside throughout the conversation. Pessimistic users tend to have a low influence on the social platform whereas the users with higher influence scores are quite participative and volatile. Interestingly, the announcement of the launching of the phase 3 trials of the first vaccines in the fourth period under analysis led to more optimistic conversations as well as more participation in pessimistic conversations.

5.2. Practical implications

This work has valuable implications for practice. Information on individual and collective mood from social media is of added value for the development of tools that support the design of actionable public health intervention strategies. Previous works offered a post-level analysis of emotions whereas the new method proposes the systematic conversation-level depiction of the flows of emotions. The ability to depict these emotional flows in online conversations is key to monitoring and controlling potential health risks encouraged by social interplay during periods of severe trauma and distress.

Also noteworthy, the proposed method could be applied to analyse optimism and pessimism in other languages (for example, Spanish or French). The main requirement would be the use of language-specific text pre-processing techniques and embeddings. Stopword lookup lists, lemmatization tools and similar resources are available for the main languages. Regarding the deep learning methods, there is a multilingual version of GloVe (Pennington et al., 2014) and several BERT pre-trained model options, such as BETO (a pre-trained model of BERT in Spanish) (Cañete et al., 2020) or CamemBERT (a pre-trained model in French) (Martin et al., 2020). Multilingual models such as BERT-base-multilingual could also be an option (Devlin et al., 2018).

From the cognitive-behavioural perspective, the proposed sociome-based learning approach is a relevant tool for the surveillance and management of pandemics, health crises and trauma-related stress. Likewise, it may be used to capitalize on existing online support, maximising the spread of positive feelings and improving people's moods and attitudes. Besides, the new COVID-19 tweet corpora are valuable to train and test emotion detection models on social media contents, namely regarding situational awareness during health crises.

The proposed approach can thus be applied to artificial intelligence systems for targeted awareness campaigns and emotional support. In particular, emotional traits may be combined with other information to design empathic ways to approach vulnerable people and to amplify optimism throughout similar conversational contexts. The ultimate goal is to prevent and fight the onset of mental health issues (e.g. depression and anxiety) and alert individuals and communities to potential risk behaviours (e.g. regarding social distancing and vaccination).

5.3. Limitations

The most immediate limitation is motivated by the fact that the capture of raw data was carried out using the free Twitter API, i.e. the number of available tweets is restricted by the amount of information that the platform allows to extract. For this reason, a full data retrieval through automated dashboard vendors, or using a paid service of the Twitter API, may provide further insights.

Moreover, the current analysis is based on all the Twitter messages written in English. Since each country has adopted different restrictions and lockdown measures, possible clustering of users across different English-speaking countries could be interesting from a practical perspective. However, the introduction of geographic information is not mandatory on Twitter (similar to age and gender) and most of the user accounts did not supply such information. To some extent, such information could be potentially obtained by combining the semantical, geographical and visual profile information with the geolocation of the Twitter messages and third-party, specialised databases, such as GeoNames. However, it would entail an additional curation effort that was out of the scope of the current work.

Similarly, Twitter labels on state-affiliated accounts could provide additional context about accounts that are controlled by certain official representatives of governments, state-affiliated media entities and individuals closely associated with those entities. Yet, such data is not currently available through the Twitter API.

5.4. Directions for future research

There are also interesting directions for future research to extend this work. The current study is just a first step in the emotional characterisation of conversational contexts and in researching the psychological and social well-being of individuals and populations. Future research may extend this study by linking emotional conversation traits with other infodemiology data to look into the attenuating effects of online affect labelling regarding health policies and recommendations, including how misconceptions, misinformation and risk-prone social conduct are advocated and spread online.

Twitter accounts could be further investigated in terms of potential bots and non-individual users. In the present case study, analysis accounted for verified accounts, which is a piece of information readily available through the API. Further description of the “players” could be conducted after the flows are delineated (i.e. after the application of the proposed method). For example, one could inspect who is systematically polarising conversations and which bots or non-individuals are actively looking to change opinions and those that just want to blend in.

On a similar note, future research can explore whether and how different types of health knowledge would need to be processed differently by social media users, so that the feelings about certain topics may lie more on the optimistic side. Likewise, it would be relevant to study how health organisations, knowledgeable individuals and influencers can be effective disseminators of optimism. Notably, the reported increase in mental health issues due to physical distancing, quarantining processes, and social isolation makes research on these topics pivotal to systematically investigate, understand, and tailor appropriate interventions.

6. Conclusions

Signatures of public emotion and attention are present in social media data. The need to focus on this sharing to study individuals’ opinions and social behaviour towards the COVID-19 pandemic is timely because in interpreting them one may unravel the affective risk inherent to these daily interactions. So, this work proposes a new deep learning methodology for the automatic detection of optimistic and pessimistic tweets and the social contextualisation of these emotions.

The methodology was applied to social media conversations about the COVID-19 pandemic. Classifier fine-tuning entailed the coupling of the BERT transformer embedding with several learning architectures. Based on the obtained f-score values, the BERTBASE-Bi-LSTM models, i.e. bidirectional long short-term memory models, supported further detection of optimistic and pessimistic tweets. The predictions of these models for four periods of the pandemic enabled an in-depth observation of how the evolution of the health crisis affected the emotional frequency of the conversations and motivated emotional shifts in the individuals. In addition to depicting emotional flow within conversations, conversation starters or active engagers were depicted based on social influence and their emotional patterns across conversations.

Overall, the sociome analysis approach proposed to understand the public perception of the COVID-19 pandemic holds great potential to unravel the positive aspects as well as the risks inherent to daily online interactions during health crises. These insights can be used to better adapt crisis management plans to the needs and concerns expressed by the population as the crisis evolves.

CRediT authorship contribution statement

Guillermo Blanco: Methodology, Investigation, Software, Writing – original draft, Writing – review & editing. Anália Lourenço: Conceptualization, Supervision, Validation, Writing – original draft, Writing – review & editing.

Declarations of competing interest

The authors declare no conflicts of interest regarding the publication of this paper.

Acknowledgements

This study was supported by MCIN/AEI/ 10.13039/501100011033 under the scope of the CURMIS4th project (Grant PID2020–113673RB-I00), the Consellería de Educación, Universidades e Formación Profesional (Xunta de Galicia) under the scope of the strategic funding of ED431C2018/55-GRC Competitive Reference Group, the “Centro singular de investigación de Galicia” (accreditation 2019–2022) and the European Union (European Regional Development Fund - ERDF)- Ref. ED431G2019/06, and the Portuguese Foundation for Science and Technology (FCT) under the scope of the strategic funding of UIDB/04469/2020 unit. SING group thanks CITI (Centro de Investigación, Transferencia e Innovación) from the University of Vigo for hosting its IT infrastructure. Funding for open access charge: Universidade de Vigo/CISUG.

Footnotes

Supplementary material associated with this article can be found, in the online version, at doi:10.1016/j.ipm.2022.102918.

Appendix. Supplementary materials

References

- Abbas J., Wang D., Su Z., Ziapour A. The role of social media in the advent of COVID-19 pandemic: crisis management, mental health challenges and implications. Risk Management and Healthcare Policy. 2021;14:1917. doi: 10.2147/RMHP.S284313.

- Abd-Alrazaq A., Alhuwail D., Househ M., Hamdi M., Shah Z. Top concerns of tweeters during the COVID-19 pandemic: infoveillance study. Journal of Medical Internet Research. 2020;22(4):e19016. doi: 10.2196/19016.

- Alorini G., Rawat D.B., Alorini D. LSTM-RNN based sentiment analysis to monitor COVID-19 opinions using social media data. IEEE International Conference on Communications. 2021.

- Alshahrani A., Ghaffari M., Amirizirtol K., Liu X. Identifying optimism and pessimism in twitter messages using XLNet and deep consensus. Proceedings of the International Joint Conference on Neural Networks. 2020.

- Benzel E. Optimism versus pessimism: The choice is yours. World Neurosurgery. 2020;144:xxi. doi: 10.1016/j.wneu.2020.09.133.

- Bontempi E. The Europe second wave of COVID-19 infection and the Italy “strange” situation. Environmental Research. 2021;193. doi: 10.1016/j.envres.2020.110476.

- Breiman L. Random forests. Machine Learning. 2001;45(1):5–32. doi: 10.1023/A:1010933404324.

- Brindha D., Jayaseelan R., Kadeswara S. Social media reigned by information or misinformation about COVID-19: A phenomenological study. SSRN Electronic Journal. 2020 (May). doi: 10.2139/ssrn.3596058.

- Brownlie J., Shaw F. Empathy rituals: Small conversations about emotional distress on twitter. Sociology. 2019;53(1):104–122. doi: 10.1177/0038038518767075.

- Cacciapaglia G., Cot C., Sannino F. Second wave COVID-19 pandemics in Europe: A temporal playbook. Scientific Reports. 2020;10(1):15514. doi: 10.1038/s41598-020-72611-5.

- Cambria E., Poria S., Gelbukh A., Thelwall M. Sentiment analysis is a big suitcase. IEEE Intelligent Systems. 2017;32(6):74–80. doi: 10.1109/MIS.2017.4531228.

- Cañete J., Chaperon G., Fuentes R., Ho J.-H., Kang H., Perez J. Spanish pre-trained BERT model. Workshop Paper at PML4DC, ICLR. 2020; pp. 1–10.

- Caragea C., Dinu L.P., Dumitru B. Exploring optimism and pessimism in twitter using deep learning. Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, EMNLP. 2018; pp. 652–658.

- Carver C.S., Scheier M.F. Dispositional optimism. Trends in Cognitive Sciences. 2014;18:293–299.

- Carver C.S., Scheier M.F., Segerstrom S.C. Optimism. Clinical Psychology Review. 2010;30(7):879–889. doi: 10.1016/j.cpr.2010.01.006.

- Chen J., Han E.Le, Luli G.K. COVID-19 vaccine–related discussion on twitter: Topic modeling and sentiment analysis. Journal of Medical Internet Research. 2021;23(6):e24435. doi: 10.2196/24435.

- Cortes C., Vapnik V., Saitta L. Support-vector networks. Machine Learning. 1995;20(3):273–297. doi: 10.1007/BF00994018.

- COVID-19 Dashboard by the Center for Systems Science and Engineering (CSSE) at Johns Hopkins University (JHU). 2021. https://coronavirus.jhu.edu/map.html.

- Crocamo C., Viviani M., Famiglini L., Bartoli F., Pasi G., Carrà G. Surveilling COVID-19 emotional contagion on twitter by sentiment analysis. European Psychiatry. 2021;64(1):1–6. doi: 10.1192/j.eurpsy.2021.3.

- Danielle, Smith H., Cheney T., Stoddard G., Coppersmith G., Bryan C., Conway M. Understanding depressive symptoms and psychosocial stressors on twitter: A corpus-based study. Journal of Medical Internet Research. 2017;19(2):e48. doi: 10.2196/JMIR.6895.

- Danny Thij, ten M., Bathina K., Rutter L.A., Bollen J. Social media insights into US mental health during the COVID-19 pandemic: Longitudinal analysis of twitter data. Journal of Medical Internet Research. 2020;22(12):e21418. doi: 10.2196/21418.

- Devlin J., Chang M.-W., Lee K., Toutanova K. BERT: Pre-training of deep bidirectional transformers for language understanding. 2018. http://arxiv.org/abs/1810.04805.

- Dyer J., Kolic B. Public risk perception and emotion on twitter during the Covid-19 pandemic. Applied Network Science. 2020;5(1). doi: 10.1007/s41109-020-00334-7.

- Ferrara E., Yang Z. Measuring emotional contagion in social media. PLoS ONE. 2015;10(11):1–14. doi: 10.1371/journal.pone.0142390.

- Gasper K. Utilizing neutral affective states in research: theory, assessment, and recommendations. Emotion Review. 2018;10(3):255–266. doi: 10.1177/1754073918765660.

- Goldenberg A., Gross J.J. Digital emotion contagion. Trends in Cognitive Sciences. 2020;24(4):316–328. doi: 10.1016/j.tics.2020.01.009.

- González J.Á., Hurtado L.F., Pla F. Transformer based contextualization of pre-trained word embeddings for irony detection in twitter. Information Processing and Management. 2020;57(4). doi: 10.1016/j.ipm.2020.102262.

- Harb J.G.D., Ebeling R., Becker K. A framework to analyze the emotional reactions to mass violent events on Twitter and influential factors. Information Processing and Management. 2020;57(6). doi: 10.1016/j.ipm.2020.102372.

- Hecht D. The neural basis of optimism and pessimism. Experimental Neurobiology. 2013;22(3):173–199. doi: 10.5607/en.2013.22.3.173.

- Herrando C., Constantinides E. Emotional contagion: A brief overview and future directions. Frontiers in Psychology. 2021;12(July):1–7. doi: 10.3389/fpsyg.2021.712606.

- Huang W., Cao B., Yang G., Luo N., Chao N. Turn to the internet first? Using online medical behavioral data to forecast COVID-19 epidemic trend. Information Processing and Management. 2021;58(3). doi: 10.1016/j.ipm.2020.102486.

- Johnson A.F., Pollock W., Rauhaus B. Mass casualty event scenarios and political shifts: 2020 election outcomes and the U.S. COVID-19 pandemic. Administrative Theory & Praxis. 2020;42(2):249–264. doi: 10.1080/10841806.2020.1752978.

- Khatua A., Khatua A., Cambria E. A tale of two epidemics: Contextual word2vec for classifying twitter streams during outbreaks. Information Processing and Management. 2019;56(1):247–257. doi: 10.1016/j.ipm.2018.10.010.

- Kim Y. Convolutional neural networks for sentence classification. EMNLP 2014 - 2014 Conference on Empirical Methods in Natural Language Processing, Proceedings of the Conference. 2014; pp. 1746–1751.

- Liang B., Su H., Gui L., Cambria E., Xu R. Aspect-based sentiment analysis via affective knowledge enhanced graph convolutional networks. Knowledge-Based Systems. 2022;235. doi: 10.1016/J.KNOSYS.2021.107643.

- Martin, L., Muller, B., Ortiz Suárez, P.J., .Dupont, Y., Romary, L., de la Clergerie, É. et al. (2020). CamemBERT: A tasty french language model. 7203–7219. 10.18653/v1/2020.acl-main.645. [DOI]

- McCallum A., Nigam K. AAAI/ICML-98 Workshop on Learning for Text Categorization. 1998. A comparison of event models for naive bayes text classification; pp. 41–48. https://doi.org/10.1.1.46.1529. [Google Scholar]

- Naseem U., Razzak I., Khushi M., Eklund P.W., Kim J. COVIDSenti: A large-scale benchmark twitter data set for COVID-19 sentiment analysis. IEEE Transactions on Computational Social Systems. 2021;8(4):976–988. doi: 10.1109/TCSS.2021.3051189. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Naseem U., Razzak I., Musial K., Imran M. Transformer based deep intelligent contextual embedding for Twitter sentiment analysis. Future Generation Computer Systems. 2020;113:58–69. doi: 10.1016/j.future.2020.06.050.

- Paszke, A., Gross, S., Massa, F., Lerer, A., Bradbury, J., Chanan, G. et al. (2019). PyTorch: An imperative style, high-performance deep learning library.

- Pedregosa F., Varoquaux G., Gramfort A., Michel V., Thirion B., Grisel O., et al. Scikit-learn: Machine Learning in Python. Journal of Machine Learning Research. 2011;12:2825–2830.

- Pennington J., Socher R., Manning C.D. EMNLP 2014 - 2014 Conference on Empirical Methods in Natural Language Processing, Proceedings of the Conference. 2014. GloVe: Global Vectors for Word Representation; pp. 1532–1543.

- Phan H.T., Nguyen N.T., Hwang D. Convolutional attention neural network over graph structures for improving the performance of aspect-level sentiment analysis. Information Sciences. 2022;589:416–439. doi: 10.1016/J.INS.2021.12.127.

- Poria S., Majumder N., Mihalcea R., Hovy E. Emotion recognition in conversation: Research challenges, datasets, and recent advances. IEEE Access. 2019;7:100943–100953. doi: 10.1109/ACCESS.2019.2929050.

- Pota, M., Ventura, M., & Catelli, R. (2021). An effective BERT-based pipeline for Twitter sentiment analysis: A case study in Italian. 1–21.

- Ren Z., Shen Q., Diao X., Xu H. A sentiment-aware deep learning approach for personality detection from text. Information Processing and Management. 2021;58(3) doi: 10.1016/j.ipm.2021.102532.

- Riquelme F., González-Cantergiani P. Measuring user influence on Twitter: A survey. Information Processing and Management. 2016;52(5):949–975. doi: 10.1016/j.ipm.2016.04.003.

- Roesslein, J. (2020). Tweepy: Twitter for Python! https://github.com/Tweepy/Tweepy

- Ruan X., Wilson S.R., Mihalcea R. 54th Annual Meeting of the Association for Computational Linguistics, ACL 2016 - Short Papers. 2016. Finding optimists and pessimists on Twitter; pp. 320–325.

- Ruktanonchai N.W., Floyd J.R., Lai S., Ruktanonchai C.W., Sadilek A., Rente-Lourenco P., et al. Assessing the impact of coordinated COVID-19 exit strategies across Europe. Science. 2020;369(6510):1465–1470. doi: 10.1126/science.abc5096.

- Saha K., Torous J., Ernala S.K., Rizuto C., Stafford A., De Choudhury M. A computational study of mental health awareness campaigns on social media. Translational Behavioral Medicine. 2019;9(6):1197–1207. doi: 10.1093/tbm/ibz028.

- Scheier M.F., Carver C.S. Optimism, coping, and health: Assessment and implications of generalized outcome expectancies. Health Psychology. 1985;4(3):219–247. doi: 10.1037/0278-6133.4.3.219.

- Scheier M.F., Swanson J.D., Barlow M.A., Greenhouse J.B., Wrosch C., Tindle H.A. Optimism versus pessimism as predictors of physical health: A comprehensive reanalysis of dispositional optimism research. American Psychologist. 2020. doi: 10.1037/amp0000666.

- Schild, L., Ling, C., Blackburn, J., Stringhini, G., Zhang, Y., & Zannettou, S. (2020). “Go eat a bat, Chang!”: An early look on the emergence of sinophobic behavior on web communities in the face of COVID-19. ArXiv, 2.

- Sciandra A. Proceedings - IEEE Symposium on Computers and Communications, 2020-July. 2020. COVID-19 Outbreak through Tweeters’ Words: Monitoring Italian Social Media Communication about COVID-19 with Text Mining and Word Embeddings.

- Sharma S., Sharma S. Analyzing the depression and suicidal tendencies of people affected by COVID-19’s lockdown using sentiment analysis on social networking websites. Journal of Statistics and Management Systems. 2020:1–19. doi: 10.1080/09720510.2020.1833453.

- Shaver P., Schwartz J., Kirson D., O'Connor C. Emotion knowledge: Further exploration of a prototype approach. Journal of Personality and Social Psychology. 1987;52(6):1061–1086. doi: 10.1037//0022-3514.52.6.1061.

- Soroya S.H., Farooq A., Mahmood K., Isoaho J., Zara S.e. From information seeking to information avoidance: Understanding the health information behavior during a global health crisis. Information Processing and Management. 2021;58(2) doi: 10.1016/j.ipm.2020.102440.

- Taylor S., Landry C.A., Paluszek M.M., Rachor G.S., Gordon J., Asmundson G. Worry, avoidance, and coping during the COVID-19 pandemic: A comprehensive network analysis. Journal of Anxiety Disorders. 2020;76(August) doi: 10.1016/j.janxdis.2020.102327.

- Trisna, K.W., & Jie, H.J. (2022). Deep learning approach for aspect-based sentiment classification: A comparative review. doi: 10.1080/08839514.2021.2014186.

- van Bavel J.J., Baicker K., Boggio P.S., Capraro V., Cichocka A., Cikara M., et al. COVID-19 pandemic response. Nature Human Behaviour. 2020:1–12. doi: 10.1038/s41562-020-0884-z.

- Venigalla, A.S.M., Vagavolu, D., & Chimalakonda, S. (2020). Mood of India during Covid-19 - An interactive web portal based on emotion analysis of Twitter data. ArXiv, 65–68.

- Wang Z., Ho S.B., Cambria E. A review of emotion sensing: Categorization models and algorithms. Multimedia Tools and Applications. 2020;79(47–48):35553–35582. doi: 10.1007/S11042-019-08328-Z.

- Wolf T., Debut L., Sanh V., Chaumond J., Delangue C., Moi A., et al. Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: System Demonstrations. 2020. Transformers: State-of-the-art natural language processing; pp. 38–45. https://www.aclweb.org/anthology/2020.emnlp-demos.6

- Wróbel M., Imbir K.K. Broadening the perspective on emotional contagion and emotional mimicry: The correction hypothesis. Perspectives on Psychological Science. 2019;14(3):437–451. doi: 10.1177/1745691618808523.

- Xiao L., Xue Y., Wang H., Hu X., Gu D., Zhu Y. Exploring fine-grained syntactic information for aspect-based sentiment classification with dual graph neural networks. Neurocomputing. 2022;471:48–59. doi: 10.1016/J.NEUCOM.2021.10.091.

- Xue J., Chen J., Hu R., Chen C., Zheng C., Su Y., et al. Twitter discussions and emotions about the COVID-19 pandemic: Machine learning approach. Journal of Medical Internet Research. 2020;22(11):1–14. doi: 10.2196/20550.

Associated Data
