Abstract

With the circulation of misinformation about the COVID-19 pandemic, the World Health Organization has raised concerns about an “infodemic,” which exacerbates people’s misperceptions and deters preventive measures. Against this backdrop, this study examined the conditional indirect effects of social media use and discussion heterogeneity preference on COVID-19-related misinformation beliefs in the United States, using a national survey. Findings suggested that social media use was positively associated with misinformation beliefs, while discussion heterogeneity preference was negatively associated with misinformation beliefs. Furthermore, worry about COVID-19 was found to be a significant mediator of both associations. In addition, faith in scientists moderated the indirect effect of discussion heterogeneity preference on misinformation beliefs. That is, among those who had stronger faith in scientists, the indirect effect of discussion heterogeneity preference on misinformation beliefs became more negative. The findings revealed communication and psychological factors associated with COVID-19-related misinformation beliefs and provided insights into coping strategies during the pandemic.

Keywords: Misinformation, COVID-19, Social media, Discussion heterogeneity, Worry, Faith in scientists

1. Introduction

During the COVID-19 pandemic, the World Health Organization (WHO) has raised concerns about an “infodemic” (World Health Organization, 2020a; Vraga et al., 2020, p. 475) as COVID-19-related misinformation has spread and been amplified on social media platforms. Since the outbreak of the pandemic, various types of misinformation about the origin of the virus, the harmfulness of the virus, and the ways in which the virus can spread have been circulating (Brennen et al., 2020). Scholars have lamented a number of negative consequences of misinformation, including the crystallization of hostile attitudes toward others and the erosion of deliberative democracy (Garrett, 2011). Given these worrisome ramifications, factors associated with misinformation beliefs warrant further examination, and the COVID-19 pandemic provides a novel context (Vraga et al., 2020). Scholars have suggested that belief in COVID-19-related misinformation triggers negligence in prevention and reluctance to adopt protective measures (Barua et al., 2020), which in turn could lead to an increase in fatalities.

As of the date of the current study, the United States has been leading the world in the infection and fatality rates of COVID-19 (WHO, 2020b). Several Pew polls revealed that a considerable number of Americans reported believing in conspiracy theories about COVID-19, such as the theories that the virus was intentionally created in a lab and that vaccines were already available (e.g., Garrison, 2020; Pew Research Center, 2020). Many studies have also sought to unravel the communicative, psychological, cognitive, and ideological factors shaping people’s misinformation beliefs (e.g., Allington et al., 2020; Anspach and Carlson, 2018). However, given the novelty of the pandemic, empirical findings at the current phase are sporadic rather than conclusive, and mixed results abound.

Against this backdrop, the current study builds a moderated mediation model to examine whether a communicative behavior (social media use) and a psychological factor (preference for discussion heterogeneity) are associated with individuals’ beliefs in COVID-19-related misinformation. In addition, given that the information glut has elicited negative emotions such as worry (Ma and Miller, 2020), which in turn likely affect beliefs in misinformation, this study also investigates the mediating effect of worry. Further, considering the widespread conspiracy theory claiming that the virus is a hoax, the resulting dispute over the authenticity of the virus (Gruzd and Mai, 2020), and the conflict between religious beliefs and scientific discourse in American society, the current study incorporates faith in scientists as a moderator, to probe whether the main effects are contingent upon the extent to which individuals hold faith in scientists.

Theoretically, the study contributes new evidence to extant knowledge about the role that social media use and discussion heterogeneity preference play in shaping people’s misperceptions. Practically, by delineating the associations among the examined factors, the current study could provide important insights into the antecedents and prerequisites of misinformation beliefs, as well as the viable solutions needed by scholars and decision makers to curb the “infodemic” (Vraga et al., 2020, p. 475; WHO, 2020a).

1.1. Social media use and misinformation beliefs

Misinformation is broadly conceptualized as “cases in which people’s beliefs about factual matters are not supported by clear evidence and expert opinion” (Nyhan and Reifler, 2010, p. 305). As Southwell et al. (2018, p. 3) have put it, misinformation speaks to “a category of claim for which there is at least substantial disagreement (or even consensus rejection) when judged as to truth value among the widest feasible range of observers.” Hence, misinformation belief refers to faith and belief in such unverified information (Anspach and Carlson, 2018).

Scholars have raised concerns about the negative consequences of the misinformation that abounds on a variety of health issues, including the deterrence of effective preventive measures and reduced awareness of the harmfulness of viruses and diseases (Allington et al., 2020; Bode and Vraga, 2018; Vraga et al., 2020). During the COVID-19 pandemic, several types of misinformation have circulated globally, and two of them have garnered particularly large numbers of believers in the U.S. According to Pew, three-in-ten Americans believe that COVID-19 was created in a lab (Pew Research Center, 2020), and one third of Americans believe that a vaccine already exists (Garrison, 2020). The current study focuses on both misinformation beliefs.

These worrisome ramifications have drawn scholarly attention to where and how misinformation is produced and disseminated. Numerous studies have lent credence to social media’s role as a misinformation hub (e.g., Allington et al., 2020; Anspach and Carlson, 2018; Valenzuela et al., 2019). For instance, Valenzuela et al. (2019) suggested that social media use was positively associated with misinformation sharing. Anspach and Carlson (2018) indicated that users of social media such as Twitter and Facebook were more likely to be misinformed about the featured topic, tending to “report the factually-incorrect information” (p. 697). Analyzing survey samples, Allington et al. (2020) revealed that during the COVID-19 pandemic, the more heavily people relied on Twitter, Facebook, or YouTube as their main information sources, the more likely they were to believe in COVID-19-related conspiracy theories.

Many studies have examined why misinformation goes viral so easily in the social media environment (e.g., Chen et al., 2015). For instance, Chen et al. (2015) indicated that people shared misinformation mainly because of its unique information characteristics; namely, misinformation was usually more “interesting,” “new and eye-catching,” and “can be a good topic of conversation” than authentic information (p. 587). They also suggested that people did not prioritize accuracy and authenticity while sharing information on social media. This finding echoes the argument that most misinformation is based on conspiracy theories, claiming to expose the malicious purposes of certain organizations or individuals or to reveal secrets and untold stories (Craft et al., 2017). Such clickivism, bizarreness, and conspiracism garnered greater amounts of attention and dissemination (Peter and Koch, 2019; Uscinski and Parent, 2014). Furthermore, scholars have suggested that excessive use of social media usually leads to social media fatigue, which could make users less inclined to authenticate news before sharing it (Ravindran et al., 2014). This argument is buttressed by a subsequent study, which revealed that excessive use of social media and the resulting fatigue were positively associated with fake news sharing online (Talwar et al., 2019).

Building upon the literature, the following hypothesis is proposed:

H1: Social media use would be positively associated with COVID-19 related misinformation beliefs.

1.2. Discussion heterogeneity preference and misinformation beliefs

Discussion heterogeneity is conceptualized as exposure to diverse viewpoints and engagement in discussions with those viewpoints (Kim et al., 2013; Scheufele et al., 2006). Because the defining characteristics of a deliberative democracy are the free flow of information, careful examination of alternatives, comprehension of different opinions, and a high level of political understanding and tolerance (Habermas, 1989; Kim et al., 2013; Mutz, 2002), discussion heterogeneity is considered crucial for the formation and development of a deliberative democracy. The concept of discussion heterogeneity originally stems from Festinger’s (1957) cognitive dissonance theory, which hypothesized that people tend to selectively expose themselves to like-minded information while avoiding information incongruent with their pre-existing beliefs, in order to reduce cognitive dissonance.

Building upon this theoretical basis, discussion heterogeneity preference refers to the psychological predilection for discussion with people holding different viewpoints (Kim et al., 2013; Scheufele et al., 2006). A sizable body of literature has suggested that if individuals only expose themselves to like-minded others, they become less likely to tolerate alternative viewpoints and more reluctant to re-examine their pre-existing opinions or make self-corrections, which in turn intensifies ideological polarization at the societal level (Kim et al., 2013; Scheufele et al., 2006).

Moreover, scholars have highlighted that reluctance or refusal to engage in discussions with various viewpoints could exacerbate the prevalence of misinformation. For instance, Törnberg (2018) found that a lack of discussion heterogeneity on social media contributed to the virality of misinformation. Jost and associates (2018) also indicated that the preference for homogeneous social networks and an echo chamber environment was “conducive to the spread of misinformation” (p. 77). Likewise, analyzing Facebook data in the Italian and U.S. contexts, Zollo and Quattrociocchi (2018) found that “the size of misinformation cascades may be approximated by the same size of the echo chamber” (p. 193); in other words, discussion network homogeneity was significantly tied to the scale of misinformation dissemination. On the other hand, many have also shown that a quality heterogeneous discussion network helps curb the spread of misinformation, which in turn reduces people’s misperceptions such as conspiracy beliefs and biases (e.g., Bode and Vraga, 2018; Chou et al., 2018).

The mechanism linking heterogeneity preference to reduced misinformation beliefs is rather straightforward. Namely, effective discussions with people holding different viewpoints contribute to deliberation and persuasion, which in turn reduce simplistic thinking and facilitate self-correction (Bode and Vraga, 2018; Kim and Kim, 2017). Building upon the reviewed literature, the second hypothesis is formulated:

H2: Discussion heterogeneity preference would be negatively associated with misinformation beliefs.

1.3. Worry as mediator

In addition to the main associations, the current study also seeks to understand whether worry about COVID-19 would mediate both associations. Defined as the negatively charged emotion arising from an individual’s cognitive risk analysis of an antecedent (Borkovec, 2002), worry is an important variable to consider when investigating the latent process by which media consumption and certain psychological preferences lead people to accept or deny information (Bauer et al., 2020; Emery et al., 2014). For instance, analyzing a national panel about the impact of cigarettes, Emery et al. (2014) found that when participants were exposed to smoking-related messages charged with images, their worries increased, which in turn promoted feelings toward quitting smoking. Bauer et al. (2020) also found that worry was a significant mediator between stressors and anxiety/depression. In the context of climate change, Kim et al. (2020) indicated that worry could “capture individuals’ attention and motivate more in-depth message processing” (p. 7). In a nutshell, worry, as a psychological factor, mediated the association between communicative behaviors and psychological responses (Emery et al., 2014; Kim et al., 2020; Nabi et al., 2018; Skurka et al., 2018).

Given the novelty of the COVID-19 pandemic, very little research has so far examined the mediating role of worry. However, a sizable body of literature has already lent credence to (1) the positive effect of social media use on worry (Chae, 2015; Vannucci et al., 2017) and (2) the positive effect of heterogeneity on psychological well-being and mental health (Carmichael et al., 2017; Kim and Kim, 2017). Specifically, the excessive use of social media and the abundance of online information were found to exacerbate worry (Chae, 2015; Morin-Major et al., 2016; Vannucci et al., 2017). For example, in line with Morin-Major et al. (2016), Vannucci et al. (2017) demonstrated “a positive association” between social media use and worry (p. 165). Discussion heterogeneity, on the contrary, could facilitate people’s psychological well-being while decreasing worry (e.g., Kim and Kim, 2017). Therefore, the following hypotheses are postulated regarding the first set of pathways of the mediation model:

H3a: Social media use would be positively associated with worry.

H3b: Discussion heterogeneity preference would be negatively associated with worry.

Studies have also confirmed that worry raises the likelihood of misperceptions, suggesting that when people are worried, their ability to discern information weakens (e.g., Botzen et al., 2009; Mol et al., 2020). For instance, analyzing a survey, Botzen et al. (2009) found that worry was positively associated with misperception. This finding was further bolstered by a recent survey analysis (Mol et al., 2020), which revealed that higher levels of worry were related to greater misperception. Hence, a hypothesis regarding the second path of the mediation model is proposed:

H4: Worry would be positively associated with misinformation beliefs.

The juxtaposition of H3 and H4 speaks to two mediation models. That is, (1) frequent social media use would lead to increased worry about COVID-19, which in turn would trigger increased misinformation beliefs; (2) discussion heterogeneity preference would lead to lower levels of worry, which would in turn mediate the effect of discussion heterogeneity preference on misinformation beliefs. Hence, the following hypothesis is put forth:

H5: Worry of COVID-19 would be a significant mediator of the associations (a) between social media use and misinformation beliefs and (b) between discussion heterogeneity preference and misinformation beliefs.

1.4. Faith in scientists as moderator

Motivated reasoning is conditional rather than pervasive (Kunda, 1990). Numerous scholars have investigated individual-level and contextual factors that mitigate motivated reasoning (e.g., Bolsen et al., 2014; Miller et al., 2016). In terms of misperceptions such as conspiracy theory endorsement and misinformation beliefs, Miller et al. (2016) suggested that faith can mitigate “ideologically driven endorsement” of misinformation (p. 827). Decades of studies have lent credence to the role faith plays in moderating communication effects on beliefs and perceptions (e.g., Ardèvol-Abreu et al., 2018; Miller et al., 2016). However, prior studies have examined faith through various dimensions, including faith in media outlets (Ardèvol-Abreu et al., 2018), faith in the message deliverer, and faith in media ownership (Williams, 2012). Given the uniqueness of the COVID-19 context, the current study focuses on faith in scientists.

The current study conceptualizes and operationalizes faith in scientists through two dimensions, namely, faith in the efficacy of scientists and faith in the validity of scientists. Faith in the efficacy of scientists speaks to the extent to which individuals “believe that scientists can solve the problems of humankind” (Carton, 2010, p. 12). Faith in the validity of scientists pertains to the priority and emphasis an individual places on science and scientists above other social actors, such as politicians and religious figures, which is widely studied in the context of health or scientific crises (e.g., Dohle et al., 2020, Nisbet, 2005, Sibley et al., 2020).

The current study hypothesizes that faith in scientists would moderate two sets of paths, namely, (1) the effects of social media use and discussion heterogeneity preference on worry, and (2) the indirect effects of social media use and discussion heterogeneity preference on misinformation beliefs through worry, the mediator. With regard to the first moderation, studies have provided evidence that the extent to which social media use and discussion heterogeneity influence emotions, affect, and public opinion could be contingent upon the extent to which an individual trusts science and the competence of scientists (e.g., Chinn et al., 2018; Diehl et al., 2019).

In terms of the moderated mediation model, scholars have suggested that strong values or faith can act as “a perceptual screen…that influences audiences to select and privilege a subset of considerations that are consistent or reinforce their predispositions” (Hart and Nisbet, 2012, p. 703). For example, Carton (2010) found that faith in the efficacy of science for improving life moderated the effect of nuclear energy beliefs on nuclear energy attitudes. In the context of COVID-19, Sibley et al. (2020) reported that people experienced a surge in trust in science, which was in turn associated with lower conspiracist ideation. Likewise, Dohle et al. (2020) examined the extent to which individuals agreed with the argument that “politicians rely on the recommendations of scientists in order to overcome the corona crisis” (p. 7), finding that the more strongly people held such belief in the validity of scientists, the more willing they were to adopt protective measures during the pandemic.

In the U.S., considering the high rate of religious belief, the fact that the majority of Americans believe that science is often in conflict with religion (Pew Research Center, 2015), and the disputes between religious and scientific believers over the efficacy of preventive measures such as mask-wearing and social distancing during the COVID-19 pandemic (Evans and Hargittai, 2020), it is important to examine whether faith in scientists alters the main effects on misinformation beliefs among the U.S. public. Taken together, the final hypotheses are formulated:

H6a: Faith in scientists would moderate the associations (a) between social media use and worry and (b) between discussion heterogeneity preference and worry.

H6b: Faith in scientists would moderate the indirect associations (a) between social media use and misinformation beliefs and (b) between discussion heterogeneity preference and misinformation beliefs, operating via worry, the mediator.

2. Method

2.1. Sample and procedure

The current study analyzes the 2020 American National Election Studies (ANES) Exploratory Testing Survey. Fieldwork was conducted between April 10 and 18, 2020. Respondents were American citizens of voting age. Three separate opt-in Internet panel vendors were recruited to perform the online sampling. The Qualtrics survey platform was used to build the questionnaire.1 The combined final sample size is 3080.2

The current study conducted a two-phase data cleaning based on this initial sample. First, SPSS 25.0's data cleaning functionality was used to remove incomplete cases from the raw data. Specifically, all cases with any unanswered item were removed, so that no case with missing data would enter the analysis regardless of which items were ultimately used to measure the variables. As a result, 797 incomplete questionnaires were excluded, yielding 2286 valid cases. Second, the original ANES questionnaire included two items on COVID-19 misinformation beliefs, a key endogenous variable of the current study. Each item presented two statements, one a piece of misinformation and one not. After respondents selected the statement they believed, a follow-up item asked them to indicate how confident they were in the statement they chose. In light of this structure, the current study retained only those who selected the misinformation statement and excluded those who selected the non-misinformation statement, because only the former were directed to indicate their confidence levels. As a result, 482 valid cases remained for analysis, of which 44.4% were female (N female = 214). All respondents were aged 18 or above (M = 40.62, SD = 13.95).

2.2. Measures

2.2.1. Endogenous variables

2.2.1.1. Social media use

Given the penetration rates of Twitter and Facebook in the U.S. (Pew Research Center, 2019a; Pew Research Center, 2019b), many studies have treated both platforms as representative and operationalized social media use as the frequency of using them (e.g., Valenzuela et al., 2014; Velasquez and Quenette, 2018). Consistent with these studies, the ANES measured social media use through two items, “How often do you use Facebook” and “How often do you use Twitter,” on a 7-point Likert scale (1 = less than once a month, 7 = many times every day) (M = 5.75, SD = 1.45, r = 0.533, p < .001).

2.2.1.2. Discussion heterogeneity preference

Respondents were asked to indicate how often they found it helpful to hear “the political views of friends who disagree with you” (1 = all the time, 5 = never) and the extent to which political differences hurt their relationships with “close family members” and “with friends” (1 = a great deal, 5 = not at all). All three items were reverse coded before analysis (M = 3.27, SD = 0.81, α = 0.94).
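The reverse coding, composite scoring, and reliability steps described for this scale can be sketched as follows. This is a minimal illustration in Python rather than the SPSS procedure used in the study; the function names and the four hypothetical respondents are assumptions made for the example, and the responses are not ANES data.

```python
from statistics import mean, variance

def reverse_code(score, low=1, high=5):
    """Flip a Likert response so that higher values mean more of the construct."""
    return low + high - score

def cronbach_alpha(items):
    """Cronbach's alpha; `items` holds one list of responses per item."""
    k = len(items)
    totals = [sum(resp) for resp in zip(*items)]       # per-respondent sum score
    item_variance = sum(variance(col) for col in items)
    return k / (k - 1) * (1 - item_variance / variance(totals))

# Hypothetical raw responses of four respondents to three items (NOT survey data)
raw = [[1, 2, 4, 5], [1, 3, 4, 5], [2, 2, 5, 5]]

recoded = [[reverse_code(x) for x in col] for col in raw]
composite = [mean(resp) for resp in zip(*recoded)]     # scale score per respondent
alpha = cronbach_alpha(recoded)
```

Averaging the recoded items gives each respondent one scale score on the original 1 to 5 metric, which is the form in which the scale mean and standard deviation are reported.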

2.2.1.3. Worry of COVID-19

Consistent with other COVID-19 research (e.g., Ma and Miller, 2020), worry is operationalized as worry about getting the virus. Accordingly, one item, “How worried are you personally about getting the coronavirus (COVID-19)?”, was asked on a Likert scale (1 = extremely worried, 5 = not at all worried) (M = 3.44, SD = 1.37).

2.2.1.4. Faith in scientists

Faith in scientists was measured by three items, “When it comes to public policy decisions, whom do you tend to trust more, ordinary people or experts?” (1 = trust ordinary people much more, 5 = trust experts much more), “In general, how important science should be for making government decisions?” (1 = not at all important, 5 = extremely important), and “How much do ordinary people need the help of experts to understand complicated things like science and health?” (1 = not at all, 5 = a great deal) (M = 3.00, SD = 0.77, α = 0.72).

2.2.1.5. Belief in the lab misinformation

Respondents were first asked to select one from the two statements “1 = The coronavirus (COVID-19) was developed intentionally in a lab” and “2 = The coronavirus (COVID-19) was not developed intentionally in a lab.” Among those who selected the first statement, a follow-up item asked them to identify the extent to which they were confident about that statement (1 = not at all confident, 5 = extremely confident) (M = 3.62, SD = 1.16).

2.2.1.6. Belief in the vaccine misinformation

Respondents were first asked to select one from the two statements “1 = Currently, there is a vaccine for the coronavirus (COVID-19).” and “2 = Currently, there is no vaccine for the coronavirus (COVID-19).” Among those who selected the first statement, a follow-up item asked them to identify the extent to which they were confident about that statement (1 = not at all confident, 5 = extremely confident) (M = 3.45, SD = 1.19).

2.2.2. Exogenous variables

Exogenous (control) variables include gender (female: 44.3%), age (M = 40.63, SD = 13.94), education (M = 4.36 [Associate degree], SD = 1.92), income (M = 13.64, SD = 8.05), and race (non-white: 60.1%). Prior scholars have suggested that political ideology and political interest should be controlled to address the concern that the associations among the main variables are spurious (Kenski and Stroud, 2006; McCright and Dunlap, 2011); hence, political ideology (1 = very conservative, 7 = very liberal) (M = 4.15, SD = 2.10) and political interest (1 = not at all interested, 4 = extremely interested) (M = 3.43, SD = 0.83) are also included as covariates.

2.3. Analytical strategy

To address the hypotheses, hierarchical multiple regression was used, with misinformation beliefs regressed on the independent variables. Model 4 of Hayes’ (2013) PROCESS macro was used for the mediation analysis, and Model 58 for the moderated mediation analysis.
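For readers unfamiliar with the PROCESS model numbers, the two specifications can be written out as regression equations. The notation below is an assumption introduced for illustration and does not appear in the original analysis: X is the predictor (social media use or discussion heterogeneity preference), M is worry, W is faith in scientists, Y is a misinformation belief, and covariates are omitted for brevity. The first two lines sketch simple mediation (Model 4); the remaining lines sketch a model in which W moderates both the X to M path and the M to Y path, which is how Model 58 is usually described.

```latex
\begin{align}
M &= i_M + aX + e_M \\
Y &= i_Y + c'X + bM + e_Y, \qquad \text{indirect effect} = ab \\[6pt]
M &= i_M + a_1 X + a_2 W + a_3 XW + e_M \\
Y &= i_Y + c'X + b_1 M + b_2 W + b_3 MW + e_Y \\
\text{conditional indirect effect at } W &= (a_1 + a_3 W)(b_1 + b_3 W)
\end{align}
```

Under this notation, the simple slope of X on M at a given level of W is a_1 + a_3 W, which is the quantity probed by simple slope tests.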

3. Results

3.1. Preliminary test

Prior to testing the hypotheses and research question, bivariate correlations were computed as a preliminary analysis. The results are presented in Table 1.

Table 1.

Bivariate correlations across all variables.

| Variables | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | – | | | | | | | | | | | |
| 2 | 0.043 | – | | | | | | | | | | |
| 3 | −0.231*** | −0.191*** | – | | | | | | | | | |
| 4 | −0.328*** | −0.111* | 0.544*** | – | | | | | | | | |
| 5 | 0.011 | −0.181*** | 0.004 | 0.007 | – | | | | | | | |
| 6 | −0.043 | −0.237*** | ,031*** | 0.057 | 0.090* | – | | | | | | |
| 7 | −0.148** | −0.072*** | 0.229*** | 0.270*** | 0.532 | 0.044 | – | | | | | |
| 8 | −0.149** | −0.209*** | 0.201*** | 0.229*** | −0.028 | 0.106* | 0.205*** | – | | | | |
| 9 | 0.204*** | 0.413*** | −0.461*** | −0.376*** | −0.054 | −0.174*** | −0.285*** | −0.427*** | – | | | |
| 10 | −0.047 | −0.035** | 0.072** | 0.092* | −0.011 | 0.101* | 0.214*** | 0.147** | −0.161*** | – | | |
| 11 | −0.070 | −0.093* | 0.120** | 0.173*** | 0.018 | 0.066 | 0.169** | 0.138** | −0.119** | 0.230*** | – | |
| 12 | −0.099* | 0.011 | 0.066 | 0.098* | −0.083 | −0.024 | 0.287*** | 0.153** | −0.222*** | 0.148** | 0.211*** | – |
| 13 | −0.088† | 0.027 | 0.068 | 0.130** | −0.047 | −0.018 | 0.214*** | 0.212*** | −0.175*** | 0.180*** | 0.234*** | 0.662*** |

Note. †p = .05 (marginally significant), *p < .05, **p < .01, ***p < .001.

3.2. Testing for main direct effects

H1 hypothesized that social media use would be positively associated with misinformation beliefs. Hierarchical multiple regression was used, with both types of misinformation beliefs regressed on social media use. As can be seen in Table 2, beyond all controls, social media use was positively associated with both the lab belief (b = 0.052, SE = 0.041, p < .05) and the vaccine belief (b = 0.133, SE = 0.042, p < .01), lending full support to H1.

Table 2.

Hierarchical multiple regressions on both misinformation of COVID-19.

| | Misinformation belief 1 (lab) | | Misinformation belief 2 (vaccine) | |
|---|---|---|---|---|
| | b | SE | b | SE |
| Step 1 | | | | |
| Constant | 3.900*** | 0.405 | 3.485*** | 0.412 |
| Gender | −0.183 | 0.117 | −0.134 | 0.119 |
| Age | 0.003 | 0.004 | 0.003 | 0.004 |
| Education | 0.020 | 0.036 | 0.023 | 0.037 |
| Income | 0.009 | 0.009 | 0.011 | 0.009 |
| Race | −0.186 | 0.112 | −0.079 | 0.114 |
| Political ideology | −0.005 | 0.027 | −0.014 | 0.027 |
| Political interest | 0.367*** | 0.068 | 0.270*** | 0.71 |
| Model R² | 0.025 | | 0.020 | |
| F for R² | 1.851 | | 1.484 | |
| Step 2 | | | | |
| Constant | 3.611*** | 0.548 | 2.366*** | 0.556 |
| Gender | −0.132 | 0.114 | −0.083 | 0.116 |
| Age | 0.010 | 0.004 | 0.009 | 0.005 |
| Education | −0.033 | 0.037 | −0.015 | 0.037 |
| Income | 0.002 | 0.008 | 0.005 | 0.009 |
| Race | −0.171 | 0.109 | −0.054 | 0.110 |
| Political ideology | −0.018 | 0.026 | −0.027 | 0.026 |
| Political interest | 0.285*** | 0.070 | 0.179* | 0.072 |
| Social media use | 0.052* | 0.041 | 0.133** | 0.042 |
| Discussion heterogeneity preference | −0.168*** | 0.044 | −0.092* | 0.045 |
| Worry of COVID-19 | 0.126* | 0.051 | 0.147** | 0.051 |
| Δ R² | 0.025*** | | 0.020*** | |
| Model R² | 0.091*** | | 0.072*** | |
| F for R² | 7.833*** | | 11.088*** | |

Note. Cell entries are unstandardized coefficients. *p < .05, **p < .01, ***p < .001.

H2 postulated that preference for discussion heterogeneity would associate negatively with misinformation beliefs. As Table 2 demonstrates, discussion heterogeneity preference was negatively associated with the lab belief (b = −0.168, SE = 0.044, p < .001) and the vaccine belief (b = −0.092, SE = 0.045, p < .05). H2 is also fully supported.

3.3. Testing for mediation

Model 4 of Hayes’ (2013) PROCESS macro was used to investigate the hypothesized mediation effects. For the lab belief, results suggested that higher levels of social media use were associated with increased worry (b = 0.097, SE = 0.022, p < .001), which in turn was associated with increased belief in the lab misinformation (b = 0.180, SE = 0.066, p < .01). The bias-corrected percentile bootstrap procedure, based on 5000 bootstrap samples, showed that the indirect effect was significant, ab = 0.033, SE = 0.012, 95% CI = [0.0061, 0.0309].

For the vaccine misinformation belief, likewise, higher levels of social media use were associated with increased worry (b = 0.146, SE = 0.030, p < .001), which in turn was associated with increased belief in the vaccine misinformation (b = 0.131, SE = 0.061, p < .01). The bias-corrected percentile bootstrap procedure, based on 5000 bootstrap samples, showed that the indirect effect of social media use on belief in the vaccine misinformation through worry about COVID-19 was significant, ab = 0.020, SE = 0.017, 95% CI = [0.0024, 0.0426]. Social media use was also positively associated with the vaccine misinformation belief independent of its effect on worry (b = 0.157, SE = 0.040, p < .001). In other words, when mediated by worry about COVID-19, the more frequently people used social media, the more likely they were to believe both pieces of misinformation. Therefore, H3a, 4a and 5a were fully supported.

H5b proposed that worry about COVID-19 would mediate the association between discussion heterogeneity preference and both misinformation beliefs. For the lab misinformation belief, results suggested that higher discussion heterogeneity preference was associated with decreased worry (b = −0.193, SE = 0.036, p < .001), which in turn was associated with increased lab belief (b = 0.160, SE = 0.060, p < .001). The bias-corrected percentile bootstrap procedure, based on 5000 bootstrap samples, showed that the indirect effect of discussion heterogeneity preference on belief in the lab misinformation through worry was significant, ab = −0.051, SE = 0.021, 95% CI = [−0.0821, −0.0104]. Discussion heterogeneity preference was also negatively associated with the lab misinformation belief independent of its effect on worry (b = −0.209, SE = 0.072, p < .01).

For the vaccine misinformation belief, greater discussion heterogeneity preference was associated with decreased worry (b = −0.271, SE = 0.063, p < .001), and worry was associated with increased vaccine misinformation belief (b = 0.192, SE = 0.048, p < .001). A bias-corrected percentile bootstrap with 5000 resamples showed that this indirect effect was significant, ab = −0.053, SE = 0.030, 95% CI = [−0.1022, −0.1068]. Hence, H3b, H4b, and H5b were also supported. In other words, through worry about COVID-19, a stronger preference for discussion heterogeneity was associated with weaker belief in both pieces of misinformation.
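Each indirect effect reported above is the product of two regression slopes, with a confidence interval obtained by resampling. As a rough illustration only, the following Python sketch computes ab and a plain percentile bootstrap interval (not the bias-corrected variant reported above) on synthetic data. The function names, the simulated coefficients, and the omission of covariates are assumptions made for this example; it does not reproduce the PROCESS output or the ANES data.

```python
import random

def _cov(u, v):
    """Sample covariance of two equal-length sequences."""
    n = len(u)
    mu, mv = sum(u) / n, sum(v) / n
    return sum((a - mu) * (b - mv) for a, b in zip(u, v)) / (n - 1)

def indirect_effect(x, m, y):
    """Return a*b, where a is the OLS slope of M on X and b is the
    OLS slope of Y on M with X held constant."""
    sxx, smm, sxm = _cov(x, x), _cov(m, m), _cov(x, m)
    sxy, smy = _cov(x, y), _cov(m, y)
    a = sxm / sxx
    b = (smy * sxx - sxm * sxy) / (smm * sxx - sxm ** 2)
    return a * b

def bootstrap_ci(x, m, y, n_boot=5000, alpha=0.05, seed=0):
    """Percentile bootstrap interval for a*b (cases resampled with replacement)."""
    rng = random.Random(seed)
    n = len(x)
    draws = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        draws.append(indirect_effect([x[i] for i in idx],
                                     [m[i] for i in idx],
                                     [y[i] for i in idx]))
    draws.sort()
    lower = draws[int((alpha / 2) * n_boot)]
    upper = draws[int((1 - alpha / 2) * n_boot) - 1]
    return lower, upper

# Synthetic data (hypothetical effect sizes, not the survey sample)
rng = random.Random(42)
x = [rng.gauss(0, 1) for _ in range(300)]                   # predictor
m = [0.3 * xi + rng.gauss(0, 1) for xi in x]                # mediator
y = [0.4 * mi + 0.1 * xi + rng.gauss(0, 1) for xi, mi in zip(x, m)]

ab = indirect_effect(x, m, y)
lo, hi = bootstrap_ci(x, m, y, n_boot=1000)
print(f"ab = {ab:.3f}, 95% CI = [{lo:.3f}, {hi:.3f}]")
```

Under this approach, an indirect effect is treated as significant when the interval excludes zero, which is the criterion applied to the intervals in this section.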

3.4. Testing for moderated mediation

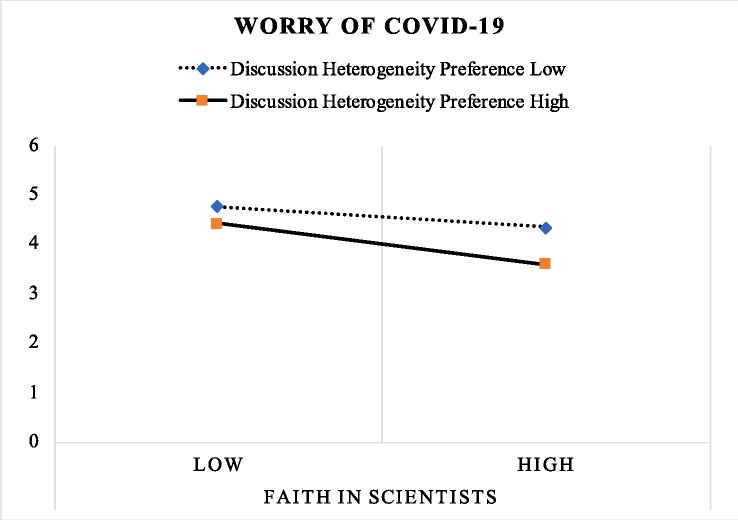

To test H6, Model 58 of Hayes’ (2013) PROCESS macro was used. Results showed that the interaction between faith in scientists and discussion heterogeneity preference on worry was significant (b = 0.252, SE = 0.052, p < .001). Fig. 1 shows the interaction. Simple slope tests demonstrated that the negative effect of discussion heterogeneity preference on worry was significant and stronger among people with greater faith in scientists (b = −0.549, SE = 0.098, p < .001) than among those with lower faith in scientists (b = −0.301, SE = 0.069, p < .001). However, no other interaction emerged. Hence, H6a was partially supported.

Fig. 1.

Interaction of discussion heterogeneity preference and faith in scientists on worry of COVID-19.
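A simple slope is the effect of the predictor evaluated at a chosen value of the moderator. The short Python sketch below shows the arithmetic for a model of the form outcome = b0 + b_x·X + b_w·W + b_xw·X·W. The coefficients are hypothetical, and probing the moderator at one standard deviation below and above its mean is an assumption, since the probing values used in this study are not stated here.

```python
def simple_slope(b_x, b_xw, w):
    """Conditional effect of X on the outcome at moderator value w."""
    return b_x + b_xw * w

# Hypothetical coefficients; moderator assumed mean-centered with SD = 1
b_x, b_xw, sd_w = -0.40, -0.10, 1.0

low = simple_slope(b_x, b_xw, -sd_w)   # one SD below the mean
high = simple_slope(b_x, b_xw, +sd_w)  # one SD above the mean
print(f"low W: {low:.2f}, high W: {high:.2f}")
```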

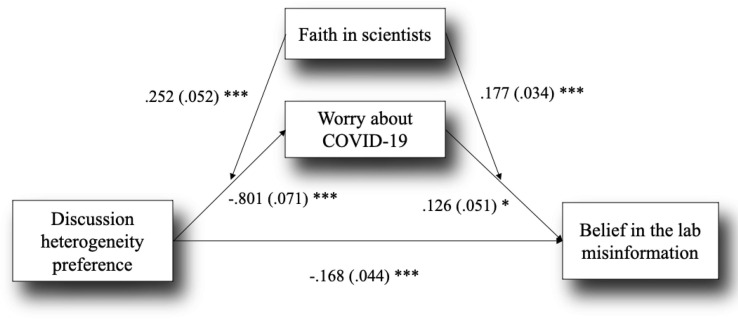

Regarding H6b, the bias-corrected percentile bootstrap indicated that the indirect effect of discussion heterogeneity preference on belief in the lab misinformation through worry was moderated by faith in scientists, with an index of moderated mediation of 0.038, SE = 0.021, 95% CI = [0.0104, 0.0769]. In other words, the indirect, negative effect of discussion heterogeneity preference was stronger among people with greater faith in scientists (b = −0.113, SE = 0.035, 95% CI = [−0.1876, −0.0305]) than among those with lower faith in scientists (b = −0.076, SE = 0.032, 95% CI = [−0.1004, −0.0202]). However, faith in scientists did not moderate the other indirect effects, lending partial support to H6b. Fig. 2 displays the final moderated mediation model.

Fig. 2.

The final moderated mediation model. Note. Unstandardized coefficients were reported with standard errors in parentheses. *p < .05, ***p < .001.
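To make the idea of a conditional indirect effect concrete, the Python sketch below assumes the simplest case, in which only the first-stage path (predictor to mediator) depends on the moderator. The indirect effect at moderator value w is then (a1 + a3·w)·b, and it changes by a3·b for each one-unit change in w. All names and values are hypothetical. When the second-stage path is moderated as well, the indirect effect is no longer linear in the moderator, so this simplification would not apply.

```python
def conditional_indirect(a1, a3, b, w):
    """Indirect effect of X on Y through M at moderator value w,
    assuming only the X -> M path is moderated."""
    return (a1 + a3 * w) * b

def index_of_moderated_mediation(a3, b):
    """Change in the indirect effect per one-unit change in the moderator."""
    return a3 * b

# Hypothetical path coefficients
a1, a3, b = -0.40, -0.10, 0.20

at_low = conditional_indirect(a1, a3, b, -1.0)
at_high = conditional_indirect(a1, a3, b, +1.0)
index = index_of_moderated_mediation(a3, b)
print(f"low W: {at_low:.3f}, high W: {at_high:.3f}, index: {index:.3f}")
```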

4. Discussion

In the context of the COVID-19 pandemic, this study examined a moderated mediation model linking social media use, discussion heterogeneity preference, worry, and faith in scientists to misinformation beliefs. Several findings merit further discussion.

First, the findings showed that frequent use of social media during the pandemic was associated with higher levels of misinformation beliefs. This result echoes prior arguments that social media have become fertile breeding grounds on which misinformation can circulate (Allington et al., 2020, Apuke and Omar, 2020). The ease of use, the egalitarian access, and the fragmented agenda-building power of social media could contribute to a deliberative democracy but can also lead to rampant misinformation if the fact-checking system remains inadequate (Pew Research Center, 2017). In other words, the decentralization of voice needs to be combined with valid fact-checking mechanisms to establish a real deliberative democracy, because the free flow of information, a defining characteristic of deliberative democracy, presupposes the authenticity of that information (Habermas, 1989). By contrast, widely circulated misinformation could undermine a healthy deliberative democracy, as it can lead to hostility toward conflicting views, the intensification of online incivility, and the rejection of science (Garrett, 2011). In the context of the COVID-19 pandemic, rampant misinformation could be used to justify the neglect of prevention and control (Barua et al., 2020, Vraga et al., 2020).

Discussion heterogeneity preference was found to be negatively associated with misinformation beliefs. This suggests that the exchange of diverse views makes room for corrective information (Bode and Vraga, 2018), which is not only conducive to debunking misinformation and stimulating self-correction among misinformation believers, but also helps to counter the solidification and crystallization of the echo chamber effect (Jost et al., 2018, Zollo and Quattrociocchi, 2018). In the context of health crises, heterogeneous discussion networks in a decentralized social network environment could facilitate self-correction and, to a certain extent, alleviate the polarization between misinformation believers and scientific discourse (Barberá, 2014).

Additionally, the current study found that worry played a mediating role. This result aligns well with prior findings that affective heuristics can arise after the functioning of a certain psychological mechanism or media exposure, and can either buffer or facilitate the behavioral or psychological outcome (Bauer et al., 2020, Emery et al., 2014). In the context of the COVID-19 pandemic, this finding indicated that the tie between social media use and misinformation beliefs became more positive when mediated by worry. By contrast, the linkage between discussion heterogeneity preference and misinformation beliefs became more negative when mediated by worry. Such a reduction in worry might relate to relaxed vigilance against the harmfulness of the virus; it could also pertain to the elimination of blind fear through exposure to diverse opinions and corrective information (Emery et al., 2014, Kim et al., 2020, Nabi et al., 2018, Skurka et al., 2018).

Lastly, two moderation effects were found. First, among those who held stronger faith in scientists, the negative effect of discussion heterogeneity preference on worry was enhanced. This is understandable, as unfounded worries originating from misperceptions could be reduced if one holds stronger faith in scientists. More importantly, during conversations with people holding heterogeneous beliefs and perceptions, those who believe in conspiracy theories could be rebutted and corrected directly by those who trust scientists’ discourse. Following such interpersonal deliberation and misinformation correction, worry could be reduced (Jost et al., 2018, Zollo and Quattrociocchi, 2018).

Furthermore, among those who had stronger faith in scientists, the indirect, negative effect of discussion heterogeneity preference on misinformation belief through worry was also enhanced. In other words, although people who prefer heterogeneous discussions are already less likely to fall for misinformation, they become even less likely to believe in misinformation if they also have a firmer faith in scientists. This finding is reasonable, as it suggests that faith in scientists and misperceptions tend to be mutually exclusive. It also highlights the importance of faith in scientists in an era when misinformation is widespread and the truth is often hard to discern. As prior scholars have put it, scientific values and firm trust in scientists could serve as “a perceptual screen,” which affects individuals in terms of their selections of subsets of considerations “that are consistent or reinforce their predispositions” (Hart and Nisbet, 2012). Hence, when scientists’ remarks contradict misinformation, a firm belief in scientists could serve as a solid “perceptual screen” that filters out or minimizes the misleading impact of misinformation on people’s perceptions.

However, it is worth noting that faith in scientists did not significantly moderate the association between social media use and worry. Social media users tend to be passive information consumers who, unlike people engaged in heterogeneous discussions, do not take part in deliberative exchange. Hence, when scientists’ discourse is not targeted at correcting the specific conspiracy theory or piece of misinformation that one has just consumed on social media, and when no one is around to deliberate and debate with, the worry stemming from specific misinformation among passive and atomized social media users would not decrease, even if they had faith in scientists (Fieseler and Fleck, 2013). Despite this explanation, future scholars are encouraged to further probe the conditions under which the moderating role of faith in scientists differs.

4.1. Practical implications

This study has several implications. First, as frequent use of social media during the pandemic was related to stronger misinformation beliefs, the implementation of fact-checking mechanisms on social media is urgently needed. Social media have many merits in terms of egalitarian access to information, equality of content production and dissemination, the full exertion of civic power, and dynamic intermedia agenda setting, and valid fact-checking could help reduce the obstacles that misinformation poses to the maturation of a deliberative democracy.

Second, where fact-checking mechanisms are not yet widely available, discussion network heterogeneity should be embraced, in that diverse information and viewpoints from others can not only contribute to the flow of quality information but also provide opportunities for frequent self-reflection and self-correction. This internalized self-correction could remedy the temporary absence of fact-checking mechanisms. In the context of the COVID-19 pandemic and other health crises, people are encouraged to regularly exchange information with friends who have different knowledge structures, so as to revisit, re-examine, and update their existing knowledge and beliefs.

More importantly, faith in scientists is warranted because other societal agents, such as governments, may distort or downplay the harmfulness of the epidemic for their own purposes and interests, and much misinformation was politically motivated, for example by shifting responsibility or attributing the virus to conspiracies. Against this backdrop, the discourse of scientists is more likely to counter the negative ramifications of misinformation, encouraging people to rationally adopt science-based preventive measures.

4.2. Limitations

This study is not without limitations. First, the data are cross-sectional, which precludes causal inference. Future scholars are encouraged to follow the ANES or other national surveys and to use multi-wave panel data to observe changes in people’s attitudes over time. Second, owing to the constraints of secondary data, the current study measured social media use based only on frequency. Given the interactive nature of social media platforms, future scholars should consider assessing people’s sharing and expression intentions. Furthermore, the correlation coefficient between the two items measuring social media use demonstrates only a “moderate correlation” (Schober et al., 2018, p. 1765). Although previous studies have lent credence to the usability of moderate correlations (e.g., Koo and Li, 2016, Schober et al., 2018), it is not an ideal association. Therefore, future scholars could consider developing their own measures, for example by incorporating more social media platforms, to assess social media use more comprehensively than the secondary dataset allows. Last but not least, the current study examined two prominent pieces of misinformation surrounding COVID-19, namely, the misinformation about the lab and the vaccine. The selection of these two was justified by statistics from several Pew polls (e.g., Pew Research Center, 2020). However, other conspiracy theories have also proliferated as the pandemic has continued. For instance, many suggested that Bill Gates was seeking to profit from mass vaccination, many asserted that the pandemic is part of China’s global depopulation plan, and many declared that 5G technology is related to the virus (e.g., Ahmed et al., 2020, Miller, 2020). Future scholars are encouraged to explore more types of misinformation and people’s misperceptions of them.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Footnotes

More information can be retrieved from the ANES website at https://electionstudies.org/data-center/2020-exploratory-testing-survey/

The ANES did not release a specific response rate for this exploratory survey.

References

- Ahmed W., Vidal-Alaball J., Downing J., Seguí F.L. COVID-19 and the 5G conspiracy theory: Social network analysis of Twitter data. J. Med. Internet Res. 2020;22(5):e19458. doi: 10.2196/19458.

- Allington D., Duffy B., Wessely S., Dhavan N., Rubin J. Health-protective behaviour, social media usage and conspiracy belief during the COVID-19 public health emergency. Psychol. Med. 2020;1–7 doi: 10.1017/S003329172000224X.

- Anspach N.M., Carlson T.N. What to believe? Social media commentary and belief in misinformation. Polit. Behav. 2018;42(3):697–718. doi: 10.1007/s11109-018-9515-z.

- Apuke O.D., Omar B. Fake news and COVID-19: Modelling the predictors of fake news sharing among social media users. Telematics Inform. 2020:101475. doi: 10.1016/j.tele.2020.101475.

- Ardèvol-Abreu A., Hooker C.M., Gil de Zúñiga H. Online news creation, trust in the media, and political participation: Direct and moderating effects over time. Journalism. 2018;19(5):611–631. doi: 10.1177/1464884917700447.

- Barberá, P. 2014. How social media reduces mass political polarization. Evidence from Germany, Spain, and the US. Job Market Paper, New York University, 46.

- Barua Z., Barua S., Aktar S., Kabir N., Li M. Effects of misinformation on COVID-19 individual responses and recommendations for resilience of disastrous consequences of misinformation. Progress Disast. Sci. 2020;8:1–9. doi: 10.1016/j.pdisas.2020.100119.

- Bauer E.A., Braitman A.L., Judah M.R., Cigularov K.P. Worry as a mediator between psychosocial stressors and emotional sequelae: Moderation by contrast avoidance. J. Affect. Disord. 2020;266:456–464. doi: 10.1016/j.jad.2020.01.092.

- Bode L., Vraga E.K. See something, say something: correction of global health misinformation on social media. Health Commun. 2018;33(9):1131–1140. doi: 10.1080/10410236.2017.1331312.

- Bolsen T., Druckman J.N., Cook F.L. The influence of partisan motivated reasoning on public opinion. Polit. Behav. 2014;36(2):235–262. doi: 10.1007/s11109-013-9238-0.

- Borkovec T.D. Life in the future versus life in the present. Clin. Psychol. Sci. Practice. 2002;9(1):76–80.

- Botzen W.J.W., Aerts J.C.J.H., van den Bergh J.C.J.M. Dependence of flood risk perceptions on socioeconomic and objective risk factors. Water Resour. Res. 2009;45(10):1–15.

- Brennen, J.S., Simon, F.M., Howard, P.N., Nielsen, R. K. 2020. Types, sources, and claims of Covid-19 misinformation. Reuters Institute. Retrieved from https://reutersinstitute.politics.ox.ac.uk/types-sources-and-claims-Covid-19-misinformation.

- Carmichael J.T., Brulle R.J., Huxster J.K. The great divide: understanding the role of media and other drivers of the partisan divide in public concern over climate change in the USA, 2001–2014. Clim. Change. 2017;141(4):599–612. doi: 10.1007/s10584-017-1908-1.

- Carton, A.D. 2010. Environmental worldview and faith in science as moderators of the relationship between beliefs about and attitudes toward nuclear energy. Master Thesis, Georgia State University. https://scholarworks.gsu.edu/psych_theses/73.

- Chae J. Online cancer information seeking increases cancer worry. Comput. Hum. Behav. 2015;52:144–150. doi: 10.1016/j.chb.2015.05.019.

- Chen X., Sin S.-C., Theng Y.-L., Lee C.S. Why students share misinformation on social media: motivation, gender, and study-level differences. J. Acad. Librarianship. 2015;41(5):583–592. doi: 10.1016/j.acalib.2015.07.003.

- Chinn S., Lane D.S., Hart P.S. In consensus we trust? Persuasive effects of scientific consensus communication. Public Underst. Sci. 2018;27(7):807–823. doi: 10.1177/0963662518791094.

- Chou W.-Y., Oh A., Klein W.M.P. Addressing health-related misinformation on social media. JAMA. 2018;320(23):2417. doi: 10.1001/jama.2018.16865.

- Craft S., Ashley S., Maksl A. News media literacy and conspiracy theory endorsement. Commun. Public. 2017;2(4):388–401. doi: 10.1177/2057047317725539.

- Diehl T., Huber B., Gil de Zúñiga H., Liu J. Social media and beliefs about climate change: A cross-national analysis of news use, political ideology, and trust in science. Int. J. Public Opinion Res. 2019 doi: 10.1093/ijpor/edz040. Epub ahead of print 18 November 2019.

- Dohle, S., Wingen, T., Schreiber, M. 2020, May 29. Acceptance and adoption of protective measures during the COVID-19 pandemic: The role of trust in politics and trust in science. 10.31219/osf.io/w52nv.

- Emery L.F., Romer D., Sheerin K.M., Jamieson K.H., Peters E. Affective and cognitive mediators of the impact of cigarette warning labels. Nicotine Tob. Res. 2014;16(3):263–269. doi: 10.1093/ntr/ntt124.

- Evans J.H., Hargittai E. Who doesn’t trust Fauci? The public’s belief in the expertise and shared values of scientists in the COVID-19 pandemic. Socius. 2020;6 doi: 10.1177/2378023120947337.

- Festinger L. Stanford University Press; Stanford, CA: 1957. A theory of cognitive dissonance.

- Fieseler C., Fleck M. The pursuit of empowerment through social media: structural social capital dynamics in CSR-blogging. J. Bus. Ethics. 2013;118(4):759–775. doi: 10.1007/s10551-013-1959-9.

- Garrett R.K. Troubling consequences of online political rumoring. Human Commun. Res. 2011;37(2):255–274.

- Garrison, J. 2020, April 24. Nearly one-third of Americans believe a coronavirus vaccine exists and is being withheld, survey finds. USA TODAY. Retrieved from https://www.usatoday.com/story/news/politics/2020/04/24/coronavirus-one-third-us-believe-vaccine-exists-is-being-withheld/3004841001/.

- Gruzd A., Mai P. Going viral: how a single tweet spawned a COVID-19 conspiracy theory on Twitter. Big Data Soc. 2020 doi: 10.1177/2053951720938405.

- Habermas J. MIT; Cambridge, MA: 1989. The structural transformation of the public sphere.

- Hart P.S., Nisbet E.C. Boomerang effects in science communication: how motivated reasoning and identity cues amplify opinion polarization about climate mitigation policies. Commun. Res. 2012;39(6):701–723. doi: 10.1177/0093650211416646.

- Hayes A.F. Guilford publications; New York, NY: 2013. An introduction to mediation, moderation, and conditional process analysis: A regression-based approach.

- Jost J.T., van der Linden S., Panagopoulos C., Hardin C.D. Ideological asymmetries in conformity, desire for shared reality, and the spread of misinformation. Curr. Opinion Psychol. 2018;23:77–83. doi: 10.1016/j.copsyc.2018.01.003.

- Kenski K., Stroud N.J. Connections between internet use and political efficacy, knowledge, and participation. J. Broadcast. Electronic Media. 2006;50(2):173–192. doi: 10.1207/s15506878jobem5002_1.

- Kim B., Kim Y. College students’ social media use and communication network heterogeneity: implications for social capital and subjective well-being. Comput. Hum. Behav. 2017;73:620–628. doi: 10.1016/j.chb.2017.03.033.

- Kim S.C., Pei D., Kotcher J.E., Myers T.A. Predicting responses to climate change health impact messages from political ideology and health status: cognitive appraisals and emotional reactions as mediators. Environ. Behav. 2020 doi: 10.1177/0013916520942600.

- Kim Y., Hsu S.-H., de Zúñiga H.G. Influence of social media use on discussion network heterogeneity and civic engagement: The moderating role of personality traits. J. Commun. 2013;63(3):498–516. doi: 10.1111/jcom.12034.

- Koo T.K., Li M.Y. A Guideline of selecting and reporting intraclass correlation coefficients for reliability research. J. Chiropractic Med. 2016;15(2):155–163. doi: 10.1016/j.jcm.2016.02.012.

- Kunda Z. The case for motivated reasoning. Psychol. Bull. 1990;108(3):480. doi: 10.1037/0033-2909.108.3.480.

- Ma H., Miller C. Trapped in a double bind: Chinese overseas student anxiety during the COVID-19 pandemic. Health Commun. 2020;1–8 doi: 10.1080/10410236.2020.1775439.

- McCright A.M., Dunlap R.E. The politicization of climate change and polarization in the American public's views of global warming, 2001–2010. Sociol. Q. 2011;52(2):155–194. doi: 10.1111/j.1533-8525.2011.01198.x.

- Miller J.M. Do COVID-19 conspiracy theory beliefs form a monological belief system? Can. J. Pol. Sci. 2020;53(2):319–326. doi: 10.1017/S0008423920000517.

- Miller J.M., Saunders K.L., Farhart C.E. Conspiracy endorsement as motivated reasoning: The moderating roles of political knowledge and trust. Am. J. Polit. Sci. 2016;60(4):824–844. doi: 10.1111/ajps.12234.

- Mol J.M., Botzen W.J.W., Blasch J.E., Moel H. Insights into flood risk misperceptions of homeowners in the Dutch River Delta. Risk Anal. 2020;40(7):1450–1468. doi: 10.1111/risa.13479.

- Morin-Major J.K., Marin M.-F., Durand N., Wan N., Juster R.-P., Lupien S.J. Facebook behaviors associated with diurnal cortisol in adolescents: Is befriending stressful? Psychoneuroendocrinology. 2016;63:238–246. doi: 10.1016/j.psyneuen.2015.10.005.

- Mutz D.C. Cross-cutting social networks: Testing democratic theory in practice. Am. Polit. Sci. Rev. 2002;96(1):111–126. doi: 10.1017/S0003055402004264.

- Nabi R.L., Gustafson A., Jensen R. Framing climate change: Exploring the role of emotion in generating advocacy behavior. Sci. Commun. 2018;40(4):442–468. doi: 10.1177/1075547018776019.

- Nisbet M.C. The competition for worldviews: Values, information, and public support for stem cell research. Int. J. Public Opin. Res. 2005;17(1):90–112. doi: 10.1093/ijpor/edh058.

- Nyhan B., Reifler J. When corrections fail: The persistence of political misperceptions. Polit. Behav. 2010;32(2):303–330. doi: 10.1007/s11109-010-9112-2.

- Peter C., Koch T. Countering misinformation: Strategies, challenges, and uncertainties. SCM Stud. Commun. Media. 2019;8(4):431–445.

- Pew Research Center. 2015, October 22. Perception of Conflict Between Science and Religion. Retrieved from https://www.pewresearch.org/science/2015/10/22/perception-of-conflict-between-science-and-religion/.

- Pew Research Center. 2017, October 19. The Future of Truth and Misinformation Online. Retrieved from https://www.pewresearch.org/internet/2017/10/19/the-future-of-truth-and-misinformation-online/.

- Pew Research Center. 2019a. Share of U.S. adults using social media, including Facebook, is mostly unchanged since 2018. Retrieved from https://www.pewresearch.org/fact-tank/2019/04/10/share-of-u-s-adults-using-social-media-including-facebook-is-mostly-unchanged-since-2018/.

- Pew Research Center. 2019b. Social Media Fact Sheet. Retrieved from https://www.pewresearch.org/internet/fact-sheet/social-media/.

- Pew Research Center. 2020, April 8. Nearly Three-in-Ten Americans Believe COVID-19 was Made in a Lab. Retrieved from https://www.pewresearch.org/fact-tank/2020/04/08/nearly-three-in-ten-americans-believe-covid-19-was-made-in-a-lab/.

- Ravindran T., Yeow Kuan A.C., Hoe Lian D.G. Antecedents and effects of social network fatigue. J. Assoc. Inf. Sci. Technol. 2014;65(11):2306–2320. doi: 10.1002/asi.23122.

- Sibley C.G., Greaves L.M., Satherley N., Wilson M.S., Overall N.C., Lee C.H., et al. Effects of the COVID-19 pandemic and nationwide lockdown on trust, attitudes toward government, and well-being. Am. Psychol. 2020;75(5):618–630. doi: 10.1037/amp0000662.

- Scheufele D.A., Hardy B.W., Brossard D., Waismel-Manor I.S., Nisbet E. Democracy based on difference: Examining the links between structural heterogeneity, heterogeneity of discussion networks, and democratic citizenship. J. Commun. 2006;56(4):728–753.

- Schober P., Boer C., Schwarte L.A. Correlation coefficients: Appropriate use and interpretation. Anesth. Analg. 2018;126(5):1763–1768. doi: 10.1213/ANE.0000000000002864.

- Skurka C., Niederdeppe J., Romero-Canyas R., Acup D. Pathways of influence in emotional appeals: Benefits and tradeoffs of using fear or humor to promote climate change-related intentions and risk perceptions. J. Commun. 2018;68(1):169–193.

- Southwell B.G., Thorson E.A., Sheble L. In: Misinformation and Mass Audiences. Southwell B.G., Thorson E.A., Sheble L., editors. University of Texas Press; Austin: 2018. Introduction: Misinformation among mass audiences as a focus for inquiry; pp. 1–11.

- Talwar S., Dhir A., Kaur P., Zafar N., Alrasheedy M. Why do people share fake news? Associations between the dark side of social media use and fake news sharing behavior. J. Retail. Consumer Services. 2019;51:72–82. doi: 10.1016/j.jretconser.2019.05.026.

- Törnberg P. Echo chambers and viral misinformation: Modeling fake news as complex contagion. PLoS One. 2018;13(9):e0203958. doi: 10.1371/journal.pone.0203958.

- Uscinski J.E., Parent J.M. Oxford University Press; Oxford, UK: 2014. American Conspiracy Theories.

- Valenzuela S., Arriagada A., Scherman A. Facebook, Twitter, and youth engagement: A quasi-experimental study of social media use and protest behavior using propensity score matching. Int. J. Commun. 2014;8:25.

- Valenzuela S., Halpern D., Katz J.E., Miranda J.P. The paradox of participation versus misinformation: social media, political engagement, and the spread of misinformation. Digital J. 2019;7(6):802–823. doi: 10.1080/21670811.2019.1623701.

- Vannucci A., Flannery K.M., Ohannessian C.M. Social media use and anxiety in emerging adults. J. Affect. Disord. 2017;207:163–166. doi: 10.1016/j.jad.2016.08.040.

- Velasquez A., Quenette A.M. Facilitating social media and offline political engagement during electoral cycles: Using social cognitive theory to explain political action among Hispanics and Latinos. Mass Commun. Soc. 2018;21(6):763–784. doi: 10.1080/15205436.2018.1484489.

- Vraga E.K., Tully M., Bode L. Empowering Users to Respond to Misinformation about Covid-19. Media Commun. (Lisboa), 2020;8(2):475–479.

- Williams A.E. Trust or bust?: Questioning the relationship between media trust and news attention. J. Broadcast. Electronic Media. 2012;56(1):116–131. doi: 10.1080/08838151.2011.651186.

- World Health Organization. 2020a. Infodemic management: Infodemiology. World Health Organization. Retrieved from https://www.who.int/teams/risk-communication/infodemic-management.

- World Health Organization. 2020b. WHO Coronavirus Disease (COVID-19) Dashboard. Retrieved from https://covid19.who.int.

- Zollo F., Quattrociocchi W. In: Complex Spreading Phenomena in Social Systems. Lehmann S., Ahn Y.Y., editors. Springer; 2018. Misinformation spreading on Facebook; pp. 177–196.