Abstract

When acquiring information about choice alternatives, decision makers may have varying levels of control over which and how much information they sample before making a choice. How does control over information acquisition affect the quality of sample-based decisions? Here, combining variants of a numerical sampling task with neural recordings, we show that control over when to stop sampling can enhance (i) behavioral choice accuracy, (ii) the build-up of parietal decision signals, and (iii) the encoding of numerical sample information in multivariate electroencephalogram patterns. None of these effects were observed when participants could only control which alternatives to sample, but not when to stop sampling. Furthermore, levels of control had no effect on early sensory signals or on the extent to which sample information leaked from memory. The results indicate that freedom to stop sampling can amplify decisional evidence processing from the outset of information acquisition and lead to more accurate choices.

Keywords: active sampling, decision-making, electroencephalography, information search, number processing

Introduction

Humans routinely acquire information about choice alternatives before deciding between them. In many situations, decision makers can control which and how much information they sample. For example, when deciding which of 2 products to buy, a customer may deliberately study reviews and testimonials before making a final choice. In other situations, the availability and amount of relevant information are determined by external factors. For instance, when selecting job applicants in an organization that uses standardized interviews, an employer must decide based on the applicants’ answers to the same set of predefined questions. More generally, decision scenarios can differ in the extent to which an agent has control over sampling, in terms of which and how much information is sampled before a choice is made.

One experimental setup suitable for studying how control over sampling may affect decision-making is a numerical sampling paradigm (Hertwig et al. 2004; Hertwig and Erev 2009) in which participants view sequential samples of possible choice outcomes before choosing one of the options. The paradigm has been used extensively in behavioral studies of risky choice to examine how decision makers choose between options about which they learned from experience (i.e. through sampling the payoff distribution; “experience-based” decisions) as opposed to from formal description (where participants would be explicitly informed that there is, e.g. “25% chance to obtain €10, otherwise €0”; Hertwig 2015; Wulff et al. 2018). Across these studies, researchers have also varied the extent to which participants were able to control the sampling process themselves. While the standard paradigm allows participants to decide freely which alternatives to sample and how often (Hertwig and Erev 2009), some studies have prespecified the total number of samples to be taken (Hau et al. 2008; Ungemach et al. 2009; Fleischhut et al. 2014; Gonzalez and Mehlhorn 2016) or included matched (“yoked”) conditions in which participants had no control at all over the sampling sequence (Rakow et al. 2008). However, the latter variants of the sampling paradigm have been devised primarily to reduce confounds in comparison with decisions from description (Rakow and Newell 2010). Therefore, it remains unclear how control over sampling may alter experience-based decision-making itself.

Several lines of evidence suggest that a sense of control can be beneficial in cognitive tasks (Gureckis and Markant 2012; Murayama et al. 2016). Agency in information acquisition has, for instance, been found to improve subsequent memory performance (Voss et al. 2011), even when exposure to the information was held constant (Murty et al. 2015). Another line of work has shown better performance in tasks self-selected by the participant than when the same tasks were selected by an experimenter (Murayama et al. 2015). More generally, various studies have identified performance benefits associated with volitional control per se and indicated that such effects could be mediated by motivational factors (Patall et al. 2008; Patall 2012). However, the effects of control cannot easily be generalized across domains. In some contexts, control does not seem to impact task performance (Flowerday and Schraw 2003; Flowerday et al. 2004) or can be detrimental—for instance, when control is perceived as irrelevant or as too complex (Katz and Assor 2007; but see Murayama et al. 2015).

In the domain of decisions from experience using the sampling paradigm (Hertwig et al. 2004; Hertwig and Erev 2009), the role of agency in the sampling process remains poorly understood. In a recent meta-analysis, Wulff et al. (2018) suggested that control over sampling appears to alter the temporal weighting of numerical samples in subsequent choice. Specifically, when participants were given full control over sampling, their choices indicated stronger “recency” effects (i.e. a tendency to overweight the later samples in a sequence, which is routinely observed in sequential tasks with discrete samples, e.g. Anderson 1964; Weiss and Anderson 1969; Tsetsos et al. 2012; Cheadle et al. 2014; Wyart et al. 2015; Spitzer et al. 2017; Kang and Spitzer 2021). However, the meta-analysis by Wulff et al. (2018) was limited to comparisons across studies and did not address the general performance benefits (or drawbacks) that may be associated with control over sampling or the neurocognitive processes that might underlie them.

Here, we used specially designed variants of a numerical sampling paradigm combined with electroencephalogram (EEG) recordings to study how control over sampling affects experience-based decision-making. We systematically varied whether participants (i) were free to decide how much information to sample and from which option (full control), or (ii) could decide only from which option to sample but with a prespecified total number of samples (partial control), or (iii) had no control over sampling at all (no control). Importantly, our design controlled for differences in stimulus presentation by matching the sample sequences in the no-control conditions with those in the self-controlled tasks (full or partial control). We found that full, but not partial, control over sampling had a beneficial effect on choice accuracy and that this benefit was associated with a stronger encoding of numerical sample information from the outset of information acquisition.

Materials and methods

Participants

Forty healthy volunteers took part in the experiment (20 female, 20 male; mean age 26.3 ± 3.7 years; all right-handed). All participants provided written informed consent and received a flat fee of €10 plus €10 per hour as compensation, as well as a performance-dependent bonus (€9.35 ± €0.48 on average). The study was approved by the ethics committee of the Max Planck Institute for Human Development.

Experimental design

The tasks were variants of the classic sampling paradigm described in Hertwig and Erev (2009). On each trial, in all experimental conditions, participants were asked to decide between 2 choice options (left or right), each of which could return 1 of 2 different reward values (displayed as an Arabic digit between 1 and 9; Fig. 1a, green digit). Before making a final decision for one of the options, participants viewed samples from each option; that is, they could preview potential choice outcomes (Fig. 1a, white digits). Each option returned one outcome (e.g. “1”) with probability P and another outcome (e.g. “9”) with probability 1 − P. The outcome probability P of the options ranged from 0.1 to 0.9 (in steps of 0.1) and remained constant over the course of a trial. We constrained the outcome values and probabilities on each trial such that (i) none of the 4 possible outcome values (2 for each option) were identical and (ii) the difference in expected value between the 2 options was always 0.9 (based on piloting results). Under these constraints, the choice problems presented on each trial were selected pseudorandomly, with the additional restriction that each sample value (1, 2, …, 9) occurred with approximately equal probability across the experiment. Participants were instructed to learn from the observed samples and finally to select the option that they expected to return the higher reward (i.e. the larger numerical value). Participants were told that the reward returned by their final choice would influence their bonus payout at the end of the experiment.
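The constraints on the choice problems can be illustrated with a small rejection sampler. This is a minimal sketch, assuming that problems were generated by rejection sampling (the text does not say how they were generated); the names `Option`, `draw_problem`, and `draw_sample` are hypothetical, and the additional restriction that each sample value occurs with approximately equal frequency across the experiment is not implemented here.

```python
import random
from dataclasses import dataclass

PROBS = [round(0.1 * i, 1) for i in range(1, 10)]  # P in 0.1, 0.2, ..., 0.9
VALUES = range(1, 10)                              # outcome digits 1-9
EV_DIFF = 0.9                                      # fixed expected-value difference


@dataclass
class Option:
    values: tuple  # (outcome with probability p, outcome with probability 1 - p)
    p: float

    @property
    def ev(self) -> float:
        return self.p * self.values[0] + (1 - self.p) * self.values[1]


def draw_problem(rng: random.Random, max_tries: int = 100_000):
    """Hypothetical rejection sampler for one left/right choice problem."""
    for _ in range(max_tries):
        outcomes = rng.sample(VALUES, 4)  # 4 distinct outcome values
        left = Option((outcomes[0], outcomes[1]), rng.choice(PROBS))
        right = Option((outcomes[2], outcomes[3]), rng.choice(PROBS))
        # Tolerance guards against floating-point error in p * value sums.
        if abs(abs(left.ev - right.ev) - EV_DIFF) < 1e-9:
            return left, right
    raise RuntimeError("no valid problem found")


def draw_sample(rng: random.Random, option: Option) -> int:
    """One sample: values[0] with probability p, otherwise values[1]."""
    return option.values[0] if rng.random() < option.p else option.values[1]
```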

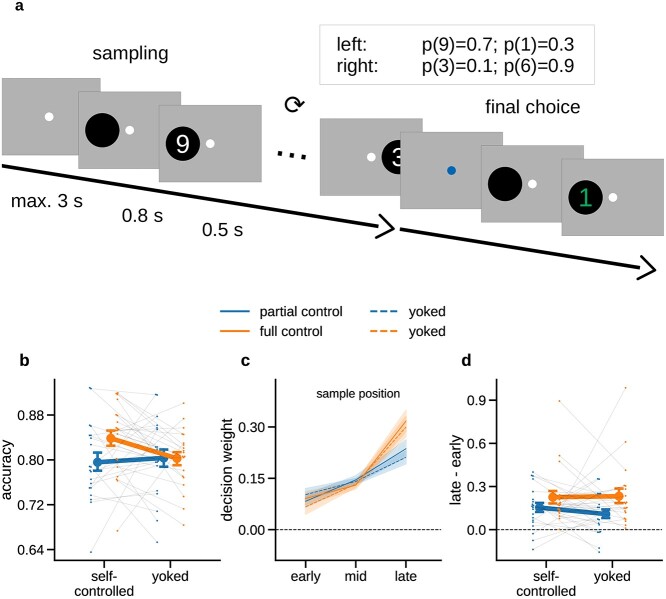

Fig. 1.

Experimental task and behavioral results. a) Schematic illustration of an example trial. Participants were asked to decide which of 2 choice options (left or right) would yield a larger numerical outcome. Before committing to a choice, participants could draw up to 19 samples (full control group) or were required to draw a fixed number of 12 samples (partial control group). Samples are shown as white digits; the final choice outcome is shown in green. The inset table shows the outcome values and probabilities for the 2 choice options in the example trial. In yoked baseline conditions, participants judged replays of previously recorded sampling streams. b) Mean accuracy (proportion of times the option with the higher mean of samples was chosen) in each condition. c) Decision weights (see Materials and Methods) of samples occurring early, mid, or late in the sampling sequence, for each sampling condition. d) Difference in decision weight between late and early samples. Higher values indicate that late samples had a stronger relative influence on choice than early samples (“recency” effect). Error indicators in all panels show SE.

Half of the participants were assigned to the “full control” condition, where they were free to sample from the left or right option as often as they wished before making a final choice. The only restriction on sampling in the full control condition was that a sample had to be taken within 3 s (otherwise the trial was restarted) and that the total number of samples could not exceed 19. The other half of participants were assigned to the “partial control” condition, which was identical to the full control condition except that a fixed number of 12 samples had to be drawn on every trial. The number of samples was based on pilot data where free-sampling participants took approximately 12 samples on average. In other words, participants in the partial control condition were also free to sample from the left or right option but had no control over when to stop (or continue) sampling: They were always prompted to make a final choice after the 12th sample.

In both sampling conditions, the beginning of a new trial was signaled by a green fixation stimulus (a combination of bull’s-eye and cross hair; Thaler et al. 2013) that turned white after 1 s. Upon pressing the left or right button on a USB response pad (using the left or right hand, respectively), participants were shown a black circular disk (diameter 5° visual angle) 4.5° to the left (choice option 1) or right (choice option 2) of fixation after 0.2–0.4 s (randomly varied). After another delay of 0.8 s, the numerical sample was presented in white (font Liberation Sans, height 4°) in the disk area for 0.5 s (see Fig. 1 for a schematic illustration). After this, the disk disappeared and participants were given 3 s to draw the next sample. The black disk served as a spatial cue to minimize differences in surprise about the sample location (left/right) in yoked conditions without sampling control (see below). The sampling procedure was repeated depending on condition (partial control: 12 samples; full control: up to 19 samples), and the resulting sample sequences (including their precise timing) were recorded (see yoked conditions below). In the full control condition, a third button on the response pad (above the “right” button) was available to stop the sampling sequence. In all conditions, after sampling was finished, the fixation stimulus turned blue for 1 s and participants were asked to make a final choice between the left and right options. The button and display procedure for the final choice was identical to that for drawing samples, except that the final choice outcome was displayed in green to indicate the obtained reward. The rewards (i.e. the payouts from the final choices) were converted to Euros with a factor of 0.005 and added as a bonus to the participants’ reimbursement after the experiment (see Participants above).
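The sampling rules of the two self-controlled conditions can be summarized as a simple loop. The sketch below is illustrative only: `run_sampling_phase` and the `get_action`/`draw` callbacks are hypothetical stand-ins for the PsychoPy experiment code, and the display timing (disk onset, 0.8 s delay, 0.5 s sample presentation) is omitted.

```python
from typing import Callable, List, Optional, Tuple

MAX_SAMPLES_FULL = 19    # upper limit under full control
N_SAMPLES_PARTIAL = 12   # fixed number of samples under partial control
RESPONSE_WINDOW_S = 3.0  # each sample had to be drawn within 3 s

Sample = Tuple[str, int, float]  # (side, value, response time in s)


def run_sampling_phase(
    control: str,
    get_action: Callable[[int], Tuple[str, float]],
    draw: Callable[[str], int],
) -> Optional[List[Sample]]:
    """Return the recorded sample sequence, or None if the trial must restart.

    get_action(n_drawn) is a hypothetical callback returning (action, rt),
    with action in {"left", "right", "stop"}.
    """
    if control not in ("full", "partial"):
        raise ValueError(f"unknown control condition: {control!r}")
    limit = MAX_SAMPLES_FULL if control == "full" else N_SAMPLES_PARTIAL
    sequence: List[Sample] = []
    while len(sequence) < limit:
        action, rt = get_action(len(sequence))
        if rt > RESPONSE_WINDOW_S:
            return None  # no sample within 3 s: the trial is restarted
        if action == "stop":
            if control == "partial":
                raise ValueError("the stop button exists only under full control")
            break  # full control: participant ends sampling early
        sequence.append((action, draw(action), rt))
    return sequence  # the final left/right choice follows
```

Recording side, value, and response time for every sample is the kind of information a replay in the yoked condition would need.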

Within both groups (full and partial control), each participant additionally performed the task in a “yoked” condition, where they had no control over sampling. Here, participants made decisions based on replays of previously recorded sampling streams (without any control over which and how many samples were shown or their timing). Accordingly, we refer to the yoked conditions as the no-control baseline conditions. In each group, half of the participants first performed the self-controlled sampling task (full or partial) and subsequently performed the no-control task with a replay of their own sampling sequences. In informal debriefing after the experiment, none of these participants reported having noticed that they viewed exact replays of their own sampling sequences. The other half of the participants in each group performed the no-control task first (yoked to the sampling sequences of another participant in the same group) and the respective self-controlled task second. Control analyses showed no differences in choice accuracy between participants who performed the baseline task first (yoked to another participant’s sequences) or second (yoked to their own sequences) (all P > 0.05). Furthermore, in the subset of participants who were yoked to another participant, the difficulty of active versus yoked sampling sequences did not differ (all P > 0.05). Each participant performed 100 trials (5 blocks of 20 trials with short breaks between blocks) in each of the self-controlled and yoked task variants.

Participants in the full control group drew on average 8.6 samples (SD = 4.2, median = 8), compared with the 12 samples that had to be drawn in the partial control group. Because full and partial control trials cannot, in principle, be matched (e.g. with respect to the precise length and timing of the sampling sequences on individual trials), all our analyses focus on differences relative to the yoked baseline condition within each group. This analysis strategy rules out stimulus confounds that may arise, for instance, due to “amplification effects” under full control, where the decision to stop sampling may be more likely when the momentary difference between the accumulated option values happens to be large (Hertwig and Pleskac 2010).

The experiment was programmed in Python using the Psychopy package (Peirce et al. 2019) and run on a Windows 10 PC. The experiment code is available on Zenodo (https://doi.org/10.5281/zenodo.3354368). Behavioral responses were recorded using a USB response pad (The Black Box ToolKit Ltd, United Kingdom). Throughout the experiment, eye movements were recorded using a Tobii 4C Eye-Tracker (Tobii Technology, Sweden; sampling rate 90 Hz). To reduce eye movements, participants’ gaze position was analyzed online while the experiment was run in all sampling conditions. The program displayed a warning message and restarted the trial whenever the gaze left an elliptical area centered on the central fixation stimulus (width 5° visual angle, height 2.85° visual angle) more than 4 times during a trial. Saccades towards the outcome samples were robustly detected with these settings. On average, 3% of trials per participant were restarted due to a lack of fixation or failure to draw a sample within 3 s (see above). Offline analyses confirmed that participants generally held fixation in the remaining trials.
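The online fixation check can be expressed as an ellipse test on the gaze trace. This sketch assumes that the stated width and height are the full axes of the ellipse (semi-axes 2.5° and 1.425°), that gaze coordinates are given in degrees relative to the fixation stimulus, and that an "exit" is a transition from inside to outside the ellipse; these are our assumptions, not details taken from the experiment code.

```python
import numpy as np

SEMI_AXIS_X = 5.0 / 2   # deg visual angle (assumed full width 5 deg)
SEMI_AXIS_Y = 2.85 / 2  # deg visual angle (assumed full height 2.85 deg)
MAX_EXITS = 4           # restart when gaze leaves the area more than 4 times


def inside_ellipse(x, y, ax=SEMI_AXIS_X, ay=SEMI_AXIS_Y):
    """Vectorized test whether gaze samples lie inside the fixation ellipse."""
    return (np.asarray(x) / ax) ** 2 + (np.asarray(y) / ay) ** 2 <= 1.0


def count_exits(x, y):
    """Number of inside -> outside transitions in a gaze trace."""
    inside = inside_ellipse(x, y)
    return int(np.sum(inside[:-1] & ~inside[1:]))


def needs_restart(x, y, max_exits=MAX_EXITS):
    return count_exits(x, y) > max_exits
```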

Supplementary tasks

After the main experiment, participants performed an additional short task on the same choice problems, where the options were not explored through sampling but described formally on screen (e.g. “8 with 60% or 4 with 40%?”). Due to a coding error, much of the data (84%) from this task was incorrectly recorded and the results are thus not reported here. Participants further completed a brief numeracy questionnaire (Berlin Numeracy Test, BNT; Cokely et al. 2012). Exploratory analysis showed no significant correlations of the effects reported in our main analysis with BNT scores.

EEG recording

The experiment was performed in an electrically shielded and soundproof cabin. Scalp EEG was recorded with 64 active electrodes (actiCap, Brain Products GmbH Munich, Germany) positioned according to the international 10% system. Electrode FCz was used as the recording reference. We additionally recorded the horizontal and vertical electrooculogram (EOG) and the electrocardiogram (ECG) using passive electrode pairs with bipolar referencing. All electrodes were prepared to have an impedance of less than 10 kΩ. The data were recorded using a BrainAmp DC amplifier (Brain Products GmbH Munich, Germany) at a sampling rate of 1,000 Hz, with an RC high-pass filter (half-amplitude cutoff 0.016 Hz; roll-off 6 dB/octave) and an anti-aliasing low-pass filter (half-amplitude cutoff 450 Hz; roll-off 24 dB/octave). The dataset is organized in Brain Imaging Data Structure format (BIDS; Gorgolewski et al. 2016) according to the EEG extension (Pernet et al. 2019) and is available on GIN (https://doi.org/10.12751/g-node.dtyh14).

Behavioral data analysis

We quantified choice accuracy in each condition as the proportion of trials on which participants chose the option in which the observed samples were on average larger. A choice was thus defined as correct when the experienced samples of the chosen option had the higher arithmetic mean. This choice corresponds to that of a noiseless ideal observer in the task, given the presented samples. Differences in accuracy between sampling conditions were analyzed using a mixed 2 × 2 analysis of variance (ANOVA, self-controlled/yoked; full/partial), followed up with Bonferroni-corrected pairwise t-tests. All statistical tests reported (including in the EEG analyses, see below) are 2-tailed.
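The accuracy criterion (the chosen option has the higher mean of experienced samples) amounts to scoring each choice against an ideal observer. A minimal sketch, assuming trials are stored as lists of (side, value) pairs plus the final choice; the text does not specify how ties or trials with an unsampled option were handled, so excluding them here is an assumption. Means are compared by cross-multiplication so that ties are detected exactly.

```python
def ideal_choice(samples):
    """Option with the higher mean of experienced samples, or None if undefined."""
    left = [v for side, v in samples if side == "left"]
    right = [v for side, v in samples if side == "right"]
    if not left or not right:
        return None  # assumption: undefined if one option was never sampled
    # Compare sum(left)/len(left) with sum(right)/len(right) without floats.
    lhs, rhs = sum(left) * len(right), sum(right) * len(left)
    if lhs == rhs:
        return None  # assumption: ties are excluded from scoring
    return "left" if lhs > rhs else "right"


def accuracy(trials):
    """trials: iterable of (samples, choice); proportion matching the ideal observer."""
    scored = [(ideal_choice(s), c) for s, c in trials]
    scored = [(ideal, c) for ideal, c in scored if ideal is not None]
    return sum(ideal == c for ideal, c in scored) / len(scored)
```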

Based on previous work, we expected participants to show a recency effect, that is, a relative overweighting of later samples. To quantify recency effects in the behavioral data, we used a reverse correlation approach (Neri et al. 1999; Spitzer et al. 2016) based on logistic regression. We first divided the samples in a trial into early, mid, and late samples. The first and last 2 samples in a trial were defined as early and late samples, respectively, and the remaining samples as “mid” samples. Trials with fewer than 5 samples overall were discarded in this analysis (between 1% and 41.5% of trials per participant, mean = 13.3%). For each participant, task condition, and time window, we regressed the participant’s final choices (left: 0, right: 1) onto the numerical sample values (numbers 1, 2, …, 9 rescaled to −4, −3, …, 4), where the values for the left option were sign-flipped to reflect their opposite impact on the probability of choosing the right option (Spitzer et al. 2017). The regression coefficients resulting from this analysis provide a measure of “decision weight,” that is, of the influence that number samples (early, mid, or late) had on choice. We quantified recency as the difference in weight between late and early samples (Fig. 1d). Differences in recency between conditions were assessed with a 2 × 2 ANOVA specified analogously as above.
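A minimal sketch of the reverse-correlation analysis, written with NumPy only. It assumes that each time window is summarized by the mean of its signed, rescaled sample values and entered as the single predictor (plus intercept) of a logistic regression; the text does not specify how multiple samples within a window enter the model, so this is one possible specification rather than the authors' implementation. The Newton-Raphson (IRLS) fit stands in for any standard logistic regression routine, and all function names are hypothetical.

```python
import numpy as np

WINDOWS = ("early", "mid", "late")


def signed_values(sides, values):
    """Rescale 1..9 to -4..4 and flip the sign of left-option samples."""
    v = np.asarray(values, dtype=float) - 5.0
    return np.where(np.asarray(sides) == "left", -v, v)


def split_windows(x):
    """First 2 samples = early, last 2 = late, the rest = mid (needs >= 5 samples)."""
    return {"early": x[:2], "mid": x[2:-2], "late": x[-2:]}


def fit_logistic(x, y, n_iter=100, tol=1e-10):
    """Logistic regression with intercept via Newton-Raphson; returns (b0, b1)."""
    X = np.column_stack([np.ones_like(x), x])
    beta = np.zeros(2)
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-X @ beta))
        hessian = X.T @ (X * (p * (1 - p))[:, None])
        step = np.linalg.solve(hessian, X.T @ (y - p))
        beta += step
        if np.max(np.abs(step)) < tol:
            break
    return beta


def decision_weights(trials):
    """trials: list of (sides, values, choice), choice 0 = left, 1 = right."""
    kept = [t for t in trials if len(t[1]) >= 5]  # discard trials with < 5 samples
    y = np.array([t[2] for t in kept], dtype=float)
    weights = {}
    for w in WINDOWS:
        # Assumption: one predictor per window, the mean signed value in it.
        x = np.array([split_windows(signed_values(s, v))[w].mean() for s, v, _ in kept])
        weights[w] = fit_logistic(x, y)[1]
    return weights
```

With this sketch, the recency measure of Fig. 1d would be `weights["late"] - weights["early"]`.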

EEG preprocessing

The EEG recordings were visually inspected for noisy segments and bad channels. Ocular and cardiac artifacts were corrected using independent component analysis (ICA). To this end, we high-pass filtered a copy of the raw data at 1 Hz and downsampled it to 250 Hz. We then ran an extended infomax ICA on all EEG channels and time points that were not marked as bad in the prior inspection. Using the EOG and ECG recordings, we identified stereotypical eyeblink, eye movement, and heartbeat artifact components through correlation with the independent component time courses. We visually inspected and rejected the artifact components before applying the ICA solution to the original raw data (Winkler et al. 2015). We then filtered the ICA-cleaned data between 0.1 and 40 Hz, interpolated bad channels, and re-referenced each channel to the average of all channels. Next, the data were epoched from −0.2 to 0.8 s relative to each individual number sample onset. Remaining bad epochs were rejected using a thresholding approach from the FASTER pipeline (Step 2; Nolan et al. 2010). On average, n = 1,925 clean epochs (93.85%) per participant were retained for analysis. The epochs were downsampled to 250 Hz and baseline corrected relative to the period from −0.2 to 0 s before stimulus onset. All EEG analyses were performed in Python using MNE-Python (Gramfort et al. 2013), MNE-BIDS (Appelhoff et al. 2019), and custom code. All analysis code is available on Zenodo (https://doi.org/10.5281/zenodo.5929222).
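The epoch-rejection step can be sketched as z-scoring a few epoch-level summary statistics and discarding outliers. This is a simplified stand-in written in the spirit of FASTER step 2, not a reproduction of it: the three features (mean peak-to-peak amplitude, mean variance, mean deviation from each channel's average) and the |z| > 3 threshold are assumptions, and the actual analysis used MNE-Python and the released analysis code.

```python
import numpy as np


def epoch_features(epochs):
    """epochs: (n_epochs, n_channels, n_times). Returns (n_epochs, 3) features."""
    amp_range = np.ptp(epochs, axis=2).mean(axis=1)  # mean peak-to-peak over channels
    variance = epochs.var(axis=2).mean(axis=1)       # mean temporal variance over channels
    chan_mean = epochs.mean(axis=2)                  # (n_epochs, n_channels)
    # Mean deviation of each epoch's channel means from the across-epoch channel average.
    deviation = (chan_mean - chan_mean.mean(axis=0)).mean(axis=1)
    return np.column_stack([amp_range, variance, deviation])


def reject_epochs(epochs, z_thresh=3.0):
    """Drop epochs with |z| > z_thresh on any feature; returns (clean, bad_mask)."""
    feats = epoch_features(epochs)
    z = (feats - feats.mean(axis=0)) / feats.std(axis=0)
    bad = np.any(np.abs(z) > z_thresh, axis=1)
    return epochs[~bad], bad
```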

Event-related potential analysis

EEG analyses are reported for the epochs around the onset of the individual number samples. We first examined lateralized visual event-related potential (ERP) components to test whether early visual processing differed between the sampling conditions. To this end, we subtracted the ERP for stimuli presented on the right from the ERP for stimuli presented on the left and then subtracted the mean signal of right-hemispheric (O2, PO4, PO8, PO10) occipitoparietal channels of interest (based on previous literature; Eimer 1998) from the corresponding left-hemispheric (O1, PO3, PO7, PO9) channels. Mean amplitudes of the lateralized evoked potential were extracted from prototypical time windows (P1 ERP component: 80–130 ms, N1 ERP component: 140–200 ms) for each sampling condition and analyzed in a mixed 2 × 2 ANOVA (self-controlled/yoked; full/partial).
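The double subtraction used for the lateralized P1/N1 can be written out explicitly. The sketch assumes ERPs stored as (channel × time) NumPy arrays with a matching list of channel names and a time vector in seconds; the function name and the inclusive window edges are assumptions.

```python
import numpy as np

LEFT_HEMI = ["O1", "PO3", "PO7", "PO9"]
RIGHT_HEMI = ["O2", "PO4", "PO8", "PO10"]
WINDOWS_S = {"P1": (0.080, 0.130), "N1": (0.140, 0.200)}


def lateralized_amplitudes(erp_stim_left, erp_stim_right, ch_names, times):
    """Mean lateralized amplitude per component window.

    Step 1: ERP to left-presented minus ERP to right-presented stimuli.
    Step 2: mean over left-hemisphere channels minus mean over right-hemisphere channels.
    """
    diff = erp_stim_left - erp_stim_right
    idx = {ch: i for i, ch in enumerate(ch_names)}
    left = diff[[idx[c] for c in LEFT_HEMI]].mean(axis=0)
    right = diff[[idx[c] for c in RIGHT_HEMI]].mean(axis=0)
    lateralized = left - right
    out = {}
    for name, (t0, t1) in WINDOWS_S.items():
        mask = (times >= t0) & (times <= t1)  # inclusive window edges (assumption)
        out[name] = float(lateralized[mask].mean())
    return out
```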

We further examined centro-parietal evoked responses (CPP/P3, averaged over the early, mid, and late samples in each trial) as a potential correlate of decisional evidence accumulation (O’Connell et al. 2012; Twomey et al. 2015; Pisauro et al. 2017). To this end, we averaged the signal over centro-parietal channels (Cz, C1, C2, CPz, CP1, CP2, CP3, CP4, Pz, P1, P2) and focused on a time window from 300 to 600 ms, based on previous analyses of CPP/P3 responses during visual stimulus sequences (Polich 2007; Wyart et al. 2015; Spitzer et al. 2017).

Representational similarity analysis

To examine the encoding of numerical sample value in multivariate ERP patterns, we used an approach based on representational similarity analysis (RSA; Kriegeskorte and Kievit 2013). For RSA, the ERPs were additionally smoothed (Grootswagers et al. 2016) with a Gaussian kernel (35 ms half duration at half maximum). We used a conventional ERP-RSA approach (e.g. Spitzer et al. 2017; Luyckx et al. 2019), where the representational geometry of a stimulus space (here, sample values 1–9) is characterized by the multivariate (dis-)similarity between the ERP topographies (comprising all 64 channels) associated with each sample value. Representational dissimilarity was computed at each time point of the ERP, between each pair of stimuli (using Euclidean distance as dissimilarity measure), yielding a 9 × 9 representational dissimilarity matrix (RDM; see Fig. 3a, lower) at each time point. We refer to the RDMs computed from the ERP data as “ERP-RDMs.”
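The smoothing and ERP-RDM steps can be sketched in NumPy. We read "35 ms half duration at half maximum" as a half width at half maximum (HWHM), which gives a Gaussian standard deviation of σ = HWHM / √(2 ln 2) ≈ 29.7 ms, or about 7.4 samples at 250 Hz; this interpretation, the zero-padded edges of `np.convolve`, and the array layout are assumptions.

```python
import numpy as np

SFREQ = 250.0   # Hz, after downsampling
HWHM_S = 0.035  # 35 ms half width at half maximum (assumed reading)


def gaussian_smooth(erps, hwhm_s=HWHM_S, sfreq=SFREQ):
    """Smooth along the last (time) axis with a normalized Gaussian kernel."""
    sigma = hwhm_s / np.sqrt(2 * np.log(2)) * sfreq  # standard deviation in samples
    half = int(np.ceil(4 * sigma))
    t = np.arange(-half, half + 1)
    kernel = np.exp(-0.5 * (t / sigma) ** 2)
    kernel /= kernel.sum()
    return np.apply_along_axis(lambda x: np.convolve(x, kernel, mode="same"), -1, erps)


def erp_rdm(erps):
    """erps: (9, n_channels, n_times), one ERP per sample value 1..9.

    Returns (n_times, 9, 9) Euclidean distances between channel topographies.
    """
    topo = np.transpose(erps, (2, 0, 1))              # (n_times, 9, n_channels)
    diff = topo[:, :, None, :] - topo[:, None, :, :]  # all pairwise differences
    return np.sqrt((diff ** 2).sum(axis=-1))
```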

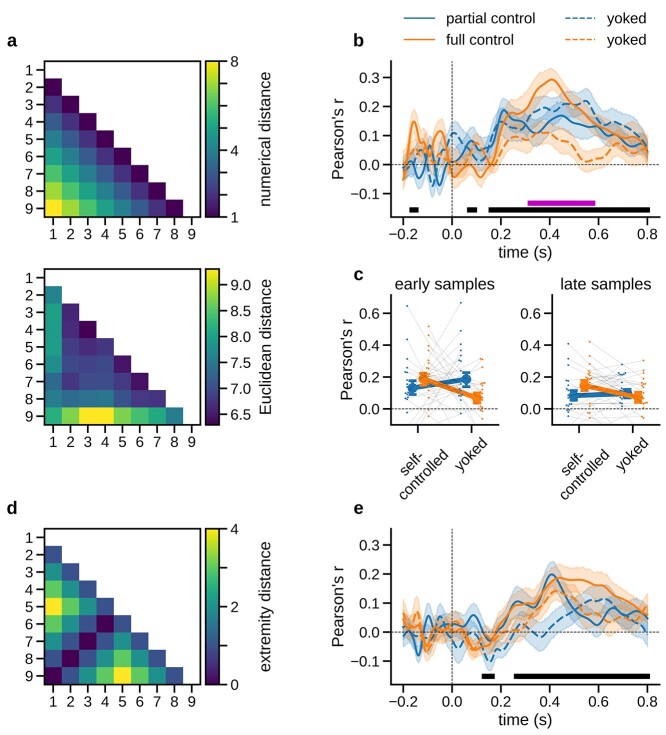

Fig. 3.

RSA results. a) Upper: Model RDM reflecting the pairwise numerical distance between sample values. Lower: Grand mean ERP-RDM averaged across participants and sampling conditions in a representative time window between 300 and 600 ms after sample onset. b) Time course of numerical distance effects in multivariate ERP patterns, plotted separately for each sampling condition. Black bar indicates time windows of significant numerical distance encoding (collapsed across sampling conditions). Purple bar indicates the time window of significant differences between sampling conditions (interaction effect, see Results). c) Mean numerical distance effects by condition. Left: First half of samples in each choice trial. Right: Second half. d) Model RDM reflecting the sample values’ extremity in terms of their absolute distance from the midpoint of the sample range (i.e. 5). e) Time course of extremity encoding in multivariate ERPs, plotted separately for each sampling condition. All error bars and shadings show SE.

To the extent that multivariate ERP patterns encode numerical sample information, they should show a “numerical distance” effect (e.g. Spitzer et al. 2017; Teichmann et al. 2018; Luyckx et al. 2019). That is, the representational dissimilarity between, for example, numbers “2” and “3” should be smaller than that between “2” and “4,” which, in turn, should be smaller than that between “1” and “4,” and so forth, for any pairing of numbers. To assess numerical distance effects in our ERP-RDMs, we created a theoretical model RDM (Fig. 3a upper) where each cell reflects the actual numerical difference between sample values (i.e. the numerical distance between “3” and “7” is 4, and that between “4” and “6” is 2). We then quantified the match between the model RDM and the ERP-RDM at each time point by computing the correlation (Pearson’s r) between the two, with stronger correlation indicating stronger encoding of numerical magnitude in multivariate ERP patterns (see also Spitzer et al. 2017; Teichmann et al. 2018). Correlations between model- and ERP-RDMs were restricted to the lower triangle (excluding the diagonal) to omit redundant matrix entries.
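The numerical distance model and its comparison with the ERP-RDMs reduce to a few array operations. A minimal sketch, assuming ERP-RDMs stored as an (n_times × 9 × 9) array; only the 36 cells below the diagonal enter the Pearson correlation, and the function names are hypothetical.

```python
import numpy as np

VALUES = np.arange(1, 10)


def distance_model_rdm(values=VALUES):
    """Model RDM: absolute numerical difference between sample values."""
    return np.abs(values[:, None] - values[None, :]).astype(float)


def lower_triangle(rdm):
    """Entries below the diagonal (diagonal excluded), over the last 2 axes."""
    i, j = np.tril_indices(rdm.shape[-1], k=-1)
    return rdm[..., i, j]


def rsa_timecourse(erp_rdms, model_rdm):
    """Pearson r between model RDM and ERP-RDM at each time point."""
    m = lower_triangle(model_rdm)
    e = lower_triangle(erp_rdms)  # (n_times, 36)
    m = (m - m.mean()) / m.std()
    e = (e - e.mean(axis=1, keepdims=True)) / e.std(axis=1, keepdims=True)
    return (e * m).mean(axis=1)   # mean product of z-scores = Pearson r
```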

In addition to numerical distance, we examined the extent to which ERP patterns encoded the “extremity” of a sample value (i.e. its absolute difference from the midpoint of the sample range, 5; Fig. 3d). To avoid confounds by potential deviations from a uniform distribution of sample values across the experiment, we additionally orthogonalized each model RDM to an RDM reflecting the relative frequency of numerical sample occurrences (see also Spitzer et al. 2017). However, qualitatively similar results were obtained when this orthogonalization step was omitted.
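The extremity model and the orthogonalization step can be sketched as follows. The frequency RDM (absolute difference in the relative occurrence of two values) and orthogonalization by least-squares residualization of the lower-triangle vectors are assumptions about details the text leaves open; the function names are hypothetical.

```python
import numpy as np

VALUES = np.arange(1, 10)


def extremity_model_rdm(values=VALUES, midpoint=5):
    """Model RDM: difference in absolute distance from the midpoint (5)."""
    ext = np.abs(values - midpoint).astype(float)
    return np.abs(ext[:, None] - ext[None, :])


def frequency_rdm(sample_values, values=VALUES):
    """Hypothetical frequency RDM: absolute difference in relative occurrence."""
    counts = np.array([(np.asarray(sample_values) == v).sum() for v in values], float)
    freq = counts / counts.sum()
    return np.abs(freq[:, None] - freq[None, :])


def orthogonalize(model_vec, nuisance_vec):
    """Residual of model_vec after least-squares regression on [1, nuisance_vec]."""
    X = np.column_stack([np.ones_like(nuisance_vec), nuisance_vec])
    beta, *_ = np.linalg.lstsq(X, model_vec, rcond=None)
    return model_vec - X @ beta
```

The residual vector would then replace the model's lower triangle in the correlation with the ERP-RDM.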

For statistical analysis, we used t-tests against zero with cluster-based permutation testing (Maris and Oostenveld 2007) to control for multiple comparisons over time points (10,000 iterations, cluster-defining threshold P = 0.05). We then recomputed the ERP-RSA separately for each sampling condition to test for differences in number encoding. Differences between conditions were examined using mixed 2 × 2 ANOVAs (self-controlled/yoked; full/partial), again using cluster-based permutation testing to control for multiple comparisons over time points. Analogous RSA analyses were performed separately on the first and second half of samples from each trial (Fig. 3c).
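A compact NumPy sketch of a one-sample cluster-based permutation test over time points. It follows the general logic of Maris and Oostenveld (2007), sign-flipping participants' time courses and using a cluster-mass statistic, but the implementation details (sum of t values as cluster mass, two-tailed comparison of absolute masses, the +1 correction of the P value) are assumptions. The cluster-forming threshold is passed as a t value; for 40 participants, a two-tailed P = 0.05 corresponds to t ≈ 2.023 (df = 39).

```python
import numpy as np


def one_sample_t(x):
    """t statistic against zero at each time point; x: (n_subjects, n_times)."""
    return x.mean(axis=0) / (x.std(axis=0, ddof=1) / np.sqrt(x.shape[0]))


def find_clusters(t, thresh):
    """Contiguous runs of |t| > thresh with one sign; returns (start, stop, mass)."""
    sign = np.sign(t) * (np.abs(t) > thresh)
    clusters, start = [], None
    for k in range(len(t) + 1):
        s = sign[k] if k < len(t) else 0
        if start is not None and s != sign[start]:
            clusters.append((start, k, float(t[start:k].sum())))
            start = None
        if start is None and s != 0:
            start = k
    return clusters


def cluster_permutation_test(x, thresh, n_perm=10_000, seed=0):
    """Returns (start, stop, mass, p) for each observed cluster."""
    rng = np.random.default_rng(seed)
    observed = find_clusters(one_sample_t(x), thresh)
    max_mass = np.zeros(n_perm)
    for p in range(n_perm):
        flips = rng.choice([-1.0, 1.0], size=(x.shape[0], 1))  # flip whole time courses
        masses = [abs(c[2]) for c in find_clusters(one_sample_t(x * flips), thresh)]
        max_mass[p] = max(masses, default=0.0)
    return [(a, b, m, (np.sum(max_mass >= abs(m)) + 1) / (n_perm + 1))
            for a, b, m in observed]
```

MNE-Python also provides a ready-made implementation of this family of tests (`mne.stats.permutation_cluster_1samp_test`).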

Analysis of neurometric distortions

Our basic RSA of numerical distance and extremity assumes a linear representation of numerical magnitude, where the representational distance between, for example, “3” and “5” is the same as that between e.g. “7” and “9.” However, based on previous work (e.g. Nieder 2016; Spitzer et al. 2017; Luyckx et al. 2019), the neural representation of numbers might be nonlinearly distorted. That is, the neural number representation might be compressed (such that the representational distance between e.g. “8” and “9” is smaller than that between “5” and “6”) or anti-compressed (such that the distance between “8” and “9” is larger). To examine such potential distortions (see also Spitzer et al. 2017), we transformed the numerical sample values using a parameterized power function

$$\hat{X} = \operatorname{sign}(X + b)\,\lvert X + b \rvert^{k},$$

where $X$ are the numerical sample values (1–9 normalized to the range [−1, 1]), exponent $k$ determines the shape of the transformation ($k < 1$: compression; $k = 1$: linear; $k > 1$: anti-compression; see inset plot in Fig. 4b for illustration of the resulting distortions), and $b$ reflects an overall bias towards smaller ($b < 0$) or larger numbers ($b > 0$). We then created model RDMs (analogously as above) from the thus transformed values ($\hat{X}$), for different values of $k$ (varied between 0.5 and 10) and $b$ (varied between −0.75 and 0.75; where $k = 1$ and $b = 0$ corresponds to linear/unbiased transformation). For each parameter combination, we correlated the resulting model RDM with the ERP-RDM (analogously as above). In each participant, the parameter combination for which the model RDM correlated most strongly with the ERP-RDM was used as the estimate of the participant’s neurometric distortion. Statistical analysis of the neurometric parameters proceeded with conventional statistical tests on the group level.
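The neurometric grid search can be sketched in NumPy, assuming the power function sign(X + b)·|X + b|^k with X the sample values 1–9 normalized to [−1, 1]. This sketch uses only the numerical distance model (the analysis in Fig. 4 averages over distance and extremity models), and the grid resolution is an assumption since the text gives only the ranges of k and b; the function names are hypothetical.

```python
import numpy as np

VALUES = np.arange(1, 10)
X = (VALUES - 5) / 4.0  # 1..9 normalized to [-1, 1]


def neurometric_transform(x, k, b):
    """Power-function distortion: sign(x + b) * |x + b| ** k."""
    return np.sign(x + b) * np.abs(x + b) ** k


def pearson(u, v):
    u, v = u - u.mean(), v - v.mean()
    return float(u @ v / np.sqrt((u @ u) * (v @ v)))


def grid_search(erp_rdm, ks, bs):
    """Return (best k, best b, correlation map) for one 9 x 9 ERP-RDM."""
    i, j = np.tril_indices(len(X), k=-1)
    target = erp_rdm[i, j]
    corr = np.empty((len(ks), len(bs)))
    for a, k in enumerate(ks):
        for c, b in enumerate(bs):
            v = neurometric_transform(X, k, b)
            model = np.abs(v[:, None] - v[None, :])  # distance model on transformed values
            corr[a, c] = pearson(model[i, j], target)
    a, c = np.unravel_index(np.argmax(corr), corr.shape)
    return ks[a], bs[c], corr
```

For the distance model in this sketch, setting k = 1 reduces the transformation to a shift X + b, which leaves all pairwise distances unchanged, so b only matters when k ≠ 1.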

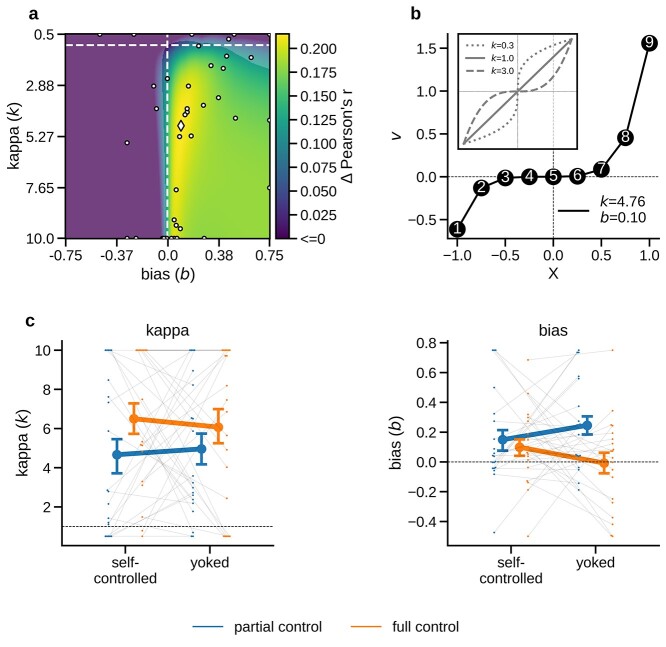

Fig. 4.

Neurometric distortions. a) Grand mean neurometric map, combined across all task conditions. Color scale indicates increase of encoding strength in multivariate ERPs (averaged over distance- and extremity models) as a function of nonlinear distortions of numerical value ($k < 1$: compression; $k > 1$: anti-compression; $b$: bias). Dashed lines indicate linear ($k = 1$) and unbiased ($b = 0$) models. Parts of the map that are not overlaid with an opaque mask contain values with a significant increase relative to unbiased linear encoding (P < 0.001, corrected using false discovery rate). White markers show maxima (diamond: mean; dots: individual participants). b) Neurometric function, parameterized according to the maximum mean correlation identified in a. Inset plots illustrate exemplary compressive ($k < 1$), linear ($k = 1$), and anti-compressive ($k > 1$) distortions. c) Neurometric parameter estimates in the individual sampling conditions; left: exponent ($k$); right: bias ($b$); see Methods and Results for details. Error bars show SE.

Results

Participants (n = 40) observed sequential samples (Arabic digits 1–9) of the potential rewards of choice options (left/right) before deciding on one of them (Fig. 1a). In different conditions, participants (i) could determine from which option(s) to sample and when to stop sampling (“full control,” 1–19 samples/trial, n = 20 participants) or (ii) could determine only from which option to sample for a fixed number of samples (“partial control,” 12 samples/trial, n = 20 participants). Each participant additionally performed the task in a “yoked” condition with matched sample sequences (see Materials and Methods) that they could not control. Our behavioral and EEG analyses focus on the effects of control (full or partial) relative to the respective matched (yoked) no-control conditions.

Behavior

Mean choice accuracy (i.e. the percentage of trials on which participants chose the option whose sampled values had the larger average; see Methods) was 83.8% under full control (SE = 1.4%; yoked baseline: 80.3%, SE = 1.2%) and 79.6% under partial control (SE = 1.6%; yoked baseline: 80.3%, SE = 1.6%). A mixed 2 × 2 ANOVA with the factors control over sampling (self-controlled or yoked; within participants) and control type (full or partial; between participants) showed no main effects [self-controlled/yoked: F(1,38) = 2.143, P = 0.151, ηp² = 0.053; full/partial: F(1,38) = 1.321, P = 0.258, ηp² = 0.034], but a significant interaction of the 2 factors [F(1,38) = 5.108, P = 0.03, ηp² = 0.118]. Post hoc tests showed significantly higher accuracy under full control than in the yoked baseline [t(19) = 2.644, P = 0.032, d = 0.605, Bonferroni corrected], but no such effect under partial control [t(19) = −0.561, P > 0.9, d = −0.108]. Thus, relative to matched baseline conditions, we found an accuracy benefit of control over sampling under full control but not under partial control.
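When both factors of a mixed design have exactly two levels, the interaction F(1, 38) equals the squared independent-samples t statistic. That t statistic is computed on each participant's self-controlled minus yoked difference and compared between the full and partial control groups. The sketch below shows this equivalence, together with Bonferroni-corrected post hoc paired t-tests. It uses synthetic numbers, not the study's data, and is not the authors' analysis code.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(0)
n = 20  # participants per group
# Synthetic proportions correct; illustrative only, not the study's data.
full_self, full_yoked = rng.normal(0.84, 0.06, n), rng.normal(0.80, 0.05, n)
part_self, part_yoked = rng.normal(0.80, 0.07, n), rng.normal(0.80, 0.07, n)

# Interaction: compare the within-participant effect (self - yoked) between groups
diff_full = full_self - full_yoked
diff_part = part_self - part_yoked
t_int, p_int = stats.ttest_ind(diff_full, diff_part)  # pooled variance, df = 2n - 2 = 38
F_int = t_int ** 2                                    # equals the interaction F(1, 38)

# Post hoc paired t-tests, Bonferroni-corrected for 2 comparisons
t_full, p_full = stats.ttest_rel(full_self, full_yoked)
t_part, p_part = stats.ttest_rel(part_self, part_yoked)
p_full_corr, p_part_corr = min(1.0, 2 * p_full), min(1.0, 2 * p_part)
```

The same logic explains why each post hoc paired t-test is equivalent to a one-sample t-test of the difference scores against zero.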

We next examined whether and how the temporal weighting of sample information differed between conditions. To this end, we examined the samples’ decision weights (see Materials and Methods, Behavioral data analysis) separately for early, mid-, and late portions of the sampling sequence (Fig. 1c). As expected based on previous work (Anderson 1964; Weiss and Anderson 1969; Tsetsos et al. 2012; Cheadle et al. 2014; Wyart et al. 2015; Spitzer et al. 2017; Kang and Spitzer 2021), we found a pronounced recency pattern, with decision weight generally increasing over the course of the trial. In other words, later samples had a higher impact on the final choice than earlier samples. For comparison between sampling conditions, we quantified recency as the difference in decision weight between late and early samples (Fig. 1d). A mixed 2 × 2 ANOVA, specified analogously to that for accuracy above, showed no significant main effects [self-controlled/yoked: F(1,38) = 0.8, P = 0.377, ηp² = 0.021; full/partial: F(1,38) = 3.363, P = 0.075, ηp² = 0.081] and no interaction between the 2 factors [F(1,38) = 1.483, P = 0.231, ηp² = 0.038]. Thus, we found no impact of control over sampling on recency. To summarize the behavioral results, full control over sampling was characterized by increased choice accuracy but did not differ in the extent to which sample information “leaked” (Usher and McClelland 2001), or was forgotten, over the course of a trial.
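One common way to obtain decision weights for the early, mid, and late portions of a sequence is a logistic regression of choice on the summed signed evidence in each portion. The sketch below assumes that approach; the study's own procedure is given in Materials and Methods and may differ. Its further assumptions are:

- sample evidence is coded hypothetically as (value − 5), signed by option;
- the data come from a simulated fixed-length, leaky observer;
- older samples decay by a hypothetical retention factor of 0.8 per step.

Recency is then the late-minus-early weight difference, as in the text.

```python
import numpy as np

def fit_logistic(X, y, n_iter=25):
    """Logistic regression via Newton-Raphson (IRLS); returns [intercept, *weights]."""
    X1 = np.column_stack([np.ones(len(X)), X])
    w = np.zeros(X1.shape[1])
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-X1 @ w))
        grad = X1.T @ (y - p)
        hess = X1.T @ (X1 * (p * (1 - p))[:, None])
        w = w + np.linalg.solve(hess, grad)
    return w

# Simulated leaky observer (hypothetical parameters, not fitted to the study's data)
rng = np.random.default_rng(1)
n_trials, n_samples, retention = 2000, 12, 0.8
values = rng.integers(1, 10, size=(n_trials, n_samples))  # digits 1-9
side = rng.choice([-1, 1], size=(n_trials, n_samples))    # +1 = left, -1 = right (assumed coding)
evidence = (values - 5) * side                            # signed evidence for "left"
decay = retention ** np.arange(n_samples - 1, -1, -1)     # last sample keeps weight 1
dv = evidence @ decay + rng.normal(0.0, 2.0, n_trials)    # noisy decision variable
chose_left = (dv > 0).astype(float)

# Summed signed evidence in the early, mid, and late thirds of each sequence
bins = np.array_split(np.arange(n_samples), 3)
X = np.column_stack([evidence[:, idx].sum(axis=1) for idx in bins])
w = fit_logistic(X, chose_left)
recency = w[3] - w[1]  # late minus early weight (w[0] is the intercept)
```

Because the simulated observer discounts older samples, the fitted weights rise from early to late bins, which is the recency pattern described above.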

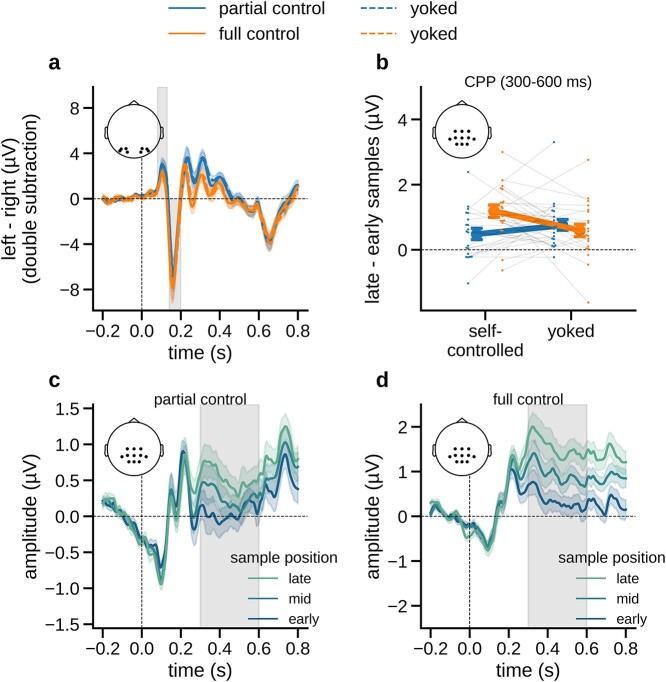

Visual evoked responses

Turning to the EEG data, we first examined visual evoked responses to test whether the sampling conditions differed in terms of early sensory processing of the sample stimuli (e.g. due to potential differences in stimulus-directed visual attention; Luck et al. 2000). Figure 2a shows the occipitoparietal ERP difference between stimuli occurring in the right and left visual fields, subtracted between contralateral channels (see Materials and Methods, ERP analysis). Statistical analysis showed no differences between sampling conditions in the time window of either the P1 (80–130 ms) or the N1 component (140–200 ms) of the visual ERP [all F(1,38) < 1.71, all P > 0.20, all ηp² < 0.044; mixed 2 × 2 ANOVAs specified as in the behavioral analysis above]. We thus found no evidence for differences in early visual processing between the sampling conditions.
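The lateralized visual ERP in Fig. 2a (left − right stimuli, right channels subtracted from left channels) amounts to a double subtraction, followed by averaging within the P1 and N1 windows. The sketch below shows that computation on toy data. The channel indices, array layout, and function names are hypothetical placeholders, not the study's electrode selection.

```python
import numpy as np

def lateralized_erp(erp_left_stim, erp_right_stim, left_chs, right_chs):
    """Double subtraction: (left - right stimuli), then left minus right channels.

    erp_*_stim: arrays of shape (n_channels, n_times); left_chs / right_chs are
    hypothetical indices standing in for occipitoparietal electrode groups.
    """
    stim_diff = erp_left_stim - erp_right_stim
    return stim_diff[left_chs].mean(axis=0) - stim_diff[right_chs].mean(axis=0)

def window_mean(wave, times, tmin, tmax):
    """Mean amplitude of a 1-D waveform within [tmin, tmax] seconds."""
    mask = (times >= tmin) & (times <= tmax)
    return wave[mask].mean()

WINDOWS = {"P1": (0.08, 0.13), "N1": (0.14, 0.20)}  # time windows from the text

# Toy data: each stimulus evokes a response only over the contralateral hemisphere
times = np.linspace(-0.1, 0.5, 301)
left_chs, right_chs = [0, 1], [2, 3]
erp_left_stim = np.zeros((4, times.size))
erp_right_stim = np.zeros((4, times.size))
erp_left_stim[right_chs] = 2.0   # left-field stimulus -> right-hemisphere channels
erp_right_stim[left_chs] = 2.0   # right-field stimulus -> left-hemisphere channels

lat = lateralized_erp(erp_left_stim, erp_right_stim, left_chs, right_chs)
amps = {name: window_mean(lat, times, *win) for name, win in WINDOWS.items()}
```

In the study, such window means would be obtained per participant and condition and then entered into the mixed ANOVAs.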

Fig. 2.

Univariate EEG results with ERPs time-locked to number sample onset. a) Early visual ERPs (left − right stimuli, right channels subtracted from left channels) in each sampling condition. Gray shadings indicate the time windows of the P1 and N1 components (80–130 ms and 140–200 ms, respectively). b) The difference in centro-parietal positivity (CPP) amplitudes between samples occurring late versus early in the trial (see panels c and d), plotted separately for each sampling condition (including yoked). c) The “ramping up” of CPP amplitudes (0.3–0.6 s) over early, mid, and late samples in the partial control condition. Gray shadings indicate the time window from which average amplitudes were extracted in panel b. d) Same as c, for the full control condition. Error indicators in all panels show SE.

Centro-parietal positivity/P3

We next examined centro-parietal positivity (CPP) responses over centro-parietal channels between 300 and 600 ms after stimulus onset. The amplitude of the CPP response to a sample generally increased over the course of the trial (Fig. 2b–d), in line with previous studies implicating the CPP in decision formation (O’Connell et al. 2012; Twomey et al. 2015). Figure 2c and d illustrate the monotonic ramping up of the CPP across samples occurring early, mid, and late in the trial (see Materials and Methods) under partial and full control. Descriptively, the build-up of the CPP appeared stronger under full control. For statistical analysis, we examined the increase in CPP amplitude from early to late samples in the individual sampling conditions (Fig. 2b). A significant increase in amplitude was evident in each condition (including yoked; Fig. 2b, all P < 0.02, t-tests against zero, uncorrected). A mixed 2 × 2 ANOVA comparing the amplitude difference between conditions showed no significant main effects [self-controlled/yoked: F(1,38) = 1.579, P = 0.217, ηp² = 0.04; full/partial: F(1,38) = 1.534, P = 0.223, ηp² = 0.039], but a significant interaction [F(1,38) = 11.408, P = 0.002, ηp² = 0.231]. Post hoc t-tests showed that the CPP increased more steeply in the full control condition than in the yoked baseline [t(19) = 2.772, P = 0.024, d = 0.687, paired t-test, corrected], whereas no such effect was evident under partial control [t(19) = −1.932, P = 0.137, d = −0.355]. Thus, the increased choice accuracy under full control was accompanied by a steeper increase of centro-parietal decision signals within trials (O’Connell et al. 2012; Twomey et al. 2015; Wyart et al. 2015; Spitzer et al. 2016). Importantly, these effects were observed relative to the matched (yoked) baselines, ruling out that they were attributable to any specific characteristics of the self-sampled stimulus sequences.
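The early/mid/late split and the late-minus-early CPP measure could be computed as sketched below. Materials and Methods specifies how samples in variable-length trials were assigned to bins. Here, splitting each trial into thirds by relative position is an assumption, as are the function names and the toy data; only the 0.3–0.6 s window is taken from the text.

```python
import numpy as np

def position_bins(seq_len, n_bins=3):
    """Assign samples 0..seq_len-1 to early (0), mid (1), late (2) by relative position."""
    return (np.arange(seq_len) * n_bins) // seq_len  # integer arithmetic avoids float edge cases

def cpp_buildup(cpp, seq_lens, times, tmin=0.3, tmax=0.6):
    """Late-minus-early mean CPP amplitude in [tmin, tmax] seconds.

    cpp: (n_samples_total, n_times) single-sample waveforms, already averaged over
         centro-parietal channels and ordered trial by trial.
    seq_lens: number of samples in each trial (must sum to len(cpp)).
    """
    bins = np.concatenate([position_bins(n) for n in seq_lens])
    mask = (times >= tmin) & (times <= tmax)
    amp = cpp[:, mask].mean(axis=1)
    return amp[bins == 2].mean() - amp[bins == 0].mean()

# Toy data: the amplitude equals the bin index, so late - early = 2
times = np.linspace(-0.1, 1.0, 111)
seq_lens = [12, 6, 9]
bins = np.concatenate([position_bins(n) for n in seq_lens])
cpp = np.repeat(bins[:, None].astype(float), times.size, axis=1)
buildup = cpp_buildup(cpp, seq_lens, times)
```

Per-participant build-up values could then be tested against zero, for example with `scipy.stats.ttest_1samp`, or compared between conditions.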

Representational similarity analysis

Our results so far show that decisions made with full control over sampling were more accurate and accompanied by a stronger build-up of parietal choice signals (Fig. 2c–d), whereas there were no differences in early visual processing (Fig. 2a) or in the extent to which sample information “leaked” over time (i.e. no difference in recency effects; Fig. 1b–c). One possibility is that a benefit of full control may have arisen at the stage of numerical processing, in encoding a sample’s abstract value (i.e. its numerical magnitude, which is to be integrated into the subjectively perceived value of the choice option). We used an RSA-based approach (see Materials and Methods) to examine the neural encoding of the samples’ numerical magnitude, building on previous findings of numerical distance effects in multivariate ERP patterns (Spitzer et al. 2017; Teichmann et al. 2018; Luyckx et al. 2019; Sheahan et al. 2021). Specifically, we correlated the multivariate similarity structure of samples (1–9) in our ERP data with theoretical models reflecting (i) numerical distance and (ii) extremity of the sample values (see Materials and Methods).
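A minimal sketch of the two model RDMs and the RSA comparison follows. Several choices here are assumptions; the actual choices are given in Materials and Methods:

- extremity is defined as the absolute distance from the midpoint 5;
- ERP dissimilarity is measured with Euclidean distance;
- model and data RDMs are compared with Pearson's r over off-diagonal cells.

Under these definitions, the distance and extremity models are exactly uncorrelated across the 36 off-diagonal cells, consistent with their described orthogonality.

```python
import numpy as np

values = np.arange(1, 10)          # sample values 1-9
centered = values - 5              # 5 is the midpoint of the range

# Model RDMs: larger entries = more dissimilar pairs of sample values
distance_rdm = np.abs(centered[:, None] - centered[None, :])
extremity_rdm = np.abs(np.abs(centered)[:, None] - np.abs(centered)[None, :])

def lower_tri(rdm):
    """Off-diagonal lower-triangle cells of a square RDM as a vector."""
    return rdm[np.tril_indices(rdm.shape[0], k=-1)]

def erp_rdm(patterns):
    """Euclidean distances between ERP patterns; patterns: (9, n_channels) at one time point."""
    diff = patterns[:, None, :] - patterns[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))

def rsa(model_rdm, data_rdm):
    """Pearson correlation between the off-diagonal cells of two RDMs."""
    return np.corrcoef(lower_tri(model_rdm), lower_tri(data_rdm))[0, 1]

# Toy ERP patterns that vary linearly with the sample value across 5 channels
patterns = centered[:, None] * np.ones((1, 5))
r_distance = rsa(distance_rdm, erp_rdm(patterns))   # perfect numerical distance structure
r_models = rsa(distance_rdm, extremity_rdm)         # correlation between the two models
```

In a time-resolved analysis, `rsa` would be applied at every time point and for every participant, yielding encoding time courses like those in Fig. 3.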

Numerical distance

We found robust encoding of numerical magnitude in terms of a numerical distance effect in multivariate ERP signals between approximately 160 and 800 ms after sample onset (Fig. 3b, Pcluster < 0.001, t-test against zero), which replicates and extends previous findings in tasks without sampling control (Spitzer et al. 2017; Teichmann et al. 2018; Luyckx et al. 2019; Sheahan et al. 2021). To test whether the strength of this effect differed between levels of sampling control, we examined its time course in the various conditions (full, partial, yoked baselines) using mixed 2 × 2 ANOVAs (specified analogously as above). The analysis showed no main effects (all Pcluster > 0.05) but a significant interaction cluster between 320 and 580 ms (Pcluster = 0.009). We next compared the average numerical distance effects in the time window of this cluster. We found the effect to be significantly larger (relative to yoked baseline) under full control [t(19) = 3.65, P = 0.003, d = 1.05, corrected] but not under partial control [t(19) = −1.065, P = 0.6, d = −0.340, corrected]. In other words, the encoding of numerical magnitude in sample-level neural signals was enhanced under full control, mirroring the pattern of findings for CPP build-up (Fig. 2b) and choice accuracy (Fig. 1b).
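Time-resolved effects such as the numerical distance cluster are commonly assessed with a cluster-based permutation test. The sketch below implements a generic one-sample sign-flip version that uses cluster mass (the sum of t-values) and considers positive clusters only. The cluster-forming threshold, number of permutations, and toy data are assumptions, and the study's exact settings may differ. MNE-Python (Gramfort et al. 2013) provides a tested implementation in `mne.stats.permutation_cluster_1samp_test`.

```python
import numpy as np
from scipy import stats

def contiguous_runs(mask):
    """(start, stop) index pairs of contiguous True runs in a 1-D boolean array."""
    padded = np.concatenate([[0], mask.astype(int), [0]])
    edges = np.flatnonzero(np.diff(padded))
    return list(zip(edges[::2], edges[1::2]))

def one_sample_t(data):
    """t-values against zero across subjects; data: (n_subjects, n_times)."""
    n = data.shape[0]
    return data.mean(axis=0) / (data.std(axis=0, ddof=1) / np.sqrt(n))

def cluster_perm_test(data, alpha=0.05, n_perm=1000, seed=0):
    """Sign-flip cluster test on positive clusters, using cluster mass (sum of t)."""
    rng = np.random.default_rng(seed)
    n = data.shape[0]
    t_crit = stats.t.ppf(1 - alpha / 2, df=n - 1)  # cluster-forming threshold (assumed)
    t_obs = one_sample_t(data)
    clusters = contiguous_runs(t_obs > t_crit)
    masses = [t_obs[a:b].sum() for a, b in clusters]
    null_max = np.zeros(n_perm)
    for i in range(n_perm):
        signs = rng.choice([-1.0, 1.0], size=(n, 1))  # flip each subject's sign
        t_perm = one_sample_t(data * signs)
        perm = [t_perm[a:b].sum() for a, b in contiguous_runs(t_perm > t_crit)]
        null_max[i] = max(perm, default=0.0)          # largest cluster mass per permutation
    p_values = [(np.sum(null_max >= m) + 1) / (n_perm + 1) for m in masses]
    return clusters, p_values

# Toy data: 20 subjects x 100 time points with a true effect at indices 30-59
rng = np.random.default_rng(42)
data = rng.normal(0.0, 1.0, size=(20, 100))
data[:, 30:60] += 1.0
clusters, p_values = cluster_perm_test(data)
```

Comparing each observed cluster with the permutation distribution of the maximum cluster mass controls the family-wise error rate across time points.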

We next asked whether the enhanced number encoding under full control was driven only by late samples occurring near the time of the decision to stop sampling. To this end, we repeated the RSA analysis separately for the first (Fig. 3c, left) and second (Fig. 3c, right) half of the samples in a trial. Importantly, a significant enhancement under full control relative to yoked baseline was already evident in the first half of samples [t(19) = 2.279, P = 0.034, d = 0.707], that is, long before participants stopped sampling. The effect in the second half of samples was similar [t(19) = 2.237, P = 0.037, d = 0.673; partial control: both P > 0.24]. In sum, we found no indication that enhanced number encoding under full control occurred only near the time of deliberate (vs. forced) stopping. Rather, the effect appeared to emerge early in the sampling sequence. We note again that we only interpret effects in relation to the respective matched (yoked) control conditions, as other comparisons may suffer from nontrivial stimulus confounds (see Materials and Methods, Experimental design).

Extremity

Inspection of the empirically observed ERP-RDM (Fig. 3a, lower) suggests that besides numerical distance, the multivariate ERP patterns also encoded the extremity of the sample values (i.e. their absolute distance from the midpoint of the sample range, see also Spitzer et al. 2017; Luyckx et al. 2019). Using a model RDM of numerical extremity (Fig. 3d; note that the model is orthogonal to the numerical distance RDM in Fig. 3a, upper), we found a significant effect between approximately 260 and 800 ms (t-test against zero, Pcluster < 0.001) in the ERP data collapsed across conditions. However, testing for differences between sampling conditions yielded no significant results (all Pcluster > 0.05). Together, while both numerical distance and numerical extremity were reflected in the multivariate ERP data, only numerical distance mirrored the enhancement under full control that was observed in CPP build-up and in behavior.

Neurometric distortions

Recent studies of sequential number comparisons (without participant control over sampling) have shown that neural number representations can be distorted (e.g. compressed or anti-compressed) away from the perfectly linear distance structure of our idealized model RDMs (Fig. 3a; see Methods). We used a “neurometric” approach (Spitzer et al. 2017) to test (i) whether such distortions were replicated in our task and (ii) whether they differed between levels of control. To this end, we parameterized our model RDMs to reflect the distance structure of transformed values $\hat{x} = \operatorname{sign}(x + b)\,|x + b|^{k}$, where $x$ are the numerical sample values (1–9 normalized to the range [−1, 1]), exponent $k$ determines the shape of the transformation ($k < 1$: compression; $k = 1$: linear; $k > 1$: anti-compression), and $b$ reflects a bias towards smaller ($b < 0$) or larger numbers ($b > 0$). Our ERP data, averaged across all conditions, were best explained by parameterizations with $k > 1$ and $b > 0$ (Fig. 4a; both P < 0.003, t-tests of individual subject maxima against 1 and 0, respectively, averaged over parameterized distance and extremity). Thus, the neural number representation was anti-compressed and biased towards larger magnitudes (Fig. 4b), strongly resembling the distortions observed in previous work (Spitzer et al. 2017; Luyckx et al. 2019). In comparisons between levels of control, however, we found no evidence for differences in the degree of anti-compression (Fig. 4c, left; both P > 0.545, t-tests of $k$ against yoked baselines) or bias (Fig. 4c, right; both P > 0.131, t-tests of $b$ against yoked baselines). In other words, under full control, the encoding of numerical sample information was amplified (Fig. 3b and c) without any notable changes in its general representational geometry.

Lastly, we examined whether our neurometric findings were also reflected in participants’ sampling behavior. Stronger neural encoding of larger sample values (as suggested by the neurometric bias towards large numbers, cf. Fig. 4b) may imply that these values (e.g. “9” or “8”) drive behavior more strongly than small numbers (e.g. “1” or “2”), despite their nominally identical diagnosticity for the options’ mean values. If that was the case, participants in the full control condition should have been more likely to stop sampling after large numbers. Empirically, this should register as a relatively later mean position of large numbers (on average across trials) within the self-terminated sequences under full control, compared to the fixed-length sequences under partial control (where number values are expected to be uniformly distributed across the sequence by design). Indeed, we found that the mean relative position (relative to the sequence’s length) of a sample increased with its numerical magnitude (1–9) in the full control condition (P = 0.005, linear trend analysis) but not in the partial control condition (P > 0.78). In other words, participants showed a tendency to stop sampling after larger numbers, consistent with the neurometric bias towards larger numbers. We report this additional aspect of self-terminated sampling for the sake of completeness; our yoked design ensures that our findings about the effects of control over sampling are unaffected by it.
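The positional analysis above can be sketched as follows: compute the mean relative position of each sample value, then score a linear trend over values 1–9. Several details are assumptions:

- relative position is index / (length − 1), so 0 is the first sample and 1 the last;
- single-sample trials are skipped;
- the trend is a simple linear contrast, and the study's exact "linear trend analysis" may differ;
- the function names are hypothetical.

```python
import numpy as np

def mean_relative_position(sequences):
    """Mean relative position of each sample value (1-9) within its sequence.

    Relative position is assumed to be index / (length - 1): 0 = first, 1 = last.
    Single-sample sequences are skipped; values that never occur get NaN.
    """
    sums, counts = np.zeros(9), np.zeros(9)
    for seq in sequences:
        seq = np.asarray(seq)
        if seq.size < 2:
            continue
        rel = np.arange(seq.size) / (seq.size - 1)
        np.add.at(sums, seq - 1, rel)    # accumulate positions per value
        np.add.at(counts, seq - 1, 1)
    with np.errstate(invalid="ignore"):  # 0 / 0 -> NaN for unseen values
        return sums / counts

def linear_trend_score(mean_positions):
    """Linear contrast over values 1-9 (weights -4..4, summing to zero)."""
    return mean_positions @ (np.arange(1, 10) - 5)

# Toy check with one participant whose single sequence runs 1, 2, ..., 9
positions = mean_relative_position([list(range(1, 10))])
score = linear_trend_score(positions)
```

A positive score means that larger values tend to occur later in the sequence. At the group level, per-participant scores could be tested against zero, for example with `scipy.stats.ttest_1samp`.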

Discussion

Using variants of a numerical sampling paradigm and controlling for stimulus confounds, we observed increased choice accuracy when participants had control over the sampling process before committing to a choice. On the neural level, the behavioral benefit was reflected in a stronger encoding of the numerical sample information in multivariate EEG patterns and in a steeper build-up of centro-parietal choice signals. The key determinant of these effects was participants’ control over “how much” information to sample. Freedom to decide only which options to sample, but not when to stop sampling, did not bring about the same effects, either in behavior or in neural signals.

Drawing on a well-established sequential sampling framework (Gold and Shadlen 2007; Ratcliff and McKoon 2008; O’Connell et al. 2012), our behavioral and neural findings provide a neurocognitive perspective on how control over sampling may boost choice accuracy. We observed no differences in early visual ERPs known to be modulated by top-down visual attention (Mangun and Hillyard 1991; Luck et al. 1994, 2000), but a robust enhancement further downstream in the processing hierarchy, at the level of symbolic number encoding (Ansari et al. 2005; Nieder and Dehaene 2009). Our results replicate recent findings of a “neuronal numberline” in multivariate ERP patterns, where the neural representation of, for example, number “6” is more similar to that of “7” than to that of “9” (Spitzer et al. 2017; Teichmann et al. 2018; Luyckx et al. 2019; Sheahan et al. 2021). We found this multivariate encoding of numerical magnitude to be amplified under full control, mirroring the pattern observed in behavioral performance. Importantly, number encoding was already enhanced for samples occurring early in the trial, long before participants stopped sampling to make a final choice. Likewise, the behavioral benefit appeared driven by early and late samples alike, as indicated by the absence of differences in temporal weighting. Consistent with these findings, we also observed a steeper rise in parietal indices of evidence accumulation (CPP/P3; O’Connell et al. 2012; Twomey et al. 2015) “across” samples, as if each individual sample contributed stronger evidence to the ongoing decision formation. In a sequential sampling framework where evidence is accumulated into a running decision variable (Gold and Shadlen 2007; Kiani et al. 2008; Ratcliff and McKoon 2008; O’Connell et al. 2012; Glickman and Usher 2019), our EEG and behavioral findings may thus both be attributable to an improvement in numerical evidence processing.

One possible explanation for our findings relates to motivational factors. Previous work has shown that the ability to actively control the environment and/or one’s subjective experiences can have beneficial effects, for example, on memory (Voss et al. 2011; Murty et al. 2015), self-regulation and error monitoring (Legault and Inzlicht 2013), learning and inductive inference (Gureckis and Markant 2012; Markant and Gureckis 2014), and various other aspects of cognition and behavior (Patall et al. 2008; Leotti et al. 2010; Leotti and Delgado 2011; Patall 2012; Murayama et al. 2016). Our findings add to this literature by showing that control can also confer benefits in sample-based decision-making, specifically when participants can control when to stop sampling. While the extrinsic rewards for choice accuracy were identical across our task conditions, control over stopping can add an incentive to optimize the time spent on a trial (Ostwald et al. 2015; Tickle et al. 2020). There is typically a trade-off between speed and accuracy of task execution (Heitz 2014), such that faster decisions come at the cost of lower accuracy (but see Gigerenzer et al. 2011). However, the present findings under full control cannot be explained by such a trade-off, given that we observed benefits relative to yoked trials of identical length. As we used exact copies of participant-generated sampling sequences in our baseline conditions, we can also rule out the possibility that the results are attributable to amplification effects (Hertwig and Pleskac 2010), where participants tend to stop sampling when the cumulative difference between options happens to be large (leading to objectively easier trials; see below). With these simpler explanations ruled out, our findings suggest that control per se may lead to more efficient sample encoding, potentially through increased task engagement when decision time can be optimized on a trial-by-trial basis.

We found no differences between conditions in the temporal weighting of sample information over the course of a trial. A clear recency effect (relative overweighting of late samples) was evident in all task conditions, including yoked baselines. This pattern appears to be at odds with a previous meta-analysis of numerical sampling studies (Wulff et al. 2018), where recency effects were observed solely in conditions with full agency over sampling. However, the present findings are consistent with routine observations of recency effects in other sequential integration tasks where sample presentation is entirely experimenter-controlled (Anderson 1964; Weiss and Anderson 1969; Tsetsos et al. 2012; Cheadle et al. 2014; Wyart et al. 2015; Spitzer et al. 2017; Luyckx et al. 2019; Kang and Spitzer 2021). Here, using carefully designed yoked control conditions, we found no evidence that the strength of recency effects (which may arise, e.g. from forgetting or leakage of sample information over time; Usher and McClelland 2001) depends on the level of control over sampling. We also found no differences in the representational geometry of the sampled information in neural signals. Neurometric analysis showed an anti-compression of numerical values (Spitzer et al. 2017; Luyckx et al. 2019) in all conditions, regardless of the level of control. The absence of differences in these more qualitative aspects of information processing in our tasks suggests that the cognitive benefits of full control may best be described as an overall increase in the gain of neural processing (Donner and Nieuwenhuis 2013; Eldar et al. 2013; Murphy et al. 2016), which may amplify the critical decisional information in a sample (here, numerical magnitude).
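The link between leakage and recency can be made concrete with a minimal leaky accumulator. This model is used here purely as an illustrative assumption and is not fitted in this study. It has a hypothetical leak rate $\lambda$, with $0 \le \lambda < 1$, and signed sample evidence $x_i$:

$$
DV_n = (1 - \lambda)\,DV_{n-1} + x_n,\quad DV_0 = 0
\quad\Longrightarrow\quad
DV_N = \sum_{i=1}^{N} (1 - \lambda)^{N-i}\,x_i .
$$

Each sample's weight $(1 - \lambda)^{N-i}$ grows with its position $i$ in the sequence. Any leak ($\lambda > 0$) therefore produces recency, whereas $\lambda = 0$ weights all samples equally.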

None of the benefits observed under full control were evident in the partial control condition, where participants could only decide which option to sample next, but not when to terminate sampling. Although this condition gave participants some level of agency (relative to the yoked conditions without control; Chambon et al. 2020; Weiss et al. 2021), we suspect that it may not have induced a strong sense of control over the task. It even seems possible that participants may have perceived the requirement to perform a prescribed number of sampling actions as externally controlled and a cognitive burden (see also Sullivan-Toole et al. 2017). Indeed, post hoc examination of left/right sampling patterns showed that our participants resorted to stereotypical sampling routines (either alternating between options: “a-b-a-b-…” or sampling first one option and then the other: “a-a-a-…-b-b-b”) in 67.61% of trials (relative to the yoked conditions without control; for related findings, see Hills and Hertwig 2010). In other words, participants made little use of the freedom to vary their left/right sampling strategy trial by trial (and/or sample by sample), potentially due to a lack of perceived benefits (Dixon and Christoff 2012). In this light, it is perhaps not surprising that we found no processing enhancements under partial control, in either behavior or neural signals.
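The two stereotypical routines named above can be detected from a trial's left/right sampling sequence by counting runs of identical choices. A strictly alternating sequence has as many runs as samples, and a blocked "a-a-a-…-b-b-b" sequence has exactly two runs. The study's exact classification criteria are not given here. Not counting sequences that sample only one option is an assumption, as are the function names and the toy sequences.

```python
def n_runs(seq):
    """Number of runs of identical consecutive choices, e.g. 'LLRR' -> 2."""
    return 1 + sum(a != b for a, b in zip(seq, seq[1:])) if seq else 0

def is_stereotyped(seq):
    """Alternating (a-b-a-b-...) or blocked (a-a-...-b-b-...) left/right sequence."""
    alternating = n_runs(seq) == len(seq)  # every adjacent pair differs
    blocked = n_runs(seq) == 2             # one switch between options
    return alternating or blocked

def proportion_stereotyped(sequences):
    flags = [is_stereotyped(s) for s in sequences]
    return sum(flags) / len(flags)

# Toy sequences of 12 samples each ("L" = left option, "R" = right option)
trials = ["LRLRLRLRLRLR", "LLLLLLRRRRRR", "LLRLRRLRLLRR", "RRRRRRRRRRRR"]
share = proportion_stereotyped(trials)
```

In this toy set, the first two trials count as stereotyped. The mixed third sequence does not, and neither does the one-option fourth sequence under the assumption above, which gives a share of 0.5.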

Numerical sampling tasks similar to ours have been used extensively in the past to study decisions from experience (Hertwig et al. 2004), complementing the common use of symbolic descriptions to study risky choice (Kahneman and Tversky 1979; Juechems et al. 2021). Experience-based choices can differ systematically from description-based choices, especially in terms of probability weighting (Hertwig and Erev 2009; Wulff et al. 2018). A much-discussed aspect of this “description–experience gap” is that participants in experience-based tasks tend to rely on relatively few samples (Hau et al. 2010; Plonsky et al. 2015; Wulff et al. 2018). In our experiment, too, participants in the full control condition sampled less than they could have (Furl and Averbeck 2011). Although one explanation is that small samples can render choices objectively simpler (Hertwig and Pleskac 2008, 2010), our findings suggest that small samples may also defy typical accuracy trade-offs if the decision to stop sampling lies in the autonomy of the sampling agent (see also Petitet et al. 2021). Granting participants full control over sampling may thus not only enable but directly promote reliance on small samples through more efficient processing of the sample evidence.

Finally, our multivariate EEG analysis also showed a neural signature of the samples’ “extremity” (Fig. 3d and e), which did not differ between levels of control over sampling. Future work may investigate the potential significance of this finding with respect to the role of extreme events in experience-based decisions (e.g. Ludvig and Spetch 2011; Ludvig et al. 2014, 2018).

In summary, we found that control over sampling can enhance the neural encoding of decision information and improve choice accuracy. The results add to a growing collection of findings that exercising agency can benefit performance in cognitive tasks and shed light on the neural processes that may support such benefits.

Acknowledgments

We thank Agnessa Karapetian, Clara Wicharz, Jann Wäscher, Yoonsang Lee, and Zhiqi Kang for help with data collection, Dirk Ostwald and Casper Kerrén for helpful discussions and feedback, and Susannah Goss for editorial assistance.

Contributor Information

Stefan Appelhoff, Center for Adaptive Rationality, Max Planck Institute for Human Development, Lentzeallee 94, 14195 Berlin, Germany; Research Group Adaptive Memory and Decision Making, Max Planck Institute for Human Development, Lentzeallee 94, 14195 Berlin, Germany.

Ralph Hertwig, Center for Adaptive Rationality, Max Planck Institute for Human Development, Lentzeallee 94, 14195 Berlin, Germany.

Bernhard Spitzer, Center for Adaptive Rationality, Max Planck Institute for Human Development, Lentzeallee 94, 14195 Berlin, Germany; Research Group Adaptive Memory and Decision Making, Max Planck Institute for Human Development, Lentzeallee 94, 14195 Berlin, Germany.

Funding

BS is supported by a European Research Council Consolidator Grant ERC-2020-COG-101000972.

Conflict of interest statement. The authors declare no competing interests.

Data availability

The dataset in BIDS format is available on GIN (https://doi.org/10.12751/g-node.dtyh14).

Code availability

All code is available on Zenodo (Analysis code: https://doi.org/10.5281/zenodo.5929222; experiment code: https://doi.org/10.5281/zenodo.3354368).

Ethics information

The study was approved by the ethics committee of the Max Planck Institute for Human Development.

Author contributions

SA, RH, and BS: Conceptualization, project administration, writing—review and editing.

SA and BS: Methodology, writing—original draft.

SA: Formal analysis, investigation, validation, visualization, data curation, software.

BS: Supervision.

RH: Resources.

References

- Anderson NH. Test of a model for number-averaging behavior. Psychon Sci. 1964:1:191–192. [Google Scholar]

- Ansari D, Garcia N, Lucas E, Hamon K, Dhital B. Neural correlates of symbolic number processing in children and adults. Neuroreport. 2005:16:1769–1773. [DOI] [PubMed] [Google Scholar]

- Appelhoff S, Sanderson M, Brooks TL, Vliet M v, Quentin R, Holdgraf C, Chaumon M, Mikulan E, Tavabi K, Höchenberger R, et al. MNE-BIDS: organizing electrophysiological data into the BIDS format and facilitating their analysis. J Open Source Softw. 2019:4:1896. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chambon V, Théro H, Vidal M, Vandendriessche H, Haggard P, Palminteri S. Information about action outcomes differentially affects learning from self-determined versus imposed choices. Nat Hum Behav. 2020:4:1–13. [DOI] [PubMed] [Google Scholar]

- Cheadle S, Wyart V, Tsetsos K, Myers N, de Gardelle V, Herce Castañón S, Summerfield C. Adaptive gain control during human perceptual choice. Neuron. 2014:81:1429–1441. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cokely ET, Galesic M, Schulz E, Ghazal S, Garcia-Retamero R. Measuring risk literacy: the Berlin Numeracy Test. Judgm Decis Mak. 2012:7:25–47. [Google Scholar]

- Dixon ML, Christoff K. The decision to engage cognitive control is driven by expected reward-value: neural and Behavioral evidence. PLoS One. 2012:7:e51637. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Donner TH, Nieuwenhuis S. Brain-wide gain modulation: the rich get richer. Nat Neurosci. 2013:16:989–990. [DOI] [PubMed] [Google Scholar]

- Eimer M. The lateralized readiness potential as an on-line measure of central response activation processes. Behav Res Methods Instrum Comput. 1998:30:146–156. [Google Scholar]

- Eldar E, Cohen JD, Niv Y. The effects of neural gain on attention and learning. Nat Neurosci. 2013:16:1146–1153. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fleischhut N, Artinger F, Olschewski S, Volz K, Hertwig R. 2014. Sampling of social information: Decisions from experience in bargaining. In: Bello P,, Guarini M,, McShane M,, Scassellati B, editors. Program of the 36th Annual Conference of the Cognitive Science Society. Austin, TX: Cognitive Science Society. p. 1048–1053. [Google Scholar]

- Flowerday T, Schraw G. Effect of choice on cognitive and affective engagement. J Educ Res. 2003:96:207–215. [Google Scholar]

- Flowerday T, Schraw G, Stevens J. The role of choice and interest in reader engagement. J Exp Educ. 2004:72:93–114. [Google Scholar]

- Furl N, Averbeck BB. Parietal cortex and insula relate to evidence seeking relevant to reward-related decisions. J Neurosci. 2011:31:17572–17582. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gigerenzer G, Hertwig R, Pachur T. Heuristics. Oxford, England: Oxford University Press; 2011. [Google Scholar]

- Glickman M, Usher M. Integration to boundary in decisions between numerical sequences. Cognition. 2019:193:104022. [DOI] [PubMed] [Google Scholar]

- Gold JI, Shadlen MN. The neural basis of decision making. Annu Rev Neurosci. 2007:30:535–574. [DOI] [PubMed] [Google Scholar]

- Gonzalez C, Mehlhorn K. Framing from experience: cognitive processes and predictions of risky choice. Cogn Sci. 2016:40:1163–1191. [DOI] [PubMed] [Google Scholar]

- Gorgolewski KJ, Auer T, Calhoun VD, Craddock RC, Das S, Duff EP, Flandin G, Ghosh SS, Glatard T, Halchenko YO, et al. The brain imaging data structure, a format for organizing and describing outputs of neuroimaging experiments. Sci Data. 2016:3:160044. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gramfort A, Luessi M, Larson E, Engemann DA, Strohmeier D, Brodbeck C, Goj R, Jas M, Brooks T, Parkkonen L, et al. MEG and EEG data analysis with MNE-Python. Front Neurosci. 2013:7:1–13. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Grootswagers T, Wardle SG, Carlson TA. Decoding dynamic brain patterns from evoked responses: a tutorial on multivariate pattern analysis applied to time series neuroimaging data. J Cogn Neurosci. 2016:29:677–697. [DOI] [PubMed] [Google Scholar]

- Gureckis TM, Markant DB. Self-directed learning: a cognitive and computational perspective. Perspect Psychol Sci. 2012:7:464–481.

- Hau R, Pleskac TJ, Kiefer J, Hertwig R. The description-experience gap in risky choice: the role of sample size and experienced probabilities. J Behav Decis Mak. 2008:21:493–518.

- Hau R, Pleskac TJ, Hertwig R. Decisions from experience and statistical probabilities: why they trigger different choices than a priori probabilities. J Behav Decis Mak. 2010:23:48–68.

- Heitz RP. The speed-accuracy tradeoff: history, physiology, methodology, and behavior. Front Neurosci. 2014:8:1–19.

- Hertwig R. Decisions from experience. In: Keren G, Wu G, editors. The Wiley Blackwell handbook of judgment and decision making. Chichester: John Wiley & Sons, Ltd.; 2015. pp. 239–267.

- Hertwig R, Erev I. The description–experience gap in risky choice. Trends Cogn Sci. 2009:13:517–523.

- Hertwig R, Pleskac TJ. The game of life: how small samples render choice simpler. In: Chater N, Oaksford M, editors. The probabilistic mind: prospects for Bayesian cognitive science. Oxford, England: Oxford University Press; 2008. pp. 209–236.

- Hertwig R, Pleskac TJ. Decisions from experience: why small samples? Cognition. 2010:115:225–237.

- Hertwig R, Barron G, Weber EU, Erev I. Decisions from experience and the effect of rare events in risky choice. Psychol Sci. 2004:15:534–539.

- Hills TT, Hertwig R. Information search in decisions from experience: do our patterns of sampling foreshadow our decisions? Psychol Sci. 2010:21:1787–1792.

- Juechems K, Balaguer J, Spitzer B, Summerfield C. Optimal utility and probability functions for agents with finite computational precision. Proc Natl Acad Sci. 2021:118:1–10.

- Kahneman D, Tversky A. Prospect theory: an analysis of decision under risk. Econometrica. 1979:47:263–292.

- Kang Z, Spitzer B. Concurrent visual working memory bias in sequential integration of approximate number. Sci Rep. 2021:11:5348.

- Katz I, Assor A. When choice motivates and when it does not. Educ Psychol Rev. 2007:19:429–442.

- Kiani R, Hanks TD, Shadlen MN. Bounded integration in parietal cortex underlies decisions even when viewing duration is dictated by the environment. J Neurosci. 2008:28:3017–3029.

- Kriegeskorte N, Kievit RA. Representational geometry: integrating cognition, computation, and the brain. Trends Cogn Sci. 2013:17:401–412.

- Legault L, Inzlicht M. Self-determination, self-regulation, and the brain: autonomy improves performance by enhancing neuroaffective responsiveness to self-regulation failure. J Pers Soc Psychol. 2013:105:123–138.

- Leotti LA, Delgado MR. The inherent reward of choice. Psychol Sci. 2011:22:1310–1318.

- Leotti LA, Iyengar SS, Ochsner KN. Born to choose: the origins and value of the need for control. Trends Cogn Sci. 2010:14:457–463.

- Luck SJ, Hillyard SA, Mouloua M, Woldorff MG, Clark VP, Hawkins HL. Effects of spatial cuing on luminance detectability: psychophysical and electrophysiological evidence for early selection. J Exp Psychol Hum Percept Perform. 1994:20:887–904.

- Luck SJ, Woodman GF, Vogel EK. Event-related potential studies of attention. Trends Cogn Sci. 2000:4:432–440.

- Ludvig EA, Spetch ML. Of black swans and tossed coins: is the description-experience gap in risky choice limited to rare events? PLoS One. 2011:6:e20262.

- Ludvig EA, Madan CR, Spetch ML. Extreme outcomes sway risky decisions from experience: risky decisions and extreme outcomes. J Behav Decis Mak. 2014:27:146–156.

- Ludvig EA, Madan CR, McMillan N, Xu Y, Spetch ML. Living near the edge: how extreme outcomes and their neighbors drive risky choice. J Exp Psychol Gen. 2018:147:1905–1918.

- Luyckx F, Nili H, Spitzer B, Summerfield C. Neural structure mapping in human probabilistic reward learning. eLife. 2019:8:e42816.

- Mangun GR, Hillyard SA. Modulations of sensory-evoked brain potentials indicate changes in perceptual processing during visual-spatial priming. J Exp Psychol Hum Percept Perform. 1991:17:1057–1074.

- Maris E, Oostenveld R. Nonparametric statistical testing of EEG- and MEG-data. J Neurosci Methods. 2007:164:177–190.

- Markant DB, Gureckis TM. Is it better to select or to receive? Learning via active and passive hypothesis testing. J Exp Psychol Gen. 2014:143:94–122.

- Murayama K, Matsumoto M, Izuma K, Sugiura A, Ryan RM, Deci EL, Matsumoto K. How self-determined choice facilitates performance: a key role of the ventromedial prefrontal cortex. Cereb Cortex. 2015:25:1241–1251.

- Murayama K, Izuma K, Aoki R, Matsumoto K. Your choice motivates you in the brain: the emergence of autonomy neuroscience. In: Recent developments in neuroscience research on human motivation. Bingley, West Yorkshire, England: Emerald Group; 2016. pp. 95–125.

- Murphy PR, Boonstra E, Nieuwenhuis S. Global gain modulation generates time-dependent urgency during perceptual choice in humans. Nat Commun. 2016:7:13526.

- Murty VP, DuBrow S, Davachi L. The simple act of choosing influences declarative memory. J Neurosci. 2015:35:6255–6264.

- Neri P, Parker AJ, Blakemore C. Probing the human stereoscopic system with reverse correlation. Nature. 1999:401:695–698.

- Nieder A. The neuronal code for number. Nat Rev Neurosci. 2016:17:366–382.

- Nieder A, Dehaene S. Representation of number in the brain. Annu Rev Neurosci. 2009:32:185–208.

- Nolan H, Whelan R, Reilly RB. FASTER: fully automated statistical thresholding for EEG artifact rejection. J Neurosci Methods. 2010:192:152–162.

- O’Connell RG, Dockree PM, Kelly SP. A supramodal accumulation-to-bound signal that determines perceptual decisions in humans. Nat Neurosci. 2012:15:1729–1735.

- Ostwald D, Starke L, Hertwig R. A normative inference approach for optimal sample sizes in decisions from experience. Front Psychol. 2015:6:1–20.

- Patall EA. The motivational complexity of choosing: a review of theory and research. In: Ryan RM, editor. The Oxford handbook of human motivation. Oxford, England: Oxford University Press; 2012. pp. 247–279.

- Patall EA, Cooper H, Robinson JC. The effects of choice on intrinsic motivation and related outcomes: a meta-analysis of research findings. Psychol Bull. 2008:134:270–300.

- Peirce J, Gray JR, Simpson S, MacAskill M, Höchenberger R, Sogo H, Kastman E, Lindeløv JK. PsychoPy2: experiments in behavior made easy. Behav Res Methods. 2019:51:195–203.

- Pernet CR, Appelhoff S, Gorgolewski KJ, Flandin G, Phillips C, Delorme A, Oostenveld R. EEG-BIDS, an extension to the brain imaging data structure for electroencephalography. Sci Data. 2019:6:103.

- Petitet P, Attaallah B, Manohar SG, Husain M. The computational cost of active information sampling before decision-making under uncertainty. Nat Hum Behav. 2021:5:935–946.

- Pisauro MA, Fouragnan E, Retzler C, Philiastides MG. Neural correlates of evidence accumulation during value-based decisions revealed via simultaneous EEG-fMRI. Nat Commun. 2017:8:15808.

- Plonsky O, Teodorescu K, Erev I. Reliance on small samples, the wavy recency effect, and similarity-based learning. Psychol Rev. 2015:122:621–647.

- Polich J. Updating P300: an integrative theory of P3a and P3b. Clin Neurophysiol. 2007:118:2128–2148.

- Rakow T, Newell BR. Degrees of uncertainty: an overview and framework for future research on experience-based choice. J Behav Decis Mak. 2010:23:1–14.

- Rakow T, Demes KA, Newell BR. Biased samples not mode of presentation: re-examining the apparent underweighting of rare events in experience-based choice. Organ Behav Hum Decis Process. 2008:106:168–179.

- Ratcliff R, McKoon G. The diffusion decision model: theory and data for two-choice decision tasks. Neural Comput. 2008:20:873–922.

- Sheahan H, Luyckx F, Nelli S, Teupe C, Summerfield C. Neural state space alignment for magnitude generalization in humans and recurrent networks. Neuron. 2021:109:1214–1226.

- Spitzer B, Blankenburg F, Summerfield C. Rhythmic gain control during supramodal integration of approximate number. NeuroImage. 2016:129:470–479.

- Spitzer B, Waschke L, Summerfield C. Selective overweighting of larger magnitudes during noisy numerical comparison. Nat Hum Behav. 2017:1:1–8.

- Sullivan-Toole H, Richey JA, Tricomi E. Control and effort costs influence the motivational consequences of choice. Front Psychol. 2017:8:1–15.

- Teichmann L, Grootswagers T, Carlson T, Rich AN. Decoding digits and dice with magnetoencephalography: evidence for a shared representation of magnitude. J Cogn Neurosci. 2018:30:999–1010.

- Thaler L, Schütz AC, Goodale MA, Gegenfurtner KR. What is the best fixation target? The effect of target shape on stability of fixational eye movements. Vis Res. 2013:76:31–42.

- Tickle H, Tsetsos K, Speekenbrink M, Summerfield C. Optional stopping in a heteroscedastic world (preprint). PsyArXiv. 2020. 10.31234/osf.io/t7dn2.

- Tsetsos K, Chater N, Usher M. Salience driven value integration explains decision biases and preference reversal. Proc Natl Acad Sci. 2012:109:9659–9664.

- Twomey DM, Murphy PR, Kelly SP, O’Connell RG. The classic P300 encodes a build-to-threshold decision variable. Eur J Neurosci. 2015:42:1636–1643.

- Ungemach C, Chater N, Stewart N. Are probabilities overweighted or underweighted when rare outcomes are experienced (rarely)? Psychol Sci. 2009:20:473–479.

- Usher M, McClelland JL. The time course of perceptual choice: the leaky, competing accumulator model. Psychol Rev. 2001:108:550–592.

- Voss JL, Gonsalves BD, Federmeier KD, Tranel D, Cohen NJ. Hippocampal brain-network coordination during volitional exploratory behavior enhances learning. Nat Neurosci. 2011:14:115–120.

- Weiss DJ, Anderson NH. Subjective averaging of length with serial presentation. J Exp Psychol. 1969:82:52–63.

- Weiss A, Chambon V, Lee JK, Drugowitsch J, Wyart V. Interacting with volatile environments stabilizes hidden-state inference and its brain signatures. Nat Commun. 2021:12:2228.

- Winkler I, Debener S, Müller K, Tangermann M. On the influence of high-pass filtering on ICA-based artifact reduction in EEG-ERP. In: 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC). New York, U.S.: IEEE; 2015. pp. 4101–4105.

- Wulff D, Mergenthaler-Canseco M, Hertwig R. A meta-analytic review of two modes of learning and the description-experience gap. Psychol Bull. 2018:144:140–176.

- Wyart V, Myers NE, Summerfield C. Neural mechanisms of human perceptual choice under focused and divided attention. J Neurosci. 2015:35:3485–3498.

Data Availability Statement

The dataset in BIDS format is available on GIN (https://doi.org/10.12751/g-node.dtyh14).