Abstract

Purpose

Given the large number of patients screened during the COVID-19 pandemic, computer-aided detection has strong potential to improve clinical workflow efficiency and to reduce the incidence of infection among radiologists and healthcare providers. Since many confirmed COVID-19 cases present radiological findings of pneumonia, radiologic examinations can be useful for fast detection. Therefore, chest radiography can be used to rapidly screen for COVID-19 during patient triage, thereby determining the priority of patients’ care and helping saturated medical facilities in a pandemic.

Methods

In this paper, we propose a new learning scheme called self-supervised transfer learning for detecting COVID-19 from chest X-ray (CXR) images. We compared the proposed method with six self-supervised learning (SSL) methods (Cross, BYOL, SimSiam, SimCLR, PIRL-Jigsaw, and PIRL-Rotation) and with six pretrained deep convolutional neural networks (DCNNs) (ResNet18, ResNet50, ResNet101, CheXNet, DenseNet201, and InceptionV3). We provide a quantitative evaluation on the largest open COVID-19 CXR dataset and qualitative results for visual inspection.

Results

Our method achieved a harmonic mean (HM) score of 0.985, an AUC of 0.999, and a four-class accuracy of 0.953. To increase interpretability, we also used the visualization technique Grad-CAM++ to generate visual explanations of the proposed method for different classes of CXR images.

Conclusions

Our results show that the knowledge learned from natural images through transfer learning benefits SSL on CXR images and boosts representation learning performance for COVID-19 detection. Our method has the potential to help reduce the incidence of infection among radiologists and healthcare providers.

Keywords: Self-supervised learning, Transfer learning, COVID-19 detection, Chest X-ray images

Introduction

The coronavirus disease 2019 (COVID-19), caused by severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2), has emerged as one of the deadliest diseases of the century, with about 326 million infections and over 5.5 million deaths worldwide as of January 17, 2022¹. During the unprecedented COVID-19 pandemic, medical facilities have faced many challenges, including a critical shortage of medical resources, and many healthcare providers have themselves been infected [20]. Because COVID-19 is highly contagious, early screening has become increasingly important to prevent its further spread and to reduce the incidence of infection among radiologists and healthcare providers [39].

Polymerase chain reaction (PCR) testing is currently considered the gold standard for COVID-19 confirmation because of its high accuracy, but it takes several hours to obtain a result [38]. Since many confirmed COVID-19 cases present radiological findings of pneumonia, radiologic examinations can be useful for fast detection [32]. Therefore, chest X-ray (CXR) images can be used to rapidly screen for COVID-19 during patient triage, thereby determining the priority of patients’ care and helping saturated medical facilities in a pandemic [33].

Based on these findings, several studies have used deep learning to detect COVID-19 from CXR images [3]. Various neural network architectures, transfer learning techniques, and ensemble methods have been proposed to improve the performance of automatic COVID-19 detection [18, 19, 29]. For example, [26] used transfer learning with several deep convolutional neural networks (DCNNs), such as VGGNet [36], ResNet [13], and DenseNet [15], to detect COVID-19 from CXR images and achieved good performance on a small COVID-19 dataset. Moreover, [16] explored deep feature extraction with a support vector machine (SVM) classifier, fine-tuning of CNNs, and end-to-end training of CNNs to obtain better COVID-19 detection performance.

Recently, self-supervised learning (SSL) methods have received widespread attention [17]. Unlike supervised learning, SSL can learn good representations without manual labels, reducing labeling cost and time. For example, [10] and [28] learned representations by predicting the rotation angle of images and by solving jigsaw puzzles on images, respectively. Furthermore, SSL methods have proven effective on different medical datasets [40]. Transfer learning [30], in which a model trained on one task is reused on a second, related task, is commonly used in medical image analysis. Since representations learned with SSL on the target dataset alone may be insufficient, transfer learning from other datasets can compensate for this shortcoming and yield better representations. Several studies on different tasks (e.g., forecasting adverse surgical events [7] and hand mesh recovery [8]) have shown the effectiveness of combining SSL and transfer learning.
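To make the rotation pretext task of [10] concrete, the following pure-Python sketch generates its self-supervised labels, assuming an image is a 2D list of pixel values. Each image is rotated by 0°, 90°, 180°, and 270°, and the rotation index serves as a label obtained without any manual annotation; a network would then be trained to predict it. The function names are hypothetical, and the sketch only illustrates the idea rather than reproducing the implementation of [10].

```python
from typing import List, Tuple

Image = List[List[float]]  # hypothetical: a grayscale image as a list of pixel rows


def rotate90(img: Image) -> Image:
    """Rotate a 2D image 90 degrees counterclockwise."""
    # Transposing and then reversing the row order is a counterclockwise rotation.
    return [list(row) for row in zip(*img)][::-1]


def make_rotation_batch(images: List[Image]) -> List[Tuple[Image, int]]:
    """Build (rotated image, label) pairs; label k means a rotation of k * 90 degrees."""
    batch = []
    for img in images:
        rotated = img
        for k in range(4):
            batch.append((rotated, k))  # the label comes for free from the transform
            rotated = rotate90(rotated)
    return batch


pairs = make_rotation_batch([[[1, 2], [3, 4]]])
print([label for _, label in pairs])  # [0, 1, 2, 3]
```

The jigsaw task of [28] follows the same pattern: a known permutation of image tiles is applied, and its index becomes the label to predict.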

In this paper, we propose a new learning scheme called self-supervised transfer learning for detecting COVID-19 from CXR images. Our method consists of three stages: supervised pre-training on labeled natural images, self-supervised pre-training on unlabeled CXR images, and supervised fine-tuning on labeled CXR images. We show that the knowledge learned from natural images with transfer learning benefits SSL on CXR images and boosts representation learning performance for COVID-19 detection. Our method learns discriminative representations from CXR images and achieves promising detection results even when only a small amount of labeled data is used for fine-tuning.

Theoretical background

Because representations learned with SSL on the target dataset alone may be insufficient, transfer learning from other datasets can be employed to compensate for this shortcoming and obtain better representations. We therefore learn discriminative representations from CXR images by combining transfer learning and SSL, and we show that the knowledge learned from natural images through transfer learning benefits SSL on the CXR images and boosts representation learning performance for COVID-19 detection.

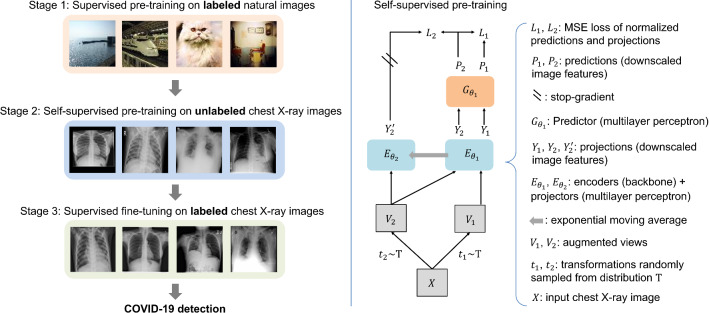

An overview of the proposed method is shown in Fig. 1. Our method consists of three stages: supervised pre-training on labeled natural images, self-supervised pre-training on unlabeled CXR images, and supervised fine-tuning on labeled CXR images. Specifically, we use a model pre-trained on ImageNet [9] as the encoder for self-supervised pre-training. After self-supervised pre-training on unlabeled CXR images, we obtain a well-trained encoder and append a classifier to it for supervised fine-tuning. Finally, after supervised fine-tuning on labeled CXR images, the resulting model can be used to detect COVID-19.

Fig. 1.

Overview of the proposed method. Left: conceptual illustration of our method. Right: the self-supervised pre-training process of our method

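As shown in Fig. 1, given an input CXR image $X$ without label information, two transformations $t$ and $t'$ are randomly sampled from a distribution $\mathcal{T}$ to generate two views $v = t(X)$ and $v' = t'(X)$ [14]. Specifically, each transformation is a composition of standard data augmentations such as cropping, resizing, flipping, and Gaussian blurring [25]. The representations $z_\theta$ and $z'_\theta$ are the outputs of the online encoder $f_\theta$ for $v$ and $v'$, and $z_\xi$ and $z'_\xi$ are the outputs of the target encoder $f_\xi$ for $v$ and $v'$. The representations $p_\theta = q_\theta(z_\theta)$ and $p'_\theta = q_\theta(z'_\theta)$ are the outputs of the predictor $q_\theta$. The predictor makes the network structure asymmetric, thereby preventing a collapse in learning [12]. Finally, we define the loss $L$ for updating the parameters as follows:

$$L = L_{\theta,\xi} + \tilde{L}_{\theta,\xi}, \tag{1}$$

where $L_{\theta,\xi}$ and $\tilde{L}_{\theta,\xi}$ compare the normalized representations from the two views of the same image as follows:

$$L_{\theta,\xi} = \left\| \bar{p}_\theta - \bar{z}'_\xi \right\|_2^2 = 2 - 2 \cdot \frac{\langle p_\theta, z'_\xi \rangle}{\|p_\theta\|_2 \, \|z'_\xi\|_2}, \tag{2}$$

$$\tilde{L}_{\theta,\xi} = \left\| \bar{p}'_\theta - \bar{z}_\xi \right\|_2^2 = 2 - 2 \cdot \frac{\langle p'_\theta, z_\xi \rangle}{\|p'_\theta\|_2 \, \|z_\xi\|_2}, \tag{3}$$

where $\bar{p}_\theta$, $\bar{p}'_\theta$, $\bar{z}_\xi$, and $\bar{z}'_\xi$ denote the $\ell_2$-normalized representations (e.g., $\bar{p}_\theta = p_\theta / \|p_\theta\|_2$). The total loss $L$ is then used to update the parameters $\theta$ of the encoder $f_\theta$ as follows:

$$\theta \leftarrow \mathrm{optimizer}\left(\theta, \nabla_\theta L, \eta\right), \tag{4}$$

where $\mathrm{optimizer}$ and $\eta$ represent the optimizer and the learning rate, respectively. The weights $\xi$ of $f_\xi$ are an exponential moving average of the weights $\theta$ of $f_\theta$ and are updated as follows:

$$\xi \leftarrow \tau \xi + (1 - \tau)\, \theta, \tag{5}$$

where $\tau$ represents the moving average degree. The gradient is not backpropagated through the encoder $f_\xi$, which stabilizes training [5]. Thus, we can learn discriminative representations from CXR images using transfer learning from natural images and SSL on CXR images. After the SSL process, we fine-tuned the encoder $f_\theta$ on the labeled CXR images for COVID-19 detection.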
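The arithmetic of one self-supervised update step can be illustrated with a small pure-Python sketch on plain vectors. It assumes a BYOL-style formulation in which each loss term is the squared distance between the ℓ2-normalized online prediction for one view and the target output for the other view, and it assumes PyTorch-style SGD with momentum and weight decay, using the Table 1 values (learning rate 0.03, momentum 0.9, weight decay 0.0004, moving average 0.996) as defaults. All function names are hypothetical; in real training a framework such as PyTorch would compute the gradients, which are simply passed in here.

```python
import math
from typing import List, Tuple

Vector = List[float]


def l2_normalize(v: Vector) -> Vector:
    """Divide a vector by its L2 norm (the 'bar' normalization)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def normalized_mse(p: Vector, z: Vector) -> float:
    """Squared L2 distance of normalized vectors; equals 2 - 2 * cosine(p, z)."""
    p_bar, z_bar = l2_normalize(p), l2_normalize(z)
    return sum((a - b) ** 2 for a, b in zip(p_bar, z_bar))


def symmetric_loss(p_online: Vector, p_online_prime: Vector,
                   z_target: Vector, z_target_prime: Vector) -> float:
    """Total loss: prediction for view v vs target output for v', plus the swapped term.

    The target outputs are treated as constants (stop-gradient); in PyTorch they
    would be computed under torch.no_grad() or detached.
    """
    return (normalized_mse(p_online, z_target_prime)
            + normalized_mse(p_online_prime, z_target))


def sgd_step(theta: Vector, grad: Vector, buf: Vector, lr: float = 0.03,
             momentum: float = 0.9, weight_decay: float = 4e-4) -> Tuple[Vector, Vector]:
    """Online update sketch: SGD with momentum and L2 weight decay (PyTorch-style)."""
    new_buf = [momentum * b + g + weight_decay * t
               for t, g, b in zip(theta, grad, buf)]
    new_theta = [t - lr * b for t, b in zip(theta, new_buf)]
    return new_theta, new_buf


def ema_update(xi: Vector, theta: Vector, tau: float = 0.996) -> Vector:
    """Target update: xi <- tau * xi + (1 - tau) * theta, with no gradient involved."""
    return [tau * x + (1.0 - tau) * t for x, t in zip(xi, theta)]


# Toy check: matching directions contribute 0, orthogonal directions contribute 2.
loss = symmetric_loss([1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [2.0, 0.0])
print(loss)  # 2.0
```

Because each term depends only on directions, scaling a representation does not change the loss, and a τ close to 1 makes the target weights change slowly relative to the online weights.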

Methodology

Dataset and settings

We used the largest open COVID-19 CXR dataset [34] in this study. The dataset contains 21,165 CXR images in four categories; all images are 299 × 299 pixels and stored in PNG format. Of these, 3616 COVID-19-positive CXR images were collected from public datasets, publications, and websites. More details about the dataset can be found on the official website². One example from each category is shown in Fig. 4. We used 80% of the dataset as the training set and the remaining 20% as the test set. Sensitivity (Sen), specificity (Spe), the harmonic mean (HM) of Sen and Spe [Eqs. (6)–(8)], the area under the ROC curve (AUC), and accuracy (Acc) were used as evaluation metrics [24].

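$$\mathrm{Sen} = \frac{TP}{TP + FN}, \tag{6}$$

$$\mathrm{Spe} = \frac{TN}{TN + FP}, \tag{7}$$

$$\mathrm{HM} = \frac{2 \cdot \mathrm{Sen} \cdot \mathrm{Spe}}{\mathrm{Sen} + \mathrm{Spe}}, \tag{8}$$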

where TP, TN, FP, and FN represent the numbers of true positives, true negatives, false positives, and false negatives, respectively. For calculating Sen, Spe, HM, and AUC, the COVID-19 category is taken as positive and the other categories as negative. We used a ResNet50 encoder and the stochastic gradient descent (SGD) optimizer. The hyperparameters of the proposed method [e.g., $\eta$ in Eq. (4) and $\tau$ in Eq. (5)] are listed in Table 1. All 21,165 CXR images in the training set were used for self-supervised pre-training without label information. All experiments were conducted using the PyTorch framework on an NVIDIA Tesla P100 GPU with 16 GB of memory. Self-supervised pre-training and supervised fine-tuning took about 47 and 35 min, respectively.
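A minimal sketch of Eqs. (6)–(8) computed from a four-class confusion matrix, treating COVID-19 as positive and pooling the other three categories as negative, as described above. The function name, the class index, and the example matrix are hypothetical (the matrix is illustrative, not a result from this study); AUC is omitted because it requires per-image scores rather than counts.

```python
from typing import Dict, Sequence


def covid_vs_rest_metrics(cm: Sequence[Sequence[int]], pos: int = 0) -> Dict[str, float]:
    """Sen, Spe, and HM with class `pos` as positive, plus multiclass accuracy.

    cm[i][j] counts test images whose true class is i and predicted class is j.
    All classes other than `pos` are pooled into a single negative class.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    tp = cm[pos][pos]
    fn = sum(cm[pos][j] for j in range(n) if j != pos)  # positives predicted as another class
    fp = sum(cm[i][pos] for i in range(n) if i != pos)  # negatives predicted as positive
    tn = total - tp - fn - fp  # negatives predicted as any negative class
    sen = tp / (tp + fn)
    spe = tn / (tn + fp)
    hm = 2 * sen * spe / (sen + spe)
    acc = sum(cm[i][i] for i in range(n)) / total  # four-class accuracy
    return {"Sen": sen, "Spe": spe, "HM": hm, "Acc": acc}


# Hypothetical 4-class confusion matrix; class 0 plays the role of COVID-19.
cm = [[90, 5, 5, 0],
      [2, 80, 15, 3],
      [3, 10, 85, 2],
      [0, 2, 3, 95]]
print(covid_vs_rest_metrics(cm))
```

For this example matrix, TP = 90, FN = 10, FP = 5, and TN = 295, giving Sen = 0.9, Spe ≈ 0.983, HM ≈ 0.940, and four-class Acc = 0.875. A non-COVID image assigned to the wrong non-COVID class still counts as a true negative here, so Acc and Spe measure different things.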

Fig. 4.

Grad-CAM++ visual explanations of the proposed method. Red indicates high attention and blue indicates low attention

Table 1.

Hyperparameters of the proposed method

| Hyperparameter | Value |
|---|---|
| SSL epochs | 40 |
| Fine-tuning epochs | 30 |
| Batch size | 256 |
| Learning rate (η) | 0.03 |
| Momentum | 0.9 |
| Weight decay | 0.0004 |
| Moving average (τ) | 0.996 |
| MLP hidden size | 4096 |
| Projection size | 256 |
| View size | 128 |

As comparative methods, we used several SSL methods, namely Cross [22], BYOL [12], SimSiam [5], SimCLR [6], PIRL-Jigsaw [27], and PIRL-Rotation [27], as well as transfer learning (using ImageNet [9] pre-trained weights) and training from scratch. Note that PIRL-Jigsaw and PIRL-Rotation are based on jigsaw and rotation pretext tasks, respectively. Additionally, we compared the proposed method with six pretrained DCNNs (ResNet18, ResNet50, ResNet101, InceptionV3 [37], DenseNet201, and CheXNet [35]). To verify the effectiveness of our method when only a small amount of labeled data is available, we randomly selected 1, 10, and 50% of the training set for fine-tuning, using the same selection ratio for each category.
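The per-category sampling described above can be sketched as follows. This is a minimal pure-Python illustration, assuming labels are given as a list and that each category's count is multiplied by the ratio and rounded; the paper does not state its rounding rule or random seed, and the function name is hypothetical. As a check on scale, 80% of 21,165 images is 16,932 training images, so 1 and 10% of the training set correspond to about 169 and 1693 images.

```python
import random
from collections import defaultdict
from typing import Dict, Hashable, List, Sequence


def stratified_subset(labels: Sequence[Hashable], ratio: float, seed: int = 0) -> List[int]:
    """Indices of a random subset that keeps the same sampling ratio in every category."""
    rng = random.Random(seed)  # fixed seed for reproducibility (an assumption)
    by_class: Dict[Hashable, List[int]] = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    chosen: List[int] = []
    for label in sorted(by_class, key=str):  # deterministic class order
        idxs = by_class[label]
        k = round(len(idxs) * ratio)  # rounding rule is an assumption
        chosen.extend(rng.sample(idxs, k))
    return sorted(chosen)


# Toy labels (hypothetical): 100 images of class "a" and 50 of class "b".
labels = ["a"] * 100 + ["b"] * 50
subset = stratified_subset(labels, 0.1)
print(len(subset))  # 15 (10 from "a" and 5 from "b")
```

Sampling within each category keeps the class proportions of the small fine-tuning sets equal to those of the full training set, which matters here because the four categories differ considerably in size.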

Experimental results

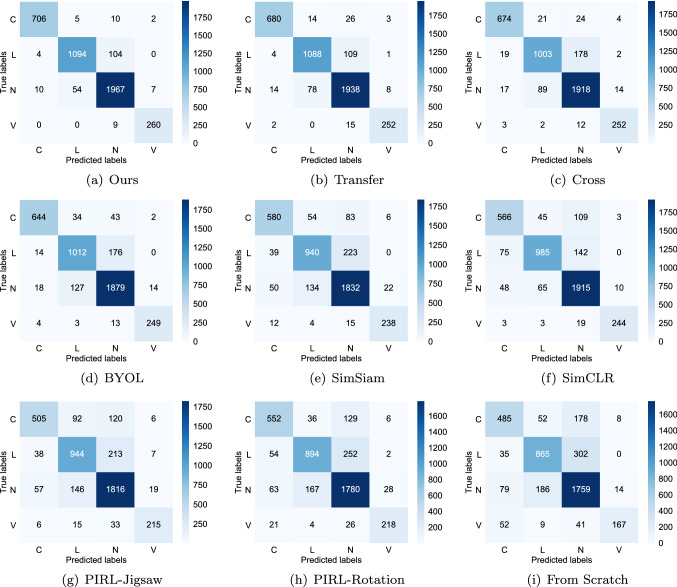

COVID-19 detection results of the different methods are shown in Table 2 and Fig. 2. The results are the average and variance over the last 10 fine-tuning epochs. As Table 2 shows, our method outperformed all other comparative methods and substantially improved COVID-19 detection performance compared with using SSL or transfer learning alone. Specifically, when using all training data, transfer learning achieved HM, AUC, and Acc scores of 0.968, 0.997, and 0.936, respectively, and the best SSL method, Cross, achieved 0.955, 0.995, and 0.908, respectively. In contrast, by combining transfer learning and SSL, our method achieved HM, AUC, and Acc scores of 0.985, 0.999, and 0.953, respectively. Figure 2 shows the confusion matrices of our method (a) and the comparative methods (b)–(i); our method shows clear advantages in recognizing all categories.

Table 2.

COVID-19 detection results compared with different methods

| Method | Sen | Spe | HM | AUC | Acc |
|---|---|---|---|---|---|
| Ours (Transfer + SSL) | **0.972 ± 0.003** | **0.997 ± 0.001** | **0.985 ± 0.001** | **0.999 ± 0.000** | **0.953 ± 0.001** |
| Transfer | 0.944 ± 0.004 | 0.994 ± 0.001 | 0.968 ± 0.002 | 0.997 ± 0.000 | 0.936 ± 0.001 |
| Cross [22] | 0.923 ± 0.005 | 0.991 ± 0.001 | 0.955 ± 0.002 | 0.995 ± 0.000 | 0.908 ± 0.001 |
| BYOL [12] | 0.895 ± 0.005 | 0.987 ± 0.001 | 0.939 ± 0.003 | 0.991 ± 0.000 | 0.894 ± 0.001 |
| SimSiam [5] | 0.794 ± 0.013 | 0.972 ± 0.002 | 0.874 ± 0.007 | 0.972 ± 0.000 | 0.849 ± 0.001 |
| SimCLR [6] | 0.778 ± 0.006 | 0.965 ± 0.002 | 0.862 ± 0.003 | 0.996 ± 0.000 | 0.876 ± 0.001 |
| PIRL-Jigsaw [27] | 0.685 ± 0.014 | 0.973 ± 0.003 | 0.804 ± 0.009 | 0.954 ± 0.000 | 0.821 ± 0.001 |
| PIRL-Rotation [27] | 0.760 ± 0.009 | 0.962 ± 0.002 | 0.849 ± 0.005 | 0.960 ± 0.001 | 0.817 ± 0.001 |
| From Scratch | 0.665 ± 0.013 | 0.954 ± 0.003 | 0.783 ± 0.008 | 0.935 ± 0.001 | 0.774 ± 0.002 |

Bold denotes the best performance among all methods.

Fig. 2.

Confusion matrices of our method (a) and the comparative methods (b)–(i)

Furthermore, Table 3 compares the COVID-19 detection results of our method with those of the models reported in [34]. The best of these models, InceptionV3, achieved a Sen of 0.935, Spe of 0.955, HM of 0.945, and Acc of 0.935. In contrast, by combining transfer learning and SSL, our method with ResNet50 achieved a Sen of 0.972, Spe of 0.997, HM of 0.985, and Acc of 0.953. These results suggest that the knowledge learned from natural images through transfer learning benefits SSL on CXR images and boosts representation learning for COVID-19 detection.

Table 3.

COVID-19 detection results of our method and different models reported in [34]

| Method | Sen | Spe | HM | Acc |
|---|---|---|---|---|
| Ours | **0.972** | **0.997** | **0.985** | **0.953** |
| ResNet18 | 0.934 | 0.955 | 0.944 | 0.934 |
| ResNet50 | 0.930 | 0.955 | 0.942 | 0.930 |
| ResNet101 | 0.930 | 0.951 | 0.940 | 0.930 |
| InceptionV3 | 0.935 | 0.955 | 0.945 | 0.935 |
| DenseNet201 | 0.927 | 0.954 | 0.940 | 0.927 |
| CheXNet | 0.932 | 0.955 | 0.943 | 0.932 |

Bold denotes the best performance among all methods.

COVID-19 detection results for different training data volumes are shown in Fig. 3; the results are averages over the last 10 fine-tuning epochs. As Fig. 3 shows, our method substantially improved COVID-19 detection compared with the other methods when only small amounts of data were used, such as 1 and 10% of the training set (169 and 1693 images), and it also achieved promising detection performance with 50% of the training set. Examples of CXR images and their Grad-CAM++ [4] visual explanations for the proposed method are shown in Fig. 4, where the highlighted regions are those the model relies on for its decisions. These visualizations can increase confidence in the proposed method by showing that its decisions are based on relevant regions of the CXR images [2].

Fig. 3.

COVID-19 detection results for different fine-tuning data volumes: (a) HM and (b) Acc

Discussion

Given the large number of patients screened during the COVID-19 pandemic, using deep learning for computer-aided detection has strong potential to improve clinical workflow efficiency and to reduce the incidence of infection among radiologists and healthcare providers [1, 11]. Here, we proposed a new learning scheme called self-supervised transfer learning for COVID-19 detection from CXR images. Our findings show that the proposed method improves COVID-19 detection on the largest open COVID-19 CXR dataset. In particular, when only a small amount of labeled training data was used for the final fine-tuning, our method substantially outperformed the other methods.

Because infection status, medical resources, and data-sharing policies for COVID-19 differ considerably among countries and cities, labeled training data are likely to be limited [31]. Our results with 1 and 10% of the training data suggest that the proposed method can still achieve high COVID-19 detection performance in such cases. Our work is motivated by clinical needs, and the proposed method could also be applied to the detection of diseases other than COVID-19. Although the experimental results are promising, the proposed method should be evaluated on other COVID-19 datasets and on datasets of other diseases to assess potential bias. Moreover, our previous studies on dataset distillation [21, 23] can improve the effectiveness and security of medical data sharing among medical facilities; they fit well with the proposed self-supervised transfer learning and are expected to support its clinical application.

Our method also has limitations. For example, it has three stages and hence is not end-to-end: the encoder must be saved after pre-training and its parameters updated further in later stages, which makes the procedure more complicated to operate. In our experiments, we aimed to explore the impact and robustness of the initial parameters across different fine-tuning stages and data volumes; hence, we report the average over the last 10 fine-tuning epochs. Also, since we evaluated model performance in several subset settings (i.e., 1, 10, and 50%), performing N-fold cross-validation in all settings would be expensive. Nevertheless, N-fold cross-validation is a more common evaluation protocol, and we will consider it in future work.

Conclusion

In this paper, we proposed a new learning scheme called self-supervised transfer learning for detecting COVID-19 from CXR images. We showed that the knowledge learned from natural images through transfer learning benefits SSL on CXR images and boosts representation learning performance for COVID-19 detection. In the self-supervised pre-training stage, our method learns discriminative representations from CXR images without manually annotated labels. Experimental results showed that our method achieved promising results on the COVID-19 CXR dataset. Furthermore, our method could help reduce the incidence of infection among radiologists and healthcare providers.

Acknowledgements

This study was supported in part by AMED Grant Number JP21zf0127004, the Hokkaido University-Hitachi Collaborative Education and Research Support Program, and the MEXT Doctoral program for Data-Related InnoVation Expert Hokkaido University (D-DRIVE-HU) program. This study was conducted on the Data Science Computing System of Education and Research Center for Mathematical and Data Science, Hokkaido University.

Declaration

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

No ethics approval is required.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Guang Li, Email: guang@lmd.ist.hokudai.ac.jp.

Ren Togo, Email: togo@lmd.ist.hokudai.ac.jp.

Takahiro Ogawa, Email: ogawa@lmd.ist.hokudai.ac.jp.

Miki Haseyama, Email: mhaseyama@lmd.ist.hokudai.ac.jp.

References

- 1. Bertolini M, Brambilla A, Dallasta S, Colombo G (2021) High-quality chest CT segmentation to assess the impact of COVID-19 disease. Int J Comput Assist Radiol Surg 16(10):1737–1747. doi: 10.1007/s11548-021-02466-2

- 2. Brunese L, Mercaldo F, Reginelli A, Santone A (2020) Explainable deep learning for pulmonary disease and coronavirus COVID-19 detection from X-rays. Comput Methods Programs Biomed 196:105608. doi: 10.1016/j.cmpb.2020.105608

- 3. Cau R, Faa G, Nardi V, Balestrieri A, Puig J, Suri JS, SanFilippo R, Saba L (2022) Long-COVID diagnosis: from diagnostic to advanced AI-driven models. Eur J Radiol 148:110164. doi: 10.1016/j.ejrad.2022.110164

- 4. Chattopadhay A, Sarkar A, Howlader P, Balasubramanian VN (2018) Grad-CAM++: generalized gradient-based visual explanations for deep convolutional networks. In: IEEE winter conference on applications of computer vision (WACV), pp 839–847

- 5. Chen X, He K (2021) Exploring simple siamese representation learning. In: Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR)

- 6. Chen T, Kornblith S, Norouzi M, Hinton G (2020) A simple framework for contrastive learning of visual representations. In: Proceedings of the international conference on machine learning (ICML)

- 7. Chen H, Lundberg SM, Erion G, Kim JH, Lee SI (2021) Forecasting adverse surgical events using self-supervised transfer learning for physiological signals. NPJ Digit Med 4(1):1–13. doi: 10.1038/s41746-021-00536-y

- 8. Chen Z, Wang S, Sun Y, Ma X (2021) Self-supervised transfer learning for hand mesh recovery from binocular images. In: Proceedings of the IEEE/CVF international conference on computer vision (ICCV), pp 11626–11634

- 9. Deng J, Dong W, Socher R, Li LJ, Li K, Fei-Fei L (2009) ImageNet: a large-scale hierarchical image database. In: Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR), pp 248–255

- 10. Gidaris S, Singh P, Komodakis N (2018) Unsupervised representation learning by predicting image rotations. In: Proceedings of the international conference on learning representations (ICLR)

- 11. Gifani P, Shalbaf A, Vafaeezadeh M (2021) Automated detection of COVID-19 using ensemble of transfer learning with deep convolutional neural network based on CT scans. Int J Comput Assist Radiol Surg 16(1):115–123. doi: 10.1007/s11548-020-02286-w

- 12. Grill JB, Strub F, Altché F, Tallec C, Richemond P, Buchatskaya E, Doersch C, Avila Pires B, Guo Z, Gheshlaghi Azar M, Piot B, Kavukcuoglu K, Munos R, Valko M (2020) Bootstrap your own latent—a new approach to self-supervised learning. In: Proceedings of the advances in neural information processing systems (NeurIPS)

- 13. He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (CVPR), pp 770–778

- 14. He K, Fan H, Wu Y, Xie S, Girshick R (2020) Momentum contrast for unsupervised visual representation learning. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (CVPR), pp 9729–9738

- 15. Huang G, Liu Z, Van Der Maaten L, Weinberger KQ (2017) Densely connected convolutional networks. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (CVPR), pp 4700–4708

- 16. Ismael AM, Şengür A (2021) Deep learning approaches for COVID-19 detection based on chest X-ray images. Expert Syst Appl 164:114054

- 17. Jing L, Tian Y (2020) Self-supervised visual feature learning with deep neural networks: a survey. IEEE Trans Pattern Anal Mach Intell

- 18. Khan AI, Shah JL, Bhat MM (2020) CoroNet: a deep neural network for detection and diagnosis of COVID-19 from chest X-ray images. Comput Methods Programs Biomed 196:105581. doi: 10.1016/j.cmpb.2020.105581

- 19. Khan SH, Sohail A, Khan A, Hassan M, Lee YS, Alam J, Basit A, Zubair S (2021) COVID-19 detection in chest X-ray images using deep boosted hybrid learning. Comput Biol Med 137:104816. doi: 10.1016/j.compbiomed.2021.104816

- 20. Leung MST, Lin SG, Chow J, Harky A (2020) COVID-19 and oncology: service transformation during pandemic. Cancer Med 9(19):7161–7171. doi: 10.1002/cam4.3384

- 21. Li G, Togo R, Ogawa T, Haseyama M (2020) Soft-label anonymous gastric X-ray image distillation. In: Proceedings of the IEEE international conference on image processing (ICIP), pp 305–309

- 22. Li G, Togo R, Ogawa T, Haseyama M (2021) Self-supervised learning for gastritis detection with gastric X-ray images. arXiv preprint arXiv:2104.02864

- 23. Li G, Togo R, Ogawa T, Haseyama M (2022) Compressed gastric image generation based on soft-label dataset distillation for medical data sharing. Comput Methods Programs Biomed 227:107189. doi: 10.1016/j.cmpb.2022.107189

- 24. Li G, Togo R, Ogawa T, Haseyama M (2022) Self-knowledge distillation based self-supervised learning for COVID-19 detection from chest X-ray images. In: Proceedings of the IEEE international conference on acoustics, speech and signal processing (ICASSP), pp 1371–1375

- 25. Li G, Togo R, Ogawa T, Haseyama M (2022) TriBYOL: triplet BYOL for self-supervised representation learning. In: Proceedings of the IEEE international conference on acoustics, speech and signal processing (ICASSP), pp 3458–3462

- 26. Minaee S, Kafieh R, Sonka M, Yazdani S, Soufi GJ (2020) Deep-COVID: predicting COVID-19 from chest X-ray images using deep transfer learning. Med Image Anal 65:101794. doi: 10.1016/j.media.2020.101794

- 27. Misra I, van der Maaten L (2020) Self-supervised learning of pretext-invariant representations. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (CVPR), pp 6707–6717

- 28. Noroozi M, Favaro P (2016) Unsupervised learning of visual representations by solving jigsaw puzzles. In: Proceedings of the European conference on computer vision (ECCV), pp 69–84

- 29. Ozturk T, Talo M, Yildirim EA, Baloglu UB, Yildirim O, Acharya UR (2020) Automated detection of COVID-19 cases using deep neural networks with X-ray images. Comput Biol Med 121:103792. doi: 10.1016/j.compbiomed.2020.103792

- 30. Pan SJ, Yang Q (2009) A survey on transfer learning. IEEE Trans Knowl Data Eng 22(10):1345–1359. doi: 10.1109/TKDE.2009.191

- 31. Peiffer-Smadja N, Maatoug R, Lescure FX, D’ortenzio E, Pineau J, King JR (2020) Machine learning for COVID-19 needs global collaboration and data-sharing. Nat Mach Intell 2(6):293–294. doi: 10.1038/s42256-020-0181-6

- 32. Qi X, Brown LG, Foran DJ, Nosher J, Hacihaliloglu I (2021) Chest X-ray image phase features for improved diagnosis of COVID-19 using convolutional neural network. Int J Comput Assist Radiol Surg 16(2):197–206. doi: 10.1007/s11548-020-02305-w

- 33. Quiroz-Juárez MA, Torres-Gómez A, Hoyo-Ulloa I, León-Montiel RdJ, U’Ren AB (2021) Identification of high-risk COVID-19 patients using machine learning. PLoS One 16(9):e0257234

- 34. Rahman T, Khandakar A, Qiblawey Y, Tahir A, Kiranyaz S, Kashem SBA, Islam MT, Al Maadeed S, Zughaier SM, Khan MS (2021) Exploring the effect of image enhancement techniques on COVID-19 detection using chest X-ray images. Comput Biol Med 132:104319. doi: 10.1016/j.compbiomed.2021.104319

- 35. Rajpurkar P, Irvin J, Zhu K, Yang B, Mehta H, Duan T, Ding D, Bagul A, Langlotz C, Shpanskaya K, Lungren MP, Ng AY (2017) CheXNet: radiologist-level pneumonia detection on chest X-rays with deep learning. arXiv preprint arXiv:1711.05225

- 36. Simonyan K, Zisserman A (2015) Very deep convolutional networks for large-scale image recognition. In: Proceedings of the international conference on learning representations (ICLR)

- 37. Szegedy C, Vanhoucke V, Ioffe S, Shlens J, Wojna Z (2016) Rethinking the inception architecture for computer vision. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (CVPR), pp 2818–2826

- 38. Tahamtan A, Ardebili A (2020) Real-time RT-PCR in COVID-19 detection: issues affecting the results. Expert Rev Mol Diagn 20(5):453–454. doi: 10.1080/14737159.2020.1757437

- 39. Whiteside T, Kane E, Aljohani B, Alsamman M, Pourmand A (2020) Redesigning emergency department operations amidst a viral pandemic. Am J Emerg Med 38(7):1448–1453. doi: 10.1016/j.ajem.2020.04.032

- 40. Zhou Z, Sodha V, Pang J, Gotway MB, Liang J (2021) Models genesis. Med Image Anal 67:101840. doi: 10.1016/j.media.2020.101840