Abstract

Introduction

With surgical opportunities becoming increasingly restricted for orthopaedic trainees, simulation training is a valuable alternative means of providing sufficient practice. This pilot study aimed to assess the potential effectiveness of low-fidelity simulation in teaching medical students basic arthroscopic skills, and the feasibility of incorporating it into formal student training programmes.

Methods

Twenty-two medical students completed pre- and post-training tests on the Probing (Task 1) and Maze (Task 2) exercises from the Sawbones ‘Fundamentals of Arthroscopy Surgery Training’ (FAST) programme. Training comprised 25min of practice in horizon control, deliberate linear motion and probing, split across two sessions held a few days apart. Completion time and error frequency were measured. Differences in performance were assessed using a paired two-tailed t-test. Qualitative data were collected using pre- and post-training questionnaires.

Results

Test completion time decreased significantly by a mean of 83s (±46s, 95% confidence interval [CI] 37 to 129) for Task 1 (p=0.001) and 105s (±55s, 95% CI 50 to 160) for Task 2 (p=0.0007). The frequency of direct visualisation errors decreased significantly by a mean of 1.0 errors (±1.0 errors, 95% CI 0.1 to 2.0) for Task 1 (p=0.04) and 0.8 errors (±0.8 errors, 95% CI 0.1 to 1.6) for Task 2 (p=0.04). After training, 82% of participants were in favour of incorporating FAST into formal training.

Conclusions

Low-fidelity simulators such as FAST can potentially teach basic arthroscopic skills to medical students and are feasible for incorporation into formal training. They also give students a cost-effective and safe basic surgical training experience.

Keywords: Arthroscopy, Simulation training, Orthopaedics, Orthopaedic procedures

Introduction

Training for orthopaedic surgeons was significantly disrupted by the recent global pandemic, during which operative opportunities were diminished owing to the cancellation of routine elective surgery.1 Combined with modern working hours restrictions,2,3 these issues highlight the need for implementing training modalities outside the operating room to ensure continued development of surgical competence. This is particularly important for arthroscopic procedures, because of the steep learning curve and high risk of iatrogenic injuries.4 Training via specific simulators allows junior trainees to learn basic surgical techniques in a safe and time-effective manner with no risk to patients and minimal ethical constraints.5

Arthroscopic simulators can be broadly categorised as high-fidelity or low-fidelity.6 Low-fidelity simulators include box trainers and dry anatomical models that teach basic arthroscopic skills such as knot tying, bimanual dexterity, triangulation and grasping.6 High-fidelity simulators recreate a more realistic environment using cadaveric models, live animal simulation or virtual reality simulation.6 In contrast to low-fidelity simulators, which are low cost and quick and easy to set up, the practical demands and high costs of high-fidelity simulators create a significant barrier to their incorporation into many surgical programmes. Furthermore, high-fidelity simulators are less versatile because they require a dedicated simulation laboratory, whereas low-fidelity simulators can be transported and set up in any convenient space. Low-cost self-made simulators,7–9 such as the cigar box arthroscopy trainer and the grapefruit training model, have also been developed and shown to have efficacy similar to that of their more expensive, commercially manufactured counterparts.7,10

The Fundamentals of Arthroscopy Surgery Training (FAST) workstation is a low-cost, low-fidelity pre-made simulator manufactured by Sawbones (USA). It was introduced in 2011 alongside the FAST programme, a structured online curriculum that deconstructs arthroscopy into basic motor elements to improve hand–eye coordination with the scope and instruments.11

The few studies evaluating FAST have focused either on showing that performance on the simulator correlates with arthroscopic experience12–14 or on validating the knot-tying module15,16 (one of the six modules of the FAST programme). To our knowledge, only one small study has explored the effectiveness of FAST at teaching basic arthroscopic skills such as triangulation.17 Further validation of FAST as a teaching tool was therefore warranted, especially as this training method offers substantial cost savings and carries low risk in its set-up and use.

In this pilot study, we used FAST to train medical students because, as novices with no prior surgical experience, they allow the effects of FAST to be evaluated more directly. We also hoped that this early-years ‘taster session’ might encourage more students towards a surgical career. Our key objectives were to:

- assess the potential effectiveness of a low-fidelity simulator at teaching basic arthroscopic skills;
- evaluate the feasibility of incorporating such a training model into formal training programmes for students with an interest in surgery.

Methods

Participants

Between November and December 2020, medical students rotating through our orthopaedic department were recruited on a rolling basis. No incentives were offered. Twenty-three students were invited via email, and twenty-two accepted (96% acceptance rate). Because elective theatre arthroscopy lists were significantly reduced during the COVID-19 pandemic, medical students were keen to gain another form of surgical experience, which likely explains the high acceptance rate. Consent was not considered necessary because students voluntarily accepted our invitation to participate in this study. To assess and standardise baseline characteristics, participants completed a pre-training questionnaire on their handedness and any previous formal simulation experience or training.

Simulation training and testing

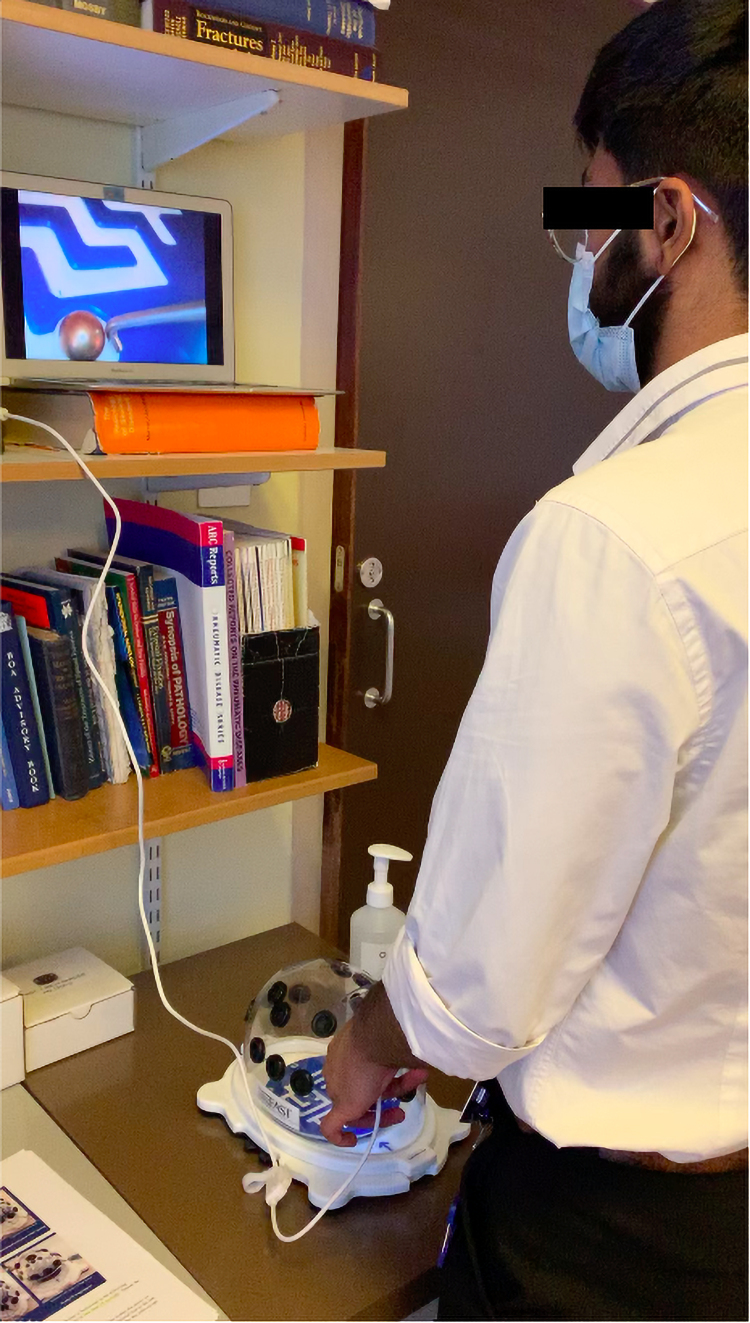

The study was performed on a standard office desk in the orthopaedic registrar’s office of a university teaching hospital. Training and assessment tests took place over two sessions. In the first session, participants were given a demonstration of arthroscopy and FAST and then completed a pre-training questionnaire. Before their initial FAST assessment test, students were shown the official instructional videos for the assigned tasks, developed by Greg Nicandri.18 Participants were assessed on exercises adapted from the FAST programme: Task 1 (Probing exercise from Module 2) and Task 2 (Maze exercise from Module 5). Task 1 was chosen because it tests triangulation, camera centring and probing skills; Task 2 tests all of these skills as well as tracking and object manipulation. Assessment tests and training exercises were performed using the transparent dome, allowing the assessor to monitor errors made by the participant.

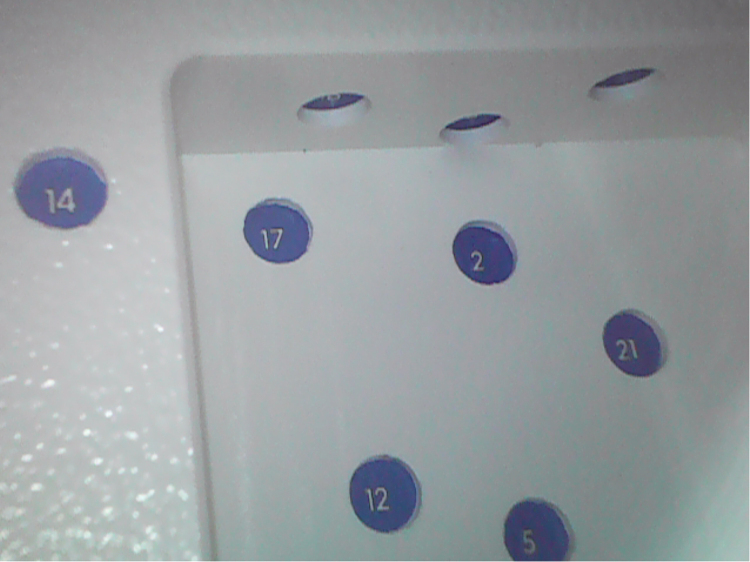

Before the pre-training assessment test, participants were given 2min to familiarise themselves with the FAST workstation and the Supereyes (China) 7mm USB 0° endoscope. Workstation set-up is illustrated in Figure 1. During Task 1, participants were asked to probe ten numbers in a specific sequence, as instructed by the assessor (Figure 2). The number sequence was generated using a random number generator. In Task 2, participants were asked to probe and guide a metal ball from one end of a maze to the other (Figure 3).
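The sequence-generation step can be sketched as follows. This is a minimal illustration, not the study’s procedure: the `probing_sequence` helper, the pool of targets (a hypothetical platform numbered 1 to 20) and the rule that no number repeats within a sequence are all assumptions. Drawing only from numbers that are visible with the scope in use would avoid replacing targets after the sequence has been generated.

```python
from __future__ import annotations

import random


def probing_sequence(pool: list[int], length: int = 10, seed: int | None = None) -> list[int]:
    """Return `length` distinct numbers drawn from `pool` in random order.

    Hypothetical helper. Assumes targets are not repeated within a sequence and
    that `pool` lists only the numbers the participant can see and reach.
    """
    if length > len(pool):
        raise ValueError("pool is smaller than the requested sequence length")
    rng = random.Random(seed)  # a fixed seed makes a sequence reproducible
    return rng.sample(pool, length)  # sampling without replacement


# Hypothetical platform with targets numbered 1 to 20.
targets = list(range(1, 21))
pre_test = probing_sequence(targets, seed=1)
post_test = probing_sequence(targets, seed=2)  # a freshly drawn sequence for the retest
print("Pre-training sequence:", pre_test)
print("Post-training sequence:", post_test)
```

Recording the seed alongside each test would let an assessor regenerate exactly the sequence a participant was given.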

Figure 1. Medical student performing Task 2 (Maze exercise)

Figure 2. View of the probing platform in Task 1 visualised using the Supereyes 7mm USB 0° endoscope

Figure 3. View of the maze in Task 2 visualised using the Supereyes 7mm USB 0° endoscope

Following the pre-training test, participants were shown instructional videos on three exercises (‘Horizon control’, ‘Deliberate linear motion’ and ‘Gross probing’) from FAST Module 1. These exercises focus on basic visualisation and triangulation skills, which are prerequisites for Tasks 1 and 2 in this study. Participants were then given approximately 15min in total to practise each exercise once.

In the follow-up session, held on a later day, participants were given 10min in total to practise each of the same three exercises from FAST Module 1 again. They then completed a post-training assessment test with the same design as the pre-training test, but with a newly generated number sequence in Task 1. A post-training questionnaire was completed at the end of the session.

Outcome measures

Two primary outcome measures were used to assess arthroscopic performance. Time to complete the test was measured by an assessor using a stopwatch. The number of errors was recorded to ensure that accuracy was not being sacrificed for speed. In Task 1, the number of times the participant looked down directly at the transparent arthroscopy box (direct visualisation error) was recorded. In Task 2, the number of times the ball escaped the maze (ball escape error) was recorded in addition to direct visualisation errors. The only exception was when the participant looked down to switch the scope and instrument portals in order to access the posterior sections of the maze; this was not counted as a direct visualisation error.

Our secondary outcome measure was participants’ subjective assessment of the effectiveness of the FAST training programme, collected using pre- and post-training questionnaires. Participants were asked:

- whether they thought simulation training would be a good use of their time;
- to score the FAST simulation programme on how useful they thought it was at improving their motor skills, on a scale of 1 to 10 (1 being ‘not useful’ and 10 being ‘very useful’);
- whether they wanted this programme incorporated formally into their orthopaedic rotations.

The feasibility of the training programme was assessed by adherence to the programme and by the candidates’ preferred number of days between tests.

Data analysis

Statistical analyses were conducted using Microsoft Excel v. 15.6. Statistical testing was deemed appropriate for this pilot study because the sample size exceeded 20. A paired two-tailed t-test was used to test for differences between pre-training and post-training performance outcomes, with p<0.05 considered significant. Means are presented with standard deviations (sd) and 95% confidence intervals (CI) where appropriate. Scatter plots and correlation coefficients (R) were used to assess the association between the change in number of direct visualisation errors and the change in completion time between the pre- and post-training tests. Programme adherence and participants’ responses on the usefulness of FAST and its potential for incorporation into formal training are presented as percentages.
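The paired two-tailed t-test and its 95% CI can be illustrated with a short, standard-library-only Python sketch. It is not the Excel workflow used in the study: the helper names are hypothetical, the t distribution is integrated numerically with Simpson’s rule rather than taken from a statistics package, and the five pairs of times are made-up values, not study data. The test is applied to each participant’s improvement d = pre − post, and the CI is mean(d) ± t* · sd(d)/√n with n − 1 degrees of freedom.

```python
from __future__ import annotations

import math
import statistics


def t_pdf(x: float, df: int) -> float:
    """Density of Student's t distribution (math.gamma overflows above df of about 340)."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)


def two_tailed_p(t: float, df: int, steps: int = 2000) -> float:
    """Two-tailed p-value, 1 - 2 * P(0 <= T <= |t|), via Simpson's rule."""
    b = abs(t)
    if b == 0:
        return 1.0
    h = b / steps  # `steps` must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(b, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return max(0.0, 1.0 - 2.0 * total * h / 3)


def t_critical(df: int, alpha: float = 0.05) -> float:
    """Two-tailed critical value t* with two_tailed_p(t*, df) == alpha, by bisection."""
    lo, hi = 0.0, 50.0
    for _ in range(60):
        mid = (lo + hi) / 2
        if two_tailed_p(mid, df) > alpha:
            lo = mid  # p still above alpha: t* lies further out
        else:
            hi = mid
    return (lo + hi) / 2


def paired_t_test(pre: list[float], post: list[float], alpha: float = 0.05) -> dict:
    """Paired two-tailed t-test on the improvement d_i = pre_i - post_i."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equal-length samples with at least two pairs")
    diffs = [a - b for a, b in zip(pre, post)]
    n = len(diffs)
    mean_d = statistics.mean(diffs)
    sd_d = statistics.stdev(diffs)  # sample sd, n - 1 denominator
    se = sd_d / math.sqrt(n)
    if se == 0:
        raise ValueError("all differences are identical; t is undefined")
    t = mean_d / se
    half_width = t_critical(n - 1, alpha) * se
    return {
        "mean_diff": mean_d,
        "sd_diff": sd_d,
        "ci": (mean_d - half_width, mean_d + half_width),
        "t": t,
        "p": two_tailed_p(t, n - 1),
    }


# Made-up completion times (s) for five participants, NOT the study data.
pre = [200, 150, 320, 180, 240]
post = [110, 100, 150, 95, 130]
print(paired_t_test(pre, post))
```

For these made-up pairs the mean improvement is 101s with t ≈ 5.11 on 4 degrees of freedom. Where SciPy is available, `scipy.stats.ttest_rel(pre, post)` performs the same paired test; the numerical integration here only keeps the sketch dependency-free.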

Results

Participants

Twenty-two medical students were included in this study. Table 1 shows the baseline profile of participants. Most participants were in their fourth year (82%), right-handed (91%) and had no previous formal arthroscopy training or experience. All participants were interested in surgery as a potential future career. The mean interval between the pre- and post-training assessment tests was 3.4 days (sd 1.7 days).

Table 1. Baseline profile of participants (n=22)

| Characteristic | n | % |
|---|---|---|
| Year group | | |
| MBBS 3 | 1 | 4 |
| MBBS 4 | 18 | 82 |
| MBBS 5 | 3 | 14 |
| Handedness | | |
| Right | 20 | 91 |
| Left | 2 | 9 |

Primary outcomes (quantitative data)

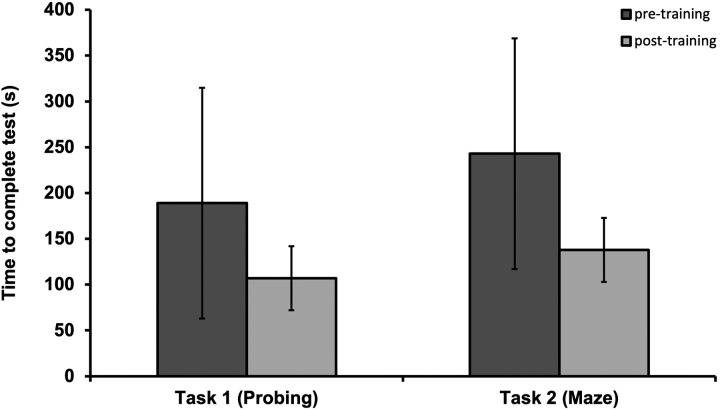

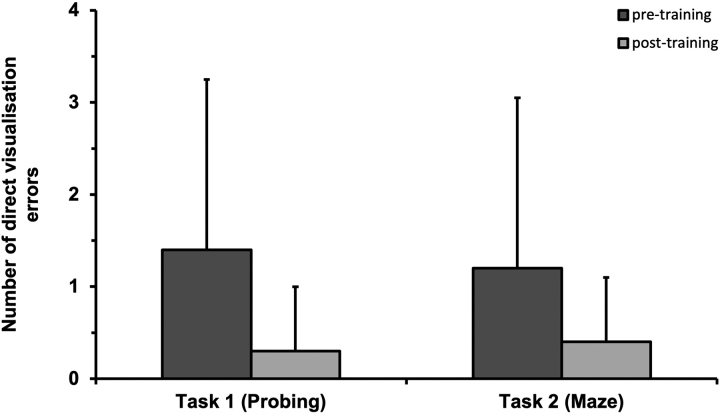

Primary outcome measures are summarised in Table 2. Mean time to complete Task 1 (Probing) was 189s (sd 126s) in the pre-training test and 107s (sd 33s) in the post-training test. The difference in completion times was significant (p=0.001), with participants improving their times by a mean of 83s (±46s, 95% CI 37 to 129) after training. The mean number of direct visualisation errors made in Task 1 was 1.4 (sd 2.1 errors) pre-training and 0.3 (sd 0.6 errors) post-training. The difference in error frequency was also significant (p=0.04), with participants reducing their direct visualisation errors by a mean of 1.0 errors (±1.0 errors, 95% CI 0.1 to 2.0) after training.
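As a consistency check on the Task 1 completion-time figures, the paired-test quantities can be back-calculated. This assumes that the ‘±’ value reported with each mean improvement is half the width of the 95% CI, which matches the reported limits (129 − 83 = 83 − 37 = 46), and uses n = 22 pairs with 21 degrees of freedom. Here s_d is the standard deviation of the paired differences, not the pre- or post-training sd shown in Table 2; this is an illustrative reconstruction, not a calculation reported by the study.

```latex
\begin{aligned}
\text{CI}_{95\%} &= \bar{d} \pm t_{0.975,\,n-1}\,\frac{s_d}{\sqrt{n}}, \qquad n = 22,\; t_{0.975,\,21} \approx 2.080\\[4pt]
\mathrm{SE}(\bar{d}) &= \frac{s_d}{\sqrt{n}} = \frac{46}{2.080} \approx 22.1\ \text{s}\\[4pt]
s_d &= \mathrm{SE}(\bar{d})\,\sqrt{22} \approx 22.1 \times 4.69 \approx 104\ \text{s}\\[4pt]
t &= \frac{\bar{d}}{\mathrm{SE}(\bar{d})} \approx \frac{83}{22.1} \approx 3.75
\end{aligned}
```

With 21 degrees of freedom, t ≈ 3.75 lies just below the two-tailed critical value for p = 0.001 (about 3.82), so p ≈ 0.001 after rounding, in line with the reported value.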

Table 2. Primary outcome measures (means) for Task 1 and Task 2 assessment tests

| Outcome | Task 1 (Probing) | Task 2 (Maze) |
|---|---|---|
| Time to complete (s) | | |
| Pre-training (sd) | 189 (126) | 243 (130) |
| Post-training (sd) | 107 (33) | 138 (49) |
| Improvement between tests (95% CI) | 83 (37 to 129) | 105 (50 to 160) |
| p-value for difference between tests | 0.001 | 0.0007 |
| Direct visualisation errors (n) | | |
| Pre-training (sd) | 1.4 (2.1) | 1.2 (1.7) |
| Post-training (sd) | 0.3 (0.6) | 0.4 (0.7) |
| Improvement between tests (95% CI) | 1.0 (0.1 to 2.0) | 0.8 (0.1 to 1.6) |
| p-value for difference between tests | 0.04 | 0.04 |
| Ball escape errors (n)ᵃ | | |
| Pre-training (sd) | – | 0.4 (0.7) |
| Post-training (sd) | – | 0.4 (0.6) |
| Improvement between tests (95% CI) | – | 0.0 (−0.4 to 0.4) |
| p-value for difference between tests | – | 1.00* |

*Non-significant difference (p>0.05)

ᵃBall escape errors were applicable only to the Task 2 maze exercise.

In Task 2 (Maze), mean time to completion was 243s (sd 130s) pre-training and 138s (sd 49s) post-training. The difference in completion time was significant (p=0.0007), with participants improving their times by a mean of 105s (±55s, 95% CI 50 to 160) after training. The mean number of direct visualisation errors was 1.2 (sd 1.7 errors) pre-training and 0.4 (sd 0.7 errors) post-training. The difference in error frequency was significant (p=0.04), with a mean reduction of 0.8 direct visualisation errors (±0.8 errors, 95% CI 0.1 to 1.6) after training. The mean number of ‘ball escape’ errors was 0.4 (sd 0.7 errors) pre-training and 0.4 (sd 0.6 errors) post-training, with no significant difference between tests (p=1.00).

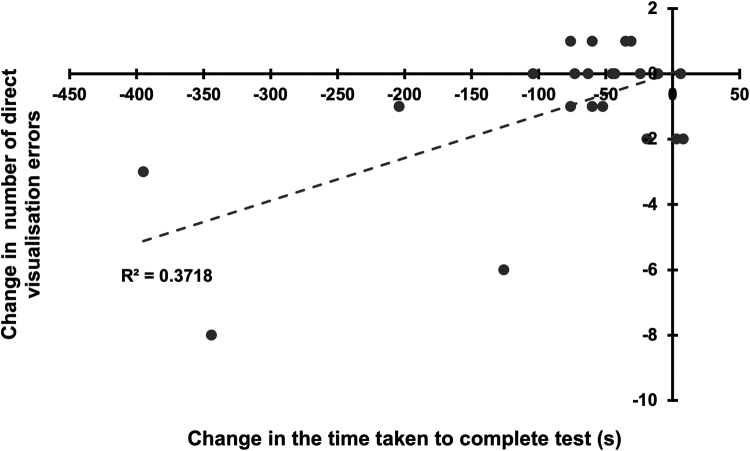

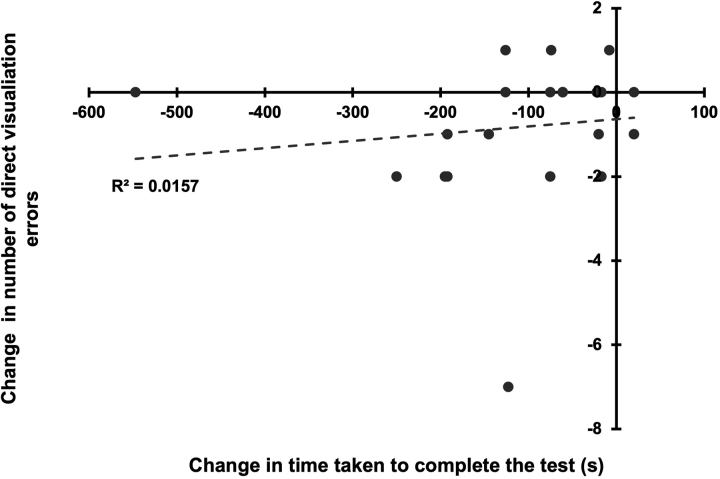

Improvements in the statistically significant primary outcome measures (‘time to completion’ and ‘direct visualisation errors’) are illustrated in Figures 4 and 5. Scatter plots of the change in number of direct visualisation errors against the change in task completion time (Figures 6 and 7) gave correlation coefficients of R=0.6 (R²=0.4) for Task 1 and R=0.1 (R²=0.02) for Task 2. Ball escape errors were not analysed in this way because no difference between tests was observed.
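The relation between the reported R and R² values can be shown with a small sketch. It is illustrative only: `pearson_r` is a hypothetical helper and the five data points are made up, not study data. For a simple linear association, R² is the square of the Pearson correlation coefficient R, so R=0.6 gives R² ≈ 0.36 (reported as 0.4) and R²=0.02 corresponds to R ≈ 0.14 (reported as 0.1).

```python
from __future__ import annotations

import math


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length samples."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples with at least two points")
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))  # co-deviation
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when one variable is constant")
    return sxy / math.sqrt(sxx * syy)


# Made-up per-participant changes, NOT the study data:
# reduction in direct visualisation errors vs reduction in completion time (s).
error_change = [0, 1, 2, 3, 4]
time_change = [10, 30, 20, 50, 40]
r = pearson_r(error_change, time_change)
print(f"R = {r:.2f}, R^2 = {r * r:.2f}")  # R = 0.80, R^2 = 0.64
```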

Figure 4. Time to completion in pre- and post-training tests, with error bars indicating standard deviation

Figure 5. Number of direct visualisation errors made in pre- and post-training tests, with error bars indicating standard deviation

Figure 6. Scatter plot showing correlation between number of direct visualisation errors and reduction in test completion time for Task 1

Figure 7. Scatter plot showing correlation between number of direct visualisation errors and reduction in test completion time for Task 2

Secondary outcomes (qualitative data)

Pre-training and post-training questionnaire responses are summarised in Table 3. Before training, 96% of participants answered ‘Yes’ when asked whether FAST would be a good use of their time; after training, this increased to 100%.

Table 3. Participants’ views on the effectiveness of the FAST programme: pre- and post-training

| Question / response | Pre-training n | Pre-training % | Post-training n | Post-training % |
|---|---|---|---|---|
| Good use of time? | | | | |
| Yes | 21 | 96 | 22 | 100 |
| No | 1 | 4 | 0 | 0 |
| Incorporate simulation training into orthopaedic rotation? | | | | |
| Yes | 12 | 55 | 18 | 82 |
| Maybe | 9 | 41 | 4 | 18 |
| No | 1 | 4 | 0 | 0 |
| Usefulness of FAST at improving motor skills (1–10) | | | | |
| 1–4 | 0 | 0 | 0 | 0 |
| 5 | 3 | 14 | 0 | 0 |
| 6 | 0 | 0 | 0 | 0 |
| 7 | 3 | 14 | 1 | 5 |
| 8 | 10 | 45 | 5 | 23 |
| 9 | 2 | 9 | 9 | 41 |
| 10 | 4 | 18 | 7 | 32 |

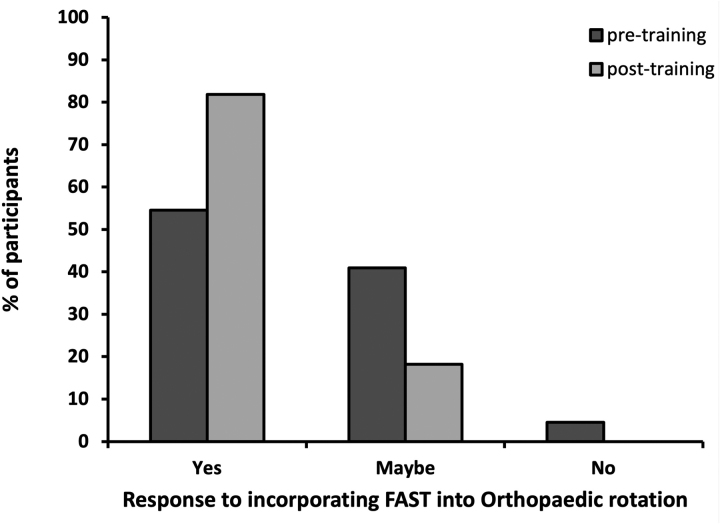

The response to formal incorporation of simulation training into orthopaedic rotations was ‘Yes’ in 55% of participants prior to training, and 82% following training (Figure 8).

Figure 8. Participant responses to incorporating FAST into medical students’ orthopaedic rotations

When asked before training about the usefulness of FAST at improving motor skills, 45% of participants gave FAST a score of 8 (1 being ‘not at all useful’ and 10 being ‘very useful’). The lowest score, 5, was given by 14% of participants, and the highest, 10, by 18%. After training, participants were more confident about the usefulness of FAST, with 73% giving a score of 9 or above.

Discussion

This pilot study was designed to examine the potential effectiveness of low-fidelity simulation at teaching basic arthroscopic skills to novice individuals, and the feasibility of incorporating such a training model into structured training for junior trainees. This trial was not designed to formally validate the full efficacy of the FAST workstation and programme, so the results must be interpreted in context.

Our results suggest that low-fidelity simulation can potentially teach basic arthroscopic skills to students with an interest in surgery. Completion times differed significantly between the pre-training and post-training tests for both Task 1 (p=0.001) and Task 2 (p=0.0007), decreasing by a mean of 83s for Task 1 and 105s for Task 2. The number of direct visualisation errors also decreased significantly for both tasks (p=0.04), by a mean of 1.0 errors for Task 1 and 0.8 errors for Task 2. The standard deviations of completion times and of direct visualisation errors also decreased after training, suggesting that training may reduce the variation in arthroscopic skills within a cohort. We further note a moderate to strong association between reduction in completion time and change in direct visualisation errors in Task 1 (R=0.6), but little or no association between these measures in Task 2 (R=0.1). Taken together, these findings suggest that low-fidelity simulators improve overall arthroscopic performance when both parameters are assessed together. Meeks et al made similar observations when evaluating the performance of 15 medical students on FAST, finding significant reductions in average completion times for Task 1 and Task 2 over their 6-week study period.17

Meeks et al also found a significant decrease in the number of ‘track deviation errors’ in Task 2.17 In our study, however, we observed that neither the ‘ball escape’ outcome measure (similar to ‘track deviation errors’) nor ‘direct visualisation errors’ fully captured other key aspects of arthroscopic surgery, such as control over the field of view, camera dexterity, instrument dexterity and bimanual dexterity, as outlined in the Arthroscopic Surgical Skill Evaluation Tool (ASSET) criteria.19 For future studies, an ASSET score sheet adapted specifically for low-fidelity simulation could be developed to replace current methods of measuring error.

The encouraging potential for incorporating FAST into formal training is illustrated by the large majority of participants (82%) who supported incorporation of this type of workstation after training. Baxter et al and Goyal et al reported similar enthusiasm for low-fidelity simulation, with 80% and 82% of participants, respectively, expressing a desire to train on FAST to develop their skills.12,13 We also note that although almost all participants (96%) thought before training that FAST would be a good use of their time, only 55% were then in favour of incorporating it into their orthopaedic rotation. This gap highlights that low-fidelity simulation is only cost-effective when it is actually used in practice. The feasibility of the programme was supported by an adherence rate of 100% and a mean interval of only 3.4 days (sd 1.7 days) between the two tests. Our set-up on a standard office desk in an orthopaedic registrar’s office also demonstrates that FAST can be set up easily, quickly and effectively with minimal resources, without the formal simulation laboratory required in other studies.16

The strengths of this study include its prospective design and relatively large sample size. The programme was designed so that participants could complete it with minimal time commitment, which may have contributed to the high adherence rate. To recreate a standard arthroscopic set-up accurately, the monitor was placed at eye level with the workstation below, thereby encouraging hand–eye coordination without direct visualisation. Qualitative outcomes were assessed both before and after training to reduce reliance on retrospective recall, and recruiting medical students reduced bias because they were a novice population with no previous surgical experience.

The success of other aspects of FAST has also been explored in other studies. Pedowitz et al validated the FAST knot-tying module, and Schneider et al applied the FAST knot tester as an objective feedback tool for improving knot-tying proficiency.15,16 Baxter et al and Goyal et al also concluded that performance on FAST correlates with arthroscopic experience,12,13 and a study of orthopaedic trainees by Tofte et al showed a significant correlation between FAST performance and training grade.14

Limitations

The main limitation of this study is the lack of a control group. Because practice improves motor skills, it is possible that the pre-training test alone provided enough practice to improve performance in the post-training test. To minimise the influence of memory and strategies learned during the first test, the two sessions were held at least one day apart, so that participants were less likely to remember test patterns and the positions of specific numbers in Task 1. In addition, participants were not allowed to practise the assessment (test) tasks during training sessions.

As noted by a number of participants, the 0° endoscope did not fully recreate the arthroscopic experience: it could not accurately simulate the angle of inclination and field of view of the more commonly used 30° arthroscope. As a result, some numbers could not be visualised in the probing exercise (Task 1). Replacing these numbers in the randomly generated sequence may have introduced bias into our results, because certain numbers are easier to probe than others. One possible solution is to assemble a home-made, low-cost 30° arthroscope as designed by Ling et al.20

Despite numerous studies validating various low- and high-fidelity arthroscopic simulators, there is insufficient evidence that the skills acquired on them transfer to the operating theatre.5,21 This uncertainty, combined with the high cost of many simulators, may explain the low prevalence of simulation-based training in formal surgical programmes. A recent national survey found that only 64% of UK Trauma and Orthopaedics training programmes had access to arthroscopy simulation.22 Easily accessible and cost-effective simulators such as FAST could broaden the scope of arthroscopic training. To facilitate the implementation of next-generation surgical training, further research is required to establish which skill subsets learned on such simulators transfer to in vivo surgery.21

Conclusions

This pilot study demonstrates the potential effectiveness of a low-fidelity arthroscopic simulator (FAST) at teaching basic arthroscopic skills to medical students. It also demonstrates the potential for incorporating FAST into formal training and the feasibility of delivering it to students with an interest in surgery. The results of this pilot study are very encouraging and suggest that this intervention merits further evaluation. In a longitudinal study, we plan to use this low-fidelity simulator to train ST3 registrars with minimal knee arthroscopy exposure before they perform supervised live surgery.

References

- 1. Gonzi G, Gwyn R, Rooney K et al. The role of orthopaedic trainees during the COVID-19 pandemic and impact on post-graduate orthopaedic education. Bone Joint Open 2020; 1: 676–682.

- 2. Hartle A, Gibb S, Goddard A. Can doctors be trained in a 48 hour working week? BMJ 2014; 349: g7323.

- 3. Inaparthy P, Sayana M, Maffulli N. Evolving trauma and orthopedics training in the UK. J Surg Educ 2013; 70: 104–108.

- 4. Price A, Erturan G, Akhtar K et al. Evidence-based surgical training in orthopaedics. Bone Joint J 2015; 97-B: 1309–1315.

- 5. Luzzi A, Hellwinkel J, O’Connor M et al. The efficacy of arthroscopic simulation training on clinical ability: a systematic review. Arthrosc – J Arthrosc Relat Surg 2020; 37: 1000–1007.

- 6. Cannon W, Nicandri G, Reinig K et al. Evaluation of skill level between trainees and community orthopaedic surgeons using a virtual reality arthroscopic knee simulator. J Bone Joint Surg 2014; 96: e57.

- 7. Sandberg R, Sherman N, Latt L, Hardy J. Cigar box arthroscopy: a randomized controlled trial validates nonanatomic simulation training of novice arthroscopy skills. Arthrosc – J Arthrosc Relat Surg 2017; 33: 2015–2023.

- 8. Arealis G, Holton J, Rodrigues J et al. How to build your simple and cost-effective arthroscopic skills simulator. Arthrosc Tech 2016; 5: e1039–e1047.

- 9. Molho D, Sylvia S, Schwartz D et al. The grapefruit: an alternative arthroscopic tool skill platform. Arthrosc – J Arthrosc Relat Surg 2017; 33: 1567–1572.

- 10. Frank R, Rego G, Grimaldi F et al. Does arthroscopic simulation training improve triangulation and probing skills? A randomized controlled trial. J Surg Educ 2019; 76: 1131–1138.

- 11. AANA | Arthroscopy Association of North America. Aana.org. 2021. https://www.aana.org/AANAIMIS/Members/Education/FAST_Program/Members/Education/FAST-Program.aspx?hkey=dfedc6ad-2662-46e1-8897-615b3b91c170 (cited December 2022).

- 12. Baxter J, Bhamber N, Patel R, Tennent D. The FAST workstation shows construct validity and participant endorsement. Arthrosc Sports Med Rehabil 2021; 3: e1133–e1140.

- 13. Goyal S, Radi M, Ramadan I, Said H. Arthroscopic skills assessment and use of box model for training in arthroscopic surgery using sawbones – ‘FAST’ workstation. SICOT-J 2016; 2: 37–45.

- 14. Tofte J, Westerlind B, Martin K et al. Knee, shoulder, and fundamentals of arthroscopic surgery training: validation of a virtual arthroscopy simulator. Arthrosc – J Arthrosc Relat Surg 2017; 33: 641–646.e3.

- 15. Pedowitz R, Nicandri G, Angelo R et al. Objective assessment of knot-tying proficiency with the fundamentals of arthroscopic surgery training program workstation and knot tester. Arthrosc – J Arthrosc Relat Surg 2015; 31: 1872–1879.

- 16. Schneider A, Davis W, Walsh D et al. Use of the fundamentals of arthroscopic surgical training workstation for immediate objective feedback during training improves hand-tied surgical knot proficiency. Simul Healthc: J Soc Simul Healthc 2021; 16: 311–317.

- 17. Meeks B, Kiskaddon E, Sirois Z et al. Improvement and retention of arthroscopic skills in novice subjects using fundamentals of arthroscopic surgery training (FAST) module. J Am Acad Orthop Surg 2020; 28: 511–516.

- 18. Nicandri G. Dr Nicandri's YouTube channel. Youtube.com. https://www.youtube.com/channel/UCUKhJl4XJHIJmvvJPGNZ3ag/videos (cited December 2022).

- 19. Koehler R, Amsdell S, Arendt E et al. The arthroscopic surgical skill evaluation tool (ASSET). Am J Sports Med 2013; 41: 1229–1237.

- 20. Ling J, Teo S, Mohamed Al-Fayyadh M et al. Low-Cost self-made arthroscopic training camera Is equally as effective as commercial camera: a comparison study. Arthrosc – J Arthrosc Relat Surg 2019; 35: 596–604.

- 21. Frank R, Wang K, Davey A et al. Utility of modern arthroscopic simulator training models: a meta-analysis and updated systematic review. Arthrosc – J Arthrosc Relat Surg 2018; 34: 1650–1677.

- 22. James H, Gregory R, Tennent D et al. Current provision of simulation in the UK and republic of Ireland trauma and orthopaedic specialist training: a national survey. Bone Joint Open 2020; 1: 103–114.