Abstract

Wearable devices are being developed faster and applied more widely. Wearables have been used to monitor movement-related physiological indices, including heartbeat, movement, and other exercise metrics, for health purposes. People are also paying more attention to mental health issues, such as stress management. Wearable devices can be used to monitor emotional status and provide preliminary diagnoses and guided training functions. The nervous system responds to stress, which directly affects eye movements and sweat secretion. Therefore, the changes in brain potential, eye potential, and cortisol content in sweat could be used to interpret emotional changes, fatigue levels, and physiological and psychological stress. To better assess users, stress-sensing devices can be integrated with applications to improve cognitive function, attention, sports performance, learning ability, and stress release. These application-related wearables can be used in medical diagnosis and treatment, such as for attention-deficit hyperactivity disorder (ADHD), traumatic stress syndrome, and insomnia, thus facilitating precision medicine. However, many factors contribute to data errors and incorrect assessments, including the various wearable devices, sensor types, data reception methods, data processing accuracy and algorithms, application reliability and validity, and actual user actions. Therefore, in the future, medical platforms for wearable devices and applications should be developed, and product implementations should be evaluated clinically to confirm product accuracy and perform reliable research.

Keywords: wearables, biosensor, electrooculography (EOG) sensor, electroencephalography (EEG) sensor, sweat sensor, emotion evaluation, stress sensor

1. Introduction

1.1. The History of Wearable Biosensors

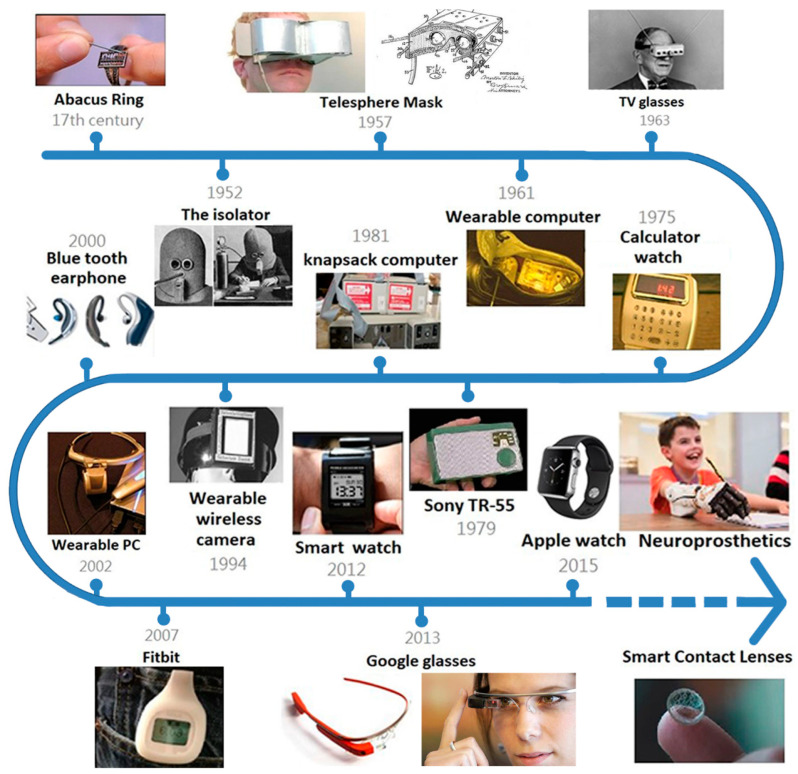

Wearable devices were developed earlier than is commonly believed. The timeline of milestones in the development of wearable devices is provided in Figure 1. The “wisdom ring” was invented in China as early as the 17th century. This abacus ring, with seven rows of seven beads, is only 1.2 cm long and 0.7 cm wide. The beads are so small that they had to be moved with a hairpin, a typical hair decoration for women [1]. In the Western world, in July 1925, Hugo Gernsback invented the isolation helmet, which encloses the entire head and completely isolates the user from external noise to prevent distraction [2].

Figure 1.

Evolution of wearable devices. The wisdom ring was developed in the 17th century [1]. In July 1925, Hugo Gernsback invented the isolation helmet [2]. In 1957, Morton Heilig created the Telesphere Mask [3]. In June 1961, Edward Thorp and Claude Shannon invented the first portable computer [4]. In 1963, Hugo Gernsback designed a pair of TV glasses [5]. In 1975, the first computer watch was released. In the 1980s, the Walkman and knapsack computer were launched [1]. In 1994, Steve Mann developed head-mounted smart glasses [6]. In the 2000s, the iPod and Bluetooth were introduced. In 2002 and 2007, wearable PCs and the Fitbit were released, respectively. Since 2010, health watches, Google Glasses, Apple Watches, and Oculus Rift headsets have been developed [1]. Since 2020, neuroprosthetics, smart contact lenses and other wearables have been investigated.

Currently, wearable devices usually refer to wearable electronic products, such as smart watches, smart bracelets, smart contact lenses, and smart tattoos. In 1955, Sony introduced its first transistor radio, the TR-55, and other electronic wearables were developed soon after. In 1957, Morton Heilig created the Telesphere Mask, a head-mounted display that shows 3D movies and provides stereo surround sound [3]. In June 1961, the first portable computer was invented by Edward Thorp and Claude Shannon, who were mathematics professors at MIT. This computer was only as large as a matchbox. Thorp and Shannon brought the device to a Las Vegas casino and tested it on a roulette wheel, where 44% of their predictions of the ball’s position on the wheel were correct [4]. In 1963, Hugo Gernsback invented a pair of glasses that combined two antennas, and TV channels could be selected through buttons on the front of the glasses [5]. In the 1970s, the functions of wearable devices became increasingly complex.

The first computer watch was released in 1975. The first head-mounted camera was also invented in the 1970s and was specifically designed for blind people. The camera was linked to a vest, and vibrations were generated on the vest to provide information about the surroundings. In the 1980s, the Walkman became a popular wearable music player. Wearable devices were used not only for amusement but also in health care. In 1987, the first digital hearing aid was released. Fitness trackers were also invented in this era. Sensors such as heart monitors, direction sensors and vibration detectors have been widely incorporated to increase the capabilities of wearable devices. Moreover, health awareness has increased, which led to the increasing use of wearable devices in health care in the 1990s [1].

In 1994, Canadian researcher Steve Mann developed head-mounted smart glasses and fixed them on his skull, requiring special tools for removal. Mann continued to improve this device and invented the EyeTap, which combines smart glasses with computers and the internet. Therefore, he is known as the father of wearable devices [6]. In the 1990s, Olivetti invented the portable Active Badge, which provided user locations via infrared signals. In the 2000s, the iPod and Bluetooth were developed, leading to progress in wearable devices, which became thinner, lighter, and wireless. Since 2010, the functions and sales of wearable devices have increased rapidly due to the popularity of the Internet of Things and wireless devices. Health watches, Google Glasses, Apple Watches, Oculus Rift headsets, etc., were developed in this era. In the 2020s, wearable devices have become closely related to our lives, with the possibility of movement information being evaluated and received instantly [1]. Wearable devices have shown significant research value and application potential in many fields, such as industry, medical care, the military, education, and entertainment. In 2018, Mary Meeker stated that wearables were the target of the third wave of industry cycles after personal computers, smartphones, and tablets [7].

With the growing demand for wearables, scientific research is developing rapidly. Specialized laboratories and research groups that focus on wearable smart devices have been established in many research institutions, such as Carnegie Mellon University, the Massachusetts Institute of Technology, the Korea Institute of Science and Technology, and the School of Engineering of the University of Tokyo. Innovative patents and technologies are being developed faster than ever before. The Institute of Electrical and Electronics Engineers (IEEE) established the Wearable IT Committee and wearable computing columns in several academic journals. The IEEE International Symposium on Wearable Computers (ISWC), an international scholarly conference on wearable smart devices, was held for the first time in 1997.

1.2. The Development Potential of Wearable Devices

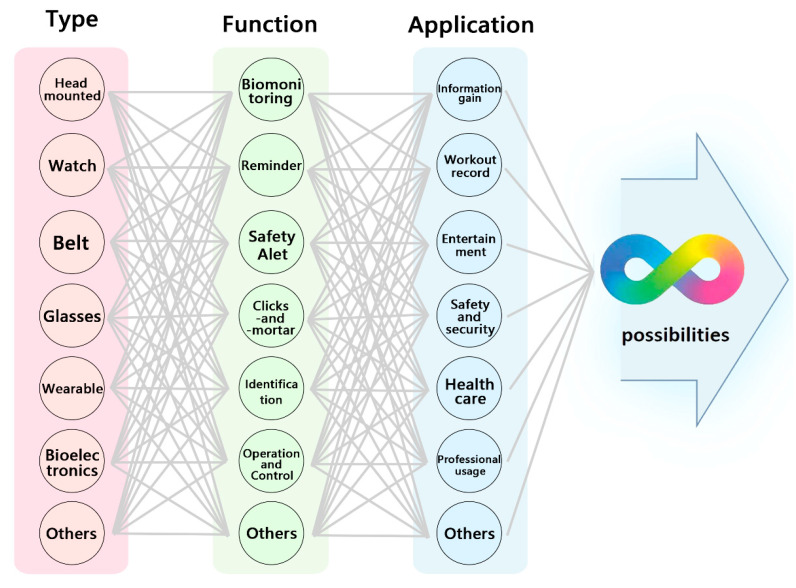

With the development of science and technology, physiological sensors and wearable devices with various forms have been proposed, including watches, bracelets, headphones, hats, shoes, clothes, necklaces, belts, patches, prosthetics, and glasses. Therefore, wearable devices have great development potential (Figure 2). Furthermore, with economic growth, the increasing prevalence of the internet, millennial population growth and the acceptance of intelligent technology, an increasing number of companies and research units are investing resources in the research, development and innovation of wearable devices and equipment.

Figure 2.

Application of wearable technology. Different shapes and materials are combined with various sensors and functions and adapted to specific applications, showing the potential of wearable devices. Different types of wearables, such as watches, bracelets, headphones, hats, shoes, clothes, necklaces, belts, patches, prosthetics, and glasses, have been developed. Wearables with functions related to biomonitoring, reminders, safety alerts, click and mortar retailing, identification, operation and control have been proposed. Finally, the functions can be applied for information gain, workout records, entertainment, safety and security, health care, and professional usage [8,9].

Wearables offer significant economic benefits [8]. The COVID-19 pandemic and chronic diseases have changed lifestyles, which has accelerated the application of wearable technology in medical care and long-term measurement and monitoring. Wearable medical devices with wireless communication technology can easily collect physiological data according to the diagnostic needs, such as data related to cardiopulmonary function, exercise patterns, sweat content, tear content, tissue oxygen content, sleep, emotional state, cognitive function, and brain states after concussion. In addition, wearable devices can function as pulse oximeters, insulin pumps, and ECG monitors. After the medical data are collected and transmitted, the data can be uploaded to a platform and managed and interpreted by artificial intelligence. As a result, personal health care can be achieved remotely, which could decrease the number of hospital visits. These data can allow doctors and hospitals to assess the health status of individuals via telemedicine services, thereby reducing complications of chronic diseases. Consequently, the global digital healthcare market is growing rapidly. Due to the popularization of the 5G network, the improvement in sensor accuracy, and the creation of platforms with increasingly complete management and analysis capabilities, this market is expected to grow further [9].

1.3. Detection of Physical and Psychological Stress with Wearable Devices

Physical and psychological stress have attracted considerable attention because stress can affect multiple body and brain functions. Facial expressions, language, physiological states, biochemical values, and behavioral patterns change with depression, stress, anxiety, fatigue, sleep disturbance, lethargy, loss of appetite, and hyperactivity. Therefore, cortisol, prolactin, human growth hormone (hGH), adrenocorticotropic hormone (ACTH), and lactate in blood and saliva have been widely adopted to evaluate psychological stress. Biomarkers can be assessed invasively and noninvasively. Blood tests are the typical invasive method, and noninvasive methods include the detection of eye blinks [10], pupil dilation [11], electroencephalography (EEG) changes [12], and body fluid composition [13]. Through video monitoring, body language, gestures, and physical activity could be used to estimate users’ emotional states. Previous studies have confirmed that the visual stimuli in the International Affective Picture System (IAPS) could be used to arouse emotional responses. After five minutes of stimulation with groups of pleasant and unpleasant images, the subjects and their body fluids were assessed. The researchers found that cortisol levels, EEG patterns, blink rates, and heart rate variability were all altered, which indicates that these data could potentially be utilized in stress detection systems [10].

When wearable devices are used for emotion detection, multiple factors must be comprehensively evaluated. Heart rate, heart rate variability, respiratory rate, blood pressure, EEG readings, electromyography (EMG) readings, electrooculography (EOG) readings, photoplethysmography (PPG) readings, galvanic skin response (GSR), skin temperature, etc. [14,15] could be used to assess emotions and stress levels. These physiological changes can help assess and interpret the subject’s current external situation, executive motivation, and emotional development. Stress levels could be measured and monitored to protect the health of people working in stressful situations, such as doctors, members of the military, traffic controllers, and emergency service personnel (e.g., police, paramedics and firefighters) [16,17,18]. However, stress level recognition is limited if only one detection method is used. This article introduces practical applications of wearable devices that detect brain waves, eye movements, and sweat components, the development of an emotion detection system, and the limitations of related research.

2. Electroencephalogram

2.1. What Is an EEG?

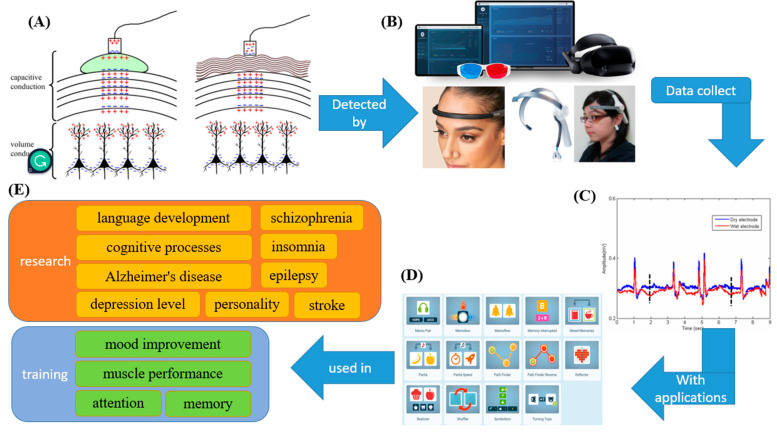

As the human brain operates, EEG signals arise from the synchronized synaptic activity of populations of cortical neurons, which are pyramidal cells organized along cortical columns [19]. Near the dendrites, the excitation of a postsynaptic neuron generates an extracellular voltage that is more negative than other areas along the neuron, with regions of positive and negative charge separated by some distance. The sum of nearby positively and negatively charged areas is detected by the electrodes [20]. The difference in charge can be detected through the skin. However, the brain is not a homogeneous organ. Nerve cells have myelin sheaths, creating a physical barrier that ions cannot pass through. In addition, the different tissue densities in the brain can affect the propagation of electrical signals [21]. Moreover, the pia mater, dura mater, skull, and scalp are outside the brain and form a series of insulating layers that complicate electrical signal transmission. Therefore, a gel is required as a conductor between the scalp and the electrodes to improve signal pickup (Figure 3A) [22]. However, brain wave measurements are difficult to obtain because the eyes and muscles also generate electric fields. Furthermore, the skull and scalp barrier may attenuate the electrical signals. Therefore, how to correctly analyze EEG signals is also an essential issue [23,24].

Figure 3.

Wearable EEG detection device and application. (A) Neurons generate extracellular voltages, and the electrodes detect the sum of nearby positively and negatively charged areas. To amplify brain waves, gel or dry electrodes are essential [23]. (B) Wearables collect electrical signals [25]. (C) The data are collected and computed [24]. (D) Wearables can be used in various applications [26]. (E) The analyzed data could be used in research on depression, Alzheimer’s disease, epilepsy, schizophrenia, language development, cognitive processes, memory, attention, personality, stroke, insomnia, mood improvement, and muscle performance.

German physiologist Hans Berger published the first report on human brainwaves in 1929. Brainwaves were collected in the absence of stimulation, which means that the primary electrophysiological activity of the brain was measured [27]. The EEG signals of healthy people can be divided into α, β, θ and δ waves, according to the frequency and amplitude of the signals. The frequency of α waves is 8 to 13 Hz, and while these waves occur in all brain regions, they appear most often in the occipital region. In general, α waves show the basic rhythm of the healthy adult brain and are considered the best brain waves for learning and thinking. The frequency of β waves is 14 to 30 Hz, and these waves are more evident in the frontal, temporal and central regions. β waves are more widely observed during mental activity and emotional excitement, such as when a person’s eyes are open and while thinking. In contrast, when the eyes are closed and the person is at rest, β waves appear only in the frontal area. In general, the α wave is the primary brain rhythm. However, approximately 6% of people show evidence of β rhythms on EEG when mentally stable, indicating that β waves may also be a fundamental wave. The frequency of θ waves is 4 to 7 Hz, and adults often show this waveform when they are drowsy. In addition, θ waves are closely related to the limbic system, triggering deep memories and strengthening long-term memories. Therefore, the θ wave is called “the gate to memory and learning”. The δ wave frequency is 0.5 to 3 Hz, and this wave often appears at the forehead. Healthy adults show evidence of this wave only when they are in a deep sleep. Thus, θ and δ waves are associated with human rest states, are generally not registered in the awake state, and are called slow waves [28].
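The band limits quoted above can be turned into a simple band-power calculation. The following is a minimal sketch, not a method taken from the cited studies: the function names, the synthetic test trace, and the 128 Hz sampling rate are assumptions made for illustration, and a plain discrete Fourier transform is used only to keep the example free of third-party packages.

```python
import math

# Band edges in Hz as quoted in the text (delta, theta, alpha, beta).
BANDS = {
    "delta": (0.5, 3.0),
    "theta": (4.0, 7.0),
    "alpha": (8.0, 13.0),
    "beta": (14.0, 30.0),
}


def band_powers(signal, fs):
    """Return the summed spectral power of `signal` in each band.

    A plain DFT is evaluated only at the bins that fall inside a band.
    Real pipelines would normally use an FFT with windowing instead.
    """
    n = len(signal)
    mean = sum(signal) / n
    centered = [x - mean for x in signal]  # remove the DC offset
    powers = {name: 0.0 for name in BANDS}
    for k in range(1, n // 2):
        freq = k * fs / n
        band = next((b for b, (lo, hi) in BANDS.items() if lo <= freq <= hi), None)
        if band is None:
            continue  # frequency lies between or outside the quoted bands
        re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(centered))
        im = -sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(centered))
        powers[band] += (re * re + im * im) / n**2
    return powers


def dominant_band(signal, fs):
    """Name of the band that carries the most power."""
    powers = band_powers(signal, fs)
    return max(powers, key=powers.get)


# Synthetic 2 s trace at an assumed 128 Hz sampling rate: a strong 10 Hz
# (alpha) rhythm plus a weaker 20 Hz (beta) component.
fs = 128
trace = [
    math.sin(2 * math.pi * 10 * i / fs) + 0.3 * math.sin(2 * math.pi * 20 * i / fs)
    for i in range(2 * fs)
]
print(dominant_band(trace, fs))  # prints "alpha"
```

Frequencies that fall in the gaps between the quoted ranges (for example, 3.5 Hz) are simply ignored here; how such gaps are handled in practice depends on the band definitions a given study adopts.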

2.2. How Is EEG Data Collected?

Gel is usually essential for detecting brain waves, as gel diminishes the impedance between the skin and the electrode surface. However, the gel hinders the use of brainwave detectors as wearable devices because gel leaves residues on the scalp, and gel leakage could result in a short circuit between adjacent electrodes. Furthermore, gel dries out during prolonged use, resulting in a decrease in EEG signal quality [29]. Therefore, semidry or dry electrodes must be developed to replace gel-type wet electrodes [30]. Dry electrodes can be divided into three categories: contact electrodes, noncontact electrodes, and insulated electrodes. The main difference between noncontact and insulated electrodes is that the bottom of the insulated electrode is composed of insulating material. In contrast, noncontact electrodes are formed of metal and can be coupled through hair or clothing. Although the signal quality of contact electrodes is better, noncontact and insulated electrodes are easier to apply in wearable devices. However, dry electrodes still have limitations: noise can increase quickly when the user moves [31]. Therefore, in 2011, Lin et al. developed a dry foam electrode to replace wet electrodes. This dry electrode is composed of a conductive polymer foam made of polyurethane. Moreover, this dry electrode reduces the resistance between the skin and the electrode and can be used during movement. Its signal quality is better than that of the wet electrode during long-term EEG detection [24].

2.3. The Application of EEG in Measuring Physical Situations, Relieving Stress and Managing Emotions

When a specific physical or psychological event occurs during EEG recording, the voltage fluctuations in the EEG data can be measured and recorded, and the noise can be filtered. Then, the changes in brain potential can be calculated, and the event-related potential (ERP) can be determined (Figure 3C). The correspondence between EEG, ERP, and specific physical and psychological events could be used to assess the level of depression [32,33], Alzheimer’s disease [34,35], epilepsy [36,37], schizophrenia [38,39], language development [40,41], cognitive processes [42,43,44], memory [42,45], attention [45,46,47], personality [12], stroke [48,49], insomnia [50], mood improvement [12], and muscle performance [44,47,51,52] (Figure 3E).
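The ERP computation described above is commonly implemented by cutting a short epoch around each event, subtracting a pre-event baseline, and averaging the epochs so that activity unrelated to the event cancels out. The sketch below illustrates that averaging step only; the function name, the epoch lengths, and the synthetic recording are hypothetical, and the noise filtering mentioned in the text is not shown.

```python
import random


def event_related_potential(eeg, event_indices, pre, post):
    """Average baseline-corrected epochs cut around each event.

    eeg           -- list of voltage samples from one channel
    event_indices -- sample indices at which the event occurred
    pre, post     -- samples kept before and after each event (pre > 0)
    """
    epochs = []
    for idx in event_indices:
        if idx - pre < 0 or idx + post > len(eeg):
            continue  # skip events too close to the recording edges
        epoch = eeg[idx - pre: idx + post]
        baseline = sum(epoch[:pre]) / pre  # mean of the pre-event interval
        epochs.append([v - baseline for v in epoch])
    if not epochs:
        raise ValueError("no complete epochs available")
    n = len(epochs)
    # Average sample by sample across epochs.
    return [sum(column) / n for column in zip(*epochs)]


# Synthetic recording (assumption): unit-variance noise with a deflection
# of 5 units placed three samples after each of 40 events.
rng = random.Random(0)
eeg = [rng.gauss(0.0, 1.0) for _ in range(2000)]
events = list(range(100, 1900, 45))
for idx in events:
    eeg[idx + 3] += 5.0

erp = event_related_potential(eeg, events, pre=5, post=10)
peak = max(range(len(erp)), key=lambda i: erp[i])
print("peak latency in samples after the event:", peak - 5)
```

In a single epoch the deflection is only a few times larger than the noise, whereas in the average of 40 epochs the noise shrinks by roughly the square root of the number of epochs, which is why ERPs are normally reported from many repetitions of the same event.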

Brain waves can not only indicate user situations but also improve external performance through brainwave training. For example, cognitive functions, such as working memory, attention, visual processing speed, and mental and emotional states, could be enhanced by deliberately changing eye movements, which can significantly boost optimal performance [52,53,54,55,56,57,58]. Surgeons can reduce surgery time by 26% and improve performance by training sensory motor rhythm-theta brain waves [52]. Therefore, many wearable devices that can perform EEG and EOG and detect other physiological states have been developed. Moreover, the data that are collected by wearables can be analyzed by associated applications. Then, the individual’s current situation could be determined, cognitive functions could be trained, and stress could be relieved. For example, Muse™ by InterAxon is an EEG detector that can be combined with other machinery and apps (e.g., Lowdown Focus, Opti Brain™ Version 3.12) for emotion management. Another application, NeuroTracker, was established 30 years ago as a research tool (Figure 3B) [59,60]. NeuroTracker was created as a training tool to improve cognitive functions, including working memory, visual processing speed, and attention [61,62]. NeuroTracker has been used to examine and teach muscle control to athletes [63,64], improve the cognitive function of concussion patients [65,66] and improve physical competence in older adults [67]. Many other wearables have been combined with applications, and these combinations can be used to assess, estimate, establish and maintain concentration, message processing speed, memory, and problem-solving skills. These applications include Fit Brains Trainer (Rosetta Stone, Arlington, VA, USA), Elevate Brain Training (Elevate, San Francisco, CA, USA), Lumosity (Lumos Labs, San Francisco, CA, USA) and NeuroNation (NeuroNation, Berlin, Germany). Furthermore, NeuroNation products can be reimbursed by German health insurance [26,68,69] (Figure 3D).

2.4. Emotion Detection with EEG

Emotional changes can be evaluated directly via EEG signals because emotions are related to voltage changes caused by the flow of ionic currents among neurons in the brain [70]. The parietal lobe is related to algesia, gustation, and critical thinking. The temporal lobe is responsible for hearing and memory. The occipital lobe manages vision-related tasks. The frontal lobe is primarily associated with sensation, critical thinking, speech, and movement [71]. The left hemisphere is more active during positive emotions, and the right hemisphere is more active during negative emotions [72,73] because the distribution of alpha waves is changed by different emotions [74]. This EEG signal asymmetry can be applied to emotion detection. Gonzalez et al. used the BioCNN hardware with a convolutional neural network to improve neural signal quality. The accuracy of emotion recognition was approximately 85%, which was higher than that obtained by other evaluation methods (approximately 77.57%) [75]. Moreover, the electrode type, the data collection algorithm, the willingness of users and the information processing methods could significantly impact the accuracy of emotion recognition [73,76,77].
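The hemispheric asymmetry described above is often summarized as a single index computed from the alpha power of a left and a right electrode. The sketch below shows one common formulation, the difference of log alpha powers; it is an illustration under stated assumptions, not the method used in the cited studies. In particular, it assumes that alpha power is inversely related to cortical activity, and the function names and the `margin` value are hypothetical.

```python
import math


def alpha_asymmetry(left_alpha_power, right_alpha_power):
    """Return ln(right alpha power) - ln(left alpha power).

    Under the assumption that alpha power falls as cortical activity
    rises, a positive value points to relatively greater left activity.
    """
    return math.log(right_alpha_power) - math.log(left_alpha_power)


def dominant_hemisphere(index, margin=0.1):
    """Map the index to a coarse label; `margin` is an arbitrary dead zone."""
    if index > margin:
        return "left-dominant"   # linked to positive emotions in the text
    if index < -margin:
        return "right-dominant"  # linked to negative emotions in the text
    return "balanced"


# Hypothetical alpha powers (arbitrary units) from a left/right electrode pair.
index = alpha_asymmetry(left_alpha_power=4.0, right_alpha_power=6.0)
print(round(index, 3), dominant_hemisphere(index))
```

A single threshold on such an index is a strong simplification; the classifiers cited above combine many features, and the accuracy figures in the text refer to those systems rather than to this index.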

2.5. Limitations of EEG Detection

Many factors affect EEG measurements. Electrode placement, electrode types, signal amplifier quality, signal processing methods, and user movements (eye movement, blinking, facial expression changes, etc.) can introduce a considerable amount of noise, which can be difficult to separate from the EEG signals. For EEG sensors to be used in wearables, the abovementioned problems must be addressed. Consequently, contact electrodes acquire data from only the prefrontal lobe, and other sensors, such as EOG, magnetoencephalography (MEG), body temperature and heartbeat sensors, are used to obtain additional information for a comprehensive assessment [78,79].

3. Eye Movement

3.1. In What Ways Do Eyes Move?

Eye movements can provide significant amounts of information. Human eyes move constantly, and some actions are unintentional or subconscious. For example, eyes enter a state of high-speed movement called saccades when we read, where the eyes move rapidly from word to word. When we enter a room, our eyes make broad sweeps of the surroundings. Eyes also make small, unconscious movements to counteract head shaking and provide stable vision when we walk. During sleep, eyes move from side to side during the rapid eye movement stage. Eye movements have been shown to reveal thinking processes [80,81]. Moreover, pupil dilation is associated with uncertainty in decision-making [81,82], and eye movements can facilitate memory retrieval [83,84,85]. The following methods of measuring eye movement are discussed: eye tracking and EOG.

3.2. How Can Eye Movement Data Be Collected?

3.2.1. Eye Tracking

Eye movement detection data have been widely used in research, such as work related to cognitive load recognition, reading comprehension, presentation design, distraction and attention guidance, and some human–computer interfaces (HCIs) [80]. Eye trackers are a standard system for detecting different eye movements and are composed of small cameras that can be installed on tables, on shelves or in special caps, without touching the eye. Eye position data can be recorded to track the pupil position, fixation time, fixation direction, fixation repetitions, saccade length, and pupil diameter. This type of device is a video-based eye tracker [86,87].

However, there are some difficulties in measuring and utilizing eye movement data. Physiological studies have found a speed difference between the eyes during eye saccades. Eye movements to the nasal side are slower, while those to the outside world are faster. This slight gap, known as the fixational disparity, is positively related to the saccade distance. This slight gap is most likely due to the change in the distance between two letters while reading; the eyes converge after staring, which indicates that this fixational disparity could be adjusted by various circumstances [88]. Therefore, sitting posture, binocular correction, and head and body movement significantly impact eye movement detection. The physiology of eye movements and machine correction are technical issues in measuring eye movements [89,90].

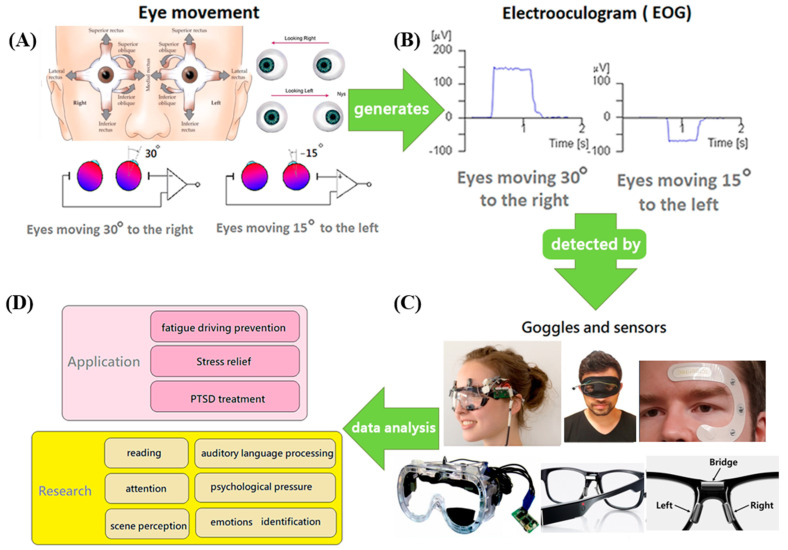

3.2.2. EOG

Some limitations in eye movement detection are due to environmental and user issues. Therefore, eye potential measurement is an alternative for tracking eye movements. Eye potential refers to the potential difference of several tens of mV generated between the cornea and retina of the eye (Figure 4A). In general, the human cornea side is positively charged, and the retina side is negatively charged; thus, a potential difference can be recognized (Figure 4B). To measure this potential difference, electrodes can be placed on the skin around the eyes, where changes in the measured potential result from eye movements and blinking. The sensor detects and measures the potential difference, which could be used to investigate the line of sight, mental state, and fatigue level [91,92]. Recently, EOG sensors have been adopted in medical applications, where they are paired with embedded user interfaces (UIs) to improve usability. These sensors can also be used in drowsiness detection [92].

Figure 4.

Wearable EOG detection device and application. (A) Six muscles control the fine-motor movements of each eye [93]. (B) Electrooculography (EOG) signals are positive when the eyes move toward the right (horizontally). In contrast, EOG signals are negative when the eye moves to the left [91,92]. (C) Several goggles and sensors have been developed to detect EOG signals [10,94,95,96]. (D) After data analysis, the wearables for detecting EOG could be applied in fatigued driving prevention [97], stress relief and PTSD treatment. Moreover, EOG wearables can be used as research equipment to explore the mechanisms of reading, attention, scene perception, auditory language processing, psychological pressure and emotional identification.
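The sign convention in Figure 4B suggests a very simple way to turn a horizontal EOG trace into gaze directions: a deflection above a positive threshold is read as a rightward movement, and a deflection below the negative threshold as a leftward movement. The sketch below is illustrative only. The function names, the baseline, and the threshold are assumptions, and the sample values are made up; real devices calibrate these per user.

```python
def classify_horizontal_gaze(sample, baseline=0.0, threshold=50.0):
    """Label one horizontal EOG sample as 'right', 'left' or 'center'.

    `sample`, `baseline` and `threshold` share the same (arbitrary) unit.
    Positive deflections are treated as rightward, as in Figure 4B.
    """
    deflection = sample - baseline
    if deflection > threshold:
        return "right"
    if deflection < -threshold:
        return "left"
    return "center"


def gaze_sequence(samples, baseline=0.0, threshold=50.0):
    """Collapse consecutive identical labels into a sequence of gaze states."""
    sequence = []
    for sample in samples:
        label = classify_horizontal_gaze(sample, baseline, threshold)
        if not sequence or sequence[-1] != label:
            sequence.append(label)
    return sequence


# Made-up trace: rest, look right, return, look left, return.
trace = [2, -3, 120, 130, 125, 5, -1, -140, -135, 4]
print(gaze_sequence(trace))  # prints ['center', 'right', 'center', 'left', 'center']
```

Blinks, baseline drift, and vertical movements are not handled here; they are among the reasons the practical systems discussed below combine several electrodes and additional signal processing.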

Compared to EEG, EOG shows a better signal, higher amplitude, better signal-to-noise ratio, and easier recording conditions [98]. However, EOG signal collection suffers from similar problems as EEG signal collection. For example, the sensing electrodes require the use of gel between the skin and the electrodes to enhance the response, or the sensors need to be wired. Regardless of the electrode type, it can be inconvenient or uncomfortable for users [97,99]. Therefore, in the development of new wearables for EOG measurement, two to three dry electrodes were embedded in eyeglass frames and nose pads [10,94]. In another study, some electrodes were made of silver and fixed in the appropriate location by a polyurethane membrane to collect data [100] (Figure 4C). Regardless of the electrode placement method, the wearing comfort, convenience, and data collection accuracy must be addressed by developers.

3.3. Different Glasses-Type Wearables for Fatigue Detection and Human-Computer Interfaces

Eye movements provide a considerable amount of information that can be applied in neuroscience, psychology, industrial engineering, human factors engineering, marketing/advertising, computer science, etc. [101]. Psychology research has worked to obtain information on reading [101,102,103], scene perception [104,105,106], problem solving [106,107], auditory language processing [108,109], attention [110], emotion recognition and psychological stress [17,111], PTSD treatment and emotional stress relief [112]. Therefore, glasses-type wearable devices for EOG or eye movement detection could be applied to investigate fatigued driving, identify emotional changes, and treat PTSD and in applications related to reading, attention, image perception, problem-solving, and auditory-language processing (Figure 4D).

In eye-assisted selection and entry (EASE), the time of eye fixation is used as an indicator, and maneuvering a cursor on a screen usually requires between 78.81 and 131.45 ms of sustained focus [113]. In 2006, EASE studies showed that only 81% of the subject choices were correctly classified by eye trackers. This human-machine interaction using eye movements is highly dependent on the design of the interface. “KIBITZER” is a wearable device that was developed in 2010 that can detect the line of sight of users and the current scene through an eye tracker on the head, and two camera lenses increase the accuracy of eye movement data. The eye movement data are transmitted to a computer, allowing the user to receive text or voice explanations to better understand the environment. However, this system has some shortcomings. When the environmental light is too bright, the pupil size and gaze-tracking functions can be disturbed. In contrast, when the environment is dark and sufficient artificial light sources are not provided, the system cannot detect the environment [114].
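Dwell-based selection of the kind used in EASE can be sketched as a small state machine: a target is selected once the gaze has rested on it continuously for at least a dwell time. The example below is a minimal illustration, not the EASE implementation. The function name, the per-sample target labels, and the 100 ms default dwell time (chosen from within the range quoted above) are assumptions.

```python
def dwell_selections(gaze_targets, sample_period_ms, dwell_ms=100.0):
    """Return the targets selected by sustained fixation.

    gaze_targets     -- per-sample id of the on-screen target under the
                        gaze, or None when the gaze is on no target
    sample_period_ms -- time between consecutive gaze samples
    dwell_ms         -- continuous fixation time required for a selection
    """
    selections = []
    current, held_ms, fired = None, 0.0, False
    for target in gaze_targets:
        if target is not None and target == current:
            held_ms += sample_period_ms  # fixation on the same target continues
        else:
            # Gaze moved to another target (or off all targets): restart timing.
            current = target
            held_ms = sample_period_ms if target is not None else 0.0
            fired = False
        if current is not None and not fired and held_ms >= dwell_ms:
            selections.append(current)
            fired = True  # select each fixation only once
    return selections


# Gaze sampled every 10 ms: a 50 ms glance at "A" (too short), a 120 ms
# fixation on "B", then a 100 ms fixation on "A".
samples = ["A"] * 5 + [None] * 2 + ["B"] * 12 + ["A"] * 10
print(dwell_selections(samples, sample_period_ms=10))  # prints ['B', 'A']
```

The dwell time trades speed against unintended selections: a shorter value makes the interface faster but more likely to fire on glances that were never meant as choices, which is one reason such systems depend strongly on interface design.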

Due to the limitations of eye trackers, different applications related to EOG signals have been developed in human-machine interface research, such as eye disease recognition [115], virtual computer keyboard and mouse control [116,117], wheelchair control [118], computer games [119], machine control [120], and simplified Chinese eye writing systems [121]. However, the accuracy of the obtained data is limited if the data are collected only by eye trackers or EOG measurements. Multimodal eye movement signal recording methods that incorporate EEG, EMG, and eye trackers can significantly improve human-machine interface system performance [122,123].

In 2021, a device that detects human eye movements via video-based methods, EOG signals and infrared oculography was developed. Infrared oculography uses the reflection of near-infrared light on the cornea to determine the eye position and can be used in dark environments. In this system, hardware and software are adopted through the cooperation of video systems, dual Purkinje images and optical eye-tracking systems to improve image processing. With this system, eye movement detection accuracy has dramatically improved. The highest accuracy of approximately 96% was achieved with upward eye movements, while looking to the right resulted in the lowest accuracy of approximately 87.67%. Almost 100% of invalid information, such as blinking and wide-ranging glances, was ignored. This human–computer interaction system for detecting eye movements can distinguish ten eye movements (i.e., up, down, left, right, far left, far right, upper left, lower left, upper right, and lower right). Moreover, this system provides an eye–dial interface that can enable patients with amyotrophic lateral sclerosis (ALS), brainstem stroke, brain or spinal cord injury, muscular dystrophy, cerebral palsy, or multiple sclerosis to express their intentions and smoothly communicate with others [95]. However, this system has some drawbacks. Infrared sensors are expensive to manufacture, and long-term infrared exposure may cause eye discomfort.

“JINS MEME” is a glasses-type wearable device that was released by the Japanese glasses manufacturer JIN in May 2014. This device is equipped with an eye potential sensing function, and through eye movement detection and blinking, the intention and physical state of users can be inferred in real time. Gaze movement can be embedded in smartphone and in-vehicle device applications. Users can turn pages and select icons by looking at the smartphone screen. Through the continuous acquisition and analysis of gaze and blink information, the system can detect user fatigue and drowsiness because the eyes make unique movements, such as irregular or rapid blinking, when people want to sleep or are stressed [10]. Thus, JINS MEME could help prevent drowsy driving [124,125]. In 2015, another study combined EEG and oxyhemoglobin concentration detection to develop a fatigue detection system that could more accurately determine state changes during fatigued driving [126,127,128].
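The blink-based fatigue cue described above can be illustrated with a minimal sketch. It is not the algorithm used by JINS MEME, which the source does not describe; the function names, the 100 µV threshold, and the 0.2 s refractory period are assumptions chosen only for illustration.

```python
def detect_blinks(veog_uv, fs_hz, threshold_uv=100.0, refractory_s=0.2):
    """Return sample indices where a vertical EOG trace rises above a threshold.

    threshold_uv and refractory_s are hypothetical values, not device settings.
    """
    blinks = []
    refractory = int(refractory_s * fs_hz)
    last = -refractory  # allows a blink at the very start of the trace
    for i in range(1, len(veog_uv)):
        rising = veog_uv[i - 1] < threshold_uv <= veog_uv[i]
        if rising and i - last >= refractory:
            blinks.append(i)
            last = i
    return blinks


def blink_rate_per_min(blinks, n_samples, fs_hz):
    """Convert a blink count into blinks per minute for a trace of n_samples."""
    return len(blinks) * 60.0 * fs_hz / n_samples


# Synthetic 60 s trace sampled at 100 Hz with one 150 uV pulse every 3 s.
fs_hz = 100
signal = [0.0] * (60 * fs_hz)
for start in range(100, len(signal), 300):
    for k in range(20):
        signal[start + k] = 150.0

blinks = detect_blinks(signal, fs_hz)
rate = blink_rate_per_min(blinks, len(signal), fs_hz)
```

A real system would compare the blink rate and its regularity against a per-user baseline before flagging drowsiness, since resting blink rates vary among individuals.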

There are advantages and disadvantages to using EOG and EEG in human–computer interaction techniques. EOG signals may extend to the entire scalp when human eyes are rotated, which may cause interference between EOGs and EEGs. EOG detection in human–computer interactions requires considerable physical strength for some patients who cannot use their eye muscles well. In contrast, if only EEG data are desired, EOGs are a primary source of interference. Brainwave fragments disturbed by EOGs are usually deleted, which is called the rejection method and may lead to data loss and bias and failure to observe eye movement-related effects. Therefore, EOG signals could be measured independently and subtracted from EEG data using various regression methods to provide data without human interference. Many methods of removing EOG signals from EEGs have limitations [129,130,131]. Although EEG detection consumes less physical power, EEG signals have higher noise and lower accuracy than EOG signals. Therefore, the combination of EOGs and EEGs provides better results in human-computer interactions [132,133,134]. Thus, many wearable devices have combined EEGs and EOGs. When detecting fatigue levels and sleep behaviors, EEG signals are often added for collective judgment to obtain accurate interpretations [135,136].
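The regression approach mentioned above can be sketched as follows, assuming a single EEG channel and a single simultaneously recorded EOG reference channel. The function name `remove_eog` and the synthetic signals are illustrative assumptions; practical pipelines typically use several EOG references and estimate one coefficient per EEG channel.

```python
import math


def remove_eog(eeg, eog):
    """Subtract the least-squares EOG contribution from one EEG channel.

    Returns the corrected samples and the estimated propagation coefficient b.
    """
    if len(eeg) != len(eog) or not eeg:
        raise ValueError("eeg and eog must be non-empty and of equal length")
    n = len(eeg)
    eeg_mean = sum(eeg) / n
    eog_mean = sum(eog) / n
    cov = sum((x - eeg_mean) * (r - eog_mean) for x, r in zip(eeg, eog))
    var = sum((r - eog_mean) ** 2 for r in eog)
    b = cov / var if var > 0 else 0.0
    cleaned = [x - b * r for x, r in zip(eeg, eog)]
    return cleaned, b


# Synthetic demo: a 10 Hz oscillation sampled at 250 Hz plus a blink-like pulse
# that leaks into the EEG channel with an assumed coefficient of 0.2.
n = 500
brain = [math.sin(2 * math.pi * 10 * t / 250) for t in range(n)]
eog = [50.0 if 200 <= t < 250 else 0.0 for t in range(n)]
contaminated = [s + 0.2 * r for s, r in zip(brain, eog)]

cleaned, b = remove_eog(contaminated, eog)
```

Because the EOG reference also picks up some brain activity, this kind of subtraction can remove part of the EEG signal of interest, which is one of the limitations noted in the text.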

3.4. Emotion Detection by EOGs

User interest, visual search processes, and information processing have been analyzed using eye movements in previous studies. Moreover, the blink frequency, fixation rate, maximum fixation duration, total fixation dispersion, maximum fixation dispersion, saccade rate, average saccade duration, saccade delay, average saccade amplitude, and EOG signals can reflect emotional performance [137]. Pupil diameter is the most commonly used feature for emotion recognition. The pupil diameter increases during positive emotions. Therefore, pupil size is considered to be a reliable indicator of positive emotion [138]. Anwar et al. used the pupil position and size captured by eye tracking devices and the fixation duration and proposed a facial expression recognition and eye gaze estimation system. Happiness, anger, sadness, neutral emotions, surprise, disgust, and fear could be recognized by the system, and the rate of correct emotion discrimination was approximately 90% [139]. Raudonis et al. divided emotions into four types, neutral, disgusted, funny and interested, and used eye movement speed, pupil size and pupil position for emotion recognition. The average recognition accuracy was approximately 90%, and the highest precision was obtained when classifying “interest” [140]. However, detecting emotions via eye movements requires multiple variables to increase the recognition accuracy to 85%. If only EOG data are used to detect emotions, the recognition rate for four emotions is approximately 88.3% [141] or 77.11% [142]. If only the pupil size is used to determine emotion, the accuracy rates are only 58.9% [143] and 59% [144]. Therefore, it is necessary to use a multimodal method in emotional judgment to improve the data accuracy and diversify the identifiable emotions.

3.5. Limitations of Eye Movement Detection

The movement of human eyes is affected not only by the physiological and psychological conditions of the individual but also by the light intensity of the environment and the type of electrodes. The accuracy of signal processing affects the interpretation of the data. Therefore, multimodal collaborative judgment is essential for addressing the limitations of eye movement detection [100,133,145,146]. Furthermore, the various electrodes, sensors, signal receivers and algorithms developed for wearable devices have made eye movement detection more popular.

4. Sweat Detection

4.1. Sweat Components

Most biomarkers are present in the blood, and sweat is transported to the surface of the skin through dermal ducts. Therefore, biomarker concentrations in sweat could correspond to those in the blood and can be considerable indicators of human health [147]. The ions, metabolites, acids, hormones, small proteins, and peptides secreted in sweat can be detected to identify various physiological states [148,149]. Additionally, sweat glands are found in almost every part of the skin and can easily be accessed without using needles or other invasive devices [147]. As a result, sweat detection has become the most popular method in wearable biomonitoring research, more so than the detection of tears, interstitial fluid (ISF), breath, saliva, or wound exudate [150].

Sweat is secreted by sweat glands, and this process is regulated by the autonomic nervous system. The excretion and evaporation of sweat help to carry away a large amount of heat, thereby cooling the individual, and the sweat components not only remove waste but also moisturize the skin, soften keratin, acidify the epidermis and inhibit the growth of bacteria. Sweat is mainly composed of water (99%), and the remaining components include electrolytes (sodium, potassium, magnesium, and calcium), lactic acid, urea, ammonia, protein, carbonate, cortisol, and various neuropeptides and cytokines [151]. The concentrations of sweat compounds vary among individuals due to differences in sweat gland activity and secretion rate. The average person sweats approximately 600–700 mL per day [152].

4.2. How Can the Components of Sweat Be Detected?

Sweat can continuously and noninvasively provide abundant biomarker measurements of ions, drugs, metabolites, and biomolecules, including K+, Na+, Ca2+, Cl−, lactate, glucose, ammonia, ethanol, urea, cortisol, and various neuropeptides and cytokines. Different methods for analyzing sweat have been developed. For example, potentiometry and square wave stripping voltammetry can be used to detect ions in sweat [153,154,155], and chronoamperometry can be used to detect drugs [147,156,157,158], glucose [155,159,160], and metabolites, such as lactate [161] and peptides [162], in sweat. In addition to bioelectronics-related methods used to examine sweat components, specific bioassays that combine metallic materials with antibodies have been developed to detect small concentrations of chemicals in sweat. For example, cortisol in sweat can be measured with zinc oxide nanosheets functionalized with cortisol antibodies; these sensors are highly sensitive and can detect concentrations ranging from 1 to 200 ng/mL in sweat. Cortisol can reflect psychological or physiological stress [163,164,165]. Muscle activity has been predicted by K+ [153] and lactate concentrations in sweat. The quantities of these two compounds in sweat correlate with those in blood [154]. Thus, K+ and lactate could be used to evaluate the physical exertion and exercise intensity of users [105,106]. In addition, the glucose concentration in sweat can be used to continuously monitor blood glucose [155].
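As a hedged illustration of how a potentiometric reading is converted into an ion concentration, the sketch below applies the Nernst relation, in which the electrode potential varies linearly with the logarithm of ion activity. It assumes an ideal ion-selective electrode, treats activity as equal to concentration, and uses hypothetical calibration potentials; none of the function names or numbers come from the cited studies.

```python
import math

R = 8.314462618  # gas constant, J/(mol*K)
F = 96485.33212  # Faraday constant, C/mol


def nernst_slope_mv(charge, temp_c=25.0):
    """Ideal electrode slope in mV per tenfold change in ion activity."""
    return 1000.0 * R * (temp_c + 273.15) * math.log(10) / (charge * F)


def calibrate(c1_mm, e1_mv, c2_mm, e2_mv):
    """Two-point calibration of E = intercept + slope * log10(c)."""
    slope = (e2_mv - e1_mv) / (math.log10(c2_mm) - math.log10(c1_mm))
    intercept = e1_mv - slope * math.log10(c1_mm)
    return slope, intercept


def concentration_mm(e_mv, slope, intercept):
    """Invert the calibration line to estimate concentration in mM."""
    return 10 ** ((e_mv - intercept) / slope)


# Hypothetical Na+ calibration with 10 mM and 100 mM standard solutions.
slope, intercept = calibrate(10.0, 100.0, 100.0, 100.0 + nernst_slope_mv(1))
sodium_mm = concentration_mm(130.0, slope, intercept)
```

In practice the measured slope is compared with the ideal value (about 59 mV per decade for a monovalent ion at 25 °C) to check electrode health, and the calibration is repeated because skin temperature and sensor drift shift both the slope and the intercept.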

In addition to body function judgment and analysis, sweat detection can conveniently be used to detect physiological and psychological stress. Sweat is normally secreted to regulate body temperature. Nevertheless, when a person’s anxiety level increases, the autonomic nervous system produces a series of physiological responses to adapt to stress, such as increased heart rate, pupil dilation and sweat secretion that occurs over the entire skin, especially on the palms, soles, and underarms; this response is usually unrelated to ambient temperature [15,156]. In contrast, sweating decreases during mental relaxation and sleep [157]. The amounts of lactic acid and urea in sweat increase significantly when individual stress increases [17]. Previous studies have shown that fearful people have disgusted facial expressions [158], increased alertness [159,160], and stronger smelling sweat. Moreover, various stresses can affect the secretion of hormones, such as corticotropin-releasing factor (CRF), ACTH, mineralocorticoids, insulin, and hGH [161,162]. In 2016, a combination of sensors and antibodies was developed, in which gold nanoparticles and cortisol antigens were coated on the surface of the sensor. When cortisol bound to the surface, the gold nanoparticles turned red. This technique is a stable and competitive method that can be used to develop color-changing wearables to detect user stress levels [163].

4.3. The Application of Sweat Detection to Determine Body Movement and Emotion

The components in sweat are complex and easy to detect, and various sweat component detection sensors have been developed. In 2021, sweat-detecting colorimetric ion-selective photoelectrodes were woven into the fabrics of innovative sportswear to detect pH, Na+ and K+ concentrations in sweat to assess the physical activity level of the wearer [155]. Moreover, two smartwatches called Cortisol Apta-Watch (CATCH) and CortiWatch can detect the quantities of cortisol in sweat to reflect physical and mental stress. With minimal errors, cortisol concentrations in sweat can be revealed by zeta potential, Fourier transform infrared spectroscopy (FTIR), and electrochemical measurements. Therefore, people could be informed about their stress levels, and stress levels could easily be monitored, allowing doctors to provide more straightforward diagnoses and treatments [150,164].

4.4. Limitations of Sweat Detection

Wearable devices that use sweat detection to assess physical or psychological health offer great benefits. The components and concentrations in sweat can reflect the chemicals in the blood. Thus, diabetes, kidney function, exercise intensity, cystic fibrosis and alcohol concentration could be monitored, and physiological changes could be uncovered [150]. However, many difficulties must be overcome. The composition ratios in sweat differ from those in plasma. Thus, converting sweat concentrations into plasma concentrations is the most critical issue that must be solved. Moreover, the quantities and chemical amounts in sweat differ among individuals, and microelectrodes can cause the skin to sweat. Whether the composition of the induced sweat is the same as that of naturally secreted sweat needs to be confirmed. The activity patterns of individuals also affect sweat secretion [150,166]. Thus, sweat composition analysis may not yet be sufficiently accurate to estimate physical and psychological states.

5. Summary and Outlook

Wearable devices for physiological detection have been adopted in various applications, such as monitoring personal health, assessing and controlling psychophysiological states, evaluating stress, preventing brain lesions, and controlling robots, robotic arms or devices such as wheelchairs, by detecting eye movements, brain waves and sweat [69,167,168]. This technology has become a necessity for some people. In addition to monitoring physiological values, wearable devices have been increasingly used to detect emotions, reduce stress and facilitate cognitive training. Therefore, an increasing number of research institutions are focusing on EEG, EOG, and cortisol applications to improve data accuracy in emotional detection. Many technical difficulties must be overcome in the development of new technology. The activity patterns of users should not affect the accuracy of the sensor, and the sensitivity of the sensor should be adjustable. Moreover, the efficacy of wearable sensors must be scientifically confirmed. These problems need to be addressed. Commercial products that are composed of wearables and biosensors have little evidence to support the benefits that they claim, and their practical value is controversial [169,170]. It is crucial to determine whether the physiological data collected by these devices are accurate and if it is medically ethical to collect these data. In the future, the increasing use of wearables in health and sports performance could benefit researchers in collecting data [171]. The cooperation between biosensors and applications could support the development of precision medicine, generate substantial financial profits and improve the quality of life of users. Therefore, health and performance technology companies should collaborate with researchers to confirm the efficacy and scientific value of their products so that biosensors can be used in everyday life [171].

Author Contributions

Conceptualization, J.-Y.W., H.-M.D.W. and L.-D.L.; data curation, J.-Y.W., L.-D.L.; formal analysis, J.-Y.W., C.T.-S.C., H.-M.D.W. and L.-D.L.; funding acquisition, C.T.-S.C., H.-M.D.W. and L.-D.L.; investigation, J.-Y.W., C.T.-S.C., H.-M.D.W. and L.-D.L.; methodology, J.-Y.W., C.T.-S.C., H.-M.D.W. and L.-D.L.; project administration, J.-Y.W., C.T.-S.C., H.-M.D.W. and L.-D.L.; resources, J.-Y.W., C.T.-S.C., H.-M.D.W. and L.-D.L.; software, J.-Y.W., C.T.-S.C., H.-M.D.W. and L.-D.L.; supervision, J.-Y.W., C.T.-S.C., H.-M.D.W. and L.-D.L.; validation, J.-Y.W., C.T.-S.C., H.-M.D.W. and L.-D.L.; visualization, J.-Y.W., C.T.-S.C., H.-M.D.W. and L.-D.L.; writing—original draft, J.-Y.W., C.T.-S.C., H.-M.D.W. and L.-D.L.; writing—review and editing, J.-Y.W., C.T.-S.C., H.-M.D.W. and L.-D.L. All authors have read and agreed to the published version of the manuscript.

Data Availability Statement

The data that support the findings of this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

This research was supported in part by the National Science and Technology Council of Taiwan under grant numbers 110-2221-E-400-003-MY, 111-3114-8-400-001, 111-2314-B-075-006, and 111-2221-E-035-015; and by the National Health Research Institutes of Taiwan under grant numbers NHRI-EX108-10829EI, NHRI-EX111-11111EI, and NHRI-EX111-11129EI.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Zheng M., Liu P.X., Gravina R., Fortino G. An emerging wearable world: New gadgetry produces a rising tide of changes and challenges. IEEE Syst. Man Cybern. Mag. 2018;4:6–14. doi: 10.1109/MSMC.2018.2806565.

- 2. Gernsback H. The isolator. Sci. Invent. 1925;13:214–281.

- 3. Brockwell H. Forgotten genius: The man who made a working VR machine in 1957. TechRadar. 2016;7.

- 4. Thorp E.O. The invention of the first wearable computer; Proceedings of the Digest of Papers. Second International Symposium on Wearable Computers (Cat. No. 98EX215); Pittsburgh, PA, USA. 19–20 October 1998; pp. 4–8.

- 5. Oppermann L., Prinz W. Introduction to this Special Issue on Smart Glasses. I-COM. 2016;15:123–132. doi: 10.1515/icom-2016-0028.

- 6. Mann S. Smart clothing: The shift to wearable computing. Commun. ACM. 1996;39:23–24. doi: 10.1145/232014.232021.

- 7. Meeker M., Wu L. Internet Trends 2018. Kleiner Perkins; Menlo Park, CA, USA: 2018.

- 8. Hickey A. Digital Health. Elsevier; Amsterdam, The Netherlands: 2021. The rise of wearables: From innovation to implementation; pp. 357–365.

- 9. Yousif M., Hewage C., Nawaf L. IOT technologies during and beyond COVID-19: A comprehensive review. Future Internet. 2021;13:105. doi: 10.3390/fi13050105.

- 10. Sengupta J., Baviskar N., Shukla S. Biosignal acquisition system for stress monitoring; Proceedings of the International Conference on Advances in Information Technology and Mobile Communication; Bangalore, India. 27–28 April 2012; pp. 451–458.

- 11. Ren P., Barreto A., Huang J., Gao Y., Ortega F.R., Adjouadi M. Off-line and on-line stress detection through processing of the pupil diameter signal. Ann. Biomed. Eng. 2014;42:162–176. doi: 10.1007/s10439-013-0880-9.

- 12. Raymond J., Varney C., Parkinson L.A., Gruzelier J.H. The effects of alpha/theta neurofeedback on personality and mood. Cogn. Brain Res. 2005;23:2–3. doi: 10.1016/j.cogbrainres.2004.10.023.

- 13. Polliack A., Taylor R., Bader D. Sweat analysis following pressure ischaemia in a group of debilitated subjects. J. Rehabil. Res. Dev. 1997;34:303–308.

- 14. Cheol Jeong I., Bychkov D., Searson P.C. Wearable devices for precision medicine and health state monitoring. IEEE Trans. Biomed. Eng. 2018;66:1242–1258. doi: 10.1109/TBME.2018.2871638.

- 15. Taj-Eldin M., Ryan C., O’Flynn B., Galvin P. A review of wearable solutions for physiological and emotional monitoring for use by people with autism spectrum disorder and their caregivers. Sensors. 2018;18:4271. doi: 10.3390/s18124271.

- 16. Diaz-Romero D.J., Rincón A.M.R., Miguel-Cruz A., Yee N., Stroulia E. Recognizing emotional states with wearables while playing a serious game. IEEE Trans. Instrum. Meas. 2021;70:1–12. doi: 10.1109/TIM.2021.3059467.

- 17. Zheng W.-L., Liu W., Lu Y., Lu B.-L., Cichocki A. Emotionmeter: A multimodal framework for recognizing human emotions. IEEE Trans. Cybern. 2018;49:1110–1122. doi: 10.1109/TCYB.2018.2797176.

- 18. Suiçmez A., Cengiz T., Odabas M.S. An Overview of Classification of Electrooculography (EOG) Signals by Machine Learning Methods. Gazi Univ. J. Sci. Part C Des. Technol. 2022;10:330–338. doi: 10.29109/gujsc.1130972.

- 19. Buzsáki G., Anastassiou C.A., Koch C. The origin of extracellular fields and currents—EEG, ECoG, LFP and spikes. Nat. Rev. Neurosci. 2012;13:407–420. doi: 10.1038/nrn3241.

- 20. Silva F.L.d. EEG-fMRI. Springer; Berlin/Heidelberg, Germany: 2009. EEG: Origin and measurement; pp. 19–38.

- 21. Nunez P.L., Srinivasan R. Electric Fields of the Brain: The Neurophysics of EEG. Oxford University Press; New York, NY, USA: 2006. p. 178.

- 22. Usakli A.B. Improvement of EEG signal acquisition: An electrical aspect for state of the art of front end. Comput. Intell. Neurosci. 2010;2010:1–7. doi: 10.1155/2010/630649.

- 23. Jackson A.F., Bolger D.J. The neurophysiological bases of EEG and EEG measurement: A review for the rest of us. Psychophysiology. 2014;51:1061–1071. doi: 10.1111/psyp.12283.

- 24. Lin C.-T., Liao L.-D., Liu Y.-H., Wang I.-J., Lin B.-S., Chang J.-Y. Novel dry polymer foam electrodes for long-term EEG measurement. IEEE Trans. Biomed. Eng. 2010;58:1200–1207. doi: 10.1109/TBME.2010.2102353.

- 25. Liu N.-H., Chiang C.-Y., Chu H.-C. Recognizing the degree of human attention using EEG signals from mobile sensors. Sensors. 2013;13:10273–10286. doi: 10.3390/s130810273.

- 26. Gil H., Gonçalves V. The use of «Peak & Neuronation» digital applications for the inclusion of older adults: A case study at USALBI; Proceedings of the 2017 International Symposium on Computers in Education (SIIE); Lisbon, Portugal. 9 November 2017; pp. 1–5.

- 27. İnce R., Adanır S.S., Sevmez F. The inventor of electroencephalography (EEG): Hans Berger (1873–1941). Child’s Nerv. Syst. 2021;37:2723–2724. doi: 10.1007/s00381-020-04564-z.

- 28. Brazier M.A. The analysis of brain waves. Sci. Am. 1962;206:142–155. doi: 10.1038/scientificamerican0662-142.

- 29. Ferree T.C., Luu P., Russell G.S., Tucker D.M. Scalp electrode impedance, infection risk, and EEG data quality. Clin. Neurophysiol. 2001;112:536–544. doi: 10.1016/S1388-2457(00)00533-2.

- 30. Li G.-L., Wu J.-T., Xia Y.-H., He Q.-G., Jin H.-G. Review of semi-dry electrodes for EEG recording. J. Neural Eng. 2020;17:051004. doi: 10.1088/1741-2552/abbd50.

- 31. Shad E.H.T., Molinas M., Ytterdal T. Impedance and noise of passive and active dry EEG electrodes: A review. IEEE Sens. J. 2020;20:14565–14577.

- 32. De Aguiar Neto F.S., Rosa J.L.G. Depression biomarkers using non-invasive EEG: A review. Neurosci. Biobehav. Rev. 2019;105:83–93. doi: 10.1016/j.neubiorev.2019.07.021.

- 33. Keren H., O’Callaghan G., Vidal-Ribas P., Buzzell G.A., Brotman M.A., Leibenluft E., Pan P.M., Meffert L., Kaiser A., Wolke S. Reward processing in depression: A conceptual and meta-analytic review across fMRI and EEG studies. Am. J. Psychiatry. 2018;175:1111–1120. doi: 10.1176/appi.ajp.2018.17101124.

- 34. Cassani R., Estarellas M., San-Martin R., Fraga F.J., Falk T.H. Systematic review on resting-state EEG for Alzheimer’s disease diagnosis and progression assessment. Dis. Mrk. 2018;2018:1–26. doi: 10.1155/2018/5174815.

- 35. Rossini P.M., Di Iorio R., Vecchio F., Anfossi M., Babiloni C., Bozzali M., Bruni A.C., Cappa S.F., Escudero J., Fraga F.J. Early diagnosis of Alzheimer’s disease: The role of biomarkers including advanced EEG signal analysis. Report from the IFCN-sponsored panel of experts. Clin. Neurophysiol. 2020;131:1287–1310. doi: 10.1016/j.clinph.2020.03.003.

- 36. Sharmila A. Epilepsy detection from EEG signals: A review. J. Med. Eng. Technol. 2018;42:368–380. doi: 10.1080/03091902.2018.1513576.

- 37. Amin U., Benbadis S.R. The role of EEG in the erroneous diagnosis of epilepsy. J. Clin. Neurophysiol. 2019;36:294–297. doi: 10.1097/WNP.0000000000000572.

- 38. Barros C., Silva C.A., Pinheiro A.P. Advanced EEG-based learning approaches to predict schizophrenia: Promises and pitfalls. Artif. Intell. Med. 2021;114:102039. doi: 10.1016/j.artmed.2021.102039.

- 39. Perrottelli A., Giordano G.M., Brando F., Giuliani L., Mucci A. EEG-based measures in at-risk mental state and early stages of schizophrenia: A systematic review. Front. Psychiatry. 2021;12:653642. doi: 10.3389/fpsyt.2021.653642.

- 40. Alday P.M. M/EEG analysis of naturalistic stories: A review from speech to language processing. Lang. Cogn. Neurosci. 2019;34:457–473. doi: 10.1080/23273798.2018.1546882.

- 41. Tromp J., Peeters D., Meyer A.S., Hagoort P. The combined use of virtual reality and EEG to study language processing in naturalistic environments. Behav. Res. Methods. 2018;50:862–869. doi: 10.3758/s13428-017-0911-9.

- 42. Hanslmayr S., Sauseng P., Doppelmayr M., Schabus M., Klimesch W. Increasing individual upper alpha power by neurofeedback improves cognitive performance in human subjects. Appl. Psychophysiol. Biofeedback. 2005;30:1–10. doi: 10.1007/s10484-005-2169-8.

- 43. Vernon D., Egner T., Cooper N., Compton T., Neilands C., Sheri A., Gruzelier J. The effect of training distinct neurofeedback protocols on aspects of cognitive performance. Int. J. Psychophysiol. 2003;47:75–85. doi: 10.1016/S0167-8760(02)00091-0.

- 44. Egner T., Gruzelier J.H. Ecological validity of neurofeedback: Modulation of slow wave EEG enhances musical performance. Neuroreport. 2003;14:1221–1224. doi: 10.1097/00001756-200307010-00006.

- 45. Egner T., Gruzelier J.H. Learned self-regulation of EEG frequency components affects attention and event-related brain potentials in humans. Neuroreport. 2001;12:4155–4159. doi: 10.1097/00001756-200112210-00058.

- 46. Egner T., Gruzelier J.H. EEG biofeedback of low beta band components: Frequency-specific effects on variables of attention and event-related brain potentials. Clin. Neurophysiol. 2004;115:131–139. doi: 10.1016/S1388-2457(03)00353-5.

- 47. Cheng M.-Y., Wang K.-P., Hung C.-L., Tu Y.-L., Huang C.-J., Koester D., Schack T., Hung T.-M. Higher power of sensorimotor rhythm is associated with better performance in skilled air-pistol shooters. Psychol. Sport Exerc. 2017;32:47–53. doi: 10.1016/j.psychsport.2017.05.007.

- 48. Doerrfuss J.I., Kilic T., Ahmadi M., Holtkamp M., Weber J.E. Quantitative and qualitative EEG as a prediction tool for outcome and complications in acute stroke patients. Clin. EEG Neurosci. 2020;51:121–129. doi: 10.1177/1550059419875916.

- 49. Sebastián-Romagosa M., Udina E., Ortner R., Dinarès-Ferran J., Cho W., Murovec N., Matencio-Peralba C., Sieghartsleitner S., Allison B.Z., Guger C. EEG biomarkers related with the functional state of stroke patients. Front. Neurosci. 2020;14:582. doi: 10.3389/fnins.2020.00582.

- 50. Zangani C., Casetta C., Saunders A.S., Donati F., Maggioni E., D’Agostino A. Sleep abnormalities across different clinical stages of Bipolar Disorder: A review of EEG studies. Neurosci. Biobehav. Rev. 2020;118:247–257. doi: 10.1016/j.neubiorev.2020.07.031.

- 51. Raymond J., Sajid I., Parkinson L.A., Gruzelier J.H. Biofeedback and dance performance: A preliminary investigation. Appl. Psychophysiol. Biofeedback. 2005;30:65–73. doi: 10.1007/s10484-005-2175-x.

- 52. Ros T., Moseley M.J., Bloom P.A., Benjamin L., Parkinson L.A., Gruzelier J.H. Optimizing microsurgical skills with EEG neurofeedback. BMC Neurosci. 2009;10:1–10. doi: 10.1186/1471-2202-10-87.

- 53. Komarudin K., Mulyana M., Berliana B., Purnamasari I. NeuroTracker Three-Dimensional Multiple Object Tracking (3D-MOT): A Tool to Improve Concentration and Game Performance among Basketball Athletes. Ann. Appl. Sport Sci. 2021;9. doi: 10.29252/aassjournal.946.

- 54. Park S.-Y., Klotzbier T.J., Schott N. The effects of the combination of high-intensity interval training with 3D-multiple object tracking task on perceptual-cognitive performance: A randomized controlled intervention trial. Int. J. Environ. Res. Public Health. 2021;18:4862. doi: 10.3390/ijerph18094862.

- 55. Sorrell J.M. The Aging Brain: Can Cognitive Decline Be Reversed? J. Psychosoc. Nurs. Ment. Health Serv. 2021;59:13–16. doi: 10.3928/02793695-20210611-03.

- 56. Carelli L., Solca F., Tagini S., Torre S., Verde F., Ticozzi N., Ferrucci R., Pravettoni G., Aiello E.N., Silani V. Gaze-Contingent Eye-Tracking Training in Brain Disorders: A Systematic Review. Brain Sci. 2022;12:931. doi: 10.3390/brainsci12070931.

- 57. Lee T.L., Yeung M.K., Sze S.L., Chan A.S. Eye-tracking training improves inhibitory control in children with attention-deficit/hyperactivity disorder. Brain Sci. 2021;11:314. doi: 10.3390/brainsci11030314.

- 58. Liao C.-Y., Chen R.-C., Tai S.-K. Emotion stress detection using EEG signal and deep learning technologies; Proceedings of the 2018 IEEE International Conference on Applied System Invention (ICASI); Tokyo, Japan. 13–17 April 2018; pp. 90–93.

- 59. Pylyshyn Z.W., Storm R.W. Tracking multiple independent targets: Evidence for a parallel tracking mechanism. Spat. Vis. 1988;3:179–197. doi: 10.1163/156856888X00122.

- 60. Stockman C. Can a technology teach meditation? Experiencing the EEG headband interaxon muse as a meditation guide. Int. J. Emerg. Technol. Learn. (Ijet) 2020;15:83–99. doi: 10.3991/ijet.v15i08.12415.

- 61. Parsons B., Magill T., Boucher A., Zhang M., Zogbo K., Bérubé S., Scheffer O., Beauregard M., Faubert J. Enhancing cognitive function using perceptual-cognitive training. Clin. EEG Neurosci. 2016;47:37–47. doi: 10.1177/1550059414563746.

- 62.Tran J., Gallagher Poehls C. Honors Thesis. Loyola Marymount University; Los Angeles, CA, USA: 2018. NeuroTracker Cognitive Function and its Relationship to GPA in College Students; p. 219. [Google Scholar]

- 63.Moen F., Hrozanova M., Stiles T. The effects of perceptual-cognitive training with Neurotracker on executive brain functions among elite athletes. Cogent Psychol. 2018;5:1544105. doi: 10.1080/23311908.2018.1544105. [DOI] [Google Scholar]

- 64.Vartanian O., Coady L., Blackler K. 3D multiple object tracking boosts working memory span: Implications for cognitive training in military populations. Mil. Psychol. 2016;28:353–360. doi: 10.1037/mil0000125. [DOI] [Google Scholar]

- 65.Corbin-Berrigan L.-A., Kowalski K., Faubert J., Christie B., Gagnon I. Could Neurotracker be used as a clinical marker of recovery following pediatric mild traumatic brain injury? An exploratory study. Brain Inj. 2020;34:385–389. doi: 10.1080/02699052.2020.1723699. [DOI] [PubMed] [Google Scholar]

- 66.Corbin-Berrigan L.-A., Kowalski K., Faubert J., Christie B., Gagnon I. Three-dimensional multiple object tracking in the pediatric population: The NeuroTracker and its promising role in the management of mild traumatic brain injury. Neuroreport. 2018;29:559–563. doi: 10.1097/WNR.0000000000000988. [DOI] [PubMed] [Google Scholar]

- 67.Legault I., Allard R., Faubert J. Healthy older observers show equivalent perceptual-cognitive training benefits to young adults for multiple object tracking. Front. Psychol. 2013;4:323. doi: 10.3389/fpsyg.2013.00323. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 68.Byrom B., McCarthy M., Schueler P., Muehlhausen W. Brain monitoring devices in neuroscience clinical research: The potential of remote monitoring using sensors, wearables, and mobile devices. Clin. Pharmacol. Ther. 2018;104:59–71. doi: 10.1002/cpt.1077. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 69.Liao L.-D., Lin C.-T., McDowell K., Wickenden A.E., Gramann K., Jung T.-P., Ko L.-W., Chang J.-Y. Biosensor technologies for augmented brain–computer interfaces in the next decades. Proc. IEEE. 2012;100:1553–1566. doi: 10.1109/JPROC.2012.2184829. [DOI] [Google Scholar]

- 70.Luneski A., Konstantinidis E., Bamidis P. Affective medicine. Methods Inf. Med. 2010;49:207–218. doi: 10.3414/ME0617. [DOI] [PubMed] [Google Scholar]

- 71.Lystad R.P., Pollard H. Functional neuroimaging: A brief overview and feasibility for use in chiropractic research. J. Can. Chiropr. Assoc. 2009;53:59. [PMC free article] [PubMed] [Google Scholar]

- 72.Schmidt L.A., Trainor L.J. Frontal brain electrical activity (EEG) distinguishes valence and intensity of musical emotions. Cogn. Emot. 2001;15:487–500. doi: 10.1080/02699930126048. [DOI] [Google Scholar]

- 73.Tandle A.L., Joshi M.S., Dharmadhikari A.S., Jaiswal S.V. Mental state and emotion detection from musically stimulated EEG. Brain Inform. 2018;5:1–13. doi: 10.1186/s40708-018-0092-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 74.Trainor L.J., Schmidt L.A. Processing Emotions Induced by Music. Oxford University Press; New York, NY, USA: 2003. pp. 310–324.

- 75.Gonzalez H.A., Muzaffar S., Yoo J., Elfadel I.M. BioCNN: A hardware inference engine for EEG-based emotion detection. IEEE Access. 2020;8:140896–140914. doi: 10.1109/ACCESS.2020.3012900.

- 76.Leon E., Clarke G., Sepulveda F., Callaghan V. Real-time physiological emotion detection mechanisms: Effects of exercise and affect intensity; Proceedings of the 2005 IEEE Engineering in Medicine and Biology 27th Annual Conference; Shanghai, China. 17–18 January 2006; pp. 4719–4722.

- 77.Joshi V.M., Ghongade R.B. EEG based emotion detection using fourth order spectral moment and deep learning. Biomed. Signal Process. Control. 2021;68:102755. doi: 10.1016/j.bspc.2021.102755.

- 78.Boto E., Seedat Z.A., Holmes N., Leggett J., Hill R.M., Roberts G., Shah V., Fromhold T.M., Mullinger K.J., Tierney T.M. Wearable neuroimaging: Combining and contrasting magnetoencephalography and electroencephalography. NeuroImage. 2019;201:116099. doi: 10.1016/j.neuroimage.2019.116099.

- 79.Chen Y.-H., Sawan M. Trends and challenges of wearable multimodal technologies for stroke risk prediction. Sensors. 2021;21:460. doi: 10.3390/s21020460.

- 80.Rosch J.L., Vogel-Walcutt J.J. A review of eye-tracking applications as tools for training. Cogn. Technol. Work. 2013;15:313–327. doi: 10.1007/s10111-012-0234-7.

- 81.Preuschoff K., ’t Hart B.M., Einhäuser W. Pupil dilation signals surprise: Evidence for noradrenaline’s role in decision making. Front. Neurosci. 2011;5:115. doi: 10.3389/fnins.2011.00115.

- 82.Brunyé T.T., Gardony A.L. Eye tracking measures of uncertainty during perceptual decision making. Int. J. Psychophysiol. 2017;120:60–68. doi: 10.1016/j.ijpsycho.2017.07.008.

- 83.Johansson R., Johansson M. Look here, eye movements play a functional role in memory retrieval. Psychol. Sci. 2014;25:236–242. doi: 10.1177/0956797613498260.

- 84.Christman S.D., Garvey K.J., Propper R.E., Phaneuf K.A. Bilateral eye movements enhance the retrieval of episodic memories. Neuropsychology. 2003;17:221. doi: 10.1037/0894-4105.17.2.221.

- 85.Hannula D.E., Althoff R.R., Warren D.E., Riggs L., Cohen N.J., Ryan J.D. Worth a glance: Using eye movements to investigate the cognitive neuroscience of memory. Front. Hum. Neurosci. 2010;4:166. doi: 10.3389/fnhum.2010.00166.

- 86.Viswanathan A.R. Head-Mounted Eye Tracker. Cornell University; New York, NY, USA: 2011.

- 87.Huang M.X., Kwok T.C., Ngai G., Chan S.C., Leong H.V. Building a personalized, auto-calibrating eye tracker from user interactions; Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems; San Jose, CA, USA. 7–12 May 2016; pp. 5169–5179.

- 88.Mourant R.R., Rockwell T.H. Mapping eye-movement patterns to the visual scene in driving: An exploratory study. Hum. Factors. 1970;12:81–87. doi: 10.1177/001872087001200112.

- 89.Holmqvist K., Blignaut P. Small eye movements cannot be reliably measured by video-based P-CR eye-trackers. Behav. Res. Methods. 2020;52:2098–2121. doi: 10.3758/s13428-020-01363-x.

- 90.Ware C., Mikaelian H.H. An evaluation of an eye tracker as a device for computer input; Proceedings of the SIGCHI/GI conference on Human factors in computing systems and graphics interface; Toronto, ON, Canada. 5–9 April 1987; pp. 183–188.

- 91.Economou S., Stefanis C. Electrooculographic (EOG) findings in manic-depressive illness. Acta Psychiatr. Scand. 1979;60:155–162. doi: 10.1111/j.1600-0447.1979.tb03583.x.

- 92.Blue C. Utilizing eye-tracking technology and data to augment UX-UI web development curriculum; Proceedings of the INTED2021 Proceedings; Online. 8–9 March 2021; pp. 424–430.

- 93.Malmivuo J., Plonsey R. Bioelectromagnetism: Principles and Applications of Bioelectric and Biomagnetic Fields. Oxford University Press; New York, NY, USA: 1995. p. 2.

- 94.Kosmyna N., Morris C., Sarawgi U., Nguyen T., Maes P. AttentivU: A wearable pair of EEG and EOG glasses for real-time physiological processing; Proceedings of the 2019 IEEE 16th International Conference on Wearable and Implantable Body Sensor Networks (BSN); Chicago, IL, USA. 19–22 May 2019; pp. 1–4.

- 95.Lin C.-T., Jiang W.-L., Chen S.-F., Huang K.-C., Liao L.-D. Design of a wearable eye-movement detection system based on electrooculography signals and its experimental validation. Biosensors. 2021;11:343. doi: 10.3390/bios11090343.

- 96.Bulling A., Roggen D., Tröster G. Eyemote–towards context-aware gaming using eye movements recorded from wearable electrooculography; Proceedings of the International Conference on Fun and Games; Eindhoven, The Netherlands. 20–21 October 2008; pp. 33–45.

- 97.Bulling A., Roggen D., Tröster G. Wearable EOG goggles: Seamless sensing and context-awareness in everyday environments. J. Ambient Intell. Smart Environ. 2009;1:157–171. doi: 10.3233/AIS-2009-0020.

- 98.Milanizadeh S., Safaie J. EOG-based HCI system for quadcopter navigation. IEEE Trans. Instrum. Meas. 2020;69:8992–8999. doi: 10.1109/TIM.2020.3001411.

- 99.Bulling A., Ward J.A., Gellersen H., Tröster G. Eye movement analysis for activity recognition using electrooculography. IEEE Trans. Pattern Anal. Mach. Intell. 2010;33:741–753. doi: 10.1109/TPAMI.2010.86.

- 100.Shustak S., Inzelberg L., Steinberg S., Rand D., Pur M.D., Hillel I., Katzav S., Fahoum F., De Vos M., Mirelman A. Home monitoring of sleep with a temporary-tattoo EEG, EOG and EMG electrode array: A feasibility study. J. Neural Eng. 2019;16:026024. doi: 10.1088/1741-2552/aafa05.

- 101.Duchowski A.T. A breadth-first survey of eye-tracking applications. Behav. Res. Methods Instrum. Comput. 2002;34:455–470. doi: 10.3758/BF03195475.

- 102.Rayner K. Eye movements in reading and information processing: 20 years of research. Psychol. Bull. 1998;124:372. doi: 10.1037/0033-2909.124.3.372.

- 103.Degno F., Loberg O., Zang C., Zhang M., Donnelly N., Liversedge S.P. Parafoveal previews and lexical frequency in natural reading: Evidence from eye movements and fixation-related potentials. J. Exp. Psychol. Gen. 2019;148:453. doi: 10.1037/xge0000494.

- 104.Rayner K., Castelhano M.S. Eye Movements during Reading, Scene Perception, Visual Search, and While Looking at Print Advertisements. Psychology Press; East Sussex, UK: 2008.

- 105.David E.J., Gutiérrez J., Coutrot A., Da Silva M.P., Callet P.L. A dataset of head and eye movements for 360 videos; Proceedings of the 9th ACM Multimedia Systems Conference; Amsterdam, The Netherlands. 12–15 June 2018; pp. 432–437.

- 106.Knoblich G., Ohlsson S., Raney G.E. An eye movement study of insight problem solving. Mem. Cogn. 2001;29:1000–1009. doi: 10.3758/BF03195762.

- 107.Van Marlen T., Van Wermeskerken M., Jarodzka H., Van Gog T. Effectiveness of eye movement modeling examples in problem solving: The role of verbal ambiguity and prior knowledge. Learn. Instr. 2018;58:274–283. doi: 10.1016/j.learninstruc.2018.07.005.

- 108.Sussman R., Campana E., Tanenhaus M., Carlson G. Verb-based access to instrument roles: Evidence from eye movements; Proceedings of the Poster Presented at the 8th Annual Architectures and Mechanisms of Language Processing Conference; Tenerife, Canary Islands, Spain. 2–4 September 2002.

- 109.Gagl B., Golch J., Hawelka S., Sassenhagen J., Poeppel D., Fiebach C.J. Reading at the speed of speech: The rate of eye movements aligns with auditory language processing. BioRxiv. 2018;6:429–442. doi: 10.1038/s41562-021-01215-4.

- 110.Kowler E., Anderson E., Dosher B., Blaser E. The role of attention in the programming of saccades. Vis. Res. 1995;35:1897–1916. doi: 10.1016/0042-6989(94)00279-U.

- 111.Guo J.-J., Zhou R., Zhao L.-M., Lu B.-L. Multimodal emotion recognition from eye image, eye movement and EEG using deep neural networks; Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC); Berlin, Germany. 23–27 July 2019; pp. 3071–3074.

- 112.Lenferink L., Meyerbröker K., Boelen P. PTSD treatment in times of COVID-19: A systematic review of the effects of online EMDR. Psychiatry Res. 2020;293:113438. doi: 10.1016/j.psychres.2020.113438.

- 113.Møllenbach E., Lillholm M., Gail A., Hansen J.P. Single gaze gestures; Proceedings of the 2010 symposium on eye-tracking research & applications; Austin, TX, USA. 22–24 March 2010; pp. 177–180.

- 114.Baldauf M., Fröhlich P., Hutter S. KIBITZER: A wearable system for eye-gaze-based mobile urban exploration; Proceedings of the 1st Augmented Human International Conference; Megève, France. 2–4 April 2010; pp. 1–5.

- 115.Bowers A.R., Lovie-Kitchin J.E., Woods R.L. Eye movements and reading with large print and optical magnifiers in macular disease. Optom. Vis. Sci. 2001;78:325–334. doi: 10.1097/00006324-200105000-00016.

- 116.López A., Arévalo P., Ferrero F., Valledor M., Campo J. SENSORS, 2014 IEEE. IEEE; New York, NY, USA: 2014. EOG-based system for mouse control; pp. 1264–1267.

- 117.Tangsuksant W., Aekmunkhongpaisal C., Cambua P., Charoenpong T., Chanwimalueang T. Directional eye movement detection system for virtual keyboard controller; Proceedings of the 5th 2012 Biomedical Engineering International Conference; Muang Ubon Ratchathani, Thailand. 5–7 December 2012; pp. 1–5.

- 118.Champaty B., Jose J., Pal K., Thirugnanam A. Development of EOG based human machine interface control system for motorized wheelchair; Proceedings of the 2014 Annual International Conference on Emerging Research Areas: Magnetics, Machines and Drives (AICERA/iCMMD); Kottayam, India. 24–26 July 2014; pp. 1–7.

- 119.Deng L.Y., Hsu C.-L., Lin T.-C., Tuan J.-S., Chang S.-M. EOG-based Human–Computer Interface system development. Expert Syst. Appl. 2010;37:3337–3343. doi: 10.1016/j.eswa.2009.10.017.

- 120.Ubeda A., Ianez E., Azorin J.M. An integrated electrooculography and desktop input bimodal interface to support robotic arm control. IEEE Trans. Hum.-Mach. Syst. 2013;43:338–342. doi: 10.1109/TSMCC.2013.2241758.

- 121.Ding X., Lv Z. Design and development of an EOG-based simplified Chinese eye-writing system. Biomed. Signal Process. Control. 2020;57:101767. doi: 10.1016/j.bspc.2019.101767.

- 122.Ma J., Zhang Y., Cichocki A., Matsuno F. A novel EOG/EEG hybrid human–machine interface adopting eye movements and ERPs: Application to robot control. IEEE Trans. Biomed. Eng. 2014;62:876–889. doi: 10.1109/TBME.2014.2369483.

- 123.Belkhiria C., Boudir A., Hurter C., Peysakhovich V. EOG-Based Human–Computer Interface: 2000–2020 Review. Sensors. 2022;22:4914. doi: 10.3390/s22134914.

- 124.Dhuliawala M., Lee J., Shimizu J., Bulling A., Kunze K., Starner T., Woo W. Smooth eye movement interaction using EOG glasses; Proceedings of the 18th ACM International Conference on Multimodal Interaction; Tokyo, Japan. 12–16 November 2016; pp. 307–311.

- 125.Uema Y., Inoue K. JINS MEME algorithm for estimation and tracking of concentration of users; Proceedings of the 2017 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proceedings of the 2017 ACM International Symposium on Wearable Computers; Maui, HI, USA. 11–15 September 2017; pp. 297–300.

- 126.Foy H.J., Chapman P. Mental workload is reflected in driver behaviour, physiology, eye movements and prefrontal cortex activation. Appl. Ergon. 2018;73:90–99. doi: 10.1016/j.apergo.2018.06.006.

- 127.Roy R., Venkatasubramanian K. EKG/ECG based driver alert system for long haul drive. Indian J. Sci. Technol. 2015;8:8–13. doi: 10.17485/ijst/2015/v8i19/77014.

- 128.Lin C.-T., King J.-T., Chuang C.-H., Ding W., Chuang W.-Y., Liao L.-D., Wang Y.-K. Exploring the brain responses to driving fatigue through simultaneous EEG and fNIRS measurements. Int. J. Neural Syst. 2020;30:1950018. doi: 10.1142/S0129065719500187.

- 129.Schlögl A., Keinrath C., Zimmermann D., Scherer R., Leeb R., Pfurtscheller G. A fully automated correction method of EOG artifacts in EEG recordings. Clin. Neurophysiol. 2007;118:98–104. doi: 10.1016/j.clinph.2006.09.003.

- 130.Moretti D.V., Babiloni F., Carducci F., Cincotti F., Remondini E., Rossini P., Salinari S., Babiloni C. Computerized processing of EEG–EOG–EMG artifacts for multi-centric studies in EEG oscillations and event-related potentials. Int. J. Psychophysiol. 2003;47:199–216. doi: 10.1016/S0167-8760(02)00153-8.

- 131.Woestenburg J., Verbaten M., Slangen J. The removal of the eye-movement artifact from the EEG by regression analysis in the frequency domain. Biol. Psychol. 1983;16:127–147. doi: 10.1016/0301-0511(83)90059-5.

- 132.Hosni S.M., Shedeed H.A., Mabrouk M.S., Tolba M.F. EEG-EOG based virtual keyboard: Toward hybrid brain computer interface. Neuroinformatics. 2019;17:323–341. doi: 10.1007/s12021-018-9402-0.

- 133.Lee M.-H., Williamson J., Won D.-O., Fazli S., Lee S.-W. A high performance spelling system based on EEG-EOG signals with visual feedback. IEEE Trans. Neural Syst. Rehabil. Eng. 2018;26:1443–1459. doi: 10.1109/TNSRE.2018.2839116.