Abstract

Artificial intelligence (AI), an advancement that has disrupted a wide spectrum of applications, has continued to gain momentum over the past decades. Within breast imaging, AI, especially machine learning and deep learning, supported by extensive cross-data/case referencing, has found utility in four facets: screening and detection, diagnosis, disease monitoring, and data management as a whole. Over the years, breast cancer has ranked highest in cumulative cancer risk for women across the six continents, existing in variegated forms and presenting a complicated context for medical decisions. Given the ever-increasing demand for quality healthcare, contemporary AI has been envisioned to make great strides in clinical data management and perception, with the capability to detect findings of indeterminate significance, predict prognosis, and correlate available data into a meaningful clinical endpoint. Here, the authors captured the review works over the past decades, focusing on AI in breast imaging, and systematized the included works into one usable document, which is termed an umbrella review. The present study aims to provide a panoramic view of how AI is poised to enhance breast imaging procedures. Evidence-based scientometric analysis was performed in accordance with the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guideline, resulting in 71 included review works. This study aims to synthesize, collate, and correlate the included review works, thereby identifying the patterns, trends, quality, and types of the included works captured by the structured search strategy. The present study is intended to serve as a “one-stop center” synthesis and provide a holistic bird’s eye view to readers, ranging from newcomers to existing researchers and relevant stakeholders, on the topic of interest.

Keywords: artificial intelligence, deep learning, machine learning, breast imaging, mammogram, scientometric analysis, umbrella review

1. Introduction

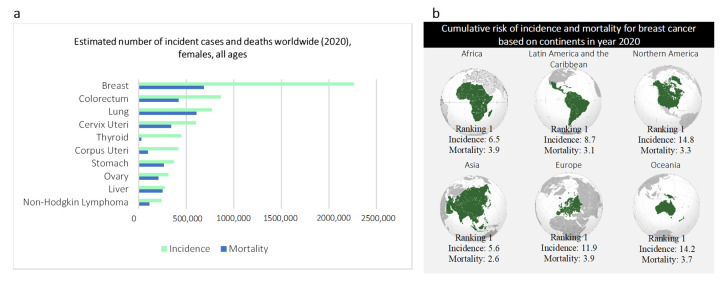

The first and oldest description of cancer found in history is that of breast cancer, discovered in Egypt and dating to approximately 3000 BC, and the term “cancer” remains in use [1,2]. The ancient Egyptian textbook on trauma surgery describes eight cases of ulcers or tumors cauterized using a fire drill, with the conclusion that there is no treatment [1,2]. To date, breast cancer remains a global challenge and the leading cause of cancer mortality among women, with 684,996 deaths (15.5% of all cancer deaths among women) reported across the world in 2020 [3]. Breast cancer is the most frequently diagnosed cancer among women, regardless of socioeconomic and geographic subgroup, race, and ethnicity [3,4] (Figure 1). Breast cancer is a non-communicable disease that emerges in variegated forms, is self-subsistent, and interacts dynamically with its microenvironment through an adaptive process. Given this complexity, breast imaging modalities have become a pivotal component of every stage in cancer management, starting from initial cancer detection, followed by accurate demarcation of neoplastic lesions, treatment response assessment, and post-treatment follow-up. The introduction of promising imaging modalities (e.g., mammograms) has reshaped the landscape and horizon of cancer management. Early detection of breast cancer using mammograms, in conjunction with advanced treatment, has been shown to decrease mortality by 30.0% as of 1989 [5,6]. This finding is supported by recent incidence-based mortality and randomized trial studies, which found that women who undergo mammogram screening have statistically significant reductions in mortality and in the probability of developing advanced breast cancer of 41.0% and 25.0%, respectively [7].

Figure 1.

Breast cancer statistics as of 2020 [3]. (a) Estimated number of incident cases and deaths worldwide in 2020 (females, all ages). (b) Cumulative risk of incidence and mortality for breast cancer by continent in 2020.

When a suspicious lesion is detected by an imaging modality, a cascade of clinical procedures comprising observation, testing, and/or empiric biopsy is initiated. According to the standard clinical protocol, the suspicious lesion is demarcated, distinguished from non-neoplastic lesions/mimickers, and classified by level of cancer aggressiveness. The optimal treatment is then proposed, and the cancer is monitored periodically over time. Although there are several exceptions (e.g., nuclear medicine imaging, in which a quantitative measurement of metabolic activity can be obtained from the standardized uptake value), conventional breast imaging evaluation relies mainly upon qualitative features (e.g., shape, size, and density of cancer). These features are collectively termed semantic features [8,9]. Thanks to advances in computational mathematics, the semantic features can now be modeled and quantified, collectively forming the core tenets of radiomics [10,11,12]. Radiomics, also known as quantitative imaging [13,14,15,16], relies on the high-throughput extraction of quantitative data, which enables the conversion of qualitative image features into mineable data [17,18,19]. As a voluminous amount of multi-dimensional data is made available via this advancement, interpreting the data efficiently and effectively may pose a challenge to medical experts. To fully utilize the extracted data as a meaningful clinical endpoint, AI could make substantial strides in data management and data perception.

AI methodologies, particularly machine learning and deep learning, have demonstrated remarkable improvements across a wide spectrum of applications. Recently, numerous studies have highlighted the strong performance of deep learning (a subset of machine learning) when evaluated against ground truth labels, in some task-specific cases surpassing conventional expert-oriented methods [20,21,22,23,24,25]. Despite its data-hungry nature, deep learning offers comprehensive management and correlation of concentrated multivariate data, with potentially no limit on cross-data/case referencing. Owing to this, the concentrated semantic data mineable from radiomics, which essentially describe the radiographic aspects of the breast, would be an ideal, scientifically rich resource for training a deep learning model. Furthermore, deep learning offers better generalizability across different imaging modalities, high robustness to noise, increased efficiency, a reduced error rate, and enriched data visualization.

At the dawn of this technological advancement, this article aims to provide a panoramic view of how AI is positioned to aid in breast imaging procedures. Over the years, AI integration within breast imaging has attracted numerous researchers to survey and review such integration along different facets. To avoid reinventing the wheel, the authors intend to connect these review works, collating and synthesizing new insights and drawing constructive conclusions while highlighting the research gaps, challenges, and potential future directions. The idea of investigating review works as the research object is unique. To systematize this purpose into one usable document, an umbrella review [26,27] was adopted, aided by scientometric analysis [28], for comprehensive qualitative and quantitative synthesis. This study aims to provide a brief yet broad overview alongside a holistic bird’s eye view to the readers, ranging from newcomers to existing researchers and relevant stakeholders, on the topic of interest.

1.1. Breast Imaging Modalities

Back in 1913, Albert Salomon, a surgeon, emeritus professor, and one of the pioneers of mammography, reported his impactful monograph on the radiographic investigation of mastectomy specimens. Salomon’s work revealed that highly infiltrating cancer was distinguishable from circumscribed cancer [29]. These efforts were resumed by Otto Kleinschmidt in 1927. Subsequently, in late 1960, a self-contained mobile mammographic unit was first developed by Philip Strax [30]. This unit was capable of providing mammography services to 70 women per day. To date, mammograms remain the mainstay in breast imaging [9,31,32,33].

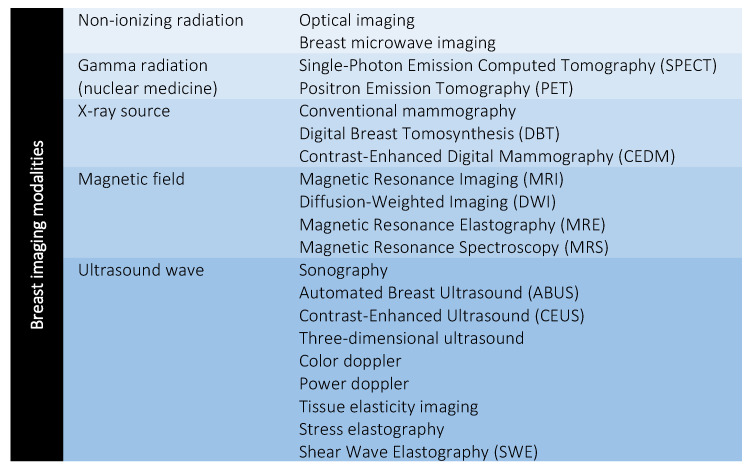

With the ever-increasing demand for quality healthcare, researchers have actively sought promising adjunctive imaging modalities to complement screening outputs. Mammography is reported to have a sensitivity of 68.0%–90.0% for women at the age of 50 and approximately 62.0% for women aged 40–49 [34]. The sensitivity of mammography decreases to 57.0% for women with dense breasts but increases up to 93.0% for women with breasts high in adipose tissue [35]. In 2000, the first digital mammography system, the Senographe 2000 D, was approved by the Food and Drug Administration (FDA). The Senographe 2000 D is equipped with an X-ray generator, and its image acquisition method is similar to that of conventional film-screen mammography [29]. In later years, digital mammography advanced to digital breast tomosynthesis (DBT) and contrast-enhanced digital mammography (CEDM). Other promising imaging modalities (e.g., ultrasonography and magnetic resonance imaging (MRI)) have gradually been introduced as adjuncts to mammograms for breast cancer screening. Several randomized trial studies have highlighted the applicability of these adjunctive modalities in complementing the sensitivity of mammograms, especially in the detection of early-stage and infiltrating cancers in asymptomatic women, regardless of breast density [36,37,38]. To date, approximately 20 breast imaging modalities are available to improve screening sensitivity and support medical experts with assistive information. Figure 2 summarizes the breast imaging modalities, clustered in accordance with different sources of radiation.

Figure 2.

Breast imaging modalities. Figure reproduced from [34] under Creative Commons CC BY license, Springer Nature.

1.2. AI in Breast Imaging

The idea of machines that think critically and mimic intelligent behavior was first conceived by Alan Turing back in 1950 [39]. The phrase “artificial intelligence” was later coined by John McCarthy in 1956 to specifically denote the subject area of science and engineering in machine intelligence [39,40,41]. Over the years, continuous exploration has reshaped and evolved AI, which now broadly encompasses applications in everyday life. Within breast imaging, advancements in both computers and various imaging modalities have synergistically led to rapid progress in AI integration.

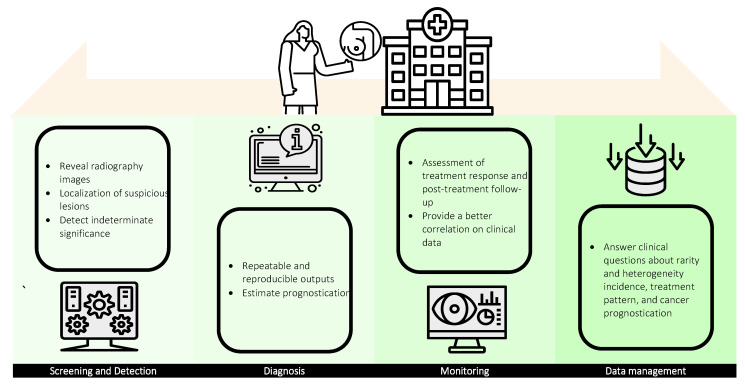

AI finds great value in cancer management, comprising four facets: screening and detection, diagnosis, monitoring, and data management as a whole (Figure 3). Screening is a clinical procedure that acquires radiographic images of the breast using breast imaging modalities, while detection refers to the localization of objects of interest (e.g., signs of cancer) in these images. Both screening and detection fall under the umbrella term of computer-aided detection (CADe). AI-assisted screening methodologies have been found to improve radiographic image quality and facilitate subsequent detection and diagnosis procedures for suspicious lesions [42,43]. Although AI-based detection tools are no more accurate than radiologists in cancer detection [44,45], CADe offers consistent sensitivity in the detection of subtle changes, especially those of indeterminate significance [46]. To date, pattern recognition algorithms and deep learning models are employed as auxiliary aids to locate microcalcification lesions and to support the detection of abnormalities that might otherwise be missed, which generally improves the sensitivity of suspicious lesion detection.

Figure 3.

Utility of AI in breast imaging: screening and detection, diagnosis, monitoring, and data management.

Diagnosis is a systematic procedure comprising the evaluation of disease etiology, patient medical history, physical examination, diagnostic imaging, and laboratory results. With the integration of AI, computer-aided diagnosis (CADx) offers repeatable and reproducible outputs, such as staging (based on the tumor, node, metastasis (TNM) classification [47]) and grading (based on the Nottingham Histopathology Grading (NHG) system [48]) of breast cancer, via systematic processing of quantitative lesion information. CADx could also be extended to estimate prognosis in accordance with the detected ailment and to predict the possible outcomes of the given clinical treatments. Similar to its role in radiomics, AI has a paramount role in interpreting imaging genomics, which provides meaningful multivariate biological information (e.g., gene expression, chromosome copy number, somatic mutation, and relevant molecular signatures). Correlating information from both of these emerging fields with the help of AI has great potential to complement diagnosis output and, synergistically, to lead to precision medicine.

Temporal monitoring of cancer has a paramount role in cancer management, especially in the assessment of treatment response and post-treatment follow-up. The present clinical routine in cancer monitoring using predefined metrics (e.g., tumor longest diameter) listed by the World Health Organization (WHO) and Response Evaluation Criteria in Solid Tumors (RECIST) provides limited information and is found insufficient to fully define the cancer status [49,50]. AI-based monitoring, however, can provide a better correlation of meaningful clinical data regardless of the complexity with improved capability in capturing subtle changes over time. Although a mature AI-based monitoring system is not yet available, the great utility and increasing roles of AI in cancer temporal monitoring are remarkable.

In every stage of cancer management, healthcare systems generate multi-dimensional data and are establishing themselves as big-data repositories. Big data are defined as a large amount of information that grows in three aspects: volume, velocity, and variety (known as the 3 Vs), and that is unmanageable using conventional methods, such as software and/or internet-based platforms [51,52]. Big data are highly relevant in healthcare, especially in answering clinical questions about rarity and heterogeneity of incidence, treatment patterns, and cancer prognosis. In recent years, the National Institutes of Health (NIH) has taken the initiative toward establishing electronic health records by launching the “All of Us” program (https://allofus.nih.gov/), which aims to collect data from one million or more patients over the next few years. Because these challenges involve managing and analyzing big data retrospectively and predicting potential outputs, AI is well suited to resolving them.

2. Systematic Literature Search Methodology

2.1. Research Question

The primary research question of this umbrella review is: “What are the patterns, trends, quality, and types of reviews that documented AI applications in breast imaging over the years?” The research question is intentionally formulated in a broad manner to maximize the inclusion of review works describing AI applications in different breast imaging modalities.

2.2. Search Strategy

The umbrella review is intended to include review articles over the past decades. Thus, no date or year restriction was included in the search strategy. The systematic search was conducted in accordance with the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines [53]. To identify the relevant review works, a structured search query was first formalized by combining keywords from two primary facets, AI and breast imaging, alongside a set of synonym terms (Table 1), using the “AND” and “OR” operators. AI and breast imaging were used as the primary keywords to assure the inclusion of all terms that fall under the umbrella of each keyword. For example, machine learning, deep learning, computer vision, data science, and automation fall under AI, and all 20 breast imaging modalities fall under breast imaging. The comprehensive search was performed in databases such as PubMed, Scopus, Web of Science, and Google Scholar. Once a relevant review work was identified, its references were screened to identify new relevant review works that had not been retrieved in the initial search. This process was iterated until no new relevant review works were found.

Table 1.

Search string.

| Operator | Dimensions | Keywords, Synonyms, and Alternative Terms |
|---|---|---|
| AND | AI | artificial intelligence OR AI OR machine learning OR deep learning OR expert system |
|  | Breast imaging | breast imaging OR breast imaging modality |
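The way the two facets in Table 1 combine can be sketched in a few lines of Python. This is only an illustration: the function name `build_query` and the use of double quotes around each term are assumptions, and each database (PubMed, Scopus, Web of Science, Google Scholar) has its own query syntax that the source does not reproduce.

```python
# Facets and synonym terms copied from Table 1.
FACETS = {
    "AI": [
        "artificial intelligence", "AI", "machine learning",
        "deep learning", "expert system",
    ],
    "Breast imaging": ["breast imaging", "breast imaging modality"],
}


def build_query(facets):
    """OR the terms inside each facet, then AND the facets together."""
    groups = []
    for terms in facets.values():
        # Quoting each term is an assumption, not a rule taken from the source.
        joined = " OR ".join(f'"{term}"' for term in terms)
        groups.append(f"({joined})")
    return " AND ".join(groups)


query = build_query(FACETS)
print(query)
```

A record matches only if it contains at least one term from each facet, which is what keeps AI-only or imaging-only reviews out of the initial retrieval.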

2.3. Study Selection

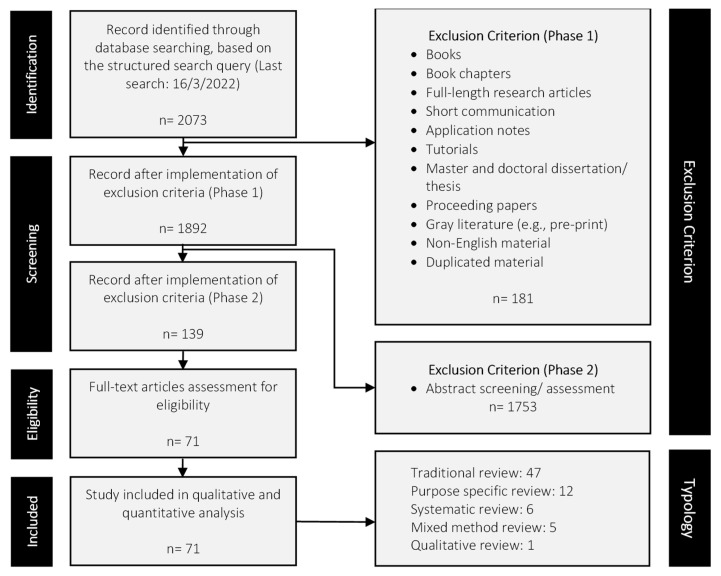

To assure the quality and acceptability of the included works, only peer-reviewed journal review articles were considered eligible. In Phase 1, books, book chapters, full-length research articles, short communications, application notes, tutorials, master’s and doctoral theses, proceedings papers, gray literature (e.g., pre-prints), non-English material, and duplicated material were excluded. The last search using the structured search query was performed on 16 March 2022. In Phase 2, abstract screening was performed first, and the remaining review works were subsequently subjected to full-text screening. The primary inclusion criteria are: (1) the review work must describe the integration of AI and/or any subset of AI and (2) the review work must focus on one or more modalities within breast imaging. Review works that did not comply with the inclusion criteria were considered irrelevant and excluded. For example, review works reporting solely on applications of AI (or any subset of AI) in healthcare, or documenting solely the application of imaging modalities within breast cancer, are not of interest to this umbrella review. Figure 4 summarizes the study selection under the PRISMA guideline alongside the exclusion criteria of this umbrella review. Overall, 2073 review works were retrieved from the initial search using the structured search query. In Phase 1, 181 review works were excluded, leaving 1892 articles. Abstract screening in Phase 2 eliminated 1753 review works, leaving 139 articles. After the full-text screening, 71 review works remained, and these articles were included in the subsequent qualitative and quantitative synthesis activities.
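The selection counts reported above can be checked with simple arithmetic. The short sketch below uses only the numbers stated in the text; the number of works excluded at full-text screening is not stated and is derived here as a difference.

```python
# Counts stated in the study selection paragraph.
retrieved = 2073
excluded_phase1 = 181      # document type, language, duplicates
excluded_abstract = 1753   # Phase 2 abstract screening
included = 71              # remaining after full-text screening

after_phase1 = retrieved - excluded_phase1
after_abstract = after_phase1 - excluded_abstract

# Derived value: the text gives only the before and after totals.
excluded_full_text = after_abstract - included

print(after_phase1, after_abstract, excluded_full_text)
```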

Figure 4.

Study selection flowchart based on the PRISMA guidelines [53].

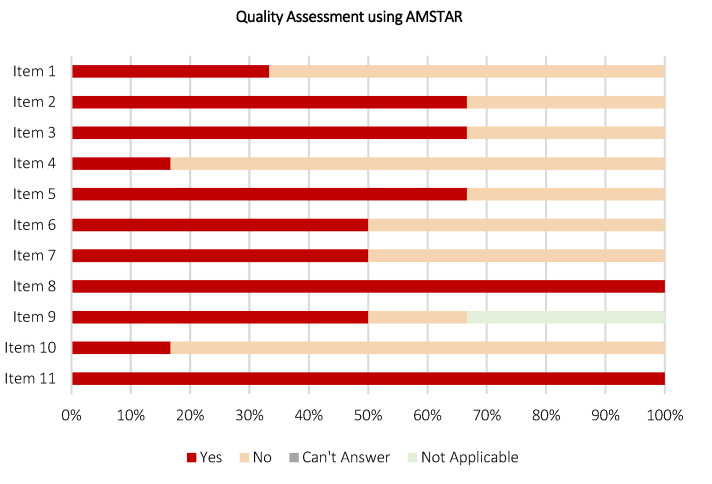

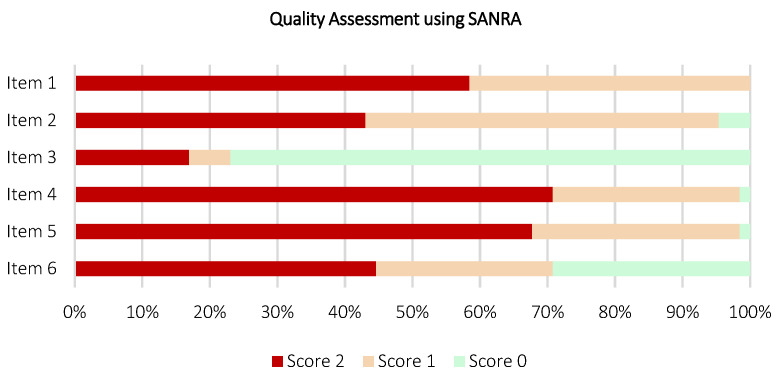

2.4. Assessment of Reporting and Study Quality

Quality assessment in review works is crucial and reflects capability in preparing comprehensible and subjective reporting. Depending on the type of review, the quality of reporting in the included review works was assessed using appraisal tools, such as A Measurement Tool to Assess Systematic Reviews (AMSTAR) [54] and the Scale for the Assessment of Narrative Review Articles (SANRA) [55]. These appraisal tools provide a checklist for the assessment and validation of reporting quality, determining whether the review works are comprehensive, add value for the reader, and are properly referenced, and assessing the likelihood of publication bias. AMSTAR is used to assess the methodological quality of systematic reviews and consists of 11 components for content validity. SANRA, by contrast, is used to assess the methodological quality of non-systematic reviews and consists of six components. Depending on the type of review, each component within AMSTAR or SANRA was analyzed for each included work by two authors independently and answered with “Yes”, “No”, “Can’t answer”, or “Not applicable” (for AMSTAR) or given a score ranging from 0 (general low) to 2 (general high) (for SANRA). A third author was involved if and only if discordances arose in the assessment of reporting quality. The AMSTAR and SANRA results are used here primarily to provide a summary of the reporting quality of the included works. For this umbrella review, similarly, the content validity, risk of bias, and applicability were assessed by two authors independently using the AMSTAR appraisal tool.
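The two-reviewer procedure for SANRA can be illustrated with a minimal sketch. The score sheets below are hypothetical and are not data from this study; the function names are likewise illustrative. The only facts taken from the text are that SANRA has six components, that each is scored from 0 to 2, and that a third author is involved only when the two assessments disagree.

```python
SANRA_ITEMS = 6            # SANRA consists of six components
VALID_SCORES = {0, 1, 2}   # each scored from 0 (low) to 2 (high)


def check(scores):
    """Raise if a score sheet does not match the SANRA layout."""
    if len(scores) != SANRA_ITEMS or not set(scores) <= VALID_SCORES:
        raise ValueError("expected six scores, each 0, 1, or 2")


def discordant_items(rater_a, rater_b):
    """Return 0-based indices of components the two raters scored differently."""
    check(rater_a)
    check(rater_b)
    return [i for i, (a, b) in enumerate(zip(rater_a, rater_b)) if a != b]


# Hypothetical score sheets for one review work.
rater_a = [2, 1, 2, 0, 1, 2]
rater_b = [2, 1, 1, 0, 1, 2]

disputed = discordant_items(rater_a, rater_b)
needs_third_author = bool(disputed)
print(disputed, needs_third_author)
```

An AMSTAR sheet could be handled the same way by swapping the numeric scores for the four categorical answers and the six components for eleven.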

2.5. Data Extraction

To answer the research question, qualitative and quantitative data from the included review works were meticulously distilled in order to create a meaningful and functional summary endpoint. Qualitative data, such as the type of imaging modalities, AI techniques, and highlights of the included review work, were extracted, organized, compiled, and tabulated to offer a “one-stop center” synthesis. Quantitative data, such as bibliometric information and co-occurrence analysis, were collated and synthesized to offer a “bird’s eye” view of the topic of interest. The mineable data were systematized using graphics, charts, and tables. For each included review work, information such as author name and affiliation was manually disambiguated, e.g., “Lamb, Leslie R.”, “Lamb”, and “Leslie R. Lamb” refer to the same author; “Harvard Medical School” and “HMS” refer to the same affiliation. Without this disambiguation step, subsequent quantitative synthesis using software such as VOSviewer would interpret these variants as independent authors or affiliations.
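The disambiguation step was performed manually in this study. Purely as an illustration of what such a step does, the sketch below normalizes name order and punctuation automatically and resolves the remaining variants through a hand-written alias table. The function names and the alias table are assumptions; the example strings are the ones quoted in the text.

```python
def normalize(name):
    """Lower-case, drop punctuation, and reorder 'Last, First' to 'first last'."""
    if "," in name:
        last, first = (part.strip() for part in name.split(",", 1))
        name = f"{first} {last}"
    kept = "".join(ch for ch in name.lower() if ch.isalpha() or ch.isspace())
    return " ".join(kept.split())


# Hand-curated decisions that no string rule can make safely:
# a bare surname or an acronym needs a human to confirm the match.
MANUAL_ALIASES = {
    "lamb": "leslie r lamb",
    "hms": "harvard medical school",
}


def canonical(name):
    """Map a raw author or affiliation string to one canonical key."""
    key = normalize(name)
    return MANUAL_ALIASES.get(key, key)


authors = ["Lamb, Leslie R.", "Lamb", "Leslie R. Lamb"]
print({canonical(a) for a in authors})
print(canonical("HMS") == canonical("Harvard Medical School"))
```

Keeping the alias table separate from the normalization rule makes every manual judgment visible and auditable, which matters when a surname such as “Lamb” could belong to more than one author.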

2.6. Data Reporting

The key findings of the umbrella review, including the data analysis and the qualitative and quantitative synthesis, are presented narratively using a descriptive approach. The analysis is supported with graphics, charts, and tables, aiming to highlight the key features and facilitate comprehension. Microsoft Excel 2019 was used for data compilation. VOSviewer 1.6.18 for Windows was used to perform bibliometric analysis [56]. The typology of the included review works is illustrated using a pie chart. The most contributing journals and publishers are illustrated using bar charts. Temporal scientometric data are illustrated using a line chart. Geographical scientometric data are illustrated using a world map chart. Subject area profiling is illustrated using a tree map chart. The quality assessment is illustrated using stacked bar charts, and co-occurrence analysis and keyword mapping are illustrated using bibliometric networks. A table is used to systematize the qualitatively mineable data (e.g., type of imaging modalities, AI techniques, and highlights of the included review work) from each included review work.

2.7. Threats to Study Validity

To minimize potential bias and assure study validity, in this review, a structured methodology was adopted: (1) Study selection bias: the search activity shall comply with the structured search query formalized in Section 2.2 and study selection criteria as in Section 2.3. (2) Data selection bias: two authors independently performed the search activity using the structured search query, followed by abstract and full-text screenings. Meetings and discussions were organized to resolve disparities raised at any phase. If necessary, a third author was engaged to draw the conclusion. (3) Functionality assessment: a self-assessment was conducted using the adapted appraisal tool, AMSTAR, to guide and assure the functionality of this umbrella review.

3. Results

To answer the primary research question of this umbrella review, qualitative and quantitative data are extracted from the 71 included review works and systematized into nine sub-sections, revealing the patterns (see Section 3.5, Section 3.6, Section 3.7 and Section 3.8), trends (see Section 3.2, Section 3.3 and Section 3.4), quality (see Section 3.9), and types of reviews (see Section 3.1) which documented AI applications in breast imaging.

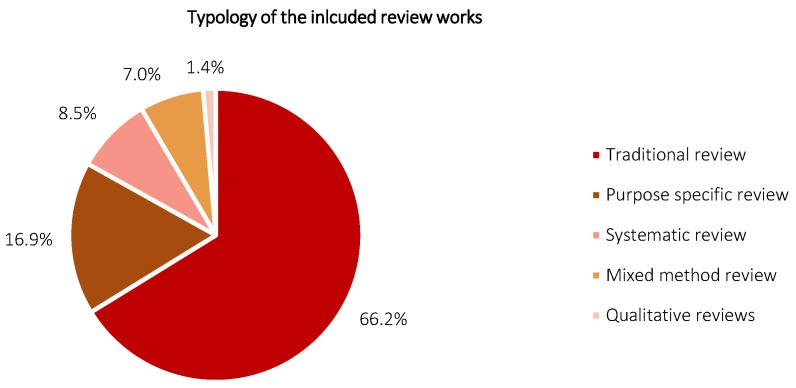

3.1. Typology of the Included Review Works

Figure 5 shows the typology of the included review works. The typology was assessed in accordance with the criteria stated in [26] and classified into traditional review, purpose-specific review, systematic review, mixed-method review, and qualitative review. In brief, a traditional review aims to include extensive literature, with efforts made to evaluate the quality of each included study, and to perform a high degree of analytic description alongside critical conceptual synthesis. A purpose-specific review focuses on identifying specific viewpoints, ideas, and/or concepts and aims to define the attributes of those viewpoints. A systematic review employs a structured search strategy, encompassing a well-defined methodology or guideline and focusing on qualitative and quantitative synthesis activities. A mixed-method review offers a combination of various review methodologies but usually includes a systematic literature review (e.g., a combination of qualitative and quantitative review methodologies). A qualitative review, alternatively termed an experiential review, encompasses qualitative data synthesis within a specific scope. According to Figure 5, the included review works comprise 66.2% (47 reviews), 16.9% (12 reviews), 8.5% (six reviews), 7.0% (five reviews), and 1.4% (one review) for traditional review, purpose-specific review, systematic review, mixed-method review, and qualitative review, respectively. The traditional review is the most prevalent typology here, followed by the purpose-specific review and the systematic review. Mixed-method and qualitative reviews are relatively scarce within the body of included works.
The distribution of typology is speculated to be highly correlated with the popularity of each review type amongst researchers in AI-dominant subject areas, such as computer science and engineering, where a remarkable rise in systematic reviews has been observed over the past five years (i.e., 2018: one review; 2021: five reviews). Traditional and purpose-specific reviews both show a noticeable lack of well-defined methodology, which signals the need for structured guidelines as one of the research avenues. Notably, the qualitative data, e.g., imaging modalities, AI techniques, and highlights of each included review work, are systematized in Appendix A (Table A1) in accordance with the respective typology.
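The reported shares follow directly from the review counts. As a quick check, the sketch below recomputes them from the counts given in the text; the dictionary keys are shorthand labels introduced here.

```python
# Review counts per typology, as reported for Figure 5.
counts = {
    "traditional": 47,
    "purpose-specific": 12,
    "systematic": 6,
    "mixed-method": 5,
    "qualitative": 1,
}

total = sum(counts.values())
shares = {name: round(100 * n / total, 1) for name, n in counts.items()}

print(total)
print(shares)
```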

Figure 5.

Typology of the included review works.

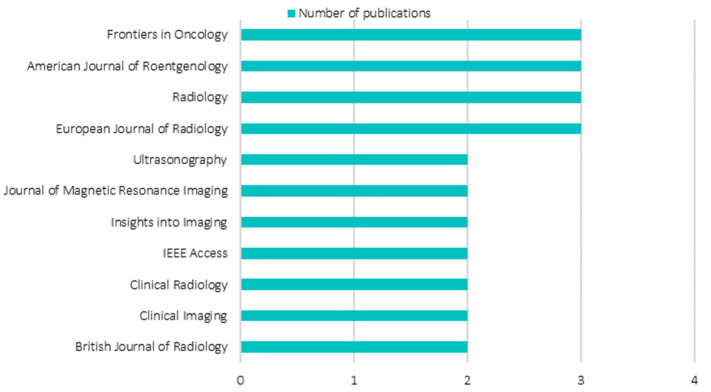

3.2. Distribution of the Most Contributing Journals

Figure 6 shows the most contributing journals publishing review articles pertaining to AI in breast imaging. Considering the ubiquitous nature of the keyword AI, and in order to avoid ambiguity, only journals with at least two review article publications are included. According to Figure 6, the journals with two review articles, each contributing 2.8% of the whole, are Ultrasonography, Journal of Magnetic Resonance Imaging, Insights into Imaging, IEEE Access, Clinical Radiology, Clinical Imaging, and British Journal of Radiology. The journals with three review articles, each contributing 4.2% of the whole, are Frontiers in Oncology, American Journal of Roentgenology, Radiology, and European Journal of Radiology. All of the aforementioned journals, apart from IEEE Access, which is a multi-disciplinary engineering journal, share highly similar aims and scope. These journals are dedicated to education and frontier strategies, typically publishing state-of-the-art reviews, opinions, and research articles in oncology, radiology, nuclear medicine, and imaging. Interestingly, none of the most contributing journals is an AI-oriented journal, such as IEEE Transactions on Pattern Analysis and Machine Intelligence. It is reasonable to conclude that the review works on AI in breast imaging are heavily skewed towards the breast imaging keyword, where AI technology is viewed as an advancement that offers improvement in various aspects of the field of interest.

Figure 6.

Analysis of most contributing journals.

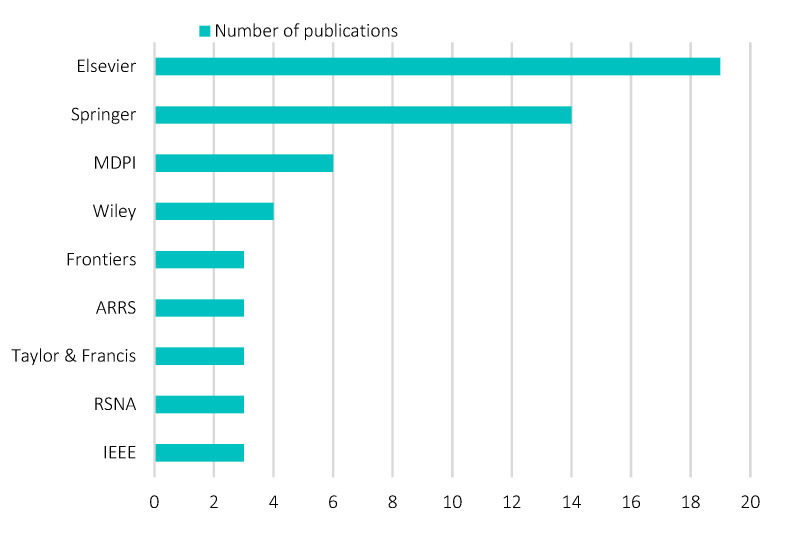

3.3. Distribution of the Most Contributing Publishers

To assure an unbiased data selection, the structured search query was performed on general databases, such as PubMed, Scopus, Web of Science, and Google Scholar, in lieu of search engines provided by specific publishers, such as ScienceDirect, SpringerLink, and Multidisciplinary Digital Publishing Institute (MDPI). Figure 7 shows the most contributing (top five) publishers of review works pertaining to AI in breast imaging. Overall, 21 publishers were retrieved. Elsevier appeared to be the most contributing publisher on the topic of interest, contributing 26.8% (19 review articles), followed by Springer (19.7%, 14 review articles), MDPI (8.5%, six review articles), and Wiley (5.6%, four review articles). Frontiers, ARRS, Taylor & Francis, RSNA, and IEEE each contributed 4.2%, equivalent to three review articles. Overall, the publishers demonstrate an oligopoly relationship on the topic of interest, with predominance held by Elsevier and Springer. This finding is aligned with a previous scientometric analysis of publications sampled across multiple topics from 1973 to 2013 [57]. The oligopoly relationship, particularly between Elsevier and Springer in the global publishing industry, is speculated to remain similar across different topics. Evidence of this is found in a recent report analyzing bibliographical information of publishers [58] as well as in a recent systematic review in precision agriculture [59]. In recent years, pressure from various stakeholders has led to a transformation of research publishing from closed access to open access. Open access journals have shown an increase in normalized impact factor and average relative citations [60], which together are statistically significant factors influencing publication tendency amongst researchers [61]. Publishers such as Elsevier, Springer, and MDPI are making swift progress in transforming journals to open access.
For example, in 2017, Elsevier indicated its intention to transition journals and work towards the principles behind the Sponsoring Consortium for Open Access Publishing in Particle Physics (SCOAP3), as well as to construct alternative access models tailored to different geographical needs. Springer is committed to accelerating the adoption of open access by complying with institutional open access agreements, and MDPI has been one of the pioneers in open-access scholarly publishing since 1996.

Figure 7.

Analysis of most contributing publishers.

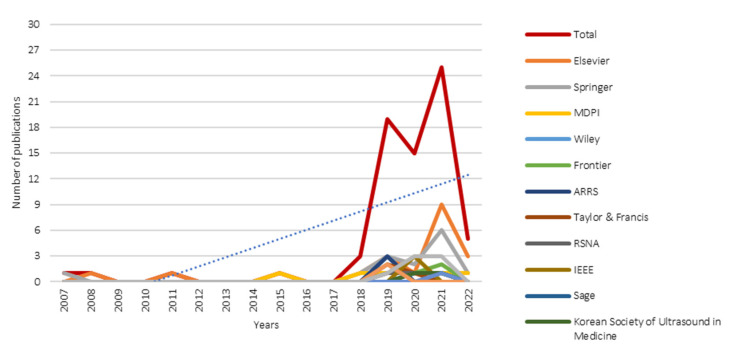

3.4. Temporal Scientometric Analysis

Figure 8 shows the temporal scientometric analysis of the included review works. As mentioned in Section 2.2, no date or year restriction was included in the structured search query. According to Figure 8, review articles complying with the structured search query could be traced back as early as 2007 (one review article, published in La Radiologia Medica, Springer). Although significant progress in understanding the theoretical underpinnings of AI began in 1956, a number of validations and hurdles must first be overcome before AI can be incorporated into a medical domain such as breast imaging. Notable research interest in the publication of review articles is observed only about a decade after the first review article in 2007 (i.e., in 2018). This observation could be attributed to the increased reliability and utility of AI across multiple non-medical fields, which indirectly raised trust in and acceptance of AI amongst medical experts. With the ever-increasing demand for quality healthcare, the “one-size-fits-all” approach is no longer valid. Progress in medical domains, such as breast imaging, is moving towards precision medicine. In recent years, researchers have actively sought rigorous clinical proof of AI utility in breast imaging and have made concerted attempts to push AI technology from pilot tests to validation in clinical trials, aiming to apply it as a fundamental segment of the standard clinical protocol. The blue dotted line in Figure 8 shows the overall publication trendline. Overall, a linear increment is observed across 15 years (i.e., 2007 to 2022).

Figure 8.

Temporal scientometric analysis of the included review works.

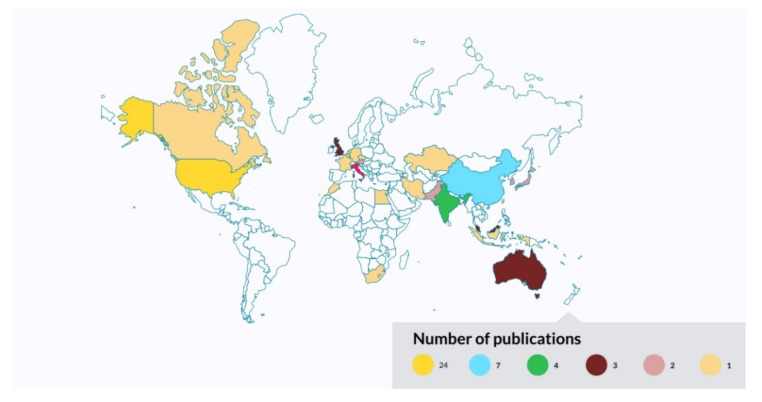

3.5. Geographical Scientometric Analysis

Figure 9 shows the geographical scientometric analysis of the included review works. For each review article, only the geographical information of the corresponding author is retrieved. For articles with more than one corresponding author, each distinct country is allowed to contribute once to the scientometric analysis. According to Figure 9, the United States has been the most contributing country summarizing research works pertaining to AI in breast imaging since 2014, corresponding to 33.8% (24 review articles) of the entire body of literature. This is followed by China (contributing 9.9%, seven review articles); India, Italy, and the United Kingdom (each contributing 5.6%, four review articles); Australia (contributing 4.2%, three review articles); and Austria, Japan, Korea, Malaysia, and Pakistan (each contributing 2.8%, two review articles).
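The reported shares can be re-derived from the review counts and the total of 71 included works. The following is a minimal sketch, not the authors' procedure: the function names are hypothetical, and the deduplication step assumes that "contribute once" means each distinct corresponding-author country is counted once per article.

```python
from collections import Counter

# Review counts per country as reported in the text
# (only countries with two or more review articles are listed there).
REPORTED_COUNTS = {
    "United States": 24, "China": 7, "India": 4, "Italy": 4,
    "United Kingdom": 4, "Australia": 3, "Austria": 2, "Japan": 2,
    "Korea": 2, "Malaysia": 2, "Pakistan": 2,
}
TOTAL_REVIEWS = 71  # number of included review works


def share(count, total=TOTAL_REVIEWS):
    """Percentage of the included reviews, rounded to one decimal place."""
    return round(100 * count / total, 1)


def count_countries(articles):
    """Count countries so that each distinct corresponding-author country
    contributes once per article (assumed reading of the counting rule).

    `articles` is a list of lists of country names, one inner list per article.
    """
    counts = Counter()
    for countries in articles:
        counts.update(set(countries))  # set() removes repeats within an article
    return counts


shares = {country: share(n) for country, n in REPORTED_COUNTS.items()}
```

Under these assumptions, `shares` reproduces the percentages quoted above, for instance 24 of 71 reviews for the United States.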

Figure 9.

Geographical scientometric analysis of the included review works.

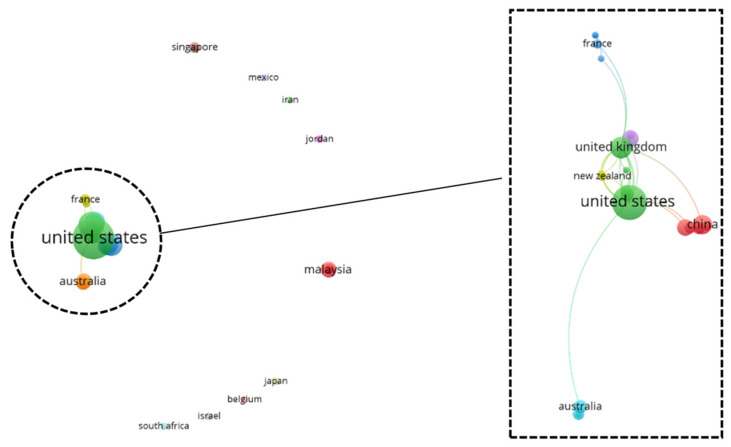

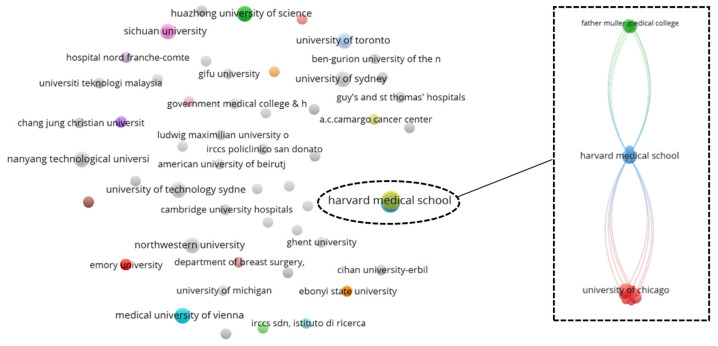

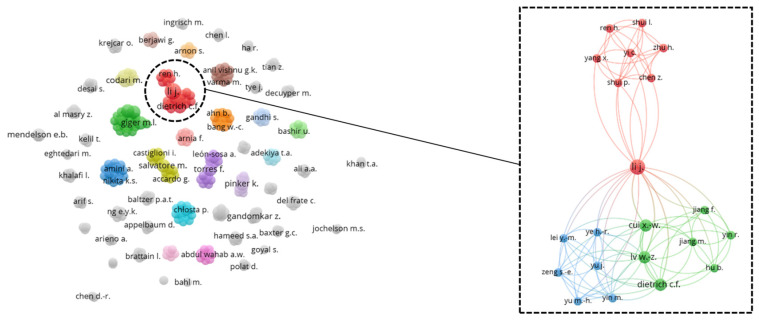

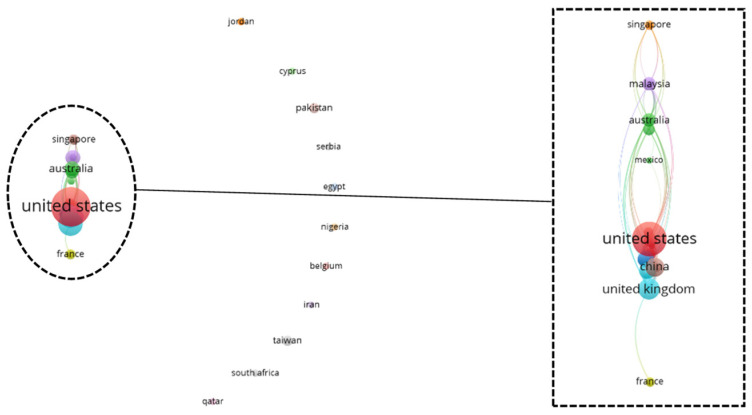

To gain a holistic overview of the captured geographical information, a top-down co-authorship bibliographic analysis is performed, analyzing three aspects on a cascading level: country (Figure 10), affiliation (Figure 11), and author (Figure 12). Co-authorship has been a commonly used, researcher-oriented operational proxy for studying research collaboration since 1980 [62]. Briefly, in Figure 10, Figure 11 and Figure 12, each item is represented using a label and a node. The size of the label and node reflects the weight of the specific item, such that the higher the weight, the larger the label and node. The color of the node reflects a local cluster, whose items are connected using lines termed links. The shorter the link, the stronger the relatedness of the two items.
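The notions of link and total link strength can be illustrated with a small sketch. It assumes that the strength of a link equals the number of documents two items (countries, affiliations, or authors) co-author, and that an item's total link strength is the sum over its links; the function names and any input data are hypothetical and are not taken from the included works.

```python
from collections import Counter
from itertools import combinations


def coauthorship_links(documents):
    """Return link strengths: how many documents each pair of items shares.

    `documents` is a list of lists, one inner list of items per document.
    """
    links = Counter()
    for items in documents:
        # Each unordered pair of distinct items in a document gains one unit.
        for a, b in combinations(sorted(set(items)), 2):
            links[(a, b)] += 1
    return links


def total_link_strength(links):
    """Sum the strengths of all links attached to each item."""
    strength = Counter()
    for (a, b), weight in links.items():
        strength[a] += weight
        strength[b] += weight
    return strength
```

An item that never co-authors with another item has no links, so its total link strength is zero; in the network maps, such an item would form an isolated cluster of its own.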

Figure 10.

Co-authorship bibliographic network analysis: country.

Figure 11.

Co-authorship bibliographic network analysis: affiliation.

Figure 12.

Co-authorship bibliographic network analysis: author.

According to Figure 10, the United States has the strongest total link strength among the 42 countries, attaining a value of 21, followed by the United Kingdom (total link strength of 17) and Malaysia, the Netherlands, and New Zealand (each with a total link strength of six). There are 15 local clusters in the country co-authorship network. Interestingly, nine small local clusters are isolated from one another, each forming a local network with fewer than two countries. The six connected clusters are highlighted in Figure 10. The United States has a strong relationship with countries such as the Netherlands, Canada, and the United Kingdom. This could be attributed to efforts by government agencies, such as the National Institutes of Health (NIH), in promoting medical research and supporting scientific studies in the medical division.

According to Figure 11, Harvard Medical School is the affiliation with the strongest total link strength (15) among the 162 affiliations. A total of 52 local clusters are found in the affiliation co-authorship network, of which 49 are isolated from one another. The remaining three connected clusters are highlighted in Figure 11. Amongst the connected clusters, Harvard Medical School has the highest connectivity weight, with a close relatedness to MIT Lincoln Laboratory, forming a small local cluster with only two affiliations.

According to Figure 12, the author Li J. has the strongest total link strength, with a value of 23, followed by Giger M.L., Gillies R.J., and Schabath M.B., each with a total link strength of 19. A total of 327 authors are found in the author co-authorship network, forming 54 clusters. Amongst the clusters, only three are inter-connected, and these are highlighted in Figure 12.

It is undeniable that research collaboration greatly improves scientific findings and strengthens concerted efforts in resolving complex engineering problems. International collaboration for AI integration in breast imaging is, however, below expectations. Based on the network analysis, in a broad view, the high number of isolated local clusters reflects a low rate of international collaboration on the three cascaded levels. This indicates the need for efforts in nurturing multinational collaboration, establishing global resources, and sharing knowledge for the mutual good in resolving world health issues.

3.6. Bibliographic Coupling Network Analysis: Country

Bibliographic coupling is analogous to co-authorship but is publication oriented, and it is useful for recommending relevant works. Two documents are bibliographically coupled when they cite a common work as a reference [63]. Here, a strong total link strength reflects a high number of common works cited as references in the respective review articles. Figure 13 shows the bibliographic coupling network, analyzed at the country level. According to Figure 13, the United States has the strongest total link strength, with a value of 646, followed by the United Kingdom (total link strength: 630), China (total link strength: 357), Australia (total link strength: 329), and South Korea (total link strength: 328). A total of 21 clusters are found across 42 items, of which 13 local clusters are isolated from one another. The eight connected clusters are highlighted in Figure 13. Notably, the top five countries with the strongest total link strength are within the eight connected clusters, alongside other remarkable items (e.g., Singapore, Malaysia, Italy, and France), creating local networks and subsequently local clusters.
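The definition of bibliographic coupling can be sketched at the document level as follows. This is an illustrative sketch only: the coupling strength of two documents is taken to be the number of references they share, the function name is hypothetical, and the aggregation to country level used for Figure 13 is not reproduced here.

```python
from itertools import combinations


def coupling_strength(references):
    """Return the number of shared references for each coupled document pair.

    `references` maps a document identifier to the set of works it cites.
    Pairs with no reference in common are not coupled and are omitted.
    """
    strengths = {}
    for a, b in combinations(sorted(references), 2):
        shared = references[a] & references[b]  # works cited by both documents
        if shared:
            strengths[(a, b)] = len(shared)
    return strengths
```

For instance, two reviews that both cite the same two works are coupled with a strength of two, whereas a review sharing no reference with any other remains uncoupled.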

Figure 13.

Bibliographic coupling network analysis: country.

3.7. Subject Area Profiling

Figure 14 shows a tree map profiling the subject areas of the included review works, distilled across 56 journals in accordance with the Scopus database. Importantly, journals can be indexed in one or more subject areas, positioned in accordance with the aims and scope of the journal. For example, CA: A Cancer Journal for Clinicians is a medical research-oriented journal indexed in a single subject area, Medicine. In contrast, Sensors is a multi-disciplinary journal indexed across five distinct subject areas: Physics and Astronomy; Engineering; Computer Science; Chemistry; and Biochemistry, Genetics, and Molecular Biology. According to Figure 14, most of the journals are within the subject area of Medicine (76.8%, 43 journals), followed by Computer Science (21.4%, 12 journals), Engineering (17.9%, 10 journals), Biochemistry, Genetics, and Molecular Biology (17.9%, 10 journals), and Physics and Astronomy (10.7%, six journals). The subject areas collated are plausible and aligned with the finding in Figure 6, such that the most contributing journals pertaining to AI in breast imaging are within the medical division and are biased towards the keyword: breast imaging.

Figure 14.

Subject area profiling.

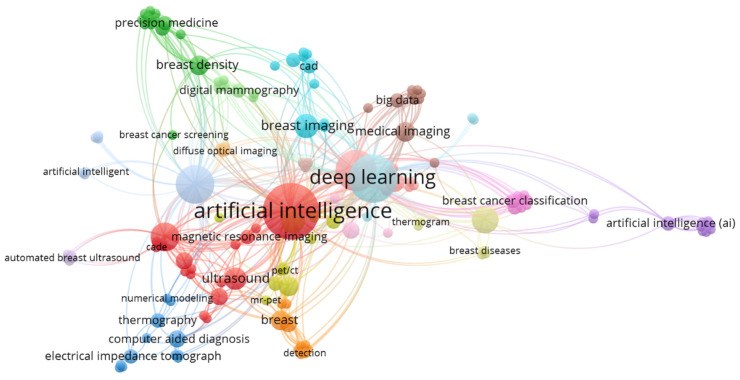

3.8. Keywords Co-Occurrence Network

Figure 15 shows the keywords co-occurrence network distilled from the included review articles, which imparts the trend and focus of the scientific interest within AI in breast imaging over the past decades. The network comprises 150 items, forming 18 local clusters, and is dominated by AI and its umbrella terms. AI, deep learning, and machine learning exhibit the strongest total link strength, with values of 143, 112, and 91, respectively, followed by breast cancer (total link strength: 76) and mammogram (total link strength: 38). According to Figure 15, although various adjunctive breast imaging modalities (e.g., ultrasound, MRI, thermography, PET, and CT) have been studied every so often, mammogram remains the focal point for AI integration. From a broader perspective, Figure 6 and Figure 15 together reveal the researchers’ proclivity in publishing their review works, such that the choices of journals and keywords are breast imaging-oriented and AI-oriented, respectively.

Figure 15.

Indexed keywords co-occurrence mapping.

3.9. Quality Assessment of the Included Review Works

Figure 16 and Figure 17 depict the stacked bar charts for the quality assessment of the included review works, comprising six systematic reviews and 65 non-systematic reviews appraised using the AMSTAR and SANRA tools, respectively. According to Figure 16, on AMSTAR adherence, at least half of the included systematic reviews scored “yes” ratings on eight out of 11 AMSTAR components. Only two of the included systematic reviews were rated “yes” for Item 1 [64,65], and one systematic review [64] was rated “yes” for both Items 4 and 10. Overall, the included systematic reviews demonstrate promising relevance and are capable of extracting and synthesizing data from the available evidence, with a guided methodological reporting procedure to formalize findings. Nonetheless, the low number of reviews reporting data for Items 1 (“a priori” design assessment), 4 (inclusion criteria assessment), and 10 (publication bias assessment) flags the need to prioritize the reporting of these items in future research. According to Figure 17, on SANRA adherence, most of the included non-systematic reviews attained “score 2” ratings on four out of six SANRA components. For Item 2 (aims and formulation of questions assessment), 28 reviews were rated “score 2”, while 34 and three reviews were rated “score 1” and “score 0”, respectively. For Item 3 (literature search assessment), only 11 reviews were rated “score 2”, while four and 50 reviews were rated “score 1” and “score 0”, respectively. As mentioned earlier, the non-systematic reviews show a noticeable lack of well-defined methodology. The quality assessment outcomes further support this statement, as reflected by the low number of reviews reporting data for Items 2 and 3, which specifically assess the structure of the review methodology from the perspectives of research question formulation and data sourcing.
The full quality assessment scoring tables for each included review work using AMSTAR and SANRA are available in Appendix A (Table A2 and Table A3, respectively).
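The AMSTAR tallies can be re-derived from the ratings listed in Table A2. The sketch below transcribes those ratings ("+" for yes, "-" for no, "NA" for not applicable) and counts the "yes" ratings per item; the variable names are hypothetical. Under the reading that an item counts when at least half of the six reviews scored "yes", eight items result, whereas a strict majority (more than three reviews) gives five.

```python
# AMSTAR ratings per systematic review, transcribed from Table A2
# (Items 1 to 11 in order; "+" = yes, "-" = no, "NA" = not applicable).
AMSTAR = {
    "[141]": ["-", "-", "-", "-", "-", "-", "-", "+", "NA", "-", "+"],
    "[142]": ["-", "-", "-", "-", "-", "-", "-", "+", "NA", "-", "+"],
    "[143]": ["-", "+", "+", "-", "+", "+", "+", "+", "+", "-", "+"],
    "[144]": ["-", "+", "+", "-", "+", "+", "-", "+", "-", "-", "+"],
    "[65]":  ["+", "+", "+", "-", "+", "+", "+", "+", "+", "-", "+"],
    "[64]":  ["+", "+", "+", "+", "+", "-", "+", "+", "+", "+", "+"],
}
N_ITEMS = 11
N_REVIEWS = len(AMSTAR)

# Number of reviews rated "yes" for each item.
yes_counts = [sum(row[i] == "+" for row in AMSTAR.values()) for i in range(N_ITEMS)]

# Items (1-based) on which at least half, or strictly more than half,
# of the reviews were rated "yes".
at_least_half = [i + 1 for i, n in enumerate(yes_counts) if n >= N_REVIEWS / 2]
strict_majority = [i + 1 for i, n in enumerate(yes_counts) if n > N_REVIEWS / 2]
```

The resulting `yes_counts` match the "+" row of the Analysis block in Table A2, with Items 4 and 10 each receiving a single "yes" rating.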

Figure 16.

Quality assessment on six included review works (systematic review) using AMSTAR appraisal tool.

Figure 17.

Quality assessment on 65 included review works (non-systematic review) using SANRA appraisal tool.

4. Self-Assessment, Limitations, Challenges, and Future Direction

4.1. Self-Assessment

The present study focuses on the scientometric analysis of an umbrella review on the topic of AI in breast imaging over the past decades. Structured review methodological procedures were adopted to collate and formulate findings revealing the patterns, trends, quality, and types of the included review works in the topic of interest. The search strategy, publication threat, and methodologies, such as the PRISMA guideline, were adopted and are detailed in Section 2. The AMSTAR appraisal tool was used for a self-assessment of the face and content validity of the study (Table 2). According to Table 2, the present study scored “yes” for 10 of the 11 AMSTAR components, the exception being Item 4 (status of publication as an inclusion criterion). To support the self-assessed AMSTAR rating, justifications are given for each AMSTAR component, as recommended by [66]. Here, the authors advocate the inclusion of a study self-assessment within review works as part of the structured review methodological procedures. Quality assessment is a crucial instrument to assure the relevance of the review work to the topic of interest while avoiding possible bias and examining the face and content validity.

Table 2.

Study assessment on this umbrella review using AMSTAR appraisal tool.

| Item | Description | Rating | Justification |
|---|---|---|---|
| 1 | Was an ‘a priori’ design provided? | + | Yes, the research questions and inclusion criteria are provided in Section 2.1 and Section 2.3, respectively |
| 2 | Was there duplicate study selection and data extraction? | + | Yes, excluded as detailed in Figure 4 |
| 3 | Was a comprehensive literature search performed? | + | Yes, as detailed in Section 2.2 |
| 4 | Was the status of publication (i.e., grey literature) used as an inclusion criterion? | − | No, inclusion criterion is provided in Section 2.3 |
| 5 | Was a list of studies (included and excluded) provided? | + | Yes, provided in Appendix A |
| 6 | Were the characteristics of the included studies provided? | + | Yes, provided in Appendix A |
| 7 | Was the scientific quality of the included studies assessed and documented? | + | Yes, as detailed in Section 3.9 |
| 8 | Was the scientific quality of the included studies used appropriately in formulating conclusions? | + | Yes, the scientific quality of the included review works from different perspectives was considered (Section 3). Recommendations and future direction are provided in Section 4.3 |
| 9 | Were the methods used to combine the findings of studies appropriate? | + | Yes, as detailed in Section 2.6 |
| 10 | Was the likelihood of publication bias assessed? | + | Yes, as detailed in Section 2.7 and Section 4.2 |
| 11 | Was the conflict of interest stated? | + | Yes, as detailed in the Conflicts of Interest Section |

+: Yes; −: No.

4.2. Limitation

The findings of this umbrella review are subject to several limitations. First, only review works available in full text in the English language were included in the subsequent synthesis activities. This could introduce bias to the analysis outputs describing the patterns, trends, quality, and types of review work within the dedicated scope. Second, as detailed in Section 2.3, review articles solely describing AI applications (or any subset of AI) in healthcare, or solely reporting the application of various breast imaging modalities, were not within the interest of this umbrella review. As a result, review articles that present emerging AI with potentially useful insights and utility in breast imaging may have been excluded. Third, review works that were not indexed in databases such as PubMed, Scopus, Web of Science, and Google Scholar were not included in the synthesis activities.

Moving toward precision medicine, AI finds great utility in assisting medical experts at every stage involving clinical decisions. As technology continues to develop in concert with advances in engineering, mathematics, and computer science, AI has rapidly emerged as one of the primary research areas. Although the patterns, trends, and progress pertaining to AI in breast imaging are expected to evolve in the near future, the scientometric findings of this umbrella review remain viable and reflect the state of research globally over the past decades. The umbrella review follows a comprehensive search strategy using a structured methodology such as PRISMA (Section 2.2), delineates study inclusion criteria (Section 2.3), and scrutinizes the threats to study validity (Section 2.7), alongside a self-assessment using AMSTAR.

4.3. Challenges and Future Direction

Over the years, AI has been advocated in breast imaging as a potential game-changer to meet the long-standing, constantly rising demand for high-quality healthcare, typically in precision medicine. Despite all of the reported successes, a number of obstacles and bottlenecks must be surmounted prior to widespread clinical adoption. Breast imaging procedures generate medical images for diagnostic purposes, and these images are rarely curated with labels, demarcation, and delineation of cancerous, non-cancerous, and microcalcification regions. Data curation is exorbitant in terms of both time and cost, requiring meticulous effort from experienced medical experts. This remains the core challenge in automating standard clinical procedures, hindering the adoption of data-hungry AI in cross-case referencing. Although methods such as semi-supervised [67] and unsupervised [68] algorithms could potentially alleviate this challenge, other barriers, such as data imbalance, are inevitable. Considering the plethora of breast imaging modalities, standardization of the ground truth and benchmarking is crucial for generalizability in AI, typically across different ground truth providers. To date, within breast imaging, the volume of scientifically rich resources made available for training purposes is encouraging [69]. However, the accessibility and amount of curated data remain stagnant [70]. Concerted efforts from various parties, including government, clinical institutions, and universities, should be encouraged to foster the integration of AI in breast imaging.

From a regulatory standpoint, the FDA has regulated AI-assisted automated systems since the 1990s [71]. The accuracy, robustness, and generalizability of AI systems are enduring concerns. AI systems are expected to cogently demonstrate consistent and persistent applicability before obtaining clearance from the respective regulatory bodies. As aforementioned, healthcare systems are now establishing themselves as big-data repositories. Running AI architectures, such as neural networks, across big data would offer comprehensive cross-data/case referencing. This, however, raises data privacy and security concerns. Compliance with data privacy and security acts necessitates solutions such as cryptonets [72], where homomorphic encryption allows neural networks to be trained over encrypted data. AI has also challenged the patent system, as patent law presumes that inventors are human [73]. If the intellectual property (IP) resulting from AI, which could be life-saving and beneficial within the medical imaging context, is not patentable, investors may lose interest or be less incentivized to fund projects with AI-oriented inventors, leading to a nationwide depletion of AI research.

Miseducated AI algorithms could violate ethical standards, corrupt morality, signal ethical dangers, and cause severe harm, in addition to aggravating societal medical pressure [74,75]. For example, when cost was used as a proxy for healthcare requirements, AI falsely recognized that black patients were healthier than white patients who suffered from the same sickness, as fewer medical costs were spent on black patients [76]. In another example, white patients were measurably given better care than black patients who were in comparable situations [77]. An AI algorithm could be unethical by design, mirroring the unconscious racism, thoughts, and biases of its developers, and this is further complicated and exacerbated by the tension between opting for optimal healthcare or medical profit [77,78]. Moreover, an AI algorithm is inherently objective in nature, with characteristics acquired from historical training data representing specific patient cohorts. Thus, under-represented cohorts may be afflicted by inaccurate diagnoses. The fairness of AI must be given priority. Adding more data and careful parameter fine-tuning may not be a feasible remedy, as vulnerable groups, especially the under-resourced cohorts, would remain unrepresented.

To date, AI systems are likely to exhibit a “black box” characteristic, lacking interpretability, explainability, and transparency, which, in totality, hinders trust in AI systems [9,79]. Trust in AI systems is crucial, reflecting the user’s level of acceptance of the clinical outcome provided by the AI systems [80]. AI systems in breast imaging should provide the scientific reasons behind a clinical outcome and help the user decipher the underpinning factors. To keep healthcare affordable, AI should be intended not to prevent medical experts from making occasional mistakes, but to fully automate certain procedures that are currently performed by medical experts. Ultimately, AI in breast imaging should increase clinical productivity while avoiding unnecessary increases in healthcare costs.

Although AI can detect ailments and auxiliary findings that may be clinically beneficial, these findings may also be clinically irrelevant. During the clinical transition stage, the findings from AI shall be carefully distilled and interrogated by medical experts, who evaluate their relevance to avoid unnecessary increases in patients’ tension, healthcare costs, and unwanted side effects while improving AI robustness and applicability. Over time, as AI systems attain maturity, the findings from AI could be incorporated as part of the standard reporting procedure for diagnostic and prognostic purposes. Medical experts can then perform continuous temporal assessment and quality assurance on the AI systems, such as AI quality improvement (AI-QI), as suggested in [81].

AI in breast imaging is a complex problem that requires cross-disciplinary collaboration and cross-topic knowledge. To ensure the long-term success of AI integration within breast imaging, it is imperative that experts from diverse scientific backgrounds work together. Recently, convergence science has emerged as one of the research models for tackling such complex challenges [82,83]. Convergence science is an active area, and its definition is evolving over time. Nonetheless, a general consensus has been reached that convergence science is not simply a set of experts meeting one another; rather, over time, the experts absorb knowledge from one another, break through their respective silos of knowledge, reshape research mindsets, complement one another’s research experiences, and ultimately foster the development of new solutions [82].

From a broader perspective, breast imaging should not be an isolated measure of cancer. Core and incidental reporting on measures, such as molecular signatures, biomarkers, the nature of cancers, environmental factors, socioeconomic status, and social network settings, shall be considered. AI in breast imaging should be seen as one of the fundamental measures, alongside other computer-aided systems (e.g., wearable sensors and medical expert systems), to be integrated for the improvement of outcome predictions in both breast cancer diagnostic and prognostic stages.

Widespread AI adoption in breast imaging is complicated by multiple factors, involving the acquisition of funding, leadership, sustainable mechanisms, and awareness among medical practitioners. Adequate funding is not always available, and even where such funding exists, its utilization is likely to be suboptimal, with ineffective practices across multiple sectors. The right leadership is required to advocate for, manage, and oversee the implementation of AI in breast imaging. To promote the widespread adoption of AI in breast imaging regardless of geography or socioeconomic status, policies, procedures, and guidelines must be developed, and nationwide infrastructure (e.g., computer systems and internet access) must be evaluated.

5. Summary

While algorithms across a broad spectrum of breast imaging applications have demonstrated potential, AI has emerged as the dominant research paradigm, with compelling utility converging towards precision medicine and automatic triage. Integration of AI in breast imaging is flourishing and is anticipated to continue. In this formal scientometric umbrella review, the authors examined the patterns, trends, quality, and types of the included review works from the past decades in an effort to provide a concise but comprehensive overview of the topic of interest. This scientometric umbrella review functions as a scientific, scholarly communication that evaluates the level of consensus, identifies research gaps, highlights challenges, and provides commentary on the future direction of the field. For newcomers to the field, the scientometric umbrella review provides a holistic and timely overview, offers valuable insight into the intellectual landscape, aids understanding of the development of the literature, and highlights the road ahead. For existing experienced researchers, the scientometric umbrella review serves as an instrument for keeping their knowledge updated, especially in identifying research areas that are potentially relevant but beyond their immediate research topic. For relevant stakeholders, the scientometric umbrella review can be used to prioritize research and project funding to benefit areas that require impactful and immediate solutions through AI integration within breast imaging.

Appendix A

Table A1 shows the qualitative data distilled from each included review work. Table A2 and Table A3 show the AMSTAR and SANRA rating for each included review work, respectively.

Table A1.

Qualitative data distilled from each included review on imaging modalities, AI techniques, and the corresponding review highlights.

| No. | References | Imaging Modalities | AI Techniques | Cited By * | Highlights |
|---|---|---|---|---|---|
| Traditional Review | | | | | |
| 1 | [84] | Thermography | CNN | 21 | |
| 2 | [85] | Thermography | ANN: RBFN, KNN, PNN, SVM, ResNet50, SeResNet50, V Net, Bayes Net, CNN, C-DCNN, VGG-16, Hybrid (ResNet-50 and V-Net), ResNet101, DenseNet, and InceptionV3 | 11 | |
| 3 | [86] | X-ray, CT, ultrasound, MRI, nuclear, and microscopy | Deep learning | 38 | |
| 4 | [87] | Mammogram, ultrasound, and MRI | Machine learning and deep learning | 1 | |
| 5 | [88] | Mammogram, ultrasound, MRI, FDG PET/CT | Augmented intelligence and machine learning | 16 | |
| 6 | [89] | Mammogram, ultrasound, MRI | Machine learning, CADe, ANN, CNN, and NLP | 36 | |
| 7 | [90] | Mammogram and ultrasound | Machine learning | 1 | |
| 8 | [91] | Thermography and electrical impedance tomography | Machine learning and CADe | 15 | |
| 9 | [92] | Transillumination imaging, diffuse optical imaging, and near-infrared spectroscopy | Machine learning | 13 | |
| 10 | [93] | Mammogram, tomosynthesis, ultrasound, DBCT, MRI, DWI, CT, NIR fluorescence, and SPECT | SVM, ANN, and robotics | 6 | |
| 11 | [94] | PET and MRI | Fuzzy logic and neural network | 26 | |
| 12 | [9] | Mammogram, tomosynthesis, DCE-MRI, and ultrasound | CADe and CADx systems | 449 | |
| 13 | [95] | MRI | Analytical radiomic-based (human-engineered) and deep learning-based CADe | 44 | |
| 14 | [96] | MRI | Machine learning and deep learning | 4 | |
| 15 | [97] | MRI | 3D printing, augmented reality, radiomics, and machine learning | 12 | |
| 16 | [98] | Mammogram, ultrasound, and MRI | ANN, CNN, CADe, and GANs | 0 | |
| 17 | [99] | Mammogram | Machine learning (supervised, unsupervised, reinforcement, and deep learning) | 6 | |
| 18 | [100] | Mammogram and DBT | Deep learning | 89 | |
| 19 | [101] | Ultrasonography | CNN | 9 | |
| 20 | [102] | Mammogram, ultrasound, and MRI | Deep learning and CADe | 32 | |
| 21 | [103] | Mammogram | Machine learning and radiomics | 19 | |
| 22 | [104] | Mammogram, sonography, MRI, and image-guided biopsy | Deep learning and radiomics | 2 | |
| 23 | [105] | Mammogram, ultrasound, PET, and MRI | ANN, SVM, and radiomics | 1 | |
| 24 | [106] | Ultrasound | Deep learning | 5 | |
| 25 | [107] | Mammogram and ultrasound | Eye tracking tool and CADe | 10 | |
| 26 | [108] | Mammogram | CADe, CADx, machine learning, deep learning, and CNN | 48 | |
| 27 | [109] | Mammogram and tomosynthesis | Deep learning | 0 | |
| 28 | [110] | Nuclear medicine | Deep learning and radiomics | 1 | |
| 29 | [111] | MRI, CT, PET, SPECT, ultrasound, tomosynthesis, and radiology | Neural network, deep learning, and machine learning | 227 | |
| 30 | [112] | Mammogram, ultrasound, MRI, and tomosynthesis | ANN, CADe, CADx, CNN, deep learning, and machine learning | 8 | |
| 31 | [113] | Mammogram, ultrasound, and MRI | Deep learning | 5 | |
| 32 | [114] | Mammogram, ultrasound, MRI | SNN, SDAE, DBN, and CNN | 2 | |
| 33 | [115] | Mammogram, ultrasound, MRI, and tomosynthesis | Deep learning and AI-CADe | 79 | |
| 34 | [116] | Tomosynthesis, CT, and FDG PET/CT | ANN, DNN, SVM | 84 | |
| 35 | [117] | Ultrasound | Machine learning and deep learning | 8 | |
| 36 | [118] | Mammogram, tomosynthesis, ultrasonography, and MRI | CADe, radiomics, IoT, and machine learning tools | 17 | |
| 37 | [119] | Mammogram and CT | Deep learning, CADe | 6 | |
| 38 | [120] | Mammogram, ultrasound, PET, CT, and MRI | CADe, ANN | 127 | |
| 39 | [121] | Ultrasound | CADx | 20 | |
| 40 | [122] | Mammogram, ultrasound, MRI, and thermography | Machine learning, deep learning, and CADx | 42 | |
| 41 | [123] | Mammogram | Radiomics | 0 | |
| 42 | [124] | MRI and DCE-MRI | Radiogenomics | 0 | |
| 43 | [125] | Tomosynthesis, MRI, ultrasound, MBI | Machine learning and CADx | 3 | |
| 44 | [126] | Thermography | SVM, ANN, and CADx | 42 | |
| 45 | [127] | Mammogram | AI-CADe | 62 | |
| 46 | [128] | Mammogram and MRI | CNN | 1 | |
| 47 | [70] | General breast imaging | Machine learning, ANN, deep learning | 11 | |
| Purpose Specific Review | | | | | |
| 48 | [129] | Mammogram, ultrasound, SWE, SWV, and sonoelastography | CADx, SVM, CNN, LASSO, and ridge regression | 4 | |
| 49 | [130] | Mammogram, ultrasound, and MRI | Deep learning and radiogenomics | 13 | |
| 50 | [131] | Mammogram, ultrasound, and tomosynthesis | Machine learning and deep learning | 54 | |
| 51 | [132] | EIT | PSO, ANN, GA, and other machine learning algorithms | 44 | |
| 52 | [133] | DOT | Deep learning | 3 | |
| 53 | [134] | Ultrasound | Deep learning | 2 | |
| 54 | [135] | PET/CT and PET/MRI | Radiomics | 16 | |
| 55 | [136] | Mammogram | CADe and CADx | 28 | |
| 56 | [137] | Mammogram, ultrasound, DBT, and MRI | Deep learning, CADe, and CADx | 3 | |
| 57 | [138] | Ultrasound | CADe and CNN | 0 | |
| 58 | [139] | Thermography | SVM, ANN, BN, CADe, CNN, and GA | 0 | |
| 59 | [140] | Thermography | ANN | 13 | |
| Systematic Review | | | | | |
| 60 | [141] | Mammogram, ultrasound, MRI, DBPET, DWI, PWI, CT, PET/CT, and PET/MRI | Radiomics, machine learning, and deep learning | 5 | |
| 61 | [142] | Thermography | SVM, ANN, DNN, and RNN | 81 | |
| 62 | [143] | Mammogram, CT, and MRI | Machine learning, deep learning, and ANN | 1 | |
| 63 | [144] | Mammogram, ultrasound, CT, and MRI | Deep CNN | 2 | |
| 64 | [65] | Mammogram, DCE-MRI | Machine learning and deep learning | 3 | |
| 65 | [64] | Mammogram, ultrasound | CNN, ANN, DNN, MLP, SVM, DT, GA, KNN, NB, LR, LA, and GMM | 12 | |
| Mixed Method Review | | | | | |
| 66 | [145] | Ultrasound and tomosynthesis | DNN, RCNN, faster RCNN, deep CNN, and ReLU | 4 | |
| 67 | [146] | Mammogram, tomosynthesis, ultrasound, tomography, and MRI | Multilayered DNN | 12 | |
| 68 | [147] | Mammogram and MRI | Radiomics | 22 | |
| 69 | [148] | MRI | Machine learning and transfer learning | 0 | |
| 70 | [149] | Mammogram, ultrasound, and MRI | ANN, SNN, CNN, and CADe | 41 | |
| Qualitative Review | | | | | |
| 71 | [150] | Mammogram, CT, and MRI | Machine learning | 51 | |

* Data retrieved from the Scopus database as of 17 June 2022. AI: artificial intelligence; CNN: Convolutional Neural Networks; ANN: Artificial Neural Network; RBFN: Radial Basis Function Network; KNN: K-Nearest Neighbors; PNN: Probabilistic Neural Network; SVM: Support Vector Machine; C-DCNN: DeConvolutional Neural Networks; CT: Computed tomography; MRI: magnetic resonance imaging; FDG: fluorodeoxyglucose; PET: Positron Emission Tomography; CADe: computer-aided detection; NLP: natural language processing; 3D: three dimensional; DBCT: Dedicated breast computed tomography; DWI: diffusion-weighted imaging; NIR: Near-Infrared; SPECT: Single-photon emission computed tomography; CADx: computer-aided diagnosis; DCE-MRI: dynamic contrast-enhanced magnetic resonance imaging; DCIS: ductal carcinoma in situ; GANs: generative adversarial networks; DBT: digital breast tomosynthesis; CEDM: Contrast-enhanced digital mammography; SNN: Shallow Neural Network; SDAE: Stacked Denoising Autoencoder; DBN: Deep Belief Network; DNN: deep neural networks; IoT: Internet of things; MBI: molecular breast imaging; PACS: picture archiving and communications system; SWE: shear wave elastography; SWV: shear wave viscosity; LASSO: least absolute shrinkage and selection operator; MRE: Magnetic resonance elastography; EIT: Electrical impedance tomography; PSO: particle swarm optimization; GA: genetic algorithm; DOT: Diffuse optical tomography; BN: Bayesian network; CE-MRI: contrast-enhanced magnetic resonance imaging; NAC: Neoadjuvant chemotherapy; DBPET: Dedicated breast positron emission tomography; PWI: perfusion weighted imaging; RNN: recurrent neural network; MLP: Multi-layer Perceptron; DT: decision tree; NB: naïve Bayes; LR: Logistic Regression; LA: Linear discriminant analysis; GMM: Gaussian Mixture Modelling; RCNN: Region-Based Convolutional Neural Network; ReLU: rectified linear unit; ALNM: axillary lymph node metastasis.

Table A2.

AMSTAR rating for each included review work (systematic review).

| No. | References | Item 1 | Item 2 | Item 3 | Item 4 | Item 5 | Item 6 | Item 7 | Item 8 | Item 9 | Item 10 | Item 11 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Systematic Review | ||||||||||||

| 1 | [141] | − | − | − | − | − | − | − | + | ∅ | − | + |

| 2 | [142] | − | − | − | − | − | − | − | + | ∅ | − | + |

| 3 | [143] | − | + | + | − | + | + | + | + | + | − | + |

| 4 | [144] | − | + | + | − | + | + | − | + | − | − | + |

| 5 | [65] | + | + | + | − | + | + | + | + | + | − | + |

| 6 | [64] | + | + | + | + | + | − | + | + | + | + | + |

| Analysis | ||||||||||||

| + | | 2 | 4 | 4 | 1 | 4 | 3 | 3 | 6 | 3 | 1 | 6 |

| − | | 4 | 2 | 2 | 5 | 2 | 3 | 3 | 0 | 1 | 5 | 0 |

| ⊗ | | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| ∅ | | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 0 | 0 |

| Total | 6 | |||||||||||

+: Yes; −: No; ⊗: Can’t answer; ∅: Not applicable.
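
The counts in the Analysis rows of Table A2 can be reproduced by tallying each item column. The Python sketch below is illustrative only: the ASCII encoding of the rating symbols (`+` for yes, `-` for no, `?` for ⊗, `n` for ∅), the dictionary `amstar_ratings`, and the helper `tally_by_item` are assumptions introduced here and are not part of the original analysis workflow.

```python
from collections import Counter

# Ratings transcribed from Table A2: one string per review, one character per
# AMSTAR item (1-11). Hypothetical ASCII encoding: '+' yes, '-' no,
# '?' can't answer, 'n' not applicable.
amstar_ratings = {
    "[141]": "-------+n-+",
    "[142]": "-------+n-+",
    "[143]": "-++-+++++-+",
    "[144]": "-++-++-+--+",
    "[65]": "+++-+++++-+",
    "[64]": "+++++-+++++",
}


def tally_by_item(ratings, symbols):
    """Count how often each symbol occurs in each item column."""
    columns = zip(*ratings)  # transpose: one tuple of ratings per item
    counts = [Counter(column) for column in columns]
    return {symbol: [c[symbol] for c in counts] for symbol in symbols}


amstar_tally = tally_by_item(amstar_ratings.values(), "+-?n")

for symbol, per_item in amstar_tally.items():
    print(symbol, per_item)
```

Under this encoding, the `+` list evaluates to 2, 4, 4, 1, 4, 3, 3, 6, 3, 1, 6 across Items 1 to 11, in agreement with the corresponding Analysis row.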

Table A3.

SANRA rating for each included review work (non-systematic review).

| No. | References | Item 1 | Item 2 | Item 3 | Item 4 | Item 5 | Item 6 |

|---|---|---|---|---|---|---|---|

| Traditional Review | |||||||

| 1 | [84] | 2 | 2 | 0 | 2 | 2 | 1 |

| 2 | [85] | 2 | 2 | 0 | 2 | 2 | 1 |

| 3 | [86] | 1 | 1 | 0 | 2 | 1 | 1 |

| 4 | [87] | 2 | 1 | 0 | 2 | 2 | 1 |

| 5 | [88] | 1 | 2 | 0 | 2 | 1 | 2 |

| 6 | [89] | 1 | 1 | 2 | 1 | 1 | 1 |

| 7 | [90] | 2 | 2 | 0 | 2 | 1 | 1 |

| 8 | [91] | 2 | 1 | 0 | 2 | 2 | 2 |

| 9 | [92] | 2 | 2 | 0 | 2 | 2 | 2 |

| 10 | [93] | 2 | 1 | 1 | 2 | 2 | 2 |

| 11 | [94] | 2 | 2 | 0 | 2 | 1 | 0 |

| 12 | [9] | 2 | 1 | 0 | 2 | 2 | 2 |

| 13 | [95] | 1 | 1 | 0 | 1 | 2 | 2 |

| 14 | [96] | 1 | 2 | 2 | 2 | 2 | 2 |

| 15 | [97] | 1 | 0 | 0 | 2 | 2 | 0 |

| 16 | [98] | 1 | 1 | 2 | 1 | 0 | 0 |

| 17 | [99] | 1 | 2 | 0 | 1 | 1 | 1 |

| 18 | [100] | 1 | 1 | 0 | 2 | 2 | 1 |

| 19 | [101] | 1 | 1 | 0 | 1 | 1 | 0 |

| 20 | [102] | 2 | 1 | 2 | 2 | 2 | 2 |

| 21 | [103] | 2 | 2 | 0 | 1 | 2 | 2 |

| 22 | [104] | 2 | 1 | 0 | 2 | 1 | 0 |

| 23 | [105] | 1 | 1 | 0 | 2 | 1 | 0 |

| 24 | [106] | 2 | 1 | 1 | 2 | 2 | 2 |

| 25 | [70] | 2 | 2 | 0 | 2 | 2 | 1 |

| 26 | [107] | 2 | 2 | 0 | 2 | 1 | 0 |

| 27 | [108] | 1 | 0 | 0 | 1 | 1 | 0 |

| 28 | [109] | 2 | 2 | 1 | 1 | 2 | 2 |

| 29 | [110] | 2 | 1 | 2 | 2 | 2 | 1 |

| 30 | [111] | 1 | 1 | 2 | 2 | 2 | 0 |

| 31 | [112] | 1 | 1 | 0 | 2 | 2 | 2 |

| 32 | [113] | 2 | 2 | 0 | 2 | 2 | 0 |

| 33 | [114] | 2 | 2 | 0 | 2 | 2 | 2 |

| 34 | [115] | 2 | 1 | 0 | 2 | 2 | 0 |

| 35 | [116] | 2 | 1 | 2 | 2 | 2 | 2 |

| 36 | [117] | 1 | 1 | 0 | 2 | 2 | 0 |

| 37 | [118] | 2 | 2 | 0 | 2 | 2 | 2 |

| 38 | [119] | 2 | 2 | 0 | 2 | 2 | 0 |

| 39 | [120] | 1 | 2 | 0 | 2 | 2 | 2 |

| 40 | [121] | 2 | 0 | 0 | 1 | 1 | 0 |

| 41 | [122] | 1 | 1 | 1 | 2 | 2 | 2 |

| 42 | [123] | 2 | 1 | 2 | 2 | 2 | 2 |

| 43 | [124] | 2 | 2 | 0 | 2 | 2 | 2 |

| 44 | [125] | 2 | 2 | 0 | 1 | 1 | 0 |

| 45 | [126] | 2 | 2 | 0 | 2 | 2 | 1 |

| 46 | [127] | 1 | 1 | 0 | 1 | 1 | 0 |

| 47 | [128] | 1 | 1 | 0 | 1 | 1 | 0 |

| Purpose Specific Review | |||||||

| 48 | [129] | 1 | 1 | 0 | 1 | 1 | 1 |

| 49 | [130] | 2 | 1 | 0 | 2 | 2 | 1 |

| 50 | [131] | 2 | 2 | 2 | 1 | 2 | 2 |

| 51 | [132] | 1 | 1 | 0 | 2 | 2 | 1 |

| 52 | [133] | 1 | 2 | 0 | 2 | 2 | 1 |

| 53 | [134] | 1 | 1 | 0 | 2 | 1 | 1 |

| 54 | [135] | 2 | 2 | 0 | 2 | 2 | 2 |

| 55 | [136] | 2 | 1 | 0 | 2 | 2 | 2 |

| 56 | [137] | 2 | 2 | 0 | 1 | 1 | 0 |

| 57 | [138] | 1 | 2 | 0 | 2 | 2 | 2 |

| 58 | [139] | 2 | 1 | 0 | 2 | 1 | 2 |

| 59 | [140] | 1 | 2 | 0 | 1 | 2 | 2 |

| Mixed Method Review | |||||||

| 60 | [145] | 2 | 1 | 0 | 1 | 1 | 0 |

| 61 | [146] | 1 | 1 | 0 | 2 | 2 | 2 |

| 62 | [147] | 1 | 1 | 0 | 2 | 2 | 1 |

| 63 | [148] | 2 | 2 | 2 | 1 | 2 | 2 |

| 64 | [149] | 2 | 2 | 2 | 2 | 2 | 2 |

| Qualitative Review | |||||||

| 65 | [150] | 2 | 1 | 0 | 0 | 2 | 2 |

| Analysis | |||||||

| Score 0 | | 0 | 3 | 50 | 1 | 1 | 19 |

| Score 1 | | 27 | 34 | 4 | 18 | 20 | 17 |

| Score 2 | | 38 | 28 | 11 | 46 | 44 | 29 |

| Total | 65 | ||||||
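
The score distribution in the Analysis rows of Table A3 can be checked in the same spirit. The sketch below is a hedged illustration, not the authors' procedure: the list `sanra_scores` is a compact transcription of the 65 rows above (one string per review in row order, one digit per SANRA item), and the helper name `score_distribution` is hypothetical.

```python
from collections import Counter

# Scores transcribed from Table A3, in row order (reviews 1-65): one string per
# review, one digit (0, 1 or 2) per SANRA item (1-6).
sanra_scores = [
    # Traditional reviews (rows 1-47)
    "220221", "220221", "110211", "210221", "120212", "112111", "220211",
    "210222", "220222", "211222", "220210", "210222", "110122", "122222",
    "100220", "112100", "120111", "110221", "110110", "212222", "220122",
    "210210", "110210", "211222", "220221", "220210", "100110", "221122",
    "212221", "112220", "110222", "220220", "220222", "210220", "212222",
    "110220", "220222", "220220", "120222", "200110", "111222", "212222",
    "220222", "220110", "220221", "110110", "110110",
    # Purpose-specific reviews (rows 48-59)
    "110111", "210221", "222122", "110221", "120221", "110211", "220222",
    "210222", "220110", "120222", "210212", "120122",
    # Mixed-method reviews (rows 60-64)
    "210110", "110222", "110221", "222122", "222222",
    # Qualitative review (row 65)
    "210022",
]


def score_distribution(scores, levels=(0, 1, 2)):
    """For each score level, count the reviews receiving it on each item."""
    columns = zip(*scores)  # transpose: one tuple of digits per item
    counts = [Counter(int(digit) for digit in column) for column in columns]
    return {level: [c[level] for c in counts] for level in levels}


sanra_tally = score_distribution(sanra_scores)

for level, per_item in sanra_tally.items():
    print(f"Score {level}:", per_item)
```

Each item column sums to 65, the number of non-systematic reviews rated with SANRA.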

Author Contributions

Conceptualization, X.J.T. and W.L.C.; methodology, X.J.T.; validation, L.L.L., K.S.A.R. and I.H.B.; formal analysis, X.J.T. and W.L.C.; writing—original draft preparation, X.J.T.; writing—review and editing, X.J.T., W.L.C., L.L.L., K.S.A.R. and I.H.B.; visualization, X.J.T. and W.L.C.; funding acquisition, X.J.T. and L.L.L. All authors have read and agreed to the published version of the manuscript.

Conflicts of Interest

The authors declare no competing interests.

Funding Statement

This research was funded by an internal grant from Tunku Abdul Rahman University of Management and Technology (TAR UMT), grant number UC/I/G2021-00078. The APC was funded in part by the Centre for Multimodal Signal Processing, Department of Electrical and Electronics Engineering, TAR UMT.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Faguet G.B. A brief history of cancer: Age-old milestones underlying our current knowledge database. Int. J. Cancer. 2015;136:2022–2036. doi: 10.1002/ijc.29134.

- 2. US National Library of Medicine. An Ancient Medical Treasure at Your Fingertips. NLM Tech. Bull. 2010. Available online: https://www.nlm.nih.gov/pubs/techbull/ma10/ma10_hmd_reprint_papyrus.html (accessed on 30 October 2022).

- 3. Sung H., Ferlay J., Siegel R.L., Laversanne M., Soerjomataram I., Jemal A., Bray F. Global Cancer Statistics 2020: GLOBOCAN Estimates of Incidence and Mortality Worldwide for 36 Cancers in 185 Countries. CA Cancer J. Clin. 2021;71:209–249. doi: 10.3322/caac.21660.

- 4. Chu J., Zhou C., Guo X., Sun J., Xue F., Zhang J., Lu Z., Fu Z., Xu A. Female Breast Cancer Mortality Clusters in Shandong Province, China: A Spatial Analysis. Sci. Rep. 2017;7:105. doi: 10.1038/s41598-017-00179-8.

- 5. Akin O., Brennan S., Dershaw D.D., Ginsberg M.S., Gollub M.J., Schöder H., Panicek D., Hricak H. Advances in oncologic imaging. CA Cancer J. Clin. 2012;62:364–393. doi: 10.3322/caac.21156.

- 6. Evans W.P. Breast cancer screening: Successes and challenges. CA Cancer J. Clin. 2012;62:5–9. doi: 10.3322/caac.20137.

- 7. Duffy S.W., Tabár L., Yen A.M., Dean P.B., Smith R.A., Jonsson H., Törnberg S., Chen S.L.-S., Chiu S.Y., Fann J.C., et al. Mammography screening reduces rates of advanced and fatal breast cancers: Results in 549,091 women. Cancer. 2020;126:2971–2979. doi: 10.1002/cncr.32859.

- 8. Napel S., Mu W., Jardim-Perassi B.V., Aerts H.J.W.L., Gillies R.J. Quantitative imaging of cancer in the postgenomic era: Radio(geno)mics, deep learning, and habitats. Cancer. 2018;124:4633–4649. doi: 10.1002/cncr.31630.

- 9. Bi W.L., Hosny A., Schabath M.B., Giger M.L., Birkbak N., Mehrtash A., Allison T., Arnaout O., Abbosh C., Dunn I.F., et al. Artificial intelligence in cancer imaging: Clinical challenges and applications. CA Cancer J. Clin. 2019;69:127–157. doi: 10.3322/caac.21552.

- 10. Yu J., Deng Y., Liu T., Zhou J., Jia X., Xiao T., Zhou S., Li J., Guo Y., Wang Y., et al. Lymph node metastasis prediction of papillary thyroid carcinoma based on transfer learning radiomics. Nat. Commun. 2020;11:4807. doi: 10.1038/s41467-020-18497-3.

- 11. Wang Y., Herrington D.M. Machine intelligence enabled radiomics. Nat. Mach. Intell. 2021;3:838–839. doi: 10.1038/s42256-021-00404-0.

- 12. Roy S., Whitehead T.D., Quirk J.D., Salter A., Ademuyiwa F.O., Li S., An H., Shoghi K.I. Optimal co-clinical radiomics: Sensitivity of radiomic features to tumour volume, image noise and resolution in co-clinical T1-weighted and T2-weighted magnetic resonance imaging. EBioMedicine. 2020;59:102963. doi: 10.1016/j.ebiom.2020.102963.

- 13. Clarke L.P., Nordstrom R.J., Zhang H., Tandon P., Zhang Y., Redmond G., Farahani K., Kelloff G., Henderson L., Shankar L., et al. The Quantitative Imaging Network: NCI’s Historical Perspective and Planned Goals. Transl. Oncol. 2014;7:1–4. doi: 10.1593/tlo.13832.

- 14. Nordstrom R.J. The Quantitative Imaging Network in Precision Medicine. Tomography. 2016;2:239–241. doi: 10.18383/j.tom.2016.00190.

- 15. Van Timmeren J.E., Cester D., Tanadini-Lang S., Alkadhi H., Baessler B. Radiomics in medical imaging-“how-to” guide and critical reflection. Insights Imaging. 2020;11:91. doi: 10.1186/s13244-020-00887-2.

- 16. Rogers W., Seetha S.T., Refaee T.A.G., Lieverse R.I.Y., Granzier R.W.Y., Ibrahim A., Keek S.A., Sanduleanu S., Primakov S.P., Beuque M.P.L., et al. Radiomics: From qualitative to quantitative imaging. Br. J. Radiol. 2020;93:20190948. doi: 10.1259/bjr.20190948.

- 17. Gillies R.J., Kinahan P.E., Hricak H. Radiomics: Images Are More than Pictures, They Are Data. Radiology. 2016;278:563–577. doi: 10.1148/radiol.2015151169.

- 18. Aerts H.J.W.L. The Potential of Radiomic-Based Phenotyping in Precision Medicine. JAMA Oncol. 2016;2:1636–1642. doi: 10.1001/jamaoncol.2016.2631.

- 19. Roy S., Whitehead T.D., Li S., Ademuyiwa F.O., Wahl R.L., Dehdashti F., Shoghi K.I. Co-clinical FDG-PET radiomic signature in predicting response to neoadjuvant chemotherapy in triple-negative breast cancer. Eur. J. Nucl. Med. Mol. Imaging. 2022;49:550–562. doi: 10.1007/s00259-021-05489-8.

- 20. Mnih V., Kavukcuoglu K., Silver D., Rusu A.A., Veness J., Bellemare M.G., Graves A., Riedmiller M., Fidjeland A.K., Ostrovski G., et al. Human-level control through deep reinforcement learning. Nature. 2015;518:529–533. doi: 10.1038/nature14236.

- 21. LeCun Y., Bengio Y., Hinton G. Deep learning. Nature. 2015;521:436–444. doi: 10.1038/nature14539.

- 22. Moravčík M., Schmid M., Burch N., Lisý V., Morrill D., Bard N., Davis T., Waugh K., Johanson M., Bowling M. DeepStack: Expert-level artificial intelligence in heads-up no-limit poker. Science. 2017;356:508–513. doi: 10.1126/science.aam6960.

- 23. Xiong W., Droppo J., Huang X., Seide F., Seltzer M.L., Stolcke A., Yu D., Zweig G. Toward Human Parity in Conversational Speech Recognition. IEEE/ACM Trans. Audio Speech Lang. Process. 2017;25:2410–2423. doi: 10.1109/TASLP.2017.2756440.

- 24. Pendleton S.D., Andersen H., Du X., Shen X., Meghjani M., Eng Y.H., Rus D., Ang M. Perception, Planning, Control, and Coordination for Autonomous Vehicles. Machines. 2017;5:6. doi: 10.3390/machines5010006.

- 25. Hosny A., Parmar C., Quackenbush J., Schwartz L.H., Aerts H.J.W.L. Artificial intelligence in radiology. Nat. Rev. Cancer. 2018;18:500–510. doi: 10.1038/s41568-018-0016-5.

- 26. Sutton A., Clowes M., Preston L., Booth A. Meeting the review family: Exploring review types and associated information retrieval requirements. Health Inf. Libr. J. 2019;36:202–222. doi: 10.1111/hir.12276.

- 27. Slim K., Marquillier T. Umbrella reviews: A new tool to synthesize scientific evidence in surgery. J. Visc. Surg. 2022;159:144–149. doi: 10.1016/j.jviscsurg.2021.10.001.

- 28. Chen C., Song M. Visualizing a field of research: A methodology of systematic scientometric reviews. PLoS ONE. 2019;14:e0223994. doi: 10.1371/journal.pone.0223994.

- 29. Kalaf J.M. Mammography: A history of success and scientific enthusiasm. Radiol. Bras. 2014;47:VII–VIII. doi: 10.1590/0100-3984.2014.47.4e2.

- 30. Trivedi U., Omofoye T.S., Marquez C., Sullivan C.R., Benson D.M., Whitman G.J. Mobile Mammography Services and Underserved Women. Diagnostics. 2022;12:902. doi: 10.3390/diagnostics12040902.