Abstract

Semantic communication is not focused on improving the accuracy of transmitted symbols, but is concerned with expressing the expected meaning that the symbol sequence exactly carries. However, the measurement of semantic messages and their corresponding codebook generation are still open issues. Expansion, which integrates simple things into a complex system and even generates intelligence, is truly consistent with the evolution of the human language system. We apply this idea to the semantic communication system, quantifying semantic transmission by symbol sequences and investigating the semantic information system in a similar way as Shannon’s method for digital communication systems. This work is the first to discuss semantic expansion and knowledge collision in the semantic information framework. Some important theoretical results are presented, including the relationship between semantic expansion and the transmission information rate. We believe such a semantic information framework may provide a new paradigm for semantic communications, and semantic expansion and knowledge collision will be the cornerstone of semantic information theory.

Keywords: semantic information theory, semantic communications, information theory, 6G, game theory

1. Introduction and Overview

It is well known that Shannon’s information theory [1] answers two fundamental questions in digital communication theory [2], namely about the ultimate data compression rate (answer: the entropy H) and the ultimate reliable transmission rate of communication (answer: the channel capacity C). It also addresses technical implementation problems in digital communication systems, enabling the end user to receive the same symbols as the sender. However, with the ever-increasing demand for intelligent wireless communications, communication architecture is evolving from only focusing on transmitting symbols to the intelligent interconnection of everything [3]. In the 1950s, Weaver [4] discussed the semantic problem of communications, and categorized communications into the following three levels:

Level A. How accurately can the symbols of communication be transmitted? (The technical problem.)

Level B. How precisely do the transmitted symbols convey the desired meaning? (The semantic problem.)

Level C. How effectively does the received meaning affect conduct in the desired way? (The effectiveness problem.)

Recently, semantic information theory including semantic communications has attracted much attention. However, how to set up a reasonable and feasible measure of semantic messages is still an open problem, which may be the greatest challenge for the new developments of semantic communication (SC) systems. On the other hand, currently in 6G networks, intelligent interconnections of everything will bring a new paradigm of the communication mode with semantic interaction. In this regard, finding a way to quickly reflect the semantic processing of messages will become one more promising pathway to 6G intelligent networking systems.

This paper proposes a new communication system framework based on information framework expansion and knowledge collision. It extends Shannon’s theory of communication (Level A) to a theory of semantic communication (Level B). This work is initially influenced by Choi and Park [5], but it provides further important contributions, which are as follows:

We generalize the work of Choi and Park from a single fixed semantic type to a dynamic expansion mode, associated with knowledge collision, which can reflect the asynchronous knowledge update processes between the sender and the receiver to some degree. In addition, it also takes into account the effect of channel noise in the new model.

We present a new measure related to the semantic communication system based on the framework with semantic expansion and knowledge collision, called the Measure of Comprehension and Interpretation, which can be used to quantify the semantic entropy of discrete sources.

We discuss the additional gains from semantic expansion and find the relationship between the semantic expansion and the transmission information rate. We demonstrate that knowledge matching of its asynchronous scaling up plays a key role in semantic communications.

As a primary work, the system proposed in this paper only focuses on discrete cases and makes some drastic simplifications. We assume that semantics are abstractly generated only from signals and knowledge, without considering other factors. In addition, semantic types, signals, responses, and knowledge instances (explained in Section 2) are represented by random variables. Moreover, we do not focus on the effectiveness problem (Level C), which is beyond the scope of this paper. However, we believe that these simplifications are necessary for us to focus on the “core” issues to clearly explain and set up connections within a general semantic information theory. To the best of our knowledge, this is the first study to explore the semantic expansion and the asynchronous scaling up of semantic knowledge. The insights gained from this work may be of great assistance to the development of future intelligent semantic communication systems and 6G.

The rest of this paper is organized as follows. Section 2 introduces the related work of semantic communications. It summarizes the key concepts and theoretical results of Shannon’s information theory and semantic information theory. In Section 3, we present the new generalized model of semantic communications from two aspects, one is an attempt to set up a close relationship with the Shannon communication model, and the other is to find a feasible modification of the model so that it can exactly reflect the quick surge of semantic communications from the user requirements. In Section 4, we lay out the implementation details of the simulation and discuss the experimental results. Finally, we conclude the paper in Section 5.

2. Related Work

In this section, we introduce the framework of semantic information theory, specifically the key concept, semantic entropy. We then go on to explore the works that we are concerned with, semantic communication as a signaling game with correlated knowledge bases.

2.1. Preliminaries

Although Shannon’s information did not address the semantic problem of communications, it provided important insights into the message-processing techniques associated with the focus of both the sender and the receiver’s attention. Thus, in this subsection, we briefly introduce the main concepts and theoretical results of Shannon’s information theory.

Entropy. It is a measure of the uncertainty of a random variable [2]. Let X be a discrete random variable with alphabet and probability mass function . The entropy of X is defined as follows:

| (1) |

Mutual information. It is a measure of the amount of information that one random variable contains about another. That is, mutual information can be seen as the reduction in the uncertainty of one random variable due to the knowledge of the other. Let us consider two random variables X and Y with a joint probability mass function and the marginal probability mass functions and . The mutual information between X and Y is defined as follows:

| (2) |

Channel capacity. The channel capacity is the maximum amount of mutual information given the conditional transit probability from X to Y, . It is defined by

| (3) |

where X and Y are the input and output of the channel, respectively.

Source coding theorem. As , N i.i.d. (independent identically distribution) random variables with entropy can be compressed into a little more than bits to represent them completely, the information loss can be negligible in this case. Conversely, if they are compressed into fewer than bits, there will be errors with a non-zero probability.

Channel coding theorem. For a discrete memoryless channel, all rates below capacity C are achievable. Conversely, any sequence of codes with a negligible error must obey the rule that its transmission rate R is not greater than the channel capacity, .

2.2. Semantic Information Theory

In the literature, there are some works that focus on the semantic information theory. Carnap and Bar-Hillel [6] were the first to propose the concept of semantic entropy, using logical probability rather than statistical probability to measure the semantic entropy of a sentence. For simplicity, it is necessary here to clarify that we use p and m to denote statistical probability and logical probability in this paper, respectively. The logical probability of a sentence is measured by the likelihood that the sentence is true in all possible situations [7]. Then, the semantic information of the message e is defined as follows:

| (4) |

where is the logical probability of e. However, this metric led to a paradox that any fact has an infinite amount of information when it contradicts itself, i.e., . Floridi [8] solved the paradox in Carnap and Bar-Hillel’s proposal [6], adopting the relative distance of semantics to measure the amount of information.

Bao et al. [7] defined the semantic entropy of a message x as follows:

| (5) |

where the logical probability of x is given by

| (6) |

W is the symbol set of a source, ⊧ is the proposition satisfaction relation, and is the set of models for x. In addition, is a probability measure, .

Besides the logical probability, there are some definitions of semantic entropy based on different backgrounds [9,10]. D’Alfonso [11] utilized the notion of truthlikeness to quantify semantic information. Kolchinsky and Wolpert [12] defined semantic entropy as the syntactic information that a physical system has about its environment, which is necessary for the system to maintain its own existence. Kountouris and Pappas [13] advocated for assessing and extracting the semantic value of data at three different granularity levels, namely the microscopic scale, mesoscopic scale, and macroscopic scale.

Analogous to Shannon’s information theory, some related theories at the semantic level, such as semantic channel capacity, semantic rate distortion, and information bottleneck [14], are also explored. Based on (5), Bao et al. further proposed the semantic channel coding theorem [7]. Moreover, Liu et al. [15] formulated rate distortion in semantic communication as follows:

| (7) |

where is the semantic distortion between source, X, and recovered information, , at the receiver. is the distortion between semantic representation, W, and received semantic representation, . In addition, some works [16,17] generalized it, leading to the development of semantic rate distortion theory.

In recent years, the fusion of semantic communication algorithms and learning theory [18,19,20,21] has driven the changes in communication architecture. Although these explorations did not fully open the door to semantic communication, they provided us with the motivation to move forward theoretically.

2.3. Semantic Communication as a Lewis Signaling Game with Knowledge Bases

Recently, Choi and Park [5] proposed an SC model based on the Lewis signaling game, which provided some meaningful results. Their work motivated us to conduct this study to some degree. Further, we make a generalization of this model by adding the scaling up update module of the knowledge instance at both the sender and the receiver, which may be asynchronous, and closer to real semantic communications in the era of intelligent communication, i.e., human to robots, robots to robots, machines to machines and cells to cells in bio-molecular communications, etc. Thus, we provide further details.

Suppose there is a semantic communication system where Alice is the sender and Bob is the receiver. Let denote the semantic type that includes semantic information or messages. Alice wishes to transmit T to Bob by sending a signal , and Bob chooses its response . Both Alice and Bob utilize their local knowledge bases and , respectively. The semantic architecture can be described as follows:

where and are knowledge instances. If , the communication process can be seen as successful. Moreover, the reward for one communication is defined as follows:

| (8) |

Our object is to maximize the average reward. We use the success rate of semantic agreement (SRSA) to represent it.

The knowledge base can be regarded as the side information. Alice has her knowledge base . The instance of Alice’s knowledge base at each time is , which affects the generation of semantic types. On the other hand, Bob has his knowledge base and its instance is . is the “core”, which is used to infer the intended message together with the received signal.

Based on these foundations, Choi and Park [5] derived some meaningful results. On the one hand, the similarity of the two knowledge instances plays a crucial role in SC. With limited bandwidth, the communication quality is regulated by . On the other hand, the SRSA is dependent on the similarity of knowledge bases at both ends of the communication, the sender and the receiver. However, a close relationship with Shannon’s communication model was not established. In this work, we will construct a generalization of the model by adding the knowledge scaling up update module at both the sender and the receiver, which may be asynchronous, but it can closely reflect the quick surge of semantic communications in many potential applications.

3. Semantic Communication Models

In this section, we will present the generalized model of semantic communications from two different aspects, one is an attempt to set up a close relationship with the Shannon communication model, and the other is to find a feasible modification of the model so that it can exactly reflect the quick surge of semantic communications from the user requirements. In this regard, we first set up a basic model, and then extend it by adding the knowledge base update functional module with information framework expansion and knowledge collision.

3.1. Motivation

Shannon’s communication system is concerned with the accurate transmission of symbols over channels. However, it does not take into account the differences in the knowledge backgrounds of the two parties, which affect whether the communication can convey the desired meaning. In other words, the matching of knowledge can also be treated as another special channel, although it may not actually exist in an explicit form. In this work, we denote it as a virtual channel. We want to fill this gap and propose an intelligent communication system with the coexistence of the real channel and virtual channel, making it easier to formulate the information framework. Thus, we consider not only the physical channel for symbol transmission but also the virtual channel for the transfer of knowledge between two parties. Moreover, the knowledge of both parties is also evolving, so we take into account the asynchronous scaling up updates of knowledge and semantic expansion.

3.2. Basic Model

Let us first observe the channel, which is the physical medium of message exchange, and plays a key role in the model setup of a complete semantic communication system. A communication channel is a system in which the output depends probabilistically on its input [2]. For simplification, we still use Alice and Bob as the two parties participating in the communication. Suppose Alice and Bob are at opposite ends of a memoryless channel, which can transmit physical signals. The channel is said to be memoryless if the probability distribution of the output depends only on the input at that time and it is conditionally independent of previous inputs or outputs. That is, the output of the channel is only related to the input at the current moment and no feedback processing is considered here.

We use S and to represent the input and output of the channel. Under the interference of the noise, the signal S becomes through channel transmission. Specifically, Alice observes the input S of the channel, and Bob observes the output . Let and represent the knowledge instance possessed by Alice and Bob, respectively. On the one hand, Alice encodes S as T with . This means that Alice uses the knowledge instance to process the signal S, resulting in the semantic type T, i.e., . On the other hand, Bob decodes into the response R with , which can be expressed as . The architecture of this process can be expressed as follows:

| (9) |

We denote the process as semantic encoding and as semantic decoding. They indicate the understanding of meaning at the semantic level by both parties. The definitions of symbols are summarized as follows:

Semantic Types: , is a random variable that is generated by Alice.

Signals: , is a signal that Alice sends to Bob.

Responses: , is a response that Bob chooses.

Knowledge instances: and represent the knowledge instance used by Alice and Bob during semantic encoding and decoding, respectively.

Lemma 1.

Under the knowledge instances and , the mutual information between T and is obtained as follows:

(10) where , and represent the conditional mutual information.

Proof.

Since and , we obtain and . With the characteristics of entropy and mutual information, it follows that

(11)

(12)

(13)

(14)

(15)

(16) which completes the proof. □

The mutual information between T and reflects the effectiveness of communication. It indicates the highest rate in bits per channel use at which information can be sent with an arbitrarily low probability of error. We note that consists of the following three terms:

, the mutual information between the input and output of the channel. It corresponds to the channel capacity in Shannon’s information theory.

, the mutual information between and given S and . It indicates the amount of information that two knowledge instances contain about each other when signals are known.

, the conditional mutual information between S and , and .

From Equation (10), we know that if Bob wants to fully understand what Alice means, the communication process needs to meet two conditions, one is the accuracy of the received symbols during channel transmission, and the other is the matching degree of the knowledge instances of both parties. In other words, (i) The transmission should be reliable. It indicates the effect of channel noise on signal transmission. Moreover, the physical carrier of this process actually exists, which we call an explicit channel. (ii) The two knowledge instances should be similar. Although there is no actual transmission between and , we assume that there is a virtual channel that reflects the probabilistic relationship between knowledge instances, which we call an implicit channel. The explicit channel and implicit channel together form the transmission medium of a communication system. Specifically, the characteristics of the explicit channel determine the value of the first term in Equation (10), and the implicit channel determines the second term. In addition, the last term is affected by both explicit and implicit channels.

If the communication system has only the explicit channel, it degenerates to the Shannon case, and . Furthermore, we are interested in the following three special cases (demonstrated in Appendix A).

- The explicit channel is noiseless, i.e., . In this setting, the Formula (10) can be simplified to

which is consistent with the result in [5]. The mutual information between T and is subjected to the entropy of the signal S and the conditional mutual information between and given S. In semantic communications or emergent communications, the signal S is usually limited. According to Equation (17), one can increase the conditional mutual information between and to solve the problem from another perspective. In other words, in the case of limited technical bandwidth, the SC performance can be improved by increasing the similarity between two knowledge instances.(17) - The implicit channel is noiseless, i.e., . This means that Alice and Bob have the same knowledge instance, so the communication performance will not be affected by the difference in the background of both parties. can be expressed as follows:

(18)

where is the mutual information between , S and . Even when the implicit channel is noiseless, is not exactly equal to , and we cannot ignore the influence of knowledge in SC. Compared to Shannon’s channel capacity, it adds a term, regulated by the relationship between the knowledge instance and the signal.(19)

Corollary 1.

If the implicit channel is noiseless, the mutual information between T and satisfies

(20) Moreover, if is a function of S and , the left equation holds; if is independent of the signal S or , the right equation holds.

Proof.

The non-negativity of mutual information, , means that can be upper bounded by . Because the mutual information is lower than the entropy, we obtain . By combining these results, we obtain

(21) which completes the proof of the bound of . □

-

III.Both explicit and implicit channels are noiseless, i.e., . In this setting, the mutual information between T and equals the entropy of T or ,

(22) Equation (22) indicates that Bob can perfectly understand the meaning of Alice when channels are noiseless. That is, no information is lost.

The following result shows the bounds of SRSA, constrained by the characteristics of the explicit and implicit channels.

Lemma 2.

The SRSA satisfies

(23)

Proof.

can be seen as an estimator for . Let Pr; then, with Fano’s Inequality, we obtain

(24) Since SRSA , we can obtain Equation (23), which completes the proof. □

3.3. EXK-SC Model

Let and denote the input signals of the explicit channel. and are their corresponding output signals. Similarly, and represent Alice’s knowledge instances. Alice encodes as with , and as with . On the other hand, Bob uses and , decoding and into and , respectively. In the basic model, the encoding and decoding processes of the two transmissions are

| (25) |

| (26) |

It is well known that the integration of lots of simple things can be expanded to a complex system and can even generate intelligence. Such an expansion process is consistent with the evolution of the human language system. Based on a similar idea, we extend the basic model proposed in Section 2.3 using expansion. In this context, Alice wishes to send an expansion of multiple signals to Bob. We first consider the case of two signals, which can be generalized to more instances. Now, what Alice sends is expanded from to . We use ⊕ to denote semantic expansion. Specifically, the expansion of signals only represents their semantic combination. For instance, ‘Shannon published a paper’ expands to ‘Shannon published a paper in The Bell system technical journal [1]’.

As known, expansion often implies collision and fusion. Similarly, the expansion of signals corresponds to the collision of knowledge bases in this work. For Alice, we use to represent the collision process, where ⊙ denotes the collision and is the collision factor. Specifically, is between 0 and 1, determined by the task. The collision factor reflects the role of compared to when the collision occurs. It can also be understood as the relative proportion of the contribution to the newly generated knowledge instance. The process of collision represents the knowledge scaling up updates, which may be synchronous or asynchronous. Similarly, we use to represent the knowledge collision of Bob, where is a collision factor. The expansion and collision process can proceed continuously as follows:

| (27) |

| (28) |

| (29) |

Without loss of generality, the one-step expansion architecture of semantic communications is described as follows:

| (30) |

We named it EXK-SC. Moreover, is called the Measure of Comprehension and Interpretation (MCI), which reflects the generation and evolution of semantics. It should be noted that T is not a simple logic combination of and , i.e., . For example, is ‘Apple Inc.’, it is a company and can be ‘Apple’. is ‘the thirteenth generation’, it is a number and can be ‘thirteen’. However, their collision may give rise to a new word called ‘iphone’, which is a mobile communication product. In particular, T reflects the result of S under the influence of knowledge collision.

Based on these definitions above, we obtain some new results.

Lemma 3.

The mutual information between T and is given by

(31)

Proof.

It is similar to that of Lemma 1, we omit the proof here. □

Equation (31) indicates that besides the characteristics of explicit and implicit channels, the relationship between and also affects the performance of communication.

Lemma 4.

When the explicit channel is noiseless, the gain brought by semantic expansion is given by

(32) where

(33)

Proof.

We note that

(34) Then,

(35)

(36)

(37)

(38) We have

(39) which completes the proof. □

Lemma 4 shows that the semantic enhancement, , is determined not only by the knowledge scaling up but also by the cost of the information rate over the explicit physical channel, . It exactly illustrates the relationship between the semantic expansion and the transmission information rate.

Lemma 5.

When the explicit channel is noiseless, the mutual information between T and is bounded by

(40)

Proof.

Since expansion would create more possibilities, leading to an increase in uncertainty, we can obtain

(41) Because of the non-negativity of entropy and conditioning reduces entropy, Equation (40) can be derived directly. We omit the proof. □

Lemma 5 shows the bounds of the semantic communication rate in the perfect explicit channel mode. The upper bound is composed of the entropy rate over the explicit channel and the collision of knowledge instances of the sender, which is reasonable, as expected.

4. Experiment and Numerical Results

In this section, we use SRSA to measure the performance of SC, especially the impact that asynchronous knowledge scaling up has on the system.

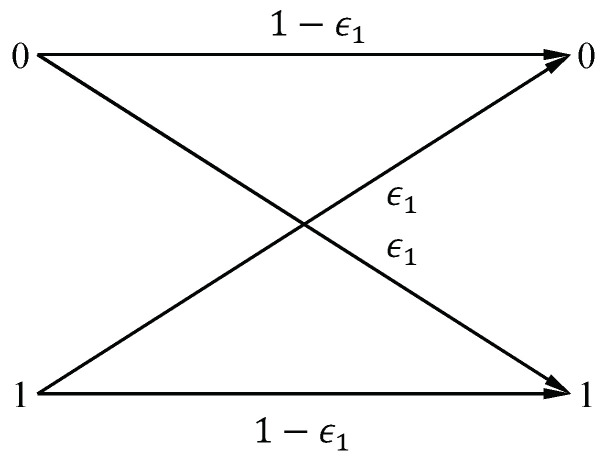

Basic Model. We use Q-learning in [22] to complete semantic encoding and decoding, which may reflect the continuous semantic learning process. Let , , . In addition, S, and are uniformly distributed. We would like to discuss the impact of the characteristics of the explicit channel and implicit channel on SC. We use the binary symmetric channel (BSC) to denote the explicit channel. The BSC is shown in Figure 1, which shows the probabilistic relationship between S and , with the error probability .

Figure 1.

Binary symmetric channel.

For the implicit channel, we assume the correlation between and satisfies

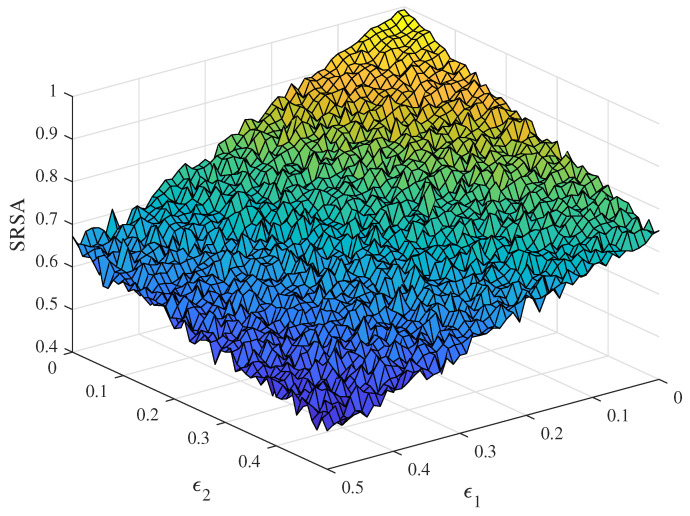

where is an independent random variable. illustrates the discrepancy between the knowledge bases at both parties of communications, . In Figure 2, we show the SRSA with and . When , SRSA reaches the maximum 1. As and increase, SASA will decrease in both directions. This indicates that two channels jointly determine the quality of communication. For high-quality SC, it is necessary to meet the condition that and with a high probability.

Figure 2.

The simulation results of SRSA with the explicit channel error probability and the implicit channel error probability .

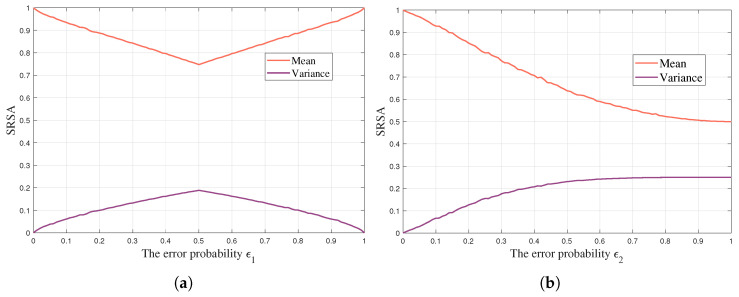

In addition, we discuss the impact of explicit and implicit channels on SC, respectively. We take the channel error and as the horizontal axis, and the SRSA entering the stable region as the vertical axis. Figure 3a shows the results of the explicit channel. As increases, the mean value of SRSA first decreases and then has a gradual increase. When = 0 or 1, the communication performance is the best and the mean is close to 1. Moreover, it falls to a low point when . On the other hand, the trend of variance is completely opposite to that of the mean. These results are consistent with the description of channels in Shannon’s information theory. Figure 3b shows the results of the implicit channel. As increases, the mean value of SRSA keeps decreasing, and the variance increases. It reflects the impact of knowledge misalignment on SC.

Figure 3.

A comparison of the impact of explicit and implicit channels on SC. (a) Explicit channel. (b) Implicit channel.

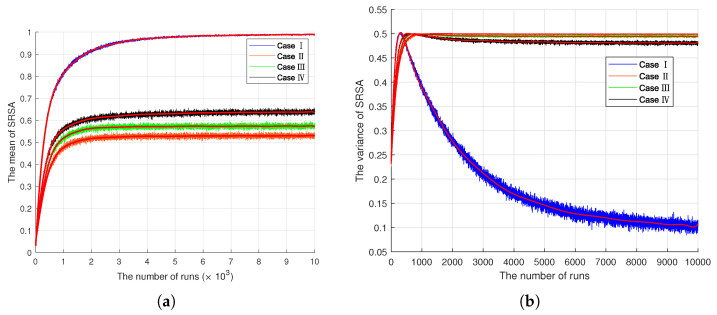

EXK-SC Model. We want to explore how semantic communication quality varies in the context of different relationships between the receiver and the sender’s knowledge instance. For simplicity, we assume that the explicit channel is noiseless, so we can focus on knowledge updates. That is, SRSA varies with the relationship of , and . We categorize it into four cases.

Case I: , and . The implicit channel is noiseless. Bob has the same asynchronous knowledge scaling-up updates mode as Alice. That is, the receiver has all the knowledge of the sender.

Case II: (with error probability 0.5), and . The receiver has partial knowledge of the sender.

Case III: , (with error probability 0.5) and . The receiver has partial knowledge of the sender.

Case IV: , and . The collision factors are not equal to this.

The symbols are consistent with the definitions in Section 3.3. Figure 4 illustrates the simulation results of SRSA with the number of runs, where Figure 4a is the mean and Figure 4b is the variance. In all four cases, the mean of SRSA gradually converges to a stable region. Case I is close to 1, which implies that when the receiver has all the knowledge of the sender, it can express exactly the same semantics as the sender. In other words, Bob has the same asynchronous knowledge scaling-up updates mode as Alice, which facilitates the success of SC. The curves of case II and III are almost the same, but the value of case II is always higher than that of case III. This is as expected because and play a leading role in the collision compared to and . Case IV reflects the learning of SRSA when Bob and Alice have different collision factors. The value of case IV is higher than that of II and III, which indicates that the collision factor plays a smaller role than the knowledge itself in SC. These results also show that learning can improve SC quality with only partial background knowledge; however, there is an upper bound. On the other hand, the variance of case I continues decreasing, and the other three cases also stabilize after peaking quickly. This further suggests that learning can evolve continuously with full knowledge, but there is an upper limit to it only with partial or no knowledge. This can be treated as the cost resulting from the imperfect knowledge base.

Figure 4.

The simulation results of SRSA with the number of runs. We divide it into four cases. Case I: , and ; II: (with an error probability of 0.5), and ; III: , (with an error probability of 0.5) and ; IV: , and . (a) The mean of SRSA. (b) The variance of SRSA.

5. Conclusions

In this paper, we presented the concept of semantic expansion and knowledge collision in SC. It represents the combination and superposition of information by the sender. Based on semantic expansion, we further proposed a semantic communication system called EXK-SC. Moreover, semantic expansion corresponds to knowledge collision, which provides the possibility for the evolution and upgrading of communication systems. On the other hand, we reached some conclusions for semantic information theory in the context of asynchronous scaling up updates of knowledge, while obtaining some bounds for SC. Specifically, the receiver’s understanding of the knowledge collision and updates determines the effectiveness of semantic communication.

It should be pointed out that in the near future, task-oriented semantic communication designs under this new framework may emerge as an interesting topic. Another topic of interest is how to set up the type class methods to provide more insights on the knowledge base formulation and the system design, which requires further study. Semantic communication is evolving towards intelligence. The insights gained from this work may be of assistance to the development of future semantic communication systems and 6G. It is also expected to pave the way for the design of next-generation real-time data networking and provide the foundational technology for a plethora of socially useful services, including autonomous transportation, consumer robotics, VR/AR, and the metaverse.

Acknowledgments

We thank Choi for the help and source code in [5].

Appendix A. Proof of the Mutual Information for Three Special Channel Cases

- The explicit channel is noiseless. Since , we have and . Moreover, S is a function of , it follows that . Similarly, is also a function of S, and we obtain . Thus, the formula (10) can be simplified to

(A1) - The implicit channel is noiseless. Since , the mutual information between T and is written as follows:

(A2) (A3) (A4) (A5) (A6)

It can also be expressed as follows:(A7) (A8) - Both explicit and implicit channels are noiseless, i.e.,. In this setting, we have . Thus, Equation (17) is written as follows:

Similarly, we also obtain .(A9)

Author Contributions

Conceptualization, P.F. and G.X.; methodology, P.F. and G.X.; software, G.X.; formal analysis, P.F. and G.X.; writing—original draft preparation, G.X.; writing—review and editing, P.F. and G.X.; visualization, P.F. and G.X.; supervision, P.F. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

This work was supported by the National Key Research and Development Program of China (Grant NO.2021YFA1000500(4)).

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Shannon C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948;27:379–423. doi: 10.1002/j.1538-7305.1948.tb01338.x. [DOI] [Google Scholar]

- 2.Cover T.M., Thomas J.A. Elements of Information Theory. John Wiley & Sons; Hoboken, NJ, USA: 1991. [Google Scholar]

- 3.Shi G., Xiao Y., Li Y., Xie X. From semantic communication to semantic-aware networking: Model, architecture, and open problems. IEEE Commun. Mag. 2021;59:44–50. doi: 10.1109/MCOM.001.2001239. [DOI] [Google Scholar]

- 4.Weaver W. Recent contributions to the mathematical theory of communication. ETC A Rev. Gen. Semant. 1953:261–281. [Google Scholar]

- 5.Choi J., Park J. Semantic Communication as a Signaling Game with Correlated Knowledge Bases. arXiv. 20222209.00280 [Google Scholar]

- 6.Carnap R., Bar-Hillel Y. An Outline of a Theory of Semantic Information. Research Laboratory of Electronics, Massachusetts Institute of Technology; Cambridge, MA, USA: 1952. [Google Scholar]

- 7.Bao J., Basu P., Dean M., Partridge C., Swami A., Leland W., Hendler J.A. Towards a theory of semantic communication; Proceedings of the 2011 IEEE Network Science Workshop; West Point, NY, USA. 22–24 June 2011; pp. 110–117. [Google Scholar]

- 8.Floridi L. Outline of a theory of strongly semantic information. Minds Mach. 2004;14:197–221. doi: 10.1023/B:MIND.0000021684.50925.c9. [DOI] [Google Scholar]

- 9.Qin Z., Tao X., Lu J., Li G.Y. Semantic communications: Principles and challenges. arXiv. 20212201.01389 [Google Scholar]

- 10.Shi G., Gao D., Song X., Chai J., Yang M., Xie X., Li L., Li X. A new communication paradigm: From bit accuracy to semantic fidelity. arXiv. 20212101.12649 [Google Scholar]

- 11.D’Alfonso S. On quantifying semantic information. Information. 2011;2:61–101. doi: 10.3390/info2010061. [DOI] [Google Scholar]

- 12.Kolchinsky A., Wolpert D.H. Semantic information, autonomous agency and non-equilibrium statistical physics. Interface Focus. 2018;8:20180041. doi: 10.1098/rsfs.2018.0041. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Kountouris M., Pappas N. Semantics-empowered communication for networked intelligent systems. IEEE Commun. Mag. 2021;59:96–102. doi: 10.1109/MCOM.001.2000604. [DOI] [Google Scholar]

- 14.Tucker M., Shah J., Levy R., Zaslavsky N. Towards Human-Agent Communication via the Information Bottleneck Principle. arXiv. 20222207.00088 [Google Scholar]

- 15.Liu J., Zhang W., Poor H.V. A rate-distortion framework for characterizing semantic information; Proceedings of the 2021 IEEE International Symposium on Information Theory (ISIT); Virtual. 12–20 July 2021; pp. 2894–2899. [Google Scholar]

- 16.Chen J., Yu L., Wang J., Shi W., Ge Y., Tong W. On the Rate-Distortion-Perception Function. arXiv. 20222204.06049 [Google Scholar]

- 17.Stavrou P.A., Kountouris M. A rate distortion approach to goal-oriented communication; Proceedings of the 2022 IEEE International Symposium on Information Theory (ISIT); Espoo, Finland. 26 June–1 July 2022; pp. 590–595. [Google Scholar]

- 18.Weng Z., Qin Z. Semantic communication systems for speech transmission. IEEE J. Sel. Areas Commun. 2021;39:2434–2444. doi: 10.1109/JSAC.2021.3087240. [DOI] [Google Scholar]

- 19.Huang D., Tao X., Gao F., Lu J. Deep learning-based image semantic coding for semantic communications; Proceedings of the 2021 IEEE Global Communications Conference (GLOBECOM); Madrid, Spain. 7–11 December 2021; pp. 1–6. [Google Scholar]

- 20.Zhou Q., Li R., Zhao Z., Peng C., Zhang H. Semantic communication with adaptive universal transformer. IEEE Wireless Commun. Lett. 2021;11:453–457. doi: 10.1109/LWC.2021.3132067. [DOI] [Google Scholar]

- 21.Zhang H., Shao S., Tao M., Bi X., Letaief K.B. Deep Learning-Enabled Semantic Communication Systems with Task-Unaware Transmitter and Dynamic Data. arXiv. 2022 doi: 10.1109/JSAC.2022.3221991.2205.00271 [DOI] [Google Scholar]

- 22.Catteeuw D., Manderick B. The limits and robustness of reinforcement learning in Lewis signalling games. Connect. Sci. 2014;26:161–177. doi: 10.1080/09540091.2014.885303. [DOI] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Data Availability Statement

Not applicable.