Abstract

Temporal sequences of neural activity are essential for driving well-timed behaviors, but the underlying cellular and circuit mechanisms remain elusive. We leveraged the well-defined architecture of the cerebellum, a brain region known to support temporally precise actions, to explore theoretically whether the experimentally observed diversity of short-term synaptic plasticity (STP) at the input layer could generate neural dynamics sufficient for sub-second temporal learning. A cerebellar circuit model equipped with dynamic synapses produced a diverse set of transient granule cell firing patterns that provided a temporal basis set for learning precisely timed pauses in Purkinje cell activity during simulated delay eyelid conditioning and Bayesian interval estimation. The learning performance across time intervals was influenced by the temporal bandwidth of the temporal basis, which was determined by the input layer synaptic properties. The ubiquity of STP throughout the brain positions it as a general, tunable cellular mechanism for sculpting neural dynamics and fine-tuning behavior.

Subject terms: Network models, Cerebellum

Neural circuit dynamics are thought to drive temporally precise actions. Here, the authors used a theoretical approach to show that synapses endowed with diverse short-term plasticity can act as tunable timers sufficient to generate rich neural dynamics.

Introduction

The neuronal representation of time on the sub-second timescale is a fundamental requisite for the perception of time-varying sensory stimuli, generation of complex motor plans, and cognitive anticipation of action1–4. But how neural circuits acquire specific temporal contingencies to drive precisely timed behaviors remains elusive. A progressive increase in firing rate (“ramping”) towards a threshold can represent different elapsed times by altering the slope of the ramping behavior. Elapsed time can also be encoded by a population of neurons that fire in a particular sequence (“time cells”)5–8. Sequential synaptic connections between neurons (synfire chains) can explain the neural sequences representing bird song9 and contribute to time delays necessary to cancel self-generated sensory stimuli in the electrosensory lobe of mormyrid fish10. Temporal dynamics of neural population activity can also be reproduced by training recurrent neural network models11–13. Nevertheless, the search for a candidate mechanism for generating a temporal reference (biological timer) for neural dynamics is an ongoing challenge.

Short-term synaptic plasticity (STP) is the rapid change in synaptic strength occurring over tens of milliseconds to seconds that is thought to transform presynaptic activity into distinct postsynaptic spike patterns14. Depression and facilitation of synaptic strength can act as low-and high-pass filters, respectively15, and synaptic depression can mediate gain modulation16,17. Network models of neocortical connectivity exhibit improved temporal pattern discrimination when augmented with STP18. Within recurrent neural networks, the long timescales of cortical synaptic facilitation provide the substrate for working memory19. Finally, low-gain recurrent network models that include STP also show enriched neural dynamics and generate neural representations of time20. However, experimental evidence of STP-dependent circuit computations is rare and is associated mainly with sensory adaptation21.

The cerebellar cortex is a prototypical microcircuit known to be important for generating temporally precise motor22 and cognitive behaviors23–26 on the sub-second timescale. It receives mossy fibers (MFs) from various sensory, motor and cortical areas. MFs are thought to convey contextual information and converge onto granule cells (GCs), the most numerous neuron in the brain. The excitatory GCs project onto the inhibitory molecular layer interneurons and Purkinje cells (PCs). PCs, being the sole output neurons of the cerebellar cortex, inhibit neurons in the deep cerebellar nuclei. According to the Marr-Albus-Ito model of cerebellar cortical circuit computations, precisely timed Purkinje cell activity can be learned by adjusting the synaptic weights formed by GCs with differing activity patterns27,28. This largely feed-forward circuitry has been proposed to learn the temporal contingencies required for prediction from neural sequences across the population of GCs within the input layer29. The synapses between MFs and GCs are highly variable in their synaptic strength and STP time course30. Therefore, we hypothesized that STP of MF-GC synapses could be used as internal timers for a population clock within the cerebellar cortex to generate neural dynamics necessary for temporal learning.

To elaborate this hypothesis, we modeled the cerebellar cortex as a rate-based two-layer perceptron network that includes realistic MF-GC connectivity and STP dynamics. The model reproduces learned PC activity associated with a well-known temporal learning task: delay eyelid conditioning31. The timescales of STP determined the temporal characteristics of the GC population activity, which defined the temporal window of PC temporal learning. The width of PC pauses scaled proportionally with the learned time intervals, similar to experimentally observed scalar variability of the eyelid conditioning behavior32. Additionally, we found that STP-driven GC activity was well suited to implement a Bayesian estimator of time intervals33. We propose that within neural circuits, dynamic synapses serve as tunable clocks that determine the bandwidth of neural circuit dynamics and enable learning temporally precise behaviors.

Results

Cerebellar cortex model with STP

The cerebellar cortex can be modeled as a two-layer perceptron that performs pattern separation of static inputs27,28,34,35. Cerebellar models of temporal processing are generally supplemented with additional mechanisms that generate temporally varying activity patterns in the GC layer10,29,36,37. To test whether heterogeneous MF-GC STP is sufficient to support temporal learning, we implemented STP of the MF-GC synapse in a simplified cerebellar cortex model, hereafter referred to as CCMSTP. This model deliberately omits all other potential sources of temporal dynamics. In particular, in most of the simulations presented here, we did not include recurrent connectivity (Fig. 1b). STP was simulated using a parallel vesicle pool model of the MF-GC synapse, similar to ref. 38. It comprises two readily releasable and depletable vesicle pools, synaptic facilitation, and postsynaptic desensitization. To reproduce the observed functional synaptic diversity, we set vesicle fusion probabilities (pv), synaptic pool sizes (N), and synaptic facilitation to match the relative strengths, paired-pulse ratios, and transient behaviors across five different types of synapses that were previously characterized30 (Fig 1a2–a6). Importantly, the longest timescale in CCMSTP is associated with a 2 s vesicle refilling time constant of the slow vesicle pool (τref = 2s, Fig. 1a1). To capture depression over long timescales38,39, we introduced a phenomenological parameter (pref = 0.6) that effectively mimics a simplified form of activity-dependent recovery from depression (see Methods).

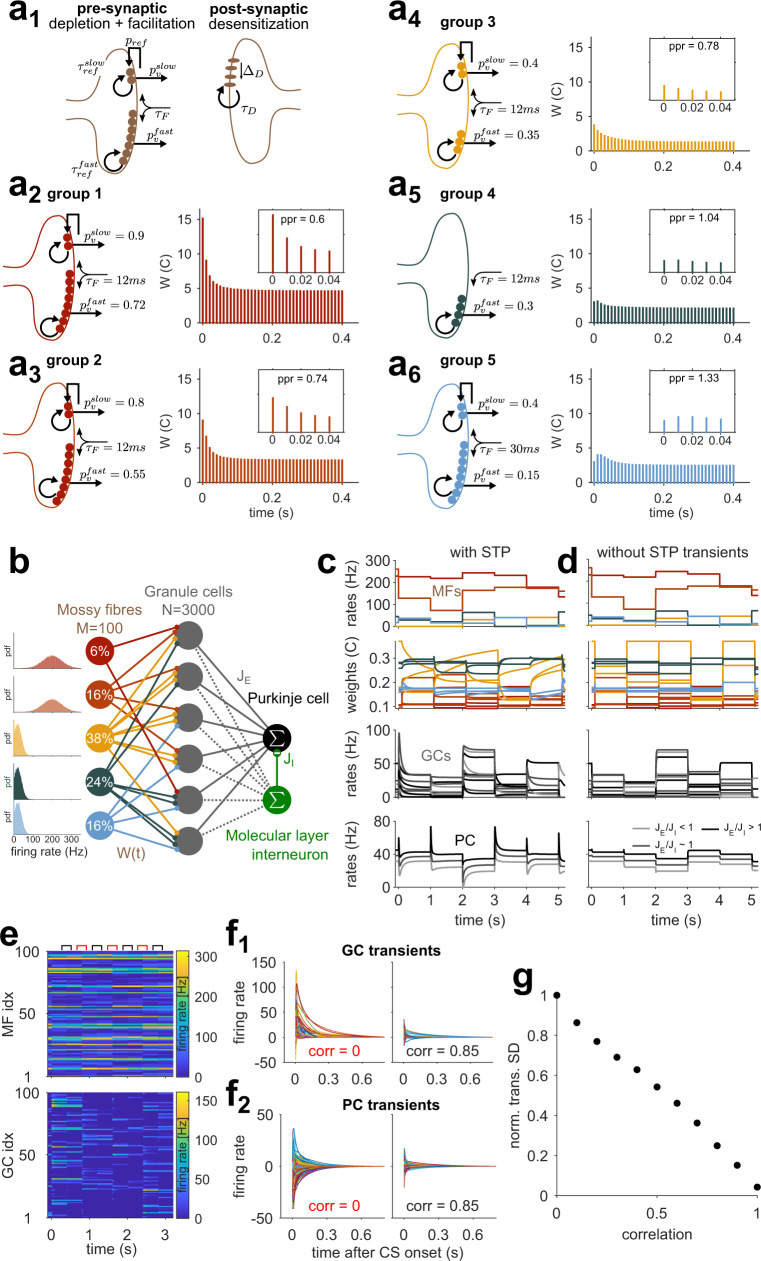

Fig. 1. Cerebellar cortex model with short-term synaptic plasticity within the input layer (CCMSTP).

a1 Synaptic model scheme showing the principal parameters. a2-6 Properties of the five model synapse types matching experimental groups from ref. 30. Left: Schemes show differences in presynaptic parameters; the postsynaptic side is identical for all groups. Right: average synaptic weights in response to repetitive 100 Hz stimulation as in ref. 39. Insets: First five responses with paired-pulse ratio (PPR) roughly mimic results from ref. 30. Color code for synapse groups is the same as in ref. 30. b Scheme of CCMSTP. MFs are classified according to the groups in (a). Percentages indicate relative frequency of MF groups. Insets: firing rate distributions for different MF groups. c Simulation of CCMSTP with randomly drawn weights. First panel: 5 sample MFs. Every second, MF activity is re-drawn from distributions in (b). Second panel: Normalized weights of 10 example MF-GC synapses. Third panel: activity of 10 sample GCs. Last panel: PC activity with different shades of gray indicating different E/I ratios onto the PC. d Same as c but without STP transient dynamics. Low amplitude GC and PC firing rate transients result from 10 ms GC integration time constant. e Example simulation in which correlated (black symbols) and uncorrelated (red symbols) MF patterns were presented to the network in alternation. The correlation coefficient for sequential patterns was . Firing rates are color-coded. f1 Steady-state subtracted GC responses from simulation in (e) for uncorrelated (left) and correlated MF pattern switches (right). f2 Same as (f1) but for PC. g Normalized standard deviation of PC transient amplitudes for switches between MF patterns of differing levels of correlation.

The CCMSTP consisted of firing rate units representing MFs, GCs, a single PC, and a single molecular layer interneuron, i.e., each neuron’s activity was represented by a single continuous value corresponding to an instantaneous firing rate. Each GC received 4 MF synapses, randomly selected from the different synapse types according to their experimentally characterized frequency of occurrence30. Importantly, we associated different synapse types with different MF firing rates (Fig. 1b, left panels, see Methods). High pv MF inputs were paired with high average firing rates (primary sensory groups 1, 2) and low pv synapses with MF inputs with comparatively low average firing rates (secondary/processed sensory groups 3, 4, 5), according to experimental observations40,41. We will reconsider this relationship below.

To examine CCMSTP network dynamics, input MF activity patterns were sampled every second from respective firing rate distributions shown in Fig. 1b. Each change in MF patterns evoked transient changes in MF-GC synaptic weights, which in turn generated transient GC firing rate responses that decayed at different rates to a steady-state (Fig. 1c). Similar to experimentally recorded PC responses to sensory stimuli in vivo42, switches between different MF activty patterns also generated heterogeneous transient changes in the PC firing rate, whose directions and magnitudes were controlled by the ratio of the average excitatory to inhibitory weight (Fig. 1c, bottom). In contrast, when MF-GC STP was removed, the transient GC and PC responses disappeared (Fig. 1d). The amplitude of the firing rate transients increased as the difference from one MF pattern to the next increased, similar to previous theoretical work16. Sequential delivery of uncorrelated MF firing patterns in CCMSTP (Fig. 1e) generated GC and PC transients with broadly distributed amplitudes (Fig. 1f1,2), which were progressively reduced as the relative change in MF rate decreased (Fig. 1g). Thus, dynamic MF-GC synapses allow both GCs and PCs to represent the relative changes in sensory stimuli.

Simulating PC pauses during eyelid conditioning

We next explored whether MF-GC STP diversity permits learning of precisely timed PC pauses associated with delay eyelid conditioning, a prototypical example of a cerebellar cortex-dependent learning. In this task, animals learn to use a conditioned stimulus (CS) to precisely time eyelid closure in anticipation of an aversive unconditioned stimulus (US). This eyeblink is driven by a preceding decrease in PC firing rates31,43 (Fig. 2a). Since the CS is typically constant until the time of the US and a precisely timed eyelid response can be learned even if the CS is replaced by direct and constant MF stimulation44,45, we modeled CS delivery in the CCMSTP by an instantaneous switch to a novel MF input pattern that persists over the duration of the CS (Fig. 2a). Most GC activity transients exhibited a characteristic rapid increase or decrease in firing rate, followed by an exponential-like decay in firing rate (Fig. 2c). In contrast to other models of eyelid conditioning29, the activity of most GCs in the CCMSTP peaked only once, occurring shortly (<50ms) after the CS onset (Fig. 2c). However, the distribution of GC firing rate decay times across the population was highly variable with a fraction of GCs showing decay times to 10% of the transient peak as long as 700 ms (Fig. 2c, d).

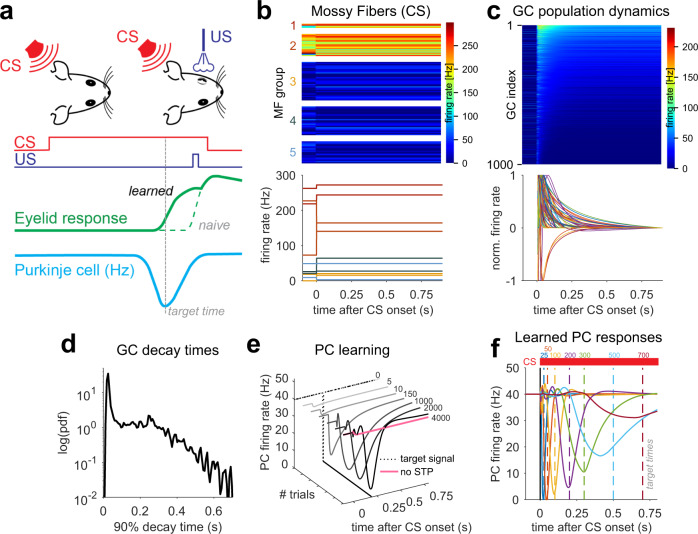

Fig. 2. Simulating Purkinje cell pauses during eyelid conditioning.

a Scheme of eyelid conditioning. CS: conditioned stimulus (red). US: unconditioned stimulus (violet). After experiencing CS and US pairings at a fixed temporal interval over many trials, the animal learns to close its eyelid just before the US is delivered (green). A pause in PC activity (blue) precedes the eyelid closure (target time, gray dashed line). b The CS is modeled as an instantaneous change in MF firing rate. Top: plot of firing rates of 100 MFs, sorted according to synaptic types (MF groups). MF firing rates are color-coded and drawn according to the distributions shown in Fig. 1b. Bottom: two sample MF rates per synaptic group. Colors as in Fig.1. c Model GC responses to the CS. Top: 1000 GCs sorted according to average firing rate after CS onset. Firing rates are color-coded. Bottom: steady-state subtracted and individually normalized GC transient responses. d Pdf of the distribution of GC activity decay times to 10% of the transient peak. e Example of delay eyelid conditioning over the course of 4000 learning steps for a 200 ms delay. Dashed line represents the target time used in the supervised learning procedure. Without STP-induced GC transients, no PC pause could be learned (pink line). f Simulated PC responses after 4000 learning trials for each target time (colored dashed lines).

To test whether the GC population dynamics could act as a basis set for learning the precisely timed PC firing rate pauses known to drive the eyelid response, we subjected the GC-PC synaptic weights to a gradient descent-based supervised learning rule46. The rule’s target signal consisted of a square pulse (zero firing rate at a specific time bin) at the designated time of the PC firing rate pause (Fig. 2e, dotted line). In the course of learning, there was a progressive acquisition of a pause in the PC firing rate (Fig. 2e). However, without MF-GC STP, the PC pause did not develop (Fig. 2e, pink). We tested learning of different delay intervals ranging from 25 ms to 700 ms and found that PC pauses could be generated for all delays. The PC pause amplitude and temporal precision (time and width) decreased with increasing CS-US delays (Fig. 2f), reminiscent of the shape of PC simple-spike pauses recorded during eyelid conditioning31.

Why might the learned PC pause amplitude and temporal precision be reduced for longer CS-US delays? The parameters associated with the learning algorithm (e.g. the number of iterations) are identical for each CS-US delay. The state of the GC population activity, in contrast, changes throughout the CS. Once all GC activity dynamics reach steady-state, temporal discrimination by PCs is diminished, and interval learning becomes impossible. In other words, for temporal learning to be effective, changes in GC firing rates must be prominent over the relevant timescale. Indeed, eyeblink conditioning simulations where slow or fast GCs were removed, the efficiency of generating PC pauses for short and long intervals were reduced (Fig. S2). CCMSTP simulations thus demonstrate that a GC temporal basis generated by MF-GC STP is sufficient to reproduce the cerebellar cortex computation underlying delay eyelid conditioning and suggests that the timescale of GC dynamics influences the timescale of behavioral learning.

Analysis of the synaptic mechanism underlying GC transient responses using a reduced model

PC temporal learning requires transient GC activity responses, which in our model only arise from STP at the MF-GC synapse30,39. How are the dynamics of synapses and GCs determined by quantal and firing rate parameters? The complexity of the full CCMSTP with many interacting parameters makes it difficult to assess the effect of each synaptic parameter. To overcome this challenge, we developed a reduced MF-GC synapse model, which was analytically solvable for an instantaneous and persistent switch of MF rates. This allowed us to identify the key computational building blocks of CCMSTP and explore how they control the overall behavior of the model. Specifically, we omitted short-term facilitation and postsynaptic desensitization and reduced the synaptic model to a single population of high pv synapses (“drivers”30) and a single population of low pv synapses (“supporters”30), each with a fast and a slow refilling ready-releasable pool (Fig. 3b), thus obtaining a model where STP results from vesicle depletion only. Each GC received exactly two driver and two supporter MF inputs with random and pairwise distinct identities (Fig. 3a).

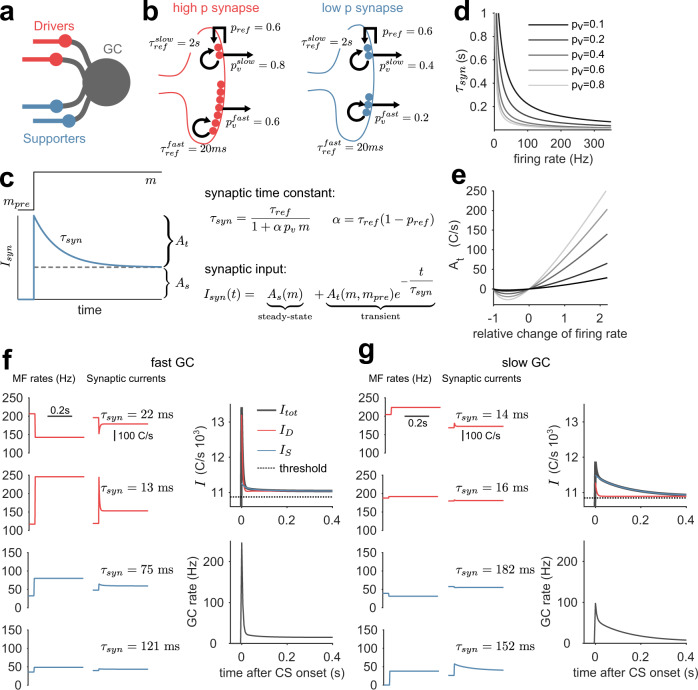

Fig. 3. MF-GC synaptic time constants and their relative weights determine the time course of GC responses.

a Scheme of GC inputs in the simplified synaptic model. Each GC receives exactly two distinct high release probability driver (red) and low release probability supporter MFs (blue). b Schemes of the reduced synaptic model of high (red) and low synapses (blue). c Left: scheme of single pool response with the time constant (blue line) to a firing rate switch during CS presentation (black solid line). The dashed black line separates the transient () from the steady-state amplitude (). Right: equations determining the synaptic time constant and synaptic input. d Slow vesicle pool time constant () versus presynaptic MF firing rate. Different shades of gray indicate different release probabilities. e Driver synapse transient amplitude () versus relative firing rate change for a baseline firing rate of 80 Hz (m−mpre/mpre, mpre= 80 Hz). A negative corresponds to a transient decrease in firing rate. Same color code as in (d). f Sample fast GC. Left: driver and supporter MF firing rates drawn from thresholded normal distributions ((200 Hz, 15 Hz) and (25 Hz, 15 Hz), respectively) and the corresponding synaptic responses. For clarity, only the of the respective slow pool is indicated. Upper right panel: GC threshold (dashed line), total synaptic input (black line), total driver input (red line), and total supporter input (blue line). The transient response is dominated by the driver input (red). Lower right panel: resulting GC firing rate response. g Like (f) but for a sample slow GC. The transient response is dominated by the supporter input (blue).

In this reduced model, an instantaneous and persistent switch of MF firing rates generates an average postsynaptic current (Isyn(t)) for each vesicle pool that is remarkably simple. It features a sharp transient change, followed by a mono-exponential decay to a steady-state synaptic current amplitude, As, (Fig. 3c) and can be generally expressed as

| 1 |

Here, As is a time-invariant component and is a transient component with synaptic relaxation time constant τsyn (Fig. 3c) and amplitude At. This transient component determines the synapse’s ability to encode the passage of time.

The solution of the synaptic dynamics model reveals the crucial dependence of τsyn and At on the presynaptic and firing rate parameters (see “Methods”):

| 2 |

Here, , m is the MF firing rate persisting during the CS, and the synaptic parameters pv, τref, and pref are defined as above. Equation (2) shows that τsyn is inversely related to the MF firing rate during the CS and the release probability, pv(Fig. 3d). Intuitively, this is because higher pv and/or m lead to a higher rate of synaptic vesicle fusion, and hence depletion, driving the synaptic response amplitude to steady-state faster. Conversely, slow time constants arise from low pv and/or low m with the maximum τsyn being equal to the vesicle recovery time τref.

The transient amplitude At is given by

| 3 |

Here, N is the number of release sites. Importantly, and in contrast to τsyn, At depends on the presynaptic MF firing rate before the CS, mpre, and the difference between the MF firing rates before and during the CS. In particular, for both rates sufficiently high, At becomes a linear function of the normalized difference between m and mpre, i.e. (Fig. 3e). At is sensitive to the relative and not the absolute change in presynaptic rate, as observed previously16.

The transient GC activity results from the sum of eight synaptic transient current components, (i.e. four inputs, each with two pools). To illustrate the interplay between the At and τsyn, we compared the behavior of each synaptic input for a selected fast and slow GC (Figs. 3f, g). Generally, synaptic inputs from supporters display longer transient currents than synaptic inputs from drivers (Figs. 3f, g, middle panels) due to their lower firing rates (Figs. 3f, g, left panels) and low pv (Fig. 3b). At is largely determined by the relative change in the respective presynaptic MF firing rates, (Fig. 3f and g, left panels). Thus, “fast” GCs are generated when the high pv driver inputs exhibit large relative changes in firing rates (Fig.3f). “Slow” GCs are generated from synapses with a small relative change in driver firing rates, but large relative supporter (low pv) rate changes paired with low supporter rates during the CS (Fig.3g). Taken together, in the reduced model τsyn and At determine the effective timescales of the GC responses and are explicitly influenced by quantal parameters, synaptic time constants, and the diversity of MF firing rates.

The explicit influence of synaptic parameters on temporal learning

Our simulations suggest that delay eyelid conditioning across multiple delays necessitates GC population dynamics spanning multiple timescales (Fig. 2, Fig. S2). Since individual GC firing rate dynamics depend on the At and τsyn of their synaptic inputs (Fig. 3), this implies that 1) the spectrum of τsyn available to the network should cover the relevant timescales and 2) the At associated with different τsyn, which can be understood as the relative weights of synaptic transient components, should be of comparable magnitude across τsyn. To illustrate these points, we used the reduced CCMSTP to simulate eyelid response learning with different firing rate properties and examined the relationship between τsyn, At, the GC temporal basis, and learning outcome. Importantly, since At and τsyn are not independent, the quantity of interest is their joint distribution. We initially set up a reference simulation by choosing MF firing rate distributions such that the diversity of GC transient responses and the temporal learning performance (Fig. 4a) were comparable to the CCMSTP with native synapses (Fig. 2f). For this case, the joint distribution shows that At decreased with increasing . Note that At is maximal when the MF firing rates increased from zero mpre to a finite m upon CS onset, maximizing m-mpre (Eq. 3, see also Fig. S3b, c). We quantified learning accuracy by calculating an error based on 1) the PC response amplitude, 2) its full width at half maximum and 3) the temporal deviation of its minimum from the target delay (Fig. 4a, fifth panel, Fig. S2a, see “Methods”"). Importantly, the degradation in temporal precision of the learned PC pauses for longer CS-US intervals was concomitant with the reduction of the At associated with longer τsyn (Fig. 4a). This suggests that inspection of the joint distribution of τsyn and At can provide insight into the temporal learning performance of the network.

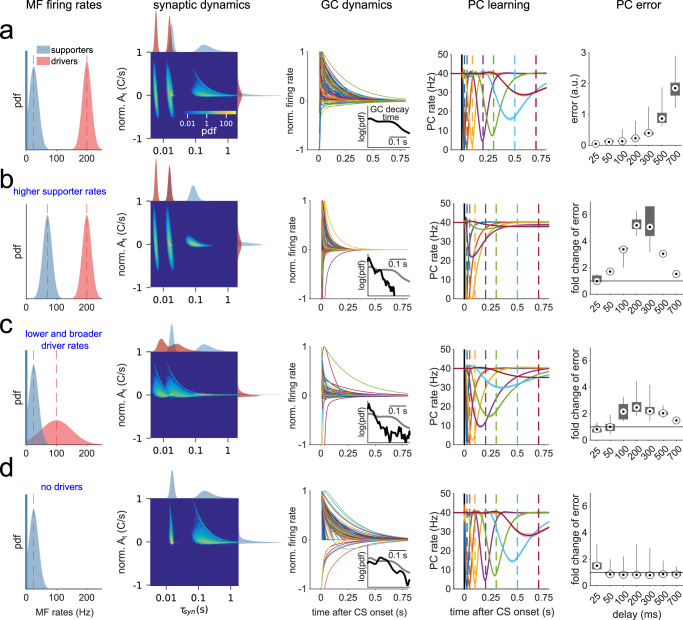

Fig. 4. Learning performance depends on MF firing rate distributions.

a First panel: Driver and supporter MF firing rate pdfs (μD = 200 Hz, μS = 25 Hz, σD = σS = 15 Hz). Second panel: Resulting joint At and τsyn distribution, featuring four partially overlapping clusters, corresponding to the slow and fast pools for driver and supporter synapses, and marginal distributions. The color code of the joint distribution scales logarithmically. Colors of marginal distributions indicate driver (red) and supporter (blue) components. Third panel: Normalized GC transient responses to CS. Inset: pdf of the distribution of decay times to 10% of the transient peak. Fourth panel: learned PC pauses, averaged over n = 20 simulations with different realizations of MF patterns and MF-GC connectivity. Dashed lines mark CS-US intervals (color code is the same as in Fig. 2e). Fifth panel: Error for each CS-US interval is calculated based on PC response amplitude, full-width at half-maximum and temporal deviation (Fig. S2a) and averaged over n = 20 realizations of MF patterns and MF-GC connectivity. Black lines indicate the distribution ranges; gray boxes indicate the 25th to 75th percentile range and black-white circles the medians. b Same as a, but with μS = 70 Hz. Inset: black line is the pdf for simulation with μS = 70 Hz and gray line is the pdf from (a) for comparison. Fifth panel: change in error relative to the average error in (a). c Same as (a), but with μD = 100 Hz and σD = 50 Hz. d Same as (a), but without driver inputs.

When changing only the mean firing rate of supporter MFs (μS) from 25 Hz to 70 Hz, the synaptic time constants were shortened due to the inverse relationship between τsyn and the mossy-fiber firing rate m (Fig. 4b, second panel). Consequently, and expectedly, the distribution of GC firing rate decay times was shifted to shorter values, and learning performance was degraded for all CS-US intervals, except the 25 ms delay (Fig. 4b). Lowering the mean firing rate of driver MFs (μD) from 200 Hz to 100 Hz and increasing the standard deviation (σD) from 15 Hz to 50 Hz, led to an overall increase of the time constants contributed by driver synapses, as well as an increase in their relative weight (At; Fig. 4c, second panel, marginals). As a result, the joint probability distribution shows a shift towards faster weighted time constants. It also follows that GC transients are accelerated, and learning precision is decreased for long CS-US intervals. Removing synaptic currents originating from driver synapses only disrupted learning PC pauses for the shortest CS-US interval (Fig. 4d). Reduced model simulations with systematic parameter scans across a wide range of MF firing rate distributions for both synapse types suggested that good synaptic regimes for temporal learning are achieved when driver synaptic weights are comparable or smaller than those of the slow supporting synapses (Fig. S4).

All the results taken together suggest that optimal learning occurs when the spectrum of τsyn available to the network covers behaviorally relevant timescales with balanced relative weights (At). Synaptic and GC activity timescales can therefore be tuned by simultaneously modulating pv and the absolute scale of m to provide the necessary distribution of τsyn, whereas the relative change of MF firing can be used to tune the weight (At) of τsyn.

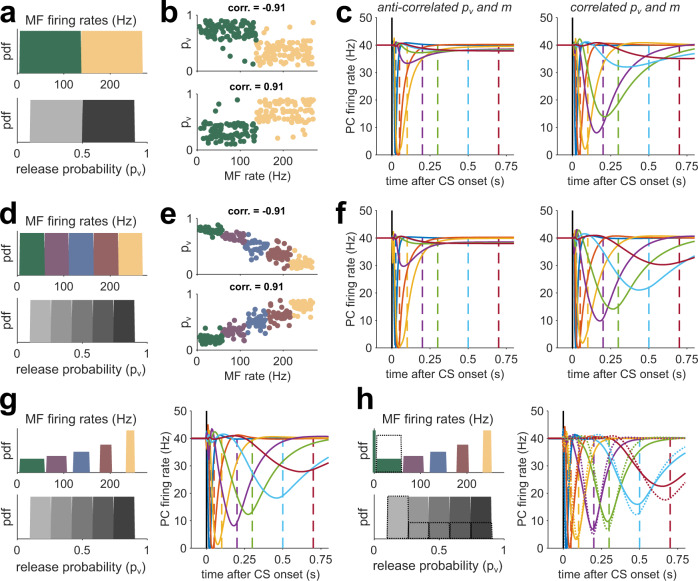

Firing rate and synaptic parameters that improve temporal learning performance

Thus far, we used the reduced model to explore how MF firing rates and synaptic properties influenced the timescales of GC activity and the temporal precision of learned PC pauses. The model, however, was constrained by (1) the use of only two synapse types, (2) fixed release probabilities (pv), (3) MF firing rates that were consistently higher for high pv synapses than their low pv counterparts, and (4) an equal number of driver and supporter synapses. We next considered how the relaxation of these assumptions and specific parameter combinations could influence the precision of learned PC pauses. In particular, we simulated reduced models where, in addition to MF firing rates, pv was sampled from continuous distributions.

Equation (2) suggests that a positive correlation between pv and m should broaden the distribution of τsyn and broaden the time window of learning. Specifically, we expect learning performance to improve when high(low) firing rate MFs are, on average, paired with high(low) pv synapses. We chose uniformly distributed pv and MF firing rates and split both of these equally into two contiguous groups (Fig. 5a). We performed training simulations in which we paired high pv(driver) synapses with high firing rates, or we paired low pv (supporter) synapses and high MF firing rates, and vice versa (Fig. 5b). Formally, this is equivalent to adjusting the rank correlation (crk) between the pv category (supporter or driver) and the m category (high or low, Fig. 5b). We found better learning performance when pv and m were positively correlated (Fig. 5c, Fig. S5). Indeed, primary vestibular afferents that form driver-like synapses have been shown to have high firing rates30,40 while supporter-like secondary vestibular afferents have low firing rates30,41.

Fig. 5. Correlating release probability and MF firing rates improves learning performance.

a Top: distribution of MF firing rates (m) used to drive the network, divided into low (supporter, green) and high (driver, yellow) rates. Bottom: Distribution of synaptic release probabilities (pv), divided into low (light gray) and high (dark gray) probabilities. b Top: pv versus m for 500 sample synapses for a network with a strong negative rank correlation between the m category (supporter or driver) and the pv category (high or low). Bottom: same as top, but for strongly positive correlated m and pv. c Learned PC pauses for low (left) and high (right) correlations. CS-US intervals are color-coded as in Fig. 2f. Each curve is the average of n = 20 simulations with different realizations of MF patterns and MF-GC connectivity. d–f Same as (a–c), but for distributions divided into five groups. g Left: MF rates and release probabilities for five synapse types where the average group firing rate is as in (d), but the firing rate variance progressively decreases with the average rate. Right: resulting PC eyelid response learning for high correlations. h Same as (g) but with zero-rate MFs added to the lowest rate distribution. Dashed lines indicate the case when the count of lowest rates and release probabilities is doubled.

Inspired by the number of synapse types observed experimentally30, we augmented the number of synapse groups from 2 to 5 without changing the pv and firing rate distributions (Fig. 5d). We reasoned that the introduction of a larger number of MG-GC synapse types would in principle permit a stronger linear correlation between pv and m to occur (Fig. 5e), leading to a broader τsyn spectrum (not shown) and an improved learning of PC pauses. Indeed, for high crk, the learning performance of the five group CCMSTP was better than that of the two-group CCMSTP (compare Fig. 5c and Fig. 5f, Fig. S5). These simulation results suggest that good temporal learning performance of CCMSTP can be achieved not simply by generating variability in parameters, but by structuring, or tuning, the relationship between pv and m.

Equipped with an understanding of how the synaptic and MF rate parameters can generate different synaptic time constants, we set out to further improve the temporal learning for longer CS-US delays by adjusting the variance of the clustered MF rate distributions. To increase the weighting of long τsyn, we inversely scaled the variance of the MF firing rate distributions with respect to the mean firing rate (Fig. 5g), thereby increasing At (Fig. 4c). As expected, PC pause learning was better than when using equal-width MF groups (Fig. 5g, Fig. S5). An additional enhancement of learning performance could be achieved by adding a small fraction of zero-rate MFs to the lowest group (Figs. 5g, 6% zero MFs, same fraction as in Fig. 4a), which provide maximal At(see Fig. 4). Finally, taking into account the experimental finding that low pv synapses are more frequent than high pv synapses30, we doubled the fraction of MFs and release probabilities in the lowest group, resulting in the best performance of all versions of CCMSTP tested here (Fig. 5g). These simulations show that positive correlations between vesicle release probability and presynaptic firing rate broaden the temporal bandwidth of circuit dynamics and improve temporal learning.

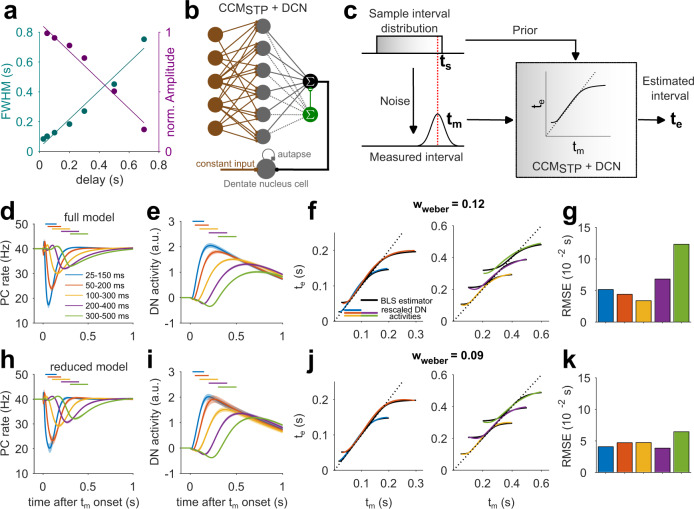

STP permits learning optimal estimates of time intervals

Humans and animals have an unreliable sense of time and their timing behavior exhibits variability that scales linearly with the base interval47. Previous work has found that humans seek to optimize their time interval estimates by relying on their prior expectations. A canonical example of this optimization is evident in the so-called ready-set-go-task48 in which subjects have to measure and subsequently reproduce different time intervals. It has been shown that when the intervals are drawn from a previously learned probability distribution (i.e., prior), subjects integrate their noisy measurements with the prior to generate optimal Bayesian estimates. For example, when the prior distribution is uniform, interval estimates are biased towards the mean of the prior, and biases are generally larger for longer intervals that are associated with more variable measurements (Fig. 6c). Such Bayes-optimal temporal computations are evident in a wide range of timing tasks such as time interval reproduction48, coincidence detection49, and cue combination50.

Fig. 6. STP-generated temporal basis enables the computation of Bayesian time-interval estimates.

a Full width at half maximum (cyan) and normalized amplitudes (magenta) of learned PC pauses versus delay interval from the experimentally constrained CCMSTP (see Fig. 2). Solid lines are linear fits. b Scheme of CCMSTP with added dentate nucleus cell. c Scheme of Bayesian integration. The sample interval ts (red dashed line, here drawn from a uniform distribution, upper left) is subject to a noisy measurement yielding a measured interval tm (lower left). CCMSTP implements Bayesian integration yielding an estimated interval te (right). d PC responses after 12,000 learning trials, averaged over n = 20 simulations with different realizations of MF patterns and MF-GC connectivity. Shaded area indicates standard deviation. Different colors represent learning of different uniform sample interval distributions. e Same as (d), but for DN cell activity. f Rescaled DN cell activity for different learned interval distributions (colored) and fitted theoretical Bayesian least squares (BLS) estimator (solid black line), with wweber = 0.12 resulting from fit. g Squared deviation of rescaled DN activity from the BLS estimator for all tested intervals. h–k Same as (d–g), but for the reduced model. The reduced model firing rate parameters were μD = 200 Hz, μS = 20 Hz, σD=10Hz and σS = 15 Hz and resulted in DN activity consistent with a Bayesian least squares model with wweber = 0.09.

A recent study developed a cerebellar model called TRACE for temporal Bayesian computations33. TRACE implements Bayesian integration by incorporating two features. First, it assumes that GCs form a temporal basis set that exhibits temporal scaling. This feature accounts for the scalar variability of timing. Second, it assumes that prior-dependent learning alters the GC-PC synapses. This feature allows the dentate nucleus neurons (DNs) downstream of PCs to represent a Bayesian estimate of the time interval.

In our analysis of eyelid conditioning (Fig. 2), we showed that CCMSTP generates PC firing rate pauses whose width and amplitude linearly scale with time (Fig. 6a). Therefore, we reasoned that CCMSTP might have the requisite features for Bayesian integration. To test this possibility quantitatively, we presented our model with variable intervals drawn from various prior distributions. The interval was introduced as a tonic input to MFs, similar to the CS in the eyelid simulations. The onset of this tonic input caused an abrupt switch of the MF input rates that persisted over the course of a trial. During learning, we subjected the model to intervals sampled randomly from a desired prior distribution.

We tested CCMSTP with five different uniform distributions of ready-set intervals (25-150 ms, 50–200 ms, 100–300 ms, 200–400 ms, 300–500 ms), resulting in PC pauses that broadened for longer interval distributions, and integrated DN activity that could easily match the Bayesian least-square model33 by adjusting a single parameter, the Weber fraction wweber (see “Methods”"; Fig. 6d, h). The reduced model interval estimates were more similar to the Bayesian estimates than for CCMSTP with native synaptic parameters, especially for the 200–400 ms and 300–500 ms intervals (Fig. 6h–k). Nevertheless, in both cases the CCMSTP simulations show that a GC basis generated by MF-GC STP is sufficient for driving Bayesian-like learning of time intervals spanning several hundreds of milliseconds. It should be noted that our GC temporal basis was not explicitly constructed to accommodate scalar properties. Nevertheless, as in the TRACE model, we observed that interval estimates were biased towards the mean and that these biases were larger for longer intervals. These results suggest that a GC basis set generated from the diverse properties of native MF-GC synapses likely exhibits a scalar property necessary for generating optimally timed behaviors.

Discussion

In order to generate temporally precise behaviors, the brain must establish an internal representation of time. This theoretical study posits that the diversity of synaptic dynamics is a fundamental mechanism for encoding sub-second time in neural circuits. By using eyelid conditioning as a benchmark task for the CCMSTP, we elucidated the conditions under which the variability in MF-GC synaptic dynamics generates a GC temporal basis set that represents elapsed time and is sufficient for temporal learning on a sub-second scale. According to David Marr’s levels of analysis of information processing systems51, our study connects all three levels, from the circuit computation (learning timed PC pauses) to its underlying algorithm (learning with a temporal basis set), and the fundamental biological mechanism (STP diversity).

STP diversity as a timer for neural dynamics

Cerebellar adaptive filter models posit that GCs act as a heterogeneous bank of filters that decompose MF activity into various time-varying activity patterns - or temporal basis functions - which are selected and summed by a synaptic learning rule at the PC to produce an output firing pattern that generates behaviors that minimize error signals arriving via climbing fibers36,37. CCMSTP can be viewed as an adaptive filter in which MF-GC synapses act as non-linear elements whose filter properties are determined by the experimentally defined synaptic parameters and modulated by the presynaptic MF firing rates.

Recent theoretical work proposes that a scale-invariant neuronal representation of a temporal stimulus sequence can be obtained by using a population of leaky integrators that produce exponentially decaying neural activity transients52. Indeed, exponential-like activity has been observed in the entorhinal cortex—a region that projects to the hippocampus6. The exponential-like population activity is reminiscent of the GC temporal basis set in CCMSTP following persistent firing rate changes. However, the MF-GC synaptic inputs are always a mixture of multiple exponential components. Nevertheless, our work suggests that STP could be a plausible biological mechanism explaining exponential dynamics in neuronal populations6 and merits further theoretical and experimental investigation.

The use of an instantaneous and persistent change in MF activity was motivated by the fact that eyelid conditioning can be achieved if the CS is replaced with a constant MF stimulation44,45,53. Recent evidence from pons recordings during reaching suggests that MF activity can be persistent with little dynamics54. For dynamic changes in MF rates, STP is likely to generate outputs that are phase-shifted and/or the derivatives of their input55. Using heterogeneity of MF-GC STP as a mechanism for adaptive filtering, even time-varying inputs will effectively be diversified within the GC layer and improve the precision of temporal learning.

Synapses within the prefrontal cortex56 and at thalamocortical connections57 exhibit diverse firing rate inputs and release probabilities58, generating synaptic dynamics that could drive complex neural dynamics. Reminiscent of PC firing rate pauses during eyelid conditioning, hippocampal time cells are thought to be generated by a linear combination of exponentially decaying input activity patterns from upstream entorhinal cortical neurons6. More generally, it has been shown that STP also provides a critical timing mechanism within a recurrent neural network model of neocortical activity by facilitating temporal pattern descrimination18. We note that all synapses in this study featured only a single STP timescale, but we expect that the addition of heterogeneous STP would further diversify the network’s dynamics and enhance its computational properties. Thus, these previous studies and our present study underscore the proposal that STP diversity is a tunable timing mechanism for generating neural dynamics across brain regions.

Timing mechanisms in the cerebellar cortex

In addition to MF-GC STP, the cerebellar cortex is equipped with multiple mechanisms potentially enabling temporal learning59. Indeed, time-varying MF inputs could directly provide a substrate for learning elapsed time60, but whether the observed diversity of MF firing is sufficient to mediate temporally precise learning is unknown and merits further exploration. Within the cerebellar cortex, unipolar brush cells are thought to provide delay lines to diversify GC activity patterns10,61,62, but these cell types are rare outside the mammalian vestibular cerebellum. The diversity of GC STP63 could add to the diversity of the effective GC-layer basis set64. Consistent with the importance of MF-GC STP, delay eyelid conditioning was selectively altered due to the loss of fast EPSCs in AMPAR KO mice65. Simulations including realistic NMDA and spillover dynamics66 can further enrich the temporal scales available to the network67. It would be of particular interest to investigate the role of MF-GC STP in the context of recurrent GC-Golgi-Cell-cell network models that have been shown to generate rich GC temporal basis sets12,29. Finally, we note that MF-GC STP and other timing mechanisms described above are not mutually exclusive but presumably act in concert with the diverse intrinsic properties of GCs68 and PCs69 to cover different timescales of learning or increase mechanistic redundancy.

Predictions of the CCMSTP

Our theory makes several testable predictions. The transient response amplitude of PCs, which is proportional to the relative change in firing rate, can serve as a detector of rapid changes in MF firing patterns (novelty) and thus amplify pattern discrimination similar to that demonstrated for synapse-dependent delay coding30. Consistent with this prediction, single whisker deflections have been shown to generate transient PC activity42.

CCMSTP predicts that persistent changes in MF activity would generate exponential-like GC activity profiles (Figs. 2, 4). However, although the majority of simulated GCs shown here are active at the onset of the CS, this is not a necessary feature of CCMSTP. When we included a single, average-subtracting Golgi cell (possibly representing the “common mode” of Golgi Cell population activity64), more GCs showed delayed onset firing and the variability of onset and peak times (Fig. S6). This did not affect the learning performance of simulated delay eyelid conditioning (Fig. S6). Note that our implementation of Golgi cell feedback is simplified and does not account for reciprocal inhibition between multiple Golgi cells, which in simulations has also been shown to generate diverse GC activity12,29. To test these predictions, MFs could be driven at constant rates using direct electrical or optogenetic stimulation of the cerebellar peduncle in vivo or the white matter in acute brain slices, with and without intact Golgi cell inhibition. Unfortunately, high-temporal resolution population recordings of GCs are challenging due to the small size of GC somata. In the future, small impendence silicon probe recordings70 or ultra-fast optical indicators71 might permit experimentally testing our hypotheses. If successful, we predict that the time course of GC responses should be diverse and exponential-like, with prominent delayed activity in some granule cells when Golgi cells are intact. Furthermore, decreasing or increasing the MF firing rate should in turn slow or accelerate GC responses, respectively. Finally, for complex behavioral experiments in which the MF activity is dynamic (and measurable), one could examine which circuit connectivity of the CCMSTP best reproduces the measured GC activity.

The CCMSTP is one of the few network models directly linking quantal synaptic parameters and presynaptic activity dynamics to population activity dynamics and temporal learning. Figures 3 and 4 show that the relative weight and temporal span of synaptic time constants dictate the distribution of GC firing rate decay times and, in turn, the timescales of temporal learning. Analytical solutions for simple synapse models (Eq. (3)) provide insight into how synaptic parameters influence STP. For example, high levels of correlation between pv and m, coupled with balanced relative weights of the synaptic time constants, generated a learning performance superior to the native synapses (Fig. 5d). Therefore, CCMSTP predicts that MFs forming driver synapses (high pv) would have a high baseline and stimulated firing rates, while MFs forming supporter synapses (low pv) would exhibit low baseline and stimulated firing rates, albeit with large relative changes in firing rates. Indeed, vestibular neurons, which have been shown to exhibit high firing rates72,73, produced MF-GC synapses with high release probability30. In the C3 zone of the anterior lobe in cats, specific firing rates were associated with different MF types74. It is tempting to hypothesize that nature tunes presynaptic activity and synaptic dynamics (perhaps by homeostatic or activity-dependent mechanisms) in order to preconfigure the window of temporal associations required for a particular behavior.

Choice of the cerebellar learning rule

The learning rule we used here was adapted from a previous modeling study that investigated cerebellar adaptation of the vestibular ocular reflex and was argued to be biologically plausible75. This synaptic weight update rule is mathematically equivalent to a gradient descent in which the error magnitude is transmitted via the climbing fiber75. Consequently, CCMSTP learning rule features graded climbing-fiber responses and a gradual reduction in climbing-fiber spiking that is concomitant with the progression of learning. These phenomena have been observed experimentally43,76. Moreover, a recent study that thoroughly investigated the role of the climbing fiber spike in cerebellar learning found that the GC and climbing-fiber spike pairings necessary for the induction of long-term depression/potentiation under physiological conditions are compatible with a stochastic gradient descent rule46. The CCMSTP learning rule can be seen as a deterministic variant of this.

Synaptic implementation of a Bayesian computation

Bayesian theories of behavior provide an attractive framework for understanding how organisms, including humans, optimize time perception and precise actions despite the cumulative uncertainty in sensory stimuli, neural representations, and generation of actions48,77. We found that CCMSTP could generate biased time estimates consistent with Bayesian computations. In general, the magnitude of biases for a Bayesian agent depends on the magnitude of timing variability (i.e., Weber fraction). In our simulations, model parameters corresponding to native synapses from the vestibular cerebellum produced biases that were optimal for a typical weber fraction of 0.12. However, CCMSTP is flexible and can be adjusted to generate optimal biases for a wide range of weber fractions. The exact relationship between model parameters and wweber is an important question for future research. We note that the timescales of synaptic properties observed empirically in the vestibular cerebellum30 are only suitable for generating optimal estimates for relatively short time intervals. Therefore, whether the synaptic mechanisms that underlie CCMSTP could accommodate timing behavior for longer timescales remains to be seen. One intriguing hypothesis is that synaptic parameters in different cerebellar regions are tuned to generate optimal estimates for different time intervals, similar to the timing variability observed for cerebellar long-term synaptic plasticity rules78.

Methods

MF-GC synapse model

The synaptic weight between the jth MF and the ith GC is denoted by Wij. The firing rate of the jth MF is represented by mj(t) and the average current per unit time transmitted by the synapse between GC i and MF j is

| 1 |

Time-dependent MF-GC synaptic weights were modeled using two ready-releasable vesicle pools38, each according to the general form established by Tsodyks and Markram79. A similar model was shown to accurately describe STP at the MF-GC synapse38. Accordingly, one vesicle pool was comparatively small, with a high release probability and a low rate of recovery from vesicle depletion (0.5 s−1), while the other was comparatively large, with low release probability and a high rate of recovery from depletion (20ms−1)38. We refer to these pools as’slow’ and’fast’, respectively. In the Hallermann model38, the slow pool is refilled by vesicles from the fast pool. For the sake of mathematical tractability, we modeled the pools as being refilled independently (see scheme in Fig. 1).

To model vesicle depletion, we use the variables xslow and xfast, denoting the fraction of neurotransmitter available at the slow and fast vesicle pool. The state of the pools between GC i and MF j at time t is then described by

| 2 |

where, and are the time constants of recovery from vesicle depletion for the slow and fast pools, and are identical for all synapses. The variables and denote the pools’ respective release probabilities at time t. Experimental data show that, in response to trains of action potentials, MF-GC synapses approach synaptic steady-state transmission with a long time constant38,39. This feature can be captured with a serial pool model38 (see scheme in Fig. S7). In order to capture this behavior with a parallel pool model, we added the phenomenological parameter pref to the slow pool’s dynamical equation. In mechanistic terms, pref can be thought of as the probability of immediately refilling a synaptic docking site after the release of a vesicle. This mechanism effectively mimics a simplified form of activity-dependent recovery from depression. The final release probabilities and are modulated by synaptic facilitation according to

| 3 |

Here, and denote the release probabilities for the fast and slow pools, respectively, and is the facilitation time constant. The index α denotes different synapse types (groups from Chabrol et al.30) and varies from 1 to 5. The average number of vesicles released at any time t can be written as:

| 4 |

Postsynaptic receptor desensitization induces an additional component of depression of phasic MF-GC synaptic transmission. As both pools share the same postsynaptic target, we model desensitization via the modulation of a single variable for each synapse type, which represents the synaptic quantal size and which is influenced by the total number of vesicles released from both pools:

| 5 |

where , τD is the time constant of recovery from desensitization, q0 is the quantal size in the absence of ongoing stimulation and ΔD is a proportionality factor that determines the fractional reduction of . As explained below, we set q0 = 1, i.e. qij(t) is normalized. Both τD and ΔD are identical across all synapse types. Finally, the total synaptic weight is equal to the sum of the contributions from both vesicle pools:

| 6 |

Synaptic parameters for generating diverse synaptic strength and dynamics

We set the synaptic parameters of our model to reproduce the average behavior of the 5 MF-GC synapse groups which were determined in ref. 30 based on unitary response current amplitudes, pair pulse ratios, and response coefficients of variation.

The vesicle pool refilling time constants and were set to the values measured at the MF-GC synapse in ref. 38 and were identical for all synapse groups. The time constant of facilitation for groups 1–4 was taken from ref. 39. The time constant of recovery from desensitization, τD, was set equal to the value reported in ref. 38 for all groups, and the parameters ΔD was chosen so as to obtain the relative reduction in quantal size reported in the same ref. 38. To qualitatively account for the slow approach to steady-state transmission observed in MF-GC synapses38,39 we set pref to a value of 0.6 for all synapse types.

To set the presynaptic quantal parameters, we matched model quantal parameters, q0, N and pv, to the average of those measured in ref. 30 for each synapse group. The estimation of the experimental values , and was carried out via multiple-probability fluctuation analysis30, which assumes a single vesicle pool. To constrain the corresponding parameters of our two-pool model, we assumed:

| 7 |

while keeping . Since the quantal size did not significantly differ between groups30, we set q0 = 1 for all groups for simplicity. As group 4 featured almost no STP, we modeled these synapses without slow pool.

The above equations do not have a unique solution. In order to constrain the synaptic parameters further, we additionally required that the relative unitary response current amplitudes between synapse groups and their pair pulse ratios approximately equal the experimentally measured ones. To account for the fact that group 5’s pair pulse ratio is larger than one, we set τF = 30 ms for this group, as in ref. 30.

Finally, we extracted the relative occurrence of each synapse type from ref. 30.

A set of synaptic parameters that reproduces the behavior of the five synapse groups from ref. 30 that we used in Figs. 1, 2, and 6 is summarized in Table 1.

Table 1.

Synaptic parameters used in full model

| Group 1 | Group 2 | Group 3 | Group 4 | Group 5 | Ref. | |

|---|---|---|---|---|---|---|

| 4 | 3 | 4 | – | 3 | 30 | |

| 16 | 12 | 6 | 10 | 12 | 30 | |

| 0.9 | 0.8 | 0.4 | – | 0.4 | 30 | |

| 0.72 | 0.55 | 0.35 | 0.3 | 0.15 | 30 | |

| [ms] | 2000 | 2000 | 2000 | – | 2000 | 38 |

| [ms] | 20 | 20 | 20 | 20 | 20 | 38 |

| [ms] | 12 | 12 | – | 12 | 30 | 30,39 |

| 0.6 | 0.6 | 0.6 | – | 0.6 | – | |

| 0.1 | 0.1 | 0.1 | 0.1 | 0.1 | 38 | |

| [ms] | 100 | 100 | 100 | 100 | 100 | 38 |

| occurrence | 6% | 16% | 38% | 24% | 16% | 30 |

MF firing rate parameters

MF firing rate distributions of the full CCMSTP were set according to the broad range described in the literature40,41,70,72,73,80–84. MFs forming synapse types 1 and 2, which convey primary sensory information, were set to high firing frequencies according to experimental observations40,41 (see Fig. 1b, left panels). In contrast, the firing rates for the other synapses types were lower70,83. For the full model, this led to synapses with high pv being associated with MF inputs with comparatively higher average firing rates (primary sensory groups 1, 2) and synapses with low pv being associated with MF inputs with comparatively lower average firing rates (secondary/processed sensory groups 3, 4, 5). We chose to describe MF firing rate distributions by Gaussian distributions whose negative tails were set to zero. Means and standard deviations of the Gaussian distributions were set such that the means and standard deviations of the resulting thresholded distributions resulted in the values summarized in Table 2.

Table 2.

MF firing rate parameters used in the full model

| Group 1 | Group 2 | Group 3 | Group 4 | Group 5 | |

|---|---|---|---|---|---|

| [Hz] | 200 | 200 | 20 | 20 | 20 |

| [Hz] | 20 | 20 | 20 | 20 | 20 |

Cerebellar cortical circuit model

The standard cerebellar cortex model with STP (CCMSTP) consists of firing rate units corresponding to 100 MFs, 3000 GCs, a single PC, and a single molecular layer interneuron (MLI). The PC linearly sums excitatory inputs from GCs and inhibition from the MLI. Each GC receives four MF synapses, randomly selected from the different synapse types according to their experimentally characterized frequency of occurrence30. The synaptic inputs to the GCs and their firing rates are given by:

| 8 |

where the granule cell membrane time constant τg = 10ms. In the above equation, K is a set of four indices, randomly drawn from all MF. We require that at least one MF per GC belongs to groups 1, 2 or 5, as observed experimentally30. The gain and threshold are set individually for each GC i as explained below.

MLI activity is assumed to represent the average rate of the GC population, thus allowing each GC to have a net excitatory or inhibitory effect depending on the difference between the MLI-PC inhibitory weight and the respective GC-PC excitatory weight:

| 9 |

The synaptic weights between the ith GC and the PC and between the MLI and PC were defined as and , respectively. The total synaptic input to the PC is thus given by

| 10 |

is an input that maintains the spontaneous firing of the PC at 40 Hz.

Finally, the PC firing rate is given by

| 11 |

In Fig. 1, the GC-PC weights were drawn from an exponential distribution with mean equal to 1. To decrease or increase the ratio of the average excitatory to inhibitory weight, in Figs. 1c and 1d we set and respectively. The full CC model and the reduced model (described below) were numerically integrated using the Euler method with step size 0.5 ms.

GC Threshold and gain adjustment

Changing the statistics of the MF firing rate distributions changes the fraction of active GCs at any given time and the average GC firing rates. To avoid the confounding impact that co-varying these quantities has on learning performance when comparing different MF parameter sets, we adjusted GC thresholds, and gains such that, at steady state, the fraction of active GCs and the average GC firing rates were identical for all MF parameter choices. Specifically, we drew 1000 random MF patterns from the respective firing rate distributions, and we calculated the steady inputs values of the synaptic dynamics as follows:

| 12 |

| 13 |

| 14 |

With these, we obtained, for each GC, the distribution of steady-state inputs and firing rates:

| 15 |

We then adjusted and for each GC to maintain an average steady-state GC firing rate of 5 Hz for all patterns. The lifetime sparsity of each GC was set to 0.2, which is within the range of experimental observations84,85. Throughout the article, this adjustment was carried out every time we changed synaptic parameters (Fig. 5), the parameters of the MF firing rate distributions (Fig. 4) or the MF to synapse connectivity (Fig. 5).

Supervised learning rule

Purkinje cell pauses associated with eyelid conditioning acquisition were generated by adjusting JE,i using a supervised learning rule. The target PC firing rate was set as a Dirac pulse in which the PC rate is zero in the time bin around following the start of the CS.:

| 16 |

where in the time bin around and otherwise. We quantify the deviation of the PC firing rate from the target rate by the least squares loss E that is to be minimized during learning:

| 17 |

is the time interval after CS onset (at ) during which we require the PC to follow the target signal and is a time interval before CS onset during which the PC should fire at its spontaneous rate. denotes the deviation between the target and the actual PC output at time t. is a factor that we use to increase the sensitivity of the loss E function to the target time, and is given by:

| 18 |

In all main figures, we used and .

GC-PC weights were modified during learning using gradient descent to reduce the error E at each step of the learning algorithm:

| 19 |

Here, is a learning rate. For our simulations, we modified this basic rule in two ways. Firstly, similar to ref. 75, we explicitly simulated a climbing fiber (CF) rate, cf, that is modulated by the error signal according to

| 20 |

where is the spontaneous CF rate and β a proportionality factor. The CF rate was then used to update the synaptic weight according to the following equation:

| 21 |

where we also set when a learning iteration resulted in a negative weight. As the CF rate is required to be positive or zero, this formulation limits the error information transmitted to the PC compared to the simple gradient rule. This learning rule yields synaptic long-term depression when CF and GC are simultaneously active and long-term potentiation when GCs are active alone, consistent with experimental data on GC-PC synaptic plasticity59.

Furthermore, recent experimental findings suggest that the temporal properties of GC-PC plasticity rules are tuned to compensate for the typical delays expected for error information arriving in the cerebellar cortex78. Here, we did not explicitly model CF error information delays, and for the sake of simplicity, directly modeled the timing of PC activity to show that the GC basis set is sufficient to generate an appropriately timed PC pause.

To increase the learning speed, we added a Nesterov acceleration scheme to Eq. (21)86, introducing a momentum term to the gradient, i.e. weight updates made during a given iteration of the algorithm depended on the previous iteration. The implementation we chose additionally features an adaptive reset of the momentum term, improving convergence properties86. This addition is for practical convenience and does not reflect biological mechanisms.

For the weight learning, we subsampled the simulated GC rates by a factor of 10 and set η = 0.0025, and the initial distribution of weights to for all . For all eyelid response learning simulations, we chose (Figs. 2, 4, 5).

Error measure of learned Purkinje cell pause

We defined the error between the PC pause and the (see Fig. 4, S3, S4 and S5) in the following way:

| 22 |

The first term depends on the amplitude of the PC pause relative to baseline firing, yielding a small error when the amplitude goes to zero. The second term corresponds to the normalized width of the PC pause. Finally, the third term is the normalized deviation of the pause’s minimum from the target time, . To increase the importance of this term, we scaled it by a factor 5. The error measure in Figs. S4 and S5 is the sum of over all tested delays.

Reduced CC model

The reduced synaptic model included only two synapse types. We also neglected facilitation and desensitization, yielding constant release probabilities and constant normalized quantal size:

| 23 |

We obtain for the vesicle pool dynamics:

| 24 |

and the total synaptic weight becomes

| 25 |

Here the index denotes membership in the driver or supporter category. The synaptic currents of the reduced model are computed as in the full model. Each GC receives exactly two driver and two supporter MF inputs with random and pairwise distinct identities. To eliminate any non-synaptic dynamics from the reduced model, we removed the GC membrane time constant yielding GC dynamics that follow the synaptic input instantaneously:

| 26 |

Finally, GC threshold and gain adjustments were carried out similarly to the full CCMSTP where instead of Eqs. (12) and (14) we used Eq. (23).

Synaptic parameters of the reduced model

The parameters of the reduced model were set to create two synapse types that capture the essence of the experimentally observed synaptic behavior: a strong and fast driver synapse, and a weak and slow supporter synapse. All synaptic parameters of the model used in Figs. 3, 4 and 6 are summarized in Table 3.

Table 3.

Synaptic parameters used in reduced model

| Drivers | Supporters | |

|---|---|---|

| 3.5 | 4 | |

| 14 | 6 | |

| 0.8 | 0.4 | |

| 0.6 | 0.2 | |

| [ms] | 2000 | 2000 |

| [ms] | 20 | 20 |

| 0.6 | 0.6 | |

| occurrence | 50% | 50% |

In Fig. 5, firing rates and release probabilities were randomly drawn from uniform distributions. In detail, the release probabilities of the slow pool, , were drawn from distributions with a lower and upper bound of 0.1 and 0.9, respectively, (Fig. 5a, d, g, and h), and the corresponding release probabilities of the fast pool were calculated according to , keeping them strictly lower. The lower and upper bounds of the distribution of firing rates used in panels a and d were 5 Hz and 270 Hz, resulting in firing rate standard deviations of Hz for the two-groups case (Fig. 5a) and Hz for the five groups case (Fig. 5d). The bounds of the distributions in panels g and h were chosen to match the average group firing rates equal to those in panel d and firing rate standard deviations that increased with the group index, i.e. Hz for groups 1 to 5, respectively. Finally, the sizes of the slow vesicle pool were fixed at and the size of the fast vesicle pools were set to decrease with the group index, i.e. for the two-groups case, and for the five groups case. Finally, the desired rank correlation between identities and MF identities was achieved by creating a Gaussian copula reflecting their statistical dependency and reordering the marginal and MF distributions accordingly.

Derivation of and

In the reduced model, we derived an analytical solution to the synaptic current driving a GC in response to the CS. Since the equations describing slow and fast vesicle pool dynamics are formally very similar, we describe the derivation for a single slow pool only. Additionally, we suppress all indices for the sake of readability. We assume that the MF rate switches instantaneously from to at time . Integration of equations (Eq. (24)) from to t yields:

| 27 |

Here, and denote the steady-state values of x before (preCS) and after (CS) the firing rate switch. They are given by

| 28 |

with

| 29 |

Equation (27) defines the synaptic time constant that governs the speed of transition from a steady-state value before the CS to a steady-state value during the CS:

| 30 |

This equation is similar to one derived previously55,87. The total synaptic current per unit time for a single pool during the CS is given by

| 31 |

Combining Eqs. (27) and(31) we obtain

| 32 |

Thus, the transient amplitude for a single vesicle pool is

| 33 |

For a single synapse, the total transient amplitude is the sum of the individual fast pool and slow pool transients:

| 34 |

To generate the surface plots in Fig. 4 and Fig S3 we generated 105 firing rates from the driver and supporter MF rate distributions, respectively, and used Equations (30), (33) and (34) to calculate the corresponding values of the and . From these, the plots of the joint and distribution and the marginal distributions were generated using a two- or one-dimensional kernel density estimator, respectively88. Note that, formally, is maximal when . In that case, however, there is no synaptic transmission as . When plotting the joint - distribution in Fig. 4 and Fig S3, we therefore omitted time constants and transient amplitudes corresponding to .

Bayesian estimation of time intervals

To learn the mapping between tm and te, we presented CCMSTP with variable intervals drawn from various prior distributions (ts) subjected to measurement noise. The interval was introduced as a tonic input to MFs, similar to the CS in the eyelid simulations. The onset of this tonic input caused an abrupt switch of the MF input rates that persisted over the course of a trial. For each iteration of our learning algorithm, we generated target signals sampled randomly from one of five different uniform prior distributions: 25–150 ms, 50–200 ms, 100–300 ms, 200–400 ms, 300–500 ms. Learning was carried out separately for each interval and for 12000 iterations. We found that to achieve the correct biases for the two longest intervals, we had to introduce a higher CF baseline firing rate, Hz. The other learning parameters were kept the same as in the eyelid learning simulations.

In keeping with ref. 33, we modeled the DN neuron as an integrator, whose rate was calculated according to

| 35 |

where the is the weight of the inhibitory PC-DN synapse and is an external excitatory input to DN. It was set equal to the average PC firing rate during the interval period to ensure that excitation and inhibition onto the DN are of comparable size. For simplicity, we set .

In order to map the DN rate to a time axis (Fig. 6f, j), we rescaled every individual DN output curve according to:

| 36 |

where and are the maximum and minimum of the respective prior interval and and are the maximum and minimum values of the DN firing rate. Since the transformation described in Eq. (36) is linear, the essential features exhibited by the DN firing rate (i.e. its biases) are preserved.

To show how the theoretical Bayesian least squares (BLS) interval estimate can be obtained, we follow the reasoning from ref. 33. It is assumed that to estimate a time-interval, ts, subjects perform a noisy measurement, tm, according to:

| 37 |

Note that the standard deviation of the estimate of tm increases with the length of the interval ts with proportionality factor wweber, which is the weber fraction. Given the prior distribution of time intervals, , the Bayesian estimate of ts given tm is:

| 38 |

The BLS estimate is the expected value of the previous expression:

| 39 |

We performed a least squares fit of the BLS model to the CCMSTP outputs (from all five interval distributions simultaneously) with wweber as a single free parameter.

Recurrent Golgi cell inhibition

To probe the effect of recurrent inhibition in the reduced CCMSTP, we added one Golgi cell (GoC) that received excitatory inputs from all GCs and formed inhibitory synapses onto all GCs. For simplicity, we assumed that the GoC fires with a rate equal to the average GC firing rate, similarly to the MLI, and that all GoC to GC synapses have identical weights, Jgoc:

| 40 |

The above equations imply that, in this configuration, the GoC acts as an activity-dependent GC threshold.

To ensure that the overall GC activity level in the reduced CCMSTP with GoC inhibition is comparable to the case without, we require the same criterion as above: an average GC rate of 5 Hz and a fraction of activated GCs of 0.2 in steady state. Since the average GC input now depends on the average GC firing rate itself, manual adjustment of GC thresholds, , and gains, , carried out as above, is not feasible.

Instead, a steady-state solution of the set of Eq. (40) satisfying our requirements has to be found numerically. We first set up the CC network without the GoC and adjusted GC thresholds, , and gains, , according to the procedure described above. Note that in the reduced model, due to every GC receiving the same combination of inputs (i.e. 2 supporter and two driver inputs), both and are similar across GCs. We thus made the additional simplification of setting and for all GCs. We then reduced GC thresholds by 10% and introduced the GoC.

To obtain the average steady-state GC firing rate we assumed that the synaptic currents of a single GC are normally distributed across MF input patterns or, equivalently, across GCs. Mean and variance of the GC inputs are:

| 41 |

We can then express the average GC firing rate in the limit as:

| 42 |

where . The fraction of active GCs can be written as:

| 43 |

We can now impose that

| 44 |

and find a self-consistent solution of Eqs. (41), (42), and (43) by adjusting the parameters and . To do so we used the hybrid numerical root-finder from the GNU scientific library89 with default step size.

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

Supplementary information

Acknowledgements

A.B. thanks Gianluigi Mongillo and Zuzanna Piwkowska Zvonkine for helpful discussions. We thank the DiGregorio Lab for feedback on this manuscript. This work is supported by the Institut Pasteur, Centre National de la Recherche Scientifique, Fondation pour la Recherche Médicale (FRM EQU202003010555), Fondation pour l’Audition (FPA-RD-2018-8), BioPsy Laboratory of Excellence, and the Agence Nationale de la Recherche (ANR-17-CE16-0019, and ANR-18-CE16-0018, ANR-19-CE16 0019-02, ANR-21-CE16-0036-01), which were awarded to the laboratory of DAD.

Author contributions

All simulations and analyses were performed by A.B. A.B., M.W., M.J., and D.A.D. conceived the project and wrote the manuscript.

Data availability

No experimental data were generated in this study.

Code availability

Figures were generated with Matlab (R2019b) and python (3.8). All simulations were performed with C++11 using the GNU scientific library (2.6)89 and the armadillo library (11.0.1)90. The code is available on the following GitHub repository: https://github.com/alessandrobarri/cerebellar_cortex_input_STP.

Competing interests

The authors declare no competing interests.

Footnotes

Peer review information Nature Communications thanks the anonymous reviewer(s) for their contribution to the peer review of this work. Peer reviewer reports are available.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

A. Barri, Email: alessandrobarri@gmail.com

D. A. DiGregorio, Email: david.digregorio@pasteur.fr

Supplementary information

The online version contains supplementary material available at 10.1038/s41467-022-35395-y.

References

- 1.Broome BM, Jayaraman V, Laurent G. Encoding and decoding of overlapping odor sequences. Neuron. 2006;51:467–482. doi: 10.1016/j.neuron.2006.07.018. [DOI] [PubMed] [Google Scholar]

- 2.Crowe DA, Averbeck BB, Chafee MV, Georgopoulos AP. Dynamics of parietal neural activity during spatial cognitive processing. Neuron. 2005;47:885–891. doi: 10.1016/j.neuron.2005.08.005. [DOI] [PubMed] [Google Scholar]

- 3.Harvey CD, Coen P, Tank DW. Choice-specific sequences in parietal cortex during a virtual-navigation decision task. Nature. 2012;484:62–68. doi: 10.1038/nature10918. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Sauerbrei BA, et al. Cortical pattern generation during dexterous movement is input-driven. Nature. 2020;577:386–391. doi: 10.1038/s41586-019-1869-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Zhou S, Masmanidis SC, Buonomano DV. Neural sequences as an optimal dynamical regime for the readout of time. Neuron. 2020;108:651–658.e5. doi: 10.1016/j.neuron.2020.08.020. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Bright IM, et al. A temporal record of the past with a spectrum of time constants in the monkey entorhinal cortex. Proc. Natl Acad. Sci. 2020;117:20274–20283. doi: 10.1073/pnas.1917197117. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.MacDonald CJ, Lepage KQ, Eden UT, Eichenbaum H. Hippocampal “time cells” bridge the gap in memory for discontiguous events. Neuron. 2011;71:737–749. doi: 10.1016/j.neuron.2011.07.012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Pastalkova E, Itskov V, Amarasingham A, Buzsaki G. Internally generated cell assembly sequences in the rat hippocampus. Science. 2008;321:1322–1327. doi: 10.1126/science.1159775. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Long MA, Jin DZ, Fee MS. Support for a synaptic chain model of neuronal sequence generation. Nature. 2010;468:394–399. doi: 10.1038/nature09514. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Kennedy A, et al. A temporal basis for predicting the sensory consequences of motor commands in an electric fish. Nat. Neurosci. 2014;17:416–422. doi: 10.1038/nn.3650. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Laje R, Buonomano DV. Robust timing and motor patterns by taming chaos in recurrent neural networks. Nat. Neurosci. 2013;16:925–933. doi: 10.1038/nn.3405. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Yamazaki T, Tanaka S. The cerebellum as a liquid state machine. Neural Netw. 2007;20:290–297. doi: 10.1016/j.neunet.2007.04.004. [DOI] [PubMed] [Google Scholar]

- 13.Toyoizumi T, Abbott LF. Beyond the edge of chaos: amplification and temporal integration by recurrent networks in the chaotic regime. Phys. Rev. E: Stat. Nonlin. Soft Matter Phys. 2011;84:051908. doi: 10.1103/PhysRevE.84.051908. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Dittman JS, Kreitzer AC, Regehr WG. Interplay between facilitation, depression, and residual calcium at three presynaptic terminals. J. Neurosci. 2000;20:1374–1385. doi: 10.1523/JNEUROSCI.20-04-01374.2000. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Abbott LF, Regehr WG. Synaptic computation. Nature. 2004;431:796–803. doi: 10.1038/nature03010. [DOI] [PubMed] [Google Scholar]

- 16.Abbott LF, Varela JA, Sen K, Nelson SB. Synaptic depression and cortical gain control. Science. 1997;275:220–224. doi: 10.1126/science.275.5297.221. [DOI] [PubMed] [Google Scholar]

- 17.Rothman JS, Cathala L, Steuber V, Silver RA. Synaptic depression enables neuronal gain control. Nature. 2009;457:1015–1018. doi: 10.1038/nature07604. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Buonomano DV, Merzenich MM. Temporal information transformed into a spatial code by a neural network with realistic properties. Science. 1995;267:1028–1030. doi: 10.1126/science.7863330. [DOI] [PubMed] [Google Scholar]

- 19.Mongillo G, Barak O, Tsodyks M. Synaptic theory of working memory. Science. 2008;319:1543–1546. doi: 10.1126/science.1150769. [DOI] [PubMed] [Google Scholar]