Abstract

Purpose

To evaluate the diagnostic accuracy of machine learning (ML) techniques applied to radiomic features extracted from OCT and OCT angiography (OCTA) images for diabetes mellitus (DM), diabetic retinopathy (DR), and referable DR (R-DR) diagnosis.

Design

Cross-sectional analysis of a retinal image dataset from a previous prospective OCTA study (ClinicalTrials.gov NCT03422965).

Participants

Patients with type 1 DM and controls included in the progenitor study.

Methods

Radiomic features were extracted from fundus retinographies, OCT, and OCTA images of each study eye. Logistic regression, linear discriminant analysis, support vector classifier (SVC)-linear, SVC-radial basis function, and random forest models were created to evaluate the diagnostic accuracy of each image type for DM, DR, and R-DR diagnosis.

Main Outcome Measures

Mean and standard deviation of the area under the receiver operating characteristic curve (AUC) for each ML model and for each individual and combined image type.

Results

A dataset of 726 eyes (439 individuals) was included. For DM diagnosis, the greatest AUC (mean, standard deviation) was observed for OCT (0.82, 0.03). For DR detection, the greatest AUC was observed for OCTA (0.77, 0.03), especially for the 3 × 3 mm superficial capillary plexus OCTA scan (0.76, 0.04). For R-DR diagnosis, the greatest AUC was observed for OCTA (0.87, 0.12) and the 3 × 3 mm deep capillary plexus OCTA scan (0.86, 0.08). The addition of clinical variables (age, sex, etc.) improved the AUC of most models for DM, DR, and R-DR diagnosis. The performance of the models was similar with unilateral and bilateral eye image datasets.

Conclusions

Radiomics extracted from OCT and OCTA images allow identification of patients with DM, DR, and R-DR using standard ML classifiers. OCT was the best test for DM diagnosis and OCTA for DR and R-DR diagnosis, and the addition of clinical variables improved most models. This pioneering study demonstrates that radiomics-based ML techniques applied to OCT and OCTA images may be an option for DR screening in patients with type 1 DM.

Financial Disclosure(s)

Proprietary or commercial disclosure may be found after the references.

Keywords: Artificial intelligence, Diabetic retinopathy, Machine learning, OCT angiography, Radiomics

Abbreviations and Acronyms: AI, artificial intelligence; AUC, area under the curve; DCP, deep capillary plexus; DM, diabetes mellitus; DR, diabetic retinopathy; FR, fundus retinographies; LDA, linear discriminant analysis; LR, logistic regression; ML, machine learning; OCTA, OCT angiography; RF, random forest; R-DR, referable DR; rbf, radial basis function; SCP, superficial capillary plexus; SVC, support vector classifier

Artificial intelligence (AI) applications in ophthalmology have grown exponentially in the last decade.1, 2, 3, 4 Artificial intelligence algorithms are used for multiple tasks, such as image recognition, image processing,5 or clinical data association, and allow characterization of patient profiles, providing predictions of disease progression,6 predictions of treatment response,7 and associations with systemic data of interest.8 In the computer vision and image recognition field, the main applications have focused on the retina subspecialty, in particular on the retinal complications of diabetes mellitus (DM), such as diabetic retinopathy (DR) or diabetic macular edema, as well as on age-related macular degeneration and retinopathy of prematurity.9, 10, 11, 12

Fundus retinography (FR) has been the most common type of retinal image employed in these analyses, frequently using large datasets from existing DR screening programs.13, 14, 15, 16, 17 Recently, the diagnostic potential of AI applied to OCT images has also been investigated.3 Although most previous work has leveraged FR or OCT images, few efforts have exploited the rich, granular data afforded by OCT angiography (OCTA) images,18,19 because large preexisting datasets are scarce. OCT angiography is a recently developed, noninvasive retinal imaging technique that depicts the perifoveal vascular network with an unprecedented level of resolution, allowing objective quantification of parameters such as vessel density or areas of flow impairment.20,21 Because this technique allows direct in vivo visualization of the microvascular circulation, it is reasonable to expect that it may detect subtle microvascular changes better than other retinal imaging techniques, especially in vascular diseases such as DM.

Radiomics is a methodology that refers to the extraction and analysis of a large number of quantitative features from medical images using computer vision and image processing techniques.22 The main interest of this approach is that these mathematical features may reveal disease characteristics beyond the capacity of human perception, which can offer clinicians additional support for decision-making in diagnostic and therapeutic scenarios.23 Furthermore, replacing an image by its radiomic features enables the use of more standard classification models. These techniques have been applied in multiple medical imaging areas, such as tumor phenotyping in brain,24 breast,25 lung,26 and prostate27 cancers, surgical planning,28 and treatment response prediction,29,30 mainly using magnetic resonance imaging and computed tomography image datasets. In DM, radiomics has been applied to abdominal computed tomography scans to assess the risk of DM,31 for early detection of diabetic kidney disease,32 and to evaluate pancreatic lesions, including DM.33 In ophthalmology, recent reports have investigated the potential of radiomics to predict the durability of intravitreal treatments in retinal vein occlusion and diabetic macular edema using widefield fluorescein angiography34,35 and OCT images,36 and for diagnostic purposes in myopic maculopathy using FR images.37 To date, we are not aware of any study investigating the potential of radiomics applied to retinal images for DM or DR diagnosis.

The aim of this pioneering study was to evaluate the potential of radiomics techniques for DM and DR diagnostic classification, applied to a dataset of OCT and OCTA images from a previous prospective trial.38 Radiomic features were extracted from retinal images, and a standard set of classification models was trained to evaluate the diagnostic accuracy of each image type, individually and in combination, to identify the optimal strategy for DM, DR, and referable DR (R-DR) diagnosis. Secondary aims were to evaluate the influence of adding clinical data, to explore the use of unilateral and bilateral image datasets for these predictions, and to further study the external validity of our findings in future studies.

Methods

Dataset Description

This study was approved by the Hospital Clinic of Barcelona institutional review board (HCB/2021/0350) and adhered to the Declaration of Helsinki. The retinal image dataset was collected in a prospective OCTA trial (ClinicalTrials.gov NCT03422965) whose study protocol is described elsewhere.38 Written informed consent was obtained from all participants. A total of 593 individuals (1186 eyes) with retinal images and ocular and systemic data were recruited: 478 patients with type 1 DM (n = 956 eyes) and 115 healthy controls (n = 230 eyes). Systemic, ocular, and retinal image quality exclusion criteria were applied as described elsewhere,39,40,41 and 439 individuals (n = 726 eyes) with a complete battery of all retinal images constituted the primary dataset for analysis. To evaluate the influence of a potential bilaterality bias, a secondary analysis was conducted by randomly selecting only 1 eye per patient, which included 439 patients (n = 439 eyes).
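The secondary unilateral analysis keeps one randomly chosen eye per patient. A minimal pandas sketch of that selection is shown below, assuming a hypothetical eye-level table with `patient_id` and `eye` columns; the study's actual data layout and random seed are not reported here.

```python
import pandas as pd

# Hypothetical eye-level table: one row per eye; some patients contribute 2 eyes.
eyes = pd.DataFrame({
    "patient_id": [1, 1, 2, 3, 3, 4],
    "eye": ["OD", "OS", "OD", "OD", "OS", "OS"],
})

# Randomly keep exactly 1 eye per patient; the seed (42) is an arbitrary choice.
one_eye = eyes.groupby("patient_id").sample(n=1, random_state=42)
print(one_eye.sort_values("patient_id"))
```

Fixing `random_state` makes the unilateral subset reproducible, so every model in the secondary analysis sees the same eyes.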

Retinal Images Characteristics

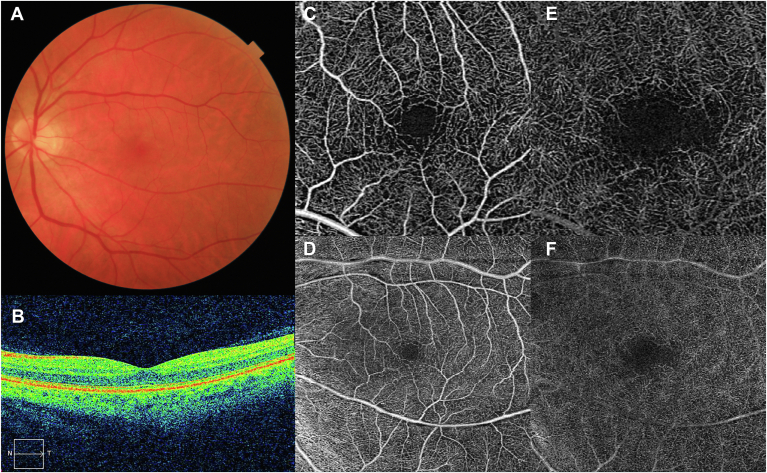

The battery of retinal images captured for each study eye included FR, OCT, and OCTA images (Fig 1). Fundus retinography images were obtained with a 45° field of view (Topcon DRI-Triton, Topcon Corp). OCT images consisted of the central horizontal B-scan centered on the fovea, obtained with a 6 × 6 mm macular cube (512 × 128 pixels) scanning protocol (Cirrus 5000, Carl Zeiss Meditec). OCT angiography scanning protocols included 3 × 3 mm and 6 × 6 mm OCTA macular cubes. For both scan sizes, 2-dimensional en face OCTA images of the superficial capillary plexus (SCP) and deep capillary plexus (DCP), automatically segmented by the built-in software (Angioplex, Zeiss), were analyzed. OCT and OCTA images with artifacts or a signal strength index < 7 were excluded from analysis.

Figure 1.

Retinal images collected for each individual eye included in the study dataset. A, Fundus retinography (FR); B, Structural OCT macular scan; C, OCT angiography (OCTA) 3 × 3 mm superficial capillary plexus (SCP); D, OCTA 3 × 3 mm deep capillary plexus (DCP); E, OCTA 6 × 6 mm SCP; F, OCTA 6 × 6 mm DCP. (FR: Topcon DRI-Triton, Topcon Corp; OCT and OCTA: Cirrus 5000, Carl Zeiss Meditec).

Radiomics and Clinical Features

Two types of data were analyzed in this study: the clinical data collected during medical examination and the radiomic features derived from each type of retinal image.

- Clinical data: systemic data included age, sex, body mass index, smoking status, and DM duration, as described elsewhere.38 No additional ocular data (e.g., axial length, refractive status, or visual acuity) were included in this study.

- Radiomics data: radiomic features were extracted from all images of each study eye: FR, OCT, OCTA 3 × 3 mm SCP, OCTA 3 × 3 mm DCP, OCTA 6 × 6 mm SCP, and OCTA 6 × 6 mm DCP. An additional category combining all OCTA image subtypes (“OCTA - All”) was created by averaging each radiomic feature across the OCTA images of each eye. A total of 91 radiomic features related to the statistical distribution of each individual image were extracted, such as the 10th percentile, 90th percentile, energy, interquartile range, kurtosis, maximum, mean, mean absolute deviation, median, minimum, range, robust mean absolute deviation, robust mean squared, root mean squared, skewness, total energy, and variance.
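The named statistics are first-order (intensity histogram) descriptors. As an illustration only, the NumPy sketch below computes several of them for a single 2D image and averages them across images in the spirit of the "OCTA - All" category. The definitions loosely follow common radiomics conventions (for example, the first-order features documented for PyRadiomics) with no intensity shift and a pixel area of 1; these settings and the helper names `first_order_features` and `octa_all_features` are assumptions, not the study's extraction pipeline.

```python
import numpy as np


def first_order_features(image):
    """Illustrative first-order (intensity histogram) features of a 2D image.

    Assumed conventions: no intensity shift, pixel area of 1. These are not
    the study's exact extraction settings.
    """
    x = np.asarray(image, dtype=float).ravel()
    mean = x.mean()
    centered = x - mean
    m2 = np.mean(centered ** 2)  # population variance
    p10, p25, p75, p90 = np.percentile(x, [10, 25, 75, 90])
    # Robust MAD uses only intensities between the 10th and 90th percentiles.
    robust = x[(x >= p10) & (x <= p90)]
    energy = np.sum(x ** 2)
    return {
        "10Percentile": p10,
        "90Percentile": p90,
        "Energy": energy,
        "TotalEnergy": energy * 1.0,  # energy x pixel area (assumed to be 1)
        "InterquartileRange": p75 - p25,
        "Kurtosis": np.mean(centered ** 4) / m2 ** 2 if m2 > 0 else 0.0,
        "Maximum": x.max(),
        "Mean": mean,
        "MeanAbsoluteDeviation": np.mean(np.abs(centered)),
        "Median": np.median(x),
        "Minimum": x.min(),
        "Range": x.max() - x.min(),
        "RobustMeanAbsoluteDeviation": np.mean(np.abs(robust - robust.mean())),
        "RootMeanSquared": np.sqrt(np.mean(x ** 2)),
        "Skewness": np.mean(centered ** 3) / m2 ** 1.5 if m2 > 0 else 0.0,
        "Variance": m2,
    }


def octa_all_features(octa_images):
    """Hypothetical 'OCTA - All' helper: average each feature over one eye's OCTA images."""
    per_image = [first_order_features(img) for img in octa_images]
    return {k: float(np.mean([f[k] for f in per_image])) for k in per_image[0]}


# Toy 2 x 5 "image" with intensities 1..10 (not real OCTA data).
img = np.arange(1, 11, dtype=float).reshape(2, 5)
feats = first_order_features(img)
print(feats["Mean"], feats["Energy"], feats["Variance"])  # 5.5 385.0 8.25
```

Because each image collapses to a fixed-length feature vector, images of different sizes (3 × 3 mm and 6 × 6 mm scans) can be compared or averaged feature by feature.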

Missing values were imputed, one-hot encoding was applied to categorical data, and data were normalized (mean = 0, standard deviation = 1).
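A minimal scikit-learn sketch of these preprocessing steps follows. It assumes hypothetical column names and median (numeric) or most-frequent (categorical) imputation, since the imputation strategy is not specified in the text; standardizing only the numeric columns is one common choice, not the study's confirmed setup.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

numeric_cols = ["age", "bmi", "dm_duration"]  # hypothetical names
categorical_cols = ["sex", "smoking"]         # hypothetical names

numeric_pipe = Pipeline([
    ("impute", SimpleImputer(strategy="median")),  # assumed strategy
    ("scale", StandardScaler()),                   # mean 0, SD 1
])
categorical_pipe = Pipeline([
    ("impute", SimpleImputer(strategy="most_frequent")),
    ("onehot", OneHotEncoder(handle_unknown="ignore")),
])
preprocess = ColumnTransformer(
    [("num", numeric_pipe, numeric_cols), ("cat", categorical_pipe, categorical_cols)],
    sparse_threshold=0.0,  # always return a dense array
)

# Toy clinical table with missing values (not study data).
df = pd.DataFrame({
    "age": [40.0, np.nan, 30.0, 50.0],
    "bmi": [22.0, 25.0, 27.0, 24.0],
    "dm_duration": [0.0, 10.0, 20.0, 30.0],
    "sex": ["F", "M", "F", "M"],
    "smoking": ["never", "current", np.nan, "never"],
}).astype({"sex": object, "smoking": object})

X = preprocess.fit_transform(df)
print(X.shape)  # 3 scaled numeric columns + 2 sex + 2 smoking indicator columns
```

Keeping these steps inside a pipeline means they can be refit on each cross-validation training fold, so imputation medians and scaling statistics never leak from the test fold.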

Machine Learning Models

A set of standard machine learning (ML) and related statistical techniques was used for classification. Logistic regression (LR), linear discriminant analysis (LDA), support vector classifiers (SVCs) with linear (SVC-linear) and radial basis function (SVC-rbf) kernels, and random forest (RF) models were selected as statistical (LR and LDA) and ML (SVC-linear, SVC-rbf, and RF) classifiers based on their performance and adaptability to different settings. For each classification problem, model, and feature subset, the dataset was split into training, validation, and test sets using cross-validation, as described below.
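The five classifier families can be instantiated in scikit-learn as sketched below. Hyperparameters are library defaults or arbitrary choices rather than the study's tuned values, the data are synthetic, and the single stratified 5-fold loop is a simplification of the double cross-validation described in the next section.

```python
from sklearn.datasets import make_classification
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# The five model families named in the text; settings are assumptions.
models = {
    "LR": LogisticRegression(max_iter=1000),
    "LDA": LinearDiscriminantAnalysis(),
    "SVC-linear": SVC(kernel="linear"),
    "SVC-rbf": SVC(kernel="rbf"),
    "RF": RandomForestClassifier(n_estimators=200, random_state=0),
}

# Synthetic stand-in for a radiomic feature matrix (unrelated to study data).
X, y = make_classification(n_samples=300, n_features=20, n_informative=5, random_state=0)
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

results = {}
for name, clf in models.items():
    pipe = make_pipeline(StandardScaler(), clf)
    # The "roc_auc" scorer can use SVC.decision_function, so probability=True is not needed.
    aucs = cross_val_score(pipe, X, y, cv=cv, scoring="roc_auc")
    results[name] = (aucs.mean(), aucs.std())
    print(f"{name}: AUC {aucs.mean():.2f} ({aucs.std():.2f})")
```

Reporting the mean and standard deviation of the per-fold AUCs mirrors the "AUC (SD)" format used in the tables.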

Feature Selection, Model Optimization, and Performance

Feature selection combined a mutual information (MI) ranking of each individual feature (tenfold cross-validation) with a wrapper backward elimination process starting from the 60 features with the highest MI. This process aimed to identify a feature subset that was both parsimonious and predictive, together with the most appropriate model parameters. A 5 × 4 double (nested) cross-validation process was used to train, validate, and test each feature subset and to select the model parameters at each step of the backward elimination procedure. For the resulting models, the area under the curve (AUC) was computed for each double cross-validation split, and receiver operating characteristic (ROC) curves were constructed to present model performance graphically.
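A simplified sketch of MI ranking followed by backward elimination inside a double (nested) cross-validation is given below. It rests on stated assumptions: MI is computed once per outer training split (not with tenfold cross-validation), elimination stops once every single-feature removal lowers the mean inner-fold AUC, hyperparameters are not tuned, and the outer and inner fold counts (5 and 4) are an assumed reading of the design. The function names are hypothetical.

```python
import numpy as np
from sklearn.base import clone
from sklearn.datasets import make_classification
from sklearn.feature_selection import mutual_info_classif
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler


def backward_elimination(model, X, y, features, inner_cv):
    """Greedily drop features while the mean inner-fold AUC does not decrease."""
    current = list(features)
    best_auc = cross_val_score(model, X[:, current], y, cv=inner_cv, scoring="roc_auc").mean()
    while len(current) > 1:
        # Score every subset that removes exactly one feature.
        trials = []
        for f in current:
            subset = [g for g in current if g != f]
            auc = cross_val_score(model, X[:, subset], y, cv=inner_cv, scoring="roc_auc").mean()
            trials.append((auc, subset))
        auc, subset = max(trials, key=lambda t: t[0])
        if auc < best_auc:
            break  # every removal hurts: keep the current subset
        best_auc, current = auc, subset
    return current


def nested_cv_auc(model, X, y, top_k=60, outer_splits=5, inner_splits=4, seed=0):
    outer = StratifiedKFold(outer_splits, shuffle=True, random_state=seed)
    inner = StratifiedKFold(inner_splits, shuffle=True, random_state=seed)
    aucs = []
    for train, test in outer.split(X, y):
        # Rank features by MI using the outer training split only.
        mi = mutual_info_classif(X[train], y[train], random_state=seed)
        ranked = np.argsort(mi)[::-1][:top_k]
        selected = backward_elimination(model, X[train], y[train], ranked, inner)
        fitted = clone(model).fit(X[train][:, selected], y[train])
        scores = fitted.predict_proba(X[test][:, selected])[:, 1]
        aucs.append(roc_auc_score(y[test], scores))
    return float(np.mean(aucs)), float(np.std(aucs))


# Synthetic data; top_k is reduced from 60 because there are only 15 features.
X, y = make_classification(n_samples=200, n_features=15, n_informative=4, random_state=0)
model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
mean_auc, sd_auc = nested_cv_auc(model, X, y, top_k=6)
print(f"AUC {mean_auc:.2f} ({sd_auc:.2f})")
```

Because ranking and elimination see only the outer training split, each outer test fold stays untouched by feature selection, which keeps the per-split AUCs free of selection leakage.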

Results

The demographics and baseline characteristics of the included patients and eyes in the 3 classification tasks are described in Table 1. The diagnostic accuracy of the ML models constructed with the radiomic features extracted from each retinal imaging technique was quantified by their AUC and presented graphically as box-and-whisker plots and receiver operating characteristic (ROC) curves, as detailed below.

Table 1.

Demographics and Baseline Characteristics of Study Patients and Eyes for All Classification Tasks: DM Diagnosis, DR Diagnosis, and R-DR Diagnosis

| Variable | Statistics | Controls | Type 1 DM | P Value (DM) | No DR | DR | P Value (DR) | Non R-DR | R-DR | P Value (R-DR) |
|---|---|---|---|---|---|---|---|---|---|---|
| General characteristics | | n = 65 | n = 374 | | n = 249 | n = 125 | | n = 356 | n = 18 | |
| Age (y) | Mean (SD) | 43.81 (14.53) | 38.85 (11.50) | < 0.05∗ | 37.62 (11.78) | 41.29 (10.53) | < 0.05∗ | 38.56 (11.47) | 44.47 (10.88) | < 0.05 |
| | Median (Q1, Q3) | 40.30 (31.10, 57.10) | 37.65 (29.83, 46.68) | | 36.40 (27.40, 45.40) | 40.50 (34.30, 48.30) | | 37.40 (29.40, 46.23) | 43.95 (36.67, 52.00) | |
| Sex, female | n (%) | 41 (63.1) | 195 (52.1) | 0.134 | 130 (52.2) | 65 (52.0) | 0.943 | 189 (53.1) | 6 (33.3) | 0.146 |
| Smoking habits (overall P values: 0.069; 0.305) | | | | | | | | | | |
| Nonsmoker | n (%) | 43 (70.5) | 242 (64.9) | 0.477 | 166 (66.9) | 76 (60.8) | 0.291 | 231 (65.1) | 11 (61.1) | 0.802 |
| Current smoker | n (%) | 5 (8.2) | 74 (19.8) | < 0.05 | 49 (19.8) | 25 (20.0) | 0.934 | 73 (20.6) | 1 (5.6) | 0.220 |
| Ex-smoker | n (%) | 13 (21.3) | 57 (15.3) | 0.318 | 33 (13.3) | 24 (19.2) | 0.180 | 51 (14.4) | 6 (33.3) | < 0.05 |
| Hypertension | n (%) | 6 (9.8) | 32 (8.6) | 0.933 | 17 (6.8) | 15 (12.0) | 0.136 | 29 (8.1) | 3 (16.7) | 0.192 |
| BMI (kg/m²) | Mean (SD) | 23.66 (3.77) | 24.68 (3.65) | < 0.05∗ | 24.40 (3.55) | 25.21 (3.79) | < 0.05∗ | 24.57 (3.62) | 26.73 (3.81) | < 0.05 |
| | Median (Q1, Q3) | 22.89 (21.05, 25.46) | 24.16 (22.16, 26.84) | | 23.81 (21.89, 26.48) | 24.69 (22.50, 27.28) | | 24.09 (22.12, 26.60) | 26.90 (23.55, 29.68) | |
| DM characteristics | | | | | | | | | | |
| DM duration (y) | Mean (SD) | 0.00 (0.00) | 19.62 (10.70) | – | 16.27 (9.96) | 26.31 (8.85) | < 0.05∗ | 19.03 (10.46) | 31.90 (8.14) | < 0.05 |
| | Median (Q1, Q3) | 0.00 (0.00, 0.00) | 19.25 (10.50, 27.05) | | 15.40 (8.28, 22.20) | 25.95 (20.38, 32.90) | | 19.00 (10.20, 26.30) | 30.10 (25.50, 36.00) | |
| HbA1c (%) (2017) | Mean (SD) | 5.30 (0.33) | 7.43 (0.977) | < 0.05∗ | 7.37 (1.01) | 7.56 (0.89) | < 0.05∗ | 7.43 (0.99) | 7.57 (0.74) | 0.301 |
| | Median (Q1, Q3) | 5.30 (5.10, 5.50) | 7.40 (6.80, 7.90) | | 7.30 (6.70, 7.80) | 7.40 (6.98, 8.10) | | 7.40 (6.80, 7.90) | 7.60 (6.90, 8.20) | |
| Ocular measures | | n = 102 | n = 621 | | n = 436 | n = 185 | | n = 594 | n = 27 | |
| Visual acuity | Mean (SD) | 0.96 (0.07) | 0.96 (0.37) | < 0.05∗ | 0.97 (0.44) | 0.94 (0.08) | 0.155 | 0.97 (0.38) | 0.91 (0.12) | < 0.05 |
| | Median (Q1, Q3) | 1.00 (0.95, 1.00) | 0.95 (0.95, 1.00) | | 0.95 (0.95, 1.00) | 0.95 (0.95, 1.00) | | 0.95 (0.95, 1.00) | 0.95 (0.90, 0.97) | |
| Axial length (mm) | Mean (SD) | 23.64 (1.08) | 23.62 (1.22) | 0.798 | 23.74 (1.18) | 23.36 (1.26) | < 0.05∗ | 23.66 (1.22) | 22.89 (0.86) | < 0.05 |
| | Median (Q1, Q3) | 23.53 (22.94, 24.37) | 23.52 (22.82, 24.39) | | 23.65 (22.90, 24.54) | 23.22 (22.68, 23.91) | | 23.54 (22.84, 24.42) | 23.19 (22.43, 23.38) | |
| OCTA | | n = 103 | n = 623 | | n = 438 | n = 185 | | n = 596 | n = 27 | |
| Vessel density (mm⁻¹) | Mean (SD) | 20.87 (1.25) | 20.14 (1.67) | < 0.05∗ | 20.52 (1.52) | 19.24 (1.67) | < 0.05∗ | 20.22 (1.64) | 18.30 (1.26) | < 0.05 |
| | Median (Q1, Q3) | 21.00 (20.20, 21.80) | 20.30 (19.10, 21.40) | | 20.70 (19.50, 21.60) | 19.40 (18.20, 20.30) | | 20.40 (19.20, 21.40) | 18.40 (17.60, 18.95) | |
| Perfusion density | Mean (SD) | 0.374 (0.022) | 0.367 (0.026) | < 0.05∗ | 0.371 (0.025) | 0.358 (0.026) | < 0.05∗ | 0.368 (0.025) | 0.348 (0.021) | < 0.05 |
| | Median (Q1, Q3) | 0.377 (0.365, 0.389) | 0.370 (0.352, 0.385) | | 0.375 (0.355, 0.390) | 0.363 (0.343, 0.377) | | 0.371 (0.353, 0.386) | 0.350 (0.340, 0.363) | |
| FAZ area (mm²) | Mean (SD) | 0.240 (0.080) | 0.239 (0.098) | 0.360 | 0.236 (0.096) | 0.249 (0.102) | 0.125 | 0.237 (0.097) | 0.290 (0.104) | < 0.05 |
| | Median (Q1, Q3) | 0.250 (0.190, 0.290) | 0.230 (0.170, 0.300) | | 0.230 (0.170, 0.290) | 0.240 (0.180, 0.310) | | 0.230 (0.170, 0.292) | 0.300 (0.255, 0.370) | |
| FAZ perimeter (mm) | Mean (SD) | 2.070 (0.433) | 2.095 (0.488) | 0.999 | 2.055 (0.465) | 2.189 (0.528) | < 0.05∗ | 2.077 (0.479) | 2.479 (0.535) | < 0.05 |
| | Median (Q1, Q3) | 2.150 (1.860, 2.350) | 2.090 (1.790, 2.415) | | 2.060 (1.790, 2.370) | 2.190 (1.820, 2.570) | | 2.070 (1.788, 2.400) | 2.540 (2.220, 2.815) | |
| FAZ circularity | Mean (SD) | 0.674 (0.080) | 0.655 (0.087) | < 0.05∗ | 0.666 (0.081) | 0.626 (0.094) | < 0.05∗ | 0.658 (0.085) | 0.581 (0.092) | < 0.05 |
| | Median (Q1, Q3) | 0.680 (0.630, 0.730) | 0.660 (0.600, 0.720) | | 0.670 (0.620, 0.728) | 0.640 (0.560, 0.700) | | 0.670 (0.610, 0.720) | 0.560 (0.525, 0.650) | |
| Structural OCT | | n = 103 | n = 621 | | n = 436 | n = 185 | | n = 594 | n = 27 | |
| Central macular thickness (μm) | Mean (SD) | 258.5 (18.9) | 262.0 (20.5) | < 0.05∗ | 261.1 (20.0) | 264.3 (21.6) | 0.076 | 261.7 (20.22) | 270.3 (26.2) | 0.065 |
| | Median (Q1, Q3) | 256.0 (245.0, 272.0) | 262.0 (250.0, 276.0) | | 261.0 (249.7, 275.0) | 266.0 (250.0, 279.0) | | 262.0 (250.0, 276.0) | 272.0 (251.0, 286.0) | |
| Macular volume (mm³) | Mean (SD) | 10.28 (0.49) | 10.31 (0.69) | 0.773 | 10.31 (0.61) | 10.30 (0.86) | 0.103 | 10.304 (0.695) | 10.430 (0.538) | 0.143 |
| | Median (Q1, Q3) | 10.20 (9.90, 10.60) | 10.30 (10.00, 10.60) | | 10.30 (10.00, 10.60) | 10.20 (9.90, 10.50) | | 10.30 (10.0, 10.5) | 10.40 (10.10, 10.75) | |
| Macular thickness average (μm) | Mean (SD) | 285.31 (13.77) | 284.54 (18.28) | 0.874 | 284.60 (20.14) | 284.40 (12.95) | 0.108 | 284.323 (18.397) | 289.259 (15.009) | 0.169 |
| | Median (Q1, Q3) | 284.0 (276.0, 294.5) | 285.0 (278.0, 293.0) | | 286.0 (278.0, 293.0) | 284.0 (276.0, 292.0) | | 285.000 (277.250, 292.000) | 288.000 (279.000, 298.500) | |

BMI = body mass index; DM = diabetes mellitus; DR = diabetic retinopathy; FAZ = foveal avascular zone; HbA1c = glycated hemoglobin; OCTA = OCT angiography; Q1 = quartile 1; Q3 = quartile 3; R-DR = referable DR; SD = standard deviation. ∗P < 0.05.

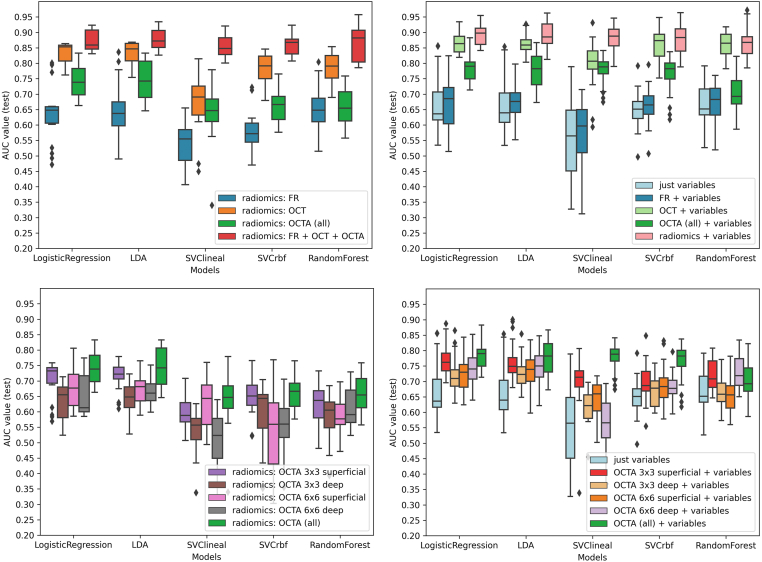

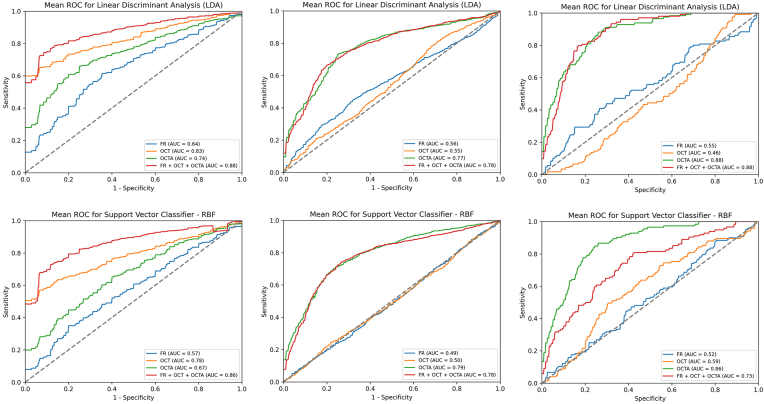

Classification Task 1: DM Diagnosis

The performance of the models for classifying patients with DM is shown as AUC box plots (Fig 2) and receiver operating characteristic curves (Fig 3). OCT showed the best single-image performance for predicting a DM diagnosis in all the models (Fig 3, left column). In LR, the highest mean AUC was observed for OCT (AUC, 0.82; standard deviation, 0.03) compared with FR (AUC, 0.63, 0.08) or OCTA (AUC, 0.74, 0.04) (Fig 2, top-left). Interestingly, the combination of all retinal imaging techniques showed a slightly higher mean AUC (0.87, 0.03). These results were consistent in the LDA, SVC-linear, and SVC-rbf models.

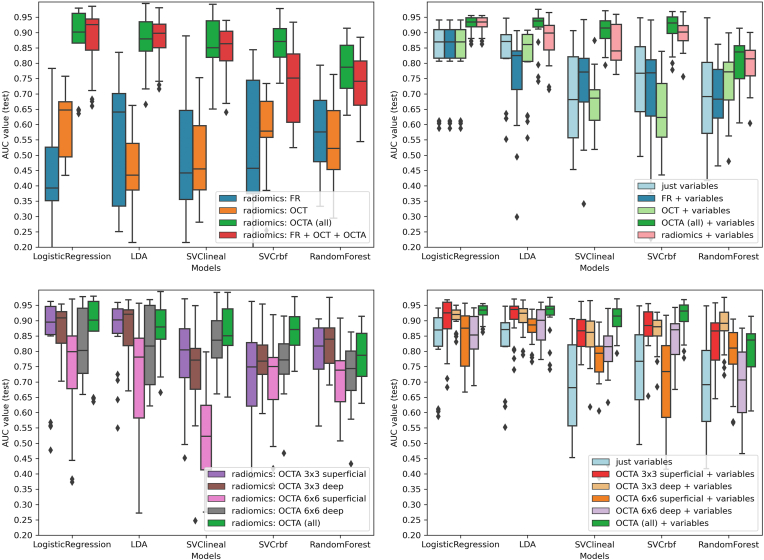

Figure 2.

Diabetes mellitus (DM) diagnosis: machine learning model performance for DM classification based on radiomic features of retinal images. Box-and-whisker plots representing area under the curve (AUC) values for each retinal image type. Top-left: radiomics extracted from each retinal image type. Top-right: radiomics extracted from each retinal image type + clinical variables. Bottom-left: radiomics extracted from each OCT angiography (OCTA) type. Bottom-right: radiomics extracted from each OCTA type + clinical variables. FR = fundus retinography; LDA = linear discriminant analysis; rbf = radial basis function; SVC = support vector classifier.

Figure 3.

Receiver operating characteristic (ROC) curves of model performance for diabetes mellitus (DM), diabetic retinopathy (DR), and referable DR (R-DR) diagnosis. Left column: ROC curves for DM diagnosis, detailed by model. Central column: ROC curves for DR diagnosis, detailed by model. Right column: ROC curves for R-DR diagnosis, detailed by model. Top row: example of a linear model (linear discriminant analysis [LDA]). Bottom row: example of a nonlinear model (support vector classifier with radial basis function kernel [rbf]). AUC = area under the curve; FR = fundus retinography; OCTA = OCT angiography.

Classification Task 2: DR Diagnosis

For DR diagnosis, the best AUC performance was observed for OCTA (Fig 3, central column, and Fig 4). In the LR model, OCTA achieved the best performance (AUC, 0.77, 0.03) compared with FR (0.56, 0.09) and OCT (0.54, 0.03), and similar results were consistently achieved with the LDA, SVC-linear, SVC-rbf, and RF models (Fig 4, top-left). The combination of all techniques did not achieve superior performance (AUC, 0.77, 0.02). Among the OCTA scanning protocols, the best performance was observed for the 3 × 3 mm SCP (AUC, 0.76, 0.04) compared with the 3 × 3 mm DCP (AUC, 0.73, 0.03), 6 × 6 mm SCP (AUC, 0.69, 0.06), or 6 × 6 mm DCP (AUC, 0.74, 0.04). The combination of all OCTA scanning protocol images slightly improved performance in all the models (Fig 4, bottom-left).

Figure 4.

Diabetic retinopathy (DR) diagnosis: machine learning model performance for DR classification based on radiomic features of retinal images. Box-and-whisker plots representing area under the curve (AUC) values for each retinal image type. Top-left: radiomics extracted from each retinal image type. Top-right: radiomics extracted from each retinal image type + clinical variables. Bottom-left: radiomics extracted from each OCT angiography (OCTA) type. Bottom-right: radiomics extracted from each OCTA type + clinical variables. FR = fundus retinography; LDA = linear discriminant analysis; rbf = radial basis function; SVC = support vector classifier.

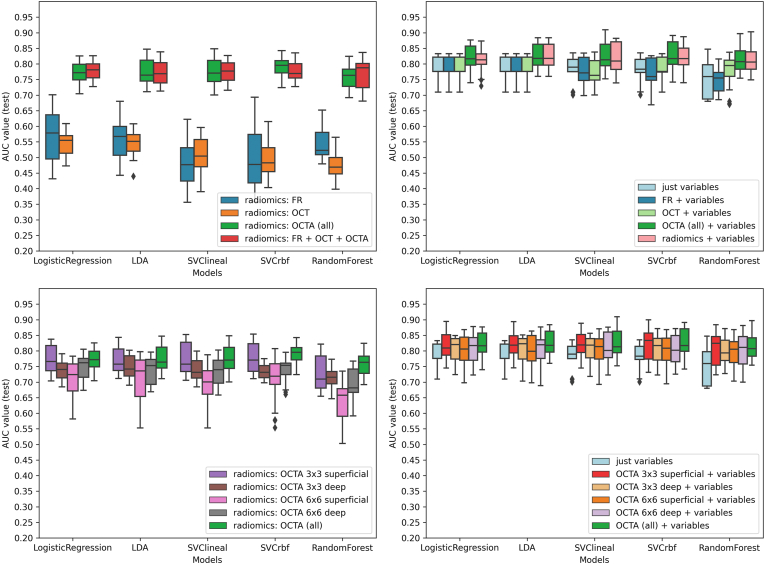

Classification Task 3: Referable DR Diagnosis

For R-DR diagnosis, OCTA images again achieved the best AUC performance (Fig 3, right column, and Fig 5). In the LR model, OCTA achieved better performance (AUC, 0.87, 0.12) than FR (0.42, 0.18) and OCT (0.60, 0.11), with similar results achieved with the LDA, SVC-linear, SVC-rbf, and RF models (Fig 5, top-left). The combination of all retinal imaging techniques did not achieve superior performance (AUC, 0.87, 0.10). Among the OCTA scanning protocols, the best performance was observed for the 3 × 3 mm DCP (AUC, 0.86, 0.08) compared with the 3 × 3 mm SCP (AUC, 0.83, 0.15), 6 × 6 mm SCP (AUC, 0.74, 0.18), or 6 × 6 mm DCP (AUC, 0.82, 0.11). The combination of all OCTA scanning protocol images did not improve model performance (Fig 5, bottom-left).

Figure 5.

Referable diabetic retinopathy (R-DR) diagnosis: machine learning model performance for R-DR classification based on radiomic features of retinal images. Box-and-whisker plots representing area under the curve (AUC) values for each retinal image type. Top-left: radiomics extracted from each retinal image type. Top-right: radiomics extracted from each retinal image type + clinical variables. Bottom-left: radiomics extracted from each OCT angiography (OCTA) type. Bottom-right: radiomics extracted from each OCTA type + clinical variables. FR = fundus retinography; LDA = linear discriminant analysis; rbf = radial basis function; SVC = support vector classifier.

Impact of Adding Clinical Variables to the Predictive Models

The impact of the clinical variables (detailed in the Methods section) on the models' predictions for the 3 tasks was also analyzed, as presented in Tables 2 and 3 and Figures 2 through 5. For DM diagnosis, the addition of clinical variables improved the performance of the models for all the individual and combined retinal image techniques (Fig 2, top-right) as well as for the OCTA scanning protocols (Fig 2, bottom-right). Consistently, similar improvements were observed for DR and R-DR diagnosis with all images (Figs 4 and 5, top-right) and OCTA scanning protocols (Figs 4 and 5, bottom-right).

Table 2.

Model Performance by Retinal Imaging Type and OCTA Scanning Protocol (n = 726)

| Task | Data | Model | FR | OCT | OCTA (All) | OCTA (3 × 3 SCP) | OCTA (3 × 3 DCP) | OCTA (6 × 6 SCP) | OCTA (6 × 6 DCP) | FR + OCT + OCTA† |
|---|---|---|---|---|---|---|---|---|---|---|
| DM diagnosis | Images | LR | 0.63 (0.08) | 0.82 (0.03)∗ | 0.74 (0.04) | 0.70 (0.06) | 0.63 (0.05) | 0.67 (0.06) | 0.65 (0.06) | 0.87 (0.03) |
| | | LDA | 0.64 (0.10) | 0.82 (0.04)∗ | 0.74 (0.06) | 0.71 (0.04) | 0.64 (0.05) | 0.67 (0.04) | 0.66 (0.04) | 0.87 (0.03) |
| | | SVC-linear | 0.53 (0.07) | 0.67 (0.08)∗ | 0.64 (0.09) | 0.59 (0.05) | 0.53 (0.06) | 0.62 (0.08) | 0.50 (0.08) | 0.85 (0.03) |
| | | SVC-rbf | 0.57 (0.06) | 0.78 (0.04)∗ | 0.66 (0.04) | 0.65 (0.05) | 0.59 (0.10) | 0.53 (0.13) | 0.55 (0.08) | 0.86 (0.03) |
| | | RF | 0.65 (0.07) | 0.78 (0.04)∗ | 0.66 (0.05) | 0.62 (0.06) | 0.58 (0.07) | 0.58 (0.05) | 0.61 (0.05) | 0.86 (0.05) |
| | + Clinical variables | LR | 0.67 (0.08) | 0.86 (0.03)∗ | 0.78 (0.04) | 0.77 (0.05) | 0.71 (0.06) | 0.72 (0.05) | 0.74 (0.05) | 0.89 (0.03) |
| | | LDA | 0.67 (0.06) | 0.86 (0.03)∗ | 0.77 (0.05) | 0.76 (0.06) | 0.73 (0.04) | 0.72 (0.05) | 0.75 (0.05) | 0.89 (0.04) |
| | | SVC-linear | 0.57 (0.09) | 0.79 (0.07)∗ | 0.77 (0.04) | 0.69 (0.09) | 0.61 (0.05) | 0.69 (0.09) | 0.56 (0.08) | 0.88 (0.03) |
| | | SVC-rbf | 0.66 (0.06) | 0.85 (0.05)∗ | 0.76 (0.06) | 0.69 (0.06) | 0.66 (0.04) | 0.69 (0.06) | 0.68 (0.04) | 0.87 (0.04) |
| | | RF | 0.67 (0.05) | 0.86 (0.03)∗ | 0.70 (0.06) | 0.72 (0.04) | 0.66 (0.05) | 0.65 (0.06) | 0.72 (0.05) | 0.87 (0.05) |
| DR diagnosis | Images | LR | 0.56 (0.09) | 0.54 (0.03) | 0.77 (0.03)∗ | 0.76 (0.04)∗ | 0.73 (0.03) | 0.69 (0.06) | 0.74 (0.04) | 0.77 (0.02) |
| | | LDA | 0.55 (0.07) | 0.54 (0.04) | 0.77 (0.04)∗ | 0.76 (0.04)∗ | 0.74 (0.03) | 0.70 (0.08) | 0.73 (0.04) | 0.77 (0.03) |
| | | SVC-linear | 0.47 (0.07) | 0.50 (0.05) | 0.77 (0.04)∗ | 0.77 (0.05)∗ | 0.74 (0.03) | 0.68 (0.06) | 0.73 (0.04) | 0.77 (0.03) |
| | | SVC-rbf | 0.49 (0.09) | 0.49 (0.05) | 0.78 (0.03)∗ | 0.77 (0.04)∗ | 0.73 (0.02) | 0.70 (0.07) | 0.73 (0.03) | 0.77 (0.03) |
| | | RF | 0.54 (0.04) | 0.47 (0.03) | 0.75 (0.03)∗ | 0.72 (0.05)∗ | 0.71 (0.03) | 0.63 (0.06) | 0.69 (0.05) | 0.76 (0.04) |
| | + Clinical variables | LR | 0.78 (0.04) | 0.78 (0.04) | 0.82 (0.04)∗ | 0.81 (0.04)∗ | 0.80 (0.03) | 0.79 (0.05) | 0.80 (0.05) | 0.81 (0.04) |
| | | LDA | 0.78 (0.04) | 0.78 (0.04) | 0.82 (0.03)∗ | 0.82 (0.04)∗ | 0.80 (0.04) | 0.79 (0.05) | 0.79 (0.05) | 0.82 (0.03) |
| | | SVC-linear | 0.77 (0.04) | 0.77 (0.04) | 0.82 (0.04)∗ | 0.81 (0.04)∗ | 0.80 (0.04) | 0.79 (0.05) | 0.80 (0.05) | 0.81 (0.05) |
| | | SVC-rbf | 0.77 (0.04) | 0.78 (0.04) | 0.82 (0.04)∗ | 0.81 (0.05)∗ | 0.80 (0.04) | 0.79 (0.05) | 0.80 (0.04) | 0.81 (0.04) |
| | | RF | 0.74 (0.03) | 0.77 (0.04) | 0.81 (0.04)∗ | 0.80 (0.05)∗ | 0.79 (0.04) | 0.79 (0.05) | 0.80 (0.06) | 0.81 (0.04) |
| Referable DR diagnosis | Images | LR | 0.42 (0.18) | 0.60 (0.11) | 0.87 (0.12)∗ | 0.83 (0.15) | 0.86 (0.08)∗ | 0.74 (0.18) | 0.82 (0.11) | 0.87 (0.10) |
| | | LDA | 0.55 (0.19) | 0.45 (0.11) | 0.87 (0.08)∗ | 0.86 (0.11) | 0.86 (0.10)∗ | 0.70 (0.20) | 0.80 (0.13) | 0.87 (0.08) |
| | | SVC-linear | 0.49 (0.19) | 0.49 (0.13) | 0.85 (0.10)∗ | 0.76 (0.14) | 0.73 (0.14) | 0.76 (0.14) | 0.83 (0.09) | 0.83 (0.08) |
| | | SVC-rbf | 0.52 (0.20) | 0.58 (0.11) | 0.73 (0.11)∗ | 0.70 (0.18) | 0.77 (0.09)∗ | 0.69 (0.14) | 0.77 (0.10) | 0.73 (0.13) |
| | | RF | 0.57 (0.12) | 0.53 (0.12) | 0.77 (0.08)∗ | 0.79 (0.08) | 0.83 (0.07)∗ | 0.71 (0.10) | 0.72 (0.10) | 0.73 (0.09) |
| | + Clinical variables | LR | 0.82 (0.11) | 0.82 (0.11) | 0.92 (0.02)∗ | 0.89 (0.08) | 0.90 (0.03)∗ | 0.84 (0.09) | 0.90 (0.03) | 0.92 (0.02) |
| | | LDA | 0.75 (0.14) | 0.81 (0.10) | 0.91 (0.06)∗ | 0.90 (0.06) | 0.90 (0.04)∗ | 0.87 (0.04) | 0.88 (0.06) | 0.87 (0.07) |
| | | SVC-linear | 0.74 (0.14) | 0.66 (0.08) | 0.90 (0.04)∗ | 0.86 (0.05)∗ | 0.84 (0.07) | 0.76 (0.10) | 0.79 (0.11) | 0.85 (0.06) |
| | | SVC-rbf | 0.70 (0.16) | 0.63 (0.11) | 0.91 (0.05)∗ | 0.88 (0.06)∗ | 0.86 (0.06) | 0.70 (0.13) | 0.83 (0.07) | 0.89 (0.04) |
| | | RF | 0.68 (0.10) | 0.74 (0.10) | 0.79 (0.08)∗ | 0.84 (0.08) | 0.87 (0.06)∗ | 0.78 (0.10) | 0.70 (0.10) | 0.79 (0.07) |

Model performance is described as mean area under the curve (AUC) (standard deviation).

DCP = deep capillary plexus; DM = diabetes mellitus; DR = diabetic retinopathy; FR = fundus retinography; LR = logistic regression; LDA = linear discriminant analysis; OCTA = OCT angiography; rbf = radial basis function; RF = random forest; SCP = superficial capillary plexus; SVC = support vector classifier.

∗The greatest AUCs by model and single image type.

†Combination of all retinal image techniques.

Table 3.

Influence of Bilaterality on Model Performance: Comparison Using 1 Eye per Patient (n = 439) or 2 Eyes per Patient (n = 726)

| Task | Data | Model | FR (1 eye) | FR (2 eyes) | OCT (1 eye) | OCT (2 eyes) | OCTA (All) (1 eye) | OCTA (All) (2 eyes) | FR + OCT + OCTA (1 eye) | FR + OCT + OCTA (2 eyes) |
|---|---|---|---|---|---|---|---|---|---|---|
| DM diagnosis | Images | LR | 0.56 (0.13) | 0.63 (0.08)∗ | 0.82 (0.04) | 0.82 (0.03) | 0.71 (0.06) | 0.74 (0.04)∗ | 0.85 (0.03) | 0.87 (0.03)∗ |
| | | LDA | 0.50 (0.09) | 0.64 (0.10)∗ | 0.81 (0.04) | 0.82 (0.04)∗ | 0.72 (0.07) | 0.74 (0.06)∗ | 0.80 (0.04) | 0.87 (0.03)∗ |
| | | SVC-linear | 0.51 (0.08) | 0.53 (0.07)∗ | 0.71 (0.07)∗ | 0.67 (0.08) | 0.62 (0.12) | 0.64 (0.09)∗ | 0.83 (0.03) | 0.85 (0.03)∗ |
| | | SVC-rbf | 0.49 (0.09) | 0.57 (0.06)∗ | 0.80 (0.04)∗ | 0.78 (0.04) | 0.64 (0.09) | 0.66 (0.04)∗ | 0.82 (0.03) | 0.86 (0.03)∗ |
| | | RF | 0.57 (0.09) | 0.65 (0.07)∗ | 0.78 (0.06) | 0.78 (0.04) | 0.62 (0.07) | 0.66 (0.05)∗ | 0.85 (0.03) | 0.86 (0.05)∗ |
| | + Clinical variables | LR | 0.67 (0.09) | 0.67 (0.08) | 0.87 (0.03)∗ | 0.86 (0.03) | 0.80 (0.06)∗ | 0.78 (0.04) | 0.89 (0.03) | 0.89 (0.03) |
| | | LDA | 0.65 (0.09) | 0.67 (0.06)∗ | 0.86 (0.03) | 0.86 (0.03) | 0.75 (0.08) | 0.77 (0.05)∗ | 0.88 (0.04) | 0.89 (0.04)∗ |
| | | SVC-linear | 0.49 (0.15) | 0.57 (0.09)∗ | 0.83 (0.04)∗ | 0.79 (0.07) | 0.77 (0.08) | 0.77 (0.04) | 0.88 (0.03) | 0.88 (0.03) |
| | | SVC-rbf | 0.61 (0.11) | 0.66 (0.06)∗ | 0.85 (0.03) | 0.85 (0.05) | 0.77 (0.08)∗ | 0.76 (0.06) | 0.88 (0.03)∗ | 0.87 (0.04) |
| | | RF | 0.69 (0.06)∗ | 0.67 (0.05) | 0.87 (0.05)∗ | 0.86 (0.03) | 0.72 (0.08)∗ | 0.70 (0.06) | 0.86 (0.05) | 0.87 (0.05)∗ |
| DR diagnosis | Images | LR | 0.54 (0.08) | 0.56 (0.09)∗ | 0.52 (0.08) | 0.54 (0.03)∗ | 0.75 (0.05) | 0.77 (0.03)∗ | 0.75 (0.04) | 0.77 (0.02)∗ |
| | | LDA | 0.58 (0.09)∗ | 0.55 (0.07) | 0.52 (0.08) | 0.54 (0.04)∗ | 0.74 (0.05) | 0.77 (0.04)∗ | 0.74 (0.05) | 0.77 (0.03)∗ |
| | | SVC-linear | 0.48 (0.08)∗ | 0.47 (0.07) | 0.52 (0.07)∗ | 0.50 (0.05) | 0.76 (0.05) | 0.77 (0.04)∗ | 0.74 (0.05) | 0.77 (0.03)∗ |
| | | SVC-rbf | 0.52 (0.11)∗ | 0.49 (0.09) | 0.52 (0.08)∗ | 0.49 (0.05) | 0.74 (0.06) | 0.78 (0.03)∗ | 0.75 (0.05) | 0.77 (0.03)∗ |
| | | RF | 0.55 (0.06)∗ | 0.54 (0.04) | 0.50 (0.07)∗ | 0.47 (0.03) | 0.72 (0.04) | 0.75 (0.03)∗ | 0.68 (0.04) | 0.76 (0.04)∗ |
| | + Clinical variables | LR | 0.76 (0.03) | 0.78 (0.04)∗ | 0.76 (0.03) | 0.78 (0.04)∗ | 0.80 (0.04) | 0.82 (0.04)∗ | 0.80 (0.04) | 0.81 (0.04)∗ |
| | | LDA | 0.76 (0.03) | 0.78 (0.04)∗ | 0.76 (0.03) | 0.78 (0.04)∗ | 0.80 (0.04) | 0.82 (0.03)∗ | 0.79 (0.03) | 0.82 (0.03)∗ |
| | | SVC-linear | 0.77 (0.02) | 0.77 (0.04) | 0.77 (0.02) | 0.77 (0.04) | 0.79 (0.04) | 0.82 (0.04)∗ | 0.79 (0.04) | 0.81 (0.05)∗ |
| | | SVC-rbf | 0.77 (0.02) | 0.77 (0.04) | 0.77 (0.02) | 0.78 (0.04)∗ | 0.80 (0.05) | 0.82 (0.04)∗ | 0.78 (0.03) | 0.81 (0.04)∗ |
| | | RF | 0.73 (0.03) | 0.74 (0.03)∗ | 0.76 (0.02) | 0.77 (0.04)∗ | 0.78 (0.04) | 0.81 (0.04)∗ | 0.78 (0.03) | 0.81 (0.04)∗ |
| Referable DR diagnosis | Images | LR | 0.41 (0.14) | 0.42 (0.18)∗ | 0.52 (0.11) | 0.60 (0.11)∗ | 0.87 (0.14) | 0.87 (0.12)∗ | 0.88 (0.12)∗ | 0.87 (0.10) |
| | | LDA | 0.39 (0.11) | 0.55 (0.19)∗ | 0.48 (0.10)∗ | 0.45 (0.11) | 0.90 (0.09)∗ | 0.87 (0.08) | 0.88 (0.11)∗ | 0.87 (0.08) |
| | | SVC-linear | 0.49 (0.20) | 0.49 (0.19)∗ | 0.47 (0.14) | 0.49 (0.13)∗ | 0.79 (0.20) | 0.85 (0.10)∗ | 0.81 (0.13) | 0.83 (0.08)∗ |
| | | SVC-rbf | 0.49 (0.15) | 0.52 (0.20)∗ | 0.52 (0.14) | 0.58 (0.11)∗ | 0.81 (0.12)∗ | 0.73 (0.11) | 0.82 (0.14)∗ | 0.73 (0.13) |
| | | RF | 0.59 (0.17)∗ | 0.57 (0.12) | 0.73 (0.13)∗ | 0.53 (0.12) | 0.80 (0.11)∗ | 0.77 (0.08) | 0.78 (0.13)∗ | 0.73 (0.09) |
| | + Clinical variables | LR | 0.82 (0.08)∗ | 0.82 (0.11) | 0.82 (0.10)∗ | 0.82 (0.11) | 0.90 (0.08) | 0.92 (0.02)∗ | 0.90 (0.09) | 0.92 (0.02)∗ |
| | | LDA | 0.81 (0.09)∗ | 0.75 (0.14) | 0.73 (0.11) | 0.81 (0.10)∗ | 0.91 (0.07)∗ | 0.91 (0.06) | 0.81 (0.11) | 0.87 (0.07)∗ |
| | | SVC-linear | 0.62 (0.24) | 0.74 (0.14)∗ | 0.52 (0.15) | 0.66 (0.08)∗ | 0.90 (0.07)∗ | 0.90 (0.04) | 0.89 (0.06)∗ | 0.85 (0.06) |
| | | SVC-rbf | 0.56 (0.25) | 0.70 (0.16)∗ | 0.69 (0.09)∗ | 0.63 (0.11) | 0.84 (0.11) | 0.91 (0.05)∗ | 0.86 (0.08) | 0.89 (0.04)∗ |
| | | RF | 0.74 (0.10)∗ | 0.68 (0.10) | 0.72 (0.14) | 0.74 (0.10)∗ | 0.80 (0.12)∗ | 0.79 (0.08) | 0.82 (0.09)∗ | 0.79 (0.07) |

DM = diabetes mellitus; DR = diabetic retinopathy; FR = fundus retinography; LR = logistic regression; LDA = linear discriminant analysis; OCTA = OCT angiography; rbf = radial basis function; RF = random forest; SVC = support vector classifier.

Model performance is described by mean AUC (standard deviation).

∗Greater AUC of the 1-eye and 2-eye cohorts (when they differ), for each model and image type.
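The table reports each model as mean AUC (standard deviation). As a minimal illustration of how such a summary can be produced, the sketch below computes the AUC as the Mann–Whitney statistic (the probability that a randomly chosen positive case scores higher than a randomly chosen negative case) and then summarizes it over resampling folds. The fold structure, the use of the sample SD, and the toy scores are assumptions for illustration only. This section does not state how the folds were built, and the numbers are not study data.

```python
from statistics import mean, stdev


def auc_mann_whitney(y_true, scores):
    """AUC as the probability that a random positive case scores higher than a
    random negative case (ties count as one half)."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def summarize_folds(folds):
    """Return (mean, sample SD) of per-fold AUCs, i.e. the 'mean (SD)' format."""
    aucs = [auc_mann_whitney(y, s) for y, s in folds]
    return mean(aucs), stdev(aucs)


# Hypothetical toy folds as (labels, classifier scores); not study data.
folds = [
    ([0, 0, 1, 1], [0.10, 0.40, 0.35, 0.80]),
    ([0, 1, 0, 1], [0.20, 0.90, 0.30, 0.70]),
    ([1, 0, 1, 0], [0.60, 0.50, 0.40, 0.20]),
]
m, sd = summarize_folds(folds)
print(f"{m:.2f} ({sd:.2f})")  # 0.83 (0.14)
```

The quadratic pairwise loop is kept for clarity; in practice a library routine such as scikit-learn's `roc_auc_score` computes the same quantity more efficiently.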

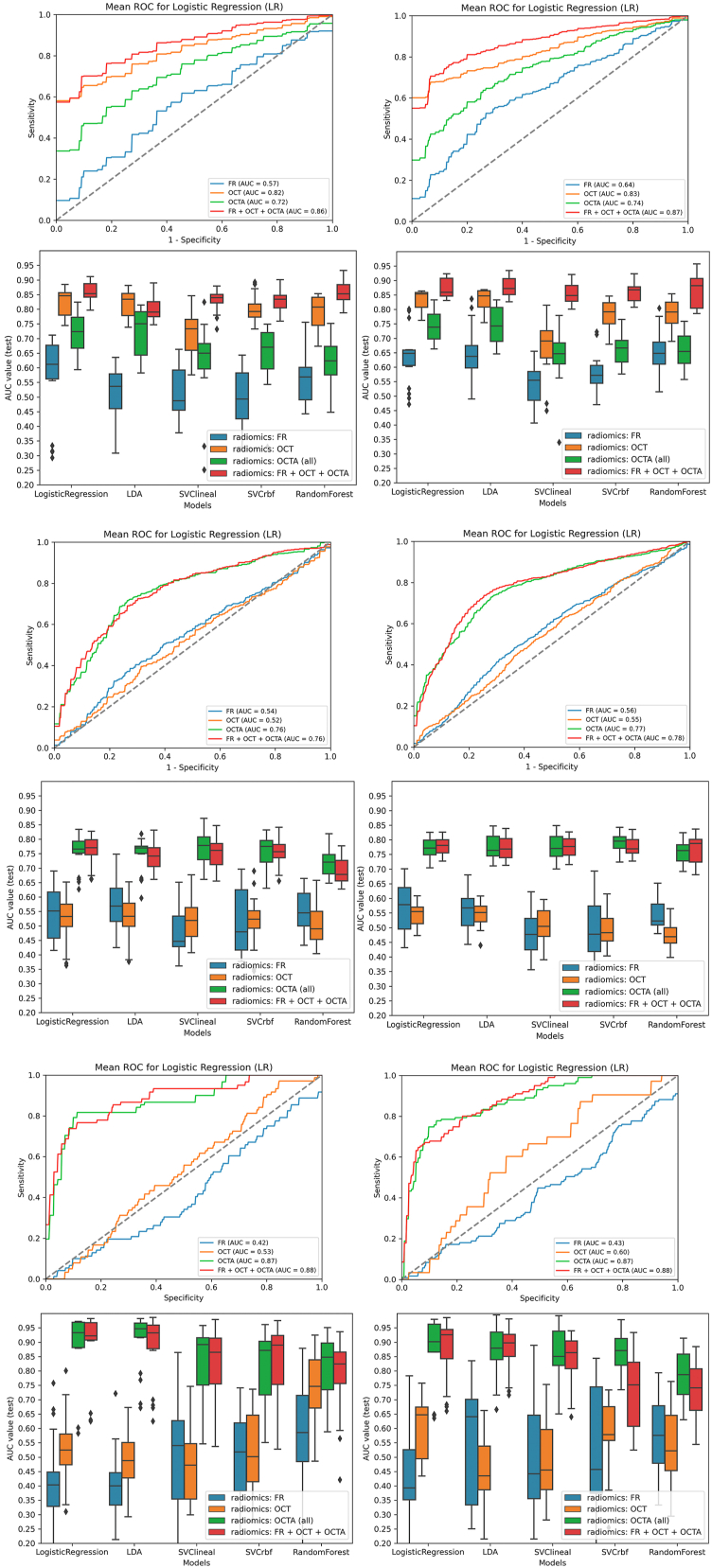

Influence of Unilateral versus Bilateral Image Datasets on Model Performance

For DM diagnosis, model predictions with bilateral image sets were not overall superior to those with unilateral image sets: 1 eye was favored for OCT, and 2 eyes for FR, OCTA, and the combination of images (Table 3, Fig 6). When clinical variables were included, these differences were reduced. For DR diagnosis, AUC values were greater with 1 eye for FR and OCT in most models, and with 2 eyes for OCTA and the combination of techniques in all ML models. For R-DR diagnosis, AUC values were similar for FR, OCT, OCTA, and the combination of techniques. The addition of clinical variables improved the performance of all individual and combined retinal image sets.
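The comparison above depends on how the unilateral and bilateral datasets are derived from the same eye-level records. A minimal sketch, assuming each record carries a `patient_id` and that the unilateral set keeps one randomly chosen eye per patient (a hypothetical selection rule; the rule used in the study is not described in this section):

```python
import random
from collections import defaultdict


def split_by_laterality(eyes, seed=0):
    """Return (unilateral, bilateral) datasets from per-eye records.

    `eyes` is a list of dicts with at least a 'patient_id' key.
    Bilateral keeps every eye; unilateral keeps one randomly chosen
    eye per patient (hypothetical selection rule).
    """
    rng = random.Random(seed)  # fixed seed so the selection is reproducible
    by_patient = defaultdict(list)
    for record in eyes:
        by_patient[record["patient_id"]].append(record)
    unilateral = [rng.choice(records) for records in by_patient.values()]
    bilateral = list(eyes)
    return unilateral, bilateral


# Hypothetical records: patients 1 and 3 contribute both eyes, patient 2 only one.
eyes = [
    {"patient_id": 1, "eye": "OD", "label": 1},
    {"patient_id": 1, "eye": "OS", "label": 1},
    {"patient_id": 2, "eye": "OD", "label": 0},
    {"patient_id": 3, "eye": "OD", "label": 0},
    {"patient_id": 3, "eye": "OS", "label": 0},
]
uni, bi = split_by_laterality(eyes)
print(len(uni), len(bi))  # 3 5
```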

Figure 6.

Comparison of model performance for diabetes mellitus (DM), diabetic retinopathy (DR), and referable DR (R-DR) diagnosis using unilateral and bilateral datasets. Left column: unilateral dataset (1 eye per patient). Right column: bilateral dataset (2 eyes per patient). Top-top: logistic regression (LR) receiver operating characteristic (ROC) curves for DM diagnosis for each retinal image type. Top-bottom: box-and-whisker plots of area under the curve (AUC) values for DM diagnosis for each model and retinal image type. Middle-top: LR ROC curves for DR diagnosis for each retinal image type. Middle-bottom: box-and-whisker plots of AUC values for DR diagnosis for each model and retinal image type. Bottom-top: LR ROC curves for R-DR diagnosis for each retinal image type. Bottom-bottom: box-and-whisker plots of AUC values for R-DR diagnosis for each model and retinal image type. FR = fundus retinography; LDA = linear discriminant analysis; OCTA = OCT angiography; rbf = radial basis function; RF = random forest; SVC = support vector classifier.

Discussion

This study demonstrates the diagnostic potential of ML classifiers applied to radiomic features extracted from OCT and OCTA images for DM, DR, and R-DR diagnosis. The ML models perform best with OCT images for DM diagnosis and with OCTA images for DR and R-DR diagnosis. Interestingly, the best OCTA scanning protocol to identify DR is the 3 × 3 mm SCP scan, whereas for R-DR it is the 3 × 3 mm DCP scan. The addition of clinical variables improves the performance of most ML models and retinal image types. This pioneering project is relevant because it shows that radiomics-based ML techniques may be applied to different types of retinal images as an effective method to identify DM and DR cases, a step toward the automatic detection of these conditions in larger-scale cohorts.

Radiomics is an emerging translational field of research. Its main appeal lies in its ability to extract mineable, multivariate quantitative data from medical images using computer vision techniques, with the potential to reveal disease characteristics beyond human perception.22 To the best of our knowledge, this is the first study to evaluate radiomics applied to OCTA images, a recently developed noninvasive retinal imaging technique, and also the first to assess this potential across a multimodal series of retinal imaging techniques for DM, DR, and R-DR diagnosis.
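As a concrete example of the kind of mineable quantitative data mentioned above, the sketch below computes a gray-level co-occurrence matrix (GLCM), a classic source of radiomic texture features, and three descriptors derived from it. It is an illustrative pure-Python sketch, not the study's feature-extraction pipeline: the feature families, gray-level discretization, and pixel offsets used in the study are not specified in this section, and the 4 × 4 image is a toy input.

```python
from collections import Counter


def glcm(image, levels, dx=1, dy=0):
    """Symmetric, normalized gray-level co-occurrence matrix for one pixel offset.

    `image` is a 2-D list of integer gray levels already quantized to 0..levels-1.
    """
    counts = Counter()
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                a, b = image[r][c], image[r2][c2]
                counts[(a, b)] += 1
                counts[(b, a)] += 1  # count both directions -> symmetric matrix
    total = sum(counts.values())
    if total == 0:
        raise ValueError("image has no pixel pairs for this offset")
    return [[counts[(i, j)] / total for j in range(levels)] for i in range(levels)]


def texture_features(p):
    """A few classic Haralick-style descriptors of a normalized GLCM `p`."""
    n = len(p)
    cells = [(i, j) for i in range(n) for j in range(n)]
    return {
        "contrast": sum(p[i][j] * (i - j) ** 2 for i, j in cells),
        "homogeneity": sum(p[i][j] / (1 + (i - j) ** 2) for i, j in cells),
        "asm": sum(p[i][j] ** 2 for i, j in cells),  # angular second moment
    }


# Toy 4x4 image with two gray levels: a vertical edge between columns 1 and 2.
image = [[0, 0, 1, 1] for _ in range(4)]
p = glcm(image, levels=2)  # horizontal neighbor offset (dx=1, dy=0)
feats = texture_features(p)
print({k: round(v, 3) for k, v in feats.items()})
# {'contrast': 0.333, 'homogeneity': 0.833, 'asm': 0.278}
```

Dedicated radiomics toolkits compute many more features over several offsets and directions; the homogeneity definition used here, 1/(1 + (i − j)²), is one of several variants in use.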

The results for DM diagnosis suggest that our radiomics-based approach can discriminate between controls and patients with DM, particularly in OCT images. Most AI studies in this field of ophthalmology have used existing image datasets from DR screening programs, which include patients with DM but no healthy controls. For this reason, AI for DM diagnosis has been applied to clinical data from nutritional surveys42 and hospital data,43 but no consistent data are available for retinal images for this task. The number of OCT scans performed daily in the community setting is growing exponentially, and our results suggest that this approach could potentially be implemented as a general screening tool for type 1 DM, the less frequent type of DM, in the general population. In this scenario, because only marginal improvements were observed with the combination of imaging techniques (AUC, 0.82 versus 0.87), the addition of clinical variables (AUC, 0.82 versus 0.86), or both (AUC, 0.82 versus 0.89), cost-benefit studies will be required to investigate whether these items should be included in future strategies for DM diagnosis in the general population.
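The comparisons above (combined imaging techniques, clinical variables, or both) require merging several feature sources into one input vector per case. The sketch below shows simple early (feature-level) fusion followed by column-wise z-score standardization. This is an assumption: the combination strategy used in the study is not described in this section, and the feature values, the modality names used as keys, and the 0/1 encoding of sex are hypothetical.

```python
from statistics import mean, pstdev


def fuse_features(modalities, clinical):
    """Early fusion: concatenate per-modality radiomic vectors, then clinical variables.

    Modalities are concatenated in sorted-name order so that columns line up
    across patients.
    """
    fused = []
    for name in sorted(modalities):
        fused.extend(modalities[name])
    fused.extend(clinical)
    return fused


def zscore_columns(rows):
    """Standardize each column to mean 0 and unit (population) SD; constant columns -> 0."""
    stats = [(mean(col), pstdev(col)) for col in zip(*rows)]
    return [
        [(x - m) / s if s > 0 else 0.0 for x, (m, s) in zip(row, stats)]
        for row in rows
    ]


# Hypothetical patients: two OCT features, one OCTA feature, then age and sex (0/1).
rows = [
    fuse_features({"OCTA": [3.0], "OCT": [0.2, 1.5]}, [34, 1]),
    fuse_features({"OCTA": [2.0], "OCT": [0.4, 1.1]}, [52, 0]),
]
print(rows[0])  # [0.2, 1.5, 3.0, 34, 1]
X = zscore_columns(rows)
```

In a cross-validated setting, the means and SDs used for standardization should be estimated on the training fold only and then applied to the test fold, to avoid information leakage.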

OCT angiography was the imaging technique that achieved the best performance for DR diagnosis, and it consistently showed superior performance in all models, particularly with the 3 × 3 mm SCP scanning protocol images. This is an important point because some previous studies have suggested that the DCP is affected earlier in DR development. Nevertheless, the SCP is less prone to projection artifacts, a factor that could explain, at least in part, the results observed. In contrast to DM diagnosis, our results for DR and R-DR diagnosis suggest that the combination of techniques does not improve model performance. Unfortunately, no previous studies have specifically evaluated radiomic features in OCTA datasets. To benchmark our results, we analyzed DR and R-DR diagnosis separately, because most previous ML studies of FR images from DR screening programs report detection rates only for R-DR cases.14,44 Future comparative studies should clarify where radiomics applied to OCTA images fits in the diagnostic algorithm for DR and R-DR detection.

With regard to technical considerations, model performance was broadly consistent between the bilateral and unilateral eye datasets. This is relevant because most AI studies in ophthalmology include both eyes of each patient, with a potential risk of correlation bias that could lead to model overfitting. We observed no differences for DM diagnosis and only slightly superior results for DR and R-DR diagnosis using bilateral eyes, especially in OCTA images. We believe this may be related to the greater number of images analyzed per eye because of the different scanning protocols employed. Also, in our dataset, linear models seemed to achieve better and more robust results, suggesting that these simpler models may be more appropriate for this type of classification task, possibly in relation to the data type or the number of instances evaluated.
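One common safeguard against the correlation bias mentioned above is to split data by patient rather than by eye, so that the two eyes of a patient never fall on opposite sides of a train/test split. The sketch below assumes an eye-level dataset indexed by a hypothetical `patient_ids` list and assigns patients to folds round-robin; it does not stratify by label, and this section does not state which splitting scheme the study used.

```python
def grouped_kfold(patient_ids, k=5):
    """Yield (train_idx, test_idx) pairs in which both eyes of a patient share a fold.

    `patient_ids[i]` is the patient of eye i. Patients are assigned to folds
    round-robin in order of first appearance (no label stratification).
    """
    patients = list(dict.fromkeys(patient_ids))  # unique, order-preserving
    if k < 2 or k > len(patients):
        raise ValueError("k must be between 2 and the number of patients")
    fold_of = {pid: n % k for n, pid in enumerate(patients)}
    for fold in range(k):
        test_idx = [i for i, pid in enumerate(patient_ids) if fold_of[pid] == fold]
        train_idx = [i for i, pid in enumerate(patient_ids) if fold_of[pid] != fold]
        yield train_idx, test_idx


# Hypothetical eye-level dataset: 9 eyes from 6 patients.
patient_ids = [1, 1, 2, 3, 3, 4, 5, 5, 6]
for train_idx, test_idx in grouped_kfold(patient_ids, k=3):
    print(test_idx)  # [0, 1, 5], then [2, 6, 7], then [3, 4, 8]
```

scikit-learn's `GroupKFold` (and `StratifiedGroupKFold`, which also balances class proportions) enforce the same patient-level constraint.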

This study has several strengths and limitations. First, the study cohort includes patients with type 1 DM, whereas most DR screening programs include only patients with type 2 DM or do not differentiate between the two types. The results of this study may therefore not be applicable to patients with type 2 DM, because multiple differences in age at presentation and in ocular and systemic comorbidities prevent this comparison. However, this also confers high internal validity, because to our knowledge it is the largest type 1 DM retinal imaging dataset in the literature.38, 39, 40 Second, the control and DM groups differed in some baseline characteristics, such as age, smoking status, and body mass index, which may have influenced the results. Third, for OCT images, only a single B scan centered on the fovea was used to extract the radiomic features, whereas each macular cube scan consists of 128 consecutive B scans; this could lead to under- or overestimation of the reported results. Fourth, the subgroup “OCTA all” included 4 images instead of 1; however, no superior results were observed compared with single 3 × 3 mm OCTA scans, suggesting that this may not have influenced the results. Finally, although most of the clinical variables included in the models are simple demographics (e.g., age and sex), adding this information may require data collection beyond the processing of retinal images, limiting the potential deployment of this strategy in the community setting.

In conclusion, this proof-of-concept study demonstrates that radiomic features extracted from OCT and OCTA images permit the identification of patients with DM and DR using a standard set of ML classifiers. For each task evaluated, we provide recommendations on the specific imaging tests (OCT for DM diagnosis and OCTA for DR and R-DR diagnosis) and protocols (OCTA 3 × 3 mm SCP and DCP scans) that yielded the best model performance. Moreover, the value of adding clinical data and the impact of using images from one or both eyes are also evaluated, to provide readers with relevant information for planning future strategies for each classification task. Given the expected increase in DM prevalence over the coming decades because of population aging, the scale of the clinical need, and the predicted economic cost of DM in the near future, efforts to find the optimal diagnostic tool should be a priority for health care systems. In this scenario, exploring the potential of AI combined with radiomics in state-of-the-art noninvasive retinal imaging techniques, such as OCT and OCTA, seems to be a promising avenue of research.

Manuscript no. XOPS-D-22-00152R1.

Footnotes

Disclosures:

All authors have completed and submitted the ICMJE disclosures form.

The authors made the following disclosures: A.V.: Grants – Spanish research grant (grant no.: PID2019-104551RB-I00).

J.Z.V.: Grant – Fundació La Marató de TV3, La Marató 2015, Diabetis i Obesitat (grant no.: 201633.10), Instituto de Salud Carlos III (grant nos.: PI18/00518, PI21/01384).

E.R.: Grant – Spanish research grant (grant no.: PID2019-104551RB-I00).

The other authors have no proprietary or commercial interest in any materials discussed in this article.

HUMAN SUBJECTS: Human subjects were included in this study. This study was approved by the Hospital Clinic of Barcelona institutional review board (HCB/2021/0350) and adhered to the Declaration of Helsinki. Written informed consent was obtained from all participants.

No animal subjects were used in this study.

Author Contributions:

Conception and design: Vellido, Romero, Zarranz-Ventura.

Data collection: Bernal-Morales, Alé-Chilet, Barraso, Marin-Martinez, Feu-Basilio, Rosinés-Fonoll, Hernandez, Vilá, Castro-Dominguez, Oliva, Vinagre, Ortega, Gimenez, Zarranz-Ventura.

Analysis and interpretation: Carrera-Escalé, Benali, Rathert, Martin-Pinardel, Bernal-Morales, Vinagre, Ortega, Gimenez, Vellido, Romero, Zarranz-Ventura.

Obtained funding: Zarranz-Ventura.

Overall responsibility: Carrera-Escalé, Ortega, Gimenez, Vellido, Romero, Zarranz-Ventura.

References

1. Topol E.J. High-performance medicine: the convergence of human and artificial intelligence. Nat Med. 2019;25:44–56. doi: 10.1038/s41591-018-0300-7.

2. Ting D.S.W., Pasquale L.R., Peng L., et al. Artificial intelligence and deep learning in ophthalmology. Br J Ophthalmol. 2019;103:167–175. doi: 10.1136/bjophthalmol-2018-313173.

3. De Fauw J., Ledsam J.R., Romera-Paredes B., et al. Clinically applicable deep learning for diagnosis and referral in retinal disease. Nat Med. 2018;24:1342–1350. doi: 10.1038/s41591-018-0107-6.

4. Zarranz-Ventura J., Bernal-Morales C., Saenz de Viteri M., et al. Artificial intelligence and ophthalmology: current status. Arch Soc Española Oftalmol (Engl ED) 2021;96:399–400. doi: 10.1016/j.oftale.2021.06.001.

5. Maloca P.M., Lee A.Y., De Carvalho E.R., et al. Validation of automated artificial intelligence segmentation of optical coherence tomography images. PLoS One. 2019;14 doi: 10.1371/journal.pone.0220063.

6. Yim J., Chopra R., Spitz T., et al. Predicting conversion to wet age-related macular degeneration using deep learning. Nat Med. 2020;26:892–899. doi: 10.1038/s41591-020-0867-7.

7. Bogunovic H., Waldstein S.M., Schlegl T., et al. Prediction of anti-VEGF treatment requirements in neovascular AMD using a machine learning approach. Invest Ophthalmol Vis Sci. 2017;58:3240–3248. doi: 10.1167/iovs.16-21053.

8. Wagner S.K., Fu D.J., Faes L., et al. Insights into systemic disease through retinal imaging-based oculomics. Transl Vis Sci Technol. 2020;9:6. doi: 10.1167/tvst.9.2.6.

9. Burlina P.M., Joshi N., Pekala M., et al. Automated grading of age-related macular degeneration from color fundus images using deep convolutional neural networks. JAMA Ophthalmol. 2017;135:1170–1176. doi: 10.1001/jamaophthalmol.2017.3782.

10. Wang Y., Zhang Y., Yao Z., et al. Machine learning based detection of age-related macular degeneration (AMD) and diabetic macular edema (DME) from optical coherence tomography (OCT) images. Biomed Opt Express. 2016;7:4928–4940. doi: 10.1364/BOE.7.004928.

11. ElTanboly A., Ismail M., Shalaby A., et al. A computer-aided diagnostic system for detecting diabetic retinopathy in optical coherence tomography images. Med Phys. 2017;44:914–923. doi: 10.1002/mp.12071.

12. Brown J.M., Campbell J.P., Beers A., et al. Automated diagnosis of plus disease in retinopathy of prematurity using deep convolutional neural networks. JAMA Ophthalmol. 2018;136:803–810. doi: 10.1001/jamaophthalmol.2018.1934.

13. Poplin R., Varadarajan A.V., Blumer K., et al. Prediction of cardiovascular risk factors from retinal fundus photographs via deep learning. Nat Biomed Eng. 2018;2:158–164. doi: 10.1038/s41551-018-0195-0.

14. Gulshan V., Peng L., Coram M., et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA. 2016;316:2402–2410. doi: 10.1001/jama.2016.17216.

15. Xie Y., Nguyen Q.D., Hamzah H., et al. Artificial intelligence for teleophthalmology-based diabetic retinopathy screening in a national programme: an economic analysis modelling study. Lancet Digit Health. 2020;2:e240–e249. doi: 10.1016/S2589-7500(20)30060-1.

16. van der Heijden A.A., Abramoff M.D., Verbraak F., et al. Validation of automated screening for referable diabetic retinopathy with the IDx-DR device in the Hoorn diabetes care system. Acta Ophthalmol. 2018;96:63–68. doi: 10.1111/aos.13613.

17. Grzybowski A., Brona P., Lim G., et al. Artificial intelligence for diabetic retinopathy screening: a review. Eye (Lond) 2020;34:451–460. doi: 10.1038/s41433-019-0566-0.

18. Hua C.H., Kim K., Huynh-The T., et al. Convolutional network with twofold feature augmentation for diabetic retinopathy recognition from multi-modal images. IEEE J Biomed Health Inform. 2021;25:2686–2697. doi: 10.1109/JBHI.2020.3041848.

19. Le D., Alam M., Yao C., et al. Transfer learning for automated OCTA detection of diabetic retinopathy. Transl Vis Sci Technol. 2019;2:35. doi: 10.1167/tvst.9.2.35.

20. Spaide R.F., Fujimoto J.G., Waheed N.K., et al. Optical coherence tomography angiography. Prog Retin Eye Res. 2018;64:1–55. doi: 10.1016/j.preteyeres.2017.11.003.

21. Kalra G., Zarranz-Ventura J., Chahal R., et al. Optical coherence tomography (OCT) angiolytics: a review of OCT angiography quantitative biomarkers. Surv Ophthalmol. 2021;67:1118–1134. doi: 10.1016/j.survophthal.2021.11.002.

22. Kumar V., Gu Y., Basu S., et al. Radiomics: the process and the challenges. Magn Reson Imaging. 2012;30:1234–1248. doi: 10.1016/j.mri.2012.06.010.

23. Rizzo S., Botta F., Raimondi S., et al. Radiomics: the facts and the challenges of image analysis. Eur Radiol Exp. 2018;2:36. doi: 10.1186/s41747-018-0068-z.

24. Wu G., Chen Y., Wang Y., et al. Sparse representation-based radiomics for the diagnosis of brain tumors. IEEE Trans Med Imaging. 2018;37:893–905. doi: 10.1109/TMI.2017.2776967.

25. Braman N.M., Etesami M., Prasanna P., et al. Intratumoral and peritumoral radiomics for the pretreatment prediction of pathological complete response to neoadjuvant chemotherapy based on breast DCE-MRI. Breast Cancer Res. 2017;19:57. doi: 10.1186/s13058-017-0846-1.

26. Thawani R., McLane M., Beig N., et al. Radiomics and radiogenomics in lung cancer: a review for the clinician. Lung Cancer. 2018;115:34–41. doi: 10.1016/j.lungcan.2017.10.015.

27. Penzias G., Singanamalli A., Elliott R., et al. Identifying the morphologic basis for radiomic features in distinguishing different Gleason grades of prostate cancer on MRI: preliminary findings. PLoS One. 2018;13 doi: 10.1371/journal.pone.0200730.

28. Huang Y.Q., Liang C.H., He L., et al. Development and validation of a radiomics nomogram for preoperative prediction of lymph node metastasis in colorectal cancer. J Clin Oncol. 2016;34:2157–2164. doi: 10.1200/JCO.2015.65.9128.

29. Núñez L.M., Romero E., Julià-Sapé M., et al. Unraveling response to temozolomide in preclinical GL261 glioblastoma with MRI/MRSI using radiomics and signal source extraction. Sci Rep. 2020;10 doi: 10.1038/s41598-020-76686-y.

30. Prasanna P., Patel J., Partovi S., et al. Radiomic features from the peritumoral brain parenchyma on treatment-naïve multi-parametric MR imaging predict long versus short-term survival in glioblastoma multiforme: preliminary findings. Eur Radiol. 2017;27:4188–4197. doi: 10.1007/s00330-016-4637-3.

31. Lu C.Q., Wang Y.C., Meng X.P., et al. Diabetes risk assessment with imaging: a radiomics study of abdominal CT. Eur Radiol. 2019;29:2233–2242. doi: 10.1007/s00330-018-5865-5.

32. Deng Y., Yang B.R., Luo J.W., et al. DTI-based radiomics signature for the detection of early diabetic kidney damage. Abdom Radiol (NY) 2020;45:2526–2531. doi: 10.1007/s00261-020-02576-6.

33. Abunahel B.M., Pontre B., Kumar H., Petrov M.S. Pancreas image mining: a systematic review of radiomics. Eur Radiol. 2021;31:3447–3467. doi: 10.1007/s00330-020-07376-6.

34. Moosavi A., Figueiredo N., Prasanna P., et al. Imaging features of vessels and leakage patterns predict extended interval aflibercept dosing using ultra-widefield angiography in retinal vascular disease: findings from the PERMEATE study. IEEE Trans Biomed Eng. 2021;68:1777–1786. doi: 10.1109/TBME.2020.3018464.

35. Prasanna P., Bobba V., Figueiredo N., et al. Radiomics-based assessment of ultra-widefield leakage patterns and vessel network architecture in the PERMEATE study: insights into treatment durability. Br J Ophthalmol. 2021;105:1155–1160. doi: 10.1136/bjophthalmol-2020-317182.

36. Sil Kar S., Sevgi D.D., Dong V., et al. Multi-compartment spatially-derived radiomics from optical coherence tomography predict anti-VEGF treatment durability in macular edema secondary to retinal vascular disease: preliminary findings. IEEE J Transl Eng Health Med. 2021;9 doi: 10.1109/JTEHM.2021.3096378.

37. Du Y., Chen Q., Fan Y., et al. Automatic identification of myopic maculopathy related imaging features in optic disc region via machine learning methods. J Transl Med. 2021;19:167. doi: 10.1186/s12967-021-02818-1.

38. Zarranz-Ventura J., Barraso M., Alé-Chilet A., et al. Evaluation of microvascular changes in the perifoveal vascular network using optical coherence tomography angiography (OCTA) in type I diabetes mellitus: a large scale prospective trial. BMC Med Imaging. 2019;19:91. doi: 10.1186/s12880-019-0391-8.

39. Barraso M., Alé-Chilet A., Hernández T., et al. Optical coherence tomography angiography in type 1 diabetes mellitus. Report 1: diabetic retinopathy. Transl Vis Sci Technol. 2020;9:1–15. doi: 10.1167/tvst.9.10.34.

40. Bernal-Morales C., Alé-Chilet A., Martín-Pinardel R., et al. Optical coherence tomography angiography in type 1 diabetes mellitus. Report 4: glycated haemoglobin. Diagnostics. 2021;11:1537. doi: 10.3390/diagnostics11091537.

41. Alé-Chilet A., Bernal-Morales C., Barraso M., et al. Optical coherence tomography angiography in type 1 diabetes mellitus—report 2: Diabetic kidney disease. J Clin Med. 2022;11:1–15. doi: 10.3390/jcm11010197. In this issue.

42. Han L., Luo S., Yu J., et al. Rule extraction from support vector machines using ensemble learning approach: an application for diagnosis of diabetes. IEEE J Biomed Health Inform. 2015;19:728–734. doi: 10.1109/JBHI.2014.2325615.

43. Shankaracharya, Odedra D., Samanta S., Vidyarthi A.S. Computational intelligence-based diagnosis tool for the detection of prediabetes and type 2 diabetes in India. Rev Diabet Stud. 2012;9:55–62. doi: 10.1900/RDS.2012.9.55.

44. Ting D.S.W., Cheung C.Y.-L.L., Lim G., et al. Development and validation of a deep learning system for diabetic retinopathy and related eye diseases using retinal images from multiethnic populations with diabetes. JAMA. 2017;318:2211–2223. doi: 10.1001/jama.2017.18152.