Abstract

Aim

The aim of this study was to investigate how the prescribing knowledge and skills of junior doctors in the Netherlands and Belgium develop in the year after graduation. We also analysed differences in knowledge and skills between surgical and nonsurgical junior doctors.

Methods

This international, multicentre (n = 11), longitudinal study analysed the learning curves of junior doctors working in various specialties via three validated assessments at about the time of graduation, and 6 months and 1 year after graduation. Each assessment contained 35 multiple choice questions (MCQs) on medication safety (passing grade ≥85%) and three clinical scenarios.

Results

In total, 556 junior doctors participated, 326 (58.6%) of whom completed the MCQs and 325 (58.5%) the clinical case scenarios of all three assessments. Mean prescribing knowledge was stable in the year after graduation, with 69% (SD 13) of questions answered correctly at assessment 1 and 71% (SD 14) at assessment 3, whereas prescribing skills decreased: 63% of treatment plans were considered adequate at assessment 1 but only 40% at assessment 3 (P < .001). Although nonsurgical and surgical doctors had similar learning curves for knowledge and skills (P = .53 and P = .56, respectively), the overall level of nonsurgical doctors was higher at all three assessments (all P < .05).

Conclusion

These results show that junior doctors' prescribing knowledge and skills did not improve while they were working in clinical practice. Moreover, their level was below the predefined passing grade. As this might adversely affect patient safety, educational interventions should be introduced to improve the prescribing competence of junior doctors.

Keywords: graduate medical education, clinical pharmacology, medical education, pharmacotherapy, prescribing knowledge and skills

What is already known about this subject

Final‐year medical students not only feel unprepared in prescribing drugs, but also have inadequate knowledge and skills to perform this task.

Junior doctors are responsible for most prescribing errors.

What this study adds

The prescribing knowledge of junior doctors did not increase, and their prescribing skills even deteriorated, in the year after graduation.

Junior doctors lack sufficient prescribing knowledge and skills.

Education in clinical pharmacology and therapeutics should be intensified for both under‐ and postgraduates.

1. INTRODUCTION

According to the World Health Organization (WHO) Guide to Good Prescribing, doctors should be able to prescribe rationally, because poor prescribing adversely affects patient safety and healthcare costs. 1 , 2 , 3 , 4 , 5 However, recent studies have shown that final‐year medical students in Europe lack adequate prescribing competencies at graduation, 6 , 7 probably because of insufficient education in clinical pharmacology and therapeutics (CP&T) during their training. 8 This is worrying because junior doctors write most hospital prescriptions (63‐78%) and make the most prescribing errors (errors in 9‐10% of their prescriptions). 9 , 10 It is widely assumed that junior doctors, or at least those who prescribe most frequently (ie, nonsurgical doctors), increase their prescribing knowledge and skills by learning in practice. 3 , 8 , 11 , 12 However, this has never been studied longitudinally.

The aim of this study was to investigate how the prescribing knowledge and skills of junior doctors in the Netherlands and Belgium develop in the year after graduation. We also analysed differences in knowledge and skills between surgical and nonsurgical junior doctors, between primary and secondary care junior doctors, and between (non)registrars and physician researchers. We hypothesised that the prescribing knowledge and skills of all junior doctors would increase significantly over time as a result of learning and doing in practice.

2. METHODS

2.1. Study design and participants

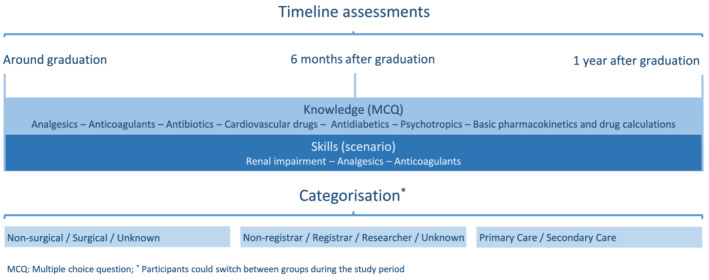

This longitudinal (1‐year) prospective cohort study involved doctors in their first year after graduation from medical schools in the Netherlands (n = 8) and Belgium (n = 3). All 1584 doctors who graduated between July 2016 and March 2017 were invited to complete an online assessment at three moments: assessment 1 at about the time of graduation (±4 weeks), assessment 2 at 6 months after graduation and assessment 3 at 1 year after graduation (Figure 1). At each assessment, participants provided information about their specialty and job level. On this basis they were classified as surgical or nonsurgical junior doctors; as registrar, nonregistrar or researcher (researchers sometimes have clinical tasks and are often involved in teaching students); and as primary or secondary care doctors (Appendices S1 and S2 ). In the Netherlands and Flanders all recent graduates are considered junior doctors and, unlike in some other countries, they can become a registrar directly after graduation. Missing data were reported as “unknown”.

Power analysis determined that a sample size of at least 394 participants over three assessments was required (assuming a mean score of 70% (SD 5) at assessment 1 and 90% (SD 10) at assessment 3, a two‐sided paired α of 0.05 and a power (1 − β) of 0.90). Post hoc power analysis (based on 326 participants) showed that there was sufficient power to compare assessment 1 with assessment 2, and assessment 2 with assessment 3.

The Medical Ethics Review Committee of Amsterdam University Medical Center declared that the study did not fall within the scope of the Medical Research Involving Human Subjects Act (WMO) (number 2016.273). Moreover, the study protocol was approved for all participating universities in the Netherlands and Belgium by the Ethical Review Board of the Netherlands Association of Medical Education (NVMO) (NVMO‐ERB 729).
Written informed consent was obtained in advance from all participants. After study completion, participants received their scores and a 50‐euro voucher as reasonable compensation for the time they had invested.

FIGURE 1.

Study overview

2.2. Assessment tool: Design

Each assessment consisted of 35 multiple‐choice questions (MCQs) and three clinical case scenarios involving polypharmacy. The MCQs were taken from the database of the Dutch National Pharmacotherapy Assessment, which was designed to assess knowledge of medication safety (eg, contraindications and interactions). 13 , 14 Because the MCQs were used in both the Netherlands and Belgium, minor modifications were made to account for national differences in drug availability and use, and in practice guidelines. The clinical case scenarios were developed by 12 senior clinical pharmacologists to assess prescribing skills (ie, the ability to write a prescription safely and unambiguously without supervision 15 ). For each scenario, the participant was asked to draw up a treatment plan via a structured form covering pharmacological policy, nonpharmacological policy and follow‐up management (Appendix S3 ). For the pharmacological policy, the participant could start new drugs (maximum two per case), alter the current dosage regimen or time of administration, or discontinue currently prescribed drugs. On average, each scenario required two major additions/adjustments (eg, starting antibiotic treatment and lowering the dosage of drugs because of renal impairment) and one to three minor adjustments (eg, optimising time of administration and starting calcium/vitamin D supplementation).

The topics were derived from the Dutch National Pharmacotherapy Assessment and were chosen because they are known to cause the majority of preventable serious adverse reactions in clinical practice. 13 , 16 The MCQs of each assessment covered seven topics (five questions each): (1) analgesics; (2) anticoagulants; (3) antibiotics; (4) cardiovascular drugs; (5) antidiabetics; (6) psychotropics; (7) basic pharmacokinetics and drug calculations. The clinical case scenarios of each assessment covered three topics: (1) renal impairment; (2) analgesics; (3) anticoagulants. The content differed per assessment, but the topics and question types remained the same. Appendices S3 and S4 show the test matrix and example questions.

2.3. Assessment tool: Validity and reliability

To establish content and face validity, a test matrix was used. The content validity of the Dutch National Pharmacotherapy Assessment, the source of our MCQs, was studied by Jansen et al and considered good, with 75.8% of the questions rated as “essential” and 24.2% as either “useful, but not essential” or “not necessary”. 14 The content validity of the clinical case scenarios was also considered good by nine experts (physicians and clinical pharmacologists not otherwise involved in the study, who were invited via the Dutch Society for Clinical Pharmacology and Biopharmacy), with 72.7% of the cases rated as “essential”, 25.7% as “useful, but not essential” and 1.5% as “not necessary” (Appendix S5 ).

To assess the reliability of the assessments, we calculated the internal consistency (Cronbach alpha) and the difficulty of each question (proportion of correct answers, P value), and determined the capacity of each question to distinguish doctors with adequate from those with inadequate prescribing knowledge (item‐rest correlation, R ir score).

On the assumption that a Cronbach alpha value of at least 0.65 indicates sufficient internal consistency, all three assessments had sufficient internal consistency (Cronbach's alpha of 0.70, 0.69 and 0.76, respectively). 17 , 18 The P values showed that the assessments consisted of both easy and difficult questions. As can be seen in Table 1, the MCQs of assessment 2 seemed to be easier than those of the other two assessments. To evaluate the differences in difficulty between the assessments, we randomly assigned a homogeneous group of fourth‐year medical students (n = 103) from one medical school to one of the three assessments. All students were prepared to take the examination because it was part of their curriculum. They performed better in assessment 2 than in assessments 1 and 3 (both P < .001) and, as expected, worse than the junior doctors (Appendix S6). All assessments had comparable and mainly poor to adequate R ir scores (Table 1), 17 which is inherent to examining ready knowledge.

TABLE 1.

R ir scores and P values per assessment

| Number of questions | Assessment 1 (n = 35) | Assessment 2 (n = 35) | Assessment 3 (n = 35) |
|---|---|---|---|
| R ir < 0.19 (poor) | 15 | 15 | 9 |
| R ir 0.20‐0.29 (adequate) | 15 | 13 | 16 |
| R ir 0.30‐0.39 (good) | 4 | 7 | 8 |
| R ir > 0.40 (very good) | 1 | 0 | 2 |
| P < 0.44 (difficult question) | 8 | 5 | 10 |
| P = 0.44‐0.90 (medium difficulty) | 25 | 25 | 22 |
| P > 0.90 (easy question) | 2 | 5 | 3 |
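The three item statistics described above (Cronbach alpha, difficulty index and item‐rest correlation) can all be computed from a participants × questions matrix of 0/1 scores. The Python sketch below is illustrative only: the function names are hypothetical, it is not the software the authors used, and it closes the small gaps between the Table 1 cut‐offs (eg, between 0.19 and 0.20) by assumption.

```python
import math
from statistics import mean, pvariance


def pearson(x, y):
    """Pearson correlation; NaN if either variable has no variance."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return float("nan")
    return sxy / math.sqrt(sxx * syy)


def item_analysis(responses):
    """responses: one row per participant, one 0/1 score per MCQ.

    Assumes total scores vary across participants. Returns Cronbach's alpha,
    the difficulty index (P value) per item and the item-rest correlation
    (R ir) per item."""
    k = len(responses[0])
    items = [[row[j] for row in responses] for j in range(k)]
    totals = [sum(row) for row in responses]

    # alpha = k/(k-1) * (1 - sum of item variances / variance of total scores)
    alpha = k / (k - 1) * (1 - sum(pvariance(i) for i in items) / pvariance(totals))

    # Difficulty index: proportion of participants who answered the item correctly
    difficulty = [mean(i) for i in items]

    # R ir: correlate each item with the total score on the *other* items
    r_ir = [pearson(i, [t - s for t, s in zip(totals, i)]) for i in items]
    return alpha, difficulty, r_ir


def classify_r_ir(r):
    """Table 1 bands; the 0.19-0.20 and 0.39-0.40 gaps are closed by assumption."""
    if math.isnan(r):
        return "undefined"  # item or rest score has no variance
    if r < 0.20:
        return "poor"
    if r < 0.30:
        return "adequate"
    if r < 0.40:
        return "good"
    return "very good"


def classify_difficulty(p):
    """Table 1 bands for the difficulty index."""
    if p < 0.44:
        return "difficult"
    if p <= 0.90:
        return "medium"
    return "easy"
```

The item‐rest correlation excludes the item itself from the total, so an item is not credited for correlating with its own score; this is why it is preferred over the item‐total correlation for short tests such as 35 MCQs.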

2.4. Data collection

At each centre, a local coordinator recruited participants just before graduation. All (nearly) graduated students were sent an email with a link to the online assessment. They had 1 month to complete the assessment, and reminders were sent after 1 and 2 weeks if necessary. The online assessment took approximately 60 minutes to complete. Participation was voluntary and the results had no consequences for the participants. Participants were explicitly asked not to use resources for the knowledge part (MCQs) but were allowed to use resources for the skills part (case scenarios). All participants were explicitly asked not to consult third parties during the assessments. All data were collected in encrypted form, and each participant was assigned a unique number.

2.5. Scoring and data analysis

The MCQs were marked as correct or incorrect (1 or 0). Scores are presented as mean percentages of the maximum score (with standard deviation) and as the percentages of participants who passed or failed. As with the Dutch National Pharmacotherapy Assessment, we considered a score of 85% correct answers (80% after correction for guessing) as a pass, since the assessed knowledge is essential for patient safety. 13 , 14
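The pass mark and the correction for guessing can be made concrete with a short sketch. It assumes four answer options per MCQ, which this section does not state: under that assumption the classic correction for guessing, (R − W/(k − 1))/N with k options, R correct and W wrong answers out of N, maps a raw score of 85% onto exactly 80%, which is consistent with the two thresholds quoted above. The function names are hypothetical.

```python
import math

N_QUESTIONS = 35  # MCQs per assessment
PASS_MARK = 0.85  # proportion of correct answers needed to pass


def percentage_score(n_correct, n_questions=N_QUESTIONS):
    """Score as a percentage of the maximum (each MCQ marked 1 or 0)."""
    return 100 * n_correct / n_questions


def passed(n_correct, n_questions=N_QUESTIONS, pass_mark=PASS_MARK):
    return n_correct / n_questions >= pass_mark


def min_correct_to_pass(n_questions=N_QUESTIONS, pass_mark=PASS_MARK):
    """Smallest whole number of correct answers that reaches the pass mark."""
    return math.ceil(pass_mark * n_questions)


def corrected_for_guessing(p, k):
    """Classic correction for guessing, (R - W/(k-1)) / N, with no omitted
    answers, rewritten in terms of the raw proportion correct p and k options."""
    return (p - 1 / k) / (1 - 1 / k)
```

With 35 questions, 85% corresponds to 29.75 correct answers, so a participant needed at least 30 correct answers (85.7%) to pass; 29 correct answers (82.9%) is a fail.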

Three aspects of the clinical case scenarios were scored: (1) nonpharmacological policy; (2) pharmacological policy; (3) follow‐up management. All parts were classified as good (= 2 points), sufficient (= 1 point) or insufficient (= 0 points) on the basis of a predefined answer model. The pharmacological policy was screened for prescribing errors, as defined by Dean and colleagues, 19 and classified as good when at least the main problem and one or two minor problem(s) were treated correctly, sufficient when only the main problem was treated correctly and insufficient when the main problem was treated incorrectly. The three parts together determined the classification of the total treatment plan: 0‐1 insufficient, 2‐3 sufficient, 4‐6 good.
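The classification rules for the clinical case scenarios can be written as two small functions. This is a sketch under stated assumptions: the inputs (whether the main problem was treated correctly and how many minor problems were) are a hypothetical simplification of the predefined answer model, which is not reproduced here; "one or two minor problem(s)" is read as "at least one"; and the nonpharmacological and follow‐up scores are taken as given.

```python
def score_pharmacological_policy(main_problem_correct, minor_problems_correct):
    """2 = good, 1 = sufficient, 0 = insufficient, following the rule above."""
    if not main_problem_correct:
        return 0  # main problem treated incorrectly
    # Main problem correct: good if at least one minor problem also correct
    return 2 if minor_problems_correct >= 1 else 1


def classify_treatment_plan(nonpharmacological, pharmacological, follow_up):
    """Each part is scored 0, 1 or 2; the total (0-6) sets the overall class."""
    parts = (nonpharmacological, pharmacological, follow_up)
    if any(p not in (0, 1, 2) for p in parts):
        raise ValueError("each part must be scored 0, 1 or 2")
    total = sum(parts)
    if total <= 1:
        return "insufficient"
    if total <= 3:
        return "sufficient"
    return "good"
```

One consequence of summing the three parts is that a plan with a good pharmacological policy (2 points) but insufficient nonpharmacological policy and follow‐up management (0 points each) totals 2 and is therefore classified as sufficient overall, not good.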

All cases were scored in one batch after study completion, blinded to participant information (ie, name, university, specialty), independently by two investigators (E.D. and D.B.), and discrepancies were discussed until consensus was reached. If necessary, the expert panel involved in developing the case scenarios was consulted. Only the data of participants who completed all assessments were analysed. Because participants switched between categories and were followed up longitudinally, (multilevel) linear mixed models and generalised estimating equations were used to assess differences in scores over time for continuous and ordinal variables, respectively. The linear mixed models included a fixed effect for assessment and a random intercept for participant; the generalised estimating equations assumed an ordinal distribution with a logit link function. To compare groups within one assessment, we used chi‐square tests, ANOVA (with post hoc Tukey HSD correction for multiple testing) or Student's t‐tests. To assess the association between knowledge and skills, we used generalised estimating equations with skills as the outcome and knowledge as a continuous independent variable. P values <.05 were considered statistically significant. Data were collected and analysed in SPSS 26.0 (IBM Corp., Armonk, NY, USA) and Stata version 14 (StataCorp LLC, 2020).

3. RESULTS

In total, 556 (35.1%) junior doctors participated, 326 (58.6%) of whom completed the MCQs and 325 (58.5%) the clinical case scenarios of all three assessments. For demographics see Table 2.

TABLE 2.

Demographics

| Assessment | Group | Nonregistrar | Registrar | Research/PhD | Unknown |
|---|---|---|---|---|---|
| Assessment 1, around graduation (n = 326) | Surgical ᵃ (n = 35) | 24 | 5 | 2 | 4 |
| | Nonsurgical ᵃ (n = 243) | 128 | 77 | 31 | 7 |
| | Unknown (n = 48) | … | … | … | 48 |
| Assessment 2, +6 months (n = 326) | Surgical (n = 49) | 36 | 7 | 4 | 2 |
| | Nonsurgical (n = 267) | 145 | 83 | 33 | 6 |
| | Unknown (n = 10) | … | … | … | 10 |
| Assessment 3, +12 months (n = 326) | Surgical (n = 45) | 31 | 8 | 6 | 0 |
| | Nonsurgical (n = 270) | 132 | 90 | 42 | 6 |
| | Unknown (n = 11) | … | … | … | 11 |

ᵃ For exact categorisation, see Appendix S1.

3.1. Knowledge

Linear mixed modelling showed that prescribing knowledge scores changed significantly in the year after graduation (P < .001). The mean total knowledge score increased from 69.4% (SD 13.0) at assessment 1 to 77.1% (SD 11.4) at assessment 2 (P < .001, 95% confidence interval [CI] 6.6‐8.9) but decreased to 71.7% (SD 13.8) at assessment 3 (P < .001, 95% CI 1.2‐3.5). On the basis of a “pass” score of 85% correct answers, 54 (17%) doctors passed assessment 1, 87 (27%) passed assessment 2 and 64 (20%) passed assessment 3.

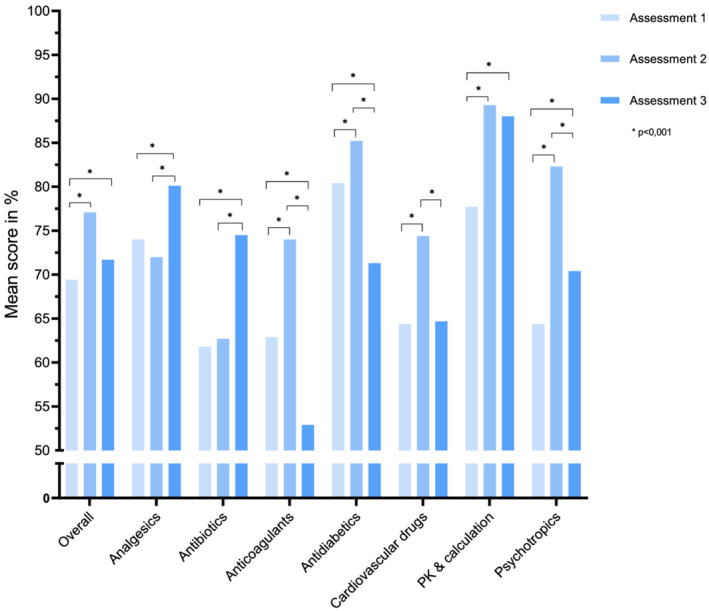

As shown in Figure 2, the knowledge scores of five of the seven question topics (anticoagulants, cardiovascular drugs, antidiabetics, psychotropics, and basic pharmacokinetics and drug calculations) increased significantly in the first 6 months (all P < .001) but, with the exception of basic pharmacokinetics and drug calculations, decreased during the subsequent 6 months (all P < .001). By 1 year, participants had significantly increased their knowledge scores for analgesics, antibiotics, psychotropics, and basic pharmacokinetics and drug calculations, whereas their knowledge scores for antidiabetics and anticoagulants had declined (all P < .001). Participants scored lowest on anticoagulants (mean score 52.9% at assessment 3), although these questions were not more difficult according to the difficulty index (for details see Appendix S7 ).

FIGURE 2.

Mean knowledge scores per topic (n = 326). PK, pharmacokinetics

3.2. Skills

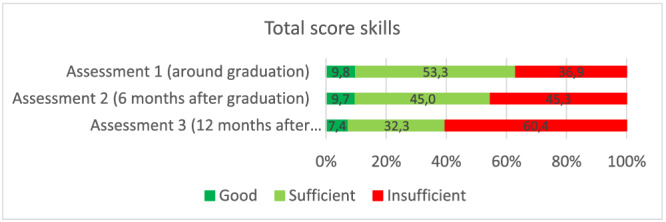

In total, 2777 treatment plans were scored, of which 1455 (52.4%) were classified as at least sufficient (ie, sufficient or good). Generalised estimating equations showed a significant change in prescribing skills scores over time (P < .001): 63.1% of treatment plans were scored as at least sufficient at assessment 1 but only 39.6% at assessment 3 (P < .001) (Figure 3). One year after graduation, the prescribing skills scores for analgesics and renal impairment in particular had deteriorated (treatment plans scored as at least sufficient at assessment 1 vs assessment 3: analgesics 63.4% vs 22.9%; renal impairment 62.4% vs 38.7%; both P < .001). The pharmacological policy was the weakest part of the treatment plan, with an overall average of only 34.1% scored as at least sufficient. Across all assessments, the most frequent errors were “missing an adjustment in the current treatment plan” (29.4%) and “indicated medication omitted” (21.9%). Nonpharmacological policies showed the greatest deterioration 1 year after graduation (60.7% vs 27.6% scored as at least sufficient) (Appendices S8‐S10 ). In total, 396 (14.3%) pharmacological policies were scored as potentially harmful, mainly due to overdosing, starting a contraindicated drug or omitting an indicated drug (alone or in combination). Three (0.1%) policies were scored as harmful and three (0.1%) as potentially lethal (case 1, a daily dose of 8975 mg paracetamol for more than 1 week; case 2, a daily dose of 100 mg piritramide; case 3, 50 mg oxycodone four times a day).

FIGURE 3.

Score development of prescribing skills (n = 325)

3.3. Subanalysis

Nonsurgical and surgical doctors had similar learning curves for prescribing knowledge and skills scores in the year after graduation (interaction time‐function P = .53 and P = .56, respectively). The same was true for the individual topics, except for knowledge of cardiovascular drugs: surgical doctors' knowledge scores declined by 11.4%, whereas those of nonsurgical doctors increased by 1.7% in the year after graduation (P = .009). Moreover, after 1 year nonsurgical doctors outperformed surgical doctors in knowledge of analgesics, cardiovascular drugs, antidiabetics, psychotropics, and basic pharmacokinetics and drug calculations, and in skills in prescribing anticoagulants (for details see Appendices S11‐S13 ). The development of prescribing knowledge scores differed significantly between registrars and nonregistrars on the one hand and physician researchers on the other (interaction time‐function P = .029): whereas the former two groups showed a modest increase in their scores, the latter showed a modest decrease (all P < .05). However, the prescribing skills scores of registrars declined more than those of nonregistrars and physician researchers (P < .001 and P = .002, respectively). The prescribing skills scores of doctors working in primary care declined more than those of doctors working in secondary care (P = .013). Better knowledge was associated with better skills, with an odds ratio of 1.2 (95% CI 1.1‐1.3) for every 10% increase in knowledge score.
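Because knowledge entered the generalised estimating equations as a continuous variable, the reported odds ratio per 10% increase can be rescaled to other differences in knowledge score, provided the effect is linear on the log‐odds scale (an assumption of this sketch, implied by modelling knowledge as a single continuous term). The function name is hypothetical, and because the published estimate (1.2) is rounded, rescaled values are approximate.

```python
import math


def odds_ratio_for_increase(or_per_step, step, increase):
    """Rescale an odds ratio reported per `step` points to another increase.

    Assumes knowledge enters the model linearly on the log-odds scale, so
    log(OR) grows in proportion to the size of the increase."""
    log_or_per_point = math.log(or_per_step) / step
    return math.exp(log_or_per_point * increase)
```

For example, under this assumption a 20‐point higher knowledge score corresponds to an odds ratio of about 1.2² ≈ 1.44, and a 1‐point increase to about 1.018.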

4. DISCUSSION

Our findings show that junior doctors' scores on a prescribing knowledge and skills assessment had not improved 1 year after graduation, while the doctors were working in clinical practice. Their knowledge scores remained at a level similar to that at graduation, whereas their skills scores had decreased after 1 year. Moreover, the overall prescribing knowledge and skills scores of junior doctors were below the predefined passing grade. Similar results were reported by the Working Group on Safe Prescribing of the British Pharmacological Society (BPS) and the European Association of Clinical Pharmacology and Therapeutics (EACPT) in 2007, and in recent cross‐sectional studies. 15 , 20 , 21 In particular, junior doctors lacked adequate knowledge about prescribing cardiovascular drugs and anticoagulants, and skills in prescribing analgesics and prescribing for patients with renal impairment. There was no difference in the learning curves of frequent versus infrequent prescribers (nonsurgical vs surgical doctors). However, nonsurgical doctors were more knowledgeable and had better prescribing skills than surgical doctors. While the knowledge of registrars remained stable, their prescribing skills, like those of primary care doctors, seemed to decrease after graduation. These results might explain why Dornan et al and Ryan and colleagues found minor increases (1.9% and 1.2%, respectively) in prescribing errors among second‐year foundation trainees. 9 , 10

In our study, 86.4% of the junior doctors worked in clinical practice and were probably in daily contact with the topics assessed. Thus, the poor prescribing skills, and to a lesser extent the poor prescribing knowledge (which is often taught at an earlier stage of medical school), cannot be ascribed to poor retention of basic science knowledge (about 2 years 22 ) or to a lack of practice. It might be that busy junior doctors do not have enough time to think or learn about appropriate drug choices and simply copy their supervisors' drug choices or follow protocols and guidelines. 11 , 23 Electronic prescribing might contribute to this lack of development of their own knowledge and skills, because these programs make suggestions about prescribing (eg, dosage, contraindications or possible interactions).

It is possible that the assessments covered too wide a range of topics. However, like the BPS/EACPT Working Group on Safe Prescribing and Jansen and colleagues, 14 , 15 we think that all junior doctors, and not only generalists (eg, general practitioners or internists), should have extensive knowledge and skills in prescribing so that they can prescribe effectively and safely regardless of their specialty. Moreover, the assessed drug groups are widely used and are responsible for most preventable hospital admissions for adverse drug reactions in the Netherlands. 16 Although the need for extensive knowledge and skills could be made redundant by electronic prescribing systems, which alert prescribers to potential prescribing errors, in practice doctors tend to override alerts due to “alert fatigue” (too many and often irrelevant warnings). 24 , 25 , 26 , 27 Moreover, these systems are not always accessible, and in emergency situations a doctor should have enough ready knowledge to make quick, yet safe and effective, decisions.

It could be argued that the results are not a true reflection of the doctors' skills and knowledge because the doctors may not have taken the assessments seriously or may have rushed. However, the junior doctors expressed their enthusiasm via emails, were very eager to receive their personal scores and took their time to complete the assessments (median 62 minutes [interquartile range, IQR 46‐92], 53 minutes [IQR 38‐79] and 51 minutes [IQR 37‐84] for assessments 1 to 3, respectively).

It is noteworthy that skills in “nonpharmacological policies” and “follow‐up management” showed the greatest decline. This might be because participants paid less attention to these aspects, having been asked to evaluate pharmacotherapy, which in the eyes of junior doctors might mean prescribing medicines only. However, the treatment plan form specifically requested this information. Most studies of prescribing skills do not focus on these aspects, although Wimble et al reported that junior doctors lacked knowledge in drawing up an appropriate follow‐up management plan. 28

While there appeared to be significant differences in the level and development of prescribing knowledge and skills between the participant subgroups, the clinical relevance of most of these differences is doubtful. The differences between surgical and nonsurgical doctors in knowledge at assessment 3 (8.8%) and in skills at all three assessments are probably clinically relevant, possibly reflecting the greater exposure of nonsurgical doctors to the large arsenal of medicines available for treating patients with nonsurgical health problems. Nevertheless, both subgroups had inadequate prescribing knowledge and skills. The larger decline in prescribing skills seen in registrars and primary care doctors is possibly clinically relevant. This might be because some of the clinical case scenarios focused on secondary (hospital) care settings, whereas more than half of the registrars were primary care doctors (59%, 54% and 56% at assessments 1 to 3, respectively), compared with about a quarter of the nonregistrars (22%, 22% and 24%). Moreover, registrars are probably more likely to copy their supervisors because these junior doctors (like medical students) receive more personal guidance. 11 However, the cases were intended to be relevant to all junior doctors, and all junior doctors should be able to draw up a safe treatment plan for them. The group of physicians and clinical pharmacologists (in training) not otherwise involved in the study confirmed that the cases met these requirements (Appendix S5 ).

The assessments had comparable R ir scores and showed sufficient internal consistency. In fact, the internal consistency in our study was higher than that of similar studies of prescribing knowledge (mean 0.72 in our study vs 0.5 to 0.7 in others). 7 , 14 , 29 , 30 The difficulty index did differ between assessments: the MCQs of assessment 2 were “easier” than those of the other two assessments, which were of similar difficulty. This suggests that the apparent increase in prescribing knowledge during the first 6 months may reflect the easier assessment rather than a true gain in knowledge.

4.1. Strengths and limitations

As far as we know, this is the first international, multicentre, longitudinal study to assess the prescribing knowledge and skills of junior doctors after graduation. However, a number of limitations should be kept in mind when interpreting our findings. First, there is a small chance that we did not invite all graduated junior doctors to take part. Moreover, not all 1584 graduated junior doctors participated in our study. Nevertheless, the cohort is still representative, with 326 junior doctors working in various clinical fields in the Netherlands and Belgium. Second, the participants who completed all three assessments may have been more interested, and therefore more competent, in pharmacotherapy. Alternatively, the less competent junior doctors might have been less likely to participate. This type of selection bias could have led to an overestimation of the true level of knowledge and skills. Third, the assessment itself could have influenced the development of prescribing knowledge and skills: participants may have studied their specific weak points after each assessment rather than improving their prescribing knowledge and skills by learning in practice. This might have led to an overestimation of the true development of knowledge and skills. Fourth, an assessment is inherently different from prescribing in practice, so the results may not fully reflect prescribing knowledge and skills in daily practice. However, this was the most feasible study design and comes closest to assessing junior doctors' true knowledge and skills. Moreover, the case scenarios were scored without blinding to assessment number, which could have introduced bias. On the other hand, the case scenarios were scored in one batch per topic after study completion, which possibly reduced inter‐ and intraobserver variability. Fifth, the assessments were not supervised.
It is possible that participants used resources such as formularies and/or guidelines for the knowledge part, or completed the assessments together or asked for help. However, this is also true in clinical practice, where doctors can consult colleagues or access decision‐support facilities. Sixth, the reliability analysis showed that the questions had poor‐to‐adequate ability to distinguish between good and poor participants. However, this is inherent to assessing ready knowledge and could also be due to the small number of questions per topic and differences in education. The R ir score becomes less reliable when good participants lack knowledge on one specific topic.

5. CONCLUSION

To conclude, this international, multicentre, longitudinal study suggests that junior doctors' prescribing knowledge and skills did not improve in the year after graduation while they were working in various clinical fields. Although their prescribing knowledge remained stable, their prescribing skills deteriorated. Whether junior doctors prescribed frequently or not did not seem to influence these findings. Moreover, the level of prescribing knowledge and skills was below the predefined passing grade. This might have negative consequences for patient safety and should be corrected. Since we cannot assume that junior doctors will automatically become better prescribers within their first year after graduation, we propose the following recommendations to improve their prescribing knowledge and skills: (1) education in CP&T in the undergraduate curriculum should be intensified to increase competence by the time of graduation; (2) studies should investigate which educational interventions are suitable for junior doctors; (3) structured, longitudinal educational programmes on prescribing knowledge and skills for junior doctors should be developed, with emphasis on therapeutic reasoning.

CONTRIBUTORS

E.D., D.B., F.R., B.J., W.K., G.D., P.J., A.D., T.C., J.vS., I.dWS., L.P., R.G., M.H., M.R., M.vA., C.K. and J.T. contributed to study design and data collection. E.D., D.B., M.R. and J.T. contributed to the writing of the report. E.D. and B.L.W. contributed to the data analysis. All authors contributed to data interpretation and approved the final version of the submitted report.

CONFLICT OF INTEREST

All authors declared no competing interests for this work.

Supporting information

Appendix S1 Categorisation surgical vs nonsurgical

Appendix S2 Categorisation primary and secondary care

Appendix S2 Format skills question

Appendix S3 Test matrix

Appendix S4 Example clinical case scenario

Appendix S5 Rating clinical case scenarios by physicians and clinical pharmacologists (in training) not otherwise involved in the study

Appendix S6 Score control group

Appendix S7 Scores per topic (knowledge part)

Appendix S8 Score per topic (skills part)

Appendix S9 Score per part of the treatment plan

Appendix S10 Classification and number of prescribing errors

Appendix S11 Score development per subgroup on prescribing knowledge

Appendix S12 Score development per subgroup on prescribing skills

Appendix S13 Score development surgical vs nonsurgical doctors on prescribing knowledge and skills

ACKNOWLEDGMENTS

This study was funded by ZonMw, The Netherlands Organization for Health Research and Development, project number: 83600095004.

Donker EM, Brinkman DJ, van Rosse F, et al. Do we become better prescribers after graduation: A 1‐year international follow‐up study among junior doctors. Br J Clin Pharmacol. 2022;88(12):5218‐5226. doi: 10.1111/bcp.15443

The authors confirm that the Principal Investigator for this paper is Jelle Tichelaar.

Funding information ZonMw, The Netherlands Organization for Health Research and Development, Grant/Award Number: 83600095004

DATA AVAILABILITY STATEMENT

The data that support the findings of this study will be available directly after publication from the corresponding author upon reasonable request. Proposals may be submitted up to 24 months following article submission. The data will be shared after de‐identification.

REFERENCES

- 1. Dean B, Schachter M, Vincent C, Barber N. Causes of prescribing errors in hospital inpatients: a prospective study. Lancet. 2002;359(9315):1373‐1378. doi: 10.1016/S0140-6736(02)08350-2

- 2. Leendertse AJ, Egberts AC, Stoker LJ, van den Bemt PM, HARM Study Group. Frequency of and risk factors for preventable medication‐related hospital admissions in the Netherlands. Arch Intern Med. 2008;168(17):1890‐1896. doi: 10.1001/archinternmed.2008.3

- 3. De Vries TPGM, Henning RH, Hogerzeil HV, Fresle DA. Guide to Good Prescribing – A Practical Manual. Geneva: World Health Organization; 1994.

- 4. Avery AJ, Ghaleb M, Barber N, et al. The prevalence and nature of prescribing and monitoring errors in English general practice: a retrospective case note review. Br J Gen Pract. 2013;63(613):e543‐e553. doi: 10.3399/bjgp13X670679

- 5. Slight SP, Howard R, Ghaleb M, Barber N, Franklin BD, Avery AJ. The causes of prescribing errors in English general practices: a qualitative study. Br J Gen Pract. 2013;63(615):e713‐e720. doi: 10.3399/bjgp13X673739

- 6. Brinkman DJ, Tichelaar J, Graaf S, Otten RHJ, Richir MC, van Agtmael MA. Do final‐year medical students have sufficient prescribing competencies? A systematic literature review. Br J Clin Pharmacol. 2018;84(4):615‐635. doi: 10.1111/bcp.13491

- 7. Brinkman DJ, Tichelaar J, Schutte T, et al. Essential competencies in prescribing: A first European cross‐sectional study among 895 final‐year medical students. Clin Pharmacol Ther. 2017;101(2):281‐289. doi: 10.1002/cpt.521

- 8. Brinkman DJ, Tichelaar J, Okorie M, et al. Pharmacology and therapeutics education in the European Union needs harmonization and modernization: A cross‐sectional survey among 185 medical schools in 27 countries. Clin Pharmacol Ther. 2017;102(5):815‐822. doi: 10.1002/cpt.682

- 9. Dornan T, Ashcroft D, Heathfield H, et al. An in depth investigation into causes of prescribing errors by foundation trainees in relation to their medical education. EQUIP study. December 2009. Available at: https://www.gmc-uk.org/-/media/documents/FINAL_Report_prevalence_and_causes_of_prescribing_errors.pdf_28935150.pdf

- 10. Ryan C, Ross S, Davey P, et al. Prevalence and causes of prescribing errors: the PRescribing Outcomes for Trainee Doctors Engaged in Clinical Training (PROTECT) study. PLoS One. 2014;9(1):e79802. doi: 10.1371/journal.pone.0079802

- 11. Tichelaar J, Richir MC, Avis HJ, Scholten HJ, Antonini NF, De Vries TP. Do medical students copy the drug treatment choices of their teachers or do they think for themselves? Eur J Clin Pharmacol. 2010;66(4):407‐412. doi: 10.1007/s00228-009-0743-3

- 12. Tichelaar J, van Kan C, van Unen RJ, et al. The effect of different levels of realism of context learning on the prescribing competencies of medical students during the clinical clerkship in internal medicine: an exploratory study. Eur J Clin Pharmacol. 2015;71(2):237‐242. doi: 10.1007/s00228-014-1790-y

- 13. Kramers C, Janssen BJ, Knol W, et al. A licence to prescribe. Br J Clin Pharmacol. 2017;83(8):1860‐1861. doi: 10.1111/bcp.13257

- 14. Jansen DRM, Keijsers C, Kornelissen MO, Olde Rikkert MGM, Kramers C. Towards a “prescribing license” for medical students: development and quality evaluation of an assessment for safe prescribing. Eur J Clin Pharmacol. 2019;75(9):1261‐1268. doi: 10.1007/s00228-019-02686-1

- 15. Maxwell SR, Cascorbi I, Orme M, Webb DJ, Joint BPS/EACPT Working Group on Safe Prescribing. Educating European (junior) Doctors for Safe Prescribing. Basic Clin Pharmacol Toxicol. 2007;101(6):395‐400. doi: 10.1111/j.1742-7843.2007.00141.x

- 16. Warlé‐van Herwaarden MF, Kramers C, Sturkenboom MC, van den Bemt PM, De Smet PA. Targeting outpatient drug safety: recommendations of the Dutch HARM‐Wrestling Task Force. Drug Saf. 2012;35(3):245‐259. doi: 10.2165/11596000-000000000-00000

- 17. Ebel RL, Frisbie DA. Essentials of Educational Measurement. Englewood Cliffs, New Jersey: Prentice Hall; 1991.

- 18. Tavakol M, Dennick R. Making sense of Cronbach's alpha. Int J Med Educ. 2011;2:53‐55. doi: 10.5116/ijme.4dfb.8dfd

- 19. Dean B, Barber N, Schachter M. What is a prescribing error? Qual Health Care. 2000;9(4):232‐237. doi: 10.1136/qhc.9.4.232

- 20. Harding S, Britten N, Bristow D. The performance of junior doctors in applying clinical pharmacology knowledge and prescribing skills to standardized clinical cases. Br J Clin Pharmacol. 2010;69(6):598‐606. doi: 10.1111/j.1365-2125.2010.03645.x

- 21. Starmer K, Sinnott M, Shaban R, Donegan E, Kapitzke D. Blind prescribing: a study of junior doctors' prescribing preparedness in an Australian emergency department. Emerg Med Australas. 2013;25(2):147‐153. doi: 10.1111/1742-6723.12061

- 22. Custers EJ, Ten Cate OT. Very long‐term retention of basic science knowledge in doctors after graduation. Med Educ. 2011;45(4):422‐430. doi: 10.1111/j.1365-2923.2010.03889.x

- 23. General Medical Council. National training survey 2019: Initial findings report. 2019. Available at: https://www.gmc-uk.org/-/media/national-training-surveys-initial-findings-report-20190705_2.pdf

- 24. Kuperman GJ, Bobb A, Payne TH, et al. Medication‐related clinical decision support in computerized provider order entry systems: a review. J Am Med Inform Assoc. 2007;14(1):29‐40. doi: 10.1197/jamia.M2170

- 25. Metzger J, Welebob E, Bates DW, Lipsitz S, Classen DC. Mixed results in the safety performance of computerized physician order entry. Health Aff (Millwood). 2010;29(4):655‐663. doi: 10.1377/hlthaff.2010.0160

- 26. Ancker JS, Edwards A, Nosal S, et al. Effects of workload, work complexity, and repeated alerts on alert fatigue in a clinical decision support system. BMC Med Inform Decis Mak. 2017;17(1):36. doi: 10.1186/s12911-017-0430-8

- 27. van der Sijs H, Aarts J, Vulto A, Berg M. Overriding of drug safety alerts in computerized physician order entry. J Am Med Inform Assoc. 2006;13(2):138‐147. doi: 10.1197/jamia.M1809

- 28. Wimble K, Yeong K. Improving patient follow‐up after inpatient stay. BMJ Qual Improv Rep. 2012;1(1):u474.w148. doi: 10.1136/bmjquality.u474.w148

- 29. Keijsers CJ, Brouwers JRBJ, de Wildt DJ, et al. A comparison of medical and pharmacy students' knowledge and skills of pharmacology and pharmacotherapy. Br J Clin Pharmacol. 2014;78(4):781‐788. doi: 10.1111/bcp.12396

- 30. Keijsers CJ, Leendertse AJ, Faber A, Brouwers JR, de Wildt DJ, Jansen PA. Pharmacists' and general practitioners' pharmacology knowledge and pharmacotherapy skills. J Clin Pharmacol. 2015;55(8):936‐943. doi: 10.1002/jcph.500
