Summary

Mind-controlled wheelchairs are an intriguing assistive mobility solution applicable in complete paralysis. Despite progress in brain-machine interface (BMI) technology, its translation remains elusive. The primary objective of this study is to probe the hypothesis that BMI skill acquisition by end-users is fundamental to controlling a non-invasive brain-actuated intelligent wheelchair in real-world settings. We demonstrate that three tetraplegic spinal-cord injury users could be trained to operate a non-invasive, self-paced thought-controlled wheelchair and execute complex navigation tasks. However, only the two users exhibiting increasing decoding performance and feature discriminancy, significant neuroplasticity changes and improved BMI command latency achieved high navigation performance. In addition, we show that dexterous, continuous control of robots is possible through low-degree-of-freedom, discrete and uncertain control channels like a motor imagery BMI, by blending human and artificial intelligence through shared-control methodologies. We posit that subject learning and shared-control are the key components paving the way for translational non-invasive BMI.

Subject areas: Techniques in neuroscience, Machine learning, Robotics

Highlights

- Three participants learned to drive a non-invasive BMI-actuated wheelchair
- Direct transfer of learned BMI skills to wheelchair control
- Subject learning and robotic intelligence are key to translational BMI-actuated robots

Introduction

Daily usage of brain-controlled robots and neuroprostheses is the paramount promise of brain-machine interface (BMI) for people suffering from severe motor disabilities (Ramsey and Millán, 2020). Recent years have seen a flourishing of BMI neurorobotic prototypes using both implanted and non-invasive approaches (Tonin and Millán, 2021). Several studies have shown the potential of BMI to control robotic devices such as robotic arms (Hochberg et al., 2012; Collinger et al., 2013; Jianjun et al., 2016), exoskeletons (Pfurtscheller et al., 2003; Rupp et al., 2015), wheelchairs (Iturrate et al., 2009; Millán et al., 2009; Diez et al., 2013; Carlson and Millán, 2013; Kaufmann et al., 2014; Cruz et al., 2021) and telepresence robots (Millán et al., 2004; Leeb et al., 2013, 2015) by successfully decoding users’ intents from brain activity. However, despite these impressive technical developments, the vast majority of this literature reports on able-bodied users or on single-case end-user studies with short evaluation protocols (Perdikis and Millán, 2020; Tonin and Millán, 2021). Longitudinal experimentation on BMI-based robotics with end-user cohorts is markedly scarce, and this ultimately prevents their efficient transition from laboratory prototyping to real-world settings.

As with general BMI robots, the literature on BMI-controlled wheelchairs (Leaman and La, 2017; Diez, 2018) is limited to single-session evaluations with able-bodied users (Vanacker et al., 2007; Iturrate et al., 2009; Millán et al., 2009; Carlson and Millán, 2013; Kaufmann et al., 2014; Diez, 2018; Cruz et al., 2021), or case studies with a single end-user (Leeb et al., 2007; Lopes et al., 2013). Diez et al. (2013) and Cruz et al. (2021) did report on brain-driven wheelchairs with more end-users, but only in single-session evaluations. Moreover, most of these works have relied on stimulus-driven BMI paradigms (Iturrate et al., 2009; Diez et al., 2013; Kaufmann et al., 2014; Cruz et al., 2021) that severely constrain the possibility of establishing ecological control (i.e., split attention, obstruction of the visual field, visual fatigue) (Millán et al., 2009; Wolpaw et al., 2018). Notably, no brain-actuated wheelchair controlled via an implanted BMI has been reported, which underscores how challenging this application is for brain control.

Here, we hypothesized that non-invasive BMI-controlled wheelchairs are mature for clinical translation and long-term usability by end-users if grounded on two pillars: mutual learning between user and decoder and the integration of robotic intelligence in the control loop. The first pillar, mutual learning, has been recently highlighted as a key component for successful, longitudinal, plug-and-play BMI operation (McFarland et al., 2010; Collinger et al., 2013; Jianjun et al., 2016; Perdikis et al., 2018; Edelman et al., 2019; Perdikis and Millán, 2020; Silversmith et al., 2021). However, it remains unclear how learning interactions between the user, the decoder and the device affect the control of the neuroprosthesis and how the acquisition of BMI skills translates into optimal control of the robotic device. The second pillar, robotic intelligence, has been only marginally explored in the BMI literature (Millán et al., 2004; Tonin et al., 2010; Carlson and Millán, 2013; Leeb et al., 2015; Cruz et al., 2021). Indeed, most BMIs have usually neglected the robotic component by relegating the robot to a mere actuator of the user’s commands. Here, we underline the importance of coupling user and robot intelligence through a shared-control approach to accomplish complex navigation tasks in ecological settings with a spontaneous BMI.

In this work, we demonstrate that three individuals affected by severe tetraplegia after spinal cord injury (SCI) learned, with different degrees of proficiency, to operate a self-paced sensorimotor rhythm (SMR)-based BMI to drive an intelligent robotic wheelchair in real-world scenarios. Furthermore, we demonstrate that wheelchair control proficiency strongly depends on robust BMI skills acquired during the longitudinal training and on the support of shared-control algorithms exploiting the robot’s intelligence.

Thus, we posit that mutual learning and shared-control may be the two cornerstones of developing robust and effective brain-actuated neuroprostheses and, to date, the most promising pathways towards fulfilling the translational promise of BMI.

Results

Three participants (P1-P3, see Table 1) enrolled in a longitudinal training protocol with the aim of learning to modulate SMR elicited by two mental tasks, namely, imagined movements of both hands and both feet. During the BMI training period (5, 3 and 2 months, respectively, with an intensity of 3 sessions per week), participants were seated on a customized powered wheelchair (Figure 1A) and they were asked to control a visual feedback mimicking a steering wheel rotating to the left or to the right according to the mental task decoded by the BMI decoder (Figure 1B). In this phase, in-place rotations of the wheelchair followed commands correctly delivered by the user to provide a richer reward to the participant and promote the human-robot coupling. Several days after finishing the training, participants were asked to drive the wheelchair in a real-world scenario with the support of the robot’s intelligence (Figures 1C and 1D).

Table 1.

Participants’ information

| Participant | Age | Pathology | Time of lesion | Residual mobility | Additional | Experimental sessions (#run) | Experimental sessions (#day) | Experimental sessions (#week) | Experimental sessions (#month) |
|---|---|---|---|---|---|---|---|---|---|
| P1 | 26 | Complete spasmodic tetraplegia sub C3 | 05.06.2008 | None below the neck | Assisted ventilation; temporary interruption of the training due to hospitalization | 199 | 29 | 13 | 5 |
| P2 | 59 | Tetraplegia sub C5/C6 | 06.12.2009 | Capacity of lifting the arms; slight finger movement | | 53 | 11 | 7 | 3 |
| P3 | 56 | Tetraplegia sub C5 | 18.11.1996 | Capacity of lifting the arms; slight finger movement | | 65 | 11 | 7 | 2 |

Figure 1.

Customized powered wheelchair

(A) The customized powered wheelchair used in the study, equipped with an RGB-D camera, a laser rangefinder and a small monitor for the BMI feedback.

(B) Two examples of the visual feedback presented to participants, inspired by a conventional steering wheel. In the upper row, the cued paradigm used during the calibration and evaluation sessions is illustrated. In the bottom, the feedback used during the navigation sessions (without any cues instructing the participant which BMI command to forward) is shown.

(C) Schematic representation of the shared-control navigation system.

(D) The ecological experimental field for the navigation phase of the study, cluttered with typical furniture of a clinical environment. Participants were asked to mentally drive the wheelchair through four waypoints (WP1-4).

Acquisition of BMI control skills during longitudinal training

Figure 2A illustrates the evolution over sessions of the BMI decoding accuracy (i.e., percentage of successful motor imagery (MI) trials within each evaluation session) for each participant.

Figure 2.

Evolution of BMI training for each participant

(A) Evolution of decoding performance over sessions for each participant. The solid black line represents the least-squares fit of the decoding accuracy values.

(B) Decoding performance averaged within the first 10 (light grey) and the last 10 (dark grey) runs. Error bars report the standard deviation. ∗∗∗ indicate a p-value less than 0.001.

(C) Evolution of FD over sessions for each participant. The solid black line represents the least-squares fit of the FD values. On top, the topographic representation of the FD averaged over the first and the last 10 runs.

(D) FD averaged within the first 10 (light grey) and the last 10 (dark grey) runs. Error bars report the standard deviation. ∗ and ∗∗∗ indicate a p-value less than 0.05 and 0.001, respectively.

(E) Evolution of command latency (time to deliver a BMI command) over sessions for each participant. The solid black line represents the least-squares fit of the latency values.

(F) Command latency averaged within the first 10 (light grey) and the last 10 (dark grey) runs. Error bars report the standard deviation. ∗ and ∗∗ indicate a p-value less than 0.05 and 0.01, respectively.

(G) Correlation between FD and command latency computed per run for each participant. Vertical dashed lines in panels (A), (C) and (E) indicate the session when the decoder was re-calibrated.

All participants exhibited low performance at training onset (performance is additionally reported as information transfer rate (ITR) in parentheses; see section “method details”). Specifically, P1’s accuracy in the first session was 45.0% (ITR 0.0023 bits/s), P2’s 55.0% (0.1186 bits/s) and P3’s 43.3% (0.0880 bits/s). The chance level of classification accuracy at the 95% confidence level is estimated to be 60%, given the use of a 2-class MI BMI and the collection of around 60 trials on average in each training session (Combrisson and Jerbi, 2015). The initial accuracy of every participant fell below chance level because trials have a limited duration, and trials that reach this timeout are counted as failed (see section “method details”). P1 represents a clear-cut case of BMI skill acquisition through feedback training, because he exhibited a significant performance improvement over time (, e-7), where r is the Pearson correlation coefficient between accuracy and session index and p is the p-value of the corresponding correlation’s statistical test using Student’s t-distribution. As a result of training, P1 achieved exceptional control in the last session (95.0%/0.2685 bits/s) and optimal performance in the penultimate training session (100%/0.5041 bits/s). P3 also exhibited a strong decoding accuracy increase during the first seven (out of ten in total) evaluation sessions (, ), resulting in 98.3% accuracy and 0.2561 bits/s in session 7; his accuracy, however, decreased in the last three sessions. This is probably because the new decoder introduced in session 7, despite yielding excellent accuracy as it was built with data from the previous day, provided different feedback dynamics that confused the participant a week later (session 8) and required him to re-establish BMI control. Satisfactory restoration of performance was ultimately achieved in the last session (74%/0.2292 bits/s), constituting a great improvement with respect to this participant’s performance at training onset (first session, 43.3%).
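The 60% chance level quoted above can be reproduced with a short calculation. The sketch below assumes the binomial inverse-CDF rule described by Combrisson and Jerbi (2015): the threshold is the smallest number of correct trials that a random classifier exceeds with probability at most alpha. The function name `chance_level` and its parameters are illustrative and do not come from the study's code.

```python
from math import comb


def chance_level(n_trials: int, n_classes: int = 2, alpha: float = 0.05) -> float:
    """Accuracy threshold that a random classifier exceeds with probability <= alpha.

    Finds the smallest k with P(X <= k) >= 1 - alpha for
    X ~ Binomial(n_trials, 1 / n_classes) and returns k / n_trials.
    """
    p = 1.0 / n_classes
    cumulative = 0.0
    for k in range(n_trials + 1):
        # Probability of exactly k correct trials under random guessing
        cumulative += comb(n_trials, k) * p**k * (1.0 - p) ** (n_trials - k)
        if cumulative >= 1.0 - alpha:
            return k / n_trials
    return 1.0


# Two classes, about 60 trials per session, 95% confidence
print(chance_level(60, n_classes=2, alpha=0.05))
```

With 60 trials and two classes the threshold is 36 correct trials, i.e., an accuracy of 0.60, which agrees with the value reported in the text. Fewer trials per session would raise this threshold.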

On the contrary, P2’s decoding performance curve was flat (, ). Nevertheless, this user’s spontaneous SMR modulation ability at training onset rapidly enabled him to acquire a stable, although moderate, level of decoding accuracy throughout the training period (68.2% ± 13.9% average accuracy and 0.0958 ± 0.0392 bits/s average ITR), so as to permit wheelchair brain control despite the absence of learning effects.

Figure 2B summarizes the strong (and statistically significant) enhancement of BMI accuracy between the first and last 10 runs for P1. Specifically, P1 improved considerably (from 37.5 ± 11.1% to 87.0 ± 17.03%, e-4, paired Student’s t-test). P3 also improved (from 67.0 ± 18.3% to 91.0 ± 12.9%, ) if we exclude the last three sessions. The effects are analogous in terms of ITR. As shown by the enhanced feature discriminancy (separability) in P3’s last sessions (see Figure 2C and analysis below), it seems that the reason behind this setback was the need for P3 to reacquire BMI control. It is reasonable to assume that, with longer training, P3 could have replicated the good performances exhibited in the first seven sessions.

Although BMI decoders were intermittently re-calibrated to exploit possible emerging task-specific neural patterns, new decoders were only infrequently applied (dashed vertical lines in Figure 2A) to minimize interference with the participants’ learning process. P1’s decoder was re-calibrated 6 times (mostly in the first 2 weeks), whereas those of P2 and P3 only 4 and 3 times, respectively. Classifier re-calibration yielded improved performance with respect to the previous session for P1 (on average, with and without re-calibration) and for P3 ( with and without re-calibration). For P2, who did not exhibit strong learning effects, there was no effect ( with and without).

The presence of subject learning for P1 and P3 (but not for P2) is corroborated by the evolution of their feature discriminancy (FD) throughout training. Feature discriminancy reflects the participant’s ability to modulate specific, task-dependent frequency bands in localized regions during MI tasks (Perdikis and Millán, 2020) and is estimated as the Fisher Score of power spectral density (PSD) features within each session, averaged across the selected features used by the decoder. As well established in the literature (Wolpaw and McFarland, 2004; Vidaurre et al., 2011) and corroborated by our recent work (Perdikis et al., 2018; Tortora et al., 2022), a measure of brain pattern separability like FD is ideal for monitoring subject learning effects and is the most fundamental variable for explaining the BMI system’s performance (Perdikis and Millán, 2020). This is reasonable, because even the most elaborate machine learning models will be unable to discern mental classes that exhibit no separability in the selected feature space. Figure S1 confirms that accuracy significantly correlates with FD for all participants.
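As an illustration of the FD metric described above, the following sketch computes a two-class Fisher score per feature and averages it over the features selected by a decoder. It assumes one common form of the Fisher score, |μ₁ − μ₂| / √(σ₁² + σ₂²); the exact variant used in the study is given in its methods and may differ. All names and the toy data are hypothetical.

```python
from statistics import fmean, pvariance


def fisher_score(class_a, class_b):
    """Fisher score of one feature: |mu_a - mu_b| / sqrt(var_a + var_b)."""
    mu_a, mu_b = fmean(class_a), fmean(class_b)
    spread = (pvariance(class_a) + pvariance(class_b)) ** 0.5
    if spread == 0.0:
        # Degenerate case: no within-class variability at all
        return 0.0 if mu_a == mu_b else float("inf")
    return abs(mu_a - mu_b) / spread


def feature_discriminancy(samples_a, samples_b, selected):
    """Average Fisher score over the selected feature indices.

    samples_a / samples_b: lists of feature vectors (e.g., PSD values),
    one list per mental task.
    """
    scores = [
        fisher_score([s[j] for s in samples_a], [s[j] for s in samples_b])
        for j in selected
    ]
    return fmean(scores)


# Toy data: two samples per class, two features, only feature 0 selected
hands = [[1.0, 0.0], [3.0, 0.0]]
feet = [[5.0, 1.0], [7.0, 1.0]]
print(feature_discriminancy(hands, feet, selected=[0]))
```

A score of zero means that the two mental tasks are indistinguishable in that feature, which is why no classifier can compensate for a lack of separability.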

Figure 2C illustrates the progression of FD over sessions, which matches that of decoding performance (Figure 2A): only P1 and P3 show consistent, significant positive FD trends (, and , , respectively). No such effect is found for P2 (, ). The evolution of the spatial distribution of FD is reported with topographies, highlighting the consistency of the most discriminant features over time, as well as their alignment with the anticipated electroencephalography (EEG) correlates of the MI tasks performed by the participants (Pfurtscheller and Lopes da Silva, 1999). The heat maps in Figure S2 depict the percentage of runs during the evaluation phase where each PSD feature was used and further confirm that frequently selected features coincide with known SMR signatures. Table S3 reports the spatio-spectral features selected in each participant’s decoder. Figure 2D compares the average FD increase between the first and the last 10 runs for each participant. Significant improvements are reported for the average FD for P1 and P3 ( and , respectively), further substantiating subject learning. These results show the strong spatial and spectral localization of PSD features, suggesting that participants progressively developed the ability to modulate localized brain rhythms for each BMI task.

Figure 2E reveals another essential aspect of acquiring BMI skills to drive a wheelchair, namely command latency (i.e., time needed to deliver a correct BMI command). P1 and P3 improved their average command latency, whereas P2’s latency deteriorated. Figure 2F illustrates the average command latency in the first and last 10 runs for all participants. Finally, as shown in Figure 2G, the two facets of BMI learning studied here in addition to the conventional decoding accuracy (i.e., feature discriminancy, command latency) correlate significantly with one another for P1 and P3 (, and , , respectively), providing further evidence that the observed trends are the result of successful user training.
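The trend lines and correlations reported in Figure 2 rest on two standard computations: a least-squares line and the Pearson correlation coefficient. A minimal sketch of both is given below, using made-up numbers rather than data from the study; the function names are illustrative.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def least_squares_line(x, y):
    """Slope and intercept of the least-squares fit y = slope * x + intercept."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical per-session values, not taken from the study
sessions = [1, 2, 3, 4, 5]
accuracy = [45.0, 55.0, 60.0, 72.0, 80.0]
print(pearson_r(sessions, accuracy))
print(least_squares_line(sessions, accuracy))
```

The same two functions apply to any pair of per-run or per-session series, such as FD against command latency.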

Effects of BMI training on brain connectivity

We investigated the hypothesis that cortical plasticity underpinning BMI learning manifests with functional connectivity changes, by identifying short-time direct directed transfer function (SdDTF) connectivity features that evolved through training and were consistent with BMI accuracy. Only the ,-band SdDTF connectivity associated to the MI task “both feet” for participant P1 (Figure 3, blue) and ,-band of MI task “both hands” for participant P3 (Figure 3, green) met these criteria. Critically, these two participants also exhibited BMI learning with respect to both accuracy and discriminancy of task-dependent SMR (Figure 2). On the contrary, P2 showed an absence of BMI learning and functional connectivity changes.

Figure 3.

SdDTF connectivity analysis. The SdDTF connectivity against the command accuracy (on the left) and session index (on the right)

Light blue and green dots represent the values for participants P1 and P3, respectively. Solid colored lines represent the least-squares fit of the values of the corresponding participant.

In detail, the SdDTF value for P1 differed significantly between the first and last evaluation sessions (corrected ), correlated with the session index and with accuracy (, identical correlation results are coincidental). The corresponding values for P3 were ; and , respectively. These results indicate that distributed functional plasticity (Jarosiewicz et al., 2009; Wander et al., 2013), as manifested by SdDTF connectivity progression, accompanies and may be subserving BMI skill acquisition.

Driving the wheelchair in an ecological environment

Figure 4A shows the heat map of the trajectories generated by all participants (see also Videos S1 and S2). The color code indicates the regions of the map less (blue) and most (yellow) frequently visited. The black line depicts the optimal trajectory. Qualitatively, the real trajectories tend to deviate from the optimal one mostly around the waypoints WP2 and WP4, which required particularly accurate BMI control to properly approach the turn (WP2) and precise command timing to successfully drive the wheelchair towards the corridor (WP4).

Figure 4.

Navigation results

(A) Heat map visualizing the trajectories followed by all participants. In blue/yellow the least/most frequently visited regions of the map, respectively, are displayed. The black solid line represents the optimal trajectory, computed as the average of 20 manually generated trajectories using a keyboard.

(B) Success rate achieved by each participant in passing through the waypoints (in light blue, light red and light green for P1-P3, respectively).

(C) Boxplots of waypoint acquisition duration (i.e., time needed to complete the path to each of the waypoints) for each participant.

(D) Boxplots of the number of commands delivered by each participant along their path to the waypoints.

(E) Fréchet distance between each participant’s path to each of the waypoints and the optimal trajectory.

Boxplots report the median, the lower and upper quartiles, any outliers, and the minimum and maximum values that are not outliers.

Quantitatively, Figure 4B reports the percentage of waypoints marked as completed for each participant (where 100% indicates that the given waypoint was reached in every repetition, and 0% that it was never reached). All participants were able to successfully pass through WP1 in each repetition (100%); WP2 was always reached by P1 (100%), whereas P2 and P3 accomplished the task in the 20.0% and 66.6% of their attempts, respectively. The difficulty level of WP3 was relatively low, as P1 and P3 achieved it in every attempt (100%), and P2 in 75.0% of cases. Finally, WP4 was found to be the most difficult waypoint, with P1 accomplishing the task with 80.0% success rate, P3 with 20.0%, whereas P2 was unable to correctly navigate towards the corridor.

Another aspect of navigation competency is the time needed to reach each waypoint (Figure 4C). P1 was faster than P2 and P3 (on average per waypoint: 58.2 ± 4.4 s vs. 94.5 ± 8.9 s vs. 105.6 ± 6.9 s, and , one-way ANOVA, post hoc test with Bonferroni correction). P3 proved to be the slowest, but more accurate than P2 (Figure 4B). The number of delivered BMI commands was similar across all participants (Figure 4D), with P3 slightly exceeding the average, which explains the longer time he needed to complete the task (10.6 ± 1.0, 5.0 ± 2.1 and 18.9 ± 1.6 on average per waypoint).

We also compared the trajectories to the optimal one using the distribution of Fréchet distances (Figure 4E). P1 closely followed the optimal path on average, whereas BMI-actuated navigation for P2 and P3 exhibited larger deviations (0.72 ± 0.10 m, 1.12 ± 0.21 m, 1.27 ± 0.16 m, between P1 and P3, one-way ANOVA, post hoc test with Bonferroni correction).
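For readers unfamiliar with the metric, the sketch below computes the discrete Fréchet distance between two trajectories sampled as lists of (x, y) points, using the standard dynamic-programming formulation. Whether the study used the discrete or the continuous variant is not stated in this section, so this is an assumption, and the example paths are invented.

```python
from math import dist


def discrete_frechet(path_p, path_q):
    """Discrete Fréchet distance between two polylines given as (x, y) points."""
    n, m = len(path_p), len(path_q)
    # coupling[i][j]: smallest achievable "leash length" for the prefixes
    # path_p[:i + 1] and path_q[:j + 1]
    coupling = [[0.0] * m for _ in range(n)]
    for i in range(n):
        for j in range(m):
            d = dist(path_p[i], path_q[j])
            if i == 0 and j == 0:
                coupling[i][j] = d
            elif i == 0:
                coupling[i][j] = max(coupling[0][j - 1], d)
            elif j == 0:
                coupling[i][j] = max(coupling[i - 1][0], d)
            else:
                best_previous = min(
                    coupling[i - 1][j], coupling[i - 1][j - 1], coupling[i][j - 1]
                )
                coupling[i][j] = max(best_previous, d)
    return coupling[-1][-1]


# Invented example: a straight path and a copy shifted sideways by 1 m
optimal = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
driven = [(0.0, 1.0), (1.0, 1.0), (2.0, 1.0)]
print(discrete_frechet(optimal, driven))  # 1.0
```

Unlike a point-wise average error, the Fréchet distance respects the order in which points are visited, so a path that reaches the right places in the wrong sequence is still penalized.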

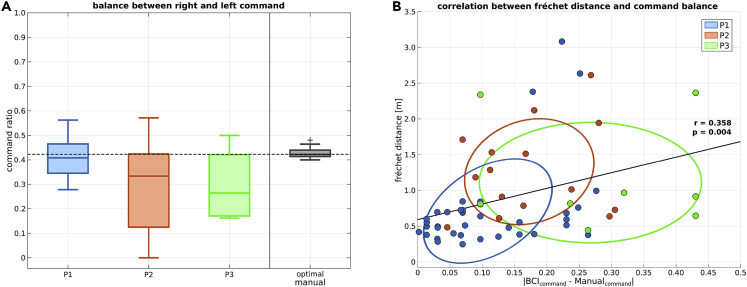

To investigate how BMI control affects the navigation performance, we considered the balance between the two commands (i.e., turn left/right). Command balance is a good indicator of BMI performance (in the absence of cues that are necessary to formally derive command accuracy), because BMI malfunction in a binary interface commonly manifests as bias towards one of the two commands. Figure 5A illustrates the normalized ratio as the number of left commands over the total number of commands, so that a value of 0.5 denotes perfect balance, 0.0 complete bias to the right and 1.0 to the left. We computed the optimal balance for the navigation task by considering the case of optimal control modality (simulation with a discrete manual interface; please refer to section “method details - optimal and random trajectories”). In our navigation task, the optimal command balance was 0.42 ± 0.008 (median ± standard error). P1’s performance is very close to the optimal one (0.40 ± 0.03). Conversely, P2 and P3 were biased (0.33 ± 0.09, 0.26 ± 0.05); however, in both cases, sufficient control over the unbiased command is preserved to account for a good level of usability.
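The command balance defined above reduces to a simple ratio. The sketch below computes it from a list of delivered commands, together with its absolute deviation from the task-specific optimum of 0.42 reported in the text. The command labels and the example sequence are illustrative.

```python
def command_balance(commands):
    """Fraction of 'left' commands: 0.5 is perfect balance,
    0.0 is complete bias to the right, 1.0 complete bias to the left."""
    if not commands:
        raise ValueError("at least one command is required")
    return sum(1 for c in commands if c == "left") / len(commands)


def balance_deviation(commands, optimal=0.42):
    """Absolute difference between the observed and the optimal balance."""
    return abs(command_balance(commands) - optimal)


# Invented run: 2 left commands out of 5
run = ["left", "right", "right", "left", "right"]
print(command_balance(run))    # 0.4
print(balance_deviation(run))  # about 0.02
```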

Figure 5.

BMI command balance for each participant

(A) Boxplots of the balance between right and left commands for participants P1-P3 and for the case of optimal control (in light blue, light red, light green and grey, respectively). A value of 0.0 denotes complete bias to the right and 1.0 to the left. The horizontal dashed line represents the optimal balance for our specific navigation task (0.42). Boxplots report the median, the lower and upper quartiles, any outliers, and the minimum and maximum values that are not outliers.

(B) The Fréchet distance of each route against the corresponding command balance value from the optimal one. Each participant is represented by a color (light blue, light red and light green for P1, P2 and P3, respectively). Solid black line represents the least-squares fit of all values. Ellipses represent the confidence regions (50%) for each distribution.

Figure 5B illustrates the Fréchet distance of each completed route against the absolute value of the difference between the command balance and the optimal balance. Interestingly, each participant’s attempts form well-defined clusters. Specifically, a low value of absolute balance difference, as in the case of P1, tends to associate with trajectories with low Fréchet distance (i.e., close to the optimal trajectory). On the contrary, higher differences (as for P2 and P3) yield larger Fréchet distances. The relation between these two variables is further supported by a positive correlation (, ). Hence, the level of BMI control seems to determine the quality of wheelchair control.

The role of shared-control

To isolate and determine the crucial contribution of shared-control to the navigation performances, participants would have had to repeat the tasks without robotic assistance (Leeb et al., 2015), which was deemed too costly given the strict logistics of this study. Instead, we simulated the navigation results with a probabilistic input interface specifically modeled on the control characteristics of each participant, i.e., BMI accuracy and command latency. See section “method details” for the implementation of the simulated environment and the design of the probabilistic input interface.
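To make the idea of a probabilistic input interface concrete, here is a deliberately simplified sketch. It is not the model used in the study (which is specified in its methods); it only assumes that each intended command is delivered correctly with probability equal to the BMI accuracy, is flipped otherwise, and arrives after a latency drawn around a mean value. The class name, its parameters and the Gaussian latency model are all hypothetical.

```python
import random


class ProbabilisticInput:
    """Toy binary input channel parameterized by accuracy and command latency."""

    def __init__(self, accuracy, mean_latency_s, latency_sd_s=0.0, seed=None):
        self.accuracy = accuracy
        self.mean_latency_s = mean_latency_s
        self.latency_sd_s = latency_sd_s
        self.rng = random.Random(seed)

    def deliver(self, intended):
        """Return (delivered_command, latency_s) for an intended 'left'/'right'."""
        # Assumed latency model: Gaussian around the mean, clipped at zero
        latency = max(0.0, self.rng.gauss(self.mean_latency_s, self.latency_sd_s))
        correct = self.rng.random() < self.accuracy
        opposite = "right" if intended == "left" else "left"
        return (intended if correct else opposite), latency


# Hypothetical user with 80% accuracy and 3 s average latency
user = ProbabilisticInput(accuracy=0.8, mean_latency_s=3.0, latency_sd_s=0.5, seed=1)
print([user.deliver("left") for _ in range(3)])
```

Feeding such a channel into the same navigation stack with shared-control switched on or off gives a like-for-like comparison that does not require additional sessions from the participants.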

Table 2 depicts the percentage of reached waypoints in the simulation for each participant with or without the assistance of shared-control. Results achieved during the actual experiment have been also reported for comparison and completeness. First of all, the simulated results with shared-control enabled are in line with the actual results achieved by each participant. This validates the simulation and the probabilistic input controller. Second, the comparison between the results with and without shared-control clearly shows the crucial contribution of robotic assistance. The percentage of reached waypoints dropped to 82.5% in the case of P1 and decreased by more than half for P2 and P3 (22.5% and 32.5%, respectively).

Table 2.

Success rate per waypoint in the actual experiment and in simulation with and without the assistance of shared control

| Waypoint | P1 actual (with assistance) | P1 simulation (with assistance) | P1 simulation (without assistance) | P2 actual (with assistance) | P2 simulation (with assistance) | P2 simulation (without assistance) | P3 actual (with assistance) | P3 simulation (with assistance) | P3 simulation (without assistance) |
|---|---|---|---|---|---|---|---|---|---|
| WP1 | 100.0% | 100.0% | 100.0% | 100.0% | 100.0% | 60.0% | 100.0% | 100.0% | 60.0% |
| WP2 | 100.0% | 100.0% | 90.0% | 20.0% | 70.0% | 20.0% | 66.6% | 70.0% | 40.0% |
| WP3 | 100.0% | 100.0% | 70.0% | 60.0% | 40.0% | 10.0% | 100.0% | 60.0% | 20.0% |
| WP4 | 80.0% | 90.0% | 70.0% | 0.0% | 0.0% | 0.0% | 20.0% | 40.0% | 10.0% |
| Average | 95.0% | 97.5% | 82.5% | 45.0% | 52.5% | 22.5% | 73.3% | 67.5% | 32.5% |
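The size of the drop without shared-control can be checked directly from the simulated averages in Table 2. The short calculation below expresses each drop relative to the simulated result with assistance; the variable and function names are illustrative.

```python
# Simulated average success rates from Table 2: (with assistance, without assistance)
simulated_average = {
    "P1": (97.5, 82.5),
    "P2": (52.5, 22.5),
    "P3": (67.5, 32.5),
}


def relative_drop(with_assistance, without_assistance):
    """Fraction of the assisted success rate that is lost without shared-control."""
    return (with_assistance - without_assistance) / with_assistance


for participant, (with_sc, without_sc) in simulated_average.items():
    print(participant, f"{relative_drop(with_sc, without_sc):.1%}")
```

The relative loss is roughly 15% for P1, 57% for P2 and 52% for P3, consistent with the statement that the success rate decreased by more than half for P2 and P3.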

Another aspect that reveals the assistance provided by shared-control is the percentage of time during which participants did not deliver any command to the wheelchair, so that navigation was fully in the charge of the robotic intelligence. For participants with high BMI accuracy and low command latency (P1 and P3), shared-control still supported the navigation, but only for 46.7 ± 13.6% and 42.3 ± 18.4% of the time, respectively. On the other hand, shared-control played an important role in the case of P2, helping to drive the wheelchair in the absence of user commands for 83.3 ± 4.8% of the run duration.

Discussion

The contribution of this work to the state-of-the-art is multifold. To the best of our knowledge, this is the only report of a BMI-driven wheelchair that at the same time: (1) includes several end-users with severe motor impairment; (2) assesses learning dynamics over several months; (3) employs a self-paced MI BMI, independent of external stimuli; and (4) evaluates it in a quasi-ecological scenario, outside a controlled laboratory environment.

BMI translational studies remain surprisingly limited (Birbaumer et al., 1999; Pfurtscheller et al., 2003; Wolpaw and McFarland, 2004; Kübler et al., 2005; Leeb et al., 2007, 2013, 2015; McFarland et al., 2010; Kim et al., 2011; Gilja et al., 2012, 2015; Hochberg et al., 2012; Collinger et al., 2013; Wang et al., 2013; Perdikis et al., 2014, 2018; Aflalo et al., 2015; Jarosiewicz et al., 2015; Rupp et al., 2015; Bouton et al., 2016; Saeedi et al., 2016; Vansteensel et al., 2016; Ajiboye et al., 2017; Schwemmer et al., 2018; Silversmith et al., 2021; Cruz et al., 2021), hindering the transition of BMI from the lab to clinics and end-user homes. Our work helps alleviate this shortcoming. Importantly, because of the requirements for continuous and multi-degree-of-freedom control, most BMI-controlled robots have involved implanted BMI approaches (Hochberg et al., 2012; Collinger et al., 2013; Aflalo et al., 2015; Ajiboye et al., 2017). However, not all end-users qualify for, or wish to receive, an implant (e.g., people with existing brain lesions). Hence, our study is a critical addition to the limited literature on non-invasive, self-paced BMI-controlled robots (Leeb et al., 2013, 2015; Rupp et al., 2015).

Our work shows that the participants’ ability to navigate in a natural, cluttered clinical environment (Figure 4) is directly proportional to the acquired BMI skills (Figure 2). This is clearly the case for P1 who exhibited low decoding performances at training onset (45%). However, both user’s adaptation to the BMI decoder (subject learning) and the concurrent re-calibration of the decoder (machine learning) led to near-optimal BMI performance (95.0%) at the end of his training as well as high navigation performance. P2 and P3, on the other hand, reached similar levels of BMI performance and wheelchair control. Nevertheless, only P3, who exhibited strong learning effects, successfully accomplished the whole navigation task.

Furthermore, we found significant functional connectivity changes only for the two participants displaying learning effects (Figure 3). These findings are well aligned with literature reporting distributed cortical adaptation during BMI skill learning (Jarosiewicz et al., 2009; Wander et al., 2013). The SdDTF features emerging as significantly regulated by BMI learning concern information flows within (P3) or across (P1) the broad sensorimotor cortical areas associated to one of the adopted MI tasks. Decrease in SdDTF connectivity for “both feet” task indicates the emergence of a more focal EEG modulation over the medial line; whereas the increase in SdDTF connectivity for “both hands” is supported by evidence of the role of the supplementary motor area (covered by electrodes FCz and Cz) in bimanual movements (Sadato et al., 1997) and bimanual skill acquisition (Andres et al., 1999). The SdDTF features are also consistent with the spectral components included in the decoders for P1 and P3 (Figure S2 and Table S3), which are well-known SMR EEG correlates (Leeb et al., 2013; Pfurtscheller and Lopes da Silva, 1999) and drive bimanual skill acquisition (Andres et al., 1999).

Additional evidence that learned BMI skills support navigation performance comes from the analysis of the command balance metric (Figure 5), which shows that participants were able to replicate the level of BMI performance achieved at the end of their training without the need to re-calibrate the decoder before wheelchair navigation. Such a direct transfer of learned BMI skills to the operation of a complex brain-actuated device by our three participants, all of them with severe tetraplegia, is rarely reported, even for implanted BMI systems (Leeb et al., 2015; Silversmith et al., 2021). We postulate that this represents a critical advance towards home and clinical use of brain-controlled assistive mobility technology.

Beyond decoding performance

Our results support recent criticisms in the literature on the traditional use of decoding performance as the ultimate (and, usually, only) metric of BMI aptitude and learning (Chavarriaga et al., 2016; Perdikis and Millán, 2020). Despite what is often assumed, a minimum level of accuracy such as 70% (Leeb et al., 2013) may be a necessary, but not sufficient, condition to achieve successful control of a BMI-actuated device. Instead, skill acquisition grounded in neuroplastic changes has been shown to be critical for translational BMI (Wolpaw and McFarland, 2004; Perdikis et al., 2018). Similarly, here, participant P2 exhibited sufficient modulation of spontaneous SMR to support “adequate” decoding ( accuracy). Yet, this participant’s wheelchair control was compromised. On the contrary, participant P1 and, more interestingly, P3 (who achieved similar accuracy to P2) drove the BMI-actuated intelligent wheelchair with high performance, after showcasing subject learning (Figure 2). We thus postulate that plasticity-induced BMI skill acquisition may, on the one hand, represent a greater “internalization” of BMI skill leading to automaticity (Wolpaw and McFarland, 2004) (i.e., the participant no longer consciously employs the predefined MI tasks, but rather spontaneously forwards the intended device command). On the other hand, such “deep” subject learning may also indicate the attainment of a richer BMI skill set, beyond decoding accuracy. Indeed, P1 and P3, in addition to improving the separability of brain patterns (Figures 2C and 2D), also developed another critical facet of BMI-based wheelchair control, namely, command latency (Figures 2E and 2F).

Mutual learning in BMI

Here, as in previous work (Perdikis et al., 2018; Tortora et al., 2022), we adopt the term “mutual learning” in its widest and most inclusive sense: any BMI training paradigm involving closed-loop control that enables subject learning (improved brain signal modulation through feedback, a form of operant conditioning), and a BMI decoder model that infers the underlying mental task from incoming brain signals after data-driven parameter estimation (machine learning). We thus make no distinction between co-adaptive training regimes where the machine learning process takes place “on-the-fly” (continuous, real-time, “online” adaptation), and those where decoder adaptation is intermittent and “offline” (like in the particular and more conventional case employed here), without suggesting superiority of one scheme over the other or, on the contrary, that they are fully equivalent. We prefer “mutual learning” over “co-adaptation”, as the latter terminology tends to imply the use of online adaptation, although we consider the two terms to be interchangeable. Importantly, in accordance with the conceptualization framework of mutual learning in BMI that we have recently introduced (Perdikis and Millán, 2020), we distinguish and disentangle the distinct roles of subject and machine learning, a distinction we believe is independent of the particular mutual learning design. Specifically, we consider a hierarchical structure of learning objectives where the final, common goal of maximizing the application performance can only be acquired if (1) at the first level, the participant has learned (or, is spontaneously able) to produce separable, differentiable brain patterns for the involved mental tasks, and (2) at the second hierarchical level, the machine learns to identify suitable feature subsets and decoder parameters that optimize classification accuracy (and other critical aspects like command latency). 
High BMI performance then supports dexterous brain-actuated application control, as shown here. Hence, both subject and machine learning are necessary prerequisites for BMI applications, and none is sufficient on its own. Specifically, machine learning alone may not guarantee successful translational BMI (as part of the co-adaptation literature seems to imply). Although improved accuracy can often result from the adoption of more elaborate machine learning (e.g., moving from linear models to complex non-linear decoders and/or learning algorithms) able to discover optimal separation hypersurfaces in high-dimensional feature spaces, lack of separability means that no such separation rules exist whatsoever. In these cases, one needs to first invest in subject learning to create the discriminant mental task-dependent distributions that machine learning can then exploit. In that sense, we find that subject learning is the foundation upon which the overall mutual learning architecture relies.

BMI co-adaptation literature has established that continuous or intermittent decoder re-calibration is usually (Orsborn et al., 2014; Perdikis et al., 2018; Tortora et al., 2022) (but, not necessarily always (Perdikis and Millán, 2020; Cunha et al., 2021)) beneficial to both the maximization of BMI performance and the participant’s learning because an updated decoder can exploit the emergence of better MI features (as a result of subject learning), eliminate non-stationarity effects (e.g., feature distribution drifts), and maintain a stable, or even increase, decoding performance. Nevertheless, decoder re-calibration is not without drawbacks, the main one because of changes in the feedback dynamics that may negatively affect subject learning—which is based on operant conditioning. We thus adopted a conservative approach (Perdikis et al., 2018; Tortora et al., 2022), where an individual decoder was re-calibrated only in the case new emerging features were substantially more discriminant than the current ones, or there was clear and persisting BMI performance decrease (a sign of intense non-stationarity effects). This mutual learning approach delivered an excellent outcome for P1 and was largely effective for P3, but completely failed to improve P2’s baseline performance. These results are consistent with the literature and confirm that, in general, the question whether there exists a universal mutual learning scheme able to optimize BMI control of any prospective user (and what this would exactly look like) is still open.

The case of P3 highlights some of the above caveats in mutual learning. P3’s original decoder included only features in the band, whereas the one retrained before session 7 introduced band features that had evolved into the most discriminant ones as a result of training (Table S3). We assume that the highly overlapping feature subsets between the previous and new decoders initially allowed P3 to exhibit high accuracy in session 7; however, because of natural drift in EEG, it significantly altered the dynamics of the feedback the participant was used to, resulting in severely compromised accuracy in session 8. The classifiers trained in sessions 7–10 increasingly employed features with the last one (the classifier also used for navigation) exclusively consisting of them. It turns out that sessions 7–10 correspond to a second subject learning phase, where P3 managed to transition from volitional control of to rhythms—the latter providing the most separable features, see Figure 2C, third panel—so as to reinstate performance back to 70% accuracy. We conjecture that because this second training period only lasted 4 sessions, P3’s performance could have returned to near-perfect accuracy had the logistics permitted longer training. P3’s mutual learning case illustrates how machine learning interventions in BMI must be examined not only with respect to their ability to optimally classify data (as in other fields), but also with regard to the impact they may exert on the participant’s BMI skill learning and consolidation (Perdikis and Millán, 2020; Cunha et al., 2021).

Shared control

The role of robotic assistance in brain-actuated devices has been widely demonstrated in the literature (Millán et al., 2004, 2009; Vanacker et al., 2007; Tonin et al., 2010, 2020; Carlson and Millán, 2013; Lopes et al., 2013; Leeb et al., 2015; Cruz et al., 2021; Tonin and Millán, 2021; Beraldo et al., 2022). In this work, we confirm these results and we highlight the crucial contribution of shared-control in generating complex trajectories of the wheelchair. The comparison between the two navigation modalities (i.e., shared-control enabled and disabled) showed a clear decrease in the percentage of reached waypoints without the support of robotic intelligence (on average from 72.5 ± 32.5% to 45.8 ± 33.7%). Although the reduction might be considered moderate in the case of P1 (from 97.5% to 82.5%), it appears critical to successfully complete the navigation task for P2 and P3 (from 52.5% to 22.5% and from 67.5% to 32.5%, respectively). This is further reflected by the percentage of time in which participants did not deliver any BMI command and fully relied on the assistance of shared-control (46.7 ± 13.6%, 83.3 ± 4.8%, and 42.3 ± 18.4% for P1, P2 and P3, respectively). These results are in line with the BMI skill acquired by each participant during the training. P1, who achieved great BMI control (Figure 2A), only partially needed the assistance of robotic intelligence. On the contrary, P2 and P3, who exhibited adequate but mediocre BMI performances, strongly relied on the support of shared-control to accomplish the requested navigation task.
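
As a quick arithmetic check, the group averages quoted above follow directly from the three per-participant percentages. The short sketch below recomputes them along with the per-participant drop; the variable names are ours and purely illustrative.

```python
# Percentage of reached waypoints per participant, as quoted in the text.
with_shared_control = {"P1": 97.5, "P2": 52.5, "P3": 67.5}
without_shared_control = {"P1": 82.5, "P2": 22.5, "P3": 32.5}


def mean(values):
    """Arithmetic mean of an iterable of numbers."""
    values = list(values)
    return sum(values) / len(values)


avg_with = mean(with_shared_control.values())
avg_without = mean(without_shared_control.values())

# Drop in reached waypoints (percentage points) when shared-control is disabled.
drop = {p: with_shared_control[p] - without_shared_control[p]
        for p in with_shared_control}

print(f"with shared-control:    {avg_with:.1f}%")
print(f"without shared-control: {avg_without:.1f}%")
print(drop)
```

The drop is smallest for P1 and at least twice as large for P2 and P3, which matches the qualitative reading given in the paragraph.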

Driving a robotic device with BMIs is an utmost challenge that is only partially comparable to the control of software applications or to the paradigms traditionally used to evaluate BMI performances. The control of a brain-actuated wheelchair involves skills that go beyond the usual decoding accuracy by entailing the ability not only to produce commands with low latency and at precise time but also to not deliver any command if the user wants to keep the device in the current status—in our specific case, to let the wheelchair move forward. The results achieved in this work allow us to highlight how shared-control—and, in general, human-robot interaction approaches and collaborative robotics—may support the user to achieve safety, efficiency and usability of the brain-controlled wheelchair, especially in the case of mediocre BMI performance.

Limitations of the study

Although the results presented here substantiate the role of end-user training for translational BMI applications, the study suffers from certain limitations. The first regards the re-calibration procedure of the BMI decoder. As discussed above, when and how to re-calibrate the BMI decoder to incorporate new emerging features or to track the participant’s learned EEG modulations is still an open question. Here we relied on the operator’s expertise for the update of the BMI decoder and we referred to the traditional 70% level of BMI performance to move from the training phase to the navigation phase. However, these are sub-optimal criteria that do not take into consideration the essential neurophysiological changes that training should promote. Future studies should investigate how to couple machine learning and subject learning.

Second, our study is limited to three participants. More and larger studies are needed to determine the exact translational potential of BMI assistive robotic technology. Furthermore, because all participants had a similar clinical profile, we cannot provide hints about usability by people with conditions other than SCI.

Third, with respect to the brain-controlled wheelchair, a major limitation is the small number of available mental commands—2.5: left, right and forward if none of the other two are delivered. Such a control strategy assumes that the user is able to not deliver any command to implicitly let the wheelchair go forward. Theoretically, in a 2-class BMI the probability output of the BMI decoder should uniformly oscillate between the two class extremes (i.e., ) if the user is not actively engaged in any mental task. However, it has been already demonstrated in literature that this is not the case (Tonin et al., 2020). Indeed, for most BMI users, the probability output at rest follows a bimodal distribution by assuming extreme values close to one class or to the other. This implies that the users must actively control the BMI (by balancing the two mental tasks) to not deliver any command and to let the wheelchair move forward. This is known in literature as intentional non-control (Birch et al., 2002; Perdikis et al., 2018). Previous works already demonstrated that end-users could learn to balance the output of a 2-class BMI and keep the application in the current state (Perdikis et al., 2018; Tortora et al., 2022). Furthermore, shared-control might help by mitigating accidental commands (Beraldo et al., 2022). Nevertheless, this remains a major limitation of the proposed BMI and a source of high workload for the user. Future research will need to take into account these aspects by explicitly training the user to balance the two mental tasks as well as by incorporating further degrees of freedom and continuous control of the device (Tonin et al., 2020).

Finally, future studies should also focus on issues that have not been addressed here, like the collection of formal end-user experience and satisfaction feedback, and the study of plasticity with complementary imaging (e.g., functional magnetic resonance imaging) which could further boost the usability of brain-actuated wheelchairs by people with severe motor disabilities.

STAR★Methods

Key resources table

| REAGENT or RESOURCE | SOURCE | IDENTIFIER |
|---|---|---|
| Software and algorithms | | |
| Matlab 2021b | MathWorks | https://www.mathworks.com/ |
| OpenGL library | OpenGL | https://www.opengl.org/ |
| Robotic Operating System | ROS | https://www.ros.org/ |
| Other | | |
| eego™sport | ANT Neuro | https://www.ant-neuro.com/ |
| TDX SP2 | Invacare | https://global.invacare.com/ |
| Hokuyo URG-04LX-UG01 | Hokuyo | https://www.hokuyo-aut.jp/ |

Resource availability

Lead contact

Further information and requests for resources should be directed to and will be fulfilled by the lead contact, José del R. Millán (jose.millan@austin.utexas.edu).

Materials availability

This study did not generate new unique reagents.

Experimental model and subject details

Participants

All participants (P1-P3) were male, tetraplegic after spinal-cord injury and wheelchair users (Table 1). P1 was 26 years old at the time of recruitment, with complete spastic tetraplegia sub C3 after a traumatic accident in 2008. P1 did not have mobility below the neck and relied on assisted ventilation. P1’s BMI training phase was interrupted for two months due to a severe infection and his consequent hospitalization and, later, for another three weeks during Christmas holidays. Participant P2 was 59 years old and had suffered from tetraplegia sub C5/C6 since 2009. His residual motor functions were limited to lifting arms and slight movement of the fingers. P3 was 56 years old, tetraplegic sub C5 since 1996 and he could slightly move arms and fingers.

Recruitment took place at the Universitätsklinikum Bergmannsheil hospital (Bochum, Germany). Written informed consent was provided by all participants before their enrolment to the study. The study was approved by the ethical committee of the Faculty of Medicine, University of Bochum, Germany, with protocol number 17–6196.

Method details

Acquisition, processing and classification of EEG data

EEG was acquired with a 32-channel amplifier (eego™, ANT Neuro, Netherlands) at 512 Hz sampling rate. We recorded 31 electrodes over the sensorimotor cortex: F1, Fz, F2, FC5, FC3, FC1, FCz, FC2, FC4, FC6, C5, C3, C1, Cz, C2, C4, C6, CP5, CP3, CP1, CP2, CP4, CP6, P5, P1, P3, Pz, P2, P4, P6, POz. An additional electrode was placed on the left canthus to record electrooculogram.

EEG signals were spatially filtered with a Laplacian derivation and the PSD of each channel was computed by means of Welch’s method with a resolution of 2 Hz within the frequency range from 4 to 48 Hz in consecutive, 1 s-long sliding windows shifted by 62.5 ms. The decoder was built by selecting the most discriminant features (channel × frequency pairs) from the processed spatiospectral components. Feature selection was done in two steps. First, features were ranked according to their discriminant power with respect to the two MI tasks through canonical variate analysis (Galán et al., 2007) and taking into account the stability of these values across the calibration runs. Second, operators selected features that were neurophysiologically relevant. These features were used to train a Gaussian classifier (Leeb et al., 2013). Participants’ individual BMI decoders were re-calibrated regularly during the evaluation phase of their training to adapt to their evolving EEG signals.

During closed-loop, online control of the visual feedback or the wheelchair, each feature vector extracted in real-time was fed to the Gaussian decoder and outputted a discrete probability distribution over the two MI tasks. Data samples with uncertain probability distribution (i.e., close to uniform) were rejected (threshold defined at 0.55). Surviving samples were integrated with an evidence accumulation framework based on exponential smoothing (Leeb et al., 2013), where all samples from the beginning of each trial influence the current position of the visual feedback shown to the user. Once the accumulated evidence reached a predefined threshold, the command associated to the MI task (i.e., turn right or turn left) was delivered to the wheelchair.
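
The sample rejection and evidence accumulation steps described above can be sketched as follows. This is a minimal illustration rather than the study's implementation: the smoothing factor `alpha`, the neutral starting value of 0.5, and the class labels are assumptions made for the example, while the rejection threshold (0.55) comes from the text and the decision threshold (0.65) is one of the subject-specific values reported for the participants.

```python
def accumulate_evidence(posteriors, alpha=0.96, reject=0.55, threshold=0.65):
    """Integrate per-sample classifier outputs until a command is triggered.

    posteriors: iterable of P(class_1) for each incoming sample, so that
                P(class_0) = 1 - P(class_1).
    alpha:      exponential smoothing factor (hypothetical value).
    reject:     samples whose largest class probability is below this
                value are considered uncertain and skipped.
    threshold:  accumulated evidence needed to deliver a command.
    Returns (command, samples_consumed); command is None on no decision.
    """
    evidence = 0.5  # assumed neutral start: no preference for either class
    consumed = 0
    for p in posteriors:
        consumed += 1
        if max(p, 1.0 - p) < reject:
            continue  # close-to-uniform output: reject the sample
        # Exponential smoothing: past samples keep influencing the state.
        evidence = alpha * evidence + (1.0 - alpha) * p
        if evidence >= threshold:
            return "class_1", consumed
        if evidence <= 1.0 - threshold:
            return "class_0", consumed
    return None, consumed


if __name__ == "__main__":
    print(accumulate_evidence([0.9] * 200))  # consistent class_1 output
    print(accumulate_evidence([0.5] * 50))   # all samples rejected
```

A larger `alpha` makes the feedback smoother but slower, so the choice of smoothing factor and threshold trades accuracy against command latency.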

For training runs, a configurable trial timeout was imposed. In case of timeout, the trial is considered “rejected”.

Performance evaluation of cued, closed-loop (online) training is done through decoding accuracy and ITR. Accuracy is reported as the percentage (%) of correct trials (i.e., trials where the subject was able to reach the desired–indicated by a cue–decision threshold of the feedback system before timeout) over the total number of trials in a run. Accuracy is then averaged over runs within each session and reported at the level of sessions. For ITR we adapt the classical definition to account for “rejected” (no-decision) trials and variable trial lengths occurring in our BMI approach (Millán et al., 2004): , where the number of classes ( in this study), the average trial duration (including timeout trials, also referred to as command latency), the probability of rejection (i.e., the % of rejected trials) and the probability of correct decision (i.e., the % of correct trials over non-rejected trials). Note that only coincides with decoding accuracy when there are no rejected trials, and will be in general greater than that (since timeout trials are not counted as errors, which is the case for decoding accuracy). ITR is also reported as each session’s average. The ITR unit with this approach is bits/s.
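
To make the role of the four quantities concrete, the sketch below computes an ITR of this kind. The exact expression is an assumption made for illustration: it takes the classical Wolpaw bits-per-decision formula, scales it by the fraction of non-rejected trials, and divides by the average trial duration, which may differ from the adapted definition used in the study. Function and parameter names are hypothetical.

```python
import math


def bits_per_decision(n_classes, p_correct):
    """Classical Wolpaw information per decision, in bits."""
    bits = math.log2(n_classes)
    if p_correct > 0.0:
        bits += p_correct * math.log2(p_correct)
    if p_correct < 1.0:
        bits += (1.0 - p_correct) * math.log2(
            (1.0 - p_correct) / (n_classes - 1))
    return bits


def itr_bits_per_second(n_classes, p_correct, p_reject, avg_trial_s):
    """Assumed rejection-aware ITR: only non-rejected trials carry information.

    p_correct:   correct trials over non-rejected trials.
    p_reject:    fraction of rejected (timeout) trials.
    avg_trial_s: average trial duration in seconds, timeouts included.
    """
    return (1.0 - p_reject) * bits_per_decision(n_classes, p_correct) / avg_trial_s


def decoding_accuracy(p_correct, p_reject):
    """Correct trials over all trials, so timeouts count as errors."""
    return (1.0 - p_reject) * p_correct


if __name__ == "__main__":
    print(itr_bits_per_second(2, 0.9, 0.1, 3.0))
    print(decoding_accuracy(0.9, 0.1))
```

The last helper reflects the remark in the text: the probability of a correct decision equals decoding accuracy only when no trial is rejected, and is otherwise larger.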

Brain connectivity

Effective brain connectivity among EEG channels was computed with the short-time direct directed transfer function (SdDTF) (Biasiucci et al., 2018), a variant of the direct directed transfer function (dDTF) (Korzeniewska et al., 2003) method which is suitable for studying the dynamics of effective connectivity in short time scales. SdDTF connectivity was initially extracted for all possible channel pairs in sliding windows of 1 s, shifted by 250 ms (75% overlap), within the band [1,30] Hz with 1 Hz resolution and using an underlying autoregressive model of order. Subsequently, SdDTF was averaged within larger scalp regions of interest (ROI), specifically, Left (C1, C3, FC1, FC3, CP1, CP3), Right (C2, C4, FC2, FC4, CP2, CP4) and Medial (Cz, FCz) cortical areas. The directional connectivity from one ROI to another was calculated by averaging the SdDTF values of all available channel pairs whose origin falls within the first ROI and whose terminal falls within the second one. To calculate the effective connectivity in the μ (8–12 Hz) and β (18–30 Hz) bands, we averaged the 1 Hz resolution SdDTF estimates within the corresponding bands. All MI trials of the closed-loop evaluation BMI runs were used for SdDTF extraction. Final session-wise estimates were determined by averaging all SdDTF windows within any trial of all the runs executed in the respective session.
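
The ROI and band averaging steps can be illustrated with a small sketch. It assumes that channel-pair connectivity has already been estimated and stored in plain dictionaries; the data layout and function names are hypothetical, and the SdDTF estimation itself is not shown.

```python
# ROI definitions as listed in the text.
ROIS = {
    "Left": ["C1", "C3", "FC1", "FC3", "CP1", "CP3"],
    "Right": ["C2", "C4", "FC2", "FC4", "CP2", "CP4"],
    "Medial": ["Cz", "FCz"],
}


def band_average(spectrum, low_hz, high_hz):
    """Average 1 Hz-resolution values over an inclusive frequency band.

    spectrum: dict mapping integer frequency (Hz) -> connectivity value.
    """
    values = [v for f, v in spectrum.items() if low_hz <= f <= high_hz]
    return sum(values) / len(values)


def roi_connectivity(pairwise, source_roi, target_roi):
    """Average directed connectivity from one ROI to another.

    pairwise: dict mapping (source_channel, target_channel) -> value.
    Only the channel pairs actually present in `pairwise` are used.
    """
    values = [
        pairwise[(src, dst)]
        for src in ROIS[source_roi]
        for dst in ROIS[target_roi]
        if src != dst and (src, dst) in pairwise
    ]
    return sum(values) / len(values) if values else float("nan")


if __name__ == "__main__":
    # Toy values, not data from the study.
    toy = {("Cz", "C3"): 0.2, ("FCz", "C3"): 0.4, ("C3", "Cz"): 0.1}
    print(roi_connectivity(toy, "Medial", "Left"))
    print(band_average({f: float(f) for f in range(1, 31)}, 8, 12))
```

Because the measure is directional, the Medial-to-Left average and the Left-to-Medial average are computed from different channel pairs and generally differ.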

Candidate SdDTF features were first determined by selecting only those features whose distributions were significantly different between the first and last session of training (non-parametric, two-sided Wilcoxon ranksum tests with Bonferroni correction). Secondly, to eliminate false positives, the resulting pool of features was further narrowed down by considering only those that were significantly correlated ( for Pearson’s correlation coefficient, statistical testing based on Student’s t distribution) with the session index, thus accounting for a gradual and smooth connectivity modulation taking place over the training period. Finally, we imposed that any connectivity evolution should also significantly correlate with BMI accuracy.

BMI training and navigation phases

The training phase started with a calibration session enabling EEG data collection for subsequent modeling of the individual BMI decoders. Each participant sat on a customized wheelchair and followed the instructions of a cue-based visual paradigm indicating the MI task (i.e., both hands or both feet) to perform in each trial (Figure 1B). The visual paradigm mimicked a steering wheel rotating to the left or to the right according to the task currently employed. A trial consisted of four parts: fixation period (3000 ms); cue period (red left or blue right arrow in the middle of the wheel) indicating which MI task to perform (1000 ms); continuous feedback period where the steering wheel was moving proportionally to the accumulated evidence; command period where a threshold was reached and the command was delivered to the wheelchair (4000 ms). During the calibration sessions, the movement of the feedback was artificially generated and always moved towards the correct direction.

In the evaluation sessions, participants trained to drive the wheel with their BMI during 60 ± 4.2 (median and standard error) trials per session, grouped in 6 ± 0.4 blocks (or runs). In this phase, we initially adopted a positive reinforcement strategy, where the feedback (wheel) only visualized positive advances (i.e., wheel moving only towards the correct direction when the output of the BMI decoder was correct), otherwise maintaining its position. Furthermore, we included a timeout period (20000 ms) after which the trial stopped and was considered unsuccessful. As the participants acquired BMI control, we interleaved the positive reinforcement strategy with runs where the wheel motion was only dependent on the BMI decoder output (i.e., it could move towards the correct or the wrong direction). In case the participant was able to reach the instructed threshold, the trial was considered successful and the wheelchair was rotated in place by an angle of 45°. Afterwards, a new trial followed. The rationale was to reinforce, early on, a strong association between BMI control and wheelchair feedback.

Finally, in the navigation phase, the wheel movement was completely dependent on the output of the decoder, and the command period was reduced to 1000 ms. Furthermore, in this phase, the participant was in full control of the interface, deciding at will which motor task to perform next according to the navigation requirements of the wheelchair (absence of symbolic cue).

A BMI command was delivered to the wheelchair when the integrated probabilities reached subject-specific thresholds (please refer to the section “acquisition, processing and classification of EEG data”). Thresholds were set to 0.65 (for both classes) for participants P1 and P2, and 0.6 for participant P3.

The criterion for progressing from the training to the navigation phase was adequate decoding BMI performance (70%) in the last evaluation session.

In the navigation phase, participants were asked to actively drive the wheelchair in a realistic indoor environment (Figure 1D). The experimental field consisted of a 15.017.12 m room in the clinic cluttered with usual furniture (i.e., mobile beds, chairs, rehabilitation tools). Participants had to mentally drive the wheelchair across the room by passing through four predefined waypoints WP1–4.

The position and the number of the waypoints were decided to uniquely define the track but—at the same time—to leave the freedom to each participant to select the preferred trajectory. Furthermore, participants were instructed to complete the track as quickly as possible.

Trajectories always started at the bottom right of the room, passed through the narrow passage in between the beds and the panel (WP1), reached the opposite end of the room at the right side, turned around counterclockwise (WP2), passed again through the narrow passage (WP3), and took a right turn after the beds to approach the doorway (WP4) and complete the route. Each waypoint had to be passed in the correct direction to be marked as completed. The overall route was designed to comprise approximately the same number of right and left turns. The optimal trajectory was estimated through a discrete manual interface (i.e., computer keyboard) by computing the average route from 20 repetitions of the navigation task.

In total, this study reports on 29 (5 months), 11 (3 months) and 11 (2 months) experimental sessions (days) for participants P1, P2 and P3, respectively. Overall, participants performed 199, 53, and 65 BMI runs and, among them, 10, 5 and 6 repetitions of the driving task in the navigation phase, respectively. The navigation phase took place several days after training offset. P3 performed only a single navigation session for logistic reasons.

Customized powered wheelchair

We customized a commercial powered wheelchair (TDX SP2, Invacare®, United States) in order to allow for external, computer-based control of the motors and to integrate the sensors required for gathering information about the surrounding environment exploited by our semi-autonomous navigation system (Figure 1A). The custom-made control board was directly connected to the wheelchair’s communication bus and to the laptop through a USB serial converter (S27-USB-SERIAL, Acroname©, United States). Furthermore, we added two derivations from the wheelchair’s power bus in order to provide additional power plugs at reduced voltage (5 V and 12 V). Regarding mechanical interventions, we placed two metallic shelves on the back of the wheelchair to accommodate the EEG amplifier and the laptop running the BMI and shared-control processing. Moreover, we incorporated three movable metallic arms to hold the monitor, the RGB-D camera and the laser rangefinder. All arms could be easily displaced to facilitate seating of the user on the wheelchair. The monitor (8 inches) was placed in front of the user to allow the visualization of the BMI feedback. The RGB-D camera (Microsoft Kinect©, Microsoft, United States) and the 240° laser rangefinder (Hokuyo URG-04LX-UG01, Hokuyo, Japan) were placed in the front of the wheelchair to detect possible obstacles in its path.

Shared-control navigation system

The navigation system is based on a local potential field approach that governs the temporal evolution of the motion parameters (Schöner and Dose, 1992; Bicho and Schöner, 1997). On the one hand, translation velocity of the wheelchair is generated by the following equation:

| (Equation 1) |

where represents the maximum translation velocity (0.2 m/s), the frontal safe distance (0.2 m) and the distance of the closest obstacle.

On the other hand, angular velocity depends on the positions (distance and orientation ) of obstacles (repellers) and eventual targets (attractors) in the surroundings of the wheelchair. Angular velocity is computed by:

| (Equation 2) |

with , and the number of detected obstacles or targets in the proximity of the wheelchair. and represent the strength and the influence of each obstacle/target detected and they are computed as reported in Equations 3 and 4, respectively:

| (Equation 3) |

where represents the distance of the –obstacle or –target, the frontal safe distance and the decay parameter (0.8). The repelling or attracting nature of the generated force is defined by the sign of the parameter. A negative generates a repelling force and it is associated to the detected obstacles; on the other hand, a positive creates an attractive influence corresponding to targets.

| (Equation 4) |

where is the angular resolution ( rad), is the width of the wheelchair (0.6 m), is the lateral safe distance (0.2 m) and is the distance of the –obstacle or –target.

Distance and orientation of obstacles were acquired by the equipped sensors. 3D data from the camera and the 2D readings from the laser rangefinder were projected into a costmap centered on the wheelchair’s reference system. Then, we applied a proximity filter in order to populate a repellers vector containing the angles and the distances of the closest obstacles. Similarly, we created an attractors vector populated by the last command delivered by the BMI system. A right or left BMI command was translated in a virtual attractor 1 m far from the wheelchair and at with respect to the current motion direction.
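
The way repellers and attractors jointly steer the wheelchair can be illustrated with a generic attractor/repeller heading sketch in the spirit of the dynamical-systems approach cited above. The functional forms below (exponential distance decay for the strength, a fixed Gaussian angular range `sigma` in place of the distance-dependent influence term, and the gain values) are assumptions made for illustration and are not the study's Equations 2 to 4. Only the decay parameter (0.8), the frontal safe distance (0.2 m) and the sign convention (negative for obstacles, positive for targets) are taken from the text.

```python
import math


def strength(distance_m, gain, safe_distance_m=0.2, decay=0.8):
    """Assumed strength term: decays exponentially with distance.

    The sign of `gain` sets the nature of the force:
    negative -> repeller (obstacle), positive -> attractor (target).
    """
    return gain * math.exp(-(distance_m - safe_distance_m) / decay)


def angular_velocity(sources, sigma=0.5):
    """Sum the contributions of all repellers and attractors.

    sources: list of (distance_m, bearing_rad, gain) tuples; the bearing is
             measured in the wheelchair frame, positive to the left.
    sigma:   assumed fixed angular range of each contribution (rad).
    A positive result means turning left (counterclockwise).
    """
    omega = 0.0
    for distance_m, bearing, gain in sources:
        weight = math.exp(-bearing ** 2 / (2.0 * sigma ** 2))
        omega += strength(distance_m, gain) * bearing * weight
    return omega


if __name__ == "__main__":
    obstacle_left = (0.5, 0.3, -1.0)   # repeller slightly to the left
    bmi_left_turn = (1.0, 0.8, 1.0)    # virtual attractor; bearing is hypothetical
    print(angular_velocity([obstacle_left]))
    print(angular_velocity([bmi_left_turn]))
    print(angular_velocity([obstacle_left, bmi_left_turn]))
```

In this formulation a BMI command does not set the turning rate directly. It adds an attractor whose pull is combined with the push of nearby obstacles, which is how shared-control can soften or override an unsafe command.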

The navigation system has been implemented in C++ within the Robotic Operating System (ROS) ecosystem. We provided custom modules for the bi-directional communication between the BMI system and ROS.

Simulation results

We evaluated the role of the shared-control during navigation tasks by means of a-posteriori simulation. First, we created a virtual environment closely replicating the real experimental field. The virtual environment (including furniture) was designed in Gazebo (Gazebo 3D robotics simulator), a well-known open-source robotic simulation suite. Second, we implemented a stand-alone module in charge of continuously determining the ground-truth correct command. At each time step, we computed the angle between the current wheelchair pose and the position of the next waypoint. The resulting angle was then converted into a categorical variable. Third, we implemented a probabilistic input interface based on the command latency and BMI accuracy achieved by each participant in the previous recording session. At each time step, the probabilistic input interface had a certain probability of firing a command (correct or incorrect). The probability distribution was modeled according to the latest minimum and maximum command latency of each participant (i.e., a uniform distribution between 2.78 and 3.40 s for P1, between 4.85 and 8.70 s for P2, and between 0.69 and 2.27 s for P3). The probability that the fired command was correct (with respect to the ground-truth correct command) was based on the latest BMI accuracy of each participant (i.e., P1: 95%, P2: 66%, and P3: 74%).
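
A probabilistic input interface of this kind can be sketched in a few lines. The example below is a simplified illustration, not the simulation code used in the study: it draws the delay before each command from a uniform distribution between each participant's reported minimum and maximum latency, and makes the command correct with a probability equal to the reported accuracy. Restricting the ground truth to a fixed list of left/right commands, rather than recomputing it from the wheelchair pose at each time step, is an assumption made to keep the example self-contained.

```python
import random

# Latency bounds (s) and accuracy per participant, as reported in the text.
PARTICIPANTS = {
    "P1": {"latency_s": (2.78, 3.40), "accuracy": 0.95},
    "P2": {"latency_s": (4.85, 8.70), "accuracy": 0.66},
    "P3": {"latency_s": (0.69, 2.27), "accuracy": 0.74},
}


def simulate_commands(participant, ground_truth, rng):
    """Return a list of (time_s, command) events for one simulated run.

    ground_truth: sequence of correct commands, each "left" or "right".
    rng:          a random.Random instance, passed in for reproducibility.
    """
    profile = PARTICIPANTS[participant]
    low, high = profile["latency_s"]
    now = 0.0
    events = []
    for correct in ground_truth:
        now += rng.uniform(low, high)  # time needed to deliver a command
        if rng.random() < profile["accuracy"]:
            command = correct
        else:
            command = "left" if correct == "right" else "right"
        events.append((now, command))
    return events


if __name__ == "__main__":
    rng = random.Random(0)
    for time_s, command in simulate_commands("P2", ["left", "right", "left"], rng):
        print(f"{time_s:6.2f} s  {command}")
```

Feeding the same event stream to the navigation system with shared-control enabled and disabled isolates the contribution of the robotic assistance from that of the user's BMI skill.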

We evaluated the contribution of the robotic assistance by comparing the results of the simulated navigation runs in two modalities: with shared-control enabled and disabled. In the modality with shared-control, the temporal evolution of the linear and angular velocity was determined by the possible repellers (obstacles) or attractors (BMI commands) in the surroundings, as in the case of the actual experiments. In the modality without shared-control, the angular velocity of the wheelchair was only determined by the BMI commands, while the linear velocity still depended on the obstacles in the proximity. Although this does not represent a complete absence of assistance, we considered it fairer to prevent any collision of the device. We performed 10 repetitions per modality for each participant. Furthermore, for each participant the probabilistic input interface simulated the same command accuracy and command latency that they achieved in the last training session.

Optimal and random trajectories

We evaluated the BMI users’ navigation performance with respect to an optimal control modality computed within Gazebo, where we fully reconstructed the experimental field and exploited the same navigation algorithms used during the study. We provided commands to the navigation system (i.e., turn left or turn right) through a discrete manual interface (i.e., computer keyboard). We performed 20 repetitions of the navigation tasks and we computed the optimal trajectory as the average route (Figure 4A, solid black line).

Furthermore, we simulated a random control modality in order to verify that high-level human supervision is needed to accomplish the navigation task. We designed a control module sending a random left or right command every 1 to 5 s. We repeated the simulation 20 times. Figure S4 illustrates the resulting trajectories and highlights the fact that the navigation system alone was not able to reach all waypoints in the route.
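The random control module can be sketched in a few lines. The sketch assumes that the interval between consecutive commands is drawn uniformly between 1 and 5 s and that left and right are equally likely. The text specifies neither detail, and the function name is hypothetical.

```python
import random


def random_commands(duration_s, rng, min_gap=1.0, max_gap=5.0):
    """Return [(time_s, command), ...] with one random command every 1 to 5 s."""
    t, schedule = 0.0, []
    while True:
        t += rng.uniform(min_gap, max_gap)  # waiting time before the next command
        if t > duration_s:
            return schedule
        schedule.append((t, rng.choice(["left", "right"])))
```

For example, `random_commands(120.0, random.Random(1))` yields a command schedule for a two-minute simulated run. Repeating the call with 20 different seeds would reproduce the structure of the 20 repetitions.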

Quantification and statistical analysis

All statistical analyses were performed in MATLAB. Details of the statistical analyses are reported in the main text, in the figure captions, and in the methods. These details include the statistical tests used, the number of samples, the confidence interval, and the definitions of the center and dispersion measures. The significance of differences between sample distributions was computed with paired Student’s t-tests, and the correlation between two variables with the Pearson correlation coefficient.
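For readers who want to check the two statistics by hand, the following Python sketch computes the paired t statistic and the Pearson correlation coefficient from their definitions. The study itself used MATLAB, so this is only an illustration, and the function names are hypothetical. The p value, which is not computed here, follows from Student's t distribution with the returned degrees of freedom.

```python
import math
from statistics import mean, stdev


def paired_t(a, b):
    """Paired Student's t statistic and degrees of freedom for samples a, b."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    # Mean difference divided by its standard error (sample standard deviation).
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1


def pearson_r(x, y):
    """Pearson correlation coefficient between two equally long sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    return sxy / math.sqrt(sxx * syy)
```

Both functions expect paired observations of equal length. `paired_t` requires at least two pairs with non-identical differences, and `pearson_r` requires that neither variable be constant.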

Acknowledgments

This work was partially supported by MIUR (Italian Minister for Education) under the initiative “Departments of Excellence” (Law 232/2016) and by the Department of Information Engineering of the University of Padova with the grant TONI_BIRD2020_01. We are grateful to the physiotherapists of the Universitätsklinikum Bergmannsheil hospital (Bochum, Germany) and to participants’ caregivers and relatives for the fundamental support during the study.

Author contributions

L.T., M.A., T.S., R.O., and J.d.R.M. conceived the experiment. M.A., T.S., and R.O. recruited the participants. L.T., S.P., B.O., K.L., and J.d.R.M. implemented the experiment. L.T., T.K., J.P., B.O., M.A., T.S., R.O., and J.d.R.M. led the experiment. L.T., S.P., R.O., and J.d.R.M. implemented the analyses and analyzed the results. All authors contributed to the writing of the paper.

Declaration of interests

The authors declare no competing interests.

Published: November 18, 2022

Footnotes

Supplemental information can be found online at https://doi.org/10.1016/j.isci.2022.105418.

Data and code availability

- All the data reported in this paper will be shared by the lead contact upon reasonable request.

- This paper does not report original code. The original code will be shared by the lead contact upon reasonable request.

- Any additional information required to reanalyze the data reported in this paper is available from the lead contact upon reasonable request.

References

- Aflalo T., Kellis S., Klaes C., Lee B., Shi Y., Pejsa K., Shanfield K., Hayes-Jackson S., Aisen M., Heck C., et al. Decoding motor imagery from the posterior parietal cortex of a tetraplegic human. Science. 2015;348:906–910. doi: 10.1126/science.aaa5417.

- Ajiboye A.B., Willett F.R., Young D.R., Memberg W.D., Murphy B.A., Miller J.P., Walter B.L., Sweet J.A., Hoyen H.A., Keith M.W., et al. Restoration of reaching and grasping movements through brain-controlled muscle stimulation in a person with tetraplegia: a proof-of-concept demonstration. Lancet. 2017;389:1821–1830. doi: 10.1016/S0140-6736(17)30601-3.

- Andres F.G., Mima T., Schulman A.E., Dichgans J., Hallett M., Gerloff C. Functional coupling of human cortical sensorimotor areas during bimanual skill acquisition. Brain. 1999;122:855–870. doi: 10.1093/brain/122.5.855.

- Beraldo G., Tonin L., Millán J.d.R., Menegatti E. Shared intelligence for robot teleoperation via bmi. IEEE Trans. Hum. Mach. Syst. 2022;52:400–409.

- Biasiucci A., Leeb R., Iturrate I., Perdikis S., Al-Khodairy A., Corbet T., Schnider A., Schmidlin T., Zhang H., Bassolino M., et al. Brain-actuated functional electrical stimulation elicits lasting arm motor recovery after stroke. Nat. Commun. 2018;9:2421. doi: 10.1038/s41467-018-04673-z.

- Bicho E., Schöner G. The dynamic approach to autonomous robotics demonstrated on a low-level vehicle platform. Robot. Autonom. Syst. 1997;21:23–35.

- Birbaumer N., Ghanayim N., Hinterberger T., Iversen I., Kotchoubey B., Kübler A., Perelmouter J., Taub E., Flor H. A spelling device for the paralyzed. Nature. 1999;398:297–298. doi: 10.1038/18581.

- Birch G.E., Bozorgzadeh Z., Mason S.G. Initial on-line evaluations of the LF-ASD brain-computer interface with able-bodied and spinal-cord subjects using imagined voluntary motor potentials. IEEE Trans. Neural Syst. Rehabil. Eng. 2002;10:219–224. doi: 10.1109/TNSRE.2002.806839.

- Bouton C.E., Shaikhouni A., Annetta N.V., Bockbrader M.A., Friedenberg D.A., Nielson D.M., Sharma G., Sederberg P.B., Glenn B.C., Mysiw W.J., et al. Restoring cortical control of functional movement in a human with quadriplegia. Nature. 2016;533:247–250. doi: 10.1038/nature17435.

- Carlson T., Millán J.d.R. Brain-controlled wheelchairs: a robotic architecture. IEEE Robot. Autom. Mag. 2013;20:65–73.

- Chavarriaga R., Fried-Oken M., Kleih S., Lotte F., Scherer R. Heading for new shores! overcoming pitfalls in BCI design. Brain Comput. Interfaces. 2016;4:60–73. doi: 10.1080/2326263X.2016.1263916.

- Collinger J.L., Wodlinger B., Downey J.E., Wang W., Tyler-Kabara E.C., Weber D.J., McMorland A.J.C., Velliste M., Boninger M.L., Schwartz A.B. High-performance neuroprosthetic control by an individual with tetraplegia. Lancet. 2013;381:557–564. doi: 10.1016/S0140-6736(12)61816-9.

- Combrisson E., Jerbi K. Exceeding chance level by chance: the caveat of theoretical chance levels in brain signal classification and statistical assessment of decoding accuracy. J. Neurosci. Methods. 2015;250:126–136. doi: 10.1016/j.jneumeth.2015.01.010.

- Cruz A., Pires G., Lopes A., Carona C., Nunes U.J. A self-paced bci with a collaborative controller for highly reliable wheelchair driving: experimental tests with physically disabled individuals. IEEE Trans. Hum. Mach. Syst. 2021;51:109–119.

- Cunha J.D., Perdikis S., Halder S., Scherer R. Post-adaptation effects in a motor imagery brain-computer interface online coadaptive paradigm. IEEE Access. 2021;9:41688–41703.

- Diez P.F., Torres Müller S.M., Mut V.A., Laciar E., Avila E., Bastos-Filho T.F., Sarcinelli-Filho M. Commanding a robotic wheelchair with a high-frequency steady-state visual evoked potential based brain-computer interface. Med. Eng. Phys. 2013;35:1155–1164. doi: 10.1016/j.medengphy.2012.12.005.

- Diez P. Academic Press, Elsevier; 2018. Smart Wheelchairs and Brain-Computer Interfaces: Mobile Assistive Technologies.

- Edelman B.J., Meng J., Suma D., Zurn C., Nagarajan E., Baxter B.S., Cline C.C., He B. Noninvasive neuroimaging enhances continuous neural tracking for robotic device control. Sci. Robot. 2019;4:eaaw6844. doi: 10.1126/scirobotics.aaw6844.

- Galán F., Ferrez P.W., Oliva F., Guardia J., Millán J.d.R. Feature extraction for multi-class BCI using canonical variates analysis. 2007 IEEE International Symposium on Intelligent Signal Processing. 2007:1–6.

- Gilja V., Nuyujukian P., Chestek C.A., Cunningham J.P., Yu B.M., Fan J.M., Churchland M.M., Kaufman M.T., Kao J.C., Ryu S.I., Shenoy K.V. A high-performance neural prosthesis enabled by control algorithm design. Nat. Neurosci. 2012;15:1752–1757. doi: 10.1038/nn.3265.

- Gilja V., Pandarinath C., Blabe C.H., Nuyujukian P., Simeral J.D., Sarma A.A., Sorice B.L., Perge J.A., Jarosiewicz B., Hochberg L.R., et al. Clinical translation of a high-performance neural prosthesis. Nat. Med. 2015;21:1142–1145. doi: 10.1038/nm.3953.

- Hochberg L.R., Bacher D., Jarosiewicz B., Masse N.Y., Simeral J.D., Vogel J., Haddadin S., Liu J., Cash S.S., van der Smagt P., Donoghue J.P. Reach and grasp by people with tetraplegia using a neurally controlled robotic arm. Nature. 2012;485:372–375. doi: 10.1038/nature11076.

- Iturrate I., Antelis J.M., Kübler A., Minguez J. A noninvasive brain-actuated wheelchair based on a P300 neurophysiological protocol and automated navigation. IEEE Trans. Robot. 2009;25:614–627.

- Jarosiewicz B., Chase S.M., Fraser G.W., Velliste M., Kass R.E., Schwartz A.B. Functional network reorganization during learning in a brain-computer interface paradigm. Proc. Natl. Acad. Sci. USA. 2008;105:19486–19491. doi: 10.1073/pnas.0808113105.

- Jarosiewicz B., Sarma A.A., Bacher D., Masse N.Y., Simeral J.D., Sorice B., Oakley E.M., Blabe C., Pandarinath C., Gilja V., et al. Virtual typing by people with tetraplegia using a self-calibrating intracortical brain-computer interface. Sci. Transl. Med. 2015;7:313ra179. doi: 10.1126/scitranslmed.aac7328.

- Meng J., Zhang S., Bekyo A., Olsoe J., Baxter B., He B. Noninvasive electroencephalogram based control of a robotic arm for reach and grasp tasks. Sci. Rep. 2016;6:38565. doi: 10.1038/srep38565.

- Kaufmann T., Herweg A., Kübler A. Toward brain-computer interface based wheelchair control utilizing tactually-evoked event-related potentials. J. NeuroEng. Rehabil. 2014;11:7. doi: 10.1186/1743-0003-11-7.

- Kim S.P., Simeral J.D., Hochberg L.R., Donoghue J.P., Friehs G.M., Black M.J. Point-and-click cursor control with an intracortical neural interface system by humans with tetraplegia. IEEE Trans. Neural Syst. Rehabil. Eng. 2011;19:193–203. doi: 10.1109/TNSRE.2011.2107750.

- Korzeniewska A., Mańczak M., Kamiński M., Blinowska K.J., Kasicki S. Determination of information flow direction among brain structures by a modified directed transfer function (dDTF) method. J. Neurosci. Methods. 2003;125:195–207. doi: 10.1016/s0165-0270(03)00052-9.

- Kübler A., Nijboer F., Mellinger J., Vaughan T.M., Pawelzik H., Schalk G., McFarland D.J., Birbaumer N., Wolpaw J.R. Patients with ALS can use sensorimotor rhythms to operate a brain-computer interface. Neurology. 2005;64:1775–1777. doi: 10.1212/01.WNL.0000158616.43002.6D.

- Leaman J., La H.M. A comprehensive review of smart wheelchairs: past, present, and future. IEEE Trans. Hum. Mach. Syst. 2017;47:486–499.

- Leeb R., Friedman D., Müller-Putz G.R., Scherer R., Slater M., Pfurtscheller G. Self-paced asynchronous BCI control of a wheelchair in virtual environments: a case study with a tetraplegic. Comput. Intell. Neurosci. 2007;2007:1–8. doi: 10.1155/2007/79642.

- Leeb R., Perdikis S., Tonin L., Biasiucci A., Tavella M., Creatura M., Molina A., Al-Khodairy A., Carlson T., Millán J.d.R. Transferring brain-computer interfaces beyond the laboratory: successful application control for motor-disabled users. Artif. Intell. Med. 2013;59:121–132. doi: 10.1016/j.artmed.2013.08.004.

- Leeb R., Tonin L., Rohm M., Desideri L., Carlson T., Millán J.d.R. Towards independence: a BCI telepresence robot for people with severe motor disabilities. Proc. IEEE. 2015;103:969–982.

- Lopes A.C., Pires G., Nunes U. Assisted navigation for a brain-actuated intelligent wheelchair. Robot. Autonom. Syst. 2013;61:245–258.

- McFarland D.J., Sarnacki W.A., Wolpaw J.R. Electroencephalographic (EEG) control of three-dimensional movement. J. Neural. Eng. 2010;7. doi: 10.1088/1741-2560/7/3/036007.

- Millán J.d.R., Renkens F., Mouriño J., Gerstner W. Noninvasive brain-actuated control of a mobile robot by human EEG. IEEE Trans. Biomed. Eng. 2004;51:1026–1033. doi: 10.1109/TBME.2004.827086.

- Millán J.d.R., Galán F., Vanhooydonck D., Lew E., Philips J., Nuttin M. Asynchronous non-invasive brain-actuated control of an intelligent wheelchair. 2009 Annual International Conference of the IEEE Engineering in Medicine and Biology Society. 2009:3361–3364. doi: 10.1109/IEMBS.2009.5332828.

- Orsborn A.L., Moorman H.G., Overduin S.A., Shanechi M.M., Dimitrov D.F., Carmena J.M. Closed-loop decoder adaptation shapes neural plasticity for skillful neuroprosthetic control. Neuron. 2014;82:1380–1393. doi: 10.1016/j.neuron.2014.04.048.

- Perdikis S., Leeb R., Williamson J., Ramsay A., Tavella M., Desideri L., Hoogerwerf E.J., Al-Khodairy A., Murray-Smith R., Millán J.d.R. Clinical evaluation of BrainTree, a motor imagery hybrid BCI speller. J. Neural. Eng. 2014;11. doi: 10.1088/1741-2560/11/3/036003.

- Perdikis S., Millán J.d.R. Brain-machine interfaces: a tale of two learners. IEEE Syst. Man Cybern. Mag. 2020;6:12–19.

- Perdikis S., Tonin L., Saeedi S., Schneider C., Millán J.d.R. The Cybathlon BCI race: successful longitudinal mutual learning with two tetraplegic users. PLoS Biol. 2018;16. doi: 10.1371/journal.pbio.2003787.

- Pfurtscheller G., Lopes da Silva F.H. Event-related EEG/MEG synchronization and desynchronization: basic principles. Clin. Neurophysiol. 1999;110:1842–1857. doi: 10.1016/s1388-2457(99)00141-8.

- Pfurtscheller G., Müller G.R., Pfurtscheller J., Gerner H.J., Rupp R. Thought–control of functional electrical stimulation to restore hand grasp in a patient with tetraplegia. Neurosci. Lett. 2003;351:33–36. doi: 10.1016/s0304-3940(03)00947-9.