Summary

Increasing demand for surgery and anaesthesia has created an imperative to manage anaesthetic workforce and caseload. This may include changes to the distribution of cases amongst anaesthetists of different grades, including non‐physician anaesthetists. To achieve this safely, an assessment of case complexity is essential. We present a novel system for scoring complexity of cases in anaesthesia, the Oxford Anaesthetic Complexity score. This integrates patient, anaesthetic, surgical and systems factors, and is different from assessments of risk. We adopted an end‐user development approach to the design of the score, and validated it using a dataset of anaesthetic cases. Across 688 cases, the median (IQR [range]) complexity score was 19 (17–22 [15–33]). Cases requiring a consultant anaesthetist had a significantly higher median (IQR [range]) score than those requiring a senior trainee at 22 (20–25 [15–33]) vs. 19 (17–21 [15–28]), p < 0.001. Cases undertaken in a tertiary acute hospital had a significantly higher score than those in a district general hospital, the median (IQR [range]) scores being 20 (17–22 [15–33]) vs. 17 (16–19 [15–28]), p < 0.001. Receiver‐operating characteristic analysis showed good prediction of complexity sufficient to require a consultant anaesthetist, with area under the curve of 0.84. Any rise in complexity above baseline (score > 15) was strongly predictive of a case too complex for a junior trainee (positive predictive value 0.93). The Oxford Anaesthetic Complexity score can be used to match cases to different grades of anaesthetist, and can help in defining cases appropriate for the expanding non‐physician anaesthetist workforce.

Keywords: complexity, risk, scoring, workforce

Introduction

Demand for surgical procedures has increased significantly in the UK and internationally over recent years [1]. This has created a parallel demand for provision of anaesthesia and peri‐operative care, on a background of an existing shortfall in the anaesthetic workforce [2]. A number of bodies have suggested increasing numbers of non‐physician anaesthetists to solve this problem [2, 3, 4]. To function effectively as part of the anaesthesia multidisciplinary team, and ensure patient safety, these practitioners will need to work on cases that are suitably complex (or non‐complex) for their scope of practice and level of training, as is true for trainee anaesthetists [5]. No definition currently exists for case complexity in anaesthetics, and hence there is no objective method for the allocation of cases to different grades of anaesthetist.

Complexity is usually described in terms of patients with multiple comorbidities and associated treatments, and the interaction between these elements [6]. This concept of the ‘medically complex patient’ is intuitive to many physicians, but does not account for non‐patient factors, which may be environmental, social or cultural [7]. Similarly, a surgeon might understand complexity in terms of technical features (procedural difficulty) without including the medical conditions and therapies described above [8]. These concepts are also different from risk, which is a measure of the likelihood of a given (unwanted) outcome [9].

A description of complexity from the viewpoint of the anaesthetist, the peri‐operative physician at the interface of medicine and surgery, must therefore include both patient and non‐patient factors. We aimed to develop an objective scoring system for complexity in anaesthesia, and validate this score against a large case dataset.

Methods

This study used information already routinely collected and anonymised at source. As such, no formal review by an ethics committee was required, and the project proceeded under the regulations governing local audit and quality improvement.

Consensus discussion amongst the authors, with reference to existing risk scoring systems and clinical experience, provided a set of core variables to form the basis of the Oxford Anaesthetic Complexity score. These were then grouped into subsections: age; BMI; patient; anaesthetic/technique; systems/environment; and surgical. Twenty‐two consultant anaesthetists were surveyed and asked, for each variable, ‘How much do the following factors influence the complexity of a case for the anaesthetist involved?’, with answers given on a 5‐point Likert scale from 1 (does not increase complexity) to 5 (strongly increases complexity). A free text section was offered, asking ‘Are there any other factors you think increase complexity?’ Surveys were sent to anaesthetists at the tertiary referral centres of Oxford University Hospitals and at a separate district general hospital (Royal Berkshire Hospital) that was not used in the case data collection exercise.

The median survey result for each variable was used to define a score weight, from 1 to 5, and any opposite statements for binary variables were given a score of 1 (i.e. the opposite of ‘predicted difficult airway’ is ‘no predicted difficult airway’, which is assumed not to increase complexity, and therefore scored 1). Incomplete surveys were excluded from results.
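The weighting rule above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the element names and ratings are hypothetical, and `statistics.median_low` is used so that each weight is always one of the submitted ratings (with an odd number of complete surveys, as with the 19 analysed here, it equals the ordinary median).

```python
from statistics import median_low

# Hypothetical responses: one dict per respondent, mapping each element to a
# 1-5 Likert rating. None marks an unanswered item (an incomplete survey).
responses = [
    {"predicted difficult airway": 5, "difficult intravenous access": 3},
    {"predicted difficult airway": 4, "difficult intravenous access": 3},
    {"predicted difficult airway": 5, "difficult intravenous access": None},
    {"predicted difficult airway": 4, "difficult intravenous access": 2},
]


def derive_weights(responses):
    """Map each element to the median rating across complete surveys only."""
    complete = [r for r in responses if None not in r.values()]
    elements = complete[0].keys()
    # median_low returns an actual rating; for an odd count it equals the median.
    return {e: median_low([r[e] for r in complete]) for e in elements}


def weight_table(weights):
    """Weight for each binary element when present, and 1 for its opposite statement."""
    table = {}
    for element, weight in weights.items():
        table[(element, True)] = weight  # factor present
        table[(element, False)] = 1      # e.g. 'no predicted difficult airway'
    return table


weights = derive_weights(responses)
print(weights)  # {'predicted difficult airway': 4, 'difficult intravenous access': 3}
```

The incomplete third survey is dropped before the medians are taken, mirroring the exclusion of incomplete surveys described above.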

Themes highlighted in free text answers were assessed for frequency of repeated mentions amongst respondents, and the most common were included. Score weight for additional variables was decided by authors' agreement.
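Counting how often each free‐text theme recurs across respondents is a simple tally. The sketch below assumes each answer has already been coded into a set of themes by a reader (a step the paper does not describe in detail); the themes shown are hypothetical, and each respondent contributes at most once per theme.

```python
from collections import Counter

# Hypothetical coded free-text answers: the set of themes found in each
# respondent's answer (an empty set means no additional factors were offered).
coded_answers = [
    {"communication difficulty"},
    {"communication difficulty", "frailty"},
    set(),
    {"unfamiliar equipment"},
]


def theme_frequencies(coded_answers):
    """Return {theme: proportion of respondents mentioning it}, most common first."""
    counts = Counter(theme for themes in coded_answers for theme in themes)
    n = len(coded_answers)
    return {theme: count / n for theme, count in counts.most_common()}


print(theme_frequencies(coded_answers))
```

Using the number of respondents as the denominator gives the proportion of respondents raising each theme, which is how a figure such as "21% of responses" would be read.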

Having derived our working measure of complexity, we tested it on a local dataset. A snapshot survey of anaesthetic case complexity (separate from the score element survey described above) was undertaken at Oxford University Hospitals NHS Trust, covering the John Radcliffe Hospital (tertiary acute centre), the Churchill Hospital (transplant/cancer centre), the Nuffield Orthopaedic Centre and the Horton General Hospital (district general hospital), lasting 7 days in September/October 2021. Anaesthetists were asked to describe the objective features of the case they were currently anaesthetising (e.g. age, BMI, anticipated difficult airway), to provide a free text description of any additional elements of anaesthetic complexity, and then to give an opinion on the grade of anaesthetist required to care for that particular patient (Core Trainee 1–2, Specialty Trainee 3–4, Specialty Trainee 5–7 or consultant). Non‐physician anaesthetists are not currently employed in the study centres, so were not offered as an option. Anaesthetists had no prior knowledge of the Oxford Anaesthetic Complexity score and were unaware of its elements and weightings, but were given a brief explanation of the data collection task by an author at the start of each operating theatre session. Anonymised theatre records were used to complete missing data points. Cases were recorded only during daytime shift hours, i.e. 07:30–20:00. The authors then assigned an Oxford Anaesthetic Complexity score to each case, with variables weighted accordingly, and matched this score to the grade of anaesthetist suggested for the case.

Cases were grouped by hospital, surgical specialty and grade of anaesthetist thought appropriate for that case, and differences in Oxford Anaesthetic Complexity score were assessed between groups using the Mann–Whitney U‐test. The receiver operating characteristic (ROC) curve was calculated for the Oxford Anaesthetic Complexity score when predicting cases requiring a consultant anaesthetist. Score cut‐offs were assessed using Youden's J‐statistic. The positive and negative predictive values of Oxford Anaesthetic Complexity scores for identification of cases too complex for Core Trainee anaesthetists were calculated. Statistical analysis was performed using Real Statistics Resource Pack software (Release 7.6; www.real-statistics.com).
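The two group comparisons in this paragraph are closely linked: the area under the ROC curve equals the Mann–Whitney U statistic divided by the product of the two group sizes. The pure‐Python sketch below illustrates both under stated assumptions. It uses the normal approximation with a tie correction and no continuity correction, so its p values may differ slightly from those of statistical packages (possibly including the Real Statistics software used here); the example scores are hypothetical.

```python
import math
from collections import Counter


def mann_whitney_u(x, y):
    """U for sample x versus y: pairs with x_i > y_j count 1, ties count 0.5."""
    u = 0.0
    for xi in x:
        for yj in y:
            if xi > yj:
                u += 1.0
            elif xi == yj:
                u += 0.5
    return u


def mann_whitney_test(x, y):
    """Return (U, two-sided p) from the normal approximation with a tie correction."""
    n1, n2 = len(x), len(y)
    n = n1 + n2
    u = mann_whitney_u(x, y)
    # Tie correction: sum of (t^3 - t) over groups of tied values in the pooled sample.
    ties = sum(t ** 3 - t for t in Counter(list(x) + list(y)).values())
    var = n1 * n2 / 12 * ((n + 1) - ties / (n * (n - 1)))
    if var == 0:  # every value tied: no evidence of a difference
        return u, 1.0
    z = (u - n1 * n2 / 2) / math.sqrt(var)
    return u, math.erfc(abs(z) / math.sqrt(2))


def auc(positives, negatives):
    """ROC area as the probability that a positive case outscores a negative one."""
    return mann_whitney_u(positives, negatives) / (len(positives) * len(negatives))


# Hypothetical scores for 'consultant required' and 'trainee appropriate' cases.
consultant = [19, 22, 25, 27]
trainee = [15, 16, 17, 19, 20]
print(mann_whitney_test(consultant, trainee))
print(auc(consultant, trainee))  # 0.925
```

Computing the AUC through U makes explicit that the ROC area is a rank‐based measure: it depends only on how often consultant‐level cases outscore trainee‐level ones, not on the absolute score values.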

Results

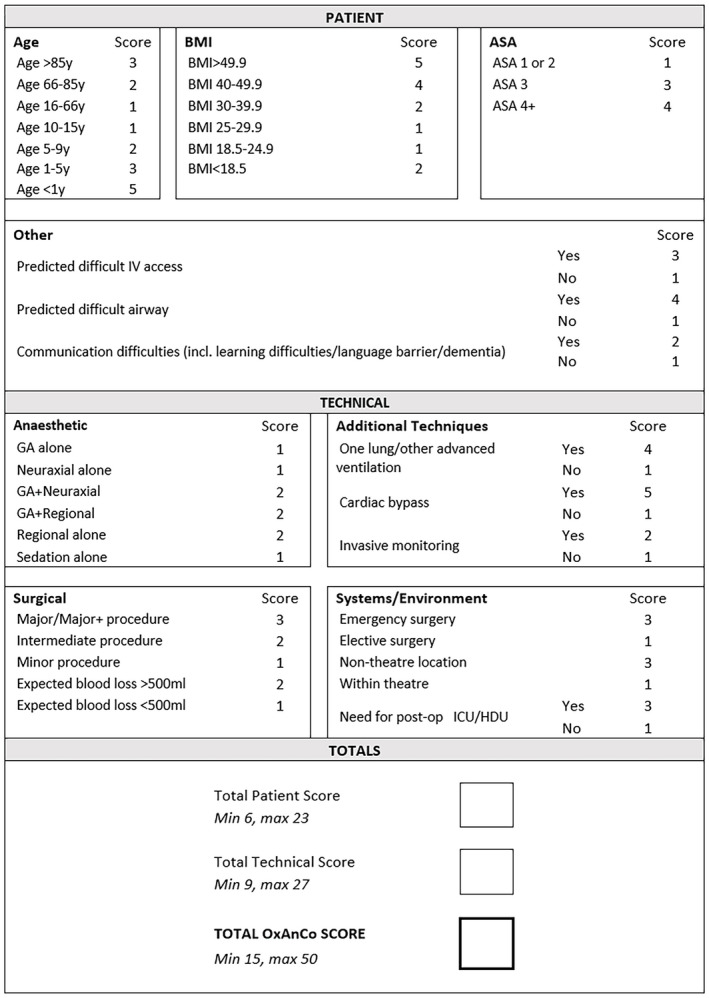

A total of 19 of 22 surveys were completed fully (86.4%). Of these, 10 were from anaesthetists working at tertiary referral hospitals and nine from those working at the separate district general hospital. Median scores for the influence of each element on complexity provided the weighting for the final Oxford Anaesthetic Complexity score (Fig. 1).

Figure 1.

The Oxford Anaesthetic Complexity score. Element weights taken from median clinician survey results. [Colour figure can be viewed at wileyonlinelibrary.com]

The most common free text answer, appearing in 21% of responses, was patient communication difficulty, including learning difficulties, dementia, delirium or language barrier. This was therefore included in the final score, with a weighting of 2 (decided by consensus amongst the authors).

The maximum (i.e. most complex) Oxford Anaesthetic Complexity score is 50, which describes a hypothetical patient aged < 1 y with difficult intravenous access; ASA physical status 4; a difficult airway; communication difficulty (perhaps with parents); and BMI > 49.9, having general and regional anaesthesia, one‐lung ventilation, cardiopulmonary bypass and invasive monitoring for emergency surgery in a non‐theatre location, for a major procedure with blood loss > 500 ml, and requiring postoperative critical care. The minimum score is 15, which describes a patient aged 10–66 y with no predicted difficult intravenous access or difficult airway, no communication problems and a BMI of 18.5–29.9, having general anaesthesia alone for elective minor surgery in theatre with blood loss < 500 ml, with no invasive monitoring, cardiopulmonary bypass or advanced ventilation, and no requirement for postoperative critical care.
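Because each element's weight is at least 1 (see Methods), the minimum score of 15 corresponds to each of the 15 elements named in the maximum‐score description sitting at its baseline weight. The sketch below expresses the score as a simple sum under that reading. The element labels are paraphrased from the text, and the actual category weights are those in Fig. 1, which are not reproduced here; apart from the communication difficulty weight of 2 stated above, any weight passed in is hypothetical.

```python
# Element labels paraphrased from the maximum-score description (15 elements).
ELEMENTS = (
    "age", "intravenous access", "ASA physical status", "airway",
    "communication", "BMI", "anaesthetic technique", "one-lung ventilation",
    "cardiopulmonary bypass", "invasive monitoring", "urgency", "location",
    "surgical grade", "blood loss", "postoperative critical care",
)
BASELINE_WEIGHT = 1  # weight of the least complex category of each element


def complexity_score(weights):
    """Sum element weights; elements not supplied take the baseline weight of 1."""
    unknown = set(weights) - set(ELEMENTS)
    if unknown:
        raise ValueError(f"unknown elements: {sorted(unknown)}")
    for element, weight in weights.items():
        if not 1 <= weight <= 5:  # weights come from a 1-5 Likert median
            raise ValueError(f"weight for {element!r} outside 1-5: {weight}")
    return sum(weights.get(element, BASELINE_WEIGHT) for element in ELEMENTS)


print(complexity_score({}))                    # 15: the baseline case
print(complexity_score({"communication": 2}))  # 16: communication difficulty added
```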

Over a 7‐day period, 688 anaesthetic cases were captured, managed by 167 anaesthetists. Of these, 400 (58.1%) took place in the tertiary acute hospital, 107 (15.6%) in the transplant/cancer centre, 95 (13.8%) in the orthopaedic centre and 75 (10.9%) in the district general hospital. Cases deemed sufficiently complex to require a consultant anaesthetist were the most frequent (226, 32.9%), whereas only 55 (8.0%) were judged appropriate for a Core Trainee to anaesthetise (Table 1). The most common operating specialties were orthopaedics (145, 21.1%) and general surgery (86, 12.5%).

Table 1.

Number of cases scored for complexity. Values are number (proportion).

| Group | Cases |
|---|---|
| Total | 688 (100%) |
| Location | |
| Tertiary referral centres total | 613 (89%) |
| Tertiary acute hospital | 400 (58%) |
| Transplant/cancer centre | 107 (16%) |
| Orthopaedic centre | 95 (14%) |
| Psychiatric centre | 4 (1%) |
| Overspill tertiary (independent hospital) | 7 (1%) |
| District general hospital | 75 (11%) |
| Grade of anaesthetist required | |
| Consultant | 226 (33%) |
| Specialty Trainee 5–7 | 212 (31%) |
| Specialty Trainee 3–4 | 195 (28%) |
| Core Trainee 1–2 | 55 (8%) |
| Specialty | |
| Cardiac surgery | 14 (2%) |
| Cardiology | 21 (3%) |
| Dental | 5 (1%) |
| ENT | 45 (7%) |
| Gastroenterology | 11 (2%) |
| General surgery | 86 (13%) |
| Gynaecology | 55 (8%) |
| Maxillofacial | 23 (3%) |
| Neurosurgery | 39 (6%) |
| Obstetrics | 47 (7%) |
| Ophthalmology | 18 (3%) |
| Orthopaedics/trauma | 145 (21%) |
| Other | 39 (6%) |
| Plastics | 37 (5%) |
| Psychiatry | 4 (1%) |
| Radiology | 16 (2%) |
| Thoracic | 5 (1%) |
| Urology | 64 (9%) |
| Vascular | 14 (2%) |

Other: cases missing data on operative specialty.

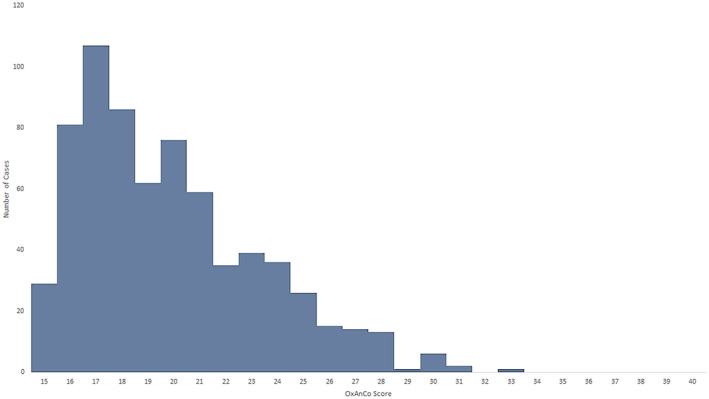

The median (IQR [range]) complexity score across all cases was 19 (17–22 [15–33]) (Fig. 2). The median (IQR [range]) score of cases in the district general hospital was 17 (16–19 [15–28]) vs. 20 (17–22 [15–33]) in the tertiary acute hospital, p < 0.001; 19 (17–22 [15–30]) in the transplant/cancer centre, p < 0.001; and 19 (17–22 [15–30]) in the orthopaedic centre, p < 0.001.

Figure 2.

Distribution of Oxford Anaesthetic Complexity scores across all cases reviewed. [Colour figure can be viewed at wileyonlinelibrary.com]

Cases deemed sufficiently complex to require a consultant anaesthetist had a median (IQR [range]) complexity score of 22 (20–25 [15–33]). Cases judged appropriate for a Specialty Trainee in years 5–7 were less complex, at 19 (17–21 [15–28]), p < 0.001. Those judged appropriate for a Specialty Trainee in years 3–4 were less complex again, with a median (IQR [range]) score of 18 (16–19 [15–27]), p < 0.001, and those judged appropriate for Core Trainees in years 1 and 2 were the least complex, with a median (IQR [range]) score of 17 (16–18 [15–21]), p < 0.01 (Table 2 and online Supporting Information, Fig. S1).
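Each group summary in this section follows the pattern median (IQR [range]). The helper below produces that string. The paper does not state how quartiles were computed; this sketch assumes linear interpolation between order statistics (Python's `method="inclusive"`, equivalent to Excel's QUARTILE.INC), which is consistent with quarter‐point values such as 23.75 in Table 2 but is not confirmed by the source. The example scores are hypothetical.

```python
from statistics import median, quantiles


def summarise(scores):
    """Format scores as 'median (Q1–Q3 [min–max])' using inclusive quartiles."""
    q1, _, q3 = quantiles(scores, n=4, method="inclusive")
    values = [median(scores), q1, q3, min(scores), max(scores)]
    med, lo, hi, smallest, largest = (f"{v:g}" for v in values)
    return f"{med} ({lo}–{hi} [{smallest}–{largest}])"


print(summarise([15, 16, 17, 19, 22]))  # 17 (16–19 [15–22])
print(summarise([15, 17, 18, 24]))      # 17.5 (16.5–19.5 [15–24])
```

With small groups the choice of quartile method visibly changes the IQR, so stating it alongside the summaries would make them reproducible.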

Table 2.

Oxford Anaesthetic Complexity scores by group. Values are median (IQR [range]).

| Group | Median complexity score | p value |
|---|---|---|
| Total | 19 (17–22 [15–33]) | |
| Location | | |
| Tertiary referral centres total | 20 (17–22 [15–33]) | |
| Tertiary acute hospital | 20 (17–22 [15–33]) | <0.001* |
| Transplant/cancer centre | 19 (17–22 [15–30]) | <0.001* |
| Orthopaedic centre | 19 (17–21.5 [15–30]) | <0.001* |
| Psychiatric centre | 21 (19–23.75 [19–26]) | |
| Overspill tertiary theatres | 17 (17–19.5 [16–24]) | |
| District general hospital | 17 (16–19.5 [15–28]) | |
| Grade of anaesthetist required | | |
| Consultant | 22 (20–25 [15–33]) | |
| Specialty Trainee 5–7 | 19 (17–21 [15–28]) | <0.001† |
| Specialty Trainee 3–4 | 18 (16–19 [15–27]) | <0.001† |
| Core Trainee 1–2 | 17 (16–18 [15–21]) | 0.0018† |

*p values for comparison to scores at district general hospital. †p values for comparison to scores for the grade above, i.e. Specialty Trainee 5–7 vs. Consultant, Specialty Trainee 3–4 vs. Specialty Trainee 5–7, Core Trainee 1–2 vs. Specialty Trainee 3–4.

The specialties with the most complex anaesthetic cases were cardiac surgery; cardiology; vascular surgery; radiology; and thoracic surgery, with median (IQR [range]) complexity scores of 27 (27–28 [26–31]), 23 (22–24 [18–30]), 23 (21–23 [17–27]), 22 (21–25 [18–26]) and 22 (21–22 [20–24]), respectively. The specialties with the lowest anaesthetic complexity score were dental; plastic surgery; and gastroenterology, with median (IQR [range]) scores of 17 (17–18 [15–19]), 17 (16–20 [15–25]) and 16 (15–16.5 [15–21]), respectively (see online Supporting Information, Table S1). Thirty‐nine cases (5.7%) had specialty information missing and were excluded from the analysis.

The receiver operating characteristic curve showed good performance of the complexity score in predicting cases requiring a consultant anaesthetist (area under the curve 0.84) (see online Supporting Information, Fig. S2). Youden's J was highest at a complexity score of 19, with a sensitivity of 0.77 and a specificity of 0.68. A score of > 15 had a positive predictive value of 0.93 and a negative predictive value of 0.28 for identifying a case too complex for a Core Trainee anaesthetist.
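Youden's J is sensitivity + specificity − 1, evaluated at each candidate cut‐off, with the cut‐off that maximises J selected. A minimal sketch, assuming the rule "score ≥ cut‐off predicts a consultant‐level case" (the paper does not say whether its cut‐off of 19 is inclusive) and using hypothetical data:

```python
def youden_cutoff(scores, needs_consultant):
    """Return (cutoff, J, sensitivity, specificity) maximising Youden's J.

    A case is predicted positive when its score is >= the cutoff.
    """
    positives = [s for s, y in zip(scores, needs_consultant) if y]
    negatives = [s for s, y in zip(scores, needs_consultant) if not y]
    best = None
    for cutoff in sorted(set(scores)):
        sensitivity = sum(s >= cutoff for s in positives) / len(positives)
        specificity = sum(s < cutoff for s in negatives) / len(negatives)
        j = sensitivity + specificity - 1
        if best is None or j > best[1]:
            best = (cutoff, j, sensitivity, specificity)
    return best


# Hypothetical cases: complexity score and whether a consultant was judged necessary.
scores = [15, 16, 17, 19, 20, 22, 25]
needs_consultant = [False, False, False, True, False, True, True]
print(youden_cutoff(scores, needs_consultant))  # cutoff 19, J = 0.75
```

Only observed scores are tried as cut‐offs, since the sensitivity and specificity of an integer score can change only at those values.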

Discussion

Validation of our new complexity score against a large dataset of real‐world cases showed excellent discrimination between cases based on the grade of anaesthetist required to manage each one.

Ours is the first study to describe an objective method of assessing the complexity of a case in anaesthesia. Surgical and anaesthetic risk has been described using scores for many years [10, 11, 12, 13], but our complexity score includes important additional factors that these miss, and measures complexity, which is a separate concept from risk. Some elements of our Oxford Anaesthetic Complexity score are themselves risk‐assessment tools (e.g. ASA physical status), are descriptions of whole‐patient risk (e.g. the need for postoperative critical care) or have an evidence‐based link to risk (e.g. age [14], BMI [15]). We also include an assessment of surgical complexity in the form of National Institute for Health and Care Excellence grades of surgery [16]. Our integration of these, and inclusion of other patient, anaesthetic, surgical and systems factors is novel. This study does not aim to correlate patient outcome with complexity, but this is an obvious future direction, which will allow computation of system performance, as has been described in congenital heart surgery [17].

Task and patient complexity themselves have been investigated and modelled previously for patients with infectious disease [18], for the general internal medicine patient population [19] and for patients with HIV [20]. Our approach of taking multiple dimensions of complexity together is similar to these studies, but is the first to focus on anaesthesia. Comparison of an objective score to a subjective expert‐defined outcome is a method used previously in asthma diagnosis [21], although that study used logistic regression to define questionnaire element weights. Our survey‐based weighting is analogous to ‘theoretical’ or ‘judgement‐derived’ weights based on researcher or expert opinion [22], and is a companion to panel rating systems for score content validation [23].

The strength of this study lies in the clinician‐driven design of the score. Elements are included and weighted based on feedback from practising anaesthetists, and we combine previously described methods of involving clinician experts in both score building and testing [21, 22, 23]. This means that end‐users of the score have directly influenced its creation, a process shown to be important in emergency medicine audit and measurement projects [24] and mental health interventions [25]. The small range of complexity scores seen, with a skew towards lower complexity, representing the reality of clinical practice [26, 27], is a likely product of this end‐user design. In addition, the score has been validated against a large real‐world dataset of anaesthetic cases, covering multiple subspecialties, hospital and operating theatre environments. Tracking case complexity using datasets like this will allow institutions to model expected complexity for a given setting (e.g. for a particular surgical list, or within or outside daytime working hours). If a case is then more complex than the expected complexity for that list, changes can be made (e.g. to the grade of anaesthetist present). Similarly, lists with reliably low‐complexity cases may be staffed by more junior anaesthetists (including non‐physician anaesthetists). If scores are computed pre‐operatively, including at time of outpatient pre‐assessment, then staffing can be adjusted well in advance, improving efficiency and safety.
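One way to operationalise "more complex than the expected complexity for that list" is to compare a new case against the distribution of past scores on the same list. The rule below (flag a case scoring above the list's upper quartile) is a hypothetical illustration, not a threshold proposed or validated in this study, and the historical scores are also hypothetical.

```python
from statistics import quantiles


def exceeds_expected(new_score, list_history):
    """Hypothetical rule: flag a case scoring above the list's upper quartile.

    list_history holds past complexity scores recorded on the same list.
    """
    _, _, q3 = quantiles(list_history, n=4, method="inclusive")
    return new_score > q3


# Hypothetical scores from previous sessions of one elective list.
history = [15, 16, 16, 17, 17, 18, 19]
print(exceeds_expected(22, history))  # True: above the upper quartile of 17.5
print(exceeds_expected(17, history))  # False
```

A flagged case would then prompt a review of staffing for that session; where to set the threshold would itself need local evaluation.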

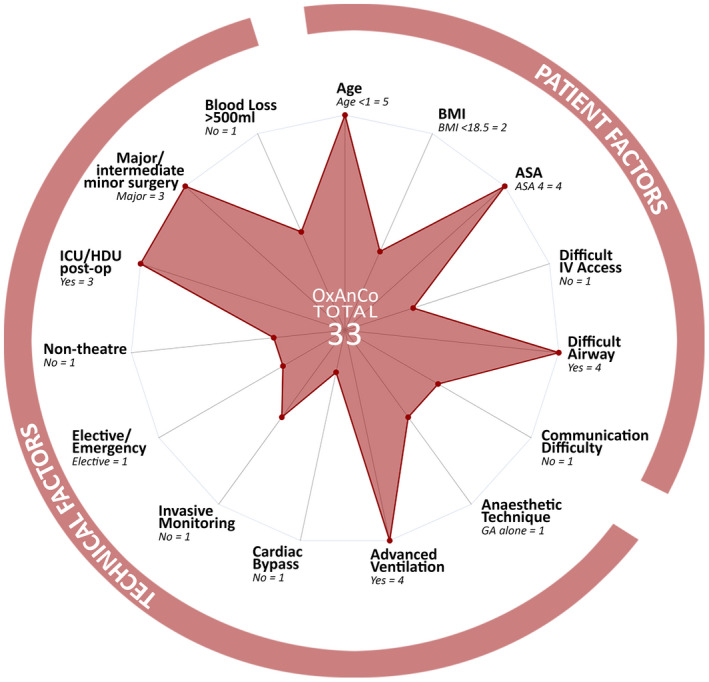

Another strength of our score is how easily it translates to graphical form (Fig. 3); improving visualisation of data through graphs has been shown to improve judgement of hypertension control [28]. Presenting the complexity score visually both provides a rapid overview of total complexity, and the ability to identify if a single element is driving a higher score.

Figure 3.

Oxford Anaesthetic Complexity score presented as a radar chart for the most complex case in our dataset, scoring 33. [Colour figure can be viewed at wileyonlinelibrary.com]
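The per‐element view that a radar chart gives can also be expressed numerically by ranking how far each element sits above its baseline weight of 1, which identifies whether a single element is driving a higher score. The sketch below is illustrative only: apart from the communication difficulty weight of 2 given in the Results, the element weights are hypothetical rather than taken from Fig. 1 or Fig. 3.

```python
def score_drivers(weights, baseline=1):
    """List elements scoring above baseline, largest excess first."""
    excess = [(element, w - baseline) for element, w in weights.items() if w > baseline]
    return sorted(excess, key=lambda item: item[1], reverse=True)


# Hypothetical element weights for a single case.
case = {"airway": 4, "communication": 2, "BMI": 1, "urgency": 3}
print(score_drivers(case))  # [('airway', 3), ('urgency', 2), ('communication', 1)]
```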

We acknowledge that a scoring system may not capture all factors influencing case complexity. We mitigated this limitation by allowing free‐text responses to surveys, such that clinicians could offer additional elements to add to the score, and a future survey with a larger sample size could allow yet more elements to be included. We did not include the opportunity for modifying scores, either up or down, based on clinician opinion of complexity, although this appears in one widely‐used scoring system for surgical risk [12].

The strong correlation of our Oxford Anaesthetic Complexity score with grade of anaesthetist required could be related to the overlap between groups of anaesthetists involved in both the score elements survey and the case data collection. However, only nine of the surveyed group were from the centres used in the data collection exercise, which was completed by a total of 167 clinicians, so any such influence is likely to be small. Anaesthetists providing case data and opinion on required grade were also unaware of the components and weighting of the final complexity score. The correlation may instead be a feature of a pre‐existing ‘organic’ match between case complexity and grade of anaesthetist, born of subjective institutional experience. However, since no other case complexity score for anaesthesia yet exists, our scoring system retains its value in this setting: to measure and define this matching objectively, and to allow meaningful research and audit over time.

We note that there is no reference standard for assessment of anaesthetic complexity against which our new score could be compared. We used clinician opinion of complexity, in terms of training grade of anaesthetist required for a case, as the comparator. The UK training system sets out specific competencies for junior anaesthetists in terms of their ability to deal with complex patients [29], and thus adds a level of objectivity to this gestalt assessment of complexity. Notably, this system currently excludes non‐physician anaesthetists, but scope‐of‐practice and grade‐equivalency assessments are ongoing for this group [5]. Importantly, our complexity score showed excellent discrimination between groups when applied in this way, and predicted need for consultant presence well. Any rise in scores above the minimum (>15) was strongly predictive of a case too complex for junior anaesthetists (Core Trainee 1–2), although low scores did not reliably predict a case appropriate for this group (i.e. a more senior anaesthetist could still be required). We did not ask respondents why they felt a particular case required a consultant anaesthetist, so other concerns or individual biases may have led to results where a low scoring case was rated as consultant only.
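The asymmetry described here (a high positive predictive value but a low negative predictive value for the rule "score > 15") follows directly from the 2 × 2 table of predictions against clinician judgement. The sketch below computes both values; the data are hypothetical and chosen only to show how a low NPV arises when many baseline‐scoring cases are still judged to need a more senior anaesthetist.

```python
def predictive_values(scores, too_complex_for_ct, threshold=15):
    """PPV and NPV of 'score > threshold' for cases too complex for a Core Trainee."""
    tp = fp = tn = fn = 0
    for score, truth in zip(scores, too_complex_for_ct):
        predicted = score > threshold
        if predicted and truth:
            tp += 1
        elif predicted:
            fp += 1
        elif truth:
            fn += 1
        else:
            tn += 1
    ppv = tp / (tp + fp) if tp + fp else float("nan")
    npv = tn / (tn + fn) if tn + fn else float("nan")
    return ppv, npv


# Hypothetical cases: three baseline scores (15), two of which still needed a senior grade.
scores = [15, 15, 15, 16, 18, 20, 22]
too_complex = [False, True, True, True, True, True, False]
print(predictive_values(scores, too_complex))  # PPV 0.75, NPV about 0.33
```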

Our Oxford Anaesthetic Complexity score is a clinician‐designed, real‐world tested model of case complexity in anaesthetics. It is inclusive of, but distinct from, patient and surgical risk. We have shown excellent external validity of the score by comparing scores to grades of anaesthetist required for a case, and demonstrating separation in complexity between district general and tertiary referral hospital cases. The score can be used to assess cases individually, or measured across time to show expected complexity, and thus influence theatre and list planning. Complexity scores can be calculated at any time in the patient journey, from first anaesthetic review (physician or non‐physician) to post‐hoc for use in audit. This makes the score a powerful tool for clinicians and managers to use to improve safety and efficiency, by streaming cases to an appropriate grade of anaesthetist based on an objective assessment. This is vital in an age of changing landscapes in anaesthetic training and workforce (particularly the growth of the non‐physician anaesthetist role), limited resources, and increasing need for surgery and anaesthesia.

Supporting information

Table S1. Surgical specialties ranked based on median Oxford Anaesthetic Complexity score, from most to least complex.

Figure S1. Oxford Anaesthetic Complexity scores for cases grouped by grade of anaesthetist required. Box limits are quartiles 1 and 3, whiskers show range. L‐R: Consultant, ST5‐7, ST3‐4, CT1‐2.

Figure S2. Receiver‐operating characteristic curve for Oxford Anaesthetic Complexity score when predicting complexity sufficient to require a consultant anaesthetist. Area under curve 0.84.

Acknowledgements

No external funding or competing interests declared.

Contributor Information

E. Ridgeon, Email: elliott.ridgeon@gmail.com.

A. Elrefaey, @AhmedEl14305911.

References

1. Carr A, Smith JA, Camaradou J, Prieto‐Alhambra D. Growing backlog of planned surgery due to COVID‐19. British Medical Journal 2021; 372: n339.

2. Royal College of Anaesthetists. Medical Workforce Census Report 2020. 2020. https://www.rcoa.ac.uk/sites/default/files/documents/2020-11/Medical-Workforce-Census-Report-2020.pdf (accessed 29/07/2022).

3. McClure H. Desflurane, workforce and morality. Anaesthesia News 2022. https://anaesthetists.org/Home/Resources-publications/Anaesthesia-News-magazine/Anaesthesia-News-Digital-April-2022/Desflurane-workforce-and-morality (accessed 14/07/2022).

4. Health Education England. Anaesthesia Associates. https://www.hee.nhs.uk/our-work/medical-associate-professions/anaesthesia-associates (accessed 05/06/2022).

5. Royal College of Anaesthetists. Planning the introduction and training for Physicians' Assistants (Anaesthesia). 2016: 37–8. https://www.rcoa.ac.uk/sites/default/files/documents/2020-02/Planning-introduction-training-PAA-2016.pdf (accessed 14/07/2022).

6. Schaink AK, Kuluski K, Lyons RF, Fortin M, Jadad AR, Upshur R, Wodchis WP. A scoping review and thematic classification of patient complexity: offering a unifying framework. Journal of Comorbidity 2012; 2: 1–9.

7. Upshur REG. Understanding clinical complexity the hard way: a primary care journey. Healthcare Quarterly 2016; 19: 24–8.

8. Miyazaki T, Imperatori A, Jimenez M, et al. An aggregate score to stratify the technical complexity of video‐assisted thoracoscopic lobectomy. Interactive Cardiovascular and Thoracic Surgery 2019; 28: 728–34.

9. Bould MD, Hunter D, Haxby EJ. Clinical risk management in anaesthesia. Continuing Education in Anaesthesia, Critical Care and Pain 2006; 6: 240–3.

10. American Society of Anesthesiologists. ASA Physical Status Classification System. https://www.asahq.org/standards-and-guidelines/asa-physical-status-classification-system (accessed 04/06/2022).

11. Protopapa KL, Simpson JC, Smith NCE, Moonesinghe SR. Development and validation of the Surgical Outcome Risk Tool (SORT). British Journal of Surgery 2014; 101: 1774–83.

12. Bilimoria KY, Liu Y, Paruch JL, Zhou L, Kmiecik TE, Ko CY, Cohen ME. Development and evaluation of the universal ACS NSQIP surgical risk calculator: a decision aid and informed consent tool for patients and surgeons. Journal of the American College of Surgeons 2013; 217: 833.

13. Copeland GP, Jones D, Walters M. POSSUM: a scoring system for surgical audit. British Journal of Surgery 1991; 78: 355–60.

14. Turrentine FE, Wang H, Simpson VB, Jones RS. Surgical risk factors, morbidity, and mortality in elderly patients. Journal of the American College of Surgeons 2006; 203: 865–77.

15. Tjeertes EKM, Hoeks SE, Beks SBJC, Valentijn TM, Hoofwijk AGM, Stolker RJ. Obesity ‐ a risk factor for postoperative complications in general surgery? BMC Anesthesiology 2015; 15: 112.

16. National Institute for Health and Care Excellence. Routine preoperative tests for elective surgery. 2016. https://www.nice.org.uk/guidance/ng45 (accessed 14/07/2022).

17. Lacour‐Gayet F, Clarke D, Jacobs J, et al. The Aristotle score: a complexity‐adjusted method to evaluate surgical results. European Journal of Cardio‐Thoracic Surgery 2004; 25: 911–24.

18. Islam R, Weir C, Del Fiol G. Clinical complexity in medicine: a measurement model of task and patient complexity. Methods of Information in Medicine 2016; 55: 14–22.

19. Liechti FD, Beck T, Ruetsche A, et al. Development and validation of a score to assess complexity of general internal medicine patients at hospital discharge: a prospective cohort study. British Medical Journal Open 2021; 11: e041205.

20. Ben‐Menahem S, Sialm A, Hachfeld A, Rauch A, Von Krogh G, Furrer H. How do healthcare providers construe patient complexity? A qualitative study of multimorbidity in HIV outpatient clinical practice. British Medical Journal Open 2021; 11: e051013.

21. Hirsch S, Frank TL, Shapiro JL, Hazell ML, Frank PI. Development of a questionnaire weighted scoring system to target diagnostic examinations for asthma in adults: a modelling study. BMC Family Practice 2004; 5: 30.

22. Avila ML, Stinson J, Kiss A, Brandão LR, Uleryk E, Feldman BM. A critical review of scoring options for clinical measurement tools. BMC Research Notes 2015; 8: 612.

23. Streiner DL, Norman GR, Cairney J. Health Measurement Scales: A Practical Guide to Their Development and Use. 5th edn. Oxford: Oxford University Press, 2014.

24. Van Deen WK, Cho ES, Pustolski K, et al. Involving end‐users in the design of an audit and feedback intervention in the emergency department setting ‐ a mixed methods study. BMC Health Services Research 2019; 19: 270.

25. de Beurs D, van Bruinessen I, Noordman J, Friele R, van Dulmen S. Active involvement of end users when developing web‐based mental health interventions. Frontiers in Psychiatry 2017; 8: 72.

26. Kwa CXW, Cui J, Lim DYZ, Sim YE, Ke Y, Abdullah HR. Discordant American Society of Anesthesiologists physical status classification between anesthesiologists and surgeons and its correlation with adverse patient outcomes. Scientific Reports 2022; 12: 7110.

27. Marian AA, Bayman EO, Gillett A, Hadder B, Todd MM. The influence of the type and design of the anesthesia record on ASA physical status scores in surgical patients: paper records vs. electronic anesthesia records. BMC Medical Informatics and Decision Making 2016; 16: 29.

28. Shaffer VA, Wegier P, Valentine KD, et al. Use of enhanced data visualization to improve patient judgments about hypertension control. Medical Decision Making 2020; 40: 785–96.

29. Royal College of Anaesthetists. 2021 Curriculum structure and learning syllabus. 2021. https://rcoa.ac.uk/training-careers/training-anaesthesia/2021-anaesthetics-curriculum/2021-curriculum-structure (accessed 05/06/2022).
