Abstract

Augmented reality (AR) is the superimposition of a virtual environment on the real world. The use of AR in spine surgery continues to grow, with multiple companies and products becoming available. The proposed benefits of AR include decreased attention shift, decreased line-of-sight interruption, opportunity for more minimally invasive approaches, decreased radiation exposure to the operative team, and improved pedicle screw accuracy. In this review, we examine our institutional experiences with utilization and implementation of some of the current AR products.

Keywords: augmented reality, heads-up navigation, future technologies, 3D overlays

Introduction

Computer-based navigation systems in spine surgery provide surgeons with real-time guidance based on object tracking platforms that correlate imaging with in vivo anatomy. While the application for these technologies has primarily been limited to hardware placement, advancements in machine learning, imaging modalities, and additional technologies have increased their use and scope in practice. Typically, navigation technology relies on a reference frame used in conjunction with cross-sectional imaging to develop an interactive guide to a patient’s anatomy.1 In doing so, these systems enable real-time imaging guidance while reducing, if not eliminating, intraoperative radiation exposure to the operative team.2 Furthermore, the use of this technology has been shown to improve the precision and accuracy of instrumentation.3–5

Augmented reality (AR) is the superimposition of a virtual environment on the real world.6 The use of AR in spine surgery is an emerging field that spans the gamut from a “simple” heads-up navigation display to more cutting-edge live 3-dimensional (3D) overlays onto the operative field. Varied products are available in this space, each with its own pros and cons.7

In the simplest form of AR, “heads-up navigation,” the surgeon wears a head-mounted display screen that allows direct visualization of intraoperative radiographs or even computed tomography (CT)-based navigation images. Relative to traditional navigation, the key advantages of this technology are avoidance of attention shift and line-of-sight interruption.8,9 Attention shift occurs when surgeons must turn their head and/or shift attention away from the surgical field and toward a remote display screen. Attention shift has been linked to adverse effects on intraoperative surgeon performance and may be a source of error in navigated spinal instrumentation.10 Similarly, line-of-sight interruption occurs when an object in the field, such as the surgeon’s hands or another instrument, blocks the view of the remote navigation camera. This leads to loss of navigation until the obstruction is resolved.11 In newer systems, 3D overlays of a patient’s anatomy can be projected onto the surgical field for real-time interaction without full operative exposure. This shift in technology further minimizes attention shift and line-of-sight disruption and enables minimally invasive approaches.

As a result of these unique advantages, interest in AR-assisted spine surgery has rapidly grown. The technology carries a potential for improved precision and accuracy, workflow efficiency, and cost-effectiveness, all in the setting of a relatively low learning curve.4,9 In the present review, we highlight AR technology that is currently available and shed light on the outcomes associated with the use of these novel methods. Specifically, we describe the experience at our 2 institutions with 3D overlay technology provided by both the Augmedics XVision system (Augmedics, Arlington Heights, IL, USA) and the Holosurgical ARAI navigation system (Holo Surgical, Inc, Chicago, IL, USA).

Augmedics

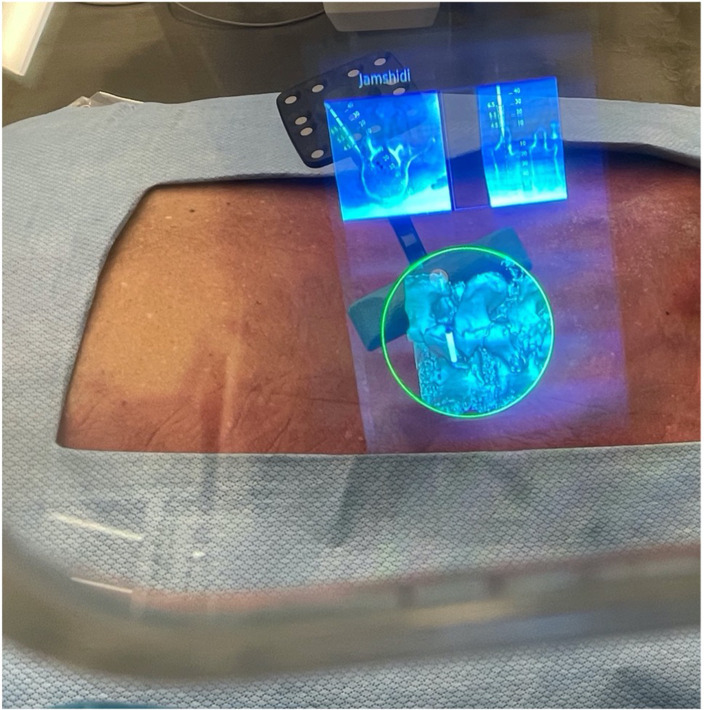

The Augmedics XVision system is a novel navigation and AR system that allows the user to interpret 2D CT navigational anatomy as well as a 3D anatomic overlay through a near-eye retinal display in a wireless headset (Figures 1 and 2). Advantages associated with the XVision include a built-in surgical tracking system that can accurately determine the position of surgical tools in real time, eliminating line-of-sight issues noted with traditional navigation systems, as well as a completely wireless design that allows free movement within the operating room.12

Figure 1.

Augmedics XVision headset.

Figure 2.

Surgeon views through the XVision headset demonstrating virtual 3-dimensional projection and 2-dimensional cross-sectional navigation cuts. Jamshidi needle in place.

Early cadaveric studies have shown promise for the XVision system. Molina et al presented a cadaveric analysis of 5 specimens that were instrumented bilaterally from T6 to L5 by 3 spine surgeons with no significant prior experience with XVision or other AR technology.5 A total of 120 pedicle screws were placed after traditional open posterior exposure using the AR technology. Following instrumentation, CT was performed, and the imaging was submitted to 2 neuroradiologists for independent accuracy grading of the pedicle screw placement. A modified Heary-Gertzbein Scale, which judges screw placement based on the presence and significance of a breach, was utilized to quantify this accuracy.13,14 The authors reported an overall screw accuracy of 96.7%, with values of 97.1% in the thoracic spine and 96.0% in the lumbar spine. Upon comparison of these values to those in the literature, the authors found that AR-assisted screw placement was noninferior to traditional computer-assisted navigation, noninferior to robotic computer-assisted navigation, and superior to freehand insertion.
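As an arithmetic cross-check of the accuracy figures in this first cadaveric study, the short Python sketch below recomputes the percentages from screw counts. The per-region counts are assumptions: the numbers of screws placed are inferred from bilateral instrumentation of T6 to L5 in 5 specimens, and the numbers of accurately placed screws (68 thoracic, 48 lumbar) are hypothetical values chosen because they reproduce the reported percentages. They are not taken from the study itself.

```python
# Sanity check of the reported accuracy figures (96.7% overall,
# 97.1% thoracic, 96.0% lumbar) for 120 screws in 5 specimens.

SPECIMENS = 5
SCREWS_PER_LEVEL = 2  # bilateral instrumentation

# Levels instrumented per specimen: T6-T12 (7 levels) and L1-L5 (5 levels).
LEVELS = {"thoracic": 7, "lumbar": 5}

# ASSUMPTION: accurate-screw counts inferred from the reported
# percentages; these are illustrative, not published values.
ACCURATE = {"thoracic": 68, "lumbar": 48}


def screws_placed(levels: int) -> int:
    """Total screws placed in a region across all specimens."""
    return levels * SCREWS_PER_LEVEL * SPECIMENS


def accuracy_pct(accurate: int, placed: int) -> float:
    """Accuracy as a percentage, rounded to one decimal place."""
    return round(100 * accurate / placed, 1)


placed = {region: screws_placed(n) for region, n in LEVELS.items()}
total_placed = sum(placed.values())
total_accurate = sum(ACCURATE.values())

for region in LEVELS:
    pct = accuracy_pct(ACCURATE[region], placed[region])
    print(f"{region}: {ACCURATE[region]}/{placed[region]} = {pct}%")

overall = accuracy_pct(total_accurate, total_placed)
print(f"overall: {total_accurate}/{total_placed} = {overall}%")
```

Under these assumptions, the inferred totals (70 thoracic and 50 lumbar screws) sum to the 120 screws reported, and the three percentages are mutually consistent with 4 screws graded as inaccurate.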

A similar cadaveric analysis was subsequently performed by Molina et al to assess the accuracy of percutaneous pedicle screw placement and to include sacral (S1) instrumentation in the analysis.4 Five cadaveric specimens were instrumented from T5 to S1 using minimally invasive percutaneous methods without exposure of the spine. Methodology was otherwise largely identical to the previous study. The authors reported an overall implant insertion accuracy of 99.1%, with a thoracic accuracy of 98.2% and lumbosacral accuracy of 100%. Only a single pedicle screw was misplaced: a 5.5-mm diameter screw placed within a 3.7-mm diameter pedicle resulted in a 3.9-mm medial canal breach.

To date, we have performed 55 cases using XVision for the placement of 272 pedicle screws in both minimally invasive and traditional open surgery settings. There have been no reported complications in this cohort related to hardware placement. Despite our relatively limited experience overall, we have been able to optimize the workflow and incorporation of this technology, especially in the setting of multilevel percutaneous screw placement.

The workflow for XVision is similar to that of traditional navigation techniques used for pedicle screw placement. The timing of pedicle screw placement depends largely on surgeon preference and on the additional procedures being performed in conjunction with posterior instrumentation. Typically, pedicle screws are placed prior to osteotomies or other procedures that destabilize the spine in order to allow for more accurate registration. Following localization, a reference frame is clamped to the spinous process, typically 2 to 3 levels above the most cranial instrumented level. Next, an O-Arm (Medtronic, Littleton, MA) is used to obtain intraoperative multiplanar CT imaging that is fed directly into the XVision platform. It is typically possible to instrument up to 5 levels in the lumbar spine using a single O-Arm spin.

During image acquisition, the surgeons are fitted with their customized XVision headsets, which are freestanding and powered by a portable battery. At this point, all navigated images and real-time 3D image overlays are visualized through the near-eye display. As noted above, reference frame to tracker line-of-sight interference is minimal given the embedded tracking system within the headset. The 3D overlay allows rapid pedicle start point localization and verification on cross-sectional imaging (Figure 3). Each instrument used for pedicle screw insertion can be registered with the tracking system, including awl, tap, drill, and final screw.

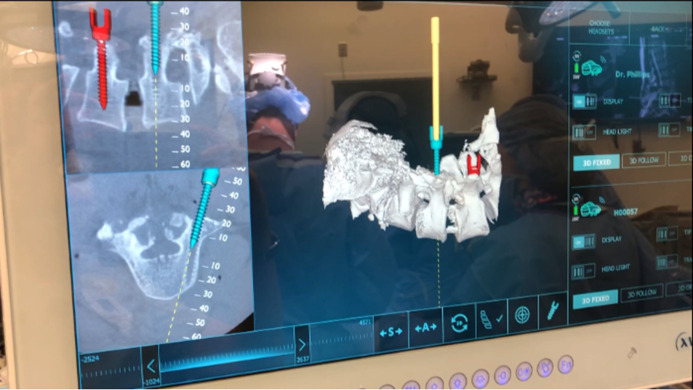

Figure 3.

View from display screen demonstrating live screw representation on the 3-dimensional model of the spine as well as on cross-sectional imaging (similar images are seen by the operating team in the heads-up display).

Using the XVision platform, the ideal starting point can be marked on the skin based on the projected trajectory on the 3D overlay and cross-sectional imaging. Following cannulation of the pedicle, the screw trajectory and desired screw width and length can be saved and overlaid onto the cross-sectional imaging, obviating the need for direct pedicle screw measurement.

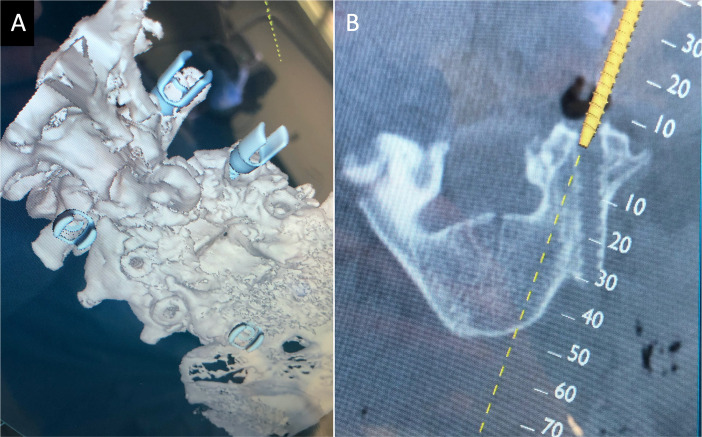

The early primary benefits appear to be a short learning curve given natural posture during use, minimal operating room footprint (headset only), and minimal surgical workflow disruption (no more than traditional navigation). In our experience, this technology appears to be best suited when supplementing additional minimally invasive techniques (eg, minimally invasive surgery interbody fusion). This is especially true in cases requiring revision instrumentation, whereby AR is capable of both identifying existing hardware to facilitate removal and assisting in accurate placement of new instrumentation (Figure 4).

Figure 4.

(A) A 3-dimensional spine representation demonstrating new instrumentation and previous pedicle screw start points. (B) Cross-sectional imaging demonstrating the ability to actively change the trajectory of the new implant.

XVision shares many benefits with similar next-generation navigation equipment such as robotics, but some characteristics of the XVision system may make it preferable in certain settings. Although no head-to-head studies have been performed regarding implant accuracy, both XVision and robotics rely exclusively on image navigation and likely have a similar profile of improved accuracy compared with freehand techniques.15,16 The benefits of XVision over robotics include a substantially lower upfront capital cost and a smaller operating room footprint of the device itself. These benefits may be best realized in price-sensitive settings such as ambulatory surgery centers.

Holosurgical

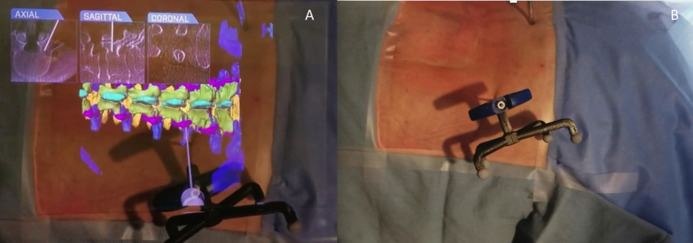

The feasibility of AR-based virtual anatomy projection and artificial intelligence–based anatomy segmentation of the Holosurgical ARAI navigation system has been assessed in a cadaveric study.17 The ARAI surgical navigation system correctly and accurately identified the starting points at all attempted levels (N = 24). The virtual anatomy image overlay precisely corresponded to the actual anatomy in all the tested scenarios. The projected virtual anatomy image was responsive, and the surgeon’s perspective was updated in real time. In addition, the autonomous lumbar spine semantic segmentation was an accurate means to automatically identify the vertebral body anatomy and provide measurements for implants and placement trajectories (Figure 5).18

Figure 5.

(A) Virtual 3-dimensional projection of the lumbar spine; virtual Jamshidi needle along with the orthogonal planes demonstrating placement of navigated needle. (B) Same cadaver specimen in prone position demonstrating the actual Jamshidi needle placement. Head to the left.

Holosurgical and XVision are by no means the only products on the market offering utilization of AR technology. There are multiple companies and products that offer “heads-up” display of 2D navigated images and x-ray images. In regard to specific 3D anatomic overlays in the surgical field, Brainlab (Munich, Germany) and Philips (Philips Healthcare, Best, the Netherlands) also have products with this feature. The specific features of the Brainlab system include a microscope-based heads-up display system. The Philips hybrid OR system relies on a mounted cone beam CT scanner with mounted cameras for tracking, the images of which are displayed on a boom-mounted screen. Each product has its own proprietary features beyond the scope of this review.

Future Directions

The major benefit of AR implementation is the ability to obtain accurate anatomic information with minimal to no exposure.12 As the 3D overlay becomes more accurate, a broader range of procedures can be performed safely and effectively. There are a number of barriers to universal adoption and use, many of which are shared with traditional navigation, including varying levels of inaccuracy between systems, registration and recalibration (movement or displacement of the reference frame), capital cost, and workflow adaptation.12,19 More specific to AR-based modalities themselves are image latency, poor image resolution/quality, inaccurate 3D representation, perceived learning curve, and visual fatigue (near-eye display). Many of these barriers can be overcome with advancement in computer and image processing. As the images and overlays become more accurate, the only major barrier may be cost, which will likely be offset by decreased complications, length of stay, and need for revisions.

Ongoing advancement in AR technology will address the current barriers to use. Advancement in computer processing and continued implementation of artificial intelligence and machine-learning algorithms will allow for real-time, anatomically accurate representations of subcutaneous anatomy on an even more granular level. This degree of anatomic accuracy should allow the technology to be used for reliable decompressions, osteotomies, tumor resections, and complex instrumentation with increasingly minimal exposure.

As the exposure becomes more minimalist and the virtual representations become more accurate, there is a hypothesized merging of robotic surgery and AR, where an offsite surgeon could control a robotic system remotely relying on AR-based images.20 The future of these 2 technologies is not competitive but instead synergistic. AR allows for improved robotic oversight and improved visualization even with the most minimalist of approaches. Further iterations of both of these technologies will continue to expand the limits of spine surgery.

References

- 1. Virk S, Qureshi S. Navigation in minimally invasive spine surgery. J Spine Surg. 2019;5(Suppl 1):S25–S30. 10.21037/jss.2019.04.23

- 2. Costa F, Cardia A, Ortolina A, Fabio G, Zerbi A, Fornari M. Spinal navigation: standard preoperative versus intraoperative computed tomography data set acquisition for computer-guidance system: radiological and clinical study in 100 consecutive patients. Spine (Phila Pa 1976). 2011;36(24):2094–2098. 10.1097/BRS.0b013e318201129d

- 3. Tian N-F, Xu H-Z. Image-guided pedicle screw insertion accuracy: a meta-analysis. Int Orthop. 2009;33(4):895–903. 10.1007/s00264-009-0792-3

- 4. Molina CA, Phillips FM, Colman MW, et al. A cadaveric precision and accuracy analysis of augmented reality–mediated percutaneous pedicle implant insertion: presented at the 2020 AANS/CNS joint section on disorders of the spine and peripheral nerves. J Neurosurg Spine. 2020;1:1–9.

- 5. Molina CA, Theodore N, Ahmed AK, et al. Augmented reality–assisted pedicle screw insertion: a cadaveric proof-of-concept study. J Neurosurg Spine. 2019;31(1):139–146. 10.3171/2018.12.SPINE181142

- 6. Laverdière C, Corban J, Khoury J, et al. Augmented reality in orthopaedics: a systematic review and a window on future possibilities. The Bone & Joint Journal. 2019;101:1479–1488.

- 7. Yoon JW, Chen RE, Han PK, Si P, Freeman WD, Pirris SM. Technical feasibility and safety of an intraoperative head-up display device during spine instrumentation. Int J Med Robot. 2017;13(3). 10.1002/rcs.1770

- 8. Ortega G, Wolff A, Baumgaertner M, Kendoff D. Usefulness of a head mounted monitor device for viewing intraoperative fluoroscopy during orthopaedic procedures. Arch Orthop Trauma Surg. 2008;128(10):1123–1126. 10.1007/s00402-007-0500-y

- 9. Molina CA, Sciubba DM, Greenberg JK, Khan M, Witham T. Clinical accuracy, technical precision, and workflow of the first in human use of an augmented-reality head-mounted display stereotactic navigation system for spine surgery. Oper Neurosurg (Hagerstown). 2021;20(3):300–309. 10.1093/ons/opaa398

- 10. Léger É, Drouin S, Collins DL, Popa T, Kersten-Oertel M. Quantifying attention shifts in augmented reality image-guided neurosurgery. Healthc Technol Lett. 2017;4(5):188–192. 10.1049/htl.2017.0062

- 11. Rahmathulla G, Nottmeier EW, Pirris SM, Deen HG, Pichelmann MA. Intraoperative image-guided spinal navigation: technical pitfalls and their avoidance. Neurosurg Focus. 2014;36(3):E3. 10.3171/2014.1.FOCUS13516

- 12. Sakai D, Joyce K, Sugimoto M, et al. Augmented, virtual and mixed reality in spinal surgery: a real-world experience. J Orthop Surg (Hong Kong). 2020;28(3):2309499020952698. 10.1177/2309499020952698

- 13. Heary RF, Bono CM, Black M. Thoracic pedicle screws: postoperative computerized tomography scanning assessment. J Neurosurg. 2004;100(4 Suppl Spine):325–331. 10.3171/spi.2004.100.4.0325

- 14. Gertzbein SD, Robbins SE. Accuracy of pedicular screw placement in vivo. Spine (Phila Pa 1976). 1990;15(1):11–14. 10.1097/00007632-199001000-00004

- 15. Fichtner J, Hofmann N, Rienmüller A, et al. Revision rate of misplaced pedicle screws of the thoracolumbar spine-comparison of three-dimensional fluoroscopy navigation with freehand placement: a systematic analysis and review of the literature. World Neurosurg. 2018;109:e24–e32. 10.1016/j.wneu.2017.09.091

- 16. Chan A, Parent E, Wong J, Narvacan K, San C, Lou E. Does image guidance decrease pedicle screw-related complications in surgical treatment of adolescent idiopathic scoliosis: a systematic review update and meta-analysis. Eur Spine J. 2020;29(4):694–716. 10.1007/s00586-019-06219-3

- 17. Siemionow KB, Katchko KM, Lewicki P, Luciano CJ. Augmented reality and artificial intelligence-assisted surgical navigation: Technique and cadaveric feasibility study. J Craniovertebr Junction Spine. 2020;11(2):81–85. 10.4103/jcvjs.JCVJS_48_20

- 18. Siemionow K, Luciano C, Forsthoefel C, Aydogmus S. Autonomous image segmentation and identification of anatomical landmarks from lumbar spine intraoperative computed tomography scans using machine learning: a validation study. J Craniovertebr Junction Spine. 2020;11(2):99–103. 10.4103/jcvjs.JCVJS_37_20

- 19. Yoon JW, Chen RE, Kim EJ, et al. Augmented reality for the surgeon: systematic review. Int J Med Robot. 2018;14(4):e1914. 10.1002/rcs.1914

- 20. Madhavan K, Kolcun JPG, Chieng LO, Wang MY. Augmented-reality integrated robotics in neurosurgery: are we there yet? Neurosurg Focus. 2017;42(5):E3. 10.3171/2017.2.FOCUS177