Abstract

Novel coronavirus disease 2019 (COVID-19) has spread rapidly throughout the world; however, it is difficult for clinicians to make early diagnoses. This study aimed to evaluate the feasibility of using deep learning (DL) models to identify asymptomatic COVID-19 patients based on chest CT images. In this retrospective study, six DL models (Xception, NASNet, ResNet, EfficientNet, ViT, and Swin), based on convolutional neural networks (CNNs) or transformer architectures, were trained to identify asymptomatic patients with COVID-19 on chest CT images. Data from Yangzhou were randomly split into a training set (n = 2140) and an internal-validation set (n = 360). Data from Suzhou served as the external-test set (n = 200). Model performance was assessed by accuracy, recall, and specificity and was compared with the assessments of two radiologists. A total of 2700 chest CT images were collected in this study. In the validation dataset, the Swin model achieved the highest accuracy of 0.994, followed by the EfficientNet model (0.954). The recall and the precision of the Swin model were 0.989 and 1.000, respectively. In the test dataset, the Swin model was still the best and achieved the highest accuracy (0.980). All the DL models performed markedly better than the two experts. Finally, the time the two experts spent diagnosing the test set, 42 min 17 s (junior) and 29 min 43 s (senior), was significantly longer than that of the DL models (all below 2 min). This study evaluated the feasibility of multiple DL models in distinguishing asymptomatic patients with COVID-19 from healthy subjects on chest CT images. It found that a transformer-based model, the Swin model, performed best.

Keywords: Asymptomatic coronavirus-disease-2019 patients, Chest CT images, Convolutional neural networks, Transformer, Deep learning, Transfer learning

Background

The novel coronavirus disease 2019 (COVID-19) caused by severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2) has rapidly spread throughout the world, posing a serious danger to global health. Patients with COVID-19 may experience a variety of symptoms, ranging from asymptomatic infection to acute upper respiratory illness, and even severe respiratory failure [1].

Early detection of COVID-19 is aided by a combination of clinical, laboratory, and imaging findings [2]. For example, Ozdemir et al. [3] proposed a novel method for automatic COVID-19 diagnosis using ECG data, with a high accuracy of 96.20%. However, the limited use of ECG tests among patients with COVID-19, as well as variations in the ECG images, makes this approach less effective and less popular than CT examinations. Additionally, Togacar et al. [4] utilized MobileNetV2 and SqueezeNet deep learning (DL) models with SVM classification to differentiate between COVID-19, pneumonia, and normal chest X-ray images. Although they achieved an accuracy of 99.27%, the training dataset was very limited, and the image preprocessing had yet to be normalized and standardized. Furthermore, unlike chest computed tomography (CT), X-ray cannot visualize the lung lobes in multiple slices, which might result in missed diagnoses. Therefore, chest CT scans, which are noninvasive and offer rapid acquisition and excellent sensitivity, are widely used. Previous studies have reported chest CT characteristics in COVID-19–infected individuals, including ground-glass opacities, focal unilateral or bilateral involvement, diffuse and peripheral distribution, and consolidations [5–9]. Furthermore, abnormal chest CT findings compatible with COVID-19 can occur days before SARS-CoV-2 RNA is detected [2, 10]. Thus, the use of chest CT scans, as a rapid supplementary diagnostic measure, may help physicians make a presumptive diagnosis of COVID-19 [2, 11].

Despite these advantages of chest CT, it is difficult and time-consuming for radiologists to recognize the subtle radiological differences between COVID-19 and pneumonia caused by other etiologies. Given the enormous number of radiologic examinations during the pandemic, this can lead to misdiagnoses and missed COVID-19 diagnoses. The usual approach for diagnosing COVID-19 infection is real-time reverse-transcriptase polymerase chain reaction (RT-PCR) [12]. However, some patients with RT-PCR-confirmed SARS-CoV-2 infection may present normal CT features according to radiologists’ interpretations. Patients with a positive RT-PCR result but a normal lung CT reading are referred to as asymptomatic [12]. This makes it difficult for radiologists to detect asymptomatic COVID-19 patients [13, 14].

One of the most important duties in reducing the spread of the virus is early detection [12, 15]. However, the increasing number of asymptomatic patients, as well as the possibility of transmission from asymptomatic carriers of SARS-CoV-2, makes it difficult to curtail the spread of this pandemic [16, 17].

Artificial intelligence (AI) has been shown to help detect COVID-19 and to distinguish COVID-19 from pneumonia caused by other etiologies [18]. To date, various AI-aided diagnostic systems based on X-ray or CT scans have been found to be very promising for diagnosing COVID-19 [18–25]. Harmon et al. [18] proposed a series of DL algorithms for the classification of COVID-19 pneumonia, with a high accuracy of 90.8%. Li et al. [19] developed an automated AI system for segmenting and quantifying the COVID-19-infected lung regions on chest CT images, using the UNet structure. Sedik et al. [20] presented two models, based on convolutional neural networks (CNNs) and convolutional long short-term memory, for boosting the accuracy of COVID-19 detection. To our knowledge, however, research on using AI to help identify asymptomatic patients is limited. Therefore, this study aims to develop various DL models, based on CNNs or transformer architectures, for detecting asymptomatic COVID-19 patients on chest CT images.

Materials and Methods

Chest CT Images

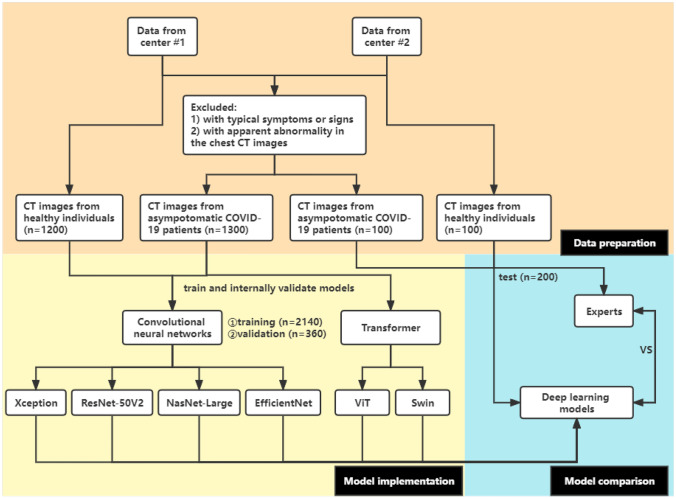

CT images were obtained from Yangzhou Third People’s Hospital (center #1) from August 2020 to June 2021 and from the First Affiliated Hospital of Soochow University (center #2) in 2020; the images were from COVID-19 patients and healthy individuals. All the patients enrolled in this study were confirmed to be positive for COVID-19 by RT-PCR within 48 h before or after the chest CT exam. The asymptomatic patients were defined as having no evident symptoms. Each CT dataset was reviewed in consensus by two radiologists, each with more than 10 years of chest CT experience. The images from center #1 (n = 2500) were used for training and internally validating the models, while the images from center #2 (n = 200) were used for externally validating the models. This retrospective study was approved by the ethics and review board of the First Affiliated Hospital of Soochow University. Informed consent was waived. The details are presented in Fig. 1.

Fig. 1.

A flowchart of this study

Image Preprocessing

The CT images were downloaded from a medical image cloud platform (www.ftimage.cn). The integrated CT database was saved in PNG format, using a lung window with 5-mm thickness, a 1500 ± 100 Hounsfield unit (HU) window width, and a −600 ± 50 HU window level. All the images were rescaled to 331 × 331 pixels, and then the pixel values were normalized from a range between 0 and 255 to between 0 and 1. To ensure that no image information related to pulmonary lesions was lost, a senior radiologist checked the PNG format images. No other preprocessing such as image segmentation was carried out.
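The intensity handling described above can be sketched as follows. This is a minimal illustration rather than the authors' pipeline: it assumes that the lung window (level −600 HU, width 1500 HU) is mapped linearly onto 8-bit grey levels when the PNG is written, and the function names `window_to_uint8` and `normalize_image` are hypothetical. Resizing to 331 × 331 pixels is omitted.

```python
def window_to_uint8(hu, level=-600.0, width=1500.0):
    """Map a Hounsfield value onto 0..255 with a linear display window (assumed)."""
    low = level - width / 2.0    # -1350 HU for the lung window in the text
    high = level + width / 2.0   # 150 HU
    clipped = min(max(hu, low), high)
    return int((clipped - low) / (high - low) * 255.0 + 0.5)


def normalize_image(pixels):
    """Rescale 8-bit pixel values from [0, 255] to [0, 1]."""
    return [[value / 255.0 for value in row] for row in pixels]


# A tiny 2 x 2 "slice" in Hounsfield units (hypothetical values).
slice_hu = [[-2000.0, -850.0], [-600.0, 400.0]]
slice_png = [[window_to_uint8(v) for v in row] for row in slice_hu]
slice_norm = normalize_image(slice_png)
```

Values below −1350 HU or above 150 HU are clipped to the ends of the window, so after normalization every pixel lies between 0 and 1.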

Implementation of Pretrained CNN-Based Architectures

We explored four pretrained CNNs, including Xception [26], NASNet-Large [27], ResNet-50V2 [28], and EfficientNet [29], for the classification task. These models were pretrained on the ImageNet database (www.image-net.org). Transfer learning was applied by combining the existing CNN layers with additional activation layers for the learning of the target classification.

Input Layer

Each image was resized to 331 × 331 pixels, padded if necessary, and then fed into the pretrained CNN layers.

Pretrained CNN Layers

A typical CNN is composed of convolutional layers, pooling layers, and fully connected (dense) layers, with the rectified linear unit (ReLU) as the activation function. In this study, we selected four CNNs and retained their convolutional layers, each followed by average pooling layers, to extract feature maps from the input images using 2-dimensional filters. The ReLU activation function was applied to the output of each layer. All the layers of these pretrained CNN models were frozen.

Additional Layers

After the pretrained CNN layers, three dense layers with a Sigmoid activation function replaced the original fully connected layers and acted as the classifier. The output sizes for the pretrained Xception model, the NASNet-Large model, the ResNet-50V2 model, and the EfficientNet model were 2048 × 2048, 4032 × 4032, 2048 × 2048, and 1280 × 1280, respectively. The output sizes for the subsequent three layers were 1024 × 1024, 512 × 512, and 2 × 2.
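To give a sense of how small the trainable part of each network is once the backbone is frozen, the sketch below counts the weights and biases of the three added dense layers. It rests on an assumption: the sizes quoted above are read as feature-vector widths (2048, 4032, 2048, and 1280 for the four backbones, followed by dense layers of width 1024, 512, and 2). The function name `dense_head_parameters` is hypothetical.

```python
def dense_head_parameters(feature_width, layer_widths=(1024, 512, 2)):
    """Count weights and biases of a stack of fully connected layers."""
    total = 0
    fan_in = feature_width
    for fan_out in layer_widths:
        total += fan_in * fan_out + fan_out   # weight matrix plus bias vector
        fan_in = fan_out
    return total


# Assumed pooled feature widths of the frozen backbones.
backbone_feature_width = {
    "Xception": 2048,
    "NASNet-Large": 4032,
    "ResNet-50V2": 2048,
    "EfficientNet": 1280,
}
trainable = {name: dense_head_parameters(width)
             for name, width in backbone_feature_width.items()}
```

Under these assumptions the classifier head has roughly 1.8 to 4.7 million trainable parameters, depending on the backbone.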

Implementation

We implemented the CNNs based on transfer learning using Keras, a Python application programming interface, with the TensorFlow framework as the backend. The Adam optimizer and the binary cross-entropy cost function, with a learning rate of 0.0001 and a batch size of 16, were used to train the models. First, the dataset from center #1 was randomly split into two subsets (85% as a training set, 15% as a validation set) and was fed into the Xception, NASNet-Large, ResNet-50V2, and EfficientNet architectures. Early stopping, implemented via a callback function that monitored the validation cost, determined how many epochs were needed to train each model. The validation set was used to fine-tune the hyperparameters of the models. Lastly, the performance of the models was externally tested on the center #2 dataset.
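The early-stopping rule can be illustrated with a short, framework-free sketch. The patience value and the validation losses below are hypothetical, since the paper does not report them, and the function `early_stopping_epoch` is not the authors' code. Keras provides an `EarlyStopping` callback for this purpose; whether the authors used it or a custom callback is not stated.

```python
def early_stopping_epoch(val_losses, patience=5):
    """Return (best_epoch, stop_epoch), both 1-based.

    Training stops once the validation loss has failed to improve for
    `patience` consecutive epochs; the weights from `best_epoch` would be kept.
    """
    best_loss = float("inf")
    best_epoch = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            return best_epoch, epoch
    return best_epoch, len(val_losses)


# Hypothetical validation losses, for illustration only.
losses = [0.62, 0.41, 0.30, 0.27, 0.29, 0.28, 0.31, 0.30, 0.33, 0.35]
best, stopped = early_stopping_epoch(losses, patience=5)
```

In this toy run the lowest validation loss occurs at epoch 4, and training halts at epoch 9 after five epochs without improvement.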

Implementation of Transformer-Based Architectures

Compared with the sequential input of CNNs, the transformer architecture is characterized by synchronous input based on the self-attention mechanism. This study selected shifted window transformer (Swin) [30] and vision transformer (ViT) [31] models with the encoder and decoder parts of the transformer. The transformer encoder consists of three main components: input embedding, multihead attention, and feed-forward neural networks.

Input Embedding

Because the inputs are processed simultaneously rather than sequentially, we used sine and cosine functions for position encoding so that the two architectures could identify the order of the inputs. Then, the position encoding matrix was combined with the input images for training the models.
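A sine and cosine position encoding of this kind can be sketched as below. The sketch follows the fixed encoding of the original transformer; the base of 10000, the element-wise addition to the patch embeddings, and the function names are assumptions, as the paper does not give these details.

```python
import math


def sinusoidal_position_encoding(num_positions, dim, base=10000.0):
    """Return a num_positions x dim table of sine/cosine position codes."""
    table = []
    for pos in range(num_positions):
        row = []
        for k in range(dim):
            # Dimensions 2i and 2i+1 share one frequency.
            angle = pos / base ** ((k - k % 2) / dim)
            row.append(math.sin(angle) if k % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


def add_position_encoding(embeddings):
    """Add the encoding element-wise to a list of patch-embedding vectors."""
    codes = sinusoidal_position_encoding(len(embeddings), len(embeddings[0]))
    return [[x + p for x, p in zip(vec, code)]
            for vec, code in zip(embeddings, codes)]


# Three hypothetical 4-dimensional patch embeddings, all zeros for clarity.
patches = [[0.0] * 4 for _ in range(3)]
encoded = add_position_encoding(patches)
```

Because every position receives a distinct code, otherwise identical patches become distinguishable by their location in the sequence.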

Self-Attention Mechanism

Multihead self-attention applied multiple self-attention mechanisms to calculate the attention scores of the input vectors, which were first separately mapped to query, key, and value vectors. The scaled dot-product attention function was used to calculate scores based on the queries and keys, and the scores were then used to compute a weighted sum of the value vectors as the self-attention layer’s output. Additionally, a normalized residual connection was added to the output. During training, the learning rate was 0.001 and the number of epochs was 100 for both algorithms.
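Scaled dot-product attention for a single head can be written in a few lines. The sketch below is a plain-Python illustration, not the implementation used in the study; in a real model the queries, keys, and values are learned linear projections of the input, whereas here the toy input is used directly for all three.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Compute softmax(Q K^T / sqrt(d_k)) V for lists of vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        # Each output is the attention-weighted sum of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs


# Self-attention on two toy tokens: Q, K and V all come from the same input.
tokens = [[1.0, 0.0], [0.0, 1.0]]
attended = scaled_dot_product_attention(tokens, tokens, tokens)
```

Each token attends most strongly to itself in this example, and its output is a convex combination of the two value vectors.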

Output Embedding

A feed-forward neural network, followed by layer normalization, was applied to generate feature-embedding vectors. This fully connected network contained a ReLU activation function. The Sigmoid function converted these vector values into predictive probability values.

Comparison Between Deep Learning Models and Experts

To compare the performance of the models and medical experts, images from the test dataset were read by two experts, one with 5 years of experience (junior) and the other with more than 10 years of experience (senior) in the field of radiology.

Statistical Analysis

We established the DL models using Python (version 3.9). We assessed model performance by calculating accuracy, recall, and precision using R software (version 4.1.0, The R Foundation). More specifically, confusion matrices, consisting of true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN), were tabulated to measure the performance of each classifier. Additionally, areas under the receiver operating characteristic curves (AUCs) were calculated to evaluate the robustness of our models. The formulas were as follows: accuracy = (TP + TN)/(TP + FP + FN + TN); precision = TP/(TP + FP); recall = TP/(TP + FN). Specificity and the F1-score, which are also reported in Table 1, follow the standard definitions: specificity = TN/(TN + FP); F1-score = 2 × precision × recall/(precision + recall).
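As a worked example of these formulas, the sketch below computes the metrics from the four confusion-matrix counts. Specificity and the F1-score, which Table 1 also reports, are included using their standard definitions. The counts are an assumption: they suppose 100 COVID-19 and 100 normal test images and are chosen to reproduce the junior expert's reported recall (0.690) and specificity (0.670); the actual counts are those of Fig. 3.

```python
def classification_metrics(tp, tn, fp, fn):
    """Compute summary metrics from the four confusion-matrix counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "precision": precision,
        "recall": recall,
        "specificity": tn / (tn + fp),
        "f1": 2 * precision * recall / (precision + recall),
    }


# Assumed counts (100 positive and 100 negative test images), for illustration.
junior = classification_metrics(tp=69, tn=67, fp=33, fn=31)
rounded = {name: round(value, 3) for name, value in junior.items()}
```

With these assumed counts the rounded accuracy, precision, and F1-score come out at 0.680, 0.676, and 0.683, which agrees with the values reported for the junior expert.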

Results

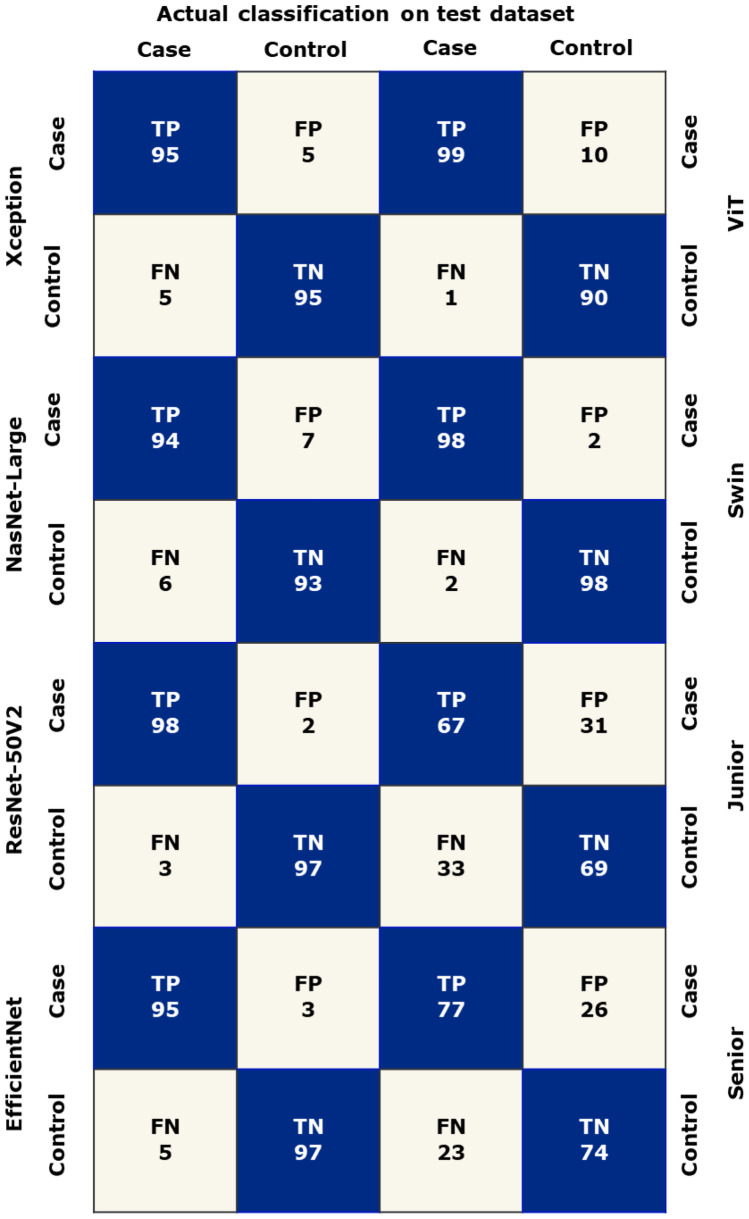

A total of 2700 chest CT images were obtained in this study, consisting of 1400 images of the COVID-19 group and 1300 images of the normal group. Data from center #1 were randomly split into the training set and the validation set at a ratio of approximately 8.5:1.5 (2140 vs. 360). The images from center #2 were regarded as the test set (n = 200). According to the aforementioned methods, these CT images were fed into the models based on four pretrained CNNs and two transformer architectures for binary classification. The performance of our proposed models is shown in Fig. 2 and listed in Table 1, while the confusion matrices are shown in Fig. 3.
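A reproducible random split with these set sizes can be sketched as follows. The seed and the function name `split_indices` are assumptions. The paper does not state whether the split was stratified by class or grouped by patient, and this sketch does neither.

```python
import random


def split_indices(n_images, n_train, seed=0):
    """Shuffle image indices reproducibly and split them into two disjoint sets."""
    indices = list(range(n_images))
    random.Random(seed).shuffle(indices)
    return indices[:n_train], indices[n_train:]


# 2500 images from center #1: 2140 for training, 360 for internal validation.
train_idx, val_idx = split_indices(n_images=2500, n_train=2140, seed=0)
```

Fixing the seed means the same images land in the same subset on every run, which keeps the comparison between models fair.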

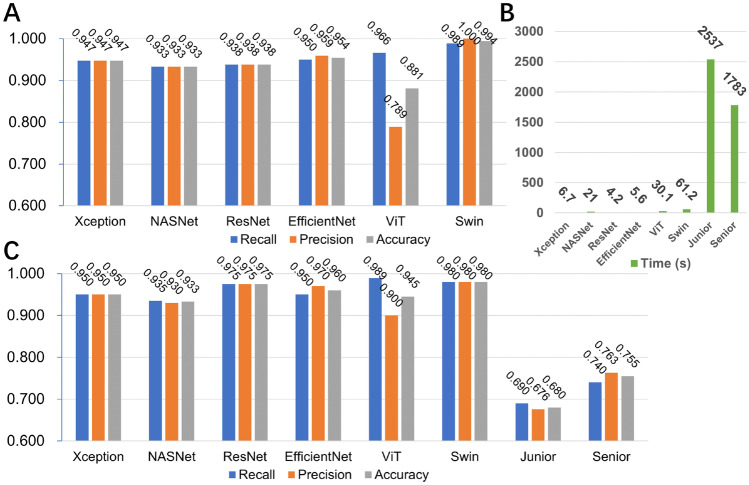

Fig. 2.

The performance of six models and two experts. A The six models’ performance in the validation dataset; B the times spent on the test dataset; C the six models’ and two experts’ performance in the test dataset

Table 1.

The performance of six models and two experts

| Dataset | Metric | Xception | NASNet-Large | ResNet-50V2 | EfficientNet | ViT | Swin | Junior | Senior |
|---|---|---|---|---|---|---|---|---|---|
| Training set | Recall | 0.969 | 1.000 | 0.996 | 0.984 | 0.999 | 0.970 | - | - |
| | Specificity | 0.967 | 1.000 | 0.996 | 0.991 | 1.000 | 0.900 | - | - |
| | Accuracy | 0.968 | 1.000 | 0.996 | 0.988 | 1.000 | 0.935 | - | - |
| | AUC | 0.995 | 1.000 | 1.000 | 0.999 | 1.000 | 0.948 | - | - |
| | F1-score | 0.968 | 1.000 | 0.996 | 0.988 | 1.000 | 0.937 | - | - |
| Validation set | Recall | 0.947 | 0.933 | 0.938 | 0.950 | 0.972 | 0.989 | - | - |
| | Specificity | 0.947 | 0.933 | 0.938 | 0.959 | 0.789 | 1.000 | - | - |
| | Accuracy | 0.947 | 0.933 | 0.938 | 0.954 | 0.881 | 0.994 | - | - |
| | AUC | 0.978 | 0.968 | 0.974 | 0.987 | 0.881 | 0.947 | - | - |
| | F1-score | 0.947 | 0.933 | 0.938 | 0.954 | 0.891 | 0.994 | - | - |
| Test set | Recall | 0.950 | 0.940 | 0.970 | 0.950 | 0.990 | 0.980 | 0.690 | 0.740 |
| | Specificity | 0.950 | 0.930 | 0.980 | 0.970 | 0.900 | 0.980 | 0.670 | 0.770 |
| | Accuracy | 0.950 | 0.935 | 0.975 | 0.960 | 0.945 | 0.980 | 0.680 | 0.755 |
| | AUC | 0.987 | 0.983 | 0.996 | 0.991 | 0.997 | 0.998 | 0.680 | 0.755 |
| | F1-score | 0.950 | 0.935 | 0.975 | 0.960 | 0.947 | 0.980 | 0.683 | 0.751 |
| | Time (s) | 6.7 | 21 | 4.2 | 5.6 | 30.1 | 61.2 | 2537 | 1783 |

Xception, NASNet-Large, ResNet-50V2, and EfficientNet are convolutional neural networks; ViT and Swin are transformers; Junior and Senior are the two human experts.

Fig. 3.

A confusion matrix of two experts and six models. True positives, TP; true negatives, TN; false positives, FP; false negatives, FN

In the validation dataset, the Swin model achieved the highest accuracy of 0.994, followed by the EfficientNet model (0.954) and Xception (0.947) (Fig. 2A). The recall and the precision of the Swin model were 0.989 and 1.000, respectively.

With regard to the test dataset, the four CNN models were fast: according to Table 1, Xception, ResNet-50V2, and EfficientNet each took less than 10 s, and NASNet-Large took 21 s. Of the two transformer models, ViT took 30 s and Swin took 61 s. In contrast, the two medical experts took markedly longer (1783 s for the senior and 2537 s for the junior), as shown in Fig. 2B.

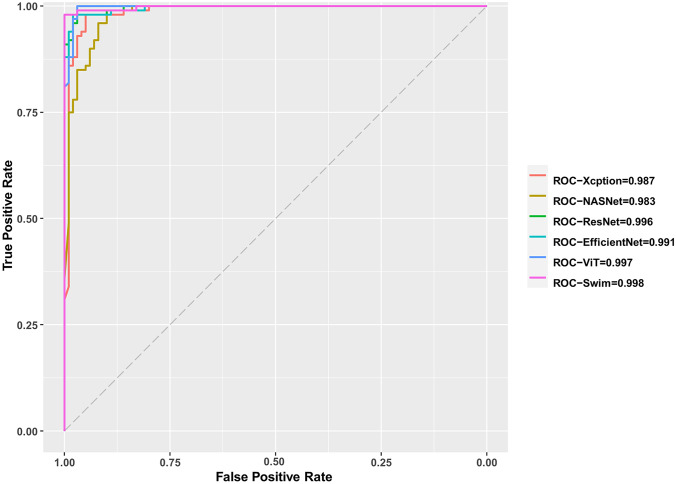

In terms of performance on the test dataset, the Swin model was still the best, with an accuracy of 0.980, followed by ResNet (0.975) and EfficientNet (0.960). Furthermore, the junior expert presented an accuracy of 0.680, a recall of 0.690, and a precision of 0.676, while the senior expert showed an accuracy of 0.755, a recall of 0.740, and a precision of 0.763, as shown in Fig. 2C. Additionally, the Swin model achieved the highest AUC (0.998) among all the models and the two experts, as shown in Table 1 and Fig. 4.
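Table 1 lists AUCs for the two experts that equal their accuracies (0.680 and 0.755). One possible explanation is that a yes/no reading has a single operating point, in which case the AUC reduces to (recall + specificity)/2. The sketch below checks this with a rank-based AUC; how the authors computed the expert AUCs is not stated, and the test-set composition of 100 COVID-19 and 100 normal images is an assumption.

```python
def rank_auc(scores_positive, scores_negative):
    """AUC as the chance that a positive case outscores a negative one (ties count half)."""
    wins = 0.0
    for p in scores_positive:
        for n in scores_negative:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(scores_positive) * len(scores_negative))


# Assumed composition: 100 COVID-19 and 100 normal test images.
# A reader's binary call is scored 1 (COVID-19) or 0 (normal).
junior_pos = [1] * 69 + [0] * 31   # recall 0.690
junior_neg = [1] * 33 + [0] * 67   # specificity 0.670
junior_auc = rank_auc(junior_pos, junior_neg)
```

The DL models, by contrast, output continuous probabilities, so their ROC curves have many operating points and their AUCs need not equal their accuracies.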

Fig. 4.

ROC curves of six models with AUCs. ROC, receiver operating characteristic; AUC, area under ROC

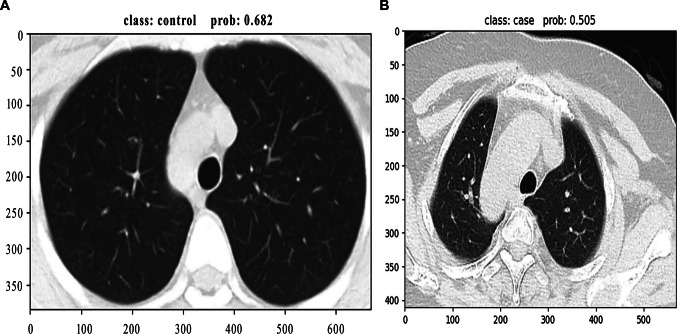

Moreover, two cases misclassified by the Swin model are shown in Fig. 5; one was misdiagnosed as a normal image with a probability of 0.682, and the other was misdiagnosed as a COVID-19 image with a probability of 0.505.

Fig. 5.

Two misclassified cases predicted by the Swin model. Swin, shifted window transformer. A Misdiagnosed as normal image with a probability of 0.682; B misdiagnosed as COVID-19 image with a probability of 0.505

Discussion

In this study, we developed four CNN-based DL models and two transformer-based DL models to distinguish asymptomatic COVID-19 patients from healthy individuals based on chest CT images. The results showed that the transformer-based Swin model presented the best performance. Furthermore, all the DL models performed better than the two experts and took significantly less time.

Currently, methods for diagnosing COVID-19 are not limited to conventional techniques such as RT-PCR and chest CT. Newer detection methods have been developed, including loop-mediated isothermal amplification (LAMP), droplet digital PCR (dd-PCR), and microarrays [32]. Although these new methods showed high sensitivity and specificity in recent studies, they have not yet been widely used because they require a specific environment or are expensive [33–35]. Thus, on most occasions, RT-PCR is still the gold standard for diagnosing COVID-19 infection, despite its imperfect accuracy and long turnaround time [36]. In addition, chest CT is widely adopted clinically as an auxiliary method for COVID-19 diagnosis due to its rapid acquisition speed and high sensitivity [8]. Compared with RT-PCR tests, chest CT has its own advantages, including speed and convenience. Specifically, it often took at least 6 h for RT-PCR tests to show results, while CT reports could be issued immediately. If COVID-19 spreads widely, large-scale PCR tests will consume more time, human resources, materials, and funds than CT examinations. Furthermore, a chest CT scan can sometimes detect COVID-19 infection based on common CT findings alone even if the RT-PCR test is negative [37–41]. However, for patients who display no clinical symptoms and have false-negative RT-PCR results, it may be difficult for radiologists to distinguish between healthy people and asymptomatic infections based on CT features, especially when the newer methods are not accessible. As the number of asymptomatic patients increases, undocumented infections will accelerate the spread of SARS-CoV-2 around the world [16], posing a threat to outbreak control [42].

The past decade has witnessed the emergence of AI as a practicable tool in clinical management [20]. Preliminary studies have confirmed that AI-aided chest CT has good sensitivity for detecting COVID-19 lung pathologies [43–45]. Sen et al. proposed a model that extracted features from chest CT images via a CNN and then accurately selected the most significant COVID-19 characteristics from the patients’ chest CT images [46]. Bai et al. established an AI model that could effectively differentiate COVID-19 from other pneumonia on chest CT (accuracy, 96%; specificity, 96%) and found that AI helped radiologists perform better [47]. In our study, the CT images of asymptomatic patients showed no obvious abnormalities, making it difficult to differentiate healthy people from asymptomatic patients. Thus, we adopted four CNN-based and two transformer-based models to evaluate the feasibility of DL in identifying the CT images of asymptomatic COVID-19 patients. On the one hand, we found that the models’ performance was superior to that of the experts; the main reason is likely that DL algorithms can capture subtle differences between images that radiologists cannot detect. DL algorithms are data driven for feature extraction and can automatically obtain deep and specific feature representations by learning from numerous samples. Moreover, an end-to-end approach allows these algorithms to adapt to the specific medical task requirements [48]. Additionally, DL algorithms can handle high-dimensional data through techniques such as the loss function, optimizer, and hidden layers. On the other hand, the results showed that some DL models performed better than others, which we attribute to their different architectures and hyperparameters. CNNs focus on local modeling through convolution kernels, while transformers focus on global modeling through a self-attention mechanism. Moreover, model accuracy varies with the number of parameters and the throughput. Previous studies suggest that neither CNNs nor transformers are suitable for all model sizes [49]. Therefore, the performance of different DL algorithms varies. In addition, these DL models were pretrained on ImageNet [50], which made training less time-consuming and less complicated [25] and made the models easy for clinicians to use.

The novelty of this study is that computer-aided systems are used for processing and analyzing medical images, which greatly improves the value of medical image utilization and the accuracy of clinical diagnosis. Moreover, the AI-based noninvasive diagnosis of asymptomatically infected patients from CT images offers, to some extent, a solution to the current challenges.

However, this study had several limitations. Our data volume was limited, and more datasets from other regions or countries are needed for further verification. Furthermore, these models were trained and tested on retrospective datasets, so their performance in prospective research might differ. Additionally, the lack of patient details, such as demographic information, medical history, and laboratory tests, is another limitation. Multimodal fusion is a potential future trend that could greatly improve model performance, and we will pay more attention to this research direction.

Conclusions

In conclusion, it was feasible and effective to use DL models for differentiating asymptomatic COVID-19 patients from healthy people on chest CT images. Our study might offer insights into the further application of AI for asymptomatic COVID-19 clinical diagnoses.

Abbreviations

- COVID-19

Coronavirus disease 2019

- CNNs

Convolutional neural networks

- DL

Deep learning

- ViT

Vision transformer

- Swin

Shifted window transformer

- ROC

The receiver operating characteristic

- AUCs

Areas under the receiver operating characteristic curve

- SARS-CoV-2

Severe acute respiratory syndrome coronavirus 2

- RT-PCR

Reverse-transcriptase polymerase chain reaction

- CT

Computed tomography

- TP

True positives

- TN

True negatives

- FP

False positives

- FN

False negatives

- LAMP

Loop-mediated isothermal amplification

- RT-LAMP

Reverse transcription-LAMP

- dd-PCR

Droplet digital PCR

Author Contribution

Z.J.Z. and X.C. performed the study concept and the design; Y.M.Y., L.X.L., and W.Z.L. performed development of methodology and writing. L.X.L. and Z.J.Z. contributed to revision of the paper; Z.Y.J., H.Y., X.Y.H., and L.J.X. provided acquisition, analysis and interpretation of data, and statistical analysis; G.J.W., Y.C.Y., and L.L. were responsible for description and visualization of data. Z.J.Z. provided technical and material support. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Youth Program of Suzhou Health Committee of Jinzhou Zhu (grant number KJXW2019001) and a student extracurricular research project of Soochow University Medical College (grant number 2021YXBKWKY050).

Data Availability

Not applicable. Data are, however, available from the authors upon reasonable request and with permission of the corresponding author.

Declarations

Ethics Approval and Consent to Participate

The study was conducted in accordance with the Declaration of Helsinki and approved by the Institutional Review Board of the First Affiliated Hospital of Soochow University (protocol code 109 and date of approval: March 31, 2022).

Consent for Publication

Informed consent was waived due to the retrospective nature of this study. The waiver applied to the asymptomatic COVID-19 patients and healthy individuals from Yangzhou Third People’s Hospital (center #1), enrolled from August 2020 to June 2021, and from the First Affiliated Hospital of Soochow University (center #2), enrolled in 2020.

Conflict of Interest

The authors declare no competing interests.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Minyue Yin, Xiaolong Liang, and Zilan Wang contributed equally to this study.

References

- 1. Guan WJ, Ni ZY, Hu Y, et al. Clinical Characteristics of Coronavirus Disease 2019 in China. N Engl J Med. 2020;382(18):1708–1720. doi: 10.1056/NEJMoa2002032.

- 2. Chen G, Lu M, Shi Z, et al. Development and validation of machine learning prediction model based on computed tomography angiography-derived hemodynamics for rupture status of intracranial aneurysms: a Chinese multicenter study. Eur Radiol. 2020;30(9):5170–5182. doi: 10.1007/s00330-020-06886-7.

- 3. Ozdemir MA, Ozdemir GD, Guren O. Classification of COVID-19 electrocardiograms by using hexaxial feature mapping and deep learning. BMC Med Inform Decis Mak. 2021;21(1):170. doi: 10.1186/s12911-021-01521-x.

- 4. Togacar M, Ergen B, Comert Z. COVID-19 detection using deep learning models to exploit Social Mimic Optimization and structured chest X-ray images using fuzzy color and stacking approaches. Comput Biol Med. 2020;121:103805. doi: 10.1016/j.compbiomed.2020.103805.

- 5. Chen N, Zhou M, Dong X, et al. Epidemiological and clinical characteristics of 99 cases of 2019 novel coronavirus pneumonia in Wuhan, China: a descriptive study. Lancet. 2020;395(10223):507–513. doi: 10.1016/s0140-6736(20)30211-7.

- 6. Wang D, Hu B, Hu C, et al. Clinical Characteristics of 138 Hospitalized Patients With 2019 Novel Coronavirus-Infected Pneumonia in Wuhan, China. JAMA. 2020;323(11):1061–1069. doi: 10.1001/jama.2020.1585.

- 7. Ai T, Yang Z, Hou H, et al. Correlation of Chest CT and RT-PCR Testing for Coronavirus Disease 2019 (COVID-19) in China: A Report of 1014 Cases. Radiology. 2020;296(2):E32–E40. doi: 10.1148/radiol.2020200642.

- 8. Chung M, Bernheim A, Mei X, et al. CT Imaging Features of 2019 Novel Coronavirus (2019-nCoV). Radiology. 2020;295(1):202–207. doi: 10.1148/radiol.2020200230.

- 9. Huang C, Wang Y, Li X, et al. Clinical features of patients infected with 2019 novel coronavirus in Wuhan, China. Lancet. 2020;395(10223):497–506. doi: 10.1016/s0140-6736(20)30183-5.

- 10. Bernheim A, Mei X, Huang M, et al. Chest CT Findings in Coronavirus Disease-19 (COVID-19): Relationship to Duration of Infection. Radiology. 2020;295(3):200463. doi: 10.1148/radiol.2020200463.

- 11. Rubin GD, Ryerson CJ, Haramati LB, et al. The Role of Chest Imaging in Patient Management During the COVID-19 Pandemic: A Multinational Consensus Statement From the Fleischner Society. Chest. 2020;158(1):106–116. doi: 10.1016/j.chest.2020.04.003.

- 12. Wiersinga WJ, Rhodes A, Cheng AC, Peacock SJ, Prescott HC. Pathophysiology, Transmission, Diagnosis, and Treatment of Coronavirus Disease 2019 (COVID-19): A Review. JAMA. 2020;324(8):782–793. doi: 10.1001/jama.2020.12839.

- 13. Pham TD. A comprehensive study on classification of COVID-19 on computed tomography with pretrained convolutional neural networks. Sci Rep. 2020;10(1):16942. doi: 10.1038/s41598-020-74164-z.

- 14. Bai HX, Hsieh B, Xiong Z, et al. Performance of Radiologists in Differentiating COVID-19 from Non-COVID-19 Viral Pneumonia at Chest CT. Radiology. 2020;296(2):E46–E54. doi: 10.1148/radiol.2020200823.

- 15. McCloskey B, Dar O, Zumla A, Heymann DL. Emerging infectious diseases and pandemic potential: status quo and reducing risk of global spread. Lancet Infect Dis. 2014;14(10):1001–1010. doi: 10.1016/s1473-3099(14)70846-1.

- 16. Bai Y, Yao L, Wei T, et al. Presumed Asymptomatic Carrier Transmission of COVID-19. JAMA. 2020;323(14):1406–1407. doi: 10.1001/jama.2020.2565.

- 17. Kronbichler A, Kresse D, Yoon S, Lee KH, Effenberger M, Shin JI. Asymptomatic patients as a source of COVID-19 infections: A systematic review and meta-analysis. Int J Infect Dis. 2020;98:180–186. doi: 10.1016/j.ijid.2020.06.052.

- 18. Harmon SA, Sanford TH, Xu S, et al. Artificial intelligence for the detection of COVID-19 pneumonia on chest CT using multinational datasets. Nat Commun. 2020;11(1):4080. doi: 10.1038/s41467-020-17971-2.

- 19. Li Z, Zhong Z, Li Y, et al. From community-acquired pneumonia to COVID-19: a deep learning-based method for quantitative analysis of COVID-19 on thick-section CT scans. Eur Radiol. 2020;30(12):6828–6837. doi: 10.1007/s00330-020-07042-x.

- 20. Sedik A, Iliyasu AM, Abd El-Rahiem B, et al. Deploying Machine and Deep Learning Models for Efficient Data-Augmented Detection of COVID-19 Infections. Viruses. 2020;12(7):769. doi: 10.3390/v12070769.

- 21. Zhang J, Xie Y, Pang G, et al. Viral Pneumonia Screening on Chest X-Rays Using Confidence-Aware Anomaly Detection. IEEE Trans Med Imaging. 2021;40(3):879–890. doi: 10.1109/tmi.2020.3040950.

- 22. Zhang K, Liu X, Shen J, et al. Clinically Applicable AI System for Accurate Diagnosis, Quantitative Measurements, and Prognosis of COVID-19 Pneumonia Using Computed Tomography. Cell. 2020;181(6):1423–1433.e11. doi: 10.1016/j.cell.2020.04.045.

- 23. Ozturk T, Talo M, Yildirim EA, Baloglu UB, Yildirim O, Rajendra Acharya U. Automated detection of COVID-19 cases using deep neural networks with X-ray images. Comput Biol Med. 2020;121:103792. doi: 10.1016/j.compbiomed.2020.103792.

- 24. Wang L, Lin ZQ, Wong A. COVID-Net: a tailored deep convolutional neural network design for detection of COVID-19 cases from chest X-ray images. Sci Rep. 2020;10(1):19549. doi: 10.1038/s41598-020-76550-z.

- 25. Turkoglu M. COVIDetectioNet: COVID-19 diagnosis system based on X-ray images using features selected from pre-learned deep features ensemble. Appl Intell (Dordr). 2020;51:1213–1226. doi: 10.1007/s10489-020-01888-w.

- 26.Chollet F: Xception: Deep Learning with Depthwise Separable Convolutions. 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR): 1800–1807, 2017

- 27.Zoph B, Vasudevan V, Shlens J, et al: Learning Transferable Architectures for Scalable Image Recognition. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition: 8697–8710, 2018

- 28.He K, Zhang X, Ren S, et al: Deep Residual Learning for Image Recognition. 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR):770–778, 2016

- 29.Tan M, Le QV: EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. arXiv:1905.11946, 2019

- 30.Liu Z, Lin Y, Cao Y, et al: Swin Transformer: Hierarchical Vision Transformer using Shifted Windows. arXiv:2103.14030, 2021

- 31.Dosovitskiy A, Beyer L, Kolesnikov A, et al: An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. arXiv:2010.11929, 2021

- 32.Roberts A, Chouhan RS, Shahdeo D, et al. A Recent Update on Advanced Molecular Diagnostic Techniques for COVID-19 Pandemic: An Overview. Front Immunol. 2021;12:732756. doi: 10.3389/fimmu.2021.732756.

- 33.Wang M, Fu A, Hu B, et al. Nanopore Targeted Sequencing for the Accurate and Comprehensive Detection of SARS-CoV-2 and Other Respiratory Viruses. Small (Weinheim an der Bergstrasse, Germany). 2020;16(32):e2002169. doi: 10.1002/smll.202002169.

- 34.Matute T, Nuñez I, Rivera M, et al: Homebrew reagents for low cost RT-LAMP. medRxiv: the preprint server for health sciences. 2021. 10.1101/2021.05.08.21256891

- 35.Dong L, Zhou J, Niu C, et al. Highly accurate and sensitive diagnostic detection of SARS-CoV-2 by digital PCR. Talanta. 2021;224:121726. doi: 10.1016/j.talanta.2020.121726.

- 36.Zu ZY, Jiang MD, Xu PP, et al. Coronavirus Disease 2019 (COVID-19): A Perspective from China. Radiology. 2020;296(2):E15–E25. doi: 10.1148/radiol.2020200490.

- 37.Rubin GD, Ryerson CJ, Haramati LB, et al. The Role of Chest Imaging in Patient Management during the COVID-19 Pandemic: A Multinational Consensus Statement from the Fleischner Society. Radiology. 2020;296(1):172–180. doi: 10.1148/radiol.2020201365.

- 38.Vashist SK: In Vitro Diagnostic Assays for COVID-19: Recent Advances and Emerging Trends. Diagnostics (Basel, Switzerland). 10(4):202, 2020. 10.3390/diagnostics10040202

- 39.Dong D, Fang MJ, Tang L, et al. Deep learning radiomic nomogram can predict the number of lymph node metastasis in locally advanced gastric cancer: an international multicenter study. Ann Oncol. 2020;31(7):912–920. doi: 10.1016/j.annonc.2020.04.003.

- 40.Long C, Xu H, Shen Q, et al. Diagnosis of the Coronavirus disease (COVID-19): rRT-PCR or CT? Eur J Radiol. 2020;126:108961. doi: 10.1016/j.ejrad.2020.108961.

- 41.Xie X, Zhong Z, Zhao W, Zheng C, Wang F, Liu J. Chest CT for Typical Coronavirus Disease 2019 (COVID-19) Pneumonia: Relationship to Negative RT-PCR Testing. Radiology. 2020;296(2):E41–E45. doi: 10.1148/radiol.2020200343.

- 42.Li R, Pei S, Chen B, et al. Substantial undocumented infection facilitates the rapid dissemination of novel coronavirus (SARS-CoV-2). Science (New York, NY). 2020;368(6490):489–493. doi: 10.1126/science.abb3221.

- 43.Wang S, Kang B, Ma J, et al. A deep learning algorithm using CT images to screen for Corona virus disease (COVID-19). Eur Radiol. 2021;31(8):6096–6104. doi: 10.1007/s00330-021-07715-1.

- 44.Shi F, Wang J, Shi J, et al. Review of Artificial Intelligence Techniques in Imaging Data Acquisition, Segmentation, and Diagnosis for COVID-19. IEEE Rev Biomed Eng. 2021;14:4–15. doi: 10.1109/rbme.2020.2987975.

- 45.Wang HK, Cheng Y, Song K. Remaining Useful Life Estimation of Aircraft Engines Using a Joint Deep Learning Model Based on TCNN and Transformer. Comput Intell Neurosci. 2021;2021:5185938. doi: 10.1155/2021/5185938.

- 46.Sen S, Saha S, Chatterjee S, Mirjalili S, Sarkar R: A bi-stage feature selection approach for COVID-19 prediction using chest CT images. Appl Intell (Dordrecht, Netherlands). 51:8985–9000, 2021. 10.1007/s10489-021-02292-8

- 47.Bai HX, Wang R, Xiong Z, et al. Artificial Intelligence Augmentation of Radiologist Performance in Distinguishing COVID-19 from Pneumonia of Other Origin at Chest CT. Radiology. 2020;296(3):E156–E165. doi: 10.1148/radiol.2020201491.

- 48.Celard P, Iglesias EL, Sorribes-Fdez JM, Romero R, Vieira AS, Borrajo L: A survey on deep learning applied to medical images: from simple artificial neural networks to generative models. Neural Comput Appl 1–33, 2022. 10.1007/s00521-022-07953-4

- 49.Zhao Y, Wang G, Tang C, et al: A Battle of Network Structures: An Empirical Study of CNN, Transformer, and MLP. arXiv:2108.13002, 2021

- 50.Shin HC, Roth HR, Gao M, et al. Deep Convolutional Neural Networks for Computer-Aided Detection: CNN Architectures, Dataset Characteristics and Transfer Learning. IEEE Trans Med Imaging. 2016;35(5):1285–1298. doi: 10.1109/tmi.2016.2528162.

Data Availability Statement

Not applicable. Data are, however, available from the authors upon reasonable request and with permission of the corresponding author.