Abstract

Objectives

To develop a smartphone application for estimating the Acoustic Voice Quality Index (AVQI) and to evaluate its usability in a clinical setting.

Methods

Automated AVQI estimation and background noise monitoring functions were implemented in the mobile “VoiceScreen” application running on the iOS operating system. The study group consisted of 103 adults: 30 individuals with normal voices and 73 patients with pathological voices. Voice recordings were performed in a clinical setting with the “VoiceScreen” app using iPhone 8 microphones. Voices of 30 patients were recorded before and 1 month after phonosurgical intervention. To evaluate the diagnostic accuracy in differentiating normal and pathological voices, receiver-operating characteristic statistics, i.e., area under the curve (AUC), sensitivity, specificity, and correct classification rate (CCR), were used.

Results

The AVQI discriminated between normal and dysphonic voices with a high level of precision (AUC = 0.937). The AVQI cutoff score of 3.4 demonstrated a sensitivity of 86.3% and a specificity of 95.6%, with a CCR of 89.2%. The preoperative mean AVQI value of 6.01 (SD 2.39) in the post-phonosurgical follow-up group decreased to 2.00 (SD 1.08). No statistically significant difference (p = 0.216) was found between AVQI measurements in the normal voice group and the group 1 month after phonosurgery.

Conclusions

The “VoiceScreen” app represents an accurate and robust tool for voice quality measurement and demonstrates the potential to be used in clinical settings as a sensitive measure of voice changes across phonosurgical treatment outcomes.

Supplementary Information

The online version contains supplementary material available at 10.1007/s00405-022-07546-w.

Keywords: AVQI, Smartphone, VoiceScreen

Introduction

The widespread use of smartphones has made several validated otolaryngology applications available to both clinicians and patients, and these applications may give patients access to some types of physical examination [1, 2]. This became especially important during the COVID-19 pandemic, when access to medical services was restricted. Moreover, the COVID-19 pandemic has likely had an enduring impact on the healthcare system, which is showing a tendency to shift from in-person to telehealth services [3].

The easy availability of healthcare-related apps to patients and healthcare providers stimulates their potential use in clinical practice, as the number of smartphone users worldwide is expected to reach about 6.6 billion by 2022 [4]. Smartphones may therefore represent an effective tool for the detection, assessment, and potentially care of common voice disorders, because these mobile communication devices offer portability and multifunctionality within one device, replacing previous hardware [5–12].

Several reports on the management of voice disorders using voice assessment applications for mobile communication devices have already appeared in the literature. The study performed by Mat Baki et al. demonstrated that voice recordings performed and analyzed with the OperaVox™ application were statistically comparable to the “gold standard” [7]. In 2018, Cesari et al. developed the Android application Vox4Health to estimate in real time the possible presence of a voice disorder by calculating and analyzing the main acoustic voice measures extracted from vocalization of the vowel /a/ [13]. Further development of Vox4Health led to a new multi-parametric acoustic marker, the Dysphonia Detection Index, which is able to evaluate voice quality and detect possible voice disorders [14]. The Voice Analyzer (“VA”) smartphone program for quantitative analysis of voice quality, proposed by Kojima et al. in 2018 and developed further by Fujimura et al. in 2019, resulted in a highly accessible real-time acoustic voice analysis system (VArt) running the Android operating system. It also introduced a new hoarseness index, i.e., a real-time Ra (Rart) [15, 16].

Although smartphones and mobile apps have the potential to be valuable tools for voice assessment outside the clinic, further efforts are needed before they can be used effectively in a clinical setting [17]. This includes the selection of acoustic voice parameters suitable for clinical use.

One of the most promising candidates for this purpose is the Acoustic Voice Quality Index (AVQI), a multiparametric model for voice assessment proposed by Maryn et al. in 2010. It is a multivariate construct based on linear regression analysis that combines six acoustic markers [18, 19]. The significance of the AVQI as a reliable clinical marker of overall voice quality has been demonstrated across multiple studies, which revealed adequate diagnostic accuracy with good discriminatory power in differentiating between normal and abnormal voice qualities, high and consistent concurrent validity and test–retest variability, and high sensitivity to voice quality changes through voice therapy [18, 20–24]. Recent meta-analyses on the validity of the AVQI confirmed it to be a valid tool for the assessment of voice quality, supporting its general clinical utility as a robust objective measure for evaluating overall dysphonia severity across languages and study methods. Consequently, the AVQI is now recognized as a multiparametric construct of voice quality for clinical and research applications around the globe [25, 26]. Notably, AVQI values do not depend on gender or age, which opens the prospect of generalizing this objective, quantitative measure for grading dysphonia severity and for dysphonia screening [27, 28].

A recent study demonstrated that smartphone microphone recordings made in a soundproof room are suitable for voice analysis and AVQI estimation, thus supporting the feasibility of this type of microphone signal for voice screening [29]. Furthermore, a subsequent study showed that AVQI measures obtained from smartphone microphone recordings with experimentally added ambient noise agreed acceptably with simultaneous reference recordings made with a studio microphone in a soundproof room, suggesting that smartphone recordings are suitable for AVQI estimation even in the presence of acceptable ambient noise [30]. However, to the best of our knowledge, only the 2020 study of Grillo et al. has presented an application (VoiceEvalU8) that provides an automatic option for the reliable calculation of several acoustic voice measures and the AVQI on iOS and Android smartphones using Praat source code and algorithms. That work demonstrated that acoustic measures, including the AVQI, captured automatically by VoiceEvalU8 in the client's environment (the participants were instructed to be in a quiet room) were similar to acoustic measures captured manually by two raters using different Praat versions. The average AVQI value of 19 vocally healthy females was consistent with normative female data in the literature [31]. These results led us to presume that voice recordings captured with a smartphone in an ordinary clinical setting are feasible for AVQI estimation.

However, a smartphone-recorded and smartphone-computed AVQI cannot be compared directly with the original AVQI implementation to investigate the reliability of their agreement, because the same voice cannot be recorded simultaneously in a soundproof studio and in a common clinical setting. Consequently, the current research was designed to answer the following questions regarding smartphone-based AVQI estimation in an ordinary clinical environment: (1) are the smartphone-estimated average AVQI values consistent with normative and pathological AVQI data described in the literature; (2) is the diagnostic accuracy of the smartphone-estimated AVQI sufficient to differentiate normal and pathological voices; (3) is the smartphone-estimated AVQI appropriate for measuring AVQI changes after phonosurgical interventions; and (4) is there evidence, from the users’ point of view, of a benefit in using a smartphone AVQI application in clinical settings? We hypothesized that the use of smartphones for voice recording and AVQI estimation in an ordinary clinical environment would be feasible for quantitative voice assessment.

Therefore, the present study aimed to develop a smartphone application for AVQI estimation and to evaluate its usability in clinical settings.

Materials and methods

This study was approved by the Kaunas Regional Ethics Committee for Biomedical Research (No. P2-24/2013) and by Lithuanian State Data Protection Inspectorate for Working with Personal Patient Data (No. 2R-648 (2.6-1)).

The study group consisted of 103 adults (43 men and 60 women). The mean age of the study group was 45.3 (SD 12.57) years. All were examined at the Department of Otolaryngology of the Lithuanian University of Health Sciences, Kaunas, Lithuania. The clinical diagnosis of laryngeal disease served as the criterion for assigning individuals to the pathological voice or normal voice group.

The pathological voice subgroup consisted of 73 patients (28 men and 45 women) with a mean age of 47.7 (SD 11.04) years. They presented with a relatively common and clinically distinctive group of laryngeal diseases and related voice disturbances, i.e., benign and malignant mass lesions of the vocal folds and unilateral paralysis of the vocal fold. The clinical diagnosis was made by an otolaryngologist and was based on clinical examination (complaints, history, voice assessment) and the results of video laryngostroboscopy (VLS) using an XION Endo-STROB DX device (XION GmbH, Berlin, Germany) with a 70° rigid endoscope and/or direct microlaryngoscopy. All patients with mass lesions of the vocal folds underwent endolaryngeal phonosurgical interventions. The voices of all 73 patients were evaluated at baseline; in a subgroup of 30 randomly selected patients with mass lesions of the vocal folds, the voices were also evaluated at a 1-month follow-up after treatment.

The normal voice subgroup consisted of 30 selected healthy volunteers (15 men and 15 women) with a mean age of 39.31 (SD 14.27) years. This subgroup was assembled according to three criteria defining a vocally healthy subject: (1) all selected subjects considered their voice to be normal and had no current voice complaints and no history of chronic laryngeal diseases or voice disorders; (2) no pathological alterations in the larynx were found during VLS; and (3) all voice samples were evaluated as normal by otolaryngologists working in the field of voice.

Demographic data of the study group and diagnoses of the pathological voice subgroup are presented in Table 1.

Table 1.

Demographic data of the study group

| Diagnosis | Total | Mean age (years) | SD (years) |
|---|---|---|---|
| Normal voice | 30 | 39.31 | 14.27 |
| Vocal fold nodules | 8 | 36.5 | 4.37 |
| Vocal fold polyp | 24 | 48 | 12.21 |
| Vocal fold cyst | 3 | 44 | 7.81 |
| Vocal fold cancer | 5 | 46 | 4.63 |
| Vocal fold polypoid hyperplasia | 16 | 53.56 | 7.65 |
| Vocal fold keratosis | 6 | 51.16 | 13.74 |
| Vocal fold papilloma | 7 | 45.14 | 14.29 |
| Unilateral vocal fold paralysis | 3 | 54.33 | 1.15 |
| Granuloma | 1 | 38 | - |

SD standard deviation

A previous study found no correlation between AVQI-assessed voice quality and the subject’s age or sex [27]. Therefore, in this study, the control and patient groups were considered suitable for AVQI-related data analysis even though they were not matched by sex and age.

Development of smartphone-based automated AVQI estimation application

Background noise monitoring, voice recording, and automated AVQI calculation were implemented for devices running the iOS operating system. Praat scripting functionality was used to automate the audio file preparation and selection process, which was added to the AVQI Praat script as additional functionality. Consequently, the “VoiceScreen” application records the voice, automatically extracts acoustic voice features, and displays the AVQI result alongside a recommendation to the user (for details, see the Supplementary Material).

Voice recordings

Voice samples from each subject were recorded in an ordinary clinical environment, i.e., the patient's ward, using the internal (bottom) microphone of an iPhone 8 smartphone held 30.0 cm from the mouth at a microphone-to-mouth angle of about 90°. The background noise averaged 29.61 dB SPL, and the signal-to-noise ratio (SNR) relative to the voiced recordings averaged 38.11 dB; therefore, this environment was considered suitable both for voice recording and for extraction of acoustic parameters [32].
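As a rough illustration of how an SNR value of this kind can be obtained, the following sketch computes the SNR in decibels from the root-mean-square (RMS) amplitudes of a voiced segment and a noise-only segment. The function names and sample values are hypothetical; the sketch is not taken from the “VoiceScreen” implementation, whose exact SNR procedure is not described here.

```python
import math

def rms(samples):
    """Root-mean-square amplitude of a sequence of audio samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def snr_db(voice_samples, noise_samples):
    """SNR in dB, computed as 20 * log10(RMS of voice / RMS of noise)."""
    return 20.0 * math.log10(rms(voice_samples) / rms(noise_samples))

# Hypothetical samples: the voiced segment has 100 times the RMS amplitude of the noise.
voice = [0.5, -0.5, 0.5, -0.5]
noise = [0.005, -0.005, 0.005, -0.005]
snr = snr_db(voice, noise)
```

An amplitude ratio of 100 corresponds to 40 dB, which is of the same order as the average SNR reported for the study recordings.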

Each participant was required to complete two vocal tasks, which were digitally recorded. The tasks consisted of (a) sustaining phonation of the vowel sound [a:] for at least 4 s and (b) reading a phonetically balanced text segment in Lithuanian, "Turėjo senelė žilą oželį" (The grandmother had a little grey goat). As an individual's vocal loudness level may act as a significant confounding factor during clinical voice assessment, the participants were asked to complete both vocal tasks at levels as equal as possible, i.e., at a moderate and personally comfortable loudness and pitch. All voice recordings were captured and processed on the smartphone using the automated AVQI estimation system, the “VoiceScreen” app.

System usability scale

We assessed the usability of the “VoiceScreen” app using the system usability scale (SUS) questionnaire. The SUS is a global, widely employed, subjective tool for assessing the usability of a system or product [33]. The scale consists of 10 items rated on a five-point Likert-style response format ranging from 1 (strongly disagree) to 5 (strongly agree). A score of 68 points represents the average usability score across systems [34]. Total scores also correspond to seven qualitative adjective ratings, ranging from “worst imaginable” at the low end to “best imaginable” at the high end, or to the ranges 0–64 (not acceptable), 65–84 (acceptable), and 85–100 (excellent) [35].
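For readers unfamiliar with how a SUS total is derived from the ten item ratings, a minimal sketch follows. It uses the standard SUS scoring rule (odd-numbered items contribute the rating minus 1, even-numbered items contribute 5 minus the rating, and the sum is multiplied by 2.5), which is not spelled out in the text above, together with the acceptability ranges quoted there. The function names are hypothetical.

```python
def sus_score(responses):
    """Standard SUS total (0-100) from ten item ratings, each between 1 and 5."""
    if len(responses) != 10 or any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("SUS needs ten responses, each between 1 and 5")
    total = 0
    for index, response in enumerate(responses):
        if index % 2 == 0:
            # Items 1, 3, 5, 7, 9 are positively worded.
            total += response - 1
        else:
            # Items 2, 4, 6, 8, 10 are negatively worded.
            total += 5 - response
    return total * 2.5

def sus_band(score):
    """Acceptability band using the ranges quoted in the text."""
    if score < 65:
        return "not acceptable"
    if score < 85:
        return "acceptable"
    return "excellent"

# Hypothetical respondent who fully agrees with positive items
# and fully disagrees with negative items.
best_case = sus_score([5, 1, 5, 1, 5, 1, 5, 1, 5, 1])
```

Because each item contributes 0 to 4 points, totals always fall on multiples of 2.5 between 0 and 100.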

All participants of this study were asked to fill in the SUS questionnaire immediately after the self-registration of AVQI using the iPhone 8 smartphone and “VoiceScreen” application. This test was done for both groups: patients before phonosurgical procedure and the normal voice control group. The linguistically and culturally adapted Lithuanian version of SUS was utilized for this study.

Statistical analysis

All statistical analyses were completed using IBM SPSS Statistics 25.0 (IBM Corp., Armonk, NY). Group means and standard deviations (SD) for each parameter were obtained for the preoperative and 1-month postoperative trials. Student's t test and the paired-samples t test were used to compare the means of two independent and two related samples, respectively. All results were considered statistically significant at p ≤ 0.05.

The receiver-operating characteristics (ROC) curve, sensitivity, specificity, and the area under the ROC curve (AUC) were used to assess the accuracy of the AVQI in discriminating between normal and pathological voices. The applicability of the best cutoff score for a clinical decision was assessed by the Youden index and the balance between the likelihood ratio for a positive result (LR+) and the likelihood ratio for a negative result (LR−) [36]. To summarize the accuracy measures and the feasibility of using the AVQI in a clinical setting, the correct classification rate (CCR) was calculated.
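The accuracy measures named above follow directly from a 2 × 2 classification table. The sketch below shows the usual definitions; the function name and the counts are hypothetical and are not the study data. Values are returned as fractions, whereas the paper reports percentages.

```python
def diagnostic_metrics(tp, fn, tn, fp):
    """Accuracy measures from true/false positive and negative counts.

    Assumes specificity is neither 0 nor 1, otherwise a likelihood ratio
    would require division by zero.
    """
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "youden": sensitivity + specificity - 1.0,
        "lr_plus": sensitivity / (1.0 - specificity),
        "lr_minus": (1.0 - sensitivity) / specificity,
        "ccr": (tp + tn) / (tp + fn + tn + fp),
    }

# Hypothetical counts: 100 pathological voices and 100 normal voices.
metrics = diagnostic_metrics(tp=90, fn=10, tn=80, fp=20)
```

A high LR+ and a low LR− both indicate that a test result shifts the probability of disease substantially, which is why the two are considered together with the Youden index.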

Results

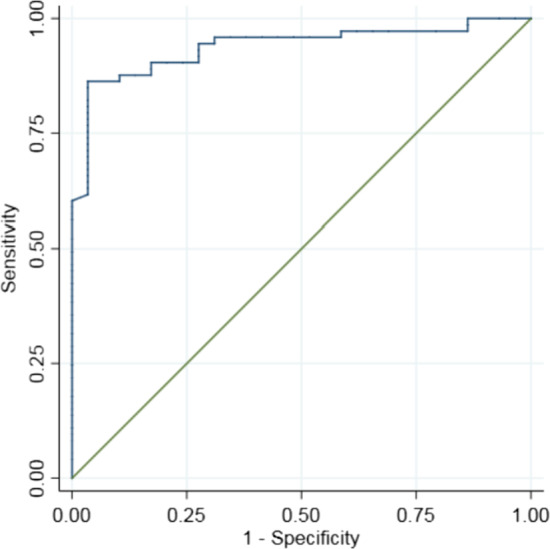

The ROC analysis applied to estimate the diagnostic accuracy of the automated AVQI version, i.e., the “VoiceScreen” application, in differentiating normal and pathological voices revealed outstanding precision. First, the ROC curve was visually inspected to specify the optimal AVQI cutoff scores according to general interpretation guidelines [36]. The area under the ROC curve occupied most of the graph’s space, already revealing respectable power to discriminate between normophonia and dysphonia (Fig. 1).

Fig. 1.

Receiver-operating characteristics (ROC) curve illustrating the diagnostic accuracy of “VoiceScreen” app in discriminating normal/pathological voice

Second, as demonstrated by the AUC statistics, the AVQI discriminated between the normal voices of the control group and the dysphonic voices of the patient group before phonosurgery with a high level of precision (AUC = 0.937). A Youden index of 82.6 was considered optimal for identifying the best cutoff score. The AVQI cutoff score of 3.4 demonstrated a sensitivity of 86.3% and a specificity of 95.6%, with a CCR of 89.2%. The LR+ met the recommended criterion of LR+ ≥ 10, and the LR− almost reached the recommended value of LR− ≤ 0.1 (Table 2).

Table 2.

Statistics illustrating the ability of the “VoiceScreen” app to differentiate normal and pathological voices

| Measure | AUC | Threshold | Sensitivity | Specificity | CCR | LR+ | LR− | Youden index |
|---|---|---|---|---|---|---|---|---|
| AVQI | 0.937 | 3.4 | 86.3% | 95.6% | 89.2% | 25 | 0.14 | 82.6 |

AVQI acoustic voice quality index, AUC area under curve, CCR correct classification rate, LR+ likelihood ratio for a positive result, LR− likelihood ratio for a negative result
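The way an AUC and a Youden-optimal cutoff such as those in Table 2 are obtained from raw scores can be sketched as follows. The AVQI scores are hypothetical, the function names are illustrative, and the study itself used SPSS rather than this code. A voice is classified as pathological when its score is at or above the cutoff, since higher AVQI values indicate poorer voice quality.

```python
def auc(normal_scores, pathological_scores):
    """AUC as the probability that a pathological score exceeds a normal one.

    Ties count as one half; this equals the Mann-Whitney U statistic
    divided by the number of score pairs.
    """
    wins = 0.0
    for p in pathological_scores:
        for n in normal_scores:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pathological_scores) * len(normal_scores))

def best_cutoff(normal_scores, pathological_scores):
    """Return (cutoff, youden) maximizing sensitivity + specificity - 1."""
    best = (None, -1.0)
    for cutoff in sorted(set(normal_scores) | set(pathological_scores)):
        sensitivity = sum(s >= cutoff for s in pathological_scores) / len(pathological_scores)
        specificity = sum(s < cutoff for s in normal_scores) / len(normal_scores)
        youden = sensitivity + specificity - 1.0
        if youden > best[1]:
            best = (cutoff, youden)
    return best

# Hypothetical AVQI scores, not the study data.
normal = [1.2, 1.9, 2.3, 2.8, 3.0, 3.6]
pathological = [3.1, 3.9, 4.7, 5.5, 6.8]
area = auc(normal, pathological)
cutoff, youden = best_cutoff(normal, pathological)
```

Only observed scores are tried as candidate cutoffs, which is sufficient because sensitivity and specificity change only at those values.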

The mean values and standard deviations of the AVQI in the control group and the patients' group before and 1 month after phonosurgery are presented in Table 3.

Table 3.

Comparison of mean AVQI scores in the control group and patients' group before and after phonosurgery

| Group | AVQI score, mean (SD) | vs. AVQI before surgery | vs. AVQI after surgery | vs. AVQI control group |
|---|---|---|---|---|
| AVQI before surgery | 6.01 (SD 2.39) | – | p < 0.001 | p < 0.001 |
| AVQI after surgery | 2.00 (SD 1.08) | p < 0.001 | – | p = 0.216 |
| AVQI control group | 2.34 (SD 1.06) | p < 0.001 | p = 0.216 | – |

AVQI acoustic voice quality index, SD standard deviation

As shown in Table 3, the mean AVQI values were statistically significantly higher in the patient group before phonosurgery than in the control group, reflecting considerably deteriorated voice quality in the patient group. To evaluate the effectiveness of phonosurgical intervention in voice rehabilitation 1 month after surgery, the mean postoperative AVQI values were compared with the mean values of the control group. As follows from Table 3, the preoperative mean AVQI value of 6.01 (SD 2.39) in the follow-up group decreased to 2.00 (SD 1.08) 1 month after phonosurgical intervention, demonstrating significant voice improvement. This was confirmed by the paired t test, which showed a statistically significant difference between pre- and postoperative AVQI measurements (p < 0.001). Moreover, an independent-samples t test revealed no statistically significant difference (p = 0.216) between AVQI measurements in the normal voice group and the group 1 month after phonosurgery.
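To make the independent-samples comparison concrete, the sketch below computes a Student t statistic with pooled variance from the rounded summary values in Table 3, assuming 30 subjects in each of the two groups as described in the Methods. The function name is hypothetical. Because the inputs are rounded, the result is only an approximation of the SPSS analysis, and converting the statistic to a p value additionally requires the t distribution, which is not included here.

```python
import math

def pooled_t(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Student t statistic and degrees of freedom for two independent groups,
    computed from summary statistics with a pooled variance."""
    df = n_a + n_b - 2
    pooled_var = ((n_a - 1) * sd_a ** 2 + (n_b - 1) * sd_b ** 2) / df
    std_err = math.sqrt(pooled_var * (1.0 / n_a + 1.0 / n_b))
    return (mean_a - mean_b) / std_err, df

# Table 3 summary values: postoperative group vs. control group (n = 30 each, assumed).
t_stat, df = pooled_t(2.00, 1.08, 30, 2.34, 1.06, 30)
```

The small magnitude of the resulting statistic relative to its 58 degrees of freedom is in line with the non-significant difference reported above.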

Results of the assessment of “VoiceScreen” app usability with SUS questionnaire both in patients’ and control groups are presented in Table 4.

Table 4.

Results of SUS assessment of the “VoiceScreen” app in the voice-disordered patient group and the control group

| Measure | Mean (SD) | Median | Minimum | Maximum |
|---|---|---|---|---|
| SUS score in patients’ group | 92.8 (8.68) | 95 | 65 | 100 |
| SUS score in the control group | 97.9 (2.84) | 100 | 90 | 100 |

SUS system usability scale, SD standard deviation

As shown in Table 4, the mean SUS scores for the “VoiceScreen” app exceeded the average usability score of 68 recommended in the literature across systems and corresponded to a patient-perceived adjective rating of “excellent”, with a mean of 92.8 points in the voice-disordered patient group and 97.9 points in the control group. The Pearson correlation coefficient was used to investigate the correlation between SUS score and the individual's age. No significant correlation between SUS scores and participants' age was found in either the patient group (r = −0.24, p = 0.06) or the control group (r = −0.15, p = 0.42).
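For reference, the Pearson correlation coefficient used for the age analysis can be computed as in the following sketch. The ages and SUS scores are hypothetical and only illustrate the calculation; the function name is illustrative.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

# Hypothetical data: participant ages and their SUS scores.
ages = [25, 34, 41, 52, 60]
sus = [100.0, 97.5, 95.0, 97.5, 90.0]
r = pearson_r(ages, sus)
```

A negative coefficient, as in this hypothetical sample, would mean that older participants tended to give slightly lower scores; whether such a trend is statistically significant depends on the sample size.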

Conclusions and discussion

In the present study, the novel “VoiceScreen” application for AVQI estimation and detection of voice deterioration in patients with various voice disorders and in healthy controls was employed for the first time in an ordinary clinical environment. Analysis of the results revealed that the AVQI showed a strong ability to discriminate between normal and pathological voices as determined by the clinical diagnosis of a laryngeal disorder. The AVQI cutoff score of 3.4 was associated with very good test accuracy (AUC = 0.937), with a balance between sensitivity and specificity (86.3% and 95.6%, respectively) and a CCR of 89.2%. Moreover, a comparison of the mean AVQI values before and 1 month after surgical treatment confirmed the effectiveness of phonosurgical treatment in voice rehabilitation and demonstrated the sensitivity of AVQI measurements obtained with the “VoiceScreen” app to voice quality changes over time.

In general, this is in concordance with literature data confirming that smartphones are comparable to standard external microphones in the recording and acoustic analysis of normal and dysphonic voices [8, 37–39]. The study by van der Woerd et al. demonstrated that smartphones can capture acceptable recordings for voice acoustic signal analysis even in non-optimized conditions, although a sound-treated setting is ideal for voice sample collection [40].

The lower vulnerability of the AVQI to environmental noise compared with other complex acoustic markers, demonstrated in the study by Maryn et al., also served as a substantial argument for choosing AVQI estimation from smartphone voice recordings in the present study [9]. Moreover, Grillo et al. demonstrated that different smartphones and a head-mounted microphone yielded no significant within-subject differences for either women or men when comparing the AVQI across different voice analysis programs [39]. According to the study by Bottalico et al., the AVQI was also one of the most robust parameters with regard to the interaction between acoustic voice quality parameters and room acoustics [41].

Taken together, the literature data and the results of the present study suggest that the “VoiceScreen” app performs adequately in ordinary clinical environments and comparably to AVQI estimation under standard conditions (voice recording in a sound-proof room, use of high-quality oral microphones and sophisticated sound cards) [29, 30]. Moreover, no statistically significant difference was found between the mean AVQI measurements in the normal voice group and the group 1 month after phonosurgery, which confirms the effectiveness of phonosurgical treatment in voice rehabilitation and demonstrates the sensitivity of AVQI measurements obtained with the “VoiceScreen” app to voice quality changes over time. The AVQI has been shown to be highly effective in differentiating normal from pathological voices based on perceptual assessment [18, 21, 24, 42]. A strong correlation between AVQI measures and the auditory–perceptual Grade (G) rating from the Grade Roughness Breathiness Asthenia Strain (GRBAS) protocol was revealed in previous investigations [22, 43]. Other studies demonstrated that the AVQI is also highly effective in differentiating normal and pathological voices based on the “gold standard”, i.e., voice disorder diagnosis [29, 44]. The results of the present investigation are in concordance with this statement. However, a detailed assessment of the functional outcomes of endolaryngeal phonosurgical interventions was not within the scope of the present study. Therefore, we did not use the complete battery of voice assessment measures, including auditory–perceptual evaluation.

In the present study, the possible impact of background noise on the accuracy of AVQI measurements with the “VoiceScreen” app was reduced by an automated background noise monitoring function developed specifically for this app. Background noise monitoring starts immediately after the voice test is started; if the environment is not quiet enough, i.e., the threshold of 30 dB SNR is not reached, the user is advised to move to a more suitable, less noisy environment before the voice recording is allowed to begin.
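The gating behavior described above can be sketched as a simple threshold check. Only the 30 dB threshold comes from the text; the function name, the messages, and the assumption that an SNR estimate is already available are hypothetical, and the sketch is not the app's actual implementation.

```python
SNR_THRESHOLD_DB = 30.0  # threshold quoted in the text

def check_environment(snr_db, threshold_db=SNR_THRESHOLD_DB):
    """Return (ok, message); recording may start only if the SNR reaches the threshold."""
    if snr_db >= threshold_db:
        return True, "Environment is quiet enough; recording can start."
    return False, "Environment is too noisy; please move to a quieter place."

# 38.11 dB is the average SNR reported for the study recordings.
ok, message = check_environment(38.11)
```

Blocking the recording until the check passes, rather than merely warning the user, keeps noisy samples from ever reaching the AVQI calculation.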

The analysis of the SUS tests showed that the application was well received by the study participants, achieving an average SUS score of about 94.5 points. The “VoiceScreen” app was considered easy to use, well organized, and clearly designed. Our results showed that the “VoiceScreen” app is considered user-friendly by both patients and healthy individuals and that its content is easily understood. The excellent usability scores of the “VoiceScreen” app registered with the SUS were likely related to its intelligibility and simplicity of use, as only one button is required to control the system. Of note, high SUS scores were achieved in both the patient and healthy control groups independently of age, education, and occupation. This supports the feasibility of the “VoiceScreen” app in clinical settings as a simple, quick, and user-friendly tool.

Several limitations of the present pilot study have to be considered. The total study group was rather small and not equally representative of the wide diversity of voice disorders. The pathological voice subgroup consisted only of clinically evident and distinctive laryngeal diseases, i.e., benign and malignant mass lesions of the vocal folds. Therefore, the voice disturbances in this group were rather severe and required phonosurgical intervention. This circumstance possibly contributed to the very high discriminative power of the AVQI obtained with the “VoiceScreen” app in differentiating normal from pathological voices. It is generally accepted that the AVQI is a robust tool for discriminating among different degrees of vocal deviation, and that it discriminates more accurately between voices with moderate and severe deviations [45]. However, it is important to evaluate the feasibility and validity of the “VoiceScreen” app in cases where voices have been perceptually judged as slightly abnormal or normal despite the presence of laryngeal disease. Some evidence in the literature suggests that in such cases the differentiation accuracy of the AVQI could be insufficient [46]. Therefore, further studies on the usability of the “VoiceScreen” app across a wide diversity of voice pathology, including functional voice disorders, are required.

The current version of the “VoiceScreen” app runs only on the iOS operating system, which limits its availability to iPhone smartphones, tablets, and other iOS devices. Therefore, further development of the system is foreseen to make it suitable for cross-platform use. Testing the usability of the “VoiceScreen” app in other settings (for instance, a general practitioner's office) would also be highly desirable.

During the COVID-19 pandemic, patients’ interactions with healthcare providers became restricted because of the increased risk of exposure to COVID-19 for both parties. This increased the importance of telemedicine and remote consultations. At the same time, the widespread use of smartphones makes voice assessment applications easily available to clinicians and patients. As a result, patients may potentially be provided with a primary tool for objective voice evaluation and screening. This may increase patients’ motivation to visit an otolaryngologist or voice specialist in a timely manner, thereby improving early diagnosis of laryngeal diseases, including laryngeal carcinoma.

In summary, according to the results of the present study, the “VoiceScreen” system represents an accurate and robust tool for voice quality measurement and demonstrates the potential to be used in clinical settings as a sensitive measure of voice changes across phonosurgical treatment outcomes. The potential use of the iOS-based “VoiceScreen” app in a clinical setting could be in concordance with the standard of the European Laryngological Society basic protocol for functional assessment of voice pathology regarding voice acoustics [43].

Moreover, the portability, patient/user-friendliness, and applicability of the “VoiceScreen” app beyond clinical settings may ensure wider utility; the app may therefore be preferred by patients and clinicians for voice assessment and data collection both at home and in clinical settings. In addition, acoustic voice analysis using the “VoiceScreen” app may be an important part of the follow-up and quantitative monitoring of voice treatment results.


Funding

This research has received funding from European Regional Development Fund (project No 13.1.1-LMT-K-718-05-0027) under grant agreement with the Research Council of Lithuania (LMTLT). Funded as European Union’s measure in response to COVID-19 pandemic.

Declarations

Conflict of interest

No conflict of interests.

Ethical approval

This study was approved by the Kaunas Regional Ethics Committee for Biomedical Research (No. P2-24/2013) and by Lithuanian State Data Protection Inspectorate for Working with Personal Patient Data (No. 2R-648 (2.6-1)).

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Casale M, Costantino A, Rinaldi V, et al. Mobile applications in otolaryngology for patients: an update. Laryngoscope Investig Otolaryngol. 2018;3(6):434–438. doi: 10.1002/lio2.201. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Trecca EM, Lonigro A, Gelardi M, et al. Mobile applications in otolaryngology: a systematic review of the literature, apple app store and the google play store. Ann Otol Rhinol Laryngol. 2021;130(1):78–91. doi: 10.1177/0003489420940350. [DOI] [PubMed] [Google Scholar]

- 3.Grillo EU, Corej B, Wolfberg J. Normative values of client-reported outcome measures and self-ratings of six voice parameters via the VoiceEvalU8 app. J Voice. 2021 doi: 10.1016/j.jvoice.2021.10.026. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Statistica. Number of smartphone users worldwide. In: 2014–2020. https://www.statista.com/statistics/330695/number-of-smartphone-usersworldwide/. Accessed September 2021

- 5.Kardous CA, Shaw PB. Evaluation of smartphone sound measurement applications. J Acoust Soc Am. 2014;140(4):EL327. doi: 10.1121/1.4865269. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Vogel AP, Rosen KM, Morgan AT, Reilly S. Comparability of modern recording devices for speech analysis: smartphone, landline, laptop, and hard disc recorder. Folia Phoniatr Logop. 2014;66(6):244–250. doi: 10.1159/000368227. [DOI] [PubMed] [Google Scholar]

- 7.Mat Baki M, Wood G, Alston M, et al. Reliability of OperaVOX against multidimensional voice program (MDVP) Clin Otolaryngol. 2015;40(1):22–28. doi: 10.1111/coa.12313. [DOI] [PubMed] [Google Scholar]

- 8.Manfredi C, Lebacq J, Cantarella G, et al. Smartphones offer new opportunities in clinical voice research. J Voice. 2017;1:111.e1–111.e7. doi: 10.1016/j.jvoice.2015.12.020. [DOI] [PubMed] [Google Scholar]

- 9. Maryn Y, Ysenbaert F, Zarowski A, Vanspauwen R. Mobile communication devices, ambient noise, and acoustic voice measures. J Voice. 2017;2:248.e11–248.e23. doi: 10.1016/j.jvoice.2016.07.023.

- 10. Lebacq J, Schoentgen J, Cantarella G, et al. Maximal ambient noise levels and type of voice material required for valid use of smartphones in clinical voice research. J Voice. 2017;5:550–556. doi: 10.1016/j.jvoice.2017.02.017.

- 11. Schaeffler F, Jannetts S, Beck J (2019) Reliability of clinical voice parameters captured with smartphones—measurements of added noise and spectral tilt. In: Proceedings of the Annual Conference of the International Speech Communication Association, INTERSPEECH. doi: 10.21437/Interspeech.2019-2910.

- 12. Munnings AJ. The current state and future possibilities of mobile phone “Voice Analyser” applications, in relation to otorhinolaryngology. J Voice. 2019;34(4):527–532. doi: 10.1016/j.jvoice.2018.12.018.

- 13. Cesari U, De Pietro G, Marciano E, et al. Voice disorder detection via an m-health system: design and results of a clinical study to evaluate Vox4Health. Biomed Res Int. 2018. doi: 10.1155/2018/8193694.

- 14. Verde L, De Pietro G, Alrashoud M, et al. Dysphonia detection index (DDI): a new multi-parametric marker to evaluate voice quality. IEEE Access. 2019. doi: 10.1109/ACCESS.2019.2913444.

- 15. Kojima T, Fujimura S, Hori R, et al. An innovative voice analyzer “VA” smart phone program for quantitative analysis of voice quality. J Voice. 2019. doi: 10.1016/j.jvoice.2018.01.026.

- 16. Fujimura S, Kojima T, Okanoue Y, et al. Real-time acoustic voice analysis using a handheld device running Android operating system. J Voice. 2019;34(6):823–829. doi: 10.1016/j.jvoice.2019.05.013.

- 17. Petrizzo DPP. Smartphone use in clinical voice recording and acoustic analysis: a literature review. J Voice. 2021. doi: 10.1016/j.jvoice.2019.10.006.

- 18. Maryn Y, De Bodt M, Roy N. The Acoustic Voice Quality Index: Toward improved treatment outcomes assessment in voice disorders. J Commun Disord. 2010;43:161–174. doi: 10.1016/j.jcomdis.2009.12.004.

- 19. Maryn Y, Roy N. Sustained vowels and continuous speech in the auditory-perceptual evaluation of dysphonia severity. J Soc Bras Fonoaudiol. 2012;24(2):107–112. doi: 10.1590/S2179-64912012000200003.

- 20. Barsties B, Maryn Y. The Acoustic Voice Quality Index: toward expanded measurement of dysphonia severity in German subjects. HNO. 2012. doi: 10.1007/s00106-012-2499-9.

- 21. Hosokawa K, Barsties B, Iwahashi T, et al. Validation of the acoustic voice quality index in the Japanese language. J Voice. 2017;31:260.e1–260.e9. doi: 10.1016/j.jvoice.2016.05.010.

- 22. Uloza V, Petrauskas T, Padervinskis E, et al. Validation of the acoustic voice quality index in the Lithuanian language. J Voice. 2017;31:257.e1–257.e11. doi: 10.1016/j.jvoice.2016.06.002.

- 23. Kim GH, Lee YW, Bae IH, et al. Validation of the acoustic voice quality index in the Korean language. J Voice. 2019;33:948.e1–948.e9. doi: 10.1016/J.JVOICE.2018.06.007.

- 24. Kankare E, Barsties V, Latoszek B, Maryn Y, et al. The acoustic voice quality index version 02.02 in the Finnish-speaking population. Logoped Phoniatr Vocol. 2020;45:49–56. doi: 10.1080/14015439.2018.1556332.

- 25. Jayakumar T, Benoy JJ. Acoustic Voice Quality Index (AVQI) in the measurement of voice quality: a systematic review and meta-analysis. J Voice. 2022. doi: 10.1016/J.JVOICE.2022.03.018.

- 26. Batthyany C, Latoszek BBV, Maryn Y. Meta-analysis on the validity of the acoustic voice quality index. J Voice. 2022. doi: 10.1016/J.JVOICE.2022.04.022.

- 27. Barsties V, Latoszek B, Ulozaitė-Stanienė N, Maryn Y, et al. The influence of gender and age on the acoustic voice quality index and dysphonia severity index: a normative study. J Voice. 2017. doi: 10.1016/j.jvoice.2017.11.011.

- 28. Batthyany C, Maryn Y, Trauwaen I, et al. A case of specificity: How does the acoustic voice quality index perform in normophonic subjects? Appl Sci. 2019. doi: 10.3390/app9122527.

- 29. Ulozaite-Staniene N, Petrauskas T, Šaferis V, Uloza V. Exploring the feasibility of the combination of acoustic voice quality index and glottal function index for voice pathology screening. Eur Arch Oto-Rhino-Laryngol. 2019;276:1737–1745. doi: 10.1007/s00405-019-05433-5.

- 30. Uloza V, Ulozaitė-Stanienė N, Petrauskas TKR. Accuracy of acoustic voice quality index captured with a smartphone—measurements with added ambient noise. J Voice. 2021;S0892–1997(21):00073–74. doi: 10.1016/j.jvoice.2021.01.025.

- 31. Grillo EU, Wolfberg J. An assessment of different Praat versions for acoustic measures analyzed automatically by VoiceEvalU8 and manually by two raters. J Voice. 2020;S0892–1997(20):30442–30452. doi: 10.1016/j.jvoice.2020.12.003.

- 32. Deliyski DD, Shaw HS, Evans MK. Adverse effects of environmental noise on acoustic voice quality measurements. J Voice. 2005;19:15–28. doi: 10.1016/j.jvoice.2004.07.003.

- 33. Brooke J. The system usability scale: a quick and dirty usability scale. Usability Eval Ind. 1996;189:4–7.

- 34. Bangor A, Kortum PT, Miller JT. An empirical evaluation of the system usability scale. Int J Hum Comput Interact. 2008;24:574–594. doi: 10.1080/10447310802205776.

- 35. Mclellan S, Muddimer A, Peres SC. The effect of experience on system usability scale ratings. J Usability Stud. 2012;7:56–67.

- 36. Dollaghan CA. The handbook for evidence-based practice in communication disorders. Baltimore, MD: Brookes; 2007.

- 37. Lin E, Hornibrook J, Ormond T. Evaluating iPhone recordings for acoustic voice assessment. Folia Phoniatr Logop. 2012. doi: 10.1159/000335874.

- 38. Uloza V, Padervinskis E, Vegiene A, et al. Exploring the feasibility of smart phone microphone for measurement of acoustic voice parameters and voice pathology screening. Eur Arch Otorhinolaryngol. 2015;272:3391–3399. doi: 10.1007/s00405-015-3708-4.

- 39. Grillo EU, Brosious JN, Sorrell SL, Anand S. Influence of smartphones and software on acoustic voice measures. Int J Telerehabilit. 2016;8:9–14. doi: 10.5195/IJT.2016.6202.

- 40. van der Woerd B, Wu M, Parsa V, Doyle PC, Fung K. Evaluation of acoustic analyses of voice in nonoptimized conditions. J Speech Lang Hear Res. 2020;63(12):3991–3999. doi: 10.1044/2020_JSLHR-20-00212.

- 41. Bottalico P, Codino J, Cantor-Cutiva LC, et al. Reproducibility of voice parameters: the effect of room acoustics and microphones. J Voice. 2018. doi: 10.1016/j.jvoice.2018.10.016.

- 42. Kim GH, Lee YW, Bae IH, Park HJ, Lee BJ, Kwon SB. Comparison of two versions of the acoustic voice quality index for quantification of dysphonia severity. J Voice. 2020;34(3):489.e11–489.e19. doi: 10.1016/j.jvoice.2018.11.013.

- 43. Dejonckere PH, Bradley P, Clemente P, et al. A basic protocol for functional assessment of voice pathology, especially for investigating the efficacy of (phonosurgical) treatments and evaluating new assessment techniques. Guideline elaborated by the Committee on Phoniatrics of the European Laryngological Society (ELS). Eur Arch Otorhinolaryngol. 2001;258:77–82. doi: 10.1007/S004050000299.

- 44. Barsties V, Latoszek B, Ulozaitė-Stanienė N, Petrauskas T, et al. Diagnostic accuracy of dysphonia classification of DSI and AVQI. Laryngoscope. 2019;129:692–698. doi: 10.1002/LARY.27350.

- 45. Englert M, Lopes L, Vieira V, Behlau M. Accuracy of acoustic voice quality index and its isolated acoustic measures to discriminate the severity of voice disorders. J Voice. 2020. doi: 10.1016/j.jvoice.2020.08.010.

- 46. Faham M, Laukkanen AM, Ikävalko T, Rantala L, Geneid A, Holmqvist-Jämsén S, Ruusuvirta K, Pirilä S. Acoustic voice quality index as a potential tool for voice screening. J Voice. 2021;35(2):226–232. doi: 10.1016/j.jvoice.2019.08.017.
