Abstract

Given the significant advance of virtual care in the past year and a half, it seems timely to focus on quality frameworks and how they have evolved collaboratively across health care organizations. Massachusetts General Hospital’s (MGH) Center for TeleHealth and Mass General Brigham's (MGB) Virtual Care Program are committed to hosting annual symposia on key topics related to virtual care. Subject matter experts across the country, health care organizations, and academic medical centers are invited to participate. The inaugural MGH/MGB Virtual Care Symposium, which focused on rethinking curriculum, competency, and culture in the virtual care era, was held on September 2, 2020. The second MGH/MGB Virtual Care Symposium was held on November 2, 2021, and focused on virtual care quality frameworks. Resultant topics were (1) guiding principles necessary for the future of virtual care measurement; (2) best practices deployed to measure quality of virtual care and how they compare and align with in-person frameworks; (3) evolution of quality frameworks over time; (4) how increased adoption of virtual care has impacted patient access and experience and how it has been measured; (5) the pitfalls and barriers that organizations have encountered in developing virtual care quality frameworks; and (6) examples of how quality frameworks have been applied in various use cases. Common elements of a quality framework for virtual care programs among symposium participants included improving the patient and provider experience, a focus on achieving health equity, monitoring success rates and uptime of the technical elements of virtual care, financial stewardship, and clinical outcomes. Virtual care represents an evolution in the access to care paradigm that helps keep health care aligned with other modern industries in digital technology and systems adoption. 
With advances in health care delivery models, it is vitally important that the quality measurement systems be adapted to include virtual care encounters. New methods may be necessary for asynchronous transactions, but synchronous virtual visits and consults can likely be accommodated in traditional quality frameworks with minimal adjustments. Ultimately, quality frameworks for health care will adapt to hybrid in-person and virtual care practices.

Abbreviations and acronyms: AMA, American Medical Association; App, application; MGH, Massachusetts General Hospital; MGB, Mass General Brigham; NQF, National Quality Forum; QA, quality assurance; QC, quality control; QI, quality improvement

Evolution of the Virtual Care Landscape in the United States

Virtual care encompasses a wide range of information and communication technologies and care delivery systems. The World Health Organization has defined virtual care as the secure use of information and communication technologies in support of health and health-related fields, including health care services, health surveillance, health literature, and health education, knowledge, and research. Virtual care delivery may include any or all the following components: live synchronous audio-video telemedicine, store-and-forward (asynchronous) care delivery, electronic consultations, telephone visits, portal messaging, remote patient monitoring, pre-visit planning, interactive care plans, advanced care at home, and more, as the field is evolving rapidly. Virtual care represented less than 1% of the total health care delivery volume and most clinicians had never used virtual care in their practices in the United States before the COVID-19 pandemic. More than half of clinicians and patients used virtual care for the first time in the first few months of the pandemic. Subsequent research showed that clinicians and patients have responded positively to the presence of virtual care. The American Medical Association (AMA) reports that 85% of physicians indicate that virtual care increased timeliness of care, 75% agreed that virtual care allowed them to deliver high-quality care, and 70% anticipated increasing virtual care in their practices. Refer to Table 1 for advantages of virtual care. It appears that virtual care is here to stay. In the US, the central question is now focused on how best to offer virtual care services, hybrid virtual care and in-person care, and digitally enabled care, representing fully integrated virtual care and in-person care on the basis of clinical appropriateness. The increased demand for virtual care from patients and from clinicians during the COVID-19 pandemic is expected to continue post-pandemic. 
Consequently, health systems are re-evaluating their technological platforms, operations, and strategies to develop an enduring approach to virtual care, including quality measurement. The AMA developed tools to measure the value of virtual care, including 6 value streams: clinical quality, safety, and outcomes; access; patient experience; clinician experience; financial impact; and health equity. However, an agreed-upon framework for the assessment of virtual care quality is lacking.

Table 1.

Potential Advantages of Virtual Care

| Potential advantage |
|---|
| Improved access |
| Cost efficiencies |
| Improved quality |
| Patient expectations |

General Principles of Quality Measurement for Virtual Care

Quality of care could be assessed based on the care being delivered and the condition being treated rather than on the modality or on the location used to deliver care. As an example, quality measures for heart failure are not different based on whether the care is delivered in a hospital-based ambulatory practice or a free-standing office building, on the ground floor of a building with radiographic capabilities or on the third floor of a building without any imaging capabilities. Similarly, the fact that care is delivered virtually does not necessarily impact quality measures for any specific condition. Virtual care is merely a form of care delivery. Taking it 1 step further, a condition-specific outcome measure should not necessarily be changed based on whether care is delivered via synchronous telemedicine, audio only (telephone), or asynchronously, recognizing that certain examination techniques (eg, auscultation) or physiologic measurements (eg, vital signs) may require modification or be decoupled from the virtual encounter.

Virtual care is a rapidly evolving health care modality, and adoption expanded exponentially during the COVID-19 pandemic.1 Frequently the conversation appears to focus on virtual care versus in-person care, rather than on the hybrid model that intermingles the 2 based on patient need and convenience to produce the highest value. Virtual care may be best viewed as 1 modality out of many to be used in a comprehensive health care system. Virtual care and in-person care appear to be intricately intertwined. Each could be used with some patients at a certain point of time. Neither is likely to be used successfully in all patients all the time.2 Virtual care may afford health care systems the opportunity to re-imagine care,3 and excessive comparison to in-person care models may limit its ability to improve health care delivery. This area would benefit from more research.

Potential advantages of virtual care may include, but are not limited to, increased patient and caregiver convenience (no air, shuttle bus, or rail travel, no ride-hailing, no parking, no wayfinding, no incremental food and lodging, no lost work-time, no child, spouse, family, and pet care accommodations, no interactions with challenging COVID-19 requirements and personal protective equipment), potentially more undivided attention from the clinician, a greater percentage of the total time required from the patient being dedicated to the clinical interaction, the enhanced ability to include family members that could not be present otherwise, and the ability to observe home environments and perform more accurate medication reconciliation when important. These advantages have resulted in improved patient experience scores that are higher than scores for in-person visits at MGB hospitals and equivalent scores at Mayo Clinic.4 Jefferson Health has reported net promoter scores in the 50-90 range.5 Theoretically, the economics of virtual care could be very favorable. Patients and providers can reduce travel expenses. Patients can reduce the time away from work or other home activities. Providers can provide care outside the office environment and traditional office hours.

However, as of today, future reimbursement is unclear and health care systems struggle to decide whether they can reduce leased space and move from purely “bricks and mortar” to hybrid “clicks and mortar” models of care. Thus, the optimal balance between expenses related to virtual care platforms and expenses related to maintaining leases is unclear. Quality of care cannot easily be addressed without considering financial implications, and the National Quality Forum (NQF) includes financial impact as a domain in its virtual care measurement framework.6

The notion of a “complete history and physical examination” may be outmoded. During in-person office visits, not all patients are fully undressed. Many patients, if not most, do not receive complete general physical examinations. There may not be a compelling reason for most repeat dermatology encounters to have a cardiovascular or neurological system exam, for example. It is possible that the comprehensive physical examination remains a relic of attempts to prevent payers from denying payment rather than improving quality of care. On the other hand, one could do a level 5 physical examination in a virtual care encounter. More importantly, however, it is the clinician’s ability to determine when a patient needs more than what can be performed virtually and escalate care to a higher level. This is not particularly different as compared with primary care providers referring patients that they have seen in the office to the emergency department or to another specialist. This is also not substantially different from the emergency physician seeking specialty consults or choosing to admit the patient to an observation unit for further diagnostic testing.

Both the patient and the provider experiences are important. The experience journey begins with finding and adopting the most appropriate consultative technology modality and associated terminology for the patient. Is it telehealth, telemedicine, a virtual visit, remote monitoring, or an e-consult? Does the patient know the difference between “audio” vs “audio/video” virtual visits or are they more familiar with terms like “telephone” or “Zoom” visit? Is it a first-time or a follow-up visit? Is it a new or familiar provider and patient? Is it a new or pre-existing clinical issue? Matching the visit mode to the clinical problem may enhance safety and outcomes.

Not all patients may need the same type of care, even those receiving virtual care. Various support “bundles” for chronic disease management, screening, and acute care may be necessary. When is a blood pressure cuff needed? A weight scale? A thermometer? A glucometer? Patient education and guidance may be required not just to address technology but also to inform what can and cannot be effectively done via virtual care and what is necessary to do during the visit. For example, a routine follow-up for a patient with diabetes may require an HbA1c measurement but not a physical examination. Determining the clinical appropriateness of various visit types and procedures is an important task for clinical researchers and professional societies.

Quality measurements could include assessing the experience of care for both episodic virtual care visits and longitudinal care that is a hybrid of in-person and virtual care. Measuring the unique problems related to understanding the success of virtual care based on patient and provider experiences may be critical in any quality framework.

As we move forward assessing quality related to virtual care, we may need to recognize that quality is quality, regardless of how it is delivered. The quality measures could focus on the symptoms, disease entity, or condition being treated, and the achievement of appropriate outcomes or process measures being evaluated and treated. If the building collapses during an in-person visit or the patient gets caught in an elevator, both would be seen as poor-quality care and a poor patient experience. If the telemedicine technology does not work, it is problematic and thus should be regularly assessed. At Jefferson Health, for example, data regarding the technical quality of each synchronous audio-video telemedicine visit are documented immediately after the call as part of the clinical documentation. This provides an “early warning system” if web browser or technology upgrades are having a negative impact.

Measurement frameworks could assess total care rather than episodic care and we believe that the hybrid “clicks and mortar” approach could be incorporated into quality metrics.5

Developing a Framework for Virtual Care Quality

The use of virtual care as a model of providing care to low-acuity patients is being increasingly examined as a method to provide needed services outside of the hospital setting. The types of technological modalities associated with virtual care are more patient-centered, reduce the burden on the emergency department, urgent care facilities, and emergency medical services, enhance interactions with providers to improve patient care, and enable direct provider–patient interaction.7 However, before the COVID-19 pandemic, virtual care was typically only offered under limited circumstances.8 During the onset of the pandemic, virtual care services expanded significantly, and payers, such as the Centers for Medicare & Medicaid Services, paid for nearly all services provided through virtual care in the same manner as they reimbursed in-person encounters.9,10 As the pandemic evolves, there is a question as to whether payers should continue to reimburse services in the same manner or whether the delivery and payment of virtual care should revert to pre-pandemic arrangements, in which services, originating sites, and modalities were restricted. One argument for reinstituting the restrictions rests on overarching concerns regarding the quality of virtual care services and whether their use provides the same level of care that patients receive onsite and in-person. Establishing valid and reliable metrics to track success for virtual care programs is vital to promoting quality and influencing rational policy decisions.

In 2016, the United States Department of Health and Human Services called on the NQF to develop a framework to create metrics to assess the use and effectiveness of virtual care services across multiple clinical settings.6 The results of this work, which were overseen and governed by a 22-member technical advisory panel, focused on the following measurement domains viewed as critical to understanding the impact of virtual care on clinical outcomes: (1) access to care – determines whether the use of virtual care services allows remote individuals to obtain clinical services effectively and whether remote hospitals can provide specialized services; (2) financial impact – assesses the financial impact/cost of virtual care services; (3) patient and provider experience – represents the usability and effect of virtual care on patients, care team members, and the community at large, and whether the use of virtual care results in a level of care that individuals and providers expect; and (4) effectiveness – represents the health care system, clinical, operational, and technical aspects of virtual care.

Because of the complex interactions between the implementation and use of various virtual care modalities, multiple aspects of this framework likely apply to different virtual care issues. The assessment, evaluation, and effectiveness of virtual care are multidimensional; thus, quality measurement requires varied and diverse approaches. The NQF measures are likely to be universally adopted, and therefore mature programs should be encouraged to measure and report quality outcomes in alignment with these recommendations.

COVID-19 created a turbocharged environment for health care systems to adopt virtual modalities of care delivery almost overnight. As Stanford Health Care ramped up its virtual care delivery to meet patient demands in early 2020, it asked a critical question: “Given this rapid adoption, how can we ensure that we deliver the same level of quality and value with virtual care as we are known for in on-site and in-person care visits?” The question led to the creation of a committee on virtual care quality that wrestled with questions on framework, scope, governance, and measurement. The committee was charged with developing a framework to monitor and ensure quality, safety, and equity of care delivered through virtual means compared with historical in-person visits while taking into consideration both adult and pediatric footprints and its applicability to academic and community practices across the enterprise. The committee comprised physicians and quality, digital health, and operational leaders from across the organization. The group grounded itself in a quality improvement framework consisting of: (1) domains – high-level strategic ‘themes’; (2) measures – quality measures in 3 groups: outcomes, processes, and balancing; (3) metrics – the technical metrics associated with each type of quality measure; and (4) tactics – tactical actions operationalized by the organization and stakeholder groups. The application of the framework allowed the group to define 6 domains of quality and clinical effectiveness: clinical quality, social equity, safety and harm avoidance, resource use, advancing value/lowering cost of care, and innovation and market differentiation. 
Through expert user groups, cross-cutting metrics were defined for each domain allowing Stanford Health Care to create its first dashboard measuring quality and value in the virtual care space with measures such as “emergency department visit rate after video visit vs telephone vs in-person visit” or electronic health record-embedded provider survey measuring provider-judged clinical effectiveness compared with alternatives. These cross-cutting measures by relative domain allow all the service lines to assess and compare different modalities of care delivery against sets of clinical outcomes while having the ability to measure social equity impact on communities we serve.
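To make a cross-cutting measure such as “emergency department visit rate after video visit vs telephone vs in-person visit” concrete, the following is a minimal Python sketch of how such a rate might be tallied. It is an illustration under stated assumptions and not Stanford Health Care's implementation: the `IndexVisit` record, its field names, and the 7-day follow-up window are all hypothetical, and the result is a crude rate that does not adjust for differences in patient mix across modalities.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Dict, Iterable, Optional


@dataclass
class IndexVisit:
    """Hypothetical record for one ambulatory visit (all field names are assumptions)."""
    modality: str                                # e.g. "video", "telephone", "in_person"
    visit_date: date
    first_ed_visit_after: Optional[date] = None  # first ED visit on or after the index visit


def ed_visit_rate_by_modality(
    visits: Iterable[IndexVisit], window_days: int = 7
) -> Dict[str, float]:
    """Return the share of visits per modality followed by an ED visit within the window.

    The 7-day default is an assumed window, not one specified in the text.
    """
    totals = defaultdict(int)
    followed_by_ed = defaultdict(int)
    window = timedelta(days=window_days)
    for visit in visits:
        totals[visit.modality] += 1
        ed_date = visit.first_ed_visit_after
        if ed_date is not None and timedelta(0) <= ed_date - visit.visit_date <= window:
            followed_by_ed[visit.modality] += 1
    return {modality: followed_by_ed[modality] / totals[modality] for modality in totals}


# Made-up example data, for illustration only.
visits = [
    IndexVisit("video", date(2021, 3, 1), date(2021, 3, 4)),
    IndexVisit("video", date(2021, 3, 2)),
    IndexVisit("telephone", date(2021, 3, 1), date(2021, 3, 11)),
    IndexVisit("in_person", date(2021, 3, 5)),
]
print(ed_visit_rate_by_modality(visits))
```

Keeping modality as a grouping variable, rather than building a separate measure for each modality, mirrors the idea that the same clinical outcome is compared across modes of delivery.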

There is a continuum between the concepts of health care quality and value, and often a conflation between the 2. Part of this stems from the lack of a common and generally accepted definition of “value”,11 with organizations tending to focus on virtual care features rather than understanding the true reasons that users turn to virtual care products and services. At its core, a health care organization’s virtual care products and services have no intrinsic value; instead, the clinical context determines how users form applicable perceptions.

Mayo Clinic College of Medicine and Science developed a value definition – with the understanding of what levers to pull and under what circumstances – for best practices and with built-in iterative, continuous learning. Mayo Clinic Center for Digital Health conducted an environmental scan to inform the development of a framework around which to base the value definition. This process included identifying existing and potential new quality measure concepts related to virtual care. Information was gathered from existing quality frameworks such as those developed by the AMA.12 Furthermore, recognizing that virtual care does not represent a different type of health care, but rather a different modality of health care delivery, Mayo Clinic wanted to further ground its framework in core concepts of value and quality such as the quadruple aim.13 Using these concepts as a basis, Mayo Clinic was able to construct a framework with 4 domains around which to conceptualize value for its virtual care programs. This framework leverages structural and functional concepts similar to those described in the NQF’s guidance on telehealth framework development. The framework serves as the conceptual model for organizing the ideas and provides high-level guidance and direction for virtual care measurement priorities and their impact on health delivery and outcomes. Domains, each representing high-level ideas and concepts, help describe the quality measurement framework. The domains ensure that the health care institution tracks performance against key priorities and ties global measures to program and experimental quality metrics. The framework includes subdomains, which provide smaller categories or groupings within a domain. The measurement concepts represent ideas for a measure that include a description of the measure and the target population.

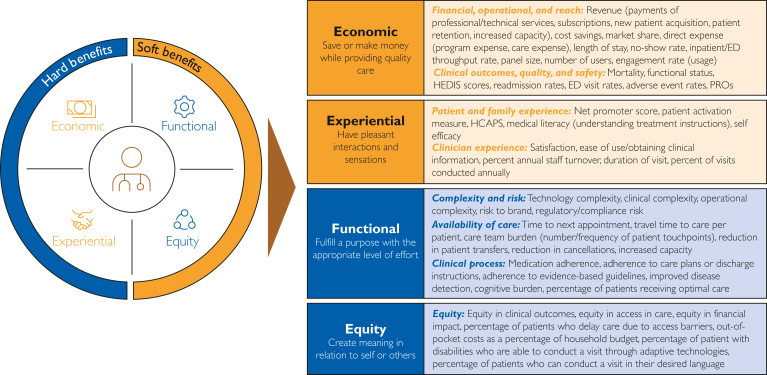

A 4-domain model provides the best combination of utility, simplicity, and accuracy in covering the main components of virtual care quality and value. Based on thematic analyses of frameworks for virtual care like those from the AMA and the Institute for Healthcare Improvement, guidance on virtual care framework development from the NQF, and best practices from the broader consumer space, Mayo Clinic identified 4 dimensions that best organize the subdomains and quality measure concepts (Figure): (1) economics – describing the financial and cost impacts, as moderated by the clinical effectiveness and safety of these models; (2) experience – describing the overall experience of either receiving or delivering care through these modalities; (3) function – describing the effectiveness in impacting the clinical operating environment; and (4) equity in access to clinical services.

Figure.

Four-domain model of virtual care value.

Across the framework, it was recognized that some domains included concepts that would lend themselves easily to quantification. In acknowledgment of this, these domains were categorized into hard benefits (representing those domains with measures that would be more likely to be directly quantified) vs soft benefits (representing those domains with measures and impact that would be more likely to be described through qualitative methods or quantified through proxy or surrogate measures). It was acknowledged that this is a general description of these domains, with there being variability in the quantitative and qualitative nature of the quality measures that comprise these domains.

With these concepts in mind, the institution wished to delve further into some of the measures that comprise these domains. Within the first domain (economics), quality measures include those related to financial and operational performance as well as reach. These, among others, included revenue generated from professional and technical fees considering overall costs of care for public and private payors, organizational cost savings, abilities to reach more patients or provide greater patient management capacity, and operational impacts that result in the efficient use of resources. Clinical outcomes considered the ability to affect avoidable readmissions and emergency department visits, the effect on morbidity and mortality indices, and reductions in medical errors and adverse event rates.

The second domain (experience) included quality measures related to patient and family experience. These include likelihood to recommend (and Net Promoter Score), effect on patient self-management, shared decision making, and increase in patients’ knowledge of care. Clinician experience included overall satisfaction as well as comfort with virtual care application and procedures, quality of communications with patients, and satisfaction with care delivery method.

The third domain (function) includes quality measures that represent impact on the whole health care system and on clinical, operational, and technical aspects of care such as complexity, which includes balancing quality measures with challenges introduced by the technology itself or with integrating the technology into an effective clinical workflow. Availability considers the timely receipt of health services; reduced cancellations; and patient, family, and caregivers’ time related to travel or reductions in time away from work. Clinical process considers factors such as impact on practice patterns; appropriateness of services; patient compliance with care regimens/care plans/discharge instructions; diagnostic accuracy, cognitive burden, and the ability to obtain actionable information (sufficient to inform clinical decision making); and guideline adherence.

The fourth and final domain focused on equity, which considers how effectively an organization can provide access to health services for those living in rural and urban communities, access to health services for those living in medically underserved areas, access to appropriate specialists based on the needs of the patient, and delivery of the care based on patient-specific needs (language, physical access needs, etc.), and addresses digital literacy issues.

When considering how to balance these measures when delivering a product or service, an organization must assess what users need and how well the organization delivers on those needs. Users may be willing to give up some value in a less important dimension if high value is achieved in another, more important one, but there may be a threshold for how much they are willing to trade off, depending on the overall context and quality of care.

Although application of quality frameworks within virtual care assessment is essential to ensure delivery of high-quality care, this assessment is irrelevant if patients do not use virtual care. Most discussions regarding barriers to patients’ uptake of virtual care are focused on issues of device access and knowledge in using devices and the internet, commonly referred to as digital literacy. Yet there are other important barriers to patients using virtual care that are essential to consider, including issues of trust, acceptability, and relevance of virtual care for the individual. “Digital readiness” is a term that more broadly incorporates these other important patient barriers to virtual care uptake.14

There are some existing scales to assess digital literacy. The Digital Health Literacy Instrument is a 21-item instrument that covers the domains of operational skills, navigation skills, information seeking, evaluating reliability, determining relevance, adding self-generated content to web-based apps, and protecting privacy.15 Another, the eHealth Literacy Assessment Toolkit, is derived from 7 health-related and digitally related tools.16 The eHealth Literacy Assessment Toolkit covers the domains of functional health literacy, health literacy self-assessment, familiarity with health and health care, knowledge of health and disease, technology familiarity, technology confidence, and incentives for engaging with technology. To date, there is no existing tool that incorporates all the domains of digital readiness, including but not limited to device access, knowledge of technology use, trust, acceptability, and relevance. Development of such a tool is essential to ensure that populations can be routinely screened for virtual care barriers, with the goal of tailoring intervention deployment to those in need and ultimately ensuring digital health equity.

Similarities of existing organizational frameworks include structural elements like quality measurement concepts, domains, subdomains, an emphasis on access, experience, financial impact, safety, effectiveness, equity, and adoption of outcome measures. Some differences among existing organizational frameworks include incorporation of digital literacy, digital readiness, innovation and market differentiation, and trust. Several uncovered complexities and challenges of designing and adopting virtual care quality frameworks include retrospective and prospective data collection, storage, and regular analysis, missing data, gaps in data, differential weighting of various factors or domains, considerations of composite or global measures, addressing uncertainty (precision issues) in estimations, caution over introduction of bias, assuring objectivity, confronting qualitative measures versus quantitative measures and the relative balance of both.

Applying Quality Framework to Ambulatory Virtual Visit Use Cases

As discussed previously, the 2016 NQF document on quality in virtual care is a recommended starting point for anyone beginning a quality improvement (QI) project. We further describe how to adapt this framework and give concrete examples of metrics that can be used in initial QI work. By its nature, all QI work is iterative and needs to be adapted to the local context and type of virtual care delivery.

Quality Improvement Metrics for Virtual Care

Any QI program for virtual care should focus on the broad categories of patient experience and satisfaction, technical issues, compliance with regulatory requirements, health equity, and the appropriateness and safety of the virtual care visit. Because patients started using virtual care at much higher rates during the pandemic, there were concerns about care quality; thus, soliciting patient feedback is essential for continuing to improve programs over time. Two of the most frequently used surveys are the Press Ganey survey and the Clinician and Group Consumer Assessment of Healthcare Providers and Systems, which have been adapted for the virtual care use case.17 In addition, qualitative comments are helpful for context and for identifying specific issues that arise. Other organizations use the Net Promoter Score, which asks respondents how likely they are to recommend the service or company.18 Developed in 2003 by Bain & Company, this survey is simple and easy to complete, allowing comparison to industries and companies outside of health care. It is considered the gold standard for customer loyalty.
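For readers unfamiliar with how a Net Promoter Score is derived, the following Python sketch shows the conventional calculation: respondents rate their likelihood to recommend on a 0 to 10 scale, those answering 9 or 10 are counted as promoters, those answering 0 to 6 as detractors, and the score is the percentage of promoters minus the percentage of detractors. The function name and the sample ratings are illustrative assumptions and do not come from any of the programs described here.

```python
from typing import Iterable


def net_promoter_score(ratings: Iterable[int]) -> float:
    """Return the Net Promoter Score (-100 to 100) from 0-10 likelihood-to-recommend ratings."""
    ratings = list(ratings)
    if not ratings:
        raise ValueError("at least one rating is required")
    if any(r < 0 or r > 10 for r in ratings):
        raise ValueError("ratings must be between 0 and 10")
    promoters = sum(1 for r in ratings if r >= 9)   # ratings of 9 or 10
    detractors = sum(1 for r in ratings if r <= 6)  # ratings of 0 through 6
    # Ratings of 7 or 8 ("passives") count only toward the denominator.
    return 100.0 * (promoters - detractors) / len(ratings)


# Made-up survey responses, for illustration only.
sample_ratings = [10, 9, 9, 8, 7, 6, 10, 3, 9, 10]
print(net_promoter_score(sample_ratings))
```

Because the score is a difference of two percentages, it can range from -100 (all detractors) to 100 (all promoters), which is worth keeping in mind when comparing scores across small survey samples.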

The University of Washington has developed a QI/QA (quality improvement/quality assurance) program with 4 features.19 First, there is an anonymous reporting tool on all system computers that allows any user to report a suspected safety event and flag it as a virtual care visit. These safety events are reviewed regularly by a clinician and a root-cause analysis is performed. The second component is a patient survey, which is sent by email to a random sample of patients who have had a virtual care visit. It solicits both quantitative and qualitative data, which is reviewed regularly by the virtual care office and clinical leadership for opportunities for improvement. Third, 2 clinicians independently perform random monthly chart reviews of ambulatory virtual care visits. They use a 3-page HIPAA-compliant, web-based tool (Research Electronic Data Capture, REDCap) to query such things as whether a physical examination was performed and if it was adequate, whether consent was obtained and documented, and whether the visit was appropriate for virtual care. Data is summarized and areas of concern are addressed with education and outreach. Lastly, the QI program has an annual area of focus. Some examples of focus areas include appropriate antibiotic prescribing and assuring equitable access to Spanish-speaking patients.

Key to the success of any QI program is support by system administration. This includes staff to support the QI work, multiple data inputs, and a governance/reporting structure. A streamlined workflow is essential for any successful virtual care program and should include guidance on scheduling a virtual care visit (including conditions that are most amenable to virtual care visits), preparing for a virtual care visit, working with a tele-presenter, incorporating trainees, obtaining and documenting consent, troubleshooting technology issues, and arranging for follow-up tests and appointments. Some patients have an urgent need (eg, suicidal ideation, chest pain, stroke symptoms) that requires prompt in-person evaluation. Therefore, staff should be trained on best practices for documenting call-back numbers and the physical location of the patient and should have a pathway for getting patients seen promptly.

At the MGB Health System, the existing quality framework for clinical care and the Institute of Medicine domains of quality were used as the scaffolding on which virtual care quality measurement was overlaid. The approach leveraged the Donabedian20 model of structures, processes, and outcomes as areas of focus (Table 2). The safety event reporting system included keyword search capability to identify events associated with virtual care, and every quarter the system’s patient quality and safety governance committees meet to review all reported cases. The decision to use the existing quality management systems was deliberate and reflected the position that virtual care as the modality should not determine the quality metric but should be a covariate that is interrogated to make sure it is not leading to decreased quality. There was the recognition that patients are not randomly assigned to virtual vs in-person care, and that providers have their own preferences in care delivery modalities that likely impact the choice of when to use virtual care.

Table 2.

Donabedian Model for Assessing Quality of Virtual Care

| STEEEP framework | Structure | Process | Outcome |
|---|---|---|---|
| General | Local site committee/group docks into central team and central committee | Identification of general virtual care quality concerns for proactive mitigation; local trend review and forwarding to enterprise committee for aggregation, issue identification, discussion, and remediation | Track STEEEP outcome data (eg, virtual care patient experience survey) |
| Safety | | | |
| Patient-centeredness | | | |

STEEEP, Safe, Timely, Effective, Efficient, Equitable, and Patient Centered.

Donabedian model for assessing quality of virtual care expressed as a general framework, and then populated for the Institute of Medicine domains of quality. Two illustrative examples are provided for safety and patient-centeredness.

The rates of care delivery in virtual vs in-person care are compared by race, ethnicity, preferred language, geography, and measures of social vulnerability. Disparities in the use of video (vs audio) virtual care led to a research grant proposal, funded in July 2021, on methods to address the digital divide and improve health equity. The team has published several papers on the nature of virtual care adoption, the disparities in adoption, and the characteristics of patients and providers who adopt virtual care.21, 22, 23
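As a minimal sketch of the kind of stratified comparison described above, the following Python snippet computes the share of visits delivered virtually within each level of a patient attribute. The record fields (`modality`, `preferred_language`) and the sample rows are hypothetical illustrations and are not drawn from the MGB data or its reporting tools.

```python
from collections import defaultdict


def virtual_share_by_group(visits, group_key):
    """Return {group: fraction of that group's visits that were virtual}."""
    totals = defaultdict(int)
    virtual = defaultdict(int)
    for visit in visits:
        group = visit[group_key]
        totals[group] += 1
        if visit["modality"] == "virtual":
            virtual[group] += 1
    return {group: virtual[group] / totals[group] for group in totals}


# Hypothetical records for illustration only.
sample_visits = [
    {"modality": "virtual", "preferred_language": "English"},
    {"modality": "in_person", "preferred_language": "English"},
    {"modality": "virtual", "preferred_language": "English"},
    {"modality": "in_person", "preferred_language": "Spanish"},
    {"modality": "in_person", "preferred_language": "Spanish"},
    {"modality": "virtual", "preferred_language": "Spanish"},
]

shares = virtual_share_by_group(sample_visits, "preferred_language")
```

A gap between groups in such a table is only a starting point: because patients are not randomly assigned to a modality, differences in raw shares would still need adjustment for case mix before being interpreted as a disparity.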

Applying a Quality Framework to Asynchronous Use Cases

Advancing quality metrics in asynchronous virtual care delivery requires an intentional and innovative approach. Asynchronous telehealth services leverage technology to “store and forward” clinical information between patients and their care teams, including digital images and clinical data.24 Unlike synchronous services such as video telemedicine visits with a provider, asynchronous services are not real-time interactions between a patient and a service provider. Within the domain of asynchronous services, there is a wide range of solutions, each with different goals and desired outcomes. Therefore, when approaching the development of quality frameworks for asynchronous solutions, the first step is to identify the value(s) being provided by the service and its associated outcomes. Given the range of asynchronous services that are in use today, each with its own value propositions and outcomes targets, a single quality framework cannot be applied to all services and use cases. To illustrate this, 3 approaches to developing quality frameworks for asynchronous services are discussed herein.

Exploring Data Quality for Visual-Based Practices

Asynchronous virtual care relies on the quality of the data available for diagnosis and treatment decisions; data and image quality are requisite for quality outcomes. Specifically, in visual-based specialties, such as radiology and pathology, there are strong relationships between image and data quality and the efficacy and efficiency of diagnostic decisions, which in turn affect patient care and outcomes.25,26 There are 4 key aspects in the data chain where image and data quality can be impacted: acquisition, transfer, display, and storage. Professional societies27,28 have developed practice guidelines that incorporate QA, quality control (QC), and QI measures and protocols. The challenge in creating a uniform quality framework or set of metrics is that there is no one-size-fits-all solution, as requirements differ by technology and specialty. However, many technologies and specialties do leverage common principles. In addressing quality metrics in imaging, there are technical standards to apply across specialties, plus applications to help ensure image and data quality remain high.29, 30, 31, 32 There are also tools that are easily applied to assess image and data quality in the digital environment.33 Increasingly, artificial intelligence tools are being developed for tasks such as detection, diagnosis, prediction, workflow, and QC/QA/QI; these are likely to strongly impact the accuracy of asynchronous data used in virtual care. Care must be taken as we incorporate artificial intelligence tools into the clinical workflow, as some tools present many opportunities for bias, corruption, and manipulation of data.

There is also the issue of how to deal with patient-generated health data because much of it is asynchronous (eg, photos taken with cell phones, smartwatch data signals) and often not of optimal quality. There needs to be a clear distinction between provider-initiated and patient-initiated data, and perhaps that should be based on data acquired with provider-initiated medical-grade devices. With patient-initiated data, there are concerns not only about quality (eg, false positive and negative rates, data input incorrectly) and amount (need for pre-processing), but also about integrity and security, so there need to be gatekeeper mechanisms in place before the data can be transferred asynchronously through a portal email (eg, virus check).

Digital Interactive Care Plans

The development of a quality framework to evaluate the efficacy and impact of digital interactive care plans was explored. A digital interactive care plan application (app) was developed and implemented to facilitate the two-way exchange of health information between patients and their care team.34,35 Several digital interactive care plans were developed by the Mayo Clinic to engage and empower patients to participate in self-care for chronic conditions, health events, and health maintenance.

Once enrolled in the care plan, patients receive health guidance information, complete symptom assessments, and report their biometric data through the app. The clinical data is then automatically escalated to their care team when intervention and follow-up are needed. This program requires that patients use their own smart device to access the patient app. Therefore, this solution does not address the digital divide that exists for the many patients who do not have their own smart device or the internet or cellular access needed to use the app.36 Programs can address these challenges by providing patients with devices that are enabled with cellular access. However, anti-kickback laws present obstacles that make it difficult for health care providers to improve access for these patients and address the digital divide.

To assess digital interactive care plans from the ideation stage through implementation, a quality framework was developed by a multi-disciplinary team. The framework included 3 categories of focus to examine the desirability, viability, and feasibility of the solution. Desirability focuses on the value that the solution provides to patients and care teams. From the patient perspective, the goal is to improve their health outcomes, improve the efficiency of care provided, detect symptoms so that care teams can intervene early, and improve the patient experience. Care plans aim to decrease the care team burden and improve the staff’s experience in managing their patient population. An important factor within the desirability category is the anticipated volumes of the potential care plan, with the goal being to positively impact as many patients as possible and to be able to implement the care plan in as many practices as possible. For this category and the others, these criteria are tied to a score so that care plans that provide the most benefits to patients and care teams are scored higher in this category.

Viability focuses on the business value or opportunity generated by the initiative. This enables providers and care teams to consider the value that can be captured through implementation of the care plan in the form of revenue generation, cost reduction, and strategic alignment. Examples of cost reductions include the realization of cost savings through reducing health care utilization, hospitalization, and length of stay. For scoring, plans that we anticipate will generate cost savings, particularly in multiple areas, will score higher than plans that do not. Finally, care plans that can be used in both primary and specialty care are scored higher in strategic alignment, because they can serve more practices and patients.

Finally, the technical and operational feasibility of the digital interactive care plan is weighed. This category provides criteria for assessing the readiness of the providers and care teams that will be utilizing the care plan in practice, the technical fit between the clinical goals of the care plan and the technology, alignment with best practices for implementation, and cost. Technical readiness is assessed based on the alignment of features and functionality of the product with the functionality that is being requested by the practice to optimally support the care plan use case.

This is a critical part of the value assessment that enables us to ensure that the platform and solution meet the requirements for the minimum viable product. If not, the care plan is likely not a good fit, and this item is weighted significantly to impact the total value score. Finally, operational readiness and cost are evaluated. Factors beyond technology and practice readiness that might impact the feasibility of a care plan are evaluated, such as support for patients to ensure they engage in using the plan.
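The scoring approach described for the desirability, viability, and feasibility categories can be sketched as a simple weighted sum. The text does not publish the actual criteria list, weights, or rating scale, so everything in the snippet below is an assumption for illustration: the criterion names paraphrase the text, the weights are invented (with technical fit weighted most heavily, as the text indicates), and ratings are assumed to be on a 0 to 5 scale.

```python
# Hypothetical weights: the source states only that technical fit is weighted
# significantly; the numeric values here are illustrative assumptions.
WEIGHTS = {
    "desirability": {
        "patient_outcomes": 2,
        "care_team_burden": 1,
        "anticipated_volume": 1,
    },
    "viability": {
        "revenue": 1,
        "cost_reduction": 1,
        "strategic_alignment": 1,
    },
    "feasibility": {
        "practice_readiness": 1,
        "technical_fit": 3,
        "operational_readiness_and_cost": 1,
    },
}


def score_care_plan(ratings):
    """Return per-category and total weighted scores.

    ratings maps category -> criterion -> rating on an assumed 0-5 scale.
    """
    scores = {}
    for category, criteria in WEIGHTS.items():
        scores[category] = sum(
            weight * ratings[category][criterion]
            for criterion, weight in criteria.items()
        )
    scores["total"] = sum(scores.values())
    return scores


# Hypothetical ratings for one proposed care plan.
example_ratings = {
    "desirability": {"patient_outcomes": 4, "care_team_burden": 3, "anticipated_volume": 5},
    "viability": {"revenue": 2, "cost_reduction": 4, "strategic_alignment": 3},
    "feasibility": {"practice_readiness": 3, "technical_fit": 1, "operational_readiness_and_cost": 4},
}

result = score_care_plan(example_ratings)
```

In this illustration the low technical-fit rating holds down the feasibility score despite strong desirability, which mirrors the point that a poor fit with the minimum viable product should weigh heavily on the total.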

Once a care plan idea has been evaluated with the framework and its value has been defined, the individual values are crosswalked to metrics or data that are used to measure the outcomes of the care plan as identified in the assessment. This forms the re-measurement plan that is used to assess the outcomes and impact of the care plan for patients, care teams, and the health care organization. Within the re-measurement plan, a standard set of metrics is typically included, in addition to those specific to an individual care plan. The standard set of metrics includes those that measure patient and care team adoption, escalations of clinical care, frequency of communication between patients and care team, and patient compliance. Patient and care team feedback is also included in the standard set of metrics.

E-consults

It is requisite that health systems assess and ensure the quality of care in provider-to-provider asynchronous subspecialty patient care. E-consults, in providing access to specialty care, must both maintain patient safety and distinguish care acuity needs. E-consults can leverage access to care and address acuity by potentially displacing the need for in-person or even virtual visits.37,38 E-consults are particularly relevant and impactful in addressing “quick questions” as well as in mitigating prolonged wait times for scheduled appointments or allowing critical tests to be ordered in advance of a specialty visit. “Quick questions” may request advice in patient management, consideration for appropriateness of subspecialty referral, or guidance in referral acuity need (eg, within a week or month). E-consults can also bridge work-up and management by the referring provider until the appointment time, determine need for a sooner appointment, and help with follow-up questions and collection of more information for care management. E-consultants focus on patients who truly need subspecialty care, providing quick answers without extra visits or co-pays. E-consults offer the benefit of being included in the patient’s chart, providing documentation of specialty guidance (rather than the undocumented “curbside consult” approach), and imparting the opportunity for data gathering and assessment. Quality measures that can be assessed include conversion to new patient referrals, urgency of referral, wait times for in-person or virtual care appointments, provider time spent on consultation, and referring and consultant providers’ satisfaction. Additionally, resource utilization is improved by optimizing new patient subspecialty visits and by triaging so that higher-acuity and more complex patients are seen. Savings in wait times for scheduled appointments, travel time, and specialty appointment and visit co-pays result in improved patient satisfaction.
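Several of the e-consult quality measures listed above reduce to simple summaries over a log of e-consults. The following is a minimal sketch under stated assumptions: the record fields (`converted_to_referral`, `wait_days`, `consultant_minutes`) and the sample values are hypothetical and do not reflect any particular health system's data model.

```python
from statistics import median


def econsult_measures(econsults):
    """Summarize a non-empty list of e-consult records (hypothetical fields)."""
    if not econsults:
        raise ValueError("at least one e-consult record is required")
    n = len(econsults)
    converted = [e for e in econsults if e["converted_to_referral"]]
    waits = [e["wait_days"] for e in converted]
    return {
        # Share of e-consults that led to a new patient referral.
        "conversion_rate": len(converted) / n,
        # Median wait until the resulting appointment, among converted consults.
        "median_wait_days": median(waits) if waits else None,
        # Average consultant time spent per e-consult.
        "mean_consultant_minutes": sum(e["consultant_minutes"] for e in econsults) / n,
    }


# Hypothetical records for illustration only.
sample_log = [
    {"converted_to_referral": True, "wait_days": 10, "consultant_minutes": 15},
    {"converted_to_referral": False, "wait_days": None, "consultant_minutes": 10},
    {"converted_to_referral": True, "wait_days": 20, "consultant_minutes": 20},
    {"converted_to_referral": False, "wait_days": None, "consultant_minutes": 15},
]

summary = econsult_measures(sample_log)
```

Satisfaction of referring and consultant providers would come from surveys rather than from a consult log, so it is left out of this sketch.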

Challenges remain, however, including insurance company reimbursement, optimizing provider-to-provider communication skills, interhospital e-consultations, crossing state lines, and resident and fellow training curricula in asynchronous care delivery.

Evidence Gaps

There are questions about virtual care quality and quality frameworks that could be answered by further research.39 Clinical appropriateness, clinical outcomes, clinical parity, and equity/access are commonly cited themes deserving of further research and exploration.39 One specific question is whether virtual care improves patient outcomes. There appears to be continued deliberation about whether virtual care results in higher or lower quality care than traditional visits. Some randomized trials comparing virtual care with in-person care have found that virtual care may be a safe clinical option; however, these studies have had important limitations and have evaluated only a small fraction of virtual care’s multitude of applications.39 Additionally, clinical trials have generally compared fully virtual care with in-person care when much care is delivered in hybrid fashion. Finally, clinicians are using a wide range of virtual tools and services, and digital applications to interact with patients. Ideally, quality frameworks could be better informed by clinical and economic outcome assessments of these mixed models.

Conclusion

Virtual care represents a much-needed evolution in the access to care paradigm that helps keep health care aligned with other modern industries in digital technology and systems adoption. With this new modality of care delivery, it is vitally important that the quality measurement systems be adapted to include these encounters. New methods may be needed for asynchronous transactions, but synchronous virtual visits and consults can likely be accommodated in traditional quality frameworks with minimal adjustments. Active quality surveillance is critical whenever any significant changes are introduced into the care delivery paradigm, and virtual care is no exception. As COVID-19 caseloads decrease and hospital systems have time to regain their footing, it is time for an intentional commitment to quality measurement in virtual care. As seen in this symposium and documented in this manuscript, there are many alternative approaches to quality measurement implementation, and overarching guiding principles from the Institute of Medicine and the Association of American Medical Colleges can help guide institutions in their efforts to adapt. Common elements of a quality framework for virtual care programs among symposium participants include improving the patient and provider experience, a focus on achieving health equity, monitoring success rates and uptime of the technical elements of virtual care, financial stewardship, and clinical outcomes.

Potential Competing Interests

Dr Lee Schwamm reports administrative support was provided by Massachusetts General Hospital.

Footnotes

Grant Support: Center for TeleHealth, Massachusetts General Hospital and Harvard Medical School.

References

- 1.Bolster M.B., Chandra S., Demaerschalk B.M., et al. Crossing the virtual chasm: practical considerations for rethinking curriculum, competency, and culture in the virtual care era. Acad Med. 2022;97(6):839–846. doi: 10.1097/ACM.0000000000004660.

- 2.Kulcsar Z., Albert D., Ercolano E., Mecchella J.N. Telerheumatology: a technology appropriate for virtually all. Semin Arthritis Rheum. 2016;46(3):380–385. doi: 10.1016/J.SEMARTHRIT.2016.05.013.

- 3.Hollander J.E., Sites F.D. 2020. The transition from reimagining to recreating health care is now.

- 4.Ploog N.J., Coffey J., Wilshusen L., Demaerschalk B. Outpatient visit modality and parallel patient satisfaction: a multi-site cohort analysis of telemedicine and in-person visits during the COVID-19 pandemic. J Patient Exp. 2022;9(3):93–101. doi: 10.35680/2372-0247.1704.

- 5.Hollander J., Neinstein A. 2020. Maturation from adoption-based to quality-based telehealth metrics.

- 6.Creating a Framework to Support Measure Development for Telehealth. National Quality Forum. Accessed December 24, 2022. https://www.qualityforum.org/Publications/2017/08/Creating_a_Framework_to_Support_Measure_Development_for_Telehealth.aspx

- 7.Finn J.C., Fatovich D.M., Arendts G., et al. Evidence-based paramedic models of care to reduce unnecessary emergency department attendance--feasibility and safety. BMC Emerg Med. 2013;13(1) doi: 10.1186/1471-227X-13-13.

- 8.Rural Telehealth and Healthcare System Readiness Measurement Framework. National Quality Forum. Accessed December 24, 2022. https://www.qualityforum.org/Publications/2021/11/Rural_Telehealth_and_Healthcare_System_Readiness_Measurement_Framework_-_Final_Report.aspx

- 9.Text - H.R.748 - 116th Congress (2019 to 2020) - CARES Act Congress.gov. https://www.congress.gov/bill/116th-congress/house-bill/748/text

- 10.U.S. Centers for Medicare & Medicaid Services. Coronavirus Waivers & Flexibilities. NEW—Waivers & Flexibilities for Health Care Providers. U.S. Centers for Medicare & Medicaid Services. https://www.cms.gov/coronavirus-waivers. Accessed December 24, 2022.

- 11.Marzorati C., Pravettoni G. Value as the key concept in the health care system: how it has influenced medical practice and clinical decision-making processes. J Multidiscip Healthc. 2017;10:101–106. doi: 10.2147/JMDH.S122383.

- 12.Return on health: moving beyond dollars and cents in realizing the value of virtual care. American Medical Association. https://www.ama-assn.org/practice-management/digital/amas-return-health-telehealth-framework-practices. Accessed December 24, 2022.

- 13.Sikka R., Morath J.M., Leape L. The Quadruple Aim: care, health, cost and meaning in work. BMJ Qual Saf. 2015;24(10):608–610. doi: 10.1136/bmjqs-2015-004160.

- 14.Digital literacy and learning in the United States Pew Research Center. https://www.pewresearch.org/internet/2016/09/20/digital-readiness-gaps/

- 15.van der Vaart R., Drossaert C. Development of the digital health literacy instrument: measuring a broad spectrum of health 1.0 and health 2.0 skills. J Med Internet Res. 2017;19(1):e27. doi: 10.2196/jmir.6709.

- 16.Karnoe A., Furstrand D., Christensen K.B., Norgaard O., Kayser L. Assessing competencies needed to engage with digital health services: development of the ehealth literacy assessment toolkit. J Med Internet Res. 2018;20(5):e178. doi: 10.2196/JMIR.8347.

- 17.Ramaswamy A., Yu M., Drangsholt S., et al. Patient satisfaction with telemedicine during the covid-19 pandemic: retrospective cohort study. J Med Internet Res. 2020;22(9):e20786. doi: 10.2196/20786.

- 18.Qualtrics Your Guide to Net Promoter Score (NPS) in 2022. https://www.qualtrics.com/experience-management/customer/net-promoter-score/

- 19.Kong-Wong C. Quality improvement for telehealth: lessons learned during the COVID-19 pandemic. BMJ Quality. 2022 WMSMSAAESJ. Ahead of print.

- 20.Donabedian A., Wheeler J.R., Wyszewianski L. Quality, cost, and health: an integrative model. Med Care. 1982;20(10):975–992. doi: 10.1097/00005650-198210000-00001.

- 21.Zachrison K.S., Yan Z., Samuels-Kalow M.E., Licurse A., Zuccotti G., Schwamm L.H. Association of physician characteristics with early adoption of virtual health care. JAMA Netw Open. 2021;4(12) doi: 10.1001/jamanetworkopen.2021.41625.

- 22.Zachrison K.S., Yan Z., Schwamm L.H. Changes in virtual and in-person health care utilization in a large health system during the covid-19 pandemic. JAMA Netw Open. 2021;4(10) doi: 10.1001/jamanetworkopen.2021.29973.

- 23.Zachrison K.S., Yan Z., Sequist T., et al. Patient characteristics associated with the successful transition to virtual care: Lessons learned from the first million patients. J Telemed Telecare. 2021 doi: 10.1177/1357633X211015547.

- 24.Deshpande A., Khoja S., Lorca J., et al. Asynchronous telehealth: a scoping review of analytic studies. Open Med. 2009;3(2):e69–e91.

- 25.Waite S., Scott J.M., Legasto A., Kolla S., Gale B., Krupinski E.A. Systemic error in radiology. AJR Am J Roentgenol. 2017;209(3):629–639. doi: 10.2214/AJR.16.17719.

- 26.Error Reduction and Prevention in Surgical Pathology. Schmidt R.L., Cohen M.B., Nakhleh R.G., editors. Am J Clin Pathol. 2016;145(1):140–141. doi: 10.1093/AJCP/AQV090.

- 27.Andriole K.P., Ruckdeschel T.G., Flynn M.J., et al. ACR-AAPM-SIIM practice guideline for digital radiography. J Digit Imaging. 2013;26(1):26–37. doi: 10.1007/s10278-012-9523-1.

- 28.Pantanowitz L., Dickinson K., Evans A.J., et al. American Telemedicine Association clinical guidelines for telepathology. J Pathol Inform. 2014;5(1):39. doi: 10.4103/2153-3539.143329.

- 29.Quigley E.A., Tokay B.A., Jewell S.T., Marchetti M.A., Halpern A.C. Technology and technique standards for camera-acquired digital dermatologic images: a systematic review. JAMA Dermatol. 2015;151(8):883–890. doi: 10.1001/jamadermatol.2015.33.

- 30.DICOM https://www.dicomstandard.org/

- 31.ICC Medical Imaging Working Group International Color Consortium. https://www.color.org/groups/medical/medical_imaging_wg.xalter

- 32.Interoperability in Healthcare HIMSS. https://www.himss.org/resources/interoperability-healthcare

- 33.Mcneill K.M., Major J., Roehrig H., Krupinski E. Practical methods of color quality assurance for telemedicine systems. 2002;20:111–116.

- 34.Coffey J.D., Christopherson L.A., Glasgow A.E., et al. Implementation of a multisite, interdisciplinary remote patient monitoring program for ambulatory management of patients with COVID-19. NPJ Digit Med. 2021;4(1):123. doi: 10.1038/s41746-021-00490-9.

- 35.Haddad T.C., Blegen R.N., Prigge J.E., et al. A scalable framework for telehealth: the mayo clinic center for connected care response to the COVID-19 pandemic. 2021;2(1):78–87. doi: 10.1089/TMR.2020.0032.

- 36.Wood B.R., Young J.D., Abdel-Massih R.C., et al. Advancing digital health equity: a policy paper of the Infectious Diseases Society of America and the HIV Medicine Association. Clin Infect Dis. 2021;72(6):913–919. doi: 10.1093/cid/ciaa1525.

- 37.Miloslavsky E.M., Bolster M.B. Addressing the rheumatology workforce shortage: A multifaceted approach. Semin Arthritis Rheum. 2020;50(4):791–796. doi: 10.1016/j.semarthrit.2020.05.009.

- 38.Lockwood M.M., Wallwork R.S., Lima K., Dua A.B., Seo P., Bolster M.B. Telemedicine in adult rheumatology: in practice and in training. Arthritis Care Res (Hoboken) 2022;74(8):1227–1233. doi: 10.1002/acr.24569.

- 39.Mehrotra A., Uscher-Pines L. Informing the debate about telemedicine reimbursement - what do we need to know? N Engl J Med. 2022;387(20):1821–1823. doi: 10.1056/NEJMp2210790.