Graphical abstract

Keywords: Hammerstein-Wiener system, Parameter estimation, Evolutionary heuristics, Weighted differential evolution, Genetic algorithms

Highlights

- A new application of WDE for estimation of nonlinear Hammerstein-Wiener systems.
- The designed WDE is viably implemented using measured input, internal, and output data.
- Various HWM scenarios establish the robustness, stability, and convergence of WDE.
- Statistical observations prove its efficacy for controlled autoregressive scenarios.

Abstract

Introduction

Evolutionary computing heuristics have been exploited extensively for system modeling and parameter estimation of complex nonlinear systems, owing to their reliable convergence, accurate performance, simple conceptual design, ease of implementation, and wide applicability.

Objectives

The aim of this study is to investigate the evolutionary heuristics of weighted differential evolution (WDE) for estimating the parameters of the Hammerstein-Wiener model (HWM), together with a comparative evaluation against state-of-the-art counterparts. The objective function of the HWM for controlled autoregressive systems is formulated by approximating the error in the mean square sense, computed from the difference between the true and estimated parameters.

Methods

The adjustable parameters of the HWM are estimated with the WDE and genetic algorithm (GA) heuristics for different degrees of freedom and noise levels, enabling an exhaustive, comprehensive, and robust analysis over multiple independent trials.

Results

Comparisons based on a sufficiently large number of graphical and numerical illustrations of the outcomes of single and multiple executions of WDE and GAs, using performance metrics of precision, convergence, and complexity, demonstrate the worth of the designed WDE algorithm. Statistical assessment studies further confirm the efficacy of the proposed scheme.

Conclusion

Extensive simulation-based experiments on measures of central tendency and variance authenticate the effectiveness of the designed WDE methodology as a precise, efficient, stable, and robust computing platform for system identification of HWM in controlled autoregressive scenarios.

Introduction

Block-structured models, characterized by interconnections of linear dynamic and nonlinear static subsystems, have emerged as a prominent research topic in nonlinear parameter estimation. Among block-oriented structures, the Hammerstein and Wiener models are the best known; they are simple and effective configurations with broad applications in chemical engineering, biomedical engineering, control systems, signal processing, and power electronics [1], [2], [3]. The Hammerstein model consists of a static nonlinear block followed by a linear dynamic block, whereas the Wiener model consists of a linear dynamic block cascaded with a static nonlinearity [4]. Combining the Hammerstein and Wiener systems yields the Hammerstein-Wiener model, in which one linear dynamic block is sandwiched between two static nonlinear blocks; it is applied across science and engineering, including nonlinear industrial processes [5], control [6], signal processing [7], and instrumentation [8]. Several parameter estimation procedures have been formulated for identification of the Hammerstein-Wiener model, mainly the one-shot set-membership method [9], the subspace method [10], a blind approach [11], over-parametrization [12], the recursive least squares algorithm [13], the maximum likelihood method [14], an iterative method [15], a multi-signal-based method [16], and the fractional approach [17]. All of these are deterministic procedures widely employed for parameter estimation of Hammerstein-Wiener models, each with its own advantages, applications, and shortcomings; however, stochastic solvers based on evolutionary heuristics have not yet been explored for efficient parameter estimation of Hammerstein-Wiener models.
Nature-inspired heuristic stochastic solvers have been extensively explored for constrained and unconstrained optimization problems arising in physical systems [18], [19], [20], [21], including plasma physics [22], astrophysics [23], atomic physics [24], electrical power systems [25], electrical machines [26], quantum mechanics [27], electronic devices [28], signal processing [29], electric circuits [30], nanofluidic systems [31], energy [32], computer virus models [33], biomedical engineering [34], thermodynamics [35], supply chain management [36], scheduling problems [37], and finance [38]. Evolutionary algorithms have also been applied to parameter estimation of block-oriented models, including controlled autoregressive [39], controlled autoregressive moving average [40], Hammerstein [41], [42], Wiener [43], and feedback nonlinear systems [44]. These applications motivated the authors to exploit evolutionary computing heuristics as an alternative, accurate, and reliable parameter estimation technique for Hammerstein-Wiener systems and their fractional variants, developed along similar lines to those reported in [45], [46]. The potential features of the designed scheme are listed below:

- A new application of evolutionary heuristic paradigms based on Weighted Differential Evolution is introduced for accurate parameter estimation of nonlinear Hammerstein-Wiener systems, with comparative analysis against counterparts to demonstrate its worth and efficacy.
- The designed scheme is effectively implemented to estimate the model parameters from measured input and output data, as well as the internal variables associated with the prior estimates of the subsequent measures.
- The robustness, stability, and convergence of the designed metaheuristic paradigm are established using Hammerstein-Wiener systems with decision-variable vectors of different lengths, corrupted by different process noise scenarios.
- Statistical observations on measures of central tendency and variance further prove the efficacy of the designed WDE methodology as a precise, efficient, stable, robust, and alternative computing platform for system identification of HWM in controlled autoregressive scenarios.

The remainder of the article is structured as follows. Section 2 presents the parameter estimation model of Hammerstein-Wiener systems, the problem formulation, and the designed methodology. Section 3 describes the different Hammerstein-Wiener models and their simulation results in detail. The last section summarizes the study, including conclusions and future recommendations.

Design methodology

In this section, a two-stage method is employed to estimate the parameters of the Hammerstein-Wiener system. In the first phase, the mathematical models of the Hammerstein-Wiener systems are developed along with the definition of the cost function. In the second phase, evolutionary computing algorithms are described as optimization techniques for estimating the parameters of the input and output nonlinear blocks and of the noise model of the Hammerstein-Wiener systems.

Hammerstein-Wiener system model

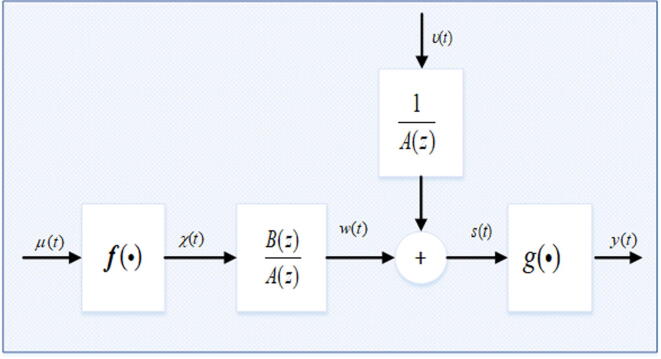

The Hammerstein-Wiener model is a block-oriented model, shown in Fig. 1, and is expressed mathematically as [30]:

| (1) |

| (2) |

| (3) |

| (4) |

where the system input is , is the system’s output, and is zero-mean stochastic white noise, while and represent the system's internal variables. , and are polynomials of known orders and , in the unit backward shift operator and are defined as:

| (5) |

Fig. 1.

The generic block diagram of the Hammerstein-Wiener system.

Moreover, is the nonlinear block’s output having m basis functions with coefficients as:

| (6) |

The output nonlinearity is invertible and defined having basis functions with coefficients as:

| (7) |

Using (1), (2), (5) and (6), can be written as:

| (8) |

Using (3) and (8), can be defined as:

| (9) |

Substituting (9) in (7), we have:

| (10) |

The parameter vector for the Hammerstein-Wiener system from (10) is given as:

| (11) |

Using (11) gives:

| (12) |
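To make the cascade of Fig. 1 concrete, the sketch below simulates a generic Hammerstein-Wiener system in plain Python. Because equations (1)-(7) are not legible in this text, the structure is an assumption: a polynomial input nonlinearity with coefficients `c`, a linear block A(z)x(t) = B(z)ū(t) with A(z) = 1 + a1 z^-1 + ... and B(z) = b1 z^-1 + ..., a polynomial output nonlinearity with coefficients `d`, and additive white noise on the output. The names `poly` and `simulate_hw` are hypothetical.

```python
import random

def poly(coeffs, s):
    """Polynomial basis without a constant term: c1*s + c2*s**2 + ..."""
    return sum(c * s ** (j + 1) for j, c in enumerate(coeffs))

def simulate_hw(u, c, a, b, d, noise_std=0.0, seed=0):
    """Simulate an ASSUMED Hammerstein-Wiener structure; returns (u_bar, x, y).

    u_bar(t) = sum_j c_j u(t)**j                       input nonlinearity
    x(t) = -sum_i a_i x(t-i) + sum_i b_i u_bar(t-i)    linear block, i >= 1
    y(t) = sum_j d_j x(t)**j + v(t)                    output nonlinearity + noise
    """
    rng = random.Random(seed)
    u_bar, x, y = [], [], []
    for t, ut in enumerate(u):
        u_bar.append(poly(c, ut))
        # Values before t = 0 are taken as zero (zero initial conditions).
        xt = -sum(a[i] * x[t - 1 - i] for i in range(len(a)) if t - 1 - i >= 0)
        xt += sum(b[i] * u_bar[t - 1 - i] for i in range(len(b)) if t - 1 - i >= 0)
        x.append(xt)
        y.append(poly(d, xt) + rng.gauss(0.0, noise_std))
    return u_bar, x, y
```

For example, `simulate_hw(u, c=[1.0, 0.5], a=[-0.4], b=[1.0, 0.3], d=[1.0, 0.0, 0.1], noise_std=0.01)` with `u` drawn from a zero-mean, unit-variance Gaussian produces one illustrative data record. The coefficients are arbitrary; `d` is chosen so that the output nonlinearity x + 0.1x³ is monotonic and therefore invertible, as the text requires.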

Theil's inequality coefficient (TIC) is employed to formulate the error function of the Hammerstein-Wiener models as:

| (13) |

Here, stands for the desired output of the ith observation and for the estimated response of the actual output, while l represents the total number of instances. The estimated response for with the respective information vector is mathematically written as:

| (14) |

| (15) |

In the ideal case of parameter estimation, the estimated output approaches its optimal value, i.e., the desired output, so that the error function in (13) tends to zero.
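A minimal sketch of this error function follows. It assumes the standard form of Theil's inequality coefficient, TIC = sqrt(mean((y - ŷ)²)) / (sqrt(mean(y²)) + sqrt(mean(ŷ²))), and additionally assumes that each estimated response is linear in the parameters, ŷ_i = φ_iᵀθ, with φ_i the information vector of the ith instance. The names `tic` and `fitness` are hypothetical.

```python
import math

def tic(desired, estimated):
    """Theil's inequality coefficient: 0 for a perfect fit, at most 1."""
    l = len(desired)
    num = math.sqrt(sum((y - yh) ** 2 for y, yh in zip(desired, estimated)) / l)
    den = (math.sqrt(sum(y * y for y in desired) / l)
           + math.sqrt(sum(yh * yh for yh in estimated) / l))
    # Both signals identically zero: treat as a perfect match.
    return num / den if den > 0 else 0.0

def fitness(theta, phi_rows, desired):
    """TIC between desired outputs and an assumed linear-in-parameters predictor."""
    estimated = [sum(p * t for p, t in zip(phi, theta)) for phi in phi_rows]
    return tic(desired, estimated)
```

An optimizer then minimizes `fitness` over θ; a candidate that reproduces the desired outputs exactly drives it to zero, which is the ideal case described above.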

Optimization procedure

The metaheuristic evolutionary strategies proposed in this work for parameter estimation of the Hammerstein-Wiener model, namely WDE and GAs, are briefly explained here.

WDE is a recent bi-population algorithm from the family of evolutionary heuristics, developed to solve real-valued unimodal and multimodal optimization problems [47]. WDE is capable of efficiently finding the evolutionary search direction. Additionally, population diversity in WDE remains stable and does not decline rapidly, which leads to effective searches in subsequent iterations. Benchmark applications include the GPS network adjustment problem and the pressure-vessel, speed-reducer, and welded-beam design problems [47], as well as camera calibration [48].
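The exact WDE update rules are given in [47] and in Table 1, whose pseudocode is not reproduced in this text. To illustrate only the bi-population idea, the sketch below implements a simplified differential-evolution loop: every generation the population is split at random into two halves, each member of the first half is mutated around a randomly weighted mean of the second half plus a scaled difference of two second-half members, and binomial crossover and greedy selection follow. This is a plain DE variant written for illustration, not the WDE algorithm of [47]; the name `bipop_de`, the population size, and the ranges of the scale factor and crossover rate are assumptions.

```python
import random

def bipop_de(f, bounds, pop_size=40, generations=200, seed=1):
    """Minimize f over a box with a simplified bi-population DE loop.

    Illustrative sketch only: NOT the WDE update rule of the cited reference.
    Returns (best vector, best fitness, best-fitness history per generation).
    """
    if pop_size < 4 or pop_size % 2:
        raise ValueError("pop_size must be an even number >= 4")
    rng = random.Random(seed)
    dim = len(bounds)
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    fit = [f(p) for p in pop]
    history = [min(fit)]
    half = pop_size // 2
    for _ in range(generations):
        order = list(range(pop_size))
        rng.shuffle(order)
        targets, donors = order[:half], order[half:]  # two random sub-populations
        for i in targets:
            # Base vector: donor half combined with random convex weights.
            w = [rng.random() + 1e-12 for _ in donors]
            total = sum(w)
            base = [sum(wk * pop[j][d] for wk, j in zip(w, donors)) / total
                    for d in range(dim)]
            r1, r2 = rng.sample(donors, 2)
            scale = rng.uniform(0.5, 1.0)   # mutation scale F (assumed range)
            cr = rng.random()               # crossover rate, redrawn per target
            forced = rng.randrange(dim)     # at least one gene comes from the mutant
            trial = list(pop[i])
            for d in range(dim):
                if d == forced or rng.random() < cr:
                    v = base[d] + scale * (pop[r1][d] - pop[r2][d])
                    lo, hi = bounds[d]
                    trial[d] = min(max(v, lo), hi)  # clip to the search box
            f_trial = f(trial)
            if f_trial <= fit[i]:           # greedy one-to-one selection
                pop[i], fit[i] = trial, f_trial
        history.append(min(fit))
    best = min(range(pop_size), key=fit.__getitem__)
    return pop[best], fit[best], history
```

For a five-parameter problem such as Model I, a call of the form `bipop_de(f, [(-1.0, 1.0)] * 5)` would be used, where `f` maps a candidate parameter vector to its fitness value; the search box is an arbitrary illustrative choice.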

GAs, introduced by Holland [49], are stochastic, effective, and widely used evolutionary computation algorithms developed to solve real-valued numerical optimization problems [49]. GAs are easy to implement and are robust, simple, efficient, and reliable global search algorithms that use three basic operators, namely crossover, mutation, and selection, to generate new populations with better fitness [50]. Individuals with better fitness are less likely to be trapped in local minima. Recent applications of GAs include electrical circuits [50], supply chains [51], lung cancer [52], and wind speed prediction [53].
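The three GA operators named above can be illustrated with a minimal real-coded GA. The specific choices, binary tournament selection, arithmetic crossover, Gaussian mutation applied with probability `p_mut` per gene, and a single elite, as well as the name `real_ga`, are assumptions for illustration and not the GA settings used in this study.

```python
import random

def real_ga(f, bounds, pop_size=40, generations=300, p_mut=0.1, seed=2):
    """Minimize f over a box with a simple real-coded genetic algorithm."""
    rng = random.Random(seed)
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    for _ in range(generations):
        fit = [f(p) for p in pop]

        def tournament():
            # Selection: the fitter of two randomly drawn individuals is a parent.
            i, j = rng.randrange(pop_size), rng.randrange(pop_size)
            return pop[i] if fit[i] <= fit[j] else pop[j]

        elite = pop[min(range(pop_size), key=fit.__getitem__)]
        children = [list(elite)]                 # elitism: best survives unchanged
        while len(children) < pop_size:
            p1, p2 = tournament(), tournament()
            alpha = rng.random()                 # arithmetic crossover weight
            child = [alpha * a + (1.0 - alpha) * b for a, b in zip(p1, p2)]
            for d, (lo, hi) in enumerate(bounds):
                if rng.random() < p_mut:         # Gaussian mutation, then clip
                    step = rng.gauss(0.0, 0.1 * (hi - lo))
                    child[d] = min(max(child[d] + step, lo), hi)
            children.append(child)
        pop = children
    fit = [f(p) for p in pop]
    best = min(range(pop_size), key=fit.__getitem__)
    return pop[best], fit[best]
```

Elitism makes the best fitness non-increasing from one generation to the next, while crossover between in-bounds parents always yields an in-bounds child, so only mutated genes need clipping.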

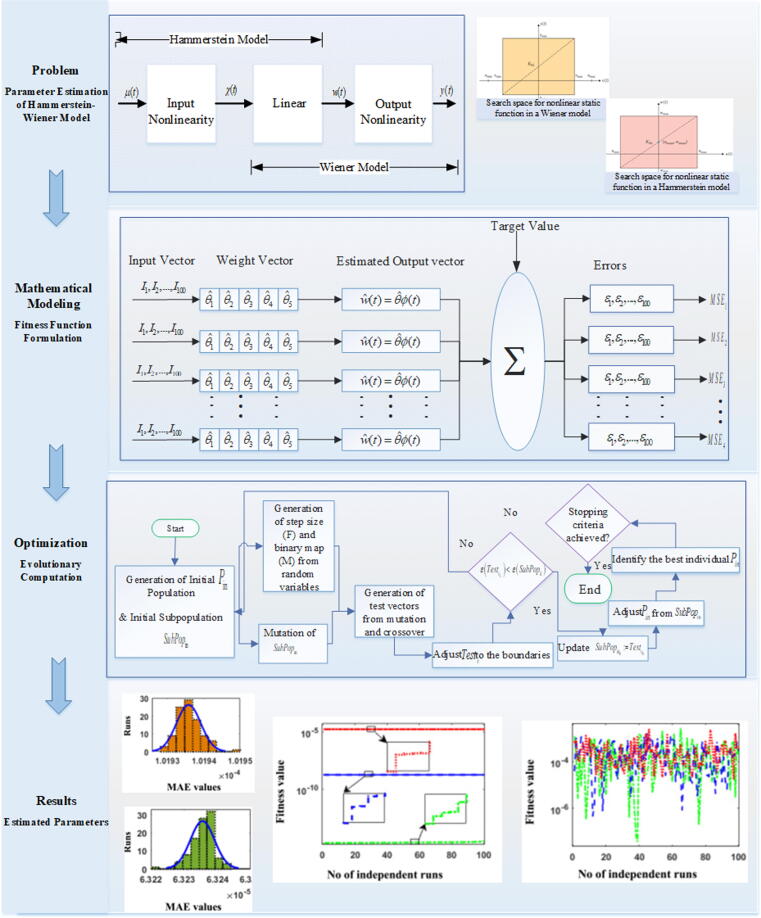

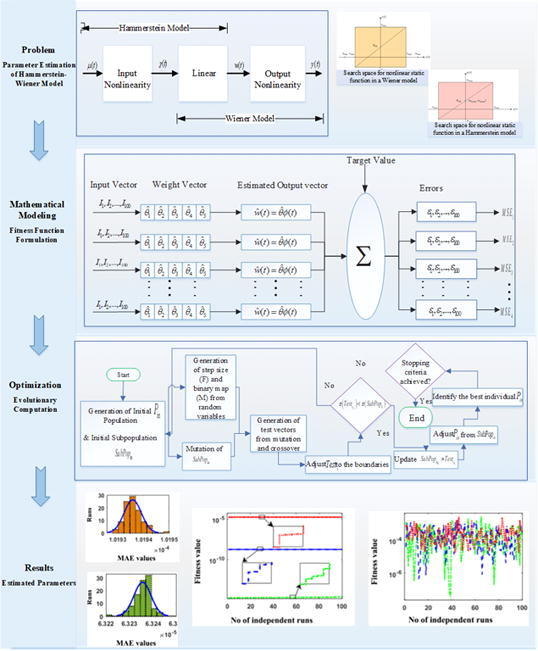

The efficacy of WDE and GAs inspired the authors to use these evolutionary heuristics for finding the optimal parameters of the Hammerstein-Wiener system. A flow chart with the procedural steps of GAs is shown in Fig. 3, the detailed stepwise procedure of WDE for the Hammerstein-Wiener model is given in pseudocode form in Table 1, and the designed methodology of the proposed work is illustrated in Fig. 2.

Fig. 3.

The generic block diagram of Hammerstein.

Table 1.

Pseudocode of WDE.


Fig. 2.

Workflow Diagram of parameter estimation problem of nonlinear Wiener system.

Performance indices

In this study, four performance indices, i.e., , , , and , are used to validate the performance of the proposed evolutionary algorithms. These indices and their mathematical descriptions in terms of the true and estimated parameters are provided in this section.

is mathematically written as:

| (16) |

is mathematically written as:

| (17) |

is defined as:

| (18) |

mathematical formulation is as follows:

| (19) |

The magnitude of these performance metrics should approach zero for an ideal model.
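Equations (16)-(19) are not legible in this text, so the sketch below does not reproduce them. As an assumption, it computes commonly used parameter-space error measures between the true vector θ and an estimate θ̂: mean absolute error, root mean square error, Theil's inequality coefficient, and the normalized parameter error δ = ||θ - θ̂|| / ||θ||. These may differ from the paper's exact definitions; the name `parameter_metrics` is hypothetical.

```python
import math

def parameter_metrics(theta_true, theta_est):
    """Common parameter-space error measures (assumed forms); all are 0 for a perfect estimate.

    theta_true must not be the zero vector (it is used as a normalizer).
    """
    n = len(theta_true)
    diff = [t - e for t, e in zip(theta_true, theta_est)]
    mae = sum(abs(d) for d in diff) / n
    rmse = math.sqrt(sum(d * d for d in diff) / n)
    tic = rmse / (math.sqrt(sum(t * t for t in theta_true) / n)
                  + math.sqrt(sum(e * e for e in theta_est) / n))
    delta = math.sqrt(sum(d * d for d in diff)) / math.sqrt(sum(t * t for t in theta_true))
    return {"MAE": mae, "RMSE": rmse, "TIC": tic, "delta": delta}
```

All four measures are zero when θ̂ = θ and grow as the estimate drifts, which matches the statement that these metrics should approach zero for an ideal model.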

Results and discussion

In this section, the results of experiments conducted with the evolutionary computing heuristics WDE and GAs are discussed for two examples of the Hammerstein-Wiener system that differ in the length of the parameter vector and in the noise variance.

Model: I

In this Hammerstein-Wiener model, five unknown entries of the parameter vector are estimated, with polynomial-type nonlinearities at both the input and the output; its mathematical form is:

| (19) |

The objective function of the nonlinear Hammerstein-Wiener model of Example 1 is formulated as described in equation (22) with K = 20 and N = 6 as:

| (20) |

Model: II

In this example, eight unknown parameters of the nonlinear Hammerstein-Wiener model with a polynomial-type output nonlinearity are estimated; the model is mathematically defined as:

| (21) |

Likewise, the objective function of the nonlinear Hammerstein-Wiener model of Example 2 is given as:

| (22) |

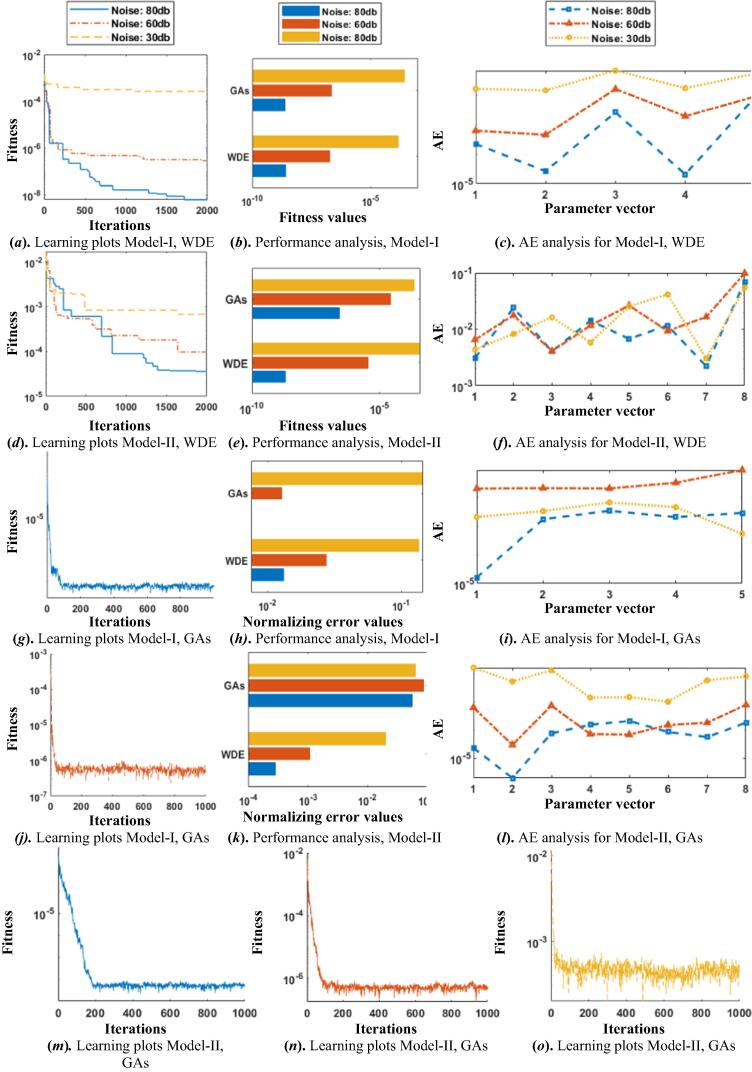

In both Hammerstein-Wiener models, the input signal is a randomly generated signal with zero mean and unit variance, and the noise is a random signal with zero mean and constant variance. Parameter estimation of the Hammerstein-Wiener models is performed with the well-known evolutionary computational heuristics WDE and GAs by optimizing the fitness functions for 20 snapshots. Results based on learning curves and absolute error analysis are given in Fig. 4. The iterative convergence graphs of the fitness for WDE are presented in subfigs. 4(a) and (d) for Models I and II, respectively, while for GAs the learning curves are shown in subfig. 4(g) for Model I at 80 dB, subfig. 4(j) for Model I at 60 dB, and subfigs. 4(m-o) for Model II at 80 dB, 60 dB, and 30 dB, respectively. Both algorithms converge, but the convergence of WDE is slightly superior to that of GAs. Comparisons based on fitness values are shown in subfigs. 5(b) and 5(e) for Models I and II, respectively, while normalized error comparisons are presented in subfigs. 5(h) and 5(k) for Models I and II, respectively. These plots show that the fitness values achieved by WDE are higher than those of GAs, and that the fitness values decrease as the noise level increases. In addition, the absolute errors (AEs) of the two Hammerstein-Wiener models for the three noise scenarios are shown in subfigs. 5(c) and 5(f) for Models I and II, respectively, with WDE, and in subfigs. 5(i) and 5(l) for Models I and II, respectively, with GAs. For Model I, the AE magnitudes are of the order of 10−9, 10−7, and 10−4 at noise levels of 80 dB, 60 dB, and 30 dB with WDE, and 10−7, 10−5, and 10−4 at the same noise levels with GAs.
Consistent accuracy is found for both algorithms, while a slight degradation in accuracy is observed as the noise level increases.
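The noise levels above are quoted in dB, but the text does not state how they are defined. If they are read as signal-to-noise ratios (an assumption), the white-noise variance for a target SNR follows from σ² = P_y / 10^(SNR/10), where P_y is the mean power of the noise-free output; at 80 dB, 60 dB, and 30 dB the noise variance is then 10^-8, 10^-6, and 10^-3 times the signal power. The helper names below are hypothetical.

```python
import math
import random

def noise_for_snr(clean, snr_db, seed=0):
    """Zero-mean white Gaussian noise with power P_clean / 10**(snr_db / 10)."""
    power = sum(s * s for s in clean) / len(clean)
    sigma = math.sqrt(power / 10 ** (snr_db / 10))
    rng = random.Random(seed)
    return [rng.gauss(0.0, sigma) for _ in clean], sigma

def measured_snr_db(clean, noise):
    """Empirical SNR in dB of a noise record relative to a clean record."""
    p_signal = sum(s * s for s in clean) / len(clean)
    p_noise = sum(v * v for v in noise) / len(noise)
    return 10 * math.log10(p_signal / p_noise)
```

Under this reading, a higher dB value means less noise, so 30 dB is the noisiest of the three scenarios.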

Fig. 4.

Plots for Iterative adaptation of fitness function for the HW model.

Fig. 5.

Plots based on fitness values for the HW model.

The accuracy of the designed scheme is analysed over 100 iterations for the two Hammerstein-Wiener models, and the resulting fitness values are plotted on a semilogarithmic scale in Fig. 6 for better analysis. The magnitudes are close to 10−9, 10−7, and 10−4 at noise levels of 80 dB, 60 dB, and 30 dB for Model I, and close to 10−5, 10−4, and 10−3 at the same noise levels for Model II with WDE. A similar trend is found for GAs. These very small magnitudes confirm the accuracy of the scheme.

Fig. 6.

Comparison of the results based on performance indices for HW model for WDE.

The efficacy and reliability of the proposed evolutionary heuristics are validated through the performance indices, i.e., the error function, , , , and . Results for the best run, based on minimum fitness, together with the complexity measures of time, generations consumed, and function counts, are listed in Table 2. The index values are close to 10−3 and 10−2 for Model I with WDE and GAs, respectively, while for Model II the magnitudes are close to 10−2 and 10−1 with WDE and GAs, respectively. Values of the performance indices close to zero demonstrate the consistency and precision of the proposed evolutionary algorithms.

Table 2.

Performance comparison on the best runs of WDE and GAs algorithm.

| Algorithm | Model | Noise (dB) | e | | | | | Time | Gens | Funcount |
|---|---|---|---|---|---|---|---|---|---|---|
| WDE | I | 80 | 5.65E−09 | 1.32E−02 | 2.35E−03 | 4.05E−03 | 6.61E−03 | 1.55 | 2000 | 52,052 |
| | | 60 | 3.84E−07 | 1.64E−02 | 5.71E−03 | 8.44E−03 | 1.38E−02 | 1.62 | 2000 | 52,052 |
| | | 30 | 2.88E−04 | 8.19E−02 | 3.46E−02 | 4.14E−02 | 6.60E−02 | 1.47 | 2000 | 52,052 |
| | II | 80 | 1.98E−05 | 3.24E−02 | 1.69E−02 | 2.36E−02 | 2.81E−02 | 1.55 | 2000 | 52,052 |
| | | 60 | 3.64E−05 | 2.18E−01 | 2.38E−02 | 3.60E−02 | 4.22E−02 | 0.77 | 2000 | 52,052 |
| | | 30 | 5.60E−04 | 3.09E−02 | 1.99E−02 | 2.68E−02 | 3.17E−02 | 0.47 | 2000 | 52,052 |
| GAs | I | 80 | 4.80E−09 | 7.55E−03 | 2.01E−03 | 2.31E−03 | 3.77E−03 | 1000 | 1000 | 320,320 |
| | | 60 | 3.86E−07 | 4.62E−01 | 3.38E−03 | 2.31E−03 | 6.44E−03 | 1000 | 1000 | 320,320 |
| | | 30 | 3.58E−04 | 9.74E−01 | 3.61E−02 | 2.31E−03 | 7.37E−02 | 1000 | 1000 | 320,320 |
| | II | 80 | 4.78E−09 | 5.22E−01 | 9.90E−05 | 2.31E−03 | 1.45E−04 | 142.09 | 1000 | 320,320 |
| | | 60 | 4.08E−07 | 3.86E−01 | 3.33E−04 | 2.31E−03 | 5.37E−04 | 137.56 | 1000 | 320,320 |
| | | 30 | 4.58E−04 | 2.40E−01 | 6.59E−03 | 2.31E−03 | 1.01E−02 | 131.62 | 1000 | 320,320 |

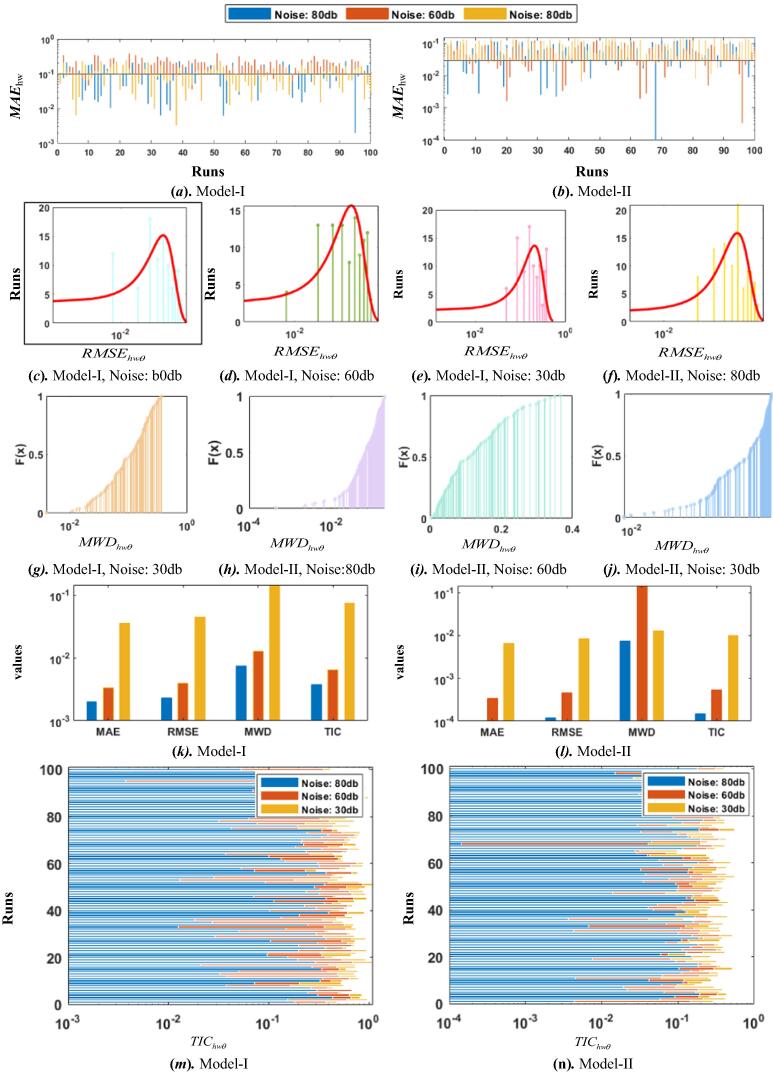

The performance indices, i.e., the error function, , , and , are used to further examine the accuracy of the designed schemes, and the comparisons are shown in pictorial form in Figs. 6 and 7 for WDE and GAs, respectively. The magnitudes for 100 independent runs are shown in subfigs. 6(a) and 6(b) for Models I and II, respectively, for WDE, and in subfigs. 7(a) and 7(b) for Models I and II, respectively, for GAs. The MAE values for WDE for Models I and II lie in the range 10−3 to 10−1 for noise levels of 80 dB, 60 dB, and 30 dB. To examine the precision further, histograms of the RMSE values for WDE and GAs are shown in subfigs. 6(c-f) and 7(c-f), respectively. In addition, empirical cumulative distribution function (ECDF) plots of the MWD magnitudes for the Hammerstein-Wiener models are shown in subfigs. 6(g-j) and 7(g-j) for WDE and GAs, respectively. Comparative bar graphs for Models I and II are plotted in subfigs. 6(k-l) and 7(k-l) for WDE and GAs, respectively. To further endorse the accuracy, stacked bar graphs of the TIC values for Models I and II are shown in subfigs. 6(m-n) and 7(m-n) for WDE and GAs, respectively, with a similar trend in the GA outcomes. All these graphical illustrations validate the consistent accuracy of the two proposed heuristic strategies for parameter estimation of Hammerstein-Wiener systems.
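The histogram and ECDF views described above can be computed directly from per-run index values, such as RMSE or MWD over 100 runs. A minimal standard-library sketch follows; binning by decade is an assumption suited to values that span several orders of magnitude, and the names `ecdf` and `decade_histogram` are hypothetical.

```python
import math
from bisect import bisect_right
from collections import Counter

def ecdf(values):
    """Return F(x): the fraction of runs whose value is <= x."""
    xs = sorted(values)
    n = len(xs)
    return lambda x: bisect_right(xs, x) / n

def decade_histogram(values):
    """Count positive values per decade, keyed by floor(log10(value))."""
    return Counter(math.floor(math.log10(v)) for v in values)
```

For instance, `ecdf(rmse_values)(1e-3)` would give the fraction of runs with RMSE at most 10−3, where `rmse_values` is a hypothetical list of per-run results.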

Fig. 7.

Comparison of the results based on performance indices for HW model for GAs.

Further analysis of precision over 100 independent runs of the algorithms is carried out through the statistical performance measures of mean, best, and worst values, and the statistical outcomes of the two proposed evolutionary heuristics are listed in Table 3 and Table 4 for Models I and II, respectively, with three noise levels added to the Hammerstein-Wiener system. The performance of WDE and GAs decreases as the noise level increases, and the same trend is observed as the number of unknown parameters of the model increases, since fewer degrees of freedom make the parameter estimation problem stiffer.
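How the Best, Mean, and Worst rows of Tables 3 and 4 are formed is not spelled out. One plausible reading, which is an assumption here, is that Best and Worst are the parameter vectors of the runs with the lowest and highest fitness, and Mean is the element-wise average of the parameter vectors over all runs. The name `run_statistics` is hypothetical.

```python
def run_statistics(runs):
    """Summarize independent runs given as (fitness, theta) pairs.

    Best / Worst: parameter vector of the run with the lowest / highest fitness.
    Mean: element-wise average of the parameter vectors over all runs.
    """
    best = min(runs, key=lambda r: r[0])[1]
    worst = max(runs, key=lambda r: r[0])[1]
    n = len(runs)
    dim = len(runs[0][1])
    mean = [sum(r[1][i] for r in runs) / n for i in range(dim)]
    return {"Best": best, "Mean": mean, "Worst": worst}
```

Under this reading a Mean entry need not lie between the corresponding Best and Worst entries, because those two rows each come from a single run.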

Table 3.

Comparative analysis through results of statistics for Model I of system.

| Model | Algorithm | Noise (dB) | Value | i = 1 | i = 2 | i = 3 | i = 4 | i = 5 |
|---|---|---|---|---|---|---|---|---|
| I | WDE | 80 | Best | 0.550 | 0.150 | −0.303 | 0.180 | 0.141 |
| | | | Mean | 0.550 | 0.150 | −0.234 | 0.180 | 0.151 |
| | | | Worst | 0.550 | 0.150 | 0.000 | 0.180 | 0.143 |
| | | 60 | Best | 0.551 | 0.150 | −0.284 | 0.182 | 0.159 |
| | | | Mean | 0.550 | 0.150 | −0.242 | 0.179 | 0.177 |
| | | | Worst | 0.555 | 0.148 | −0.390 | 0.181 | 0.470 |
| | | 30 | Best | 0.532 | 0.165 | −0.369 | 0.162 | 0.204 |
| | | | Mean | 0.522 | 0.144 | −0.252 | 0.187 | 0.507 |
| | | | Worst | 0.646 | 0.113 | −0.474 | 0.328 | 0.978 |
| | GAs | 80 | Best | 0.550 | 0.148 | −0.303 | 0.182 | 0.153 |
| | | | Mean | 0.550 | 0.126 | −0.512 | 0.307 | 0.150 |
| | | | Worst | 0.550 | 0.047 | −0.950 | 0.570 | 0.150 |
| | | 60 | Best | 0.552 | 0.153 | −0.294 | 0.176 | 0.149 |
| | | | Mean | 0.550 | 0.150 | −0.458 | 0.275 | 0.151 |
| | | | Worst | 0.550 | 0.045 | −0.993 | 0.604 | 0.131 |
| | | 30 | Best | 0.530 | 0.129 | −0.320 | 0.212 | 0.062 |
| | | | Mean | 0.551 | 0.084 | −0.603 | 0.354 | 0.432 |
| | | | Worst | 0.552 | 0.054 | −0.944 | 0.566 | 0.977 |
| Actual θ | | | | 0.550 | 0.150 | −0.3-- | 0.180 | 0.150 |

Table 4.

Comparison through results of statistics for Model II of system.

| Model | Algorithm | Noise (dB) | Value | i = 1 | i = 2 | i = 3 | i = 4 | i = 5 | i = 6 | i = 7 | i = 8 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| II | WDE | 80 | Best | 0.55 | 0.82 | 0.15 | −0.36 | 0.49 | 0.17 | −0.15 | 0.08 |
| | | | Mean | 0.54 | 0.78 | 0.11 | −0.26 | 0.70 | 0.27 | −0.20 | 0.27 |
| | | | Worst | 0.44 | 0.63 | 0.07 | −0.17 | 1.00 | 0.41 | −0.25 | 0.45 |
| | | 60 | Best | 0.56 | 0.78 | 0.15 | −0.34 | 0.53 | 0.19 | −0.17 | 0.05 |
| | | | Mean | 0.54 | 0.79 | 0.11 | −0.25 | 0.72 | 0.28 | −0.21 | 0.24 |
| | | | Worst | 0.50 | 0.73 | 0.07 | −0.16 | 1.00 | 0.50 | −0.22 | 0.44 |
| | | 30 | Best | 0.55 | 0.81 | 0.13 | −0.34 | 0.53 | 0.22 | −0.15 | 0.10 |
| | | | Mean | 0.54 | 0.77 | 0.11 | −0.26 | 0.70 | 0.28 | −0.19 | 0.32 |
| | | | Worst | 0.44 | 0.68 | 0.07 | −0.21 | 0.98 | 0.40 | −0.26 | 0.62 |
| | GAs | 80 | Best | 0.55 | 0.80 | 0.15 | −0.35 | 0.50 | 0.18 | −0.15 | 0.15 |
| | | | Mean | 0.55 | 0.80 | 0.12 | −0.28 | 0.68 | 0.24 | −0.20 | 0.15 |
| | | | Worst | 0.55 | 0.80 | 0.09 | −0.22 | 0.81 | 0.29 | −0.24 | 0.15 |
| | | 60 | Best | 0.55 | 0.80 | 0.15 | −0.22 | 0.80 | 0.29 | −0.24 | 0.15 |
| | | | Mean | 0.55 | 0.80 | 0.12 | −0.22 | 0.67 | 0.24 | −0.20 | 0.15 |
| | | | Worst | 0.55 | 0.80 | 0.09 | −0.28 | 0.77 | 0.28 | −0.23 | 0.15 |
| | | 30 | Best | 0.56 | 0.80 | 0.08 | −0.20 | 0.91 | 0.30 | −0.26 | 0.18 |
| | | | Mean | 0.55 | 0.80 | 0.11 | −0.25 | 0.76 | 0.27 | −0.23 | 0.15 |
| | | | Worst | 0.56 | 0.82 | 0.13 | −0.29 | 0.57 | 0.20 | −0.17 | 0.10 |
| Actual θ | | | | 0.55 | 0.80 | 0.15 | 0.35 | 0.50 | 0.18 | −0.15 | 0.15 |

The complexity of the proposed evolutionary algorithms WDE and GAs is assessed through the average time spent, the mean number of generations executed, and the average number of fitness function evaluations during the search for the optimal parameters of the Hammerstein-Wiener models. Computational complexity is analysed over 100 independent runs of both evolutionary schemes, and the results are listed in Table 5. For Model I, the average time, generations completed, and function evaluations are around 0.35 ± 0.004, 200, and 8040 for WDE, and 1.13 ± 0.096, 600, and 24,040 for GAs. Of the two evolutionary heuristics, WDE is therefore less computationally complex than GAs. In addition, the computational complexity of WDE and GAs rises with the dimensionality of the Hammerstein-Wiener model. All computations were performed on a workstation with an Intel(R) Core(TM) i7-4770 CPU @ 3.40 GHz and 8 GB of RAM.

Table 5.

Comparison through complexity operators for system.

| Algorithm | Model | Noise (dB) | Time (mean) | Time (STD) | Generations (mean) | Generations (STD) | Function counts (mean) | Function counts (STD) |
|---|---|---|---|---|---|---|---|---|
| WDE | I | 80 | 0.358 | 0.003 | 200 | 0 | 8040 | 0 |
| | | 60 | 0.362 | 0.004 | 200 | 0 | 8040 | 0 |
| | | 30 | 0.358 | 0.002 | 200 | 0 | 8040 | 0 |
| | II | 80 | 0.699 | 0.004 | 400 | 0 | 16,040 | 0 |
| | | 60 | 0.711 | 0.006 | 400 | 0 | 16,040 | 0 |
| | | 30 | 0.700 | 0.003 | 400 | 0 | 16,040 | 0 |
| GAs | I | 80 | 1.038 | 0.004 | 600 | 0 | 24,040 | 0 |
| | | 60 | 1.127 | 0.096 | 600 | 0 | 24,040 | 0 |
| | | 30 | 1.044 | 0.012 | 600 | 0 | 24,040 | 0 |
| | II | 80 | 0.189 | 0.003 | 200 | 0 | 4020 | 0 |
| | | 60 | 0.186 | 0.001 | 200 | 0 | 4020 | 0 |
| | | 30 | 0.203 | 0.022 | 200 | 0 | 4020 | 0 |
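Table 5 reports the mean and standard deviation of time, generations, and function counts over 100 runs. A minimal way to collect such measures is sketched below, assuming wall-clock time from `time.perf_counter`, a wrapper that counts objective calls, and the sample standard deviation across runs; these choices and the names `CountingObjective`, `profile_run`, and `summarize` are hypothetical, not the authors' tooling.

```python
import statistics
import time

class CountingObjective:
    """Wrap an objective function and count how many times it is evaluated."""

    def __init__(self, f):
        self.f = f
        self.calls = 0

    def __call__(self, theta):
        self.calls += 1
        return self.f(theta)

def profile_run(optimizer, f, *args, **kwargs):
    """Run optimizer(wrapped_f, *args, **kwargs); return (result, seconds, calls)."""
    wrapped = CountingObjective(f)
    start = time.perf_counter()
    result = optimizer(wrapped, *args, **kwargs)
    return result, time.perf_counter() - start, wrapped.calls

def summarize(values):
    """Mean and sample standard deviation of a measure across independent runs."""
    return statistics.mean(values), statistics.stdev(values)
```

Repeating `profile_run` for each independent run and passing the collected times or call counts to `summarize` yields mean/STD pairs in the layout of Table 5. A zero STD, as in the generation and function-count columns, is consistent with every run using the same fixed budget.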

Conclusions and future recommendations

In this study, a novel application of evolutionary heuristic paradigms based on Weighted Differential Evolution and Genetic Algorithms is exploited for accurate parameter estimation of nonlinear Hammerstein-Wiener systems under various noise scenarios and numbers of unknown elements in the parameter vector. Experimental results show that both algorithms are reasonably convergent and accurate; however, WDE performs relatively better in terms of precision and complexity indices. Statistical results validate that the proposed evolutionary algorithms are quite efficient, but their performance declines as the noise level increases. Comparative analysis via different performance indices, i.e., , , , and , also validates the consistency of the designed procedures. Furthermore, the computational complexity of GAs is found to be higher than that of WDE in terms of time consumed, iterations completed, and function counts. The complexity of WDE and GAs also increases with the length of the parameter vector of the Hammerstein-Wiener model, and the same trend is observed with increasing noise levels. The newly designed evolutionary heuristics are effective algorithms for parameter estimation problems of block-oriented models.

In the future, newly introduced nature-inspired heuristics [54], [27], [55], [56], [50], [57], such as the firefly algorithm, the gravitational search optimization algorithm, the bat algorithm, ant bee colony optimization, and their recently introduced fractional variants, can be good alternatives for boosting the accuracy of the proposed Hammerstein-Wiener structures.

Compliance with Ethics Requirements

Human and animal rights statements: All the authors of the manuscript declared that there is no research involving human participants and/or animals.

Informed consent : All the authors of the manuscript declared that there is no material that required informed consent.

Data Availability: My manuscript has no data associated with it.

CRediT authorship contribution statement

Ammara Mehmood: Conceptualization, Methodology, Software, Investigation. Muhammad Asif Zahoor Raja: Visualization, Formal analysis, Validation.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Footnotes

Peer review under responsibility of Cairo University.

Contributor Information

Ammara Mehmood, Email: ammara@ee.knu.ac.kr.

Muhammad Asif Zahoor Raja, Email: rajamaz@yuntech.edu.tw.

References

- 1.Ayala H.V.H., Habineza D., Rakotondrabe M., dos Santos Coelho L. Nonlinear black-box system identification through coevolutionary algorithms and radial basis function artificial neural networks. Appl Soft Comput. 2020;87:105990. doi: 10.1016/j.asoc.2019.105990. [DOI] [Google Scholar]

- 2.Eykhoff P. vol. 14. Wiley; New York: 1974. (System identification). [Google Scholar]

- 3.Saniei E., Setayeshi S., Akbari M.E., Navid M. Parameter estimation of breast tumour using dynamic neural network from thermal pattern. J Adv Res. 2016;7(6):1045–1055. doi: 10.1016/j.jare.2016.05.005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Li F., Jia L.i. Parameter estimation of Hammerstein-Wiener nonlinear system with noise using special test signals. Neurocomputing. 2019;344:37–48. [Google Scholar]

- 5.Zhang J., Tang Z., Xie Y., Li F., Ai M., Zhang G., et al. Disturbance-encoding-based neural Hammerstein-Wiener model for industrial process predictive control. IEEE Trans Syst, Man, Cybernet: Syst. 2022;52(1):606–617. [Google Scholar]

- 6.Cai H., Li P., Su C., Cao J. Double-layered nonlinear model predictive control based on Hammerstein-Wiener model with disturbance rejection. Measure Control. 2018;51(7-8):260–275. [Google Scholar]

- 7.Moriyasu R., Ikeda T., Kawaguchi S., Kashima K. Structured Hammerstein-Wiener model learning for model predictive control. IEEE Control Syst Lett. 2022;6:397–402. [Google Scholar]

- 8.Bai J., Mao Z., Pu T. Recursive identification for multi-input–multi-output Hammerstein-Wiener system. Int J Control. 2019;92(6):1457–1469. [Google Scholar]

- 9.Cerone V., Razza V., Regruto D. One-shot set-membership identification of Generalized Hammerstein-Wiener systems. Automatica. 2020;118:109028. doi: 10.1016/j.automatica.2020.109028. [DOI] [Google Scholar]

- 10.Luo X.-S., Song Y.-D. Data-driven predictive control of Hammerstein-Wiener systems based on subspace identification. Inf Sci. 2018;422:447–461. [Google Scholar]

- 11.Bai E.-W. A blind approach to the Hammerstein-Wiener model identification. Automatica. 2002;38(6):967–979. [Google Scholar]

- 12.Abouda S.E., Elloumi M., Koubaa Y., Chaari A. Over parameterisation and optimisation approaches for identification of nonlinear stochastic systems described by Hammerstein-Wiener models. Int J Model Ident Control. 2019;33(1):61–75. [Google Scholar]

- 13.Kozek M., Hametner C. Block-oriented identification of Hammerstein/Wiener-models using the RLS-algorithm. Int J Appl Electromagnet Mech. 2007;25(1-4):529–535. [Google Scholar]

- 14.Wills A., Schön T.B., Ljung L., Ninness B. Identification of hammerstein–wiener models. Automatica. 2013;49(1):70–81. [Google Scholar]

- 15.Vörös J. Iterative identification of nonlinear dynamic systems with output backlash using three-block cascade models. Nonlinear Dyn. 2015;79(3):2187–2195. [Google Scholar]

- 16.Park H.C., Sung S.W., Lee J. Modeling of Hammerstein-Wiener processes with special input test signals. Ind Eng Chem Res. 2006;45(3):1029–1038. [Google Scholar]

- 17.Giordano G., Gros S., Sjöberg J. An improved method for Wiener-Hammerstein system identification based on the Fractional Approach. Automatica. 2018;94:349–360. [Google Scholar]

- 18.Radaideh M.I., Shirvan K. Rule-based reinforcement learning methodology to inform evolutionary algorithms for constrained optimization of engineering applications. Knowl-Based Syst. 2021;217:106836. doi: 10.1016/j.knosys.2021.106836. [DOI] [Google Scholar]

- 19.Binh H.T.T., Thanh P.D., Thang T.B. New approach to solving the clustered shortest-path tree problem based on reducing the search space of evolutionary algorithm. Knowl-Based Syst. 2019;180:12–25. [Google Scholar]

- 20.MiarNaeimi F., Azizyan G., Rashki M. Horse herd optimization algorithm: A nature-inspired algorithm for high-dimensional optimization problems. Knowl-Based Syst. 2021;213:106711. doi: 10.1016/j.knosys.2020.106711. [DOI] [Google Scholar]

- 21.Salgotra R., Singh U., Singh S., Mittal N. A hybridized multi-algorithm strategy for engineering optimization problems. Knowl-Based Syst. 2021;217:106790. doi: 10.1016/j.knosys.2021.106790. [DOI] [Google Scholar]

- 22.Jadoon I., Raja M.A.Z., Junaid M., Ahmed A., Rehman A.u., Shoaib M. Design of evolutionary optimized finite difference based numerical computing for dust density model of nonlinear Van-der Pol Mathieu’s oscillatory systems. Math Comput Simul. 2021;181:444–470. [Google Scholar]

- 23.Sabir Z., Baleanu D., Raja M.A.Z., Guirao J.L.G. Design of neuro-swarming heuristic solver for multi-pantograph singular delay differential equation. Fractals. 2021;29(05):2140022. doi: 10.1142/S0218348X21400223. [DOI] [Google Scholar]

- 24.Sabir Z., Raja M.A.Z., Baleanu D. Fractional Mayer Neuro-swarm heuristic solver for multi-fractional Order doubly singular model based on Lane-Emden equation. Fractals. 2021;29(05):2140017. doi: 10.1142/S0218348X2140017X. [DOI] [Google Scholar]

- 25.Khan B.S., Raja M.A.Z., Qamar A., Chaudhary N.I. Design of moth flame optimization heuristics for integrated power plant system containing stochastic wind. Appl Soft Comput. 2021;104:107193. doi: 10.1016/j.asoc.2021.107193. [DOI] [Google Scholar]

- 26.Zhang J., Yao X., Li Y. Improved evolutionary algorithm for parallel batch processing machine scheduling in additive manufacturing. Int J Prod Res. 2020;58(8):2263–2282.

- 27.Houssein E.H., Mahdy M.A., Eldin M.G., Shebl D., Mohamed W.M., Abdel-Aty M. Optimizing quantum cloning circuit parameters based on adaptive guided differential evolution algorithm. J Adv Res. 2021;29:147–157. doi: 10.1016/j.jare.2020.10.001.

- 28.Rodríguez A., Alejo-Reyes A., Cuevas E., Beltran-Carbajal F., Rosas-Caro J.C. An evolutionary algorithm-based PWM strategy for a hybrid power converter. Mathematics. 2020;8(8):1247.

- 29.Mehmood A., Chaudhary N.I., Zameer A., Raja M.A.Z. Novel computing paradigms for parameter estimation in power signal models. Neural Comput Appl. 2020;32(10):6253–6282.

- 30.Mehmood A., Zameer A., Aslam M.S., Raja M.A.Z. Design of nature-inspired heuristic paradigm for systems in nonlinear electrical circuits. Neural Comput Appl. 2020;32(11):7121–7137.

- 31.Raja M.A.Z., Mehmood A., Khan A.A., Zameer A. Integrated intelligent computing for heat transfer and thermal radiation-based two-phase MHD nanofluid flow model. Neural Comput Appl. 2020;32(7):2845–2877.

- 32.Shahid F., Zameer A., Mehmood A., Raja M.A.Z. A novel wavenets long short term memory paradigm for wind power prediction. Appl Energy. 2020;269:115098. doi: 10.1016/j.apenergy.2020.115098.

- 33.Sabir Z., Umar M., Raja M.A.Z., Baleanu D. Applications of Gudermannian neural network for solving the SITR fractal system. Fractals. 2021;29(08). doi: 10.1142/S0218348X21502509.

- 34.Mehmood A., Zameer A., Chaudhary N.I., Ling S.H., Raja M.A.Z. Design of meta-heuristic computing paradigms for Hammerstein identification systems in electrically stimulated muscle models. Neural Comput Appl. 2020;32(16):12469–12497.

- 35.Mehmood A., Afsar K., Zameer A., Awan S.E., Raja M.A.Z. Integrated intelligent computing paradigm for the dynamics of micropolar fluid flow with heat transfer in a permeable walled channel. Appl Soft Comput. 2019;79:139–162.

- 36.Niu B., Tan L., Liu J., Liu J., Yi W., Wang H. Cooperative bacterial foraging optimization method for multi-objective multi-echelon supply chain optimization problem. Swarm Evol Comput. 2019;49:87–101.

- 37.Zou W.-Q., Pan Q.-K., Wang L. An effective multi-objective evolutionary algorithm for solving the AGV scheduling problem with pickup and delivery. Knowl-Based Syst. 2021;218:106881. doi: 10.1016/j.knosys.2021.106881.

- 38.Yang X., Liu Y., Park G.-K. Parameter estimation of uncertain differential equation with application to financial market. Chaos Solitons Fractals. 2020;139:110026. doi: 10.1016/j.chaos.2020.110026.

- 39.Mehmood A., Chaudhary N.I., Zameer A., Raja M.A.Z. Backtracking search optimization heuristics for nonlinear Hammerstein controlled auto regressive auto regressive systems. ISA Trans. 2019;91:99–113. doi: 10.1016/j.isatra.2019.01.042.

- 40.Mehmood A., Zameer A., Raja M.A.Z., Bibi R., Chaudhary N.I., Aslam M.S. Nature-inspired heuristic paradigms for parameter estimation of control autoregressive moving average systems. Neural Comput Appl. 2019;31(10):5819–5842.

- 41.Mehmood A., Chaudhary N.I., Zameer A., Raja M.A.Z. Novel computing paradigms for parameter estimation in Hammerstein controlled auto regressive auto regressive moving average systems. Appl Soft Comput. 2019;80:263–284.

- 42.Mehmood A., Zameer A., Chaudhary N.I., Raja M.A.Z. Backtracking search heuristics for identification of electrical muscle stimulation models using Hammerstein structure. Appl Soft Comput. 2019;84:105705. doi: 10.1016/j.asoc.2019.105705.

- 43.Hatanaka T., Uosaki K., Koga M. Evolutionary computation approach to Wiener model identification. In: Proceedings of the 2002 Congress on Evolutionary Computation, CEC'02 (Cat. No. 02TH8600), vol. 1. IEEE; 2002. p. 914–919.

- 44.Mehne S.H.H., Mirjalili S. Moth-flame optimization algorithm: theory, literature review, and application in optimal nonlinear feedback control design. Nature-Inspired Optimizers. 2020:143–166.

- 45.Baleanu D., Sajjadi S.S., Jajarmi A., Defterli Ö. On a nonlinear dynamical system with both chaotic and nonchaotic behaviors: a new fractional analysis and control. Adv Differ Equ. 2021;2021(1):234.

- 46.Jajarmi A., Baleanu D., Zarghami Vahid K., Mobayen S. A general fractional formulation and tracking control for immunogenic tumor dynamics. Math Methods Appl Sci. 2022;45(2):667–680.

- 47.Civicioglu P., Besdok E., Gunen M.A., Atasever U.H. Weighted differential evolution algorithm for numerical function optimization: a comparative study with cuckoo search, artificial bee colony, adaptive differential evolution, and backtracking search optimization algorithms. Neural Comput Appl. 2020;32(8):3923–3937.

- 48.Gunen M.A., Besdok E., Civicioglu P., Atasever U.H. Camera calibration by using weighted differential evolution algorithm: a comparative study with ABC, PSO, COBIDE, DE, CS, GWO, TLBO, MVMO, FOA, LSHADE, ZHANG and BOUGUET. Neural Comput Appl. 2020;32(23):17681–17701.

- 49.Goldberg D.E., Holland J.H. Genetic algorithms and machine learning. 1988.

- 50.Ushakov P.A., Maksimov K.O., Stoychev S.V., Gravshin V.G., Kubanek D., Koton J. Synthesis of elements with fractional-order impedance based on homogenous distributed resistive-capacitive structures and genetic algorithm. J Adv Res. 2020;25:275–283. doi: 10.1016/j.jare.2020.06.021.

- 51.Nezamoddini N., Gholami A., Aqlan F. A risk-based optimization framework for integrated supply chains using genetic algorithm and artificial neural networks. Int J Prod Econ. 2020;225:107569. doi: 10.1016/j.ijpe.2019.107569.

- 52.Maleki N., Zeinali Y., Niaki S.T.A. A k-NN method for lung cancer prognosis with the use of a genetic algorithm for feature selection. Expert Syst Appl. 2021;164:113981. doi: 10.1016/j.eswa.2020.113981.

- 53.Zameer A., Arshad J., Khan A., Raja M.A.Z. Intelligent and robust prediction of short term wind power using genetic programming based ensemble of neural networks. Energy Convers Manage. 2017;134:361–372.

- 54.Sadhu A.K., Konar A., Bhattacharjee T., Das S. Synergism of firefly algorithm and Q-learning for robot arm path planning. Swarm Evol Comput. 2018;43:50–68.

- 55.Kusyk J., Sahin C.S., Umit Uyar M., Urrea E., Gundry S. Self-organization of nodes in mobile ad hoc networks using evolutionary games and genetic algorithms. J Adv Res. 2011;2(3):253–264.

- 56.Han Z., Li S., Liu H. Composite learning sliding mode synchronization of chaotic fractional-order neural networks. J Adv Res. 2020;25:87–96. doi: 10.1016/j.jare.2020.04.006.

- 57.Gao W., Chen X., Chen D. Genetic programming approach for predicting service life of tunnel structures subject to chloride-induced corrosion. J Adv Res. 2019;20:141–152. doi: 10.1016/j.jare.2019.07.001.