Abstract

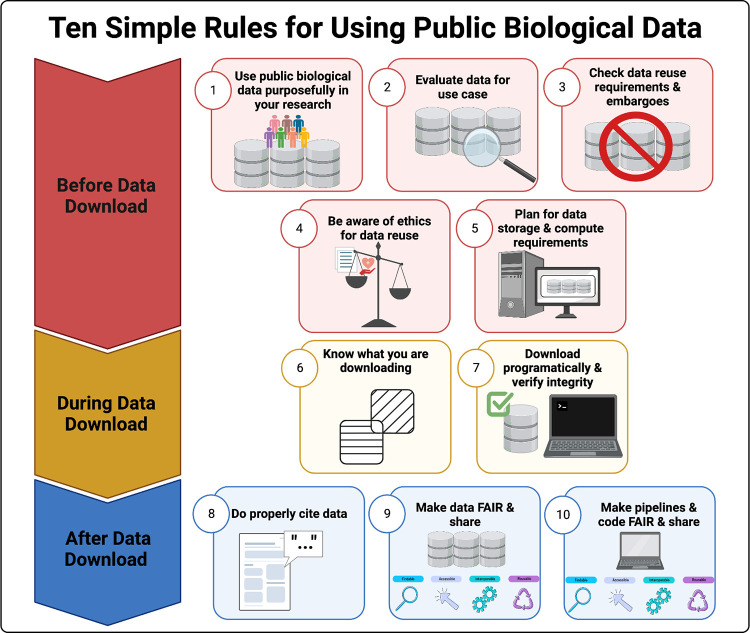

With an increasing amount of biological data available publicly, there is a need for a guide on how to successfully download and use this data. The 10 simple rules for using public biological data are: (1) use public data purposefully in your research; (2) evaluate data for your use case; (3) check data reuse requirements and embargoes; (4) be aware of ethics for data reuse; (5) plan for data storage and compute requirements; (6) know what you are downloading; (7) download programmatically and verify integrity; (8) properly cite data; (9) make reprocessed data and models Findable, Accessible, Interoperable, and Reusable (FAIR) and share; and (10) make pipelines and code FAIR and share. These rules are intended as a guide for researchers wanting to make use of available data and to increase data reuse and reproducibility.

This is a PLOS Computational Biology Methods paper.

Introduction

In recent years, with the advent of high-throughput sequencing technologies, advances in microscopy, and the growth of single-cell technologies, biology is set to overtake other data-heavy disciplines such as astronomy in terms of data storage needs [1]. There has been a dramatic increase in the number and size of data sets deposited by individual labs on data storage servers like the Gene Expression Omnibus (GEO) and dbGaP [2,3] and made available by large consortium efforts such as The Cancer Genome Atlas (TCGA) [4], the Genotype-Tissue Expression (GTEx) project [5], Bgee [6], Human Cell Atlas [7], and ENCODE [8,9]. These resources provide rich and diverse data to the biology research community. For example, TCGA is made up of clinical information (e.g., smoking status), molecular analyte data (e.g., sample portion weight), and molecular characterization data (e.g., imaging data, omics data). The additional commitment by funders, publishers, and individual scientists to make data sets (especially those funded by taxpayers and donors) publicly available has rapidly increased opportunities for data reuse by the broader scientific community. From January 2023, the NIH requires researchers to share data generated with NIH funds under the new Data Management and Sharing Policy [10], further increasing the availability of public data. With this growing data deluge, it seemed timely to provide guidelines on why, when, and how investigators can incorporate these valuable resources into their research programs. Biological data reuse is good for science, is cost efficient, and is the right thing to do in order to extract the greatest societal impact from the samples and funding that patients, donors, and taxpayers generously provide.
Here, we draw upon our collective experience and expertise as molecular biologists, computational biologists, bioinformaticians, data scientists, and software developers to discuss Ten Simple Rules for using public data with the intention that it will serve as a useful guide (Fig 1). While this article focuses on computational biology and bioinformatics, the principles outlined here generally apply to other domains as well.

Fig 1. These 10 simple rules for using public data span checklist items for pre, during, and post data download.

Rule 1: Use public biological data purposefully in your research

Reasons for reusing public biological research data include cost-effectiveness and efficiency, access to data sets that would be difficult or impossible to regenerate, an increased sense of community, greater transparency and clarity of research, ability to retest and validate a shared data set, support for recognition of data ownership, and an increase in citations [11]. Critically, public biological data reuse can also promote workforce diversity and research inclusivity worldwide [12–14]. Use of public biological data provides individuals with a unique opportunity to leverage existing data sets in combination with other public data or their own. This allows for additional contexts to be explored within your own research. Comparing your research findings with a public data set analyzed in the same way can add nuance or clarity to results, either by confirming what was observed (i.e., validation) or by producing different results that prompt you to re-evaluate your approach or its applicability across cohorts. For example, you can validate current findings in public data, expand the number of samples, age groups, or other parameters of your current analysis, or explore molecular changes in a different system. Assessing public data can also help place your research in the context of current research that uses public data, allowing comparisons to other work in the field.

Incorporating public biological data in your research project can also allow you to ask novel questions that the data generator may not have originally foreseen, further moving your own research and the field in new directions. Outside of expanding your research through exploration of new contexts, public data sets can also be used to generate hypotheses and/or guide future research by refining hypotheses for preliminary analyses. However, be mindful of data being appropriate for answering your hypotheses as outlined in Rule 2. With computational biology becoming ubiquitous in life science research, you can also use public data to drive novel method development and build modeling frameworks for systems biology. In fact, there have been efforts to standardize data sets for ease of use in such analysis [15]. In addition to improving your analysis, using public data also provides an opportunity to practice reproducible and interoperable research and further develop professional data science skills [11]. However, it is important to avoid the “sins of methodological research” (i.e., selective reporting, lack of replication studies, poor design of comparison studies, publication bias) [16] and therefore we suggest ensuring your research team has the proper methodological expertise to properly use and interpret public biological data sets.

In short, novel studies do not always require new data. Research that includes data reuse not only offers all of the benefits mentioned here but can also support community building and greater impact: Milham and colleagues [17] found that neuroimaging research making use of public data was cited at the same or higher frequency in higher impact journals as compared to research using only self-generated data (this is expanded upon in Rule 8). By using public biological data with care, you can bring these benefits to your own research.

Rule 2: Evaluate data carefully for your use case

Before we get down to the business of downloading the data, we need to make sure we access appropriate data. As scientists, we are interested in finding signals and/or patterns in our data. However, patterns in a data set can arise from many different variables. Therefore, it is essential to carefully evaluate the available data for your particular use case; otherwise, the results obtained could be wrong and/or misleading. It is critical to examine the metadata (the data describing the data set) before downloading the actual data. Some important metadata variables to consider (this list is by no means exhaustive) relate to the (1) nature of the sample (e.g., the specific animal model or cell line(s), genotype, age, sex); (2) sample collection data (e.g., the type of sample collected, time points of collection); (3) platforms used (e.g., the sequencing or imaging platform); and (4) data quality metrics (e.g., sample size, groups of comparison). These variables can dramatically influence downstream analysis and interpretation and should therefore be considered before choosing a data set to use. For more details, see Rules 4 and 5 in “Ten simple rules for providing effective bioinformatics research support” [18].

Once the data set is deemed appropriate for your analytical goals, it is key to check for the confounding effects of metadata variables that might impact the statistical model and cause you to reach wrong conclusions. This includes reviewing data distributions and sampling as well as performing exploratory analysis and visualizations such as principal component analysis (PCA) and correlation analysis. “Ten simple rules for initial data analysis” [19] provides excellent pointers for performing initial data screening, including how to craft a reproducible plan and pitfalls to avoid.
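As a minimal illustration of screening metadata for confounding before any modeling, the sketch below cross-tabulates a grouping variable against a covariate and flags the extreme case in which every group maps to a single covariate level. The sample records, the field names (condition, batch, sex), and both function names are hypothetical and are not taken from any specific repository; a real screen would also include the distributional checks, PCA, and correlation analysis described above.

```python
from collections import Counter

def cross_tabulate(samples, group_key, covariate_key):
    """Count samples for every (group, covariate level) combination."""
    return Counter((s[group_key], s[covariate_key]) for s in samples)

def fully_confounded(samples, group_key, covariate_key):
    """Return True when the covariate varies across the data set but every
    group contains only one covariate level, so the two cannot be separated."""
    levels_by_group = {}
    for s in samples:
        levels_by_group.setdefault(s[group_key], set()).add(s[covariate_key])
    all_levels = set().union(*levels_by_group.values())
    return len(all_levels) > 1 and all(len(v) == 1 for v in levels_by_group.values())

# Hypothetical sample metadata, as might be parsed from a repository record.
samples = [
    {"id": "S1", "condition": "control", "batch": "run1", "sex": "F"},
    {"id": "S2", "condition": "control", "batch": "run1", "sex": "M"},
    {"id": "S3", "condition": "treated", "batch": "run2", "sex": "F"},
    {"id": "S4", "condition": "treated", "batch": "run2", "sex": "M"},
]

batch_table = cross_tabulate(samples, "condition", "batch")
batch_confounded = fully_confounded(samples, "condition", "batch")
sex_confounded = fully_confounded(samples, "condition", "sex")

for (group, level), count in sorted(batch_table.items()):
    print(f"{group}\t{level}\t{count}")
print("condition vs batch fully confounded:", batch_confounded)
print("condition vs sex fully confounded:", sex_confounded)
```

In this toy example, condition and batch cannot be separated, whereas sex is balanced across conditions. A data set with this structure would need either a different design or explicit acknowledgment of the limitation.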

Rule 3: Be aware of, and adhere to, data reuse requirements, embargoes, etc.

Researchers looking to reuse controlled-access data (elaborated more in Rule 4) assume the responsibility (along with their legal and financial administrative offices) to protect the rights and welfare of the original participants. As such, data repositories with controlled-access data sets develop Data Access Request (DAR) processes to appropriately restrict access. DAR processes usually include agreeing to terms of access for the requested data, a Data Use Certification Agreement (DUA) between the requester’s institution and the repository, a description of the intended use, and an acknowledgment agreement. If a data set is available in a data portal (e.g., GEO [2], SRA [20]) that does not require controlled access, it is considered open access and can be freely downloaded through the portal. Even under these circumstances, the original data generators may still request acknowledgment upon use (a practice that should be followed whether or not the generators have requested it). A third possibility for acquiring controlled-access data for reuse is to contact the corresponding author of the paper directly to request data. However, this method can prove difficult for many reasons related to the author’s availability to fulfill the request (e.g., protected time to fulfill the request, ability to transfer the data, willingness to comply). Therefore, independent of the method of requesting access to restricted data, it is important to be patient, but persistent. Recently, many journals have begun implementing data availability manuscript sections that outline the portal where the data is stored and can be accessed.

Regardless of how the data is acquired, it is important to be aware of the legal, regulatory, and security obligations associated with its use. For example, data licenses might be in place to protect the original data generator’s rights by permitting secondary parties to reuse the data according to specific restrictions. Because countries have different regulations for data reuse [21], data licenses may clarify the uncertainty of requirements for data reusers. It is important to understand by which license or waiver the data to be reused is governed. In an effort to promote open access to data, many journals and data repositories operate under Creative Commons (CC BY 4.0) licenses that allow the freedom to share and adapt data as long as the original data generators are acknowledged for their contribution. For example, PLOS ONE stipulates that if the data associated with a published article in their journal is deposited in a repository with a licensing agreement, the agreement cannot be more restrictive than CC BY [22]. Researchers should identify which, if any, data license is governing the data they wish to reuse and respect any limitations associated with it.

Some data generators place an embargo on the data they generate in order to ensure they have time to publish initial findings. The data set may be submitted to a public repository but unavailable for download or publication for a certain length of time or until a specified date. Additionally, with the increased prevalence of pre-printing articles, data generators may wish to withhold their data sets until their article is published in a peer-reviewed journal. Being aware of data access and publication restrictions associated with the data set of interest and understanding who sets data restrictions can be complicated (e.g., funders, consortia, journals, individual labs), but convention, or in some cases the legal requirement, is to follow the stated restrictions.

Rule 4: Be aware of ethical considerations like confidentiality and protected health information

Privacy and ethics are critical in data sharing, and it is the responsibility of researchers to protect the former and observe the latter [23]. With regard to data reuse, you must verify that the data you are planning to reuse was ethically collected or generated and was collected for a purpose in alignment with additional applications, and you must ensure that your study does not use the data irresponsibly or immorally. It is our duty as scientists and citizens to move science forward while protecting sensitive data and presenting studies fairly. Secondary analysis of public data requires an Institutional Review Board (IRB) exemption confirming it does not fall within the regulatory definition of human subject research (see federal regulations on human subjects research protections (45 CFR 46.101(2)(b))) and a full review otherwise (e.g., software as a medical device where you are using AI or machine learning for diagnosis). Special considerations need to be made for data reuse:

Ethics by data type: The ethics of sharing and using data depend heavily on the data type. For example, cell lines, animal models, and microbial data are typically very low risk for privacy or ethical issues; that is not to say, however, that they have no risk (e.g., sharing pathogenic sequence data or alluding to locations for endangered species that could increase the risk of poaching) [24]. Ethical concerns are most common when working with human data.

HIPAA, PHI, and patient de-identification: The Health Insurance Portability and Accountability Act (HIPAA) of 1996 Privacy Rule is a federal law regarding protected health information (PHI) for individuals and their access to their own health information, as well as the specific permissible use and disclosure of PHI with other organizations [25]. Any disclosure of PHI that violates HIPAA, whether purposeful or accidental, can lead to hefty fines. Health data used in research needs to be de-identified in a manner that makes re-identification highly unlikely unless that is the willful goal of the project. Genomics data is particularly susceptible to re-identification due to the uniquely identifying nature of the data itself, especially when coupled with geographic metadata such as collection site. Well-constructed data usage agreements and controlled-access repositories can mitigate re-identification risks (see Rule 3).

Consent: Consent is an agreement between healthcare groups and participants that adheres to the Privacy Rule and HIPAA authorizations. For example, the GTEx Live Donor Informed Consent Template (BBRB-PM-0018 [26]) states that access to participant PHI is excluded, that the generated data will be saved for many years, that it will be available to scientists around the world, and that data may be used broadly for medical research. It is important to ensure that data reuse does not violate any initially obtained consent.

Ethics specific to how/where data were obtained: Data may be obtained directly from an individual lab, or queried from a private or public repository. Publicly available data that can be downloaded by anyone tend to be at the lowest risk for violating privacy and are the easiest to access (e.g., TCGA [4] and GTEx [5] gene expression data). However, when data is received from another investigator through direct sharing or from a controlled-access repository, it becomes your obligation to secure that data and not share it further with unauthorized individuals [24].

Ethical design for data reuse: When reusing data, ensure fairness and equality in your representation of the data, including but not limited to ancestry and sex. For example, individuals of European descent have been disproportionately overrepresented in genetic studies: one study found that as of 2018, individuals in GWAS catalogs were 78% European, 10% Asian, 2% African, 1% Hispanic, and <1% for all other races [27]. In GTEx as of 2022, 84.6% of donors were of white origin, 12.9% of African American origin, 1.3% of Asian origin, and 1.1% of unknown origin, with no statistic given for Hispanic/Latino origin [5]. Additionally, sex differences impact every area of health but have been largely disregarded in study design, and study populations have been heavily unbalanced, especially in pharmaceutical trials where women were previously excluded entirely due to potential pregnancies during trials [28]. Women are now included in study designs, but sex specificity has not been accounted for, leading to vastly more adverse drug reactions in women due to inappropriate drug and dose recommendations [29]. Careful consideration of these factors will lead to more rigorous and accurate results and avoid perpetuating these issues in research.

Rule 5: Plan for needed data storage and compute requirements

Ask yourself, is my data “genomical” in size [30]? It is if you’re working in genomics, but regardless of your data, the amount of data being integrated in research is growing dramatically, and the cost of storage and computation is rising at a rate not seen previously. Knowledge is power, and in this case, knowing which resources you will need before you need them will benefit your work most. Data storage and computing hardware requirements should be determined and documented prior to downloading any data sets. This avoids potentially time-consuming and expensive surprises by: (1) identifying gaps in the current infrastructure; and (2) allowing expert support staff an opportunity to investigate viable solutions, where possible, in a timely manner. While needs vary by the individual situation and domain, here, we discuss various criteria to determine your needs. Data size, type, level of access, and security are the major considerations for where data needs to be stored. All invested parties who will retrieve, process, and consume the data need to be identified and involved in answering the following questions:

Data size:

What is the estimated size of data to be retrieved?

How many individual files are included?

How much data are expected to be produced during processing?

Do you need backups of the original or processed data?

Data type:

Are the data types large or small?

Are the file types binary or textual?

Are the files compressed?

Access requirements:

Who needs (or does not need) access to the data?

What is the level of access required?

How often will access be needed?

How often will reanalysis be necessary?

Do any of the users require (or prefer) a data sharing platform with a graphic interface for ease of access?

Are there any institutional policies or approvals to be considered prior to granting access?

Do external collaborators need to be provided access to the institutional setup?

Data security [31]:

Does the data include PHI?

Does the access need to be restricted?

What is the minimum level of data security required?

How will the data be secured?

Will the data need to be deleted after a certain time period or event? Why, when, and how?

Are there any institutional policies or approvals to be considered?

Who will supervise data security?

How often (e.g., half-yearly, annually) will the adopted policies and implementations need to be reviewed and verified?

Answers to the above questions will facilitate discussions regarding optimal data storage option(s) and dictate if other institutional entities such as IT or the office of sponsored research need to be involved. Further, costs associated with data storage and reanalysis needs should also be considered when selecting storage locations. Commonly used data storage locations are personal computer(s), High Performance Computing (HPC) environments, and commercial cloud storage services (e.g., Dropbox, Box, AWS). Depending on the data, it may need to be split based on type and stored in a data-type-specific location best suited for its purpose. For example, large data such as FASTQ and VCF files may need to be stored at a location where they are accessible from an HPC environment for downstream processing, whereas spreadsheets and text documents may need to be stored in a cloud storage service (e.g., Box) to facilitate accessibility for non-computational team members.
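The data size questions above can be turned into a rough, back-of-the-envelope estimate. The sketch below is one hedged way to do so: the function names, the default processing multiplier of 2, and the single backup copy are illustrative assumptions rather than recommendations, and should be replaced with values appropriate to your own pipeline and institutional policy.

```python
import shutil

def estimate_storage_gb(raw_gb, processing_multiplier=2.0, backup_copies=1):
    """Rough estimate of total storage in GB.

    raw_gb: size of the files to download.
    processing_multiplier: intermediate and output data as a multiple
        of the raw size (an assumption; depends on the pipeline).
    backup_copies: number of additional full copies to keep (an assumption).
    """
    working_set = raw_gb * (1 + processing_multiplier)
    return working_set * (1 + backup_copies)

def free_space_gb(path="."):
    """Free space on the file system that holds `path`, in GB (10**9 bytes)."""
    return shutil.disk_usage(path).free / 1e9

needed = estimate_storage_gb(raw_gb=500)  # 500 GB of raw files, default assumptions
available = free_space_gb(".")
print(f"Estimated need: {needed:.0f} GB; currently free: {available:.0f} GB")
if needed > available:
    print("Insufficient space here; consider HPC or cloud storage before downloading.")
```

Under these assumptions, 500 GB of raw data grows to a 1,500 GB working set and 3,000 GB once one backup copy is kept, which is the kind of gap worth discovering before the download starts.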

Computing or hardware requirements will depend on the type of data and the processing planned [32]. A personal computer might suffice to process small data sets, but access to HPC or cloud computing (e.g., AWS, Microsoft Azure, Google Cloud) is often needed to process large data sets. HPCs and cloud computing offer several advantages such as fast processors, multicore chips, higher memory resources, and graphics processing units (GPU), which enable parallelization and scalability of a large number of jobs.

Rule 6: Know what you are downloading

As outlined in Rule 2, downstream analysis can be affected by how the data was collected and processed. This becomes an even greater problem when trying to integrate multiple public data sets, each collected and processed separately. So, be mindful of the processing differences between them. In recent years, a number of projects have been established that use and share common data processing pipelines across data collections, allowing researchers to more easily combine data for more complex analyses. For example, recount3 [33] is a uniformly processed resource of hundreds of thousands of human and mouse RNA-Seq data sets designed to facilitate meta-analysis and cross-study comparisons. Another great resource is the Bgee [6] database that contains “normal,” healthy, wild-type expression data across 52 different species thereby providing a comparable reference of gene expression by anatomical entities within and between species. A third example is BioDataome [34]. BioDataome has approximately 5,600 human and mouse expression and methylation data sets pre-processed in an analogous manner to allow for direct comparisons between data. All of these data repositories provide R packages facilitating programmatic data download (see Rule 7). Collections of equivalently processed data are becoming more of the norm, but data sets of interest may not be included in them. When this is the case, it may be necessary to reprocess the data to minimize the downstream impact of differences in upstream processing.

Rule 7: Download data programmatically; verify data integrity

Data downloads should be performed in a programmatic manner for consistency, scalability, and reliability. Several tools have been developed to provide direct programmatic access to large public databases (e.g., SRA [20], GEO [2], ENA [35], TCGA [4]) and they should be used when possible. One example of these tools is the SRA Toolkit [36], which contains a number of commands linked to data downloads (e.g., “fasterq-dump” may be used to download FASTQ files and “vdb-validate” to check the integrity of SRA data sets). Additional examples include database-specific computational packages/libraries such as the Bioconductor packages “recount3” [33] and “GenomicDataCommons” [37]. A key step in the data download process is validating the checksum (typically the MD5 hash) provided in the hosting database to verify data integrity prior to data analysis and interpretation. While these checks can be performed manually, some tools, such as the Genomic Data Commons (GDC) Data Transfer Tool Client [37], will automatically validate MD5 checksums as part of the download process.
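When a download tool does not validate checksums itself, the check can be scripted. The following is a minimal sketch using only the Python standard library; the helper names md5sum and verify_md5 are our own, and the small temporary file merely stands in for a downloaded file whose expected MD5 value would normally come from the hosting database.

```python
import hashlib
import os
import tempfile

def md5sum(path, chunk_size=1 << 20):
    """Compute the MD5 hex digest of a file, reading it in chunks
    so that large files do not need to fit in memory."""
    digest = hashlib.md5()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def verify_md5(path, expected):
    """Compare a file's MD5 digest with the value published by the repository."""
    return md5sum(path) == expected.strip().lower()

# Demonstration on a small temporary file standing in for a downloaded file.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"hello")
    demo_path = tmp.name

demo_digest = md5sum(demo_path)
demo_ok = verify_md5(demo_path, "5D41402ABC4B2A76B9719D911017C592\n")
os.remove(demo_path)
print(demo_digest, demo_ok)
```

Normalizing case and whitespace in the expected value is a small design choice that avoids false mismatches when checksums are copied from a web page or a checksum file.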

Depending on the number of files and tool functionality and design, customized scripts or workflows (e.g., Snakemake [38], Nextflow [39]) may be needed for scalability. When possible, publicly available workflows that adopt workflow management systems are recommended. One example is “fetchngs” [40] provided by nf-core [41]. This Nextflow pipeline [39] uses tools such as SRA-tools [36] to download FASTQ files and metadata based on a user-provided accession ID list. When performing downloads in this way, documenting the sample identifiers and database sources becomes a critical step for reproducibility. For studies deposited in databases such as GEO [2], recording the GEO accession number along with sample identifiers may be sufficient; however, other data sources are updated on a continuous basis (e.g., Genotype-Tissue Expression (GTEx) Portal [5]). In such cases, the database version (e.g., GTEx Analysis Release V8, dbGaP Accession phs000424.v8.p2) and the date the download is performed should be recorded and reported in publications.
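One lightweight way to capture these details is to write a small provenance record alongside each download. The sketch below is illustrative only: the function name, the field names, the sample identifiers, and the download date are hypothetical, while the release and accession strings are the GTEx example given above.

```python
import json
from datetime import date

def provenance_record(source, accession, sample_ids, version=None, accessed=None):
    """Collect the details needed to report where and when data were obtained."""
    return {
        "source": source,
        "accession": accession,
        "version": version,
        "sample_ids": sorted(sample_ids),
        # Default to today's date when no explicit download date is given.
        "date_accessed": (accessed or date.today()).isoformat(),
    }

record = provenance_record(
    source="GTEx Portal",
    accession="phs000424.v8.p2",
    version="GTEx Analysis Release V8",
    sample_ids=["SAMPLE-B", "SAMPLE-A"],  # hypothetical identifiers
    accessed=date(2023, 1, 15),           # hypothetical download date
)
manifest_json = json.dumps(record, indent=2)
print(manifest_json)
```

Storing such a record as a JSON file next to the downloaded data keeps the information needed for the methods section and the data citation in one place.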

These practices should also be applied to data types beyond sample data including, for example, genome references (e.g., genome/transcriptome FASTA files, annotations files). Checksums from genomic reference files should be validated and details linked to the files should be recorded, including the FASTA file type (e.g., primary assembly or entire genomic sequence that includes assembly patches and haplotypes), database name, assembly name, and version or release number. The same principles should be applied to other reference files such as GTF/GFF [42].

Rule 8: Do properly cite data

As with any journal article/publication information and resources used, any data generated by other researchers should be credited and properly cited. This practice benefits the original data generator by providing a tangible demonstration of value and impact beyond the initial data publication. Failing to cite the data source withholds credit from researchers in the same way that failing to cite a journal article does. Public data citations support better quality and more transparent science, making a compelling argument for other researchers to contribute their own data to public data repositories. This process also supports improved reproducibility and credibility for your own research.

When it comes to citing data, the field still lacks a gold standard. However, existing best practices include citing the original paper where the data was published or a digital object identifier (DOI) generated from a persistent database like Zenodo [43] or figshare [44]. In addition, consortium projects such as GTEx [5] and TCGA [4] often provide guidance on citation practices for their repositories. In an effort to increase the citability of public data and establish the data itself as a scientific output of value separate from the associated manuscript, many researchers now publish data sets independently from their associated publications. Journals for this purpose, such as Scientific Data, now exist [45]. If there is no guideline associated with the data set, in general the citation should include the generators, where the data was obtained from, accession numbers, the version number for the data (if applicable), and the date it was accessed.

Non-profit organizations such as DataCite and Crossref provide unique persistent identifiers (PIDs) to data sets to improve usage tracking and facilitate linking to the publication of origin [46]. Check whether the public data you are using in your analysis has a PID that can be cited or included in the methods section. In the future, PIDs may perhaps be linked to an individual’s ORCID iD to provide a standardized data citation approach. A study examining the relationship between public data availability and the citation rate of the original paper found that making data publicly available was associated with a 69% increase in citations [47]. Furthermore, investigators who share public data sets well see an increase in the impact of their own research. Articles with links to data repositories or that include PIDs are more highly cited than those without [24]. In summary, public data use benefits both the creator and the user.

Rule 9: Make your reprocessed data and models FAIR and share

All of the rules mentioned above are possible to adhere to because researchers made their data Findable, Accessible, Interoperable, and Reusable (FAIR) [48]. As a contributing member of the research ecosystem, you should pass it on, too! Make sure any additional data you generate, reuse, and integrate (i.e., data models) adhere to the FAIR principles. recount3 [33] and Bgee [6] are good examples of public data that have been reprocessed to correct for batch effects and made available under FAIR data principles. If your research is funded through the NIH, then you must adhere to a Data Management and Sharing Plan as outlined in the NIH policy starting in 2023 [10]. Recent research shows that only 6.8% of authors respond to requests for data sharing, dramatically reducing the impact of most data and the knowledge to be gained from its use [49]. To be FAIR:

Improve data findability: As highlighted in Rule 8, submit your data to stable open-access public data repositories that provide DOIs such as Zenodo [43] and figshare [44]. Personal, lab, group, or institute sites are not good long-term solutions. Socialize your data by sharing on social media platforms such as Twitter. Blogs and news articles on lab and/or institute sites and presenting at conferences where data are clearly identified with DOIs help others to discover useful data. By diversifying where and how you share with the community about your data, you cast the widest net in order to catch the attention of potential researchers who would also benefit from access to your data.

Improve data accessibility: Open access to publications is important to science and plays a critical role in reproducing and advancing science. Making your data readily accessible is equally important. Share your raw as well as processed data so that the analysis performed can be reproduced fully and with minimal effort.

Improve data interoperability: Interoperability refers to the ability of data from different sources to be integrated with minimal effort [48]. A good example is the Fast Healthcare Interoperability Resources (FHIR) standard for health care data exchange [50]. This becomes even more critical in studies where data from different sources are being reused, so make sure the data you provide is highly interoperable by including relevant metadata and adhering to appropriate and reasonable file and data conventions within the field.

Improve data reuse: Make your reused data reusable. Providing data in standard and popular formats goes a long way in making it reusable. Incomplete metadata and methods can severely limit the reuse (and usefulness) of data. Don’t skimp by providing the bare minimum data and metadata needed to satisfy the requirements of the granting body, governing body, journal, etc., that you’re looking to communicate your work through. Abstaining from providing all necessary assets to reproduce the work does a disservice to you, your colleagues, your lab and institute, and the scientific community. It is not just about the input and the output: There’s a whole bunch of research, development, refinement, knowledge, and other work done in between data input and output that needs to be captured, codified, and shared with the work itself.

Rule 10: Make your processing, pipelines, and code FAIR

Tools and methods used for the analysis and interpretation of public data sets should also adhere to the FAIR guidelines for coding and software development [51]. Many of the FAIR principles for data (outlined in Rule 9) are directly applicable to software, but others require modification for application to software [52]. For example, persistent identifiers should be generated and recorded for novel pipelines, software, and research tools. Findability of software should be linked to the traceability of the source code under version control (e.g., GitHub [53], GitLab [54], Bitbucket [55]) and reporting of appropriate metadata such as software versions. Similarly, software and code associated with an analysis should be accessible through repositories and (when appropriate) software archives such as CRAN [56], Bioconductor [57], PyPI [58], and Conda [59]. The same is true for software dependencies. Software containers (e.g., Docker [60], Singularity [61]) can also be implemented to enable software portability and analysis reproducibility. In the absence of containerized software, the necessary information for how to build and install a published tool should be provided.

Because software changes over time, the most up-to-date version may differ from the version that was published. Proper documentation and tagging (e.g., GitHub tags linked to released versions of software) allow researchers to find the exact package versions used during a study, thereby facilitating reproducible analyses. Continuous integration (the software development practice of automating builds, static analysis, and tests on code changes pushed to a central repository) can automate time-consuming tasks associated with pipeline and software development. Similarly, static code analysis tools in continuous integration pipelines can help automate source code quality analysis, allowing early identification of potential bugs, security vulnerabilities, performance issues, or deviations from project or organization coding guidelines.
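
To make the idea of automated tests concrete, the sketch below pairs a small pipeline function with two tests of the kind a continuous integration service could run on every pushed change. The function `counts_per_million` and both tests are hypothetical examples written for this illustration; they are not taken from any published pipeline. The tests use plain assertions, so a test runner such as pytest could collect them, but they can also be called directly.

```python
def counts_per_million(counts: dict[str, int]) -> dict[str, float]:
    """Scale raw counts so that the returned values sum to one million."""
    total = sum(counts.values())
    if total == 0:
        raise ValueError("cannot normalize: total count is zero")
    return {gene: value * 1_000_000 / total for gene, value in counts.items()}


def test_values_sum_to_one_million():
    """The normalized values should add up to one million."""
    result = counts_per_million({"geneA": 30, "geneB": 70})
    assert abs(sum(result.values()) - 1_000_000) < 1e-6


def test_all_zero_library_is_rejected():
    """An all-zero input should raise an error instead of dividing by zero."""
    try:
        counts_per_million({"geneA": 0})
    except ValueError:
        return
    raise AssertionError("expected ValueError for an all-zero library")
```

Small, fast tests like these give reviewers and future users a quick check that a change has not altered expected behavior.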

Inclusion of continuous integration and static code analysis tools allows for rapid feedback loops in code inspection, reducing the time reviewers need to spend reviewing as well as the time and funding spent on development and maintenance [62,63]. Journals are increasingly requiring that the code used for analysis be made available [64]. In addition to ensuring that your processing, pipelines, and code are FAIR, the steps above will help to ensure that code review is not an onerous task performed during the submission process and that your methods are reproducible for other scientists [65–67]. For more detailed information about how you can make your research more computationally reproducible, refer to [68].

Conclusion

In summary, biological data reuse is not only good for science but also the right thing to do in order to extract the greatest societal impact from the samples and funding that patients, donors, and taxpayers generously provide. Here, we covered Ten Simple Rules for data reuse spanning the periods before, during, and after data download. This paper serves as a guide for both data users and generators in the community.

Funding Statement

VHO, EJW, AUA, LI, EAW, and BNL are supported by U54OD030167 (www.nih.gov). VHO, TCH, and BNL are supported by R00HG009678 (www.nih.gov). VHO and BNL are supported by R03OD030604 (www.nih.gov). JHW is supported by T32GM008111 (www.nih.gov). JHW, TCH, and BNL are supported by UAB Lasseigne Lab Startup funds (www.uab.edu). AUO, BW, MG, AT, and EAW are supported by UAB Worthey Lab funds (www.uab.edu). BW and EAW are supported by UG1HD107688 (www.nih.gov). BW, MG, and EAW are supported by the CF Foundation (WORTHE19A0) (www.cff.org). LI is supported by UAB CIRC funds (www.uab.edu). AT, LI, EAW, and BNL are members of the UAB Biological Data Science (U-BDS) Core (RRID:SCR_021766) (www.uab.edu). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Navarro FCP, Mohsen H, Yan C, Li S, Gu M, Meyerson W, et al. Genomics and data science: an application within an umbrella. Genome Biol. 2019;20:109. doi: 10.1186/s13059-019-1724-1

- 2. Barrett T, Wilhite SE, Ledoux P, Evangelista C, Kim IF, Tomashevsky M, et al. NCBI GEO: archive for functional genomics data sets—update. Nucleic Acids Res. 2013;41:D991–5. doi: 10.1093/nar/gks1193

- 3. Mailman MD, Feolo M, Jin Y, Kimura M, Tryka K, Bagoutdinov R, et al. The NCBI dbGaP database of genotypes and phenotypes. Nat Genet. 2007;39:1181–1186. doi: 10.1038/ng1007-1181

- 4. The Cancer Genome Atlas Program. In: National Cancer Institute [Internet]. 13 Jun 2018. [cited 2022 Jun 6]. Available from: https://www.cancer.gov/about-nci/organization/ccg/research/structural-genomics/tcga.

- 5. GTEx Portal. [cited 2022 Apr 26]. Available from: https://gtexportal.org/home/tissueSummaryPage.

- 6. Bastian FB, Roux J, Niknejad A, Comte A, Fonseca Costa SS, de Farias TM, et al. The Bgee suite: integrated curated expression atlas and comparative transcriptomics in animals. Nucleic Acids Res. 2021;49:D831–D847. doi: 10.1093/nar/gkaa793

- 7. Regev A, Teichmann SA, Lander ES, Amit I, Benoist C, Birney E, et al. The Human Cell Atlas. elife. 2017:6. doi: 10.7554/eLife.27041

- 8. ENCODE Project Consortium. An integrated encyclopedia of DNA elements in the human genome. Nature. 2012;489:57–74. doi: 10.1038/nature11247

- 9. Luo Y, Hitz BC, Gabdank I, Hilton JA, Kagda MS, Lam B, et al. New developments on the Encyclopedia of DNA Elements (ENCODE) data portal. Nucleic Acids Res. 2020;48:D882–D889. doi: 10.1093/nar/gkz1062

- 10. Kozlov M. NIH issues a seismic mandate: share data publicly. Nature. 2022;602:558–559. doi: 10.1038/d41586-022-00402-1

- 11. Boté J-J, Termens M. Reusing Data Technical and Ethical Challenges. DESIDOC Journal of Library & Information Technology. 2019:329–337. doi: 10.14429/djlit.39.06.14807

- 12. Parker M, Bull S. Sharing Public Health Research Data: Toward the Development of Ethical Data-Sharing Practice in Low- and Middle-Income Settings. J Empir Res Hum Res Ethics. 2015;10:217–224. doi: 10.1177/1556264615593494

- 13. World Health Organization. Sharing and reuse of health-related data for research purposes: WHO policy and implementation guidance. World Health Organization. 2022:p. v, 18 p.

- 14. Genomic Data Science Community Network. Diversifying the genomic data science research community. Genome Res. 2022. doi: 10.1101/gr.276496.121

- 15. Introduction. In: Alevin-fry requant [Internet]. [cited 2022 May 24]. Available from: https://combine-lab.github.io/quantaf/.

- 16. Boulesteix A-L, Hoffmann S, Charlton A, Seibold H. A replication crisis in methodological research? Significance. 2020;17:18–21. doi: 10.1111/1740-9713.01444

- 17. Milham MP, Craddock RC, Son JJ, Fleischmann M, Clucas J, Xu H, et al. Assessment of the impact of shared brain imaging data on the scientific literature. Nat Commun. 2018;9:2818. doi: 10.1038/s41467-018-04976-1

- 18. Kumuthini J, Chimenti M, Nahnsen S, Peltzer A, Meraba R, McFadyen R, et al. Ten simple rules for providing effective bioinformatics research support. PLoS Comput Biol. 2020;16:e1007531. doi: 10.1371/journal.pcbi.1007531

- 19. Baillie M, le Cessie S, Schmidt CO, Lusa L, Huebner M. Topic Group “Initial Data Analysis” of the STRATOS Initiative. Ten simple rules for initial data analysis. PLoS Comput Biol. 2022;18:e1009819. doi: 10.1371/journal.pcbi.1009819

- 20. Leinonen R, Sugawara H, Shumway M. International Nucleotide Sequence Database Collaboration. The sequence read archive. Nucleic Acids Res. 2011;39:D19–D21. doi: 10.1093/nar/gkq1019

- 21. Labastida I, Margoni T. Licensing FAIR data for reuse. Data Intelligence. 2020;2:199–207. doi: 10.1162/dint_a_00042

- 22. PLOS ONE. [cited 2022 Jun 13]. Available from: https://journals.plos.org/plosone/s/data-availability.

- 23. Bonomi L, Huang Y, Ohno-Machado L. Privacy challenges and research opportunities for genomic data sharing. Nat Genet. 2020;52:646–654. doi: 10.1038/s41588-020-0651-0

- 24. Byrd JB, Greene AC, Prasad DV, Jiang X, Greene CS. Responsible, practical genomic data sharing that accelerates research. Nat Rev Genet. 2020;21:615–629. doi: 10.1038/s41576-020-0257-5

- 25. Office for Civil Rights (OCR). Summary of the HIPAA Privacy Rule. [cited 2022 Apr 26]. Available from: https://www.hhs.gov/hipaa/for-professionals/privacy/laws-regulations/index.html.

- 26. GTEx Informed Consent Template. [cited 2022 Apr 26]. Available from: https://biospecimens.cancer.gov/resources/sops/library.asp.

- 27. Sirugo G, Williams SM, Tishkoff SA. The Missing Diversity in Human Genetic Studies. Cell. 2019;177:26–31. doi: 10.1016/j.cell.2019.02.048

- 28. Oertelt-Prigione S, Mariman E. The impact of sex differences on genomic research. Int J Biochem Cell Biol. 2020;124:105774. doi: 10.1016/j.biocel.2020.105774

- 29. Zucker I, Prendergast BJ. Sex differences in pharmacokinetics predict adverse drug reactions in women. Biol Sex Differ. 2020;11:32. doi: 10.1186/s13293-020-00308-5

- 30. Stephens ZD, Lee SY, Faghri F, Campbell RH, Zhai C, Efron MJ, et al. Big Data: Astronomical or Genomical? PLoS Biol. 2015;13:e1002195. doi: 10.1371/journal.pbio.1002195

- 31. Hart EM, Barmby P, LeBauer D, Michonneau F, Mount S, Mulrooney P, et al. Ten Simple Rules for Digital Data Storage. PLoS Comput Biol. 2016;12:e1005097. doi: 10.1371/journal.pcbi.1005097

- 32. Brandies PA, Hogg CJ. Ten simple rules for getting started with command-line bioinformatics. PLoS Comput Biol. 2021;17:e1008645. doi: 10.1371/journal.pcbi.1008645

- 33. Wilks C, Zheng SC, Chen FY, Charles R, Solomon B, Ling JP, et al. recount3: summaries and queries for large-scale RNA-seq expression and splicing. bioRxiv. 2021. doi: 10.1186/s13059-021-02533-6

- 34. Lakiotaki K, Vorniotakis N, Tsagris M, Georgakopoulos G, Tsamardinos I. BioDataome: a collection of uniformly preprocessed and automatically annotated datasets for data-driven biology. Database. 2018;2018. doi: 10.1093/database/bay011

- 35. EMBL-EBI. European Nucleotide Archive. [cited 2022 Jun 6]. Available from: https://www.ebi.ac.uk/ena/browser/home.

- 36. Sequence Read Archive Toolkit. [cited 2022 Jun 6]. Available from: https://trace.ncbi.nlm.nih.gov/Traces/sra/sra.cgi?view=software.

- 37. Morgan MT, Davis SR. GenomicDataCommons: a Bioconductor Interface to the NCI Genomic Data Commons. doi: 10.1101/117200

- 38. Mölder F, Jablonski KP, Letcher B, Hall MB, Tomkins-Tinch CH, Sochat V, et al. Sustainable data analysis with Snakemake. F1000Res. 2021;10:33. doi: 10.12688/f1000research.29032.2

- 39. Di Tommaso P, Chatzou M, Floden EW, Barja PP, Palumbo E, Notredame C. Nextflow enables reproducible computational workflows. Nat Biotechnol. 2017;35:316–319. doi: 10.1038/nbt.3820

- 40. Patel H, Beber ME, Han DW, Ewels P, Espinosa-Carrasco J, Bot N-C, et al. nf-core/fetchngs: nf-core/fetchngs v1.5—Copper Cat. 2021. doi: 10.5281/zenodo.5746702

- 41. Ewels PA, Peltzer A, Fillinger S, Patel H, Alneberg J, Wilm A, et al. The nf-core framework for community-curated bioinformatics pipelines. Nat Biotechnol. 2020;38:276–278. doi: 10.1038/s41587-020-0439-x

- 42. GFF3—GMOD. [cited 2022 Jun 6]. Available from: http://gmod.org/wiki/GFF3.

- 43. European Organization for Nuclear Research, OpenAIRE. Zenodo CERN. 2013. doi: 10.25495/7GXK-RD71

- 44. Figshare. [cited 2022 Jun 6]. Available from: https://figshare.com/.

- 45. van den Berghe GJS-ASV, editor. Scientific Data. Nature Publishing Group. 2014-Current.

- 46. Pierce HH, Dev A, Statham E, Bierer BE. Credit data generators for data reuse. In: Nature Publishing Group UK [Internet]. 4 Jun 2019. [cited 2022 Jun 6]. doi: 10.1038/d41586-019-01715-4

- 47. Piwowar HA, Day RS, Fridsma DB. Sharing detailed research data is associated with increased citation rate. PLoS ONE. 2007;2:e308. doi: 10.1371/journal.pone.0000308

- 48. Wilkinson MD, Dumontier M, Aalbersberg IJJ, Appleton G, Axton M, Baak A, et al. The FAIR Guiding Principles for scientific data management and stewardship. Sci Data. 2016;3:160018. doi: 10.1038/sdata.2016.18

- 49. Gabelica M, Bojčić R, Puljak L. Many researchers were not compliant with their published data sharing statement: mixed-methods study. J Clin Epidemiol. 2022. doi: 10.1016/j.jclinepi.2022.05.019

- 50. Index—FHIR v4.3.0. [cited 2022 Jun 10]. Available from: http://hl7.org/fhir/index.html.

- 51. Lamprecht A-L, Garcia L, Kuzak M, Martinez C, Arcila R, Martin Del Pico E, et al. Towards FAIR principles for research software. Data Sci. 2020;3:37–59. doi: 10.3233/ds-190026

- 52. Jiménez RC, Kuzak M, Alhamdoosh M, Barker M, Batut B, Borg M, et al. Four simple recommendations to encourage best practices in research software. F1000Res. 2017:6. doi: 10.12688/f1000research.11407.1

- 53. Github. In: Github [Internet]. [cited 2022 Jun 6]. Available from: https://github.com/.

- 54. Gitlab. In: Gitlab [Internet]. [cited 2022 Jun 6]. Available from: https://about.gitlab.com/.

- 55. Bitbucket. In: Bitbucket [Internet]. [cited 2022 Jun 6]. Available from: https://bitbucket.org/product/.

- 56. The Comprehensive R Archive Network. [cited 2022 Jun 6]. Available from: https://cran.r-project.org/.

- 57. Bioconductor—Home. [cited 2022 Jun 6]. Available from: https://bioconductor.org/.

- 58. PyPI · The Python Package Index. In: PyPI [Internet]. [cited 2022 Jun 6]. Available from: https://pypi.org/.

- 59. Conda—Conda documentation. [cited 2022 Jun 6]. Available from: https://docs.conda.io/en/latest/.

- 60. Docker. [cited 2022 Jun 6]. Available from: https://www.docker.com/.

- 61. SingularityCE. In: Sylabs [Internet]. 31 Mar 2022 [cited 2022 Jun 6]. Available from: https://sylabs.io/singularity/.

- 62. Ferenc K, Otto K, de Oliveira Neto FG, López MD, Horkoff J, Schliep A. Empirical study on software and process quality in bioinformatics tools. bioRxiv. 2022:p. 2022.03.10.483804. doi: 10.1101/2022.03.10.483804

- 63. Georgeson P, Syme A, Sloggett C, Chung J, Dashnow H, Milton M, et al. Bionitio: demonstrating and facilitating best practices for bioinformatics command-line software. Gigascience. 2019:8. doi: 10.1093/gigascience/giz109

- 64. Cadwallader L, Mac Gabhann F, Papin J, Pitzer VE. Advancing code sharing in the computational biology community. PLoS Comput Biol. 2022;18:e1010193. doi: 10.1371/journal.pcbi.1010193

- 65. Hunter-Zinck H, de Siqueira AF, Vásquez VN, Barnes R, Martinez CC. Ten simple rules on writing clean and reliable open-source scientific software. PLoS Comput Biol. 2021;17:e1009481. doi: 10.1371/journal.pcbi.1009481

- 66. Fungtammasan A, Lee A, Taroni J, Wheeler K, Chin C-S, Davis S, et al. Ten simple rules for large-scale data processing. PLoS Comput Biol. 2022;18:e1009757. doi: 10.1371/journal.pcbi.1009757

- 67. Balaban G, Grytten I, Rand KD, Scheffer L, Sandve GK. Ten simple rules for quick and dirty scientific programming. PLoS Comput Biol. 2021;17:e1008549. doi: 10.1371/journal.pcbi.1008549

- 68. Heil BJ, Hoffman MM, Markowetz F, Lee S-I, Greene CS, Hicks SC. Reproducibility standards for machine learning in the life sciences. Nat Methods. 2021;18:1132–1135. doi: 10.1038/s41592-021-01256-7