Abstract

Adequate near and intermediate visual capacity is important in performing everyday tasks, especially since smartphones and computers became part of our professional and recreational activities. The primary objective of this study was to review all available reading tests, both conventional and digital, and explore their integrated characteristics. A systematic review of the recent literature regarding reading charts was performed based on the PubMed, Google Scholar, and Springer databases between February and March 2021. Data from 11 descriptive and 24 comparative studies were included in the present systematic review. Clinical settings are still dominated by conventional printed reading charts; however, the most prevalent of them (i.e., Jaeger type charts) are not validated. Reliable reading capacity assessment is provided only by those that comply with the International Council of Ophthalmology (ICO) recommendations. Digital reading tests are gaining popularity both in clinical and research settings and are divided into standard computer-based applications that require installation on either a computer or a tablet (e.g., Advanced VISION Test) and web-based ones that require no installation (e.g., Democritus Digital Acuity Reading Test). It is evident that validated digital tests will prevail in future clinical or research settings, and it is up to ophthalmologists to select the one most compatible with their examination routine.

Keywords: digital reading chart, paper reading chart, presbyopia, low vision chart, reading acuity

INTRODUCTION

Adequate visual capacity is essential for the adaptation to the environment, contributing to our better perception of the world. All modern activities of daily living are vision-intensive. Adequate vision imposes no limits to the social, professional and personal objectives of the patient; within this context it should address tasks in the near, intermediate, and distant environment[1].

Current social and professional demands require perfect or almost perfect near and intermediate visual capacity, since the use of computers, tablets, and smartphones is an integral part of our lives.

Reading comprehension is defined as the ability to process text, understand its meaning, and integrate it with what the reader already knows. However, several factors interfere with reading capacity, the most prevalent being presbyopia[2].

Presbyopia is the outcome of age-related elasticity changes in the crystalline lens and its capsule. It manifests as a reduction in the amplitude of accommodation accompanied by an inability of the longitudinal muscle fibers of the ciliary body to contract efficiently[3]–[7]. The number of people with presbyopia is predicted to reach 1.8 billion by 2050[8]–[10].

Further to presbyopia, a series of other conditions interfere with normal intermediate and near visual capacity. Among them are cataract, age-related macular degeneration (AMD), glaucoma, and systemic diseases that affect the physiology of the retina such as diabetic retinopathy (DR) and hypertension[2].

It becomes obvious that since the reduction of the intermediate and near vision is associated with numerous ocular and systemic diseases, the evaluation of the reading capacity of the patient is part of the routine ophthalmological examination both for adults and adolescents. However, no common methodology exists in the quantification of reading capacity. A series of reading tests have been developed, both printed and digital, which introduce reading parameters that are supposed to reflect reading capacity.

Within this context, the primary objective of this study was to conduct a systematic review of all available reading tests, both conventional and digital, and explore their integrated characteristics.

MATERIALS AND METHODS

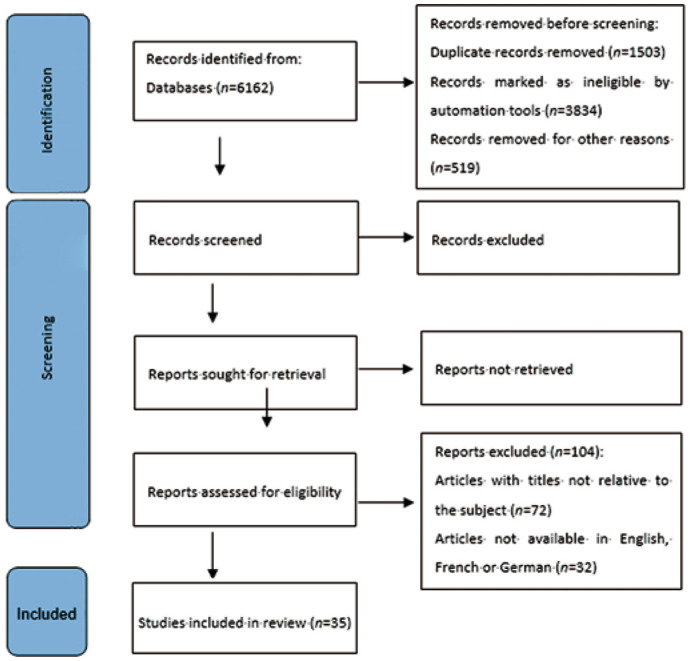

This systematic review followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) statement checklist. The study design followed the Problem/Population, Intervention, Comparison, Outcome (PICO) framework, which defined the selection criteria. Participants included patients with near distance reading difficulties before or after cataract surgery. The reading tests were studied in both normal vision populations and low vision patients. The intervention consisted of cataract surgery or reading glasses. Some of the studies compared digital reading charts with conventional ones. The outcome concerned the agreement between digital and paper reading charts and their application in everyday life and clinical routine.

The systematic search for relevant studies was performed by two independent reviewers based on PubMed, Google Scholar and Springer databases using the following search terms: digital chart AND paper chart, reading acuity (RA), presbyopia AND digital chart, computer AND reading test, reading chart AND near vision AND electronic devices AND low vision chart.

The search was conducted between February and March 2021. Search filters and language restrictions were not used in the initial search. The results were checked and only articles with titles relevant to the subject were selected. Both comparative and descriptive studies were included in this review. Articles not available in English, French or German were excluded. When the eligible articles were not available in full text, abstracts were used as a source of information. Year of publication ranged from 1980 to 2020. All potential conflicts in the review process were resolved by a third senior reviewer (Labiris G). This article is a literature review that uses published data, so approval by a Scientific Board was not required.

RESULTS

In total, 35 articles (11 descriptive studies and 24 comparative studies) were included in the present systematic review (Table 1). Among them, 21 were prospective studies. Nine of the comparative studies dealt with digital charts, while eight studies examined near vision and its characteristics. Three of the studies compared different types of charts for near vision[11]–[48]. A PRISMA flow chart is shown in Figure 1.

Table 1. Study design.

| Studies | References | No. of studies |
| --- | --- | --- |
| Comparative studies | 11–18, 21, 24, 25, 27, 28, 33, 36, 37, 39, 41–46, 48 | 24 |
| Descriptive studies | 19, 20, 22, 23, 29, 30, 32, 34, 35, 38, 47 | 11 |
| Prospective studies | 11–18, 21, 24, 25, 33, 36, 39, 41–46, 48 | 21 |

Figure 1. PRISMA 2020 flow diagram for new systematic reviews.

Reading Parameters

The following are the most commonly used parameters in the assessment of reading capacity as suggested by the articles that were included in our systematic review.

Reading speed

Reading speed (RS), in words per minute (wpm), is calculated as RS = 60 × (10 − errors)/(time in seconds), where errors is the number of mistakes made by the patient in the current sentence and time is the patient's reading duration for that sentence[11]–[15]. However, the studies differed in how they measured RS. Rhiu et al[14] calculated RS as letters per minute; participants were asked to read the sentences first silently and then aloud, yielding a reading-only and a reading-and-speaking speed. Hirnschall et al[18] did not take reading errors into account, and patients were asked to read continuously without pausing. They also excluded sentences read at less than 80 wpm from the statistical evaluation, stating that 80 wpm is the minimum speed that allows comprehensive recreational reading.
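The RS formula at the start of this subsection can be illustrated with a minimal Python sketch. The function name and the `words_per_sentence` parameter are hypothetical; the default of 10 simply mirrors the constant in the formula, and the input checks are an added assumption rather than part of any reviewed test.

```python
def reading_speed_wpm(time_seconds, errors, words_per_sentence=10):
    """Reading speed in words per minute for a single sentence.

    Implements RS = 60 * (10 - errors) / time, with the constant 10
    exposed as `words_per_sentence` (an illustrative parameter).
    """
    if time_seconds <= 0:
        raise ValueError("time_seconds must be positive")
    if not 0 <= errors <= words_per_sentence:
        raise ValueError("errors must lie between 0 and words_per_sentence")
    return 60 * (words_per_sentence - errors) / time_seconds


# A sentence read in 4 s with one error: 60 * 9 / 4
print(reading_speed_wpm(time_seconds=4.0, errors=1))  # 135.0
```

Note that this sketch covers only the words-per-minute variant; the letters-per-minute measure of Rhiu et al would need a character count instead.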

Reading acuity

RA is defined as the smallest print that the patient can read without making significant errors. For the MNREAD and IUREAD charts it is calculated as RA (logMAR) = 1.4 − (sentences × 0.1) + (errors × 0.01)[12],[15], whereas for the Radner charts the log reading acuity determination (logRAD) score is calculated as logRAD score = logRAD for lowest line read + (0.005 × syllables of incorrectly read words). Threshold RA was determined as the last sentence that could be read completely[13]. For the LPO Rosenbaum pocket screener, RA was recorded based on the last line for which all letters were correctly identified[16]. For the ETDRS chart, results were recorded by the examiner, in logMAR, based on the smallest line for which four or more characters were identified correctly[17].
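The two scoring formulas above can be written out as a short Python sketch. The function and argument names are illustrative only, and the constants are taken directly from the formulas as quoted in this section; they are not checked against the chart manuals.

```python
def reading_acuity_logmar(sentences_read, errors):
    """RA (logMAR) = 1.4 - (sentences * 0.1) + (errors * 0.01),
    the formula quoted for the MNREAD and IUREAD charts."""
    return 1.4 - sentences_read * 0.1 + errors * 0.01


def radner_lograd_score(lograd_lowest_line, syllables_incorrect):
    """logRAD score = logRAD of the lowest line read
    + 0.005 * syllables of incorrectly read words."""
    return lograd_lowest_line + 0.005 * syllables_incorrect


# Twelve sentences read with three errors in total
print(round(reading_acuity_logmar(12, 3), 2))
# Lowest line read at 0.3 logRAD with four syllables misread
print(round(radner_lograd_score(0.3, 4), 2))
```

In both formulas every error makes the score slightly worse (a higher logMAR or logRAD value), so a patient who reads further down the chart but less accurately is not credited with the full line.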

Maximum reading speed

Maximum reading speed (MRS), measured in wpm, is defined as the patient's reading speed when reading is not limited by print size, and is calculated by averaging the reading speeds of the sentences with print sizes larger than the critical print size (CPS)[12]–[13],[15].

Critical print size

CPS, measured in logMAR, is defined as the smallest print size that can be read with a reading speed greater than or equal to the average reading speed of the sentences with larger print minus 1.96 times the standard deviation (SD) of the reading speed of these sentences[15]. Hirnschall et al[18] defined this parameter as the smallest log-scaled print of lowercase letters read at more than 80 wpm and expressed it in mm.
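The SD-based definition of CPS, together with the MRS definition, can be sketched in Python as follows. This is one possible reading of the definition and not the scoring routine of any specific chart or app: it walks from the largest print downwards and stops at the first sentence that falls below the threshold. The function name, the `min_plateau` parameter (the number of largest sentences assumed to lie on the speed plateau), and the sample data are all hypothetical, and print sizes are assumed to be distinct.

```python
from statistics import mean, stdev


def critical_print_size(sizes_logmar, speeds_wpm, min_plateau=2):
    """Return (cps, mrs) for one patient.

    cps: smallest print size whose speed is >= mean - 1.96 * SD of the
         speeds measured at all larger print sizes.
    mrs: mean speed of the sentences with print larger than cps.
    """
    if len(sizes_logmar) != len(speeds_wpm):
        raise ValueError("sizes and speeds must have the same length")
    if min_plateau < 2 or len(sizes_logmar) <= min_plateau:
        raise ValueError("need min_plateau >= 2 and more sentences than that")

    # Largest print first (a larger logMAR value means larger print).
    pairs = sorted(zip(sizes_logmar, speeds_wpm), reverse=True)

    cps_index = min_plateau - 1  # assumption: the first sentences are on the plateau
    for i in range(min_plateau, len(pairs)):
        larger = [speed for _, speed in pairs[:i]]
        threshold = mean(larger) - 1.96 * stdev(larger)
        if pairs[i][1] < threshold:
            break  # speed has dropped off the plateau
        cps_index = i

    cps = pairs[cps_index][0]
    mrs = mean(speed for size, speed in pairs if size > cps)
    return cps, mrs


# Hypothetical data: speed is stable down to 0.6 logMAR, then falls.
sizes = [1.0, 0.9, 0.8, 0.7, 0.6, 0.5, 0.4]
speeds = [200, 198, 202, 199, 201, 150, 80]
print(critical_print_size(sizes, speeds))  # (0.6, 199.75)
```

The fixed 80 wpm criterion used by Hirnschall et al would replace the SD-based threshold with a constant and is not covered by this sketch.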

Reading accessibility index

Reading accessibility index (ACC) is defined as the mean reading speed of the 10 largest print sizes of the MNREAD acuity chart at 40 cm (1.3 to 0.4 logMAR), divided by 200 wpm, which is the mean reading speed of normally sighted young adults aged 18 to 39 years old.
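As a minimal sketch of the ACC definition above, the following Python function divides the mean reading speed over the ten largest print sizes by the 200 wpm norm. The function name is hypothetical, and the text does not state how print sizes that the patient cannot read are scored, so the sketch simply requires one speed value per print size.

```python
def reading_accessibility_index(speeds_wpm, norm_wpm=200.0):
    """ACC = mean reading speed over the ten largest print sizes
    (1.3 to 0.4 logMAR at 40 cm) divided by the 200 wpm norm."""
    if len(speeds_wpm) != 10:
        raise ValueError("expected reading speeds for exactly ten print sizes")
    return (sum(speeds_wpm) / len(speeds_wpm)) / norm_wpm


# A reader averaging 100 wpm across the ten sizes scores half the norm.
print(reading_accessibility_index([150] * 5 + [50] * 5))  # 0.5
```

An ACC of 1.0 therefore corresponds to the average performance of normally sighted young adults.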

Our review indicated that for the reliable measurement of reading parameters certain precautions should apply.

Memorization control

Memorization was avoided by using different chart and sentence sets for all trial runs[15] and by randomizing the order of the chart used first (printed or electronic)[13],[16]–[18]. In addition, a large number of sentences, randomly selected for each trial, was employed[12].

Distance monitoring

For digital reading charts, various methods of measuring the distance between the patient and the screen were used to minimize interference with true visual capacity metrics. In one study, participants' pupillary distance (PD) was monitored by a face-tracking algorithm within the iPad app to ensure minimal distance change; when a deviation of ±4 cm was detected, a warning prompted the participant to readjust the distance and retake the test[41]. Hirnschall et al[18] did not set a constant reading distance for their experiments but let the patients choose it, while measuring it with video stereophotogrammetry. Other studies monitored distance with the help of an examiner[13], using a tape measure and close observation[16]–[17], for both printed and digital charts.

Time measurement

Regarding printed versions, all studies timed the process with a stopwatch controlled by examiners[12]–[13],[15],[18]. The examiner's reaction time between the moment the patient starts reading and the moment the stopwatch button is pressed is believed to cause overestimation of reading speed, creating the need for more automated methods. In digital versions, the subject's voice was recorded by microphone and an in-app stopwatch measured reading duration from text presentation until the patient pressed the stop button[13],[18]. The examiner could visually inspect the recordings to mark the start and end of sentences[12]. In the MNREAD application, a touch on the screen started the timer simultaneously with the presentation of the sentence, while a second touch stopped it, automatically recording the reading time[15].

Error management

In printed charts, errors are recorded by the examiner. Certain digital charts offer the option of voice recording in order to confirm potential errors by the patient[12]–[13]. In the MNREAD app, a screen appeared after each sentence for the examiner to enter the number of errors[15].

Reliable reading assessment with digital reading tests heavily depends on the specific characteristics of the display. These characteristics are[19]–[21]:

Gamma function

This is a mathematical function used to derive the potential range of contrast targets that a device can display: $L = \nu^{\gamma}$, where $L$ represents the luminance of the display, $\nu$ is the signal voltage, and $\gamma$ (gamma) is the slope of the line of best fit in a plot of $\log(L)$ versus $\log(\nu)$.
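Because gamma is described as the slope of a best-fit line in log-log space, it can be estimated from paired signal and luminance measurements by ordinary least squares. The Python sketch below shows this under simplifying assumptions: the function name is hypothetical, the signal values are normalised, and the sample data are synthetic rather than measured on a real display.

```python
import math


def estimate_gamma(signal, luminance):
    """Least-squares slope of log10(luminance) against log10(signal)."""
    if len(signal) != len(luminance) or len(signal) < 2:
        raise ValueError("need at least two paired measurements")
    xs = [math.log10(v) for v in signal]
    ys = [math.log10(lum) for lum in luminance]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("signal values must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx


levels = [0.2, 0.4, 0.6, 0.8, 1.0]       # normalised signal values (synthetic)
measured = [v ** 2.2 for v in levels]    # synthetic luminance following L = v^2.2
print(round(estimate_gamma(levels, measured), 3))  # close to 2.2
```

Measured luminance from a real device would include noise and a non-zero black level, so the fitted slope would only approximate the nominal gamma.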

Luminance

Luminance is a measure of luminous intensity of light travelling in a given direction, per unit area. The SI unit for luminance is candela per square meter (cd/m²). In real-life positioning, luminance can differ between central and peripheral targets at different screen locations on a tablet display. Battery level can also affect luminance levels[20]. Nearby light sources could also affect luminance, not so much at perpendicular viewing, but when there is screen rotation of a certain degree[21].

Contrast

The contrast ratio (CR) is a property of displays, defined as the ratio of the luminance of the brightest color (white) to that of the darkest color (black). The higher the CR, the better.
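The CR definition amounts to a single division, shown here as a minimal Python sketch. The function name and the luminance values in the example are hypothetical; both luminances are assumed to be in cd/m².

```python
def contrast_ratio(luminance_white, luminance_black):
    """Contrast ratio: luminance of white divided by luminance of black."""
    if luminance_black <= 0:
        raise ValueError("luminance_black must be positive")
    return luminance_white / luminance_black


# Hypothetical display: 400 cd/m2 white, 0.5 cd/m2 black
print(contrast_ratio(400.0, 0.5))  # 800.0
```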

Stability of display

It is important to determine the time a given display takes to reach within 1% variation of its final luminance value in order to better control and standardize display settings. There have been a few studies, mostly on tablet displays, but research has been limited to certain device models. For the iPad 3, the time needed for display stability varied after the switched-off state, ranging from 15 to 1 min. Therefore, 15 min of screen function was advised before conducting tests with the device[19]. It took approximately 13 min for the iPad mini Retina display to reach within 1% variation of the final luminance value[20]. As for the Google Nexus 10 and the Galaxy Tab 2 10.1, the longest stabilization times occurred after 24 h in the switched-off state and were 10 and 8 min, respectively[21].
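The 1% stability criterion can be applied to a series of luminance readings taken after switch-on. The Python sketch below is one way to do this, assuming the last reading represents the final luminance value; the function name and the sample readings are hypothetical and do not reproduce data from the cited studies.

```python
def stabilization_time(times_min, luminance, tolerance=0.01):
    """Earliest time after which every reading stays within
    `tolerance` (1% by default) of the final luminance value."""
    if len(times_min) != len(luminance) or not luminance:
        raise ValueError("need equally long, non-empty series")
    final = luminance[-1]
    band = tolerance * abs(final)
    # Walk backwards to find the last reading that lies outside the band.
    for i in range(len(luminance) - 1, -1, -1):
        if abs(luminance[i] - final) > band:
            return times_min[i + 1]
    return times_min[0]  # stable from the first reading


times = [0, 5, 10, 13, 15, 20]              # minutes after switch-on
readings = [380, 392, 397, 399, 400, 400]   # cd/m2, hypothetical
print(stabilization_time(times, readings))  # 10
```

The result is only as fine-grained as the sampling interval, so readings taken every minute or less would be needed to reproduce figures such as 13 min.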

Screen resolution

Considering that the most common reading distance is typically 33–40 cm, higher resolutions would allow for 6/6 or even 6/5 vision testing. Regarding tablets, Tahir et al[21] suggest that satisfactory vision testing requires a screen resolution of at least 100 pixels per cm. Lower pixel densities (less than 132 ppi; 1024×768 pixels) have been linked with a small but nonsignificant decrease in reading speed compared with reading a printed book[15].
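To see why pixel density limits the acuity level that can be tested, it helps to convert density and viewing distance into pixels per minute of arc. The Python sketch below does this with basic geometry. It relies on the standard convention, not stated in this review, that the stroke width of a 6/6 optotype subtends 1 minute of arc; the function name is hypothetical.

```python
import math

CM_PER_INCH = 2.54


def pixels_per_arcmin(ppi, distance_cm):
    """Number of screen pixels spanned by 1 minute of arc at a viewing distance."""
    arcmin_cm = distance_cm * math.tan(math.radians(1 / 60))  # size of 1 arcmin in cm
    return (ppi / CM_PER_INCH) * arcmin_cm


for ppi in (132, 254):  # 254 ppi equals 100 pixels per cm
    print(ppi, round(pixels_per_arcmin(ppi, 40.0), 2))
```

At 40 cm, 132 ppi gives roughly 0.6 pixels per minute of arc and 100 pixels per cm gives roughly 1.2, which suggests why only the denser display can render a 6/6 stroke with at least one full pixel.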

Conventional Reading Charts

Standardization of the conventional reading charts began 20 years ago, focusing primarily on clinical settings. The standardization process included the evaluation of the reproducibility, comparability, validity, interpretation and reliability of each reading test[22]–[23].

Nowadays, numerous reading tests are in clinical use, with the majority of them untested for their validity. The prevalent Jaeger reading charts that are used in clinical settings are not standardized[24] and do not meet the criteria established by the International Council of Ophthalmology (ICO) or the EN ISO 8596 directive[24]–[26]. These standards include a logarithmic progression of print sizes, the calibration of test conditions, chart design, the specification of the test distance in all instances, the use of continuous text, and typeset material in which the height of lower-case letters is five minutes of arc. The following conventional printed reading tests comply with the ICO recommendations: the Sloan Reading Cards, the Bailey-Lovie Word Reading Charts, the MNREAD charts, the RADNER Reading Charts, the Colenbrander Continuous Text Near Vision Cards, the Smith-Kettlewell Reading Test (SKread), the Oculus Reading Probe II, the C-Read Charts and the Arabic-BAL Chart[24],[26]–[28].

The Sloan Reading Cards: They use continuous text paragraphs of variable length. The smallest print size corresponds to a decimal acuity of 0.4 at a distance of 40 cm. Progression of print size is logarithmic.

The Bailey-Lovie Word Reading Charts: They use unrelated words arranged in a logarithmic progression of size, while each group of words is of approximately equal difficulty[29].

The MNREAD charts: Each test sentence consists of 60 characters, including spaces and a period at the end, set in three lines. They are also suitable for low vision patients[11].

The RADNER Reading Charts: This test includes a series of comparable sentences that consist of 14 words in three lines, with 27–28 characters per line and 22–24 syllables. Word length, position of words, number of characters, lexical difficulty, and linguistic characteristics are on a par with each other. The RADNER Reading Charts are available in German, Spanish, English, French, Dutch, Italian, Swedish, Danish, Portuguese, Turkish, Hungarian, and Romanian, and further languages are in progress[30].

The Colenbrander Continuous Text Near Vision Cards: The sentences used have 44 characters including spaces and a variable number of words (9 to 11). They have been translated into 12 languages[32].

The Smith-Kettlewell Reading Test (SKread): Each test paragraph contains six single letters and ten random words, with 60 characters including spaces in total, and the number of words is equal in all paragraphs[31]. The Smith-Kettlewell Reading Test was introduced to test the performance of low-vision patients, to locate scotomas and to determine their magnification needs[32]–[33].

The Oculus Reading Probe II: It uses text from a book by Sven Hedin and from The Jungle Book by Rudyard Kipling. It also includes music symbols, Landolt rings, and tumbling Es and is available in German[32].

Eschenbach and Zeiss reading tests using long sentences also try to test low vision patients, while the Keeler Reading Test Types test speed and fluency of patients with low-vision[30].

The C-READ consists of three charts. Each chart consists of sixteen 12-character simplified Chinese sentences crafted from first- to third-grade textbooks[27].

Digital Reading Charts

Given the rapid development of technology, electronic devices are within reach of more and more people. Adequate visual ability is essential for a good quality of life, and a means of self-assessment could further improve patients' daily living[34]. Smartphones and tablet devices with high-definition screens are capable of hosting digital reading tests that could also be used for self-examination[35]. However, digital reading tests and near-vision acuity charts must be evaluated before they are introduced in clinical settings. Yeung et al[47] recently reviewed all available digital visual acuity charts that supported self-examination. Of a total of 42 digital charts, 20 were based on conventional printed charts and only 4 of them were validated.

Lewis and Smith[35] developed the first digital chart, which tested visual acuity using Arabic numerals displayed as light-emitting diode (LED) or liquid crystal display (LCD) type numerals and was comparable in difficulty to Snellen letters. Their digital test was able to measure the visual acuity of young and healthy individuals and store the outcomes in a computer[35].

The RAD‐RD© is an automated computer program based on the RADNER reading charts that was developed to assess reading speed and reading acuity. It uses a computer and a microphone that records the reader's voice and automatically determines the start and end of vocalization[46].

The Salzburg-Advanced is an electronic reading desk that can assess patients with AMD, DR, and multifocal or accommodating intraocular lenses. It can simulate a natural reading environment and calculate near or intermediate vision and reading speed under different illumination and contrast conditions. It is available in 10 languages[18].

The MNREAD test also underwent a digital transition, which simplified and standardized testing methods, automated scoring, eased data sharing, and increased portability, with several test versions available on a single device. MNREAD allows high-accuracy near vision testing by measuring CPS and RA[36].

The IURead can be performed on a computer, tablet or mobile phone in order to test reading speed and reading acuity. It can be used for research purposes, as it has 422 single sentences and can provide multiple repeats without reusing sentences, and it is also useful for low vision patients and presbyopes[12].

The Democritus Digital Acuity Reading Test (DDART) is a web-based reading test that requires no installation on a local computer. It provides text size calibration, automatic reading timing based on audio recording, and automatic calculation of RA, MRS, CPS and ACC[37].

Rhiu et al[14] selected sixty-three Korean sentences, adjusted them to match the design principles of the MNREAD chart, and tested reading speed binocularly in a population with normal vision at 40 cm. A third-generation Retina display iPad at maximum brightness was used to display the sentences. The app included a stop clock to automatically calculate reading speed in letters per minute and words per minute.

DISCUSSION

Our systematic review attempted to contribute to the body of knowledge on the present status of reading assessment. As mentioned above, reading capacity reflects near and intermediate vision capacity which is necessary for the citizen in the 21st century, as the majority lead a busy lifestyle with tasks that require adequate vision at all distances. Therefore, it is essential to find the most suitable means of examination that is reliable, efficient, reproducible and not time consuming.

Conventional printed reading charts still dominate in clinical settings; however, the most prevalent of them (i.e., Jaeger type charts) are not validated and their outcomes should be interpreted with caution. Reliable reading capacity assessment is provided only by the printed reading tests that comply with the ICO recommendations: the Sloan Reading Cards, the Bailey-Lovie Word Reading Charts, the MNREAD Charts, the RADNER Reading Charts, the Colenbrander Continuous Text Near Vision Cards, the Smith-Kettlewell Reading Test (SKread), the Oculus Reading Probe II, the C-Read Charts and the Arabic-BAL Chart[24],[26]–[28].

Digital reading tests are gaining popularity both in clinical and research settings since they offer reliability and ease of use. Furthermore, some can be used from the safety of one's home and are easy to undertake and evaluate without an examiner being present. However, the devices on the market are numerous, with varying display qualities and standards, which makes it difficult to evaluate and compare results from different devices. All screens require precise standardization of luminance, which takes time to stabilize and needs calibration. Therefore, certain precautions should apply both to the examination procedure and to the characteristics of the digital display. Digital reading charts are divided into standard computer-based applications that require installation on either a computer or a tablet (e.g., Advanced VISION Test) and web-based ones that require no installation (e.g., DDART). Web-based reading tests offer the advantage of functioning independently of the underlying hardware and consume minimal resources on the host computer.

Taking into consideration the advances of modern technology and the need for time-saving and standardized tests to aid clinical work, it seems that validated digital reading tests (computer-based and web-based ones) are set to prevail in clinical and research settings. All digital reading tests that were presented in this systematic review demonstrate adequate validity, reliability, and ease of use. It is up to the ophthalmologist to select the one most compatible with the clinical or research setting in which it will be used.

Acknowledgments

Conflicts of Interest: Ntonti P, None; Mitsi C, None; Chatzimichael E, None; Panagiotopoulou EK, None; Bakirtzis M, None; Konstantinidis A, None; Labiris G, None.

REFERENCES

- 1. Choi SU, Chun YS, Lee JK, Kim JT, Jeong JH, Moon NJ. Comparison of vision-related quality of life and mental health between congenital and acquired low-vision patients. Eye (Lond) 2019;33(10):1540–1546. doi: 10.1038/s41433-019-0439-6.

- 2. Senger C, Margarido MRRA, de Moraes CG, de Fendi LI, Messias A, Paula JS. Visual search performance in patients with vision impairment: a systematic review. Curr Eye Res. 2017;42(11):1561–1571. doi: 10.1080/02713683.2017.1338348.

- 3. Singh P, Tripathy K. StatPearls. Treasure Island (FL): StatPearls Publishing; 2022. Jan, Presbyopia. 2022 Jul 12.

- 4. Fricke TR, Tahhan N, Resnikoff S, Papas E, Burnett A, Ho SM, Naduvilath T, Naidoo KS. Global prevalence of presbyopia and vision impairment from uncorrected presbyopia. Ophthalmology. 2018;125(10):1492–1499. doi: 10.1016/j.ophtha.2018.04.013.

- 5. Torricelli AA, Junior JB, Santhiago MR, Bechara SJ. Surgical management of presbyopia. Clin Ophthalmol Auckl N Z. 2012;6:1459–1466. doi: 10.2147/OPTH.S35533.

- 6. Wolffsohn JS, Davies LN. Presbyopia: effectiveness of correction strategies. Prog Retin Eye Res. 2019;68:124–143. doi: 10.1016/j.preteyeres.2018.09.004.

- 7. Schallhorn JM, Pantanelli SM, Lin CC, et al. Multifocal and accommodating intraocular lenses for the treatment of presbyopia. Ophthalmology. 2021;128(10):1469–1482. doi: 10.1016/j.ophtha.2021.03.013.

- 8. Chan T, Friedman DS, Bradley C, Massof R. Estimates of incidence and prevalence of visual impairment, low vision, and blindness in the United States. JAMA Ophthalmol. 2018;136(1):12–19. doi: 10.1001/jamaophthalmol.2017.4655.

- 9. Bourne RRA. Uncorrected refractive error and presbyopia: accommodating the unmet need. Br J Ophthalmol. 2007;91(7):848–850. doi: 10.1136/bjo.2006.112862.

- 10. Kempen JH, Mitchell P, Lee KE, et al. Eye Diseases Prevalence Research Group The prevalence of refractive errors among adults in the United States, Western Europe, and Australia. Arch Ophthalmol. 2004;122(4):495–505. doi: 10.1001/archopht.122.4.495.

- 11. Mansfield JS, Legge GE. The Psychophysics of Reading in Normal. 1st ed. Minnesota: CRC Press; 2007. p. 248.

- 12. Xu RF, Bradley A. IURead: a new computer-based reading test. Ophthalmic Physiol Opt. 2015;35(5):500–513. doi: 10.1111/opo.12233.

- 13. Kingsnorth A, Wolffsohn JS. Mobile app reading speed test. Br J Ophthalmol. 2015;99(4):536–539. doi: 10.1136/bjophthalmol-2014-305818.

- 14. Rhiu S, Kim M, Kim JH, Lee HJ, Lim TH. Korean version self-testing application for reading speed. Korean J Ophthalmol. 2017;31(3):202–208. doi: 10.3341/kjo.2016.0042.

- 15. Calabrèse A, To L, He YC, Berkholtz E, Rafian P, Legge GE. Comparing performance on the MNREAD iPad application with the MNREAD acuity chart. J Vis. 2018;18(1):8. doi: 10.1167/18.1.8.

- 16. Tofigh S, Shortridge E, Elkeeb A, Godley BF. Effectiveness of a smartphone application for testing near visual acuity. Eye (Lond) 2015;29(11):1464–1468. doi: 10.1038/eye.2015.138.

- 17. Han XT, Scheetz J, Keel S, Liao CM, Liu C, Jiang Y, Müller A, Meng W, He MG. Development and validation of a smartphone-based visual acuity test (vision at home) Transl Vis Sci Technol. 2019;8(4):27. doi: 10.1167/tvst.8.4.27.

- 18. Hirnschall N, Motaabbed JK, Dexl A, Grabner G, Findl O. Evaluation of an electronic reading desk to measure reading acuity in pseudophakic patients. J Cataract Refract Surg. 2014;40(9):1462–1468. doi: 10.1016/j.jcrs.2013.12.021.

- 19. Aslam TM, Murray IJ, Lai MYT, Linton E, Tahir HJ, Parry NRA. An assessment of a modern touch-screen tablet computer with reference to core physical characteristics necessary for clinical vision testing. J R Soc Interface. 2013;10(84):20130239. doi: 10.1098/rsif.2013.0239.

- 20. Bodduluri L, Boon MY, Dain SJ. Evaluation of tablet computers for visual function assessment. Behav Res. 2017;49(2):548–558. doi: 10.3758/s13428-016-0725-1.

- 21. Tahir HJ, Murray IJ, Parry NRA, Aslam TM. Optimisation and assessment of three modern touch screen tablet computers for clinical vision testing. PLoS One. 2014;9(4):e95074. doi: 10.1371/journal.pone.0095074.

- 22. Fischer R, Milfont TL. Standardization in psychological research. Int J Psychol Res. 2010;3(1):88–96.

- 23. Cicchetti DV. Guidelines, criteria, and rules of thumb for evaluating normed and standardized assessment instruments in psychology. Psychol Assess. 1994;6(4):284–290.

- 24. Stifter E, König F, Lang T, Bauer P, Richter-Müksch S, Velikay-Parel M, Radner W. Reliability of a standardized reading chart system: variance component analysis, test-retest and inter-chart reliability. Graefes Arch Clin Exp Ophthalmol. 2004;242(1):31–39. doi: 10.1007/s00417-003-0776-8.

- 25. MacKeben M, Nair UK, Walker LL, Fletcher DC. Random word recognition chart helps scotoma assessment in low vision. Optom Vis Sci. 2015;92(4):421–428. doi: 10.1097/OPX.0000000000000548.

- 26. Colenbrander A. Consilium ophthalmologicum universale visual functions committee, visual acuity measurement standard. Ital J Ophthalmol. 1988;11:5–19.

- 27. Han QM, Cong LJ, Yu C, Liu L. Developing a logarithmic Chinese reading acuity chart. Optom Vis Sci. 2017;94(6):714–724. doi: 10.1097/OPX.0000000000001081.

- 28. Alabdulkader B, Leat SJ. A standardized Arabic reading acuity chart: the balsam alabdulkader-leat chart. Optom Vis Sci. 2017;94(8):807–816. doi: 10.1097/OPX.0000000000001103.

- 29. Bailey IL, Lovie JE. The design and use of a new near-vision chart. Am J Optom Physiol Opt. 1980;57(6):378–387. doi: 10.1097/00006324-198006000-00011.

- 30. Radner W. Reading charts in ophthalmology. Graefes Arch Clin Exp Ophthalmol. 2017;255(8):1465–1482. doi: 10.1007/s00417-017-3659-0.

- 31. Labiris G, Toli A, Perente A, Ntonti P, Kozobolis VP. A systematic review of pseudophakic monovision for presbyopia correction. Int J Ophthalmol. 2017;10(6):992–1000. doi: 10.18240/ijo.2017.06.24.

- 32. Vargas V, Radner W, Allan BD, Reinstein DZ, Burkhard Dick H, Alió JL, Near vision and accommodation committee of the American-European Congress of Ophthalmology (AECOS) Methods for the study of near, intermediate vision, and accommodation: an overview of subjective and objective approaches. Surv Ophthalmol. 2019;64(1):90–100. doi: 10.1016/j.survophthal.2018.08.003.

- 33. Thaung J, Olseke K, Ahl J, Sjöstrand J. Reliability of a standardized test in Swedish for evaluation of reading performance in healthy eyes. Interchart and test-retest analyses. Acta Ophthalmol. 2014;92(6):557–562. doi: 10.1111/aos.12286.

- 34. West SK, Munoz B, Rubin GS, Schein OD, Bandeen-Roche K, Zeger S, German S, Fried LP. Function and visual impairment in a population-based study of older adults. The SEE project. Salisbury Eye Evaluation. Invest Ophthalmol Vis Sci. 1997;38(1):72–82.

- 35. Lewis JM, Smith TJ. Digital visual acuity test using calculator-type numerals with geometric gradation. Ophthalmology. 1987;94(2):130–135. doi: 10.1016/s0161-6420(87)33486-4.

- 36. Mansfield JS, Atilgan N, Lewis AM, Legge GE. Extending the MNREAD sentence corpus: computer-generated sentences for measuring visual performance in reading. Vis Res. 2019;158:11–18. doi: 10.1016/j.visres.2019.01.010.

- 37. Labiris G, Panagiotopoulou EK, Chatzimichael E, Tzinava M, Mataftsi A, Delibasis K. Introduction of a digital near-vision reading test for normal and low vision adults: development and validation. Eye Vis (Lond) 2020;7:51. doi: 10.1186/s40662-020-00216-0.

- 38. Radner W. Standardization of reading charts: a review of recent developments. Optom Vis Sci. 2019;96(10):768–779. doi: 10.1097/OPX.0000000000001436.

- 39. Baskaran K, Macedo AF, He YC, Hernandez-Moreno L, Queirós T, Mansfield JS, Calabrèse A. Scoring reading parameters: an inter-rater reliability study using the MNREAD chart. PLoS One. 2019;14(6):e0216775. doi: 10.1371/journal.pone.0216775.

- 40. Colenbrander A. Reading acuity—an important parameter of reading performance. Int Congr Ser. 2005;1282:487–491.

- 41. Cheung JPY, Liu DSK, Lam CCC, Cheong AMY. Development and validation of a new Chinese reading chart for children. Ophthalmic Physiol Opt. 2015;35(5):514–521. doi: 10.1111/opo.12228.

- 42. Ramsdale C, Charman WN. Accommodation and convergence: effects of lenses and prisms in ‘closed-loop’ conditions. Ophthalmic Physiol Opt. 1988;8(1):43–52.

- 43. Radner W, Obermayer W, Richter-Mueksch S, Willinger U, Velikay-Parel M, Eisenwort B. The validity and reliability of short German sentences for measuring reading speed. Graefes Arch Clin Exp Ophthalmol. 2002;240(6):461–467. doi: 10.1007/s00417-002-0443-5.

- 44. Rubin GS, Feely M, Perera S, Ekstrom K, Williamson E. The effect of font and line width on reading speed in people with mild to moderate vision loss. Ophthalmic Physiol Opt. 2006;26(6):545–554. doi: 10.1111/j.1475-1313.2006.00409.x.

- 45. Chung STL. The effect of letter spacing on reading speed in central and peripheral vision. Invest Ophthalmol Vis Sci. 2002;43(4):1270–1276.

- 46. Radner W, Diendorfer G, Kainrath B, Kollmitzer C. The accuracy of reading speed measurement by stopwatch versus measurement with an automated computer program (rad-rd©) Acta Ophthalmol. 2017;95(2):211–216. doi: 10.1111/aos.13201.

- 47. Yeung WK, Dawes P, Pye A, Neil M, Aslam T, Dickinson C, Leroi I. eHealth tools for the self-testing of visual acuity: a scoping review. NPJ Digit Med. 2019;2:82. doi: 10.1038/s41746-019-0154-5.

- 48. McDonnell PJ, Lee P, Spritzer K, Lindblad AS, Hays RD. Associations of presbyopia with vision-targeted health-related quality of life. Arch Ophthalmol. 2003;121(11):1577–1581. doi: 10.1001/archopht.121.11.1577.