Abstract

Background

Inferior oblique overaction (IOOA) is a common ocular motility disorder. This study aimed to propose a novel deep learning-based approach to automatically evaluate the amount of IOOA.

Methods

This prospective study included 106 eyes of 72 consecutive patients attending the strabismus clinic of a tertiary referral hospital. Patients were eligible for inclusion if they were diagnosed with IOOA. IOOA was clinically graded from +1 to +4. On photographs taken in the adducted position, the height difference between the inferior corneal limbus of both eyes was measured manually using ImageJ and automatically using our deep learning-based image analysis system with human supervision. Correlation coefficients, Bland-Altman plots, and mean absolute deviation (MAD) were used to assess agreement between the different measurements of IOOA.

Results

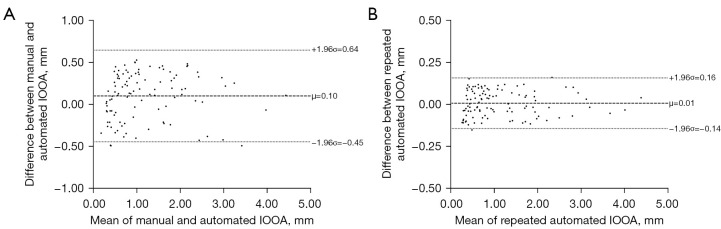

There were significant correlations between automated photographic measurements and clinical gradings (Kendall’s tau: 0.721; 95% confidence interval: 0.652 to 0.779; P<0.001), between automated and manual photographic measurements [intraclass correlation coefficient (ICC): 0.975; 95% confidence interval: 0.963 to 0.983; P<0.001], and between repeated automated photographic measurements (ICC: 0.998; 95% confidence interval: 0.997 to 0.999; P<0.001). The bias and MAD were 0.10 [95% limits of agreement (LoA): −0.45 to 0.64] mm and 0.26±0.14 mm, respectively, between automated and manual photographic measurements, and 0.01 (95% LoA: −0.14 to 0.16) mm and 0.07±0.04 mm, respectively, between repeated automated photographic measurements.

Conclusions

The automated photographic measurements of IOOA using deep learning technique were in excellent agreement with manual photographic measurements and clinical gradings. This new approach allows objective, accurate and repeatable measurement of IOOA and could be easily implemented in clinical practice using only photographs.

Keywords: Inferior oblique overaction (IOOA), automated image analysis, deep learning

Introduction

Inferior oblique overaction (IOOA) is a common ocular motility disorder characterized by overelevation of the eye in adduction; it has been reported in 70% of patients with esotropia and 30% of patients with exotropia (1). Primary IOOA often occurs in patients with infantile esotropia, accommodative esotropia, or intermittent exotropia (2), whereas secondary IOOA is caused by ipsilateral superior oblique palsy or contralateral superior rectus palsy (3). Various surgical procedures have been used to weaken the inferior oblique (IO) muscle, such as myectomy, myotomy, recession, myopexy, and anteriorization (4-6). The choice of procedure usually depends on the amount of IOOA, which is evaluated with a grading scale. Clinically, IOOA is graded qualitatively from +1 to +4 by comparing the height of the inferior corneal limbus of both eyes in the adducted position (7). However, this traditional clinical grading relies primarily on the examiner’s experience and is subject to interobserver variability, which makes accurate and reliable measurement difficult (8).

Several efforts have been made to quantitatively assess IOOA using photographs of the cardinal positions of gaze (9-11). However, these studies still required human-computer interaction for photographic analysis, which introduces subjectivity into the measurement. An optimized automated system with human supervision would provide a faster and more accurate tool for strabismus clinics. Deep learning with convolutional neural networks (CNNs) has achieved high performance in ophthalmological image segmentation (12). In our previous studies, we proposed novel CNN-based deep learning approaches for automated measurement of ocular movements and eyelid morphology in healthy volunteers (13,14). However, the modified limbus test that we previously used to measure IO function is limited to participants with normal eyelid morphology and function (15). To develop an automated method with broader applicability, the current study evaluated the amount of IOOA by comparing the height of the inferior corneal limbus of both eyes in adduction, using deep learning-based image analysis. We present the following article in accordance with the STARD reporting checklist (available at https://qims.amegroups.com/article/view/10.21037/qims-22-467/rc).

Methods

Study participants and clinical grading

Consecutive patients diagnosed with IOOA who attended the strabismus clinic of a tertiary referral hospital between September 2021 and March 2022 were invited to take part in this prospective study. All patients underwent a detailed assessment, including history, best-corrected visual acuity, binocular movement testing, cover test, and anterior and posterior segment examination. The exclusion criteria were previous surgery on the IO muscle, simultaneous dissociated vertical deviation, and vertical strabismus. IOOA of each eye was clinically graded from +1 to +4 by two experienced strabismus specialists (LL and XT) by comparing the height of the inferior corneal limbus of both eyes in contralateral gaze (7). Any disagreements were resolved through discussion to reach a consensus.

Photography

Binocular movement testing was performed by a single experienced ophthalmologist to ensure consistency in assessment. A digital camera (Canon EOS 450D, Canon Corporation, Tokyo, Japan) was placed 1 m in front of the patient at eye level. A circular marker 10 mm in diameter was attached to the patient’s forehead as a reference. The patient was asked to follow an object presented by the examiner, and photographs were taken in the diagnostic positions of gaze in accordance with standard clinical practice (7). Verbal encouragement was given to keep the patient’s head stable and to ensure maximum effort toward the extremes of gaze.

Manual photographic measurement

Manual measurement of IOOA on the photographs in contralateral gaze was performed by an investigator using ImageJ (version 1.8.0; National Institutes of Health, Bethesda, MD, USA). Because the elliptical corneal margin was not fully visible in the photographs owing to eyelid coverage, a complete ellipse was extrapolated from the visible corneal margin of each eye in contralateral gaze. Two horizontal lines through the inferior corneal limbus of both eyes were then drawn to measure the height difference between the eyes, using the circular forehead marker as a scale reference. The height difference obtained by the investigator was taken as the manual measurement of IOOA.

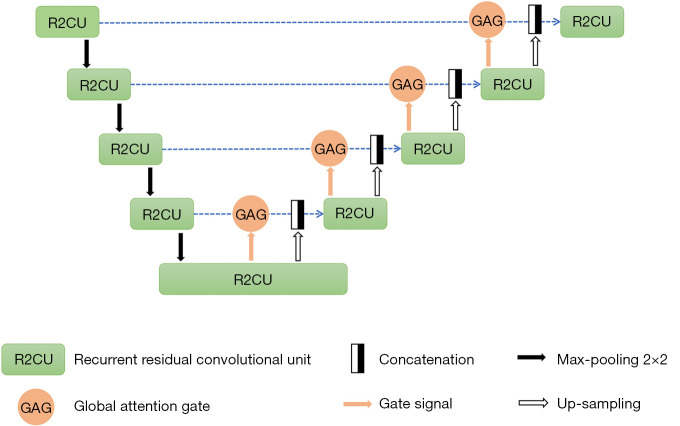

Automated photographic measurement via GAR2U-Net

Automated measurement of IOOA was performed on the photographs in contralateral gaze. This study proposed a novel recurrent residual CNN with a global attention gate based on U-Net (GAR2U-Net) for eye location and eye segmentation (Figure 1). GAR2U-Net uses recurrent residual convolutional units instead of basic convolutional units, and a global attention gate (GAG) instead of the original skip connection. The GAG in the nth layer contains an n-stage Swin Transformer (16). The output of each stage is decoded by a convolutional block and multiplied by the input feature map, and all of these attention results are summed to form the output of the GAG (Figure S1). After eye segmentation, the complete corneal margin of each eye was plotted with an ellipse-fitting algorithm, and the fitting results were checked under human supervision. The height difference between both eyes in contralateral gaze was then measured automatically. The procedure of automated photographic measurement is briefly described as follows (Figure 2 and Figure S2).

Figure 1.

The GAR2U-Net architecture proposed in this study for eye location and eye segmentation. GAR2U-Net, recurrent residual convolutional neural network with global attention gate based on U-net.
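The aggregation rule of the GAG described above (each stage output decoded, multiplied by the input feature map, and summed) can be sketched in NumPy as follows. This is a structural illustration under stated assumptions, not the authors' implementation: the Swin Transformer stages and convolutional decoders are replaced by hypothetical placeholder callables (`stages`, `decoders`), and each decoder is assumed to return a map with the same shape as the input so that the element-wise product is defined. The toy pooling and sigmoid-upsampling functions are likewise assumptions chosen only to make the example runnable.

```python
import numpy as np
from typing import Callable, Sequence

FeatureFn = Callable[[np.ndarray], np.ndarray]


def global_attention_gate(x: np.ndarray,
                          stages: Sequence[FeatureFn],
                          decoders: Sequence[FeatureFn]) -> np.ndarray:
    """Sum over stages k of x * decode_k(stage_k(...stage_1(x))).

    `stages` stand in for the n Swin Transformer stages (applied one after another);
    `decoders` stand in for the convolutional decoding blocks and must return an
    array with the same shape as `x`. Both are hypothetical placeholders.
    """
    if len(stages) != len(decoders):
        raise ValueError("expected one decoder per stage")
    out = np.zeros(x.shape, dtype=float)
    features = x
    for stage, decode in zip(stages, decoders):
        features = stage(features)        # next hierarchical feature map
        attention = decode(features)      # decoded back to the shape of x
        out += x * attention              # attention result of this stage
    return out


# Toy stand-ins (assumptions): 2x2 average pooling as a "stage", and
# nearest-neighbour upsampling followed by a sigmoid as a "decoder".
def avg_pool2(f: np.ndarray) -> np.ndarray:
    h, w = f.shape
    return f.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))


def make_decoder(scale: int) -> FeatureFn:
    def decode(f: np.ndarray) -> np.ndarray:
        up = np.kron(f, np.ones((scale, scale)))
        return 1.0 / (1.0 + np.exp(-up))
    return decode


x = np.random.default_rng(0).random((8, 8))
y = global_attention_gate(x, [avg_pool2, avg_pool2], [make_decoder(2), make_decoder(4)])
print(y.shape)  # (8, 8)
```

Because every stage contributes a full-resolution attention map, the gate mixes coarse (deep-stage) and fine (shallow-stage) context in one output, which is the property the Discussion attributes to the GAG.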

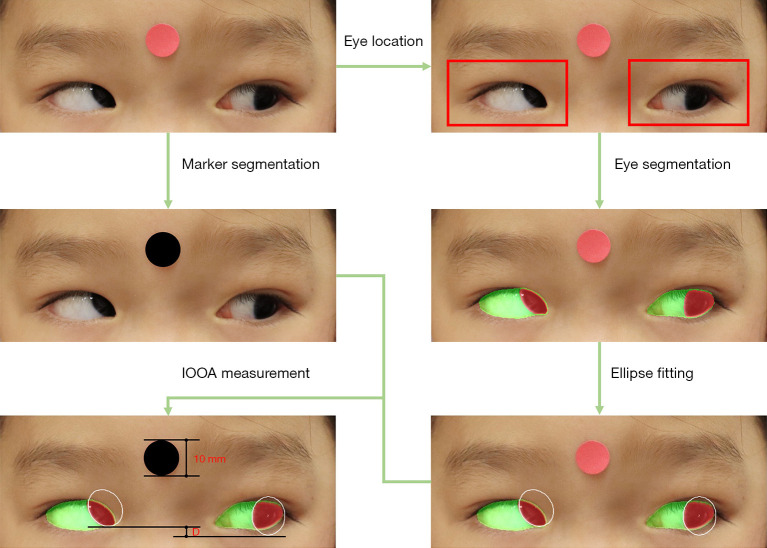

Figure 2.

The workflow of IOOA measurement using automated photographic method. This image is published with the patient’s consent. IOOA, inferior oblique overaction.

Step 1: A total of 30,000 high-resolution facial images (60,000 eyes) with eye segmentation masks, selected from the CelebFaces Attributes Dataset (17,18), were used to train the first-stage GAR2U-Net for initial eye segmentation in full-size facial images. Network parameters: epochs =200; batch size =32; input image size =512×512 pixels; loss function: cross-entropy; optimizer: Adam (learning rate =0.00001). After initial eye segmentation in the full-size facial image, each eye was located with a bounding box and cropped to obtain the eye image.
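The bounding-box step at the end of Step 1 can be illustrated with a short NumPy sketch. It assumes the first-stage network yields a binary mask for a single eye; the function name `crop_eye` and the `margin` padding parameter are hypothetical, since the article does not state whether any padding was added around the box.

```python
import numpy as np


def crop_eye(image: np.ndarray, eye_mask: np.ndarray, margin: int = 10):
    """Crop the bounding box of a binary eye mask (plus a margin) from the image.

    Returns the crop and the box as (top, bottom, left, right), with bottom/right
    exclusive so the box can be used directly for slicing.
    """
    rows = np.flatnonzero(eye_mask.any(axis=1))
    cols = np.flatnonzero(eye_mask.any(axis=0))
    if rows.size == 0:
        raise ValueError("eye mask is empty")
    h, w = eye_mask.shape[:2]
    top = max(int(rows[0]) - margin, 0)
    bottom = min(int(rows[-1]) + margin + 1, h)
    left = max(int(cols[0]) - margin, 0)
    right = min(int(cols[-1]) + margin + 1, w)
    return image[top:bottom, left:right], (top, bottom, left, right)


# Usage: a 20x20 "face" with a synthetic eye mask in rows 5-8, columns 7-12.
face = np.arange(400).reshape(20, 20)
mask = np.zeros((20, 20), dtype=bool)
mask[5:9, 7:13] = True
crop, box = crop_eye(face, mask, margin=2)
print(box, crop.shape)  # (3, 11, 5, 15) (8, 10)
```

The same slicing works for colour images of shape H×W×3, because only the first two axes are indexed.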

Step 2: A total of 10,000 eye images obtained by crawling Google Image Search were manually annotated with the cornea and eyelid margins by well-trained ophthalmologists using the magnetic lasso tool in Adobe Photoshop (version 22.4.2; Adobe Inc., San Jose, CA, USA) (19). These annotated images were used to train the second-stage GAR2U-Net for cornea and eyelid segmentation. Network parameters: epochs =200; batch size =32; input image size =256×256 pixels; loss function: IoU loss; optimizer: Adam (learning rate =0.00001).
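Step 2 trains the second-stage network with an IoU loss. The article does not give its exact form; a common differentiable ("soft") variant, assumed here, is 1 − Σ(p·g) / (Σp + Σg − Σ(p·g)), where p are predicted probabilities and g is the binary annotation. The NumPy sketch below shows only the arithmetic (a training framework would compute the same expression on tensors), and the small `eps` term is an added assumption to avoid division by zero on empty masks.

```python
import numpy as np


def soft_iou_loss(pred: np.ndarray, target: np.ndarray, eps: float = 1e-7) -> float:
    """1 - soft IoU between predicted probabilities and a binary ground-truth mask."""
    pred = np.asarray(pred, dtype=float)
    target = np.asarray(target, dtype=float)
    intersection = np.sum(pred * target)
    union = np.sum(pred) + np.sum(target) - intersection
    return float(1.0 - (intersection + eps) / (union + eps))


truth = np.array([0, 1, 1, 0])
print(soft_iou_loss(truth, truth))                             # 0.0 (perfect overlap)
print(round(soft_iou_loss(np.array([1, 1, 0, 0]), truth), 4))  # 0.6667
```

Unlike pixel-wise cross-entropy (used in Step 1), this loss is normalized by the region size, so a thin structure such as a partly covered cornea is not swamped by background pixels.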

Step 3: Facial images of patients with IOOA in contralateral gaze were used as the test set. The outputs of GAR2U-Net were smoothed to obtain pixel-level cornea and eyelid masks. For each point on the cornea boundary, the minimum distance to the eyelid boundary was calculated, and the distribution curve of these minimum distances over all cornea-boundary points was generated. A unimodal threshold algorithm was then applied to locate the threshold of the minimum distance (20). Points on the cornea boundary whose minimum distance exceeded the threshold, i.e., points not lying along the eyelid margin, were used to fit the complete corneal limbus with an ellipse-fitting algorithm (21).
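The point-selection logic of Step 3 can be sketched as below. Two assumptions are labelled here and in the code: Rosin's triangle method is swapped in as a simple stand-in for the cited unimodal threshold algorithm (20), and the histogram is assumed to peak near zero distance (cornea-boundary points lying along the eyelid) with a tail toward larger distances (the freely visible limbus). Boundary points are plain (x, y) arrays; the function names are hypothetical.

```python
import numpy as np


def min_distances(cornea_pts: np.ndarray, eyelid_pts: np.ndarray) -> np.ndarray:
    """For each cornea-boundary point (n x 2), the distance to the nearest eyelid point (m x 2)."""
    diff = cornea_pts[:, None, :] - eyelid_pts[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=2)).min(axis=1)


def triangle_threshold(values: np.ndarray, bins: int = 32) -> float:
    """Rosin-style triangle threshold for a unimodal histogram whose tail lies to the right.

    Used here only as a stand-in for the unimodal method cited in the article.
    """
    counts, edges = np.histogram(values, bins=bins)
    peak = int(np.argmax(counts))
    last = int(np.flatnonzero(counts)[-1])      # end of the tail
    if last == peak:
        return float(edges[peak + 1])
    x = np.arange(peak, last + 1)
    # Height of the peak-to-tail line at each bin; the bar farthest below the
    # line marks the "corner" of the histogram.
    line = counts[peak] + (counts[last] - counts[peak]) * (x - peak) / (last - peak)
    gap = line - counts[peak:last + 1]
    corner = peak + int(np.argmax(gap))
    return float(edges[corner + 1])


def free_limbus_points(cornea_pts: np.ndarray, eyelid_pts: np.ndarray, bins: int = 32):
    """Keep cornea-boundary points lying farther from the eyelid than the threshold."""
    d = min_distances(cornea_pts, eyelid_pts)
    thr = triangle_threshold(d, bins)
    return cornea_pts[d > thr], thr
```

The brute-force distance matrix is O(n·m) in memory, which is acceptable for a few hundred boundary points; a k-d tree would be the usual choice for larger contours.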

An ellipse is the set of all points in a plane for which the sum of the distances to two fixed points, the foci, is constant. Let this constant be Dtarget, and let the two foci have coordinates (xfocus1, yfocus1) and (xfocus2, yfocus2). For a point (xi, yi) on the cornea boundary, the sum Di (1≤ i ≤ n) of its distances to the two foci can be expressed as:

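$$D_i=\sqrt{(x_i-x_{\mathrm{focus1}})^2+(y_i-y_{\mathrm{focus1}})^2}+\sqrt{(x_i-x_{\mathrm{focus2}})^2+(y_i-y_{\mathrm{focus2}})^2} \qquad [1]$$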

The variance I of the set of Di can be expressed as:

$$I=\frac{1}{n}\sum_{i=1}^{n}\left(D_i-D_{\mathrm{target}}\right)^2 \qquad [2]$$

The optimization objective was:

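$$\min_{x_{\mathrm{focus1}},\,y_{\mathrm{focus1}},\,x_{\mathrm{focus2}},\,y_{\mathrm{focus2}}} I \qquad [3]$$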

The coordinates (xfocus1, yfocus1, xfocus2, yfocus2) that minimize I were taken as the two focal points of the final ellipse.
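Equations [1] to [3] can be sketched in NumPy as below. Several details are assumptions because the article does not specify them: Dtarget is taken as the mean of the current Di (so I is the ordinary variance of the Di), the optimizer is plain gradient descent with step halving (never accepting a step that increases I), and the caller supplies an initial guess for the foci through a hypothetical `init` argument. A reasonable starting point matters, because this objective can also shrink for degenerate, very widely separated foci.

```python
import numpy as np


def focal_sums(foci: np.ndarray, pts: np.ndarray) -> np.ndarray:
    """Equation [1]: D_i, the sum of distances from each point to the two foci."""
    f1, f2 = foci[:2], foci[2:]
    return np.linalg.norm(pts - f1, axis=1) + np.linalg.norm(pts - f2, axis=1)


def objective(foci: np.ndarray, pts: np.ndarray) -> float:
    """Equation [2] with D_target taken as the mean of the current D_i (assumption)."""
    d = focal_sums(foci, pts)
    return float(np.mean((d - d.mean()) ** 2))


def gradient(foci: np.ndarray, pts: np.ndarray) -> np.ndarray:
    """Analytic gradient of the objective with respect to (x1, y1, x2, y2)."""
    r1, r2 = pts - foci[:2], pts - foci[2:]
    n1 = np.linalg.norm(r1, axis=1)
    n2 = np.linalg.norm(r2, axis=1)
    resid = (n1 + n2) - (n1 + n2).mean()
    # dD_i/dF = -(p_i - F) / |p_i - F| for each focus F
    g1 = -(resid[:, None] * r1 / n1[:, None]).sum(axis=0)
    g2 = -(resid[:, None] * r2 / n2[:, None]).sum(axis=0)
    return 2.0 / len(pts) * np.concatenate([g1, g2])


def fit_foci(pts: np.ndarray, init, iters: int = 500, step: float = 1.0):
    """Equation [3]: minimize the objective over the focal coordinates.

    Gradient descent with step halving, so the objective never increases.
    Returns (foci, objective value, D_target).
    """
    foci = np.asarray(init, dtype=float)
    value = objective(foci, pts)
    for _ in range(iters):
        g = gradient(foci, pts)
        t, improved = step, False
        while t > 1e-12:
            candidate = foci - t * g
            candidate_value = objective(candidate, pts)
            if candidate_value < value:
                foci, value, improved = candidate, candidate_value, True
                break
            t *= 0.5
        if not improved:
            break
    return foci, value, float(focal_sums(foci, pts).mean())
```

Only the visible arc of the limbus enters `pts`, yet the fitted foci and Dtarget define the whole ellipse, which is what allows the hidden inferior limbus to be extrapolated.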

After the location of the ellipse was checked manually and adjusted as necessary, the height difference between the inferior corneal limbus of both eyes was measured automatically in pixels.
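Once each limbus is described by its foci and constant sum Dtarget, the lowest point of the ellipse follows in closed form. With center at the midpoint of the foci, semi-major axis a = Dtarget/2, semi-minor axis b = sqrt(a² − c²) (c being half the focal distance) and tilt θ of the major axis, the vertical half-extent of the ellipse is sqrt(a² sin²θ + b² cos²θ). The sketch below assumes image coordinates with y increasing downward, so the inferior limbus lies at the center y plus that half-extent; the function names are hypothetical and this is one possible computation, not necessarily the authors' code.

```python
import math


def inferior_limbus_y(f1, f2, d_target: float) -> float:
    """y coordinate of the lowest point of an ellipse given by its foci and constant sum.

    Image coordinates are assumed, with y increasing downward.
    """
    (x1, y1), (x2, y2) = f1, f2
    cy = (y1 + y2) / 2.0                      # centre (midpoint of the foci)
    c = math.hypot(x2 - x1, y2 - y1) / 2.0    # half the focal distance
    a = d_target / 2.0                        # semi-major axis
    if a <= c:
        raise ValueError("d_target must exceed the distance between the foci")
    b = math.sqrt(a * a - c * c)              # semi-minor axis
    theta = math.atan2(y2 - y1, x2 - x1)      # tilt of the major axis
    half_height = math.hypot(a * math.sin(theta), b * math.cos(theta))
    return cy + half_height


def height_difference_px(eye_a, eye_b) -> float:
    """Each eye is (f1, f2, d_target); returns the vertical gap between inferior limbi."""
    return abs(inferior_limbus_y(*eye_a) - inferior_limbus_y(*eye_b))


right = ((-3.0, 0.0), (3.0, 0.0), 10.0)       # lowest point at y = 4
left = ((97.0, -10.0), (103.0, -10.0), 10.0)  # same ellipse, 10 px higher
print(height_difference_px(right, left))      # 10.0
```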

Step 4: An adaptive threshold algorithm was applied to segment the circular marker on the patient’s forehead (22). The pixel/millimeter ratio was calculated, and the IOOA measurement was converted into millimeters.
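The unit conversion in Step 4 reduces to a scale factor derived from the 10 mm forehead marker. A minimal sketch, assuming the marker is segmented as a filled disc whose pixel area is known, so that its equivalent-circle diameter 2·sqrt(A/π) gives pixels per millimeter; the article does not state whether the diameter was derived from the area, the width or a fitted circle, and the function names are hypothetical.

```python
import math

MARKER_DIAMETER_MM = 10.0  # diameter of the circular forehead marker


def pixels_per_mm(marker_area_px: float, diameter_mm: float = MARKER_DIAMETER_MM) -> float:
    """Scale factor from the pixel area of the segmented marker (treated as a disc)."""
    diameter_px = 2.0 * math.sqrt(marker_area_px / math.pi)  # equivalent-circle diameter
    return diameter_px / diameter_mm


def px_to_mm(length_px: float, ratio_px_per_mm: float) -> float:
    """Convert a length measured in pixels into millimeters."""
    return length_px / ratio_px_per_mm


ratio = pixels_per_mm(math.pi * 50.0 ** 2)  # a marker 100 px across
print(round(ratio, 6), round(px_to_mm(12.0, ratio), 6))  # 10.0 1.2
```

Using the area rather than a single diameter averages over the whole marker boundary, which makes the scale less sensitive to a few misclassified edge pixels.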

Step 5: Steps 3 and 4 were performed twice, and the mean of the two values was taken as the automated measurement of IOOA.

Statistical analyses

The accuracy of automated eye segmentation was evaluated using Dice coefficients by comparing automated with manual eye segmentation of the test set. Differences in photographic measurements of IOOA across eyes with different clinical gradings were analyzed using one-way ANOVA followed by the Bonferroni multiple comparison test. Agreement between the different methods of evaluating IOOA, and between the two repeated measurements of the automated photographic method, was evaluated using Kendall’s tau correlation coefficient (for comparisons with the ordinal clinical gradings) or intraclass correlation coefficients (ICCs; for comparisons between continuous photographic measurements). Agreement was considered excellent if ICC >0.80, substantial if 0.60< ICC ≤0.80, moderate if 0.40< ICC ≤0.60, and poor if ICC ≤0.40 (23). In addition, Bland-Altman plots and the mean absolute deviation (MAD) were assessed between the two photographic methods and between the two repeated automated measurements. All statistical analyses were performed using SPSS (version 23; IBM Corporation, Chicago, IL, USA). P<0.05 was considered statistically significant.
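For readers reproducing the agreement statistics, the Dice coefficient, the Bland-Altman bias with 95% limits of agreement (bias ± 1.96 SD of the paired differences) and the MAD (mean ± SD of the absolute paired differences) can be computed as below. This is a NumPy sketch of the standard definitions, not the SPSS procedures used in the study; using the sample standard deviation (ddof=1) is an assumption. Kendall's tau and the ICCs are left to standard statistical software.

```python
import numpy as np


def dice(pred_mask, true_mask) -> float:
    """Dice coefficient 2|A∩B| / (|A| + |B|) between two binary masks."""
    pred = np.asarray(pred_mask, dtype=bool)
    true = np.asarray(true_mask, dtype=bool)
    total = pred.sum() + true.sum()
    return 1.0 if total == 0 else float(2.0 * np.logical_and(pred, true).sum() / total)


def bland_altman(a, b):
    """Bias (mean of a - b) and 95% limits of agreement (bias ± 1.96 SD)."""
    diff = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    bias = float(diff.mean())
    sd = float(diff.std(ddof=1))
    return bias, (bias - 1.96 * sd, bias + 1.96 * sd)


def mean_absolute_deviation(a, b):
    """Mean ± SD of the absolute paired differences |a - b|."""
    abs_diff = np.abs(np.asarray(a, dtype=float) - np.asarray(b, dtype=float))
    return float(abs_diff.mean()), float(abs_diff.std(ddof=1))


print(dice([1, 1, 0, 0], [0, 1, 1, 0]))               # 0.5
print(mean_absolute_deviation([1, 2, 3], [2, 2, 5]))  # (1.0, 1.0)
```

The bias keeps the sign of the differences (systematic offset), whereas the MAD discards it and summarizes the typical size of disagreement, which is why the two are reported together.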

Sample size and time span of the study

The minimum sample size was calculated using PASS software (version 2021; NCSS, Kaysville, UT, USA). With a pre-specified alpha of 0.05, a pre-specified confidence interval width of 0.20, and a minimum acceptable ICC of 0.70, at least 103 eyes were required (24). This study enrolled a consecutive series of patients over about 7 months, from September 2021 to March 2022, to collect the required number of eyes.

Ethical statement

The study was conducted in accordance with the Declaration of Helsinki (as revised in 2013). The study was approved by the institutional ethics board of the Second Affiliated Hospital of Zhejiang University, School of Medicine, and informed consent was obtained from all individual participants.

Results

In total, 106 eyes of 72 patients (24 males and 48 females) with IOOA were included in this study. The flow of participants through the study is shown in Figure S3. Of 77 patients diagnosed with IOOA, 2 were excluded because of previous surgery on the IO muscle, 1 because of simultaneous dissociated vertical deviation, and 2 because of simultaneous vertical strabismus. The mean age of the patients was 17.6±12.7 years (range, 4 to 56 years). All patients were Asian. Sixty-six patients had primary IOOA associated with concomitant esotropia (14 patients) or concomitant exotropia (52 patients), while 6 patients had secondary IOOA attributable to superior oblique palsy. Of the 106 eyes with IOOA, 69, 24, 10, and 3 eyes were clinically graded as +1, +2, +3, and +4, respectively. Patient characteristics are summarized in Table S1. The Dice coefficients for automated cornea and eyelid segmentation in the test set were 0.956 and 0.950, respectively. Only 4.7% of the automatically fitted ellipses of the corneal limbus required manual adjustment.

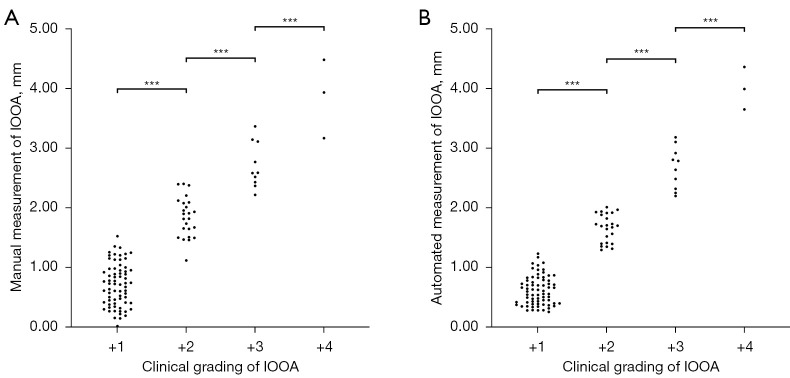

One-way ANOVA revealed significant differences in photographic measurements of IOOA across eyes with different clinical gradings (manual photographic method: F=179.14, P<0.001; automated photographic method: F=375.45, P<0.001). Multiple comparisons demonstrated higher photographic measurements in eyes with higher clinical gradings (Figure 3, Table 1). There were significant correlations between manual photographic measurement and clinical grading (Kendall’s tau: 0.709, 95% confidence interval: 0.642 to 0.766; P<0.001), and between automated photographic measurement and clinical grading (Kendall’s tau: 0.721; 95% confidence interval: 0.652 to 0.779; P<0.001).

Figure 3.

Scatterplots of IOOA measurements using two photographic methods across eyes with different clinical gradings. (A) Measurements using manual photographic method. (B) Measurements using automated photographic method. ***P<0.001 in multiple comparison test. IOOA, inferior oblique overaction.

Table 1. Manual and automated photographic measurements of IOOA across eyes with different clinical gradings.

| Clinical grading | Manual photographic measurement (mm) | Automated photographic measurement (mm) |
|---|---|---|
| +1 | 0.71±0.36 | 0.62±0.24 |
| +2 | 1.85±0.33 | 1.67±0.23 |
| +3 | 2.71±0.38 | 2.68±0.35 |
| +4 | 3.86±0.66 | 4.02±0.36 |

Data are presented as mean ± SD. IOOA, inferior oblique overaction; SD, standard deviation.

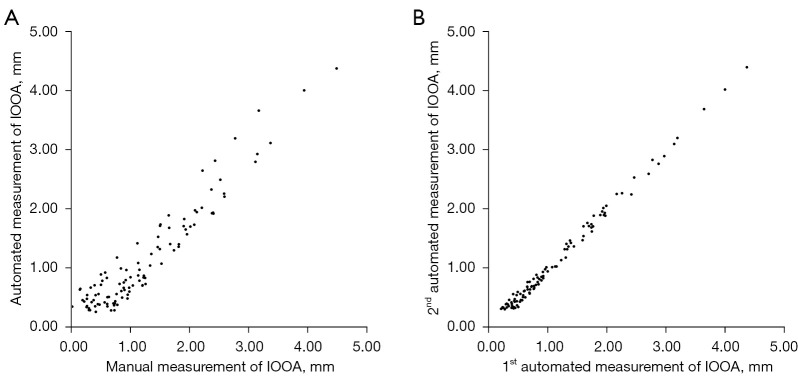

The scatterplots of IOOA measurements using the two photographic methods, and of the two repeated automated measurements, are shown in Figure 4. There were significant correlations between automated and manual photographic measurements (ICC: 0.975; 95% confidence interval: 0.963 to 0.983; P<0.001), and between repeated automated photographic measurements (ICC: 0.998; 95% confidence interval: 0.997 to 0.999; P<0.001). Bland-Altman analyses (Figure 5) showed biases of 0.10 [95% limits of agreement (LoA): −0.45 to 0.64] mm between automated and manual photographic measurements, and 0.01 (95% LoA: −0.14 to 0.16) mm between repeated automated photographic measurements. The Bland-Altman plots of the difference against the average of the two measurements showed no relationship between the discrepancy and the magnitude of the measurement, suggesting that the 95% LoA were appropriate. The mean values of automated and manual photographic measurements are shown in Table 2. The MADs were 0.26±0.14 mm between automated and manual photographic measurements and 0.07±0.04 mm between repeated automated photographic measurements, indicating excellent inter- and intra-method agreement.

Figure 4.

Scatterplots of two measurements of IOOA. (A) Measurements using manual and automated photographic methods. (B) Repeated automated photographic measurements. IOOA, inferior oblique overaction.

Figure 5.

Bland-Altman plots analyzing the agreement between two measurements of IOOA. (A) Agreement between measurements using manual and automated photographic methods. (B) Agreement between repeated automated photographic measurements. Broken lines indicate the mean difference (µ); dotted lines indicate the 95% LoA (µ ± 1.96σ). IOOA, inferior oblique overaction; LoA, limits of agreement.

Table 2. Values and MADs of IOOA measurements using photographic methods.

| Photographic methods | Mean ± SD (mm) | MAD ± SD (mm) |
|---|---|---|
| Inter-method | | 0.26±0.14 |
| Manual | 1.25±0.89 | |
| Automated | 1.15±0.88 | |
| Intra-method | | 0.07±0.04 |
| Automated (1st) | 1.15±0.88 | |
| Automated (2nd) | 1.15±0.87 | |

MAD, mean absolute deviation; IOOA, inferior oblique overaction; SD, standard deviation.

Discussion

In this study, we proposed a deep learning-based image analysis method to automatically measure IOOA using photographs obtained in the adducted position, which was objective, accurate and repeatable. Higher values of photographic measurements of IOOA were found in eyes with higher clinical gradings. The automated photographic measurements were in excellent agreement with the manual photographic measurements and clinical gradings. Our image analysis system with human supervision could be considered a reliable technique for measuring IOOA in clinical practice.

IOOA is a common ocular motility disorder that can cause socially noticeable hypertropia of the affected eye and/or symptomatic diplopia and asthenopia (25). Surgical intervention is often needed to improve alignment and/or relieve symptoms. Accurate assessment of IOOA is important for diagnosis, surgical planning, and evaluation of surgical outcomes, especially when a patient visits different clinicians at different times. Traditionally, IOOA is subjectively graded using simple scales (e.g., +1 to +4) (7). Clinical grading depends on the clinician’s experience and is not suitable for quantification. Many devices have been used to quantify ocular movements, but they are expensive (e.g., scleral search coil), time-consuming (e.g., manual perimeter), or limited in measurement range (e.g., synoptophore) (26). In strabismus clinics, photographs of the cardinal positions of gaze are often recorded in routine practice. Photographic quantification of IOOA has clear advantages over the above-mentioned techniques, such as lower cost, easier acquisition, and a wider measurement range.

IOOA is characterized by abnormal elevation in adduction (27,28). Contralateral gaze is commonly used as the diagnostic position for IOOA (7,9). Several efforts have been made to quantitatively assess IOOA using photographs. Lim et al. manually overlaid a semitransparent photograph in contralateral gaze on the photograph in primary gaze. The overlapping image was processed to identify the margin of the corneal limbus, and the angle corresponding to the direction of ocular movement was measured as the degree of IOOA (9). Yoon et al. drew a full ellipse to extrapolate the corneal limbus and measured the rotation angle of the eyeball in both eyes based on a three-dimensional eye model; an angular difference of 5 degrees was defined as 1 unit of overaction (10). Rautha et al. used the photo rotation tool of the iPhone Photos app to evaluate IO muscle function, overlaying a grid image on the photograph and positioning the grade line on the inferior corneal limbus of the abducted (fixing) eye in supra-levoversion and supra-dextroversion (11). However, these studies still required manual processing of photographs, which leads to inefficiency and unavoidable human error.

More recently, a few studies have attempted to measure ocular deviation or ocular movement using deep learning-based image processing. Kang et al. used U-Net to segment the sclera and limbus, and registered the nine gaze images to the primary gaze image. However, their method fitted a circle rather than an ellipse to recognize the limbus, and the algorithm was tested on only two patients with cranial nerve palsy (29). In our previous study, ocular movement was measured on each gaze image after eye segmentation according to a modified limbus test, which is much simpler than measurement on overlapped images (14). However, the modified limbus test is based on the distance from the limbus to the eyelid margin and is limited to individuals with normal eyelids (15). The current study was therefore designed to provide a method with broader applicability: the amount of IOOA was measured by comparing the height of the inferior corneal limbus of both eyes in contralateral gaze after eye segmentation, without any restriction regarding eyelid disease.

There are many existing methods for facial feature detection, such as Fisherfaces (30), FaceNet (31), and MediaPipe Face Mesh (32). However, these methods are suited only to full-face recognition. During the COVID-19 pandemic, the recruited patients usually wore masks. This study therefore proposed GAR2U-Net as a new method for eye location, which performed better in patients wearing masks. Traditional U-Net algorithms are sensitive to occlusion by eyelashes or shadows when segmenting eyes. In our pilot study, we compared the eye segmentation performance of GAR2U-Net with that of other networks, such as U-Net, recurrent residual U-Net (33), and attention U-Net (34), and GAR2U-Net, a novel recurrent residual CNN with a GAG, performed better than these networks. Recurrent residual convolutional units integrate contextual information better than basic convolutional units. Compared with the original skip connection, the GAG greatly enlarges the receptive field, so that each layer can collect low- and high-level features simultaneously, substantially improving the network’s reasoning ability. In most cases in this study, especially eyes with mild-to-moderate IOOA, automated ellipse fitting appeared more precise than manual ellipse fitting. However, in a few cases, especially eyes with severe IOOA, it was difficult to fit an ellipse automatically when only a tiny part of the cornea could be segmented, and manual adjustment was usually needed.

There are several limitations to the present study. First, deviation in the adducted position was not measured with the alternate prism cover test; the correlation between photographic measurement of IOOA and hypertropia in the adducted position could be explored further. Second, it was difficult to fit a full ellipse to the corneal limbus in a few eyes with little exposed corneal area, so human supervision of the fitted ellipses was needed in our method to guarantee measurement accuracy. Third, the sample was unbalanced: this study included more eyes with mild-to-moderate IOOA than eyes with severe IOOA. Fourth, the present study measured IOOA on two-dimensional photographs, whereas real eyes are three-dimensional. Further study would be required to adapt the current algorithm to a three-dimensional eye model.

In conclusion, this study provides a new image analysis technique for automatically measuring IOOA, which showed excellent agreement with manual measurements and clinical gradings. Because it requires only photographs, our image analysis system with human supervision could be easily implemented in clinical practice. It may also facilitate telemedicine, because images are simple to transmit.

Supplementary

The article’s supplementary files as

Acknowledgments

The authors thank Christian Johnson, a native English speaker, for language editing of the manuscript.

Funding: This work was supported by National Natural Science Foundation of China (No. 82000948 to L Lou, No. U20A20386 to J Ye, No. 81870635 to J Ye); National Key Research and Development Program of China (No. 2019YFC0118400 to J Ye); and Zhejiang Provincial Key Research and Development Plan (No. 2019C03020 to J Ye).

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. The study was conducted in accordance with the Declaration of Helsinki (as revised in 2013). The study was approved by the institutional ethics board of the Second Affiliated Hospital of Zhejiang University, School of Medicine, and informed consent was obtained from all individual participants.

Footnotes

Reporting Checklist: The authors have completed the STARD reporting checklist. Available at https://qims.amegroups.com/article/view/10.21037/qims-22-467/rc

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at https://qims.amegroups.com/article/view/10.21037/qims-22-467/coif). The authors have no conflicts of interest to declare.

References

1. Caldeira JA. Some clinical characteristics of V-pattern exotropia and surgical outcome after bilateral recession of the inferior oblique muscle: a retrospective study of 22 consecutive patients and a comparison with V-pattern esotropia. Binocul Vis Strabismus Q 2004;19:139-50.

2. Bowling B. Kanski's clinical ophthalmology. Edinburgh: Elsevier, 2016.

3. Ozsoy E, Gunduz A, Ozturk E. Inferior Oblique Muscle Overaction: Clinical Features and Surgical Management. J Ophthalmol 2019;2019:9713189. doi: 10.1155/2019/9713189

4. Sieck EG, Madabhushi A, Patnaik JL, Jung JL, Lynch AM, Singh JK. Comparison of Different Surgical Approaches to Inferior Oblique Overaction. J Binocul Vis Ocul Motil 2020;70:89-93. doi: 10.1080/2576117X.2020.1776566

5. Mohamed AA, Yousef H, Mounir A. Surgical outcomes of inferior oblique muscle weakening procedures for eliminating inferior oblique muscle overaction: a prospective randomized study. Delta J Ophthalmol 2019;20:88-94.

6. Kasem M, Metwally H, El-Adawy IT, Abdelhameed AG. Retro-equatorial inferior oblique myopexy for treatment of inferior oblique overaction. Graefes Arch Clin Exp Ophthalmol 2020;258:1991-7. doi: 10.1007/s00417-020-04742-4

7. Vivian AJ, Morris RJ. Diagrammatic representation of strabismus. Eye (Lond) 1993;7:565-71. doi: 10.1038/eye.1993.123

8. Wilson ME, Saunders RA, Trivedi RH. Pediatric ophthalmology: current thought and a practical guide. Berlin: Springer Berlin Heidelberg, 2009.

9. Lim HW, Lee JW, Hong E, Song Y, Kang MH, Seong M, Cho HY, Oh SY. Quantitative assessment of inferior oblique muscle overaction using photographs of the cardinal positions of gaze. Am J Ophthalmol 2014;158:793-9.e2. doi: 10.1016/j.ajo.2014.06.016

10. Yoon CK, Yang HK, Kim JS, Hwang JM. An objective photographic analysis of ocular oblique muscle dysfunction. Am J Ophthalmol 2014;158:924-31. doi: 10.1016/j.ajo.2014.07.035

11. Rautha YVBL, Carvalho LEMR, Vadas MFG, Uesugui CF, Sakano LY, Barcellos RB. Smartphone as a tool for evaluating oblique muscle dysfunction. Arq Bras Oftalmol 2022;85:263-8. doi: 10.5935/0004-2749.20220041

12. Schmidt-Erfurth U, Sadeghipour A, Gerendas BS, Waldstein SM, Bogunović H. Artificial intelligence in retina. Prog Retin Eye Res 2018;67:1-29. doi: 10.1016/j.preteyeres.2018.07.004

13. Cao J, Lou L, You K, Gao Z, Jin K, Shao J, Ye J. A Novel Automatic Morphologic Analysis of Eyelids Based on Deep Learning Methods. Curr Eye Res 2021;46:1495-502. doi: 10.1080/02713683.2021.1908569

14. Lou L, Sun Y, Huang X, Jin K, Tang X, Xu Z, Zhang Q, Wang Y, Ye J. Automated Measurement of Ocular Movements Using Deep Learning-Based Image Analysis. Curr Eye Res 2022;47:1346-53. doi: 10.1080/02713683.2022.2053165

15. Mai GH. Grading system for hyperfunction and hypofunction of extraocular muscles. Chinese Journal of Ophthalmology 2005;41:663-6.

16. Liu Z, Lin Y, Cao Y, Hu H, Wei Y, Zhang Z, Lin S, Guo B. Swin transformer: Hierarchical vision transformer using shifted windows. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. 2021.

17. Liu Z, Luo P, Wang X, Tang X. Deep learning face attributes in the wild. In: 2015 IEEE International Conference on Computer Vision. New York: IEEE, 2015:3730-8.

18. Karras T, Aila T, Laine S, Lehtinen J. Progressive growing of GANs for improved quality, stability, and variation. arXiv preprint 2017. arXiv:1710.10196.

19. Harder J. Lasso Selection Tools. In: Harder J. Accurate Layer Selections Using Photoshop’s Selection Tools. Berkeley: Apress, 2022:185-212.

20. Coudray N, Buessler JL, Urban JP. Robust threshold estimation for images with unimodal histograms. Pattern Recogn Lett 2010;31:1010-9. doi: 10.1016/j.patrec.2009.12.025

21. Kanatani K, Sugaya Y, Kanazawa Y. Ellipse fitting for computer vision: implementation and applications. Synthesis Lectures on Computer Vision 2016;6:1-141. doi: 10.1007/978-3-031-01815-2

22. Mo S, Gan H, Zhang R, Yan Y, Liu X. A novel edge detection method based on adaptive threshold. In: 2020 IEEE 5th Information Technology and Mechatronics Engineering Conference (ITOEC). IEEE, 2020:1223-6.

23. Bunce C. Correlation, agreement, and Bland-Altman analysis: statistical analysis of method comparison studies. Am J Ophthalmol 2009;148:4-6. doi: 10.1016/j.ajo.2008.09.032

24. Bujang MA, Baharum N. A simplified guide to determination of sample size requirements for estimating the value of intraclass correlation coefficient: a review. Arch Orofac Sci 2017;12:1-11.

25. Shipman T, Burke J. Unilateral inferior oblique muscle myectomy and recession in the treatment of inferior oblique muscle overaction: a longitudinal study. Eye (Lond) 2003;17:1013-8. doi: 10.1038/sj.eye.6700488

26. Hanif S, Rowe FJ, O’connor AR. A comparative review of methods to record ocular rotations. Br Ir Orthopt J 2015;6:47-51. doi: 10.22599/bioj.8

27. von Noorden GK, Campos EC. Binocular vision and ocular motility: theory and management of strabismus. St. Louis: Mosby, 2002:386-7.

28. Kushner BJ. Multiple mechanisms of extraocular muscle "overaction". Arch Ophthalmol 2006;124:680-8. doi: 10.1001/archopht.124.5.680

29. Kang YC, Yang HK, Kim YJ, Hwang JM, Kim KG. Automated Mathematical Algorithm for Quantitative Measurement of Strabismus Based on Photographs of Nine Cardinal Gaze Positions. Biomed Res Int 2022;2022:9840494. doi: 10.1155/2022/9840494

30. Zhang CY, Ruan QQ. Face Recognition Using L-Fisherfaces. Journal of Information Science & Engineering 2010;26:1525-37.

31. Schroff F, Kalenichenko D, Philbin J. Facenet: A unified embedding for face recognition and clustering. In: Proceedings of the IEEE conference on computer vision and pattern recognition. 2015:815-23.

32. Lugaresi C, Tang J, Nash H, McClanahan C, Uboweja E, Hays M, Zhang F, Chang CL, Yong MG, Lee J, Chang WT, Hua W, Georg M, Grundmann M. Mediapipe: A framework for building perception pipelines. arXiv preprint 2019. arXiv:1906.08172.

33. Alom MZ, Yakopcic C, Hasan M, Taha TM, Asari VK. Recurrent residual U-Net for medical image segmentation. J Med Imaging (Bellingham) 2019;6:014006. doi: 10.1117/1.JMI.6.1.014006

34. Oktay O, Schlemper J, Folgoc LL, Lee M, Heinrich M, Misawa K, Mori K, McDonagh S, Hammerla NY, Kainz B, Glocker B, Rueckert D. Attention u-net: Learning where to look for the pancreas. arXiv preprint 2018. arXiv:1804.03999.
