Abstract

Simple Summary

In this study, we investigated the use of deep learning for classifying whole-slide images of urine liquid-based cytology specimens as neoplastic or non-neoplastic (negative). We trained models on a total of 786 whole-slide images using four different approaches and evaluated them on 750 whole-slide images. The best model achieved good classification performance, demonstrating the promising potential of such models for aiding the screening process for urothelial carcinoma in routine clinical practice.

Abstract

Urinary cytology is an essential diagnostic method in routine urological clinical practice. Liquid-based cytology (LBC) is commonly used for urothelial carcinoma screening in routine clinical cytodiagnosis because of its high cellular yield. Since conventional screening by cytoscreeners and cytopathologists using microscopes is limited by human resources, it is important to integrate new deep learning methods that can automatically and rapidly diagnose a large number of specimens without delay. The goal of this study was to investigate the use of deep learning models for the classification of urine LBC whole-slide images (WSIs) as neoplastic or non-neoplastic (negative). We trained deep learning models on 786 WSIs using transfer learning, fully supervised learning, and weakly supervised learning approaches. We evaluated the trained models on two test sets with a combined total of 750 WSIs, one of which was representative of the clinical distribution of neoplastic cases. The best model achieved an area under the curve for diagnosis in the range of 0.984–0.990, demonstrating the promising potential of our model for aiding urine cytodiagnostic processes.

Keywords: urothelial carcinoma, urine, liquid-based cytology, deep learning, cancer screening, whole slide image

1. Introduction

In routine clinical practice, clinicians obtain urinary tract cytology specimens for the screening of urothelial carcinoma [1,2]. Urine specimens play a critical role in the clinical evaluation of patients who have clinical signs and symptoms (e.g., haematuria and painful urination) suggestive of pathological changes within the urinary tract [3]. Urothelial carcinoma is the most common malignant neoplasm detected by urine cytology, and its most common site of origin is the bladder. According to the Global Cancer Statistics 2020 [4], bladder cancer is the tenth most commonly diagnosed cancer, with 573,278 new cases and 212,536 deaths worldwide in 2020. Approximately 90% of bladder cancers are urothelial in origin, and primary adenocarcinoma of the bladder is rare [3,5,6]. Bladder cancer often presents insidiously. Haematuria is the most common presentation of bladder cancer; it is typically intermittent, frank, painless, and at times present throughout micturition [3]. Delayed diagnosis of urothelial carcinoma is associated with high-grade muscle invasion, which has the potential to progress rapidly, and with cancer metastasis [3]. Cystoscopy with biopsy is the gold standard for the diagnosis of urothelial carcinoma in clinical practice; however, it is invasive and relatively inconvenient as a follow-up monitoring approach [7]. It has been reported that 48.6% of biopsy-proven low-grade urothelial carcinomas had a urine cytodiagnosis of atypical or suspicious for neoplasia, which could indicate that existing urine cytology screening and surveillance systems are accurate in diagnosing urothelial carcinoma [8]. Therefore, cytological screening for urothelial carcinoma in urine specimens plays a key role in early-stage cancer detection and treatment in routine clinical practice [9,10].

Liquid-based cytology (LBC) was developed as an alternative to conventional smear cytology in the 1990s [11]. LBC has several advantages in preparation and diagnostic process compared with conventional smears [12,13,14,15]. The LBC technique preserves the cells of interest in a liquid medium and removes most of the debris, blood, and exudate by either filtering or density gradient centrifugation [16,17]. LBC provides automated and standardized processing techniques that produce a uniformly distributed and cell-enriched slide [18,19,20]. Moreover, residual specimens can be used for additional investigations (e.g., immunocytochemistry) [21,22,23]. ThinPrep (Hologic, Inc., Marlborough, MA, USA) and SurePath (Becton Dickinson, Inc., Franklin Lakes, NJ, USA) for LBC specimen preparation have been approved by the US Food and Drug Administration (FDA). Compared with conventional smear cytology, LBC has fewer background elements, provides better cell preservation, and has a higher satisfaction rate [24]. In terms of sensitivity, LBC has been reported to achieve 0.58 (CI: 0.51–0.65) and conventional smears 0.38 [7,11]. The high diagnostic efficiency of LBC is understandable given its cell collection rate. It has been shown that the accuracy of diagnoses made with the LBC method can be increased by understanding the characteristics of the cell morphology in suspicious cases (e.g., high-grade urothelial carcinoma and low-grade urothelial carcinoma [25], and in other malignancies [26,27]). LBC specimens performed significantly better in urinary cytology when evaluating malignant categories, especially high-grade urothelial carcinoma (HGUC), which facilitates a more accurate diagnosis than conventional preparations [15]. Moreover, from the standpoint of efficiency, preparation and screening times were 2.25 and 1.33–2.00 times greater, respectively, when using LBC (ThinPrep) compared with cytocentrifugation (conventional smear cytology) [28]. Therefore, computational screening (cytodiagnostic) aids for urine LBC specimens, in the form of medical image analysis, would be of great benefit for urothelial carcinoma screening.

Whole-slide images (WSIs) are digitisations of conventional glass slides obtained via specialised scanning devices (WSI scanners), and they are considered to be comparable to microscopy for primary diagnosis [29]. It has been reported that evaluation of WSIs is generally equivalent to using conventional glass slides under microscopy [30]. The use of WSIs has, to some degree, met the goals of saving pathologists' working time and providing high-quality pathological images with convenient access through easily navigable online viewing software, which saves resources and costs by eliminating glass slide shipping expenses [30]. The advent of WSIs led to the application of medical image analysis, machine learning, and deep learning techniques for aiding pathologists in inspecting WSIs [31]. Importantly, routine scanning of LBC slides as single-layer WSIs would be suitable for further high-throughput analysis (e.g., automated image-based cytological screening and medical image analysis) [20]. Indeed, deep learning approaches and their clinical application to classifying cytopathological changes (e.g., neoplastic transformation) have been reported in recent years [32,33,34,35,36,37,38,39,40,41].

In this study, we trained deep learning models based on convolutional neural networks (CNN) using a training dataset of 786 urine LBC (ThinPrep) WSIs. We evaluated the model on two test sets with a combined total of 750 WSIs, achieving ROC area under the curve (AUC) for WSI neoplastic classification in the range of 0.984–0.990.

2. Materials and Methods

2.1. Clinical Cases and Cytopathological Records

In this retrospective study, a total of 1556 LBC ThinPrep Pap test (Hologic, Inc.) conventionally prepared cytopathological glass slide specimens of human urine cytology were collected from a private clinical laboratory in Japan after routine cytopathological review of those glass slides by cytoscreeners and pathologists. The private clinical laboratory that provided the urine LBC glass slides was anonymized due to a confidentiality agreement. The LBC specimens were selected randomly to reflect a real clinical setting as much as possible. We also collected LBC specimens so as to compile test sets with an equal balance and a clinical balance of negative and neoplastic cases. The equal balance test set consisted of 50% negative and 50% neoplastic urine LBC cases (Table 1). The clinical balance test set consisted of a ratio of 10 (negative) to 1 (neoplastic) urine LBC cases, based on a real clinical setting as reported in the statistics on cytodiagnosis for 2016 to 2021 by the Japanese Society of Clinical Cytology (https://jscc.or.jp/, accessed on 27 January 2022). Prior to the start of the experiments, the cytoscreeners and pathologists excluded inadequate LBC specimens (n = 21) that had inadequate cellularity or significant artifacts such as dust or ink markings. All WSIs were scanned at a magnification of ×20 using the same Leica Aperio AT2 Digital Whole Slide Scanner (Leica Biosystems, Tokyo, Japan) and were saved in the SVS file format with JPEG2000 compression. Each WSI was observed by at least two cytoscreeners or pathologists to confirm the diagnosis, with the final checking and verification performed by a senior cytoscreener or pathologist. We confirmed that cytoscreeners and pathologists were able to classify the WSIs (Table 1) from visual inspection of the LBC ThinPrep Pap test (Hologic, Inc.) stained WSIs alone.

Table 1.

Datasets.

| | Training | Validation | Test (Equal Balance) | Test (Clinical Balance) | Total |
|---|---|---|---|---|---|
| Negative | 724 | 10 | 100 | 500 | 1334 |
| Class I | 360 | 5 | 50 | 250 | 665 |
| Class II | 364 | 5 | 50 | 250 | 669 |
| Neoplastic | 62 | 10 | 100 | 50 | 222 |
| Class III | 38 | 4 | 48 | 20 | 110 |
| Class IV | 11 | 3 | 23 | 14 | 51 |
| Class V | 13 | 3 | 29 | 16 | 61 |
| Total | 786 | 20 | 200 | 550 | 1556 |

In this study, we classified urine LBC WSIs into two classes: negative and neoplastic (Table 1). Negative WSIs were those diagnosed as Class I or Class II, and neoplastic WSIs were those diagnosed as Class III, Class IV, or Class V in routine clinical cytodiagnosis (Table 1) (Class I: negative for HGUC; Class II: negative for HGUC with reactive urothelial epithelial cells; Class III: atypical urothelial epithelial cells and suspicious for LGUC; Class IV: LGUC and suspicious for HGUC; Class V: HGUC). The cytoscreeners and pathologists had to agree on whether the output class was negative or neoplastic for each urine LBC WSI.
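The grouping of cytodiagnostic classes into the two output labels can be illustrated with a minimal sketch. The function and variable names below are hypothetical and are not taken from the study's code; the per-class counts are the training-set values from Table 1.

```python
# Binary grouping of the routine cytodiagnostic classes, as described in the text.
NEGATIVE_CLASSES = {"I", "II"}
NEOPLASTIC_CLASSES = {"III", "IV", "V"}


def to_binary_label(cyto_class):
    """Return 'negative' or 'neoplastic' for a Class I-V cytodiagnosis."""
    if cyto_class in NEGATIVE_CLASSES:
        return "negative"
    if cyto_class in NEOPLASTIC_CLASSES:
        return "neoplastic"
    raise ValueError(f"unknown cytodiagnostic class: {cyto_class!r}")


def binary_counts(counts_per_class):
    """Aggregate per-class WSI counts into negative/neoplastic totals."""
    totals = {"negative": 0, "neoplastic": 0}
    for cyto_class, n in counts_per_class.items():
        totals[to_binary_label(cyto_class)] += n
    return totals


# Training-set counts per class, copied from Table 1.
TRAINING_COUNTS = {"I": 360, "II": 364, "III": 38, "IV": 11, "V": 13}
print(binary_counts(TRAINING_COUNTS))
```

Applied to the training column of Table 1, the totals match the Negative and Neoplastic rows (724 and 62).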

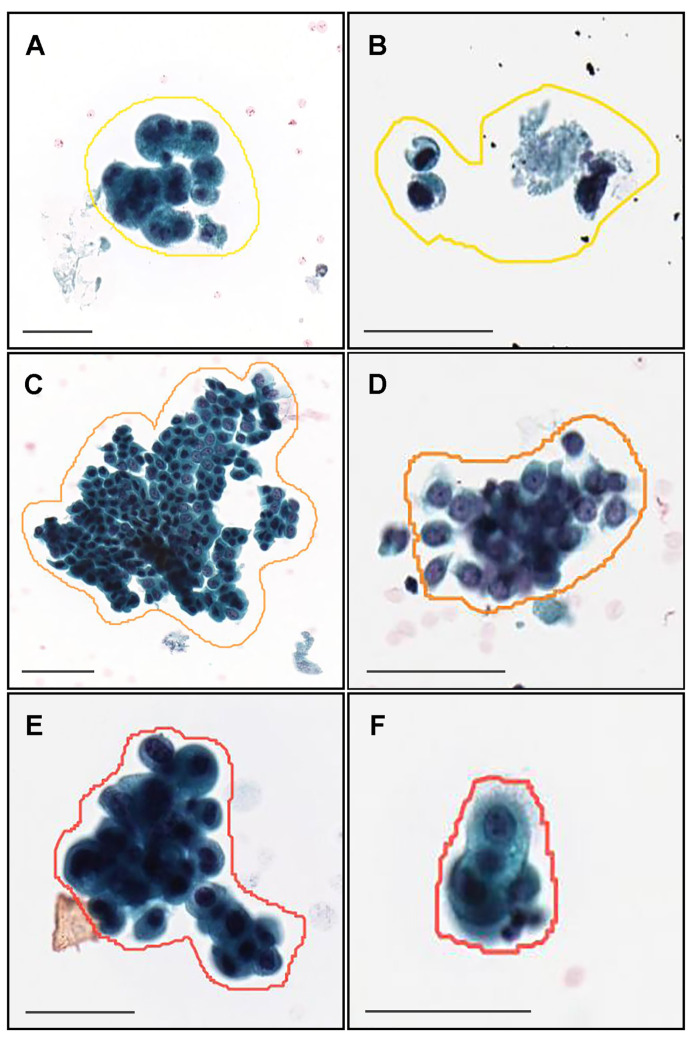

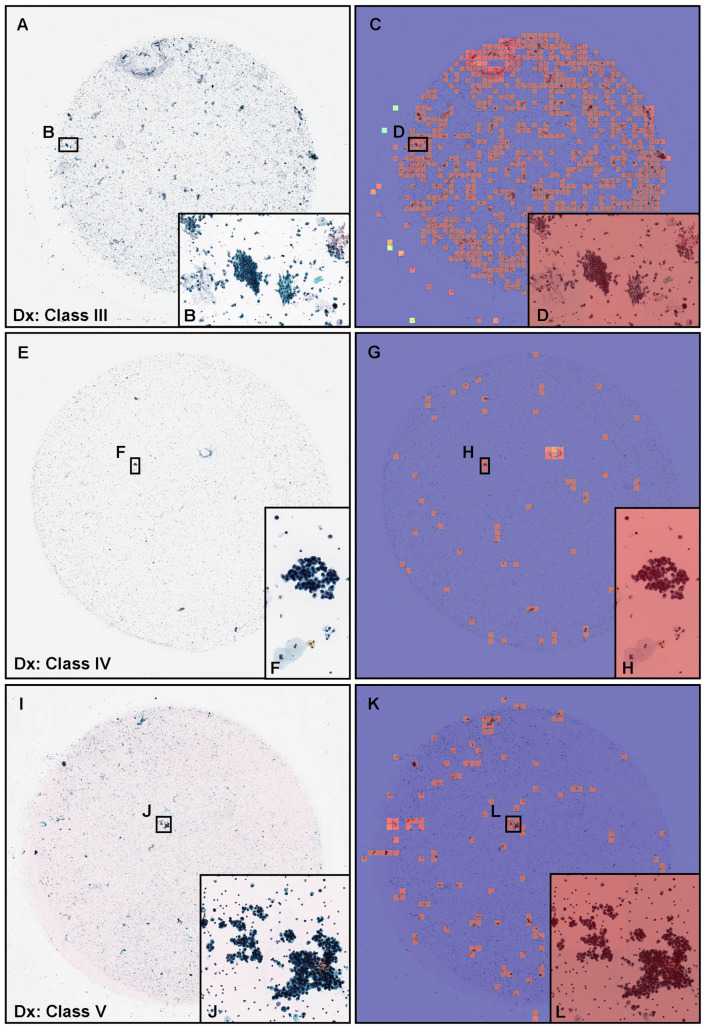

2.2. Annotation

A cohort of 62 training cases and 10 validation cases was manually annotated by experienced pathologists (Table 1). Coarse polygonal annotations were drawn free-hand using an in-house online tool developed by customising the open-source OpenSeadragon tool at https://openseadragon.github.io/ (accessed on 25 July 2021), which is a web-based viewer for zoomable images. On average, the cytoscreeners and pathologists manually annotated 180 cells (or cellular clusters) per WSI. Annotated neoplastic WSIs comprised the Class III, Class IV, and Class V cytodiagnostic classes; Class I and Class II WSIs were not annotated (Table 1). We set three annotation labels for neoplastic urothelial epithelial cells: atypical cell, low-grade urothelial carcinoma (LGUC) cell, and high-grade urothelial carcinoma (HGUC) cell (Table 2 and Figure 1). For example, on the Class III (Figure 1A,B), Class IV (Figure 1C,D), and Class V (Figure 1E,F) WSIs, cytoscreeners and pathologists drew annotations around the atypical cells (Figure 1A,B), LGUC cells (Figure 1C,D), and HGUC cells (Figure 1E,F) based on representative neoplastic urothelial epithelial cell morphology (e.g., hyperchromatism, irregular chromatin distribution, abnormalities of nuclear shape, increased nuclear/cytoplasmic ratio, irregular nuclear distribution, nuclear enlargement, abnormal cytoplasm, prominent nucleolus, cellular and nuclear polymorphism). A WSI classified as Class V, for example, could contain atypical cell, LGUC cell, and HGUC cell annotations. In contrast, the cytoscreeners and pathologists did not annotate areas where it was difficult to determine cytologically that the cells were neoplastic. The negative subset of the training and validation sets (Table 1) was not annotated, and the entire cell spreading areas within the WSIs were used. The average annotation time per WSI was about 90 min. Annotations performed by the cytoscreeners and pathologists were modified (if necessary) and verified by a senior cytoscreener.

Table 2.

Annotation labels and numbers of annotations.

| Annotation Label | Number of Annotations |
|---|---|
| Atypical cell | 9950 |
| Low-grade urothelial carcinoma (LGUC) cell | 1646 |
| High-grade urothelial carcinoma (HGUC) cell | 1611 |
| Total | 13,207 |

Figure 1.

Representative manually drawn annotation images for neoplastic labels on urine liquid-based cytology (LBC) whole slide images (WSIs). The atypical urothelial cells (A,B) were annotated with the atypical cell label. The suspected low-grade urothelial carcinoma (LGUC) cells (C,D) were annotated with the LGUC cell label, and the high-grade urothelial carcinoma (HGUC) cells (E,F) were annotated with the HGUC cell label. The three labels (atypical cell, LGUC cell, and HGUC cell) were grouped as the neoplastic label for fully supervised learning. Scale bars are 50 μm.

2.3. Deep Learning Models

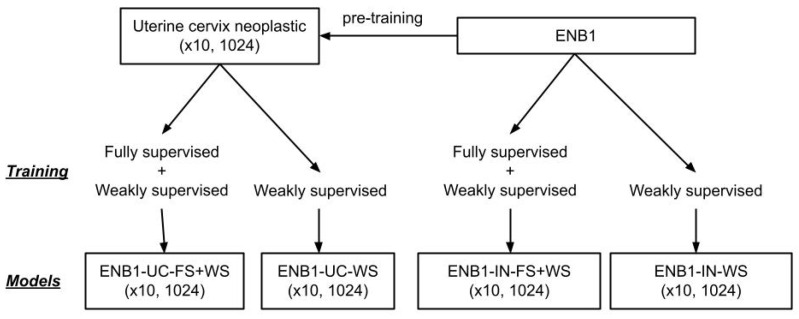

We performed training using transfer learning with fine-tuning from two different weight initialisations: ImageNet (IN) and pre-training on a uterine cervix (UC) neoplastic (×10, 1024) dataset from a previous study [35]. We used two different approaches for training during fine-tuning: fully supervised (FS) and weakly supervised (WS) learning. We used a modified version of EfficientNetB1 (ENB1) [42] with a tile size of 1024 × 1024 px. This resulted in a total of four models, all trained at magnification ×10 and tile size 1024 × 1024 px: ENB1-UC-FS+WS, ENB1-UC-WS, ENB1-IN-FS+WS, and ENB1-IN-WS. In addition, for comparison with other model architectures, we trained models using ResNet50V2 [43], DenseNet121 [44], and InceptionV3 [45]. We trained these models using the FS+WS method with initialisation from ImageNet, as we did not have access to models with these architectures trained on uterine cervix. We fine-tuned the models using the partial fine-tuning approach [46], which consists of fine-tuning only the affine parameters of the batch-normalization layers and the final classification layer (Figure 2), starting with weights pre-trained on ImageNet.

Figure 2.

Training method and deep learning models overview. We performed training using two different weight initialisations: ImageNet (IN) and pre-training on a uterine cervix (UC) neoplastic (×10, 1024) dataset from a previous study. We used two different approaches for training: fully supervised (FS) and weakly supervised (WS) learning. This resulted in a total of four models, all trained at magnification ×10 and tile size 1024 × 1024 px: ENB1-UC-FS+WS, ENB1-UC-WS, ENB1-IN-FS+WS, and ENB1-IN-WS.

Figure 2 shows an overview of the training method and trained deep learning models. The training methodology that we used in the present study was exactly the same as reported in our previous studies [47].

We performed slide tiling by extracting square tiles from tissue regions of the WSIs. We started by detecting the tissue regions in order to eliminate most of the white background. This was done by thresholding a grayscale version of the WSIs using Otsu's method [48]. For the CNN, we used the EfficientNetB1 architecture [42] with a modified input size of 1024 × 1024 px to allow a larger view; this is based on cytologists' input that they usually need to view the neighbouring cells around a given cell in order to diagnose more accurately. We used the partial fine-tuning approach [46] for tuning the CNN component.
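The background-removal step can be illustrated with a small, self-contained sketch of Otsu's method. It is not the authors' implementation: it uses only the Python standard library, assumes a downsampled 8-bit grayscale thumbnail given as nested lists, and assumes that cells are darker than the white background. The names `otsu_threshold` and `tissue_mask` are hypothetical.

```python
def otsu_threshold(gray_values, levels=256):
    """Return the threshold t that maximises the between-class variance.

    Pixels with value <= t form the dark class, the rest the bright class.
    """
    hist = [0] * levels
    for v in gray_values:
        hist[v] += 1
    total = len(gray_values)
    sum_all = sum(i * h for i, h in enumerate(hist))

    best_t, best_between = 0, -1.0
    w0 = 0    # number of pixels in the dark class so far
    sum0 = 0  # intensity sum of the dark class so far
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        if w0 == 0:
            continue  # dark class still empty
        w1 = total - w0
        if w1 == 0:
            break     # bright class empty from here on
        mean0 = sum0 / w0
        mean1 = (sum_all - sum0) / w1
        between = w0 * w1 * (mean0 - mean1) ** 2
        if between > best_between:
            best_between, best_t = between, t
    return best_t


def tissue_mask(gray_rows):
    """Mark pixels at or below the Otsu threshold as tissue (assumes dark cells)."""
    flat = [v for row in gray_rows for v in row]
    t = otsu_threshold(flat)
    return [[v <= t for v in row] for row in gray_rows]


# Toy thumbnail: white background (240) with two darker cell pixels.
thumbnail = [
    [240, 240, 240, 240],
    [240, 60, 70, 240],
    [240, 240, 240, 240],
]
mask = tissue_mask(thumbnail)
```

In practice the threshold would be computed on a low-resolution level of the WSI, and the resulting mask would be scaled up to select tile locations.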

For training and inference, we then proceeded by extracting 1024 × 1024 px tiles from the tissue regions. We performed the extraction in real-time using the OpenSlide library [49]. To perform inference on a WSI, we used a sliding window approach with a fixed-size stride of 512 × 512 px (half the tile size). This results in a grid-like output of predictions on all areas that contained cells, which then allowed us to visualise the prediction as a heatmap of probabilities that we can directly superimpose on top of the WSI. Each tile had a probability of being neoplastic; to obtain a single probability that is representative of the WSI, we computed the maximum probability from all the tiles.
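The sliding-window inference and the slide-level aggregation described above can be sketched as follows. The tile size and stride come from the text; the helper names and the handling of region edges (tiles that do not fit entirely inside the region are skipped) are assumptions made for illustration.

```python
TILE = 1024    # tile side in px, from the text
STRIDE = 512   # stride in px (half the tile size), from the text


def window_origins(width, height, tile=TILE, stride=STRIDE):
    """Top-left corners of all tiles that fit fully inside a width x height region.

    A region smaller than one tile still yields the origin (0, 0); such a tile
    would need padding, which is not shown here.
    """
    xs = range(0, max(width - tile, 0) + 1, stride)
    ys = range(0, max(height - tile, 0) + 1, stride)
    return [(x, y) for y in ys for x in xs]


def slide_probability(tile_probs):
    """Slide-level neoplastic probability: the maximum over all tile probabilities."""
    return max(tile_probs)


origins = window_origins(2048, 1536)
wsi_score = slide_probability([0.02, 0.91, 0.40])
```

With a stride of half the tile size, neighbouring tiles overlap by 50%, so every interior cell is seen in several contexts. Taking the maximum means that a single confidently neoplastic tile is enough to flag the whole WSI.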

During fully supervised learning, we maintained an equal balance of positively and negatively labelled tiles in the training batch. To do so, we extracted the positive tiles randomly from the annotated regions (annotation labels: atypical cell, LGUC cell, and HGUC cell) of neoplastic WSIs, such that at least one annotated cell was visible anywhere inside the 1024 × 1024 px tile. We extracted the negative tiles randomly from anywhere in the tissue regions of negative WSIs (Table 1). We then interleaved the positive and negative tiles to construct an equally balanced batch that was fed as input to the CNN. In addition, to reduce the number of false positives, given the large size of the WSIs, we performed hard mining of tiles: at the end of each epoch, we performed full sliding window inference on all the negative WSIs in order to adjust the random sampling probability such that false-positively predicted tiles from negative WSIs were more likely to be sampled.
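A minimal sketch of the balanced batch construction and of the hard-mining re-weighting is given below. The 0.5 threshold and the boost factor of 4 are hypothetical values chosen for illustration; the text does not state how the sampling probability was adjusted. Tiles are represented by plain identifiers.

```python
import random


def hard_mining_weights(neg_tile_probs, threshold=0.5, boost=4.0):
    """Sampling weights for negative tiles after an epoch of inference.

    Tiles from negative WSIs that the model scores at or above `threshold`
    (false positives) get a larger weight. Both parameters are hypothetical.
    """
    return [boost if p >= threshold else 1.0 for p in neg_tile_probs]


def balanced_batch(positive_tiles, negative_tiles, batch_size,
                   neg_weights=None, rng=random):
    """Interleave equally many positive (label 1) and negative (label 0) tiles."""
    half = batch_size // 2
    pos = rng.choices(positive_tiles, k=half)
    neg = rng.choices(negative_tiles, weights=neg_weights, k=half)
    batch = []
    for p, n in zip(pos, neg):
        batch.append((p, 1))
        batch.append((n, 0))
    return batch


weights = hard_mining_weights([0.1, 0.9, 0.5])
batch = balanced_batch(["p1", "p2"], ["n1", "n2", "n3"], batch_size=8,
                       neg_weights=weights, rng=random.Random(0))
```

Sampling with replacement keeps the batch balanced even though the training set contains far fewer annotated neoplastic WSIs (62) than negative WSIs (724).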

During weakly supervised learning, to maintain the balance on the WSI, we oversampled from WSIs to ensure that the model trained on tiles from all WSIs in each epoch. We then switched to hard mining tiles. To perform hard mining, we alternated between training and inference. During inference, the CNN was applied in a sliding window fashion on all the tissue regions in the WSI, and we then selected the k tiles with the highest probability for being positive. This step effectively selects tiles that are most likely to be false positives when the WSI is negative. The selected tiles were placed in a training subset, and once that subset contained N tiles, training was initiated. We used , , and a batch size of 32.
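The alternation between inference and training in the weakly supervised stage can be sketched as a top-k selection followed by accumulation into a training subset. Because the values of k and N are not legible in the text above, the values used in the example call are placeholders only. The function names are hypothetical, and each selected tile is assumed to inherit the label of its WSI.

```python
import heapq


def top_k_tiles(tile_probs, k):
    """Return the k (tile_id, probability) pairs with the highest probability."""
    return heapq.nlargest(k, tile_probs.items(), key=lambda item: item[1])


def mine_training_subset(slides, k, n):
    """Collect top-k tiles slide by slide until the subset holds n tiles.

    `slides` is an iterable of (slide_label, {tile_id: probability}) pairs.
    Each selected tile is assumed to take the label of its slide.
    """
    subset = []
    for slide_label, tile_probs in slides:
        for tile_id, _prob in top_k_tiles(tile_probs, k):
            subset.append((tile_id, slide_label))
        if len(subset) >= n:
            break
    return subset[:n]


# Placeholder values for k and n, chosen only to keep the example small.
slides = [
    (0, {"a": 0.9, "b": 0.1, "c": 0.5}),   # negative WSI
    (1, {"d": 0.2, "e": 0.8, "f": 0.3}),   # neoplastic WSI
]
subset = mine_training_subset(slides, k=2, n=4)
```

For a negative WSI, the highest-scoring tiles are by construction the model's worst false positives, which is why this selection acts as hard mining.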

During training, we performed real-time augmentation of the extracted tiles using variations of brightness, saturation, and contrast. We trained the model using the Adam optimisation algorithm [50], with the binary cross entropy loss, , , and a learning rate of . We applied a learning rate decay of every 2 epochs. We used early stopping by tracking the performance of the model on a validation set, and training was stopped automatically when there was no further improvement on the validation loss for 10 epochs. The model with the lowest validation loss was chosen as the final model.
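The early-stopping rule can be sketched as a small tracker that is framework-independent. The patience of 10 epochs comes from the text; the class name and interface are hypothetical, and saving the actual model weights at the best epoch is omitted.

```python
class EarlyStopping:
    """Track validation loss and signal when training should stop."""

    def __init__(self, patience=10):
        self.patience = patience
        self.best_loss = float("inf")
        self.best_epoch = None
        self.epochs_without_improvement = 0

    def update(self, epoch, val_loss):
        """Record one validation loss; return True when training should stop."""
        if val_loss < self.best_loss:
            # New best model: this is the checkpoint that would be kept.
            self.best_loss = val_loss
            self.best_epoch = epoch
            self.epochs_without_improvement = 0
        else:
            self.epochs_without_improvement += 1
        return self.epochs_without_improvement >= self.patience


# Toy run with a short patience so the example stays small.
stopper = EarlyStopping(patience=2)
stopped_at = None
for epoch, loss in enumerate([0.50, 0.40, 0.45, 0.42, 0.30]):
    if stopper.update(epoch, loss):
        stopped_at = epoch
        break
```

In the toy run, training stops before the last loss value is ever seen, which illustrates the trade-off a patience setting makes between training time and the chance of a late improvement.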

2.4. Software and Statistical Analysis

The deep learning models were implemented and trained using the open-source TensorFlow library [51]. AUCs were calculated in Python using the scikit-learn package [52] and plotted using matplotlib [53]. The 95% CIs of the AUCs were estimated using the bootstrap method [54] with 1000 iterations. The ROC curve was computed by varying the probability threshold from 0.0 to 1.0 and computing both the TPR and FPR at the given threshold.
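The bootstrap estimate of the AUC confidence interval can be illustrated with a standard-library sketch. This is a stand-in for the scikit-learn computation used in the study, not a reproduction of it: the AUC is computed through its rank interpretation (the probability that a random positive WSI scores higher than a random negative one), and the interval is taken from the percentiles of the resampled AUCs. The percentile method, the function names, and the fixed seed are assumptions.

```python
import random


def roc_auc(labels, scores):
    """AUC as the probability that a positive outranks a negative (ties count 0.5)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def bootstrap_ci(labels, scores, n_iter=1000, alpha=0.05, seed=0):
    """Percentile bootstrap CI of the AUC, resampling WSIs with replacement."""
    rng = random.Random(seed)
    indices = range(len(labels))
    aucs = []
    while len(aucs) < n_iter:
        sample = rng.choices(indices, k=len(labels))
        ys = [labels[i] for i in sample]
        if len(set(ys)) < 2:
            continue  # AUC is undefined without both classes; redraw
        aucs.append(roc_auc(ys, [scores[i] for i in sample]))
    aucs.sort()
    lower = aucs[int((alpha / 2) * n_iter)]
    upper = aucs[int((1 - alpha / 2) * n_iter) - 1]
    return lower, upper


auc = roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
ci = bootstrap_ci([0, 0, 1, 1], [0.1, 0.2, 0.8, 0.9], n_iter=200)
```

The pairwise AUC above is quadratic in the number of WSIs, which is acceptable for test sets of a few hundred slides; a rank-based implementation such as the one in scikit-learn scales better.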

3. Results

3.1. Insufficient AUC Performance of Whole Slide Image (WSI) Neoplastic Evaluation on Urine LBC WSIs Using Existing Series of LBC Cytopathological Model

Prior to training urine LBC neoplastic screening models, we applied an existing LBC uterine cervix neoplastic screening model [35] and histopathological classification models and evaluated their AUC performance on the urine LBC test sets (Table 1). The results are summarised in Table 3.

Table 3.

ROC-AUC and log-loss scores for existing deep learning models to classify liquid-based cytology (LBC) and histopathology whole slide images (WSIs).

| Existing Models | ROC-AUC | Log Loss |
|---|---|---|
| Liquid-based cytology (LBC) | | |
| Uterine cervix Neoplastic (×10, 1024) | 0.836 [0.775–0.885] | 0.778 [0.620–0.989] |

3.2. High ROC-AUC Performance of Urine LBC WSI Evaluation of Neoplastic Urothelial Epithelial Cell Screening

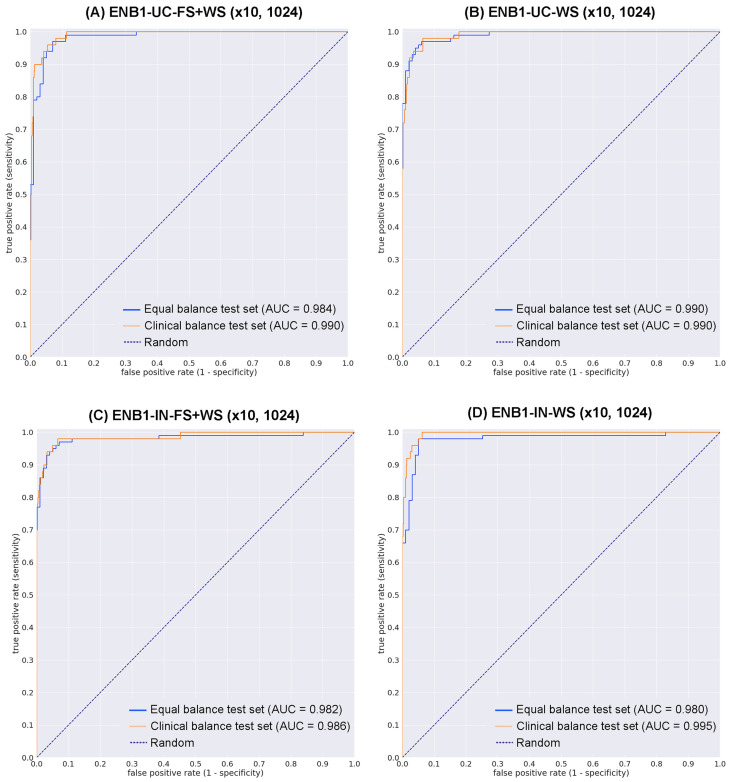

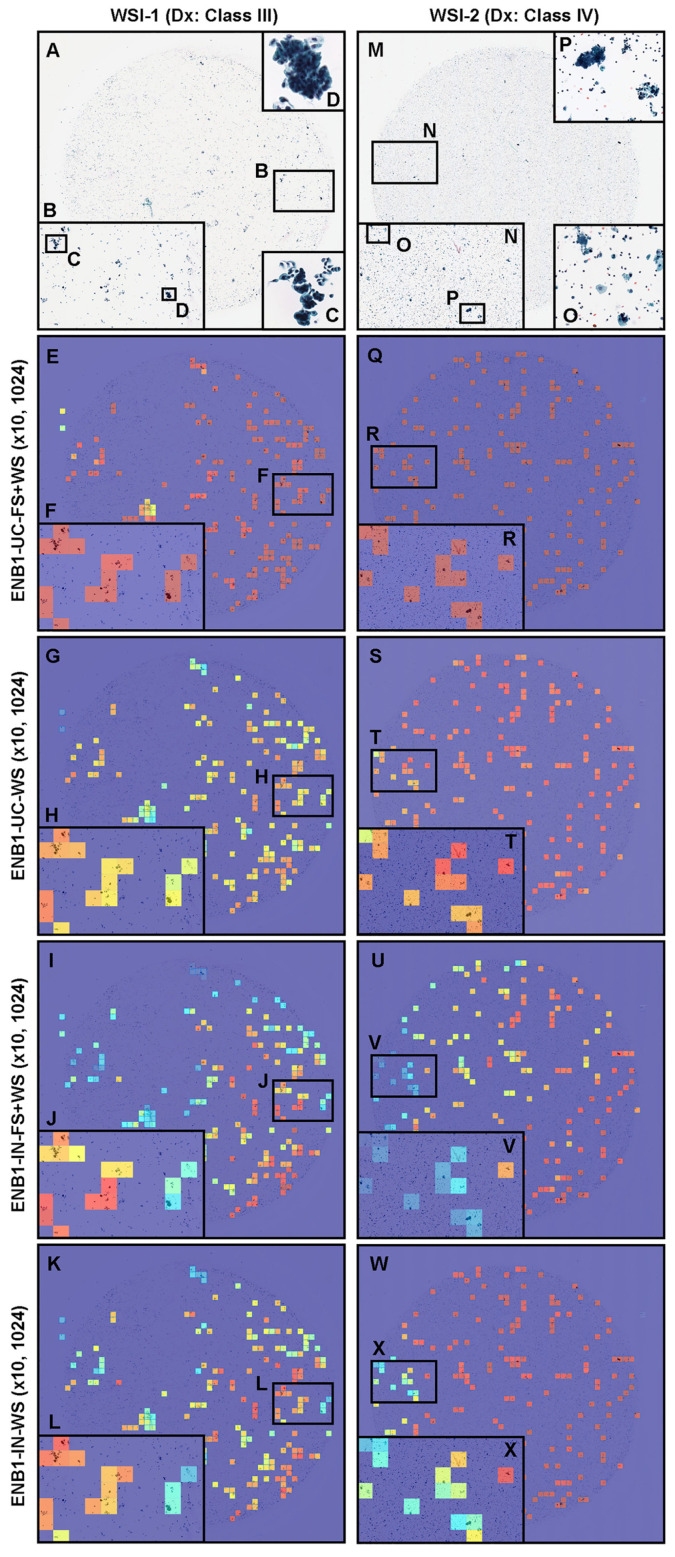

We trained four deep learning models (ENB1-UC-FS+WS, ENB1-UC-WS, ENB1-IN-FS+WS, and ENB1-IN-WS) using transfer learning (TL) with fine-tuning [47], with fully supervised (FS) learning [35,55] and weakly supervised (WS) learning [56] approaches as described elsewhere. These models are all based on the EfficientNetB1 convolutional neural network (CNN) architecture. To compare the performance of the transfer learning models (ENB1-UC-FS+WS and ENB1-UC-WS), we trained two models using the EfficientNetB1 architecture at the same magnification (×10) and tile size (1024 × 1024 px). To train the deep learning models, we used a total of 62 neoplastic (with annotation) and 724 negative (without annotation) training set WSIs and 10 neoplastic (with annotation) and 10 negative (without annotation) validation set WSIs (Table 1). This resulted in four different models: (1) ENB1-UC-FS+WS (×10, 1024), (2) ENB1-UC-WS (×10, 1024), (3) ENB1-IN-FS+WS (×10, 1024), and (4) ENB1-IN-WS (×10, 1024). We evaluated these four trained models on the equal balance and clinical balance test sets (Table 1). For each test set, we computed the ROC-AUC, log-loss, accuracy, sensitivity, and specificity; the results are summarized in Table 4 and Figure 3 and Figure 4. Overall, the four trained models achieved comparable ROC-AUC, log-loss, accuracy, sensitivity, and specificity at the whole-slide level (WSI evaluation) on both the equal and clinical balance test sets (Table 4, Figure 3). However, the appearance of the heatmap images differed among the four models (Figure 4): the localization patterns of predicted tiles were approximately the same, but the probabilities assigned to the predicted neoplastic tiles differed markedly. Looking at heatmap images of the same urine LBC WSIs (WSI-1 and WSI-2) (Figure 4) that were correctly predicted (true positive) as neoplastic by all four models, all models could satisfactorily predict tiles (Figure 4E–L,Q–X) containing neoplastic urothelial epithelial cells (Figure 4A–D,M–P). Among the four trained models, ENB1-UC-FS+WS exhibited the best tile prediction overall based on inspection of the heatmap images (Figure 4E,F,Q,R). Therefore, our results indicate that the ENB1-UC-FS+WS model is the best model for urine LBC neoplastic urothelial epithelial cell screening (Table 4 and Figure 4). Representative WSIs of true positive (Figure 5), true negative (Figure 6), false positive (Figure 7), and false negative (Figure 8) predictions from the ENB1-UC-FS+WS model are shown in the corresponding figures.
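The slide-level metrics reported alongside the ROC-AUC can be sketched as follows. The decision threshold of 0.5 is an assumption, since the operating point is not stated in this section; the function name is hypothetical, and probabilities are clipped before taking logarithms to keep the log-loss finite.

```python
import math


def slide_metrics(labels, probs, threshold=0.5):
    """Accuracy, sensitivity, specificity, and log-loss at the WSI level.

    `labels` are 1 (neoplastic) or 0 (negative); `probs` are slide-level
    neoplastic probabilities. The 0.5 threshold is an assumed operating point.
    """
    eps = 1e-15
    tp = fp = tn = fn = 0
    total_loss = 0.0
    for y, p in zip(labels, probs):
        predicted = 1 if p >= threshold else 0
        if y == 1 and predicted == 1:
            tp += 1
        elif y == 1:
            fn += 1
        elif predicted == 1:
            fp += 1
        else:
            tn += 1
        p = min(max(p, eps), 1 - eps)  # clip to avoid log(0)
        total_loss -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return {
        "accuracy": (tp + tn) / len(labels),
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "log_loss": total_loss / len(labels),
    }


metrics = slide_metrics([1, 1, 0, 0], [0.9, 0.4, 0.2, 0.6])
```

Unlike the ROC-AUC, accuracy, sensitivity, and specificity depend on the chosen threshold, and the log-loss additionally penalises confident wrong probabilities, which is why models with near-identical AUCs can differ in the other rows of Table 4.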

Table 4.

ROC AUC, log loss, accuracy, sensitivity, and specificity results on the equal balance and clinical balance test sets.

| Model / Metric | Test Set: Equal Balance | Test Set: Clinical Balance |
|---|---|---|
| ENB1-UC-FS+WS (×10, 1024) | | |
| ROC-AUC | 0.984 [0.969–0.995] | 0.990 [0.982–0.996] |
| Log-loss | 0.180 [0.123–0.259] | 0.223 [0.181–0.284] |
| Accuracy | 0.945 [0.905–0.970] | 0.946 [0.924–0.962] |
| Sensitivity | 0.960 [0.920–0.990] | 0.940 [0.861–1.000] |
| Specificity | 0.929 [0.862–0.972] | 0.946 [0.924–0.964] |
| ENB1-UC-WS (×10, 1024) | | |
| ROC-AUC | 0.990 [0.985–0.999] | 0.990 [0.981–0.997] |
| Log-loss | 0.251 [0.178–0.295] | 0.098 [0.081–0.119] |
| Accuracy | 0.955 [0.935–0.985] | 0.940 [0.920–0.960] |
| Sensitivity | 0.950 [0.911–0.990] | 0.980 [0.933–1.000] |
| Specificity | 0.960 [0.931–1.000] | 0.936 [0.915–0.958] |
| ENB1-IN-FS+WS (×10, 1024) | | |
| ROC-AUC | 0.982 [0.957–0.996] | 0.986 [0.963–0.998] |
| Log-loss | 0.225 [0.156–0.321] | 0.082 [0.063–0.106] |
| Accuracy | 0.950 [0.910–0.975] | 0.936 [0.918–0.956] |
| Sensitivity | 0.930 [0.863–0.971] | 0.960 [0.894–1.000] |
| Specificity | 0.970 [0.930–1.000] | 0.934 [0.914–0.955] |
| ENB1-IN-WS (×10, 1024) | | |
| ROC-AUC | 0.980 [0.960–0.997] | 0.995 [0.990–0.998] |
| Log-loss | 0.258 [0.185–0.289] | 0.128 [0.114–0.144] |
| Accuracy | 0.960 [0.940–0.990] | 0.944 [0.924–0.960] |
| Sensitivity | 0.970 [0.945–1.000] | 1.000 [1.000–1.000] |
| Specificity | 0.950 [0.914–0.990] | 0.938 [0.915–0.956] |
| ResNet50V2-IN-FS+WS (×10, 1024) | | |
| ROC-AUC | 0.962 [0.919–0.986] | 0.972 [0.935–1.000] |
| Log-loss | 0.238 [0.145–0.357] | 0.085 [0.050–0.124] |
| Accuracy | 0.916 [0.865–0.955] | 0.915 [0.884–0.950] |
| Sensitivity | 0.888 [0.812–0.937] | 0.949 [0.874–1.000] |
| Specificity | 0.945 [0.895–0.993] | 0.914 [0.875–0.950] |
| DenseNet121-IN-FS+WS (×10, 1024) | | |
| ROC-AUC | 0.945 [0.905–0.977] | 0.957 [0.922–0.988] |
| Log-loss | 0.233 [0.152–0.345] | 0.185 [0.146–0.224] |
| Accuracy | 0.919 [0.867–0.962] | 0.925 [0.887–0.958] |
| Sensitivity | 0.919 [0.835–0.977] | 0.921 [0.846–0.971] |
| Specificity | 0.957 [0.905–1.000] | 0.906 [0.869–0.945] |
| InceptionV3-IN-FS+WS (×10, 1024) | | |
| ROC-AUC | 0.959 [0.923–0.983] | 0.978 [0.940–1.000] |
| Log-loss | 0.239 [0.151–0.354] | 0.186 [0.177–0.198] |
| Accuracy | 0.912 [0.857–0.955] | 0.924 [0.895–0.959] |
| Sensitivity | 0.898 [0.820–0.957] | 0.956 [0.878–1.000] |
| Specificity | 0.954 [0.895–0.995] | 0.906 [0.868–0.941] |

Figure 3.

ROC curves on the test sets. (A) transfer learning (TL) from uterine cervix liquid-based cytology (LBC) model and fully and weakly supervised learning model, magnification at ×10 and tile size at 1024 × 1024 px (ENB1-UC-FS+WS (×10, 1024)); (B) TL from uterine cervix LBC model and weakly supervised learning model, magnification at ×10 and tile size at 1024 × 1024 px (ENB1-UC-WS (×10, 1024)); (C) EfficientNetB1 based fully and weakly supervised learning model, magnification at ×10 and tile size at 1024 × 1024 px (ENB1-IN-FS+WS (×10, 1024)); (D) EfficientNetB1 based weakly supervised learning model, magnification at ×10 and tile size at 1024 × 1024 px (ENB1-IN-WS (×10, 1024)).

Figure 4.

Neoplastic prediction comparison. Comparison of neoplastic predictions by the four trained deep learning models (ENB1-UC-FS+WS, ENB1-UC-WS, ENB1-IN-FS+WS, and ENB1-IN-WS) on two representative neoplastic urine liquid-based cytology (LBC) whole-slide images (WSIs) (WSI-1 and WSI-2). According to the cytopathological diagnostic (Dx) reports, WSI-1 (A–L) was diagnosed as Class III and WSI-2 (M–X) was diagnosed as Class IV; both were classified in the neoplastic class in this study. (A–D,M–P): LBC cytopathological images for WSI-1 (A–D) and WSI-2 (M–P); heatmap prediction images for ENB1-UC-FS+WS model in WSI-1 (E,F) and WSI-2 (Q,R); heatmap prediction images for ENB1-UC-WS model in WSI-1 (G,H) and WSI-2 (S,T); heatmap prediction images for ENB1-IN-FS+WS model in WSI-1 (I,J) and WSI-2 (U,V); heatmap prediction images for ENB1-IN-WS model in WSI-1 (K,L) and WSI-2 (W,X). The localization of predicted tiles in the neoplastic WSIs (WSI-1 and WSI-2) was almost the same across the four models (ENB1-UC-FS+WS, ENB1-UC-WS, ENB1-IN-FS+WS, and ENB1-IN-WS). However, the model pre-trained from the uterine cervix LBC model with fully and weakly supervised learning (ENB1-UC-FS+WS) showed the highest neoplastic probabilities (F,R) in neoplastic tiles (B–D,N–P) compared with the other models (G–L,S–X). The heatmap uses the jet color map where blue indicates low probability and red indicates high probability.

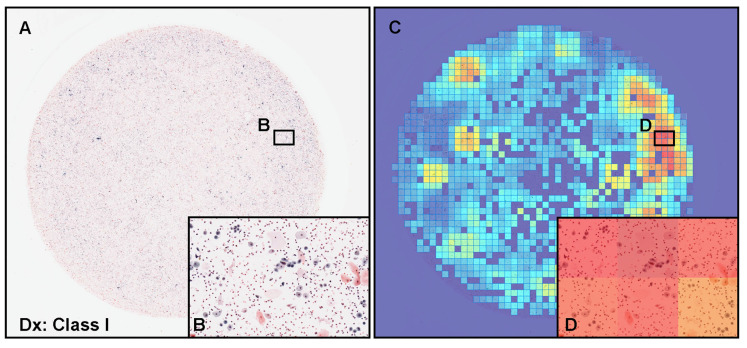

Figure 5.

Representative examples of true positive prediction. Neoplastic true positive prediction outputs on urine liquid-based cytology (LBC) whole-slide images (WSIs) from test sets using the ENB1-UC-FS+WS model. According to the cytopathological diagnostic (Dx) reports, (A) was diagnosed as Class III with atypical urothelial epithelial cells (B), (E) was diagnosed as Class IV with suspected low-grade urothelial carcinoma (LGUC) cells (F), and (I) was diagnosed as Class V with suspected high-grade urothelial carcinoma (HGUC) cells (J). The heatmap images (C,D,G,H,K,L) show true positive predictions of neoplastic urothelial epithelial cells (D,H,L), which correspond, respectively, to atypical (B), suspected LGUC (F), and HGUC (J) cells. The heatmap uses the jet color map where blue indicates low probability and red indicates high probability.

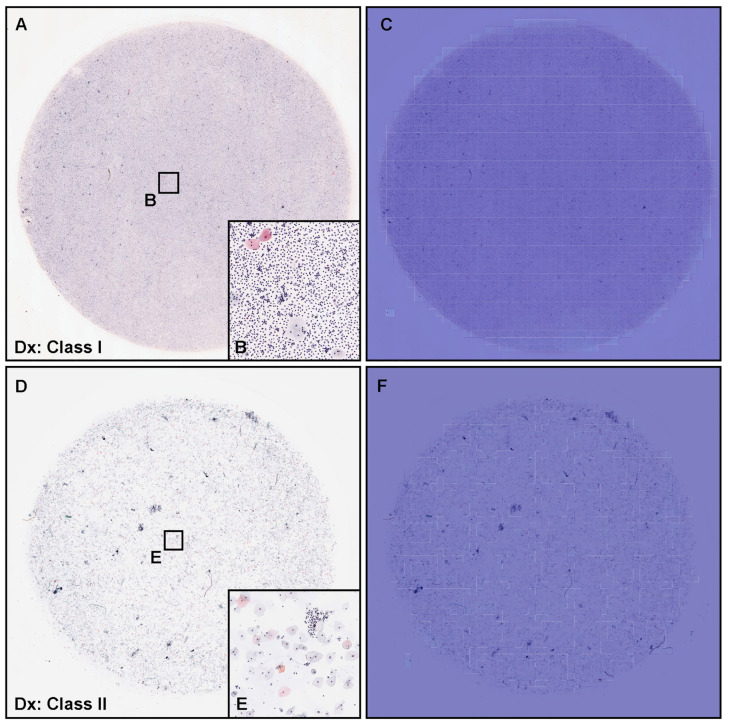

Figure 6.

Representative examples of true negative prediction. Two representative examples of neoplastic true negative prediction outputs on urine liquid-based cytology (LBC) whole-slide images (WSIs) from test sets using the ENB1-UC-FS+WS model. According to the cytopathological diagnostic (Dx) reports, (A) was diagnosed as Class I and (D) as Class II; both were negative for neoplastic urothelial epithelial cells. Cytopathologically, (A) was pyuria, which consisted of infective fluid (pus) with a small number of non-atypical epithelial cells (B). (D,E) included urothelial epithelial cells with slight nuclear enlargement. The heatmap images (C,F) show true negative predictions of neoplastic epithelial cells. The heatmap uses the jet color map where blue indicates low probability and red indicates high probability.

Figure 7.

Representative example of false positive prediction. A representative example of neoplastic false positive prediction outputs on urine liquid-based cytology (LBC) whole-slide images (WSIs) from test sets using the ENB1-UC-FS+WS model. According to the cytopathological diagnostic (Dx) report, (A) was diagnosed as Class I and consisted of metaplastic squamous epithelial cells and non-atypical (non-neoplastic) urothelial epithelial cells with inflammatory cells (B). The heatmap images (C,D) show false positive predictions (D), which correspond to (B). The heatmap uses the jet color map where blue indicates low probability and red indicates high probability.

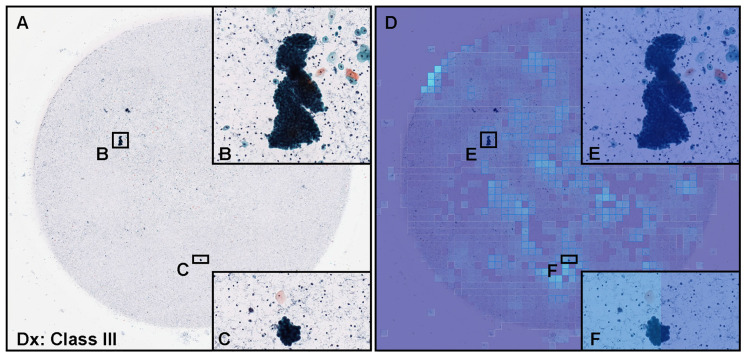

Figure 8.

Representative example of false negative prediction. A representative example of neoplastic false negative prediction outputs on urine liquid-based cytology (LBC) whole-slide images (WSIs) from test sets using the ENB1-UC-FS+WS model. According to the cytopathological diagnostic (Dx) report, (A) was diagnosed as Class III and included clusters of atypical urothelial epithelial cells (B,C). The heatmap images (D–F) show false negative predictions (E,F) which correspond, respectively, to (B,C). The heatmap uses the jet color map where blue indicates low probability and red indicates high probability.

3.3. True Positive Prediction

The model ENB1-UC-FS+WS satisfactorily predicted neoplastic urothelial epithelial cells in urine LBC WSIs (Figure 5). Cytopathologically, Figure 5A exhibited atypical urothelial epithelial cells (Figure 5B) and was diagnosed as Class III. Figure 5E showed low-grade urothelial carcinoma (LGUC) cells (Figure 5F) and was diagnosed as Class IV. Figure 5I showed high-grade urothelial carcinoma (HGUC) cells (Figure 5J) and was diagnosed as Class V. All three WSIs belong to the neoplastic class in this study. The heatmap images show true positive predictions of atypical urothelial cells (Figure 5C,D), LGUC cells (Figure 5G,H), and HGUC cells (Figure 5K,L), which were confirmed by a cytoscreener and a cytopathologist by viewing the original WSIs and the predicted heatmap images. In contrast, in low probability tiles (light blue and blue background) (Figure 5), two independent cytoscreeners confirmed that there were no neoplastic urothelial epithelial cells.

3.4. True Negative Prediction

The model ENB1-UC-FS+WS satisfactorily predicted negative cases (cytopathologically Class I and Class II) in urine LBC WSIs (Figure 6). The heatmap images show true negative predictions of neoplastic urothelial epithelial cells (Figure 6C,F). In the zero probability tiles (blue background color) (Figure 6C,F), there were no neoplastic urothelial epithelial cells, either in the pyuria case (cytodiagnosed as Class I) (Figure 6A), which consisted of infective fluid with a small number of non-neoplastic epithelial cells (Figure 6B), or in the case with urothelial epithelial cells showing slight nuclear enlargement (Figure 6D,E) (cytodiagnosed as Class II).

3.5. False Positive Prediction

A case cytopathologically diagnosed as negative (Class I) (Figure 7A), which consisted of metaplastic squamous epithelial cells and non-neoplastic urothelial epithelial cells (Figure 7B), was falsely predicted as positive for neoplastic urothelial epithelial cells by our model (ENB1-UC-FS+WS). The heatmap image (Figure 7C) shows the false positive predictions of neoplastic urothelial epithelial cells as high probability tiles (Figure 7D). Cytopathologically, the non-neoplastic urothelial epithelial cells with a slightly increased nuclear cytoplasmic (N/C) ratio and the metaplastic squamous epithelial cells (Figure 7B) could be a major cause of the false positive prediction.

3.6. False Negative Prediction

According to the cytodiagnosis report and an additional review by a cytoscreener and a cytopathologist, this urine LBC WSI (Figure 8A) contained cellular clusters of atypical (neoplastic) urothelial epithelial cells (Figure 8B,C) with a high nuclear cytoplasmic ratio, indicating that this WSI (Figure 8A) should be classified as neoplastic (Class III). However, our model (ENB1-UC-FS+WS) predicted neoplastic urothelial epithelial cells either not at all or only with very low probability (Figure 8D–F). We speculate that the clustering of neoplastic urothelial epithelial cells, with its overlapping morphology, is a possible cause of this false negative.

4. Discussion

In this study, we trained deep learning models for the classification of neoplastic urothelial epithelial cells in WSIs of urine LBC specimens. The best model (ENB1-UC-FS+WS) achieved good overall performance, with ROC-AUCs of 0.984 (CI: 0.969–0.995) on the equal balance test set and 0.990 (CI: 0.982–0.996) on the clinical balance test set, and low log-loss values of 0.180 (CI: 0.123–0.259) and 0.223 (CI: 0.181–0.284), respectively. The clinical balance test set reflects the clinical setting based on the statistics on cytodiagnosis from 2016 to 2021 by the Japanese Society of Clinical Cytology (https://jscc.or.jp/, accessed on 13 January 2022). Both the equal and clinical balance test sets (Table 1) were collected based on the cytodiagnoses, reviewed by two independent cytoscreeners or cytopathologists, and then verified by a senior cytoscreener or cytopathologist. We ensured that we had consensus on the diagnoses of the test set WSIs. Our best model (ENB1-UC-FS+WS) also achieved high accuracy (0.945–0.946), sensitivity (0.940–0.960), and specificity (0.929–0.946) at the WSI level. By comparison, a previously reported deep learning model for urine LBC (ThinPrep) predicted neoplastic (positive) WSIs with an accuracy of 0.842, a sensitivity of 0.795, and a specificity of 0.845 at the WSI level [57], and our most recently reported uterine cervix LBC (ThinPrep) model demonstrated an accuracy of 0.907, a sensitivity of 0.850, and a specificity of 0.911 at the WSI level [35]. In this study, we trained a total of four deep learning models (ENB1-UC-FS+WS, ENB1-UC-WS, ENB1-IN-FS+WS, and ENB1-IN-WS) using two different weight initialisations: ImageNet and the pre-trained uterine cervix neoplastic LBC model from a previous study [35] (Figure 2). At the WSI level, these four models showed almost comparable ROC-AUC, log-loss, accuracy, sensitivity, and specificity (Table 4 and Figure 3). However, the tile level predictions, as visualized by the heatmap images, varied widely among the four models (Figure 4).
Based on the WSI and tile level (heatmap) evaluations, we concluded that the model (ENB1-UC-FS+WS), which was initialised with the weights of the pre-trained uterine cervix LBC model [35], performed best. As for the false negative prediction in the urine LBC WSI that was cytodiagnosed as Class III (Figure 8A), the model (ENB1-UC-FS+WS) could not predict the neoplastic atypical urothelial epithelial cell clusters (Figure 8B–F), in which the neoplastic urothelial epithelial cells overlapped and the nuclear shapes and structures were hard to determine in the WSI (Figure 8B,C). The model (ENB1-UC-FS+WS) correctly predicted urine crystals and cell debris as negative. False negative prediction outputs were most likely due to neoplastic urothelial epithelial cell clusters that mimicked urine crystals or cell debris.
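As an illustration of how the WSI level figures quoted above are defined, the following minimal sketch computes accuracy, sensitivity, specificity, ROC-AUC, and log-loss from per-WSI labels and predicted probabilities, together with a percentile bootstrap confidence interval. It is not the evaluation code used in this study: the function names, the 0.5 probability threshold, the number of bootstrap resamples, and the toy inputs are all assumptions made for illustration.

```python
import math
import random


def wsi_metrics(y_true, y_prob, threshold=0.5):
    """Accuracy, sensitivity and specificity at an assumed probability threshold.

    Requires at least one positive and one negative label.
    """
    tp = fp = tn = fn = 0
    for y, p in zip(y_true, y_prob):
        pred = 1 if p >= threshold else 0
        if y == 1 and pred == 1:
            tp += 1
        elif y == 1:
            fn += 1
        elif pred == 1:
            fp += 1
        else:
            tn += 1
    return {
        "accuracy": (tp + tn) / len(y_true),
        "sensitivity": tp / (tp + fn),  # true positive rate
        "specificity": tn / (tn + fp),  # true negative rate
    }


def log_loss(y_true, y_prob, eps=1e-15):
    """Mean binary cross-entropy; probabilities are clipped to avoid log(0)."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(y_true)


def roc_auc(y_true, y_prob):
    """ROC-AUC as the fraction of (positive, negative) pairs ranked correctly.

    Ties count as half a correct pair (Mann-Whitney formulation).
    """
    pos = [p for y, p in zip(y_true, y_prob) if y == 1]
    neg = [p for y, p in zip(y_true, y_prob) if y == 0]
    wins = sum(1.0 if a > b else 0.5 if a == b else 0.0 for a in pos for b in neg)
    return wins / (len(pos) * len(neg))


def bootstrap_ci(y_true, y_prob, metric, n_boot=1000, alpha=0.05, seed=0):
    """Percentile bootstrap interval for a metric(y_true, y_prob) -> float."""
    if len(set(y_true)) < 2:
        raise ValueError("both classes are needed to resample")
    rng = random.Random(seed)
    n = len(y_true)
    stats = []
    while len(stats) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]
        labels = [y_true[i] for i in idx]
        if len(set(labels)) < 2:
            continue  # skip resamples that lost one of the two classes
        stats.append(metric(labels, [y_prob[i] for i in idx]))
    stats.sort()
    lower = stats[int((alpha / 2) * n_boot)]
    upper = stats[min(n_boot - 1, int((1 - alpha / 2) * n_boot))]
    return lower, upper


if __name__ == "__main__":
    # Toy data only: 1 = neoplastic WSI, 0 = negative WSI.
    y_true = [1, 1, 1, 0, 0, 0, 0, 1]
    y_prob = [0.9, 0.8, 0.3, 0.1, 0.2, 0.6, 0.05, 0.7]
    print(wsi_metrics(y_true, y_prob))
    print(roc_auc(y_true, y_prob), bootstrap_ci(y_true, y_prob, roc_auc, n_boot=200))
    print(log_loss(y_true, y_prob))
```

Sensitivity and specificity depend on the chosen threshold, whereas ROC-AUC and log-loss are computed directly from the probabilities, which is why the latter two are convenient for comparing models before an operating point is fixed.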

According to the annual statistics on cytodiagnosis by the Japanese Society of Clinical Cytology (https://jscc.or.jp/, accessed on 13 January 2022), in 2021, there were 2,041,547 urine cytodiagnosis reports in Japan. In 2021, the total number of cytodiagnoses in Japan was 7,157,413. Therefore, urine cytodiagnosis accounted for approximately 28.5% of all cytodiagnoses. In Japan, urine cytology was the second most common cytology in 2021, with cervical cytology the most common (3,289,877 cases, approximately 46.0%) (https://jscc.or.jp/, accessed on 13 January 2022). LBC of urine specimens is commonly used in cytology laboratories throughout the world and various processing methods, such as ThinPrep and SurePath, have been reported [58,59]. The LBC technique preserves the cells of interest (e.g., urothelial epithelial cells) in a liquid medium and removes most of the debris, blood, and exudate either by filtering or density gradient centrifugation. The efficiency of diagnosis employing LBC is high because of its high cell collection rate. It was demonstrated that the accuracy of diagnoses made employing the LBC method can be increased by understanding the characteristics of the urothelial epithelial cell morphology in suspicious cases [25]. Following the appropriate LBC specimen preparation steps, cell morphology (structure) is satisfactorily preserved, which allows more accurate diagnosis of LBC slides, as shown by the significant concordance between cytological and histological diagnosis (92%), the significant number of LGUC (20.5%) revealed by urinary cytology and validated by histology, and the low rate (8%) of misjudgement of cytological diagnosis [60]. In addition, the leftover urine LBC material can be used for other techniques such as immunocytochemistry, molecular biology and flow cytometry [61]. Therefore, LBC has been applied with good results in urine cytology and can be regarded as an appropriate substitute for conventional smear urine cytology.
LBC techniques open new possibilities for systematic urothelial carcinoma screening by integrating digital pathology WSI techniques and deep learning model(s), resulting in a standardised high-quality readout (e.g., classification).
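The share of urine cytodiagnosis quoted above can be checked directly from the two report counts given in the text. The short sketch below does only that arithmetic; the helper name `share_percent` is hypothetical.

```python
def share_percent(part, total):
    """Return part / total as a percentage rounded to one decimal place."""
    return round(100.0 * part / total, 1)


urine_reports = 2_041_547  # urine cytodiagnosis reports in Japan, 2021 (from the text)
all_reports = 7_157_413    # all cytodiagnosis reports in Japan, 2021 (from the text)

print(share_percent(urine_reports, all_reports))  # prints 28.5
```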

One limitation of this study is that it primarily included urine LBC (ThinPrep) WSIs (both training and test sets) from a single private clinical laboratory in Japan. Therefore, the deep learning models could potentially be biased to such specimens. Validations on a wide variety of specimens from multiple different origins (both clinical laboratories and hospitals) and other LBC method(s) (e.g., SurePath) would be essential for ensuring the robustness of the models. Another potential validation study should involve the comparison of the performance of the models against cytoscreeners and cytopathologists in a clinical setting.

5. Conclusions

In the present study, we trained deep learning models for the classification of neoplastic urine LBC WSIs. We evaluated the models on two test sets (equal and clinical balance), and the best model (ENB1-UC-FS+WS) achieved ROC-AUCs for diagnosis in the range of 0.984–0.990. At the WSI level, the model (ENB1-UC-FS+WS) achieved high accuracy (0.945–0.946), sensitivity (0.940–0.960), and specificity (0.929–0.946). Beyond the WSI level, the model (ENB1-UC-FS+WS) also satisfactorily predicted neoplastic urothelial epithelial cells (atypical, LGUC, and HGUC cells), as shown by the heatmap images. Users can therefore readily judge whether a urine LBC WSI is neoplastic (Figure 5) or negative (Figure 6) by inspecting the model prediction outputs at the WSI level together with the heatmap image. This makes it possible to use a deep learning model such as ours as a tool to aid the urine LBC screening process in the clinical setting (workflow) by ranking cases in order of priority. Cytoscreeners and/or cytopathologists will still need to perform full screening and subclassification (e.g., negative, atypical cells, suspicious for malignancy, and malignant) after the primary screening by our deep learning model; however, their working time could be reduced because the model will have highlighted the suspected neoplastic regions, so they would not have to search exhaustively through the entire WSI.
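The idea of ranking cases by order of priority can be sketched as follows. This is a hypothetical illustration rather than the workflow implemented in this study: it assumes that a WSI level probability is obtained as the maximum tile probability (an assumption that this section does not confirm), and the case identifiers, the `RankedCase` type, and the 0.5 flagging threshold are invented for the example.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class RankedCase:
    case_id: str
    wsi_probability: float  # assumed here to be the maximum tile probability
    flagged: bool           # True when the probability reaches the threshold


def rank_cases(tile_probs: Dict[str, List[float]], threshold: float = 0.5) -> List[RankedCase]:
    """Order cases from most to least suspicious for neoplastic cells."""
    ranked = []
    for case_id, probs in tile_probs.items():
        # A WSI without any tissue tiles is treated as probability 0.0.
        wsi_prob = max(probs) if probs else 0.0
        ranked.append(RankedCase(case_id, wsi_prob, wsi_prob >= threshold))
    # Highest probability first, so reviewers see likely neoplastic cases early.
    ranked.sort(key=lambda case: case.wsi_probability, reverse=True)
    return ranked


if __name__ == "__main__":
    # Hypothetical tile probabilities for three cases.
    worklist = rank_cases({
        "case-A": [0.10, 0.20],
        "case-B": [0.05, 0.97, 0.40],
        "case-C": [],
    })
    for case in worklist:
        print(case.case_id, case.wsi_probability, case.flagged)
```

Under these assumptions, every case remains on the worklist for full screening; the ranking only changes the order in which cytoscreeners and cytopathologists review them.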

Acknowledgments

We thank the cytoscreeners and pathologists who were engaged in reviewing cases, annotation, and cytopathological discussion for this study.

Author Contributions

M.T. designed the studies, performed experiments, and analyzed the data; M.T. and F.K. performed the computational studies; M.A. performed the pathological diagnoses and reviewed the cases; M.T. and F.K. wrote the manuscript; M.T. supervised the project. All authors reviewed and approved the final manuscript.

Institutional Review Board Statement

The experimental protocol in this study was approved by the ethical board of the private clinical laboratory in Japan. All research activities complied with all relevant ethical regulations and were performed in accordance with relevant guidelines and regulations in the private clinical laboratory. Due to the confidentiality agreement with the private clinical laboratory, the name of the private clinical laboratory cannot be disclosed.

Informed Consent Statement

Written informed consent to use cytopathological samples (liquid-based cytology glass slides) and cytopathological reports for research purposes in this study had previously been obtained from all patients, and the opportunity to refuse participation in research had been guaranteed in an opt-out manner.

Data Availability Statement

The datasets generated during and/or analysed during the current study are not publicly available due to specific institutional requirements governing privacy protection; however, they are available from the corresponding author and from the private clinical laboratory in Japan on reasonable request. The datasets that support the findings of this study are available from the private clinical laboratory (Japan), but restrictions apply to the availability of these data, which were used under a data-use agreement that was made according to the Ethical Guidelines for Medical and Health Research Involving Human Subjects as set by the Japanese Ministry of Health, Labour and Welfare (Tokyo, Japan) and, thus, are not publicly available. However, the datasets are available from the authors upon reasonable request for private viewing and with permission from the corresponding private clinical laboratory within the terms of the data use agreement and if compliant with the ethical and legal requirements as stipulated by the Japanese Ministry of Health, Labour and Welfare.

Conflicts of Interest

M.T. and F.K. are employees of Medmain Inc. All authors declare no competing interest.

Funding Statement

This study is based on results obtained from a project, JPNP14012, subsidized by the New Energy and Industrial Technology Development Organization (NEDO).

Footnotes

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

References

- 1. Northrup V., Acar B.C., Hossain M., Acker M.R., Manuel E., Rahmeh T. Clinical follow up and the impact of the Paris system in the assessment of patients with atypical urine cytology. Diagn. Cytopathol. 2018;46:1022–1030. doi: 10.1002/dc.24095.

- 2. Brown F.M. Urine Cytology. Urol. Clin. N. Am. 2000;27:25–37. doi: 10.1016/S0094-0143(05)70231-7.

- 3. DeSouza K., Chowdhury S., Hughes S. Prompt diagnosis key in bladder cancer. Practitioner. 2014;258:23–27.

- 4. Sung H., Ferlay J., Siegel R.L., Laversanne M., Soerjomataram I., Jemal A., Bray F. Global cancer statistics 2020: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J. Clin. 2021;71:209–249. doi: 10.3322/caac.21660.

- 5. Shanks J.H., Iczkowski K.A. Divergent differentiation in urothelial carcinoma and other bladder cancer subtypes with selected mimics. Histopathology. 2009;54:885–900. doi: 10.1111/j.1365-2559.2008.03167.x.

- 6. Baio R., Spiezia N., Marani C., Schettini M. Potential contribution of benzodiazepine abuse in the development of a bladder sarcomatoid carcinoma: A case report. Mol. Clin. Oncol. 2021;15:1–5. doi: 10.3892/mco.2021.2394.

- 7. Luo Y., She D.L., Xiong H., Yang L., Fu S.J. Diagnostic value of liquid-based cytology in urothelial carcinoma diagnosis: A systematic review and meta-analysis. PLoS ONE. 2015;10:e0134940. doi: 10.1371/journal.pone.0134940.

- 8. Raab S.S., Grzybicki D.M., Vrbin C.M., Geisinger K.R. Urine cytology discrepancies: Frequency, causes, and outcomes. Am. J. Clin. Pathol. 2007;127:946–953. doi: 10.1309/XUVXFXMFPL7TELCE.

- 9. Sullivan P.S., Chan J.B., Levin M.R., Rao J. Urine cytology and adjunct markers for detection and surveillance of bladder cancer. Am. J. Transl. Res. 2010;2:412.

- 10. Bastacky S., Ibrahim S., Wilczynski S.P., Murphy W.M. The accuracy of urinary cytology in daily practice. Cancer Cytopathol. Interdiscip. Int. J. Am. Cancer Soc. 1999;87:118–128. doi: 10.1002/(SICI)1097-0142(19990625)87:3<118::AID-CNCR4>3.0.CO;2-N.

- 11. Son S.M., Koo J.H., Choi S.Y., Lee H.C., Lee Y.M., Song H.G., Hwang H.K., Han H.S., Yun S.J., Kim W.J., et al. Evaluation of urine cytology in urothelial carcinoma patients: A comparison of CellprepPlus® liquid-based cytology and conventional smear. Korean J. Pathol. 2012;46:68. doi: 10.4132/KoreanJPathol.2012.46.1.68.

- 12. Tripathy K., Misra A., Ghosh J. Efficacy of liquid-based cytology versus conventional smears in FNA samples. J. Cytol. 2015;32:17. doi: 10.4103/0970-9371.155225.

- 13. Lee M.W., Paik W.H., Lee S.H., Chun J.W., Huh G., Park N.Y., Kim J.S., Cho I.R., Ryu J.K., Kim Y.T., et al. Usefulness of Liquid-Based Cytology in Diagnosing Biliary Tract Cancer Compared to Conventional Smear and Forceps Biopsy. Dig. Dis. Sci. 2022. doi: 10.1007/s10620-022-07535-3.

- 14. Honarvar Z., Zarisfi Z., Sedigh S.S., Shahrbabak M.M. Comparison of conventional and liquid-based Pap smear methods in the diagnosis of precancerous cervical lesions. J. Obstet. Gynaecol. 2022;42:2320–2324. doi: 10.1080/01443615.2022.2049721.

- 15. Laucirica R., Bentz J.S., Souers R.J., Wasserman P.G., Crothers B.A., Clayton A.C., Henry M.R., Chmara B.A., Clary K.M., Fraig M.M., et al. Do liquid-based preparations of urinary cytology perform differently than classically prepared cases? Observations from the College of American Pathologists Interlaboratory Comparison Program in Nongynecologic Cytology. Arch. Pathol. Lab. Med. 2010;134:19–22. doi: 10.5858/2008-0673-CPR1.1.

- 16. Grundhoefer D., Patterson B.K. Determination of liquid-based cervical cytology specimen adequacy using cellular light scatter and flow cytometry. Cytometry. 2001;46:340–344. doi: 10.1002/cyto.10025.

- 17. Austin M., Ramzy I. Increased Detection of Epithelial Cell Abnormalities by Liquid-Based Gynecologic Cytology Preparations. Acta Cytol. 1998;42:178–184. doi: 10.1159/000331543.

- 18. Makde M.M., Sathawane P. Liquid-based cytology: Technical aspects. Cytojournal. 2022;19:41. doi: 10.25259/CMAS_03_16_2021.

- 19. Hussain E., Mahanta L.B., Borah H., Das C.R. Liquid based-cytology Pap smear dataset for automated multi-class diagnosis of pre-cancerous and cervical cancer lesions. Data Brief. 2020;30:105589. doi: 10.1016/j.dib.2020.105589.

- 20. Lahrmann B., Valous N.A., Eisenmann U., Wentzensen N., Grabe N. Semantic focusing allows fully automated single-layer slide scanning of cervical cytology slides. PLoS ONE. 2013;8:e61441. doi: 10.1371/journal.pone.0061441.

- 21. Shidham V.B. Role of immunocytochemistry in cervical cancer screening. Cytojournal. 2022;19:42. doi: 10.25259/CMAS_03_17_2022.

- 22. Williams J., Kostiuk M., Biron V.L. Molecular Detection Methods in HPV-Related Cancers. Front. Oncol. 2022;12:864820. doi: 10.3389/fonc.2022.864820.

- 23. Rossi E.D., Bizzarro T., Longatto-Filho A., Gerhard R., Schmitt F. The diagnostic and prognostic role of liquid-based cytology: Are we ready to monitor therapy and resistance? Expert Rev. Anticancer. Ther. 2015;15:911–921. doi: 10.1586/14737140.2015.1053874.

- 24. Kalantari M.R., Jahanshahi M.A., Gharib M., Hashemi S., Kalantari S. Direct Smear Versus Liquid-Based Cytology in the Diagnosis of Bladder Lesions. Iran. J. Pathol. 2022;17:56. doi: 10.30699/ijp.2021.528171.2646.

- 25. Washiya K., Sato T., Miura T., Tone K., Kojima K., Watanabe J., Kijima H. Cytologic difference between benignity and malignancy in suspicious cases employing urine cytodiagnosis using a liquid-based method. Anal. Quant. Cytol. Histol. 2011;33:169–174.

- 26. Idrees M., Farah C.S., Sloan P., Kujan O. Oral brush biopsy using liquid-based cytology is a reliable tool for oral cancer screening: A cost-utility analysis. Cancer Cytopathol. 2022;130:740–748. doi: 10.1002/cncy.22599.

- 27. Denton K.J. Liquid based cytology in cervical cancer screening. BMJ. 2007;335:1–2. doi: 10.1136/bmj.39262.506528.47.

- 28. Piaton E., Hutin K., Faynel J., Ranchin M., Cottier M. Cost efficiency analysis of modern cytocentrifugation methods versus liquid based (Cytyc Thinprep®) processing of urinary samples. J. Clin. Pathol. 2004;57:1208–1212. doi: 10.1136/jcp.2004.018648.

- 29. Mukhopadhyay S., Feldman M.D., Abels E., Ashfaq R., Beltaifa S., Cacciabeve N.G., Cathro H.P., Cheng L., Cooper K., Dickey G.E., et al. Whole slide imaging versus microscopy for primary diagnosis in surgical pathology: A multicenter blinded randomized noninferiority study of 1992 cases (pivotal study). Am. J. Surg. Pathol. 2018;42:39. doi: 10.1097/PAS.0000000000000948.

- 30. Malarkey D.E., Willson G.A., Willson C.J., Adams E.T., Olson G.R., Witt W.M., Elmore S.A., Hardisty J.F., Boyle M.C., Crabbs T.A., et al. Utilizing whole slide images for pathology peer review and working groups. Toxicol. Pathol. 2015;43:1149–1157. doi: 10.1177/0192623315605933.

- 31. Tsuneki M. Deep learning models in medical image analysis. J. Oral Biosci. 2022;64:312–320. doi: 10.1016/j.job.2022.03.003.

- 32. Sukegawa S., Tanaka F., Nakano K., Hara T., Yoshii K., Yamashita K., Ono S., Takabatake K., Kawai H., Nagatsuka H., et al. Effective deep learning for oral exfoliative cytology classification. Cancer Cytopathol. 2022;130:407–414. doi: 10.1038/s41598-022-17602-4.

- 33. Ou Y.C., Tsao T.Y., Chang M.C., Lin Y.S., Yang W.L., Hang J.F., Li C.B., Lee C.M., Yeh C.H., Liu T.J. Evaluation of an artificial intelligence algorithm for assisting the Paris System in reporting urinary cytology: A pilot study. Cancer Cytopathol. 2022;130:872–880. doi: 10.1002/cncy.22615.

- 34. Tao X., Chu X., Guo B., Pan Q., Ji S., Lou W., Lv C., Xie G., Hua K. Scrutinizing high-risk patients from ASC-US cytology via a deep learning model. Cancer Cytopathol. 2022:preprint. doi: 10.1002/cncy.22560.

- 35. Kanavati F., Hirose N., Ishii T., Fukuda A., Ichihara S., Tsuneki M. A Deep Learning Model for Cervical Cancer Screening on Liquid-Based Cytology Specimens in Whole Slide Images. Cancers. 2022;14:1159. doi: 10.3390/cancers14051159.

- 36. Xie X., Fu C.C., Lv L., Ye Q., Yu Y., Fang Q., Zhang L., Hou L., Wu C. Deep convolutional neural network-based classification of cancer cells on cytological pleural effusion images. Mod. Pathol. 2022;35:609–614. doi: 10.1038/s41379-021-00987-4.

- 37. Lin Y.J., Chao T.K., Khalil M.A., Lee Y.C., Hong D.Z., Wu J.J., Wang C.W. Deep Learning Fast Screening Approach on Cytological Whole Slides for Thyroid Cancer Diagnosis. Cancers. 2021;13:3891. doi: 10.3390/cancers13153891.

- 38. Bhatt A.R., Ganatra A., Kotecha K. Cervical cancer detection in pap smear whole slide images using convnet with transfer learning and progressive resizing. PeerJ Comput. Sci. 2021;7:e348. doi: 10.7717/peerj-cs.348.

- 39. Holmström O., Linder N., Kaingu H., Mbuuko N., Mbete J., Kinyua F., Törnquist S., Muinde M., Krogerus L., Lundin M., et al. Point-of-Care Digital Cytology With Artificial Intelligence for Cervical Cancer Screening in a Resource-Limited Setting. JAMA Netw. Open. 2021;4:e211740. doi: 10.1001/jamanetworkopen.2021.1740.

- 40. Lin H., Chen H., Wang X., Wang Q., Wang L., Heng P.A. Dual-path network with synergistic grouping loss and evidence driven risk stratification for whole slide cervical image analysis. Med. Image Anal. 2021;69:101955. doi: 10.1016/j.media.2021.101955.

- 41. Cheng S., Liu S., Yu J., Rao G., Xiao Y., Han W., Zhu W., Lv X., Li N., Cai J., et al. Robust whole slide image analysis for cervical cancer screening using deep learning. Nat. Commun. 2021;12:1–10. doi: 10.1038/s41467-021-25296-x.

- 42. Tan M., Le Q. Efficientnet: Rethinking model scaling for convolutional neural networks; Proceedings of the International Conference on Machine Learning; Long Beach, CA, USA. 10–15 May 2019; London, UK: PMLR; 2019. pp. 6105–6114.

- 43. He K., Zhang X., Ren S., Sun J. Identity mappings in deep residual networks; Proceedings of the European Conference on Computer Vision; Amsterdam, The Netherlands. 11–14 October 2016; Berlin/Heidelberg, Germany: Springer; 2016. pp. 630–645.

- 44. Huang G., Liu Z., Van Der Maaten L., Weinberger K.Q. Densely connected convolutional networks; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; Honolulu, HI, USA. 21–26 July 2017; pp. 4700–4708.

- 45. Szegedy C., Vanhoucke V., Ioffe S., Shlens J., Wojna Z. Rethinking the inception architecture for computer vision; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; Las Vegas, NV, USA. 27–30 June 2016; pp. 2818–2826.

- 46. Kanavati F., Tsuneki M. Partial transfusion: On the expressive influence of trainable batch norm parameters for transfer learning. arXiv. 2021. arXiv:2102.05543.

- 47. Tsuneki M., Abe M., Kanavati F. Transfer Learning for Adenocarcinoma Classifications in the Transurethral Resection of Prostate Whole-Slide Images. Cancers. 2022;14:4744. doi: 10.3390/cancers14194744.

- 48. Otsu N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 1979;9:62–66. doi: 10.1109/TSMC.1979.4310076.

- 49. Goode A., Gilbert B., Harkes J., Jukic D., Satyanarayanan M. OpenSlide: A vendor-neutral software foundation for digital pathology. J. Pathol. Inform. 2013;4:27. doi: 10.4103/2153-3539.119005.

- 50. Kingma D.P., Ba J. Adam: A method for stochastic optimization. arXiv. 2014. arXiv:1412.6980.

- 51. Abadi M., Agarwal A., Barham P., Brevdo E., Chen Z., Citro C., Corrado G.S., Davis A., Dean J., Devin M., et al. TensorFlow: Large-Scale Machine Learning on Heterogeneous Systems. 2015. [(accessed on 16 June 2021)]. Available online: tensorflow.org.

- 52. Pedregosa F., Varoquaux G., Gramfort A., Michel V., Thirion B., Grisel O., Blondel M., Prettenhofer P., Weiss R., Dubourg V., et al. Scikit-learn: Machine learning in Python. J. Mach. Learn. Res. 2011;12:2825–2830.

- 53. Hunter J.D. Matplotlib: A 2D graphics environment. Comput. Sci. Eng. 2007;9:90–95. doi: 10.1109/MCSE.2007.55.

- 54. Efron B., Tibshirani R.J. An Introduction to the Bootstrap. CRC Press; Boca Raton, FL, USA: 1994.

- 55. Kanavati F., Ichihara S., Rambeau M., Iizuka O., Arihiro K., Tsuneki M. Deep learning models for gastric signet ring cell carcinoma classification in whole slide images. Technol. Cancer Res. Treat. 2021;20:15330338211027901. doi: 10.1177/15330338211027901.

- 56. Kanavati F., Toyokawa G., Momosaki S., Rambeau M., Kozuma Y., Shoji F., Yamazaki K., Takeo S., Iizuka O., Tsuneki M. Weakly-supervised learning for lung carcinoma classification using deep learning. Sci. Rep. 2020;10:9297. doi: 10.1038/s41598-020-66333-x.

- 57. Sanghvi A.B., Allen E.Z., Callenberg K.M., Pantanowitz L. Performance of an artificial intelligence algorithm for reporting urine cytopathology. Cancer Cytopathol. 2019;127:658–666. doi: 10.1002/cncy.22176.

- 58. Ren S., Solomides C., Draganova-Tacheva R., Bibbo M. Overview of nongynecological samples prepared with liquid-based cytology medium. Acta Cytol. 2014;58:522–532. doi: 10.1159/000363123.

- 59. Nasuti J.F., Tam D., Gupta P.K. Diagnostic value of liquid-based (Thinprep®) preparations in nongynecologic cases. Diagn. Cytopathol. 2001;24:137–141. doi: 10.1002/1097-0339(200102)24:2<137::AID-DC1027>3.0.CO;2-5.

- 60. Raisi O., Magnani C., Bigiani N., Cianciavicchia E., D’Amico R., Muscatello U., Ghirardini C. The diagnostic reliability of urinary cytology: A retrospective study. Diagn. Cytopathol. 2012;40:608–614. doi: 10.1002/dc.21716.

- 61. Rossi E., Mulè A., Russo R., Pierconti F., Fadda G. Application of liquid-based preparation to non-gynaecologic exfoliative cytology. Pathologica. 2008;100:461–465.
