Abstract

Objective

We developed a smartphone application for patients with rheumatoid arthritis (RA) that allows them to self‐monitor their disease activity in between clinic visits by answering a weekly Routine Assessment of Patient Index Data 3. This study was undertaken to assess the safety (noninferiority in the Disease Activity Score in 28 joints using the erythrocyte sedimentation rate [DAS28‐ESR]) and efficacy (reduction in number of visits) of patient‐initiated care assisted using a smartphone app, compared to usual care.

Methods

A 12‐month, randomized, noninferiority clinical trial was conducted in RA patients with low disease activity and without treatment changes in the past 6 months. Patients were randomized 1:1 to either app‐supported patient‐initiated care with a scheduled follow‐up consultation after a year (app intervention group) or usual care. The coprimary outcome measures were noninferiority in terms of change in DAS28‐ESR score after 12 months and the ratio of the mean number of consultations with rheumatologists between the groups. The noninferiority limit was a between‐group difference of 0.5 in DAS28‐ESR.

Results

Of the 103 randomized patients, 102 completed the study. After a year, noninferiority in terms of the DAS28‐ESR score was established, as the 95% confidence interval (95% CI) of the mean ΔDAS28‐ESR between the groups was within the noninferiority limit: −0.04 in favor of the app intervention group (95% CI −0.39, 0.30). The number of rheumatologist consultations was significantly lower in the app intervention group compared to the usual care group (mean ± SD 1.7 ± 1.8 versus 2.8 ± 1.4; visit ratio 0.62 [95% CI 0.47, 0.81]).

Conclusion

Patient‐initiated care supported by smartphone self‐monitoring was noninferior to usual care in terms of the ΔDAS28‐ESR and led to a 38% reduction in rheumatologist consultations in RA patients with stable low disease activity.

INTRODUCTION

Patients with rheumatoid arthritis (RA) are routinely scheduled for follow‐up appointments, but this format may not be sustainable. The American College of Rheumatology (ACR) workforce study estimates that by 2030 the projected number of rheumatologists will meet less than half of the projected need (1). Demand for rheumatology care is growing because of the increasing number of patients with RA and the overall increase in health care utilization, while supply is shrinking because of a declining rheumatology workforce (1, 2). Therefore, more health care will need to be provided with the same number of people and resources (3, 4). Currently in The Netherlands, most patients with RA consult their physician every 3 to 6 months, following the EULAR guidelines (5). This approach may be inefficient: on the one hand, 75% of patients are in a low disease activity state or in remission (6), and on the other hand, flares often occur between outpatient clinic visits and can therefore still be missed and left untreated (7). Thus, the current process needs to be optimized to remain sustainable.

As a solution, outpatient clinics could switch from preplanned visits to providing “health care on demand,” in which patients initiate health care themselves when needed. The effectiveness of patient‐initiated care in patients with RA is still under investigation. Hewlett et al, Primdahl et al, and Poggenborg et al have shown that patients who self‐initiated care (for 2 to 6 years) did at least as well clinically and psychologically as patients with regular physician‐initiated appointments, while having fewer appointments (8, 9, 10). However, a similar Swedish study showed that, although patient‐initiated care was similar to traditional care in terms of clinical outcomes such as the Disease Activity Score in 28 joints using the erythrocyte sedimentation rate (DAS28‐ESR) (11) after 18 months, appointment frequency did not differ between the 2 groups (12). In 2 of these studies, a general practitioner and a research nurse, rather than the rheumatologist, monitored or followed up patients; thus, although health care costs may have been saved, health care usage may have been redirected rather than reduced (10, 12). Furthermore, a potential downside of patient‐initiated care is that information on RA disease activity may be lacking for long periods, which could complicate disease activity–guided management. This could be resolved by letting patients monitor themselves with electronic patient‐reported outcomes (ePROs) between clinic visits (self‐monitoring), which provides disease activity information between visits and allows for better disease activity–guided management (13).

So far, self‐monitoring of RA disease activity with ePROs has not been shown to improve patient satisfaction or disease activity (14), but improvements have been seen in self‐management skills, patient empowerment, patient–physician interaction, and physical activity (15, 16, 17, 18). Several studies also demonstrate high acceptance of self‐monitoring and high questionnaire completion rates (14, 19). In addition, self‐monitoring can reduce outpatient clinic visits by ~50% (20, 21, 22). To date, none of these studies have combined patient‐initiated care (to reduce the number of visits) with self‐monitoring (to maintain disease control).

The objective of this study was to assess the safety and efficacy of patient‐initiated care combined with weekly ePRO self‐monitoring through a smartphone application in patients with RA and low disease activity. We hypothesized that combining patient‐initiated care with self‐monitoring would lead to a lower number of outpatient clinic visits while maintaining other health care outcomes such as disease activity and patient satisfaction.

PATIENTS AND METHODS

This was a 1‐year, randomized, controlled, noninferiority clinical trial, with blinded outcome assessment. The protocol was registered on www.trialregister.nl (NL7715) and approved by the Medical Ethics Committee of the Vrije Universiteit Medical Center Amsterdam. All patients provided oral and written informed consent prior to participation.

A detailed description of the trial methods has been published previously (23). Briefly, the inclusion criteria were as follows: 1) age ≥18 years, 2) diagnosis of RA by a rheumatologist, 3) disease duration of ≥2 years, 4) DAS28‐ESR score of <3.2 at the start of the study, 5) smartphone ownership, and 6) ability to read and write Dutch. Patients with a disease duration of ≥2 years were expected to have sufficient experience with their own disease and its flares to allow for patient‐initiated care. Patients were excluded if they had initiated or discontinued a conventional or biologic disease‐modifying antirheumatic drug (DMARD) in the previous 6 months or if they were participating in another intervention trial. One of the authors (BS) asked treating rheumatologists for permission to recruit, by telephone, all patients attending the outpatient clinic whose electronic medical record (EMR) noted previously low disease activity. Each patient was called up to twice; if there was no response, no further attempts to include the patient were made.

Patients were randomized 1:1 to the app intervention group or the usual care group according to a computer‐generated random number sequence with variable block sizes of 4, 6, or 8, using the online program Castor (24). The intervention consisted of weekly self‐monitoring (completing a Routine Assessment of Patient Index Data 3 [RAPID3] questionnaire in a smartphone app designed for this purpose) with a single preplanned consultation at the end of the trial period (23, 25). In the usual care group, preplanned outpatient clinic visits were continued at the discretion of the treating rheumatologist (usually every 3 to 6 months). After randomization, patients in the app intervention group received login credentials for the app. The first login took place at Reade Rheumatology and Rehabilitation to make sure the process was clear, and patients were shown the features of the app. Patients also received information about patient‐initiated care and were told that they could contact the outpatient clinic whenever they deemed it necessary (in case of questions or symptoms). Patients in the usual care group were also allowed to contact the outpatient clinic if necessary. At the start and end of the trial, the DAS28‐ESR was assessed in a blinded manner by medical doctors or nurses who had no treatment relationship with the patient and who were called into the room during study visits. All medical doctors (PhD candidates) and nurses at Reade receive specific training in joint evaluation and perform joint evaluations multiple times per week.
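The allocation scheme described above (1:1 allocation in permuted blocks whose size is drawn at random from 4, 6, or 8) can be sketched as follows. This is a minimal illustration of the general permuted‐block technique under stated assumptions: the trial used the randomization built into Castor, whose internal algorithm is not described here, and the function name `block_randomize` and the arm labels are hypothetical.

```python
import random


def block_randomize(n_patients, block_sizes=(4, 6, 8), arms=("app", "usual care"), seed=None):
    """Build a 1:1 allocation sequence from permuted blocks of random size.

    Hypothetical sketch of the general technique; it is not Castor's algorithm.
    All block sizes must be even so that each block is balanced.
    """
    rng = random.Random(seed)
    sequence = []
    while len(sequence) < n_patients:
        size = rng.choice(block_sizes)              # draw the next block size at random
        block = [arms[i % 2] for i in range(size)]  # equal numbers of each arm
        rng.shuffle(block)                          # random order within the block
        sequence.extend(block)
    return sequence[:n_patients]                    # the last block may be cut short


allocation = block_randomize(103, seed=2019)
print(allocation.count("app"), allocation.count("usual care"))
```

Because every complete block is balanced and no block holds more than 8 patients, the arms can differ by at most 4 patients at any point in the sequence, while the varying block size makes the next allocation harder to predict than with a fixed block size.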

The trial was performed at Reade, a secondary rheumatology care center in the Amsterdam region of The Netherlands that employs 17 full‐ and part‐time rheumatologists. Everyone in The Netherlands has access to health care services provided they have health insurance, which is mandatory for all Dutch residents (26). The Netherlands has excellent mobile network coverage (96.8% overall and 100% in Amsterdam); in addition, 97% of people have internet access at home, and >87% of the Dutch adult population owns a smartphone (27, 28, 29). Dutch mobile download speed currently ranks fifth worldwide (30).

The smartphone application

The app and its development following the Medical Research Council framework have been described extensively elsewhere (23, 25). In brief, the app prompted patients each week to complete the RAPID3. Patients could use their RAPID3 results to monitor themselves during the year, reflect on the course of their disease, and contact the outpatient clinic in a timely manner in case of worsening complaints. Communication with the physician or nurse at the outpatient clinic was also easier, as clinicians had access to the same data. The app used a RAPID3 algorithm to identify potential RA flares: a flare notification was triggered when the RAPID3 score increased by >2 points from the previous data point and the new RAPID3 score was >4. The notification informed the patient about the possible flare, linked to self‐management tips, and advised the patient to contact a rheumatology nurse if they deemed it necessary. Scores in the app were not used to trigger clinician‐initiated contact with the patient. The data collected in the app were synchronized in real time with the EMR at Reade.
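The flare rule described above can be expressed as a small function. This is a sketch of the rule as stated in the text, not the app's source code: the function name is hypothetical, RAPID3 is assumed to be on its 0–10 scale (consistent with the baseline values in Table 1), and skipping the first questionnaire, which has no previous data point, is an assumption.

```python
from typing import Optional


def flare_notification(previous: Optional[float], current: float) -> bool:
    """Return True when the flare rule described in the text would fire.

    Rule: RAPID3 rises by more than 2 points versus the previous completed
    questionnaire AND the new RAPID3 score is above 4 (0-10 scale assumed).
    """
    if previous is None:
        # Assumption: the first questionnaire has no previous data point to compare with.
        return False
    return (current - previous) > 2 and current > 4


# Example: scan one patient's series of completed weekly RAPID3 scores.
weekly_scores = [1.5, 2.0, 4.7, 5.0, 2.2, 6.8]
previous_scores = [None] + weekly_scores[:-1]
flags = [flare_notification(p, c) for p, c in zip(previous_scores, weekly_scores)]
print(flags)  # [False, False, True, False, False, True]
```

Both conditions must hold: a rise from 2.0 to 4.7 triggers a notification, whereas a rise from 5.0 to 6.0 (only 1 point) or from 1.0 to 3.5 (score not above 4) does not.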

Primary outcome measures

The first coprimary outcome measure was noninferiority in terms of change in DAS28‐ESR after 12 months. The noninferiority limit was set at a between‐group difference of 0.5 in DAS28‐ESR.

The second coprimary outcome measure was the number of consultations (by telephone and in person) with a rheumatologist. The number of consultations (by telephone and in person) with nurses was evaluated as a secondary outcome.

Secondary outcome measures

Patient empowerment

Patient empowerment was measured using the effective consumer scale 17 (EC‐17). In the EC‐17, patients rate how often each statement is true for them, which measures their skills in managing their own health care. Each item is scored from 0 (never) to 4 (always), and the total score is converted to a scale of 0 to 100 (higher is better) (31).
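The EC‐17 conversion can be sketched as below. The text states only that the total is converted to a scale out of 100; a linear transformation of the raw sum (which ranges from 0 to 68 for 17 items scored 0–4) is assumed here, and the function name is hypothetical.

```python
def ec17_score(items):
    """Rescale 17 EC-17 item scores (each 0-4) to a 0-100 score.

    Assumption: a linear transformation of the raw sum (0-68); the exact
    conversion used in the study is not specified in the text.
    """
    if len(items) != 17:
        raise ValueError("EC-17 has 17 items")
    if any(not 0 <= x <= 4 for x in items):
        raise ValueError("each item is scored 0 (never) to 4 (always)")
    return sum(items) / (17 * 4) * 100


print(ec17_score([3] * 17))  # 75.0
```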

Patient–physician interaction

The patient–physician interaction was measured with the 5‐item Perceived Efficacy in Patient–Physician Interactions (PEPPI‐5). The PEPPI‐5 is scored on a scale of 1 (not confident at all) to 5 (completely confident). The 5 items are added together to form a total score (out of a possible 25), with higher scores indicating more perceived efficacy in patient–physician interaction.
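Because each of the 5 items is scored from 1 to 5, the PEPPI‐5 total ranges from 5 to 25 rather than from 0 to 25. A minimal scoring sketch, with a hypothetical function name:

```python
def peppi5_total(items):
    """Sum the 5 PEPPI-5 items (each 1-5) into a total score of 5-25."""
    if len(items) != 5:
        raise ValueError("PEPPI-5 has 5 items")
    if any(x not in (1, 2, 3, 4, 5) for x in items):
        raise ValueError("each item is scored 1 (not confident at all) to 5 (completely confident)")
    return sum(items)


print(peppi5_total([5, 4, 4, 5, 4]))  # 22
```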

Patient compliance

To measure medication compliance, the 5‐item Compliance Questionnaire for Rheumatology (CQR‐5) was used. The CQR‐5 classifies patients as either “high” or “low” adherents.

Patient satisfaction with treatment

Satisfaction with treatment was measured with the 9‐item Treatment Satisfaction Questionnaire for Medication (TSQM‐9). The questionnaire measures satisfaction with treatment on 3 domains: effectiveness, convenience, and global satisfaction. TSQM scores have a range of 0 to 100, with higher scores indicating greater satisfaction.

Patient satisfaction with health care

Patient satisfaction with health care was measured on a 10‐point Likert scale on 3 domains: ease of contacting our hospital, satisfaction with health care received, and likelihood of recommending our hospital. Higher scores indicate greater satisfaction.

Physician satisfaction

Physician satisfaction with the new form of health care delivery and with the way the results were incorporated in the EMR was measured with 1 overall satisfaction question on a 10‐point scale and 6 statements on a 5‐point Likert scale. Physicians rated how much they agreed with each statement, from 1 (not at all) to 5 (very much). Higher scores indicate greater satisfaction.

Usability of the application

Finally, the System Usability Scale was used to determine the usability of the app; the scale ranges from 0 to 100, with a score of 52 indicating “OK” usability and 72 indicating “good” usability (32, 33). This outcome measure was suggested by the ethics review board but was inadvertently omitted from the published protocol paper.
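For reference, the standard SUS scoring rule, which the text does not spell out, works as follows: each of the 10 items is answered on a 1–5 scale; odd‐numbered (positively worded) items contribute the response minus 1, even‐numbered (negatively worded) items contribute 5 minus the response, and the sum (0 to 40) is multiplied by 2.5. The sketch assumes the app questionnaire used this standard scoring; the function name is hypothetical.

```python
def sus_score(responses):
    """Compute a System Usability Scale score (0-100) from 10 responses of 1-5."""
    if len(responses) != 10:
        raise ValueError("the SUS has 10 items")
    total = 0
    for item_number, response in enumerate(responses, start=1):
        if not 1 <= response <= 5:
            raise ValueError("responses range from 1 to 5")
        if item_number % 2 == 1:
            total += response - 1  # odd items are positively worded
        else:
            total += 5 - response  # even items are negatively worded
    return total * 2.5             # rescale the 0-40 sum to 0-100


print(sus_score([4, 2, 4, 2, 5, 1, 4, 2, 4, 2]))  # 80.0
```

In this example, the respondent's score of 80.0 lies above the threshold of 72 for “good” usability.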

COVID‐19 protocol amendments and power analysis

Because of the global pandemic, inclusion was stopped prematurely in April 2020 after 103 patients had been included, and the trial protocol was amended accordingly. Inclusion was stopped because, under the COVID‐19 contact restrictions, newly randomized patients in the usual care group would also have been monitored at a distance by their rheumatologist throughout the study. This would have created 2 different control groups, complicating the analysis and interpretation of the data. We reevaluated our power analysis in collaboration with a statistician (MB) and changed the noninferiority limit to 0.5 (initially 0.3), which is still below the minimal relevant change in DAS28‐ESR score of 0.6 (often chosen as the noninferiority cutoff) (22). The assumed SD of 0.6 in DAS28‐ESR score for a group of RA patients remained unaltered. With these changes, 64 patients would be required to be 95% sure that the 95% CI of the between‐group difference would exclude a difference of more than 0.5 in favor of usual care, if there were truly no difference between the standard and experimental treatment. Therefore, by the time inclusion was stopped, a sufficient number of patients had been included to analyze the primary outcome measure.
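The reported sample size can be reproduced with the usual normal‐approximation formula for a noninferiority comparison of 2 means, n per group = 2 × σ² × (z(1 − α) + z(1 − β))² / Δ², with σ = 0.6 and Δ = 0.5. The text does not state α explicitly; the sketch assumes a one‐sided α of 0.05 (equivalent to a two‐sided 90% CI) and 95% power, which are the settings that yield 64 patients. The function name is hypothetical.

```python
from math import ceil
from statistics import NormalDist


def noninferiority_n_per_group(sd, margin, alpha=0.05, power=0.95):
    """Patients per arm for a noninferiority comparison of two means.

    Assumes no true difference between arms, a common SD, a one-sided
    significance level `alpha`, and the normal approximation.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha)  # one-sided critical value
    z_beta = NormalDist().inv_cdf(power)       # quantile for the desired power
    return ceil(2 * sd ** 2 * (z_alpha + z_beta) ** 2 / margin ** 2)


for margin in (0.5, 0.3):
    n = noninferiority_n_per_group(sd=0.6, margin=margin)
    print(f"margin {margin}: {n} per group, {2 * n} in total")
# margin 0.5: 32 per group, 64 in total
# margin 0.3: 87 per group, 174 in total
```

Under the same assumptions, the original limit of 0.3 would have required 174 patients, well above the 103 included when enrollment stopped.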

At the start of the pandemic, in‐person visits were changed to (nonvideo) telephone consultations for most patients. Typically, only patients with flares were seen in the outpatient clinic after COVID‐19 onset, although this policy fluctuated with the number of COVID‐19 infections and hospital admissions in The Netherlands. Therefore, telephone consultations with the rheumatologist were also counted toward the end point of number of consultations with a rheumatologist.

Statistical analysis

All analyses were conducted using SPSS version 25. The distribution of all outcomes was assessed visually (histograms). Normally distributed data are presented as the mean ± SD; otherwise, the median and interquartile range are reported. A 2‐sided P value less than 0.05 was considered statistically significant; because there were only 2 separate hypotheses, we did not correct for multiple testing. All outcomes were analyzed on a per‐protocol basis, as only 1 patient dropped out of the study.

For the first primary outcome measure, noninferiority of the DAS28‐ESR, the between‐arm difference in DAS28‐ESR at follow‐up was analyzed by linear regression adjusted for the baseline value. For the second primary outcome measure, the number of consultations with a rheumatologist, a Poisson distribution was assumed, and a (longitudinal) Poisson regression was used to estimate the ratio of the total rate of outpatient visits and telephone consultations with a rheumatologist in the app intervention group relative to the usual care group. As a secondary outcome measure, the ratios of the number of telephone and in‐person nurse consultations in the app intervention group relative to the usual care group were evaluated separately. The assumptions of the regression models were checked.
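The total visit rate ratio can be approximated from aggregate counts. Summing the rheumatologist consultation counts before and after COVID‐19 onset reported in the Results gives 85 consultations (20 + 65) among 49 patients in the app intervention group and 149 (37 + 112) among 53 patients in the usual care group. The sketch below computes a crude rate ratio with a Wald confidence interval on the log scale. It is a simplification of the longitudinal Poisson regression used in the study, assuming equal 1‐year follow‐up per patient and no overdispersion, and the function name is hypothetical.

```python
from math import exp, log, sqrt
from statistics import NormalDist


def rate_ratio_ci(events_a, patients_a, events_b, patients_b, level=0.95):
    """Crude Poisson rate ratio (group A / group B) with a Wald CI on the log scale.

    Assumes each patient contributes equal follow-up time, so the number of
    patients serves as the person-time denominator.
    """
    rate_ratio = (events_a / patients_a) / (events_b / patients_b)
    se_log = sqrt(1 / events_a + 1 / events_b)  # SE of log(rate ratio)
    z = NormalDist().inv_cdf(0.5 + level / 2)    # about 1.96 for a 95% CI
    lower = exp(log(rate_ratio) - z * se_log)
    upper = exp(log(rate_ratio) + z * se_log)
    return rate_ratio, lower, upper


rr, lower, upper = rate_ratio_ci(85, 49, 149, 53)
print(f"{rr:.2f} ({lower:.2f}, {upper:.2f})")  # 0.62 (0.47, 0.81)
```

After rounding, this crude estimate agrees with the total visit rate ratio of 0.62 (95% CI 0.47, 0.81) reported in the Abstract; a regression model is nevertheless preferable because it can account for repeated measurements and covariates.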

For all other secondary outcome measures, normally distributed continuous variables were compared using linear regression. If the outcome variable was not normally distributed, the Δ (12‐month value − baseline value) was analyzed instead; if the Δ was also not normally distributed, the outcome was log‐transformed to see whether it became normally distributed. All outcomes were adjusted for age, sex, body mass index, education level, disease duration, and baseline score. Completion rates of the weekly questionnaires are presented as total numbers and percentages.

RESULTS

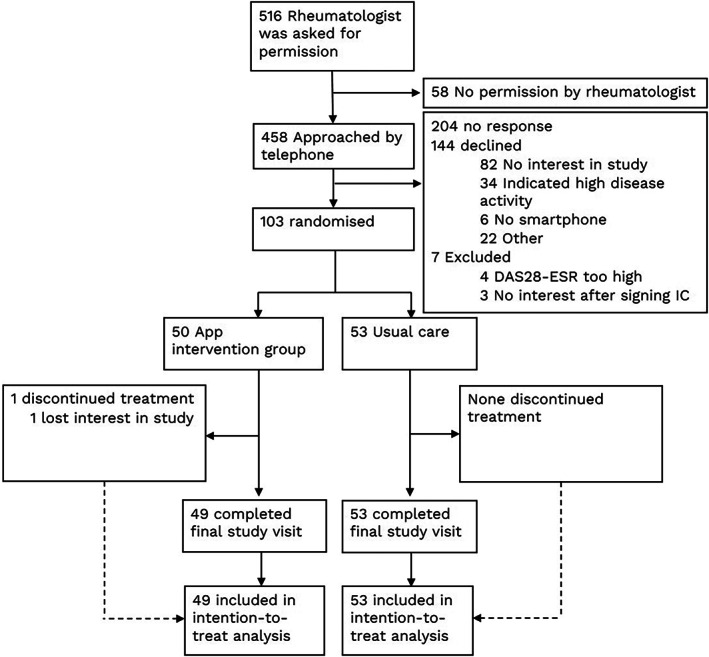

Between May 2019 and April 2020, 103 patients provided written informed consent and were randomized to the app intervention group (n = 50) or the usual care group (n = 53) (Figure 1). At the end of the 12‐month study period, 49 patients (98%) and 53 patients (100%) completed the final study visit in the app and usual care groups, respectively. Baseline characteristics of the 2 groups are presented in Table 1. The patients who were not interested in the study were slightly older (mean age 61 years), and 26% of them were male (versus 44% in the study).

Figure 1.

Consolidated Standards of Reporting Trials diagram of rheumatoid arthritis patient selection and flow of participants throughout the study of patient‐initiated care assisted using a smartphone app versus usual care. DAS28‐ESR = Disease Activity Score in 28 joints using the erythrocyte sedimentation rate; IC = informed consent.

Table 1.

Baseline characteristics of the study patients*

| Characteristic | App intervention group (n = 50) | Usual care group (n = 53) |
|---|---|---|
| Age, years | 58 ± 13 | 57 ± 11 |
| Male sex, no. (%) | 22 (44) | 21 (40) |
| BMI, kg/m² | 26 ± 4.4 | 26 ± 4.5 |
| Disease duration, median (IQR) years | 11 (5–18) | 9 (5–14) |
| Tertiary education, no. (%)† | 28 (56) | 27 (51) |
| DAS28‐ESR score | 1.7 ± 0.7 | 1.5 ± 0.7 |
| RAPID3 score | 2.3 ± 1.5 | 1.9 ± 1.4 |
| ACPA‐positive, no. (%) | 33 (66) | 40 (75) |
| RF‐positive, no. (%) | 29 (58) | 33 (62) |

* Except where indicated otherwise, values are the mean ± SD. BMI = body mass index; IQR = interquartile range; DAS28‐ESR = Disease Activity Score in 28 joints using the erythrocyte sedimentation rate; RAPID3 = Routine Assessment of Patient Index Data 3; ACPA = anti–citrullinated protein antibody; RF = rheumatoid factor.

† Higher vocational or university education.

The DAS28‐ESR slightly increased in both groups (ΔDAS28‐ESR in app intervention group was 0.27 versus 0.35 in usual care group). Noninferiority was established, as the 95% CI of the mean difference in DAS28‐ESR between the groups was within the noninferiority limit: −0.04 in favor of the intervention group (95% CI −0.39, 0.30), adjusted for baseline DAS28‐ESR (no significant confounders).

After 12 months, the number of rheumatologist telephone consultations and outpatient clinic visits was significantly lower in the app intervention group, with a total visit rate ratio of 0.62 (95% CI 0.47, 0.81) relative to the usual care group (P < 0.001). The total number of nurse consultations was also lower in the app intervention group (Table 2).

Table 2.

Number of consultations per group and ratio of number of visits in the app intervention group compared to the usual care group*

| Consultation type | App intervention group (n = 49) | Usual care group (n = 53) | Visit rate ratio (95% CI)† | P |
|---|---|---|---|---|
| Rheumatologist consultations | | | | |
| Telephone | 1.4 ± 1.6 | 1.8 ± 1.7 | 0.8 (0.59, 1.10) | 0.16 |
| Outpatient | 0.3 ± 0.6 | 1.1 ± 0.8 | 0.3 (0.18, 0.54) | <0.001 |
| Total | 1.7 ± 1.8 | 2.8 ± 1.4 | 0.6 (0.47, 0.81) | <0.001 |
| Nurse consultations | | | | |
| Telephone | 0.4 ± 0.8 | 0.5 ± 0.9 | 0.8 (0.41, 1.43) | 0.40 |
| Outpatient | 0.1 ± 0.3 | 0.3 ± 0.6 | 0.3 (0.11, 0.81) | 0.02 |
| Total | 0.4 ± 0.9 | 0.8 ± 1.1 | 0.6 (0.34, 0.95) | 0.03 |

* Values are the mean ± SD number of consultations per patient per year in each group, and the ratio (95% confidence interval [95% CI]) of the rate of visits in the app intervention group compared to the usual care group.

† Results of per‐protocol analysis with a longitudinal Poisson regression are shown.

The proportion of in‐person consultations among all rheumatologist consultations (in‐person and telephone) differed before and after COVID‐19 onset (March 1, 2020). In the app intervention group, the proportion changed from 0.15 (3 of 20) to 0.20 (13 of 65), and in the usual care group, it changed from 0.59 (22 of 37) to 0.30 (34 of 112). This suggests that, without COVID‐19, the usual care group would likely have had more in‐person and fewer telephone consultations.

The number of flare visits was 12 (in 11 patients) in the app intervention group and 18 (in 11 patients) in the control group. These consultations led to intensification of treatment with DMARDs or steroids in 9 patients in each group. In the app intervention group, 8 of 12 flare consultations were not preceded by a flare notification. During the study, there were 40 flare notifications, of which 36 did not lead to a consultation (i.e., 10% of the notifications led to a consultation). Reasons for not contacting the outpatient clinic after a flare notification were as follows: suspected a flare but chose to wait and see (n = 20), complaints caused by something else (n = 6), did not think it was a flare/did not agree (n = 5), other (n = 2), or unknown (n = 3).

During the study, there were no significant differences between groups in patient‐reported disease activity (RAPID3), self‐management (EC‐17), patient–physician interaction (PEPPI‐5), or medication adherence (CQR‐5) at 12 months. Satisfaction with health care was high in both groups and not statistically different (Table 3).

Table 3.

Between‐group differences in change from baseline value for the secondary outcome measures*

| Outcome | App intervention group (n = 49)† | Usual care group (n = 53)‡ | Estimated intervention effect (95% CI)§ |
|---|---|---|---|
| RAPID3 | | | |
| Baseline | 2.3 ± 1.5 | 1.9 ± 1.4 | – |
| Δ12 months | −0.2 ± 1.5 | 0.3 ± 1.4 | −0.36 (−0.93, 0.21) |
| EC‐17 | | | |
| Baseline | 80.3 ± 11.8 | 78.6 ± 10.4 | – |
| Δ12 months | 0.4 ± 11.7 | 1.3 ± 7.5 | −0.33 (−3.75, 3.09) |
| PEPPI‐5 | | | |
| Baseline | 21.7 ± 2.5 | 21.3 ± 2.8 | – |
| Δ12 months | −0.5 ± 3.2 | 0.3 ± 2.5 | −0.87 (−2.00, 0.26) |
| TSQM‐9 | | | |
| Medication effectiveness | | | |
| Baseline | 73.8 ± 16.1 | 72.5 ± 21.2 | – |
| Δ12 months | −0.6 ± 12.5 | −1.6 ± 19.0 | 0.94 (−5.30, 7.17) |
| Medication convenience | | | |
| Baseline | 77.0 ± 13.3 | 77.6 ± 14.7 | – |
| Δ12 months | −1.4 ± 16.1 | 2.1 ± 12.9 | −4.01 (−9.65, 1.63) |
| Medication global satisfaction | | | |
| Baseline | 69.8 ± 14.5 | 71.2 ± 16.6 | – |
| Δ12 months | 1.5 ± 11.0 | 0.6 ± 13.0 | 0.10 (−4.58, 4.77) |
| Satisfaction with health care¶ | | | |
| Ease of contact | | | |
| Baseline, median (IQR) | 10 (9–10) | 10 (10–10) | – |
| Δ12 months | −0.1 ± 0.6 | −0.1 ± 0.8 | 0.01 (−0.28, 0.29) |
| Health care received | | | |
| Baseline, median (IQR) | 9 (8–9) | 9 (8–9) | – |
| Δ12 months | −0.1 ± 1.4 | 0.0 ± 1.3 | −0.10 (−0.62, 0.43) |
| Recommend hospital | | | |
| Baseline, median (IQR) | 9 (8–10) | 9 (8–10) | – |
| Δ12 months | −0.4 ± 1.8 | −0.1 ± 1.4 | −0.28 (−0.92, 0.37) |
| CQR‐5, no. (%)# | | | |
| Baseline | 32 (65) | 40 (76) | – |
| 12 months | 30 (63) | 40 (77) | 0.54 (0.21, 1.42) |

* Except where indicated otherwise, values are the mean ± SD. Values at 12 months are the mean ± SD difference from the baseline value. Lower Routine Assessment of Patient Index Data 3 (RAPID3) scores indicate lower disease activity, while for all other outcome measures, higher scores are better. 95% CI = 95% confidence interval; EC‐17 = effective consumer scale 17; PEPPI‐5 = Perceived Efficacy in Patient–Physician Interaction Questionnaire 5; TSQM‐9 = 9‐item Treatment Satisfaction Questionnaire for Medication; IQR = interquartile range; CQR‐5 = Compliance Questionnaire for Rheumatology 5.

† At 12 months, n = 48.

‡ At 12 months, n = 52.

§ Adjusted for age, sex, disease duration, body mass index, education level, and baseline value.

¶ Analysis of Δ (12‐month value − baseline value) to obtain a normal distribution.

# Classified as high medication adherence.

Patients rated the usability of the app (out of a possible 100) with a median of 78 (interquartile range [IQR] 60–90) at 6 months and 80 (IQR 65–93) at 12 months, indicating good‐to‐excellent usability. The mean ± SD number of completed questionnaires was 31 ± 14 of a possible 52 (59%), which corresponds to 1 completed questionnaire every 1.7 weeks (about 12 days). The mean ± SD completion rates during the first, second, third, and fourth quarters of the year were 58 ± 7%, 59 ± 5%, 66 ± 6%, and 54 ± 6%, respectively. In total, 10 of 17 rheumatologists included patients in the study, and 9 of these 10 completed the final evaluation of the telemedicine platform. The mean physician satisfaction score was 7.3 out of 10. Detailed results of the final evaluation are presented in Supplementary Table 1, available on the Arthritis & Rheumatology website at http://onlinelibrary.wiley.com/doi/10.1002/art.42292.

During the trial, 24 bug reports were made regarding 8 different bugs in the application. Most importantly, notifications did not work for most patients between July 2019 and February 2021. As a result, most patients received few or no phone reminders to complete their questionnaires during the study. Starting in March 2020, automated weekly email reminders were sent to patients to limit the further impact of this bug. Adherence during the email‐reminder period (62%; 1,009 of 1,625) was similar to adherence before the email reminders (58%; 537 of 923). Other reported bugs included the following: the possibility of entering a negative morning stiffness duration, a newly created password not working, inability to log in, not receiving a login token, fingerprint login not working, the badge icon (red reminder “1”) not disappearing after the questionnaire was completed, and inability to send the questionnaire. These problems were resolved and did not recur.

DISCUSSION

This randomized controlled trial compared app‐assisted, patient‐initiated care with usual care in patients with RA and low disease activity. Our findings show that this intervention results in a significantly lower number of consultations with rheumatologists (38% fewer). Additionally, the intervention was noninferior to usual care in terms of disease activity, and patient‐reported outcomes remained favorable in the app intervention group.

Our findings show that it is possible to optimize RA health care delivery by letting patients initiate consultations and self‐monitor their disease. The inefficiency of the current system of preplanned consultations for disease monitoring is illustrated by the similar number of extra, patient‐initiated consultations in both groups. Our results are corroborated by previous studies that maintained disease activity outcomes while reducing the number of outpatient clinic visits with a monitoring‐at‐a‐distance protocol (by 51% after 2 years and by 79% after 6 months) (20, 21) or with patient‐initiated care (8, 9, 10). In contrast, Fredriksson et al reported that patient‐initiated care (without an app) did not lower the number of consultations in their population (12). This may have been a consequence of the frequent nurse monitoring visits in their study, which could have counteracted the effect of patient‐initiated care. Given the projected increase in health care demand and decrease in supply (or availability), patient‐initiated care combined with self‐monitoring appears to be a promising direction.

While health care utilization dropped, no differences were found in patient–physician interaction, health care satisfaction, self‐efficacy, or treatment satisfaction between the intervention and control groups. Lee et al also found no improvement in these outcomes after an intervention using a smartphone app (14), which contrasts with other studies (15, 17, 34). In our study, the secondary outcome measures were already at favorable levels at baseline, which suggests that patients with low disease activity value the current system of face‐to‐face consultations, as has been described previously (35). From a value‐based health care perspective, the fact that these patient‐reported outcomes remained at favorable levels indicates that a similar quality of health care can be provided with less medical labor. Patients have also acknowledged that prioritizing the allocation of clinic visits according to patient‐generated RA disease activity data collected via an app would be acceptable and fair when demand exceeds capacity (35).

The flare notification did not function as intended, as most flare consultations were not preceded by a flare notification. In most cases, the flare notification was given during a rise in complaints, and it may have helped patients reflect on the cause of their complaints and self‐manage their symptoms. However, the current study design did not allow us to separate the added value of weekly app self‐monitoring from that of patient‐initiated care alone. Adding a third arm (patient‐initiated care without the app) was discussed, but it would have meant that information on disease activity would be lacking for a full year. This was deemed unethical and inconsistent with EULAR and ACR treatment protocols. Therefore, a design following a recent telemedicine study protocol was chosen (36). The value of smartphone apps and monitoring has previously been indicated for both patients and rheumatologists. Patients have been predominantly positive about online self‐monitoring, reporting that it helps them assess the course of their disease, makes them feel less dependent on the health care professional, and aids communication with their physician (23, 37, 38, 39). In addition, rheumatologists whose patients self‐monitor with ePROs are less likely to have difficulty estimating how their patients are doing than rheumatologists without access to ePRO data (40).

The average questionnaire completion rate (59%) was relatively low compared with previously reported rates of 91% by Austin et al, 79% by Colls et al, and 69% by Seppen et al (calculated from response rates) (25, 41, 42). This may be due to the longer duration of the present study, which was ≥6 months longer than the aforementioned studies (although adherence did not steadily decline during this study), the lack of notifications during a major part of the study, or the overall persuasive design of the app (43). So far, it is unclear how often patients need to be monitored for consultations to be targeted according to need. The burden on patients should be kept as low as possible while still collecting sufficient data to make informed treatment decisions. The results of the present study suggest that weekly collection (with ~60% actual completion) is sufficient to maintain low disease activity.

Strengths of the study include the randomized controlled design with blinded outcome assessment and the number of participants. The study expands the population in which telemonitoring has been successfully tested: Salaffi et al and Pers et al previously showed that such a system can also be deployed for intensive telemonitoring and treatment of patients with recently diagnosed active RA or patients who recently changed treatment (20, 44). In theory, our results apply to many RA patients in affluent countries, namely those with a disease duration of >2 years who are in a low disease activity state (>70% of patients according to Haugeberg et al) (6) and who have a smartphone. However, only 20% of the approached patients agreed to participate, and women in particular were less likely to participate. This illustrates that although health technology could be applicable to anyone, it is not adopted by everyone. This limited adoption is also reflected in the number of participating rheumatologists (10 of 17) and in the somewhat neutral physician satisfaction results. Future research should focus on improving the adoption of health technologies in a larger population, which may be achieved through a more patient‐centered design and greater efforts by health care providers to involve patients in health technology programs (45).

There are limitations to this study. First, generalizability is limited for 2 reasons: 1) only 20% of the patients who were approached were randomized to a treatment group, which could have introduced selection bias, and 2) inclusion was limited to patients who are able to use technology such as smartphones. Even though smartphone use is widespread (>90% of adults in The Netherlands) (46), hospitals that use apps should remain aware of patients who have insufficient eHealth literacy to participate in telemedicine care (47). In our study, very few patients declined for this reason, but to remain inclusive of all patients, traditional forms of health care should remain available, or patients should be trained to participate in the new form of health care (telemedicine). Anecdotally, patients easily learned how to use the app and quickly became comfortable using it. For those who need extra time, an eHealth walk‐in clinic could be organized.

Second, our results show a decline in health care utilization but do not yet demonstrate cost‐effectiveness of the intervention. A cost‐effectiveness evaluation will therefore be performed to assess the economic effects of the intervention. Third, using the DAS28‐ESR to compare disease activity at 2 time points has limitations. Since no DAS28‐ESR data were collected between the study visits, the DAS28‐ESR of both groups during the year is unknown, and it may have differed between the groups over the course of the year. However, as illustrated by the RAPID3 results, disease activity appeared similar in both groups at all time points during the study. Moreover, real‐life observational data from Muskens et al show that the reduction in the number of visits continues after the first year, while average disease activity does not deteriorate (it even slightly improves) (21). Fourth, testing 2 separate hypotheses, each at a significance level of 0.05, might be considered a weakness. However, the noninferiority limit would also have been met with a 97.5% CI, and the P value for the number of visits was below 0.025, the Bonferroni‐corrected threshold for 2 tests.
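The 97.5% CI argument can be checked approximately from the rounded figures reported in the abstract (mean difference −0.04, 95% CI −0.39 to 0.30, noninferiority limit 0.5). The Python sketch below is only an illustration under stated assumptions, not the trial's analysis: it assumes the reported CI was built with a normal approximation (so a standard error can be back‐calculated from its width) and that positive differences indicate worse disease activity in the app group. It then splits α = 0.05 over the 2 coprimary hypotheses (Bonferroni) and checks whether the upper bound of the wider 97.5% CI still stays below the margin.

```python
from statistics import NormalDist

# Reported (rounded) estimates from the abstract. Assumed sign convention:
# difference = app group minus usual care, so positive values favor usual care.
diff = -0.04
ci95_lower, ci95_upper = -0.39, 0.30
margin = 0.5  # noninferiority limit in DAS28-ESR units

# Assumption: the 95% CI is normal-based, so its width equals 2 * z * SE.
z95 = NormalDist().inv_cdf(0.975)
se = (ci95_upper - ci95_lower) / (2 * z95)

# Bonferroni correction: 2 coprimary hypotheses share an overall alpha of 0.05.
alpha_overall = 0.05
n_tests = 2
alpha_each = alpha_overall / n_tests

# A two-sided CI at level 1 - alpha_each (97.5%) uses z at 1 - alpha_each / 2.
z975 = NormalDist().inv_cdf(1 - alpha_each / 2)
ci975 = (diff - z975 * se, diff + z975 * se)

# Noninferiority holds if the upper bound stays below the margin.
noninferior = ci975[1] < margin
print(f"SE = {se:.3f}, 97.5% CI = ({ci975[0]:.2f}, {ci975[1]:.2f}), noninferior: {noninferior}")
```

Because the inputs are rounded published values, the reconstructed interval (upper bound of roughly 0.35) is approximate; it illustrates why widening the interval to 97.5% does not push the upper bound past the 0.5 limit.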

In conclusion, these findings suggest that patient‐initiated care combined with weekly self‐monitoring in patients with RA with low disease activity is safe in terms of the DAS28‐ESR, reduces the number of consultations with rheumatologists, and maintains high satisfaction with the health care received. Our intervention strategy may reduce the rheumatology workforce needed per patient with RA and could therefore decrease health care costs per patient; this will be evaluated in a separate analysis.

AUTHOR CONTRIBUTIONS

All authors were involved in drafting the article or revising it critically for important intellectual content, and all authors approved the final version to be published. Dr. Seppen had full access to all of the data in the study and takes responsibility for the integrity of the data and the accuracy of the data analysis.

Study conception and design

Seppen, ter Wee, van Schaardenburg, Roorda, Nurmohamed, Boers, Bos.

Acquisition of data

Seppen, Wiegel.

Analysis and interpretation of data

Seppen, Wiegel, ter Wee, Nurmohamed, Boers, Bos.

ROLE OF THE STUDY SPONSOR

This randomized controlled trial was an investigator‐initiated study funded by AbbVie. AbbVie had no role in the design or execution of the study, in the analysis or interpretation of the data, or in the decision to submit the results.

Supporting information

Disclosure Form

Supplementary Table 1 Physician satisfaction with the app.

This trial was funded by AbbVie.

Author disclosures are available at https://onlinelibrary.wiley.com/action/downloadSupplement?doi=10.1002%2Fart.42292&file=art42292‐sup‐0001‐Disclosureform.pdf.

REFERENCES

- 1. Battafarano DF, Ditmyer M, Bolster MB, et al. 2015 American College of Rheumatology workforce study: supply and demand projections of Adult Rheumatology Workforce, 2015–2030. Arthritis Care Res (Hoboken) 2018;70:617–26.

- 2. Kilian A, Upton LA, Battafarano DF, et al. Workforce trends in rheumatology. Rheum Dis Clin North Am 2019;45:13–26.

- 3. Sheikh A, Sood HS, Bates DW. Leveraging health information technology to achieve the “triple aim” of healthcare reform. J Am Med Inform Assoc 2015;22:849–56.

- 4. Miloslavsky EM, Bolster MB. Addressing the rheumatology workforce shortage: a multifaceted approach. Semin Arthritis Rheum 2020;50:791–6.

- 5. Smolen JS, Breedveld FC, Burmester GR, et al. Treating rheumatoid arthritis to target: 2014 update of the recommendations of an international task force. Ann Rheum Dis 2016;75:3–15.

- 6. Haugeberg G, Hansen IJ, Soldal DM, et al. Ten years of change in clinical disease status and treatment in rheumatoid arthritis: results based on standardized monitoring of patients in an ordinary outpatient clinic in southern Norway. Arthritis Res Ther 2015;17:219.

- 7. Flurey CA, Morris M, Richards P, et al. It's like a juggling act: rheumatoid arthritis patient perspectives on daily life and flare while on current treatment regimes. Rheumatology (Oxford) 2014;53:696–703.

- 8. Poggenborg RP, Madsen OR, Dreyer L, et al. Patient‐controlled outpatient follow‐up on demand for patients with rheumatoid arthritis: a 2‐year randomized controlled trial. Clin Rheumatol 2021;40:3599–604.

- 9. Primdahl J, Sorensen J, Horn HC, et al. Shared care or nursing consultations as an alternative to rheumatologist follow‐up for rheumatoid arthritis outpatients with low disease activity—patient outcomes from a 2‐year, randomised controlled trial. Ann Rheum Dis 2014;73:357–64.

- 10. Hewlett S, Kirwan J, Pollock J, et al. Patient initiated outpatient follow up in rheumatoid arthritis: six year randomised controlled trial. BMJ 2005;330:171.

- 11. Prevoo ML, van't Hof MA, Kuper HH, et al. Modified disease activity scores that include twenty‐eight–joint counts: development and validation in a prospective longitudinal study of patients with rheumatoid arthritis. Arthritis Rheum 1995;38:44–8.

- 12. Fredriksson C, Ebbevi D, Waldheim E, et al. Patient‐initiated appointments compared with standard outpatient care for rheumatoid arthritis: a randomised controlled trial. RMD Open 2016;2:e000184.

- 13. US Department of Health and Human Services FDA Center for Drug Evaluation and Research, Center for Biologics Evaluation and Research, Center for Devices and Radiological Health. Guidance for industry: patient‐reported outcome measures: use in medical product development to support labeling claims: draft guidance. Health Qual Life Outcomes 2006;4:79.

- 14. Lee YC, Lu F, Colls J, et al. Outcomes of a mobile app to monitor patient reported outcomes in rheumatoid arthritis: a randomized controlled trial. Arthritis Rheumatol 2021;73:1421–9.

- 15. Allam A, Kostova Z, Nakamoto K, et al. The effect of social support features and gamification on a Web‐based intervention for rheumatoid arthritis patients: randomized controlled trial. J Med Internet Res 2015;17:e14.

- 16. Gossec L, Cantagrel A, Soubrier M, et al. An e‐health interactive self‐assessment website (Sanoia®) in rheumatoid arthritis. A 12–month randomized controlled trial in 320 patients. Joint Bone Spine 2018;85:709–14.

- 17. Shigaki CL, Smarr KL, Siva C, et al. RAHelp: an online intervention for individuals with rheumatoid arthritis. Arthritis Care Res (Hoboken) 2013;65:1573–81.

- 18. Van den Berg MH, Ronday HK, Peeters AJ, et al. Using internet technology to deliver a home‐based physical activity intervention for patients with rheumatoid arthritis: a randomized controlled trial. Arthritis Rheum 2006;55:935–45.

- 19. Colls J, Lee YC, Xu C, et al. Patient adherence with a smartphone app for patient‐reported outcomes in rheumatoid arthritis. Rheumatology (Oxford) 2021;60:108–12.

- 20. Pers YM, Valsecchi V, Mura T, et al. A randomized prospective open‐label controlled trial comparing the performance of a connected monitoring interface versus physical routine monitoring in patients with rheumatoid arthritis. Rheumatology (Oxford) 2021;60:1659–68.

- 21. Muskens WD, Rongen‐van Dartel SA, Vogel C, et al. Telemedicine in the management of rheumatoid arthritis: maintaining disease control with less health‐care utilization. Rheumatol Adv Pract 2021;5:rkaa079.

- 22. De Thurah A, Stengaard‐Pedersen K, Axelsen M, et al. Tele‐health followup strategy for tight control of disease activity in rheumatoid arthritis: results of a randomized controlled trial. Arthritis Care Res (Hoboken) 2018;70:353–60.

- 23. Seppen BF, L'Ami MJ, Rico SD, et al. A smartphone app for self‐monitoring of rheumatoid arthritis disease activity to assist patient‐initiated care: protocol for a randomized controlled trial. JMIR Res Protoc 2020;9:e15105.

- 24. CastorEDC. Castor. URL: https://www.castoredc.com/.

- 25. Seppen BF, Wiegel J, L'Ami MJ, et al. Feasibility of self‐monitoring rheumatoid arthritis with a smartphone app: results of two mixed‐methods pilot studies. JMIR Form Res 2020;4:e20165.

- 26. Schäfer W, Kroneman M, Boerma W, et al, on behalf of the World Health Organization. The Netherlands: health system review; 2010.

- 27. Centraal Bureau voor de Statistiek. Internet; toegang, gebruik en faciliteiten [Internet: access, use and facilities]; 2012–2019. URL: https://www.cbs.nl/nl-nl/cijfers/detail/83429NED?dl=27A20.

- 28. Vergelijk 4G dekking van alle providers [Compare 4G coverage of all providers]. URL: https://www.4gdekking.nl.

- 29. Van Ons. Digitaal in 2018–Hoe staan we ervoor? [Digital in 2018: where do we stand?] URL: https://van-ons.nl/blog/digitale-strategie/digitaal-2018-hoe-staan-we-ervoor/.

- 30. Speedtest. Speedtest Global Index: ranking mobile and fixed broadband speeds from around the world on a daily basis. Global median speeds, May 2022. URL: https://www.speedtest.net/global-index.

- 31. Ten Klooster PM, Taal E, Siemons L, et al. Translation and validation of the Dutch version of the Effective Consumer Scale (EC‐17). Qual Life Res 2013;22:423–9.

- 32. Bangor A, Kortum PT, Miller JT. An empirical evaluation of the System Usability Scale (SUS). Int J Hum Comput Interact 2008;24:574–94.

- 33. Brooke J. SUS: A quick and dirty usability scale. Redhatch Consulting Ltd: United Kingdom; 1996.

- 34. Mollard E, Michaud K. A mobile app with optical imaging for the self‐management of hand rheumatoid arthritis: pilot study. JMIR Mhealth Uhealth 2018;6:e12221.

- 35. Grainger R, Townsley HR, Ferguson CA, et al. Patient and clinician views on an app for rheumatoid arthritis disease monitoring: function, implementation and implications. Int J Rheum Dis 2020;23:813–27.

- 36. De Jong MJ, van der Meulen‐de Jong AE, Romberg‐Camps MJ, et al. Telemedicine for management of inflammatory bowel disease (myIBDcoach): a pragmatic, multicentre, randomised controlled trial. Lancet 2017;390:959–68.

- 37. Renskers L, Rongen‐van Dartel SA, Huis AM, et al. Patients' experiences regarding self‐monitoring of the disease course: an observational pilot study in patients with inflammatory rheumatic diseases at a rheumatology outpatient clinic in The Netherlands. BMJ Open 2020;10:e033321.

- 38. Richter JG, Nannen C, Chehab G, et al. Mobile app‐based documentation of patient‐reported outcomes–3‐months results from a proof‐of‐concept study on modern rheumatology patient management. Arthritis Res Ther 2021;23:121.

- 39. White KM, Ivan A, Williams R, et al. Remote measurement in rheumatoid arthritis: qualitative analysis of patient perspectives. JMIR Form Res 2021;5:e22473.

- 40. Bos WH, van Tubergen A, Vonkeman HE. Telemedicine for patients with rheumatic and musculoskeletal diseases during the COVID‐19 pandemic: a positive experience in the Netherlands. Rheumatol Int 2021;41:565–73.

- 41. Austin L, Sharp CA, van der Veer SN, et al. Providing ‘the bigger picture’: benefits and feasibility of integrating remote monitoring from smartphones into the electronic health record. Rheumatology (Oxford) 2020;59:367–78.

- 42. Colls J, Lee YC, Xu C, et al. Patient adherence with a smartphone app for patient‐reported outcomes in rheumatoid arthritis. Rheumatology (Oxford) 2021;60:108–12.

- 43. Kelders SM, Kok RN, Ossebaard HC, et al. Persuasive system design does matter: a systematic review of adherence to web‐based interventions. J Med Internet Res 2012;14:e152.

- 44. Salaffi F, Carotti M, Ciapetti A, et al. Effectiveness of a telemonitoring intensive strategy in early rheumatoid arthritis: comparison with the conventional management approach. BMC Musculoskelet Disord 2016;17:146.

- 45. Solomon DH, Rudin RS. Digital health technologies: opportunities and challenges in rheumatology [review]. Nat Rev Rheumatol 2020;16:525–35.

- 46. Eurostat statistics. URL: http://appsso.eurostat.ec.europa.eu/nui/submitViewTableAction.do. 2017.

- 47. Rijksinstituut voor Volksgezondheid en Milieu. Niet iedereen kan regie voeren over eigen zorg [Not everyone can take charge of their own care]. 2014.
