OBJECTIVES:

To evaluate the methodologic rigor and predictive performance of models predicting ICU readmission; to understand the characteristics of ideal prediction models; and to elucidate relationships between appropriate triage decisions and patient outcomes.

DATA SOURCES:

PubMed, Web of Science, Cochrane, and Embase.

STUDY SELECTION:

Primary literature that reported the development or validation of ICU readmission prediction models, published from 2010 to 2021.

DATA EXTRACTION:

Relevant study information was extracted independently by two authors using the Critical Appraisal and Data Extraction for Systematic Reviews of Prediction Modelling Studies checklist. Bias was evaluated using the Prediction model Risk Of Bias ASsessment Tool. Data sources, modeling methodology, definition of outcomes, performance, and risk of bias were critically evaluated to elucidate relevant relationships.

DATA SYNTHESIS:

Thirty-three articles describing models were included. Six studies had a high overall risk of bias due to improper inclusion criteria or omission of critical analysis details. Four other studies had an unclear overall risk of bias due to lack of detail describing the analysis. Overall, the most common source of bias (50% of studies) was the filtering of candidate predictors via univariate analysis. The poorest-performing models used an existing clinical risk or acuity score, such as Acute Physiology and Chronic Health Evaluation II, Sequential Organ Failure Assessment, or Stability and Workload Index for Transfer, as the sole predictor. The higher-performing ICU readmission prediction models used homogenous patient populations, specifically defined outcomes, and routinely collected predictors that were analyzed over time.

CONCLUSIONS:

Models predicting ICU readmission can achieve performance advantages by using longitudinal time series modeling, homogenous patient populations, and predictor variables tailored to those populations.

Keywords: critical care, critical illness, forecasting, intensive care units, risk

KEY POINTS

Question: What are the characteristics of high-performing, generalizable, and transparent models for predicting ICU readmission?

Findings: Higher-performing ICU readmission prediction models used homogenous patient populations, specifically defined outcomes, and routinely collected predictors that were analyzed over time.

Meaning: Models predicting ICU readmission can achieve performance advantages by using longitudinal time series modeling, homogenous patient populations, and predictor variables tailored to those populations.

More than 4 million patients are admitted to ICUs in the United States each year (1). On average, 7% of all patients discharged from an ICU are readmitted to the ICU (2). Readmission is associated with an approximately sevenfold increase in risk-adjusted mortality (3). Because intensive care accounts for 25%–40% of all healthcare expenditures, intensivists seek to identify patients who can be safely transferred out of the ICU as early as possible (4). Yet premature ICU discharge and unplanned readmission are associated with additional costs and prolonged hospital and ICU lengths of stay (5).

Accurately predicting ICU readmission could identify patients who would benefit from further medical optimization before transfer out of the ICU or from continuous vital sign monitoring on hospital wards. Traditionally, ICU discharge decisions involve examining the patient and manually reviewing health records, a time-consuming process performed under strict time constraints. Under these conditions, clinicians tend to rely on heuristics, which can lead to cognitive errors (6–9). Not surprisingly, clinician predictions of a patient’s need for future ICU resources are variable and often inaccurate, to the detriment of patients (10, 11). Data-driven clinical decision-support tools can potentially minimize harm from cognitive errors by providing clinicians with accurate, precise predictions based on what happened to similar patients in the past. Unfortunately, many existing tools lack accuracy, reproducibility, and integration into clinical workflow. Better methods are needed to support ICU discharge decision-making.

To develop accurate and effective decision-support platforms that augment ICU discharge decision-making, we aim to 1) evaluate the methodologic rigor and predictive performance of models predicting ICU readmission and 2) understand the characteristics of high-performing, generalizable, and transparent prediction models.

MATERIALS AND METHODS

On April 1, 2021, PubMed, Web of Science, Cochrane, and Embase were systematically searched for articles describing the development or validation of ICU readmission prediction models published within the past 11 years (2010–2021). Searches were tailored to use the annotated concepts specific to each database, such as PubMed’s Medical Subject Headings (see Supplemental Digital Content, Appendix A, http://links.lww.com/CCX/B130). Search terms were batched into three groups: ICU, readmission, and prediction/modeling.

Two authors independently screened titles and abstracts against the inclusion and exclusion criteria, and a third investigator settled any disagreements. Two authors then independently reviewed the full texts of articles that passed screening for relevance to the review objectives, yielding the final set of articles. Study information was extracted from each relevant article using the Critical Appraisal and Data Extraction for Systematic Reviews of Prediction Modelling Studies (CHARMS) checklist; bias was evaluated using the Prediction model Risk Of Bias ASsessment Tool (PROBAST) (12, 13).

RESULTS

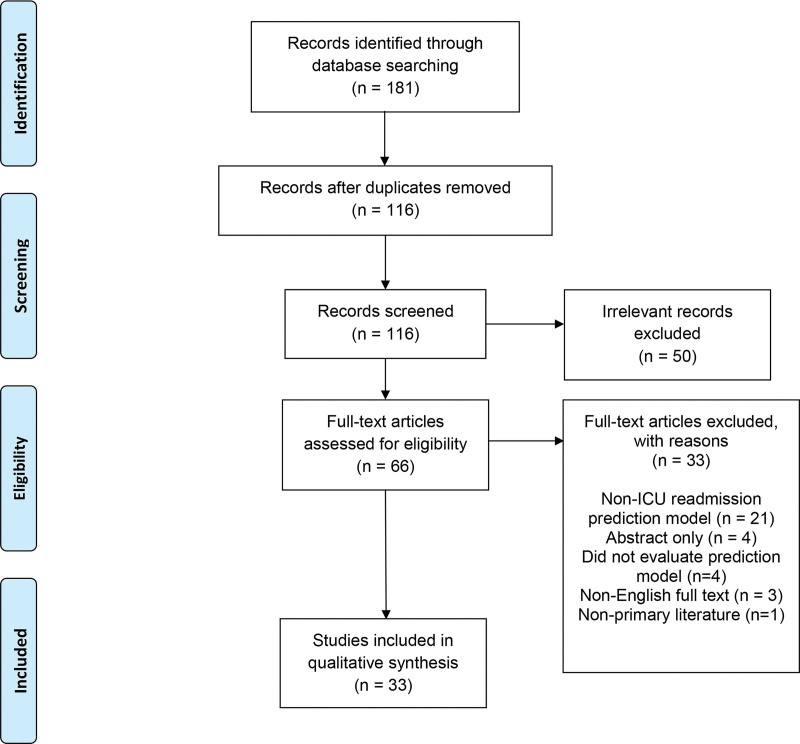

One hundred sixteen unique articles were identified from the 181 articles returned by the four database searches (Fig. 1). Title/abstract screening reduced the total to 66 articles, and full-text screening reduced it to the final 33. The primary reasons for exclusion were non-ICU readmission prediction model (n = 21), abstract only (n = 4), no evaluation of a prediction model (n = 4), non-English full text (n = 3), and nonprimary literature (n = 1).

Figure 1. Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) flow diagram.

Thirty-three studies were included in the final analysis, as summarized in Supplemental Table 1 (http://links.lww.com/CCX/B130) (14–46). Twenty-five studies recruited patients from a single center; eight studies (14–19, 29, 42) reported results from two or more centers. The most common source of patient data was the Medical Information Mart for Intensive Care (MIMIC), which was used in nine studies (14, 19, 21–25, 30, 43) (Supplemental Table 2, http://links.lww.com/CCX/B130). Twelve studies drew on ICUs with more specific patient populations (e.g., neuro ICU only or postoperative cardiac ICU only) (16, 17, 20, 25, 26, 29, 31, 32, 34, 37, 38, 44). Twenty-one studies used ICU readmission within a window ranging from 6 hours to 30 days as the primary endpoint. Thirteen studies used a combined endpoint that included death (14, 15, 18, 22, 24–29, 42, 44, 45), cardiac arrest (14, 29), or rapid response team deployment (27) in addition to ICU readmission. One study evaluated ICU readmission as both a single and a combined endpoint (26). One study derived two separate models to predict ICU readmission and death following ICU discharge (18). Seven studies used time series models that accounted for longitudinal patient trends (21–25, 30, 44).

Details of study methodology, validation, and sensitivity analyses are summarized in Supplemental Table 2 (http://links.lww.com/CCX/B130). Six studies accounted for the reasons behind ICU readmission (20, 31–33, 36, 37), with a single study limiting results to readmissions directly related to the initial ICU admission (32). In 22 studies, exclusion criteria comprised variations of the following: outbound facility transfer, death during the index ICU admission, do not resuscitate (DNR) status or withdrawal of invasive physiologic support, and an ICU stay of less than 24 hours. Other exclusion criteria, particularly in the surgical or postoperative ICU studies, were surgeries not pertaining to the index ICU stay, missing data, death in surgery, or severe illness such as congenital valvular disease or brain tumor. The rationale for predictor variable selection was stated in 18 studies and was based on clinical experience (six studies), routine availability of variables (four), previously unvalidated models (five), hypothesis testing of specific predictor variables (five), or restriction to readmission predictors previously identified in the literature (two). Two studies selected predictor variables from a larger pool based on the greatest differences between readmitted and nonreadmitted patient cohorts (18, 21). Validation was performed in 25 studies: internal validation alone in 19 and both internal and external validation in six (14, 17, 21, 29, 42, 45). Seventeen studies used discretization to transform continuous variables into categorical variables.

The most common modeling technique was logistic regression. Twenty-three studies evaluated at least one logistic regression model (15–18, 23, 24, 26–29, 31–40, 42, 43, 46). Other techniques included recurrent neural networks (22, 30), gradient boosting machines (14, 25), and artificial neural networks (23). Model performance was most commonly assessed by the area under the receiver operating characteristic curve (AUROC). The receiver operating characteristic curve plots the true positive rate against the false positive rate across decision thresholds; its area summarizes discrimination in a single value, where 0.5 represents random chance (e.g., a coin flip) and 1 represents perfect discrimination. By a commonly used convention, an AUROC between 0.70 and 0.80 indicates acceptable discrimination. AUROC ranged from 0.51 (26) to 0.94 (21). Models that did not exceed an AUROC of 0.75 tended to 1) use an existing clinical risk or acuity score, such as Acute Physiology and Chronic Health Evaluation (APACHE) II (26, 34) or Stability and Workload Index for Transfer (28, 30, 31), as the sole predictor or 2) predict readmission based on single laboratory values or findings (36, 40). The best-performing models had at least one of the following characteristics: 1) longitudinal temporal components that can analyze trends over time (21, 22), 2) a more homogenous, postsurgical patient population (16, 17, 20, 29, 31, 32), or 3) ICU readmissions limited to those directly related to the original admission (32).
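To make the AUROC definition concrete: AUROC equals the probability that a randomly chosen readmitted patient receives a higher predicted risk than a randomly chosen nonreadmitted patient, with ties counted as one half. The minimal Python sketch below computes it that way. The function name `auroc` and all risk scores are hypothetical and are not drawn from any included study.

```python
from itertools import product


def auroc(scores, labels):
    """AUROC as the probability that a randomly chosen positive (readmitted)
    case is scored higher than a randomly chosen negative case; ties count 0.5."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p, n in product(pos, neg):  # every positive-negative pair
        if p > n:
            wins += 1.0
        elif p == n:
            wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical predicted readmission risks for six patients (1 = readmitted).
labels = [1, 1, 0, 0, 0, 0]
scores = [0.80, 0.40, 0.50, 0.30, 0.20, 0.10]
print(auroc(scores, labels))              # 0.875: 7 of 8 pairs ranked correctly
print(auroc([0.5] * 6, labels))           # 0.5: a constant score is a coin flip
print(auroc([1, 1, 0, 0, 0, 0], labels))  # 1.0: perfect separation
```

Because AUROC depends only on how patients are ranked, it says nothing about whether the predicted probabilities themselves are accurate; that is the role of the calibration metrics discussed later.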

Model sensitivity and specificity were reported in 15 studies. Sensitivity ranged from 18% to 91% (21, 40), and specificity ranged from 22% to 95% (14, 28). One study reported sensitivities and specificities ranging from 0% to 99%, depending on the threshold applied to the predicted likelihood of readmission or death. Only four models achieved both sensitivity and specificity above 70% (18, 21, 29, 32). The models with high sensitivity shared the three characteristics described above (22, 30, 31), whereas the models with high specificity used novel predictor variables, such as eosinophilia (14, 40). Models with high AUROC and sensitivity/specificity followed the patterns described above and also frequently employed some form of data imputation, internal validation, and specified ICU readmission timing (18, 21, 22, 29, 32, 34).
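Unlike AUROC, sensitivity and specificity depend on the decision threshold applied to a model's predicted risk, so a single model can yield very different values depending on where the cutoff is placed. The hypothetical sketch below (invented scores; the function name `sens_spec` is illustrative) shows the tradeoff for six patients.

```python
def sens_spec(scores, labels, threshold):
    """Sensitivity and specificity when patients with score >= threshold
    are flagged as likely to be readmitted (label 1)."""
    tp = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= threshold)
    fn = sum(1 for s, y in zip(scores, labels) if y == 1 and s < threshold)
    tn = sum(1 for s, y in zip(scores, labels) if y == 0 and s < threshold)
    fp = sum(1 for s, y in zip(scores, labels) if y == 0 and s >= threshold)
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical predicted risks (1 = readmitted); not data from any study.
labels = [1, 1, 0, 0, 0, 0]
scores = [0.80, 0.40, 0.50, 0.30, 0.20, 0.10]
for t in (0.25, 0.45, 0.90):
    sens, spec = sens_spec(scores, labels, t)
    print(f"threshold={t:.2f} sensitivity={sens:.2f} specificity={spec:.2f}")
# threshold=0.25 sensitivity=1.00 specificity=0.50
# threshold=0.45 sensitivity=0.50 specificity=0.75
# threshold=0.90 sensitivity=0.00 specificity=1.00
```

Raising the threshold trades sensitivity for specificity, so reporting a single pair without the threshold used makes comparisons across studies difficult.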

Six studies had a high overall risk of bias as indicated by PROBAST (26, 33, 34, 42–44) due to improper inclusion criteria (33, 34, 42, 43) or omission of critical analysis details (26, 44). Four other studies had an unclear overall risk of bias due to lack of detail describing the analysis (31, 33, 34, 41). Overall, the most common source of bias (50% of studies) was the filtering of candidate predictors via univariate analysis (15, 26–28, 31–34, 37–40). The remaining studies used appropriate cohort definitions and analytical methods and were assessed as having a low risk of bias.

DISCUSSION

We identified methodological characteristics that enhanced the performance, generalizability, and transparency of ICU readmission prediction models. We did not identify a single “best” ICU readmission prediction model, owing to the limitations of the one metric reported across all studies (AUROC) and the nuance and complexity of defining what constitutes the “best” model.

Clearly Define a Homogenous Patient Population

A single prediction model that is highly accurate and generalizable to any ICU patient would be quite useful, but we found no such model. Instead, it may be preferable to identify patient subgroups with distinct baseline characteristics and physiologic signatures of illness and to use a separate model for each subgroup. This approach is consistent with experience in outcomes reporting: New York State’s Report Cards on Cardiac Surgeons succeeded in generating credible comparisons of mortality rates among individual providers performing coronary artery bypass grafting, whereas similar projects, such as the Health Care Financing Administration’s annual report on mortality rates among hospitalized Medicare patients, failed in part because of population heterogeneity (47). In the present review, models designed and validated exclusively among cardiac surgery patients performed well (16, 17, 29, 31, 38). Even among models that used a similar patient subgroup, models trained and evaluated in a single center tended to outperform multicenter models.

An alternative interpretation of our results is that the higher performance of homogenous models reflects overfitting and, thus, poor external generalizability. In the present review, we judged the likelihood of overfitting to be low: eight of the 11 high-performing studies had large sample sizes (> 1,000), and 10 involved some form of internal or external validation.

One likely contributor to the performance gap between homogenous single-center studies and heterogenous multicenter studies is the strong and often overriding influence of operational and administrative factors on ICU bed allocation and level-of-care decisions. These factors include differences in resource availability (e.g., number of beds, presence of step-down units, and staffing) as well as care protocols that can vary widely both between and within institutions (e.g., medical vs surgical services), and they can decouple ICU readmission decisions from the underlying patient physiology.

A likely example is the difference in performance (AUROC, sensitivity, specificity) between the separate logistic regression models for ICU readmission and mortality developed by Badawi and Breslow (18), in which the mortality model performed better. Death is a physiologic event that escapes many of the operational and administrative hurdles encountered when admitting a patient to the ICU. Two additional factors may help to explain this difference: the patients who died represented a smaller, but possibly more physiologically extreme, pole of the dataset, and the mortality model relied on fewer admission variables and included more last-day physiologic variables reflecting patient state at the time of discharge.

Last, it is important to distinguish patients receiving palliative care, patients with DNR orders, and patients undergoing withdrawal of invasive physiologic support from the general population, because their goals of care tend to differ substantially, which can affect the training of data-driven models.

The importance of homogeneity was substantiated by several studies with a low risk of bias (Supplemental Table 3, http://links.lww.com/CCX/B130) (14–25, 27–30, 32, 37–43, 45, 46). Although developing distinct models for each patient subgroup may prove cumbersome if taken to the extreme, limiting future studies to a single surgery type (e.g., cardiac surgery) or a single initial complaint (e.g., respiratory distress) may lead to better overall model performance.

Be Specific When Defining Outcomes

We found that few models controlled for the reasons behind ICU readmission. ICU readmission often results from multiple factors not necessarily related to patient stability at discharge, including nosocomial infection, administrative causes, and pathologic causes unrelated to the patient’s condition at the initial discharge. A multicenter cohort study found that fewer than half of ICU readmissions within the same hospitalization pertained to the initial complaint, and almost half of readmitted patients experienced new complications, particularly respiratory complications (48). Furthermore, ICU readmission with the same diagnosis as the initial admission may reflect deterioration at home or on the ward rather than patient acuity during the initial stay. As the time between the initial ICU stay and readmission increases, so does the likelihood that confounding factors lead to readmission. Only seven articles restricted ICU readmissions to 48 hours or less after discharge, and the only model to restrict readmissions to those related to the initial admission was one of the highest performing. Restricting readmissions to physiologic exacerbations related to the initial admission, and to early readmissions, may provide a closer snapshot of patient state during the initial ICU stay and warrants further consideration.
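How the outcome is defined determines which ICU discharges count as readmissions. The sketch below assumes a hypothetical record layout (`IcuStay`, with a hospitalization identifier and ICU admission and discharge times) and labels each ICU stay by whether the next ICU stay in the same hospitalization begins within a chosen window. It deliberately omits the exclusions and cause-of-readmission restrictions discussed above (death, transfer, relatedness to the initial admission), which would require additional fields.

```python
from datetime import datetime, timedelta
from typing import NamedTuple


class IcuStay(NamedTuple):
    # Hypothetical record layout; real EHR or MIMIC extracts use other fields.
    hospitalization_id: str
    icu_in: datetime
    icu_out: datetime


def label_readmission(stays, window=timedelta(hours=48)):
    """Map each stay's index to 1 if the next ICU stay in the same
    hospitalization starts within `window` of this stay's ICU discharge, else 0."""
    by_hosp = {}
    for i, stay in enumerate(stays):
        by_hosp.setdefault(stay.hospitalization_id, []).append(i)
    labels = {}
    for idxs in by_hosp.values():
        idxs.sort(key=lambda i: stays[i].icu_in)  # chronological order
        for cur, nxt in zip(idxs, idxs[1:] + [None]):
            if nxt is None:
                labels[cur] = 0  # last ICU stay of the hospitalization
            else:
                gap = stays[nxt].icu_in - stays[cur].icu_out
                labels[cur] = int(timedelta(0) <= gap <= window)
    return labels


stays = [
    IcuStay("H1", datetime(2021, 1, 1, 8), datetime(2021, 1, 3, 8)),
    IcuStay("H1", datetime(2021, 1, 4, 20), datetime(2021, 1, 6, 8)),  # back after 36 h
    IcuStay("H2", datetime(2021, 2, 1, 8), datetime(2021, 2, 2, 8)),
    IcuStay("H2", datetime(2021, 2, 10, 8), datetime(2021, 2, 12, 8)),  # back after 8 days
]
print(label_readmission(stays))                             # {0: 1, 1: 0, 2: 0, 3: 0}
print(label_readmission(stays, window=timedelta(days=30)))  # {0: 1, 1: 0, 2: 1, 3: 0}
```

The same four stays yield one readmission under a 48-hour window and two under a 30-day window, which is one reason results from studies with different windows are hard to compare directly.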

Incorporate Temporality

In the present review, models that incorporated time series data generally exhibited greater accuracy than static models. This is likely attributable to the nature of human pathophysiology: illness severity evolves, and snapshot assessments can misrepresent the risk of decompensation. Fortunately, electronic health record data are typically recorded with date and time stamps that can capture trends in illness severity over time. This approach has been used successfully to predict mortality and specific conditions, such as acute kidney injury (49–52).
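As a simple illustration of why trends can add information, the sketch below summarizes a hypothetical vital sign series by both its last value (a snapshot) and its least-squares slope over the preceding hours. The included time series studies used more sophisticated approaches (e.g., recurrent neural networks); this slope feature and the function names `ols_slope` and `vital_features` are assumptions for illustration, not the method of any reviewed model.

```python
from statistics import mean


def ols_slope(times_h, values):
    """Ordinary least-squares slope of values against time (units per hour)."""
    t_bar, v_bar = mean(times_h), mean(values)
    num = sum((t - t_bar) * (v - v_bar) for t, v in zip(times_h, values))
    den = sum((t - t_bar) ** 2 for t in times_h)
    return num / den


def vital_features(times_h, values):
    """Hypothetical feature pair: the last value (snapshot) plus the trend."""
    return {"last": values[-1], "slope_per_h": ols_slope(times_h, values)}


# Two hypothetical patients with the same heart rate at discharge (95/min)
# but opposite trajectories over the preceding 12 hours.
hours = [0, 4, 8, 12]
improving = [125, 115, 105, 95]
worsening = [65, 75, 85, 95]
print(vital_features(hours, improving))  # {'last': 95, 'slope_per_h': -2.5}
print(vital_features(hours, worsening))  # {'last': 95, 'slope_per_h': 2.5}
```

A static model that sees only the discharge value cannot distinguish these two patients, whereas the slope separates them.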

Use Routinely Collected Predictors

Models should be developed using a select set of routinely available predictors. Overly complex algorithms can hamper clinicians in practice, where timely diagnosis and treatment can be critical to patient survival. We found room for improvement in model simplicity: only six studies deliberately limited predictors to those routinely collected, and only one deliberately restricted the number of predictors.

Transparently Report Predictive Performance

AUROC can serve as a valuable indicator of discriminative performance in balanced datasets; however, for ICU readmission, a relatively infrequent event, performance can appear deceptively high for models that are overly biased toward the majority class (no readmission). Alternative performance metrics, such as the F1 score (the harmonic mean of precision and recall) and the area under the precision-recall curve (AUPRC), which were reported in a handful of included studies, focus on the minority class and are therefore more informative under class imbalance; they may give a clearer and fairer picture of how one model compares with another. Additionally, calibration metrics such as the Brier score, Hosmer-Lemeshow statistic, and average absolute error can further characterize overall model performance (53, 54). In our review, 18 studies (55%) reported calibration measurements, most of which used the Hosmer-Lemeshow statistic. Further complicating the evaluation of model performance are the similar yet distinct values and priorities of stakeholders in the healthcare delivery system (e.g., physicians, patients, and healthcare administrators), which often vary both across and within roles.
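The following sketch illustrates, with an invented cohort of 20 ICU discharges and 2 readmissions (10% prevalence), how the same risk scores can look strong by AUROC but modest by AUPRC and F1, and how the Brier score exposes poor calibration. Average precision is used here as one common estimate of AUPRC; the 0.5 threshold, the function names, and all data are assumptions for illustration. For reference, a model with no skill would have an AUROC of about 0.5 and an AUPRC of about the prevalence (0.10).

```python
def auroc(scores, labels):
    """Probability that a positive case outranks a negative case (ties = 0.5)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def average_precision(scores, labels):
    """Non-interpolated average precision (a common AUPRC estimate): the mean
    precision at the rank of each positive case. Assumes no tied scores."""
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    hits, precisions = 0, []
    for rank, (_, y) in enumerate(ranked, start=1):
        if y == 1:
            hits += 1
            precisions.append(hits / rank)
    return sum(precisions) / len(precisions)


def f1_at(scores, labels, threshold):
    """F1 score (harmonic mean of precision and recall) at a fixed threshold."""
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    if tp == 0:
        return 0.0
    precision, recall = tp / (tp + fp), tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def brier(probs, labels):
    """Mean squared difference between predicted probability and outcome."""
    return sum((p - y) ** 2 for p, y in zip(probs, labels)) / len(labels)


# Hypothetical cohort: scores fall from 0.95 to 0.19; the two readmitted
# patients are ranked 3rd and 4th.
scores = [0.95 - 0.04 * i for i in range(20)]
labels = [0, 0, 1, 1] + [0] * 16
print(f"AUROC={auroc(scores, labels):.3f}")                # AUROC=0.889
print(f"AUPRC={average_precision(scores, labels):.3f}")    # AUPRC=0.417
print(f"F1@0.5={f1_at(scores, labels, 0.5):.3f}")          # F1@0.5=0.286
print(f"Brier={brier(scores, labels):.3f}")                # Brier=0.308
print(f"Brier, predict prevalence={brier([0.1] * 20, labels):.3f}")  # 0.090
```

These scores rank patients well (AUROC 0.889), yet at the 0.5 cutoff only 2 of 12 flagged patients are readmitted, and their Brier score is worse than simply predicting the 10% prevalence for everyone, because the scores greatly overstate absolute risk.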

Transparently Report Methodology

Approximately half of the included studies omitted key details, which hampered our ability to critically evaluate the methodology used to create the models. This highlights the importance of reporting guidelines such as Transparent Reporting of a multivariable prediction model for Individual Prognosis Or Diagnosis (TRIPOD), which ensure that the essential elements for appraising methodological rigor are presented clearly and consistently across studies (55). None of the included studies reported compliance with the TRIPOD reporting guidelines (Supplemental Table 3, http://links.lww.com/CCX/B130).

Previous systematic reviews of ICU readmission prediction models focused primarily on the outcome (i.e., ICU readmission) rather than on model characteristics. Nevertheless, their major findings are consistent with those of the present review. Rosenberg and Watts (2) found that unstable vital signs at the time of ICU discharge were the strongest predictor of ICU readmission. Similarly, Wong et al (56) reported that greater illness severity, as measured with APACHE or Simplified Acute Physiology Score scales at the time of ICU admission or discharge, was associated with increased risk of ICU readmission (57). Apart from the classic approach of using illness severity to predict ICU readmission, Vollam et al (58) performed a systematic review evaluating associations between the time of ICU discharge and outcomes. They found that evening and nighttime ICU discharges were associated with a higher prevalence of ICU readmission and in-hospital mortality, underscoring the importance of health system factors in patient triage decisions and outcomes. In a novel assessment of the prediction models themselves, Markazi-Moghaddam et al (59) examined the performance and validity of five ICU readmission prediction models that met relatively stringent quality criteria, finding not only heterogeneous and often suboptimal methods but also weak external validity. All of the studies evaluated by Markazi-Moghaddam et al (59) were among those included in the present review, and there was general agreement between the two reviews regarding the content and quality of these three common studies. Collectively, other systematic reviews had different objectives and breadths of included studies but drew conclusions consistent with those of the present review.

The major finding of the present review is that transparent, high-performing, and generalizable models share several characteristics: clearly defined homogenous patient populations with few ICU readmission confounders, specifically defined outcomes, routine datapoints collected over time, and transparent presentation of methodology and model performance. These characteristics can inform future efforts to build effective models primed to augment clinical decision-making. To achieve homogeneity, it is necessary to identify patient subgroups with distinct baseline characteristics and physiologic signatures of illness and to use a separate model for each subgroup. To avoid overfitting, models should use a sufficient sample size, enumerate differences between subgroups and the patient population as a whole, and undergo both internal and external validation. Most models in this review were published before the dissemination of reporting guidelines from editors of critical care journals (60); future investigations should adhere to these guidelines for selecting predictor variables, operationalizing variables, handling missing data, validation, model performance measures and their interpretation, and reporting practices. Future prediction models should also seek to represent clinical decisions regarding treatments, continuous vital sign monitoring, and other relevant aspects of clinical care (61, 62). To determine whether additional observations contribute meaningfully to predictive performance, future investigations should generate model learning curves in which the y-axis plots a performance metric (ideally the F1 score, the harmonic mean of precision and recall) and the x-axis plots the number of observations used for training. When the curve plateaus, additional observations (e.g., patients or ICU admissions) are unlikely to improve performance, but additional input variables (e.g., demographics or biomarkers) might. If the training set is exhausted before the curve plateaus, performance might improve with additional observations. Model learning curves also allow future research to build on previously reported models in an evidence-based fashion.
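A minimal learning-curve sketch, under stated assumptions: the cohort is synthetic, the "model" is a toy threshold classifier standing in for any real model, and the plateau rule (less than 0.01 F1 gained in the last step) is an arbitrary, hypothetical cutoff. The x-axis is the number of training observations, and the y-axis is F1 on a fixed held-out set. All function names are illustrative.

```python
import random
from statistics import mean


def make_cohort(n, seed):
    """Hypothetical synthetic cohort (not real data): one continuous risk
    feature; about 15% of patients are readmitted and have a higher mean."""
    rng = random.Random(seed)
    cohort = []
    for _ in range(n):
        y = 1 if rng.random() < 0.15 else 0
        cohort.append((rng.gauss(1.0 if y else 0.0, 1.0), y))
    return cohort


def fit_threshold(train):
    """Toy stand-in for a real model: flag patients whose feature exceeds
    the midpoint between the readmitted and nonreadmitted class means."""
    pos = [x for x, y in train if y == 1]
    neg = [x for x, y in train if y == 0]
    if not pos or not neg:
        return float("inf")  # cannot fit without both classes; flag no one
    return (mean(pos) + mean(neg)) / 2


def f1(threshold, data):
    """F1 = 2TP / (2TP + FP + FN) for patients flagged at or above threshold."""
    tp = sum(1 for x, y in data if x >= threshold and y == 1)
    fp = sum(1 for x, y in data if x >= threshold and y == 0)
    fn = sum(1 for x, y in data if x < threshold and y == 1)
    return 0.0 if tp == 0 else 2 * tp / (2 * tp + fp + fn)


def learning_curve(train_pool, held_out, sizes):
    """F1 on a fixed held-out set after training on the first n observations."""
    return [(n, f1(fit_threshold(train_pool[:n]), held_out)) for n in sizes]


def plateaued(curve, min_gain=0.01):
    """True if the last step added less than `min_gain` F1 (hypothetical cutoff)."""
    (_, prev), (_, last) = curve[-2], curve[-1]
    return last - prev < min_gain


pool, held_out = make_cohort(1600, seed=1), make_cohort(2000, seed=2)
curve = learning_curve(pool, held_out, sizes=[50, 100, 200, 400, 800, 1600])
for n, score in curve:
    print(f"n={n:5d}  F1={score:.3f}")
print("plateau reached:", plateaued(curve))
```

If the curve is still rising at the largest training size, more observations may help; if it has flattened, effort is likely better spent on new input variables, consistent with the reasoning above.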

This study has several limitations. First, the included studies demonstrate substantial heterogeneity, which precluded meta-analysis. Although a meta-analysis would help elucidate relationships between appropriate triage decisions and patient outcomes, this objective has been met by previous studies, and meta-analysis was not necessary to complete the other two objectives of this review: critically evaluating the methodologic rigor and predictive performance of models that predict ICU readmission and understanding the characteristics of ideal prediction models. Second, while most included articles used unique datasets generated at their own institutions, many used common datasets, namely MIMIC. Because MIMIC data are drawn from a single institution, this may introduce systematic, institution-specific patterns into our conclusions. Third, eight of the included articles combined mortality with readmission as a single endpoint, which can change the frequency of the outcome in each dataset and introduce bias. Thus, care should be exercised when interpreting the results of the mixed-outcome studies, and a more balanced approach using AUROC, sensitivity, and specificity should be employed.

CONCLUSIONS

Among models predicting ICU readmission, models with the highest transparency, performance, and generalizability used clearly defined homogenous patient populations with few ICU readmission confounders, specifically defined outcomes, incorporated routine datapoints collected over time, and presented methodology and model performance in a forthright and unambiguous manner. These findings were substantiated by several studies that had a low risk of bias. Future investigations regarding the prediction of ICU readmission should consider longitudinal time series modeling using homogenous patient populations, with specific outcomes, and routinely collected predictor variables tailored to those populations. Their methodologies should be reported in accordance with consensus guidelines (e.g., TRIPOD) and include sufficient performance and calibration metrics in order to fairly assess model quality.

Supplementary Material

Footnotes

Dr. Loftus was supported by the National Institute of General Medical Sciences (NIGMS) of the National Institutes of Health (NIH) under Award Number K23GM140268. Dr. Ozrazgat-Baslanti was supported by K01 DK120784, R01 DK123078, and R01 DK121730 from the NIH/National Institute of Diabetes and Digestive and Kidney Diseases (NIDDK), R01 GM110240 from the NIH/NIGMS, R01 EB029699 from the NIH/National Institute of Biomedical Imaging and Bioengineering (NIBIB), R01 NS120924 from the National Institute of Neurological Disorders and Stroke (NIH/NINDS), AGR DTD 12-02-20 from University of Florida Research, and UL1TR001427 from the National Center for Advancing Translational Sciences of the NIH. Dr. Tighe was supported by R01GM114290 from the NIGMS and 5R01AG055337 from the National Institute on Aging. Dr. Rashidi was supported by National Science Foundation Faculty Early Career Development (CAREER) award 1750192, 1R01EB029699 and 1R21EB027344 from the NIH/NIBIB, R01GM-110240 from the NIH/NIGMS, 1R01NS120924 from the NIH/NINDS, and by R01 DK121730 from the NIH/NIDDK. Dr. Bihorac was supported by R01 GM110240 from the NIH/NIGMS, R01 EB029699 and R21 EB027344 from the NIH/NIBIB, R01 NS120924 from the NIH/NINDS, and by R01 DK121730 from the NIH/NIDDK. The remaining authors have disclosed that they do not have any potential conflicts of interest.

The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Supplemental digital content is available for this article. Direct URL citations appear in the printed text and are provided in the HTML and PDF versions of this article on the journal’s website (http://journals.lww.com/ccejournal).

REFERENCES

- 1.Barrett ML, Smith MW, Elixhauser A, et al. : Utilization of intensive care services, 2011: Statistical brief #185. In: Healthcare Cost and Utilization Project (HCUP) Statistical Briefs. Rockville, MD, Agency for Healthcare Research and Quality, 2014, 1–14 [Google Scholar]

- 2.Rosenberg AL, Watts C: Patients readmitted to ICUs*: A systematic review of risk factors and outcomes. Chest 2000; 118:492–502 [DOI] [PubMed] [Google Scholar]

- 3.Cooper GS, Sirio CA, Rotondi AJ, et al. : Are readmissions to the intensive care unit a useful measure of hospital performance? Med Care 1999; 37:399–408 [DOI] [PubMed] [Google Scholar]

- 4.Berenson RA; United States Congress Office of Technology Assessment: Intensive Care Units (ICUs): Clinical Outcomes, Costs, and Decisionmaking. Washington, DC, Congress of the U.S. For Sale by the Supt. of Docs., U.S. G.P.O., 1984 [Google Scholar]

- 5.Chen LM, Martin CM, Keenan SP, et al. : Patients readmitted to the intensive care unit during the same hospitalization: Clinical features and outcomes. Crit Care Med 1998; 26:1834–1841 [DOI] [PubMed] [Google Scholar]

- 6.Bekker HL: Making choices without deliberating. Science 2006; 312:1472; author reply 1472. [DOI] [PubMed] [Google Scholar]

- 7.Wolf FM, Gruppen LD, Billi JE: Differential diagnosis and the competing-hypotheses heuristic. A practical approach to judgment under uncertainty and Bayesian probability. JAMA 1985; 253:2858–2862 [PubMed] [Google Scholar]

- 8.Goldenson RM: The Encyclopedia of Human Behavior; Psychology, Psychiatry, and Mental Health. First Edition. Garden City, NY, Doubleday, 1970 [Google Scholar]

- 9.Dijksterhuis A, Bos MW, Nordgren LF, et al. : On making the right choice: The deliberation-without-attention effect. Science 2006; 311:1005–1007 [DOI] [PubMed] [Google Scholar]

- 10.Wang D, Carrano FM, Fisichella PM, et al. : A quest for optimization of postoperative triage after major surgery. J Laparoendoscopic Adv Surg Tech 2019; 29:203–205 [DOI] [PubMed] [Google Scholar]

- 11.Iapichino G, Corbella D, Minelli C, et al. : Reasons for refusal of admission to intensive care and impact on mortality. Intens Care Med 2010; 36:1772–1779 [DOI] [PubMed] [Google Scholar]

- 12.Wolff RF, Moons KGM, Riley RD, et al. : PROBAST: A tool to assess the risk of bias and applicability of prediction model studies. Ann Intern Med 2019; 170:51–58 [DOI] [PubMed] [Google Scholar]

- 13.Moons KGM, de Groot JAH, Bouwmeester W, et al. : Critical appraisal and data extraction for systematic reviews of prediction modelling studies: The CHARMS checklist. PLoS Med 2014; 11:e1001744. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Rojas JC, Carey KA, Edelson DP, et al. : Predicting intensive care unit readmission with machine learning using electronic health record data. Ann Am Thorac Soc 2018; 15:846–853 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Ouanes I, Schwebel C, Français A, et al. : A model to predict short-term death or readmission after intensive care unit discharge. J Crit Care 2012; 27:422.e1–e9 [DOI] [PubMed] [Google Scholar]

- 16.Van Diepen S, Graham MM, Nagendran J, et al. : Predicting cardiovascular intensive care unit readmission after cardiac surgery: Derivation and validation of the Alberta Provincial Project for Outcomes Assessment in Coronary Heart Disease (APPROACH) cardiovascular intensive care unit clinical predictio. Crit Care 2014; 18:651. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Verma S, Southern DA, Raslan IR, et al. : Prospective validation and refinement of the APPROACH cardiovascular surgical intensive care unit readmission score. J Crit Care 2019; 54:117–121 [DOI] [PubMed] [Google Scholar]

- 18. Badawi O, Breslow MJ: Readmissions and death after ICU discharge: Development and validation of two predictive models. PLoS One 2012; 7:e48758

- 19. Fialho AS, Cismondi F, Vieira SM, et al: Data mining using clinical physiology at discharge to predict ICU readmissions. Expert Syst Appl 2012; 39:13158–13165

- 20. Magruder JT, Kashiouris M, Grimm JC, et al: A predictive model and risk score for unplanned cardiac surgery intensive care unit readmissions. J Card Surg 2015; 30:685–690

- 21. Caballero K, Akella R: Dynamic estimation of the probability of patient readmission to the ICU using electronic medical records. AMIA Annu Symp Proc 2015; 2015:1831–1840

- 22. Lin YW, Zhou Y, Faghri F, et al: Analysis and prediction of unplanned intensive care unit readmission using recurrent neural networks with long short-term memory. PLoS One 2019; 14:e0218942

- 23. Venugopalan J, Chanani N, Maher K, et al: Combination of static and temporal data analysis to predict mortality and readmission in the intensive care. Annu Int Conf IEEE Eng Med Biol Soc 2017; 2017:2570–2573

- 24. Xue Y, Klabjan D, Luo Y: Predicting ICU readmission using grouped physiological and medication trends. Artif Intell Med 2019; 95:27–37

- 25. Desautels T, Das R, Calvert J, et al: Prediction of early unplanned intensive care unit readmission in a UK tertiary care hospital: A cross-sectional machine learning approach. BMJ Open 2017; 7:e017199

- 26. Lee H, Lim CW, Hong HP, et al: Efficacy of the APACHE II score at ICU discharge in predicting post-ICU mortality and ICU readmission in critically ill surgical patients. Anaesth Intensive Care 2015; 43:175–186

- 27. Ng YH, Pilcher DV, Bailey M, et al: Predicting medical emergency team calls, cardiac arrest calls and re-admission after intensive care discharge: Creation of a tool to identify at-risk patients. Anaesth Intensive Care 2018; 46:88–96

- 28. Rosa RG, Roehrig C, De Oliveira RP, et al: Comparison of unplanned intensive care unit readmission scores: A prospective cohort study. PLoS One 2015; 10:e0143127

- 29. Chiu YD, Villar SS, Brand JW, et al: Logistic early warning scores to predict death, cardiac arrest or unplanned intensive care unit re-admission after cardiac surgery. Anaesthesia 2020; 75:162–170

- 30. Barbieri S, Kemp J, Perez-Concha O, et al: Benchmarking deep learning architectures for predicting readmission to the ICU and describing patients-at-risk. Sci Rep 2020; 10:1111

- 31. Li S, Tang BY, Zhang B, et al: Analysis of risk factors and establishment of a risk prediction model for cardiothoracic surgical intensive care unit readmission after heart valve surgery in China: A single-center study. Heart Lung 2019; 48:61–68

- 32. Hammer M, Grabitz SD, Teja B, et al: A tool to predict readmission to the intensive care unit in surgical critical care patients-the RISC score. J Intensive Care Med 2021; 36:1296–1304

- 33. Jo YS, Lee YJ, Park JS, et al: Readmission to medical intensive care units: Risk factors and prediction. Yonsei Med J 2015; 56:543–549

- 34. Lee HF, Lin SC, Lu CL, et al: Revised Acute Physiology and Chronic Health Evaluation score as a predictor of neurosurgery intensive care unit readmission: A case-controlled study. J Crit Care 2010; 25:294–299

- 35. Frost SA, Tam V, Alexandrou E, et al: Readmission to intensive care: Development of a nomogram for individualising risk. Crit Care Resusc 2010; 12:83–89

- 36. Zhou G, Ho KM: Procalcitonin concentrations as a predictor of unexpected readmission and mortality after intensive care unit discharge: A retrospective cohort study. J Crit Care 2016; 33:240–244

- 37. Martin LA, Kilpatrick JA, Al-Dulaimi R, et al: Predicting ICU readmission among surgical ICU patients: Development and validation of a clinical nomogram. Surgery 2019; 165:373–380

- 38. Thomson R, Fletcher N, Valencia O, et al: Readmission to the intensive care unit following cardiac surgery: A derived and validated risk prediction model in 4,869 patients. J Cardiothorac Vasc Anesth 2018; 32:2685–2691

- 39. Woldhek AL, Rijkenberg S, Bosman RJ, et al: Readmission of ICU patients: A quality indicator? J Crit Care 2017; 38:328–334

- 40. Yip CB, Ho KM: Eosinopenia as a predictor of unexpected re-admission and mortality after intensive care unit discharge. Anaesth Intensive Care 2013; 41:231–241

- 41. Loreto M, Lisboa T, Moreira VP: Early prediction of ICU readmissions using classification algorithms. Comput Biol Med 2020; 118:103636

- 42. Kastrup M, Powollik R, Balzer F, et al: Predictive ability of the stability and workload index for transfer score to predict unplanned readmissions after ICU discharge. Crit Care Med 2013; 41:1608–1615

- 43. Pakbin A, Rafi P, Hurley N, et al: Prediction of ICU readmissions using data at patient discharge. Annu Int Conf IEEE Eng Med Biol Soc 2018; 2018:4932–4935

- 44. Klepstad PK, Nordseth T, Sikora N, et al: Use of national early warning score for observation for increased risk for clinical deterioration during post-ICU care at a surgical ward. Ther Clin Risk Manag 2019; 15:315–322

- 45. Taniguchi LU, Ramos F, Momma AK, et al: Subjective score and outcomes after discharge from the intensive care unit: A prospective observational study. J Int Med Res 2019; 47:4183–4193

- 46. Haribhakti N, Agarwal P, Vida J, et al: A simple scoring tool to predict medical intensive care unit readmissions based on both patient and process factors. J Gen Intern Med 2021; 36:901–907

- 47. Silber JH: Report cards on cardiac surgeons. N Engl J Med 1995; 333:938–939

- 48. Lin WT, Chen W-L, Chao C-M, et al: The outcomes and prognostic factors of the patients with unplanned intensive care unit readmissions. Medicine 2018; 97:e11124

- 49. Shickel B, Loftus TJ, Adhikari L, et al: DeepSOFA: A continuous acuity score for critically ill patients using clinically interpretable deep learning. Sci Rep 2019; 9:1879

- 50. Kim SY, Kim S, Cho J, et al: A deep learning model for real-time mortality prediction in critically ill children. Crit Care 2019; 23:279

- 51. Meyer A, Zverinski D, Pfahringer B, et al: Machine learning for real-time prediction of complications in critical care: A retrospective study. Lancet Respir Med 2018; 6:905–914

- 52. Tomasev N, Glorot X, Rae JW, et al: A clinically applicable approach to continuous prediction of future acute kidney injury. Nature 2019; 572:116–119

- 53. Alba AC, Agoritsas T, Walsh M, et al: Discrimination and calibration of clinical prediction models: Users’ guides to the medical literature. JAMA 2017; 318:1377–1384

- 54. Walsh CG, Sharman K, Hripcsak G: Beyond discrimination: A comparison of calibration methods and clinical usefulness of predictive models of readmission risk. J Biomed Inform 2017; 76:9–18

- 55. Collins GS, Reitsma JB, Altman DG, et al: Transparent reporting of a multivariable prediction model for individual prognosis or diagnosis (TRIPOD): The TRIPOD statement. BMJ 2015; 350:g7594

- 56. Wong EG, Parker AM, Leung DG, et al: Association of severity of illness and intensive care unit readmission: A systematic review. Heart Lung 2016; 45:3–9.e2

- 57. Le Gall JR, Lemeshow S, Saulnier F: A new Simplified Acute Physiology Score (SAPS II) based on a European/North American multicenter study. JAMA 1993; 270:2957–2963

- 58. Vollam S, Dutton S, Lamb S, et al: Out-of-hours discharge from intensive care, in-hospital mortality and intensive care readmission rates: A systematic review and meta-analysis. Intensive Care Med 2018; 44:1115–1129

- 59. Markazi-Moghaddam N, Fathi M, Ramezankhani A: Risk prediction models for intensive care unit readmission: A systematic review of methodology and applicability. Aust Crit Care 2020; 33:367–374

- 60. Leisman DE, Harhay MO, Lederer DJ, et al: Development and reporting of prediction models: Guidance for authors from editors of respiratory, sleep, and critical care journals. Crit Care Med 2020; 48:623–633

- 61. Beaulieu-Jones BK, Yuan W, Brat GA, et al: Machine learning for patient risk stratification: Standing on, or looking over, the shoulders of clinicians? NPJ Digit Med 2021; 4:62

- 62. Monfredi O, Keim-Malpass J, Moorman JR: Continuous cardiorespiratory monitoring is a dominant source of predictive signal in machine learning for risk stratification and clinical decision support. Physiol Meas 2021; 42
