Summary

In high-risk work environments, workers become habituated to hazards they frequently encounter, subsequently underestimating risk and engaging in unsafe behaviors. This phenomenon has been termed “risk habituation” and identified as a vital root cause of fatalities and injuries at workplaces. Providing an effective intervention that curbs workers’ risk habituation is critical in preventing occupational injuries and fatalities. However, there exists no empirically supported intervention for curbing risk habituation. To this end, here we investigated how experiencing an accident in a virtual reality (VR) environment affects workers’ risk habituation toward repeatedly exposed workplace hazards. We examined an underlying mechanism of risk habituation at the sensory level and evaluated the effect of the accident intervention through electroencephalography (EEG). The results of pre- and posttreatment analyses indicate experiencing the virtual accident effectively curbs risk habituation at both the behavioral and sensory level. The findings open new vistas for occupational safety training.

Subject areas: Behavioral neuroscience, Cognitive neuroscience, Techniques in neuroscience


Highlights

• Workers exhibited neural evidence of sensory habituation to warning signals
• Brief virtual reality (VR) training mitigated such habituation
• VR experience also enhanced workers’ vigilant behaviors to hazards at work
• VR is a promising intervention tool to curb risk habituation in workers


Introduction

Humans can become habituated to a variety of sensory signals.1,2,3 In high-risk workplaces, workers often become habituated to risks associated with tasks they perform frequently and consequently engage in unsafe behaviors.4,5,6,7,8,9,10 This behavioral tendency has been defined as risk habituation and identified as one of the key contributors to occupational injuries and fatalities.9,11 For example, in road work zones, workers’ vigilance to approaching struck-by hazards (e.g., construction vehicles) is apt to diminish after frequent exposures.12,13,14,15,16 In many instances of struck-by fatal accidents between construction vehicles and pedestrian workers, the construction vehicles were traveling at a speed of less than 10 mph9,12,17; workers failed to avoid the approaching vehicles because they ignored the warning alarms from the vehicles.18

In spite of periodic safety training, workers tend to focus on their work tasks while ignoring hazards they routinely encounter.19,20,21 Curbing workers’ risk habituation is thus critical to prevent injuries and fatalities at workplaces. Theoretically, a human’s habituation can be identified when stimulus-evoked responses decrease in amplitude (e.g., the intensity of sensory responses in the brain) and/or frequency (e.g., the number of behavioral responses elicited by the stimulus) with repeated exposure.1,3,22,23 Observing the developmental process of workers’ risk habituation in real-world settings is quite challenging,24 and as a result, the treatment of risk habituation within the scientific literature has been largely at the conceptual level. There is very little research that directly measures and quantifies workers’ risk habituation, and there exists no empirically supported intervention for curbing workers’ risk habituation, particularly at the sensory level.

The advancement of virtual reality (VR) technologies offers solutions for observing workers’ risk habituation. VR enables us to expose workers to close-to-real hazardous situations without risking actual injury and observe workers’ behaviors in response to repeated exposure to workplace hazards.25,26,27,28 VR also allows us to provide time-sensitive interventions when workers’ risk habituation is observed. In our previous study, we developed a method of observing and an intervention that curbs behavioral indicators of risk habituation using VR technologies.29 Our intervention is based on the principle of one-shot learning—rapid and substantial changes in human behaviors associated with a salient episodic memory.30,31,32 It has been theorized that workers who experienced an occupational injury or accident in the past perceive greater risks associated with workplace hazards and tend to behave more safely.4,12,33 In an effort to elicit similar changes in participants’ risk perception of frequently encountered hazards, in our previous study, we exposed naive student participants to a virtual accident.29 Furthermore, to empirically examine the effect of experiencing a virtual accident on risk habituation, we created a virtual work environment in which visual attention could be measured. The VR environment exposes participants to repeated struck-by hazards associated with construction vehicles and measures participants’ vigilant orienting behaviors in response to auditory warning alarms from those construction vehicles. During the experiment, participants experienced a virtual accident upon the emergence of habituated behaviors. Although experiencing the VR accident promoted vigilant behaviors in the student participants,29 such vigilant behaviors could simply reflect compensatory goal-directed attention to a revealed threat (i.e., participants actively monitor the vehicle’s movements once they learn it can strike them in the VR task). 
Whether such vigilant behaviors are the result of changes in how the sensory experience of the alarm is processed is not known, nor is whether such an intervention could curb behavior in experienced construction workers who are subject to significant levels of real-world risk habituation. To this end, the present study aimed to examine the consequence of experiencing the VR accident at the sensory level.

Here, we examined the potential neural indicators of workers’ risk habituation using electroencephalography (EEG). Event-related potentials (ERPs) provide a measure of stimulus-evoked neural responses with high temporal precision.34 ERPs could allow us to determine whether experiencing the VR accident alters the early or late stages of attentional processing. A modulation of early components is thought to be associated with perceptual, more automatic processing,35 while later components are generally associated with more strategic, controlled cognitive processes.36 In two sessions, performed before and after the VR intervention, we recorded ERPs to alarm and control sounds using the equiprobable paradigm (a variant of the oddball paradigm37,38). The alarm sound was similar to the warning signal used in both our VR environment and real construction sites. We mainly focused on two ERP components, one early (N1) and one late (P3), known to be sensitive to auditory habituation.39,40,41 Thus, EEG measures aimed to clarify the cognitive mechanisms that underlie the attenuation of risk habituation to warning signals after experiencing an accident in the VR environment. We hypothesized that construction workers would show a blunted ERP response to the alarm sound compared to the control sound and that this difference would either diminish or go away after experiencing the virtual accident. Such an outcome for the N1 component would provide direct evidence for the effect of the VR accident experience on the automatic sensory processing of warning signals. In contrast, the same outcome for the P3 component would suggest that the increase of vigilant behaviors observed after a VR accident29 ensues from controlled cognitive processes, such as goal-directed attention mechanisms. 
We supposed that an alteration of the automatic sensory processing, reflected by the N1 component, would have more long-lasting effects on behavior and, in consequence, would strongly support the utility of the VR intervention to curb risk habituation in construction workers.

Results

Decrease in vigilant orienting behaviors toward auditory warning alarms

As repeated exposure to workplace hazards might lead to a decrease in workers’ vigilant behaviors as an attentional consequence of risk habituation, we examined (1) the magnitude of reduction in workers’ vigilant behaviors to repeatedly presented auditory warning alarms from construction vehicles and (2) the effectiveness of experiencing the VR accident caused by participants’ low vigilance (Figure 1A). At a construction site, workers are apt to direct most of their attention to their work tasks and pay less attention to surrounding hazards.42,43,44 Thus, to create an immersive virtual environment and accelerate participants’ habituation, we designed a virtual road-cleaning task. The task involved removing all debris and cleaning the entire surface of the road with a broom. The participant’s actual/physical sweeping motion was synchronized in the VR environment via motion controllers attached to a real broomstick (Figure 1B). In the experiment, while participants were performing the road-cleaning task, construction vehicles continuously moved back and forth. Auditory warning alarms sounded to warn of the proximity of the vehicles (Figure 1C). Measuring the decrease in participants’ visual attention to an approaching hazard provides an empirically rigorous way of observing the development of risk habituation.45,46 Therefore, we measured participants’ vigilant orienting behaviors using the eye-tracking sensors embedded in the VR headset (Figure 1D). One exposure to the hazard was defined as one reciprocal movement of the vehicle moving behind the participant. In each exposure to the hazard, when a participant looked back and checked the approaching vehicle, the response time (the elapsed time between the presentation of the auditory warning alarm and a participant’s hazard-checking behavior) was documented. The frequency of vigilant behaviors (checking rate) was also recorded.
A VR accident was triggered upon repeated ignoring of the approaching vehicle (see Figure 1E and STAR Methods).
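The two behavioral measures described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the analysis code used in the study: the `Exposure` record, its field names, and the sample values are hypothetical, and the checking rate is assumed here to be the percentage of exposures in which the participant looked back at the vehicle.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Exposure:
    """One pass of the vehicle behind the participant (hypothetical record)."""
    alarm_onset_s: float            # time at which the warning alarm started
    first_check_s: Optional[float]  # time of the first look-back, None if ignored


def response_times(exposures: List[Exposure]) -> List[float]:
    """Elapsed time from alarm onset to the hazard check, for checked exposures only."""
    return [e.first_check_s - e.alarm_onset_s
            for e in exposures if e.first_check_s is not None]


def checking_rate(exposures: List[Exposure]) -> float:
    """Percentage of exposures in which the vehicle was checked (assumed definition)."""
    if not exposures:
        raise ValueError("no exposures recorded")
    checked = sum(e.first_check_s is not None for e in exposures)
    return 100.0 * checked / len(exposures)


# Made-up log: two checked exposures followed by two ignored ones.
log = [
    Exposure(10.0, 11.5),
    Exposure(40.0, 43.0),
    Exposure(70.0, None),
    Exposure(100.0, None),
]
print(response_times(log))  # [1.5, 3.0]
print(checking_rate(log))   # 50.0
```

Ignored exposures contribute to the checking rate but not to the response-time series, which is one reason the two measures complement each other.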

Figure 1.

Experimental procedure and intervention model

(A) The experiment timeline. Each experiment session consisted of the VR experiment (preceded by practice) and an EEG session measuring sensory responses to signals. The two experiment sessions were scheduled a week apart.

(B) Schematic of the VR experimental setup with a VR headset, motion controllers, and eye-tracking sensors embedded in the VR headset. The participants’ task was removing all debris and cleaning the entire surface of the road with a broom. The participant’s actual/physical sweeping motion was synchronized in the VR environment via motion controllers attached to a real broomstick.

(C–E) Schematic of the experimental and intervention scenario. While the participants were performing the road-cleaning task, construction vehicles continuously moved back and forth. Auditory warning alarms sounded to warn of the proximity of the vehicles. The eye-tracking sensors documented participants’ vigilant orienting behaviors. Participants’ repeated ignoring of approaching vehicles triggered a VR accident.

(F) The overview scene of the VR road construction environment.

(G and H) The participants performed the virtual road-cleaning task using a real broom with motion controllers.

(I) An example of a participant’s vigilant orienting behavior to check approaching hazards during the VR task.

In the first VR session, 20 out of 31 participants experienced the VR accident triggered by their repeated ignoring of the approaching vehicles (i.e., accident group; AG), and 11 participants did not experience the accident because they exhibited regular hazard checking while performing the task (i.e., no accident group; NAG).

Does the repeated exposure to struck-by hazards result in a delay in workers’ vigilant behaviors in the VR environment? To answer this question, we tested a bivariate linear regression model. The regression model for AG positively predicted participants’ response time (vigilant orienting behavior) in the first VR session (Figure 2A; R2 = 0.13, F(1, 54) = 7.16, p = 0.008; B1 = 0.49, p = 0.008, 95% confidence interval [CI] (0.13, 0.85)). With the increase in the number of exposures to the hazards, the AG’s vigilant behaviors were delayed. Consequently, participants in AG experienced the VR accident. The regression model for NAG was also significant (Figure 2A; R2 = 0.02, F(1, 249) = 5.78, p = 0.017; B1 = 0.13, p = 0.033, 95% CI (0.02, 0.25)). However, the R2 value was relatively low because participants in NAG were constantly vigilant toward the hazards. Thus, the association between repeated exposure to the hazards and workers’ vigilant behaviors was attenuated. To investigate further the difference in habituation tendency between AG and NAG, we tested a multiple linear regression analysis model (Figure 2A; R2 = 0.08, F(3, 303) = 8.92, p < 0.001). The result indicated that the interaction between response time and the occurrence of the VR accident approached significance (B3 = 0.36, p = 0.052, 95% CI = −0.01, 0.71). The result of the first VR session indicated the AG’s vigilant orienting behavior was slowed with the increase in the number of exposures to the hazard, culminating in the triggering of the VR accident. On the other hand, the NAG’s vigilant orienting behavior did not significantly slow down over time. Therefore, to supplement the results of the analysis of response time, we analyzed the frequency of participants’ vigilant orienting behaviors (checking rate). There was a significant difference in checking rate between AG and NAG (Figure 2B; Z = 217, p < 0.001). 
This further suggests that NAG exercised heightened levels of attentiveness toward approaching hazards as compared to AG and, as a consequence, did not experience the VR accident.
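To make the slope interpretation concrete, the sketch below fits a bivariate line of response time on exposure number, assuming an ordinary least-squares fit. The function name `fit_line` and the data are illustrative assumptions and do not reproduce the study's data or software; a positive slope means that hazard checks are delayed as exposures accumulate.

```python
from typing import Sequence, Tuple


def fit_line(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float, float]:
    """Ordinary least squares for y = b0 + b1 * x; returns (b0, b1, r_squared)."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need at least three paired observations")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    if sxx == 0 or syy == 0:
        raise ValueError("x and y must both vary")
    b1 = sxy / sxx                     # change in response time per exposure
    b0 = mean_y - b1 * mean_x          # predicted response time at exposure 0
    r_squared = sxy ** 2 / (sxx * syy) # share of variance explained by the line
    return b0, b1, r_squared


# Made-up example: response time (s) grows with the exposure number.
exposure_number = [1, 2, 3, 4, 5]
response_time_s = [2.0, 2.4, 3.1, 3.3, 4.2]
b0, b1, r2 = fit_line(exposure_number, response_time_s)
print(f"intercept={b0:.2f}, slope={b1:.2f}, R2={r2:.2f}")
```

A group comparison such as the one reported above would add a group indicator and a group-by-exposure interaction term to this model; that extension is omitted here for brevity.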

Figure 2.

Behavioral risk habituation and the occurrence of the VR accident

(A) The slopes for the effect of the occurrence of the VR accident on response time in the first VR session (see the STAR Methods section for details) for AG and NAG. The shaded envelope indicates the 95% CI for predictions from the linear model.

(B) The average frequency of checking behaviors (checking rate) of NAG and AG. Standard error bars of the mean are included.

Intervention effect of a single VR accident experience on vigilant orienting behaviors

Can experiencing a single VR accident following behavioral indicators of risk habituation curb the tendency to ignore relevant hazards, and is that intervention effect sustained? To answer these questions, we asked the participants to complete the VR task a week after the first participation. The result from the multiple linear regression model for AG, which examines the effect of number of exposures on response time in the second VR session, indicated a significant negative interaction between response time in the second VR session and the VR accident experience in the first VR session (Figure 3A; B3 = 0.45, p = 0.007, 95% CI = 0.12, 0.77), while this interaction was not significant in NAG (Figure 3C; B3 = 0.09, p = 0.29, 95% CI = −0.08, 0.26). In addition, AG presented a significant difference (Figure 3B; t19 = −11.09, p < 0.001, 95% CI (−73.98, −50.48), d = −3.08) in the checking rate between the first and second VR sessions, while NAG did not (Figure 3D; t10 = 0.52, p = 0.62, 95% CI (−0.12, 0.18), d = 0.20). After experiencing the VR accident in the first session, AG exhibited consistent vigilant orienting behaviors in the second VR session. The result confirmed that experiencing a single VR accident triggered by the ignoring of a hazard mitigated participants’ habituation and increased participants' vigilant orienting behaviors.
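The between-session comparison of checking rates has the form of a paired-samples t test, with degrees of freedom equal to the number of participants minus one (19 for the 20 AG participants, 10 for the 11 NAG participants). The sketch below shows that computation under stated assumptions: the function name and the checking-rate values are made up for illustration, and the difference is taken as first session minus second session, so an increase in checking yields a negative t.

```python
import math
from statistics import mean, stdev
from typing import Sequence, Tuple


def paired_t(first: Sequence[float], second: Sequence[float]) -> Tuple[float, int]:
    """Paired-samples t statistic for (first - second) and its degrees of freedom."""
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two equally long samples with at least two pairs")
    diffs = [a - b for a, b in zip(first, second)]
    sd = stdev(diffs)  # sample standard deviation (n - 1 in the denominator)
    if sd == 0:
        raise ValueError("differences have zero variance")
    t_value = mean(diffs) / (sd / math.sqrt(len(diffs)))
    return t_value, len(diffs) - 1


# Hypothetical checking rates (%) for five participants in each session.
session_1 = [20, 35, 10, 40, 25]
session_2 = [90, 85, 80, 95, 100]
t_value, df = paired_t(session_1, session_2)
print(f"t({df}) = {t_value:.2f}")  # t(4) = -13.20
```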

Figure 3.

Intervention effect on increasing vigilant orienting behaviors

(A) The slopes for the effect of the number of exposures on response time in AG for each VR session. The shaded envelope indicates the 95% CI for predictions from the linear model.

(B) Average checking rate of AG for each VR session. On each box, the central mark indicates the median. The whiskers extend to the highest value and the lowest value.

(C) The slopes for the effect of the number of exposures on response time in NAG for each VR session. The shaded envelope indicates the 95% CI for predictions from the linear model.

(D) Average checking rate of NAG for each VR session. On each box, the central mark indicates the median. The whiskers extend to the highest value and the lowest value.

Intervention effect on sensory-perceptual processing of warning alarms

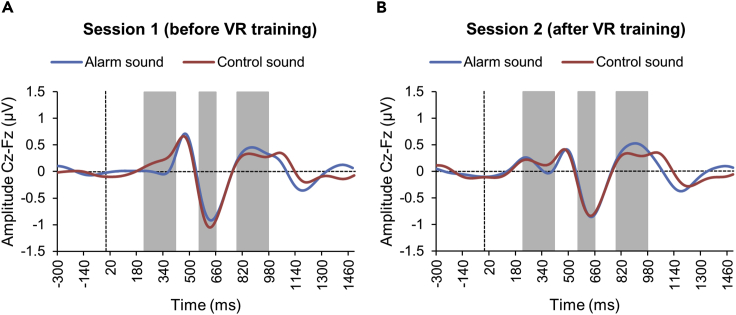

Participants passively listened to the alarm sound and a matched control sound while neural responses to the sounds were recorded using EEG, both before the initial VR session and after the second session. Grand-average ERP waveforms are illustrated as a function of the type of sound for each EEG session in Figure 4. Analyses performed within the 220–420 ms time window revealed a significant main effect of type of sound, F(1, 18) = 16.44, p < 0.001, partial η² = 0.477, with higher ERP amplitudes for the control sound than for the alarm sound, no significant main effect of session, F(1, 18) = 0.24, p = 0.627, partial η² = 0.013, and no significant interaction between the type of sound and session, F(1, 18) = 2.53, p = 0.129, partial η² = 0.123. These results suggest greater early attention to the control sound than to the alarm sound consistently across the two sessions.

Figure 4.

Grand-average ERP waveforms at the Cz and Fz electrode sites for alarm and control sounds

(A) Before and (B) after the VR training. The gray rectangles indicate the time window of the three ERP components analyzed.

Analyses performed within the 540–660 ms time window revealed a significant main effect of type of sound, F(1, 18) = 8.15, p = 0.011, partial η² = 0.312, with higher ERP amplitudes for the control sound than for the alarm sound, no significant main effect of session, F(1, 18) = 0.49, p = 0.494, partial η² = 0.026, and crucially, a significant interaction between the type of sound and session, F(1, 18) = 5.12, p = 0.036, partial η² = 0.222, indicating that ERP amplitude differences between the control sound and the alarm sound significantly decreased after the VR training. Subsequent t-tests showed that ERP amplitudes were significantly greater for the control sound than for the alarm sound before the VR training, t(18) = 3.06, p = 0.007, d = 0.70, whereas ERP amplitudes between the two sounds did not differ significantly after the VR training, t(18) = 0.58, p = 0.284, d = 0.13.

Analyses performed within the 804–980 ms time window also revealed a significant main effect of type of sound, F(1, 18) = 12.64, p = 0.002, partial η² = 0.413, with higher ERP amplitudes for the alarm sound than for the control sound, no significant main effect of session, F(1, 18) = 0.15, p = 0.706, partial η² = 0.008, and no significant interaction between the type of sound and session, F(1, 18) = 0.70, p = 0.413, partial η² = 0.037. Thus, late attention to the alarm sound was higher than to the control sound, and this effect did not differ across the two sessions.
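The effect sizes reported with the tests above can be cross-checked from the test statistics alone. The value given after each F test matches partial eta squared computed as F·df_effect / (F·df_effect + df_error), and the d values given after the follow-up t-tests match t divided by the square root of the number of participants. The sketch below is only a consistency check under those two assumed formulas; the function names are illustrative and the study's own computation may have differed.

```python
import math


def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


def d_from_paired_t(t_value: float, n_participants: int) -> float:
    """Standardized mean difference implied by a paired t statistic (assumed convention)."""
    return t_value / math.sqrt(n_participants)


# Values taken from the whole-group analyses (19 participants, error df = 18).
print(round(partial_eta_squared(16.44, 1, 18), 3))  # 0.477
print(round(partial_eta_squared(8.15, 1, 18), 3))   # 0.312
print(round(d_from_paired_t(3.06, 19), 2))          # 0.7
print(round(d_from_paired_t(0.58, 19), 2))          # 0.13
```

Small discrepancies in the third decimal place can arise because the published F values are themselves rounded.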

Participants who experienced at least one accident in the VR training (N = 16)

We performed the same analyses as above after removing participants who did not experience the accident in the VR training (N = 3). Results are overall consistent with those observed in the whole group (see details below). The only notable difference was observed within the 220–420 ms time window.

Grand-average ERP waveforms are illustrated as a function of type of sound for each EEG session in Figure 5. Analyses performed within the 220–420 ms time window after stimulus onset revealed a significant main effect of type of sound, F(1, 15) = 10.49, p = 0.006, partial η² = 0.412, with higher ERP amplitudes for the control sound than for the alarm sound, no significant main effect of session, F(1, 15) = 0.25, p = 0.628, partial η² = 0.016, and an interaction between type of sound and session close to significance, F(1, 15) = 4.22, p = 0.058, partial η² = 0.220, suggesting that ERP amplitude differences between the control sound and the alarm sound tended to decrease after the VR training. Subsequent t-tests showed that ERP amplitudes were significantly greater for the control sound than for the alarm sound before the VR training, t(15) = 3.19, p = 0.006, d = 0.80, whereas ERP amplitudes between the two sounds did not differ significantly after the VR training, t(15) = 0.82, p = 0.423, d = 0.21.

Figure 5.

Grand-average ERP waveforms at the Cz and Fz electrode sites for alarm and control sounds

(A) Before and (B) after the VR training for participants who experienced at least one accident in the two VR sessions (N = 16). The gray rectangles indicate the time window of the three ERP components analyzed.

Analyses performed within the 540–660 ms time window after stimulus onset revealed a significant main effect of type of sound, F(1, 15) = 6.04, p = 0.027, partial η² = 0.287, with higher ERP amplitudes for the control sound than for the alarm sound, no significant main effect of session, F(1, 15) = 2.18, p = 0.160, partial η² = 0.127, and crucially, a significant interaction between type of sound and session, F(1, 15) = 8.84, p = 0.009, partial η² = 0.371, indicating that ERP amplitude differences between the control sound and the alarm sound decreased significantly after the VR training. Subsequent t-tests showed that ERP amplitudes were significantly greater for the control sound than for the alarm sound before the VR training, t(15) = 3.01, p = 0.009, d = 0.75, whereas ERP amplitudes between the two sounds did not differ significantly after the VR training, t(15) = 0.26, p = 0.801, d = 0.06.

Analyses performed within the 804–980 ms time window after stimulus onset revealed a significant main effect of type of sound, F(1, 15) = 8.71, p = 0.010, partial η² = 0.367, with higher ERP amplitudes for the alarm sound than for the control sound, no significant main effect of session, F(1, 15) = 0.08, p = 0.778, partial η² = 0.005, and no significant interaction between type of sound and session, F(1, 15) = 0.95, p = 0.345, partial η² = 0.059.

Discussion

We developed a VR safety training environment that exposes experienced construction workers to repeated struck-by hazards and simulates a virtual accident upon the attentional consequence of risk habituation. We warned participants about potential struck-by hazards and requested that they pay attention to approaching vehicles for safety purposes. However, during the experiment, participants became accustomed to repeated exposure to warning alarms associated with struck-by hazards and focused largely on performing the road-cleaning task. Our data show that, overall, participants became increasingly inattentive to struck-by hazards in our VR task, with a substantial proportion of these construction workers experiencing a virtual accident upon repeated ignoring of the hazard. Our findings demonstrate that construction workers’ risk habituation can be observed in the intentionally designed VR environment, thereby providing the potential for manipulating and intervening in risk habituation, a major causal factor of fatal accidents at workplaces.

We further investigated the intervention effect of experiencing the single VR accident on curbing risk habituation. Experiencing the VR accident significantly affected participants’ risk habituation. For participants who experienced the VR accident, a progressive slowing of response time in visual checking was no longer observed in the second session, and the frequency (checking rate) of participants’ vigilant orienting behaviors significantly increased across sessions, with the intervention effects sustained for at least one week. The findings dovetail with the results of previous conceptual studies claiming that workers who experienced work-related injuries or accidents in real life tend to perceive greater risks associated with workplace hazards.4,12,33 The results of this study also suggest the potential of VR as a one-shot learning platform that leads to rapid changes in workers’ behaviors with a salient and memorable accident experience.

Our findings also demonstrate that individual workers have different risk habituation tendencies. During the VR task, some participants consistently responded to the warning alarms with vigilant orienting across both VR sessions. However, participants who experienced the VR accident in the first session frequently ignored the approaching construction vehicles and showed rapidly decaying vigilant orienting behaviors. Our VR task, therefore, differentiates between participants who are more or less prone to risk habituation. The direct measurement of workers’ behavioral responses in the VR environment may help researchers and construction safety managers identify which workers are risk prone or vulnerable to risk habituation, thereby allowing for the provision of tailored safety training. Such tailored safety training may also help workers recognize their risk habituation tendencies toward repeatedly encountered workplace hazards and allow them to understand when they engage in risky behaviors.

The results of EEG data analyses also support the intervention effect of our VR training on restoring workers’ sensory responses to warning signals. EEG data are consistent with behavioral effects observed in the VR sessions, especially for the ERP component measured within the 540–660 ms time window, which presumably reflects the N1 because this component is the first negative wave response recorded after auditory stimulus onset.47 The N1 time window is usually earlier (between 100 and 200 ms after stimulus onset48,49), but the time discrepancy could be due to the specificity of sounds presented in our study (i.e., long- and non-monofrequency), which largely differ from short- and monofrequency sounds commonly used in auditory EEG studies (e.g., 60 ms39; 50 ms50; 70 ms41). The alarm sound presented in the EEG sessions was extracted from the alarm signal used in real construction sites to increase the ecological validity of our experiment.51 The auditory N1 component is related to early attention34,52 and sensitive to habituation.39,50

Early attention to the control sound was greater than to the alarm sound before completing the VR training, as reflected by the ERP amplitude difference within the 540–660 ms time window, suggesting a consequence of habituation to the alarm sound resulting from real-world construction experience preceding the experiment. A potential way to mitigate this issue in construction sites could be to employ a variety of alarm sounds and present them in random sequences (i.e., the use of a specific alarm sound would not be predictable by workers). This method might significantly reduce habituation to each specific warning signal, and as a consequence, workers would be less inclined to engage in unsafe behaviors stemming from ignoring a hazard.

Importantly, the ERP amplitude difference between the control and the alarm sound within the 540–660 ms time window significantly decreased after the VR training. This outcome is consistent with results observed within the 220–420 ms time window when analyses were performed only with participants who experienced a VR accident. Thus, we found physiological evidence of the VR training reducing habituation to the alarm sound at an early stage of attentional processing. The mechanism for sensory N1 habituation is thought to be related to refractoriness of cell populations involved in basic sensory processing systems rather than high-level cognitive processes exerting top-down control over sensory cortex,53,54 suggesting that the reduction of the N1 amplitude difference between the two sounds observed after the VR training is not due to explicit, top-down strategies. No modulation of later ERP components was observed after the VR training. Previous studies reported habituation of auditory stimuli for late components (e.g., P341,55), but this outcome could be evidenced only in specific conditions (e.g., when attentional resources available to perform the task are reduced39). Altogether, the EEG data suggest that the VR training enhances early attentional and sensory-perceptual processing of auditory stimuli signaling a potential danger in construction sites.

The present study demonstrates habituation built up from protracted real-world experience in the first EEG session that is largely abolished following the VR accident intervention. That is, our data show that a singular experience can undo the consequences of months to years of routine exposure to sensory signals. Such an apparently profound effect on sensory habituation is, to our knowledge, unprecedented and opens new vistas in studies of perception and learning. The consequences of habituation may be much more plastic and sensitive to change than previously thought, particularly in the context of an affectively salient precipitating event. Future research should explore the modulability of sensory habituation more broadly and how it responds to recent salient events.

Limitations of the study

Several limitations of this study should be noted. To avoid manipulating participants’ behaviors during the VR experiment, the VR accident was triggered only in response to each participant’s own vigilant orienting behaviors (i.e., repeated failure to check the approaching vehicle). Therefore, the samples were unevenly distributed, and the number of participants who did not experience the VR accident was relatively small. During the experiment, some participants also never exhibited any vigilant orienting behaviors. The data from these participants were included only in the vigilant behavior frequency analysis. The Occupational Safety and Health Administration (OSHA) recognizes four leading causes of fatal accidents: falls, struck-by, caught-in/between, and electrocution hazards (i.e., fatal-four hazards).56 In this study, we focused on observing and curbing construction workers’ risk habituation to struck-by hazards associated with construction vehicles. Future work could extend the proposed intervention to curb workers’ habituation to other fatal-four hazards.

STAR★Methods

Key resources table

| REAGENT or RESOURCE | SOURCE | IDENTIFIER |
|---|---|---|
| Software and algorithms | | |
| VIVE SRanipal Eye Tracking SDK | HTC Corporation | Version 1.1.0.1 |
| Unreal Engine | Epic Games, Inc. | Version 4.22.3 |
| Autodesk 3ds Max | Autodesk Inc. | Version 2019 |
| Autodesk Maya | Autodesk Inc. | Version 2019 |
| PsychoPy | Open Science Tools Ltd. | Version 2021.1.4 |
| OpenBCI GUI | OpenBCI | Version 5.0.4 |
| OpenSync library | OpenSync | Version 3.0 |
| Logic Pro X | Apple Inc. | Version 10.6.3 |
| Python | Python Software Foundation | Version 3.8.2 |
| Python SciPy toolbox | SciPy | Version 1.4.1.33 |
| MNE-Python package | https://doi.org/10.5281/zenodo.7314185 | Version 0.24 |
| EEGLAB | https://doi.org/10.1016/j.jneumeth.2003.10.009 | Version 2021.1 |
| Artifact Subspace Reconstruction method | This study (https://github.com/moeinrazavi/EEG-ASR-Python) | Version 1.0.0 |
| MATLAB | Mathworks | Version R2021a |
| Other | | |
| HTC Vive Pro Eye | HTC Corporation | |
| OpenBCI EEG System | OpenBCI Board Kit of 32 bits and USB Dongle, a cap containing 20 electrodes | |

Resource availability

Lead contact

Further information and requests for resources should be directed to and will be fulfilled by the lead contact, Changbum R. Ahn (cbahn@snu.ac.kr).

Materials availability

This study did not generate new unique reagents.

Experimental model and subject details

A total of thirty-five construction workers (32 males and 3 females) aged between 20 and 43 years (mean age: 27.26 years, SD: 6.09 years) were recruited from a construction company in the United States. All the participants were pedestrian workers working on heavy civil construction projects. The experimental protocol was approved by the Institutional Review Board (IRB) at Texas A&M University (IRB 2019-1270D). Informed consent was obtained from all participants. The experiment was conducted in a quiet space at a safety training facility of the construction company. During the experiment, access to the facility was controlled. To examine the sustained intervention effect, each participant was asked to participate in a second session one week later. Each session, including the VR experiment and the EEG experiment, took approximately 3–3.5 h. Thus, each participant took a total of 6–7 h to complete both sessions. All participants voluntarily participated in this study. Participants were compensated with $200 for participating in the study. Data from four participants, who either did not return for their second study session (N = 2) or withdrew from the experiment because of motion sickness (N = 2), were discarded. Thus, data from thirty-one participants entered the VR training data analyses. Among the thirty-one road construction workers who completed the two VR sessions, the data of twelve participants were excluded from the EEG analyses due to technical problems or system malfunctions (N = 8; i.e., loss of data due to a dysfunction of the Bluetooth system) or artifacts in the EEG recordings (N = 4; see event-related potential analyses section) in at least one of the two EEG sessions.

Methods details

Overview

We designed and performed the experiment with actual road construction workers (1) to examine workers’ behavioral and sensory habituation to repeated auditory warning alarms from construction vehicles and (2) to validate the sustained intervention effect. An overview of the experimental procedure is provided in Figure 1A. In the first session, the participants first completed the EEG session, then the VR training. A week later, the participants completed the second session, with the VR training first and the EEG session afterward.

Apparatus

Virtual reality experiment

The HTC Vive Pro Eye (HTC Corporation, New Taipei City, Taiwan; resolution: 2880 × 1600 pixels; field of view: 98° horizontal and 98° vertical; refresh rate: 90 Hz) and a Dell Precision T5820 (Dell, Round Rock, TX, USA; CPU: Intel i9-10900 × 3.7 GHz; RAM: DDR4 128 GB; GPU: Nvidia GeForce RTX 3080) were used to display the VR environment. Eye movement was recorded using eye-tracking sensors embedded in the HTC Vive Pro Eye with a peak frequency of 90 Hz.

EEG experiment

A Dell Precision T3620 (Dell, Round Rock, TX, USA) equipped with PsychoPy software v2021.1.457,58,59 was used to present the stimuli on a Dell P217H monitor. The participants viewed the monitor from a distance of approximately 70 cm in a dimly lit room. Participants also wore Etymotic ER4XR 45Ω high fidelity, noise-isolating in-ear earphones (Etymotic Research, Elk Grove Village, IL, USA) to listen to all sounds.

EEG was recorded with the OpenBCI 32-bit Board Kit and USB Dongle (www.openbci.com), with a cap containing 20 electrodes pre-organized according to the international 10–20 system.60 For our study, we used 15 channels from the 10–20 electrode placement system: C3, C4, Cz, F3, F4, Fz, Fp1, Fp2, P3, P4, Pz, T3, T4, T5, T6. We embedded the OpenSync library in PsychoPy58 to synchronize and record EEG signals with the associated alarm/control task markers.61 All electrode impedances were kept below 15 kΩ. The EEG was sampled and digitized at 125 Hz.

Virtual reality experiment procedure

Virtual reality environment

A road maintenance working environment in which participants would be part of an asphalt milling crew was selected and designed as the experimental scenario. The VR environment was created using Unreal Engine v.4.22.3.62 All components included in the VR environment were created using Autodesk 3ds Max v.201963 and Autodesk Maya v.2019.64 To create a VR environment that effectively elicits participants’ risk habituation, the experimental scenario focused on repeated exposure of participants to potential struck-by hazards associated with construction vehicles, with associated auditory warning alarms from the vehicles. The movement of virtual construction vehicles was designed to respond to a participant’s behavior. The reciprocal back-and-forth movement of a vehicle (i.e., street sweeper) that travels behind a participant is controlled by the real-time measurement of its distance from the participant. When the street sweeper reaches the designated minimum distance to a participant (7.5 m), it turns off its warning alarm and begins to reverse, thereby repeatedly exposing the participant to the risk of a potential runover accident without interfering with the participant’s virtual road cleaning task. While a participant is performing the sweeping task, dump trucks repeatedly pass by very close to the participant. All the construction vehicles in the VR environment emit operating sounds and auditory warning alarms (i.e., beeping sounds), and these warning alarms are carefully designed to create a realistic auditory experience. A sound attenuation function was adopted: the volume of each audio source changes in response to the distance and angle between the participant and the source. A participant can thus recognize the proximity and direction of the approaching hazard based on the warning alarms, similar to a real-world road construction site. To design a close-to-real virtual environment, the experimental scenario was reviewed by three experienced construction safety managers at nationwide heavy civil construction companies.

Task in the VR training

While working at a road construction/maintenance site, workers are apt to direct most of their attentional resources to a work task and become less attentive to workplace hazards.42,43,44 Thus, we designed a virtual road cleaning task not only to accelerate participants’ risk habituation within a short time period but also to promote active participation in the experiment. During the VR experiment, a participant was asked to perform a road cleaning task in a road maintenance work zone. The task was to remove all debris and clean the surface of the working lane with a broom. A participant’s physical sweeping movement with an actual broomstick was captured by VR motion controllers attached to the broomstick and synchronized in the VR environment with a virtual broom.

Vigilant orienting behavior measurement

In this study, we defined vigilant orienting behavior as hazard-checking behavior—participant’s eye and/or head movement to check approaching construction vehicles. While working at a dangerous workplace, workers must pay attention to a hazard in order to correctly respond to it. Therefore, recognizing and evaluating the risk associated with workplace hazards require selective attention (specifically, visual attention).45,65,66,67 Although orienting behavior does not always lead to workers’ proper risk perception of workplace hazards,68,69 visual attention is a vital requisite for enhanced risk perception.45 Visual attention is intimately related to eye movements,46,70 and visual checking of surrounding hazards in workplaces is an essential safety behavior. Therefore, measuring the latency (response time) in participants’ visual attention responses to repeatedly encountered workplace hazards provides a concrete and empirically grounded analytical approach to monitoring risk habituation development. In this regard, to measure the latency pattern in participants’ visual attention to the approaching construction vehicle, an eye-tracking system was embedded into the VR environment. During the experiment, eye-tracking sensors integrated with the VR headset document what a participant is looking at with a peak frequency of 90 Hz. The eye movement monitoring system documents the latency of exhibiting vigilant orienting behaviors (response time in each exposure), and the frequency of vigilant orienting behaviors. The collected data from the VR training was preprocessed as follows:

In this study, we defined one exposure to the struck-by hazard as one reciprocal movement of the street sweeper traveling behind a participant. During the VR training, when a participant exhibited a vigilant orienting behavior (looking back and checking the proximity of the approaching vehicle for the first time in each exposure), the latency of response time (i.e., the delay between the beginning of the alarm sounds and participants’ vigilant orienting behaviors) was documented.

The frequency of vigilant orienting behaviors (checking rate, CR) was defined using Equation 1:

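$$\mathrm{CR}_i = \frac{\text{number of checking cycles}_i}{\text{number of exposures}_i} \qquad \text{(Equation 1)}$$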

where number of checking cycles = the number of cycles in which participant i succeeded in checking the approaching street sweeper; and number of exposures = the number of exposures of participant i to the struck-by hazard.
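The two behavioral measures defined above can be illustrated with a minimal sketch. The record layout and the names `Exposure`, `response_latency`, and `checking_rate` are hypothetical and are not taken from the study's software; the checking rate is expressed here as a proportion, which is an assumption.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Exposure:
    """One reciprocal movement of the street sweeper (hypothetical record layout)."""
    alarm_onset_s: float            # time at which the warning alarm started
    first_check_s: Optional[float]  # time of the first look back, None if never checked


def response_latency(exposure: Exposure) -> Optional[float]:
    """Delay between alarm onset and the first vigilant orienting behavior."""
    if exposure.first_check_s is None:
        return None  # no check in this exposure, so no latency is defined
    return exposure.first_check_s - exposure.alarm_onset_s


def checking_rate(exposures: List[Exposure]) -> float:
    """Proportion of exposures in which the participant checked the sweeper."""
    if not exposures:
        raise ValueError("at least one exposure is required")
    checked = sum(1 for e in exposures if e.first_check_s is not None)
    return checked / len(exposures)


# Illustrative values only, not data from the study.
session = [Exposure(0.0, 2.5), Exposure(30.0, None),
           Exposure(60.0, 64.0), Exposure(90.0, 97.5)]
latencies = [response_latency(e) for e in session]
rate = checking_rate(session)
```

Exposures without a check contribute to the checking rate but yield no latency, which mirrors the exclusion rule applied in the response time analysis.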

Intervention (A single memorable experience through a virtual accident experience)

The VR environment includes a system that simulates struck-by accidents with construction vehicles when a participant habitually ignores the approaching vehicles. To make the VR accident simulation a memorable event, it was dramatized by emphasizing aversive feedback to the participant. The VR accident simulation includes visual accident scenes, crash sounds, and haptic feedback via the VR motion controllers. In the first VR session, the accident with the street sweeper is triggered by a participant’s habituated inattention to approaching construction vehicles. To trigger the VR accident upon a participant’s habituation, a behavior-checking system with a moving window was adopted. The moving window counted the number of a participant’s successes in checking the approaching vehicles over the five most recent exposures. When a participant fails to check on the approaching sweeper in three out of these five exposures, the street sweeper starts to move forward toward the participant until it virtually collides with the participant. If a participant recognizes the street sweeper’s erratic movement and succeeds in evading the collision, the street sweeper resumes its normal reciprocal movement, and the behavior-checking system restarts its count of the participant’s vigilant orienting behaviors. To give a participant enough time to become aware of surrounding hazards while performing the assigned VR road cleaning task, the VR accident was not triggered until at least 10 exposures to the struck-by hazard, regardless of the participant’s continued inattention to the approaching vehicles. Although the threshold for the VR accident simulation (a failure to visually check in three of the five most recent exposures) is to some degree arbitrary, a participant’s frequent inattention to the repeatedly approaching construction vehicle serves as a proxy for habituation. The VR accident with the sweeper was only triggered in the first VR session.
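The moving-window rule described above can be sketched as follows. This is an illustration under stated assumptions, not the code used in the VR environment: the class name `AccidentTrigger` is hypothetical, "three out of five" is read as three or more misses, and the 10-exposure minimum is read as "no trigger before the tenth exposure".

```python
from collections import deque


class AccidentTrigger:
    """Hypothetical sketch of the behavior-checking system with a moving window."""

    WINDOW = 5          # most recent exposures considered
    MISS_THRESHOLD = 3  # misses within the window that start the accident (assumed >=)
    MIN_EXPOSURES = 10  # no accident before this many exposures

    def __init__(self):
        self.recent = deque(maxlen=self.WINDOW)  # True = participant checked
        self.exposures = 0

    def record(self, checked: bool) -> bool:
        """Register one exposure; return True if the accident should be triggered."""
        self.exposures += 1
        self.recent.append(checked)
        if self.exposures < self.MIN_EXPOSURES or len(self.recent) < self.WINDOW:
            return False
        misses = sum(1 for c in self.recent if not c)
        return misses >= self.MISS_THRESHOLD

    def restart(self):
        """Clear the window after the participant evades the collision."""
        self.recent.clear()


# Illustrative run: seven checked exposures followed by three missed ones.
trigger = AccidentTrigger()
outcomes = [trigger.record(c) for c in [True] * 7 + [False] * 3]
```

In this run the accident is triggered on the tenth exposure, when the last five exposures contain three misses.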

In the second VR session, a participant was required to perform the same road cleaning task and was exposed to the same type of struck-by hazard as in the first VR session (the risk associated with the street sweeper behind a participant). The latency and frequency of participants’ vigilant orienting behaviors were measured in the same manner as in the first VR session. To observe the sustained intervention effect of experiencing the accident on participants' behaviors, the VR accident with the sweeper was not triggered in the second VR session. However, to make the VR environment more realistic, another VR accident simulation was designed and embedded in the second VR session. During the VR training, dump trucks repeatedly passed a participant in the lane next to the working lane where the participant was performing the road cleaning task. The accident with the dump truck was triggered about 20 min after the start of the experiment. Once a participant reached an invisible accident trigger point, one of the dump trucks changed direction and backed up into the lane where the participant was working. The VR accident with the dump truck was also avoidable if a participant recognized the erratic movement of the truck and dodged the truck heading toward the participant. Again, the struck-by hazards presented in the VR environment emitted auditory warning alarms when they were heading toward a participant. This dump truck interaction was not of interest in the present study and was intended to pilot procedures for a potential future study.

EEG experiment procedure

Auditory stimuli

The two auditory stimuli (alarm and control) were made using Logic Pro X software on a 2017 MacBook Pro (Apple Inc., Cupertino, CA, USA). The original alarm sound, a truck backing-up beep, was extracted from video files.71 To create the control sound, the alarm sound was modified using a sound equalizer: the sound distribution was equated to have the same magnitude over the spectrum of the sound (from 39 Hz to 14,200 Hz), which generates an ambient white noise. The two sounds lasted 600 ms each and were equated using the normalize function to set the loudness to 23 LUFS.

Procedure

The Oddball paradigm has been widely employed to assess auditory stimulus discrimination.72,73 This paradigm usually consists of the frequent presentation of one stimulus designated the “standard” interspersed with rare occurrences of one different stimulus designated the “deviant”.74 A common alternative is an equiprobable paradigm, in which each auditory stimulus is presented an equal number of times in a random order.37,47,75 We opted for the latter paradigm in the present study to efficiently control the habituation of sounds. Note that the equiprobable paradigm is often used as a passive task, which requires no behavioral response.38

Twenty images of construction sites were used as backgrounds in the EEG sessions. Half of the images were presented in the first session and the other half in the second session. In each session, each of the 10 images was presented 10 times in a random order with the restriction that the same image could not be presented twice in a row. We refer to the set of events occurring during the presentation of one image as a sequence. Each sequence included four sounds (of 600 ms) separated by an interstimulus interval of 3500 ms, 4500 ms, or 5500 ms. The first sound of a sequence was presented 1750 ms or 2750 ms after the beginning of the sequence to make sure that the EEG signal related to the processing of the sound was not (or was only minimally) affected by the processing of the image. The last sound of a sequence terminated 1750 ms or 2500 ms before the end of the sequence. To keep the duration of each sequence constant, the five intervals that preceded and followed the sounds always lasted 18 s in total. All the possible combinations of intervals that met this requirement were presented in a random order. Thus, each sequence lasted 20.4 s (18 s of intervals plus four 600 ms sounds; Figure 6). In each session, 200 alarm sounds and 200 control sounds were presented. Within every two consecutive sequences, four alarm sounds and four control sounds were presented in a random order so that the EEG session never included more than eight similar sounds in a row. Participants had a self-paced break after 50 sequences in each session.

Figure 6.

Example of a sequence of events in the EEG sessions

(A) Example of the EEG experiment.

(B) A sequence included four sounds (of 600 ms) separated by an interstimulus interval of 3,500 ms, 4,500 ms, or 5,500 ms. The first sound of a sequence was presented 1,750 ms or 2,750 ms after a background image appeared (which corresponded to the beginning of a sequence) to make sure that the EEG signal related to the processing of the sound was not (or minimally) affected by the processing of the image. The fourth sound terminated 1,750 ms or 2,500 ms before the end of the sequence. To keep constant the duration of each sequence, the five intervals that preceded and followed the sounds always lasted 18 s in total. All the possible combinations of intervals that met this requirement were presented, in a random order. Thus, each sequence lasted 20.4 s.
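The ordering constraints of the EEG procedure (no background image twice in a row; four alarm and four control sounds within every two consecutive sequences) can be illustrated with a short sketch. The function names are hypothetical, the study's PsychoPy script may have drawn the orders differently, and the image draw below does not sample uniformly from all valid orders.

```python
import random


def image_order(n_images=10, repeats=10, rng=None):
    """Random image order in which no image appears twice in a row."""
    rng = rng or random.Random()
    while True:
        remaining = [repeats] * n_images
        order = []
        for _ in range(n_images * repeats):
            options = [i for i in range(n_images)
                       if remaining[i] > 0 and (not order or i != order[-1])]
            if not options:
                break  # dead end: only the previous image is left, so start over
            weights = [remaining[i] for i in options]
            pick = rng.choices(options, weights=weights)[0]
            remaining[pick] -= 1
            order.append(pick)
        if len(order) == n_images * repeats:
            return order


def sound_order(n_sequences=100, rng=None):
    """Four sounds per sequence; each pair of sequences holds 4 alarms and 4 controls."""
    rng = rng or random.Random()
    sounds = []
    for _ in range(n_sequences // 2):
        block = ["alarm"] * 4 + ["control"] * 4
        rng.shuffle(block)
        sounds.extend(block)
    return [sounds[i:i + 4] for i in range(0, len(sounds), 4)]


def longest_run(items):
    """Length of the longest run of identical consecutive items."""
    best = run = 1
    for previous, current in zip(items, items[1:]):
        run = run + 1 if previous == current else 1
        best = max(best, run)
    return best


images = image_order(rng=random.Random(0))
sequences = sound_order(rng=random.Random(0))
all_sounds = [s for seq in sequences for s in seq]
```

Because each block of eight sounds is balanced, a run of identical sounds can span at most the last four sounds of one block and the first four of the next, which gives the stated maximum of eight.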

Quantification and statistical analysis

Behavioral data analyses

Habituated behavior analyses

In this study, we propose that, in the VR environment, workers’ habituated behaviors to repeatedly exposed workplace hazards can be observed. We tested this notion through the following steps: The bivariate linear regression models predicting response time from number of exposures to the hazards were tested using the following equation:

$$RT_N = \beta_0 + \beta_1 N \qquad \text{(Equation 2)}$$

where $RT_N$ is response time at number of exposures N; $\beta_0$ is the intercept of the regression line at N = 0; and $\beta_1$ is the slope of the regression, which indicates the change in response time for each increase in number of exposures N. If the coefficient $\beta_1$ is significantly positive, the development of participants’ risk habituation can be determined. To avoid data manipulation, if a participant did not check the proximity of the vehicle until the vehicle reached the minimum distance where it starts to back up, that exposure was not included in the response time analysis. Additionally, the Wilcoxon Signed-Ranks test was performed to examine the difference in checking rate between the accident group (AG) and the no accident group (NAG) at α = 0.05.
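As a minimal sketch of the bivariate regression described above, the following standard-library code estimates the intercept and slope of response time on number of exposures, together with the t statistic of the slope. The function name and the data are illustrative and are not taken from the study; a one-sided p value would come from a Student t distribution with n − 2 degrees of freedom (for example via `scipy.stats.t.sf`, which is not used here to keep the sketch dependency-free).

```python
import math


def slope_test(exposures, response_times):
    """Ordinary least squares fit of response time on exposure number.

    Returns (intercept, slope, t statistic of the slope).
    """
    n = len(exposures)
    mean_x = sum(exposures) / n
    mean_y = sum(response_times) / n
    sxx = sum((x - mean_x) ** 2 for x in exposures)
    sxy = sum((x - mean_x) * (y - mean_y)
              for x, y in zip(exposures, response_times))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    residual_ss = sum((y - (intercept + slope * x)) ** 2
                      for x, y in zip(exposures, response_times))
    std_err = math.sqrt(residual_ss / (n - 2) / sxx)
    t_stat = slope / std_err if std_err > 0 else math.inf
    return intercept, slope, t_stat


# Illustrative data: response time (s) drifting upward over eight exposures.
n_exposures = [1, 2, 3, 4, 5, 6, 7, 8]
rt_seconds = [2.4, 3.1, 3.4, 4.2, 4.4, 5.1, 5.4, 6.1]
intercept, slope, t_stat = slope_test(n_exposures, rt_seconds)
```

A positive slope with a large t statistic corresponds to response times that lengthen with repeated exposure, which is the pattern interpreted as habituation.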

Intervention effect analyses

In this study, we propose the VR accident simulation as a one-shot learning platform that curbs workers’ risk habituation, and our theory is that workers’ habituated behaviors decrease after experiencing a VR accident. We tested this notion using (1) multiple regression analysis estimating response time at number of exposures and VR accident experience in the first VR session, and (2) a paired-samples t-test evaluating the intervention effect on the increase in checking rate for both groups (NAG and AG). Multiple regression analyses were performed to evaluate whether and how a participant’s experience of VR-simulated accidents in the first session affected the latency and frequency of participants’ vigilant orienting behaviors in the second VR session. A participant’s experience of VR-simulated accidents in the first VR session was coded as a categorical variable (dummy-coded as 0 for the no accident group [NAG] and 1 for the accident group [AG]) in the following regression equation:

$$RT_{N,A} = \beta_0 + \beta_1 N + \beta_2 A + \beta_3 (N \times A) \qquad \text{(Equation 3)}$$

where $RT_{N,A}$ is the dependent variable (response time) at number of exposures N and accident experience A; $\beta_0$ is the simple intercept of the regression line in the no accident group (A = 0, NAG); $\beta_1$ is the simple slope in NAG, that is, the change in response time for each increase in number of exposures N; $\beta_2$ is the difference in simple intercepts, comparing the accident group (A = 1, AG) with NAG; and $\beta_3$ is the difference in simple slopes, comparing AG with NAG.
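Setting the dummy variable to each of its two values makes the interpretation of the coefficients explicit. Writing the intercept, the slope, the group difference in intercepts, and the group difference in slopes as $\beta_0$, $\beta_1$, $\beta_2$, and $\beta_3$ (notation assumed here for illustration), the interaction model reduces to one regression line per group:

```latex
\begin{aligned}
\text{NAG } (A = 0):\quad & RT_{N,0} = \beta_0 + \beta_1 N \\
\text{AG } (A = 1):\quad & RT_{N,1} = (\beta_0 + \beta_2) + (\beta_1 + \beta_3)\,N
\end{aligned}
```

Under this reading, a nonzero $\beta_3$ indicates that response time changes with repeated exposure at a different rate in the accident group than in the no accident group.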

In addition, paired-samples t-tests were performed to investigate the intervention effect of experiencing the VR accident in the first VR session on checking rate in the second VR session (pre/post-treatment analysis). Results were presented as the mean checking rate. The intervention effect was evaluated using Cohen’s effect size (d), with the following criteria: 0.2 = small effect, 0.5 = moderate effect, and 0.8 = large effect.76 During the first VR session, ten participants never exhibited vigilant orienting behaviors. Thus, the data collected from these participants were excluded from the analysis of response time; these data were only used for the analysis of checking rate.
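The pre/post comparison can be sketched with the standard library as follows. The text does not state which variant of Cohen's d was used for the paired design; the sketch assumes the mean difference divided by the standard deviation of the differences, and the "negligible" label below 0.2 is an addition for completeness. The checking rates are illustrative, not study data.

```python
import math
import statistics


def paired_t_and_d(pre, post):
    """Paired-samples t statistic and Cohen's d (mean diff / SD of diffs, assumed)."""
    diffs = [after - before for before, after in zip(pre, post)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)
    t_stat = mean_diff / (sd_diff / math.sqrt(n))  # df = n - 1
    cohen_d = mean_diff / sd_diff
    return t_stat, cohen_d


def effect_label(d):
    """Map |d| onto the criteria given in the text (0.2, 0.5, 0.8)."""
    size = abs(d)
    if size >= 0.8:
        return "large"
    if size >= 0.5:
        return "moderate"
    if size >= 0.2:
        return "small"
    return "negligible"  # label not used in the text; added for completeness


# Illustrative checking rates in the first and second VR sessions.
cr_session1 = [0.2, 0.4, 0.5, 0.3]
cr_session2 = [0.6, 0.7, 0.9, 0.8]
t_stat, cohen_d = paired_t_and_d(cr_session1, cr_session2)
label = effect_label(cohen_d)
```

With this definition the t statistic equals d multiplied by the square root of the sample size, so the two quantities carry the same information at a fixed n.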

Event-related potential analyses

Python 3.8.2 and EEGLAB v2021.1 were used for data preprocessing and analysis.77,78 First, we used the Python SciPy toolbox and applied a forward-backward (non-causal) high-pass filter with a Kaiser window, a transition band of 0.5–1 Hz, and an attenuation of 80 dB to remove drifts from the signal.79,80 Second, we used the MNE-Python v0.24 package and applied a bandpass filter with 0.5 Hz and 40 Hz cutoff frequencies.81 Third, we re-referenced the signals by subtracting the average of all 15 channels from each signal.82,83 Fourth, to remove non-stationary artifacts (e.g., motor artifacts) from the signal, we implemented the Artifact Subspace Reconstruction (ASR) method in Python (our code is available at: https://github.com/moeinrazavi/EEG-ASR-Python).84 Fifth, the EEG was segmented relative to the onset of each sound stimulus (alarm/control) to create stimulus-locked epochs of 1800 ms that included a 300 ms pre-stimulus period. From each epoch, we subtracted the average of the signal from −300 ms to −100 ms as the epoch baseline.85 Sixth, to remove stationary and non-brain signal artifacts (e.g., eye blink artifacts), we used the Independent Component Analysis (ICA) toolbox in EEGLAB with MATLAB R2021a.86,87 Finally, we visually inspected the data and removed markedly noisy epochs that were not corrected by the preceding preprocessing steps.
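Two of the steps above, average re-referencing and baseline-corrected epoching, can be illustrated with plain Python lists. This is a sketch only: it does not reproduce the filtering, ASR, or ICA steps, the function names are hypothetical, and flooring non-integer sample counts (300 ms at 125 Hz is 37.5 samples) is an assumption.

```python
FS_HZ = 125                 # EEG sampling rate reported in the text
PRE_MS = 300                # pre-stimulus part of each epoch
EPOCH_MS = 1800             # total epoch length
BASELINE_MS = (-300, -100)  # baseline window relative to stimulus onset


def ms_to_samples(ms):
    """Convert milliseconds to a whole number of samples (floored; an assumption)."""
    return ms * FS_HZ // 1000


def average_reference(channels):
    """Subtract the across-channel mean from every channel at each time point."""
    n_channels = len(channels)
    means = [sum(column) / n_channels for column in zip(*channels)]
    return [[value - mean for value, mean in zip(channel, means)]
            for channel in channels]


def baseline_corrected_epoch(signal, onset_sample):
    """Cut one stimulus-locked epoch and subtract the mean of its baseline window."""
    start = onset_sample - ms_to_samples(PRE_MS)
    stop = start + ms_to_samples(EPOCH_MS)
    if start < 0 or stop > len(signal):
        return None  # epoch would run past the recording
    segment = signal[start:stop]
    first = ms_to_samples(BASELINE_MS[0] + PRE_MS)
    last = ms_to_samples(BASELINE_MS[1] + PRE_MS)
    baseline = sum(segment[first:last]) / (last - first)
    return [value - baseline for value in segment]


# Illustrative single-channel signal: a step from 2.0 to 3.0 at sample 500.
signal = [2.0] * 500 + [3.0] * 500
epoch = baseline_corrected_epoch(signal, onset_sample=500)
rereferenced = average_reference([[1.0, 2.0], [3.0, 4.0]])
```

After baseline correction the pre-stimulus samples of the illustrative epoch sit at zero and the post-stimulus samples at 1.0, which is the offset the correction is meant to remove.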

One of the most prominent auditory ERP components observed in oddball and equiprobable paradigms is N1,88,89,90 which peaks about 100 ms after stimulus onset and lasts for approximately 100 ms.48,49 N1 is distributed mostly over the fronto-central region of the scalp (i.e., at the Cz and Fz electrode sites89). The auditory N1 peak is linked to early attention.34,52 Furthermore, attenuation of the N1 response to repeated auditory stimulus presentations has been reported and is thought to reflect neural habituation in sensory cortex.39,50

Pan et al.41 reported a P3 habituation (i.e., a decrease of the P3 amplitude) from auditory single-stimulus and oddball paradigms.55 Auditory P3 is generally distributed over three midline sites (Cz, Fz, and Pz)41,55 and peaks within a 220–420 ms time window after stimulus onset.39,41

Given the specificity of sounds used in the present study (long, non-monofrequency), we refer to the analyzed ERP components by time window rather than by a specific label (see discussion). Indeed, auditory stimuli used in EEG studies are usually short and monofrequency (e.g., 60 ms39; 50 ms50; 70 ms41). The alarm sound we used in the EEG sessions was extracted from an alarm signal used on real construction sites to increase the ecological validity of our test. This sound lasted 600 ms and included one beep with a rise and a fall. As a consequence, the identification of ERP components observed in our study requires caution.

Two components, which could correspond to N1 and P3, were measured in the time windows of 540–660 ms and 804–980 ms post-stimulus onset, respectively. We also performed a post-hoc analysis in the time window of 220–420 ms post-stimulus onset, based on the apparent signal difference observed between the alarm and control sounds during this period. For these three time windows, we computed the mean amplitude separately for alarm and control sounds at the Cz and Fz electrode sites, where the deflection was maximal.
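A mean amplitude for each of the three time windows can be computed from an epoch as sketched below. The window labels and the function name are hypothetical, and the sketch assumes an epoch whose first sample corresponds to −300 ms at a sampling rate of 125 Hz, with boundaries floored to whole samples.

```python
FS_HZ = 125   # sampling rate reported for the EEG recordings
PRE_MS = 300  # the epoch is assumed to start 300 ms before stimulus onset

# Window labels are illustrative; the boundaries are those given in the text.
WINDOWS_MS = {"early": (220, 420), "middle": (540, 660), "late": (804, 980)}


def mean_amplitude(epoch, window_ms):
    """Mean of the epoch samples that fall inside a post-stimulus window."""
    start = (window_ms[0] + PRE_MS) * FS_HZ // 1000
    stop = (window_ms[1] + PRE_MS) * FS_HZ // 1000
    samples = epoch[start:stop]
    return sum(samples) / len(samples)


# Illustrative epoch: a ramp whose value equals its sample index.
ramp = [float(i) for i in range(225)]
amplitudes = {name: mean_amplitude(ramp, w) for name, w in WINDOWS_MS.items()}
```

Because averaging is linear, applying this function to an averaged waveform gives the same value as averaging the single-epoch window means over the same set of epochs.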

A 2 × 2 repeated-measures analysis of variance (ANOVA) was conducted on mean ERP amplitudes for each time window, with type of sound (alarm, control) and session (1, 2) as within-subject variables. Subsequent t tests were performed when appropriate.
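In a 2 × 2 fully within-subject design, each effect has one degree of freedom, so its F ratio equals the squared one-sample t statistic of the corresponding per-participant contrast. The sketch below uses this equivalence; the function names and amplitudes are illustrative, and this is not the software used in the study. A p value would come from an F distribution with (1, n − 1) degrees of freedom.

```python
import math
import statistics


def contrast_f(scores):
    """F(1, n - 1) of a single-df within-subject contrast (squared one-sample t)."""
    n = len(scores)
    t = statistics.mean(scores) / (statistics.stdev(scores) / math.sqrt(n))
    return t * t


def anova_2x2_within(cells):
    """cells: one dict per participant mapping (sound, session) to mean amplitude."""
    sound, session, interaction = [], [], []
    for c in cells:
        a1, a2 = c[("alarm", 1)], c[("alarm", 2)]
        k1, k2 = c[("control", 1)], c[("control", 2)]
        sound.append((a1 + a2) / 2 - (k1 + k2) / 2)    # alarm minus control
        session.append((a1 + k1) / 2 - (a2 + k2) / 2)  # session 1 minus session 2
        interaction.append((a1 - k1) - (a2 - k2))      # change in the sound effect
    return {"sound": contrast_f(sound),
            "session": contrast_f(session),
            "sound x session": contrast_f(interaction)}


# Illustrative mean amplitudes for four hypothetical participants.
values = [(1.0, 3.0, 0.0, 0.0), (2.0, 3.0, 1.0, 1.0),
          (0.0, 4.0, 0.0, 0.0), (1.0, 2.0, 1.0, 1.0)]
cells = [{("alarm", 1): a1, ("alarm", 2): a2,
          ("control", 1): k1, ("control", 2): k2} for a1, a2, k1, k2 in values]
f_ratios = anova_2x2_within(cells)
```

The interaction contrast is the quantity of interest for a pre/post design such as this one, since it asks whether the alarm versus control difference changed between sessions.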

Acknowledgments

This research was supported by the National Science Foundation (No. 2017019) and the Research Grant from Seoul National University (No. 0668-20220195). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Author contributions

N.K., L.G., C.R.A., and B.A.A. conceived the study. N.K., L.G., C.R.A., and B.A.A. developed the methodology. N.K., L.G., and N.Y. collected the data. N.K., L.G., and M.R. analyzed the data. N.K. and L.G. wrote the original draft of the manuscript. N.K., L.G., C.R.A., and B.A.A. reviewed and edited the manuscript. N.K., L.G., M.R., and N.Y. visualized the data. C.R.A. and B.A.A. supervised the study. C.R.A. and B.A.A. acquired funding.

Declaration of interests

The authors declare no competing interests.

Published: January 20, 2023

Contributor Information

Changbum R. Ahn, Email: cbahn@snu.ac.kr.

Brian A. Anderson, Email: brian.anderson@tamu.edu.

Data and code availability

• Data: Experimental data is available from the lead contact on reasonable request.

• Code: This paper does not report original code.

• Additional Information: Any additional information required to reanalyze the data reported in this paper is available from the lead contact upon request.

References

- 1.Wilson D.A., Linster C. Neurobiology of a simple memory. J. Neurophysiol. 2008;100:2–7. doi: 10.1152/jn.90479.2008. [DOI] [PubMed] [Google Scholar]

- 2.Schmid S., Wilson D.A., Rankin C.H. Habituation mechanisms and their importance for cognitive function. Front. Integr. Neurosci. 2014;8:97. doi: 10.3389/fnint.2014.00097. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Wilson F.A., Rolls E.T. The effects of stimulus novelty and familiarity on neuronal activity in the amygdala of monkeys performing recognition memory tasks. Exp. Brain Res. 1993;93:367–382. doi: 10.1007/BF00229353. [DOI] [PubMed] [Google Scholar]

- 4.Blaauwgeers E., Dubois L., Ryckaert L. Real-time risk estimation for better situational awareness. IFAC Proc. Vol. 2013;46:232–239. doi: 10.3182/20130811-5-US-2037.00036. [DOI] [Google Scholar]

- 5.Curry D.G., Quinn R.D., Atkins D.R., Carlson T.C. Injuries & the experienced worker. Prof. Saf. 2004;49:30–34. [Google Scholar]

- 6.Perakslis C. Dagen hogertrafik (H-day) and risk habituation [last word] IEEE Technol. Soc. Mag. 2016;35:88. doi: 10.1109/MTS.2016.2531843. [DOI] [Google Scholar]

- 7.Slovic P. Perception of risk. Science. 1987;236:280–285. doi: 10.1126/science.3563507. [DOI] [PubMed] [Google Scholar]

- 8.Weyman A.K., Clarke D.D. Investigating the influence of organizational role on perceptions of risk in deep coal mines. J. Appl. Psychol. 2003;88:404–412. doi: 10.1037/0021-9010.88.3.404. [DOI] [PubMed] [Google Scholar]

- 9.Daalmans J., Daalmans J. Butterworth-Heinemann; 2012. Human Behavior in Hazardous Situations: Best Practice Safety Management in the Chemical and Process Industries. [Google Scholar]

- 10.Inouye J. Campbell Institute National Safety Council; 2014. Risk Perception: Theories, Strategies, and Next Steps. [Google Scholar]

- 11.Makin A.M., Winder C. A new conceptual framework to improve the application of occupational health and safety management systems. Saf. Sci. 2008;46:935–948. doi: 10.1016/j.ssci.2007.11.011. [DOI] [Google Scholar]

- 12.Duchon J.C., Laage L.W. Vol. 30. Sage CA SAGE Publications; 1986, September. The consideration of human factors in the design of a backing-up warning system; pp. 261–264. (In Proceedings of the Human Factors Society Annual Meeting). [DOI] [Google Scholar]

- 13.Kim N., Kim J., Ahn C.R. Predicting workers’ inattentiveness to struck-by hazards by monitoring biosignals during a construction task: a virtual reality experiment. Adv. Eng. Inf. 2021;49 doi: 10.1016/j.aei.2021.101359. [DOI] [Google Scholar]

- 14.Oken B.S., Salinsky M.C., Elsas S.M. Vigilance, alertness, or sustained attention: physiological basis and measurement. Clin. Neurophysiol. 2006;117:1885–1901. doi: 10.1016/j.clinph.2006.01.017. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Weinberg W.A., Harper C.R. Vigilance and its disorders. Neurol. Clin. 1993;11:59–78. doi: 10.1016/S0733-8619(18)30170-1. [DOI] [PubMed] [Google Scholar]

- 16.Majekodunmi A., Farrow A. Perceptions and attitudes toward workplace transport risks: a study of industrial lift truck operators in a London authority. Arch. Environ. Occup. Health. 2009;64:251–260. doi: 10.1080/19338240903348238. [DOI] [PubMed] [Google Scholar]

- 17.Glendon A.I., Litherland D.K. Safety climate factors, group differences and safety behaviour in road construction. Saf. Sci. 2001;39:157–188. doi: 10.1016/S0925-7535(01)00006-6. [DOI] [Google Scholar]

- 18.Pegula S.M. An analysis of fatal occupational injuries at road construction sites, 2003-2010. Mon. Labor Rev. 2013;136:1. [Google Scholar]

- 19.Namian M., Albert A., Zuluaga C.M., Behm M. Role of safety training: impact on hazard recognition and safety risk perception. J. Construct. Eng. Manag. 2016;142 doi: 10.1061/(ASCE)CO.1943-7862.0001198. [DOI] [Google Scholar]

- 20.Baldwin T.T., Ford J.K. Transfer of training: a review and directions for future research. Person. Psychol. 1988;41:63–105. doi: 10.1111/j.1744-6570.1988.tb00632.x. [DOI] [Google Scholar]

- 21.Cromwell S.E., Kolb J.A. An examination of work-environment support factors affecting transfer of supervisory skills training to the workplace. Hum. Resour. Dev. Q. 2004;15:449–471. doi: 10.1002/hrdq.1115. [DOI] [Google Scholar]

- 22.Grissom N., Bhatnagar S. Habituation to repeated stress: get used to it. Neurobiol. Learn. Mem. 2009;92:215–224. doi: 10.1016/j.nlm.2008.07.001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Avery S.N., McHugo M., Armstrong K., Blackford J.U., Woodward N.D., Heckers S. Disrupted habituation in the early stage of psychosis. Biol. Psychiatry. Cogn. Neurosci. Neuroimaging. 2019;4:1004–1012. doi: 10.1016/j.bpsc.2019.06.007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Vance A., Kirwan B., Bjornn D., Jenkins J., Anderson B.B. Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems. 2017. What do we really know about how habituation to warnings occurs over time? A longitudinal fMRI study of habituation and polymorphic warnings; pp. 2215–2227. [DOI] [Google Scholar]

- 25.Li X., Yi W., Chi H.L., Wang X., Chan A.P. A critical review of virtual and augmented reality (VR/AR) applications in construction safety. Autom. ConStruct. 2018;86:150–162. doi: 10.1016/j.autcon.2017.11.003. [DOI] [Google Scholar]

- 26.Nilsson T., Roper T., Shaw E., Lawson G., Cobb S.V., Meng-Ko H., Miller D., Khan J. Multisensory virtual environment for fire evacuation training. Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems; 2019. p. 1–4. 10.1145/3290607.3313283. [DOI]

- 27.Lin J., Zhu R., Li N., Becerik-Gerber B. Do people follow the crowd in building emergency evacuation? A cross-cultural immersive virtual reality-based study. Adv. Eng. Inf. 2020;43 doi: 10.1016/j.aei.2020.101040. [DOI] [Google Scholar]

- 28.Hasanzadeh S., De La Garza J.M., Geller E.S. Latent effect of safety interventions. J. Construct. Eng. Manag. 2020;146 doi: 10.1061/(ASCE)CO.1943-7862.0001812. [DOI] [Google Scholar]

- 29.Kim N., Anderson B.A., Ahn C.R. Reducing risk habituation to struck-by hazards in a road construction environment using virtual reality behavioral intervention. J. Construct. Eng. Manag. 2021;147 doi: 10.1061/(ASCE)CO.1943-7862.0002187. [DOI] [Google Scholar]

- 30.Lee S.W., O’Doherty J.P., Shimojo S. Neural computations mediating one-shot learning in the human brain. PLoS Biol. 2015;13 doi: 10.1371/journal.pbio.1002137. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Polyn S.M., Norman K.A., Kahana M.J. A context maintenance and retrieval model of organizational processes in free recall. Psychol. Rev. 2009;116:129–156. doi: 10.1037/a0014420. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Lengyel M., Dayan P. Hippocampal contributions to control: the third way. Adv. Neural Inf. Process. Syst. 2007:20. [Google Scholar]

- 33.Chan K., Louis J., Albert A. Incorporating worker awareness in the generation of hazard proximity warnings. Sensors. 2020;20:806. doi: 10.3390/s20030806. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Luck S.J. MIT press; 2014. An Introduction to the Event-Related Potential Technique. [Google Scholar]

- 35.Näätänen R., Simpson M., Loveless N.E. Stimulus deviance and evoked potentials. Biol. Psychol. 1982;14:53–98. doi: 10.1016/0301-0511(82)90017-5. [DOI] [PubMed] [Google Scholar]

- 36.Mangun G.R., Hillyard S.A. Electrophysiology of Mind: Event-Related Brain Potentials and Cognition. Oxford University Press; 1995. Mechanisms and models of selective attention; pp. 40–85. [Google Scholar]

- 37.Maitre N.L., Lambert W.E., Aschner J.L., Key A.P. Cortical speech sound differentiation in the neonatal intensive care unit predicts cognitive and language development in the first 2 years of life. Dev. Med. Child Neurol. 2013;55:834–839. doi: 10.1111/dmcn.12191. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Key A.P., Yoder P.J. Equiprobable and oddball paradigms: two approaches for documenting auditory discrimination. Dev. Neuropsychol. 2013;38:402–417. doi: 10.1080/87565641.2012.718819. [DOI] [PubMed] [Google Scholar]

- 39.Carrillo-de-la-Peña M.T., García-Larrea L. On the validity of interblock averaging of P300 in clinical settings. Int. J. Psychophysiol. 1999;34:103–112. doi: 10.1016/S0167-8760(99)00066-5. [DOI] [PubMed] [Google Scholar]

- 40.Greife C.L. n, Colorado State University; 2013. Sensory Gating, Habituation, and Orientation of P50 and N100 Event-Related Potential (ERP) Components in Neurologically Typical Adults and Links to Sensory Behaviors. Doctoral dissertatio. [Google Scholar]

- 41.Pan J., Takeshita T., Morimoto K. P300 habituation from auditory single-stimulus and oddball paradigms. Int. J. Psychophysiol. 2000;37:149–153. doi: 10.1016/S0167-8760(00)00086-6. [DOI] [PubMed] [Google Scholar]

- 42.Chen J., Song X., Lin Z. Revealing the “Invisible Gorilla” in construction: estimating construction safety through mental workload assessment. Autom. ConStruct. 2016;63:173–183. doi: 10.1016/j.autcon.2015.12.018. [DOI] [Google Scholar]

- 43. Huang T.R., Watanabe T. Task attention facilitates learning of task-irrelevant stimuli. PLoS One. 2012;7 doi: 10.1371/journal.pone.0035946.

- 44. Wickens C.D. Multiple resources and mental workload. Hum. Factors. 2008;50:449–455. doi: 10.1518/001872008X288394.

- 45. Rensink R.A., O'Regan J.K., Clark J.J. To see or not to see: the need for attention to perceive changes in scenes. Psychol. Sci. 1997;8:368–373. doi: 10.1111/j.1467-9280.1997.tb00427.x.

- 46. Hoffman J.E., Subramaniam B. The role of visual attention in saccadic eye movements. Percept. Psychophys. 1995;57(6):787–795. doi: 10.3758/bf03206794.

- 47. McArthur G.M., Atkinson C.M., Ellis D. Can training normalize atypical passive auditory ERPs in children with SRD or SLI? Dev. Neuropsychol. 2010;35:656–678. doi: 10.1080/87565641.2010.508548.

- 48. Näätänen R., Picton T. The N1 wave of the human electric and magnetic response to sound: a review and an analysis of the component structure. Psychophysiology. 1987;24:375–425. doi: 10.1111/j.1469-8986.1987.tb00311.x.

- 49. May P.J.C., Tiitinen H. Mismatch negativity (MMN), the deviance-elicited auditory deflection, explained. Psychophysiology. 2010;47:66–122. doi: 10.1111/j.1469-8986.2009.00856.x.

- 50. Ethridge L.E., White S.P., Mosconi M.W., Wang J., Byerly M.J., Sweeney J.A. Reduced habituation of auditory evoked potentials indicate cortical hyper-excitability in Fragile X Syndrome. Transl. Psychiatry. 2016;6:e787. doi: 10.1038/tp.2016.48.

- 51. Sardin J. Reverse Beeping 1 (Free Sound Effect) ⋅ BigSoundBank.Com. BigSoundBank.Com. https://bigsoundbank.com/detail-1298-truck-reverse-beep.html

- 52. Rinne T., Särkkä A., Degerman A., Schröger E., Alho K. Two separate mechanisms underlie auditory change detection and involuntary control of attention. Brain Res. 2006;1077:135–143. doi: 10.1016/j.brainres.2006.01.043.

- 53. Budd T.W., Barry R.J., Gordon E., Rennie C., Michie P.T. Decrement of the N1 auditory event-related potential with stimulus repetition: habituation vs. refractoriness. Int. J. Psychophysiol. 1998;31:51–68. doi: 10.1016/S0167-8760(98)00040-3.

- 54. Wang P., Knösche T.R. A realistic neural mass model of the cortex with laminar-specific connections and synaptic plasticity–evaluation with auditory habituation. PLoS One. 2013;8 doi: 10.1371/journal.pone.0077876.

- 55. Romero R., Polich J. P3(00) habituation from auditory and visual stimuli. Physiol. Behav. 1996;59:517–522. doi: 10.1016/0031-9384(95)02099-3.

- 56. OSHA Training Institute. OSHA Directorate of Training and Education; 2011. Construction Focus Four: Outreach Training Packet.

- 57. Peirce J.W. PsychoPy—psychophysics software in Python. J. Neurosci. Methods. 2007;162:8–13. doi: 10.1016/j.jneumeth.2006.11.017.

- 58. Peirce J., Gray J.R., Simpson S., MacAskill M., Höchenberger R., Sogo H., Kastman E., Lindeløv J.K. PsychoPy2: experiments in behavior made easy. Behav. Res. Methods. 2019;51:195–203. doi: 10.3758/s13428-018-01193-y.

- 59. Peirce J.W. Generating stimuli for neuroscience using PsychoPy. Front. Neuroinf. 2008;2:10. doi: 10.3389/neuro.11.010.2008.

- 60. Jasper H.H. The ten-twenty electrode system of the International Federation. Electroencephalogr. Clin. Neurophysiol. 1958;10:370–375.

- 61. Razavi M., Janfaza V., Yamauchi T., Leontyev A., Longmire-Monford S., Orr J. OpenSync: an open-source platform for synchronizing multiple measures in neuroscience experiments. J. Neurosci. Methods. 2022;369 doi: 10.1016/j.jneumeth.2021.109458.

- 62. Epic Games. Unreal Engine. Epic Games; 2019.

- 63. Autodesk. Autodesk; 2019. 3ds Max. Version 2019.

- 64. Autodesk. Autodesk; 2019. Maya. Version 2019.

- 65. Desimone R., Duncan J. Neural mechanisms of selective visual attention. Annu. Rev. Neurosci. 1995;18:193–222. doi: 10.1146/annurev.ne.18.030195.001205.

- 66. Jeelani I., Han K., Albert A. Automating and scaling personalized safety training using eye-tracking data. Autom. Construct. 2018;93:63–77. doi: 10.1016/j.autcon.2018.05.006.

- 67. Nakayama K., Maljkovic V., Kristjansson A. 2004. Short-term Memory for the Rapid Deployment of Visual Attention.

- 68. Eiris R., Gheisari M., Esmaeili B. PARS: using augmented 360-degree panoramas of reality for construction safety training. Int. J. Environ. Res. Publ. Health. 2018;15:2452. doi: 10.3390/ijerph15112452.

- 69. Hasanzadeh S., de la Garza J.M. Computing in Civil Engineering 2019: Visualization, Information Modeling, and Simulation. American Society of Civil Engineers; 2019. Understanding roofer’s risk compensatory behavior through passive haptics mixed-reality system; pp. 137–145.

- 70. Maioli C., Benaglio I., Siri S., Sosta K., Cappa S. The integration of parallel and serial processing mechanisms in visual search: evidence from eye movement recording. Eur. J. Neurosci. 2001;13:364–372. doi: 10.1111/j.1460-9568.2001.01381.x.

- 71. Sound Effects Truck backing up beep sound effects. 2017. https://www.youtube.com/watch?v=zXo4E-7rlqI

- 72. Cheour M., Leppänen P.H., Kraus N. Mismatch negativity (MMN) as a tool for investigating auditory discrimination and sensory memory in infants and children. Clin. Neurophysiol. 2000;111:4–16. doi: 10.1016/S1388-2457(99)00191-1.

- 73. Näätänen R., Paavilainen P., Rinne T., Alho K. The mismatch negativity (MMN) in basic research of central auditory processing: a review. Clin. Neurophysiol. 2007;118:2544–2590. doi: 10.1016/j.clinph.2007.04.026.

- 74. Squires N.K., Squires K.C., Hillyard S.A. Two varieties of long-latency positive waves evoked by unpredictable auditory stimuli in man. Electroencephalogr. Clin. Neurophysiol. 1975;38:387–401. doi: 10.1016/0013-4694(75)90263-1.

- 75. Yoder P.J., Camarata S., Camarata M., Williams S.M. Association between differentiated processing of syllables and comprehension of grammatical morphology in children with Down syndrome. Am. J. Ment. Retard. 2006;111:138–152. doi: 10.1352/0895-8017.

- 76. Cohen J. Quantitative methods in psychology: a power primer. Psychol. Bull. 1992.

- 77. Delorme A., Makeig S. EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods. 2004;134:9–21. doi: 10.1016/j.jneumeth.2003.10.009.

- 78. Van Rossum G., Drake F.L. CreateSpace; 2009. Python 3 Reference Manual.

- 79. van Driel J., Olivers C.N.L., Fahrenfort J.J. High-pass filtering artifacts in multivariate classification of neural time series data. J. Neurosci. Methods. 2021;352 doi: 10.1016/j.jneumeth.2021.109080.

- 80. Virtanen P., Gommers R., Oliphant T.E., Haberland M., Reddy T., Cournapeau D., Burovski E., Peterson P., Weckesser W., Bright J., et al. SciPy 1.0: fundamental algorithms for scientific computing in Python. Nat. Methods. 2020;17:261–272. doi: 10.1038/s41592-019-0686-2.

- 81. Gramfort A., Luessi M., Larson E., Engemann D.A., Strohmeier D., Brodbeck C., Goj R., Jas M., Brooks T., Parkkonen L., et al. MEG and EEG data analysis with MNE-Python. Front. Neurosci. 2013;7:267. doi: 10.3389/fnins.2013.00267.

- 82. Bertrand O., Perrin F., Pernier J. A theoretical justification of the average reference in topographic evoked potential studies. Electroencephalogr. Clin. Neurophysiol. 1985;62:462–464. doi: 10.1016/0168-5597(85)90058-9.

- 83. Teplan M. Fundamentals of EEG measurement. Meas. Sci. Rev. 2002;2:1–11.

- 84. Blum S., Jacobsen N.S.J., Bleichner M.G., Debener S. A Riemannian modification of artifact subspace reconstruction for EEG artifact handling. Front. Hum. Neurosci. 2019;13:141. doi: 10.3389/fnhum.2019.00141.

- 85. Grandchamp R., Delorme A. Single-trial normalization for event-related spectral decomposition reduces sensitivity to noisy trials. Front. Psychol. 2011;2:236. doi: 10.3389/fpsyg.2011.00236.

- 86. MATLAB. The MathWorks Inc; 2021.

- 87. Hyvärinen A., Oja E. Independent component analysis: algorithms and applications. Neural Netw. 2000;13:411–430. doi: 10.1016/S0893-6080(00)00026-5.

- 88. Eichele T., Nordby H., Rimol L.M., Hugdahl K. Asymmetry of evoked potential latency to speech sounds predicts the ear advantage in dichotic listening. Brain Res. Cogn. Brain Res. 2005;24:405–412. doi: 10.1016/j.cogbrainres.2005.02.017.

- 89. Tomé D., Barbosa F., Nowak K., Marques-Teixeira J. The development of the N1 and N2 components in auditory oddball paradigms: a systematic review with narrative analysis and suggested normative values. J. Neural Transm. 2015;122:375–391. doi: 10.1007/s00702-014-1258-3.

- 90. Schröder A., van Diepen R., Mazaheri A., Petropoulos-Petalas D., Soto de Amesti V., Vulink N., Denys D. Diminished N1 auditory evoked potentials to oddball stimuli in misophonia patients. Front. Behav. Neurosci. 2014;8:123. doi: 10.3389/fnbeh.2014.00123.

Data Availability Statement

- Data: Experimental data are available from the lead contact on reasonable request.

- Code: This paper does not report original code.

- Additional Information: Any additional information required to reanalyze the data reported in this paper is available from the lead contact upon request.