Abstract

Crowdsourcing holds great potential: macro-task crowdsourcing can, for example, contribute to work addressing climate change. Macro-task crowdsourcing aims to use the wisdom of a crowd to tackle non-trivial tasks such as wicked problems. However, macro-task crowdsourcing is labor-intensive and complex to facilitate, which limits its efficiency, effectiveness, and use. Technological advancements in artificial intelligence (AI) might overcome these limits by supporting the facilitation of crowdsourcing. However, AI’s potential for macro-task crowdsourcing facilitation needs to be better understood for this to happen. Here, we turn to affordance theory to develop this understanding. Affordances help us describe action possibilities that characterize the relationship between the facilitator and AI, within macro-task crowdsourcing. We follow a two-stage, bottom-up approach: The initial development stage is based on a structured analysis of academic literature. The subsequent validation & refinement stage includes two observed macro-task crowdsourcing initiatives and six expert interviews. From our analysis, we derive seven AI affordances that support 17 facilitation activities in macro-task crowdsourcing. We also identify specific manifestations that illustrate the affordances. Our findings increase the scholarly understanding of macro-task crowdsourcing and advance the discourse on facilitation. Further, they help practitioners identify potential ways to integrate AI into crowdsourcing facilitation. These results could improve the efficiency of facilitation activities and the effectiveness of macro-task crowdsourcing.

Keywords: Affordance, Artificial Intelligence, Facilitation, Macro-Task Crowdsourcing

Introduction

Artificial intelligence (AI) holds the potential to transform collaborative activities such as crowdsourcing (Griffith et al. 2019; Introne et al. 2011; Kiruthika et al. 2020; Manyika et al. 2016; Seeber et al. 2020). In crowdsourcing, a crowd collaborates to solve a task in a digital participative environment, such as an online platform (Estellés-Arolas and González-Ladrón-de-Guevara 2012). The crowd may be heterogeneous, including individuals from diverse disciplinary backgrounds (Cullina et al. 2015; Dissanayake et al. 2019). When a crowd is dedicated to tackling complex and interdependent tasks collaboratively, the practice is referred to as macro-task crowdsourcing (Robert 2019; Schmitz and Lykourentzou 2018). Macro-tasks are tasks that are difficult or sometimes impossible to decompose into smaller (interdependent) subtasks (Robert 2019). The use of crowdsourcing to address macro-tasks is rarely straightforward and requires a specific skill set and knowledge of the crowd (Schmitz and Lykourentzou 2018). Wicked problems are a prominent example of macro-tasks. They are highly complex and thus require the involvement of many different stakeholders (Alford and Head 2017; Head and Alford 2015; Ooms and Piepenbrink 2021). Global challenges that are very broad in scope, such as the advancement of the sustainable development goals as defined by the United Nations (2015), may be understood as wicked problems of current relevance (McGahan et al. 2021). In response to these problems, existing macro-task crowdsourcing initiatives such as OpenIDEO or Futures CoLab elaborate on sustainability-related improvements and solution approaches (Gimpel et al. 2020; Kohler and Chesbrough 2019).

For macro-task crowdsourcing to realize its potential and tackle such complex problems, structure, guidance, and support are needed to coordinate the collaborating crowd workers (Adla et al. 2011; Azadegan and Kolfschoten 2014; Shafiei Gol et al. 2019). If this need is satisfied through unbiased (human) observation and intervention, it is known as facilitation (Adla et al. 2011; Bostrom et al. 1993). Although facilitation has already been widely analyzed in other contexts such as group interaction (Bostrom et al. 1993), face-to-face meetings (Azadegan and Kolfschoten 2014), and open innovation (Winkler et al. 2020), it has barely been investigated in macro-task crowdsourcing. AI is seen as a system’s ability to interpret and learn from external data to achieve a predetermined goal (Kaplan and Haenlein 2019). With AI now matching or surpassing human performance on text-understanding challenges (Wang et al. 2019), new potentials arise, especially for text-based applications like crowdsourcing. The high transformative potential of AI gives rise to the question: Can AI support the facilitation of macro-task crowdsourcing? If it can, the quality of crowdsourcing results might be improved. For example, an AI with semantic text understanding could recognize novel or innovative-yet-unrecognized ideas and highlight these as focal points for further discussion within the crowd (Toubia and Netzer 2017). Furthermore, by relieving the bottleneck of labor- and knowledge-intensive facilitation, macro-task crowdsourcing could be applied to more wicked problems.

AI and facilitation may be closely interwoven in macro-task crowdsourcing. Facilitation, in the specific context of macro-task crowdsourcing, requires human facilitators as well as technological advancements which support the former by fulfilling a large variety of burdensome activities (Briggs et al. 2013; de Vreede and Briggs 2019; Franco and Nielsen 2018; Khalifa et al. 2002; Seeber et al. 2016; Winkler et al. 2020). Among other duties, a facilitator is responsible for understanding the problem to be tackled by the macro-task crowdsourcing, motivating and guiding the crowd and its dialogues, and making sense of the outcome. Lately, AI – as one specific technological advancement – has been investigated for its supportive potential (Rhyn and Blohm 2017; Seeber et al. 2016; Tavanapour and Bittner 2018a). AI carries various functionalities, including text mining or natural language processing, that can support macro-task crowdsourcing facilitation. For instance, intelligent conversational agent systems could guide the crowd through the crowdsourcing process (Derrick et al. 2013; Ito et al. 2021) or issue detailed instructions to crowd workers in the form of specific tasks (Qiao et al. 2018). The evaluation of the workers’ contributions could also be drastically simplified by designing appropriate systems that leverage the potential of text mining and natural language generation to automatically generate reports or summaries (Füller et al. 2021; Rhyn et al. 2020). Such AI-augmented facilitation systems could improve human facilitation (Adla et al. 2011; Siemon 2022), (partially) automate facilitation processes (Gimpel et al. 2020; Jalowski et al. 2019; Kolfschoten et al. 2011), or even wholly replace the facilitator with an AI agent (de Vreede and Briggs 2019).

Although AI has considerable potential in macro-task crowdsourcing and in assisting human problem-solving (Rhyn and Blohm 2017; Schoormann et al. 2021; Seeber et al. 2020), there are only a few AI-related contributions in the literature on macro-task crowdsourcing or crowdsourcing facilitation. A holistic understanding of how AI could be applied to facilitate problem-solving in on- or offline groups is missing. Yet such an understanding is necessary to guide further research on crowdsourcing and facilitation and to inform practitioners as to how crowdsourcing initiatives might be improved. We set out to investigate how AI can enable macro-task crowdsourcing facilitation. To this end, we pose the following research questions (RQs), which address both the identified lack of research into macro-task crowdsourcing facilitation and the need for a holistic understanding of AI in this context:

RQ1: Which activities comprise macro-task crowdsourcing facilitation?

RQ2: What action possibilities does AI afford for macro-task crowdsourcing facilitation?

To answer these research questions, we apply a two-stage, bottom-up approach that establishes a theory-driven understanding, which we then validate and refine using practical insight. In our approach, we turn to affordance theory (Volkoff and Strong 2017), which is known to help develop better theories in IT-associated transformational contexts (Ostern and Rosemann 2021). Given AI’s high potential to transform crowdsourcing, affordance theory can be seen as an established, suitable, and meaningful lens to theorize the relationship between the technological artifact of AI and the goal-oriented actor – namely, the facilitator (Lehrer et al. 2018; Markus and Silver 2008; Ostern and Rosemann 2021; Volkoff and Strong 2013). In the first stage, we develop initial sets of macro-task crowdsourcing facilitation activities and AI affordances. Both sets are based on a structured search and review of extant scholarly knowledge. The second stage validates and refines our facilitation activities and AI affordances. We observe two real-world macro-task crowdsourcing initiatives and perform six interviews with experts from the crowdsourcing facilitation and AI domains, thereby including insights from practice.

For RQ1, our results provide a detailed understanding of macro-task crowdsourcing facilitation comprising 17 facilitation activities. We answer RQ2 by developing a set of seven AI affordances relevant to macro-task crowdsourcing facilitation. We also detail manifestations of the affordances that demonstrate actionable practices of AI-augmented macro-task crowdsourcing facilitation. Our findings increase the scholarly understanding of macro-task crowdsourcing facilitation and the application of AI therein. Furthermore, the results help practitioners evaluate potential ways of integrating AI in crowdsourcing facilitation. Such integration could increase the efficiency of facilitation activities and, ultimately, the effectiveness of macro-task crowdsourcing.

The remainder of the paper is structured as follows: Sect. 2 provides theoretical background on macro-task crowdsourcing facilitation, AI-augmented facilitation, and affordance theory. We outline our research process in Sect. 3. Section 4 presents the macro-task crowdsourcing facilitation activities, the AI affordances, and the manifestations of AI in macro-task crowdsourcing facilitation. After discussing the implications and limitations of our results in Sect. 5, we conclude with a brief summary in Sect. 6.

Theoretical Background

Macro-Task Crowdsourcing Facilitation

Macro-Task Crowdsourcing

Crowdsourcing is an umbrella term that can have many meanings. The concept was first introduced in an article in Wired magazine (Howe 2006b). Elsewhere, Howe (2006a) defines crowdsourcing as “the act of a company or institution taking a function once performed by employees and outsourcing it to an undefined (and generally large) network of people in the form of an open call.” Since then, understandings of crowdsourcing have evolved. Estellés-Arolas and González-Ladrón-de-Guevara (2012) proposed a holistic definition that we will use in this paper:

“Crowdsourcing is a type of participative online activity in which an individual, an institution, a non-profit organization, or company proposes to a group of individuals of varying knowledge, heterogeneity, and number, via a flexible open call, the voluntary undertaking of a task.”

A panoply of different crowdsourcing types exists, ranging from corporate to social or public contexts (Vianna et al. 2019). In a corporate context, open innovation is used to strategically manage knowledge flows between an external crowd and a firm to improve the firm’s innovation processes (Bogers et al. 2018). With crowdfunding, entrepreneurs can receive funding, via an open call, from funders who may receive a private benefit in return (Belleflamme et al. 2014). Organizations can use micro-task crowdsourcing to outsource low-complexity tasks (e.g., image tagging, or phone number verification) completed by independent crowd workers (Hossain and Kauranen 2015; Schenk and Guittard 2011). More complex tasks (e.g., invention or software engineering) require collaboration among crowd workers (Kittur et al. 2013). Flash organizations, for example, are computationally built structures comprised of a crowd automatically arranged into a hierarchy, where participants are assigned to smaller units focused on complex tasks according to their particular skills (Valentine et al. 2017). The structure of the resultant crowd organization can adapt over time, allowing it to efficiently collaborate and achieve open-ended goals relating to complex tasks (Retelny et al. 2014; Valentine et al. 2017). Real-world problems can be approached by using citizen science, a participative way of performing research involving experts and non-experts (Hossain and Kauranen 2015; Wiggins and Crowston 2011). For example, Fritz et al. (2019) underline the scientific value of citizens’ contributions of data which helped to track the progress of the United Nations’ sustainable development goals.

As a step beyond predominant crowdsourcing types, we define macro-task crowdsourcing based on Leimeister (2010), Lykourentzou et al. (2019), Malone et al. (2010), and Vianna et al. (2019):

Macro-task crowdsourcing leverages the collective intelligence of a crowd through facilitated collaboration on a digital platform to address complex or wicked problems.

The problems being addressed with the help of macro-task crowdsourcing may range from open innovation product design, to software development, or to grand social challenges (Kohler and Chesbrough 2019; McGahan et al. 2021) like climate change (Introne et al. 2011). Many of these are rooted in wicked problems characterized by their high complexity and their need to elicit broad stakeholder involvement (Alford and Head 2017; Ooms and Piepenbrink 2021). Macro-task crowdsourcing differs from existing crowdsourcing types in several ways. Although the boundaries between macro-task and, for example, micro-task crowdsourcing are blurred, there are some distinguishing characteristics, which are presented in Table 1.

Table 1.

Distinctions Between Micro- and Macro-Task Crowdsourcing

| Dimension | Micro-Task Crowdsourcing | Macro-Task Crowdsourcing |
|---|---|---|
| Nature of Problem | Well-defined, structured, and decomposable into smaller parts, which requires low stakeholder involvement | Ill-defined with no clear structure and low decomposability, which requires broad stakeholder involvement |
| Contribution Creation | Parallelized collection of contributions with a low level of collaboration | Collaborative and iterative exchange of ideas among (groups of) workers |
| Crowd Requirements | Workers with skills aligned explicitly to the problem and high efficiency in task-completion | Workers with different backgrounds, diversity in their domain expertise, and a high willingness to collaborate |
| Guiding Process | The requestor or the digital platform’s algorithm performs repetitive and standardized patterns of actions | The facilitator or facilitating teams guide process phases with high degrees of freedom for the workers |
| Generated Outcome | Aggregable and structurable solutions to the problem | Approaches to addressing the problem, which are difficult to synthesize |

The fact that the problem cannot easily be broken down into smaller constituent parts means it requires a high level of crowd diversity – i.e., providing multiple perspectives from experts with different levels of expertise and knowledge in various disciplines (Lykourentzou et al. 2019; Robert 2019). Due to the complexity of the underlying problem and the broad stakeholder involvement, a guiding, moderating, and neutral central agent is necessary, which we refer to as a facilitator (Gimpel et al. 2020). It is important to note that the results produced by the crowd will not necessarily be the final solution to the overarching problem. Existing macro-task crowdsourcing initiatives such as Climate CoLab (Introne et al. 2013), Futures CoLab (Gimpel et al. 2020), and OpenIDEO (Kohler and Chesbrough 2019) tend to produce valuable but non-conclusive approaches to addressing a wicked problem from one specific angle. These approaches have evolved and matured during several guided phases (Gimpel et al. 2020; Introne et al. 2013), making macro-task crowdsourcing even more reliant on a facilitator and a clear understanding of its role within the crowdsourcing initiative.

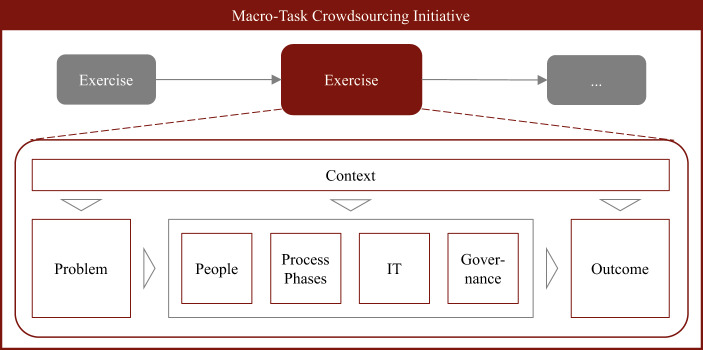

The panoply of different crowdsourcing types has produced a variety of terminologies with synonyms and ambiguities now requiring unification. Figure 1 depicts an abstract view of existing terms and definitions within crowdsourcing. Generally, we use the term macro-task crowdsourcing initiative to refer to an overarching set of online activities that aim to address a problem (Estellés-Arolas and González-Ladrón-de-Guevara 2012). We refer to a crowdsourcing exercise as a whole process of crowdsourcing techniques (Vukovic and Bartolini 2010) that may be applied multiple times or in combination with other exercises as part of a macro-task crowdsourcing initiative.

Fig. 1.

Terminology Within a Macro-Task Crowdsourcing Initiative

The context of an exercise is highly relevant. A macro-task crowdsourcing initiative may conduct multiple exercises in various (e.g., geographical) environments, using different strategies or workflows (e.g., to address the problem) with different infrastructural prerequisites (e.g., hard- and software). The nature of the problem being tackled by an exercise influences how a task is designed and, therefore, how contributions are generated (Zuchowski et al. 2016).

In an exercise, three different groups of people participate. The requestor is an organization or an individual that seeks help, whereas the worker is part of a help-offering crowd capable of (partially) addressing or solving the requestor’s problem (Pedersen et al. 2013). The facilitator acts as a crucial intermediary who tries to understand the requestor and facilitates the crowd of workers to reach a predefined goal concerning the problem (Franco and Nielsen 2018; Gimpel et al. 2020; Rippa et al. 2016). From an activity-driven perspective, exercises consist of three major process phases: preparation, execution, and resolution. While preparation refers to “breaking down a problem or a goal into lower level, smaller sub-task” (Vukovic and Bartolini 2010), execution describes the elaboration on the task by a diverse crowd of workers (Zuchowski et al. 2016) supported and guided by one or more facilitators. The last process phase, termed resolution, consists of evaluating and synthesizing the workers’ contributions (Lopez et al. 2010). IT enables all participants to collaborate online in a distributed or decentralized way. Typically, a digital platform is used to capture and store the interactions and communication between individuals (Lopez et al. 2010). Interactions on a digital platform for macro-task crowdsourcing can include rating, creation, solving, and processing (Geiger and Schader 2014). Sometimes tools like video communication software are used to run the exercise more efficiently or effectively. To steer the exercise within the given context, governance, using a dedicated strategy, creates suitable boundary conditions for the people (Blohm et al. 2018; Pedersen et al. 2013). While rules can define norms or desired conduct, roles govern responsibilities and accountabilities, and culture creates a desirable and productive atmosphere for collaboration.

Each exercise results in different types of outcomes (Zuchowski et al. 2016). Contributions represent manifestations of work on the communicated and processed task. We see tacit knowledge gained during the exercises as learnings that could, for instance, be achieved by reflections or feedback. We distinguish these from consequences, which represent immutable conclusions that have been caused by performing the exercise (e.g., unsatisfied workers who will not contribute to future exercises). Finally, every stakeholder of the macro-task crowdsourcing initiative can perceive value in the exercise. While the requestor could, for example, see value in the synthesized contributions, a worker could perceive value in social recognition within the crowd.

Behind each of these terms, there is a whole range of activities that, taken together, should be carefully aligned with a goal during the macro-task crowdsourcing initiative, in order to contribute to the overarching problem. Thus, facilitation is important to ensure proper alignment and goal orientation. Thereby, facilitators play an essential role, particularly – yet, not only – during the exercises.
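To make the terminology above more tangible, the following minimal Python sketch restates it as a small data model: an initiative groups exercises, each exercise involves the three groups of people, passes through the three process phases, and accumulates the four outcome types. It is an illustrative reading of the terms, not a specification of any crowdsourcing platform; all class, field, and method names (e.g., `Exercise`, `advance`, `perceived_value`) are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class Phase(Enum):
    """The three process phases of an exercise, in order."""
    PREPARATION = 1
    EXECUTION = 2
    RESOLUTION = 3


@dataclass
class Outcomes:
    """The four outcome types of an exercise (hypothetical field names)."""
    contributions: list[str] = field(default_factory=list)
    learnings: list[str] = field(default_factory=list)
    consequences: list[str] = field(default_factory=list)
    # Maps a stakeholder to the value that stakeholder perceives.
    perceived_value: dict[str, str] = field(default_factory=dict)


@dataclass
class Exercise:
    """One crowdsourcing exercise with its people, phase, and outcomes."""
    task: str
    requestor: str
    facilitators: list[str]
    workers: list[str]
    phase: Phase = Phase.PREPARATION
    outcomes: Outcomes = field(default_factory=Outcomes)

    def advance(self) -> Phase:
        """Move to the next phase; stay in RESOLUTION once it is reached."""
        if self.phase is not Phase.RESOLUTION:
            self.phase = Phase(self.phase.value + 1)
        return self.phase


@dataclass
class Initiative:
    """An initiative addresses one problem through one or more exercises."""
    problem: str
    exercises: list[Exercise] = field(default_factory=list)
```

Context and governance (rules, roles, culture) are deliberately left out of the sketch to keep it short; they could be added as further fields of `Exercise` under the same assumptions.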

Facilitation in Crowdsourcing

In the crowdsourcing domain, crowdsourcing governance aims to facilitate workers in performing their tasks and steer them toward a solution (Pedersen et al. 2013; Shafiei Gol et al. 2019). According to Shafiei Gol et al. (2019), whether crowdsourcing governance is centralized or decentralized, the task is to control and coordinate workers on the crowdsourcing platform. This involves activities such as defining the task (Zogaj and Bretschneider 2014), providing proper incentives (Vukovic et al. 2010), ensuring the quality of the contributions (Blohm et al. 2018), and managing the community and its culture (Zuchowski et al. 2016). Crowdsourcing governance is often analyzed in environments involving paid work or smaller tasks (Blohm et al. 2018; Shafiei Gol et al. 2019). Hence, activities like controlling costs and standardizing procedures also gain relevance (Shafiei Gol et al. 2019). Despite extensive frameworks (Blohm et al. 2020; Shafiei Gol et al. 2019; Zogaj et al. 2015), crowdsourcing governance is often conceptualized on the organizational and platform level, which could explain why it is also referred to as a management activity (Blohm et al. 2018; Jespersen 2018; Pohlisch 2021; Zogaj and Bretschneider 2014). The increasing complexity of the problems under investigation means increasingly sophisticated governance strategies are required to deliver successful crowdsourcing initiatives (Blohm et al. 2018; Boughzala et al. 2014; Pedersen et al. 2013). Since macro-task crowdsourcing initiatives are known for their complex (sometimes even wicked) underlying problems in a collaborative environment, utilizing facilitation can be a suitable and effective governance strategy. Facilitation is primarily focused on the crowd, enabling workers to collaborate on complex tasks and, ultimately, reach an overarching goal (Gimpel et al. 2020; Kim and Robert 2019; Lykourentzou et al. 2019).

To tackle increasingly complex – often wicked – problems using macro-task crowdsourcing, the facilitation of groups is both highly relevant and very challenging (Khalifa et al. 2002; Shafiei Gol et al. 2019). Following Bostrom et al. (1993), the main aim of facilitation is to ensure unified goal orientation among collaborating workers. This challenging task can require various social and technical skills or abilities to support problem-solving (Antunes and Ho 2001). Researchers have explored several types of facilitation specifically tailored to collaborative settings. Adla et al. (2011) differentiate between four overlapping types: Technical facilitation mainly aims to support participants with technology issues. Group process facilitation strives to ensure all members of a group jointly reach overarching goals such as motivation or moderation. Process facilitation assists by coordinating participants or structuring meetings. Finally, content facilitation focuses on, and introduces changes to, the content under discussion. Facilitators serve as experts practicing techniques to support problem-solving processes (Winkler et al. 2020), for example, in face-to-face meetings (Azadegan and Kolfschoten 2014; Bostrom et al. 1993). Besides completing a burdensome amount of work before, during, and after the collaboration (Vivacqua et al. 2011), facilitators must also evince particular character and behavioral traits (Dissanayake et al. 2015a). Training and experience (Clawson and Bostrom 1996), appearance and behavior within a group (Franco and Nielsen 2018; Ito et al. 2021; McCardle-Keurentjes and Rouwette 2018), and the handling of feedback and reflection (Azadegan and Kolfschoten 2014; de Vreede et al. 2002) play an essential role here. Thereby, facilitators maintain a delicate balance between situations in which they moderate and observe the group and instances in which they intervene – for instance, due to content-related issues (Khalifa et al. 2002) – without compromising the outcome of the exercise (Dissanayake et al. 2015b). To better assist the group and balance the workload, multiple facilitators with different foci may sometimes be involved, making it possible to split the work among the facilitators (Franco and Nielsen 2018) and maintain a good relationship with all the workers (Liu et al. 2016). However, some scholars note that face-to-face facilitation techniques may be less effective when applied in distributed or virtual environments (Adla et al. 2011). Hence, it is difficult for crowdsourcing facilitators to rely on facilitation knowledge established in other contexts. This difficulty could be rooted in the fundamentally different nature of collaboration on a crowdsourcing platform (Gimpel et al. 2020; Nguyen et al. 2013).

Building upon the current, broad understanding of crowdsourcing governance (Blohm et al. 2020; Pedersen et al. 2013; Shafiei Gol et al. 2019) and facilitation (Antunes and Ho 2001; Bruno et al. 2003; Kolfschoten et al. 2011; Maister and Lovelock 1982; Zajonc 1965) offered in the literature – in particular, an existing definition by Bostrom et al. (1993) – we define macro-task crowdsourcing facilitation thus:

Facilitation in macro-task crowdsourcing initiatives comprises all observing and intervening activities used before, during, and after a macro-task crowdsourcing exercise to foster beneficial interactions among crowd workers aimed at making (interim-) outcomes easier to achieve and ultimately align joint actions with predefined goals.

Despite substantial knowledge of crowdsourcing governance and facilitation, an overarching and integrated understanding of relevant facilitation activities in macro-task crowdsourcing is missing. Therefore, it is challenging for facilitators to delimit their competencies in crowdsourcing endeavors involving many participants and perspectives (Zhao and Zhu 2014).

Advances in AI-Augmented Facilitation

AI uses technologies and algorithms to simulate and replicate human behavior or achieve intelligent capabilities (Alsheibani et al. 2018; Simon 1995; Stone et al. 2016; Te’eni et al. 2019). AI may be defined as a “[…] system’s ability to correctly interpret external data, to learn from such data, and to use those learnings to achieve specific goals and tasks through flexible adaptation […]” (Kaplan and Haenlein 2019). Although more general definitions exist – such as those by Rai et al. (2019) and Russell and Norvig (2021) – in this paper, we follow the definition by Kaplan and Haenlein (2019). Their socio-technical system perspective focuses on the interrelationship between humans and AI, which is highly relevant in the context of macro-task crowdsourcing facilitation. While AI has been a subject established in science for over seven decades (Haenlein and Kaplan 2019; Rzepka and Berger 2018; Simon 1995), in recent years, it has received increasing attention in both research and practice (Bawack et al. 2019; de Vreede et al. 2020; Hinsen et al. 2022; Hofmann et al. 2021; Leal Filho et al. 2022; Pumplun et al. 2019; Rai 2020). AI is expected to disrupt the interplay between user, task, and technology (Maedche et al. 2019; Rzepka and Berger 2018) and the nature of work (Brynjolfsson et al. 2017; Iansiti and Lakhani 2020; Nascimento et al. 2018). This outlook is accompanied by many unrealistic expectations and by the timeless question of “[W]hat can AI do today?” (Brynjolfsson and McAfee 2017). There is a stream of AI research that answers this question using terminology usually related to humans or animals, including intelligence, learning, recognizing, and comprehending (Asatiani et al. 2021; Benbya et al. 2021; Rai et al. 2019), and that explicitly considers human-inspired AI and humanized AI (Kaplan and Haenlein 2019). For example, Hofmann et al. (2020) answer the question of “what can AI do today?” by providing a structured method to create AI use-cases applicable to various domains. Thereby, they distinguish seven abstract functions, defined in Table 2, through which AI can occur as a solution: perceiving, identification, reasoning, predicting, decision-making, generating, and acting (Hofmann et al. 2020). However, such approaches may also lead to the over-humanization of AI and should not obscure the fact that AI systems are human-made artifacts, not humans.

Table 2.

Seven Artificial Intelligence Functions, Following Hofmann et al. (2020)

| AI Function | Definition |
|---|---|
| Perceiving | “Acquiring and processing data from the real world to produce information” |
| Identification | “Extracting and identifying specific objects from data” |
| Reasoning | “Explaining underlying relationships and structures in data” |
| Prediction | “Estimating future events or conditions on a continuous scale” |
| Decision-making | “Choosing between known, discrete alternatives” |
| Generating | “Producing or creating something” |
| Acting | “Executing goal-oriented actions (e.g., movement, navigate, control)” |

AI differs in the presence of cognitive, emotional, or social intelligence (Kaplan and Haenlein 2019). To be able to best support – or even replace – the facilitator would require an AI to hold all three types of intelligence, thereby resulting in a self-conscious and self-aware humanized AI (de Vreede and Briggs 2019; Kaplan and Haenlein 2019). Humanized AIs are not yet available in the facilitation domain, which could either be due to the complexity of collaboration (Kolfschoten et al. 2007) or the limited capabilities of current AI systems (Briggs et al. 2013; Kaplan and Haenlein 2019; Sousa and Rocha 2020). Hence, scholars from the facilitation domain focus on projects and approaches to building human-inspired AIs (Seeber et al. 2018). We refer to these as AI-augmented facilitation systems, which could have a vast impact on team collaboration (Maedche et al. 2019; Seeber et al. 2018, 2020). For instance, Derrick et al. (2013) and Ito et al. (2021) propose the first results of conversational AI capable of issuing instructions to team members or responding to workers’ contributions. Further inspired by the widespread application of AI (Dwivedi et al. 2021; Wilson and Daugherty 2018), researchers have also begun to explore more specifically AI’s potential use in crowdsourcing facilitation (de Vreede and Briggs 2018; Rhyn and Blohm 2017; Tavanapour and Bittner 2018a). For instance, some approaches seek to automate facilitation activities and decision-making by integrating AI such as text mining or natural language processing (Gimpel et al. 2020). Most of these AI-augmented approaches are prototypes, suggesting that further investigation of possible AI-augmented facilitation may be warranted (Askay 2017; Ghezzi et al. 2018; Robert 2019).
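As a rough illustration of how text mining might support a facilitator, the following minimal Python sketch flags the contribution that overlaps least with all others, so that it could be highlighted for further discussion. It is not the method of any system cited above: Jaccard word overlap is a deliberately simple stand-in for semantic text understanding, and the function names and sample contributions are hypothetical.

```python
from __future__ import annotations

import re


def tokenize(text: str) -> set[str]:
    """Lowercase a contribution and return its set of word tokens."""
    return set(re.findall(r"[a-z]+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Jaccard similarity of two token sets (0.0 if both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def novelty_scores(contributions: list[str]) -> list[float]:
    """Score each contribution as 1 minus its mean similarity to the others."""
    tokens = [tokenize(c) for c in contributions]
    scores = []
    for i, current in enumerate(tokens):
        sims = [jaccard(current, other) for j, other in enumerate(tokens) if j != i]
        scores.append(1.0 - sum(sims) / len(sims) if sims else 0.0)
    return scores


def most_novel(contributions: list[str]) -> int:
    """Return the index of the contribution with the highest novelty score."""
    scores = novelty_scores(contributions)
    return max(range(len(scores)), key=scores.__getitem__)


# Hypothetical worker contributions, for illustration only.
ideas = [
    "Plant more trees in urban areas",
    "Plant trees in urban parks",
    "Tax carbon emissions from aviation",
]
print(most_novel(ideas))  # -> 2, the aviation idea shares no words with the others
```

In practice, word overlap would miss paraphrases and synonyms, which is why a facilitator would treat such a score as a cue for closer inspection and not as a judgment of an idea's quality.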

Affordance Theory

Within our research, we use affordance theory as a conceptual lens. Affordances are action possibilities that characterize the relationship between a goal-oriented actor and an artifact within a given environment (Burlamaqui and Dong 2015; Gibson 1977; Markus and Silver 2008). The concept of affordances was initially introduced in ecological psychology to describe how animals perceive value and meanings in things within their environment (Gibson 1977). Scholars have translated the concept of affordances to technological contexts (Achmat and Brown 2019; Autio et al. 2018; Bayer et al. 2020; Gaver 1991). Affordance theory now serves as an established lens to investigate socio-technical phenomena emerging from information technology (Dremel et al. 2020; Du et al. 2019; Keller et al. 2019; Lehrer et al. 2018; Malhotra et al. 2021; Markus and Silver 2008). Thereby, affordances describe the relationship between an actor and an information technology to determine the goal-oriented action possibilities available to the actor when using the specific information technology at hand (Faik et al. 2020; Markus and Silver 2008; Volkoff and Strong 2017). Actors can perceive or actualize affordances (Ostern and Rosemann 2021). Perceiving affordances requires that the actor holds a certain level of awareness regarding the information technology and is, hence, able to identify its potential uses (Burlamaqui and Dong 2015; Volkoff and Strong 2017). The information about a perceived affordance can lead actors to an affordance’s actualization. Herein, the actor makes efforts to realize the affordance, unleashing the value it holds in relation to the actor’s goal (Ostern and Rosemann 2021).

To analyze the “cues of potential uses” (Burlamaqui and Dong 2015) of AI in a specific environment, researchers often turn to affordance theory (Burlamaqui and Dong 2015; Kampf 2019; Volkoff and Strong 2017). In our endeavor, the particular environment is macro-task crowdsourcing with the facilitator as the actor and AI as the specific information technology. This confluence of technology and actor in our macro-task crowdsourcing context is a complex socio-technical phenomenon, where affordance theory can help better understand the interrelationships. With RQ2, we aim to exploratively investigate the relationship between the actor and the technology, revealing the action possibilities of AI in macro-task crowdsourcing facilitation. In line with the original definition by Gibson (1977) and following technology-related affordance literature (Faik et al. 2020; Leonardi 2011; Norman 1999; Steffen et al. 2019; Vyas et al. 2006), we focus on perceived affordances throughout our research endeavor. Hence, we define affordances in our context as perceived action possibilities arising from AI in macro-task crowdsourcing facilitation that do not necessarily need to be performed (Askay 2017). We see these perceived affordances as necessary to compose the nucleus of AI’s intersubjective meaning for facilitators (Suthers 2006). The most salient perceived affordances will ultimately support collaboration among the crowdsourcing workers.

Research Design

Our research set out to address the lack of knowledge on macro-task crowdsourcing facilitation and the need for a holistic understanding of how AI might augment facilitation in this context. Thereby, we followed a two-stage, bottom-up approach to establish a theory-driven understanding that we then validated and refined from a practical perspective. In our approach, we turned to affordance theory as an established lens to theorize the relationship between the technological artifact, AI, and the goal-oriented actor, the facilitator (Lehrer et al. 2018; Markus and Silver 2008; Ostern and Rosemann 2021; Volkoff and Strong 2013). Our approach served to identify macro-task crowdsourcing facilitation activities and AI affordances in macro-task crowdsourcing facilitation. Firstly, in the initial development stage, we conducted two literature searches. We identified 17 macro-task crowdsourcing facilitation activities and 116 statements about AI in macro-task crowdsourcing, which we further processed into manifestations (i.e., specific action possibilities) that substantiate AI’s potential use for macro-task crowdsourcing facilitation. From this, we identified seven AI affordances for macro-task crowdsourcing. Secondly, we iteratively refined our results in the validation & refinement stage through two observed macro-task crowdsourcing initiatives and six semi-structured interviews with experts from the AI and crowdsourcing facilitation domain. Figure 2 depicts the overarching research design, which yielded seven AI affordances for macro-task crowdsourcing.

Fig. 2.

Overarching Two-Stage, Bottom-Up Approach

Initial Development Stage

We developed an initial set of AI affordances in three steps. The aim in the first two steps was to gain an understanding of facilitation and AI within macro-task crowdsourcing. Thereby, we developed macro-task crowdsourcing facilitation activities necessary for performing the third step, which served to combine relevant insights from literature into an initial set of AI affordances.

In step I) Facilitation activities list, we conducted a structured literature search to extract activities that describe macro-task crowdsourcing facilitation. In an initial broad search, we identified the journal ‘Group Decision and Negotiation’ as an adequate source of broad, foundational knowledge about facilitation (Laengle et al. 2018). A search for the term ‘facilitation’ in this journal returned a total of 176 papers, which we sequentially screened by title, abstract, and full text to determine whether facilitation was the core subject of each article. In doing so, we identified ten papers, plus one additional relevant paper from another outlet (Appendix A.1), whose full text we further processed. We extracted 477 statements (i.e., excerpts) about activities or capabilities (i.e., repeatable patterns of action) relevant for facilitation. For each statement, we then decided whether the activity or capability was transferable to macro-task crowdsourcing facilitation. We excluded statements if the underlying activity did not necessarily need to be performed by a facilitator (e.g., recruitment of the worker) or if it contributed neither to fostering beneficial interactions among crowd workers nor to aligning joint actions with predefined goals (e.g., communication of the exercises’ results or distributing rewards to workers). We categorized the 317 remaining statements into 17 broader macro-task crowdsourcing facilitation activities that iteratively emerged in the research team’s discussions. These 17 activities served as comprehensive, foundational knowledge about macro-task crowdsourcing facilitation in the next two steps.

Step II) AI in macro-task crowdsourcing served to capture manifestations of AI in macro-task crowdsourcing. As highlighted above, the digital nature of crowdsourcing platforms means the application of AI in crowdsourcing is more widespread than in other situations where facilitation plays an essential role (e.g., face-to-face meetings). We conducted a systematic literature review on the topic of macro-task crowdsourcing (vom Brocke et al. 2015; Wolfswinkel et al. 2013). In keeping with our research goal of exploring “cues of potential uses” (Burlamaqui and Dong 2015, p. 305) of AI (i.e., affordances), we identified ‘information systems,’ ‘computer science,’ and ‘social science’ as our fields of research. Hence, we selected four established databases (i.e., AIS eLibrary, ACM Digital Library, IEEE Xplore Digital Library, and Web of Science) that cover this broad disciplinary spectrum. Our search query did not include specific AI terms since the literature includes various definitions and terms to refer to corresponding AI technologies (Bawack et al. 2019). Instead, we iteratively developed our search query and ended up with a more general tripartite version representing a process-driven perspective on online crowdsourcing:

(‘crowd*’ OR ‘collective intelligence’) AND (‘task’ OR ‘activity’ OR ‘action’ OR ‘process’ OR ‘capability’ OR ‘facilitat*’) AND (‘platform’ OR ‘information system’ OR ‘information technology’ OR ‘information and communications technology’).
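The tripartite logic of this query can be illustrated with a minimal Python sketch. It is not the search engine of any of the four databases, whose matching rules (e.g., stemming or field restrictions) may differ; the function names `term_to_regex` and `matches_query` are hypothetical, and the sketch assumes that a trailing `*` denotes truncation and that all other terms match as whole words or phrases.

```python
import re

# Three AND-connected groups of OR-connected terms, copied from the query above.
# Assumption: a trailing '*' is a truncation wildcard ('crowd*' matches 'crowdsourcing').
QUERY_GROUPS = [
    ["crowd*", "collective intelligence"],
    ["task", "activity", "action", "process", "capability", "facilitat*"],
    ["platform", "information system", "information technology",
     "information and communications technology"],
]


def term_to_regex(term):
    """Translate one query term into a case-insensitive whole-word pattern."""
    if term.endswith("*"):
        body = re.escape(term[:-1]) + r"\w*"
    else:
        body = re.escape(term)
    return re.compile(r"\b" + body + r"\b", re.IGNORECASE)


def matches_query(text):
    """Return True if every group has at least one term occurring in the text."""
    return all(
        any(term_to_regex(term).search(text) for term in group)
        for group in QUERY_GROUPS
    )


# Usage: screen a (hypothetical) title or abstract against the query.
hit = matches_query("A crowdsourcing platform that supports the facilitation process")
```

A record is a hit only if all three groups are satisfied, which reflects the process-driven perspective of the query: a crowd, an activity, and a technological platform must all be present.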

Applying this search query to the identified databases resulted in a total of 5,808 hits. To refine our sample of papers, we identified and removed 502 duplicates, which led to 5,306 distinct papers. In manually screening the papers, we applied the criteria listed in Table 3 to narrow our search results to macro-task crowdsourcing and ensure high levels of relevance and rigor.

Table 3.

In- and Exclusion Criteria of the Literature Search

| Inclusion Criteria | Exclusion Criteria |
|---|---|
| • Explains induced or abstracted knowledge from multiple crowdsourcing exercises • Contains managing actions performed ex-ante, ex-nunc, or ex-post of a crowdsourcing exercise • Depicts human interaction or collaboration on or with the crowdsourcing platform • Includes frameworks, models, taxonomies, or conceptualizations related to the crowdsourcing domain | • Does not mainly focus on (macro-task) crowdsourcing • Is not written in English • Was published before 2000 (and, thus, does not discuss contemporary AI systems) • Is a book, (extended) abstract, presentation, single case study, or research-in-progress paper that does not contain relevant interim results or findings • Has identical authors and elaborates on a very similar topic to a paper already included |

Using these criteria, we narrowed the search results by sequentially analyzing title and abstract, which narrowed the total to 283 papers potentially relevant to macro-task crowdsourcing. We read these 283 papers in full text, finally identifying nine papers that name and describe AI in the context of macro-task crowdsourcing. We also included three papers, found elsewhere during our research process, that matched all of our defined criteria. We analyzed these 12 papers (Appendix A.2) in-depth to extract 116 statements about AI manifestations in macro-task crowdsourcing. In the next step, these statements were used together with the previous results to develop AI affordances.

In step III) Initial AI affordances, we developed an initial set of AI affordances by combining and aggregating the results of steps I) and II). Thereby, we assigned 116 manifestations (Appendix A.3) of AI in macro-task crowdsourcing to the 17 activities of macro-task crowdsourcing facilitation. To further distinguish and explain the role of AI in each manifestation, we used AI functions proposed by Hofmann et al. (2020). In doing so, we assigned each manifestation one specific AI function, describing how AI occurs or could occur as a solution in the selected manifestation (Hofmann et al. 2020). This two-dimensional matrix resulted in an AI manifestation mapping for macro-task crowdsourcing facilitation.
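To make the structure of this mapping concrete, the following minimal Python sketch stores manifestations in cells keyed by facilitation activity and AI function. The three records are invented for illustration, the activity names follow Table 6, and the AI function labels (`function_A`, `function_B`) are placeholders rather than the actual categories of Hofmann et al. (2020); `build_mapping` and `axis_totals` are hypothetical helper names.

```python
from collections import defaultdict

# Hypothetical manifestation records: (description, facilitation activity, AI function).
# The AI function labels are placeholders, NOT the categories of Hofmann et al. (2020).
manifestations = [
    ("Cluster similar contributions", "Contribution Aggregation", "function_A"),
    ("Summarize free-text contributions", "Contribution Aggregation", "function_B"),
    ("Flag duplicate contributions", "Quality Control", "function_A"),
]


def build_mapping(records):
    """Group manifestations into matrix cells keyed by (activity, AI function)."""
    matrix = defaultdict(list)
    for description, activity, ai_function in records:
        matrix[(activity, ai_function)].append(description)
    return dict(matrix)


def axis_totals(matrix, axis):
    """Count manifestations along one axis: 0 = activity, 1 = AI function."""
    totals = defaultdict(int)
    for key, cell in matrix.items():
        totals[key[axis]] += len(cell)
    return dict(totals)


mapping = build_mapping(manifestations)
by_activity = axis_totals(mapping, 0)
by_function = axis_totals(mapping, 1)
```

Totals along either axis give a first indication of where manifestations concentrate, which is one possible starting point for discussing groupings by facilitation activity or by AI function.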

To create the initial set of affordances, the research team held discussions to identify archetypes within the manifestation mapping. We remained open-minded about whether an archetype would be created based on the functioning of AI (horizontal axis of the matrix) or the facilitation actions (vertical axis of the matrix). To support the development of AI affordances, we reached out to three scholars with expertise in affordance theory. They contributed valuable input regarding common pitfalls and best practices during the development stage. We recognized seven archetypes whose manifestations we then analyzed to identify affordances. Every affordance is described and classified in terms of AI functions and facilitation activity (see Sect. 4.1).

Validation & Refinement Stage

Although we rigorously identified our AI affordances based on scholarly knowledge, a practical validation was necessary to ascertain potential end-users’ perceptions. To this end, we validated and refined our initial set of AI affordances from step III) with two observed macro-task crowdsourcing initiatives as well as six semi-structured interviews (Myers and Newman 2007).

In step IV) Crowdsourcing initiatives, we longitudinally observed two macro-task crowdsourcing initiatives, namely Trust CoLab (TCL) and Pandemic Supermind (PSM). Observing these initiatives not only helped us to validate our facilitation activities but also to gain rare practical insights on macro-task crowdsourcing facilitation and real-world AI manifestations. Table 4 describes the two macro-task crowdsourcing initiatives under consideration.

Table 4.

Two Macro-Task Crowdsourcing Initiatives Within Validation and Refinement Stage

| | Trust CoLab | Pandemic Supermind |
|---|---|---|
| Problem/Goal | Anticipating the state of trust in medicine and healthcare in 2040 | Identifying the critical unmet needs of the COVID-19 pandemic |
| Participants | • 105 workers • 1 facilitator and 1 supporting team | • 206 workers • 2 facilitators and 2 supporting teams |
| Usage of AI | • Ex-post decision to use AI • Semantical clustering of submitted contributions | • Ex-ante decision to use AI • In-situ analysis of contributions, worker activity, and worker network • Extensive semantic evaluation of the contributions |

Our primary sources of data collection were documentation (e.g., emails, final reports, and meeting protocols) and participant observation (e.g., discussion within the facilitation team and analysis of AI tools used) that we gained from both crowdsourcing initiatives. Observation of the facilitators’ actions in the macro-task crowdsourcing initiatives supported the set of 17 facilitation activities from step I). Each of the activities was observed, and no other major activities were found. Additionally, we could refine and enhance the manifestations within our AI manifestation mapping, which was created in step III), by analyzing the application of AI tools and the perceived demand for AI support within both initiatives. Nevertheless, the limited application of AI tools in both initiatives could not validate all affordances and suggested an additional validation and refinement step. Hence, in step V) Interviews, we conducted six semi-structured interviews, which we used to uncover potential affordances (Volkoff and Strong 2013). We selected experts from academia and practice with multiple years of experience in the AI or facilitation domain, as listed in Table 5 (Myers and Newman 2007; Schultze and Avital 2011).

Table 5.

Experts for Validation Interviews

| ID | Focus | Experience With the Focus | Job Title |
|---|---|---|---|
| 1 | Intersect Facilitation and Artificial Intelligence | 2 years | Researcher |
| 2 | Intersect Facilitation and Artificial Intelligence | 2 years | Researcher |
| 3 | Artificial Intelligence | 4 years | AI Developer |
| 4 | Artificial Intelligence | 7 years | Co-Founder of AI Start-up |
| 5 | Facilitation | 6 years | Manager |
| 6 | Facilitation | 5 years | Project Director |

Interviews lasted between 37 and 72 min, were held in the native language of the interviewee, and were recorded with the consent of each interviewee. We informed the interviewees about the research topic and sent a detailed interview guide in advance so that they could prepare for the interview. The guide contained definitions and illustrations, the then-current set of affordances, and the intended structure of the interview. Appendix C.1 provides more details about the structure of the interview as well as the prepared questions.

The semi-structured interviews started with a short description of the research project and definitions of crowdsourcing and facilitation necessary to ensure a mutual understanding of crowdsourcing facilitation. After that, we encouraged the interviewees to share their experience of AI within an ideation section (i.e., a less structured and guided part of the interview). Next, we sought open-ended feedback on the affordances by asking questions regarding the completeness, comprehensiveness, meaningfulness, level of detail, and applicability of the criteria in relation to today’s crowdsourcing initiatives (Sonnenberg and vom Brocke 2012). During the interviews, we took notes to highlight the experts’ essential statements and better respond to the interviewee in the course of the conversation. We iteratively adapted and refined our affordances after each interview. The experts’ feedback led us to overhaul one affordance entirely (i.e., workflow enrichment; previously: environment creation) and improve the descriptions of two other affordances (i.e., improvement triggering and worker profiling).

To align all of the practical and theoretical insights gained, we conducted a final reflective refinement after the interviews. Therein, we followed Schreier (2012) to carefully analyze all six experts’ statements regarding our predefined criteria and enrich our AI manifestation mapping with potential use cases of AI within macro-task crowdsourcing facilitation named by the experts. Appendix C.3 contains some exemplary expert quotes. Our concept-driven coding frame (Schreier 2012) comprised two categories: (1) feedback regarding artificial intelligence affordances and (2) potential use cases of AI within macro-task crowdsourcing facilitation. While the feedback is structured in five subcategories according to our defined criteria, the potential use cases encompass 17 subcategories representing the facilitation activities developed in step I). We extracted transcripts of all relevant statements from the interviewees and mapped these to our coding frame. Finally, we refined the AI affordances and AI manifestation mapping accordingly. The two validation and refinement steps yielded a validated and refined list of seven affordances and 44 manifestations. Appendix B contains a detailed description of the refinement and Appendix C.2 a description of the validation.

Results

Macro-Task Crowdsourcing Facilitation

Based on our literature search, we identified 17 macro-task crowdsourcing facilitation activities. Table 6 provides an exhaustive list of the facilitation activities found in the current literature, adapted to the specific conditions of crowdsourcing. We argue that the distinction between a more straightforward administrative activity (e.g., sending invitation emails to the workers) and a more complex facilitation activity (e.g., writing a motivational text for the workers’ invitation) can depend on each particular exercise. The borders of this distinction can also be fluid. Nevertheless, it is essential to clearly define the facilitator’s role in each exercise to avoid misunderstandings between the facilitator and other stakeholders of the macro-task crowdsourcing initiative (e.g., the platform administrator or the requestor).

Table 6.

Facilitation Activities in Macro-Task Crowdsourcing

| Activity Name | Description | Supporting Literature |
|---|---|---|
| Task Design | Decomposition of an overarching problem into small workable pieces that are bundled into tasks to be presented to the workers | Antunes and Ho (2001), Boughzala et al. (2014), Hetmank (2013), Khalifa et al. (2002), Kolfschoten et al. (2007), Pohlisch (2021), Zogaj and Bretschneider (2014), Zogaj et al. (2015) |
| Task Communication | Preparation and distribution of relevant information and instructions regarding the tasks, presented in a comprehensible and appealing way | Antunes and Ho (2001), Blohm et al. (2020), Kolfschoten et al. (2011), de Vreede et al. (2002), Erickson et al. (2012), Xia et al. (2015), Zuchowski et al. (2016) |
| Workflow Design & Selection | Composing a sequence of necessary work steps to be executed on the platform to address the designed tasks by (a team of) workers | Assis Neto and Santos (2018), Briggs et al. (2013), Geiger et al. (2011), Hetmank (2013), Khalifa et al. (2002), Kolfschoten et al. (2007) |
| Worker Motivation | Triggering workers’ intrinsic or extrinsic motivation in order to stimulate a high rate of contributions and a high level of engagement on the platform | Askay (2017), Adla et al. (2011), Azadegan and Kolfschoten (2014), Blohm et al. (2020), Chittilappilly et al. (2016), Vukovic et al. (2010), de Vreede et al. (2002) |
| Contribution Support | Assisting the workers in the execution of their tasks through explanations, consultation, or training to foster task completion | Adla et al. (2011), Blohm et al. (2018), de Vreede et al. (2002), Franco and Nielsen (2018), Hosseini et al. (2015), Tavanapour and Bittner (2018b) |
| Performance Monitoring | Using predefined measurements to measure, analyze, and understand workers’ activity and interactions, as well as the quality of contributions | Blohm et al. (2018), Briggs et al. (2013), Gimpel et al. (2020), Kolfschoten et al. (2011), Nguyen et al. (2015), Vivacqua et al. (2011) |
| Tool Usage & Integration | Introduction and utilization of (technical) tools to ease the execution of tasks and communication and collaboration among the workers | Briggs et al. (2013), de Vreede et al. (2002), Jespersen (2018), Kolfschoten et al. (2007), Rhyn and Blohm (2017), Tazzini et al. (2013) |
| Crowd Moderation | Observing and guiding the workers’ communication by understanding group dynamics, recognizing systemic misunderstandings, and identifying or resolving conflicts | Adla et al. (2011), Chan et al. (2016), de Vreede et al. (2002), Faullant and Dolfus (2017), Franco and Nielsen (2018) |
| Crowd Coordination | Organizing and structuring the joint interaction of the workers by scheduling tasks, managing the workload, and adapting the workflow or strategy when necessary | Antunes and Ho (2001), Askay (2017), Azadegan and Kolfschoten (2014), Franco and Nielsen (2018), Hetmank (2013), Pedersen et al. (2013), Wedel and Ulbrich (2021) |
| Participation Encouragement | Attracting, nudging, or convincing individual workers to improve their participation or engagement in the exercise | Askay (2017), Azadegan and Kolfschoten (2014), Gimpel et al. (2020), McCardle-Keurentjes and Rouwette (2018), Vivacqua et al. (2011) |
| Contribution Evaluation | Reviewing, assessing, and filtering relevant contributions using a systematic process | de Vreede et al. (2002), Hetmank (2013), Kolfschoten et al. (2011), McCardle-Keurentjes and Rouwette (2018), Pedersen et al. (2013), Pohlisch (2021), Zhao and Zhu (2016) |
| Contribution Aggregation | Gathering and collecting information from relevant contributions to meaningfully reassemble or summarize insights gained | Adla et al. (2011), Azadegan and Kolfschoten (2014), Chan et al. (2016), Chittilappilly et al. (2016), Franco and Nielsen (2018), Geiger et al. (2011), Vukicevic et al. (2022) |
| Quality Control | Analysis of redundant, invalid, or irrelevant contributions in order to learn from workers’ unintended behavior | Adla et al. (2011), Alabduljabbar and Al-Dossari (2016), Boughzala et al. (2014), Gimpel et al. (2020), Kolfschoten et al. (2011), Zogaj and Bretschneider (2014), Zuchowski et al. (2016) |
| Decision Making | Elaboration, presentation, and decisions on possible alternatives for action based on the achieved outcomes | Adla et al. (2011), Gimpel et al. (2020), Khalifa et al. (2002), McCardle-Keurentjes and Rouwette (2018), Rhyn and Blohm (2017) |
| Goal Orientation | Aligning all interactions between workers, facilitators, and requestors on a predefined goal to focus on the purpose of the initiative | Antunes and Ho (2001), Boughzala et al. (2014), Briggs et al. (2013), Gimpel et al. (2020), Khalifa et al. (2002), Kohler and Chesbrough (2019), Pedersen et al. (2013) |
| Culture Development | Establishing a pleasant atmosphere between and among workers, facilitators, and requestors to achieve efficient and effective communication on the platform | Askay (2017), Azadegan and Kolfschoten (2014), Boughzala et al. (2014), Briggs et al. (2013), de Vreede et al. (2002), Kohler and Chesbrough (2019), Pohlisch (2021) |
| Risk Management | Identification and evaluation of potential deviations from acceptable behavior on the platform; control and monitoring of relevant behaviors to foster positive and tackle adverse effects | Kamoun et al. (2015), Kolfschoten et al. (2007), Onuchowska and de Vreede (2018), Pedersen et al. (2013), Pohlisch (2021), Vivacqua et al. (2011), Zogaj and Bretschneider (2014) |

In the validation & refinement stage, we observed two macro-task crowdsourcing initiatives (i.e., TCL and PSM). By carefully observing the facilitators within TCL and PSM, we were able to identify action patterns that matched the facilitation activities’ descriptions. Thereby, we confirmed the existence of all 17 facilitation activities, although their scope varied within the two initiatives under consideration. Table 7 depicts exemplary actions in TCL and PSM, mainly performed by the facilitator, that matched the elaborated description of the 17 facilitation activities. Some of these facilitation activities were AI-augmented (i.e., the facilitator was supported by an AI tool), making both initiatives valuable subjects for further analysis regarding our AI affordances.

Table 7.

Macro-Task Facilitation Activities Within the Selected Initiatives

| Activity Name | Exemplary Action in TCL | Exemplary Action in PSM |
|---|---|---|
| Task Design | Decomposition of the purpose of the initiative into four sequential exercises, each consisting of one task | Decomposition of the purpose of the initiative into three exercises with a total of five tasks |
| Task Communication | Discussions about and adaptions of the task to be presented between the facilitator and the supporting team | Provision of exemplary contributions to underline the nature of desired contributions |
| Workflow Design & Selection | Selection of a four-phase workflow enabled by the platform to develop scenarios about how trust in healthcare or medicine could evolve until 2040 | Selection and design of a three-phase workflow (partially) supported by the platform to identify approaches for better pandemic resilience |
| Worker Motivation | Initial motivational mail that welcomes the workers and highlights the value of the workers’ expected contributions to society | User profile on the platform was prefilled with a short biography of the worker to value the workers’ participation |
| Contribution Support | Video tutorials and FAQs were designed and made available | Quick responses from the facilitators to questions that arose from the workers |
| Performance Monitoring | Bi-weekly manual report to track the current amount of workers’ contributions | Automated AI-augmented dashboard to monitor the contribution upload frequency, most used keywords, and topics arising |
| Tool Usage & Integration | Usage of one generic online crowdsourcing platform that has been customized to suit the scenario development process | Integration of one AI tool to support the facilitation activities during and after each exercise |
| Crowd Moderation | Active participation by the supporting team in the discussions and contributions from the worker; reports to the facilitator | Hosting of live virtual events to catalyze conversations about the topics within the ongoing task among the workers |
| Crowd Coordination | Continuous facilitator notes (notification sent to the crowd) regarding the current and future steps | Creation of worker groups based on their professional background to coordinate parallel task execution in the first exercise; a merging of groups in the second exercise to support cross-fertilization of ideas among workers |
| Participation Encouragement | Sending targeted emails to workers who were not active on the platform | Weekly encouragement of the crowd via email to send feedback, which was regularly reflected and integrated by the facilitators |
| Contribution Evaluation | Iterative reviewing and selection of the contributions after each exercise; removal of duplicate contributions | Weekly discussions between the facilitator and the requestor about recent contributions from the workers |
| Contribution Aggregation | Initial semantical clustering of submitted contributions with manual adaptations | Application of different semantical clustering algorithms and manual refinements |
| Quality Control | Notifying workers about redundant contributions during the exercises | Continuous monitoring of the social network graph of the crowd to avoid topic biases |
| Decision Making | Creation of one final report in collaboration with the requestor of the initiative | One detailed report about the results was made publicly available and shared with the requestor |
| Goal Orientation | Raise discussion-stimulating questions to reach a broad range of sentiment | A small adjustment to one communicated task to cover issues of misunderstanding |
| Culture Development | General rules regarding behavioral and cultural expectations were made available | Reference to the Chatham House Rule to build an appreciative atmosphere |
| Risk Management | Test run of the crowdsourcing platform with 10 participants | Thorough testing of the AI tool with data from similar initiatives to ensure the functionality |

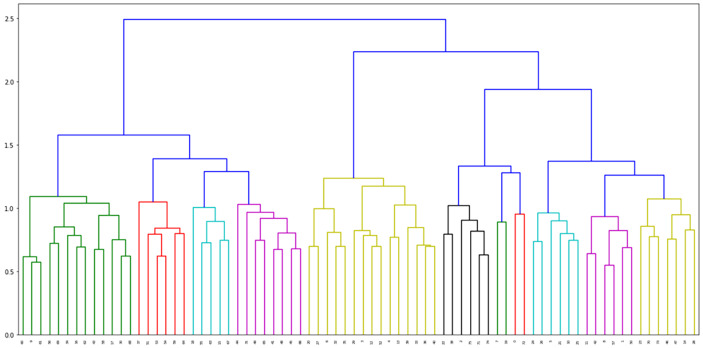

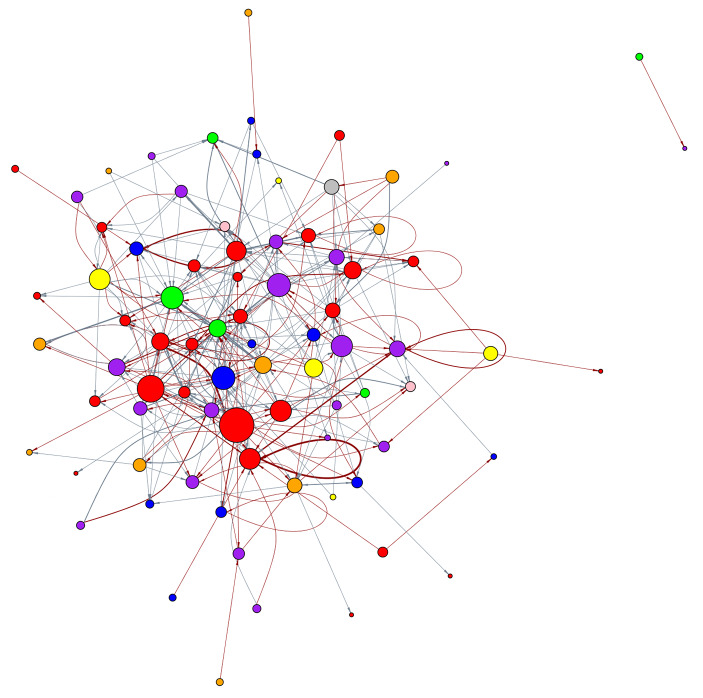

Throughout the macro-task crowdsourcing initiatives of TCL and PSM, dedicated teams used two different AI tools to support the facilitators in their work. In TCL, the facilitator was mainly supported in aggregating the workers’ contributions between the four phases. All of the workers’ contributions were first exported from the platform before a natural language processing Python script preprocessed the contributions (i.e., performing stemming and lemmatization). The script then created a detailed word cloud to provide the facilitator with a broad overview of the main concepts. Finally, the contributions were semantically clustered by the script using the Universal Sentence Encoder algorithm (Cer et al. 2018). We refer to Appendix D.1 for two interim results of the Python script. The results were discussed by the initiative’s stakeholders and manually refined by the facilitator and the supporting team. In PSM, the two facilitators were supported by a web application written in R. The application could directly access the latest contribution data via an application programming interface provided by the crowdsourcing platform. Therefore, the facilitators could use the web application’s algorithms during internal meetings to discuss the latest contribution data. In TCL, the AI tool only used the codified contributions made by the workers, whereas the web application in PSM also used metadata such as comments or likes on the contributions. This metadata allowed a broad set of functionalities such as keyword extraction, topic modeling, word co-occurrences, network analysis, and word searches. We refer to Appendix D.2 for two screenshots of the web application.
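The three stages of the TCL pipeline (preprocessing, term overview, semantic clustering) can be sketched in a few lines of Python. This is not the script used in TCL: to remain self-contained, the sketch substitutes lowercasing and stop-word removal for stemming and lemmatization, raw term counts for the word cloud, and cosine similarity over term-count vectors with a greedy threshold rule for clustering on Universal Sentence Encoder embeddings. All function names, the stop-word list, and the threshold of 0.5 are assumptions made for illustration.

```python
import math
import re
from collections import Counter

# Illustrative stop-word subset; a real pipeline would use a full list.
STOP_WORDS = {"a", "an", "and", "in", "is", "of", "the", "to", "for", "on"}


def preprocess(text):
    """Lowercase, tokenize, and drop stop words (stand-in for stemming/lemmatization)."""
    return [t for t in re.findall(r"[a-z]+", text.lower()) if t not in STOP_WORDS]


def word_frequencies(contributions):
    """Term counts across all contributions, i.e., the raw data behind a word cloud."""
    return Counter(t for c in contributions for t in preprocess(c))


def cosine(a, b):
    """Cosine similarity between two term-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def cluster(contributions, threshold=0.5):
    """Greedy single-pass clustering: join the first cluster whose seed is similar enough."""
    vectors = [Counter(preprocess(c)) for c in contributions]
    clusters = []  # lists of contribution indices; the first index is the cluster seed
    for i, vec in enumerate(vectors):
        for members in clusters:
            if cosine(vec, vectors[members[0]]) >= threshold:
                members.append(i)
                break
        else:
            clusters.append([i])
    return clusters


# Usage with three invented contributions.
contributions = [
    "Trust in doctors will decline",
    "Trust in doctors may decline sharply",
    "Telemedicine platforms expand access",
]
frequencies = word_frequencies(contributions)
groups = cluster(contributions)
```

As in TCL, such automatically generated groups would serve only as a starting point that the facilitator and the supporting team refine manually.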

Artificial Intelligence Affordances

Given the extensive knowledge base on macro-task crowdsourcing facilitation, we searched for AI manifestations by conducting a second literature search to create an initial AI manifestation mapping. By analyzing the macro-task crowdsourcing initiatives TCL and PSM regarding potential use-cases of AI-augmented facilitation, and gathering statements about potential use-cases for AI in facilitation from the six expert interviews, we were able to refine and extend our initial AI manifestation mapping. Therein, we searched for archetypes of manifestations that could lead to potential affordances. Table 8 lists the final seven affordances of AI for macro-task crowdsourcing facilitation and one example of how AI-augmented facilitation could be implemented in the case of each affordance.

Table 8.

Artificial Intelligence Affordances in Macro-Task Crowdsourcing Facilitation

| ID | Affordance Name | Description | Exemplary AI Augmentation |
|---|---|---|---|
| 1) | Contribution Assessment | AI affords in-depth analysis of the quality of workers’ contributions to identify valuable ideas and extract relevant input for further processing. | Semantical natural language processing to remove unnecessary information |
| 2) | Improvement Triggering | AI affords identification and nudging of non- or less-active workers towards higher participation and triggers improvement measures for inadequate contributions. | Nudging during contribution creation based on natural language understanding |
| 3) | Operational Assistance | AI affords support for workers through the whole process of contribution development, including the identification of relevant ideas, elaboration of (interim) results, and submission of the final contribution. | AI chat assistants to answer questions during the contribution creation process |
| 4) | Workflow Enrichment | AI affords the provision and integration of useful information and knowledge to a predefined workflow, enabling highly productive collaboration among workers. | Natural language understanding to identify mismatches between the facilitator’s proposed task and the workers’ contributions |
| 5) | Collaboration Guidance | AI affords collective guidance for workers during their collaboration on the platform in such a way that they will focus on a predefined goal relating to the overarching problem. | Sentiment detection to generate semantic embeddings of the workers’ contributions |
| 6) | Worker Profiling | AI affords analysis of the network of workers to track the skills and activity of individuals as well as to monitor the quality of their created contributions. | (Social) Network algorithms to generate activity reports from the crowdsourcing platform data |
| 7) | Decision-making Preparation | AI affords the aggregation of outcomes and the synthesis of relevant contributions and, therefore, creates a valuable foundation for decision-makers. | Summary generation algorithms to synthesize the free-text contributions of the workers |

In the following, we describe each of the affordances in detail. Thereby, we explain the relationship between the facilitator’s goal and AI within macro-task crowdsourcing. To further elaborate on the affordances, we highlight some AI manifestations found in the literature (step II), our two macro-task crowdsourcing initiatives (step IV), or our interviews (step V). These manifestations provide examples of what AI is perceived to afford within macro-task crowdsourcing facilitation.

1) Contribution Assessment. In bringing a macro-task crowdsourcing initiative to fruition, one of the biggest challenges facilitators face is dealing with the number of contributions made by workers (Blohm et al. 2013; Nagar et al. 2016) and “[understanding] all the results from a crowdsourcing exercise in a way that’s empirical and meaningful” (Expert 5). AI affords the analysis of contributions such that their quality can be assessed and valuable ideas or relevant content can be extracted. Facilitators could use AI to analyze the content of a contribution via semantic natural language processing (Gimpel et al. 2020) to determine its novelty or its similarity to other contributions. (Semi-)automated contribution assessments could decide whether each contribution brings the initiative one step closer to the goal (Haas et al. 2015; Nagar et al. 2016; Rhyn and Blohm 2017). This could involve removing unnecessary information to allow a better assessment by the facilitator further downstream (Expert 4, 6) or detecting outliers by assessing each contribution’s relevance to the topic at hand (Case PSM).

2) Improvement Triggering. In crowdsourcing, facilitators often face a 90-9-1 distribution, where only 1% of the workers create nearly all of the contributions (Troll et al. 2017). Since macro-task crowdsourcing heavily relies on active knowledge exchange and idea cross-fertilization between various workers (Gimpel et al. 2020), non- or low-active workers need to be triggered to contribute, thereby stimulating a better thematic discourse (Expert 2, 4). Yet, even if all workers contribute, their contributions may sometimes lack quality; ideas may lack originality or readability. AI affords recognition of individual contributions that are unoriginal or add no value (e.g., due to the existence of similar or identical contributions) (Hetmank 2013; Rhyn and Blohm 2017), and of workers who do not actively participate in the exercise (e.g., through lack of time or attention). Intelligent mechanisms such as personalized nudging (Expert 2, 4, 5) can improve behavior or quality (Chiu et al. 2014; Haas et al. 2015; Riedl and Woolley 2017). One approach would be to use natural language understanding to automatically notify workers during the creation of a contribution that theirs is similar to other available contributions or is not sufficiently comprehensive (Case PSM) – for example, by displaying a uniqueness score (Expert 3).
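To make the idea of a uniqueness score more tangible, the following minimal sketch scores a draft against existing contributions. It is an illustration under strong assumptions, not the approach used in Case PSM or proposed by Expert 3: it swaps real natural language understanding for a simple bag-of-words cosine similarity, and the function names (`uniqueness_score`, `nudge_message`), the message text, and the threshold of 0.3 are hypothetical.

```python
import math
import re
from collections import Counter
from typing import Optional, Sequence


def tokenize(text: str) -> Counter:
    """Lowercase the text and count its word tokens (a crude bag-of-words)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors (0.0 if either is empty)."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def uniqueness_score(draft: str, existing: Sequence[str]) -> float:
    """Return 1 minus the highest similarity between the draft and any existing contribution."""
    if not existing:
        return 1.0  # nothing to compare against, so the draft is trivially unique
    draft_vector = tokenize(draft)
    closest = max(cosine_similarity(draft_vector, tokenize(text)) for text in existing)
    return 1.0 - closest


def nudge_message(draft: str, existing: Sequence[str], threshold: float = 0.3) -> Optional[str]:
    """Return a nudge if the draft is too similar to existing contributions, else None.

    The threshold is an assumed value and would need calibration on real platform data.
    """
    score = uniqueness_score(draft, existing)
    if score < threshold:
        return f"Your draft resembles an existing contribution (uniqueness {score:.2f})."
    return None
```

In practice, the similarity function would likely be replaced by a semantic model so that paraphrases are also recognized; word overlap alone only catches near-duplicates.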

3) Operational Assistance. When creating a contribution, workers may experience technical difficulties or develop questions regarding idea formulation (Adla et al. 2011; Hosseini et al. 2015). Usually, workers will either stop working on their contributions or contact the facilitator, who then has to step in and solve the problem (Adla et al. 2011), consuming the worker’s and the facilitator’s precious time. AI could identify the cause of process-related or technical problems and offer assistance. Missing information, which could hinder the workflow, could be identified by AI and provided at the appropriate time (Chittilappilly et al. 2016; Seeber et al. 2016). Robotic process automation (also referred to as intelligent automation technologies) could assign workers appropriate tasks based on the worker’s domain knowledge, previous crowdsourcing experience (Expert 4), or a lack of contributions in a specific task (Case PSM). Deep learning algorithms could help translate contributions or overcome language barriers (Expert 1). Pre-trained AI chat assistants could interactively explain the contribution creation process to the workers on a step-by-step basis and answer their questions accordingly (Tavanapour and Bittner 2018a).

4) Workflow Enrichment. To use the workers’ time as effectively as possible, facilitators break down the goal of an exercise into smaller tasks (Vukovic and Bartolini 2010). They efficiently integrate these tasks into an effective workflow supported by a crowdsourcing platform (Hetmank 2013). This is usually accompanied by a reduction in special attention to the needs of individual workers. AI could suggest that the facilitator integrate additional or new information into the workflow (Chittilappilly et al. 2016; Riedl and Woolley 2017) or adjust the proposed next steps (Xiang et al. 2018). This could lead to a modified workflow or improved effectiveness. Natural language understanding could identify mismatches between the facilitator’s proposed task and the workers’ contributions, which may be the result of ambiguous task descriptions (Case PSM). Depending on the extent of the worker’s domain knowledge, the description of the task could be paraphrased or extended using natural language generation (Expert 1, 5). If workers do not find appropriate resources supporting their idea, natural language processing could identify the topic and the facilitator could then refer the worker to relevant data or scientific sources (Expert 6).

5) Collaboration Guidance. Facilitation, in a narrow sense, involves fostering collaboration and interdisciplinary exchange of information (Expert 2). However, such thematic exchange can go astray and move away from the exercise’s actual goal, despite facilitative support. Therefore, facilitators have to decide whether the existing discourse should be maintained or whether the workers should be guided in a different direction (Xiang et al. 2018; Zheng et al. 2017). AI affords the evaluation of workers’ moods and the direction of the discussion with reference to the content. This provides the facilitator with a better understanding of the current atmosphere among the workers and the thematic focus of their collaboration. On the one hand, automated text mining, like sentiment detection, could generate semantic embeddings of the contributions (Expert 4) (Nagar et al. 2016), which could help to assess the maturity of the collaboration (Gimpel et al. 2020; Qiao et al. 2018). On the other hand, word2vec algorithms could focus the content of the discussion and uncover unprocessed areas (Expert 2, 3, 5) and frequently discussed topics (Case PSM), or help the facilitator to detect emerging topics (Case TCL).

6) Worker Profiling. Experienced facilitators mobilize the varied skills and expertise of the workers participating in an exercise (Tazzini et al. 2013). However, as the number of workers increases, getting to know one another becomes more difficult, particularly in an online crowdsourcing environment. Hence, facilitators may lack important information about workers, such as their previous experience in crowdsourcing or domain-specific skillsets. AI affords the use of interaction among workers (Dissanayake et al. 2014), as well as information on their backgrounds (Bozzon et al. 2013; Tazzini et al. 2013), to better assess the workers’ activity and the characteristics of their collaboration (Gimpel et al. 2020). Natural language generation could be used to process information from the worker’s publications or the worker’s social media profile to create a summary of the individual’s background (Expert 4). However, the rules of platform governance, as defined by the initiative stakeholders, must be upheld in any such investigations in order to avoid ethical concerns on the part of the workers (Alkharashi and Renaud 2018; Kocsis and Vreede 2016; Schlagwein et al. 2019). Alternatively, activity reports could be generated from the crowdsourcing platform via the use of (social) network algorithms (Case TCL) or natural language processing (Expert 1), leading to fully automated dashboard generation for tracking the workers’ activity (Expert 6).
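As a rough illustration of how an activity report might be derived from platform data with simple network measures, the sketch below counts replies written (out-degree), replies received (in-degree), and distinct interaction partners per worker. The input format, a list of `(author, target)` reply pairs, and the function name `activity_report` are assumptions made for this example; they do not describe the data model of any platform used in Case TCL.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def activity_report(replies: Iterable[Tuple[str, str]]) -> Dict[str, Dict[str, int]]:
    """Summarize per-worker activity from (author, target) reply pairs.

    Each pair is an assumed export format: `author` replied to a contribution by `target`.
    """
    written = defaultdict(int)   # out-degree: replies a worker wrote
    received = defaultdict(int)  # in-degree: replies a worker received
    partners = defaultdict(set)  # workers someone interacted with in either direction

    for author, target in replies:
        written[author] += 1
        received[target] += 1
        partners[author].add(target)
        partners[target].add(author)

    workers = sorted(set(written) | set(received))
    return {
        worker: {
            "replies_written": written[worker],
            "replies_received": received[worker],
            # Self-replies do not count as interaction with another worker.
            "distinct_partners": len(partners[worker] - {worker}),
        }
        for worker in workers
    }


if __name__ == "__main__":
    sample = [("ana", "ben"), ("ben", "ana"), ("cy", "ana")]
    for worker, stats in activity_report(sample).items():
        print(worker, stats)
```

Such counts could feed a facilitator dashboard, subject to the platform governance rules discussed above.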

7) Decision-making Preparation. After one or more exercises, the workers will have provided several contributions. Facilitators then have to aggregate and synthesize these contributions into a meaningful foundation for decision-makers, such as a final report (Chan et al. 2016; Gimpel et al. 2020). AI affords support in decision-making preparation and could provide a synthesis, such as a decision template or recommendation for action to the requestors (Hetmank 2013) (Expert 2). Neural networks that have been specifically trained using vocabulary from the exercise’s domain could cluster the contributions and highlight unique ideas (Case TCL and PSM) (Expert 2, 5). Natural language understanding could be used to perform question answering based on the contributions, which could help a facilitator interact with the contributions and better understand the workers’ ideas, even after the exercise and without contacting the workers (Expert 3). Furthermore, summary generation algorithms could comprehensively synthesize the clustered contributions in ways that are meaningful for the decision-maker (Case PSM) (Expert 1, 2).

Despite the immense potential of AI in macro-task crowdsourcing facilitation, as reflected by the seven affordances, the interviewed experts stressed that researchers and facilitators must carefully consider which facilitation activity should be enabled or performed by AI (Expert 1, 2, 3, 4, 5). AI is prone to biases (Expert 4) and could systematically discriminate against specific workers (e.g., a natural language processing contribution evaluation algorithm could systematically down-rate contributions from workers with dyslexia). On top of that, the unreflective use of AI could lead facilitators to blindly believe in the underlying model and thereby reduce the overall level of goal achievement. Experts also argued that AI has limitations in understanding ethical and cultural factors and cannot fully imitate the human interaction that facilitators provide (Expert 1, 5, 6): “I think it is really nice to have a name and a face to identify with a person who is communicating and asking you to do these things” (Expert 5). Furthermore, facilitators should also consider the effort and difficulties involved in development: “The art of AI is often not to solve the task, but to explain and teach the AI the task” (Expert 3). Ultimately, the ill-considered use of AI in macro-task crowdsourcing could induce much bias in the outcome of an exercise (Expert 4) or decrease the workers’ participation and performance (Expert 6).

Even after a full review, we could not establish a hierarchy among the affordances. Nonetheless, an interlocking of individual affordances cannot be ruled out. To better illustrate the interdependencies of the affordances, the individual facilitation activities, and AI functions, Table 9 shows the revised version of the AI manifestation mapping. This table records all AI manifestations (i.e., specific action possibilities) that occurred during our research process. Every AI manifestation therein was observed in the literature (L), in our observed crowdsourcing initiatives (C), or in our interviews (I), and describes a possible shape of the corresponding affordance (1) to 7)) concerning a facilitation activity or AI function.

Table 9.

Revised Artificial Intelligence Manifestation Mapping

| Facilitation Activity | Perceiving | Recognizing | Reasoning | Decision-making | Predicting | Generating | Acting |
|---|---|---|---|---|---|---|---|
| Contribution Evaluation | 1) L C I | 1) L I | 1) L C I | 1) L | 2) L | | |
| Participation Encouragement | 2) L C I | | | | | | |
| Worker Motivation | 2) L C | | | | | | |
| Performance Monitoring | 6) L C | 6) L C I | 2) L I | | | | |
| Quality Control | 6) L I | 6) L I | 6) L C I | 2) L C | | | |
| Contribution Support | 3) L C I | 3) L I | | | | | |
| Crowd Coordination | 3) C | 3) L I | 3) L | 3) L I | | | |
| Task Communication | 4) C | 4) L I | | | | | |
| Task Design | 4) L I | 4) L | 4) L I | | | | |
| Tool Usage & Integration | 4) I | | | | | | |
| Workflow Design & Selection | 4) L | 4) L | | | | | |
| Crowd Moderation | 5) L C I | 5) L C I | 5) L I | 5) L I | | | |
| Culture Development | 5) I | 5) I | 5) L | | | | |
| Goal Orientation | 5) L C I | 5) L I | | | | | |
| Risk Management | 5) C I | 5) L | | | | | |
| Contribution Aggregation | 7) L C I | 7) L C I | 7) L I | | | | |
| Decision Making | 7) L C I | 7) L | | | | | |

Please note the following abbreviations. Affordances: 1) Contribution Assessment; 2) Improvement Triggering; 3) Operational Assistance; 4) Workflow Enrichment; 5) Collaboration Guidance; 6) Worker Profiling; 7) Decision-making Preparation. Manifestations: L: observed in literature; C: observed in crowdsourcing initiative; I: observed in interviews.
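For readers who want to work with the mapping programmatically, the sketch below encodes which affordances appear for each facilitation activity in Table 9 and inverts that relation. It is only an illustrative data structure: it deliberately omits the AI function columns and the L/C/I evidence markers, and the names `ACTIVITY_TO_AFFORDANCES` and `activities_by_affordance` are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List

# Affordance IDs and names as listed in Table 8.
AFFORDANCES: Dict[int, str] = {
    1: "Contribution Assessment",
    2: "Improvement Triggering",
    3: "Operational Assistance",
    4: "Workflow Enrichment",
    5: "Collaboration Guidance",
    6: "Worker Profiling",
    7: "Decision-making Preparation",
}

# Affordance IDs that appear in each facilitation activity's row of Table 9.
ACTIVITY_TO_AFFORDANCES: Dict[str, List[int]] = {
    "Contribution Evaluation": [1, 2],
    "Participation Encouragement": [2],
    "Worker Motivation": [2],
    "Performance Monitoring": [2, 6],
    "Quality Control": [2, 6],
    "Contribution Support": [3],
    "Crowd Coordination": [3],
    "Task Communication": [4],
    "Task Design": [4],
    "Tool Usage & Integration": [4],
    "Workflow Design & Selection": [4],
    "Crowd Moderation": [5],
    "Culture Development": [5],
    "Goal Orientation": [5],
    "Risk Management": [5],
    "Contribution Aggregation": [7],
    "Decision Making": [7],
}


def activities_by_affordance(mapping: Dict[str, List[int]]) -> Dict[int, List[str]]:
    """Invert the mapping: for each affordance ID, list the activities it supports."""
    inverted: Dict[int, List[str]] = defaultdict(list)
    for activity, affordance_ids in mapping.items():
        for affordance_id in affordance_ids:
            inverted[affordance_id].append(activity)
    return dict(inverted)


if __name__ == "__main__":
    for affordance_id, activities in sorted(activities_by_affordance(ACTIVITY_TO_AFFORDANCES).items()):
        print(f"{affordance_id}) {AFFORDANCES[affordance_id]}: {', '.join(activities)}")
```

The inverted view makes the interlocking visible: Improvement Triggering, for instance, shares the activities Contribution Evaluation, Performance Monitoring, and Quality Control with other affordances.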