Abstract

The increasing frequency and magnitude of viral outbreaks in recent decades, epitomized by the COVID-19 pandemic, has resulted in an urgent need for rapid and sensitive diagnostic methods. Here, we present a methodology for virus detection and identification that uses a convolutional neural network to distinguish between microscopy images of fluorescently labeled intact particles of different viruses. Our assay achieves labeling, imaging, and virus identification in less than 5 min and does not require any lysis, purification, or amplification steps. The trained neural network was able to differentiate SARS-CoV-2 from negative clinical samples, as well as from other common respiratory pathogens such as influenza and seasonal human coronaviruses. We were also able to differentiate closely related strains of influenza, as well as SARS-CoV-2 variants. Additional and novel pathogens can easily be incorporated into the test through software updates, offering the potential to rapidly utilize the technology in future infectious disease outbreaks or pandemics. Single-particle imaging combined with deep learning therefore offers a promising alternative to traditional viral diagnostic and genomic sequencing methods and has the potential for significant impact.

Keywords: SARS-CoV-2, influenza, viral diagnostics, fluorescence microscopy, machine learning

Introduction

The SARS-CoV-2 betacoronavirus has infected hundreds of millions of people since its emergence, resulting in numerous deaths and causing worldwide social and economic disruption. The emergence of a number of variants of concern (VOCs) that pose an increased risk to global public health by affecting transmission, associated disease severity, or vaccine efficacy has further complicated response efforts.

Current SARS-CoV-2 diagnostic methods include nucleic acid amplification tests, antigen detection, and serology tests.1 Reverse transcriptase polymerase chain reaction (RT-PCR) is considered the gold standard for diagnosis; however, RT-PCR takes several hours to provide a result, is restricted to specialized laboratories (as it requires viral lysis and RNA extraction), and can be limited by supply chain issues. Isothermal nucleic acid amplification methods, such as reverse transcription loop-mediated isothermal amplification (RT-LAMP), offer a promising alternative that does not require thermal cycling and can provide results within an hour;2−7 however, like RT-PCR, these methods remain subject to supply chain issues. Lateral-flow immunochromatographic assays using gold nanoparticles as a colorimetric label to detect SARS-CoV-2-specific antigens provide a rapid platform for point-of-contact virus detection but can have lower sensitivities.8 Identification of viral strains or variants still relies largely on sequencing of the viral genome. There is thus an urgent need for new viral detection approaches, particularly ones that can be deployed in non-laboratory settings.

In previously published work, we described a robust method to rapidly label enveloped virus particles using a solution of a divalent cation (such as Ca2+), short DNAs of non-specific sequence, and a particle with a negatively charged lipid bilayer, and we suggested that the cations facilitate an interaction between the negatively charged polar heads of the viral lipid membrane and the negatively charged phosphates of the nucleic acid.9 By including a fluorophore on the DNAs, we have been able to easily generate bright fluorescent particles for every enveloped virus tested to date (multiple strains of influenza A, influenza B, baculovirus, respiratory syncytial virus (RSV), infectious bronchitis virus (IBV), human coronaviruses OC43, HKU1, and NL63, and SARS-CoV-2). We previously characterized the labeling method, showing that virus-specific signals were observed only when the cation, virus, and fluorescent DNA were all present (signals were absent in controls where any one of these components was excluded), that cation-labeled virus particles could be co-stained with virus-specific antibodies, and that the size of the labeled particles closely matched that observed in electron microscopy images of the virus.9 Together, these findings give us confidence that this method specifically labels viruses.

To address the need for new viral detection approaches, we have used this labeling method to develop a diagnostic test that relies on the detection of intact virus particles using wide-field fluorescence imaging. Our method starts with the near-instantaneous fluorescence labeling of viruses in a sample; we subsequently surface-immobilize labeled particles, collect diffraction-limited images containing thousands of labeled particles, and finally use image analysis and machine learning to identify different viruses in biological and clinical samples (Figure 1A). Our approach exploits the fact that distinct virus types and strains have differences in surface chemistry, size, and shape, which in turn affect the fluorophore distribution and density over the surface of different viruses. Such differences can be captured by convolutional neural networks (CNNs),10,11 which have been used previously to classify super-resolved microscopy images of heterogeneous virus populations into particle classes with distinct structural features,12 and to detect virus particles in transmission electron microscopy images.13

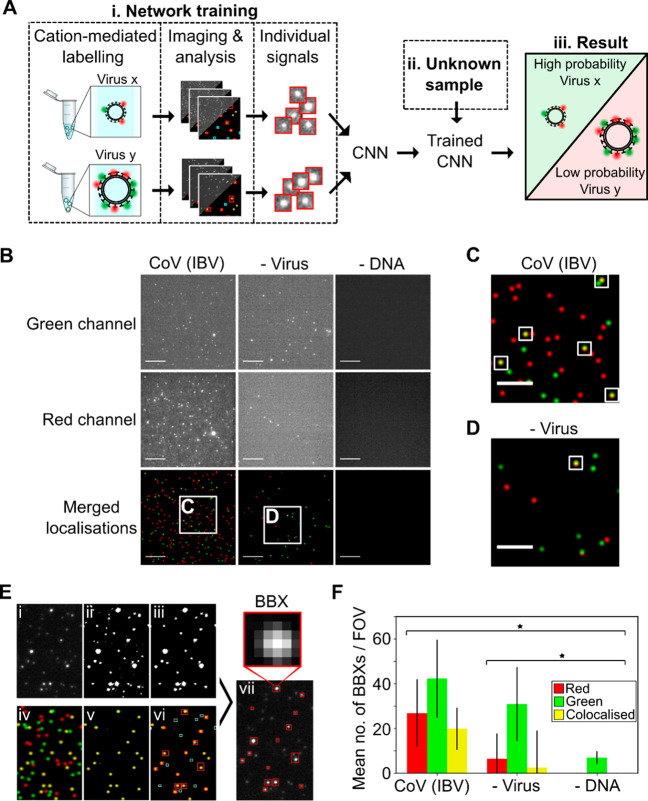

Figure 1.

A fluorescent labeling and imaging strategy to detect viruses. (A) Overview: (i) Viruses were labeled and imaged. Individual signals were isolated, and a convolutional neural network (CNN) was trained to exploit differences in the features of different viruses to identify them. (ii) Signals from unknown samples can then be fed into the trained CNN to allow (iii) virus classification. (B) Representative fields of view (FOVs) of infectious bronchitis virus (CoV (IBV)). 1 × 10⁴ PFU/mL virus was labeled with 0.23 M SrCl2, 1 nM Cy3 (green) DNA, and 1 nM Atto647N (red) DNA before being imaged. Green DNA was observed in the green channel (top panels) and red DNA in the red channel (middle panels); merged red and green localizations are shown in the lower panels. Scale bar, 10 μm. A negative control where DNA was replaced with water is included. (C, D) Zoomed-in images from (B); white boxes represent examples of colocalized particles. Scale bar, 5 μm. (E) Segmentation process: (i) Cropped FOV from the red channel. (ii) Intensity filtering applied to (i) to produce a binary image. (iii) Area filtering applied to (ii) to include only 10–100 pixel objects. (iv) Location image associated with (i). (v) Colocalized signals in the location image. (vi) Bounding boxes (BBXs) found from (iii) drawn onto (v). Non-colocalized objects (cyan) are rejected. (vii) Colocalized objects (red) are drawn over (i). Scale bar, 10 μm. (F) Plot of mean number of BBXs per FOV for labeled CoV (IBV) and the negative controls. Error bars represent the standard deviation of 81 FOVs from a single slide. Statistical significance was determined by one-way ANOVA, *P = 6.01 × 10⁻²².

We have shown that we can use this methodology to differentiate a range of viruses in oro- and nasopharyngeal swabs, with high overall sample accuracies of 98.0% (using 51 clinical samples on multiple different versions of the trained network) and 97.1% (using 104 clinical samples on a single trained network). The use of universal, non-specific chemistry to fluorescently label all viral particles in a sample, combined with general-use widefield microscopy, means that the assay can be extended to additional pathogens using a simple software upgrade, without changes to the labeling reaction or hardware. We therefore see an opportunity for our testing platform to potentially make an impact not only during pandemics but also in the future as a surveillance platform for new emerging pathogens.

Results and Discussion

Labeled Virus Particles Can Be Efficiently Detected with TIRF Microscopy

To demonstrate our ability to label, immobilize, and image virus particles, we initially used infectious bronchitis virus (IBV), an avian coronavirus (CoV). We labeled IBV using a divalent cation (here, Sr2+, which performs very similarly to Ca2+; see below) and a mixture of green and red fluorescent DNAs (labeled with Cy3 or Atto647N fluorophores, respectively), immobilized particles on a chitosan-coated glass slide, and imaged particles using total-internal-reflection fluorescence microscopy (TIRF) (Sup.Figure 1A). Fluorescent labeling was achieved within seconds via a single-step addition of labeling mixture (see Experimental Methods), after which the viruses were immediately immobilized. The resulting images contained particles with either single green or red fluorescence signals (shown as green and red particles), as well as colocalized green and red fluorescence signals (shown as yellow particles) (Figure 1B–D). Efficient virus labeling was achieved using either CaCl2 (Sup.Figure 1B,C) or SrCl2 (Figure 1B–D), although both solutions resulted in a number of colocalized signals in the virus-negative controls, likely due to random coincidence or cation-mediated clustering of DNAs on the surface. Omission of DNAs resulted in complete loss of the fluorescent signal (Figure 1B, right panels).

Prior to machine learning, the virus images were pre-processed to isolate individual signals into bounding boxes (BBXs) by segmenting each field of view (FOV) through adaptive filtering (Figure 1E). We preferred this BBX-based approach to classifying full FOVs because it is insensitive to features such as virus concentration or variability in the background and illumination pattern. The raw FOVs from the red channel (Figure 1E-i) were converted into a binary format (Figure 1E-ii), and area filtering was used to discard objects with a total area (i.e., width × length) smaller than 10 pixels (∼0.14 μm² at 117 nm per pixel; single fluorophores) or larger than 100 pixels (∼1.37 μm²; aggregates, cells, or cell fragments) (Figure 1E-iii). In parallel, to enrich our sampling for viruses and exclude signals not arising from virus particles, the location image (showing the green, red, and yellow signals from both channels; Figure 1E-iv) was used to identify colocalized signals (Figure 1E-v). This information was then combined with the objects identified in the filtered binary image (Figure 1E-iii) to reject signals not meeting the colocalization condition (Figure 1E-vi; cyan boxes) and retain those meeting it (Figure 1E-vi–vii; red boxes). The segmentation was fully automated, allowing each FOV to be processed in ∼2 s. In this experiment, the mean number of colocalized BBXs per FOV was ∼6-fold higher when IBV was present than when it was absent (Figure 1F), confirming that our preprocessing step captures virus-specific signals.
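The segmentation steps described above (intensity thresholding, area filtering, and colocalization filtering) can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' pipeline: all function names are hypothetical, a fixed global threshold stands in for the adaptive filtering used in the study, object area is taken as the pixel count of each 4-connected object, and the 117 nm pixel size is an assumed value.

```python
from collections import deque

PIXEL_NM = 117  # assumed camera pixel size in nm (hypothetical for this sketch)

def threshold(image, level):
    """Intensity filtering: turn a grayscale image (list of rows) into a binary mask."""
    return [[1 if v >= level else 0 for v in row] for row in image]

def connected_components(mask):
    """Label 4-connected foreground objects; return one list of (row, col) pixels per object."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    objects = []
    for r in range(h):
        for c in range(w):
            if mask[r][c] and not seen[r][c]:
                queue, pixels = deque([(r, c)]), []
                seen[r][c] = True
                while queue:  # breadth-first flood fill
                    y, x = queue.popleft()
                    pixels.append((y, x))
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
                objects.append(pixels)
    return objects

def bounding_box(pixels):
    """Return (min_row, min_col, max_row, max_col) enclosing an object."""
    rows = [p[0] for p in pixels]
    cols = [p[1] for p in pixels]
    return min(rows), min(cols), max(rows), max(cols)

def segment(image, level, coloc_mask, min_area=10, max_area=100):
    """Keep BBXs of objects that pass the area filter and overlap a colocalized signal."""
    kept = []
    for pixels in connected_components(threshold(image, level)):
        if not (min_area <= len(pixels) <= max_area):
            continue  # too small (single fluorophores) or too large (aggregates, cells)
        if any(coloc_mask[y][x] for y, x in pixels):
            kept.append(bounding_box(pixels))  # colocalized object: retained
        # non-colocalized objects are silently rejected
    return kept

def area_um2(n_pixels, pixel_nm=PIXEL_NM):
    """Convert an area in pixels to square micrometres."""
    return n_pixels * (pixel_nm / 1000) ** 2
```

Note that an area in pixels converts to an area in μm² by multiplying by the squared pixel size, which is why 10 pixels corresponds to roughly 0.14 μm² (not a length) under the assumed 117 nm pixel.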

Positive and Negative Virus Images Can Be Distinguished Using Deep Learning

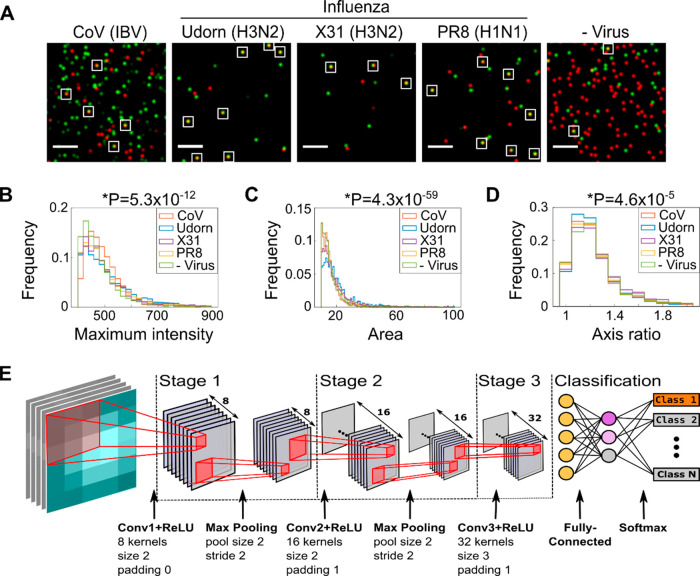

Having shown that we could efficiently image virus samples and isolate the resulting signals into BBXs, we hypothesized that a custom-built CNN could differentiate between signals observed in virus-positive and virus-negative samples, as well as between images of different viruses. To explore this, we fluorescently labeled and imaged IBV; three laboratory-grown influenza A strains, H3N2 A/Udorn/72 (Udorn), H3N2 A/Aichi/68 (X31), and H1N1 A/PR/8/34 (PR8); and a virus-negative control consisting of virus-free cell culture media (Figure 2A). The viruses are similar in size and shape and cannot be distinguished by eye in diffraction-limited microscope images of fluorescently labeled particles (Sup.Figure 2). After image segmentation and examination of the properties of the resulting BBXs, however, we observed that the four viruses exhibited small, yet statistically significant, differences in maximum pixel intensity, area, and semimajor-to-semiminor axis ratio within the BBXs (Figure 2B–D); e.g., IBV appears brighter than influenza, whereas Udorn occupies a larger area than the other viruses.
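The three BBX properties compared above can be computed from a single image patch. The sketch below is an assumption about how such features might be derived, not the authors' code: the function name is hypothetical, a fixed threshold defines the object, and the axis ratio uses one common moment-based definition (square root of the ratio of the eigenvalues of the pixel-coordinate covariance matrix).

```python
import math

def bbx_features(patch, level):
    """Return (max pixel intensity, area in pixels, semimajor/semiminor axis ratio) for one BBX."""
    pixels = [(y, x) for y, row in enumerate(patch) for x, v in enumerate(row) if v >= level]
    if not pixels:
        raise ValueError("no pixels above threshold in this BBX")
    max_intensity = max(v for row in patch for v in row)
    area = len(pixels)
    # Centroid and second-order central moments of the above-threshold pixel coordinates
    my = sum(y for y, _ in pixels) / area
    mx = sum(x for _, x in pixels) / area
    syy = sum((y - my) ** 2 for y, _ in pixels) / area
    sxx = sum((x - mx) ** 2 for _, x in pixels) / area
    sxy = sum((y - my) * (x - mx) for y, x in pixels) / area
    # Eigenvalues of the 2x2 covariance matrix; their square roots scale with the semi-axes
    half_trace = (sxx + syy) / 2
    disc = math.sqrt(((sxx - syy) / 2) ** 2 + sxy ** 2)
    lam_major, lam_minor = half_trace + disc, half_trace - disc
    ratio = math.sqrt(lam_major / lam_minor) if lam_minor > 0 else math.inf
    return max_intensity, area, ratio
```

A round spot gives a ratio near 1, while an elongated particle gives a larger value; a one-pixel-wide line has no minor axis and returns infinity.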

Figure 2.

Design of a convolutional neural network to classify imaged viruses. (A) Representative FOVs of fluorescently labeled coronavirus (CoV (IBV)), two strains of H3N2 influenza (A/Udorn/72 (Udorn) and A/Aichi/68 (X31)), an H1N1 influenza strain (A/PR/8/34 (PR8)), and a negative control in which virus was substituted with allantoic fluid. The samples were immobilized and labeled with 0.23 M SrCl2, 1 nM Cy3 (green) DNA, and 1 nM Atto647N (red) DNA before being imaged. Merged red and green localizations are shown; examples of colocalizations are highlighted with white boxes. Scale bar, 10 μm. (B–D) Normalized frequency plots of the maximum pixel intensity, area, and semimajor-to-semiminor axis ratio within the BBXs of the four different viruses. Values were taken from 81 FOVs from a single slide for each virus. Statistical significance was determined by one-way ANOVA; P values are shown above the graphs. (E) Illustration of the 15-layer shallow convolutional neural network. Following the input layer (inputs comprising BBXs from the segmentation process), the network consists of three convolutional stages (stages 1–3). Stages 1 and 2 each contain a ReLU layer to introduce non-linearity, a batch normalization layer (not shown), and a max-pooling layer, while stage 3 lacks a max-pooling layer. The classification stage has a fully connected layer and a softmax layer that converts the output of the previous layer into a normalized probability distribution, allowing the input to be classified.
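The layer layout in Figure 2E can be written down as a list and traced for tensor shapes. This is a sketch under several assumptions not given in the text: the filter counts (8, 16, 32), 3×3 convolutions with "same" padding, 2×2 stride-2 pooling, a 16×16 single-channel BBX input, and a final classification-output layer (which brings the count to 15). The function names are hypothetical.

```python
INPUT_SIZE = (16, 16, 1)  # assumed BBX patch size: (height, width, channels)

def build_layers(filters=(8, 16, 32), n_classes=2):
    """Return a hypothetical 15-layer list: input, three conv stages, classification stage."""
    layers = [("input", None)]
    for stage, n in enumerate(filters, start=1):
        layers += [("conv3x3", n), ("batchnorm", None), ("relu", None)]
        if stage < len(filters):
            layers.append(("maxpool2x2", None))  # the last stage has no max pooling
    layers += [("fullyconnected", n_classes), ("softmax", None), ("classification", None)]
    return layers

def trace_shapes(layers, input_size=INPUT_SIZE):
    """Track (height, width, channels) after each layer."""
    h, w, c = input_size
    shapes = []
    for kind, arg in layers:
        if kind == "conv3x3":
            c = arg  # 'same' padding keeps height and width unchanged
        elif kind == "maxpool2x2":
            h, w = h // 2, w // 2  # stride-2 pooling halves each spatial dimension
        elif kind == "fullyconnected":
            h, w, c = 1, 1, arg  # one score per class
        shapes.append((kind, (h, w, c)))
    return shapes
```

With these assumed settings, a 16×16 patch is reduced to a 4×4×32 feature map before the fully connected layer produces one score per class.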

These differences were further supported by super-resolution imaging of cation-labeled virus particles. Fluorescence-based super-resolution microscopy allowed us to take both diffraction-limited and high-resolution images of the same fields of view, providing a direct comparison between the signals isolated into BBXs for machine learning and their super-resolved counterparts. We immobilized biotinylated viruses on PEGylated coverslips before labeling them with CaCl2 and a DNA conjugated to a photoswitchable Alexa647 dye. During imaging, the fluorescent signals from the Alexa647 DNAs on the virus particles were recorded, and each resulting localization was precisely fitted to reconstruct a super-resolved image. Cluster analysis of the super-resolved localizations revealed that the fluorescent signals observed in diffraction-limited images of labeled samples correspond to particles with the expected size and shape of virions, and that different virus classes appear to differ subtly in labeling density, area, and shape (Sup.Figure 3). These small differences, as well as more abstract image features such as pixel correlations, can be exploited by deep learning algorithms to classify the viruses.

To classify the different samples, we constructed a 15-layer CNN (Figure 2E; see legend for details). We started by imaging IBV and a virus-negative control consisting of only SrCl2 and DNA. Each sample was imaged independently four times over a three-day period. Imaging over three days captured potential heterogeneity in image acquisition (such as small day-to-day differences in temperature), improving the ability of the trained models to classify future datasets. The BBXs obtained for each sample were then randomly divided into a training dataset (70%) and a validation dataset (30%). The training dataset, comprising ∼3000 BBXs per sample, was used to train the CNN to differentiate IBV from negative signals.
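The random 70/30 division described above can be done separately for each sample, so that both classes keep the same proportions in training and validation. A minimal sketch, assuming BBXs are stored per class in a dictionary; the function name and the fixed seed are hypothetical choices for reproducibility.

```python
import random

def split_bbxs(bbxs_by_class, train_fraction=0.7, seed=0):
    """Randomly split each class's BBXs into (training, validation) lists of (bbx, label)."""
    rng = random.Random(seed)  # seeded generator so the split is reproducible
    train, val = [], []
    for label, items in bbxs_by_class.items():
        items = list(items)
        rng.shuffle(items)
        n_train = round(train_fraction * len(items))
        train += [(x, label) for x in items[:n_train]]
        val += [(x, label) for x in items[n_train:]]
    return train, val
```

Splitting within each class (rather than over the pooled data) guarantees that the validation set is balanced whenever the input classes are.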

The trained network was validated using the remaining 30% of the data, which the network had never seen. The first validation data point was at 50% accuracy (as expected for completely random classification of objects into two categories); validation accuracy then increased rapidly as the network learned the most obvious features, and more slowly as the number of iterations increased (Sup.Figure 4A). The loss function decreased correspondingly (Sup.Figure 4B). The entire training and validation process took 12 min to complete (Sup.Figure 4C).

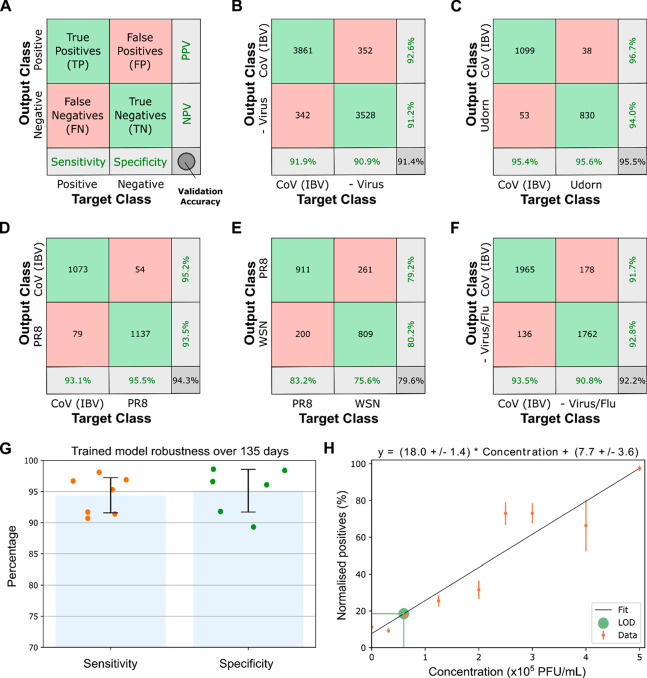

Results of the network validation are shown as a confusion matrix, commonly used to visualize performance measures for classification problems (Figure 3A). The rows correspond to the predicted class (output class), the columns to the true class (target class), and the bottom-right cell represents the overall validation accuracy (hereafter, accuracy) of the model across all classified particles. For IBV vs negative, the trained network differentiated positive and negative samples with high accuracy (91.4%), sensitivity (91.9%), and specificity (90.9%) (Figure 3B). Of note, these values refer to the identification of single virus particles and not the whole sample; the probability of correctly identifying a sample containing hundreds or thousands of virus particles is therefore higher (see later).
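The per-BBX measures reported in the confusion matrices follow directly from its four counts. The sketch below computes them and adds an idealized illustration of why sample-level accuracy exceeds per-particle accuracy: if each BBX were classified independently with probability p of being correct, a simple majority over n BBXs is correct with a binomial tail probability. The independence assumption and majority voting are illustrative only; the study classifies samples with a chi-squared test, and the function names are hypothetical.

```python
import math

def metrics(tp, fn, fp, tn):
    """Per-BBX performance measures from a 2x2 confusion matrix."""
    return {
        "accuracy": (tp + tn) / (tp + fn + fp + tn),
        "sensitivity": tp / (tp + fn),  # true positive rate
        "specificity": tn / (tn + fp),  # true negative rate
        "ppv": tp / (tp + fp),          # positive predictive value
        "npv": tn / (tn + fn),          # negative predictive value
    }

def majority_correct(n, p):
    """P(more than half of n independent BBXs are correct), each correct with 0 < p < 1.

    Binomial terms are evaluated in log space to avoid overflow for large n.
    """
    total = 0.0
    for k in range(n // 2 + 1, n + 1):
        log_pmf = (math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
                   + k * math.log(p) + (n - k) * math.log1p(-p))
        total += math.exp(log_pmf)
    return total
```

For example, `majority_correct(101, 0.914)` is essentially 1, whereas a single BBX is correct only 91.4% of the time, which is the intuition behind the higher sample-level accuracies reported later.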

Figure 3.

Network validation results for laboratory-grown virus strains. (A) Network validation results shown as a confusion matrix: rows, predicted class (output class); columns, true class (target class); right column, positive and negative predictive values (percentages of BBXs in each predicted class that are correctly and incorrectly classified); bottom row, sensitivity and specificity (percentages of BBXs in each true class that are correctly and incorrectly classified). (B) Confusion matrix of CoV (IBV) positive and negative samples. (C, D) Confusion matrices of CoV (IBV) vs influenza Udorn or PR8. (E) Confusion matrix of influenza PR8 vs influenza WSN. (F) Confusion matrix of CoV (IBV) vs a pooled dataset of the virus-negative control and three influenza A strains. (G) A trained network is robust over significant time. The network was trained on data from images of the virus IBV and allantoic fluid as a negative control. Each data point (orange for sensitivity; green for specificity) corresponds to the classification result for signals detected at different dates over a period of 135 days. Error bars represent standard deviation. (H) Defining the limit of detection for accurate machine learning classification. Increasing concentrations of IBV were labeled and imaged, and the resulting images were fed into the trained network. The number of normalized positive particles (positive particles/all particles) increased linearly with increasing virus concentration. Error bars represent standard deviation. The limit of detection (LOD) was determined to be 6 × 10⁴ PFU/mL, with 99.85% certainty.

Efficient Classification of Different Virus Strains across Optical Systems Using Deep Learning

Next, we tested the network’s ability to distinguish between different virus types and strains by training it on BBXs obtained (as described above) from images of IBV and the influenza Udorn, X31, PR8, and H1N1 A/WSN/33 (WSN) strains. The network easily distinguished between the coronavirus and influenza, with a validation accuracy of 95.5% for IBV vs Udorn (Figure 3C) and 94.3% for IBV vs PR8 (Figure 3D). The network was also able to differentiate between closely related strains of influenza (WSN vs PR8), albeit with a lower accuracy of 79.6% (Figure 3E), perhaps reflecting the greater similarity between H1N1 strains. Because WSN and PR8 were both grown in the same cell line (MDCK cells), the ability to distinguish them indicates that our classification is not host-cell dependent. The network was also able to distinguish between IBV and a pooled dataset consisting of the virus-negative control and three influenza strains (92.2%) (Figure 3F) and, importantly, to distinguish three viruses from each other in a multi-class experiment (the coronavirus IBV and two influenza strains, PR8 and WSN; 81.9%) (Sup.Figure 5).

To demonstrate the general applicability of our approach, we performed similar experiments using a second optical system (a Zeiss Elyra 7 with a 63× objective rather than an ONI Nanoimager with a 100× objective). We compared two strains of influenza (WSN and Udorn) with negative samples lacking virus, and with each other, and were able to distinguish the samples with accuracies of ∼74 to 78% (Sup.Figure 6), indicating that virus classification is not tied to particular imaging conditions (e.g., exposure, illumination) or microscope type (e.g., magnification, numerical aperture). We also showed that a trained network returned robust results over a long period without re-training, with no decrease in sensitivity or specificity over 135 days (Figure 3G).

We estimated the limit of detection (LOD) of our assay by testing the ability of the network to accurately detect decreasing concentrations of IBV, WSN, and SARS-CoV-2 (Figure 3H and Sup.Figure 7). Training was performed on laboratory-propagated virus samples of known titer, and the number of BBXs in each class was normalized by the total number of BBXs in the sample (to counter variations between samples). Images were analyzed by the trained network, and the number of particles classified as positive was fitted as a function of virus concentration, giving estimated LODs of 6 × 10⁴, 4.6 × 10⁴, and 5.4 × 10⁴ PFU/mL for the three viruses, respectively. As expected, this sensitivity is lower than that of amplification-based methods such as RT-PCR (∼10² PFU/mL),14 but it is still within a clinically useful range: SARS-CoV-2 viral loads of between 10⁴ and 10⁷ copies per mL have been reported in throat swab and sputum samples.15
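The LOD estimate above rests on a linear fit of the normalized positive fraction against virus concentration. A minimal sketch, assuming the common convention that the LOD is the concentration at which the fitted signal rises k = 3 standard deviations of the blank above baseline (i.e., LOD = 3σ_blank / slope); the text does not state the exact criterion used, and the function names and example numbers are hypothetical.

```python
def linear_fit(x, y):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope

def limit_of_detection(conc, positive_fraction, blank_sd, k=3.0):
    """Concentration at which the fitted positive fraction rises k blank SDs above baseline."""
    _, slope = linear_fit(conc, positive_fraction)
    if slope <= 0:
        raise ValueError("positive fraction must increase with concentration")
    return k * blank_sd / slope
```

With synthetic data following a slope of 2 × 10⁻⁶ per PFU/mL and a blank standard deviation of 0.02, this sketch returns an LOD of 3 × 10⁴ PFU/mL.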

Classification of Clinical Samples with High Accuracy

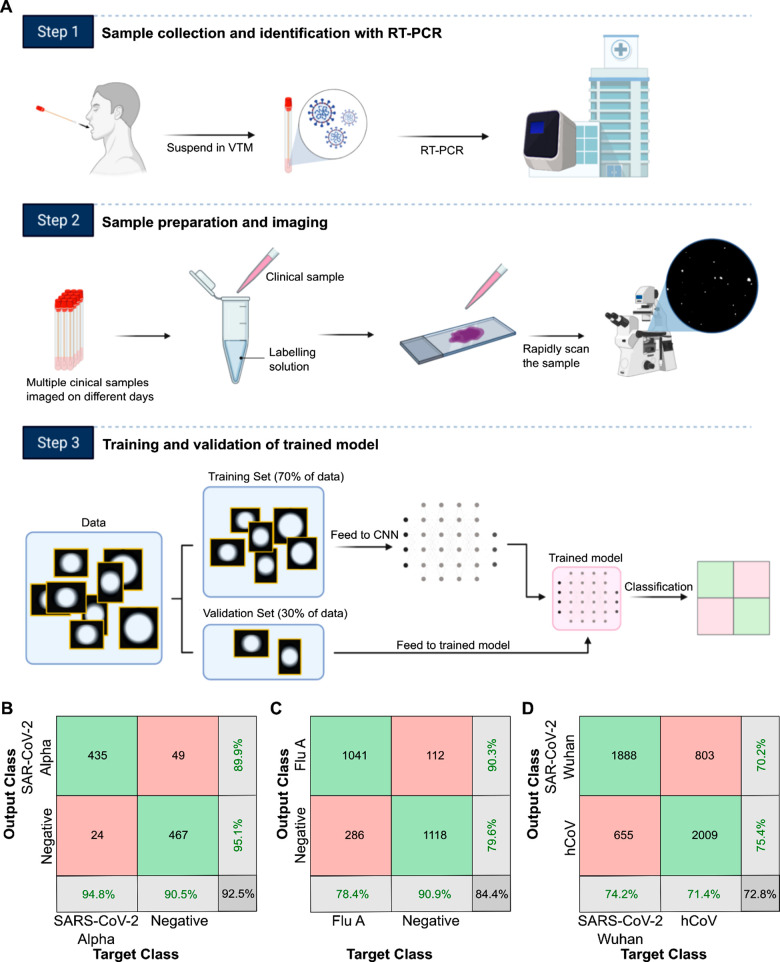

Having demonstrated our assay on laboratory-grown viruses, we next assessed clinical samples (workflow in Figure 4A). Throat swabs from 33 patients who were either negative for virus or positive for SARS-CoV-2, seasonal hCoVs (OC43, HKU1, or NL63), or human influenza A (all as determined by RT-PCR) were inactivated with formaldehyde before being labeled and immobilized (see Experimental Methods). Robust and reproducible labeling of viruses in clinical samples was achieved (Sup.Figure 8). Images of the samples captured over three different days were used to train and validate the network on a variety of pairwise questions (e.g., SARS-CoV-2 vs negative, or SARS-CoV-2 vs hCoVs; details of the clinical samples used for network training and validation are given in Table S1), and, as above, the results of network validation are presented as confusion matrices.

Figure 4.

A deep learning network can differentiate viruses in clinical samples. (A) Workflow for training and validation of clinical samples. Samples were collected from 33 patients, labeled, and imaged on a microscope over three different days. The images were processed to isolate the individual signals into BBXs. 70% of the BBXs were used to train a convolutional neural network (CNN), resulting in a trained model. The remaining 30% of the BBXs were used to validate the trained model, providing the result in a confusion matrix. (B) Confusion matrix showing that a trained network could differentiate positive (Alpha variant) SARS-CoV-2 and negative clinical samples. (C) Confusion matrix showing that a trained network could differentiate influenza A (Flu A) positive clinical samples from negative samples. (D) Confusion matrix showing that a trained network could differentiate SARS-CoV-2 samples (original Wuhan variant) from seasonal human coronavirus (hCoV) samples.

Our initial results with SARS-CoV-2 clinical samples showed a lower validation accuracy than that achieved with laboratory-grown virus strains (∼70% at the BBX level, Sup.Figure 9A). However, the accuracy was substantially improved by performing labeling at a higher pH (pH 8), likely because of the higher isoelectric point (pI; the pH at which the net charge of the particle is zero) of SARS-CoV-2 relative to influenza (pI of ∼9 compared to ∼6).16−18 As the virions are more negatively charged at higher pH, they are more efficiently labeled by the cationic solution and more efficiently captured by the charged chitosan surface on the glass slide, improving SARS-CoV-2 detection and classification accuracy. Using the optimized protocol, the trained network distinguished SARS-CoV-2-positive from negative BBXs with a validation accuracy of ∼93% (Figure 4B).

We could also distinguish between Flu A and negative BBXs with a validation accuracy of ∼84% at the BBX level (Figure 4C), and between seasonal hCoV and negative samples with an accuracy of ∼78% (Sup.Figure 9B). The network could also distinguish SARS-CoV-2 from seasonal hCoVs with a validation accuracy of ∼73% (Figure 4D) and SARS-CoV-2 from Flu A with a validation accuracy of ∼70% (Sup.Figure 9C), potentially useful in diagnosing cocirculating infections. Lastly, the network was able to distinguish between negative samples and combined data from two variants of SARS-CoV-2, the original Wuhan strain (SARS-CoV-2) and the Alpha variant, with an accuracy of ∼75% (Sup.Figure 9D), and between the two variants with an accuracy of ∼70% (Sup.Figure 9E).

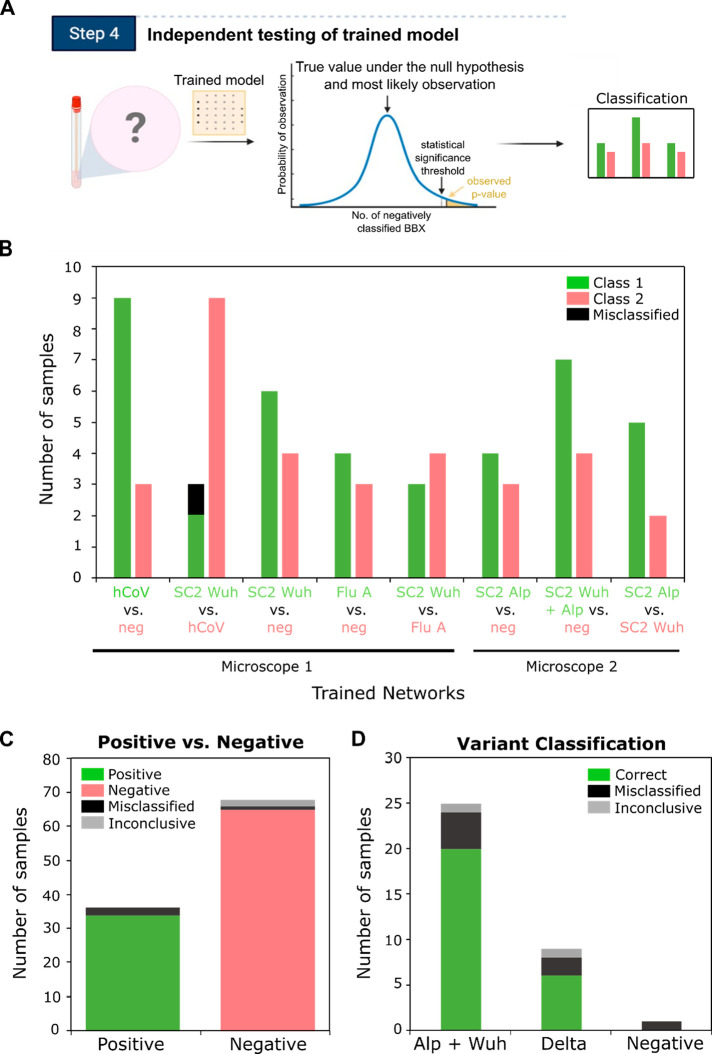

Testing of Trained Networks on Independent Clinical Samples

Next, we tested the ability of the trained networks to diagnose independent clinical samples never used for either network training or validation. A total of 51 samples (from a different set of patients to those used for training/validation), comprising negative samples and samples positive for SARS-CoV-2, Flu A, or seasonal hCoVs, were imaged on a fourth day and assessed within a few seconds by the trained networks described in the previous section. By comparing the results to RT-PCR and carrying out chi-squared tests where necessary (Figure 5A and Sup.Figure 10, Steps 1 and 2), we found that 50 of the 51 clinical samples were classified correctly, giving an overall sample accuracy of 98.0% (Figure 5B and Sup.Table 2). All 11 negative samples were classified correctly, giving a sample specificity of 100%, and 39 of the 40 positive samples were classified correctly, giving a sample sensitivity of 97.5%. The single misclassified sample yielded far fewer BBXs than the other samples (Sup.Table 2), suggesting that its viral load may have been close to our limit of detection, which would explain the misclassification.
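The sample-level decision described above (a chi-squared test of the null hypothesis that the sample is negative) can be sketched as a goodness-of-fit test on the counts of BBXs called positive and negative. The form of the null is an assumption for illustration: under it, BBXs are called positive only through the network's false-positive rate (1 − specificity). The significance threshold `alpha` is a hypothetical value, and the five-BBX minimum mirrors the minimum mentioned for the second clinical test.

```python
import math

def classify_sample(n_bbx, n_positive, specificity, alpha=1e-3, min_bbx=5):
    """Chi-squared goodness-of-fit test of H0: 'the sample is negative'.

    Under H0 (assumed here), each BBX is called positive with probability 1 - specificity.
    Returns (label, p_value); label is 'positive', 'negative', or 'inconclusive'.
    """
    if not 0.0 < specificity < 1.0:
        raise ValueError("specificity must lie strictly between 0 and 1")
    if n_bbx < min_bbx:
        return "inconclusive", None  # too few BBXs for the test
    expected_pos = n_bbx * (1.0 - specificity)
    expected_neg = n_bbx * specificity
    n_negative = n_bbx - n_positive
    chi2 = ((n_positive - expected_pos) ** 2 / expected_pos
            + (n_negative - expected_neg) ** 2 / expected_neg)
    # Upper tail of a chi-squared distribution with 1 degree of freedom
    p_value = math.erfc(math.sqrt(chi2 / 2.0))
    # Reject H0 only for an excess (not a deficit) of positive calls
    if n_positive > expected_pos and p_value < alpha:
        return "positive", p_value
    return "negative", p_value
```

For instance, 50 positive calls among 100 BBXs with a BBX-level specificity of 0.9 (10 expected under the null) gives a vanishingly small p-value and a positive call, whereas 10 positive calls is fully consistent with a negative sample.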

Figure 5.

Independent testing of the trained network with clinical samples. (A) Schematic of the independent testing workflow. Previously unseen samples are imaged, and the images are processed into BBXs, which are fed through a trained network. When necessary, a chi-squared test is performed to test the null hypothesis that the sample is negative. If the p-value is smaller than a preset confidence threshold, the null hypothesis is rejected and the sample is classified as positive; if the p-value is greater than the threshold, the sample is classified as negative. (B) Summary of independent testing results using multiple trained models. 51 patient samples that were not used for network training or validation were run through different trained versions of the network, detailed at the bottom of the plot. Some samples were tested in multiple versions of the network; for further details, see Sup.Table 2. Chi-squared tests were carried out to classify the samples (see Sup.Figure 10 and Sup.Table 2; samples with a p-value smaller than a preset confidence threshold were classified as positive), and the results were compared to RT-PCR. 50 out of 51 samples were classified correctly (incorrect classification shown in black), giving an overall sample accuracy of 98.0%. Results were obtained using two different microscopes (see Experimental Methods). (C) Summary of independent testing results using a single trained network. 104 patient samples were analyzed as in (B) but tested in a single trained network (SARS-CoV-2 vs negative). (D) Variant classification of clinical samples identified as positive in (C). The BBXs from images of the clinical samples classified as positive by the first network were passed through a second trained model (Wuhan + Alpha SARS-CoV-2 vs Delta SARS-CoV-2).

Within this analysis, we also tested whether we could differentiate different SARS-CoV-2 variants. The BBXs from images of seven clinical samples that had been classified as positive by a SARS-CoV-2 vs negative network were passed through a second trained network to test whether it was the original Wuhan variant or the Alpha variant that first arose in the UK in 2020 (Sup.Figure 10, Steps 3 and 4). All seven variant samples were classified correctly (Figure 5B).

Given the potential clinical relevance of a test that can diagnose different SARS-CoV-2 variants without the need for sequencing, we explored this further using an additional 104 clinical samples, 68 of which were determined to be virus-negative and 36 SARS-CoV-2-positive by RT-PCR (Sup.Table 3). The samples were collected from patients between November 2020 and July 2021; of the positive samples, 14 were the original Wuhan variant, 12 were the Alpha variant (as suggested by a spike gene target failure in RT-PCR), and 10 were the Delta variant. Samples were labeled, immobilized, and imaged as described above, and the images were processed into BBXs. In the first step of the analysis, the 104 samples were classified as either SARS-CoV-2-positive or negative (results of the network validation in Sup.Figure 11A). Two of the negative samples were inconclusive due to a low number of BBXs (fewer than the five needed for the chi-squared test). Of the remaining 102 samples, all but three were classified correctly (Figure 5C): one negative sample and two positive samples were misclassified, giving an overall sample accuracy of 97.1%, a sensitivity of 94.4%, and a specificity of 98.5%.

For the subsequent variant classification step, the BBXs from images of the clinical samples classified as positive by the first network (35 samples in total) were passed through a second trained model (Wuhan + Alpha SARS-CoV-2 vs Delta SARS-CoV-2) (Sup.Figure 11B). Two samples gave inconclusive results because the resulting p-values were within three orders of magnitude of each other (Sup.Figure 10, Steps 3 and 4). The single negative sample that had been incorrectly classified as positive by the first trained model was classified as Delta, and six of the remaining positive samples were misclassified (two Delta, two Alpha, and two Wuhan variant samples), giving an overall sample accuracy of 77.1%, an inconclusive rate of 5.7%, and a misclassification rate of 17.1% (Figure 5D and Sup.Table 4). Although our variant classification accuracy is lower than our overall positive vs negative test accuracy, we believe it may still be useful as a rapid variant screening assay where sequencing facilities are unavailable.
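The inconclusive rule in the variant step (p-values within three orders of magnitude of each other) can be expressed as a margin on log10 p-values. This is a hypothetical framing for illustration: each candidate class is assumed to come with a p-value for a test whose null hypothesis is "the sample belongs to this class", so the best-supported class is the one with the largest p-value; the function name and class labels are placeholders.

```python
import math

def decide_variant(p_values, min_log10_gap=3.0):
    """Pick the best-supported class only if it wins by at least min_log10_gap decades.

    p_values maps each candidate class to the p-value of a test whose null hypothesis
    is 'the sample belongs to this class' (an assumed framing for this sketch).
    """
    ranked = sorted(p_values.items(), key=lambda item: item[1], reverse=True)
    (best, p_best), (_, p_next) = ranked[0], ranked[1]
    if p_best == 0.0:
        return "inconclusive"  # every hypothesis is rejected outright
    if p_next == 0.0:
        return best
    if math.log10(p_best) - math.log10(p_next) >= min_log10_gap:
        return best
    return "inconclusive"  # p-values within three orders of magnitude of each other
```

Comparing log10 values rather than the raw ratio keeps the rule well defined even for extremely small p-values.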

Conclusions

In summary, we have shown a proof of principle for the use of single-particle fluorescence microscopy and deep learning to rapidly detect and classify viruses, including coronaviruses. We have carried out two clinical tests of our method using 155 patient samples in total, which gave high overall sample accuracies of 98.0% and 97.1%. We note that these results were obtained with samples collected over a significant time period, using a range of different collection kits containing different volumes of viral transport media, and stored at different temperatures for varying periods of time. Given these sampling inconsistencies, which could affect the number of intact virus particles in each sample, the results of these small-scale clinical studies are extremely encouraging, demonstrating the potential of our method as a viable diagnostic test.

Our initial proof-of-principle experiments, carried out using viruses grown in cell culture, demonstrated that the CNN can distinguish not only between samples with and without virus, but also between the avian coronavirus IBV and various strains of influenza, with per-particle accuracies of >90% (e.g., IBV vs Udorn, IBV vs PR8). We confirmed our approach using multiple stocks of cell-grown viruses and different microscopes. However, a truly independent validation of the trained network, using samples that had been used in neither training nor validation, was not possible with cell-grown viruses, because all stocks of a given virus are essentially the same: they are grown in the same cell line under similar conditions, even when grown at different times. Such a validation was possible with clinical samples, because new samples could be obtained from patients entirely independent of those used for training and validation. The accurate classification of these completely independent clinical samples provides clear evidence that our network uses image information to classify samples it has never seen before.

We also accounted for other potential confounding factors. Sample preparation was controlled by only comparing samples prepared and inactivated in the same way, and differences in virus concentration and imaging quality were controlled by classifying the isolated signals from individual viruses rather than full fields of view. This approach makes the network agnostic to virus concentration, signal density, and small day-to-day imaging differences such as uneven illumination, so that classification depends only on the fluorescence images of individual viruses. We have also shown that related H3N2 strains, prepared in the same way in the same cells, can be reliably distinguished from each other, indicating that classification is not driven by the host cell in which the viruses were grown. In addition, clinical samples that produced different Ct values in RT-PCR were correctly classified regardless of their viral concentration. Together, these results provide several lines of evidence that our network genuinely differentiates between viruses.

The power of our method comes from the ability to rapidly and universally label enveloped viruses in a sample and image them quickly using diffraction-limited microscopy. We have shown that, even with the limited information present in these low-resolution images, a trained CNN can effectively differentiate between virus strains. This ability likely stems from our finding that different virus families, and even different virus strains, exhibit small differences in their distributions of size, shape, and labeling efficiency when labeled using the cation-mediated method (Figure 2 and Sup.Figure 3). The labeling relies on a cation-mediated electrostatic interaction between the phosphate backbone of the DNA and the lipid membrane of the virus.9 Different viruses are therefore expected to differ in labeling efficiency and coverage because they have different isoelectric points (the pH at which a virus has a neutral surface charge; e.g., WSN, 4.7; PR8, 5.3; IBV, 7.2; and SARS-CoV-2, 8.5).16−19 The surface charge of virus strains is also likely to be affected by mutations in the surface glycoproteins, which may explain our ability to differentiate variants.

This hypothesis is supported by zeta potential measurements (the zeta potential being the surface potential of a nanoparticle in solution). Zeta potential measurements on three influenza strains, carried out in triplicate at a range of pH values, showed that, as expected, the zeta potential becomes more negative as the solution pH increases (Sup.Figure 12A). Interestingly, we also observed differences in zeta potential between viruses at the same pH, even for closely related viruses of the same subtype (the H1N1 strains WSN and PR8). This suggests that the labeling efficiency, which relies on charge, will differ between the chosen viruses even at higher pH. Combined with the size and shape differences we documented between viruses, this can create image features (intensity, label density, axis ratio, size) that the network can learn. We propose that it is these differences, among others, that allow the network to distinguish viruses. We also explored the patterns “seen” by two different networks using the deepDreamImage function in MATLAB.20 For a network trained to differentiate between negative and positive SARS-CoV-2 samples, these patterns suggest that positive signals appear as rounded shapes with high intensity in the center of the BBX, whereas pixel values in the center of the BBX are much lower for a negative sample. This is consistent with our hypothesis that virus signals are brighter than the signals observed in negative samples (Sup.Figure 12B,C). The patterns seen by a network trained to differentiate between two SARS-CoV-2 variants are harder to interpret; however, both contain higher-intensity pixels than the pattern for the negative sample (Sup.Figure 12D,E).

Independent testing of the network with clinical swabs did not give 100% accuracy, indicating that the method can be improved further. During our second clinical validation, which first tested for SARS-CoV-2 positivity and then classified variants, two samples did not yield enough BBXs to reach a result using the chi-squared test. Interestingly, both of these inconclusive samples were negative by RT-PCR. Testing more samples may establish whether this happens only for negative samples, in which case a low BBX count could itself be a useful indicator of a negative diagnosis. The small number of misclassified positive samples may have been due to low viral load, which could be addressed through further optimization of the protocol, such as improving virus immobilization, concentrating the sample, or limiting the time the sample is stored before imaging. The single misclassified negative sample may have contained a virus other than SARS-CoV-2. In future iterations of the test we will use multi-class networks (as demonstrated in Sup.Figure 5) to recognize all the major families of circulating respiratory viruses (e.g., influenza, HCoVs, SARS-CoV-2, and RSV), providing a multi-pathogen testing platform. We will also need to further investigate the ability of the network to classify mixed samples, as well as the use of labeling solutions at different pH values, which we hypothesize will result in different labeling efficiencies. This property could be exploited to multiplex the test and provide further features for classification, which may in turn improve the reported sensitivity and specificity.

The current gold standard for viral diagnostics is RT-PCR, which requires a time-consuming (∼30 min) RNA extraction step followed by the main assay (which can take several hours). Here, we describe a laboratory-based proof-of-principle assay that involves (i) instantaneous sample labeling, (ii) 10 s for sample mounting, (iii) 40 s for focusing, (iv) 2 min for image acquisition (81 FOVs), and (v) 20 s for analysis, easily providing a result within 5 min. Our assay requires no RNA extraction. However, to work with clinical samples in a containment level 2 laboratory, we initially inactivated samples with a low concentration of formaldehyde (4%) for 30 min prior to sample preparation, which has been shown to maintain virus particle shape while rendering the particles non-infectious.21 In later experiments presented here, we inactivated samples with 1% formaldehyde for just 5 min, having used plaque assays to show that this lower concentration and shorter time were still sufficient to fully inactivate samples. This allows the entire test to be completed from start to finish within 10 min. The assay currently requires a research microscope in a laboratory environment, and turning it into a point-of-care tool will require further development. Our envisaged commercial version of the test will not require inactivation at all, because labeling will take place in a bio-contained sample capsule. It will also use a small, simplified fluorescence microscope custom-built to perform our assay measurements.
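The time budget above can be tallied directly. In this minimal sketch, the "instantaneous" labeling step is counted as 0 s, which is our assumption. The 5 min inactivation is the 1% formaldehyde protocol described in the text. The sketch shows that the quoted "within 5 min" and "within 10 min" figures both leave some margin.

```python
# Step durations in seconds, as listed in the text.
# "Instantaneous" labeling is counted as 0 s (an assumption).
steps_s = {
    "labeling": 0,
    "mounting": 10,
    "focusing": 40,
    "acquisition (81 FOVs)": 120,
    "analysis": 20,
}

assay_s = sum(steps_s.values())
inactivation_s = 5 * 60  # 1% formaldehyde for 5 min
total_s = assay_s + inactivation_s

print(f"assay: {assay_s} s ({assay_s / 60:.1f} min)")
print(f"with inactivation: {total_s} s ({total_s / 60:.1f} min)")
```

This prints 190 s (3.2 min) for the assay itself and 490 s (8.2 min) including inactivation.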

Despite the need for further development, our approach may offer several advantages over existing diagnostic technology. First, it avoids the need for viral lysis or amplification. Second, RT-PCR can detect RNA fragments and may therefore still return a positive result for several weeks after an individual is no longer infectious, whereas our assay reports only on intact viral particles. This may make it useful as a readout of infectivity, although this was not evaluated here. Third, it may offer a testing window extending from the time that virus particles start to be produced in the airways until the infected individual is no longer infectious, and hence may be useful in the pre-symptomatic phase of disease.

A further key advantage of our test over enzyme-based methods is that the reagents are very affordable. The most expensive component is the fluorescent DNA, which we typically use at 1 nM in a very small sample volume (20 μL). Even at the small scale at which we purchase DNA, this amounts to ∼2.7 pence for 10 000 labeling reactions, demonstrating the scalability of our test. Finally, the ease with which the network can be retrained to detect a novel virus suggests that it could be useful in detecting new and emerging pathogens during pandemic situations.
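The amount of DNA behind the cost estimate follows from concentration × volume. This sketch computes the moles of a single labeled oligo per 20 μL reaction and per 10 000 reactions. Because each reaction contains 1 nM of each fluorescently labeled DNA, a two-color reaction uses twice this amount in total. No price per nanomole is assumed here, since the text does not give one.

```python
conc_mol_per_L = 1e-9   # 1 nM of one labeled DNA
volume_L = 20e-6        # 20 uL reaction volume
reactions = 10_000

mol_per_reaction = conc_mol_per_L * volume_L   # ~2e-14 mol, i.e. 20 fmol
mol_for_batch = mol_per_reaction * reactions   # ~2e-10 mol, i.e. 0.2 nmol

print(f"{mol_per_reaction * 1e15:.0f} fmol per reaction per oligo")
print(f"{mol_for_batch * 1e9:.1f} nmol per oligo for {reactions} reactions")
```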

Experimental Methods

Laboratory-Grown Virus Strains and DNAs

The influenza strains (H1N1 A/Puerto Rico/8/1934 (PR8), H3N2 A/Udorn/72 (Udorn), H1N1 A/WSN/33 (WSN), and H3N2 A/Aichi/68 (X31)) used in this study have been described previously.9 Briefly, WSN, PR8, and Udorn were grown in Madin–Darby bovine kidney (MDBK) or Madin–Darby canine kidney (MDCK) cells, and X31 was grown in embryonated chicken eggs. The cell culture supernatant or allantoic fluid was collected, and the viruses were titered by plaque assay. Titers of PR8, Udorn, WSN, and X31 were 1 × 10⁸ plaque forming units (PFU)/mL, 1 × 10⁷ PFU/mL, 2 × 10⁶ PFU/mL, and 4.5 × 10⁸ PFU/mL, respectively. The coronavirus IBV (Beau-R strain)22 was grown in embryonated chicken eggs and titered by plaque assay (1 × 10⁶ PFU/mL). Influenza and IBV were inactivated by the addition of 2% formaldehyde before use. SARS-CoV-2 was grown in Vero E6 cells and titered by plaque assay (1.05 × 10⁶ PFU/mL). The virus was inactivated by the addition of 4% formaldehyde before use.

Single-stranded oligonucleotides labeled with either red or green dyes were purchased from IBA (Germany). Our main criteria for oligo selection were length (DNAs need to be longer than 20 bases) and fluorophore modification (selection for bright and stable dyes) rather than sequence, as robust labeling occurs regardless of sequence if the other two conditions are met.9 The “red” DNA used in this manuscript was modified at the 5′ end with ATTO647N (5′ ACAGCACCACAGACCACCCGCGGATGCCGGTCCCTACGCGTCGCTGTCACGCTGGCTGTTTGTCTTCCTGCC 3′), and the “green” DNA was modified at the 3′ end with Cy3 (5′ GGGTTTGGGTTGGGTTGGGTTTTTGGGTTTGGGTTGGGTTGGGAAAAA 3′). The DNA used for super-resolution imaging was modified at the 5′ end with Alexa647 (5′ TCCGCTCTCACAATTCCACACATTATACGAGCCGAAGCATAAAGTGTCAAGCCT 3′).

Clinical Samples

Ethical approval was obtained for the use of anonymized oro- or nasopharyngeal specimens from patients for the diagnosis of influenza and other respiratory pathogens, including SARS-CoV-2 (North West-Greater Manchester South Research Ethics Committee [REC], REC ref:19/NW/0730). Specimens were maintained in Copan Universal Transport Medium (UTM) before being inactivated in 4% final concentration of formaldehyde (Pierce) for 30 min at room temperature, or 1% formaldehyde for 5 min at room temperature for the 104 samples used in the second clinical trial.21 Samples were confirmed as SARS-CoV-2-positive or negative using either the Public Health England 2019-nCoV real-time RT-PCR RdRp gene assay or RealStar SARS-CoV-2 RT-PCR Kit (Altona Diagnostics). Testing for other respiratory pathogens and sub-typing of seasonal human coronavirus (hCoV) samples as OC43, HKU1, or NL63 strains was conducted using the BioFire FilmArray Respiratory Panel (Biomerieux, Marcy-L’Etoile, France) and Cepheid Xpert Xpress Flu/RSV (Cepheid, Sunnyvale, CA, USA).

We used 213 clinical samples in total, taken from patients between November 2020 and July 2021. To train the network, we imaged samples from different patients over 3 days, with a fresh sample preparation from the same patient samples on each day. We used 70% of the BBXs isolated from all the images taken over the 3 days to train the network and the remaining 30% for network validation, the results of which are shown in the confusion matrices. Each confusion matrix corresponds to an individually trained model. In total, 58 clinical samples were used for training and validation of the network.
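The 70/30 split of pooled BBXs can be sketched as a random shuffle followed by a cut. The function name `split_bbxs`, the use of Python's `random` module, and the fixed seed are our illustrative choices. The text does not state how the split was implemented in MATLAB.

```python
import random

def split_bbxs(bbxs, train_fraction=0.7, seed=0):
    """Randomly split pooled BBXs into training and validation sets.

    Hypothetical helper: the seed is fixed only to make the split reproducible.
    """
    rng = random.Random(seed)
    shuffled = list(bbxs)
    rng.shuffle(shuffled)
    n_train = round(train_fraction * len(shuffled))
    return shuffled[:n_train], shuffled[n_train:]

train, val = split_bbxs(range(1000))
print(len(train), len(val))  # 700 300
```

Because the BBXs are pooled across all images before splitting, BBXs from the same patient sample can appear in both the training and validation sets. This is why the independent tests on unseen patient samples described next are the more stringent measure of performance.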

We then carried out two independent tests of the trained networks using clinical samples not used for either training or validation. The first test used 51 patient samples comprising negative samples and samples positive for SARS-CoV-2, Flu A, or seasonal hCoVs. The second test used 104 patient samples comprising negative samples and samples positive for SARS-CoV-2. Of the positives, 14 were the original Wuhan variant, 12 were the Alpha variant (indicated by a spike gene target failure in RT-PCR using the TaqPath COVID-19 Combo Kit, ThermoFisher),23 and 10 were the Delta variant (confirmed by RT-PCR using the TaqMan SARS-CoV-2 Mutation Panel, ThermoFisher).

Sample Preparation

Positive and negative samples were prepared in the same way (e.g., inactivated with the same concentration of formaldehyde and labeled in the same buffer), and only similarly prepared samples were compared with each other. Glass slides were treated in one of two ways. They were either treated with 0.015 mg/mL chitosan (a linear polysaccharide) in 0.1 M acetic acid for 30 min and then washed three times with Milli-Q water, or treated with 0.01% poly-L-lysine (Sigma) for 15 min (Figure 3A, Sup.Figure 6, and Sup.Figure 10C). Although both reagents gave some background in the negative controls, they resulted in very rapid virus immobilization, an important factor in preventing virus aggregation. Unless otherwise stated, virus stocks (typically 10 μL) were diluted in 0.23 M CaCl2 or SrCl2 (as described in the figure legends) with 1 nM of each fluorescently labeled DNA in a final volume of 20 μL before being added to the slide surface. For SARS-CoV-2 imaging, the cationic labeling solution was buffered with 20 mM Tris, pH 8. Virus labeling with CaCl2 has been described previously;9 SrCl2 provides similar results (Figure 1). For laboratory-grown virus stocks, negative controls were prepared using virus-free Minimal Essential Media (Gibco) or allantoic fluid from uninfected eggs in place of the virus.

Imaging

Images were captured using three wide-field Nanoimager microscopes.9 “Microscope 1” was equipped with a Hamamatsu Flash 4 LT.1 sCMOS camera, and “Microscopes 2 and 3” were equipped with a Hamamatsu Flash4 V3 sCMOS camera; in all other respects, the systems were identical. Samples were imaged using total internal reflection fluorescence (TIRF) microscopy with a 100× oil-immersion objective. The laser illumination was focused at a typical angle of 53° with respect to the normal. Movies of 5 frames per field of view (FOV; 75 × 49 μm) were taken at a frame rate of 33 Hz with an exposure time of 30 ms. Laser intensities were kept constant at 0.78 kW/cm² for the red (640 nm) laser and 1.09 kW/cm² for the green (532 nm) laser. To automate acquisition and avoid bias in the selection of FOVs, the whole sample was scanned using the multiple-acquisition capability of the microscope; 81 FOVs were imaged in 2 min. Focus was controlled by an automated autofocus mechanism that brought the sample to a predefined axial position before each FOV was exposed to the excitation lasers. This was achieved by imaging the reflection of a near-IR laser off the glass/sample-medium interface and matching the image to a pre-recorded reference image.
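The acquisition parameters imply how the 2 min scan is spent, as this back-of-the-envelope sketch shows. We assume that the 117 nm pixel size quoted under Data Segmentation applies to these FOVs. We also assume that the per-FOV time not spent recording goes to stage movement and autofocus. Neither assumption is stated explicitly in the text.

```python
N_FOV = 81
ACQ_TIME_S = 120
FRAMES_PER_FOV = 5
FRAME_RATE_HZ = 33
FOV_UM = (75, 49)
PIXEL_UM = 0.117  # assumed to apply to these FOVs

movie_s = FRAMES_PER_FOV / FRAME_RATE_HZ        # time recording each FOV
per_fov_s = ACQ_TIME_S / N_FOV                  # average time budget per FOV
overhead_s = per_fov_s - movie_s                # presumably stage moves + autofocus
area_mm2 = N_FOV * FOV_UM[0] * FOV_UM[1] / 1e6  # total imaged area
fov_px = tuple(round(d / PIXEL_UM) for d in FOV_UM)

print(f"movie {movie_s:.2f} s, budget {per_fov_s:.2f} s, overhead {overhead_s:.2f} s per FOV")
print(f"imaged area {area_mm2:.3f} mm^2, FOV ~{fov_px[0]} x {fov_px[1]} px")
```

Under these assumptions, recording takes only about 0.15 s of the roughly 1.5 s available per FOV, so the scan time is dominated by positioning and focusing.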

Data in Sup.Figure 6 were acquired using a Zeiss Elyra 7 microscope equipped with two pco.edge sCMOS cameras (version 4.2 CL HS). TIRF images were acquired using an alpha Plan-Apochromat 63×/1.46 oil objective. A laser intensity of 10% for the 641 nm laser was used for imaging Atto647N, and laser intensities of 6% for the 561 nm laser and 3% for the 488 nm laser were used for imaging Cy3. The exposure time was 50 ms.

Super-resolution Imaging

For super-resolution imaging, passivated microscope slides were prepared by washing in acetone and Vectabond solution (Vector Laboratories) and then incubating with NHS-PEG and biotin-NHS-PEG at an 80:1 ratio. Neutravidin (0.5 mg/mL) was incubated on the slide for 10 min at room temperature shortly before virus was added. Viruses were biotinylated by incubation in 1 mg/mL Sulfo-NHS-LC-Biotin (ThermoFisher) for 3 h at 37 °C. They were then labeled with 0.23 M CaCl2 and 1 nM Alexa647-labeled DNA in a final volume of 20 μL and added to the slide surface. After incubation for 30 min at room temperature, the slide was washed three times in 1× PBS before imaging in 50 mM MEA and an enzymatic oxygen-scavenging system consisting of 1 mg/mL glucose oxidase, 40 μg/mL catalase, and 1.0% (wt/vol) glucose. Super-resolution localizations were extracted using the built-in Nanoimager software and analyzed further in MATLAB. Localizations were clustered with DBSCAN using a minimum cluster size of 50 and an epsilon of 30 nm, and the convex hull of each cluster was then computed to find the area covered by its points.
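The clustering and area step can be sketched in plain Python: DBSCAN, then a convex hull (Andrew's monotone chain), then the polygon area (shoelace formula). This is an illustrative reimplementation, not the MATLAB code used in the study. We assume the "minimum cluster size of 50" plays the role of DBSCAN's minimum-points parameter, as in MATLAB's `dbscan(X, epsilon, minpts)`. We also discard any cluster with fewer than 50 points.

```python
import math
from collections import deque

def dbscan(points, eps, min_pts):
    """Plain DBSCAN on 2D points; returns a cluster id per point (-1 = noise).
    A point's neighbourhood includes the point itself."""
    n = len(points)
    labels = [None] * n  # None = not yet visited

    def region(i):
        xi, yi = points[i]
        return [j for j in range(n)
                if math.hypot(points[j][0] - xi, points[j][1] - yi) <= eps]

    cluster = -1
    for i in range(n):
        if labels[i] is not None:
            continue
        neighbours = region(i)
        if len(neighbours) < min_pts:
            labels[i] = -1  # noise for now; may later become a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = deque(neighbours)
        while queue:
            j = queue.popleft()
            if labels[j] == -1:
                labels[j] = cluster  # border point reached from a core point
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_neighbours = region(j)
            if len(j_neighbours) >= min_pts:  # j is a core point: keep expanding
                queue.extend(j_neighbours)
    return labels

def convex_hull(points):
    """Andrew's monotone chain; hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]

def polygon_area(vertices):
    """Shoelace formula for a simple polygon."""
    s = 0.0
    for (x1, y1), (x2, y2) in zip(vertices, vertices[1:] + vertices[:1]):
        s += x1 * y2 - x2 * y1
    return abs(s) / 2

def cluster_areas(locs_nm, eps_nm=30.0, min_pts=50):
    """Cluster localizations (in nm); return {cluster id: hull area in nm^2}."""
    labels = dbscan(locs_nm, eps_nm, min_pts)
    groups = {}
    for p, lab in zip(locs_nm, labels):
        if lab != -1:
            groups.setdefault(lab, []).append(p)
    return {lab: polygon_area(convex_hull(pts))
            for lab, pts in groups.items() if len(pts) >= min_pts}

# Synthetic example: a dense 50 x 50 nm patch plus two isolated localizations.
grid = [(5 * i, 5 * j) for i in range(11) for j in range(11)]
noise = [(1000, 1000), (2000, 0)]
print(cluster_areas(grid + noise))  # {0: 2500.0}
```

The pairwise neighbour search is O(n²), which is fine for a sketch. For real localization tables, a spatial index such as scikit-learn's `DBSCAN` would be the more practical choice.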

Data Segmentation

Each FOV in the red channel was converted into a binary image using MATLAB’s built-in imbinarize function with adaptive thresholding and the sensitivity set to 0.5. Adaptive thresholding uses statistics of the neighborhood of each pixel to determine whether that pixel is foreground or background. Increasing the sensitivity makes it easier for a pixel to pass the threshold, so more pixels are classified as foreground. The bwpropfilt function was used to exclude objects with an area outside the range 10–100 pixels (1 pixel = 117 nm), in order to disregard free ssDNA and aggregates. We imaged single fluorophores and found that they did not exceed 10 pixels in area, which gave us the lower limit; we arbitrarily chose 100 pixels as the upper limit to exclude very large aggregates or cellular debris. The regionprops function was then used to extract the following properties of each object: area, ratio of the semi-major to semi-minor axis (axis ratio), coordinates of the object’s center, bounding box (BBX) encasing the object, and maximum pixel intensity within the BBX.
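The filtering and measurement steps can be illustrated with a self-contained sketch. It takes an already-binarized mask as input, so the adaptive thresholding performed by imbinarize is not reproduced. The sketch finds 8-connected objects, keeps those with an area of 10–100 pixels, and measures each one. The axis ratio uses ellipse moments with a 1/12 unit-pixel term added to each variance. We believe this matches how regionprops derives its major and minor axis lengths, but treat that as an assumption. The function names and the (x, y, width, height) bounding-box convention are ours.

```python
import math
from collections import deque

def find_objects(mask, image, min_area=10, max_area=100):
    """Find 8-connected foreground objects in a binary mask, keep those with
    min_area <= pixel area <= max_area, and measure each one."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    objects = []
    for r0 in range(rows):
        for c0 in range(cols):
            if not mask[r0][c0] or seen[r0][c0]:
                continue
            pixels, queue = [], deque([(r0, c0)])
            seen[r0][c0] = True
            while queue:  # breadth-first flood fill of one component
                r, c = queue.popleft()
                pixels.append((r, c))
                for dr in (-1, 0, 1):
                    for dc in (-1, 0, 1):
                        rr, cc = r + dr, c + dc
                        if (0 <= rr < rows and 0 <= cc < cols
                                and mask[rr][cc] and not seen[rr][cc]):
                            seen[rr][cc] = True
                            queue.append((rr, cc))
            if min_area <= len(pixels) <= max_area:  # area filter
                objects.append(measure(pixels, image))
    return objects

def measure(pixels, image):
    n = len(pixels)
    ys = [r for r, _ in pixels]
    xs = [c for _, c in pixels]
    cx, cy = sum(xs) / n, sum(ys) / n
    # Second central moments plus 1/12 per axis for the unit pixel
    # (assumed to mirror regionprops' ellipse fit).
    uxx = sum((x - cx) ** 2 for x in xs) / n + 1 / 12
    uyy = sum((y - cy) ** 2 for y in ys) / n + 1 / 12
    uxy = sum((x - cx) * (y - cy) for x, y in zip(xs, ys)) / n
    common = math.sqrt((uxx - uyy) ** 2 + 4 * uxy ** 2)
    axis_ratio = math.sqrt((uxx + uyy + common) / (uxx + uyy - common))
    top, bottom, left, right = min(ys), max(ys), min(xs), max(xs)
    max_intensity = max(image[r][c] for r in range(top, bottom + 1)
                        for c in range(left, right + 1))
    return {
        "area": n,
        "centroid": (cx, cy),                                    # (x, y)
        "bbox": (left, top, right - left + 1, bottom - top + 1),  # x, y, w, h
        "axis_ratio": axis_ratio,
        "max_intensity": max_intensity,
    }
```

For a 4 × 4 square, this gives an axis ratio of 1. For a 2 × 8 bar, it gives 4. A 2 × 2 blob (area 4) is rejected by the lower area limit.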

Each FOV is accompanied by a location image (LI) summarizing the locations of signals detected in each channel (red and green), in which colocalized signals are shown in yellow. Objects found in the red FOV were compared with their corresponding signals in the associated LI, and objects that did not arise from colocalized signals were rejected. The qualifying BBXs were then drawn onto the raw FOV, and images of the encased individual viruses were saved.

Machine Learning

The CNN used only the red channel as input, as using both channels was not found to improve the overall accuracy. No intensity normalization was applied to the images. However, the BBXs from the data segmentation had variable sizes (never larger than 17 pixels in any direction owing to the size filtering). All BBXs were therefore brought to a final size of 17 × 17 pixels by padding, that is, by adding pixels with a gray value of 0 until the required size was reached.
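Zero-padding a variable-size BBX to 17 × 17 pixels can be sketched as follows. The text does not say where the original crop sits inside the padded image. This sketch centers it, rounding toward the top-left, which is our assumption. The name `pad_bbx` is hypothetical.

```python
TARGET = 17  # final BBX edge length in pixels

def pad_bbx(patch, target=TARGET):
    """Zero-pad a 2D patch (list of rows) to target x target.

    Assumption: the patch is placed roughly in the center; the original
    placement is not specified.
    """
    h, w = len(patch), len(patch[0])
    if h > target or w > target:
        raise ValueError(f"patch {h}x{w} exceeds {target}x{target}")
    top, left = (target - h) // 2, (target - w) // 2
    out = [[0] * target for _ in range(target)]
    for r, row in enumerate(patch):
        out[top + r][left:left + w] = row  # copy one row into the zero canvas
    return out

padded = pad_bbx([[1, 2, 3]] * 5)  # a 5 x 3 crop
print(len(padded), len(padded[0]), padded[6][7:10])  # 17 17 [1, 2, 3]
```

Padding with zeros rather than resampling preserves the raw pixel intensities and apparent particle size. These are the features the Discussion suggests the network relies on.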

The padded images were used as the input for a 15-layer CNN. The network was built using MATLAB R2020b and trained on a GPU (NVIDIA GeForce RTX 2080 Ti, in a computer with 32 GB RAM and an Intel Core i7 processor). The network had three convolutional layers in total, with 2 × 2 kernels for the first two convolutions and a 3 × 3 kernel for the last one. The learning rate was set to 0.01 and kept constant throughout training. The same hyperparameters were used to train all models: a mini-batch size of 1000, a maximum of 100 epochs, and a validation frequency of 20 iterations.
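The stated hyperparameters can be collected in one place, along with what they imply for the length of training. This sketch records only the values given in this section; the number of filters and the remaining layers are not specified and are left out. We assume that, as in MATLAB's default behavior, the final incomplete mini-batch of each epoch is dropped. The training-set size in the example is hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class TrainingConfig:
    input_size: tuple = (17, 17, 1)                 # padded red-channel BBX
    conv_kernels: tuple = ((2, 2), (2, 2), (3, 3))  # three convolutional layers
    learning_rate: float = 0.01                     # constant schedule
    momentum: float = 0.9                           # SGD with momentum
    mini_batch_size: int = 1000
    max_epochs: int = 100
    validation_frequency: int = 20                  # iterations between checks

def schedule(n_train: int, cfg: TrainingConfig = TrainingConfig()):
    """Iterations per epoch, total iterations, and number of periodic
    validation checks (assumes the last partial mini-batch is dropped)."""
    iters_per_epoch = n_train // cfg.mini_batch_size
    total_iters = iters_per_epoch * cfg.max_epochs
    return iters_per_epoch, total_iters, total_iters // cfg.validation_frequency

print(schedule(70_500))  # hypothetical 70 500 training BBXs -> (70, 7000, 350)
```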

In the classification layer, trainNetwork took the values from the softmax function and assigned each input to one of K mutually exclusive classes using the cross entropy function for a 1-of-K coding scheme,24

$$\text{loss} = -\sum_{i=1}^{N}\sum_{j=1}^{K} t_{ij}\,\ln y_{ij} \tag{1}$$

where N is the number of samples, K is the number of classes, tij is the indicator that the ith sample belongs to the jth class, and yij is the output for sample i for class j, which in this case is the value from the softmax function, that is, the probability that the network associates the ith input with class j.25 Stochastic gradient descent with momentum (SGDM), with the momentum set to 0.9, was used as the optimizer.
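The loss and optimizer described above can be written out in a few lines. This sketch sums the cross-entropy over samples and classes, matching the definitions of tij and yij; many frameworks divide by N instead. The SGDM update uses the common velocity form. To our understanding, this is algebraically equivalent to the update MATLAB documents for `sgdm`. The function names are ours.

```python
import math

def softmax(z):
    m = max(z)  # subtract the max for numerical stability
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def cross_entropy(targets, outputs):
    """-sum_i sum_j t_ij * ln(y_ij) for one-hot targets t and softmax outputs y."""
    return -sum(t * math.log(y)
                for t_row, y_row in zip(targets, outputs)
                for t, y in zip(t_row, y_row) if t)

def sgdm_step(params, velocity, grads, lr=0.01, momentum=0.9):
    """One SGD-with-momentum update: v <- momentum*v - lr*g; theta <- theta + v."""
    velocity = [momentum * v - lr * g for v, g in zip(velocity, grads)]
    params = [p + v for p, v in zip(params, velocity)]
    return params, velocity

# A BBX labeled as class 0 that the network scores 50/50 costs ln 2.
loss = cross_entropy([[1, 0]], [softmax([0.0, 0.0])])
```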

Zeta Potential Measurements

The Zetasizer Nano S with disposable folded capillary cells (DTS1070) was used for all zeta potential measurements. The temperature was set to 25 °C and the system equilibration time to 120 s. Samples were diluted in 20 mM sodium acetate for pH 4–5, in 20 mM HEPES for pH 6–7, and in 20 mM Tris for pH 9. For each pH, the buffer information was entered as the dispersant in the software to calculate the viscosity and dielectric constant. For the material, a sample type of 50% lipid and 50% protein was used to calculate the absorption and refractive index, and the analysis model was set to “auto”. The measurement duration was determined by the software, with a minimum of 10 and a maximum of 100 runs. Three measurements were taken for every sample at each pH.

Statistical Analysis

Confusion Matrices

The results of each network validation are shown as a confusion matrix, which makes use of the following terms:

True positive (TP): BBXs correctly identified as positive,

False positive (FP): BBXs incorrectly identified as positive,

True negative (TN): BBXs correctly identified as negative, and

False negative (FN): BBXs incorrectly identified as negative.

Sensitivity refers to the ability of the test to correctly identify positive BBXs. It is calculated by dividing the number of true positives by the total number of positive BBXs (TP + FN).26

$$\text{Sensitivity} = \frac{TP}{TP + FN} \tag{2}$$

Specificity refers to the ability of the test to correctly identify negative BBXs. It is calculated by dividing the number of true negatives by the total number of negative BBXs (TN + FP).26

$$\text{Specificity} = \frac{TN}{TN + FP} \tag{3}$$

The positive predictive value (PPV) is the proportion of BBXs classified as positive by the trained model that are truly positive, and the negative predictive value (NPV) is the proportion of BBXs classified as negative that are truly negative.

$$\text{PPV} = \frac{TP}{TP + FP} \tag{4}$$

$$\text{NPV} = \frac{TN}{TN + FN} \tag{5}$$

The overall balanced validation accuracy of the model is given by

$$\text{Balanced accuracy} = \frac{\text{Sensitivity} + \text{Specificity}}{2} \tag{6}$$
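The per-BBX metrics can be computed from confusion-matrix counts as follows. Here, balanced accuracy is taken to be the mean of sensitivity and specificity, which is the standard definition; we assume this is the quantity the validation reports. The counts in the example are hypothetical.

```python
def bbx_metrics(tp, fp, tn, fn):
    """Per-BBX validation metrics from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "ppv": tp / (tp + fp),   # share of positive calls that are correct
        "npv": tn / (tn + fn),   # share of negative calls that are correct
        "balanced_accuracy": (sensitivity + specificity) / 2,
    }

# Hypothetical counts: sensitivity 0.9, specificity 0.8, balanced accuracy 0.85.
m = bbx_metrics(tp=90, fp=20, tn=80, fn=10)
```

Unlike plain accuracy, balanced accuracy is not inflated when one class contributes many more BBXs than the other.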

Limit of Detection

To calculate the limit of detection (LOD), increasing concentrations of the coronavirus IBV (diluted in allantoic fluid) were labeled and imaged, the resulting images were pre-processed, and the individual signals were fed into the trained network. The normalized average of TP, TP/(TP + FP), and its standard deviation (STD) were calculated and plotted against the corresponding concentrations as a scatter plot. The data were fitted with a linear regression, as given by

| 7 |

where the virus concentration was treated as the independent variable and a represents the LOD. The final LOD value was taken as a + 3 STD = 6 × 10⁴ PFU/mL, which corresponds to a one-sided confidence level of ∼99.87% assuming a normal distribution. Experiments with the influenza strain A/WSN/33 were carried out in a similar way.
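The confidence level attached to a "+3 STD" margin follows from the standard normal cumulative distribution, computed here with `math.erf`. This sketch shows the one-sided coverage, which is appropriate for an upper limit such as a + 3 STD. For comparison, it also shows the two-sided coverage of ±3 STD.

```python
import math

def normal_cdf(z):
    """P(Z <= z) for a standard normal variable."""
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))

one_sided = normal_cdf(3)          # coverage below a + 3 STD
two_sided = 2 * normal_cdf(3) - 1  # coverage within +/- 3 STD

print(f"one-sided: {one_sided:.5f}, two-sided: {two_sided:.5f}")
# one-sided: 0.99865, two-sided: 0.99730
```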

The LOD of SARS-CoV-2 was calculated in a similar way, except that the data were fitted with a sigmoid, as given by

$$f(x) = \frac{L}{1 + e^{-k(x - x_0)}} + b \tag{8}$$

where L is the maximum normalized positive value (considered as the sensitivity of the model), b is a bias that shifts the output range from [0, L] to [b, L + b], k scales the input, and x0 is the midpoint, at which the unbiased sigmoid reaches L/2 (i.e., the output is b + L/2).
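The biased sigmoid can be evaluated, and inverted to read off the input at which a fitted curve reaches a chosen output. The inverse helper is hypothetical. The text does not say which output level was used to define the SARS-CoV-2 LOD, nor whether x is the concentration or its logarithm. The parameter values below are illustrative only.

```python
import math

def biased_sigmoid(x, L, k, x0, b):
    """L / (1 + exp(-k (x - x0))) + b: output range [b, L + b], b + L/2 at x0."""
    return L / (1 + math.exp(-k * (x - x0))) + b

def inverse_biased_sigmoid(y, L, k, x0, b):
    """Hypothetical helper: the input x at which the curve reaches y
    (requires b < y < L + b)."""
    return x0 - math.log(L / (y - b) - 1) / k

params = dict(L=0.9, k=2.0, x0=5.0, b=0.05)   # illustrative values only
mid = biased_sigmoid(5.0, **params)           # b + L/2 = 0.5
x_back = inverse_biased_sigmoid(biased_sigmoid(3.7, **params), **params)  # ~3.7
```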

Chi-Squared Test

To go from the classification of single BBXs to a call for a clinical sample as a whole, we used the chi-squared test. The test takes into account the total number of BBXs, the numbers of BBXs classified as positive and negative, and the specificity of the trained model (i.e., the probability of classifying a negative signal as negative). Using the specificity of each trained model together with the total number of signals in a sample accounts for the variability in the number of detected signals between samples. It also accounts for the fact that, statistically, some of the BBXs will be misclassified. The chi-squared test is a statistical hypothesis test whose null hypothesis is that the observed frequencies of a categorical variable match the expected frequencies; the test statistic is calculated as

$$\chi^2 = \sum_{i=1}^{n} \frac{(O_i - E_i)^2}{E_i} \tag{9}$$

where χ² is the chi-squared statistic, Oi is the observed value, Ei is the expected value, and n is the number of labels. The threshold p-value can vary depending on the trained model but is generally smaller than 0.01, corresponding to a confidence level greater than 99%. In this paper, the null hypothesis is that the sample is negative. It is rejected only when the p-value is below the threshold, in which case the sample is classified as positive.
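The sample-calling logic can be sketched as a two-category chi-squared test. Under the null hypothesis that the sample is negative, a fraction (1 − specificity) of its BBXs is expected to be called positive by chance. With two categories there is one degree of freedom, so the p-value is `erfc(sqrt(chi2 / 2))` and no statistics library is needed. Several details here are our assumptions and are not stated in this section: the function name, the default α = 0.01, and the rule that only an excess of positive BBXs can trigger a positive call. The 5-BBX minimum is taken from the clinical results above.

```python
import math

def call_sample(n_pos, n_neg, specificity, alpha=0.01, min_bbx=5):
    """Call a clinical sample from its per-BBX classifications.

    Null hypothesis: the sample is negative, so about n * (1 - specificity)
    BBXs are expected to be called positive. Requires 0 < specificity < 1.
    """
    n = n_pos + n_neg
    if n < min_bbx:
        return "inconclusive", None
    exp_pos = n * (1 - specificity)
    exp_neg = n * specificity
    chi2 = (n_pos - exp_pos) ** 2 / exp_pos + (n_neg - exp_neg) ** 2 / exp_neg
    # One degree of freedom: upper-tail probability is erfc(sqrt(chi2 / 2)).
    p = math.erfc(math.sqrt(chi2 / 2))
    # Assumption: only an excess of positive BBXs counts as evidence.
    if p < alpha and n_pos > exp_pos:
        return "positive", p
    return "negative", p

# 30 of 100 BBXs called positive by a model with 90% specificity
# (10 expected by chance): chi2 ~ 44.4, p << 0.01, so "positive".
print(call_sample(30, 70, specificity=0.9)[0])
```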

Acknowledgments

We are grateful to Micron Oxford, funded by Wellcome Strategic Awards (091911 and 107457; PI Ilan Davis), for the loan of their microscope and to Nadia Halidi for her help with the instrument. Special thanks to Dr. Rebecca Moore and Prof. William James (Sir William Dunn School of Pathology, Oxford, UK) for providing laboratory-grown SARS-CoV-2. We thank the microbiology laboratory staff at the John Radcliffe Hospital, Oxford.

Supporting Information Available

The Supporting Information is available free of charge at https://pubs.acs.org/doi/10.1021/acsnano.2c10159.

Immobilization strategy, super-resolution images of labeled viruses, limits of detection, experimental workflows, and tables containing data on clinical samples (PDF)

Author Contributions

N.S., A.T., A.M., C.H., A.N.K., and N.C.R. designed and carried out experiments, analyzed data, and interpreted results. N.S. wrote analysis software. E.B. provided reagents. C.F. and D.M. validated the virus inactivation method. L.P., M.A., S.O., A.V., P.C.M., N.S., and D.C. collected, diagnosed, and typed clinical samples that were considered to be appropriate for the purposes of this study. N.C.R. wrote the manuscript. All authors reviewed and approved the final manuscript.

This research was supported by a Royal Society Dorothy Hodgkin Research Fellowship DKR00620 and Research Grant for Research Fellows RGF\R1\180054 (N.C.R.), the University of Oxford COVID-19 Research Response Fund (N.C.R. and A.N.K.), BBSRC grant BB/V001868/1 (A.N.K., N.C.R., and C.H.), a BBSRC-funded studentship (N.S.), and Wellcome Trust grant 110164/Z/15/Z (A.N.K.).

This study was supported by the National Institute for Health Research (NIHR) Health Protection Research Unit in Healthcare Associated Infections and Antimicrobial Resistance (NIHR200915), a partnership between the UK Health Security Agency (UKHSA) and the University of Oxford, and the NIHR Oxford Biomedical Research Centre (BRC). The report presents independent research. The views expressed are those of the author(s) and not necessarily those of the NIHR, UKHSA, or the Department of Health and Social Care.

The authors declare the following competing financial interest(s): The work was carried out using a wide-field microscope from Oxford Nanoimaging, a company in which A.N.K. is a co-founder and shareholder, and is being commercialized by Pictura Bio, a company in which N.C.R. and N.S. are co-founders. Patent applications relating to the work have been submitted by N.C.R., A.N.K., and N.S. (PCT/GB2019/053073 and PCT/GB2021/050990).

Notes

A version of this work was deposited on a preprint server: Nicolas Shiaelis, Alexander Tometzki, Leon Peto, Andrew McMahon, Christof Hepp, Erica Bickerton, Cyril Favard, Delphine Muriaux, Monique Andersson, Sarah Oakley, Alison Vaughan, Philippa C. Matthews, Nicole Stoesser, Derrick Crook, Achillefs N. Kapanidis, Nicole C. Robb. Virus detection and identification in minutes using single-particle imaging and deep learning. MedRxiv, 2020, 10.13.20212035 (accessed 09/12/2022). DOI: 10.1101/2020.10.13.20212035.

Supplementary Material

References

- Udugama B.; Kadhiresan P.; Kozlowski H. N.; Malekjahani A.; Osborne M.; Li V. Y. C.; Chen H.; Mubareka S.; Gubbay J. B.; Chan W. C. W. Diagnosing COVID-19: The Disease and Tools for Detection. ACS Nano 2020, 14 (4), 3822–3835. 10.1021/acsnano.0c02624.

- Huang W. E.; Lim B.; Hsu C. C.; Xiong D.; Wu W.; Yu Y.; Jia H.; Wang Y.; Zeng Y.; Ji M.; Chang H.; Zhang X.; Wang H.; Cui Z. RT-LAMP for rapid diagnosis of coronavirus SARS-CoV-2. Microb Biotechnol 2020, 13 (4), 950–961. 10.1111/1751-7915.13586.

- Lu R.; Wu X.; Wan Z.; Li Y.; Zuo L.; Qin J.; Jin X.; Zhang C. Development of a Novel Reverse Transcription Loop-Mediated Isothermal Amplification Method for Rapid Detection of SARS-CoV-2. Virol Sin 2020, 35 (4), 344–347. 10.1007/s12250-020-00218-1.

- Lamb L. E.; Bartolone S. N.; Ward E.; Chancellor M. B. Rapid detection of novel coronavirus/Severe Acute Respiratory Syndrome Coronavirus 2 (SARS-CoV-2) by reverse transcription-loop-mediated isothermal amplification. PLoS One 2020, 15 (6), e0234682. 10.1371/journal.pone.0234682.

- Zhang Y.; Odiwuor N.; Xiong J.; Sun L.; Nyaruaba R. O.; Wei H.; Tanner N. A. Rapid Molecular Detection of SARS-CoV-2 (COVID-19) Virus RNA Using Colorimetric LAMP. medRxiv 2020, 2020.02.26.20028373 (accessed July 07, 2020). 10.1101/2020.02.26.20028373.

- El-Tholoth M.; Anis E.; Bau H. H. Two stage, nested isothermal amplification in a single tube. Analyst 2021, 146 (4), 1311–1319. 10.1039/D0AN01835J. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Rodriguez-Manzano J.; Malpartida-Cardenas K.; Moser N.; Pennisi I.; Cavuto M.; Miglietta L.; Moniri A.; Penn R.; Satta G.; Randell P.; Davies F.; Bolt F.; Barclay W.; Holmes A.; Georgiou P. Handheld Point-of-Care System for Rapid Detection of SARS-CoV-2 Extracted RNA in under 20 min. ACS Cent Sci. 2021, 7 (2), 307–317. 10.1021/acscentsci.0c01288. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dinnes J.; Deeks J. J; Berhane S.; Taylor M.; Adriano A.; Davenport C.; Dittrich S.; Emperador D.; Takwoingi Y.; Cunningham J.; Beese S.; Domen J.; Dretzke J.; Ferrante di Ruffano L.; Harris I. M; Price M. J; Taylor-Phillips S.; Hooft L.; Leeflang M. M.; McInnes M. D.; Spijker R.; Van den Bruel A. Rapid, point-of-care antigen and molecular-based tests for diagnosis of SARS-CoV-2 infection. Cochrane Database Syst. Rev. 2022, 3, CD013705. 10.1002/14651858.CD013705.pub2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Robb N. C.; Taylor J. M.; Kent A.; Pambos O. J.; Gilboa B.; Evangelidou M.; Mentis A. A.; Kapanidis A. N. Rapid functionalisation and detection of viruses via a novel Ca(2+)-mediated virus-DNA interaction. Sci. Rep. 2019, 9 (1), 16219. 10.1038/s41598-019-52759-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Rawat W.; Wang Z. Deep Convolutional Neural Networks for Image Classification: A Comprehensive Review. Neural Comput. 2017, 29 (9), 2352–2449. 10.1162/neco_a_00990.

- LeCun Y.; Bengio Y.; Hinton G. Deep learning. Nature 2015, 521 (7553), 436–444. 10.1038/nature14539.

- Laine R. F.; Goodfellow G.; Young L. J.; Travers J.; Carroll D.; Dibben O.; Bright H.; Kaminski C. F. Structured illumination microscopy combined with machine learning enables the high throughput analysis and classification of virus structure. eLife 2018, 7, e40183. 10.7554/eLife.40183.

- Ito E.; Sato T.; Sano D.; Utagawa E.; Kato T. Virus Particle Detection by Convolutional Neural Network in Transmission Electron Microscopy Images. Food Environ. Virol. 2018, 10 (2), 201–208. 10.1007/s12560-018-9335-7.

- Arnaout R.; Lee R. A.; Lee G. R.; Callahan C.; Cheng A.; Yen C. F.; Smith K. P.; Arora R.; Kirby J. E. The Limit of Detection Matters: The Case for Benchmarking Severe Acute Respiratory Syndrome Coronavirus 2 Testing. Clin. Infect. Dis. 2021, 73 (9), e3042–e3046. 10.1093/cid/ciaa1382.

- Pan Y.; Zhang D.; Yang P.; Poon L. L. M.; Wang Q. Viral load of SARS-CoV-2 in clinical samples. Lancet Infect. Dis. 2020, 20 (4), 411–412. 10.1016/S1473-3099(20)30113-4.

- Awadasseid A.; Wu Y.; Tanaka Y.; Zhang W. Initial success in the identification and management of the coronavirus disease 2019 (COVID-19) indicates human-to-human transmission in Wuhan, China. Int. J. Biol. Sci. 2020, 16 (11), 1846–1860. 10.7150/ijbs.45018.

- Michen B.; Graule T. Isoelectric points of viruses. J. Appl. Microbiol. 2010, 109 (2), 388–397. 10.1111/j.1365-2672.2010.04663.x.

- Scheller C.; Krebs F.; Minkner R.; Astner I.; Gil-Moles M.; Wätzig H. Physicochemical properties of SARS-CoV-2 for drug targeting, virus inactivation and attenuation, vaccine formulation and quality control. Electrophoresis 2020, 41 (13–14), 1137–1151. 10.1002/elps.202000121.

- Sadasiv E. C.; Yeh T. T.; Chang P. W. Protein pI alteration related to strain variation of infectious bronchitis virus, an avian Coronavirus. J. Virol. Methods 1991, 33 (1–2), 115–125. 10.1016/0166-0934(91)90012-O.

- TensorFlow.org. DeepDreaming with TensorFlow, Version 1.0, 2019. https://github.com/tensorflow/docs/blob/master/site/en/tutorials/generative/deepdream.ipynb.

- Lyonnais S.; Henaut M.; Neyret A.; Merida P.; Cazevieille C.; Gros N.; Chable-Bessia C.; Muriaux D. Atomic force microscopy analysis of native infectious and inactivated SARS-CoV-2 virions. Sci. Rep. 2021, 11 (1), 11885. 10.1038/s41598-021-91371-4.

- Casais R.; Thiel V.; Siddell S. G.; Cavanagh D.; Britton P. Reverse genetics system for the avian coronavirus infectious bronchitis virus. J. Virol. 2001, 75 (24), 12359–12369. 10.1128/JVI.75.24.12359-12369.2001.

- Bal A.; Destras G.; Gaymard A.; Stefic K.; Marlet J.; Eymieux S.; Regue H.; Semanas Q.; d’Aubarede C.; Billaud G.; Laurent F.; Gonzalez C.; Mekki Y.; Valette M.; Bouscambert M.; Gaudy-Graffin C.; Lina B.; Morfin F.; Josset L. Two-step strategy for the identification of SARS-CoV-2 variant of concern 202012/01 and other variants with spike deletion H69-V70, France, August to December 2020. Euro Surveill. 2021, 26 (3), 2100008. 10.2807/1560-7917.ES.2021.26.3.2100008.

- Bishop C. M. Pattern Recognition and Machine Learning, 1st ed.; Springer: New York, 2006; p 738.

- The MathWorks Inc. Deep Learning Toolbox, R2022b, 2019. https://uk.mathworks.com/help/deeplearning/ug/layers-of-a-convolutional-neural-network.html

- Altman D. G.; Bland J. M. Diagnostic tests. 1: Sensitivity and specificity. BMJ 1994, 308 (6943), 1552. 10.1136/bmj.308.6943.1552.
