Abstract

Background

Line‐field confocal optical coherence tomography (LC‐OCT) is an imaging technique providing non‐invasive “optical biopsies” with an isotropic spatial resolution of ∼1 μm and a penetration depth that reaches the dermis. The resulting images are classically analyzed by experts, which requires long and tedious training and gives operator‐dependent results. In this study, the objective was to develop a new automated method to score the quality of the dermal matrix precisely, quickly, and directly from in vivo LC‐OCT images. Once validated, this new automated method was applied to assess photo‐aging‐related changes in the quality of the dermal matrix.

Materials and methods

LC‐OCT measurements were conducted on the face of 57 healthy Caucasian volunteers. The quality of the dermal matrix was scored by experts trained to evaluate the state of the fibers according to four grades. In parallel, these images were used to develop the deep learning model by adapting a MobileNetV3‐Small architecture. Once validated, this model was applied to the study of dermal matrix changes in a panel of 36 healthy Caucasian females, divided into three groups according to their age and photo‐exposure.

Results

The deep learning model was trained and tested on a set of 15 993 images. Calculated on the test data set, the accuracy score was 0.83. As expected, when applied to different volunteer groups, the model shows greater and deeper alteration of the dermal matrix for old and photoexposed subjects.

Conclusions

In conclusion, we have developed a new method that automatically scores the quality of the dermal matrix on in vivo LC‐OCT images. This accurate model could be used for further investigations, both in the dermatological and cosmetic fields.

Keywords: deep learning, dermal fibers, dermal matrix quality, in vivo, line‐field confocal optical coherence tomography

List of Abbreviations

- DEJ: dermo‐epidermal junction
- LC‐OCT: line‐field confocal optical coherence tomography
- RCM: reflectance confocal microscopy

1. INTRODUCTION

Like other human organs, the skin undergoes intrinsic chronological aging. In addition, because it acts as a protective barrier against the environment, the skin is also subject to extrinsic aging. Among the environmental factors involved, sun exposure is the main cause of skin damage. 1 Regardless of the origin of skin aging, the most visible changes associated with an aged face are pigmented spots, sagging, and the emergence of wrinkles. 2 Thanks to histological analyses, it is now accepted that the main alterations in aged skin are localized in the dermis, with disorganization of its specific architecture. 3 Indeed, collagen and elastin, which are the two major fibrous proteins of the dermis, undergo reduced biosynthesis as well as fragmentation with aging. Visually, the abundant, tightly packed, and well‐organized fibers of young skin become sparse, fragmented, and disorganized. 4 , 5 The matrix takes on a spongy appearance as the network loses its typical “spiderweb” architecture. 6 Hence, visualizing the structural changes of the dermis with aging is an important need in both dermatological and cosmetic research.

As an alternative to classical invasive biopsies, modifications of the dermal architecture can now be visualized in vivo using non‐invasive devices. 7 Dermoscopy, photoacoustic tomography, reflectance confocal microscopy (RCM), and optical coherence tomography (OCT) are emerging tools acting as optical biopsies. 8 , 9 , 10 All these devices have limitations: they allow either in‐depth analyses with low resolution or cellular resolution at shallow depths only. 11 , 12 The recently developed line‐field confocal OCT (LC‐OCT) is an innovative technology combining conventional OCT and RCM. 13 In comparison with the previously described technologies, LC‐OCT provides the best trade‐off between spatial resolution (∼1 μm) and penetration depth for the study of skin structures located in the dermis. 14 , 15 , 16 , 17 Indeed, we demonstrated in a previous study that LC‐OCT can be used as a new method for the in vivo, non‐invasive quantification of superficial dermis thickness. 18

The resulting images are classically analyzed by experts trained to decipher them. In addition to requiring long and tedious expert training, the results remain operator‐dependent. Moreover, as hundreds of images may have to be analyzed to generate significant results, this process is time‐consuming. 19 Over the past several years, deep learning‐based image analysis has become highly beneficial, both in terms of speed of analysis and reduced error accumulation. 20 Such methods have been used in the medical field for image segmentation, prediction, or classification for several years, thus helping clinicians in their diagnoses. 21 Recently, deep learning has proved useful for analyzing RCM images in the dermatological field. 22 It is thus of interest to develop a new method that classifies LC‐OCT images automatically, quickly, and in a standardized manner, free from human assessment.

In this context, this research work aims to develop a new automated method to precisely score the quality of the dermal matrix, with the goal of characterizing age‐related changes directly from in vivo LC‐OCT images. For this purpose, LC‐OCT measurements were conducted on the face of volunteers who were young, aged and non‐photo‐exposed, or aged and photo‐exposed. The quality of the dermal matrix was first scored by experts trained to evaluate both the state of the fibers, according to four grades, and the global texture of the matrix. These expert scores are compared in the present paper with data obtained from deep learning‐based automated classification of LC‐OCT images. The use of deep learning as a new method that automatically scores dermal matrix quality on in vivo LC‐OCT images was validated and applied to the assessment of photo‐aging‐related changes in the dermal matrix.

2. MATERIALS AND METHODS

2.1. LC‐OCT image acquisition

The LC‐OCT device, deepLive™, was developed by DAMAE Medical. 15 A stack of grayscale en face images of 1224 × 500 μm was acquired from the skin surface down to a depth of 300 μm. The resulting volume has an isotropic resolution of about 1 μm.

Since the acquired volume contains not only the dermis but also the epidermal layer of the skin, a sub‐volume starting from the dermal‐epidermal junction (DEJ) with a depth of about 70 μm was selected by the experts as the volume of the dermis that could be visually exploited. The thickness of this volume depends on the skin of each volunteer, which affects the signal differently with depth.

2.2. Development of the deep learning classification method

2.2.1. Panel

Image acquisition was performed on the cheeks of a panel of 57 healthy Caucasian female volunteers between 22 and 79 years (mean = 56).

2.2.2. Database

To obtain a representative sampling of the dermal matrix and limit redundancy, images were extracted from the 70 μm volume every 5 μm in depth, dividing each image alternately into two or three sub‐images of 400 × 400 μm, which brought the data set to 1 777 images in total.
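As a rough illustration of this sampling step, the sketch below enumerates the depths and sub‐image positions for one volume. The exact tiling rule is not given in the text, so the alternation (two sub‐images on even depth steps, three on odd ones), the even horizontal spacing, the assumption of about 1 pixel per micrometre, and the names `sub_image_origins` and `sampling_plan` are all hypothetical choices made for illustration only.

```python
FRAME_WIDTH = 1224   # en face image width (assumed ~1 pixel per micrometre)
PATCH = 400          # sub-image side length
DEPTH_STEP = 5       # sampling step in depth, in micrometres


def sub_image_origins(n_patches, frame_width=FRAME_WIDTH, patch=PATCH):
    """Return evenly spaced left edges for n non-overlapping patches."""
    if n_patches * patch > frame_width:
        raise ValueError("patches would overlap")
    gap = (frame_width - n_patches * patch) // (n_patches + 1)
    return [gap + i * (patch + gap) for i in range(n_patches)]


def sampling_plan(dermis_thickness=70):
    """List (depth, left_edge) pairs for one volume, depth 0 being the DEJ."""
    plan = []
    for step, depth in enumerate(range(0, dermis_thickness, DEPTH_STEP)):
        n_patches = 2 if step % 2 == 0 else 3   # assumed alternation rule
        plan.extend((depth, x) for x in sub_image_origins(n_patches))
    return plan


plan = sampling_plan()
print(len(plan))  # 35 sub-images for a full 70 um volume under these assumptions
```

Under these assumptions a full 70 μm volume yields 35 sub‐images; the reported total of 1 777 images for 57 volunteers is lower than 57 × 35, which is consistent with the exploitable thickness varying between volunteers.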

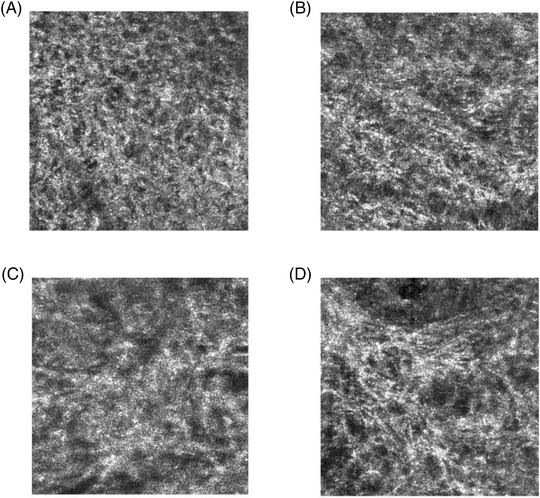

Images were labeled by six experts as one of the following four states of dermal quality as illustrated in Figure 1.

FIGURE 1.

Score scale of dermis quality from in vivo line‐field confocal optical coherence tomography (LC‐OCT) images. The scoring scale used by experts to score LC‐OCT images is divided into four stages according to the quality of the dermis. (A) Representative image of score 1: bad quality of dermis with coarse and fragmented fibers and a sponge‐like network. (B) Score 2 image: low quality of dermis with small fibers appearing unevenly. (C) Score 3 image: good quality of dermis with a partially reticulated network. (D) Score 4 image: excellent dermis quality with a web‐like pattern and elongated, reticulated fibers.

The scoring scale was established by consensus among experts and is designed to represent the gradual change in fiber shape from coarse and fragmented to elongated and reticulated. As the score increases, the fibers tend to organize into a reticulated network typically seen in younger panels. All experts for this study were trained beforehand and were required to score independently, with no communication. When the experts' labels disagreed, the most frequent score among them was selected. Table 1 details the total number of labeled images per class.

TABLE 1.

Summary of the labeled dataset

| Class | Number of labeled images |
|---|---|
| Stage 1 | 399 |
| Stage 2 | 678 |
| Stage 3 | 586 |
| Stage 4 | 114 |
| Total | 1777 |
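The majority rule used to derive a single label from the six expert scores can be sketched as follows. The text does not say how ties were resolved, so the tie rule here (keep the lower score) and the function name `consensus_score` are assumptions made for illustration.

```python
from collections import Counter


def consensus_score(expert_scores):
    """Return the most frequent score; ties go to the lower score (assumed rule)."""
    counts = Counter(expert_scores)
    best = max(counts.values())
    return min(score for score, n in counts.items() if n == best)


print(consensus_score([2, 2, 3, 2, 1, 2]))  # 2
print(consensus_score([3, 3, 3, 4, 4, 4]))  # 3 under the assumed tie rule
```

With six readers, ties such as three votes against three are possible, so any practical implementation needs an explicit tie rule of this kind.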

2.2.3. Deep learning techniques

Automatic image classification was tackled by adapting a MobileNetV3‐Small architecture 23 from the TensorFlow Python open‐source software library. 24 The TensorFlow platform offers a MobileNetV3 architecture with weights pre‐trained on the ImageNet database. It was used as a base model and adapted to our four‐class classification task by adding, on top of the base model, a global average pooling layer and a dense output layer that maps the features to a four‐class prediction through a SoftMax activation function.
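To make the role of the added head concrete, the following framework‐free sketch shows what a global average pooling layer followed by a dense SoftMax layer computes on a toy feature map. The feature map, weights, and function names are made up for illustration; they are not the trained model's values, and the real head operates on the much larger feature maps produced by the base model.

```python
import math


def global_average_pool(feature_map):
    """Average an H x W x C feature map over its spatial axes -> length-C vector."""
    h, w, c = len(feature_map), len(feature_map[0]), len(feature_map[0][0])
    return [sum(feature_map[i][j][k] for i in range(h) for j in range(w)) / (h * w)
            for k in range(c)]


def dense_softmax(features, weights, biases):
    """Dense layer followed by SoftMax; weights[k][c] links feature k to class c."""
    logits = [sum(f * weights[k][c] for k, f in enumerate(features)) + biases[c]
              for c in range(len(biases))]
    peak = max(logits)                       # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Toy 2 x 2 x 2 feature map and made-up weights (hypothetical values).
feature_map = [[[1.0, 0.0], [3.0, 2.0]],
               [[1.0, 2.0], [3.0, 0.0]]]
weights = [[0.5, 0.0, -0.5, 0.0],
           [0.0, 2.0, 0.0, -1.0]]
biases = [0.0, 0.0, 0.0, 0.0]

pooled = global_average_pool(feature_map)                      # [2.0, 1.0]
probabilities = dense_softmax(pooled, weights, biases)         # four values summing to 1
predicted_score = probabilities.index(max(probabilities)) + 1  # scores run from 1 to 4
print(predicted_score)  # 2
```

The predicted score is simply the class with the highest SoftMax probability, shifted by one because the quality scores start at 1.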

As we chose to keep the input dimension used for training MobileNetV3 on ImageNet (224 × 224 × 3 pixel RGB images), we had to adapt the original grayscale images from the LC‐OCT database described above, each of which has a dimension of 400 × 400 × 1 pixels. To do so, the images of the neighboring altitudes (above and below) were added to each image. Given an altitude z, the red channel corresponds to the grayscale image at altitude z – 1 μm, the green channel to the image at altitude z, and the blue channel to the grayscale image at altitude z + 1 μm. We thus obtained 400 × 400 × 3 pixel images composed of three adjacent altitudes, which integrates more information into each sample fed to the model. These images were then rescaled to 224 × 224 × 3 pixels, as required for the model input. Finally, the dataset was augmented to 15 993 images by applying rotations and vertical mirroring. Two‐thirds of the data were randomly assigned to training, while the remainder, consisting of 4 797 images (1 127 class 1 images, 1 835 class 2 images, 1 529 class 3 images, and 306 class 4 images), was used to assess the performance of the algorithm.
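The channel construction described above can be sketched with plain nested lists. The function name `stack_adjacent_slices`, the indexing convention (one slice per micrometre), and the refusal to process the first and last slices are assumptions; the text does not say how the volume borders were handled.

```python
def stack_adjacent_slices(volume, z):
    """Build an H x W x 3 image from the slices at positions z-1, z and z+1.

    volume[d][y][x] is the grey level of slice d (assumed 1 um between slices).
    """
    if not 1 <= z <= len(volume) - 2:
        raise ValueError("z needs a neighbouring slice on both sides")
    before, centre, after = volume[z - 1], volume[z], volume[z + 1]
    return [[(before[y][x], centre[y][x], after[y][x])   # (R, G, B)
             for x in range(len(centre[0]))]
            for y in range(len(centre))]


# Tiny made-up volume: 3 slices of 2 x 2 pixels each.
volume = [[[10, 11], [12, 13]],
          [[20, 21], [22, 23]],
          [[30, 31], [32, 33]]]
rgb = stack_adjacent_slices(volume, z=1)
print(rgb[0][0])  # (10, 20, 30)
```

Regarding the augmentation, the reported figures correspond to exactly nine versions of each labeled image, since 1 777 × 9 = 15 993.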

Regarding the training process, we used the pre‐trained model as a feature extractor through transfer learning, training only the last two layers of the model. The model was trained using an Adam optimizer with an initial learning rate of 0.001, which was multiplied by 0.5 after every five consecutive epochs with no loss reduction. The loss function was sparse categorical cross‐entropy, which compares the SoftMax output with integer class labels without requiring them to be one‐hot encoded. We fed the model with batches of 32 images and stopped training when 15 consecutive epochs had elapsed with no loss reduction. The weights corresponding to the epoch with the minimum validation loss were kept.
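The interplay between the learning‐rate reduction and the stopping rule can be illustrated by replaying a loss curve. This is a simplified, framework‐free sketch: the function name `simulate_schedule`, the made‐up loss values, and the choice to reset the learning‐rate counter after each reduction are assumptions, and the behavior of the actual training callbacks may differ in detail.

```python
def simulate_schedule(losses, lr=0.001, factor=0.5, lr_patience=5, stop_patience=15):
    """Replay a loss curve; return the learning rate used at each epoch run
    and the index of the epoch with the lowest loss."""
    best_loss, best_epoch = float("inf"), None
    stalled = 0            # epochs since the best loss so far
    stalled_since_cut = 0  # stalled epochs since the last learning-rate cut
    lr_per_epoch = []
    for epoch, loss in enumerate(losses):
        lr_per_epoch.append(lr)
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
            stalled = stalled_since_cut = 0
            continue
        stalled += 1
        stalled_since_cut += 1
        if stalled_since_cut == lr_patience:
            lr *= factor                 # e.g. 0.001 -> 0.0005
            stalled_since_cut = 0
        if stalled == stop_patience:
            break                        # early stopping
    return lr_per_epoch, best_epoch


losses = [1.0, 0.8, 0.7] + [0.75] * 30   # made-up curve that plateaus after epoch 2
lr_per_epoch, best_epoch = simulate_schedule(losses)
print(len(lr_per_epoch), best_epoch)     # 18 2
```

In this toy run the loss stops improving after epoch 2, the learning rate is reduced twice before it matters, and training halts after 18 epochs; the weights that would be kept are those of epoch 2.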

The model was then fine‐tuned, by training again only part of it (keeping the first 100 layers frozen). The parameters were the same as in the first training except for a learning rate of 0.0001 for the optimizer. The loss obtained at the end of the fine‐tuning process was 0.53.

2.2.4. Evaluation of the performance of the model

The accuracy score was defined as the ratio of the number of images correctly classified by the model over the total number of images classified. The model assigned a quality score ranging from 1 (poor quality) to 4 (good quality) to each image.

A confusion matrix comparing the score predicted by the model with the actual score was calculated to better assess the performance of the model for each score. Based on the confusion matrix, precision and recall metrics were calculated for each score using the following formulas, where TP is a true positive (the model correctly predicts the positive score), TN is a true negative (the model correctly predicts a negative score), FN is a false negative (the model incorrectly predicts a negative score), and FP is a false positive (the model incorrectly predicts the positive score):

$$\text{Precision} = \frac{TP}{TP + FP}, \qquad \text{Recall} = \frac{TP}{TP + FN}$$

Since there are four scores in this classification problem, we used the One‐versus‐Rest approach which decomposes this problem into four binary classification problems. After calculating the individual performance metric of each score, the overall performance is calculated by taking an average of the performance metrics of all the scores.
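The One‐versus‐Rest computation can be sketched directly from a confusion matrix, taking precision as TP / (TP + FP) and recall as TP / (TP + FN) for each class in turn and then averaging. The function name `one_vs_rest_metrics` and the three‐class matrix below are made up for illustration; they are not the study's data.

```python
def one_vs_rest_metrics(confusion):
    """confusion[i][j] = number of images with true class i predicted as class j."""
    n = len(confusion)
    per_class = []
    for k in range(n):
        tp = confusion[k][k]
        fn = sum(confusion[k]) - tp                        # class k images the model missed
        fp = sum(confusion[i][k] for i in range(n)) - tp   # other images predicted as k
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        per_class.append((precision, recall))
    macro_precision = sum(p for p, _ in per_class) / n
    macro_recall = sum(r for _, r in per_class) / n
    return per_class, macro_precision, macro_recall


# Made-up 3-class confusion matrix, for illustration only.
confusion = [[8, 2, 0],
             [1, 6, 3],
             [1, 0, 9]]
per_class, macro_p, macro_r = one_vs_rest_metrics(confusion)
print(per_class[0])  # (0.8, 0.8)
```

Averaging the per‐class values with equal weight, as done here, gives every score the same influence regardless of how many images it contains.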

2.3. In vivo studies

2.3.1. Panel

LC‐OCT acquisitions were performed on the cheeks of 36 healthy Caucasian female volunteers, divided into three groups: (I) 12 young subjects between 22 and 32 years (mean 28), (II) 12 old and not photoexposed subjects between 62 and 70 years (mean 66), and (III) 12 old and photoexposed subjects between 62 and 71 years (mean 67). Photoexposure was determined through a survey built from the literature that analyzed the level of exposure during childhood, adolescence, and adulthood. 25 , 26 , 27

2.3.2. Image analysis

Three images of 400 × 400 pixels were extracted from each of the 70 layers of the volume, prepared, and analyzed using the deep learning model.

2.3.3. Statistical analysis

On each layer, the mean value of the scores for the three images was computed. The mean and the standard deviation of quality scores were computed for each group. One‐way ANOVA followed by Tukey's HSD multiple comparison test was used to compare the mean quality score. p‐Values of less than 0.05 were considered significant. *: p < 0.05, **: p < 0.01, ***: p < 0.001.
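The aggregation and the first stage of the comparison can be sketched with the standard library alone. The scores below are made up, the function names are hypothetical, and only the F statistic of the one‐way ANOVA is computed; the p‐value and Tukey's HSD post hoc test require the F and studentized range distributions and are left to a statistics package.

```python
from statistics import mean, stdev


def subject_score(layer_scores):
    """layer_scores: one (s1, s2, s3) tuple per layer -> mean of the layer means."""
    return mean(mean(triplet) for triplet in layer_scores)


def one_way_anova_f(groups):
    """F statistic of a one-way ANOVA; groups is a list of lists of subject scores."""
    all_values = [v for g in groups for v in g]
    grand_mean = mean(all_values)
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((v - mean(g)) ** 2 for g in groups for v in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    return (ss_between / df_between) / (ss_within / df_within)


# Made-up subject scores for three small groups (not the study's data).
young = [3.0, 2.5, 3.5]
old_not_exposed = [2.0, 2.5, 1.5]
old_exposed = [1.5, 1.0, 2.0]

print(mean(young), stdev(young))                              # 3.0 0.5
print(one_way_anova_f([young, old_not_exposed, old_exposed])) # 7.0
```

A large F value indicates that the differences between group means are large relative to the spread within groups, which is what the post hoc test then breaks down pair by pair.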

3. RESULTS

3.1. Prediction potential for the deep learning model

To determine the performance of the classification model, it was assessed on 4 797 images previously classified by experts. Figure 2 shows the confusion matrix, which compares the true labels (according to the experts) with those predicted by the model. Each box corresponds to the percentage of images classified in this score by the model. The intensity of the blue color depends on the quality of the model prediction. Hence, the dark blue values on the diagonal indicate that the model makes good predictions whatever the class. Among the 1 127 images scored 1 by the experts (upper row), the model assigned a score of 1 to 927 (82%), a score of 2 to 169 (15%), a score of 3 to 31 (3%), and a score of 4 to none (0%). In the second row, among the 1 835 class 2 images, the model made a correct prediction for 1 564 images; only 151 were predicted with a score of 1, 118 with a score of 3, and 2 with a score of 4. In the third and bottom rows, respectively, 1 271 of the 1 529 class 3 images and 242 of the 306 class 4 images were correctly predicted by the model. Finally, the accuracy score calculated on the test data set was 0.83.

FIGURE 2.

Confusion matrix comparing experts' scores to those predicted by the model Each row corresponds to a score according to experts. Each column corresponds to a predicted score according to the model. Each box corresponds to the percentage of images classified in this score by the model. The intensity of the blue color depends on the quality of the model prediction.
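The accuracy and the per‐class recall can be recomputed from the counts quoted in the text, as a quick consistency check. Only the correctly classified counts and class totals are used; the variable names are illustrative.

```python
# Counts reported in the text: (correctly classified, total) per expert score.
reported = {1: (927, 1127), 2: (1564, 1835), 3: (1271, 1529), 4: (242, 306)}

recall = {score: correct / total for score, (correct, total) in reported.items()}
total_correct = sum(correct for correct, _ in reported.values())
total_images = sum(total for _, total in reported.values())
accuracy = total_correct / total_images
macro_recall = sum(recall.values()) / len(recall)

for score, value in recall.items():
    print(f"score {score}: recall = {value:.2f}")   # 0.82, 0.85, 0.83, 0.79
print(f"accuracy = {accuracy:.2f}, macro recall = {macro_recall:.2f}")  # 0.83, 0.82
```

Precision cannot be recomputed in the same way from the text alone, because the misclassified counts are listed only for the first two rows of the confusion matrix.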

To better assess the prediction quality for each score, the precision and recall metrics were calculated for each score and averaged to obtain an overall score (Table 2). All values were above 0.80 except for the class 4 recall, which was 0.79.

TABLE 2.

Precision and recall metrics for each class and average values of performance measures. Support is the number of instances of each actual class of the test dataset

| Class | Precision | Recall | Support |
|---|---|---|---|
| Class 1 | 0.83 | 0.82 | 1127 |
| Class 2 | 0.81 | 0.85 | 1835 |
| Class 3 | 0.86 | 0.83 | 1529 |
| Class 4 | 0.90 | 0.79 | 306 |
| Average | 0.85 | 0.82 | |

Taken together, the results show that the prediction performance of the deep learning model is satisfactory.

3.2. In vivo evaluation of photo‐aging related changes of dermal fibers state

In order to validate the use of the deep learning model as a new method that automatically scores dermal matrix quality on in vivo LC‐OCT images, it was applied to the assessment of photo‐aging‐related changes.

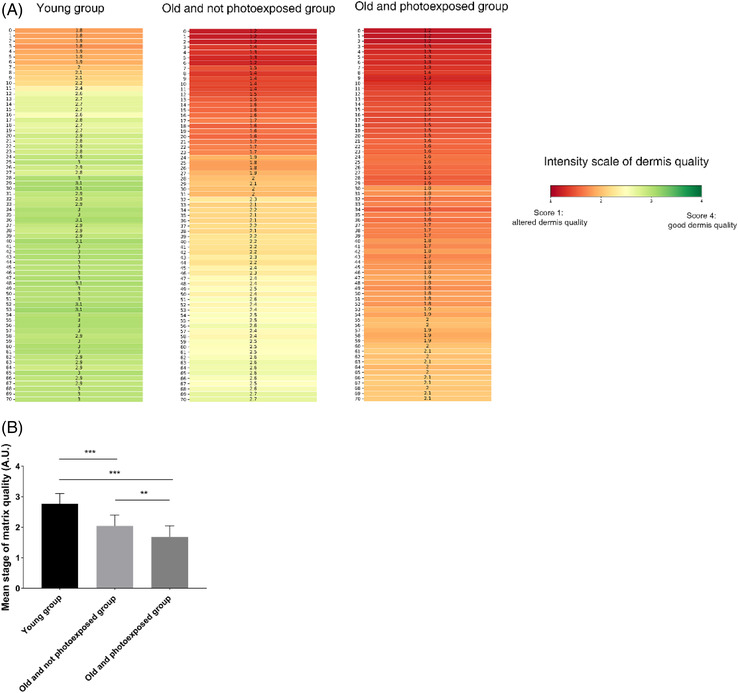

Figure 3A shows the mean profile of the dermal matrix computed for each group. The value mentioned at each micron corresponds to the mean of the scores obtained for all the volunteers in the group. The color scale highlights that the profiles differ according to age and photoexposure, with a greater and deeper alteration for old and photoexposed subjects.

FIGURE 3.

Application of the model to the scoring of dermis quality across (photo‐) aging. (A) Mean profile of the dermal matrix in the three age groups. The value at each micron of depth represents the average quality score computed on all the subjects. Depth 0 corresponds to the dermal‐epidermal junction (DEJ). The color represents dermis quality, from red for a score of 1 (altered dermis quality) to green for a score of 4 (good dermis quality). (B) Comparison of the mean score of dermis quality over all depths between the three age groups. The quality score of the dermal matrix is given as mean ± SD for each group; n = 12 for each group. **: p < 0.05; ***: p < 0.001

Statistical analysis of the average scores obtained over the entire volume of the dermis (Figure 3B) confirms that young subjects had a significantly (p < 0.0001) better quality of the whole dermis (mean 2.77 ± 0.33, n = 12) than elderly subjects, whether photoexposed (mean 1.68 ± 0.37, n = 12) or not photoexposed (mean 2.04 ± 0.36, n = 12). Among the old subjects, the photoexposed group had a significantly more altered dermal matrix than the non‐photoexposed group (p < 0.05).

As expected, results confirmed the impact of chronological aging and photoexposure on the quality of the dermal matrix.

4. DISCUSSION

In the present study, we developed a new automated method to precisely score the quality of the dermal matrix directly from in vivo LC‐OCT images. LC‐OCT is an innovative technology allowing in vivo optical biopsies, in real‐time and with high resolution and deep penetration. 28 Its application was mainly described for the analysis of skin lesions, such as carcinomas and melanomas, that are located in the epidermis. 13 In a previous study, we demonstrated that LC‐OCT allows non‐invasive quantification of superficial dermis thickness. 18 Beyond this global analysis, images could be more informative after an accurate analysis of the state of the dermis. To go even further, it was thus of interest to classify LC‐OCT images according to the score of dermal quality.

Modifications of skin components are classically assessed by experts after long and tedious training in the analysis of such images. 29 Moreover, scoring many images is laborious and time‐consuming, and the results are operator‐dependent. Hence, as seen with similar technologies such as RCM, 30 , 31 deep learning can be useful for identifying structures or classifying states that are usually evaluated only by experts. Neural networks such as Resnest give good results for texture classification, often comparable to clinician level, as reported in the work of Malciu and colleagues. 22 Very recently, epidermal lesions were quantified directly on in vivo 3D LC‐OCT images using a deep learning algorithm. 32

For this study, LC‐OCT images were classified according to four different states, depending on the quality of the dermal matrix (i.e., from score 1 for a low matrix quality to score 4 for the highest matrix quality). As most of the information within LC‐OCT images lies in architectural and morphological features, 33 we focused on texture analysis‐based methods. In this research, we examined two different features used for classification decisions, namely the texture of the dermal matrix and the fragmentation of dermal fibers, which are closely related. 4 , 6 The model was trained on images previously scored by six different experts, each with their own sensitivity to texture variations. To overcome this inter‐expert subjectivity, the most frequent score was used to train the model. An advantage of this approach is that the model returns an integer score rather than a decimal score corresponding to the average of several human readers' assessments. Our model based on MobileNetV3 exhibited good performance (accuracy: 83%, precision: 85%, recall: 82%) in scoring the state of the dermal matrix, which satisfies the requirements of the dermatological and cosmetic fields. 34

This model offers a good compromise between accuracy and latency with a limited number of parameters. Its overall network size is reduced compared with classic large architectures such as VGG19 or ResNet, with only a limited drop in accuracy relative to the state of the art. 35 The architecture of the model is well suited to the needs of the study and provides satisfactory accuracy. To obtain an even more robust model, we plan to increase the number and variety of labeled images and to optimize the parameters of the algorithm. Moreover, this relatively simple model is a first step in the development of an automated method to classify images obtained by LC‐OCT. It can clearly be improved, and a more complex model could increase accuracy. Finally, in this study, the DEJ was detected manually by experts on LC‐OCT images before automatic classification. Since the development of this model, automatic detection of the DEJ has been added. As already demonstrated for RCM images, 36 , 37 such segmentation saves time and improves the sensitivity and specificity of the classification results by overcoming inter‐expert variability.

Thanks to this approach, we are now able to go further in the analysis of dermal images acquired by LC‐OCT. First, we ranked the state of the dermal matrix across aging. As expected, we observed a significant alteration in the state of the dermal matrix in the older age group. These observations agree with the literature, in which it is commonly accepted that dermal fibers undergo progressive degradation during aging. 38 Now that this automatic method for quantifying the state of the dermis has been validated, it could be used to assess the anti‐aging effect of dermo‐cosmetic products targeting the dermis. This study also shows that our model is precise enough to discriminate dermal changes related to chronological aging from those due to photo‐aging. Hence, the model could help in the diagnosis of solar elastosis following long‐term exposure. 39 Beyond healthy skin, this automatic and quantified analysis of in vivo LC‐OCT images could also be applied in clinical investigations to improve the understanding and/or diagnosis of skin disorders affecting the dermis, such as stretch marks, 40 scars of different origins, 41 , 42 or skin fibrosis. 43

In conclusion, the results confirm that a deep learning technique can provide an efficient and objective assessment of the quality of the dermal matrix, thus reducing the workload of experts. This new approach could be used to demonstrate the beneficial and progressive changes brought by dermo‐cosmetic treatments aimed at improving the dermal matrix.

CONFLICT OF INTEREST

Josselin Breugnot, Pauline Rouaud‐Tinguely, Sophie Gilardeau, Delphine Rondeau, Sylvie Bordes, Elodie Aymard, and Brigitte Closs are full employees of SILAB. The authors have no financial conflicts of interest.

FUNDING INFORMATION

None

ETHICS STATEMENT

All of this study was compliant with the Declaration of Helsinki Principles, and it was approved by the institutional review board of SILAB.

ACKNOWLEDGMENT

The authors would like to thank all the volunteers and investigators who participated in this study.

Breugnot J, Rouaud‐Tinguely P, Gilardeau S, et al. Utilizing deep learning for dermal matrix quality assessment on in vivo line‐field confocal optical coherence tomography images. Skin Res Technol. 2023;29:1–8. 10.1111/srt.13221

DATA AVAILABILITY STATEMENT

The data that support the findings of this study are available from the corresponding author upon reasonable request.

REFERENCES

- 1. Parrado C, Mercado‐Saenz S, Perez‐Davo A, Gilaberte Y, Gonzalez S, Juarranz A. Environmental stressors on skin aging. Mechanistic insights. Front Pharmacol. 2019;10:759.

- 2. Farage MA, Miller KW, Elsner P, Maibach HI. Characteristics of the aging skin. Adv Wound Care. 2013;2:5‐10.

- 3. Haydont V, Bernard BA, Fortunel NO. Age‐related evolutions of the dermis: clinical signs, fibroblast and extracellular matrix dynamics. Mech Ageing Dev. 2019;177:150‐156.

- 4. Quan T, Fisher GJ. Role of age‐associated alterations of the dermal extracellular matrix microenvironment in human skin aging: a mini‐review. Gerontology. 2015;61:427‐434.

- 5. Cole MA, Quan T, Voorhees JJ, Fisher GJ. Extracellular matrix regulation of fibroblast function: redefining our perspective on skin aging. J Cell Commun Signal. 2018;12:35‐43.

- 6. Zorina A, Zorin V, Kudlay D, Kopnin P. Age‐related changes in the fibroblastic differon of the dermis: role in skin aging. Int J Mol Sci. 2022;23:6135.

- 7. Newton VL, Mcconnell JC, Hibbert SA, Graham HK, Watson RE. Skin aging: molecular pathology, dermal remodelling and the imaging revolution. G Ital Dermatol Venereol. 2015;150:665‐74.

- 8. Li D, Humayun L, Vienneau E, Vu T, Yao J. Seeing through the skin: photoacoustic tomography of skin vasculature and beyond. JID Innov. 2021;1:100039.

- 9. Pokhrel PK, Helm MF, Greene A, Helm LA, Partin M. Dermoscopy in primary care. Prim Care. 2022;49:99‐118.

- 10. Schwartz M, Levine A, Markowitz O. Optical coherence tomography in dermatology. Cutis. 2017;100:163‐166.

- 11. Schneider SL, Kohli I, Hamzavi IH, Council ML, Rossi AM, Ozog DM. Emerging imaging technologies in dermatology: part I: basic principles. J Am Acad Dermatol. 2019;80:1114‐1120.

- 12. Schneider SL, Kohli I, Hamzavi IH, Council ML, Rossi AM, Ozog DM. Emerging imaging technologies in dermatology: part II: applications and limitations. J Am Acad Dermatol. 2019;80:1121‐1131.

- 13. Dubois A, Levecq O, Azimani H, et al. Line‐field confocal optical coherence tomography for high‐resolution noninvasive imaging of skin tumors. J Biomed Opt. 2018;23:1‐9.

- 14. Chauvel‐Picard J, Bérot V, Tognetti L, et al. Line‐field confocal optical coherence tomography as a tool for three‐dimensional in vivo quantification of healthy epidermis: a pilot study. J Biophotonics. 2022;15:e202100236.

- 15. Davis A, Levecq O, Azimani H, Siret D, Dubois A. Simultaneous dual‐band line‐field confocal optical coherence tomography: application to skin imaging. Biomed Opt Express. 2019;10:694‐706.

- 16. Dubois A, Levecq O, Azimani H, et al. Line‐field confocal time‐domain optical coherence tomography with dynamic focusing. Opt Express. 2018;26:33534‐33542.

- 17. Ogien J, Daures A, Cazalas M, Perrot J‐L, Dubois A. Line‐field confocal optical coherence tomography for three‐dimensional skin imaging. Front Optoelectron. 2020;13:381‐392.

- 18. Pedrazzani M, Breugnot J, Rouaud‐Tinguely P, et al. Comparison of line‐field confocal optical coherence tomography images with histological sections: validation of a new method for in vivo and non‐invasive quantification of superficial dermis thickness. Skin Res Technol. 2020;26:398‐404.

- 19. Jiang R, Kezele I, Levinshtein A, et al. A new procedure, free from human assessment that automatically grades some facial skin structural signs. Comparison with assessments by experts, using referential atlases of skin ageing. Int J Cosmet Sci. 2019;41:67‐78.

- 20. Melanthota SK, Gopal D, Chakrabarti S, Kashyap AA, Radhakrishnan R, Mazumder N. Deep learning‐based image processing in optical microscopy. Biophys Rev. 2022;14:463‐481.

- 21. Esengönül M, Marta A, Beirão J, Pires IM, Cunha A. A systematic review of artificial intelligence applications used for inherited retinal disease management. Medicina. 2022;58:504.

- 22. Malciu AM, Lupu M, Voiculescu VM. Artificial intelligence‐based approaches to reflectance confocal microscopy image analysis in dermatology. J Clin Med. 2022;11:429.

- 23. Howard A, Sandler M, Chen B, et al. Searching for MobileNetV3. In: 2019 IEEE/CVF International Conference on Computer Vision (ICCV). IEEE; 2019. doi: 10.1109/ICCV.2019.00140

- 24. Abadi M, Barham P, Chen J, et al. TensorFlow: a system for large‐scale machine learning. 2016.

- 25. Glanz K, Yaroch AL, Dancel M, et al. Measures of sun exposure and sun protection practices for behavioral and epidemiologic research. Arch Dermatol. 2008;144:217‐222.

- 26. Tatalovich Z, Wilson JP, Mack T, Yan Y, Cockburn M. The objective assessment of lifetime cumulative ultraviolet exposure for determining melanoma risk. J Photochem Photobiol B. 2006;85:198‐204.

- 27. Rosso S, Miñarro R, Schraub S, et al. Reproducibility of skin characteristic measurements and reported sun exposure history. Int J Epidemiol. 2002;31:439‐446.

- 28. Ruini C, Schuh S, Sattler E, Welzel J. Line‐field confocal optical coherence tomography‐practical applications in dermatology and comparison with established imaging methods. Skin Res Technol. 2021;27:340‐352.

- 29. Cheng B, Joe Stanley R, Stoecker WV, et al. Analysis of clinical and dermoscopic features for basal cell carcinoma neural network classification. Skin Res Technol. 2013;19:e217‐e222.

- 30. Kaur P, Dana KJ, Cula GO, Mack MC. Hybrid deep learning for Reflectance Confocal Microscopy skin images. In: 2016 23rd International Conference on Pattern Recognition (ICPR). 2016. doi: 10.1109/ICPR.2016.7899844

- 31. Kose K, Bozkurt A, Alessi‐Fox C, et al. Utilizing machine learning for image quality assessment for reflectance confocal microscopy. J Invest Dermatol. 2020;140:1214‐1222.

- 32. Fischman S, Pérez‐Anker J, Tognetti L, et al. Non‐invasive scoring of cellular atypia in keratinocyte cancers in 3D LC‐OCT images using deep learning. Sci Rep. 2022;12:481.

- 33. Ashok PC, Praveen BB, Bellini N, Riches A, Dholakia K, Herrington CS. Multi‐modal approach using Raman spectroscopy and optical coherence tomography for the discrimination of colonic adenocarcinoma from normal colon. Biomed Opt Express. 2013;4:2179‐2186.

- 34. Cai L, Gao J, Zhao D. A review of the application of deep learning in medical image classification and segmentation. Ann Transl Med. 2020;8:713.

- 35. Abd Elaziz M, Dahou A, Alsaleh NA, Elsheikh AH, Saba AI, Ahmadein M. Boosting COVID‐19 image classification using MobileNetV3 and Aquila optimizer algorithm. Entropy. 2021;23:1383.

- 36. Robic J, Perret B, Nkengne A, Couprie M, Talbot H. Three‐dimensional conditional random field for the dermal‐epidermal junction segmentation. J Med Imaging. 2019;6:024003.

- 37. Kose K, Bozkurt A, Alessi‐Fox C, et al. Segmentation of cellular patterns in confocal images of melanocytic lesions in vivo via a multiscale encoder‐decoder network (MED‐Net). Med Image Anal. 2021;67:101841.

- 38. Naylor EC, Watson REB, Sherratt MJ. Molecular aspects of skin ageing. Maturitas. 2011;69:249‐256.

- 39. O'Leary S, Fotouhi A, Turk D, et al. OCT image atlas of healthy skin on sun‐exposed areas. Skin Res Technol. 2018;24:570‐586. doi: 10.1111/srt.12468

- 40. Schuck DC, de Carvalho CM, Sousa MP, et al. Unraveling the molecular and cellular mechanisms of stretch marks. J Cosmet Dermatol. 2020;19:190‐198.

- 41. Barone N, Safran T, Vorstenbosch J, Davison PG, Cugno S, Murphy AM. Current advances in hypertrophic scar and keloid management. Semin Plast Surg. 2021;35:145‐152.

- 42. Clark AK, Saric S, Sivamani RK. Acne scars: how do we grade them? Am J Clin Dermatol. 2018;19:139‐144.

- 43. Babalola O, Mamalis A, Lev‐Tov H, Jagdeo J. Optical coherence tomography (OCT) of collagen in normal skin and skin fibrosis. Arch Dermatol Res. 2014;306:1‐9.
