Abstract

The identification and characterization of the structural sites which contribute to protein function are crucial for understanding biological mechanisms, evaluating disease risk, and developing targeted therapies. However, the quantity of known protein structures is rapidly outpacing our ability to functionally annotate them. Existing methods for function prediction either do not operate on local sites, suffer from high false positive or false negative rates, or require large site‐specific training datasets, necessitating the development of new computational methods for annotating functional sites at scale. We present COLLAPSE (Compressed Latents Learned from Aligned Protein Structural Environments), a framework for learning deep representations of protein sites. COLLAPSE operates directly on the 3D positions of atoms surrounding a site and uses evolutionary relationships between homologous proteins as a self‐supervision signal, enabling learned embeddings to implicitly capture structure–function relationships within each site. Our representations generalize across disparate tasks in a transfer learning context, achieving state‐of‐the‐art performance on standardized benchmarks (protein–protein interactions and mutation stability) and on the prediction of functional sites from the prosite database. We use COLLAPSE to search for similar sites across large protein datasets and to annotate proteins based on a database of known functional sites. These methods demonstrate that COLLAPSE is computationally efficient, tunable, and interpretable, providing a general‐purpose platform for computational protein analysis.

Keywords: deep learning, functional site annotation, protein structure analysis, representation learning, structural informatics

1. INTRODUCTION

The three‐dimensional structure of a protein determines its functional characteristics and ability to interact with other molecules, including other proteins, endogenous small molecules, and therapeutic drugs. Biochemical interactions occur at specific regions of the protein known as functional sites. We consider functional sites that range from a few atoms which coordinate an ion or catalyze a reaction to larger regions which bind a cofactor or form a protein–protein interaction surface. The identification of such sites—and accurate modeling of the local structure–function relationship—is critical for determining a protein's biological role, including our understanding of disease pathogenesis and ability to develop targeted therapies or protein engineering technologies. Significant effort has gone into curating databases to catalog these structure–function relationships, (Akiva et al., 2014; Furnham et al., 2014; Ribeiro et al., 2018) but this cannot keep up with the rapid increase in proteins in need of annotation. The number of proteins of the Protein Data Bank (PDB) (Berman et al., 2002) increases each year, and AlphaFold (Jumper et al., 2021) has added high‐quality predicted structures for hundreds of thousands more. This explosion of protein structure data necessitates the development of computational methods for identifying, characterizing, and comparing functional sites at proteome scale.

Many widely used methods for protein function identification are based on sequence. Sequence profiles and hidden Markov models built using homologous proteins (Bernhofer et al., 2021; El‐Gebali et al., 2019; Haft et al., 2013; Mi et al., 2005; Mitchell et al., 2019) are often used to infer function by membership in a particular family, but these methods do not always identify specific functional residues and can misannotate proteins in mechanistically diverse families (Schnoes et al., 2009). Additionally, structure and function are often conserved even when sequence similarity is very low, resulting in large numbers of false negatives for methods based on sequence alignment (Rost, 1999; Tian & Skolnick, 2003). Approaches based on identifying conserved sequence motifs within families can help to address these issues (Attwood, 2002; Sigrist et al., 2013). However, these methods suffer from similar limitations as sequences diverge, resulting in high false positive and false negative rates, especially when the functional residues are far apart in sequence (Fetrow & Skolnick, 1998). More generally, sequence‐based methods cannot capture the complex 3D conformations and physicochemical interactions required to accurately define a functional site or inform opportunities to engineer or mutate specific residues.

Recently, methods have applied machine learning to predict function from sequence (Kulmanov & Hoehndorf, 2020; Sanderson et al., 2021) or structure (Gligorijević et al., 2021). However, like profile‐based methods, these lack the local resolution necessary to identify specific functional sites, and their reliance on nonspecific functional labels such as those provided by Gene Ontology terms (Ashburner et al., 2000) often limits practical utility (Ramola et al., 2022). Machine learning approaches that focus on local functional sites are either specific to a particular type of site (e.g., ligand binding, [Tubiana et al., 2022]; [Zhao et al., 2020] enzyme active sites [Moraes et al., 2017]) or require building specific models for each functional site of interest, (Buturovic et al., 2014; Torng & Altman, 2019a) which can be computationally expensive and demands sufficient data to train an accurate model.

A major consideration for building generalizable machine learning models for protein sites is the choice of local structure representation. FEATURE, (Bagley & Altman, 2008) a hand‐crafted property‐based representation, has shown utility for many functionally‐relevant tasks (Buturovic et al., 2014; Liu & Altman, 2011; Tang & Altman, 2014). However, FEATURE uses heterogeneous features (a mix of counts, binary, and continuous) which are more difficult to train on and meaningfully compare in high dimensions. Additionally, FEATURE consists of radial features without considering orientation and does not account for interactions between atoms in 3D, leading to loss of information (Torng & Altman, 2019a). Deep learning presents an attractive alternative by enabling the extraction of features directly from raw data, (LeCun et al., 2015) but the high complexity of deep learning models means that they require large amounts of labeled data. To address this, a paradigm has emerged in which models are pre‐trained on very large unlabeled datasets to extract robust and generalizable features which can then be “transferred” to downstream tasks (Hu et al., 2019; Oquab et al., 2014). This approach has been successfully applied to learn representations of small molecules (Duvenaud et al., 2015; Gilmer et al., 2017) and protein sequences, (Alley et al., 2019; Rives et al., 2019; Sanderson et al., 2021) but there are few examples of representations learned directly from 3D structure. Initial efforts focus on entire proteins rather than sites and operate only at residue‐level resolution (Hermosilla & Ropinski, 2021; Zhang et al., 2022).

We address these issues by developing compressed latents learned from aligned protein structural environments (COLLAPSE), a framework for functional site characterization, identification, and comparison which (a) focuses on local structural sites, defined as all atoms within a 10 Å radius of a specific residue; (b) captures complex 3D interactions at atom resolution; (c) works with arbitrary sites, regardless of the number of known examples; and (d) enables comparison between sites across proteins. COLLAPSE combines self‐supervised methods from computer vision, (Grill et al., 2020) graph neural networks designed for protein structure, (Jing et al., 2020; Jing et al., 2021) and multiple sequence alignments of homologous proteins to learn 512‐dimensional protein site embeddings that capture structure–function relationships both within and between proteins.

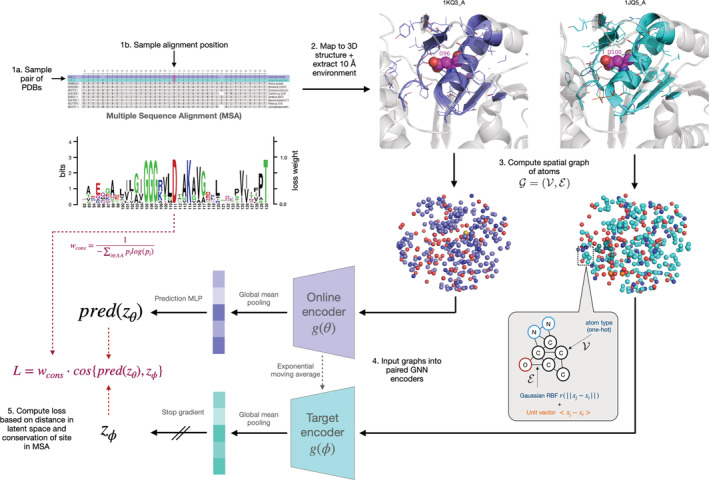

Self‐supervised representation learning refers to the procedure of training a model to extract high‐level features from raw data using one or more “pretext tasks” defined using intrinsic characteristics of the input data. The choice of pretext task is critical to the utility of the learned representations. A popular class of methods involves minimizing the distance between the embeddings of two augmented versions of the same data point (for example, cropped and rotated views of the same image), thereby learning a representation that is robust to noise which is independent of the fundamental features of the original data (Che et al., 2020; Chen & He, 2020; Grill et al., 2020). Since function is largely conserved within a protein family, we draw an analogy between homologous proteins and augmented views of the same image. Specifically, we hypothesized that by pulling together the embeddings of corresponding sites in homologous proteins, we could train the model to learn features which capture the site's structural and functional role. In this scheme, sequence alignments are used to identify correspondences between amino acids, which are then mapped to 3D structures to define the structural site surrounding each residue (Figure 1, Section 2.2).

FIGURE 1.

Schematic of a single iteration of COLLAPSE algorithm. Clockwise from the top left, we show (1 a,b) the process of sampling a pair of sites from the MSA, (2) extracting the corresponding structural environments, and (3) converting into a spatial graph. The inset shows the node and edge featurization scheme. Finally, we show (4) a schematic of the network architecture, consisting of paired graph neural networks followed by mean pooling over all nodes to produce site embeddings. (5) these embeddings are then compared using a loss function weighted by the conservation of the position in the MSA, as shown by the sequence logo in center left

Pre‐trained representations are typically used in one of two settings: (a) transfer learning, which leverages general representations to improve performance on problem‐specific supervised tasks where access to labeled data is limited; and (b) extracting insights about the underlying data from the learned embedding space directly (e.g., via visualization or embedding comparisons) (Detlefsen et al., 2022). In this paper, we illustrate the utility of COLLAPSE protein site in both settings. First, we demonstrate that COLLAPSE generalizes in a transfer learning setting, achieving competitive or best‐in‐class results across a range of downstream tasks. Second, we describe two applications that demonstrate the power of our embeddings for protein function analysis without the need to train any downstream models: an iterated search procedure for identifying similar functional sites across large protein databases, and a method for efficiently annotating putative functional sites in an unlabeled protein. All datasets, models, functionality, and source code can be found in our Github repository (https://github.com/awfderry/COLLAPSE).

2. RESULTS

2.1. Intrinsic evaluation of COLLAPSE embeddings

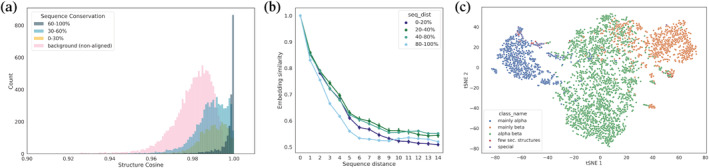

To evaluate the extent to which COLLAPSE embeddings capture relevant structural and functional features, we embedded the environments of all residues in a held‐out set consisting of proteins with varying levels of sequence similarity to proteins in the training set. First, we find that the degree of similarity between embeddings of aligned sites is correlated with the level of conservation of that site in the multiple sequence alignment (MSA) (Figure 2a). Even at less than 30% conservation, aligned sites are significantly more similar on average than a randomly sampled background of nonaligned sites

FIGURE 2.

Analysis of learned embeddings. (a) Raw cosine similarity distributions (i.e., before quantile transformation) of aligned sites, binned by the sequence conservation of the corresponding column of the MSA. Highly conserved positions also have highly similar embeddings, but even less conserved positions have more similar embeddings than randomly sampled nonaligned sites (in pink). (b) Spatial sensitivity of embedding similarity, as measured by the sequence distance between two sites. Results are stratified by the average distance to the closest training protein, demonstrating that neighboring embeddings can be readily distinguished even for proteins with very low similarity to the training set. (c) tSNE projection of average protein‐level embeddings for single‐domain chains, colored by the highest‐level CATH class, showing that embeddings effectively capture secondary structure patterns

We also confirmed that our embeddings capture local information at a residue‐level resolution, meaning that neighboring environments can be effectively distinguished from each other. Indeed, the normalized cosine similarity between residue embeddings decreases between the residues in sequence increases (Figure 2b). This effect generalizes even to proteins far away from the training set in sequence identity. Finally, among chains with a single fold according to CATH 4.2 (Orengo et al., 1997) (, the top‐level structural class can be distinguished clearly in protein‐level embeddings, suggesting that secondary structure is a major feature captured by COLLAPSE (Figure 2c). Lower levels of the CATH hierarchy also cluster clearly in low‐dimensional space (Figure S1).

2.2. Transfer learning and fine‐tuning to improve performance on supervised tasks

To assess COLLAPSE in a transfer learning context, we use ATOM3D, a suite of benchmarking tasks and datasets for machine learning in structural biology (Townshend et al., 2021). We selected two tasks from ATOM3D which focus on protein sites: protein interface prediction (PIP) and mutation stability prediction (MSP). We compare performance to the ATOM3D reference models and to the task‐specific GVP‐GNN reported in (Jing et al., 2021), which is state‐of‐the‐art for all tasks. Table 1 reports the results both with and without fine‐tuning the embedding model parameters. Without fine‐tuning, COLLAPSE embeddings and a simple classifier achieve results comparable or better than the ATOM3D reference models trained specifically for each task. Fine‐tuning improves performance further, achieving state‐of‐the‐art on PIP and comparable performance to the GVP‐GNN on MSP, outperforming FEATURE as well as the ATOM3D baselines (Table S6). As an external evaluation, we also evaluated COLLAPSE on the prediction of protein–protein interaction sites compared to MaSIF, a deep learning model designed for protein surfaces (Gainza et al., 2020). Our models achieve close to the performance of MaSIF and better than baseline methods despite the fact that the randomly‐sampled surface points of the MaSIF dataset are out‐of‐distribution for our model pre‐trained on environments centered around individual residues (Figure S8).

TABLE 1.

Performance of models trained on ATOM3D benchmark tasks

| Task (metric) | COLLAPSE (fixed) | COLLAPSE (fine‐tuned) | ATOM3D 3DCNN | ATOM3D GNN | ATOM3D ENN | GVP‐GNN |

|---|---|---|---|---|---|---|

| PIP (AUROC) | 0.848 ± 0.018 | 0.881 ± 0.004 | 0.844 ± 0.002 | 0.669 ± 0.001 | N/A | 0.866 ± 0.004 |

| MSP (AUROC) | 0.616 ± 0.006 | 0.668 ± 0.018 | 0.574 ± 0.005 | 0.621 ± 0.009 | 0.574 ± 0.040 | 0.680 ± 0.015 |

Note: Comparisons are made with ATOM3D reference architectures—3D convolutional neural network (3DCNN), graph neural network (GNN), and equivariant neural network (ENN)—as well as the geometric vector perceptron (GVP‐GNN) results reported in Jing et al. (2021), which is state‐of‐the‐art for these datasets. The metric is area under the receiver operator characteristic curve (AUROC), and we report mean and standard deviation across three training runs. Numbers in bold indicate best performance on each task (within one standard deviation).

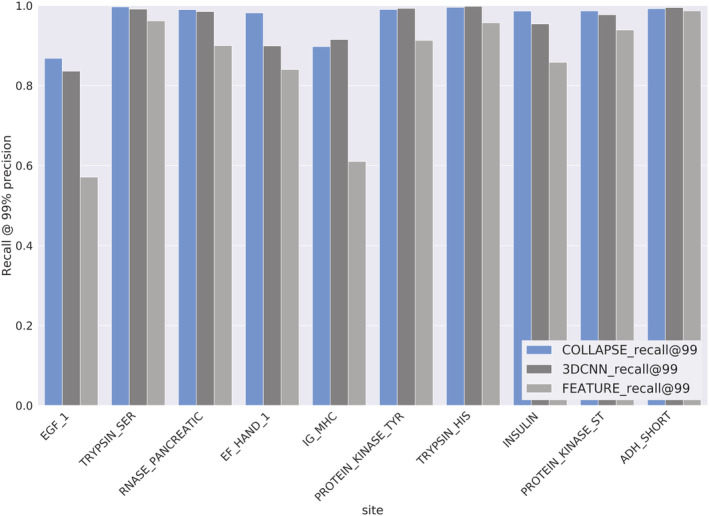

2.3. Building functional site prediction models using COLLAPSE embeddings

We train prediction models for 10 functional sites defined by the prosite database, (Sigrist et al., 2013) which identifies local sites using curated sequence motifs. On sites labeled true positive (TP) by prosite, COLLAPSE outperforms the analogous FEATURE models and perform comparably or better than task‐specific 3DCNN models trained end‐to‐end, achieving greater than 86% recall on all sites at a threshold of 99% precision (Figure 3). prosite also provides false negatives (FNs; true proteins which are not recognized by the prosite pattern) and false positives (FPs; proteins which match the prosite pattern but are not members of the functional family). Table 2 shows the number of proteins correctly reclassified by the models trained on TP sites. For all families, COLLAPSE correctly identifies a greater or equal number of FN proteins compared to FEATURE and 3DCNN classifiers. The improvement is notable in some cases, such as a 162.5% increase in proteins detected for IG_MHC, a 37.5% increase for ADH_SHORT, and a 17.6% increase for EF_HAND_1. For four of the seven proteins with FP data, we correctly rule out all FPs. For ADH_SHORT and EF_HAND_1, we perform 9.1% and 4.0% worse relative to 3DCNN, respectively, but this slight increase in FPs is not substantial relative to the improvement in FNs recovered for these families. To evaluate our embeddings beyond prosite, we also trained functional residue prediction models on four benchmark datasets from (Xin & Radivojac, 2011). Models based on COLLAPSE embeddings perform significantly better than the best‐performing method on the prediction of zinc binding sites and enzyme catalytic sites (15.3% and 9.3% improvement, respectively), and within 10% of the best‐performing method on DNA binding and phosphorylation sites (Table S5).

FIGURE 3.

Performance of models trained on true positives from 10 prosite functional sites in 5‐fold cross‐validation: COLLAPSE embeddings + SVM (blue), 3DCNN trained end‐to‐end (dark gray), and FEATURE vectors + SVM (light gray). Metric is the recall for all TP annotations at a threshold, which produces 99% precision. COLLAPSE achieves better recall than FEATURE and better or comparable recall to the 3DCNN

TABLE 2.

Performance of models trained on prosite TP/TN on held‐out prosite FP/FN annotations

| Site | prosite label | COLLAPSE | FEATURE | 3DCNN | prosite total |

|---|---|---|---|---|---|

| ADH_SHORT | FN | 11 | 8 | 7 | 14 |

| FP | 30 | 33 | 33 | 33 | |

| EF_HAND_1 | FN | 40 | 28 | 34 | 48 |

| FP | 120 | 106 | 125 | 128 | |

| EGF_1 | FN | 60 | 34 | 58 | 90 |

| FP | 19 | 19 | 19 | 19 | |

| IG_MHC | FN | 21 | 8 | 8 | 47 |

| FP | 31 | 31 | 31 | 31 | |

| PROTEIN_KINASE_ST | FN | 269 | 264 | 268 | 271 |

| PROTEIN_KINASE_TYR | FN | 3 | 3 | 3 | 3 |

| FP | 14 | 20 | 20 | 20 | |

| TRYPSIN_HIS | FN | 10 | 3 | 10 | 16 |

| FP | 4 | 4 | 4 | 4 | |

| TRYPSIN_SER | FN | 9 | 9 | 9 | 12 |

| FP | 1 | 1 | 1 | 1 |

Note: Comparisons are made with FEATURE and 3DCNN numbers as reported in Torng et al. (2019). The number of proteins which are correctly reclassified (i.e., FPs predicted as negative, FNs predicted as positive) is reported for each method (higher is better). Numbers in bold indicate best performance on each site.

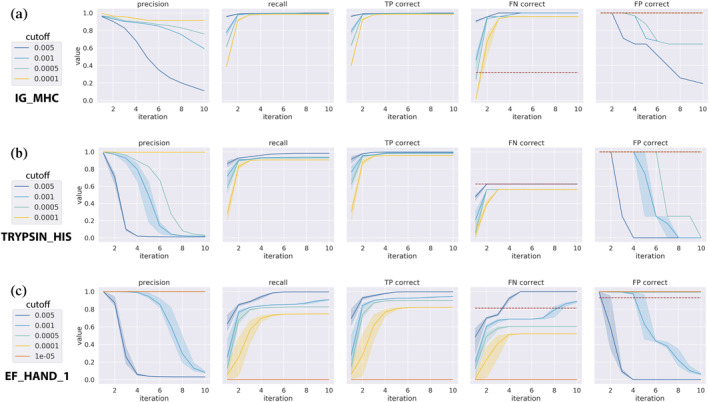

2.4. Iterative search for functional sites across protein databases

While COLLAPSE embeddings can be used to train highly accurate models for functional site detection, we can only train such models for those functional sites for which we have sufficient training examples. Another way to understand the possible function of a site is to analyze similar sites retrieved from a structure database. The set of hits retrieved by this search may contain known functional annotations or other information which sheds light on the query site. We use iterative COLLAPSE embedding comparisons to perform such a search across the PDB. We investigate the performance of this method on the prosite dataset while varying two parameters: the number of iterations and the p‐value cutoff for inclusion at each iteration. The method generally achieves high recall and precision after 2–5 iterations at a p‐value cutoff of (Figure 4; Figure S4). Notably, when evaluating on the FN and FP subsets, our search method even outperforms the cross‐validated models on some sites (e.g., IG_MHC, Figure 4a). However, the precision and recall characteristics vary widely across families; in some cases it predicts the same set of proteins as the trained model (e.g., TRYPSIN_HIS; Figure 4b), while in others it performs worse (e.g., EF_HAND_1; Figure 4c). Importantly, the method requires no training and is very efficient: runtime per iteration scales linearly with the size of the query set and with database size (Figure S5).

FIGURE 4.

Iterated functional site search performance per iteration for three prosite families. Colors denote different user‐specified empirical p‐value cutoffs and error bars represent variance over three randomly sampled queries. From left to right, metrics shown are: Precision across all results (including TP, FP, and FN), recall across all results, proportion of TP sites predicted correctly, proportion of FN sites predicted correctly, and proportion of FP sites predicted correctly. For FN and FP, the performance of our CV‐trained models is shown as a red dashed line. Sample error is shown for three random starting queries. The three families shown are (a) IG_MHC, (b) TRYPSIN_HIS, and (c) EF_HAND_1, in order of relative performance compared to CV‐trained models. While the performance characteristics vary across sites, the number of iterations and p‐value cutoff can be tuned to achieve good performance

2.5. Annotation of functional sites in protein structures

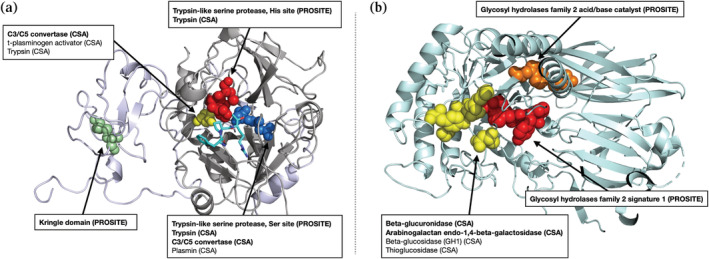

Our iterative search method assumes that a site of interest has already been identified. However, when a new protein is discovered and its structure is solved, the locations of functional sites are often unknown. By comparing local environments in the protein's structure to those contained in databases of known functional sites, we can predict which sites are likely to be functional. Figure 5 shows two example annotations using a modified mutual best hit criterion against a reference database consisting of embeddings from prosite and the catalytic site atlas (CSA). First, we show the structure of meizothrombin, a precursor to thrombin and a trypsin‐like serine protease with a canonical His‐Asp‐Ser catalytic triad. Our method correctly identifies all three residues as belonging to the trypsin‐like serine protease family in prosite (Figure 5a). Hits against the CSA, which are more specific, also include closely homologous proteins such as C3/C5 convertase. The associated kringle domain is also identified by its characteristic disulfide bond. Second, we show the structure of beta‐glucuronidase (Figure 5b), a validation set protein which has no homologs in the training set. We correctly identify all four catalytic residues defined by the CSA (in yellow), as well as prosite signatures corresponding to the glycosyl hydrolases family 2, the family which contains beta‐glucuronidase.

FIGURE 5.

Results of functional annotation tool applied to (a) meizothrombin (PDB ID 1A0H) and (b) beta‐glucuronidase (PDB ID 3HN3), both at p < . No member of the beta‐glucuronidase family is in the training set (maximum sequence identity 2.8%). Functional residues identified by our method are shown as spheres, with colors corresponding to the functional site. Hits labeled in bold are also significant at a more stringent cutoff (p < ). All hits represent either the correct function or those of very closely related proteins, showing that COLLAPSE is effective for annotation of proteins whether or not similar proteins are present in the training set

3. DISCUSSION

3.1. The COLLAPSE self‐supervised training framework enables the learning of rich, informative embeddings of functional sites

The utility of COLLAPSE embeddings for functional analysis derives from several key features of the training algorithm. First, the use of homology as a source of self‐supervision signal allows the model to learn patterns of structural conservation across proteins, imbuing the model with a biological inductive bias towards features that may be important to the protein's function. Such patterns could in theory be learned by a model which sees each protein independently, but it would require much more data and training time to identify subtle signals across disparate proteins. The bootstrap training objective not only forces the model to learn a distance function for comparing embeddings that meaningfully captures the functional relationship between sites, but by sampling pairs of proteins and residues each iteration it also greatly increases the effective size of the training set relative to models that take in a single protein (or even residue) at a time. Indeed, we have found empirically that nonbootstrap objectives (e.g., those based on masked residue modeling or autoencoders) produce representations that are much less informative for functional tasks. While MSAs have proved crucial to the success of sequence‐based models, to our knowledge this is the first time they have been used to provide a supervision signal for a structure‐based model.

Second, by focusing on local protein sites, our embeddings are more precise and flexible than models which produce a single representation of an entire protein. COLLAPSE embeddings can be used for arbitrary tasks on the level of single residues or even individual functional atoms, to detect important regions in proteins, and to identify functional relationships between proteins even if they are divergent in sequence or global fold. Moreover, by aggregating over multiple residues or entire proteins, site‐specific embeddings can also be applied to domain‐level or full‐protein tasks. For simple tasks such as distinguishing high‐level CATH class (Figure 2c), a naive approach of averaging over all residues is sufficient, but more complex aggregation methods will be necessary for many problems. As a proof of concept, we trained a simple recurrent neural network (RNN) model on sequences of COLLAPSE embeddings to make protein‐level predictions of fold and enzyme class (Supplementary Note 9). Compared to 16 other sequence and structure based methods, (Hermosilla et al., 2020) our RNNs trained on COLLAPSE embeddings rank in the top five across all tasks and testing sets (Figure S7).

Finally, by using an atomic graph representation and a GVP‐GNN encoder, COLLAPSE captures all inter‐atomic interactions (in contrast to methods which operate at a residue level) and produces representations that are fully equivariant to 3D rotation and translation. The importance of capturing local structural features in functional site analysis is demonstrated by the improved performance of COLLAPSE relative to sequence‐based methods such as MMSeqs2 (Steinegger & Söding, 2017) (Table S4), especially on the more difficult FN and FP proteins. While sequence embeddings from ESM‐1b (Rives et al., 2021) do produce very good predictive models for certain sites, they dramatically overfit to others (notably IG_MHC) and generally underperform across all sites in search and annotation applications (Figures S9–S10, Supplementary Note 5). This suggests that sequence and structure representations are complementary, and many tasks may benefit from a combination of the two approaches.

3.2. COLLAPSE produces general‐purpose representations which facilitate the improvement of computational methods for diverse applications in protein function analysis

COLLAPSE is effective in transfer learning and as fixed embeddings, and generalizes across tasks that require the model to learn different aspects of the protein structure–function relationship. Although it may not be the state‐of‐the‐art on every task, its competitive performance in every context we tested demonstrates its utility as a general‐purpose representation. This flexibility makes COLLAPSE embeddings ideal for not only building new predictive models and performing comparative analyses, but also for easily incorporating structural information into existing computational methods. Given the improved performance over FEATURE across our benchmarks, we also expect that substituting COLLAPSE embeddings will lead to improved performance for most applications addressed by the FEATURE suite of methods (Liu & Altman, 2011; Tang & Altman, 2014; Torng & Altman, 2019b).

Another important aspect which sets COLLAPSE apart from task‐specific machine learning methods is the ability to perform meaningful comparisons between functional sites directly in the embedding space. Due to the bootstrap pre‐training objective, the embedding distance provides a functionally relevant distance measure for comparing sites. Note that for all comparisons, our method of standardizing embedding comparisons is critical for determining their statistical significance as well as increasing their effective range (Supplementary Note 1). We demonstrate the benefits of using comparisons directly in the embedding space by developing methods for functional site search and annotation, both of which are efficient, generalizable, and allow a user to tune the sensitivity and specificity of the results. For example, for discovery applications it may be desirable to optimize for sensitivity at the cost of more false positives, while prioritizing drug targets for experimental validation may require greater specificity.

The ability of iterative nearest‐neighbor searches in the embedding space to identify known sites in prosite demonstrates that functional sites cluster meaningfully in the embedding space. The effect of changing input parameters (number of iterations and p‐value cutoff) on the sensitivity and specificity of the results varies somewhat across functional families. In some cases (notably IG_MHC), this method achieves better sensitivity for FNs than even machine learning models trained using CV, while in others (EF_HAND_1, PROTEIN_KINASE_TYR) it cannot achieve this without a significant drop in precision. This is likely due to differences in structural conservation between sites, whereby sites which are more structurally heterogeneous are more difficult to fully capture using a nearest‐neighbor approach than a trained model which can learn to recognize diverse structural patterns. However, since training an accurate model requires access to a representative training dataset which is not always available, we consider our search method to be a powerful complement to site‐specific models in cases where labeled data is scarce or where the similarity to a specific query is important. We also note that while structural search methods exist for full proteins (Holm & Rosenström, 2010; van Kempen et al., 2022) or binding sites, (Liu & Altman, 2011; Valasatava et al., 2014; Zemla et al., 2022) ours is the first search tool specifically designed for arbitrary local structural sites.

Functional annotation of novel protein structures is of great value to the structural biology and biochemistry communities, but there are few tools for doing so at the residue level. COLLAPSE provides a method for residue‐level annotation which is efficient and tunable, making it suitable for both screening and discovery purposes. As shown by the examples in Figure 5, the method identifies known functional annotations while limiting false positives to closely related homologs, even when the input is not related to any protein in the training set (<5% sequence identity for beta‐glucuronidase). Confidence in a new prediction's accuracy can be assessed by its significance level or more sophisticated evaluations for example, multiple neighboring residues being annotated with the same function may imply a more likely correct prediction. Additionally, because we can identify many sites on a single protein, it is also possible to identify previously unknown alternative functional sites or allosteric sites. Importantly, all predictions can be explained and cross‐referenced by rich metadata from the reference data sources, enhancing trust and usability. Of the PDBs returned for true positive sites in meizothrombin and beta‐glucuronidase, 45.5% (20/44) and 87.5% (14/16), respectively, were not hits in a protein PSI‐BLAST search with standard parameters, demonstrating the value of local structural comparisons for functional annotation. Additionally, the method is easy to update and extend over time via the addition of new sources of functional data, and reference databases can even be added or removed on a case‐by‐case basis.

3.3. Advances in protein structure prediction provide great opportunities for expanding functional analysis and discovery

COLLAPSE depends on the availability of solved 3D protein structures in the PDB. This restricts not only the number of homologous proteins that can be compared at each training step, but also the set of protein families which can even be considered—less than one third of alignments in the CDD contained at least two proteins with structures in the PDB. Including structures from AlphaFold Structure Database (Varadi et al., 2022) would dramatically increase the coverage of our training dataset, but the utility of including predicted structures alongside experimentally solved structures in training or evaluation of machine learning models still needs to be evaluated (Derry et al., 2022). A preliminary evaluation of our annotation method on the predicted structure for meizothrombin reveals high agreement with the corresponding PDB structure (Figure S6) despite a root‐mean‐square deviation of 3.67 Å between the two structures, suggesting that COLLAPSE may already generalize to AlphaFold predictions for some proteins. Given this finding, we anticipate that COLLAPSE will be a powerful tool for functional discovery within the AlphaFold database, which has already yielded several novel insights (Akdel et al., 2021; Bordin et al., 2022).

In summary, COLLAPSE is a general‐purpose protein structure embedding method for functional site analysis. We provide a Python package and command‐line tools for generating embeddings for any protein site, conducting functional site searches, and annotating input protein structures. We also provide downloadable databases of embeddings for a nonredundant subset of the PDB and for known functional sites. We anticipate that as more data becomes available, these tools will serve as a catalyst for data‐driven biological discovery and become a critical component of the protein research toolkit.

4. MATERIALS AND METHODS

4.1. Training dataset and data processing

COLLAPSE pre‐training relies on a source of high‐quality protein families associated with known structures and functions, as well as multiple sequence alignments (MSAs) in order to define site correspondences. We use the NCBI‐curated subset of the Conserved Domain Database (CDD), (Lu et al., 2020; Marchler‐Bauer et al., 2003) which explicitly validates domain boundaries using 3D structural information. We downloaded all curated MSAs from the CDD (n = 17,906 as of Sep. 2021) and filtered out those that contained less than two proteins with structures deposited in the PDB. After removing chains with incomplete data or which could not be processed properly, this resulted in 5643 alignments for training, corresponding to 16,931 PDB chains (Figure S2). We then aligned the sequences extracted from the ATOM records in each PDB chain to its MSA, without altering the original alignment, thus establishing the correct mapping from alignment position to PDB residue number. As a held‐out set for validation, we select 1370 families defined by pfam (El‐Gebali et al., 2019) which do not share a common superfamily cluster (as defined by the CDD) with any training family. We then bin these families based on the average sequence identity to the nearest protein in the training dataset and sample five families from each bin, resulting in 50 validation families with varying levels of similarity to the training data (Table S1).

4.1.1. Definition of sites and environments

In general, we define protein sites relative to the location of the relevant residues. Specifically, we define the environment surrounding a protein site as all atoms within 10 Å radius of the functional center of the central residue. The functional center is defined as the centroid of the functional atoms of the side chain as defined by previous work (Bagley & Altman, 2008; Torng & Altman, 2019a). For residues with two functional centers (Trp and Tyr), during training one is randomly chosen at each iteration, and at inference time the choice depends on the specific application (i.e., if the function being evaluated depends on the aromatic or polar group; see Table S2). If the functional atom is not known (e.g., for annotating unlabeled proteins), we take the average over all heavy side‐chain atoms.

4.1.2. Empirical background calculation

To make comparisons more meaningful and to provide a mechanism for calculating statistical significance, we quantile‐transform all cosine similarities relative to an empirical cosine similarity distribution. To compute background distributions, we use a high‐resolution (<2.0 Å), nonredundant subset of the PDB at 30% sequence identity provided by the PISCES server (Wang & Dunbrack, 2003) (5833 proteins). We compute the embeddings of 100 sites from each structure, corresponding to five for each amino acid type, sampled with replacement. Exhaustively computing all pairwise similarities is computationally infeasible, so we sample pairs of environments and compute the cosine similarity of each. We performed this procedure to generate empirical similarity distributions for the entire dataset and for each amino acid individually (Figure S3). Cosine similarities are then quantile‐transformed relative to the relevant empirical cumulative distribution function:

The p‐value for any embedding comparison is then defined as , or the probability that a randomly sampled pair of embeddings is at least as similar as the pair in question. Amino acid–specific empirical backgrounds are used for functional site search and are aggregated into a single combined distribution for annotation. For the functional site–specific background used to filter hits during annotation, we use an empirical background computed by comparing each functional site embedding to the embeddings of the corresponding amino acid in the 30% nonredundant PDB subset.

4.2. COLLAPSE training algorithm

Each iteration of the COLLAPSE pre‐training algorithm consists of the following steps, as shown in Figure 1. We trained our final model using the Adam optimizer (Kingma & Ba, 2015) with a learning rate of 1 e‐4 and a batch size of 48 pairs for 1200 epochs on a single TESLA V100 GPU. Model selection and hyperparameter tuning was evaluated using intrinsic embedding characteristics (Section 2.1) and ATOM3D validation set performance (Section 4.3). See Supplementary Note 2 for further discussion of hyperparameter selection and modeling choices.

Step 1. Randomly sample one pair of proteins from the MSA and one aligned position from each protein (i.e., there is not a gap in either protein). Map MSA column position to PDB residue number using the pre‐computed alignment described in Section 2.2. Note that this step ensures that each epoch, a different pair of residues is sampled from each CDD family, effectively increasing the size of the training dataset by many orders of magnitude relative to a strategy which trains on individual proteins or MSAs.

Step 2. Extract 3D environment around each selected residue (Section 4.1.1). Only atoms from the same chain are considered. Waters and hydrogens are excluded but ligands, metal ions, and cofactors are included.

Step 3. Convert each environment into a spatial graph . Each node in the graph represents an atom and is featurized by a one‐hot encoding of the atom type carbon (C), nitrogen (N), oxygen (O), fluorine (F), sulfur (S), chlorine (Cl), phosphorus (P), selenium (Se), iron (Fe), zinc (Zn), calcium (Ca), magnesium (Mg), and “other” , representing the most common elements found in the PDB. Edges in the graph are defined between any pair of atoms separated by than 4.5 Å. Following (Jing et al., 2021) edges between atoms with coordinates are featurized using (1) a 16‐dimensional Gaussian radial basis function encoding of distance and (2) a unit vector encoding orientation.

Step 4. Compute embeddings of each site. We embed each pair of structural graphs using a pair of graph neural networks, each composed of three layers of Geometric Vector Perceptrons (GVPs), (Jing et al., 2020; Jing et al., 2021) which learn rotationally‐equivariant representations of each atom and have proved to be state‐of‐the‐art in a variety of tasks involving protein structure (Hsu et al., 2022; Jing et al., 2021). We adopt all network hyperparameters (e.g., number of hidden dimensions) from (Jing et al., 2021). Formally, each GVP learns a transformation of the input graph into 512‐dimensional embeddings of each node:

The final embedding of the entire graph is then computed by global mean pooling over the embeddings of each atom. While in principle, the two networks could be direct copies of each other (i.e., have tied parameters ), we adopt the approach proposed by Grill et al. (2020) which refers to the two networks as the online encoder and the target encoder, respectively. Only the online network parameters are updated by gradient descent, while the target network parameters are updated as an exponential moving average of :

where is a momentum parameter which we set equal to 0.99. No gradients are propagated back through the target network, so only is updated based on the data during training. Intuitively, the target network produces a regression target based on a “decayed” representation, while the online network is trained to continually improve this representation. Only the online network is used to generate embeddings for all downstream applications.

Step 5. Compute loss and update parameters. The loss function is defined directly in the embedding space using the cosine similarity between the target network embedding and the online network embedding projected through a simple predictor network . This predictor network learns to optimally match the outputs of the online and target networks and is crucial to avoiding collapsed representations. To increase the signal‐to‐noise ratio and encourage the model to learn functionally relevant information, we weight the loss at each iteration by the sequence conservation of that column in the original MSA (defined by the inverse of the Shannon's entropy of amino acids at that position, ignoring gaps). To reduce bias, we include all proteins in the alignment curated by CDD for computing conservation, even those without corresponding structures. As a result of this, the loss function is expressed as:

where

Finally, we symmetrize the loss by passing each site in the input pair through both online and target networks and summing the loss from each. This symmetrized loss is then used to optimize the parameters of the online network using gradient descent.

4.3. Transfer learning and fine‐tuning

We retrieved the pre‐processed PIP and MSP datasets from (Townshend et al., 2021) as well as the performance metrics for baseline models. Both datasets consist of paired residue environments, so we embed the environments surrounding each residue in the pair, concatenate the embeddings, and train a two‐layer feed‐forward neural network to predict the binary outcome (see Supplementary Note 3 for details). For the comparison with MaSIF, we obtained the MaSIF‐site training and testing datasets from (Gainza et al., 2020). Since MaSIF operates on a dense mesh of points along the protein surface, we computed embeddings for environments centered around each surface coordinate. We then added a two‐layer feed‐forward network to predict whether or not the residue is part of a protein–protein interaction site (Supplementary Note 4). For all tasks, we trained models with and without allowing the parameters of the embedding model to change (fine‐tuned and fixed, respectively). Hyperparameters were selected by monitoring the relevant metric on the validation set.

4.4. Training site‐specific models on prosite data

We choose 10 sites presented in (Torng & Altman, 2019a) selected because they are the most challenging to predict using FEATURE‐based approaches (Buturovic et al., 2014; Torng & Altman, 2019a). For each functional site, we train a binary classifier on fixed COLLAPSE embeddings in five‐fold nested cross‐validation (CV). The classifiers are support vector machines (SVMs) with radial basis function kernels and weighted by class frequency. Within each training fold, the inner CV is used to select the regularization hyperparameter and the outer CV is used for model evaluation. To enable more accurate comparisons, we use the same dataset, evaluation procedures as (Torng & Altman, 2019a). We benchmark against reported results for SVMs trained on FEATURE vectors (a direct comparison to our procedure) and 3D convolutional neural networks (3DCNNs) trained end‐to‐end on the functional site structures (the current state of the art for this task). We use prosite FN/FP sites as an independent validation of our trained models, using an ensemble of the models trained on each CV fold and the classification threshold determined above. A site is considered positive if the probability estimate from any of the five‐fold models is greater than the threshold. Some proteins contain more than one site; in these cases, the protein is considered to be positive if any sites are predicted to be positive.

4.5. Iterated functional site search

First, we embed the database to be searched against using the pre‐trained COLLAPSE model. For the results presented in Section 2.4, we use the same prosite dataset used to train our cross‐validated models to enable accurate comparisons. However, we also provide an embedding dataset for the entire PDB and scripts for generating databases for any set of protein structures. Then, we index the embedding database using FAISS, (Johnson et al., 2021) which enables efficient similarity searches for high‐dimensional data. For each site, we then perform the following procedure five times with different random seeds in order to assess the variability of results under different query sites. The input parameters are the number of iterations and the p‐value cutoff for selecting sites at each iteration .

Sample a single site from the prosite TP dataset (to simulate querying a known functional site), generate COLLAPSE embedding, and add to query set.

Compute effective cosine similarity cutoff using the quantile of the empirical background for the functional amino acid of the query site (e.g., cysteine for an EGF_1 site).

Compare embedding(s) of query to database and retrieve all neighbors within of the query.

Add all neighbors to query set and repeat Step 3 times. Note that when there is more than one query point, neighbors to any point in the query are returned.

Compute precision and recall of final query set, using prosite data as ground truth.

4.6. Protein site annotation

Instead of a database of all protein sites, the annotation method requires a database of known functional sites. We use all true positive sites defined in prosite. For each pattern, we identify all matching PDBs using the ScanProsite tool (Sigrist et al., 2013) and extract the residues corresponding to all fully conserved positions in the pattern (i.e., where only one residue is allowed). The environment around each residue is embedded using COLLAPSE. We also embed all residues in the CSA, a curated dataset of catalytic residues responsible for an enzyme's function. All data processing matches the pre‐training procedure. The final dataset consists of 25,407 embeddings representing 1870 unique functional sites.

The annotation method operates in a similar fashion to the search method, where each residue in the input protein is embedded and compared to the functional site database. Any residue that has a hit with a p‐value below the pre‐specified cutoff is returned as a potential functional site. To filter out false positives due to common or nonspecific features (e.g., small polar residues in alpha‐helices), we also remove hits which are not significant against the empirical distribution specific to that functional site (Section 4.1.2). This results in a modified mutual best hit criterion with two user‐specified parameters: the residue‐level and site‐level significance thresholds. Along with each hit is the metadata associated with the corresponding database entry (PDB ID, functional site description, etc.) so each result can be examined in more detail. For the examples presented we remove all ligand atoms from the input structure to reduce the influence of nonprotein atoms on the embeddings.

AUTHOR CONTRIBUTIONS

Alexander Derry: Conceptualization (lead); data curation (lead); formal analysis (lead); funding acquisition (equal); investigation (lead); methodology (lead); resources (equal); software (lead); validation (lead); visualization (lead); writing – original draft (lead); writing – review and editing (equal). Russ B. Altman: Conceptualization (supporting); funding acquisition (equal); investigation (supporting); methodology (supporting); project administration (lead); resources (equal); supervision (lead); writing – review and editing (equal).

Supporting information

Data S1: Supporting Information

ACKNOWLEDGMENTS

We thank Kristy Carpenter, Delaney Smith, Adam Lavertu, and Wen Torng for useful discussions. Computing for this project was performed on the Sherlock cluster; we would like to thank Stanford University and the Stanford Research Computing Center for providing computational resources and support. A.D. is supported by LM012409 and R.B.A. is supported by NIH GM102365 and Chan Zuckerberg Biohub.

Derry A, Altman RB. COLLAPSE: A representation learning framework for identification and characterization of protein structural sites. Protein Science. 2023;32(2):e4541. 10.1002/pro.4541

Review Editor: Nir Ben‐Tal

Funding information Chan Zuckerberg Initiative; National Institutes of Health, Grant/Award Number: GM102365; U.S. National Library of Medicine, Grant/Award Number: LM012409

DATA AVAILABILITY STATEMENT

The data that support the findings of this study are openly available in Zenodo at http://doi.org/10.5281/zenodo.6903423, reference number 6903423.

REFERENCES

- Akdel M, Pires DE, Pardo EP, et al. A structural biology community assessment of AlphaFold 2 applications. bioRxiv. 2021:461876. 10.1101/2021.09.26.461876 [DOI] [Google Scholar]

- Akiva E, Brown S, Almonacid DE, Barber AE 2nd, Custer AF, Hicks MA, et al. The structure‐function linkage database. Nucleic Acids Res. 2014;42:D521–30. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Alley EC, Khimulya G, Biswas S, AlQuraishi M, Church GM. Unified rational protein engineering with sequence‐based deep representation learning. Nat Methods. 2019;16:1315–22. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ashburner M, Ball CA, Blake JA, Botstein D, Butler H, Cherry JM, et al. Gene ontology: tool for the unification of biology. Nat Genet. 2000;25:25–9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Attwood TK. The PRINTS database: a resource for identification of protein families. Brief Bioinform. 2002;3:252–63. [DOI] [PubMed] [Google Scholar]

- Bagley SC, Altman RB. Characterizing the microenvironment surrounding protein sites. Protein Sci. 2008;4:622–35. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Berman HM, Battistuz T, Bhat TN, Bluhm WF, Bourne PE, Burkhardt K, et al. The Protein Data Bank. Acta Crystallogr D Biol Crystallogr. 2002;58:899–907. [DOI] [PubMed] [Google Scholar]

- Bernhofer M, Dallago C, Karl T, Satagopam V, Heinzinger M, Littmann M, et al. PredictProtein ‐ predicting protein structure and function for 29 years. Nucleic Acids Res. 2021;49:W535–40. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bordin N, Sillitoe I, Nallapareddy V, et al. AlphaFold2 reveals commonalities and novelties in protein structure space for 21 model organisms. bioRxiv. 2022:494367. 10.1101/2022.06.02.494367 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Buturovic L, Wong M, Tang GW, Altman RB, Petkovic D. High precision prediction of functional sites in protein structures. PLoS One. 2014;9:e91240. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Che F, Yang G, Zhang D, Tao J, Shao P, Liu T. Self‐supervised graph representation learning via bootstrapping. arXiv. 2020;2011:05126. 10.48550/arXiv.2011.05126 [DOI] [Google Scholar]

- Chen X, He K. Exploring simple Siamese representation learning. arXiv. 2020. 2011.10566. 10.48550/arXiv.2011.10566 [DOI] [Google Scholar]

- Derry A, Carpenter KA, Altman RB. Training data composition affects performance of protein structure analysis algorithms. Pac Symp Biocomput. 2022;27:10–21. [PMC free article] [PubMed] [Google Scholar]

- Detlefsen NS, Hauberg S, Boomsma W. Learning meaningful representations of protein sequences. Nat Commun. 2022;13:1914. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Duvenaud D, Maclaurin D, Aguilera‐Iparraguirre J, et al. Convolutional networks on graphs for learning molecular fingerprints. Advances in Neural Information Processing Systems. 2015;28. [Google Scholar]

- El‐Gebali S, Mistry J, Bateman A, et al. The Pfam protein families database in 2019. Nucleic Acids Res. 2019;47:D427–32. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fetrow JS, Skolnick J. Method for prediction of protein function from sequence using the sequence‐to‐structure‐to‐function paradigm with application to glutaredoxins/thioredoxins and T1 ribonucleases. J Mol Biol. 1998;281:949–68. [DOI] [PubMed] [Google Scholar]

- Furnham N, Holliday GL, de Beer TA, Jacobsen JO, Pearson WR, Thornton JM. The catalytic site atlas 2.0: cataloging catalytic sites and residues identified in enzymes. Nucleic Acids Res. 2014;42:D485–9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gainza P, Sverrisson F, Monti F, Rodolà E, Boscaini D, Bronstein MM, et al. Deciphering interaction fingerprints from protein molecular surfaces using geometric deep learning. Nat Methods. 2020;17:184–92. [DOI] [PubMed] [Google Scholar]

- Grill J‐B, Strub F, Altché F, et al. Bootstrap your own latent ‐ a new approach to self‐supervised learning. Adv Neural Inf Process Syst. 2020;33:21271–84. [Google Scholar]

- Gilmer J, Schoenholz SS, Riley PF, Vinyals O, Dahl GE. Neural message passing for quantum chemistry. Proceedings of the 34th International Conference on Machine Learning. 2017;70:1263–72. [Google Scholar]

- Gligorijević V, Renfrew PD, Kosciolek T, Leman JK, Berenberg D, Vatanen T, et al. Structure‐based protein function prediction using graph convolutional networks. Nat Commun. 2021;12:1–14. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Haft DH, Selengut JD, Richter RA, Harkins D, Basu MK, Beck E. TIGRFAMs and genome properties in 2013. Nucleic Acids Res. 2013;41:D387–95. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hermosilla P, Schäfer M, Lang M, et al. Intrinsic‐extrinsic convolution and pooling for learning on 3D protein structures. arXiv. 2020;2007:06252. 10.48550/arXiv.2007.06252 [DOI] [Google Scholar]

- Hermosilla P, Ropinski T. Contrastive representation learning for 3D protein structures. arXiv. 2021;2205:15675. 10.48550/arXiv.2205.15675 [DOI]

- Holm L, Rosenström P. Dali server: conservation mapping in 3D. Nucleic Acids Res. 2010;38:W545–9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hsu C, Verkuil R, Liu J, et al. Learning inverse folding from millions of predicted structures. bioRxiv. 2022:487779. 10.1101/2022.04.10.487779 [DOI] [Google Scholar]

- Hu W, Liu B, Gomes J, et al. Strategies for pre‐training graph neural networks. International conference on learning representations; 2020. [Google Scholar]

- Jing B, Eismann S, Soni PN, Dror RO. Equivariant graph neural networks for 3D macromolecular structure. arXiv. 2021;2106:03843. 10.48550/arXiv.2106.03843 [DOI] [Google Scholar]

- Jing B, Eismann S, Suriana P, Townshend RJL, Dror R. Learning from protein structure with geometric vector Perceptrons. arXiv. 2020;2009:01411. 10.48550/arXiv.2009.01411 [DOI] [Google Scholar]

- Johnson J, Douze M, Jegou H. Billion‐scale similarity search with GPUs. IEEE Trans Big Data. 2021;7:535–47. [Google Scholar]

- Jumper J, Evans R, Pritzel A, Green T, Figurnov M, Ronneberger O, et al. Highly accurate protein structure prediction with AlphaFold. Nature. 2021;596:583–9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kingma DP, Ba JL. Adam: a method for stochastic optimization. in 3rd international conference on learning representations, ICLR 2015 ‐ conference track proceedings (2015).

- Kulmanov M, Hoehndorf R. DeepGOPlus: improved protein function prediction from sequence. Bioinformatics. 2020;36:422–9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- LeCun Y, Bengio Y, Hinton G. Deep learning. Nature. 2015;521:436–44. [DOI] [PubMed] [Google Scholar]

- Liu T, Altman RB. Using multiple microenvironments to find similar ligand‐binding sites: application to kinase inhibitor binding. PLoS Comput Biol. 2011;7:e1002326. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lu S, Wang J, Chitsaz F, Derbyshire MK, Geer RC, Gonzales NR, et al. CDD/SPARCLE: the conserved domain database in 2020. Nucleic Acids Res. 2020;48:D265–8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Marchler‐Bauer A, Anderson JB, DeWeese‐Scott C, Fedorova ND, Geer LY, He S, et al. CDD: a curated Entrez database of conserved domain alignments. Nucleic Acids Res. 2003;31:383–7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Moraes JPA, Pappa GL, Pires DEV, Izidoro SC. GASS‐WEB: a web server for identifying enzyme active sites based on genetic algorithms. Nucleic Acids Res. 2017;45:W315–9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mi H, Lazareva‐Ulitsky B, Loo R, Kejariwal A, Vandergriff J, Rabkin S, et al. The PANTHER database of protein families, subfamilies, functions and pathways. Nucleic Acids Res. 2005;33:D284–8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mitchell AL, Attwood TK, Babbitt PC, Blum M, Bork P, Bridge A, et al. InterPro in 2019: improving coverage, classification and access to protein sequence annotations. Nucleic Acids Res. 2019;47:D351–60. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Oquab M, Bottou L, Laptev I, Sivic J. Learning and transferring mid‐level image representations using convolutional neural networks. 2014 IEEE conference on computer vision and pattern recognition; 2014. New York (NY): IEEE. p. 1717–1724. [Google Scholar]

- Orengo CA, Michie AD, Jones S, Jones DT, Swindells MB, Thornton JM. CATH–a hierarchic classification of protein domain structures. Structure. 1997;5:1093–108. [DOI] [PubMed] [Google Scholar]

- Ramola R, Friedberg I, Radivojac P. The field of protein function prediction as viewed by different domain scientists. bioRxiv. 2022:488641. 10.1101/2022.04.18.488641 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ribeiro AJM, Holliday GL, Furnham N, Tyzack JD, Ferris K, Thornton JM. Mechanism and catalytic site atlas (M‐CSA): a database of enzyme reaction mechanisms and active sites. Nucleic Acids Res. 2018;46:D618–23. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Rives A, Meier J, Sercu T, et al. Biological structure and function emerge from scaling unsupervised learning to 250 million protein sequences. Proc Natl Acad Sci U S A. 2021;118:e2016239118. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Rost B. Twilight zone of protein sequence alignments. Protein Eng. 1999;12:85–94. [DOI] [PubMed] [Google Scholar]

- Sanderson T, Bileschi ML, Belanger D, Colwell LJ. ProteInfer: deep networks for protein functional inference. bioRxiv. 2021:461077. 10.1101/2021.09.20.461077 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Schnoes AM, Brown SD, Dodevski I, Babbitt PC. Annotation error in public databases: misannotation of molecular function in enzyme superfamilies. PLoS Comput Biol. 2009;5:e1000605. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Steinegger M, Söding J. MMseqs2 enables sensitive protein sequence searching for the analysis of massive data sets. Nat Biotechnol. 2017;35:1026–8. [DOI] [PubMed] [Google Scholar]

- Sigrist CJA, de Castro E, Cerutti L, et al. New and continuing developments at PROSITE. Nucleic Acids Res. 2013;41:D344–7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Tian W, Skolnick J. How well is enzyme function conserved as a function of pairwise sequence identity? J Mol Biol. 2003;333:863–82. [DOI] [PubMed] [Google Scholar]

- Tang GW, Altman RB. Knowledge‐based fragment binding prediction. PLoS Comput Biol. 2014;10:e1003589. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Tubiana J, Schneidman‐Duhovny D, Wolfson HJ. ScanNet: an interpretable geometric deep learning model for structure‐based protein binding site prediction. Nat Methods. 2022;19:730–9. [DOI] [PubMed] [Google Scholar]

- Torng W, Altman RB. Graph convolutional neural networks for predicting drug‐target interactions. J Chem Inf Model. 2019;473074:4131–49. [DOI] [PubMed] [Google Scholar]

- Torng W, Altman RB. High precision protein functional site detection using 3D convolutional neural networks. Bioinformatics. 2019;35:1503–12. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Townshend RJL, Vögele M, Suriana PA, et al. ATOM3D: tasks on molecules in three dimensions. Proceedings of the Neural Information Processing Systems Track on Datasets and Benchmarks; 2021. Red Hook (NY): Curran Associates, Inc. [Google Scholar]

- van Kempen M, Kim SS, Tumescheit C, Mirdita M, Söding J, Steinegger M. Foldseek: fast and accurate protein structure search. bioRxiv. 2022:479398. 10.1101/2022.02.07.479398 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Valasatava Y, Rosato A, Cavallaro G, Andreini C. MetalS3, a database‐mining tool for the identification of structurally similar metal sites. J Biol Inorg Chem. 2014;19:937–45. [DOI] [PubMed] [Google Scholar]

- Varadi M, Anyango S, Deshpande M, Nair S, Natassia C, Yordanova G, et al. AlphaFold protein structure database: massively expanding the structural coverage of protein‐sequence space with high‐accuracy models. Nucleic Acids Res. 2022;50:D439–44. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Wang G, Dunbrack RL. PISCES: a protein sequence culling server. Bioinformatics. 2003;19:1589–91. [DOI] [PubMed] [Google Scholar]

- Xin F, Radivojac P . Computational methods for identification of functional residues in protein structures. Curr Protein Pept Sci. 2011;12:456–69. [DOI] [PubMed] [Google Scholar]

- Zemla A, Allen JE, Kirshner D, Lightstone FC. PDBspheres ‐ a method for finding 3D similarities in local regions in proteins. bioRxiv. 2022:474934. 10.1101/2022.01.04.474934 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Zhang Z, Xu M, Jamasb A, et al. Protein representation learning by geometric structure Pretraining. arXiva. 2022;2203:06125. 10.48550/arXiv.2203.06125 [DOI] [Google Scholar]

- Zhao J, Cao Y, Zhang L. Exploring the computational methods for protein‐ligand binding site prediction. Comput Struct Biotechnol J. 2020;18:417–26. [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Data S1: Supporting Information

Data Availability Statement

The data that support the findings of this study are openly available in Zenodo at http://doi.org/10.5281/zenodo.6903423, reference number 6903423.