Abstract

Induced pluripotent stem cells (iPSCs) can be differentiated into mesenchymal stem cells (iPSC-MSCs), retinal ganglion cells (iPSC-RGCs), and retinal pigment epithelial cells (iPSC-RPEs) to meet the demands of regenerative medicine. Since the production of iPSCs and iPSC-derived cell lineages generally requires massive and time-consuming laboratory work, an artificial intelligence (AI)-assisted approach that can facilitate cell classification and recognize the degree of cell differentiation is in critical demand. In this study, we propose the multi-slice tensor model, a modified convolutional neural network (CNN) designed to classify iPSC-derived cells and evaluate the differentiation efficiency of iPSC-RPEs. We removed the fully connected layers and projected the features using principal component analysis (PCA), and subsequently classified iPSC-RPEs according to their degree of differentiation. With the assistance of a support vector machine (SVM), this model further showed the capability to classify iPSCs, iPSC-MSCs, iPSC-RPEs, and iPSC-RGCs with an accuracy of 97.8%. In addition, the proposed model accurately recognized the differentiation of iPSC-RPEs and showed the potential to identify candidate cells with ideal features while simultaneously excluding cells with immature or abnormal phenotypes. This rapid screening/classification system may facilitate the translation of iPSC-based technologies into clinical uses, such as cell transplantation therapy.

Keywords: induced pluripotent stem cells, retinal pigment epithelial cells, artificial intelligence, deep learning, convolutional neural network, traditional machine learning

1. Introduction

Induced pluripotent stem cell (iPSC) technologies have been shown to hold great promise for personalized therapy, translational medicine, and regenerative medicine [1]. Because iPSCs can be generated from somatic cells via the transduction of specific transcription factors [2], scientists have reprogrammed patient-derived somatic cells to generate patient-specific iPSCs [3,4,5]. Remarkably, silencing the expression of human leukocyte antigen (HLA) class I allows the establishment of universal HLA iPSCs characterized by low immunogenicity [6]. In addition, following stimulation by defined factors, iPSCs can undergo differentiation into different lineages and exhibit robust phenotypic and genotypic changes [2,7]. For instance, iPSCs can be differentiated into various retinal cell types, including retinal pigment epithelial cells (iPSC-RPEs) [8], retinal ganglion cells (iPSC-RGCs) [9,10], and retinal organoids [11,12]. These iPSC-derived retinal cell lineages have been utilized for disease modeling [9], pathogenesis investigation [9], and regenerative medicine [13].

Age-related macular degeneration (AMD), including dry and wet types, is the leading cause of visual impairment or severe visual loss in the elderly population in developing countries [14]. In a study by Takahashi et al., patient-derived skin fibroblasts were used to generate iPSC-RPEs for transplantation [15]. These iPSC-RPEs exhibited high quality, consistency, and safety, and could be generated in large quantities [16]. The generation of iPSC-derived cell products for clinical application requires complicated characterization and quality control, which is very time-consuming and labor-intensive work [15,16]. These disadvantages may limit the production efficiency and the attainable quantity of iPSC-RPEs, thereby reducing the clinical applicability of iPSC-derived cell products.

Deep learning is a branch of machine learning, which is part of the field of artificial intelligence (AI) [17]. Traditional machine learning algorithms require extracting the features from the original data through feature engineering and have been widely used for image recognition [18,19]. However, these algorithms are predominantly designed to deal with low-dimensional data and therefore carry some disadvantages [20]. Deep learning technologies originated from artificial neural networks (ANNs), which can achieve learning goals via the acquisition of large amounts of data [21]. Unlike traditional machine learning, which processes the original images by splicing or extracting their features, deep learning algorithms are able to extract the features automatically by convolving the original images, followed by pooling, downsampling, or upsampling [22]. Widely used traditional machine learning algorithms include support vector machines (SVMs), random forests (RFs), and principal component analysis (PCA). In contrast, frequently used deep learning algorithms include multilayer perceptrons (MLPs), recurrent neural networks (RNNs), and convolutional neural networks (CNNs). Owing to their multiple advantages, deep learning algorithms have been extensively used in image analysis and other biomedical applications [23].

CNNs represent one of the deep learning algorithms. Several studies reported the use of CNNs for cell classification and the evaluation of cell differentiation. For example, Niioka et al., used CNNs to identify the stage of C2C12 mouse myoblast differentiation with an accuracy rate of 91.3%. After data augmentation by flipping and rotation, the accuracy rate of evaluation was improved by 10% [24]. Kusumoto et al., used CNN to distinguish iPSC-derived endothelial cells and non-endothelial cells with an accuracy rate of more than 90% [25]. Orita et al., employed CNN to examine the readiness of cultivated iPSC-derived cardiomyocytes for experimental procedures with an accuracy of 89.7% [26]. Overall, these reports demonstrated that CNN could be used as a feasible tool for biological image recognition and the evaluation of cell maturation and differentiation. To meet the demands of iPSC technologies in personalized therapy, translational medicine, and transplantation, the cultivation, maintenance, and the characterization of differentiated cells require large-scale manpower and management efforts. In this study, we aimed to establish an AI-based screening system that can rapidly screen the iPSC-derived cell lineages and recognize the differentiated cells with ideal morphologies and other features, while simultaneously excluding the cells with immature or other unexpected phenotypes. This rapid screening/selection system may facilitate the translation of iPSC-based technologies into clinical uses, such as cell transplantation therapy.

2. Materials and Methods

2.1. Cell Culture

iPSCs were seeded on dishes pre-coated with Geltrex™ matrix (#A1413301; Thermo Fisher Scientific, Waltham, MA, USA) dissolved in DMEM/F-12 (#10565-018; Thermo Fisher Scientific) and maintained in StemFlex™ medium (#A3349401; Thermo Fisher Scientific, Waltham, MA, USA) in a humidified incubator at 37 °C and 5% CO2. iPSCs were subcultured by dissociation in Versene solution (#15040066; Thermo Fisher Scientific, Waltham, MA, USA) and re-seeding in StemFlex medium. The induction of iPSC-MSC differentiation was conducted following the methods described by Hynes et al. [27]. Briefly, undifferentiated iPSCs without mouse embryonic fibroblast feeders were dissociated with a cell scraper and washed using the MSC wash medium containing MEM α-based medium supplemented with 5% fetal bovine serum, 50 U/mL penicillin, and 50 μg/mL streptomycin. The wash medium with the feeder-free iPSC suspension was centrifuged at 400× g for 5 min at 4 °C, and then the supernatant was removed. Subsequently, these iPSC colonies were resuspended in MSC culture medium consisting of MEM α-based medium supplemented with 10% fetal bovine serum, 2 mM L-glutamine, 1 mM sodium pyruvate, 50 U/mL penicillin, 50 μg/mL streptomycin, non-essential amino acids (NEAA), and HEPES solution. The medium was changed every 3 to 4 days, and iPSC colonies were differentiated into a mixture of heterogeneous cell types after a 2-week differentiation time course. Serial passaging was used to select MSC-like cells, which were more homogeneous and exhibited pronounced fibroblast morphology. For the differentiation of iPSCs into iPSC-RPEs and iPSC-RGCs, we followed the procedures reported by Regent et al. [28] and Yang et al. [8], and the protocols for the routine generation of iPSC-RGCs in our laboratory [9,10], respectively.

2.2. Image Acquisition and Image Data Augmentation

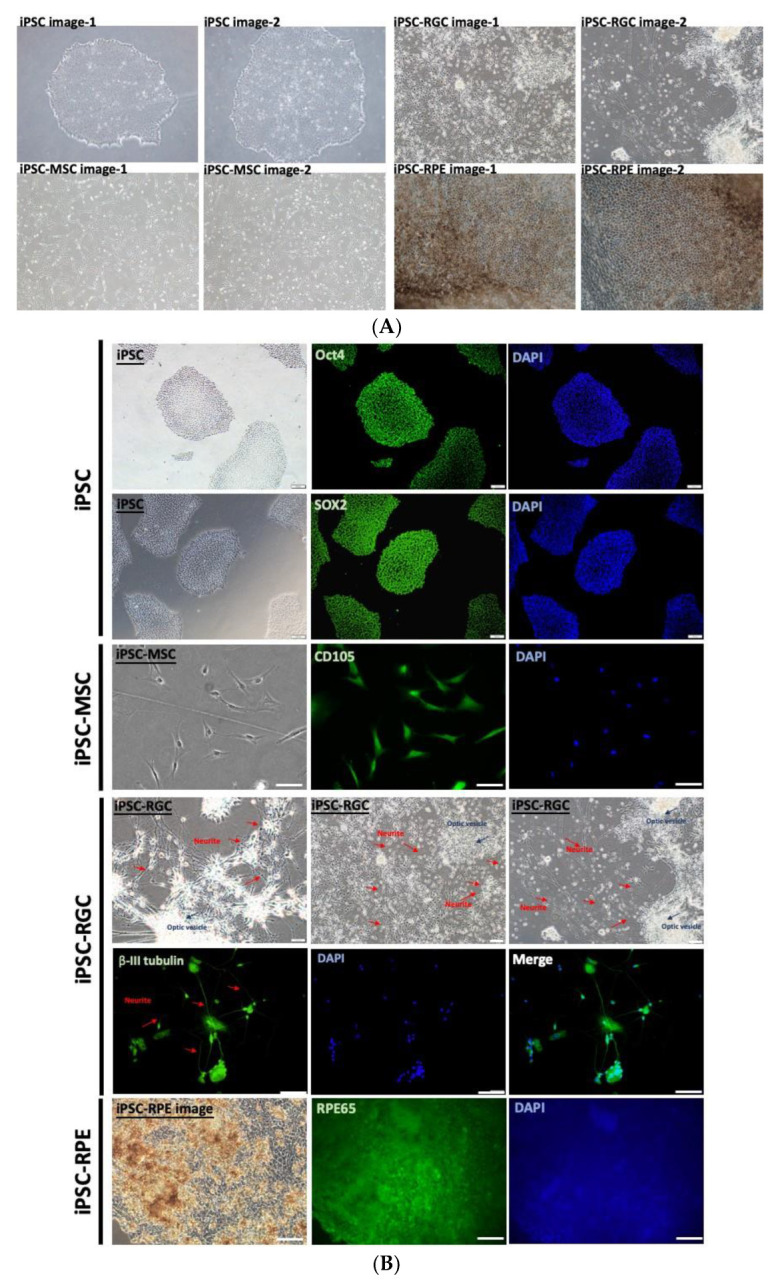

To establish the AI-based iPSC screening system that can rapidly recognize iPSCs and iPSC-derived cell lineages, we sought to use both traditional machine learning and deep learning algorithms to recognize the images of iPSCs and iPSC-derived retinal or mesenchymal lineage cells. Meanwhile, we attempted to use these algorithms to evaluate the differentiation degree of iPSC-derived RPEs. All the images of iPSCs and iPSC-derived lineage cells were captured by qualified technicians or research assistants using an inverted fluorescence microscope (Olympus IX71; Olympus Corporation, Tokyo, Japan). At the end of the differentiation procedures, all differentiated lineage cells (i.e., iPSC-MSCs, iPSC-RGCs, and iPSC-RPEs) with any given differentiation degree were enrolled in this study and cell images were obtained. Representative images of undifferentiated iPSCs and iPSC-derived cells with optimal differentiation are shown in Figure 1A. Under the cultivation conditions using the aforementioned protocols, undifferentiated iPSCs consistently formed compacted colonies exhibiting well-defined margins, large nuclei, less cytoplasm, and other embryonic stem cell-like morphologies (Figure 1A), consistent with previously published results [29]. After receiving the stimuli and undergoing proper differentiation, iPSC-MSCs presented typical stromal cell- or fibroblast-like morphologies (Figure 1A), which were clearly different from those of undifferentiated iPSCs. Following the stepwise differentiation protocol [10] for iPSC-RGCs, iPSCs formed neuroepithelium and optic vesicles at days 16 and 46 post-induction, respectively. Eventually, the optic vesicles were switched into neuronal culture with B-27 supplement and Notch signaling inhibitor to differentiate into iPSC-RGCs (Figure 1A). For the differentiation of iPSC-RPEs, several remarkable pigmented patches were observed at the end of the differentiation time course (Figure 1A).

Figure 1.

Characterization of iPSCs, iPSC-MSCs, iPSC-RGCs, and iPSC-RPEs with optimal differentiation. (A) Representative images of iPSCs, iPSC-MSCs, iPSC-RGCs, and iPSC-RPEs obtained by phase-contrast microscopy. (B) Validation of the indicated cell types by immunofluorescent staining of the indicated lineage-specific markers. Nuclei stained with DAPI. Scale bars = 100 μm.

In addition to the morphologies and histological findings, the specific features of undifferentiated iPSCs and iPSC-derived lineage cells were validated by examining the biological markers of each cell type. Using immunofluorescence staining, iPSCs exhibiting typical embryonic stem cell-like morphologies were found to be stained strongly positive for Oct4 and Sox2 (Figure 1B). iPSC-MSCs not only presented fibroblast-like morphologies, but were stained positive for CD190 (Figure 1B). The iPSC-RGCs were characterized by the formation of neurites projected from the somas within the optic vesicles. The neurites and somas of iPSC-RGCs were stained positive for beta III-tubulin (Figure 1B). iPSC-RPEs were characterized by an extensive distribution of pigmented patches and positive staining for RPE65 (Figure 1B).

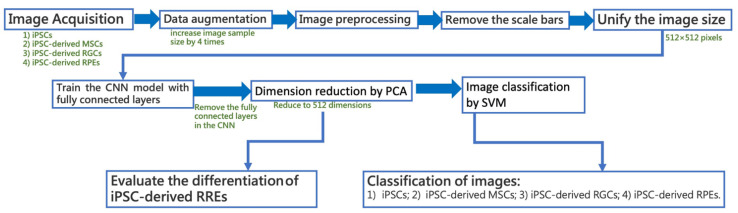

Briefly, the training of these algorithms for image recognition was conducted as summarized in Figure 2. Overall, 1938 images of iPSCs and other differentiated cells were enrolled, including 401 images of iPSCs, 565 images of iPSC-MSCs, 370 images of iPSC-RPEs, and 602 images of iPSC-RGCs. The image file formats were either JPG or TIFF. The magnification of these images was between 10 and 20X. Prior to the training of the CNN, the image dataset was split into the training (1291 images) and test (647 images) subsets. The images of the training subset were subjected to data augmentation, which involved horizontal flip, vertical flip, and horizontal and vertical flip combined, resulting in 5164 images in the training subset. Of the augmented data, 20% (1032 images) were selected as the validation subset. The data in the test subset were not augmented. After the data augmentation, the number of images of iPSCs increased from 401 to 1604, the number of images of iPSC-MSCs increased from 565 to 2260, the number of images of iPSC-RGCs increased from 602 to 2408, and the number of iPSC-RPE images increased from 370 to 1480. Images were preprocessed to unify their size. To avoid incorrect feature extraction, the scale bars in all figures were removed. Subsequently, we trained the CNNs, which included fully connected layers. After removing the fully connected layers, PCA was used to reduce the number of dimensions of the deep features extracted from the images. After the feature projection, SVM was used to classify the pooled images in the validation set into four categories: iPSCs, iPSC-MSCs, iPSC-RGCs, and iPSC-RPEs. The maximal variance between iPSC images and the images to be tested was used to distinguish the features among these four categories. For iPSC-RPEs, the projected distances between iPSC-RPEs and iPSCs were used to evaluate their differentiation degree.

Figure 2.

The training scheme of the neural network algorithm for the recognition of cell images.
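The flip-based augmentation described above can be illustrated with a minimal sketch. It assumes that each image is held as a NumPy array of shape height × width × channels; the function name `augment_with_flips` and the placeholder array are hypothetical and are not taken from the original implementation.

```python
import numpy as np

def augment_with_flips(image):
    """Return the original image plus its three flipped variants."""
    return [
        image,
        np.flip(image, axis=1),       # horizontal flip (mirror left-right)
        np.flip(image, axis=0),       # vertical flip (mirror top-bottom)
        np.flip(image, axis=(0, 1)),  # horizontal and vertical flip combined
    ]

# Placeholder array standing in for a micrograph (hypothetical data).
demo = np.arange(4 * 4 * 3).reshape(4, 4, 3)
variants = augment_with_flips(demo)

# Each training image yields four images, so 1291 images become 5164.
n_train = 1291
n_augmented = n_train * len(variants)
```

Because every source image contributes exactly four variants, the augmented training subset is four times the size of the original one, which matches the 1291 to 5164 increase reported above.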

2.3. Image Preprocessing

To enhance the capacity of our models to distinguish images at both low and high magnification and to minimize the adverse effects of excessive dimensions during the training, images of different cells were preprocessed and subjected to feature scaling, aiming to train the neural network to detect the non-repetitive images during data augmentation. From the macroscopic perspective, the 512 × 512 × 3 tensor (macroscopic tensor) was used as an input of the CNN model. From the microscopic perspective, a copy of this tensor was divided equally into four 256 × 256 × 3 tensors in order to detect the subtle differences among images. After the division, one macroscopic image and four microscopic images were subjected to five CNNs for the subsequent training. Before the training, the image order was randomly shuffled to enhance the convergence rate and accuracy, and the random seed was set to zero. Because the input size of the macroscopic tensor was 512 × 512, an average pooling layer was added in front of the macroscopic input to ensure that the inputs of all five CNN models were 256 × 256 × 3 in size.
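A minimal sketch of how one 512 × 512 × 3 image could be turned into the five 256 × 256 × 3 inputs is given below. It assumes NumPy arrays and implements the 2 × 2 average pooling outside the network for illustration only; the function names `average_pool_2x2` and `multi_slice_inputs` are hypothetical.

```python
import numpy as np

def average_pool_2x2(tensor):
    """Average each non-overlapping 2 x 2 block, halving height and width."""
    h, w, c = tensor.shape
    return tensor.reshape(h // 2, 2, w // 2, 2, c).mean(axis=(1, 3))

def multi_slice_inputs(image):
    """Build one macroscopic and four microscopic 256 x 256 x 3 inputs."""
    assert image.shape == (512, 512, 3)
    macro = average_pool_2x2(image)  # whole field of view, downsampled
    quadrants = [
        image[r:r + 256, c:c + 256, :]  # one quarter at full resolution
        for r in (0, 256)
        for c in (0, 256)
    ]
    return [macro] + quadrants

rng = np.random.default_rng(0)       # seed 0, as in the text
image = rng.random((512, 512, 3))    # placeholder for a resized micrograph
inputs = multi_slice_inputs(image)
```

The macroscopic input keeps the full field of view at half resolution, whereas each quadrant keeps the original resolution over a quarter of the field, so the five branches see the same cells at two effective scales.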

2.4. Model Design and Training

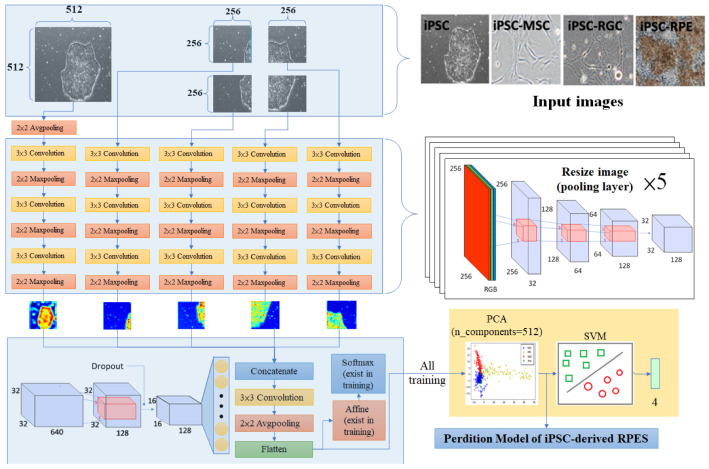

Figure 3 illustrates the CNN architecture of the multi-slice tensors shared by all cut images in this study. We subjected all cut images to a CNN that contains three convolutional layers and three maximum pooling layers. After passing through the convolutional layers of the five CNNs, the outputs were stacked, followed by another convolutional layer and subsequent flattening. After flattening and passing through the fully connected layer, the output results were obtained. The kernel size of these five CNN models was 3 × 3, and the number of filters was 32, 64, and 128 in sequence. After each convolutional layer, a 2 × 2 maximum pooling was performed. Before training, the macroscopic input was passed through an average pooling layer of 2 × 2 size, allowing the inputs of the first convolutional layer of the five CNNs to be 256 × 256 × 3 in size. After passing the convolutional layers and the pooling layers, output tensors of 32 × 32 × 128 were obtained. These tensors were then concatenated, merged into 32 × 32 × 640 tensors, and passed through another convolutional layer with 128 filters and a 3 × 3 kernel size. After that, 50% of the neurons were randomly discarded to prevent overfitting and the result was average-pooled, yielding tensors of 16 × 16 × 128 in size. After flattening and passing through a hidden layer containing 128 neurons, the tensors were passed to the output layer containing 4 neurons, in which softmax was used as the activation function.

Figure 3.

The CNN architecture of the proposed multi-slice tensor model.
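The tensor sizes quoted above can be checked with a small shape trace. This is only a bookkeeping sketch in plain Python, not a trainable model; it assumes that the convolutions preserve height and width ("same" padding), which is not stated in the text but is implied by the reported sizes. The helper names are hypothetical.

```python
def conv_same(shape, filters):
    """Shape after a 3 x 3 convolution with 'same' padding (assumed)."""
    h, w, _ = shape
    return (h, w, filters)

def pool_2x2(shape):
    """Shape after a 2 x 2 pooling layer (maximum or average)."""
    h, w, c = shape
    return (h // 2, w // 2, c)

def branch_output(shape=(256, 256, 3), filters=(32, 64, 128)):
    """One CNN branch: three convolutions, each followed by 2 x 2 max pooling."""
    for f in filters:
        shape = pool_2x2(conv_same(shape, f))
    return shape

branch = branch_output()                        # per-branch output
merged = (branch[0], branch[1], branch[2] * 5)  # concatenate five branches
fused = conv_same(merged, 128)                  # extra convolution, 128 filters
pooled = pool_2x2(fused)                        # average pooling after dropout
flat = pooled[0] * pooled[1] * pooled[2]        # length of the flatten layer
```

Under these assumptions the flatten layer has 16 × 16 × 128 = 32,768 elements, the same dimensionality as the feature arrays passed to PCA in Section 2.5.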

2.5. N-Dimensional Classifier Based on the Combination of PCA and SVM

SVM is a supervised learning model used for tackling classification problems. Four-dimensional spaces were defined for the target images, i.e., iPSCs, iPSC-MSCs, iPSC-RGCs, and iPSC-RPEs, to represent the features of each image. Since SVM may exhibit better classification performance than the fully connected layer, we calibrated the model after obtaining a certain accuracy by removing the neurons behind the flatten layer and using the flatten layer as an alternative output. After the removal of the layers behind the flatten layer, the file size was reduced from 61.9 MB to 4.68 MB. All images in the training set were passed through the calibrated model, generating 5164 arrays with 32,768 dimensions. We then used the MaxAbsScaler in scikit-learn to rescale these arrays to floating-point numbers between −1 and 1 and subsequently used PCA in scikit-learn to reduce the dimensions of these arrays from 32,768 to 512. Next, we subjected these 5164 arrays with 512 dimensions to the SVM for supervised learning. The addition of PCA and SVM robustly improved the classification performance of the original model. Finally, we modified the feature scaling during preprocessing by changing the input range from 0 to 1 to −1 to 1, and then retrained the model using the training set.
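The scaling, projection, and classification steps can be sketched with scikit-learn as follows. This is an illustrative sketch only: the features are synthetic stand-ins for the flatten-layer outputs, the dimensions are reduced (256 features projected to 16 components rather than 32,768 to 512) to keep the example fast, and the RBF kernel is an assumption because the kernel is not specified in the text.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import MaxAbsScaler
from sklearn.svm import SVC

# Synthetic stand-in for CNN features of four cell types (hypothetical data).
rng = np.random.default_rng(0)
n_per_class, n_features, n_classes = 40, 256, 4
centers = rng.normal(scale=3.0, size=(n_classes, n_features))
X = np.vstack([c + rng.normal(size=(n_per_class, n_features)) for c in centers])
y = np.repeat(np.arange(n_classes), n_per_class)

classifier = make_pipeline(
    MaxAbsScaler(),                        # rescale each feature to [-1, 1]
    PCA(n_components=16, random_state=0),  # unsupervised dimension reduction
    SVC(kernel="rbf"),                     # supervised classification (kernel assumed)
)
classifier.fit(X, y)

reduced = classifier[:-1].transform(X)     # PCA coordinates of each sample
train_accuracy = classifier.score(X, y)
```

Keeping the three steps in one pipeline ensures that the scaler and the PCA projection fitted on the training features are reused unchanged when new images are classified.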

3. Results

3.1. Heatmap Visualization of the Image Features

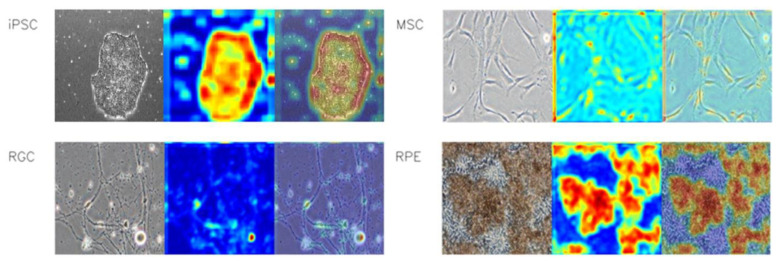

To ensure that the CNN model captured the correct features of the cell images, we used heatmaps to visualize the detectable features. iPSCs were characterized by the well-defined borders of the iPSC colonies. In some images, the boundary occupied the entire image. iPSC-MSCs were characterized by elongated morphologies, which were very subtle on the images. iPSC-RGCs were characterized by extending neurites, and iPSC-RPEs were characterized by dark brown pigmentation. These features could be detected by either macro- or microscopic examination. The features of iPSCs were detectable using macroscopic examination. Considering the subtle features of iPSC-MSCs and iPSC-RGCs, we resized all cell images to a size of 512 × 512 pixels, at which the subtle features were detectable. All resized images were then subjected to the model and converted into tensors. After the conversion, the tensors were further cropped, allowing the model to adapt to the input images from both macro- and microscopic perspectives. Figure 4 shows the representative features of iPSCs, iPSC-MSCs, iPSC-RGCs, and iPSC-RPEs with heatmap visualization.

Figure 4.

Comparison of four cell types with the heatmap imaging. For each indicated cell type, the left panel shows the representative phase-contrast microscopy image, the middle panel shows the heatmap calculated by the CNN model, and the right panel shows the overlay of the heatmap and the input.

3.2. Scale Bars Confound the Training of CNN

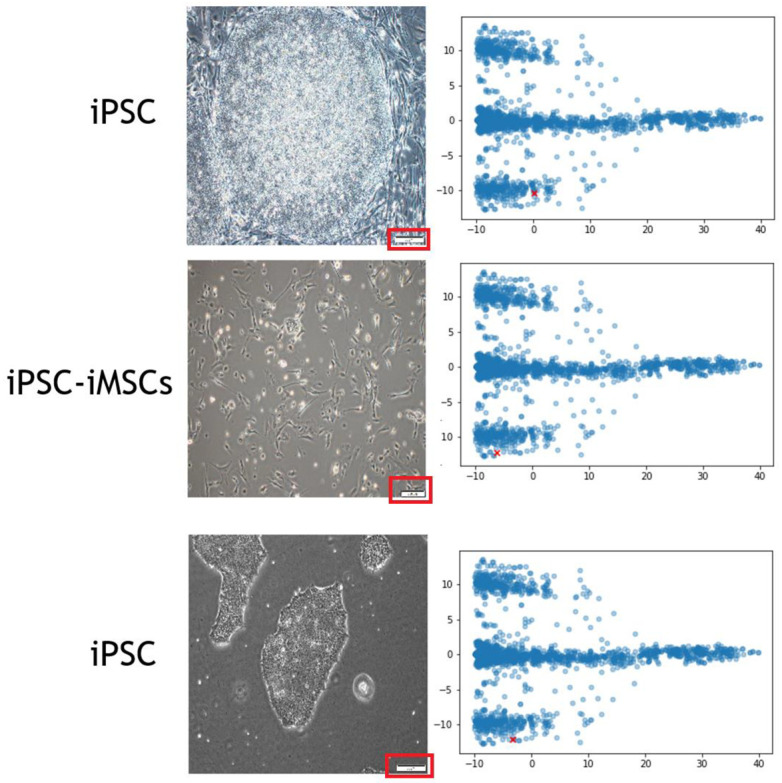

When scale bars were present at the corner of the images, the CNN assigned excessive weight to the scale bar regions (Figure 5), so we cropped the images in the training set to remove the scale bars during the image preprocessing and subjected them to subsequent training. However, we did not remove the scale bars on the images in the validation set. Compared with the training in which the images with the scale bars were used, training the CNN using the images without the scale bars significantly improved the accuracy of image recognition for the images containing the scale bars. In addition, after the dimension reduction using PCA, we were able to easily detect whether the scale bars were included in the images through the feature coordinates. This was due to the CNN focusing its attention on the scale bars.

Figure 5.

A representative image interpretation by our proposed model. The cell images (left side) with scale bars (red rectangle) were subjected to the training of our proposed model. When using PCA to reduce the dimension (right side), the scale bars can be detected in the feature coordination (red cross point).

3.3. Effect of Feature Scaling on the Accuracy and Loss during the Training

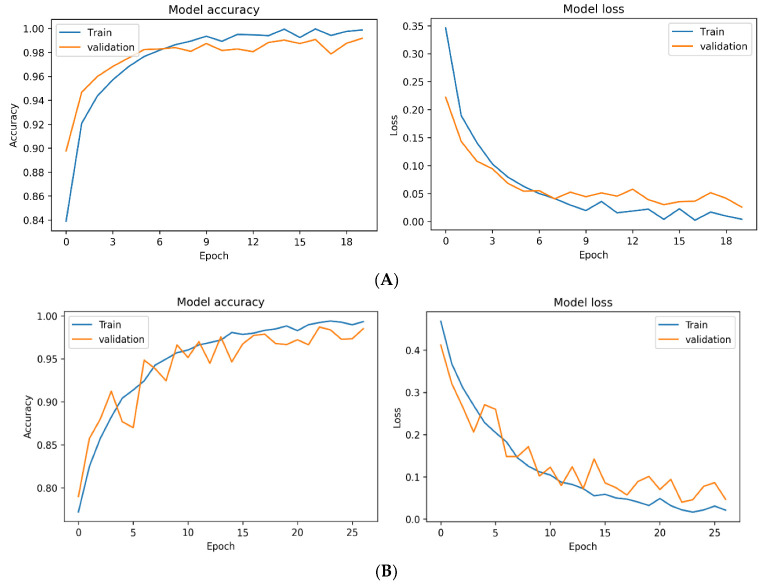

We recorded the values of the training process and used Python’s matplotlib library to plot the line charts of model accuracy and model loss. We found that the model exhibited a batch training set accuracy higher than 80% in the first epoch of training, and a steady decline of the loss function (Figure 6A). In addition, when the features were scaled to a range between 0 and 1 instead of −1 to 1 with an average of zero during preprocessing, the training process was relatively unstable with relatively slow training speed (Figure 6B). At the first epoch, the accuracy of the training set was only 80%, significantly less than the previous model trained with an average of zero.

Figure 6.

Effect of feature scaling on the accuracy and loss during the training. (A) Training and validation accuracy (left) and loss (right) with the features scaled between −1 and 1. (B) Training and validation accuracy (left) and loss (right) with the features scaled between 0 and 1.
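The two input ranges compared in Figure 6 can be written out explicitly. The sketch below assumes 8-bit pixel intensities (0 to 255); the exact formulas used in the study are not given in the text, so these are one plausible choice, and the function names are hypothetical.

```python
import numpy as np

def scale_unit(pixels):
    """Map 8-bit intensities to the range 0 to 1."""
    return pixels.astype(np.float32) / 255.0

def scale_symmetric(pixels):
    """Map 8-bit intensities to the range -1 to 1, centered on zero."""
    return pixels.astype(np.float32) / 127.5 - 1.0

pixels = np.array([0, 64, 128, 255], dtype=np.uint8)  # example intensities
unit = scale_unit(pixels)
symmetric = scale_symmetric(pixels)
```

With the symmetric scaling, mid-gray pixels map close to zero, so the inputs of the first convolutional layer are centered around zero rather than around 0.5.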

3.4. Effect of Image Division on the Performance of the Multi-Slice Tensor Model

The magnifications of all enrolled cell images were between 10 and 20X. To analyze the effect of input images with different magnifications on the verification of the model, we cut each input image into four equal parts. Subsequently, all input images were resized to the size of 512 × 512. The effect of image division in the training set on the performance of the multi-slice tensor model is presented in Table 1.

Table 1.

Effect of image division in the training set on the accuracy of the multi-slice tensor model.

| Training Set | Test Set: With Cut Images | Test Set: Without Cut Images |
|---|---|---|
| With cut images | 97.2% | 97.9% |
| Without cut images | 70.6% | 96.4% |

The difference between the two models was whether the cut input images were included in the training set during training. Regardless of the presence or absence of the cut input images in the training set, the test set without the cut images exhibited a recognition accuracy higher than 95%. However, training without the cut input images in the training set showed a recognition accuracy of only 70.6% in the test set containing the cut images. Training the CNN model using the training set that contained the cut input images consistently exhibited a recognition accuracy higher than 97%. Collectively, our findings indicated that expanding the input image data in the training set via image division helped the model to adapt to the recognition of images with different magnifications.

When a single tensor model was used without the cut input images (only a macroscopic model was used), the resultant accuracy reached 94.4%. When the multi-slice tensor model was used, the accuracy increased to 96.5%. When we removed the fully connected layers and used the flatten layer as the input of the SVM for supervised classification, the accuracy declined to 89.7%. When PCA was added after the flatten layer to reduce the dimensions, followed by SVM, the accuracy increased to 97.3%. When we replaced PCA with linear discriminant analysis (LDA) to conduct supervised dimension reduction, the accuracy declined to 53.9%. We found that this model exhibited good segmentation performance during the training, but poor segmentation performance during the test. Subsequently, we used feature scaling between −1 and 1 for the input instead of scaling between 0 and 1; as a result, an accuracy of 97.2% was obtained after retraining this model. These data indicated that normalization using 0 as the center was beneficial to the model training. When the training was completed, PCA was applied to the flatten layer to conduct the dimension reduction and SVM was used for the supervised classification; as a result, the highest accuracy of 97.8% was obtained. The accuracies of the different CNN models used in this study are compared in Table 2.

Table 2.

The comparison of CNN models.

| Input | CNN Model | Classification | Accuracy |
|---|---|---|---|
| 0 to 1 | Single tensor | Fully connected layers | 94.4% |
| 0 to 1 | Multi-slice tensors | Fully connected layers | 96.5% |
| 0 to 1 | Multi-slice tensors | SVM | 89.7% |
| 0 to 1 | Multi-slice tensors | PCA + SVM | 97.3% |
| 0 to 1 | Multi-slice tensors | LDA + SVM | 53.9% |
| −1 to 1 | Multi-slice tensors | Fully connected layers | 97.2% |
| −1 to 1 | Multi-slice tensors | PCA + SVM | 97.8% |

After several training epochs, we were able to predict the 647 images in the test set using our aforementioned trained model with an accuracy of 97.8%. For the individual classes, the prediction accuracies for iPSCs, iPSC-MSCs, iPSC-RGCs, and iPSC-RPEs were 97%, 97.8%, 97%, and 100%, respectively. We trained this model for 19 epochs with a batch size of 8. The confusion matrix summarizing the recognition results is presented in Table 3.

Table 3.

Confusion matrix summarizing the recognition results by the multi-slice tensor model.

| Average accuracy: 97.8% | Recognized as iPSC | Recognized as iPSC-MSC | Recognized as iPSC-RGC | Recognized as iPSC-RPE | Recall | F Score |
|---|---|---|---|---|---|---|
| iPSC | 130 | 1 | 2 | 1 | 97.0% | 97.4% |
| iPSC-MSC | 0 | 185 | 3 | 1 | 97.8% | 97.8% |
| iPSC-RGC | 1 | 5 | 195 | 0 | 97.0% | 97.4% |
| iPSC-RPE | 0 | 0 | 0 | 123 | 100% | 98.9% |
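The overall accuracy and the per-class recall can be recomputed directly from the counts in Table 3. The sketch below assumes that each row corresponds to the actual cell type and each column to the recognized cell type, which is consistent with the reported recall values; the variable names are hypothetical.

```python
labels = ["iPSC", "iPSC-MSC", "iPSC-RGC", "iPSC-RPE"]

# Rows: actual cell type (assumed); columns: recognized cell type.
confusion = [
    [130, 1, 2, 1],
    [0, 185, 3, 1],
    [1, 5, 195, 0],
    [0, 0, 0, 123],
]

total = sum(sum(row) for row in confusion)                  # all test images
correct = sum(confusion[i][i] for i in range(len(labels)))  # diagonal entries
accuracy = correct / total

# Recall: correctly recognized images divided by all images of that type.
recall = {
    labels[i]: confusion[i][i] / sum(confusion[i])
    for i in range(len(labels))
}
```

The row sums add up to the 647 test images, and 633 correctly recognized images out of 647 give the overall accuracy of 97.8%.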

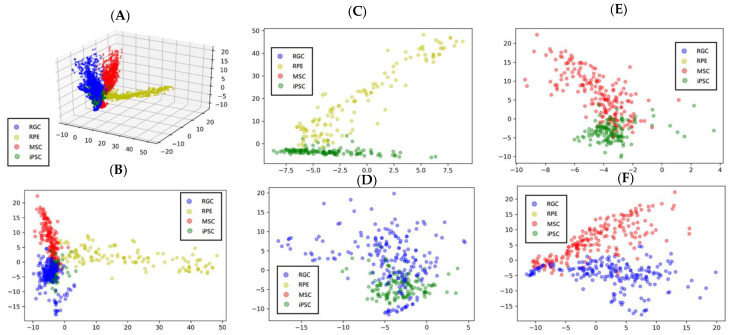

3.5. Projection of the Cell Features Using PCA

Next, we subjected the features extracted by the convolutional layers to a 512-dimension PCA for dimension reduction and feature projection. Notably, the features of iPSC-MSCs, iPSC-RGCs, and iPSC-RPEs all showed higher variability than those of iPSCs (green scatter; Figure 7). When we further measured the coordinates of the projections in the feature space, we found that the distance from the spot of any given image to the iPSCs could reflect the differentiation status of the cells in that image. After dimension reduction using PCA and the evaluation of the differentiation degree from the coordinates, we found that the first three dimensions were the most heavily weighted and could separate the four cell types in this study. Among these cells, iPSC-RPEs and iPSCs were separable by the value of 0 in the 0th dimension, iPSC-MSCs and iPSCs were separable by the value of 0 in the 1st dimension, and iPSC-RGCs and iPSCs were separable by the value of 0 in the 2nd dimension. In addition, iPSC-RGCs and iPSC-MSCs were separable by the value of 0 in the 1st dimension. Figure 7 shows the three-dimensional and two-dimensional projections and the maximum projection results of each class after applying PCA to the output of the multi-slice tensor model.

Figure 7.

Three-dimensional projection, the maximum projection results, and two-dimensional projection of iPSCs, iPSC-MSCs, iPSC-RPEs, and iPSC-RGCs after using PCA for the output of the multi-slice tensor model. (A) The three-dimensional projection of the four cell types; (B) results of the maximal 2D projection; (C) the projection of iPSCs and iPSC-RPEs; (D) the projection of iPSCs and iPSC-RGCs; (E) the projection of iPSCs and iPSC-MSCs; (F) the projection of iPSC-MSCs and iPSC-RGCs.

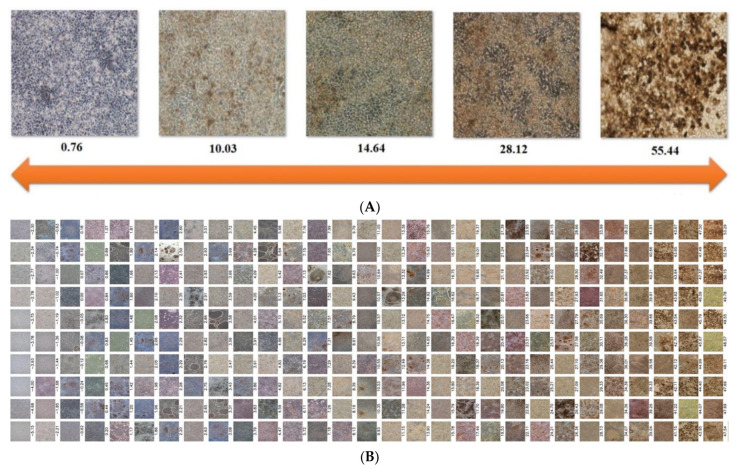

3.6. Evaluating the Differentiation Degree of iPSC-RPEs

Based upon the scatter plot output by PCA, we found that the first dimension showed the best performance in separating the spots of iPSCs and iPSC-RPEs. We took this dimension as an example, renamed each iPSC-RPE image using its coordinate after feature projection, and then ordered the images accordingly. The iPSC-RPEs with coordinates farther from those of iPSCs exhibited a more obvious dark brown pigmentation, the characteristic feature of iPSC-RPEs. Figure 8A shows the comparison of the phenotypes and corresponding PCA-projected coordinates of each iPSC-RPE image. iPSC-RPEs with optimal differentiation may represent an ideal cell source for clinical transplantation. Figure 8B shows the sorting of the projection values of iPSC-RPEs to represent the differentiation degree.

Figure 8.

Evaluation of the RPE differentiation degree by the value of the PCA projection. (A) From left to right, the larger value reflects higher differentiation degree of iPSC-RPEs. (B) Sorting the values of projections of iPSC-RPEs. From left to right: the larger value reflects higher differentiation degree of iPSC-RPEs.
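The ranking of iPSC-RPE images by their projected coordinate can be sketched as follows. The example uses a plain NumPy PCA (via singular value decomposition) on synthetic features in which a hypothetical `degree` variable moves the iPSC-RPE features away from the iPSC cluster; it illustrates the ordering idea only and does not reproduce the actual features of the study.

```python
import numpy as np

def fit_pca(features, n_components):
    """Return the feature mean and the leading principal axes."""
    mean = features.mean(axis=0)
    _, _, vt = np.linalg.svd(features - mean, full_matrices=False)
    return mean, vt[:n_components]

def project(features, mean, components):
    """Coordinates of the features along the principal axes."""
    return (features - mean) @ components.T

rng = np.random.default_rng(0)
ipsc = rng.normal(0.0, 0.05, size=(30, 8))  # synthetic iPSC features
degree = np.linspace(0.2, 1.0, 10)          # hypothetical differentiation degree
rpe = rng.normal(0.0, 0.05, size=(10, 8))   # synthetic iPSC-RPE features
rpe[:, 0] += 5.0 * degree                   # drift away from the iPSC cluster

mean, components = fit_pca(np.vstack([ipsc, rpe]), n_components=1)
ipsc_center = project(ipsc, mean, components).mean(axis=0)

# Distance of each iPSC-RPE image from the iPSC center along the first axis.
distance = np.abs(project(rpe, mean, components) - ipsc_center)[:, 0]
ranking = np.argsort(distance)              # closest to farthest from iPSCs
```

Taking the absolute distance from the iPSC center makes the ranking independent of the arbitrary sign of the principal axis.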

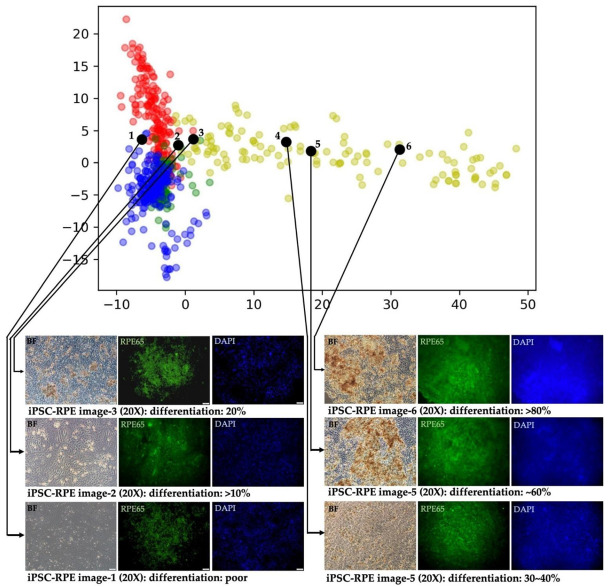

To further verify the accuracy of iPSC-RPE image recognition by the proposed model, we selected six dishes of iPSC-RPEs with various differentiation degrees after the complete differentiation time course (Figure 9) using the protocols reported by either Regent et al. [28] or Yang et al. [8]. The differentiation degree of each iPSC-RPE dish was interpreted by qualified technicians after microscopic examination. Subsequently, the images of these cells were used as the input of the multi-slice tensor model and projected using PCA. These iPSC-RPEs with various differentiation degrees were also subjected to immunofluorescence staining, and the RPE65 staining of each dish of iPSC-RPEs was obtained. As shown in Figure 9, the iPSC-RPEs with a poor differentiation degree, as validated by low RPE65 staining, were projected to the left side of the iPSC-RPE scatter plot. iPSC-RPEs with a 40–60% differentiation degree were projected to the middle part of the iPSC-RPE scatter plot. iPSC-RPEs with an optimal differentiation degree (more than 80%) were projected to the right side of the iPSC-RPE scatter plot. Collectively, our data demonstrated that our proposed CNN model could be used for evaluating the differentiation degree of iPSC-derived cell lineages.

Figure 9.

Using iPSC-RPEs with various differentiation degrees as the input to verify the accuracy of iPSC-RPE image recognition by the multi-slice tensor model. In the scatter plot of iPSC-RPEs (yellow green spots), iPSC-RPEs with poor differentiation were projected to the left side, iPSC-RPEs with moderate differentiation to the middle, and iPSC-RPEs with high differentiation to the right side of the scatter plot.

4. Discussion

In this study, we developed a multi-slice tensor model accompanied by dimension reduction by PCA and supervised classification by SVM to screen iPSC-derived cell lineages with an accuracy of 97.8%. When we added PCA after the flatten layer of this model to measure the coordinates of the projections in the feature space, the differentiation degree of iPSC-RPEs was accurately recognized. We demonstrated the potential of the proposed multi-slice tensor model as a tool for efficient and reliable biological image recognition for the selection of iPSC-RPEs after defined iPSC-RPE differentiation. Overall, this multi-slice tensor model is able to distinguish differentiated cells of different cell lineages by their corresponding unique features and to help select iPSC-RPEs with optimal differentiation.

The major advances in the clinical use of iPSC-based technologies in cell therapy have been made possible by the fact that iPSCs represent an infinite source of any type of human cells. iPSCs can be obtained by reprogramming adult somatic cells and are capable of proliferation, differentiation, and replacement of damaged cells, enabling their dynamic niche in regenerative medicine. To date, there is a broad range of ongoing research in cell therapies for various diseases using iPSC-derived cell types, including dopaminergic progenitors for Parkinson’s disease [30], iPSC-derived cardiac progenitors for heart failure [31], iPSC-derived beta-pancreatic cells for type I diabetes [32], iPSC-derived NK cells for advanced solid tumors [33], and iPSC-RPEs for retinal disorders [16,34]. Stemming from these recent advances, there are growing expectations toward quality control in the mass production of iPSCs for their respective clinical applications.

Effective quality control of stem cells largely determines the success of stem cell therapy. However, the analysis of iPSC-derived colonies requires manual identification, which is time-consuming, labor-intensive, and error-prone. Hence, before human iPSCs can be applied as a standard method and manufactured on a large scale, decision-making must be automated to ensure an efficient and objective quality control process. AI, an emerging field of computer science and engineering, has shown potential applications in stem cell research, including understanding the behavior of iPSCs, recognizing individual iPSC-derived cell types, and characterizing and evaluating cell quality in transplantation [35,36]. Machine learning-based AI algorithms have become a standard method for various aspects of image analysis, in particular the recognition and analysis of iPSC colony images [25,37,38]. Such algorithmic frameworks range from convolutional neural networks (CNNs) to more traditional algorithms, such as support vector machines (SVMs), principal component analysis (PCA), random forests (RFs), decision trees (DTs), and multilayer perceptrons (MLPs). To date, a limited number of studies describe the application of CNNs for clinical stem cell analysis, particularly related to iPSCs. These studies delineate the potential application of CNNs for the analysis of iPSC microscopy images [39], the identification of iPSC-derived cell types, such as endothelial cells, based only on their morphology [40], and the identification of the very early onset of pluripotent stem cell differentiation [41]. More traditional algorithms have also been used in studies concerning the image recognition of stem cells. Kavitha et al. have shown that the SVM, RF, and AdaBoost algorithms are able to recognize and determine the health of iPSCs better than DTs and MLPs, although all five learning methods achieved accuracies of more than 87.5% [42].
In a separate study, they also demonstrated that a CNN-based approach achieved an accuracy of 93.2% in classifying iPSCs, compared to 83.4% for the SVM model [43]. One issue in improving the accuracy of SVMs is finding an appropriate kernel for the given data. Joutsijoki et al. showed that an intensity histogram-based SVM using linear kernel functions alone is not adequate for iPSC colony image classification, achieving an accuracy of only 54% [44]. Wakui et al. used a non-linear SVM classifier to assess the quality of three types of iPSCs and obtained an average accuracy of more than 80% [45]. Zanaty et al. introduced a new kernel function that can be used for both linear and non-linear datasets to improve the classification accuracy of SVMs, called the Gaussian radial basis polynomials function (GRPF). The GRPF-based SVM approach achieved an average accuracy of 95.79%, which was far greater than the average accuracy of 85.77% for the RBF kernel-based SVM and 84.90% for the MLP [46]. Additionally, there have also been studies that used a combination of CNN and SVM to improve image recognition accuracy. Acevedo et al. first used a CNN for feature extraction and then used an SVM to classify these features. The accuracies achieved were as high as 96% and 95%, compared to 86% and 90% obtained when only the CNN models VGG16 and InceptionV3 were used, respectively [47]. Cascio et al. also adopted a similar approach to classify indirect immunofluorescence images, achieving an accuracy of 96.4%. Features were extracted using an AlexNet-based network, and cell pattern association was then performed using SVM and k-nearest neighbors (KNN) classifiers [48].
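The dependence of SVM accuracy on kernel choice noted above can be illustrated with a small sketch. It assumes scikit-learn and a synthetic two-class dataset of concentric circles, which is not linearly separable; it does not reproduce any of the cited experiments, and the GRPF kernel is not implemented here.

```python
from sklearn.datasets import make_circles
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Synthetic, non-linearly separable data (assumption for illustration only).
X, y = make_circles(n_samples=400, factor=0.3, noise=0.05, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# Fit one SVM per kernel and record held-out accuracy.
scores = {}
for kernel in ("linear", "rbf"):
    model = SVC(kernel=kernel).fit(X_train, y_train)
    scores[kernel] = model.score(X_test, y_test)

for kernel, score in scores.items():
    print(f"{kernel} kernel accuracy: {score:.2f}")
```

On data of this kind, a linear kernel cannot draw a separating boundary, whereas the RBF kernel can, which mirrors the general point that the kernel should match the structure of the data.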

Our proposed multi-slice tensor model still has several drawbacks. The model was trained on images containing a single cell type and was able to distinguish iPSCs, iPSC-MSCs, iPSC-RPEs, and iPSC-RGCs in the validation set with high accuracy. However, it remains unclear whether this multi-slice tensor model is able to simultaneously recognize different iPSC-derived lineage cells in an image of a co-culture of different lineages. The model did not ensure the selection of high-quality iPSCs and the exclusion of poor-quality iPSCs. In addition, although this model showed remarkable performance in recognizing the differentiation degree of iPSC-RPEs, our findings did not confirm that it could be used to evaluate the differentiation degree of iPSC-MSCs and iPSC-RGCs. Apart from the formation of fibroblast-like phenotypes, iPSC-MSCs did not present other unique and visualizable features at the end of differentiation. Our model recognized iPSC-RGCs by detecting the extensive formation of neurites. Nevertheless, the optic vesicles, from which the neurites projected, consisted heterogeneously of somas and other cell structures (Figure 1). On the other hand, iPSC-RGCs can be individually separated manually; however, this requires significant manpower [9,10]. The optic nerve is well known not to regenerate, and the repair of damaged optic nerves using iPSC-RGCs remains a hurdle in clinical transplantation [49].

5. Conclusions

In this study, we used a combination of the multi-slice tensor model and PCA to reduce dimension for feature extraction, and SVM and CNN to recognize and classify iPSC colony images. To the best of our knowledge, this is the first such study in the stem cell field. Our model achieved a high accuracy in distinguishing between iPSCs and iPSC-derived MSCs, RGCs, and RPEs. More notably, the model was able to distinguish the quality and extent of maturation of iPSC-derived RPEs, which may serve as an important tool in the ongoing efforts of pre-clinical studies and clinical transplantations of iPSC-derived RPEs [16,34]. If only the single tensor model was used without cut input images, the accuracy reached 94.4%. If the multi-slice tensor model was used, the accuracy reached 96.5%. When the fully connected layers were removed and only the flatten layer was used as the SVM input, the accuracy decreased to 89.7%. When PCA was applied to the flatten layer output for SVM input, the accuracy increased to 97.3%. The hybrid methodology of PCA + SVM and the promising results herein demonstrate potential for automating the interpretation and early detection of stem cells, thereby assisting researchers in the stem cell culture process and making a big step forward in the development of regenerative and precision medicine. Owing to the ability of this model to accurately recognize well-differentiated iPSC-RPEs, it may be possible to employ this multi-slice tensor model to select candidate cells with ideal features and simultaneously exclude cells with immature/abnormal phenotypes. After some fine-tuning and optimization, this rapid screening system may hold promise for facilitating the manufacturing of clinical-grade iPSC-RPEs and their translation into clinical cell therapy. Further preclinical studies and validation are still required to verify the applicability of this system.
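As a rough illustration of what cutting an input image into slices could look like, the following NumPy sketch divides an image into equal, non-overlapping tiles and stacks them into one tensor. This is an assumption made for illustration: the function name `cut_into_slices`, the 2 × 2 grid, and the non-overlapping layout are hypothetical and are not taken from the model described in this study.

```python
import numpy as np

def cut_into_slices(image, rows, cols):
    """Cut a 2D (or HxWxC) image into rows*cols equal tiles and stack them.

    Hypothetical helper: edge pixels that do not divide evenly are cropped.
    """
    height, width = image.shape[:2]
    tile_h, tile_w = height // rows, width // cols
    image = image[: tile_h * rows, : tile_w * cols]
    tiles = [
        image[r * tile_h:(r + 1) * tile_h, c * tile_w:(c + 1) * tile_w]
        for r in range(rows)
        for c in range(cols)
    ]
    # Resulting shape: (rows * cols, tile_h, tile_w[, channels])
    return np.stack(tiles)

# Toy 6 x 8 "image" with distinct pixel values.
image = np.arange(6 * 8).reshape(6, 8)
slices = cut_into_slices(image, rows=2, cols=2)
print(slices.shape)
```

Each tile in the stacked tensor could then be passed to a feature extractor individually or jointly, depending on how the slices are meant to be combined.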

Acknowledgments

We thank Kesshmita Paranjothi for her outstanding technical expertise in iPSC-RGC culture and maintenance and Cheng-Hung Chang for his assistance in image capturing using confocal microscopy.

Author Contributions

Conceptualization: C.-Y.L. and Y.C.; methodology, W.-C.C., C.-Y.L., T.-T.C., E.-T.T., N.L. and S.-J.C. (Shih-Jie Chou); software, T.-T.C.; validation, C.-E.G., W.-C.C. and Y.-P.Y.; investigation, E.-T.T., T.-T.C. and S.-J.C. (Shih-Jie Chou); resources, W.-C.C., S.-J.C. (Shih-Jen Chen) and S.-J.C. (Shih-Jie Chou); data curation, W.-C.C. and S.-J.C. (Shih-Jie Chou); writing—original draft preparation, Y.-J.H. and Y.C.; writing—review and editing, Y.C. and A.A.Y.; supervision, Y.-P.Y., D.-K.H. and S.-J.C. (Shih-Jen Chen); funding acquisition, C.-Y.L. and Y.C. and S.-H.C. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

This research was approved by the Institutional Review Board of Taipei Veterans General Hospital (2021-10-006CC).

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

This study was funded by the Ministry of Science and Technology (MOST) (MOST 111-2314-B-075-036-MY3; MOST 111-2320-B-075-007; MOST 111-2320-B-A49-028-MY3; MOST 111-2321-B-A49-009), the National Science and Technology Council (NSTC 111-2634-F-006-012), and Taipei Veterans General Hospital (V111B-025).

References

- 1. Shi Y., Inoue H., Wu J.C., Yamanaka S. Induced pluripotent stem cell technology: A decade of progress. Nat. Rev. Drug Discov. 2017;16:115–130. doi: 10.1038/nrd.2016.245.

- 2. Takahashi K., Yamanaka S. Induction of pluripotent stem cells from mouse embryonic and adult fibroblast cultures by defined factors. Cell. 2006;126:663–676. doi: 10.1016/j.cell.2006.07.024.

- 3. Hsu C.C., Lu H.E., Chuang J.H., Ko Y.L., Tsai Y.C., Tai H.Y., Yarmishyn A.A., Hwang D.K., Wang M.L., Yang Y.P., et al. Generation of induced pluripotent stem cells from a patient with Best Dystrophy carrying 11q12.3 (BEST1 (VMD2)) mutation. Stem Cell Res. 2018;29:134–138. doi: 10.1016/j.scr.2018.03.019.

- 4. Peng C.H., Huang K.C., Lu H.E., Syu S.H., Yarmishyn A.A., Lu J.F., Buddhakosai W., Lin T.C., Hsu C.C., Hwang D.K., et al. Generation of induced pluripotent stem cells from a patient with X-linked juvenile retinoschisis. Stem Cell Res. 2018;29:152–156. doi: 10.1016/j.scr.2018.04.005.

- 5. Lu H.E., Yang Y.P., Chen Y.T., Wu Y.R., Wang C.L., Tsai F.T., Hwang D.K., Lin T.C., Chen S.J., Wang A.G., et al. Generation of patient-specific induced pluripotent stem cells from Leber’s hereditary optic neuropathy. Stem Cell Res. 2018;28:56–60. doi: 10.1016/j.scr.2018.01.029.

- 6. de Rham C., Villard J. Potential and limitation of HLA-based banking of human pluripotent stem cells for cell therapy. J. Immunol. Res. 2014;2014:518135. doi: 10.1155/2014/518135.

- 7. Okita K., Ichisaka T., Yamanaka S. Generation of germline-competent induced pluripotent stem cells. Nature. 2007;448:313–317. doi: 10.1038/nature05934.

- 8. Lin Y.Y., Chien Y., Chuang J.H., Chang C.C., Yang Y.P., Lai Y.H., Lo W.L., Chien K.H., Huo T.I., Wang C.Y. Development of a Graphene Oxide-Incorporated Polydimethylsiloxane Membrane with Hexagonal Micropillars. Int. J. Mol. Sci. 2018;19:2517. doi: 10.3390/ijms19092517.

- 9. Yang Y.P., Chang Y.L., Lai Y.H., Tsai P.H., Hsiao Y.J., Nguyen L.H., Lim X.Z., Weng C.C., Ko Y.L., Yang C.H., et al. Retinal Circular RNA hsa_circ_0087207 Expression Promotes Apoptotic Cell Death in Induced Pluripotent Stem Cell-Derived Leber’s Hereditary Optic Neuropathy-like Models. Biomedicines. 2022;10:788. doi: 10.3390/biomedicines10040788.

- 10. Wu Y.R., Wang A.G., Chen Y.T., Yarmishyn A.A., Buddhakosai W., Yang T.C., Hwang D.K., Yang Y.P., Shen C.N., Lee H.C., et al. Bioactivity and gene expression profiles of hiPSC-generated retinal ganglion cells in MT-ND4 mutated Leber’s hereditary optic neuropathy. Exp. Cell Res. 2018;363:299–309. doi: 10.1016/j.yexcr.2018.01.020.

- 11. Ahmad Mulyadi Lai H.I., Chou S.J., Chien Y., Tsai P.H., Chien C.S., Hsu C.C., Jheng Y.C., Wang M.L., Chiou S.H., Chou Y.B., et al. Expression of Endogenous Angiotensin-Converting Enzyme 2 in Human Induced Pluripotent Stem Cell-Derived Retinal Organoids. Int. J. Mol. Sci. 2021;22:1320. doi: 10.3390/ijms22031320.

- 12. Huang K.C., Wang M.L., Chen S.J., Kuo J.C., Wang W.J., Nhi Nguyen P.N., Wahlin K.J., Lu J.F., Tran A.A., Shi M., et al. Morphological and Molecular Defects in Human Three-Dimensional Retinal Organoid Model of X-Linked Juvenile Retinoschisis. Stem Cell Rep. 2019;13:906–923. doi: 10.1016/j.stemcr.2019.09.010.

- 13. Peng C.H., Chuang J.H., Wang M.L., Jhan Y.Y., Chien K.H., Chung Y.C., Hung K.H., Chang C.C., Lee C.K., Tseng W.L., et al. Laminin modification subretinal bio-scaffold remodels retinal pigment epithelium-driven microenvironment in vitro and in vivo. Oncotarget. 2016;7:64631–64648. doi: 10.18632/oncotarget.11502.

- 14. Ferris F.L., 3rd, Wilkinson C.P., Bird A., Chakravarthy U., Chew E., Csaky K., Sadda S.R., Beckman Initiative for Macular Research Classification Clinical classification of age-related macular degeneration. Ophthalmology. 2013;120:844–851. doi: 10.1016/j.ophtha.2012.10.036.

- 15. Mandai M., Watanabe A., Kurimoto Y., Hirami Y., Morinaga C., Daimon T., Fujihara M., Akimaru H., Sakai N., Shibata Y., et al. Autologous Induced Stem-Cell-Derived Retinal Cells for Macular Degeneration. N. Engl. J. Med. 2017;376:1038–1046. doi: 10.1056/NEJMoa1608368.

- 16. Kamao H., Mandai M., Okamoto S., Sakai N., Suga A., Sugita S., Kiryu J., Takahashi M. Characterization of human induced pluripotent stem cell-derived retinal pigment epithelium cell sheets aiming for clinical application. Stem Cell Rep. 2014;2:205–218. doi: 10.1016/j.stemcr.2013.12.007.

- 17. Choi R.Y., Coyner A.S., Kalpathy-Cramer J., Chiang M.F., Campbell J.P. Introduction to Machine Learning, Neural Networks, and Deep Learning. Transl. Vis. Sci. Technol. 2020;9:14. doi: 10.1167/tvst.9.2.14.

- 18. Kim S.J., Cho K.J., Oh S. Development of machine learning models for diagnosis of glaucoma. PLoS ONE. 2017;12:e0177726. doi: 10.1371/journal.pone.0177726.

- 19. Andrade De Jesus D., Sanchez Brea L., Barbosa Breda J., Fokkinga E., Ederveen V., Borren N., Bekkers A., Pircher M., Stalmans I., Klein S., et al. OCTA Multilayer and Multisector Peripapillary Microvascular Modeling for Diagnosing and Staging of Glaucoma. Transl. Vis. Sci. Technol. 2020;9:58. doi: 10.1167/tvst.9.2.58.

- 20. Liu T., Lu Y., Zhu B., Zhao H. Clustering high-dimensional data via feature selection. Biometrics. 2022. doi: 10.1111/biom.13665.

- 21. Sarker I.H. Deep Learning: A Comprehensive Overview on Techniques, Taxonomy, Applications and Research Directions. SN Comput. Sci. 2021;2:420. doi: 10.1007/s42979-021-00815-1.

- 22. Liu D., Wen B., Jiao J., Liu X., Wang Z., Huang T.S. Connecting Image Denoising and High-Level Vision Tasks via Deep Learning. IEEE Trans. Image Process. 2020;29:3695–3706. doi: 10.1109/TIP.2020.2964518.

- 23. LeCun Y., Bengio Y., Hinton G. Deep learning. Nature. 2015;521:436–444. doi: 10.1038/nature14539.

- 24. Niioka H., Asatani S., Yoshimura A., Ohigashi H., Tagawa S., Miyake J. Classification of C2C12 cells at differentiation by convolutional neural network of deep learning using phase contrast images. Hum. Cell. 2018;31:87–93. doi: 10.1007/s13577-017-0191-9.

- 25. Kusumoto D., Yuasa S. The application of convolutional neural network to stem cell biology. Inflamm. Regen. 2019;39:14. doi: 10.1186/s41232-019-0103-3.

- 26. Orita K., Sawada K., Koyama R., Ikegaya Y. Deep learning-based quality control of cultured human-induced pluripotent stem cell-derived cardiomyocytes. J. Pharmacol. Sci. 2019;140:313–316. doi: 10.1016/j.jphs.2019.04.008.

- 27. Hynes K., Menicanin D., Gronthos S., Bartold M.P. Differentiation of iPSC to Mesenchymal Stem-Like Cells and Their Characterization. Methods Mol. Biol. 2016;1357:353–374. doi: 10.1007/7651_2014_142.

- 28. Regent F., Morizur L., Lesueur L., Habeler W., Plancheron A., Ben M’Barek K., Monville C. Automation of human pluripotent stem cell differentiation toward retinal pigment epithelial cells for large-scale productions. Sci. Rep. 2019;9:10646. doi: 10.1038/s41598-019-47123-6.

- 29. Nagasaka R., Matsumoto M., Okada M., Sasaki H., Kanie K., Kii H., Uozumi T., Kiyota Y., Honda H., Kato R. Visualization of morphological categories of colonies for monitoring of effect on induced pluripotent stem cell culture status. Regen. Ther. 2017;6:41–51. doi: 10.1016/j.reth.2016.12.003.

- 30. Takahashi J. iPS cell-based therapy for Parkinson’s disease: A Kyoto trial. Regen. Ther. 2020;13:18–22. doi: 10.1016/j.reth.2020.06.002.

- 31. Kawaguchi S., Soma Y., Nakajima K., Kanazawa H., Tohyama S., Tabei R., Hirano A., Handa N., Yamada Y., Okuda S., et al. Intramyocardial Transplantation of Human iPS Cell-Derived Cardiac Spheroids Improves Cardiac Function in Heart Failure Animals. JACC Basic Transl. Sci. 2021;6:239–254. doi: 10.1016/j.jacbts.2020.11.017.

- 32. Cito M., Pellegrini S., Piemonti L., Sordi V. The potential and challenges of alternative sources of β cells for the cure of type 1 diabetes. Endocr. Connect. 2018;7:R114–R125. doi: 10.1530/EC-18-0012.

- 33. Hong D., Patel S., Patel M., Musni K., Anderson M., Cooley S., Valamehr B., Chu W. 380 Preliminary results of an ongoing phase I trial of FT500, a first-in-class, off-the-shelf, induced pluripotent stem cell (iPSC) derived natural killer (NK) cell therapy in advanced solid tumors. BMJ Spec. J. 2020:8. doi: 10.1136/jitc-2020-SITC2020.0380.

- 34. Hirami Y., Osakada F., Takahashi K., Okita K., Yamanaka S., Ikeda H., Yoshimura N., Takahashi M. Generation of retinal cells from mouse and human induced pluripotent stem cells. Neurosci. Lett. 2009;458:126–131. doi: 10.1016/j.neulet.2009.04.035.

- 35. Coronnello C., Francipane M.G. Moving towards induced pluripotent stem cell-based therapies with artificial intelligence and machine learning. Stem Cell Rev. Rep. 2021;18:559–569. doi: 10.1007/s12015-021-10302-y.

- 36. Tucker B.A., Mullins R.F., Stone E.M. Autologous cell replacement: A noninvasive AI approach to clinical release testing. J. Clin. Investig. 2020;130:608–611. doi: 10.1172/JCI133821.

- 37. He K., Zhang X., Ren S., Sun J. Deep residual learning for image recognition; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; Las Vegas, NV, USA. 27–30 June 2016; pp. 770–778.

- 38. Heravi E.J., Aghdam H.H., Puig D. CCIA; Washington, DC, USA: 2016. Classification of Foods Using Spatial Pyramid Convolutional Neural Network; pp. 163–168.

- 39. Wang L., Li X., Huang W., Zhou T., Wang H., Lin A., Hutchins A.P., Su Z., Chen Q., Pei D. TGFβ signaling regulates the choice between pluripotent and neural fates during reprogramming of human urine derived cells. Sci. Rep. 2016;6:22484. doi: 10.1038/srep22484.

- 40. Kusumoto D., Lachmann M., Kunihiro T., Yuasa S., Kishino Y., Kimura M., Katsuki T., Itoh S., Seki T., Fukuda K. Automated deep learning-based system to identify endothelial cells derived from induced pluripotent stem cells. Stem Cell Rep. 2018;10:1687–1695. doi: 10.1016/j.stemcr.2018.04.007.

- 41. Waisman A., La Greca A., Möbbs A.M., Scarafía M.A., Velazque N.L.S., Neiman G., Moro L.N., Luzzani C., Sevlever G.E., Guberman A.S. Deep learning neural networks highly predict very early onset of pluripotent stem cell differentiation. Stem Cell Rep. 2019;12:845–859. doi: 10.1016/j.stemcr.2019.02.004.

- 42. Kavitha M.S., Kurita T., Ahn B.-C. Critical texture pattern feature assessment for characterizing colonies of induced pluripotent stem cells through machine learning techniques. Comput. Biol. Med. 2018;94:55–64. doi: 10.1016/j.compbiomed.2018.01.005.

- 43. Zanaty E.A. Support Vector Machines (SVMs) versus Multilayer Perception (MLP) in data classification. Egypt. Inform. J. 2012;13:177–183. doi: 10.1016/j.eij.2012.08.002.

- 44. Joutsijoki H., Rasku J., Haponen M., Baldin I., Gizatdinova Y., Paci M., Saarikoski J., Varpa K., Siirtola H., Ávalos-Salguero J., et al. Classification of iPSC colony images using hierarchical strategies with support vector machines; Proceedings of the 2014 IEEE Symposium on Computational Intelligence and Data Mining (CIDM); Orlando, FL, USA. 9–12 December 2014; pp. 86–92.

- 45. Wakui T., Matsumoto T., Matsubara K., Kawasaki T., Yamaguchi H., Akutsu H. Method for evaluation of human induced pluripotent stem cell quality using image analysis based on the biological morphology of cells. J. Med. Imaging. 2017;4:044003. doi: 10.1117/1.JMI.4.4.044003.

- 46. Kavitha M.S., Kurita T., Park S.-Y., Chien S.-I., Bae J.-S., Ahn B.-C. Deep vector-based convolutional neural network approach for automatic recognition of colonies of induced pluripotent stem cells. PLoS ONE. 2017;12:e0189974. doi: 10.1371/journal.pone.0189974.

- 47. Acevedo A., Alférez S., Merino A., Puigví L., Rodellar J. Recognition of peripheral blood cell images using convolutional neural networks. Comput. Methods Programs Biomed. 2019;180:105020. doi: 10.1016/j.cmpb.2019.105020.

- 48. Cascio D., Taormina V., Raso G. Deep CNN for IIF Images Classification in Autoimmune Diagnostics. Appl. Sci. 2019;9:1618. doi: 10.3390/app9081618.

- 49. Yang Y.P., Hsiao Y.J., Chang K.J., Foustine S., Ko Y.L., Tsai Y.C., Tai H.Y., Ko Y.C., Chiou S.H., Lin T.C., et al. Pluripotent Stem Cells in Clinical Cell Transplantation: Focusing on Induced Pluripotent Stem Cell-Derived RPE Cell Therapy in Age-Related Macular Degeneration. Int. J. Mol. Sci. 2022;23:13794. doi: 10.3390/ijms232213794.
