Abstract

We reviewed the literature on the assessment of acceptability of HIV prevention and treatment interventions and service delivery strategies. Following PRISMA guidelines, we screened 601 studies published from 2015 to 2020 and included 217 in our review. Of 384 excluded studies, 21% were excluded because they relied on retention as the sole acceptability indicator. Of 217 included studies, only 16% were rated at our highest tier of methodological rigor. Operational definitions of acceptability varied widely and failed to comprehensively represent the suggested constructs in current acceptability frameworks. Overall, 25 studies used formal quantitative assessments (including four adapted measures used in prior studies) and six incorporated frameworks of acceptability. Findings suggest acceptability assessment in recent HIV intervention and service delivery research lacks harmonization and rigor. We offer guidelines for best practices and future research, which are timely and critical in this era of informed choice and novel options for HIV prevention and treatment.

Keywords: HIV/AIDS, acceptability, assessment, measurement, systematic review

INTRODUCTION

HIV prevention and treatment interventions and service delivery models are rapidly evolving. To maximize the potential impact of these new interventions and approaches, it is critical that they are designed and implemented in ways that are acceptable to the individuals they are intended to reach and/or engage. Formative research on the acceptability of HIV interventions and delivery models can elucidate participants’ perspectives on factors that may influence engagement and/or adherence and offer insight into the outcomes subsequently observed (1–3). Furthermore, findings from acceptability studies can inform the adoption, implementation, and scale-up of new HIV interventions and service delivery strategies (4,5). While the importance of acceptability has been widely recognized in HIV research, there is still little consensus in this field, or in others (e.g., health services research, behavioral and implementation science), on how best to define or assess it (1,4,6–8). This, in turn, has made it difficult to compare acceptability assessments across studies and to identify ways to optimize interventions and/or models of delivery.

In the HIV literature, the assessment of acceptability has evolved over time. Initially, acceptability was assessed to understand individuals’ preferences for the physical qualities (e.g., size, smell, color) of different HIV prevention products and their intentions to use these products (2,9–12). Later, assessments of acceptability tended to focus more on the uptake, retention, and adherence (often measured via drug levels or viral load) of HIV interventions and service delivery models (13). More recently, however, the field has begun to recognize acceptability as a distinct, multi-factorial construct, separate from intention and behavioral outcomes, that focuses on individuals’ perceptions of a given intervention or delivery model in their environmental, social, and cultural contexts (1,2,14). Consequently, there have been increasing efforts to develop new, or adapt existing, behavioral and social science theories and frameworks (15,16) to define, assess, and understand the acceptability of HIV interventions and models of service delivery (1,4,17).

One of the more recently developed theoretical frameworks for acceptability, derived from a systematic review of the health services and behavioral sciences literature, is the Theoretical Framework of Acceptability (TFA) (15,16). The TFA defines acceptability as “a multi-faceted construct that reflects the extent to which people delivering or receiving a healthcare intervention consider it to be appropriate, based on anticipated or experienced cognitive and emotional responses to the intervention” and outlines seven component constructs of acceptability: affective attitude, burden, perceived effectiveness, ethicality, intervention coherence, opportunity costs, and self-efficacy (15). The framework can guide both quantitative and qualitative assessments of intervention acceptability before, during, and after intervention participation among a variety of stakeholders (e.g., providers, clients) (15).

In the present study, we reviewed the literature on the assessment of acceptability related to HIV prevention and treatment interventions and service delivery models. We aimed to describe current approaches to defining and assessing acceptability and how these compare to the TFA, and to recommend future directions for acceptability assessment and research.

METHODS

We followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines (18).

Search strategy

One author (JV) searched four electronic bibliographic databases (i.e., PubMed, CINAHL, PsycINFO, and EMBASE) for primary studies with the search terms: “(Acceptability [Title] or Feasibility [Title]) AND (HIV [Title/Abstract])”. Because indicators for acceptability and methodologies for measuring these are changing rapidly for HIV prevention and treatment interventions and service delivery models, we restricted our search to studies published from January 1, 2015 through June 2, 2020 to capture the most recent approaches being used in this research field. Restricting to this time period also allowed us to focus on acceptability measurement relevant for current HIV prevention and treatment approaches. We did not include restrictions on language of publication at this stage. The reference lists of included studies were also reviewed for additional publications.

Study selection

Studies included for data extraction: 1) focused on an HIV prevention or treatment intervention or service delivery strategy, including but not limited to HIV pre-exposure prophylaxis (PrEP), antiretroviral therapy (ART), long-acting biomedical HIV prevention, HIV self-testing (HIVST), behavioral interventions and adherence counseling, voluntary medical male circumcision, and prevention of mother-to-child transmission (PMTCT) services; 2) included an explicit measure of acceptability beyond recruitment or drop-out rates and metrics of product adherence; 3) included original research (e.g., not a systematic review or protocol); and 4) were published in the specified time frame. To understand how frequently studies used recruitment and/or drop-out rates as a metric of acceptability, we recorded the number of studies excluded from our review for this reason but did not abstract any additional data from them. We excluded studies that assessed acceptability solely as retention or adherence because these behavioral outcomes are conceptually different from acceptability (1,2). We also excluded any studies that were unpublished (e.g., conference abstracts or data from research seminars) or not published in a peer-reviewed journal. Duplicate studies were removed.

A random sample of 10% of all titles and abstracts was reviewed by all authors to ensure reliable application of inclusion and exclusion criteria. Authors disagreed on the application of these criteria for roughly 15% of studies in this sample. After discussion and alignment on how to apply the criteria, only studies on which all authors agreed were included. The remaining articles were randomly assigned to individual authors and screened independently at the title/abstract level. Two authors from the team independently screened all studies at the full-text level and noted reasons for exclusion. Any disagreements on inclusion decisions were resolved through discussion; again, only studies on which all authors agreed were included.

Data abstraction

All authors collaboratively developed a structured data abstraction form, which was then piloted with 10% of included full-text articles and revised for clarity. Thereafter, co-authors abstracted the following information from each study: author; title; year; study location; study design; population for acceptability measurement (e.g., end-users of biomedical HIV prevention product; healthcare workers delivering prevention products); sample size; study objective related to acceptability assessment; and the type of HIV intervention or service delivery model. We also abstracted whether the acceptability measurement was informed by a theoretical model, a validated scale, and/or a previously published measure; whether the study provided an operational definition of acceptability (e.g., satisfaction; willingness to use a product); whether acceptability was measured qualitatively or quantitatively; whether acceptability was measured prior to intervention delivery or after the intervention was complete; and specific items and response patterns for each acceptability measure. A second author was assigned to verify each abstraction, and the group of seven authors resolved all disagreements through discussion until consensus was reached.

Analysis

We used descriptive statistics to summarize characteristics of included studies and stratified our findings by type of measurement (quantitative or qualitative) and HIV intervention (biomedical or behavioral). Biomedical interventions were defined as interventions in which use of a vaccine, drug (e.g., daily oral PrEP), device (e.g., vaginal ring), diagnostic tool (e.g., HIV self-testing), or medical intervention (e.g., voluntary medical male circumcision) was the primary focus. Behavioral interventions were defined as those that sought to improve use of, and adherence to, a biomedical intervention (e.g., adherence groups, SMS messaging, enhanced counseling) or to modify systems and structures to promote delivery of a biomedical intervention (e.g., pharmacy-based PrEP delivery). We stratified our findings by quantitative and qualitative measurement approaches because these typically rely on distinct methods and techniques. Additionally, we stratified our findings by biomedical and behavioral interventions to determine whether acceptability assessment differed for physical products (e.g., pills, injections, gels) versus scaffolding interventions that promote product use (e.g., counseling, linkage to care).

We categorized studies into tiers based on the quality and rigor of their acceptability assessment as follows:

Tier 0 studies did not report acceptability data apart from retention or adherence measurement (these were excluded after title and abstract review).

Tier 1 studies assessed only one component of acceptability (e.g., affective attitude) using one or two questions.

Tier 2 studies assessed more than one component of acceptability with at least one question per component.

Tier 3 studies assessed acceptability based on items from a theory or framework, validated scale, or previously published scale.

All tiers were mutually exclusive; none of the Tier 1 and 2 studies based their acceptability assessment on a theory or framework, validated scale, or previously published scale or instrument; rather, they assessed components of acceptability defined by the authors. For Tier 3-rated quantitative acceptability assessment studies, we examined whether a specific threshold for “good acceptability” was established a priori.
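Because the tier rules form a small decision procedure, they can be written down explicitly. The Python sketch below is a hypothetical encoding, not the tool the review team used; the record type `AcceptabilityAssessment` and its fields are illustrative assumptions. Tier 3 is checked first because, as stated above, no Tier 1 or Tier 2 study drew on a theory, framework, or established scale.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class AcceptabilityAssessment:
    """Hypothetical summary of how one study assessed acceptability."""
    # Number of questions asked for each acceptability component (illustrative field)
    questions_per_component: Dict[str, int] = field(default_factory=dict)
    # True if items came from a theory/framework, validated scale, or previously published scale
    uses_established_source: bool = False


def classify_tier(a: AcceptabilityAssessment) -> Optional[int]:
    """Apply the tier rules as worded; return None when no rule matches."""
    # Tier 3 takes precedence: Tiers 1 and 2 never used established sources
    if a.uses_established_source:
        return 3
    # Keep only components that were actually asked about
    assessed = {c: n for c, n in a.questions_per_component.items() if n >= 1}
    if not assessed:
        return 0  # nothing beyond retention/adherence; excluded at screening
    if len(assessed) > 1:
        return 2  # more than one component, at least one question each
    (n_questions,) = assessed.values()
    if n_questions <= 2:
        return 1  # a single component, one or two questions
    return None  # a single component with 3+ questions is not covered by the stated rules
```

For example, `classify_tier(AcceptabilityAssessment({"affective attitude": 1}))` returns 1. Encoding the rules this way also exposes one case the written definitions leave open (a single component probed with three or more questions), which the sketch reports as `None` rather than assigning a tier.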

We reviewed the specific acceptability assessment items and response patterns abstracted from each included study and identified which, if any, of the seven TFA constructs these captured (see Appendix Table 1 for TFA construct definitions). We also compared the operational definitions of acceptability captured in our review with the TFA constructs to identify definitions that more closely align with those of other implementation constructs (e.g., appropriateness, usability, satisfaction) or that could be considered correlates of (i.e., factors associated with) acceptability as opposed to acceptability measurements themselves (19).
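The coding step can be pictured as a lookup from each operational definition to one of three categories: a TFA construct, a distinct implementation construct, or a correlate of acceptability. The Python sketch below assumes that structure. The construct lists come from the TFA and from the categories named in this review's Results, but the `SYNONYMS` table (e.g., treating "attitudes" as affective attitude) is a hypothetical illustration, not the review's actual codebook, which was applied by hand.

```python
from collections import Counter
from typing import Dict, Iterable, Set

# The seven TFA component constructs
TFA_CONSTRUCTS: Set[str] = {
    "affective attitude", "burden", "perceived effectiveness", "ethicality",
    "intervention coherence", "opportunity costs", "self-efficacy",
}
# Implementation constructs treated as distinct from acceptability
DISTINCT_CONSTRUCTS: Set[str] = {"satisfaction", "usability", "appropriateness"}
# Definitions treated as correlates of acceptability
CORRELATES: Set[str] = {
    "preferences", "perceived barriers", "perceived benefits",
    "willingness to use", "willingness to recommend",
}
# Hypothetical synonym table (an assumption for illustration only)
SYNONYMS: Dict[str, str] = {
    "attitudes": "affective attitude",
    "confidence in use": "self-efficacy",
    "understanding": "intervention coherence",
}


def code_definition(label: str) -> str:
    """Assign one operational definition to a TFA construct or another category."""
    key = label.strip().lower()
    key = SYNONYMS.get(key, key)
    if key in TFA_CONSTRUCTS:
        return "TFA: " + key
    if key in DISTINCT_CONSTRUCTS:
        return "distinct implementation construct"
    if key in CORRELATES:
        return "correlate of acceptability"
    return "uncoded"


def summarize(labels: Iterable[str]) -> Counter:
    """Tally coding categories across a set of operational definitions."""
    return Counter(code_definition(label) for label in labels)


def uncovered_tfa(labels: Iterable[str]) -> Set[str]:
    """Return the TFA constructs that none of the labels map to."""
    codes = (code_definition(label) for label in labels)
    covered = {c[len("TFA: "):] for c in codes if c.startswith("TFA: ")}
    return TFA_CONSTRUCTS - covered
```

For instance, a label set that maps onto the other six constructs leaves `uncovered_tfa(...)` equal to `{"ethicality"}`, the kind of gap this comparison is designed to surface.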

RESULTS

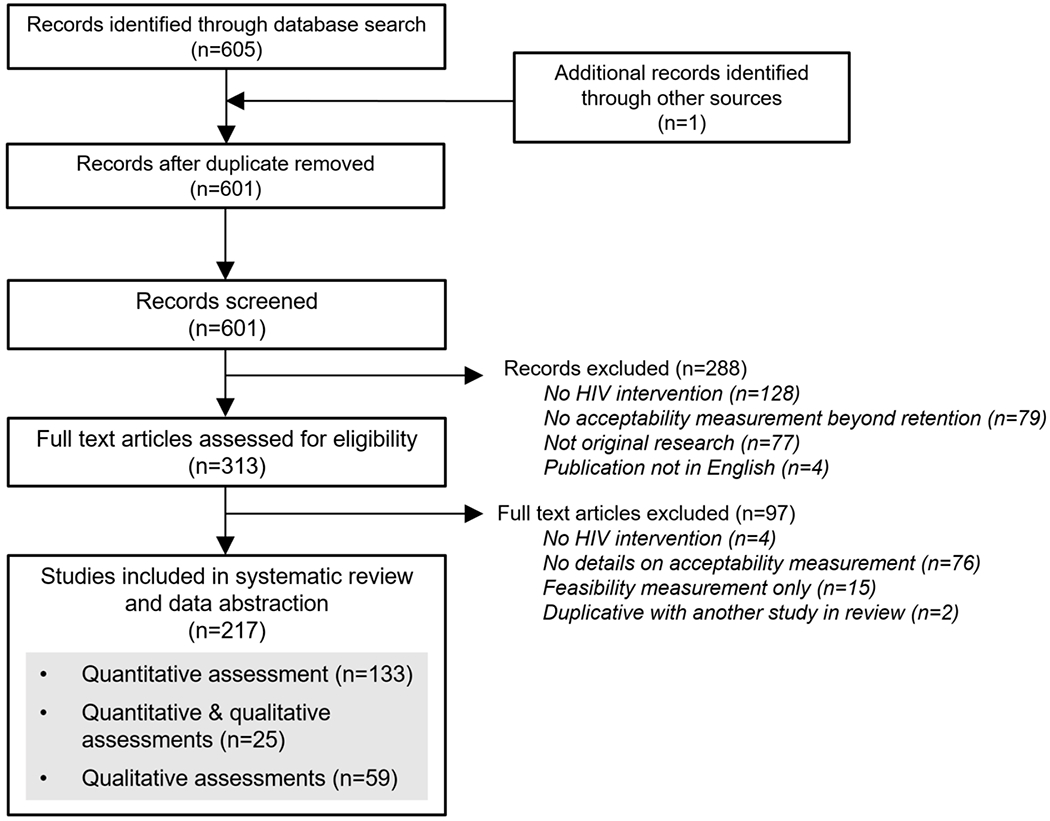

Our search identified 601 unique studies. After screening titles, abstracts, and full-text articles, we selected 217 studies for data abstraction and analysis (Figure 1). Of 384 excluded studies, the most common reasons for exclusion were that they did not focus on an HIV prevention or treatment intervention (34%), measure acceptability beyond intervention uptake/retention (21%), present original research (20%), or provide details on how acceptability was measured (20%).

Figure 1. PRISMA diagram of studies included in our review

We describe the characteristics of the studies included in our review in Table 1. Of the 217 studies, 133 (61%) measured acceptability only quantitatively, 59 (27%) measured acceptability only qualitatively, and 25 (12%) measured acceptability both quantitatively and qualitatively. Studies were located mainly in North America (45%) or sub-Saharan Africa (33%). Nearly half (47%) of studies that included quantitative acceptability assessments (n=158) had sample sizes of 200 or more, whereas about half (54%) of studies that included qualitative acceptability assessments (n=84) had sample sizes of fewer than 50. Study populations varied and included men who have sex with men (MSM) (29%), youth (21%), people living with HIV (19%), people not living with HIV (13%), and healthcare providers (11%). Qualitative acceptability assessments mainly concerned HIV testing (26%), behavioral (25%), and mHealth (23%) interventions, whereas quantitative acceptability assessments mainly concerned PrEP (23%), mHealth (22%), and HIV testing (19%) interventions.
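Because 25 studies used both approaches, the quantitative (n=158) and qualitative (n=84) columns of Table 1 overlap and together exceed the 217 included studies. The counts reported above reconcile by inclusion-exclusion, and the percentages follow from dividing by 217:

$$
\begin{aligned}
n_{\text{quant}} &= 133 + 25 = 158, \qquad n_{\text{qual}} = 59 + 25 = 84,\\
n_{\text{total}} &= n_{\text{quant}} + n_{\text{qual}} - n_{\text{both}} = 158 + 84 - 25 = 217,\\
\tfrac{133}{217} &\approx 61\%, \qquad \tfrac{59}{217} \approx 27\%, \qquad \tfrac{25}{217} \approx 12\%.
\end{aligned}
$$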

Table 1.

Summary of studies included in the systematic review

| Characteristic | Quantitative studiesᵃ (n=158) | Qualitative studiesᵃ (n=84) |
|---|---|---|
| Study locationᵇ | | |
| East Asia and Pacific | 16 (10%) | 8 (10%) |
| Europe and Central Asia | 14 (8%) | 4 (5%) |
| Latin America and the Caribbean | 12 (7%) | 5 (6%) |
| Middle East and North Africa | 1 (1%) | 0 (0%) |
| North America | 78 (47%) | 30 (35%) |
| South Asia | 1 (1%) | 6 (7%) |
| Sub-Saharan Africa | 43 (26%) | 32 (38%) |
| Year of publication | | |
| 2015-2016 | 46 (29%) | 22 (26%) |
| 2017-2018 | 53 (34%) | 28 (33%) |
| 2019-2020 | 59 (37%) | 34 (40%) |
| Sample size | | |
| 0-49 participants | 35 (22%) | 45 (54%) |
| 50-199 participants | 49 (31%) | 28 (34%) |
| ≥200 participants | 74 (47%) | 10 (12%) |
| Time of acceptability assessment | | |
| Before | 65 (41%) | 29 (35%) |
| During | 6 (4%) | 3 (4%) |
| After | 86 (54%) | 48 (57%) |
| Unclear | 6 (4%) | 3 (4%) |
| Not reported | 6 (4%) | 3 (4%) |
| Study populationᵇ | | |
| MSM | 48 (22%) | 22 (16%) |
| Transgender people | 8 (4%) | 5 (4%) |
| Sex workers | 3 (1%) | 2 (1%) |
| PWID | 7 (3%) | 3 (2%) |
| Pregnant/post-partum women | 3 (1%) | 9 (7%) |
| Youth | 37 (17%) | 17 (13%) |
| PLWH | 25 (12%) | 18 (13%) |
| HIV-negative people | 24 (11%) | 7 (5%) |
| Women | 19 (9%) | 10 (7%) |
| Men | 10 (5%) | 9 (7%) |
| Healthcare providers | 12 (5%) | 18 (13%) |
| Dyads | 3 (1%) | 0 (0%) |
| Other | 20 (9%) | 14 (10%) |
| HIV interventionsᵇ | | |
| PrEP | 39 (23%) | 11 (13%) |
| HIV testing | 30 (19%) | 22 (26%) |
| Microbicides | 11 (7%) | 8 (10%) |
| mHealth | 35 (21%) | 19 (23%) |
| Behavioral interventions | 25 (15%) | 21 (25%) |
| VMMC | 10 (6%) | 2 (2%) |
| Financial incentives | 0 (0%) | 3 (4%) |
| Other | 17 (10%) | 8 (10%) |

Abbreviations: People who inject drugs (PWID); People living with HIV (PLWH); pre-exposure prophylaxis (PrEP); voluntary medical male circumcision (VMMC); men who have sex with men (MSM)

ᵃ The sum of all quantitative and qualitative studies does not equal the total number of studies because some studies included both quantitative and qualitative measurements of HIV intervention acceptability.

ᵇ Some studies evaluated the acceptability of an intervention in multiple locations, among multiple populations, or across multiple interventions; thus, some of these categories exceed the total number of quantitative or qualitative studies.

Table 2 describes the quantitative assessments of acceptability, categorized by tier (1, 2, or 3) and HIV intervention type (biomedical or behavioral). Most quantitative studies were rated as Tier 2 (52%), followed by Tier 1 (32%) and Tier 3 (16%). Among the Tier 3 studies, no single validated scale emerged as a dominant acceptability measure (see Appendix Table 2 for more details on these scales, including frequency of use). Instead, 12 unique scales for acceptability assessment were identified among these studies, only five of which were used in more than one study: four studies used the Abbreviated Acceptability Rating Profile (20), four used the Client Satisfaction Questionnaire (21), two used the System Usability Scale (SUS) (22), two used the Health Information Technology Usability Evaluation Scale (23), and two used the Post-Study System Usability Questionnaire (24). Among these Tier 3 studies, usability-focused scales were more commonly used for quantitative acceptability assessment of behavioral mHealth interventions, while scales focused on products or medications were more commonly used for assessment of biomedical interventions. Only one Tier 3 quantitative study, which used the SUS, specified a threshold for acceptability a priori (25).
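Pre-specifying a threshold means fixing, before data collection, the score that will count as "acceptable." The Python sketch below illustrates this with the standard published SUS scoring rule (odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the sum is multiplied by 2.5 to give a 0-100 score). The cut-off of 68, a value often cited as an average SUS score, is an illustrative assumption only; the threshold actually used in study (25) is not reported here.

```python
from typing import Sequence

# Illustrative cut-off only; not the threshold used in study (25)
ACCEPTABILITY_THRESHOLD = 68.0


def sus_score(responses: Sequence[int]) -> float:
    """Standard SUS score (0-100) from ten item responses on a 1-5 scale."""
    if len(responses) != 10 or any(r not in range(1, 6) for r in responses):
        raise ValueError("SUS expects 10 responses, each an integer from 1 to 5")
    total = 0
    for item_number, r in enumerate(responses, start=1):
        # Odd items are positively worded (r - 1); even items negatively worded (5 - r)
        total += (r - 1) if item_number % 2 == 1 else (5 - r)
    # Each item contributes 0-4 points, so the 40-point raw maximum scales to 100
    return total * 2.5


def meets_threshold(responses: Sequence[int],
                    threshold: float = ACCEPTABILITY_THRESHOLD) -> bool:
    """Compare one respondent's SUS score with a threshold fixed in advance."""
    return sus_score(responses) >= threshold
```

For example, a respondent who answers 4 on every odd item and 2 on every even item scores `sus_score([4, 2] * 5) == 75.0`, which exceeds the illustrative cut-off. Fixing `threshold` as a constant before analysis is what distinguishes an a priori criterion from one chosen after inspecting the data.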

Table 2.

Summary of studies with quantitative assessment of acceptability identified in the review (N=158)

| HIV intervention typesᵃ | Study populations | Operational definitionsᵇ | Timing of assessmentsᵃ | Summary of items |
|---|---|---|---|---|
| Tier 1 (n=51; 32%): Assessed one acceptability component using one or two questions | | | | |
| Biomedical (n=37), e.g., HIVST; HIV treatments; PrEP; HIV vaccine; microbicides; VMMC | Adolescents; MSM; women; men; PLWH; FSWs; healthcare workers; PWID; parents of newborn infants | Willingness to use/recommend; perceived effectiveness and benefits; usability; satisfaction; attitudes | Before (n=21); During (n=1); After (n=10); Unclear (n=1); Not reported (n=5) | One or two questions on intervention benefits (Likert scale), willingness to use/recommend/pay/prescribe, preferences, likes and dislikes, usability, and/or use in different scenarios. |
| Behavioral (n=14), e.g., HIV testing in schools; mHealth for ART retention; needle syringe programs; case managers | MSM; PLWH; PWID; pregnant women living with HIV; young adults | Willingness to use/refer/accept/pay; likes; perceived barriers and benefits; preferences | Before (n=6); After (n=8) | One or two questions on intervention acceptance, likes (5-point visual analog scale), willingness to use/receive/recommend/pay, and/or helpfulness. |
| Tier 2 (n=82; 52%): Assessed more than one acceptability component with at least one question per component | | | | |
| Biomedical (n=45), e.g., HIVST; PrEP; microbicides; HIV treatments; VMMC | Adolescents; caregivers; men; women; PLWH; clients at STI clinics; FSWs; healthcare workers; key populations; parents of newborn infants; transgender women; young adults | Attitudes; willingness to use/buy; preferences; comfort level; confidence in use; perceived effectiveness/accuracy; likes and dislikes; satisfaction | Before (n=23); During (n=3); After (n=22); Unclear (n=2); Not reported (n=1) | Components assessed included: willingness to use/pay/recommend, preferences, ease of use, concerns, likes and dislikes, perceived accuracy, comfort, interest, attitudes/perceptions, overall experience, awareness, future use, and affordability. |
| Behavioral (n=37), e.g., HIV testing via CHWs; peer navigators; pharmacy delivery; mHealth for PrEP/ART adherence; mobile clinics | MSM; healthcare workers; Black adults; PLWH; PWID; young adults; Latinx MSM; TB clients | Acceptability; appeal; satisfaction; comfort level; good experience; willingness to use/try/recommend; liking; perceived benefits; usability; perceived effectiveness; convenience | Before (n=12); During (n=1); After (n=24); Unclear (n=3) | Components assessed included: quality, satisfaction, usability, willingness to use, comfort level, appropriateness, interest, benefits, attitudes, experiences, likeability, understanding/comprehension, and confidence. |
| Tier 3ᶜ (n=25; 16%): Used a validated scale and/or established theory or framework for acceptability assessment | | | | |
| Biomedical (n=6), e.g., ART; HIV vaccines; microbicides; PrEP | Men not living with HIV; PLWH; transgender women; MSM; women | Satisfaction; acceptability; willingness to recommend; likelihood of using; willingness to use; appropriateness | Before (n=2); After (n=4) | • HIV Treatment Satisfaction Questionnaire (HIVTSQ, 10-item) • Brief Acceptability Questionnaire (BAQ) • Product Acceptability Questionnaire (PAQ) • Product Preference Questionnaire (OPPQ) • Study Medication Satisfaction Questionnaire (SMSQ) • Modified scales published in other studies |
| Behavioral (n=19), e.g., mHealth/SMS for HIV prevention and PrEP/ART adherence; programs for sexual risk reduction, PrEP/ART adherence, and HIV testing | Persons who use drugs; MSM; transgender women; adolescents; PLWH; healthcare workers; incarcerated people; Black people | Acceptability; behavioral change; perceived helpfulness; likes and dislikes; satisfaction; usability; willingness to recommend/use; compatibility; relative advantage | Before (n=1); During (n=1); After (n=19) | • Abbreviated Acceptability Rating Profile • Client Satisfaction Questionnaire (8-item) • System Usability Scale (SUS) • Health Information Technology Usability Evaluation Scale (Health-ITUES, 20-item) • Post-Study System Usability Questionnaire (PSSUQ, 16-item) • Acceptability, Feasibility, Appropriateness Scale (AFAS, 13-item) • Acceptance and Action Questionnaire (7-item) • Modified scales published in other studies |

Abbreviations: men who have sex with men (MSM); people living with HIV (PLWH); people who inject drugs (PWID); tuberculosis (TB); female sex workers (FSWs); sexually transmitted infections (STIs); voluntary medical male circumcision (VMMC); antiretroviral treatment (ART); pre-exposure prophylaxis (PrEP); HIV self-testing (HIVST); community health workers (CHWs); short message service (SMS)

ᵃ Within each tier, some studies were counted in more than one intervention category if the intervention included both biomedical and behavioral components.

ᵇ The content of this column reflects the determination made by the authors of this review as to what the included studies assessed as “acceptability.” The included studies may use different terminology than that shown here (e.g., a study may claim to measure “acceptability” but, in our determination, actually measure “satisfaction”).

ᶜ Apart from the single study that set an a priori SUS threshold (25), none of the Tier 3 quantitative studies specified a threshold for determining acceptability a priori. Gray text indicates the populations, definitions of acceptability, and summary items from studies in this tier that used modified versions of scales published in other studies rather than established validated scales.

In Table 3, we describe the studies that used qualitative assessments of acceptability. As with the quantitative studies, most qualitative studies were rated as Tier 2 (70%), followed by Tier 1 (23%) and Tier 3 (7%). We identified six established theories or frameworks that were used for qualitative assessment of acceptability in Tier 3 studies: the Mensch, van der Straten, and Katzen acceptability framework (1), Morrow and Ruiz’s use-experience framework (2), the Technology Acceptance Model (26), the Theoretical Domains Framework (27,28), the TFA (15,16), and the Unified Theory of Acceptance and Use of Technology (29). None of these theories or frameworks was used in more than one study. They were applied across diverse participant populations, including MSM, sex workers, and healthcare workers; because so few theories/frameworks for qualitative acceptability assessment were identified, no clear patterns emerged for assessment of biomedical versus behavioral interventions. In Appendix Table 3, we detail the theories and frameworks employed in the studies we reviewed (including studies with quantitative acceptability assessments); in some cases, however, the theory or framework was not used to assess acceptability.
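The tier percentages reported for Tables 2 and 3 follow directly from the tier counts in each table. A short Python check, with the counts copied from the table headers, reproduces them; the function name `tier_shares` is an illustrative choice.

```python
from typing import Dict, Tuple


def tier_shares(counts: Dict[str, int]) -> Tuple[int, Dict[str, int]]:
    """Return the total and each tier's share as a whole-number percentage."""
    total = sum(counts.values())
    shares = {tier: round(100 * n / total) for tier, n in counts.items()}
    return total, shares


# Tier counts as reported in the headers of Tables 2 and 3
quantitative = {"Tier 1": 51, "Tier 2": 82, "Tier 3": 25}
qualitative = {"Tier 1": 19, "Tier 2": 59, "Tier 3": 6}

print(tier_shares(quantitative))  # (158, {'Tier 1': 32, 'Tier 2': 52, 'Tier 3': 16})
print(tier_shares(qualitative))   # (84, {'Tier 1': 23, 'Tier 2': 70, 'Tier 3': 7})
```

None of these shares falls exactly on a half percent, so Python's round-half-to-even behavior does not affect the results.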

Table 3.

Summary of studies with qualitative assessments of acceptability included in the review (N=84)

| HIV intervention typesᵃ | Study populations | Operational definitionsᵇ | Timing of assessmentsᵃ | Summary of items |
|---|---|---|---|---|
| Tier 1 (n=19; 23%): Assessed one acceptability component using one or two questions | | | | |
| Biomedical (n=6), e.g., cure (cell and gene therapy); PrEP; rectal microbicides; PrEP drug monitoring; HIVST | MSM; general population adults; people who use drugs; pharmacy clients; PLWH; pregnant women living with HIV; laboratory staff | Attitudes and feelings; beliefs; ease of use; experience with intervention; willingness to use | Before (n=4); During (n=1); After (n=1); Unclear (n=1) | What the intervention experience was like for participants; what could be done to improve the intervention; likelihood of using the product (e.g., self-testing kit); likelihood of recommending the product (e.g., self-testing kit) to a friend; willingness to use the product. |
| Behavioral (n=13), e.g., adherence clubs; drug monitoring; mHealth; peer delivery of HIV testing; integrated POC testing; peer navigation; financial incentives; HIV testing; vending machines or at-home HIVST | MSM; PLWH/TB; young MSM; transgender women; pregnant and post-partum women living with HIV; cis/trans FSWs; adult men; PLWH; women living with HIV; healthcare providers | Potential benefits; willingness to use; satisfaction; preference; willingness to recommend; perceived agreeableness; motivation; comfort with intervention; beliefs/attitudes; barriers/facilitators; perceived utility | Before (n=6); After (n=6); Unclear (n=1) | Experience of and satisfaction with the intervention; willingness and motivation to participate in the future; intervention acceptability and appropriateness; attitudes and preferences regarding intervention content and delivery. |
| Tier 2 (n=59; 70%): Assessed more than one acceptability component with at least one question per component | | | | |
| Biomedical (n=27), e.g., HIVST; HIV treatments; HIV vaccine; microbicides; PrEP; VMMC | Community members; cultural leaders; FSWs; healthcare workers; people who use contraception; PLWH; pregnant and post-partum women; stakeholders; transgender women; uncircumcised men; women; young MSM | “Acceptability”ᶜ; attitudes/opinions; concerns; interest; likes and dislikes; perceptions; perceived benefits; preferences; quality of service; satisfaction; willingness to use/support/recommend | Before (n=12); After (n=12); Not reported (n=3) | Awareness, acceptability, and possible use of the intervention; views on dosing regimens/frequency and distribution sites; understanding of product efficacy; worries and concerns about a product (e.g., PrEP); willingness to use; ability to use; thoughts on how to make using the product easier; prior knowledge of a product (e.g., PrEP); perceived barriers and facilitators to future uptake; preferences for PrEP access; likes and dislikes about a product; recommendations for nationwide implementation. |
| Behavioral (n=32), e.g., financial incentives for ART adherence; mHealth for HIV prevention; motivational interviewing; peer navigation and counseling | Adolescents; caregivers; community stakeholders; healthcare workers; PLWH; pregnant PLWH; people who use drugs; migrants; MSM; Native American adolescents; transgender youth | “Acceptability”ᶜ; attitudes; areas for improvement; barriers/challenges and benefits; cultural relevance; ease of use; likes and dislikes; preferences; satisfaction; willingness to recommend; willingness to engage | Before (n=5); During (n=1); After (n=26); Unclear (n=1) | Ease/difficulty of using the intervention; what was helpful about the intervention; thoughts on intervention delivery (e.g., frequency of calls); thoughts on intervention modules (usefulness, improvements, likes and dislikes, importance); how relatable the intervention content is; willingness to recommend to a friend; willingness to participate and engage in the intervention; satisfaction; appropriateness; cultural relevance; contextual factors that affect intervention uptake; recommendations for improving design; perceived impact on targeted behavior. |
| Tier 3 (n=6; 7%): Used an established theory or framework for acceptability assessment | | | | |
| Biomedical (n=4), e.g., rectal microbicides | HIV− women; transgender women; MSM | Willingness to use; perceived barriers and facilitators to uptake; preferences | Before (n=2); During (n=1); After (n=1) | • Morrow and Ruiz (use experiences) • Theoretical Framework of Acceptability • Technology Acceptance Model • Mensch acceptability framework |
| Behavioral (n=2), e.g., HIV/STI risk reduction program; mobile smartphone sexual health app; stigma-mitigation training | Healthcare workers; men; incarcerated women; young MSM | Satisfaction; usability; experiences with use; helpfulness; appropriateness | After (n=2) | • Theoretical Domains Framework • Unified Theory of Acceptance and Use of Technology |

Abbreviations: men who have sex with men (MSM); people living with HIV (PLWH); people who inject drugs (PWID); tuberculosis (TB); female sex workers (FSWs); sexually transmitted infections (STIs); voluntary medical male circumcision (VMMC); antiretroviral treatment (ART); pre-exposure prophylaxis (PrEP); HIV self-testing (HIVST); point-of-care (POC)

ᵃ Within each tier, some studies were counted in more than one intervention category if the intervention included both biomedical and behavioral components.

ᵇ The content of this column reflects the determination made by the authors of this review as to what the included studies assessed as “acceptability.” The included studies may use different terminology than that shown here (e.g., a study may claim to measure “acceptability” but, in our determination, actually measure “satisfaction”).

ᶜ These studies did not include an operational definition of acceptability.

Both the quantitative (Table 2) and qualitative (Table 3) assessments of acceptability captured in this review focused on a wide range of HIV prevention, treatment, and service delivery interventions for diverse populations. They also used a variety of operational definitions of acceptability and timepoints for acceptability assessment. Common operational definitions of acceptability included willingness to use/recommend, perceived effectiveness and benefits, likes and dislikes, satisfaction, and usability. Most acceptability assessments were conducted before or after an intervention was implemented, with few occurring during an intervention. No clear pattern in operational definitions of acceptability or assessment timing emerged by intervention type (behavioral or biomedical) or study tier.

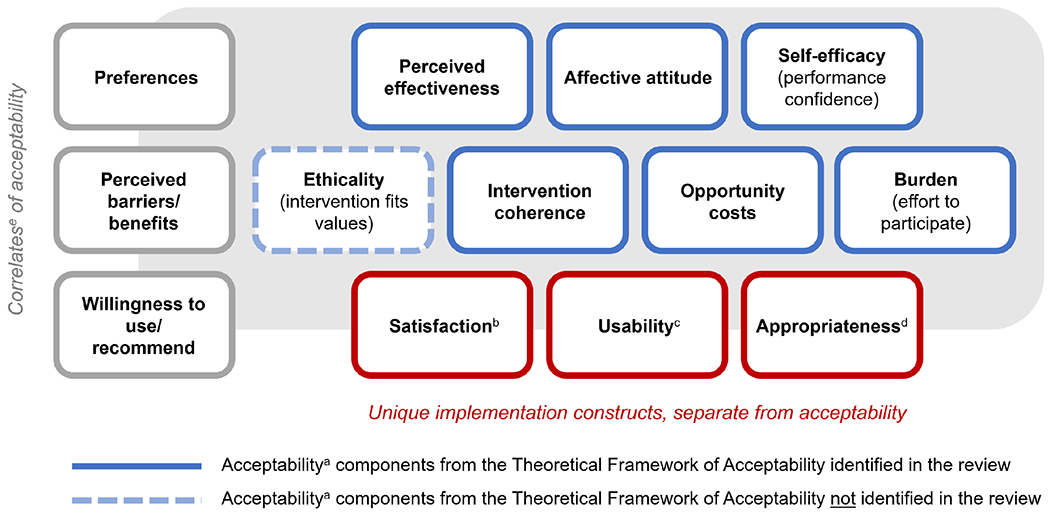

Figure 2 shows the components of acceptability captured in the scale items and operational definitions of the studies included in our review, as mapped to the TFA (15,16). Ethicality was the only TFA component that did not emerge in the studies included in our review. Our analysis also revealed that, despite claiming to measure acceptability, many studies measured correlates of acceptability (preferences, perceived barriers/benefits, and willingness to use/recommend) or constructs that, though relevant to implementation, are conceptually distinct from acceptability (e.g., satisfaction, usability, and appropriateness) (19).

Figure 2. Components of acceptabilityᵃ identified in our systematic review of the HIV intervention and service delivery literature, compared to those in the Theoretical Framework of Acceptability.

ᵃ Acceptability is defined as a multi-faceted construct that reflects the extent to which people delivering or receiving a healthcare intervention consider it to be appropriate, based on anticipated or experienced cognitive and emotional responses to the intervention (Sekhon 2017).

ᵇ Satisfaction is the state of being content or fulfilled with a service or intervention based on one’s needs and desires or being content with the general service-delivery experience (Proctor 2011; Giese 200; Rothschild 2021).

ᶜ Usability is defined as the ease with which an intervention, product, or system can be learned and used within a specific context (Grudniewicz 2015; Gagliardi 2011).

ᵈ Appropriateness is the perceived fit, relevance, or compatibility of the innovation or evidence-based practice for a given practice setting, provider, or consumer and/or the perceived fit of the innovation to address a particular issue or problem (Proctor 2011).

ᵉCorrelates of acceptability are factors associated with acceptability, whether predictors of acceptability or variables correlated with it, without any implication about the direction of the relationship.

DISCUSSION

We conducted a systematic review of research studies published from 2015-2020 that measured the acceptability of socio-behavioral and biomedical HIV prevention and treatment interventions and service delivery models. Among the studies included in our final review, many assessed only one component of acceptability (e.g., affective attitude), and most did not use a validated scale or an established theory or framework to inform the acceptability assessment. Among the studies that did use a validated scale or theory/framework, the scales and theories/frameworks varied, and few were used in more than one study. While our data extraction captured many components of acceptability identified in the TFA (15,16), an established multi-dimensional acceptability framework, we also captured many other “components” that are more commonly classified as correlates of acceptability (e.g., willingness to use/recommend, perceived barriers and benefits) or as separate implementation constructs (e.g., satisfaction, usability, appropriateness) (19). Our findings emphasize the need for a psychometrically sound, validated acceptability instrument for HIV intervention and service delivery research: one that is easy to complete, has a clear threshold for acceptability determination, and can be applied across varying interventions and adapted to different populations and settings.

Narrow and psychometrically questionable methods of assessing acceptability (i.e., those that do not use a validated scale or an established theory/framework and pre-specified thresholds) risk yielding incomplete acceptability data that offer little insight and are not actionable. Understanding acceptability based on the anticipated or experienced cognitive and emotional responses of intervention users or recipients may inform refinements tailored to a specific target population or setting (30,31). A more granular assessment of the components that make up the broader concept of acceptability can help improve the social and behavioral congruence of intervention implementation and, ultimately, real-world intervention effectiveness (32,33). Product developers of biomedical interventions may benefit from an early (and ongoing) focus on product acceptability to optimize the drug vehicle, dosage, and use considerations throughout the research and development process (1). In addition, the appeal, fit, and interest of a particular intervention for a specific target population, viewed from the user’s vantage point, are key to its adoption and ultimate health impact at the implementation and roll-out stage. Understanding end users’ views, values, and preferences regarding the potential benefits and harms of an intervention is also key to its approval and recommendation by regulatory bodies and policy makers (4,34,35).

We found that many studies used study retention or adherence data as the sole indicator of acceptability or did not describe how acceptability was assessed; these studies were excluded from full-text review. We considered acceptability to be a multi-faceted concept that is conceptually separate from behavioral outcomes such as intervention retention and adherence (1,2,16). Participants might, for example, adhere to an intervention or remain engaged in a study despite considering the intervention unacceptable. They might be motivated by the benefits of the intervention or of study participation, but this does not mean that, given other options, they would continue using the intervention in the future. For this reason, a direct assessment of participants’ ratings of acceptability, separate from adherence or engagement, is critical to developing interventions with the greatest potential benefit. Acceptability may drive (i.e., act as a mediator for) other behavioral variables, but it is most instructive to view it as a distinct construct related to individuals’ perceptions of, and experiences engaging with, a given intervention or service in their context.

Our review of acceptability assessment in the HIV literature captured all TFA acceptability components except ethicality. In the TFA, ethicality is defined as “the extent to which the intervention has good fit with an individual’s value system” (15,16). Although participants likely considered, at least to some extent, whether an intervention fit with their value system, no researchers opted to measure this as a distinct construct influencing acceptability. Researchers may view it as too distal or diffuse an influence on acceptability judgments, or studies may capture it within measures of internalized or experienced stigma rather than as a component of acceptability. Future work to refine the TFA might include attempts to directly assess the role of value systems in decisions about acceptability and whether this dimension can be measured accurately.

In this review, we also found that a number of self-described acceptability studies actually assessed implementation science constructs that are related to, but conceptually distinct from, acceptability, including satisfaction (being content or fulfilled with an intervention or with a general service-delivery experience (19,36,37)), usability (the ease with which an intervention can be learned and used (38,39)), and appropriateness (the perceived fit, relevance, or compatibility of the intervention for a given setting (19)). We note that the categorization of acceptability components, acceptability correlates, and implementation science constructs is somewhat subjective. A better understanding of how different implementation science constructs (acceptability as well as related constructs) are defined in “classical” behavior change and health psychology theories, implementation theories, and evaluation frameworks could help researchers delineate these constructs more clearly (40).
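To make that categorization step explicit, a reviewer could keep a small codebook that sorts each author-reported operational definition into a TFA component, a correlate of acceptability, or a distinct implementation construct, and flags anything else for human judgment. The sketch below is a hypothetical coding aid, not the procedure used in this review: the groupings follow the text and Appendix Table 1, while the label strings, the `CODEBOOK` dictionary, and the `classify`/`classify_study` helpers are illustrative assumptions.

```python
from enum import Enum


class Category(Enum):
    """Buckets used in this review to sort authors' operational definitions."""
    TFA_COMPONENT = "TFA acceptability component"
    CORRELATE = "correlate of acceptability"
    DISTINCT_CONSTRUCT = "distinct implementation construct"
    UNCLASSIFIED = "needs reviewer judgment"


# Hypothetical codebook: the groupings follow the text (TFA components from
# Appendix Table 1; correlates and distinct constructs as named in the review),
# but the exact label strings and the lookup approach are assumptions.
CODEBOOK = {
    "affective attitude": Category.TFA_COMPONENT,
    "burden": Category.TFA_COMPONENT,
    "ethicality": Category.TFA_COMPONENT,
    "intervention coherence": Category.TFA_COMPONENT,
    "opportunity costs": Category.TFA_COMPONENT,
    "perceived effectiveness": Category.TFA_COMPONENT,
    "self-efficacy": Category.TFA_COMPONENT,
    "preferences": Category.CORRELATE,
    "perceived barriers/benefits": Category.CORRELATE,
    "willingness to use/recommend": Category.CORRELATE,
    "satisfaction": Category.DISTINCT_CONSTRUCT,
    "usability": Category.DISTINCT_CONSTRUCT,
    "appropriateness": Category.DISTINCT_CONSTRUCT,
}


def classify(definition: str) -> Category:
    """Look up one operational definition; unknown labels are flagged, not guessed."""
    return CODEBOOK.get(definition.strip().lower(), Category.UNCLASSIFIED)


def classify_study(definitions: str) -> dict[str, Category]:
    """Split a semicolon-separated list of definitions and classify each one."""
    return {d.strip(): classify(d) for d in definitions.split(";") if d.strip()}


# A study reporting one TFA component and one label outside the codebook.
print(classify_study("Perceived effectiveness; behavior change"))
```

Routing unrecognized labels (such as "behavior change") to an explicit "needs reviewer judgment" bucket, rather than forcing them into the nearest category, is one way to keep the subjective calls visible and auditable.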

Not pre-specifying a threshold for acceptability determination in quantitative instruments (as was the case for almost all the Tier 3 studies captured in this review) reduces the overall rigor of acceptability research. Without an a priori threshold, researchers may select thresholds post hoc, arbitrarily or subjectively, in ways that suggest high intervention acceptability, which introduces potential measurement bias. The absence of pre-specified thresholds also makes it harder to compare acceptability determinations across studies. However, we acknowledge that not all validated scales have recommended thresholds to inform acceptability determination and that existing thresholds may not hold when a scale is used in a new setting or population in which the scale and threshold have not been validated (as is often the case). Thus, as new acceptability scales are developed and validated and response data accumulate, researchers may consider recommending a threshold, along with contextual considerations that can inform acceptability determination, to help improve the rigor of these assessments. We also acknowledge that setting a pre-determined cut-off for acceptability determination is not always feasible or appropriate and that data may at times have to be presented descriptively and left open to interpretation.
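As an illustration of what "pre-specified" can mean in practice, the decision rule can be written down as a fixed object before any data are collected and then applied unchanged to the responses. The following is a minimal sketch assuming a hypothetical multi-item Likert-type scale scored 1 to 5; the `AcceptabilityThreshold` class, the participant cut-off of 4.0, and the 75% sample target are illustrative assumptions, not thresholds recommended by this review or taken from any validated scale.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class AcceptabilityThreshold:
    """Hypothetical a priori decision rule, fixed (e.g., in a protocol) before data collection."""
    item_min: int              # lowest possible item score, e.g., 1 on a 5-point Likert scale
    item_max: int              # highest possible item score, e.g., 5
    participant_cutoff: float  # mean item score at or above which a participant's rating counts as acceptable
    sample_proportion: float   # share of participants needed to call the intervention acceptable overall


def participant_means(responses: list[list[int]], rule: AcceptabilityThreshold) -> list[float]:
    """Average each participant's item scores, rejecting out-of-range values."""
    means = []
    for items in responses:
        if not items:
            raise ValueError("each participant needs at least one item score")
        for score in items:
            if not rule.item_min <= score <= rule.item_max:
                raise ValueError(f"score {score} is outside the {rule.item_min}-{rule.item_max} range")
        means.append(mean(items))
    return means


def determine_acceptability(responses: list[list[int]], rule: AcceptabilityThreshold) -> tuple[float, bool]:
    """Apply the pre-specified rule unchanged; returns (proportion meeting cutoff, decision)."""
    means = participant_means(responses, rule)
    if not means:
        raise ValueError("no responses to evaluate")
    proportion = sum(m >= rule.participant_cutoff for m in means) / len(means)
    return proportion, proportion >= rule.sample_proportion


# Illustrative use with made-up scores for four participants.
RULE = AcceptabilityThreshold(item_min=1, item_max=5, participant_cutoff=4.0, sample_proportion=0.75)
responses = [[5, 4, 4], [3, 3, 4], [4, 5, 5], [4, 4, 4]]
print(determine_acceptability(responses, RULE))  # (0.75, True)
```

Freezing the dataclass is a small safeguard against adjusting the rule after seeing the data; the substantive protection, of course, comes from registering the values in a protocol before data collection.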

In this review, many studies assessed acceptability retrospectively, after clients or providers had experienced or delivered the intervention or model of service delivery. This approach may be appropriate for behavioral interventions, which are often complex (e.g., counseling services (41,42)) or consist of a package of intervention services (e.g., six-month PrEP dispensing supported with interim HIV self-testing (43)) that clients may find hard to fully comprehend before experiencing them. This approach may also be appropriate for biomedical interventions when it informs intervention components that can be modified (e.g., packaging that can make the intervention more acceptable without changing the “active ingredient”), but less appropriate when it informs components whose modification requires regulatory approval or collaboration with private-sector partners. Retrospective acceptability assessments, however, may be biased if they are completed only among individuals who chose to engage and/or persist with the intervention, while those who did not find the intervention acceptable may have dropped it before assessment.
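The selection problem in retrospective assessment can be made concrete with a small numerical sketch. The cohort below is entirely invented, and it assumes we know the rating each non-completer would have given, which real studies cannot observe; it shows only that averaging over completers can overstate acceptability when dropout is related to low acceptability. The `cohort` data and the `mean_rating` helper are hypothetical.

```python
from statistics import mean

# Hypothetical cohort: each tuple is (rating on a 1-5 scale the participant would
# give if asked, whether they were still enrolled at the retrospective assessment).
# Values are invented for illustration; they are not data from any study.
cohort = [
    (5, True), (4, True), (4, True), (5, True), (3, True),
    (2, False), (1, False), (2, False), (3, False), (4, True),
]


def mean_rating(records: list[tuple[int, bool]], completers_only: bool) -> float:
    """Average rating among everyone, or only among participants retained to assessment."""
    ratings = [rating for rating, retained in records if retained or not completers_only]
    return mean(ratings)


full = mean_rating(cohort, completers_only=False)
retrospective = mean_rating(cohort, completers_only=True)
print(f"whole cohort: {full:.2f}; completers only: {retrospective:.2f}")
# whole cohort: 3.30; completers only: 4.17
```

In this toy example, dropouts are concentrated among low raters, so the completers-only mean is inflated by almost a full scale point; if dropout were unrelated to acceptability, the two means would be expected to be similar.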

Prospective acceptability assessments, which were also captured in many studies in this review and which mimic the consumer experience, may therefore be better suited to some interventions. Prospective assessments are appropriate for informing the design and development of interventions or models of service delivery so that, when these become available to clients and providers, they have the greatest probability of adoption. This approach also mimics real-world settings, in which individuals must make choices about trying new products or interventions without any prior experience using or engaging with them. Prospective acceptability assessments, however, are by definition anticipatory rather than based on real experience and thus may be less valid than retrospective assessments. When conducting prospective acceptability assessments, it is important to present options neutrally to help limit bias and potential rejection (44). Acceptability can also be assessed while the intervention is ongoing, which can provide real-time feedback and indicate whether mid-implementation adaptations are needed to enhance intervention acceptability and potential downstream effectiveness.

The populations and settings in which the acceptability of HIV prevention and treatment interventions and service delivery models was assessed in this review were diverse. They included people living and not living with HIV, young people, cisgender men, cisgender women, and healthcare workers in high-, middle-, and low-income countries. Because the assessment approaches were so varied, no single approach emerged as the standard of practice for any population or setting. As the HIV prevention and treatment field continues to develop and refine best practices for acceptability assessment, approaches tailored to different populations and settings should be considered. For example, when assessing the acceptability of interventions among underserved populations at increased risk of HIV acquisition, such as sex workers, transgender people, or adolescents, it is critical to reflect on who holds the power in the interaction and on participants’ ability to candidly express their attitudes toward the intervention. The design or implementation of the acceptability assessment may need to be adapted to empower participants and ensure the reliability of findings. Another important consideration is what alternatives to the assessed intervention are available to different populations in different settings, as these may influence participants’ attitudes toward and perceptions of the intervention presented to them. Additionally, perceptions of intervention acceptability may vary by context, including geography and culture, which can affect how well intervention effectiveness transfers to new settings. This emphasizes the importance of conducting acceptability assessments in new settings before introducing an intervention, when possible, to determine whether adaptations are needed to enhance acceptability and potential downstream effectiveness.

Based on the findings of this review, we developed recommendations for researchers interested in conducting acceptability assessments of HIV prevention and treatment interventions or service delivery strategies (Box 1). These recommendations include selecting the components of acceptability (more than one; e.g., affective attitude, burden) that are most relevant to the intervention, choosing whether to collect qualitative data, quantitative data, or both, selecting the best timing for assessment (e.g., before, during, or after the intervention), and selecting a validated scale or established theory or framework to inform the assessment. We recommend pre-specifying the threshold for acceptability determination, based either on the literature and prior thresholds when using a validated scale or on a priori criteria when using a new measure. Additionally, we recommend pilot testing all acceptability assessments in the populations and settings of interest; adapting the assessment to the context in which it is conducted (including the translatability of items and concepts into non-English languages); and, where needed, adjusting how the assessment is administered (e.g., who, what, where, when) to minimize the impact of power differentials between participants and researchers.

Box 1. Recommendations for conducting studies on the acceptability of HIV prevention or treatment interventions or service delivery strategies.

| 1. Select the acceptability components relevant to your intervention: not all acceptability components may be relevant, so select only those that are (preferably more than two) and clearly define them. |

| 2. Select quantitative or qualitative data collection: determine if you would like to assess acceptability using quantitative or qualitative data or both (it may depend on the resources available to you and the type of data you aim to collect). |

| 3. Select timing for acceptability assessment: determine the timing of assessment (e.g., before, during, or after the intervention – or at all three time points) that would best meet your research objectives. |

| 4. Select a validated scale or established theory/framework: when selecting a scaleᵃ or theory/frameworkᵇ, ensure that it measures acceptability and not another implementation construct (e.g., appropriateness) and that it assesses all the acceptability components you have deemed pertinent to your work. Additionally, confirm the populations and settings in which the scale or theory/framework has been validated and consider whether the items and response patterns would be appropriate for the populations and settings of interest. |

| 5. Pre-specify your acceptability threshold: if you are using a validated scale, specify your threshold a priori based on the literature and prior thresholds used to enhance the robustness of and reduce any potential bias in your findings. If you are using a new scale, specify a threshold based on a priori criteria and hypotheses. |

| 6. Pilot test your assessment in your populations and settings of interest: to ensure the assessment is understood, appropriate, and working as intended. |

| 7. Adapt the validated scale or established theory/framework: if needed, adapt the assessment you are using so it is appropriate for the populations and settings of interest. Also consider the translatability of the items and response patterns into non-English languages, as appropriate. |

| 8. Reflect on and consider the role of power differentials in your study: be sure to consider the role of power differentials in your assessment and adjust data collection techniques accordingly to minimize social desirability bias. |

ᵃOf the Tier 3 validated scales identified in our review, the following measured acceptability rather than another implementation construct: the Acceptability and Action Questionnaire (AAQ); the Acceptability, Feasibility, Appropriateness Scale (AFAS); the Abbreviated Acceptability Rating Profile (AARP); the Brief Acceptability Questionnaire (BAQ); and the Product Acceptability Questionnaire (PAQ). Other scales to consider include the Theoretical Framework of Acceptability (TFA) Generic Questionnaire (in press during the review) and the Acceptability of Intervention Measure (AIM; commonly used in implementation science).

ᵇOf the Tier 3 established theories and frameworks identified in our review, the following measured acceptability rather than another implementation construct: the Mensch, van der Straten, and Katzen acceptability framework; Morrow and Ruiz’s use experience framework; the Technology Acceptance Model (TAM); the TFA; and the Unified Theory of Acceptance and Use of Technology (UTAUT).

This review has strengths and limitations. A strength of this review is its comprehensiveness. Screening more than 600 titles and extracting and analyzing data from more than 200 studies gave us broad insight into current acceptability assessments and may increase the applicability of our recommendations to a wide range of HIV prevention and treatment interventions and service delivery models. Additionally, more than one author reviewed the data abstraction for each included study, increasing the reliability of our findings. Consolidating study findings into general categories (e.g., HIV intervention, population, operational acceptability definition) helped us identify broad themes across studies; however, it also obscured some nuanced differences between studies and could have created false dichotomies between overlapping categories. For example, we separated interventions into biomedical and behavioral interventions for ease of description, recognizing that most biomedical interventions are, in reality, bio-behavioral because their uptake and use rely on human behaviors (45). We also chose to use study authors’ definitions of acceptability, rather than imposing our own interpretation on their methods, even when those definitions did not fit within more established acceptability frameworks such as the TFA (15,16). Finally, this review focused on HIV prevention and treatment interventions and service delivery models, so our findings on acceptability assessment may have limited generalizability to prevention and treatment interventions for other diseases.

Future research might expand the literature search beyond HIV. This could identify acceptability measures commonly used in other fields (such as the Acceptability of Intervention Measure, or AIM (46)) that could be adapted for HIV intervention and service delivery research. Work is also needed to directly compare the most commonly used Tier 3 acceptability assessments across fields and to subject them to more rigorous psychometric evaluation. New frameworks that consolidate the components of acceptability found across current theories and frameworks would be helpful, as would specific recommendations on how best to assess each component.

CONCLUSION

In sum, the HIV prevention, treatment, and service delivery field increasingly recognizes the importance of assessing the acceptability of new and existing interventions to increase uptake and engagement in care over time. However, inconsistent acceptability assessment in the field makes it challenging to interpret and apply the findings of these studies. The current conflation of acceptability with other implementation constructs (e.g., satisfaction) creates confusion and impedes our ability to identify ways to improve HIV service delivery. Our recommendations may help guide future acceptability assessments that will inform the development and implementation of novel interventions and service delivery strategies for HIV and beyond.

Declarations:

This review was supported by the National Institute of Mental Health (P30 MH123248, K99 MH123369, K99 MH121166); University of Washington/Fred Hutchinson Center for AIDS Research (AI027757) and the Office of HIV/AIDS Network Coordination (HANC) which is funded in whole or in part with Federal funds from the Division of AIDS, National Institute of Allergy and Infectious Diseases, National Institutes of Health, Department of Health and Human Services, grant number UM1 AI068614, entitled Leadership Group for a Global HIV Vaccine Clinical Trials (Office of HIV/AIDS Network Coordination) with additional support from the National Institute of Mental Health. No author reports any conflict of interest. All authors contributed equally to the review of the articles, extraction of data, drafts and edits of the manuscript, and interpretation of results.

Appendix Table 1.

Definition of constructs in the Theoretical Framework of Acceptability

| Construct | Definitionᵃ |
|---|---|
| Affective attitude | How an individual feels about the intervention |
| Burden | The perceived amount of effort that is required to participate in the intervention |
| Ethicality | The extent to which the intervention has good fit with an individual’s value system |
| Intervention coherence | The extent to which the participant understands the intervention and how it works |
| Opportunity costs | The extent to which benefits, profits, or values must be given up to engage in the intervention |
| Perceived effectiveness | The extent to which the intervention is perceived as likely to achieve its purpose |
| Self-efficacy | The participant’s confidence that they can perform the behavior(s) required to participate in the intervention |

ᵃSekhon M, Cartwright M, Francis JJ. Acceptability of healthcare interventions: an overview of reviews and development of a theoretical framework. BMC Health Serv Res. 2017 Jan 26;17(1):88.

Appendix Table 2.

Validated scales for acceptability assessment identified from the systematic review

| Validated scale | Count | Operational definitionsᵃ | Interventions | Study populations | Timing | Preset cut-off? |
|---|---|---|---|---|---|---|
| Abbreviated Acceptability Rating Profile (10-item) | 4 | Acceptability (Shrestha, 2020) | Behavioral (mHealth/SMS for PrEP adherence) | People who use drugs | After | N |
| | | Behavior change; perceived helpfulness (Hidalgo, 2015) | Behavioral (for HIV prevention) | Young MSM | After | N |
| | | Acceptability (Madkins, 2019) | Behavioral (mHealth for HIV prevention) | Young MSM | After | N |
| | | Acceptability (Shrestha, 2018) | Behavioral (for PrEP adherence) | People who use methadone | After | N |
| Client Satisfaction Questionnaire (8-item) | 4 | Satisfaction (Moitra, 2020) | Behavioral (MI for PrEP) | MSM | After | N |
| | | Satisfaction (Sevelius, 2020) | Behavioral (sexual risk) | Transgender women | After | N |
| | | Satisfaction; helpfulness/appropriateness (Johnson, 2015) | Behavioral (HIV prevention program) | Incarcerated women | Before, During, After | N |
| | | Satisfaction (Cordova, 2019) | Behavioral (mHealth for HIV prevention) | Adolescents | After | N |
| Systems Usability Scale (SUS, 10-item) | 2 | Usability (Sullivan, 2017) | Behavioral (mHealth for HIV prevention) | MSM | After | Y/N |
| | | Usability (Horvath, 2020) | Behavioral (mHealth for HIV testing) | MSM | After | Y/N |
| Health Information Technology Usability Evaluation Scale (Health-ITUES, 20-item) | 2 | Satisfaction; usability (Gannon, 2020) | Behavioral (for HIV prevention) | Young MSM | After | |
| | | Usability; satisfaction (Ignacio, 2019) | Behavioral (mHealth/app for HIV prevention) | Young MSM | After | N |
| Post-study System Usability Questionnaire (PSSUQ, 16-item) | 2 | Satisfaction; usability (Gannon, 2020) | Behavioral (for HIV prevention) | Young MSM | After | N |
| | | Usability; satisfaction (Ignacio, 2019) | Behavioral (mHealth/app for HIV prevention) | Young MSM | After | N |
| HIV treatment satisfaction Questionnaire (HIVTSQ, 10-item) | 1 | Satisfaction (Murray, 2019) | Biomedical (long-acting injectable ART) | PLWH who use substances | After | N |
| Acceptability, Feasibility, Appropriateness Scale (AFAS, 13-item) | 1 | Satisfaction (Kutner, 2020) | Behavioral (stigma mitigation) | Healthcare workers | After | N |
| Brief Acceptability Questionnaire (BAQᵇ) | 1 | Satisfaction (Leyva, 2015) | Biomedical (microbicides) | Men not living with HIV | After | N |
| Product Acceptability Questionnaire (PAQᵇ) | 1 | Satisfaction (Leyva, 2015) | Biomedical (microbicides) | Men not living with HIV | After | N |
| Product Preference Questionnaire (OPPQᵇ) | 1 | Satisfaction (Leyva, 2015) | Biomedical (microbicides) | Men not living with HIV | After | N |
| Acceptability and Action Questionnaire (7-item) | 1 | Satisfaction (Moitra, 2015) | Behavioral (acceptance-based behavioral therapy) | PLWH | After | N |
| Study Medication Satisfaction Questionnaire (SMSQ, 12-item) | 1 | Satisfaction; willingness to recommend (Murray, 2018) | Biomedical (PrEP) | Men not living with HIV | After | N |
| Adapted scales from these studies: | | | | | | |
| - ATN 082 Study | 1 | Likelihood of using | Biomedical (PrEP) | Transgender women | Before | |
| - Week’s scale for vaginal microbes | 1 | Acceptability | Biomedical (microbicides) | MSM | Before | |
| - Previous Uganda study | 1 | Willingness to recommend | Behavioral (mHealth/SMS for ART) | PLWH | After | |
| - Brow-Peterside et al, 2000 | 1 | Compatibility; relative advantage; observability | Behavioral (mHealth for condom use) | Black adults | After | |
| - Australian study | 1 | Acceptability; attitudes/opinions | Biomedical (HIVST) | Men who purchase sex | After | |
| - Chirwa, 2011 | 1 | Willingness to use | Biomedical (Condoms) | Women | After | |
| - Bauermeister, 2015 & Widman 2017 | 2 | Satisfaction; usability | Behavioral (sexual risk reduction) | Adolescents | After | |
| | | Satisfaction; willingness to recommend | Behavioral (mHealth for HIV prevention) | Adolescent women | After | |
| - Vandelanotte, 2003 | 1 | Usability | Behavioral (ART adherence) | Black women living with HIV | After | |
| - Njozing, 2011; Thomas, 2009; Sutiono, 2016 | 1 | Appropriateness | Biomedical (HIV testing via community healthcare workers) | TB clients; healthcare workers | Before | |
| - Lewis, 2018 | 1 | N/A | Biomedical (HIVST) | Key populations | N/A | |
| - James, 2018 | 1 | N/A | Behavioral (mobile clinic for sexual health services) | Adolescents & young adults | N/A | |
| - Haper, 2003 | 1 | N/A | Behavioral (mHealth for HIV prevention) | Adolescents | During, After | |
| - Sullivan, 2014; Stephenson, 2011; Stephenson, 2014; Stephenson, 2012 | 1 | Willingness to participate | Behavioral (couples HIV testing & counseling) | PLWH | Before | |
| - Marlatt, 2018; D’Amico, 2010; Osilla, 2008 | 1 | Satisfaction | Behavioral (mHealth for substance use & HIV risk behaviors) | People who use drugs | After | |

Abbreviations: HIV self-testing (HIVST); antiretroviral treatment (ART); pre-exposure prophylaxis (PrEP); motivational interviewing (MI); people living with HIV (PLWH); tuberculosis (TB); men who have sex with men (MSM)

ᵃThese operational definitions of acceptability were determined by reviewing the authors’ descriptions of how they assessed acceptability in their study.

ᵇThe number of items in these scales was not clear from the review of the literature.

Appendix Table 3.

Theories and frameworks for qualitative acceptability assessment identified from the systematic review

| Underlying theory | Count | Operational definitionsᵃ | Interventions | Study populations | Timing |
|---|---|---|---|---|---|
| Mensch, van der Straten, Katzen acceptability framework | 1 | Use attributes, product characteristics, drug formulation and dosing regimen, effect on sex, product-related norms and perceived partner acceptability (Montgomery, 2017) | Biomedical (PrEP intravaginal rings) | Cis-Women | During and After |
| Morrow & Ruiz’s framework | 1 | Satisfaction; future use (Bauermeister, 2020) | Biomedical (PrEP intravaginal rings) | Young women not living with HIV | After |
| Technology Acceptance Model (TAM) | 1 | Willingness to use; perceived barriers/facilitators; preferences (Chakrapani, 2017) | Biomedical (microbicides) | MSM | Before |
| Theoretical Domains Framework (TDF) | 1 | Satisfaction (Kutner, 2020) | Behavioral (stigma mitigation) | Healthcare workers | After |
| Theoretical Framework of Acceptability (TFA) | 1 | Affective attitude, burden, ethicality, intervention coherence, opportunity costs, perceived effectiveness, self-efficacy (Chakrapani, 2020) | Biomedical (PrEP) | Transgender women | Before |
| Unified Theory of Acceptance and Use of Technology (UTAUT) | 1 | Usability (Gannon, 2020) | Behavioral (mHealth/app for sexual health) | Young MSM | After |

Abbreviations: pre-exposure prophylaxis (PrEP); men who have sex with men (MSM)

ᵃThese operational definitions of acceptability were determined by reviewing the authors’ descriptions of how they assessed acceptability in their study.

REFERENCES

- 1.Mensch BS, van der Straten A, Katzen LL. Acceptability in Microbicide and PrEP Trials: Current Status and a Reconceptualization. Curr Opin HIV AIDS. 2012. Nov;7(6):534–41. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Morrow KM, Ruiz MS. Assessing Microbicide Acceptability: A Comprehensive and Integrated Approach. AIDS Behav. 2008. Mar;12(2):272–83. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Donenberg GR, Merrill KG, Obiezu-Umeh C, Nwaozuru U, Blachman-Demner D, Subramanian S, et al. Harmonizing Implementation and Outcome Data Across HIV Prevention and Care Studies in Resource-Constrained Settings. Glob Implement Res Appl. 2022. Apr 7;1–12. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Griffin JB, Ridgeway K, Montgomery E, Torjesen K, Clark R, Peterson J, et al. Vaginal ring acceptability and related preferences among women in low- and middle-income countries: A systematic review and narrative synthesis. PLoS ONE. 2019. Nov 8;14(11):e0224898. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Atkins K, Yeh PT, Kennedy CE, Fonner VA, Sweat MD, O’Reilly KR, et al. Service delivery interventions to increase uptake of voluntary medical male circumcision for HIV prevention: A systematic review. PloS One. 2020;15(1):e0227755. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Young I, McDaid L. How acceptable are antiretrovirals for the prevention of sexually transmitted HIV?: A review of research on the acceptability of oral pre-exposure prophylaxis and treatment as prevention. AIDS Behav. 2013. Jul 30;18(2):195–216. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Montgomery ET, van der Straten A, Chitukuta M, Reddy K, Woeber K, Atujuna M, et al. Acceptability and use of a dapivirine vaginal ring in a phase III trial. AIDS Lond Engl. 2017. May 15;31(8):1159–67. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Golub SA, Operario D, Gorbach PM. Pre-exposure prophylaxis state of the science: empirical analogies for research and implementation. Curr HIV/AIDS Rep. 2010. Nov;7(4):201–9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Carballo-Diéguez A, Exner T, Dolezal C, Pickard R, Lin P, Mayer KH. Rectal microbicide acceptability: results of a volume escalation trial. Sex Transm Dis. 2007. Apr;34(4):224–9. [DOI] [PubMed] [Google Scholar]

- 10.Coly A, Gorbach PM. Microbicide acceptability research: recent findings and evolution across phases of product development. Curr Opin HIV AIDS. 2008. Sep;3(5):581–6. [DOI] [PubMed] [Google Scholar]

11. Mantell JE, Myer L, Carballo-Diéguez A, Stein Z, Ramjee G, Morar NS, et al. Microbicide acceptability research: current approaches and future directions. Soc Sci Med. 2005 Jan;60(2):319–30.

12. Severy LJ, Tolley E, Woodsong C, Guest G. A framework for examining the sustained acceptability of microbicides. AIDS Behav. 2005 Mar;9(1):121–31.

13. Celum CL, Gill K, Morton JF, Stein G, Myers L, Thomas KK, et al. Incentives conditioned on tenofovir levels to support PrEP adherence among young South African women: a randomized trial. J Int AIDS Soc. 2020 Nov;23(11):e25636.

14. Somefun OD, Casale M, Haupt Ronnie G, Desmond C, Cluver L, Sherr L. Decade of research into the acceptability of interventions aimed at improving adolescent and youth health and social outcomes in Africa: a systematic review and evidence map. BMJ Open. 2021 Dec 20;11(12):e055160.

15. Sekhon M, Cartwright M, Francis JJ. Acceptability of healthcare interventions: an overview of reviews and development of a theoretical framework. BMC Health Serv Res. 2017 Jan 26;17(1):88.

16. Sekhon M, Cartwright M, Francis JJ. Acceptability of health care interventions: A theoretical framework and proposed research agenda. Br J Health Psychol. 2018 Sep;23(3):519–31.

17. Woodsong C, Alleman P. Sexual pleasure, gender power and microbicide acceptability in Zimbabwe and Malawi. AIDS Educ Prev. 2008 Apr;20(2):171–87.

18. Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ. 2021 Mar 29;372:n71.

19. Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, et al. Outcomes for Implementation Research: Conceptual Distinctions, Measurement Challenges, and Research Agenda. Adm Policy Ment Health. 2011;38(2):65–76.

20. Tarnowski KJ, Simonian SJ. Assessing treatment acceptance: the Abbreviated Acceptability Rating Profile. J Behav Ther Exp Psychiatry. 1992 Jun;23(2):101–6.

21. Larsen DL, Attkisson CC, Hargreaves WA, Nguyen TD. Assessment of client/patient satisfaction: Development of a general scale. Eval Program Plann. 1979;2(3):197–207.

22. Brooke J. SUS: a “quick and dirty” usability scale. In: Usability Evaluation in Industry. London: Taylor and Francis; 1996.

23. Schnall R, Cho H, Liu J. Health Information Technology Usability Evaluation Scale (Health-ITUES) for Usability Assessment of Mobile Health Technology: Validation Study. JMIR Mhealth Uhealth. 2018 Jan 5;6(1):e4.

24. Lewis JR. Psychometric Evaluation of the Post-Study System Usability Questionnaire: The PSSUQ. Proc Hum Factors Soc Annu Meet. 1992 Oct 1;36(16):1259–60.

25. Sullivan PS, Driggers R, Stekler JD, Siegler A, Goldenberg T, McDougal SJ, et al. Usability and Acceptability of a Mobile Comprehensive HIV Prevention App for Men Who Have Sex With Men: A Pilot Study. JMIR Mhealth Uhealth. 2017 Mar 9;5(3):e26.

26. Davis FD. Perceived Usefulness, Perceived Ease of Use, and User Acceptance of Information Technology. MIS Q. 1989;13(3):319–40.

27. Michie S, Johnston M, Abraham C, Lawton R, Parker D, Walker A, et al. Making psychological theory useful for implementing evidence based practice: a consensus approach. Qual Saf Health Care. 2005 Feb;14(1):26–33.

28. Cane J, O’Connor D, Michie S. Validation of the theoretical domains framework for use in behaviour change and implementation research. Implement Sci. 2012 Apr 24;7:37.

29. Venkatesh V, Morris MG, Davis GB, Davis FD. User Acceptance of Information Technology: Toward a Unified View. MIS Q. 2003;27(3):425–78.

30. Mayo AJ, Browne EN, Montgomery ET, Torjesen K, Palanee-Phillips T, Jeenarain N, et al. Acceptability of the Dapivirine Vaginal Ring for HIV-1 Prevention and Association with Adherence in a Phase III Trial. AIDS Behav. 2021 Aug;25(8):2430–40.

31. van der Straten A, Browne EN, Shapley-Quinn MK, Brown ER, Reddy K, Scheckter R, et al. First Impressions Matter: How Initial Worries Influence Adherence to the Dapivirine Vaginal Ring. J Acquir Immune Defic Syndr. 2019 Jul 1;81(3):304–10.

32. Sekhon M, van der Straten A, MTN-041/MAMMA Study Team. Pregnant and breastfeeding women’s prospective acceptability of two biomedical HIV prevention approaches in Sub Saharan Africa: a multisite qualitative analysis using the Theoretical Framework of Acceptability. PLoS One. 2021. In press.

33. Sekhon M, Cartwright M, Francis JJ. Development of a theory-informed questionnaire to assess the acceptability of healthcare interventions. BMC Health Serv Res. 2021.

34. World Health Organization. WHO Handbook for Guideline Development. 2nd ed. [Internet]. Geneva: World Health Organization; 2014 [cited 2021 Oct 13]. Available from: https://apps.who.int/iris/bitstream/handle/10665/145714/9789241548960_eng.pdf?sequence=1&isAllowed=y

35. Koechlin FM, Fonner VA, Dalglish SL, O’Reilly KR, Baggaley R, Grant RM, et al. Values and Preferences on the Use of Oral Pre-exposure Prophylaxis (PrEP) for HIV Prevention Among Multiple Populations: A Systematic Review of the Literature. AIDS Behav. 2017 May;21(5):1325–35.

36. Giese J, Cote J. Defining Consumer Satisfaction. Acad Mark Sci Rev. 2000;4:1–24.

37. Rothschild CW, Brown W, Drake AL. Incorporating Method Dissatisfaction into Unmet Need for Contraception: Implications for Measurement and Impact. Stud Fam Plann. 2021 Mar;52(1):95–102.

38. Gagliardi AR, Brouwers MC, Palda VA, Lemieux-Charles L, Grimshaw JM. How can we improve guideline use? A conceptual framework of implementability. Implement Sci. 2011 Mar 22;6:26.

39. Grudniewicz A, Bhattacharyya O, McKibbon KA, Straus SE. Redesigning printed educational materials for primary care physicians: design improvements increase usability. Implement Sci. 2015 Nov 4;10:156.

40. Nilsen P. Making sense of implementation theories, models and frameworks. Implement Sci. 2015 Apr 21;10:53.

41. Baron D, Scorgie F, Ramskin L, Khoza N, Schutzman J, Stangl A, et al. “You talk about problems until you feel free”: South African adolescent girls’ and young women’s narratives on the value of HIV prevention peer support clubs. BMC Public Health. 2020 Jun 26;20(1):1016.

42. Velloza J, Poovan N, Ndlovu N, Khoza N, Morton JF, Omony J, et al. Adaptive HIV pre-exposure prophylaxis adherence interventions for young South African women: Study protocol for a sequential multiple assignment randomized trial. PLoS One. 2022 Apr 13;17(4):e0266665.

43. Ngure K, Ortblad KF, Mogere P, Bardon AR, Thomas KT, Mangale D, et al. Six-month PrEP dispensing with HIV self-testing to improve the efficiency of delivery in Kenya: a randomized non-inferiority implementation trial. Lancet HIV. 2022. In press.

44. Wei SS, Zou YF, Xu YF, Liu JJ, Nong QX, Bai Y, et al. [Acceptability and influencing factors of pre-exposure prophylaxis among men who have sex with men in Guangxi]. Zhonghua Liu Xing Bing Xue Za Zhi. 2011 Aug;32(8):786–8.

45. Amico KR, Mansoor LE, Corneli A, Torjesen K, van der Straten A. Adherence support approaches in biomedical HIV prevention trials: experiences, insights and future directions from four multisite prevention trials. AIDS Behav. 2013 Jul;17(6):2143–55.

46. Weiner BJ, Lewis CC, Stanick C, Powell BJ, Dorsey CN, Clary AS, et al. Psychometric assessment of three newly developed implementation outcome measures. Implement Sci. 2017 Aug 29;12(1):108.