Abstract

Understanding pedestrian motion is critical for many real-world applications, e.g., autonomous driving and social robot navigation. It is a challenging problem since autonomous agents require a complete understanding of their surroundings, including complex spatial, social and scene dependencies. In trajectory prediction research, spatial and social interactions are widely studied while scene understanding has received less attention. In this paper, we study the effectiveness of different encoding mechanisms to understand the influence of the scene on pedestrian trajectories. We leverage a recurrent Variational Autoencoder to encode pedestrian motion history, social interaction with other pedestrians and semantic scene information. We then evaluate the performance on various public datasets, such as ETH–UCY, Stanford Drone and Grand Central Station. Experimental results show that utilizing a fully segmented map, for explicit scene semantics, outperforms other variants of scene representations (semantic and CNN embedding) for trajectory prediction tasks.

Keywords: Deep learning, Trajectory prediction, Semantic segmentation, Variational auto encoder

Introduction

Forecasting pedestrian motion is imperative for many applications such as autonomous vehicle (AV) navigation, safe maneuvering of social robots and surveillance systems. Accurate prediction of pedestrian motion can help an AV to plan its motion ahead and enable emergency braking in critical situations. For surveillance systems, pedestrian motion understanding can help detect suspicious behaviour. Recently, with the spread of COVID-19, trajectory predictions can be used to monitor safe distancing and generate alerts when social distancing guidelines are not met [1]. The task of pedestrian trajectory prediction is challenging in nature due to erratic dynamics and the ability of humans to make sudden directional changes. However, when people walk, they tend to follow learned societal norms, for example they avoid collisions and give right of way as necessary, but the social context and influences may not be consistent with all of those around them.

Pedestrian motion is also influenced by scene context as they try to avoid stationary objects and follow social rules (e.g. passing on the left). Prior to deep learning techniques, modeling of pedestrian motion was carried out using hand crafted features and experimentally validated. The Social Force (SF) model [2] used vector functions to incorporate pedestrian interactions. To understand scene influence, SF used repulsive and attractive potentials to incorporate interaction with scene objects. As deep learning emerged, researchers have shifted towards data driven methodologies for modeling pedestrian behavior. To implement such techniques a video sequence is preprocessed to generate trajectories and a neural network model is then trained as a sequence to sequence learning task.

In this paper, we investigate in depth the influence of scene structure on pedestrian motion. Figure 1 shows the approach used to incorporate different scene encoding mechanisms (convolutional, semantic, and full segmentation), which builds upon our prior work [3]. We run extensive experiments on a wide range of datasets, including Stanford Drone and Grand Central Train Station, with more complex properties than the more commonly used ETH–UCY. We also provide a small upgrade of the baseline architecture, from the prior Recurrent Auto-Encoder (RAE) to a Variational Autoencoder (VAE), to provide multimodal predictions. Our results indicate that the use of full semantics helps to achieve better prediction results compared to convolutional and semantic embedding techniques.

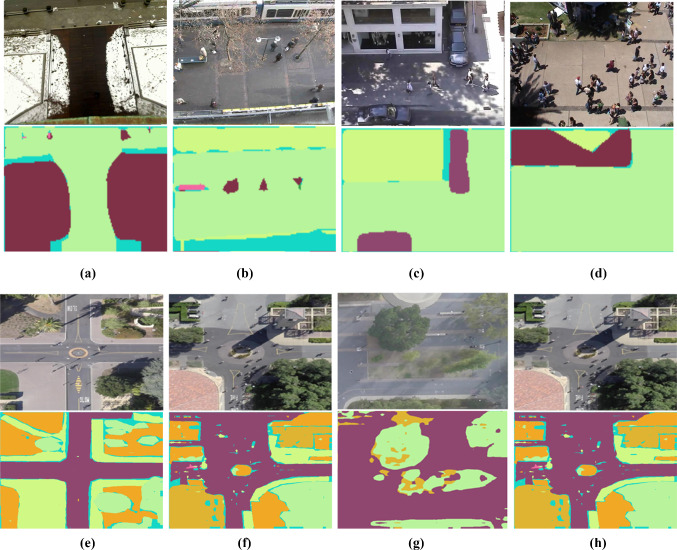

Fig. 1.

Our proposed model based on a Recurrent VAE which uses PSP-Net to incorporate Scene Semantics. We explicitly compare popular CNN scene encoding (green), semantic segmentation encoding (dashed blue), and fully segmented images for scene representation (color figure online)

Related work

Much of the current work in pedestrian motion prediction can be classified as sequence-sequence modeling which incorporates recurrent neural networks (RNNs), graph neural networks and/or Transformers. RNN variants, e.g. the long short-term memory (LSTM) [4] or gated recurrent unit (GRU) have been used extensively to capture the sequential temporal information of pedestrian trajectories. Graph networks help explicitly model relationships between pedestrians. Finally, Transformer networks have become popular recently, due to tremendous success in natural language processing (NLP), with their ability to model high-level temporal dependencies.

Trajectory prediction research is broadly divided into two areas: (i) inter-pedestrian interaction, where a grid framework in terms of an occupancy map is proposed to encode social context, and (ii) pedestrian-scene context, where researchers study the influence of scene structure (sidewalks/roads/exits).

Pedestrian–Pedestrian interaction

Social LSTM (S-LSTM) [5] was the seminal work which focused on understanding pedestrian interaction using a data driven methodology as opposed to physics-based modeling. It proposed that each pedestrian behaviour could be modeled using an LSTM and the hidden states of LSTMs were shared between pedestrians in a fixed neighbourhood known as the social pooling layer. Similar works [6, 7] extended this general idea for complex social concepts such as groups. Gupta et al. introduced Social GAN (S-GAN) [8], a GAN-based network in conjunction with LSTM encoder–decoder to model the multi-modal behavior choice of pedestrians. It also used an efficient global pooling technique, in contrast to the local pooling of Social LSTM, for social interaction.

Since pedestrian behaviour is stochastic in nature, Variational Autoencoders (VAE) [9] and inverse optimal control [10] techniques have also been studied. In the context of autonomous driving, these trajectory prediction methods have been extended for the motion planning task. Deo and Trivedi [11] addressed the social interaction of vehicles through a convolutional social pooling layer and then predicted different vehicle maneuvers. Becker et al. [12] proposed the RED predictor and compared its prediction results with linear interpolation as a baseline. It consists of an RNN encoder with a stacked multi-layer perceptron (MLP) and uses smooth trajectories to forecast future time steps, which helps prevent the accumulation of error. Compared to other baseline methods like RNN-MLP, RNN-encoder-MLP and Temporal Convolutional Networks (TCN), RED has strong results on the TrajNet [13] challenge despite its simple architecture.

More recently, graph neural networks have become popular where pedestrian motion and their interaction with surroundings is modeled by creating a spatio-temporal graph [14, 15]. Graph networks provide flexible and explicit modeling of relationships since pedestrians are nodes and connections are made based on distances to others. However, these models fail to capture explicit scene interactions.

With advances in NLP research, Transformer networks [16] have evolved into a powerful sequence-to-sequence modeling technique. They have outperformed RNNs, which were previously used for NLP-related tasks such as machine translation, text auto-completion and sentiment analysis. Transformers do not process sequences step by step and instead model temporal dependencies using their strong self-attention mechanism. Giuliari et al. [17] implemented a Transformer for the trajectory prediction task by only encoding historical information of pedestrians; however, it does not capture any scene features or social interactions, leaving room for improvement. Others have combined graph networks with Transformers to capture more robust social cues (STAR-D [18] and STGT [19]). More recently, AgentFormer [20] modeled joint social and temporal interactions through Transformers.

Scene interaction

It is clear that scene structure, in addition to social dynamics, plays an important role in pedestrian motion (e.g. walls prevent motion). The Social-LSTM approach was extended by adding scene features. Bartoli et al. [21] used a context-based pooling strategy to include static scene features/points of interest. Peek into the Future (PIF) [22] and State Refinement LSTM (SR-LSTM) [23] extended S-LSTM by incorporating scene features and new pooling strategies to improve prediction results. Social Scene-LSTM (SS-LSTM) [24] used an explicit scene context branch paired with social context and self context (dynamics) for prediction. They used a CNN encoder for the scene that was trained from scratch specifically for trajectory prediction.

Many attention-based GAN models [25–27] have emerged recently which incorporate scene influence on pedestrian motion and generate multimodal predictions. They provide specific social and scene attention to focus on important characteristics of pedestrian motion. Multi-agent tensor fusion [28] leverages GANs and CNNs to capture dynamic vehicle behavior by fusing the motion of multiple agents and their respective scene context into a tensor.

The current state-of-the-art methods—Y-Net [29], PECNet [30], P2T [31]—use a two-stage modeling approach where first goals (destinations) are estimated and then trajectories are predicted conditioned on these final positions.

Though CNNs can effectively encode image content, semantic segmentation networks provide more explicit scene information. A common practice is to replace a pre-trained CNN feature extraction network with the encoding half of a semantic network. Sadeghian et al. [25] use such a scene encoding strategy through a Fully Convolutional Network (FCN) encoder, while [20, 32] use a hand-crafted semantic map. SSeg-LSTM [33] extended the idea of SS-LSTM to explicitly incorporate scene semantics through the use of a semantic segmentation network (SegNet [34]) to encode scene features and showed improvement compared to CNN encoding alone.

While semantic embedding has shown improvement, much of the semantic power in segmentation networks can be found in the decoding branch. A high percentage of parameters are effectively thrown-away when only using the encoding. This work examines the effectiveness of using semantically segmented images for incorporating scene into pedestrian trajectory prediction. The advantage is a plug-and-play update for many existing prediction architectures that can easily be updated with improved segmentation approaches.

Model architecture

Our model is a recurrent VAE architecture with three branches, as shown in Fig. 1. The top branch encodes historical motion/dynamics using an LSTM. The observed motion for each person i in a scene is denoted as $X_i = \{(x_i^t, y_i^t)\}_{t=1}^{T_{obs}}$, where $T_{obs}$ is the observed trajectory length. The middle branch takes into account social interactions between pedestrians using a grid-based pooling mechanism [8]. The final branch captures scene encoding via a semantic segmentation architecture. The three branch encodings are combined for each pedestrian and an LSTM decoder is used to predict their future trajectories. The output is defined as $\hat{Y}_i = \{(\hat{x}_i^t, \hat{y}_i^t)\}_{t=T_{obs}+1}^{T_{obs}+T_{pred}}$, where $T_{pred}$ is the predicted trajectory length.
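The grid-based view of social context used by the middle branch can be pictured as an occupancy map centred on each pedestrian. The sketch below is illustrative only: the function name `occupancy_grid`, the grid size and the cell width are assumptions made for this example, not values taken from this paper or from [8].

```python
from typing import List, Tuple

Point = Tuple[float, float]

def occupancy_grid(center: Point, others: List[Point],
                   grid_size: int = 4, cell: float = 0.5) -> List[List[int]]:
    """Count neighbours falling in each cell of a square grid centred on `center`.

    grid_size (cells per side) and cell (metres) are hypothetical values.
    """
    half = grid_size * cell / 2.0
    grid = [[0] * grid_size for _ in range(grid_size)]
    cx, cy = center
    for ox, oy in others:
        dx, dy = ox - cx, oy - cy
        # Ignore neighbours outside the local neighbourhood.
        if not (-half <= dx < half and -half <= dy < half):
            continue
        # Clamp to guard against floating-point edge cases at the border.
        col = min(int((dx + half) // cell), grid_size - 1)
        row = min(int((dy + half) // cell), grid_size - 1)
        grid[row][col] += 1
    return grid

# Two neighbours inside the 2 m x 2 m neighbourhood, one far away.
grid = occupancy_grid((0.0, 0.0), [(0.2, 0.2), (-0.7, 0.3), (5.0, 5.0)])
```

In a learned model the counts would typically be replaced by pooled hidden states of the neighbours; the counting version is shown only because it makes the spatial binning easy to inspect.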

Encoder

Dynamics branch

The minimum input used for trajectory prediction is the historical trajectory information $X_i$. These past positions provide context on pedestrian dynamics which can be decoded to produce a prediction of the future trajectory. The historical dynamics of pedestrian i are encoded through an MLP embedding layer which takes in the position coordinates $(x_i^t, y_i^t)$

$$e_i^t = \phi(x_i^t, y_i^t; W_{emb}) \tag{1}$$

where $\phi(\cdot)$ is an embedding function with $W_{emb}$ as a learnable embedding weight matrix. The spatio-temporal dynamics are encoded sequentially through an LSTM block to produce a hidden state sequence

$$h_i^t = \mathrm{LSTM}(h_i^{t-1}, e_i^t; W_{enc}) \tag{2}$$

with $W_{enc}$ a learnable weight matrix of observed motion.

Since VAEs have been shown to successfully model non-sequential data by recovering complex multi-modal distributions over the data space, we utilize a VAE to model our sequential hidden states $h_i^t$.

Scene segmentation branch

In order to understand the influence of scene structure we study three different types of scene encoding techniques with increasing scene contextualization:

CNN feature embedding,

Semantic embedding,

Full semantic scene segmentation embedding.

CNN feature embedding makes use of powerful object detection/recognition features to implicitly describe the scene. In our work we utilize the VGG16 network which has been pre-trained on ImageNet. Note that this network is not trained on any trajectory specific recognition task but relies on general descriptors. Additionally, other works have utilized changes in CNN features [24–27] while our work uses a static scene descriptor.

Scene semantics are incorporated in two ways based on the PSP-Net [35] encoder–decoder scene segmentation network. A popular method for semantic embedding is to utilize the scene encoder output (blue dashed line in Fig. 1). The idea is to provide a compact convolutional representation of the scene—based on semantics rather than pre-trained classification. However, this approach neglects the significant number of parameters in the decoder required to generate semantic images.

The final scene embedding technique utilizes the full PSP-Net encoder–decoder network and operates on fully segmented images. While more computationally heavy than CNN or semantic embedding, the use of the full segmentation network provides explicit semantic information and a distilled view of the scene (Fig. 2). The semantic segmentation images provide strong contextual cues (e.g. walk along sidewalks and not through trees) for trajectory prediction.

Fig. 2.

PSP-Net semantic output for a–d ETH–UCY and e–h Stanford Drone

To produce the final trajectory prediction, the scene features are passed through a linear layer to produce a fixed-size representation

$$s = \mathrm{Linear}(f_{scene}; W_{s}) \tag{3}$$

where $f_{scene}$ is the output from the different scene embedding techniques and $W_{s}$ are the scene feature weights.
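To make the linear projection of scene features concrete, the following sketch one-hot encodes a tiny segmentation label map, flattens it, and maps it to a fixed-size vector. It is a toy illustration under stated assumptions: the class ids, the 2x3 map, the output size of 4 and the random weights are all hypothetical, and the actual model may well use convolutional layers before the linear projection.

```python
import random
from typing import List

def one_hot_flatten(label_map: List[List[int]], num_classes: int) -> List[float]:
    """Flatten an HxW map of class ids into an H*W*num_classes one-hot vector."""
    flat: List[float] = []
    for row in label_map:
        for cls in row:
            flat.extend(1.0 if k == cls else 0.0 for k in range(num_classes))
    return flat

def linear(x: List[float], weights: List[List[float]], bias: List[float]) -> List[float]:
    """Compute y = W x + b, with W given as a list of rows."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]

# Hypothetical segmentation output: 0 = path, 1 = grass, 2 = building.
label_map = [[0, 0, 1],
             [2, 0, 1]]
features = one_hot_flatten(label_map, num_classes=3)

rng = random.Random(0)  # fixed seed so the sketch is reproducible
out_dim = 4             # assumed size of the fixed-size scene representation
W = [[rng.uniform(-0.1, 0.1) for _ in features] for _ in range(out_dim)]
b = [0.0] * out_dim
scene_vec = linear(features, W, b)
```

Because the output size is fixed by the weight matrix, the same downstream layers can consume the scene vector regardless of which of the three scene encodings produced the input features.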

Latent space

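After the encoding branches, information from the three branches is concatenated and embedded into a VAE latent space for each person i via a linear layer:

$$\mu_i = \mathrm{Linear}([h_i, p_i, s]; W_{\mu}) \tag{4}$$

$$\sigma_i = \mathrm{Linear}([h_i, p_i, s]; W_{\sigma}) \tag{5}$$

where $[h_i, p_i, s]$ is the concatenation of the dynamics, social and scene encodings, $W_{\mu}, W_{\sigma}$ are the weights of the linear layer, and $\mu_i, \sigma_i$ are the latent space mean and standard deviation, respectively.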

The probability model framework of the VAE can be written as:

$$p(x, z) = p(x \mid z)\, p(z) \tag{6}$$

The latent variables $z$ are drawn from a prior $p(z)$. The data $x$ has a likelihood $p(x \mid z)$ conditioned on the latent variables $z$.
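A common way to draw latent samples from a predicted mean and standard deviation is the reparameterisation trick, with a KL term pulling the posterior towards a standard normal prior. The text does not spell out either choice, so the sketch below is a generic VAE illustration under those assumptions; the function names are hypothetical.

```python
import math
import random
from typing import List

def sample_latent(mu: List[float], sigma: List[float], rng: random.Random) -> List[float]:
    """Reparameterised sample z = mu + sigma * eps, with eps ~ N(0, 1)."""
    return [m + s * rng.gauss(0.0, 1.0) for m, s in zip(mu, sigma)]

def kl_to_standard_normal(mu: List[float], sigma: List[float]) -> float:
    """KL( N(mu, diag(sigma^2)) || N(0, I) ), summed over latent dimensions."""
    return sum(0.5 * (s * s + m * m - 1.0) - math.log(s) for m, s in zip(mu, sigma))

rng = random.Random(42)
mu, sigma = [0.3, -0.1], [0.5, 1.2]

# Several samples from the same posterior give several candidate futures
# once each one is passed through the decoder (multimodal prediction).
samples = [sample_latent(mu, sigma, rng) for _ in range(5)]
kl = kl_to_standard_normal(mu, sigma)
```

Drawing more than one sample per pedestrian is what lets a VAE baseline produce multiple plausible trajectories, in contrast to a deterministic auto-encoder.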

Decoder

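At the decoding step, we sample $z_i$ from the latent space to initialize the hidden state $h_{dec,i}$ of the LSTM decoder. In order to train the network, ground truth embeddings $e_i^t$ are generated through an embedding function $\phi(\cdot)$. The final predicted positions are then obtained through the decoding steps:

$$e_i^{t} = \phi(x_i^{t-1}, y_i^{t-1}; W_{ed}) \tag{7}$$

$$(\hat{x}_i^{t}, \hat{y}_i^{t}) = \mathrm{LSTM}(h_{dec,i}^{t-1}, e_i^{t}; W_{dec}) \tag{8}$$

where $W_{ed}$ are the embedding weights and $W_{dec}$ are the decoder weights.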

Implementation and evaluation details

Our model uses an LSTM framework to encode observed trajectories (8 time steps, which correspond to 3.2 s) and PSP-Net to capture scene features. We utilize the same pooling method and training parameters as the generator module of S-GAN [8]. The latent space ($z$) has a dimension of 64. The decoder outputs future trajectory points (12 time steps, which correspond to 4.8 s). To implement the segmentation networks, we annotated different frames across the pedestrian datasets and generated image masks. The networks were trained for 200 epochs with a batch size of 2 using the Adam optimizer.
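The observation and prediction horizons can be illustrated with a small windowing sketch. Eight steps spanning 3.2 s imply a 0.4 s sampling interval, so twelve steps span 12 × 0.4 = 4.8 s. The helper name `split_windows` and the synthetic track are assumptions for illustration; the actual preprocessing pipeline is not described in the text.

```python
OBS_LEN, PRED_LEN = 8, 12  # time steps, as stated in the text
DT = 0.4                   # seconds per step, implied by 8 steps = 3.2 s

def split_windows(track, obs_len=OBS_LEN, pred_len=PRED_LEN):
    """Slide over one pedestrian track and return (observed, future) pairs."""
    total = obs_len + pred_len
    return [(track[s:s + obs_len], track[s + obs_len:s + total])
            for s in range(len(track) - total + 1)]

# Synthetic straight-line track with 22 positions (hypothetical data).
track = [(0.1 * t, 0.0) for t in range(22)]
pairs = split_windows(track)

obs_seconds = OBS_LEN * DT
pred_seconds = PRED_LEN * DT
```

Each pair holds 8 observed positions and the 12 positions that follow them, which is the input/target layout assumed by the encoder and decoder.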

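Trajectory evaluation is performed using the standard error metrics of average displacement error (ADE) and final displacement error (FDE). For ETH–UCY the metrics are reported in meters and for SDD in pixels.

$$\mathrm{ADE} = \frac{1}{N\,T_{pred}} \sum_{i=1}^{N} \sum_{t=1}^{T_{pred}} \left\lVert \hat{Y}_i^{t} - Y_i^{t} \right\rVert_2 \tag{9}$$

$$\mathrm{FDE} = \frac{1}{N} \sum_{i=1}^{N} \left\lVert \hat{Y}_i^{T_{pred}} - Y_i^{T_{pred}} \right\rVert_2 \tag{10}$$

where N is the total number of trajectories, $T_{pred}$ is the number of predicted time steps, and $\hat{Y}_i^{t}$ and $Y_i^{t}$ are the predicted and ground-truth positions of pedestrian i at prediction step t.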
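The two metrics can be computed directly from predicted and ground-truth positions. The sketch below follows the standard definitions (mean Euclidean error over all predicted steps for ADE, Euclidean error at the last step for FDE); the function name `ade_fde` and the toy data are assumptions for illustration.

```python
import math
from typing import List, Tuple

Traj = List[Tuple[float, float]]

def ade_fde(preds: List[Traj], truths: List[Traj]) -> Tuple[float, float]:
    """Return (ADE, FDE) averaged over N trajectories of equal length."""
    n = len(preds)
    ade_sum = 0.0
    fde_sum = 0.0
    for pred, truth in zip(preds, truths):
        # Per-step Euclidean distance between prediction and ground truth.
        dists = [math.dist(p, g) for p, g in zip(pred, truth)]
        ade_sum += sum(dists) / len(dists)
        fde_sum += dists[-1]
    return ade_sum / n, fde_sum / n

# Toy example: per-step errors are 0, 1 and 2 units.
pred = [[(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]]
truth = [[(0.0, 0.0), (1.0, 1.0), (2.0, 2.0)]]
ade, fde = ade_fde(pred, truth)
```

The units of the result follow the units of the input coordinates, which is why the same code yields meters on ETH–UCY and pixels on SDD.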

In each case, lower ADE or FDE indicates a better prediction. As is typical in literature, performance on a dataset is measured by the average ADE/FDE (in meters) on different data splits.

Experimental evaluation

In order to determine how best to represent scene influence in the trajectory prediction problem, we compare competing scene embeddings on three popular trajectory prediction datasets (ETH–UCY, SDD and Grand Central) that differ in properties such as the amount of scene influence.

ETH–UCY

The combined ETH [36] and UCY [37] dataset is a widely used public dataset for evaluating trajectory prediction methods. It has 5 scenes, known as ETH, Hotel, Univ, Zara1 and Zara2, with approximately 1500 trajectories altogether.

Quantitative analysis

Recent state-of-the-art architectures can broadly be categorized into GANs, Transformers/attention and graph neural networks, and can be further classified based on whether they also take into account the goals/destinations of agents during training. Our results are compared with the following baseline architectures:

LSTM: The vanilla LSTM is a basic encoder–decoder framework. S-LSTM [5] models pedestrian behaviour through individual LSTMs and social interactions through a pooling layer. It contains no scene context.

Generative Adversarial Networks (GAN): S-GAN [8] generates multi-modal predictions and captures pedestrian interaction through a pooling module. SoPhie [25] uses GANs with social and physical attention. It utilizes scene features with VGG-19 fine-tuned for the semantic embedding task. We also compare with the more recent architectures Goal-GAN [26], which predicts destinations and multi-modal trajectories towards the estimated goal, and MG-GAN [27], which uses a hierarchical GAN module to forecast multiple trajectory modes.

Transformers: Trajectory Transformer-TF [17] replaces the RNN/LSTM with a vanilla Transformer and leverages the effectiveness of self-attention to predict trajectories for individual people. It does not account for any social or scene information. AgentFormer [20] jointly predicts trajectories in a scene with a Transformer-based attention mechanism. It uses hand-crafted semantic maps to encode scene features.

Variational Autoencoders (VAE): Trajectron++ [15] uses a VAE and constructs a spatio-temporal graph between pedestrians with an added attention module to forecast node features. PECNet [30] explicitly encodes intermediate pedestrian destinations, and the final trajectory prediction is based on learned goals or intent. The Recurrent Auto-Encoder (RAE) [3], which is our previous work and an improvement over SSeg-LSTM [33], uses a vanilla auto-encoder to model pedestrian behaviour.

Our work utilizes a VAE as the base architecture, and we run experiments with different scene encoding techniques in conjunction with historical dynamics and social context. The CNN-embedding VAE incorporates social interaction and scene context with VGG-16 as a convolutional backbone. The semantic-embedding VAE uses the encoder from PSP-Net, trained on a pedestrian dataset, to obtain a semantic embedding. The full-segmentation VAE uses the fully segmented semantic output map in the scene branch to encode explicit scene context.

Social embedding versus scene embedding

We draw a comparison between models which involve social interaction and scene embedding. We observe that SoPhie, the CNN-embedding VAE and the semantic-embedding VAE outperform their closest neighbors, S-LSTM and S-GAN, which do not contain scene context. This shows that scene information plays an important role in predicting accurate trajectories. We further analyze the influence of scene structure by looking at different encoding mechanisms.

Scene embedding versus scene segmentation

While scene embedding provided marginal improvement over social embeddings, we observe that using a fully segmented output map (the full-segmentation VAE) provides significantly better results, especially on ADE. We speculate that most of the representational power of semantic segmentation networks comes from the decoder, which needs to produce semantic interpretation and labels from embeddings. The VGG-16 or ResNet (semantic-embedding VAE) encoders do not provide strong semantic insight; therefore, taking advantage of the decoder is necessary to accurately encode scene features.

In addition, model complexity also plays an important role in accurate trajectory prediction. From Table 1 we see that our previous work [3] based on Recurrent Auto Encoder (RAE) has slightly poorer results compared to VAE version. One reason can be attributed to the difference in their latent space structure. The VAE assumes that input data has some underlying probability distribution and attempts to find its parameters providing extra modeling and generalization ability.

Table 1.

ETH–UCY ADE/FDE (meters) comparison (lower is better)

| Model | Scene | Social | ETH | Hotel | Univ | Zara1 | Zara2 | Avg |
|---|---|---|---|---|---|---|---|---|
| S-LSTM [5] | | | 1.09/2.35 | 0.79/1.76 | 0.67/1.40 | 0.47/1.00 | 0.56/1.17 | 0.72/1.54 |
| LSTM [4] | | | 1.09/2.41 | 0.86/1.91 | 0.61/1.31 | 0.41/0.88 | 0.52/1.11 | 0.70/1.52 |
| S-GAN-VP20 [8] | | | 0.87/1.62 | 0.67/1.37 | 0.76/1.52 | 0.35/0.68 | 0.42/0.84 | 0.61/1.21 |
| SoPhie [25] | | | 0.70/1.43 | 0.76/1.67 | 0.54/1.24 | 0.30/0.63 | 0.38/0.78 | 0.54/1.15 |
| SR-LSTM [23] | | | 0.63/1.25 | 0.37/0.74 | 0.41/0.90 | 0.32/0.70 | 0.51/1.10 | 0.45/0.94 |
| STAR-D [18] | | | 0.56/1.11 | 0.26/0.50 | 0.41/0.90 | 0.31/0.71 | 0.52/1.15 | 0.41/0.87 |
| Goal-GAN [26] | | | 0.59/1.18 | 0.19/0.35 | 0.60/1.19 | 0.43/0.87 | 0.32/0.65 | 0.45/0.85 |
| MG-GAN [27] | | | 0.47/0.91 | 0.14/0.24 | 0.54/1.07 | 0.36/0.73 | 0.29/0.60 | 0.36/0.71 |
| Transformer-TF [17] | | | 0.61/1.12 | 0.18/0.30 | 0.35/0.65 | 0.22/0.38 | 0.17/0.32 | 0.31/0.55 |
| PECNet [30] | | | 0.54/0.87 | 0.18/0.24 | 0.35/0.60 | 0.22/0.39 | 0.17/0.30 | 0.29/0.48 |
| Trajectron++ [15] | | | 0.39/0.83 | 0.12/0.21 | 0.20/0.44 | 0.15/0.33 | 0.11/0.25 | 0.19/0.41 |
| AgentFormer [20] | | | 0.45/0.75 | 0.14/0.22 | 0.25/0.45 | 0.18/0.30 | 0.14/0.24 | 0.23/0.39 |
| Y-Net [29] | | | 0.28/0.33 | 0.10/0.14 | 0.24/0.41 | 0.17/0.27 | 0.13/0.22 | 0.18/0.27 |
| RAE [3] (CNN) | | | 0.86/1.65 | 0.89/1.75 | 0.56/1.34 | 0.42/0.80 | 0.40/0.81 | 0.62/1.23 |
| RAE [3] (semantic embedding) | | | 0.88/1.70 | 0.79/1.53 | 0.56/1.39 | 0.41/0.97 | 0.42/0.96 | 0.61/1.31 |
| RAE [3] (full segmentation) | | | 0.79/1.48 | 0.64/1.34 | 0.52/1.40 | 0.32/0.99 | 0.36/0.80 | 0.52/1.20 |
| VAE (vanilla) | | | 0.96/1.87 | 0.64/1.43 | 0.77/1.62 | 0.51/1.08 | 0.54/0.95 | 0.68/1.39 |
| VAE (CNN) | | | 0.94/1.69 | 0.63/1.44 | 0.69/1.69 | 0.41/0.83 | 0.53/0.96 | 0.65/1.24 |
| VAE (semantic embedding) | | | 0.93/1.60 | 0.61/1.45 | 0.64/1.38 | 0.47/0.81 | 0.52/0.94 | 0.62/1.25 |
| VAE (full segmentation) | | | 0.75/1.62 | 0.60/1.28 | 0.53/1.35 | 0.30/0.79 | 0.34/0.91 | 0.50/1.19 |

Bold indicates best performing model

Overall, relative to the vanilla VAE, the average ADE improves by (4.4, 8.8, 26.5)% with scene encoding via CNN, semantic embedding, and semantic segmentation, respectively.
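These percentages follow from the average ADE values of the four VAE rows in Table 1 (0.68, 0.65, 0.62 and 0.50). The short check below reproduces them; the helper name `pct_improvement` and the dictionary keys are illustrative labels rather than names from the paper.

```python
def pct_improvement(baseline: float, value: float) -> float:
    """Relative reduction of an error metric, in percent, rounded to 0.1."""
    return round(100.0 * (baseline - value) / baseline, 1)

vanilla_ade = 0.68  # average ADE of the VAE without scene encoding
variant_ade = {
    "CNN": 0.65,
    "semantic embedding": 0.62,
    "full segmentation": 0.50,
}
gains = {name: pct_improvement(vanilla_ade, ade) for name, ade in variant_ade.items()}
```

Because ADE is an error metric, a positive value means the variant reduces the error relative to the baseline.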

Qualitative analysis

We qualitatively analyze different scenarios where the availability of semantic scene segmentation plays an important role in trajectory forecasting. When pedestrians are walking they tend to traverse a walkable path, for example sidewalks, entrances/exits, etc. It is important for our architecture to semantically identify these points of interest.

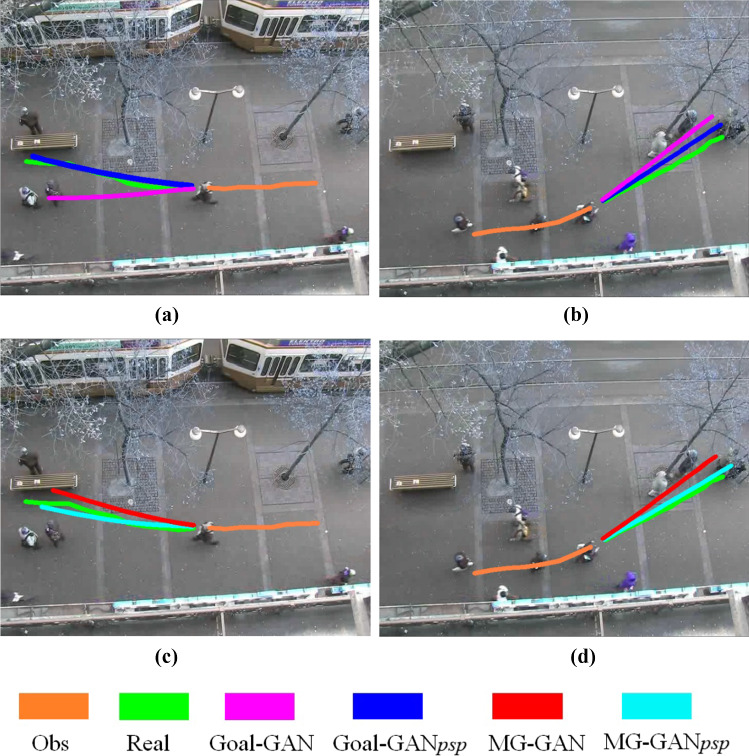

In Fig. 3a, the full-segmentation VAE is able to avoid the stopped pedestrians and the bench simultaneously. Since the vanilla VAE does not model scene features, it shows the worst performance. Similarly, in Fig. 3b, c we observe rich scene interaction, and the full-segmentation VAE produces predictions in which the pedestrian's future motion is closer to the ground truth than those of the vanilla, CNN-embedding and semantic-embedding VAEs.

Fig. 3.

ETH–UCY qualitative comparison shows improved performance with full PSP segmentation

In the ETH sequence in Fig. 3d–f, the full-segmentation VAE is able to predict a trajectory that follows the edge of the path next to the snow covering. This is because, as shown in Fig. 2, PSP-Net was better able to identify static objects (snow area and walkable path). In Fig. 3d the pedestrian keeps walking on the pavement (successfully identified by PSP-Net) and travels to enter the building, again highlighting the importance of semantic scene structure.

In all cases we see that the vanilla VAE was the worst performer, since it did not capture scene interactions. Though adding scene information in the form of VGG and semantic embedding improved the prediction performance, we observe that semantic obstacle information is not fully utilized by the CNN-embedding and semantic-embedding VAEs. Neither VGG nor semantic embeddings explicitly extract scene features, whereas the complete semantic map distinctly captures them, and hence we see improved performance for the full-segmentation VAE.

Scene semantics impact on recent architectures

We further investigate the importance of semantic maps by carrying out experiments on more recent architectures (Goal-GAN [26] and MG-GAN [27]). Dendorfer et al. [27] use CNN encoding with physical attention to incorporate scene features, similar to SoPhie [25], while [26] estimates target destinations and then a routing module predicts trajectories in the direction of the predicted goals. It uses a CNN encoder–decoder to capture scene features.

Table 2 shows the results when we replace the scene encoding branch in [26, 27] with the PSP segmentation network, enabling the use of full semantic output maps. As with our VAE, there is a slight improvement over basic CNN encoding. The results further support our initial hypothesis that, for a given trajectory prediction model (including stronger models such as Goal-GAN and MG-GAN), the use of semantic features over CNN encoding provides a stronger scene representation, which helps to improve motion forecasting.

Table 2.

ADE/FDE (meters) for SOTA upgrade

| Dataset | Goal-GAN | Goal-GAN + PSP | MG-GAN | MG-GAN + PSP |
|---|---|---|---|---|
| ETH | 0.59/1.18 | 0.56/1.17 | 0.47/0.91 | 0.45/0.87 |
| Hotel | 0.19/0.35 | 0.18/0.32 | 0.14/0.24 | 0.12/0.21 |
| Univ | 0.60/1.19 | 0.57/1.20 | 0.54/1.07 | 0.54/1.01 |
| Zara1 | 0.43/0.87 | 0.40/0.87 | 0.36/0.73 | 0.34/0.73 |
| Zara2 | 0.32/0.65 | 0.30/0.61 | 0.29/0.60 | 0.27/0.57 |
| AVG | 0.43/0.85 | 0.40/0.83 | 0.36/0.71 | 0.34/0.68 |

Bold indicates best performing model
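The AVG row in Table 2 is consistent with an unweighted mean over the five scenes. As a quick check, the sketch below recomputes it for the last column of the table (MG-GAN with PSP segmentation); the helper name `mean_ade_fde` is an assumption for illustration.

```python
def mean_ade_fde(rows):
    """Unweighted mean of (ADE, FDE) pairs, rounded to two decimals."""
    n = len(rows)
    ade = sum(a for a, _ in rows) / n
    fde = sum(f for _, f in rows) / n
    return round(ade, 2), round(fde, 2)

# Per-scene ADE/FDE from the last column of Table 2, in row order.
mg_gan_psp = [(0.45, 0.87), (0.12, 0.21), (0.54, 1.01), (0.34, 0.73), (0.27, 0.57)]
avg = mean_ade_fde(mg_gan_psp)
```

An unweighted mean treats each scene equally, regardless of how many trajectories it contains.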

Qualitatively, these results can be seen in Fig. 4a–d, where again the use of PSP images results in better predictions. For example, in Fig. 4a, b Goal-GAN with PSP segmentation performs better than the original Goal-GAN. Similar behaviour is observed for MG-GAN with and without PSP segmentation in Fig. 4c, d.

Fig. 4.

ETH–UCY Qualitative comparison still shows improvements by using semantic images even with Goal-GAN (top row) and MG-GAN (bottom row)

Stanford drone dataset (SDD)

The Stanford Drone Dataset (SDD) [38] consists of trajectories of pedestrians, bicyclists, skateboarders and vehicles captured using drones at 60 different scenes on the Stanford University campus. The dataset provides bird's-eye-view images of the scenes and the locations of tracked agents in each scene's pixel coordinates. It has a diverse set of scene elements like roads, sidewalks, walkways, buildings, parking lots, terrain and foliage. The roads and walkways have different configurations, including roundabouts and four-way intersections. Unlike ETH–UCY, SDD is a large dataset with much more scene influence. We use the dataset split defined in the TrajNet benchmark [13], which was also used in prior works [25, 27], for defining train, validation and test sets.

Quantitative analysis

We repeat the scene encoding evaluation on SDD since it provides rich scene information from 60 different scenarios (much more diverse than ETH–UCY). Figure 2e–h shows segmented output maps of a few scenes from SDD. We train and evaluate the VAE and its scene variants and show quantitative results in Table 3. We also compare our models with other state-of-the-art architectures in the literature:

Y-Net [29]: Takes into account a semantic map (U-Net), trajectory heat maps and additional information on pedestrians' destinations and waypoints to predict future motion.

P2T-IRL [31]: Utilizes a reinforcement learning approach to learn a reward function from trajectories and outputs predicted trajectories conditioned on end goals.

CGNS [39]: Utilizes a scene embedding (VGG-19) and historical motion with a VAE and uses a GAN to predict future motion.

DESIRE [10]: An RNN encoder–decoder model which captures scene context (CNN), social interaction and the agent's history. It uses Inverse Optimal Control to further refine and rank the predictions.

Table 3.

ADE/FDE (pixels) comparison on the Stanford Drone Dataset (SDD)

| Model | Scene | Social | End points | ADE | FDE |
|---|---|---|---|---|---|
| Y-Net [29] | | | | 7.85 | 11.85 |
| PECNet [30] | | | | 9.96 | 15.88 |
| P2T IRL [31] | | | | 12.58 | 22.07 |
| CGNS [39] | | | | 15.60 | 28.20 |
| SoPhie [25] | | | | 16.27 | 29.38 |
| DESIRE [10] | | | | 19.25 | 34.05 |
| S-GAN [8] | | | | 27.23 | 41.44 |
| RAE [3] (CNN) | | | | 22.83 | 39.86 |
| RAE [3] (semantic embedding) | | | | 17.74 | 38.46 |
| RAE [3] (full segmentation) | | | | 15.43 | 27.87 |
| VAE (vanilla) | | | | 28.65 | 41.38 |
| VAE (CNN) | | | | 21.54 | 36.21 |
| VAE (semantic embedding) | | | | 16.89 | 35.47 |
| VAE (full segmentation) | | | | 14.38 | 26.53 |

Bold indicates best performing model

Overall, for our VAE approach, there is a (24.8, 41.1, 49.8)% improvement in ADE over the vanilla VAE with the addition of scene encoding through CNN, semantic embedding, and semantic segmentation, respectively, which highlights the value of scene information. Our quantitative results show that the full-segmentation VAE outperforms S-GAN, SoPhie, DESIRE and CGNS. In addition, as before, the full-segmentation VAE improves over the CNN-embedding and semantic-embedding VAE results (the same holds for the RAE variants), which supports our hypothesis that using semantics provides rich scene context for trajectory prediction tasks. Note that the improvement of the full-segmentation VAE over the more typically used CNN-embedding VAE, or even the semantic-embedding VAE, is larger than that observed in ETH–UCY. The SDD improvement of 17/33% (ADE/FDE) versus 4/12% in ETH–UCY seems to be due to the more complex scenes and the increased structural influence on how pedestrians behave (e.g. sidewalks vs. roads).

Although P2T, PECNet and Y-Net have superior performance to our architectures, it must be noted that these models explicitly encode pedestrian destination and waypoints at training time which provides additional guiding information not available for our VAE variants.

Qualitative analysis

Figure 5 shows examples from three different SDD scenes. In Fig. 5b, the pedestrian stays on a walking path (green) rather than continuing into the trees, which the full-segmentation VAE (blue) is able to reproduce, unlike the other VAE variants. This can be attributed to the fact that semantics add a meaningful and explicit scene representation (trees, walking path, etc.). Figure 5c shows a pedestrian attempting to enter a building (purple circle) while crossing the road. A building entrance is an essential scene feature as it acts as a source or sink for pedestrian trajectories. Although the predicted trajectory does not quite go around the corner, the full-segmentation VAE prediction accurately localizes the building entrance.

Fig. 5.

SDD Qualitative Comparison. Top Row: Death Circle, Middle Row: Little, Bottom Row: Hyang. The left column is the full scene image and the remaining columns are example predictions from the highlighted yellow/magenta areas (color figure online)

In Fig. 5e, a pedestrian makes a transition from a grass patch to the sidewalk and the full-segmentation VAE has a better prediction than the other variants. It seems that, since the trajectory originated in the grass, the scene information helps bias the prediction to stay close rather than rely on pure dynamics. The vanilla VAE does not encode any scene information, so its predictions tend to be linear and it predicts a straight trajectory. We see that the CNN-embedding and semantic-embedding VAEs slightly improve the prediction (it trends closer to the ground truth). Similarly, in Fig. 5f, the pedestrian transitions from road to sidewalk and the full-segmentation VAE shows better performance than the others (Fig. 6).

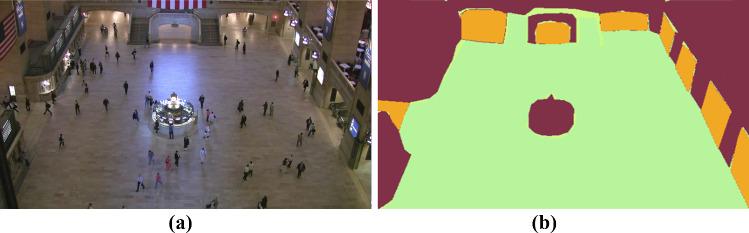

Fig. 6.

a Snapshot of GC Dataset, b Semantic Output (PSP-Net)

As we have seen in previous examples, a walkway is an important scene feature that our models must take into account when making predictions. In Fig. 5h, the pedestrian is walking towards the main N-S pathway and the ground truth (green) trajectory shows the intent to take a left turn. Here the vanilla VAE (red) produces a straight prediction because it is not aware of any scene structure; its prediction is based only on observed motion and social interaction. Though adding scene information in terms of CNN and semantic embedding slightly improved the results, it was the full-segmentation VAE which outperformed all other methods (having an almost perfect FDE), perhaps because it favors predictions on walking paths over the building/grass. Finally, in Fig. 5i we see a pedestrian taking a sharp turn around the building, and the full-segmentation VAE effectively predicts the ending position. However, the prediction follows a straight shortest-path trajectory rather than the more natural curve of the real trajectory.

Grand Central (GC) Station dataset

The GC dataset [40] is quite different from the previous two datasets, as it was collected from a 1-hour surveillance video (23 fps) of a very crowded indoor scene, New York City’s Grand Central Train Station. There are 12,684 manually annotated pedestrian trajectories and an average of over 100 pedestrians in each frame. Here, social interactions may have the most influence due to scene density, and while there is little scene distinction (mostly an open area), the passages to trains are significant points of interest.

PSP-Net is able to extract scene semantics and clearly identify walking paths, entry and exit locations, and ticketing counters (center and left). In this dataset, pedestrian motion is highly influenced by the entry points for accessing different trains. The results in Table 4 indicate that scene information remains valuable even in the crowded train station. For both the RAE and VAE versions, the trend of increased performance with more explicit scene semantics holds, with the segmentation-based VAE performing best. Overall, for the VAE there is a (3.9, 9.4, 14.0)% improvement in ADE over the vanilla VAE when using scene encodings of CNN, semantic embedding, and semantic segmentation, respectively. This further validates our hypothesis that explicit scene representation plays an important role in accurately predicting pedestrian trajectories.
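The reported percentages can be reproduced from the ADE column of Table 4. The following is a minimal sketch, assuming that the four VAE rows appear in the order vanilla, CNN, semantic embedding, semantic segmentation (the order given in the text); the dictionary keys and the helper name `relative_improvement` are illustrative and not taken from the original implementation.

```python
# ADE values (meters) for the four VAE rows of Table 4, in table order.
# Assumption: the rows correspond to these scene encodings, following
# the order in which the text lists them.
vae_ade = {
    "vanilla": 0.565,
    "CNN": 0.543,
    "semantic embedding": 0.512,
    "semantic segmentation": 0.486,
}


def relative_improvement(baseline: float, value: float) -> float:
    """Percent reduction in error relative to the baseline error."""
    return 100.0 * (baseline - value) / baseline


baseline = vae_ade["vanilla"]
improvements = {
    name: round(relative_improvement(baseline, ade), 1)
    for name, ade in vae_ade.items()
    if name != "vanilla"
}
print(improvements)
```

Applying the same helper to the FDE column would give a different set of percentages, so the quoted figures appear to refer to ADE.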

Table 4.

ADE/FDE (meters) comparison on Grand Central Station dataset

| Model | ADE | FDE |
|---|---|---|
| LSTM | 0.576 | 1.137 |
| RAE | 0.557 | 1.086 |
| RAE | 0.541 | 1.078 |
| RAE | 0.523 | 0.986 |
| VAE | 0.565 | 1.112 |
| VAE | 0.543 | 1.087 |
| VAE | 0.512 | 0.998 |
| VAE | **0.486** | **0.871** |

Bold indicates best performing model
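For reference, the two metrics in Table 4 can be computed as in the minimal sketch below. It assumes the standard definitions: ADE is the mean Euclidean distance between predicted and ground-truth positions over all predicted time steps, and FDE is the distance at the final step. The function name `ade_fde` and the toy trajectories are illustrative only.

```python
import math


def ade_fde(pred, truth):
    """Return (ADE, FDE) for one trajectory given as (x, y) points in meters."""
    if not pred or len(pred) != len(truth):
        raise ValueError("trajectories must be non-empty and of equal length")
    # Per-step Euclidean displacement between prediction and ground truth.
    dists = [
        math.hypot(px - tx, py - ty)
        for (px, py), (tx, ty) in zip(pred, truth)
    ]
    return sum(dists) / len(dists), dists[-1]


# Toy example: the prediction drifts away from a straight ground-truth path.
truth = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]
pred = [(0.0, 0.0), (1.0, 0.0), (2.0, 3.0), (6.0, 4.0)]

ade, fde = ade_fde(pred, truth)
print(ade, fde)
```

In a dataset-level evaluation, these per-trajectory values would typically be averaged over all pedestrians.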

Conclusion

Our goal in this paper was to extensively study different scene encoding mechanisms for pedestrian trajectory prediction. We considered three scene representation techniques: (i) an off-the-shelf CNN, (ii) semantic embedding, and (iii) a fully segmented output map. Our quantitative and qualitative analysis on three different datasets, with varying degrees of scene influence, indicated that knowledge of explicit scene semantics can help achieve more accurate pedestrian trajectory forecasts than the more straightforward CNN or semantic embedding methods.

Biographies

Arsal Syed

received his BS degree in Engineering Science from Ghulam Ishaq Khan Institute, Pakistan, in 2012 and his MS degree from New York Institute of Technology (NYIT) in 2015. He graduated with a PhD in Electrical Engineering from the University of Nevada, Las Vegas (UNLV) in 2021. He was an Applied Scientist Intern at Amazon in Summer 2019 and a Research Scientist Intern at Hitachi Automotive Systems in Spring 2020. His research focuses on utilizing computer vision and machine learning techniques to understand pedestrian behaviour.

Brendan Tran Morris

received his BS degree from the University of California, Berkeley, in 2002 and his MS and PhD from the University of California, San Diego (UCSD), in 2006 and 2010, respectively. He is currently an Associate Professor of Electrical and Computer Engineering and the founding director of the Real-Time Intelligent Systems (RTIS) Laboratory. He and his team conduct research on computationally efficient systems that utilize computer vision techniques for activity analysis, situational awareness, and scene understanding.


Contributor Information

Arsal Syed, Email: syeda3@unlv.nevada.edu.

Brendan Tran Morris, Email: brendan.morris@unlv.edu.

References

- 1.Li J, Wu Z. The application of Yolov4 and a new pedestrian clustering algorithm to implement social distance monitoring during the COVID-19 pandemic. J. Phys. 2019;1865:04.

- 2.Helbing D, Molnar P. Social force model for pedestrian dynamics. Phys. Rev. E. 1995;51(5):4282. doi: 10.1103/PhysRevE.51.4282.

- 3.Syed, A., Morris, B.T.: CNN, segmentation or semantic embeddings: evaluating scene context for trajectory prediction. In: ISVC (2020)

- 4.Hochreiter S, Schmidhuber J. Long short-term memory. Neural Comput. 1997;9(8):1735–1780. doi: 10.1162/neco.1997.9.8.1735.

- 5.Alahi, A., Goel K., Ramanathan, V., Robicquet, A., Fei-Fei L., Savarese S.: Social-LSTM: human trajectory prediction in crowded spaces. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 961–971 (2016)

- 6.Bisagno, N., Zhang, B., Conci, N.: Group LSTM: group trajectory prediction in crowded scenarios. In: Proceedings of the European Conference on Computer Vision, pp. 213–225 (2018)

- 7.Cheng B, Xu X, Zeng Y, Ren J, Jung S. Pedestrian trajectory prediction via the Social-Grid LSTM model. J. Eng. 2018;2018(16):1468–1474. doi: 10.1049/joe.2018.8316.

- 8.Gupta, A., Johnson, J., Fei-Fei, L., Savarese S., Alahi A.: Social GAN: socially acceptable trajectories with generative adversarial networks. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 2255–2264 (2018)

- 9.Yao Y, Atkins E, Johnson-Roberson M, Vasudevan R, Du X. BiTraP: bi-directional pedestrian trajectory prediction with multi-modal goal estimation. IEEE Robot. Autom. Lett. 2021;6(2):1463–1470. doi: 10.1109/LRA.2021.3056339.

- 10.Lee, N., Choi, W., Vernaza, P., Choy, C.B, Torr, P.H, Chandraker, M.: Desire: Distant future prediction in dynamic scenes with interacting agents. In: IEEE Conference on Computer Vision and Pattern Recognition, pp. 2165–2174 (2017)

- 11.Deo, N., Trivedi, M.M.: Convolution social pooling for vehicle trajectory prediction. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, vol. 2018, pp. 1468–1476 (2018)

- 12.Becker, S., Hug, R., Hübner, W., Arens, M.: RED: A simple but effective Baseline Predictor for the TrajNet Benchmark. In: European Conference on Computer Vision Workshops (2018)

- 13.Sadeghian, A., Kosaraju, V., Gupta, A., Savarese, S., Alahi, A.: Trajnet: towards a benchmark for human trajectory prediction. arXiv preprint (2018)

- 14.Mohamed, A., Qian, K., Elhoseiny M., Claudel C.: Social-STGCNN: a social spatio-temporal graph convolutional neural network for human trajectory prediction. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 14424–14432 (2020)

- 15.Salzmann T., Ivanovic, B., Chakravarty, P., Pavone M.: Trajectron++: dynamically-feasible trajectory forecasting with heterogeneous data. arXiv preprint arXiv:2001.03093 (2020)

- 16.Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones L., Gomez A., Kaiser L., Polosukhin, I.: Attention is all you need. In: Advances in Neural Information Processing Systems, pp. 6000–6010 (2017)

- 17.Giuliari, F., Hasan, I., Cristani, M., Galasso F.: Transformer networks for trajectory forecasting. In: 25th International Conference on Pattern Recognition (ICPR), pp. 10335–10342 (2021)

- 18.Yu C., Ma, X., Ren, J., Zhao H., Yi, S.: Spatio-temporal graph transformer networks for pedestrian trajectory prediction. In: European Conference on Computer Vision, pp. 507–523 (2020)

- 19.Syed, A., Morris, B.: STGT: forecasting pedestrian motion using spatio-temporal graph transformer. In: IEEE Intelligent Vehicles Symposium (IV), pp. 1553–1558 (2021)

- 20.Yuan, Y., Weng, X., Ou, Y., Kitani, K.M.: AgentFormer: agent-aware transformers for socio-temporal multi-agent forecasting. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 9813–9823 (2021)

- 21.Bartoli, F., Lisanti, G., Ballan, L., Del Bimbo, A.: Context-Aware Trajectory Prediction. In: 24th International Conference on Pattern Recognition, Beijing, China, pp. 1941–1946 (2018)

- 22.Liang, J., Jiang, J., Niebles, C., Hauptmann, G., Fei-Fei, L.: Peeking into the future: predicting future person activities and locations in videos. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 5718–5727 (2019)

- 23.Zhang, P., Ouyang, W., Zhang, P., Xue, J., Zheng, N.: SR-LSTM: state refinement for LSTM towards pedestrian trajectory prediction. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 12085–12094 (2019)

- 24.Xue, H., Huynh, D.Q., Reynolds, M.: SS-LSTM: a hierarchical LSTM model for pedestrian trajectory prediction. In: IEEE Winter Conference on Applications of Computer Vision, pp. 1186–1194 (2018)

- 25.Sadeghian, A., Kosaraju, V., Sadeghian, A., Hirose, N., Savarese, S.: SoPhie: an attentive GAN for predicting paths compliant to social and physical constraints. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1349–1358 (2019)

- 26.Dendorfer, P., Osep, A., Leal-Taixé, L.: Goal-GAN: multimodal trajectory prediction based on goal position estimation. In: Proceedings of the Asian Conference on Computer Vision (2020)

- 27.Dendorfer, P., Elflein, S., Leal-Taixé, L.: MG-GAN: a multi-generator model preventing out-of-distribution samples in pedestrian trajectory prediction. In: Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition, pp. 13158–13167 (2021)

- 28.Zhao, T., Xu, Y., Monfort, M., Choi, W., Baker, C., Zhao, Y., Wang, Y., Wu, Y.N.: Multi-agent tensor fusion for contextual trajectory prediction. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 12126–12134 (2019)

- 29.Mangalam, K., An, Y., Girase, H., Malik, J.: From goals, waypoints and paths to long term human trajectory forecasting. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 15213–15222 (2021)

- 30.Mangalam, K., Girase, H., Agarwal, S., Lee, K.H., Adeli, E., Malik, J., Gaidon, A.: It is not the journey but the destination: endpoint conditioned trajectory prediction. In: Computer Vision-ECCV, vol. 2020, pp. 759–776 (2020)

- 31.Deo, N., Trivedi, M.M.: Trajectory forecasts in unknown environments conditioned on grid-based plans. arXiv 2020 arXiv:2001.00735

- 32.Long, J., Shelhamer E., Darrell, T.: Fully convolutional networks for semantic segmentation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 3431–3440 (2015)

- 33.Syed, A., Morris, B.T.: SSeg-LSTM: semantic scene segmentation for trajectory prediction. In: IEEE Intelligent Vehicles Symposium (IV), pp. 2504–2509 (2019)

- 34.Badrinarayanan V, Handa A, Cipolla R. SegNet: a deep convolutional encoder–decoder architecture for robust semantic pixel-wise labelling. IEEE Trans. Pattern Anal. Mach. Intell. 2017;39(12):2481–2495. doi: 10.1109/TPAMI.2016.2644615.

- 35.Zhao, H., Shi, J., Qi, X., Wang, X., Jia, J.: Pyramid scene parsing network. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 6230–6239 (2017)

- 36.Pellegrini, S., Ess, A., Schindler, K., van Gool, L.: You’ll never walk alone: modeling social behavior for multi-target tracking. In: Proceedings of the IEEE Conference on Computer Vision, pp. 261–268 (2009)

- 37.Leal-Taixe, L., Fenzi, M., Kuznetsova, A., Rosenhahn, B., Savarese, S.: Learning an image-based motion context for multiple people tracking. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 3542–3549 (2014)

- 38.Robicquet, A., Sadeghian, A., Alahi, A., Savarese, S.: Learning social etiquette: human trajectory understanding in crowded scenes. In: Computer Vision 14th European Conference, Amsterdam, The Netherlands (2016)

- 39.Li, J., Ma, H., Tomizuka, M.: Conditional generative neural system for probabilistic trajectory prediction. arXiv preprint arXiv:1905.01631 (2019)

- 40.Yi, S., Li, H., Wang, X.: Understanding pedestrian behaviors from stationary crowd groups. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 3488–3496 (2015)