Abstract

Interest in machine learning (ML) has grown tremendously in recent years, partly due to the performance leap that occurred with new techniques of deep learning, convolutional neural networks for images, increased computational power, and wider availability of large datasets. Most fields of medicine follow that popular trend and, notably, radiation oncology is one of those at the forefront, with a long tradition of using digital images and fully computerized workflows. ML models are driven by data, and in contrast with many statistical or physical models, they can be very large and complex, with countless generic parameters. This inevitably raises two questions, namely, the tight dependence between the models and the datasets that feed them, and the interpretability of the models, which decreases as their complexity grows. Any problems in the data used to train a model will later be reflected in its performance. This, together with the low interpretability of ML models, makes their implementation into the clinical workflow particularly difficult. Building tools for risk assessment and quality assurance of ML models must therefore involve two main points: interpretability and data-model dependency. After a joint introduction of both radiation oncology and ML, this paper reviews the main risks and current solutions when applying the latter to workflows in the former. Risks associated with data and models, as well as their interaction, are detailed. Next, the core concepts of interpretability, explainability, and data-model dependency are formally defined and illustrated with examples. Afterwards, a broad discussion goes through key applications of ML in workflows of radiation oncology as well as vendors’ perspectives for the clinical implementation of ML.

1. Introduction

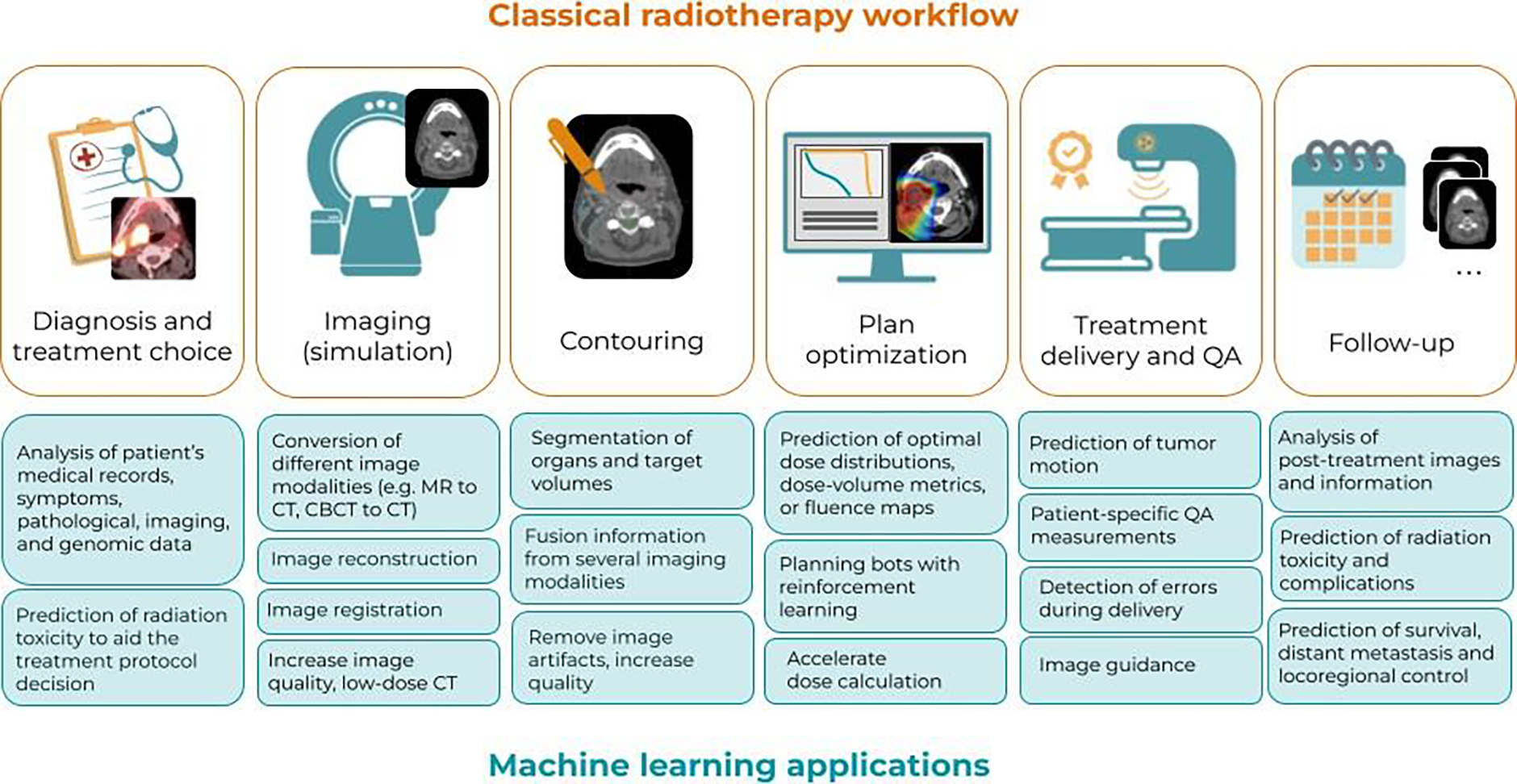

Radiation oncology is a medical field that heavily relies on information technology and computational methods. Even though the goal of radiation therapy can be stated as simply as irradiating the tumor while minimizing the dose to the healthy tissue, numerous and complex calculations are needed to achieve such a goal. From the image reconstruction and analysis steps used to locate the tumor and organs, to the plan optimization process that finds the machine parameters delivering the desired dose, image and data processing algorithms form the backbone of radiotherapy treatments.

This tight entanglement between software and clinical practice keeps growing over time and, needless to say, the recent rise of artificial intelligence (AI) tools, specifically machine learning (ML) and deep learning (DL), is disruptively transforming the field of radiation oncology (Thompson et al 2018, Feng et al 2018, Sahiner et al 2019, Boldrini et al 2019). One can find examples of applications of AI/ML/DL algorithms in every block of the radiotherapy workflow, including image segmentation (Seo et al 2020), treatment planning (Wang et al 2020a), quality assurance (Chan et al 2020), and outcome prediction (Isaksson et al 2020), among others (Shan et al 2020, Jarrett et al 2019).

ML/DL has the potential to automate and speed up the whole radiotherapy treatment workflow (Unkelbach et al 2020, Wang et al 2020a, Cardenas et al 2019), freeing time in physicians’ schedules to focus on more relevant patient care instead of repetitive and mechanical tasks. More importantly, though, ML/DL can also help standardize and improve current clinical practice (Sher et al 2021, Thor et al 2021, van der Veen et al 2019), by mitigating variability and suboptimality related to human factors, as well as by transferring knowledge from more to less experienced centers (e.g., planning of new or emerging treatments, transferring expertise to developing countries, etc.). The ESTRO-HERO (Health Economics Radiation Oncology) group has for years reported an underprovision of radiation therapy (Lievens et al 2020, Korreman et al 2021, Lievens et al 2014), meaning that the optimal utilization benchmark is not met in many countries. With the aging population and the associated increased incidence of cancer, this underprovision will only grow larger. ML/DL can thus play an important role in solving this problem (Korreman et al 2021), but only if we can ensure safe and efficient clinical implementation of this technology.

After a few years of research, the feasibility and potential of using ML models in the clinic have been well demonstrated, and we are now progressively shifting to the implementation phase of either in-house or commercial ML software (Brouwer et al 2020). In 2019 alone, 77 AI/ML-based medical devices were approved by the FDA in the US and 100 were CE-marked in Europe, while back in 2015 the approved devices barely exceeded 10 (Muehlematter et al 2021). Nevertheless, some clinicians may still be reluctant to adopt this technology in the clinical routine. One reason is that they might feel unfamiliar with the technology and its mathematical principles, especially for recent DL models. To overcome this, multiple review articles have recently been published introducing the main technological pillars of AI/ML/DL to clinicians (Barragán-Montero et al 2021a, Wang et al 2020a, Shen et al 2020b, Cui et al 2020). In parallel, the medical physics community is working towards a change in the curriculum of clinicians, to include basic education about AI/ML/DL techniques (Zanca et al 2021, Xing et al 2021). However, the main reason for the cautious adoption of ML/DL models in the clinical environment is their sometimes questionable reliability. Can we guarantee that all outputs provided by the ML model are correct? How can we detect the cases for which the ML prediction has failed? Why or how did the ML model yield that specific result or conclusion? Answering these questions is very often not straightforward for current ML-based applications. This, together with their intrinsic black-box nature, increases the skepticism around ML/DL models and hinders their wide adoption in clinical practice. In the popular sense, a black box is a system whose inner workings are unknown or highly complex. When algorithms are difficult to understand, unveiling their reasoning and their risks of failure becomes very complicated.

The literature on how to ensure the safe clinical implementation of these black-box systems in radiation oncology is scarce. Recently, however, some groups have started gathering recommendations to that end (Vandewinckele et al 2020, Brouwer et al 2020, He et al 2019, Liu et al 2020, Rivera et al 2020). Developing ML models that guarantee consistently good performance under all circumstances is utopian. Nevertheless, one can find strategies to increase their transparency and assess the reliability of their answers for each specific situation. Matters of safety and quality standards are addressed by quality assurance (QA) in the broad sense. When processes involve ML/DL, we identify two key concepts that QA must integrate: model interpretability/explainability and data-model dependency.

First, interpretability and explanations of ML models allow the end user to better understand, debug, and even improve these models (Huff et al 2021, Jia et al 2020, Reyes et al 2020). The terms interpretability and explainability are often used interchangeably. However, it is important to distinguish between the property of models to be understandable (i.e., interpretability) and the means that are used to explain non-interpretable models (i.e., explanations). Second, the data-driven nature of ML/DL forces QA to extend beyond the model itself, by investigating the data that feeds it and makes it task-specific, as well as how the model performance depends on it, namely, data-model dependency. On the one hand, the data distribution needs to be carefully analyzed to ensure that it is a faithful representation of the considered problem (Diaz et al 2021, Willemink et al 2020). On the other hand, one can explore how the model performs, for instance, under perturbation of the input data to learn about its robustness (e.g., generalization to similar domains) and precision (e.g., quantification of model uncertainty (Ghoshal et al 2021, Begoli et al 2019)).

In this review, we describe in detail key aspects of interpretability, explainability and data-model dependency in ML/DL, and discuss how they can be applied to increase the reliability and safety of ML/DL applications in the field of radiation oncology. Section 2 starts by reviewing the main risks associated with ML/DL models, and provides illustrative examples in the medical field. Section 3 introduces general considerations and technical foundations about interpretability, explainability and data-model dependency in ML. These topics have been studied for years in fundamental ML research, but they are only starting to enter the vocabulary of clinical researchers and practitioners. We believe it is essential to bring this knowledge closer to the clinical environment, in order to provide the radiation oncology community with a well-structured background to develop reliable and safe ML models. Section 4 walks the reader through the radiation oncology workflow and digs into key applications of ML, specifically discussing issues related to interpretability, explainability and data-model dependency. Section 5 wraps up this manuscript with final conclusions.

2. Risks associated with the use of ML for medical applications

The first step towards a safe clinical implementation of ML models is to become aware of the different risk factors associated with this technology, which is the goal of this section. As ML techniques are essentially data-driven, the main risks associated with their use can stem either from the data itself or from the model. Data issues appear when the data used to train the ML algorithm do not reflect the ground truth of the problem at hand, whereas model issues are due to shortcomings of the model itself. In the following, we identify the main issues in these two categories and provide illustrative examples in the medical field.

2.1. Data

In computer science, the acronym GIGO stands for ‘Garbage In, Garbage Out’, and it refers to the fact that when a system is fed with low-quality data, its output will likewise be deficient. In ML specifically, GIGO can have dramatic consequences because it affects the training of the model. In medical applications of ML, GIGO can affect the patient’s outcome, and it is one of the main factors to take into account when aiming at safe clinical implementation. GIGO has two main roots: insufficient data (quantity) and inappropriate data (quality) (figure 1).

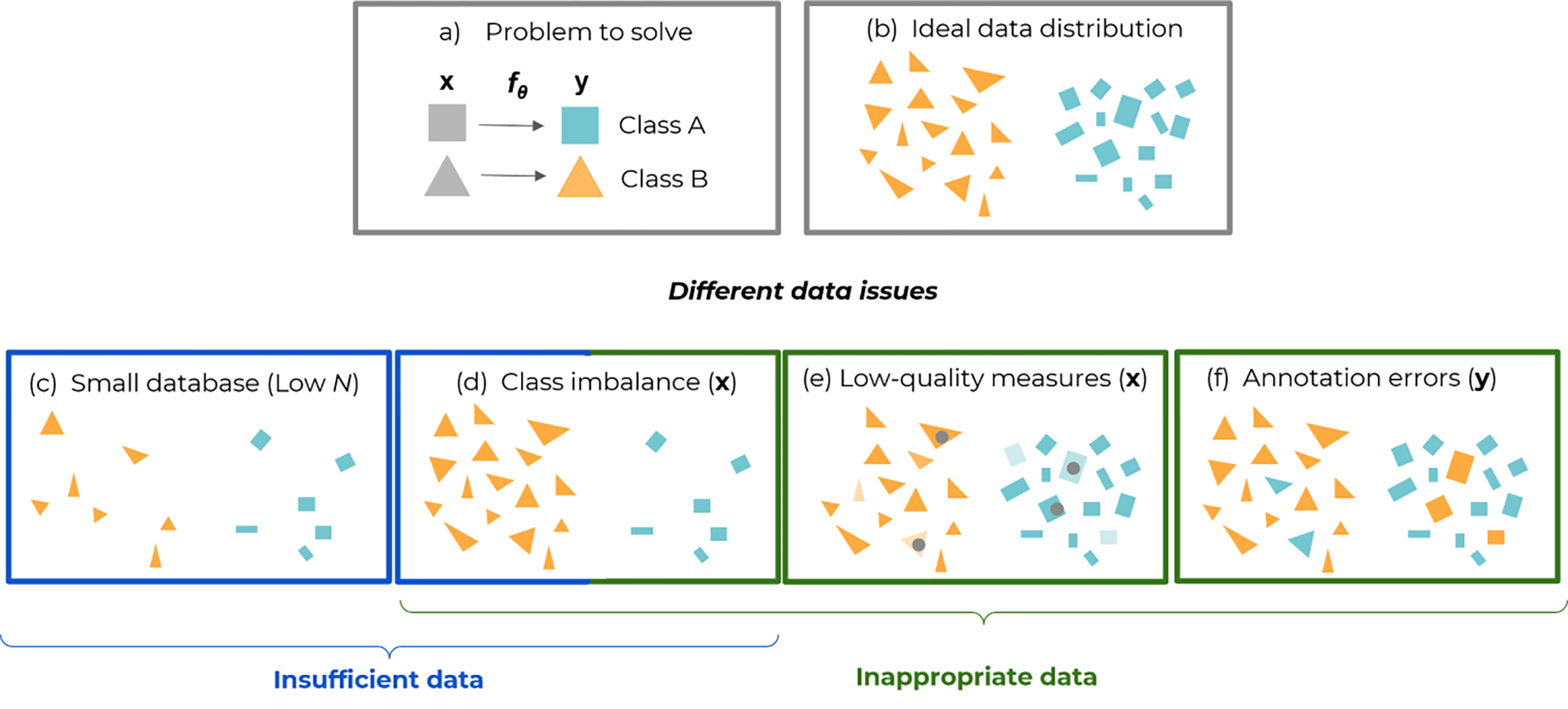

Figure 1.

Some data-related pitfalls of supervised learning, exemplified with a binary classification problem. Panel (a) formalizes the problem and how the model maps the inputs (features or images) to the outputs (class labels green and orange). Panel (b) shows an ideal dataset with enough data globally (high N) and in each class. Panel (c) illustrates insufficient data, when the number of total examples N is too low (for all classes). Panels (d) to (f) illustrate cases of inappropriate data: (d) Class imbalance, when class populations are unequal and minor classes might not be given enough importance in the performance figures. (e) Low-quality or corrupted inputs x, e.g., blurred, noisy, or artifacted images, represented by a lighter color and gray dots in the figure. (f) Annotation errors (mistakes in class labels y). To some extent, class imbalance can be seen as a particular case of insufficient data, when one of the classes has a low N with respect to the other(s).

More specifically, most ML applications attempt to learn an unknown phenomenon $y = \varphi(x)$ in a supervised way, that is, by mapping inputs to desired outputs with a flexible model $\hat{y} = f_\theta(x)$ having parameters $\theta$. A finite dataset of input-output pairs $(x_i, y_i)_{1 \le i \le N}$ is sampled from a population (figures 1(a) and 1(b)). In this sampling and learning process, insufficient data problems arise when the dataset size $N$ is too low, whereas inappropriate data problems are related to the sampling, measurement, and annotation of the pairs $(x_i, y_i)$ (figures 1(c)–(f)).

2.1.1. Insufficient data

Insufficient data often result from the difficulty of collecting and annotating data in the medical field, due to cost, ethical issues, or expert availability. A dataset that is too small is generally unable to reflect all variations that can exist in a (patient) population. The amount of data to be collected typically must grow with the complexity of the task to accomplish. A complicated task usually involves many features or criteria to make a decision. The input dimensionality (e.g., just a few biomarkers, versus images with millions of voxels) and the output dimensionality (e.g., the number of classes or diseases to be distinguished) are typically faithful indicators of complexity. In computer vision, for classification of natural images, rules of thumb state that up to 1000 instances per class can be necessary, and performance increases logarithmically with the dataset size (Sun et al 2017). In the medical field, the lower availability of data (Willemink et al 2020) is compensated by the greater regularity of the images, with simple backgrounds and similar anatomies and orientations in the foreground. For instance, in dose prediction for radiotherapy, models like U-Net are efficient at learning from relatively small datasets (e.g., around 50–100 patients), thanks to a densely connected network architecture (Barragán-Montero et al 2021b, Barragán-Montero et al 2019, Nguyen et al 2019a, Fan et al 2019). Recent applications of U-Net-like architectures or Generative Adversarial Networks (GANs) to other tasks such as image segmentation (e.g., of organs (Nikolov et al 2018) and target volumes (Cardenas et al 2021)), image synthesis (e.g., generation of synthetic CTs from MR images (Maspero et al 2018)), or image registration, have also demonstrated good performance when trained with databases on the order of one hundred patients or even fewer (Sokooti et al 2017).
Nevertheless, building a well-curated and up-to-date database of a few dozen or a few hundred patients still remains a challenge for most medical institutions, and it is often the result of several years of work. For instance, Grossberg et al (2018) presented the head and neck squamous cell carcinoma collection, comprising data from 215 patients collected during 10 years of treatment (from 2003 to 2013).
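Whether a few dozen patients suffice depends on the task. One rough way to reason about it, assuming the logarithmic trend reported for natural images (Sun et al 2017) also holds for the task at hand, is to fit score ≈ a + b·ln N on a handful of pilot training-set sizes and extrapolate. The sketch below uses NumPy; the helper names are hypothetical and the scores are synthetic, generated from an exact logarithmic law rather than measured.

```python
import numpy as np

def fit_log_learning_curve(n_samples, scores):
    """Fit score ≈ a + b * ln(N) by ordinary least squares and return (a, b).

    A positive b means each doubling of the dataset adds about b * ln(2)
    to the score, i.e. diminishing returns per additional patient.
    """
    log_n = np.log(np.asarray(n_samples, dtype=float))
    b, a = np.polyfit(log_n, np.asarray(scores, dtype=float), deg=1)  # highest degree first
    return a, b

def predict_score(a, b, n):
    """Extrapolate the fitted curve to a (hypothetical) dataset size n."""
    return a + b * np.log(n)

# Hypothetical pilot study: validation scores at increasing training-set sizes.
# Synthetic values generated from 0.40 + 0.05 * ln(N); not real results.
sizes = np.array([25, 50, 100, 200])
scores = 0.40 + 0.05 * np.log(sizes)

a, b = fit_log_learning_curve(sizes, scores)
print(f"a={a:.3f}, b={b:.3f}, gain per doubling={b * np.log(2):.3f}")
print(f"extrapolated score at N=400: {predict_score(a, b, 400):.3f}")
```

Extrapolations beyond the range of measured sizes should be treated with caution; the fitted gain per doubling is mainly useful to judge whether collecting more data is likely to pay off.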

2.1.2. Inappropriate data

Inappropriate data covers a wide range of possible problems. When collecting input-output pairs $(x_i, y_i)$, these problems can concern the sampling of $x_i$ in the population, the measurement of $x_i$, or the annotation $y_i$. Medical databases often suffer from several of these issues at once. Therefore, good data curation algorithms, together with interpretable/explainable ML and the exploration of data-model dependency, can help to properly identify and fix each issue (see Section 3).

Data sampling in the population: domain coverage and class imbalance

To be effective and to generalize to any individual from the population, the collected data must be representative of it, that is, they must reflect all relevant variations in that population (i.e., domain coverage). In classification tasks, for example, not all variations may be represented within a single class, or one or several classes might be underrepresented with respect to others in the database used to train the ML model (i.e., minority classes). The technical term commonly used for this situation in ML is “class imbalance” (Johnson and Khoshgoftaar 2019). It results in wrong or less accurate predictions for the underrepresented classes. In fact, the ML model will focus mainly on the majority class during learning and, in extreme cases, may ignore the minority class altogether. Class imbalance can also be seen as a particular case of insufficient data (Section 2.1.1), where the number of samples in the minority class(es) ($N_m$) is much lower than that in the dominating class(es) ($N_d$), i.e., $N_m \ll N_d$ (figure 1(d)). Notice, however, that class imbalance can occur even for models trained with databases containing a large total $N$, as long as the ratio between classes remains strongly skewed. This is why we have decided to include class imbalance in the “inappropriate data” category.
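A common mitigation for class imbalance (one option among several, alongside resampling) is to reweight the loss so that minority samples count more. The sketch below assumes the inverse-frequency “balanced” heuristic $w_c = N / (K N_c)$, where $K$ is the number of classes and $N_c$ the size of class $c$; the function names and data are hypothetical. It shows that a trivial model that almost always predicts the majority class looks good under the plain loss but is heavily penalized once the classes are balanced.

```python
import numpy as np

def balanced_class_weights(labels):
    """Inverse-frequency weights w_c = N / (K * N_c) (the common 'balanced' heuristic).

    Minority classes get weights > 1 and majority classes < 1, so that every
    class contributes the same total weight to the loss.
    """
    classes, counts = np.unique(np.asarray(labels), return_counts=True)
    n, k = counts.sum(), classes.size
    return {int(c): float(n / (k * cnt)) for c, cnt in zip(classes, counts)}

def weighted_bce(y_true, p_pred, weights, eps=1e-12):
    """Binary cross-entropy averaged with per-sample class weights."""
    y = np.asarray(y_true)
    p = np.clip(np.asarray(p_pred, dtype=float), eps, 1 - eps)
    w = np.array([weights[int(c)] for c in y])
    losses = -(y * np.log(p) + (1 - y) * np.log(1 - p))
    return float(np.sum(w * losses) / np.sum(w))

# Hypothetical imbalanced dataset: 95 negatives (class 0), 5 positives (class 1).
y = np.array([0] * 95 + [1] * 5)
weights = balanced_class_weights(y)            # {0: ~0.526, 1: 10.0}

# A "lazy" model that gives every case a 0.01 probability of being positive.
p_lazy = np.full(y.size, 0.01)
plain = weighted_bce(y, p_lazy, {0: 1.0, 1: 1.0})
balanced = weighted_bce(y, p_lazy, weights)
print(f"unweighted loss {plain:.3f}, class-weighted loss {balanced:.3f}")
```

With these weights, the 5 positive cases carry as much total weight as the 95 negative ones, so ignoring them is no longer a cheap solution for the optimizer.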

In the medical field, the minority class can be represented by patient groups (e.g., with positive/negative diagnosis, rare diseases, patients under/over a certain age, gender, ethnicity, etc.), but also at the pixel level (e.g., 2% of pixels of class A and 98% of pixels of class B).

At the patient-group level, a common example of imbalanced datasets is skin cancer datasets, which consist predominantly of healthy samples with only a small percentage of malignant ones (Zunair and Ben Hamza 2020, Mikolajczyk and Grochowski 2018, Emara et al 2019). Another example is how gender imbalance between male and female patients in the training database can lead to biased ML models. For instance, a recent study analyzed the effect of gender imbalance when training ML models to diagnose various thoracic diseases (Larrazabal et al 2020). A consistent decrease in performance was observed when using male patients for training and female patients for testing (and vice versa).
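A simple safeguard against such subgroup effects is to report performance separately for each subgroup, since a single overall figure can hide a large gap. A minimal sketch with NumPy; the function name, labels and predictions are hypothetical.

```python
import numpy as np

def accuracy_by_group(y_true, y_pred, groups):
    """Accuracy computed separately for each subgroup (e.g. sex, age band, institution)."""
    y_true, y_pred, groups = (np.asarray(a) for a in (y_true, y_pred, groups))
    return {str(g): float(np.mean(y_true[groups == g] == y_pred[groups == g]))
            for g in np.unique(groups)}

# Hypothetical test-set predictions for 8 patients: 4 male (M) and 4 female (F).
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 0, 0, 0, 1, 1]
sex    = ["M", "M", "M", "M", "F", "F", "F", "F"]

overall = float(np.mean(np.asarray(y_true) == np.asarray(y_pred)))
per_group = accuracy_by_group(y_true, y_pred, sex)
print(overall, per_group)   # 0.75 {'F': 0.5, 'M': 1.0}
```

Here the overall accuracy of 0.75 averages a perfect score on one group with chance-level performance on the other.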

Regarding the pixel level, the most obvious example is the detection or segmentation of small lesions or organs in medical images (Bria et al 2020, Gao et al 2019, 2021). A good illustrative case is the segmentation of organs for head and neck cancer patients, where the ratio between small and large organ volumes can reach a factor of 100 (e.g., optic structures versus parotids or oral cavity) (Gao et al 2019). For instance, differences of up to 20% in Dice coefficient can be found between the model’s accuracy on the smallest organs (e.g., optic nerves and chiasm) and on the larger ones (Tong et al 2018).
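The sensitivity of the Dice coefficient, $\mathrm{DSC} = 2|A \cap B| / (|A| + |B|)$, to structure size can be checked with a toy computation: the same one-pixel lateral shift is applied to a large (20 × 20 pixels) and a small (3 × 3 pixels) square mask. The masks are synthetic, and returning 1.0 when both masks are empty is an assumed convention.

```python
import numpy as np

def dice(a, b):
    """Dice similarity coefficient 2|A∩B| / (|A| + |B|) for binary masks."""
    a, b = np.asarray(a, dtype=bool), np.asarray(b, dtype=bool)
    denom = a.sum() + b.sum()
    return 1.0 if denom == 0 else 2.0 * np.logical_and(a, b).sum() / denom

def square_mask(shape, top, left, size):
    """Binary mask with a size x size square whose top-left corner is (top, left)."""
    m = np.zeros(shape, dtype=bool)
    m[top:top + size, left:left + size] = True
    return m

shape = (64, 64)
# Identical one-pixel shift applied to a large and to a small structure.
large_ref, large_pred = square_mask(shape, 10, 10, 20), square_mask(shape, 10, 11, 20)
small_ref, small_pred = square_mask(shape, 40, 40, 3), square_mask(shape, 40, 41, 3)
print(f"large: {dice(large_ref, large_pred):.3f}")  # large: 0.950
print(f"small: {dice(small_ref, small_pred):.3f}")  # small: 0.667
```

The large structure keeps a Dice of 0.95, whereas the small one drops to about 0.67, although the boundary error is identical in both cases.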

Data measurement: low quality or corrupted records

As soon as population sampling issues are sorted out, another caveat concerns the quality of the records in that sample. For example, in an application that involves medical images, those can be more or less noisy, blurry, or subject to artifacts (Dodge and Karam 2016). Concepts like image definition, (optical) resolution, contrast, or signal-to-noise ratio are important here and affect ML performance even more than they affect human observers, who can more naturally disregard artifacts and compensate for noise or blur. This is the classical meaning of ‘garbage in, garbage out’ in signal processing: corrupted data lead to poor performance. Typical examples of noise and artifacts in medical images include CT artifacts due to metal implants (Kalender et al 1987, Barrett and Keat 2004), ring and scatter noise in Cone Beam CT images (Zhu et al 2009), or artifacts due to patient motion (Zaitsev et al 2015). In extreme cases, even slight perturbations can have dramatic effects and can be exploited to defeat or ‘attack’ the model with so-called ‘adversarial examples’ (Finlayson et al 2019, Szegedy et al 2013). For instance, adding adversarial noise to an image of a skin mole classified by the model as benign can suddenly make the model change its output to malignant (Finlayson et al 2019).
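To make the idea of adversarial examples concrete, the sketch below applies the fast gradient sign method (FGSM), a well-known attack chosen here for illustration and not necessarily the one used in the cited studies, to a toy logistic “classifier”. The weights, bias and input are hypothetical and hand-set so the arithmetic is transparent: every one of the 1000 input features is moved by at most 0.04 (on a [0, 1] intensity scale), yet the prediction flips from benign to malignant.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def predict_proba(x, w, b):
    """Probability of the 'malignant' class under a logistic model."""
    return sigmoid(x @ w + b)

def fgsm(x, y, w, b, eps, lo=0.0, hi=1.0):
    """Fast gradient sign method for a logistic model with cross-entropy loss.

    For p = sigmoid(w.x + b), the gradient of the loss w.r.t. the input is
    (p - y) * w, so each feature is nudged by eps in the direction that
    increases the loss for the true label y, then clipped to the valid range.
    """
    grad_x = (predict_proba(x, w, b) - y) * w
    return np.clip(x + eps * np.sign(grad_x), lo, hi)

d = 1000
w = 0.05 * np.where(np.arange(d) % 2 == 0, 1.0, -1.0)  # hypothetical trained weights
b = -1.0
x = np.full(d, 0.5)                                     # hypothetical normalized image

p_clean = predict_proba(x, w, b)                        # true label: benign (y = 0)
x_adv = fgsm(x, y=0, w=w, b=b, eps=0.04)
p_adv = predict_proba(x_adv, w, b)
print(f"clean p={p_clean:.3f}, adversarial p={p_adv:.3f}, "
      f"max change={np.abs(x_adv - x).max():.2f}")
```

The attack works because many tiny, coordinated changes add up: the score shifts by eps times the sum of the absolute weights, which grows with the input dimensionality.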

For noise, blur, and low contrast, improving the image acquisition device or tuning its parameters are straightforward recommendations. Data curation to remove badly corrupted records or confounding artifacts can also improve performance. Often, however, this comes at the price of lower robustness and generalization capability, since ML models are left totally unaware of these outliers and pathological cases at training time, although such cases might still show up when the ML model is queried. Some unwanted artifacts in images can also turn into confounders or spurious cues, like the presence of a plaster cast in radiological images when it comes to spotting broken bones, or image tags that correlate with patient, disease, or treatment categories that should be predicted from the image content, not from such side information (Badgeley et al 2019, Zech et al 2018).

Another type of low-quality record is one whose data are uninformative, or not informative enough: the records do not convey all the information necessary to solve the problem at hand. For instance, an image with a small field of view that does not cover (or only partially covers) the region of interest for a diagnosis or segmentation model would be considered uninformative. Another example is when the necessary information is spread over several sources and the model has access to only one or a few of them. For instance, ML models for segmentation of tumor volumes are often provided with only one image (e.g., CT), while in clinical practice the physician gathers information from several sources to perform the segmentation (e.g., PET, MR, endoscopy images, or metadata like age, the patient’s physical condition, other diseases, etc.) (Ye et al 2021, Moe et al 2021).

Data annotation: low quality annotation, label noise, or inter-observer variability

In the collected data pairs $(x_i, y_i)$, $y_i$ is responsible for the supervision of the training, that is, it associates the correct output with each input record $x_i$. The quality of this annotation or label is thus of paramount importance (Karimi et al 2020, Frenay and Verleysen 2014).

The most straightforward example of low-quality annotations is the presence of inaccuracies induced by human errors when labeling the medical images used to train an ML model. For instance, Yu et al (2020) recently studied the effect of using inaccurate contours when training an automatic segmentation model for the mandible. They showed a decrease in the Dice coefficient between 5% and 15% when the ratio of inaccurate contours increased from 40% to 100%. Another recent study investigated the effect of using erroneous labels when training an ML model for skin cancer classification (Hekler et al 2020), reporting a 10% decrease in accuracy when using the imperfect labels instead of the perfect ground truth.
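Studies like these rely on controlled corruption of the labels. A minimal sketch of such an experiment, under simplifying assumptions that do not reproduce the cited setups: binary labels, annotation errors simulated as random label flips, synthetic two-class Gaussian data, and a 1-nearest-neighbour classifier chosen only because it is short to write and sensitive to label noise. All names are hypothetical.

```python
import numpy as np

def flip_labels(labels, rate, rng):
    """Simulate annotation errors: flip exactly round(rate * N) binary labels at random."""
    noisy = np.asarray(labels).copy()
    n_flip = int(round(rate * noisy.size))
    idx = rng.choice(noisy.size, size=n_flip, replace=False)
    noisy[idx] = 1 - noisy[idx]
    return noisy

def one_nn_predict(x_train, y_train, x_test):
    """1-nearest-neighbour classifier: copy the label of the closest training sample."""
    d2 = ((x_test[:, None, :] - x_train[None, :, :]) ** 2).sum(axis=-1)
    return y_train[d2.argmin(axis=1)]

def make_blobs(n_per_class, rng):
    """Two synthetic Gaussian classes centred at (-2, -2) and (+2, +2)."""
    x = np.vstack([rng.normal(-2, 1, (n_per_class, 2)), rng.normal(2, 1, (n_per_class, 2))])
    y = np.repeat([0, 1], n_per_class)
    return x, y

rng = np.random.default_rng(0)
x_tr, y_tr = make_blobs(100, rng)
x_te, y_te = make_blobs(100, rng)

accuracy = {}
for rate in (0.0, 0.1, 0.2, 0.4):
    y_noisy = flip_labels(y_tr, rate, rng)        # corrupt training labels only
    accuracy[rate] = float(np.mean(one_nn_predict(x_tr, y_noisy, x_te) == y_te))
    print(f"label noise {rate:.0%}: test accuracy {accuracy[rate]:.3f}")
```

Accuracy is always measured against the clean test labels, so the drop isolates the effect of noise in the training annotations.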

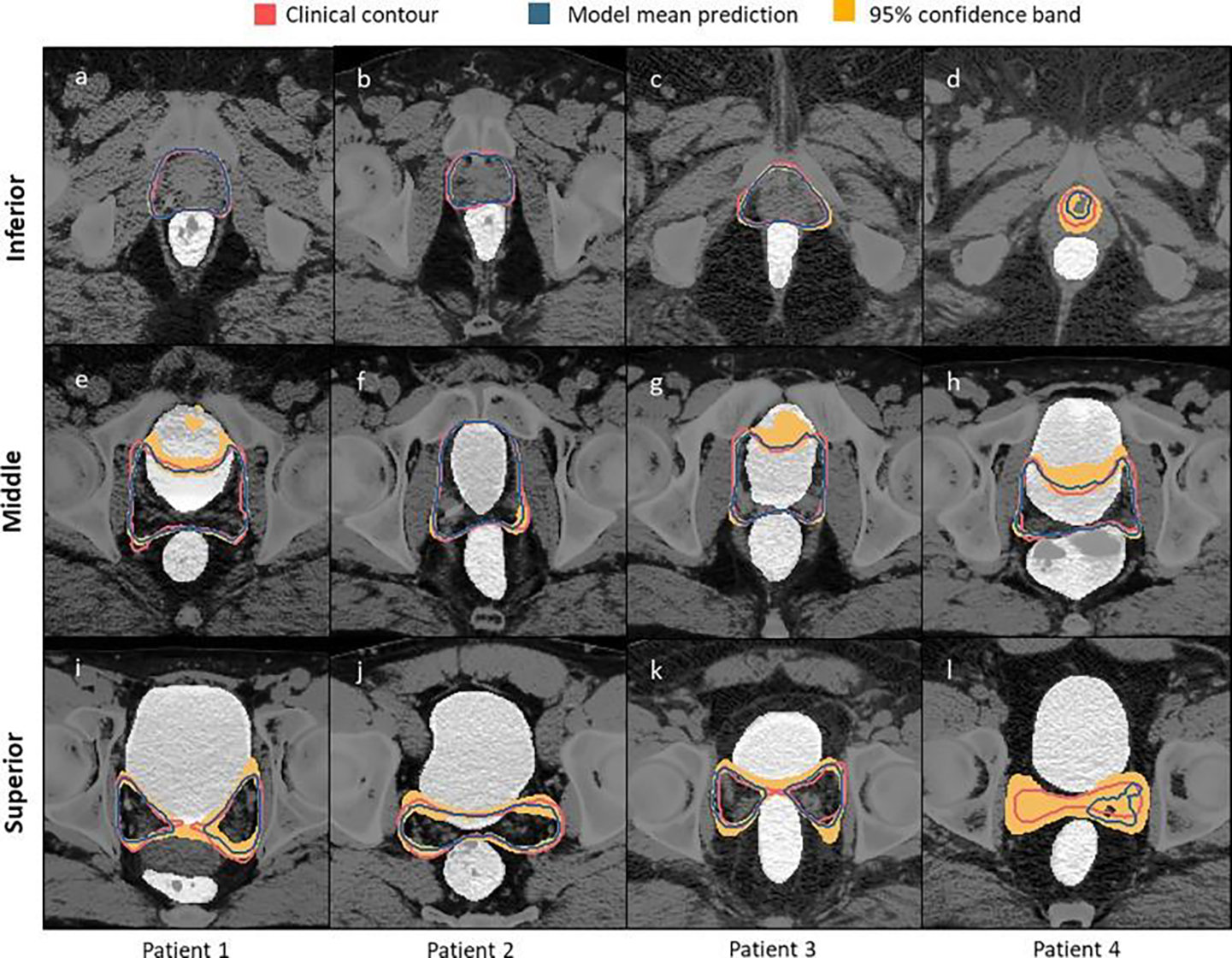

Another major data quality issue in the radiation oncology field is data heterogeneity or variability. Overall, these variabilities can be grouped into two categories: 1) lateral variability and 2) longitudinal variability. Lateral variability describes the difference in data distributions within a given time frame. Some examples include interobserver variability in radiotherapy treatment planning (Berry et al 2016, Nelms et al 2012), the variability in delineation of tumor and organ volumes across different physicians (Apolle et al 2019, Veen et al 2019, van der Veen et al 2020), or the differences between clinical practices among institutions (Eriguchi et al 2013, Gershkevitsh et al 2014). In contrast, longitudinal variability describes the difference in data distributions over time, such as the evolution of treatment techniques (Shang et al 2015), the introduction of new delineation guidelines (Grégoire et al 2018, Brouwer et al 2015) or fractionation protocols (Parodi 2018, Dearnaley et al 2017).

Lateral and longitudinal variability are often entangled within retrospective databases containing patients treated with radiotherapy by different physicians, at different institutions, and at different time points. Although the individual effect of each source of variability is hard to quantify, a recent study has demonstrated that the use of homogeneous data increases the accuracy and the robustness of ML models (Barragán-Montero et al 2021b). The study compared two ML models for radiotherapy dose prediction for esophageal cancer. The first model was trained with a variable database (i.e., retrospective patients, different time frames, planning protocols, treating physicians), while the second was trained with a homogeneous one (i.e., same time frame, same treatment protocol, same physician). The second model achieved a lower mean absolute error in the predicted dose distribution.

Yet another important issue is the presence of annotation bias. General examples of bias in the medical domain include over-diagnosis of certain diseases (Blumenthal-Barby and Krieger 2015), or bias induced by gender, race or socioeconomic factors (Schulman et al 1999, Bach et al 1999, Forrest et al 2013, Lievens and Grau 2012, Obermeyer et al 2019). For instance, Bach et al (1999) reported significant racial differences in the treatment of lung cancer. They observed that black patients were less likely to receive surgical treatment than white patients, which entailed an 8% decrease in the five-year survival rate of this population. Often, one of the most important sources of this kind of bias is the socioeconomic level of the patient, which is also well known to affect the treatment chosen and delivered for cancer patients (Zhou et al 2021, Lievens and Grau 2012, Ou et al 2008, Forrest et al 2013).

Last but not least, variability and biases can co-exist in many scenarios. For instance, in lateral variability, medical experts can persistently disagree about the annotation of some data instances. Across consistent groups of experts, this can be seen as biases, whereas for ML models these discrepancies are seen as variability around a consensus that might not be agreed upon yet. The framework of supervised learning, with functional models $\hat{y} = f(x)$, can only produce a single output $\hat{y}$ for a given input $x$. If several outputs nevertheless need to be produced, then new explanatory inputs must be identified and appended to $x$. Alternatively, one can train an individual model for each possible output $\hat{y}_l$, as if several ground truths were possible for a given $x$. For instance, a recent study about radiotherapy dose prediction for prostate cancer patients illustrated the differences in treatment planning practices between different doctors and institutions, and generated specific ML models for each clinical practice (Kandalan et al 2020).
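The first option, appending an explanatory input, can be sketched with a linear model: if two institutions apply systematically different practices, a model that sees only the clinical feature must average them, whereas a model that also receives a one-hot indicator of the institution can reproduce both. The data, the +1.0 offset, and the helper names below are hypothetical.

```python
import numpy as np

def one_hot(categories, vocabulary):
    """One-hot encode categorical identifiers (e.g. treating physician or institution)."""
    index = {c: i for i, c in enumerate(vocabulary)}
    out = np.zeros((len(categories), len(vocabulary)))
    for row, c in enumerate(categories):
        out[row, index[c]] = 1.0
    return out

def fit_linear(x, y):
    """Ordinary least squares (any intercept must be supplied as a column of x)."""
    coef, *_ = np.linalg.lstsq(x, y, rcond=None)
    return coef

# Hypothetical data: the same input maps to a different target depending on the
# institution's practice (institution B systematically adds +1.0).
feature = np.array([0.0, 1.0, 2.0, 3.0, 0.0, 1.0, 2.0, 3.0])
site = ["A", "A", "A", "A", "B", "B", "B", "B"]
target = 2.0 * feature + np.where(np.array(site) == "B", 1.0, 0.0)

x_plain = np.column_stack([feature, np.ones_like(feature)])     # feature + intercept
x_cond = np.column_stack([feature, one_hot(site, ["A", "B"])])  # feature + site indicator

for name, x in [("without site", x_plain), ("with site", x_cond)]:
    residual = target - x @ fit_linear(x, target)
    print(f"{name}: max abs residual = {np.abs(residual).max():.3f}")
```

Without the indicator, the best fit leaves residuals of ±0.5 because it splits the difference between the two practices; with it, the residual vanishes. The one-hot columns also play the role of a per-site intercept, so no separate intercept column is needed.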

2.2. Model and learning frameworks

Most current ML methods extend and upscale supervised learning techniques developed by statisticians over the past 100 years (Friedman et al 2001). Supervised ML algorithms do not differ substantially from linear or logistic regression models. In all cases, they find a function $\hat{y} = f_\theta(x)$ that models the phenomenon under study, $y = \varphi(x)$. Model fitting amounts to minimizing the discrepancy between the ground truth $y$, as measured or annotated, and the output $\hat{y}$ yielded by the model. ML thus tries to identify the relationships that map the features in $x$ to the outputs $y$. In the following, we present several limitations related to this learning framework, which should be carefully taken into account when implementing ML models in the clinical environment.
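The fitting step described above can be written compactly as empirical risk minimization. The formulation below is the standard textbook one (not taken from the cited works), written with the symbols of this section; $\mathcal{L}$ denotes a per-sample loss chosen by the modeler:

$$
\hat{\theta} = \arg\min_{\theta} \frac{1}{N} \sum_{i=1}^{N} \mathcal{L}\big(y_i, f_\theta(x_i)\big),
\qquad \hat{y} = f_{\hat{\theta}}(x).
$$

With $f_\theta(x) = \theta^\top x$ and $\mathcal{L}(y, \hat{y}) = (y - \hat{y})^2$ this is ordinary least-squares linear regression; with $f_\theta(x) = \sigma(\theta^\top x)$, where $\sigma$ is the logistic function, and the cross-entropy loss $\mathcal{L}(y, \hat{y}) = -y \log \hat{y} - (1 - y) \log(1 - \hat{y})$, it is logistic regression. A deep network only changes the family of functions $f_\theta$ (and the number of parameters in $\theta$), not the objective; in particular, nothing in this objective distinguishes causal from merely correlated features.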

2.2.1. Non-causal correlations and hidden confounders

When trying to find the relationships that map the features in $x$ to the outputs $y$, the optimal solution is typically the one that finds strong dependencies between the considered features (e.g., the patient’s smoking status) and outcomes (e.g., probability of lung cancer). However, the weakness of supervised learning, and of most ML frameworks in general, is that it cannot infer causality from input-output dependencies, which can be either causal and relevant or spurious and confounding in the interpretation of the model. This represents an important risk when it comes to medical applications (Castro et al 2020). For instance, a recent study found that a convolutional neural network (CNN), trained to process X-ray images to predict pneumonia, was using hospital information to make predictions, often disregarding the areas of the image with radiological findings relevant to the underlying pathology (Zech et al 2018). Specifically, the CNN was trained with databases from multiple hospitals, where the prevalence of pneumonia was very different. The hospital information was retrieved from a hospital-specific token located in the corner of the image, and from other image features indicative of the radiograph’s origin (figure 2). This information was strongly correlated with the prevalence of pneumonia in the considered dataset, without any causal link, thus acting as a hidden confounder and leading to so-called “shortcut learning” (Geirhos et al 2020). One can find many other examples of confounders and spurious correlations in the literature on ML models for medical applications. For instance, another study reported that an artificial neural network, trained to estimate the probability of death from pneumonia in the emergency room, labeled asthmatic patients as having a low risk of death, because in the training data this cohort was seeking care faster than non-asthmatic patients (Cooper et al 2005).
Yet another recent study found that colon cancer screening or abnormal breast findings were highly correlated with the risk of having a stroke, with no clinical justification (Mullainathan and Obermeyer 2017).
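Shortcut learning of this kind can be reproduced on synthetic data. In the sketch below (hypothetical data and names, plain NumPy logistic regression trained by gradient descent), one feature carries a weak genuine signal, while a binary “site token” matches the label perfectly in the training set, mimicking a hospital marker correlated with prevalence. The model scores well on data with the same confounding, but its accuracy drops sharply at a “new site” where the token is unrelated to the label.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_logistic(x, y, lr=0.5, steps=2000):
    """Plain gradient descent on the mean logistic (cross-entropy) loss."""
    xb = np.column_stack([x, np.ones(len(x))])   # append a bias column
    w = np.zeros(xb.shape[1])
    for _ in range(steps):
        w -= lr * xb.T @ (sigmoid(xb @ w) - y) / len(y)
    return w

def accuracy(w, x, y):
    xb = np.column_stack([x, np.ones(len(x))])
    return float(np.mean((sigmoid(xb @ w) > 0.5) == y))

def make_data(n, token_matches_label, rng):
    """Column 0: weak genuine signal. Column 1: binary 'site token' (hypothetical confounder)."""
    y = rng.integers(0, 2, n)
    signal = y + rng.normal(0.0, 1.0, n)
    token = y if token_matches_label else rng.integers(0, 2, n)
    return np.column_stack([signal, token]).astype(float), y

rng = np.random.default_rng(0)
x_tr, y_tr = make_data(500, True, rng)      # token confounded with the label
x_iid, y_iid = make_data(500, True, rng)    # same confounding at test time
x_new, y_new = make_data(500, False, rng)   # new site: token unrelated to the label

w = train_logistic(x_tr, y_tr)
print(f"weights (signal, token, bias): {np.round(w, 2)}")
print(f"accuracy, same confounding: {accuracy(w, x_iid, y_iid):.3f}")
print(f"accuracy, new site:          {accuracy(w, x_new, y_new):.3f}")
```

Inspecting the learned weights (or, for images, saliency or class activation maps as in figure 2) is what reveals that the token, not the genuine signal, drives the predictions.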

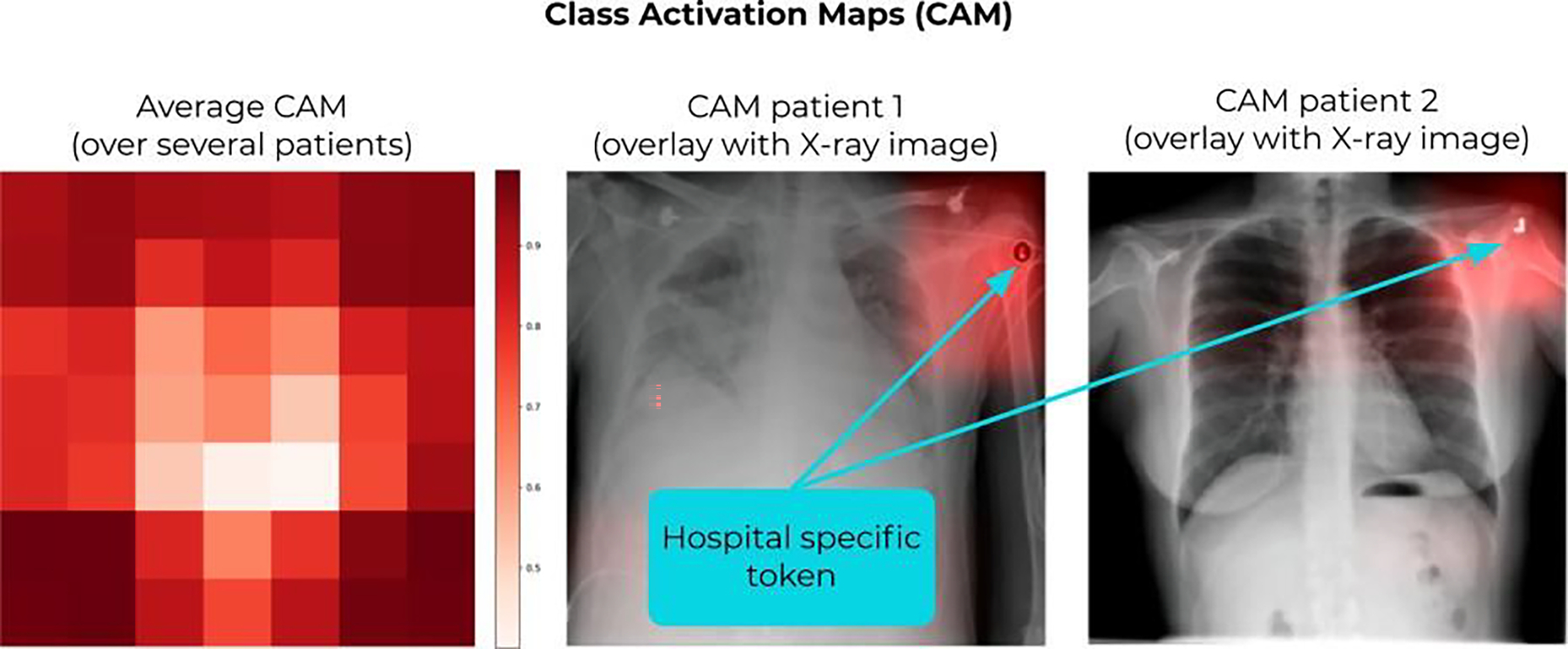

Figure 2.

Adapted from (Zech et al 2018). Class activation maps (CAM) showing the relevant regions considered by the CNN to make the prediction. The model in this study was trained to predict pneumonia from X-ray images. By looking at the CAMs, the authors found that the model was looking at the corner of the images and, in particular, at the hospital-specific metal token (a hidden confounder) to make the prediction. (Left) CAM averaged over several patients; (middle and right) examples of CAMs for two patients.

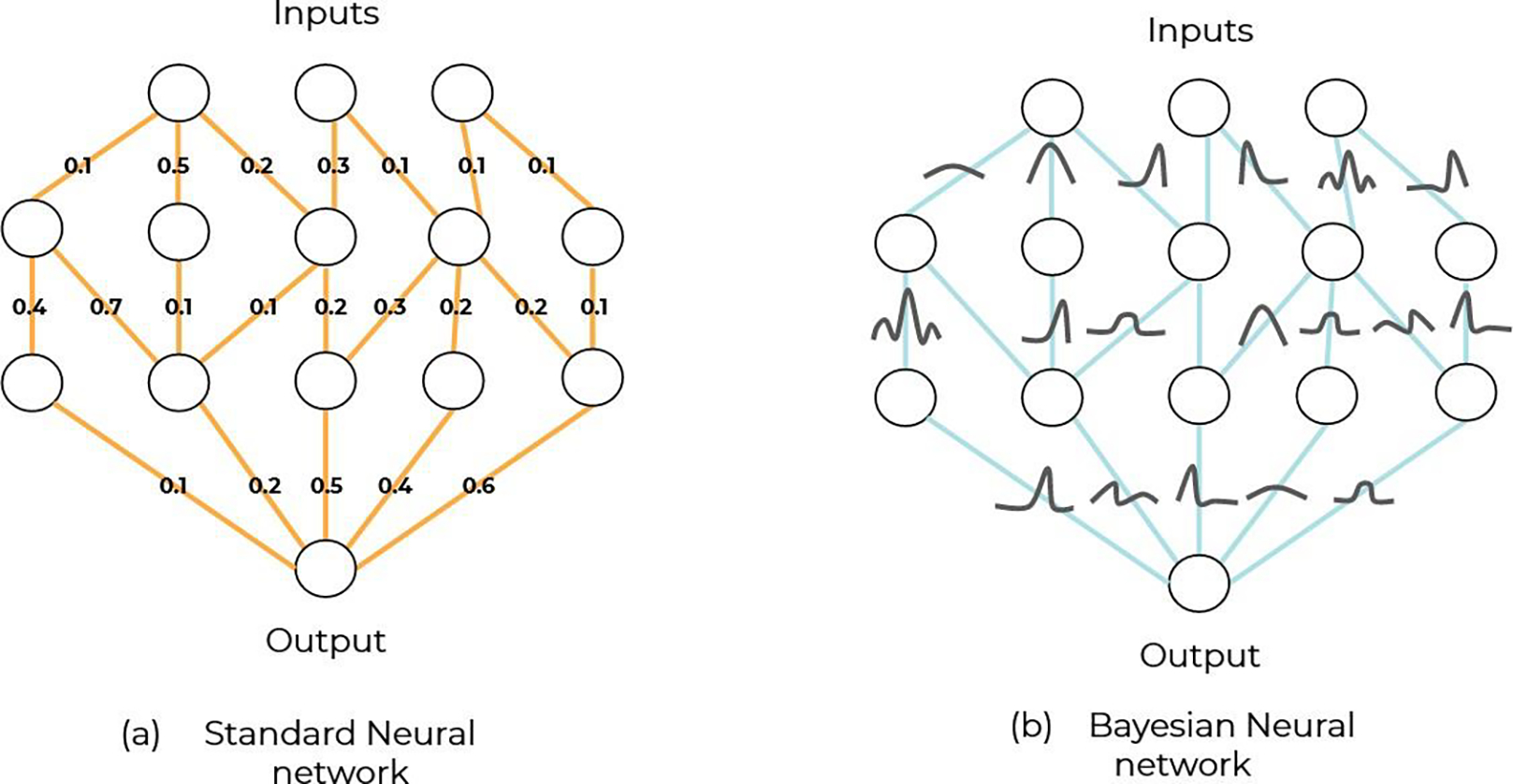

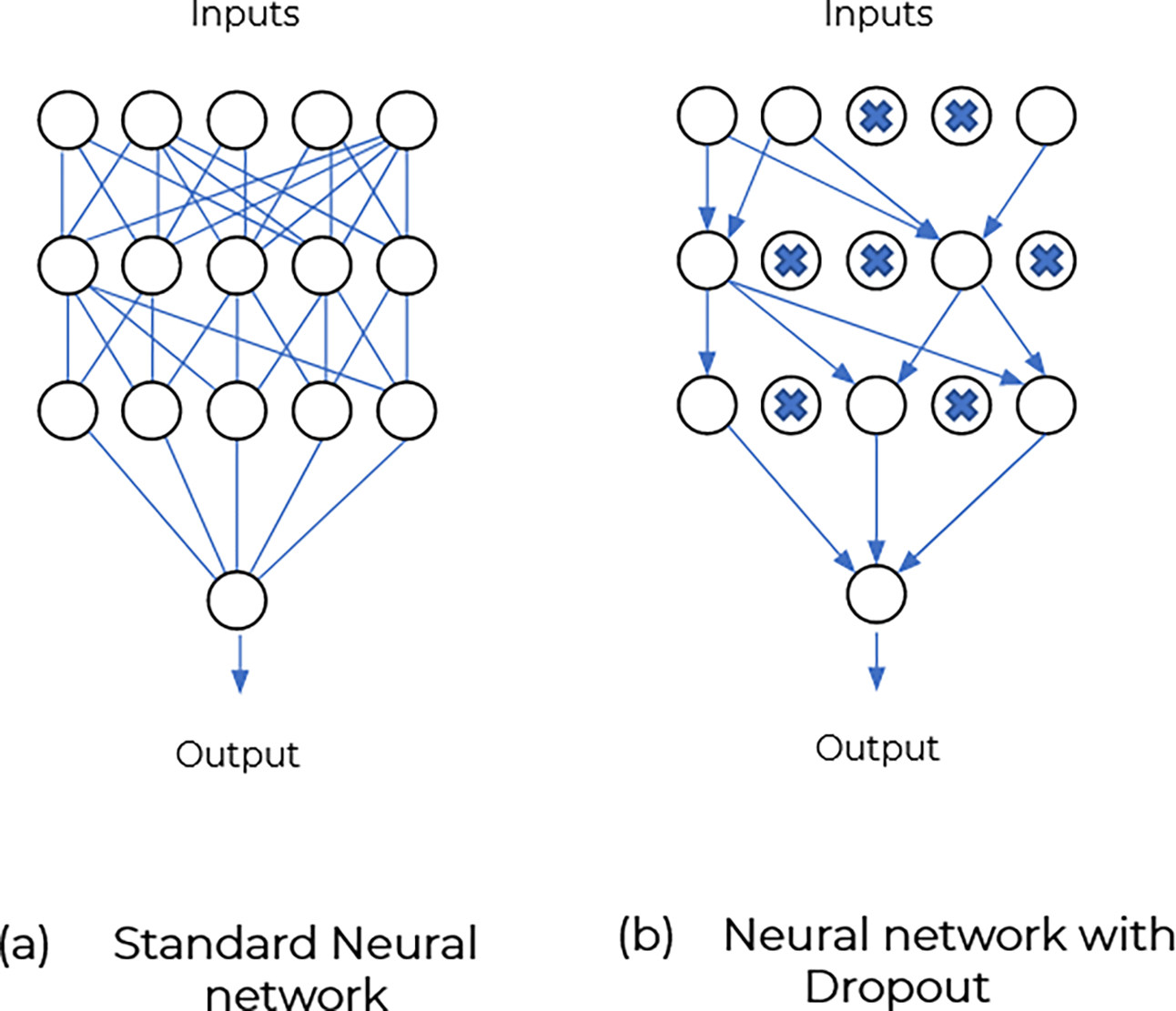

2.2.2. Model complexity: size, nonlinearity, and opacity

Beyond the inability to identify causal relationships, the interpretability of ML models can be further impeded by their sheer size and complexity. The advantage of state-of-the-art ML models (e.g., CNNs, GANs) over classical linear models is their increased capability to find a function $f_\theta(x)$ that approximates the phenomenon under study, $y = \varphi(x)$. This is often done by exploiting nonlinear relationships between variables (e.g., patient characteristics) and outcomes (e.g., mortality probability). The final function can be obtained either by directly estimating the parameters of a nonlinear function of fixed complexity (e.g., an artificial neural network) or by estimating the complexity and shape of a nonlinear function (e.g., non-parametric algorithms like gradient boosting) (Friedman et al 2001). In all cases, the consequence of nonlinearity is an increased number of parameters required to build $f_\theta(x)$. A modern ML model can have anywhere from a few thousand to several million trainable parameters. For instance, Nguyen et al (2019a) compared different ML models for predicting the radiotherapy dose for head and neck cancer patients, reporting between 3 and 40 million trainable parameters for the considered models. The larger the number of parameters, the less tractable the model becomes, which reduces the interpretability of the resulting function and turns it into a black box. Note that the same issue arises for large linear models, too. For such large black-box models, identifying hidden confounders and non-causal correlations becomes very difficult, which certainly increases the risk of using them in medical applications. Promoting sparsity, that is, the parsimonious use of the available features and variables to reduce the number of effective (non-zero) parameters (Vinga 2021, Oswal 2019, Rish and Grabarnik 2014), can mitigate this issue of size and interpretability.
This lack of interpretability has been recently highlighted as one of the most important issues to be addressed in the medical domain before ML algorithms can be widely accepted in the clinic (Reyes et al 2020, Luo et al 2019).
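One common way of promoting sparsity is an L1 (lasso) penalty, which drives the coefficients of unhelpful features exactly to zero. The sketch below solves the lasso with iterative soft-thresholding (ISTA) in NumPy, on synthetic data in which only 3 of 50 features matter; the sizes, the penalty `lam=0.1` and the helper names are assumptions for illustration. Ordinary least squares keeps all 50 coefficients non-zero, whereas the lasso solution is expected to retain only a handful, which is far easier to inspect.

```python
import numpy as np

def soft_threshold(v, t):
    """Proximal operator of t * ||v||_1: shrinks entries towards zero, zeroing small ones."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def lasso_ista(x, y, lam, steps=5000):
    """Minimize (1/2N)||y - Xw||^2 + lam * ||w||_1 by iterative soft-thresholding (ISTA)."""
    n = len(y)
    step = n / np.linalg.norm(x, 2) ** 2     # 1 / Lipschitz constant of the smooth part
    w = np.zeros(x.shape[1])
    for _ in range(steps):
        grad = x.T @ (x @ w - y) / n
        w = soft_threshold(w - step * grad, step * lam)
    return w

rng = np.random.default_rng(0)
n, p = 200, 50                                   # hypothetical: 200 patients, 50 features
x = rng.normal(size=(n, p))
true_w = np.zeros(p)
true_w[:3] = [3.0, -2.0, 1.5]                    # only 3 features actually matter
y = x @ true_w + rng.normal(scale=0.1, size=n)

w_ols, *_ = np.linalg.lstsq(x, y, rcond=None)
w_lasso = lasso_ista(x, y, lam=0.1)
print("non-zero OLS coefficients:  ", np.count_nonzero(np.abs(w_ols) > 1e-8))
print("non-zero lasso coefficients:", np.count_nonzero(w_lasso))
print("selected features:", np.flatnonzero(w_lasso))
```

The price of sparsity is some shrinkage of the retained coefficients towards zero; the penalty strength trades off parsimony against fit and would normally be tuned, for example by cross-validation.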

2.2.4. Task-specialized learning, static models, and low generalization

Supervised learning is often cast within a simplified framework that ignores time, where the whole dataset is assumed to be known at once and engraved in marble for eternity. Any change entails retraining from scratch. In other words, most ML models cannot learn incrementally, interactively, or in real time. They are trained with data from past experience and become fixed, static models as soon as training ends. This is an important limitation in the ever-changing medical field: technologies improve (Shang et al 2015), medical protocols evolve (Grégoire et al 2018, Parodi 2018), and the distribution of patient populations changes over time (Chai and Jamal 2012). In this fast-moving world, static AI models quickly become obsolete. It is therefore imperative to shift towards models and frameworks that can quickly adapt to new settings or to distributions that change over time. The framework of supervised learning is also essentially task-specific and driven exclusively by performance on that task. This means that a model trained for a particular application offers no real guarantee of performing well on similar tasks, and the learned skills are hard to reuse or generalize. For instance, separate ML models are currently trained to predict the radiotherapy dose for each cancer site (e.g., head and neck (Nguyen et al 2019a), lung (Barragán-Montero et al 2019), breast (Ahn et al 2021)), instead of reusing the skills learned for one site in another. The same issue can be observed in other applications, such as diagnosis or organ segmentation. To be more efficient and to generalize better, future ML in the medical field will require stronger models, with an increased capability to reuse learned skills. This paradigm shift has been framed as “weak versus strong AI”.
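As a contrast to a static model, the sketch below updates a linear model one sample at a time with stochastic gradient descent, which is only one of many possible online-learning schemes and is chosen here for brevity. The data are synthetic and hypothetical: halfway through, the input-outcome relationship drifts, as it might after a protocol change. The online model keeps learning and tracks the new relationship, whereas a copy frozen at the end of the first phase does not.

```python
import random

def sgd_update(w, b, x, y, lr=0.05):
    """One stochastic gradient step on the squared error of the linear model w*x + b."""
    err = (w * x + b) - y
    return w - lr * err * x, b - lr * err

rng = random.Random(1)
w, b = 0.0, 0.0

# Phase 1: the (hypothetical) outcome follows y = 2x.
for _ in range(2000):
    x = rng.uniform(-1.0, 1.0)
    w, b = sgd_update(w, b, x, 2.0 * x)
static_w, static_b = w, b  # a static model would be frozen here

# Phase 2: the relationship drifts to y = 2x + 1 (e.g., after a protocol change).
for _ in range(2000):
    x = rng.uniform(-1.0, 1.0)
    w, b = sgd_update(w, b, x, 2.0 * x + 1.0)

print(f"static model: y = {static_w:.2f}x + {static_b:.2f}")
print(f"online model: y = {w:.2f}x + {b:.2f}")
```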

The low generalization capability of current ML models is widely debated in the literature. In the medical domain, many publications state that, for a successful clinical implementation, ML models should be able to generalize to new data, that is, keep performing well enough on records coming from different hospitals, images from different scanners and vendors, different imaging and treatment protocols, different patient populations, data changes over time, etc. A large number of studies have focused on the question of generalization. For instance, (Liang et al 2020) illustrated the problem with an ML model trained to convert CBCT into synthetic CT images. The authors trained the model on CBCT images of head and neck cancer patients acquired with one vendor’s scanners, and quantified the decrease in performance when applying it to images from another vendor’s scanners and from other anatomical sites (e.g., prostate, pancreatic, and cervical cancer). In (Feng et al 2020), the generalization issue was illustrated with a model trained to segment thoracic organs. The model could not generalize to the authors’ local dataset, which had been acquired with an abdominal compression technique, whereas the training set had been acquired under free breathing. The subtle shift of the thoracic organs caused by the abdominal compression led to significantly worse performance on the local dataset. Similarly, (Pan et al 2019) studied the generalization of an ML model classifying abnormal chest radiographs across different institutions. Generalization across scanners has also been discussed for models trained to segment MR images (Yan et al 2020, Meyer et al 2021).
Other examples include exploring the generalization of ML models for fluence map prediction in radiotherapy treatment planning (Ma et al 2021), generalizability in radiomics modeling (Park et al 2019, Mali et al 2021), or generalization of models for classification of histological images (Lafarge et al 2019). Another well-known example is the study by (Zech et al 2018), already discussed in Section 2.2.1 (Figure 2). The ML model was not able to generalize to radiographs from other hospitals because its learning had been biased by a hidden confounder (i.e., the hospital-specific metallic token).
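The drop in performance reported in these studies can be reproduced in a toy setting. The sketch below is entirely synthetic and hypothetical: a one-feature threshold classifier is trained on data from a simulated site A, then evaluated on an internal test set from A and on an external site B whose measurements carry a calibration offset (standing in for, e.g., a different scanner). Because the offset moves cases across the learned threshold, accuracy on B falls even though the relation between the latent signal and the label is unchanged.

```python
import random

def simulate_site(n, offset, seed):
    """Hypothetical cohort: the label depends on a latent signal; the measured
    feature is that signal plus a site-specific offset and measurement noise."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        signal = rng.gauss(0.0, 1.0)
        feature = signal + offset + rng.gauss(0.0, 0.3)
        data.append((feature, int(signal > 0.0)))
    return data

def accuracy(data, threshold):
    """Fraction of cases where 'feature > threshold' matches the label."""
    return sum((f > threshold) == bool(y) for f, y in data) / len(data)

def fit_threshold(data):
    """Choose the threshold maximizing training accuracy (a one-parameter model)."""
    return max((f for f, _ in data), key=lambda t: accuracy(data, t))

train_a = simulate_site(500, offset=0.0, seed=0)
test_a = simulate_site(500, offset=0.0, seed=1)   # internal (i.i.d.) test set
site_b = simulate_site(500, offset=0.8, seed=2)   # external site with shifted calibration

t = fit_threshold(train_a)
acc_a, acc_b = accuracy(test_a, t), accuracy(site_b, t)
print(f"internal accuracy: {acc_a:.2f}, external accuracy: {acc_b:.2f}")
```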

Generalization is a very abstract term, and the examples above show that poor generalization is common. Recently, (D’Amour et al 2020) introduced an umbrella term to cover all the seemingly different failures to generalize in current ML: “underspecification”. It refers to the typical inability of the ML pipeline (training, validation and testing) to ensure that the model has seen and encoded all the relevant variabilities of the underlying system or problem. (Eche et al 2021) discuss how this concept echoes in the medical field from the perspective of radiologists. They relate underspecification to the aforementioned antagonism of “weak versus strong AI”, and they distinguish narrow from broad generalization. Narrow generalization corresponds to the case considered by design in most validation frameworks: test or deployment data are assumed to be independent and identically distributed (i.i.d.) with respect to the data in the training and validation sets. Independence guarantees that the new data are unseen, while the identical underlying distribution ensures consistent predictability. In contrast, broad generalization aims at maintaining predictability when the deployment data are independent but possibly differently distributed, that is, when they contain other or slightly shifted variabilities compared with training and validation. For this reason, broad generalization is also referred to as generalization under (distribution) domain shift or drift. When generalization problems arise, they can be traced back to the two categories of this section: data and model issues. A model cannot generalize properly if the training data and the actual data at deployment time are not i.i.d., that is, if the former are not representative of the latter (see section 2.1), or if the model has not learned correctly, due to hidden confounders, overfitting to (noisy) training data, etc.

Broad generalization to non-i.i.d. datasets is a much more ambitious goal: it aims at strong AI, closer to natural intelligence, where general knowledge is acquired and reused across analogous problems and tasks. Although strongly desirable, broad generalization is controversial. In (Futoma et al 2020), the authors discuss how seeking broad generalizability can be detrimental to the clinical applicability of some ML models, and they provide illustrative examples. Imagine, for instance, an ML model with excellent performance for the diagnosis of a certain disease in hospital A, properly generalizing to the entire patient population of that hospital. The model might not perform equally well in hospital B, since the patient population might differ (domain shift and out-of-domain samples). However, modifying the model to increase its performance in hospital B might come at the cost of lower performance in hospital A, in the same way as when individual human experts are replaced by a single all-rounder. For current ML models there is a trade-off between performance and generalization, which must be carefully considered for clinical applications. In this case, building a new (specific) model for hospital B would be more appropriate than using a general model with lower performance. Futoma et al. claim that we should stop demanding broad generalization and focus instead on understanding how, when, and why a machine learning system works.
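Whether deployment data still look i.i.d. with respect to the training data can be monitored, at least feature by feature, with a two-sample test. The sketch below implements the two-sample Kolmogorov-Smirnov statistic in plain Python; using it as a drift monitor is an illustrative choice made here, not a method from the cited works, and a univariate test cannot detect shifts that only appear in the joint distribution of several features. The samples are synthetic, and `critical_value` uses the standard large-sample approximation at the 5% level.

```python
import bisect
import math
import random

def ks_statistic(a, b):
    """Two-sample Kolmogorov-Smirnov statistic: the largest gap between the
    empirical cumulative distribution functions of samples a and b."""
    a, b = sorted(a), sorted(b)
    gap = 0.0
    for v in a + b:  # the supremum is attained at one of the observed values
        cdf_a = bisect.bisect_right(a, v) / len(a)
        cdf_b = bisect.bisect_right(b, v) / len(b)
        gap = max(gap, abs(cdf_a - cdf_b))
    return gap

def critical_value(n, m, c_alpha=1.358):
    """Large-sample critical value of the statistic at the 5% significance level."""
    return c_alpha * math.sqrt((n + m) / (n * m))

rng = random.Random(0)
training = [rng.gauss(0.0, 1.0) for _ in range(1000)]
deploy_iid = [rng.gauss(0.0, 1.0) for _ in range(1000)]    # same distribution
deploy_shift = [rng.gauss(1.0, 1.0) for _ in range(1000)]  # shifted distribution

crit = critical_value(len(training), len(deploy_iid))
for name, sample in [("i.i.d.", deploy_iid), ("shifted", deploy_shift)]:
    d = ks_statistic(training, sample)
    print(f"{name}: D = {d:.3f}, drift flagged: {d > crit}")
```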

3. Interpretability, explainability and data-model dependency

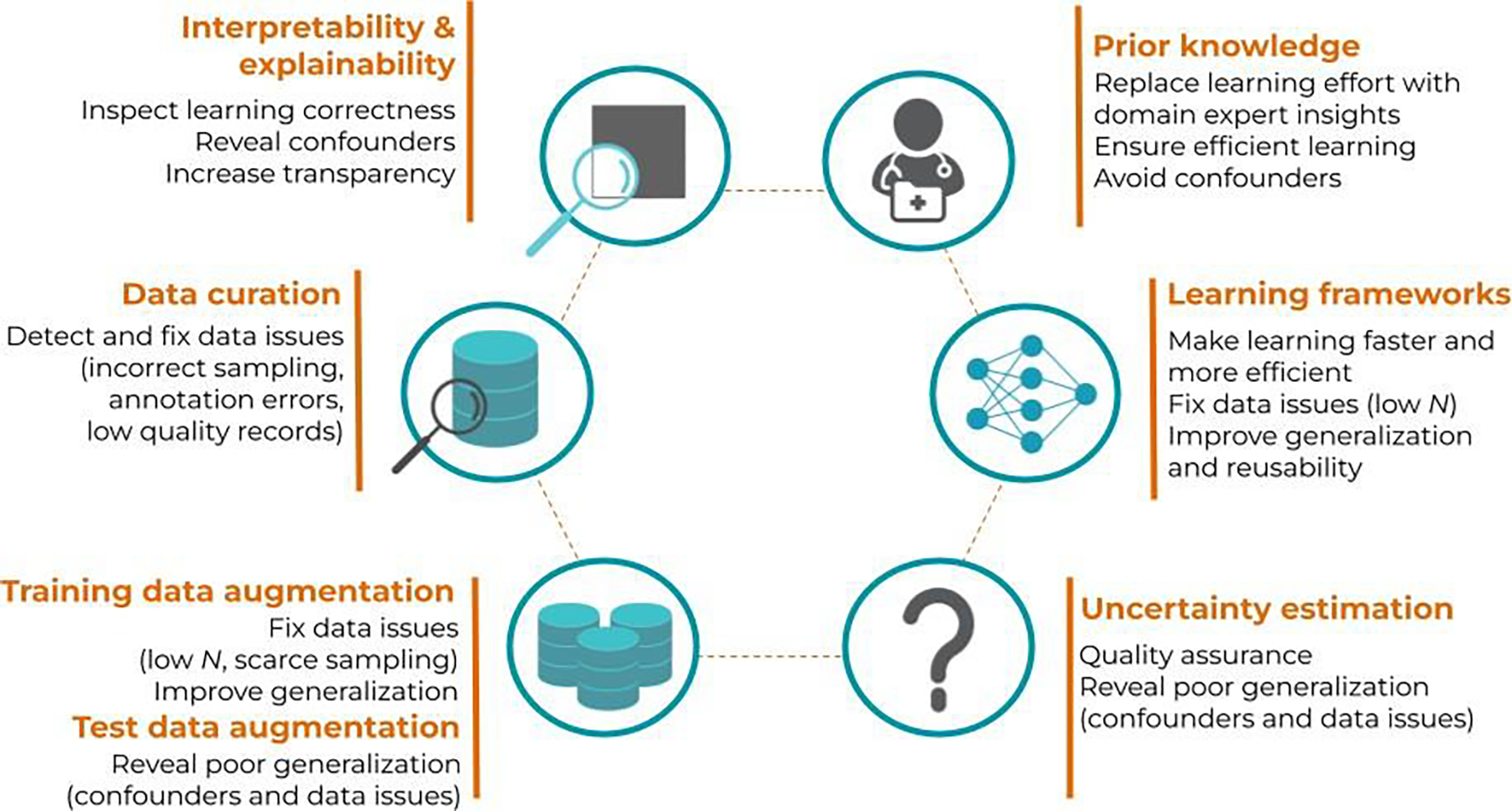

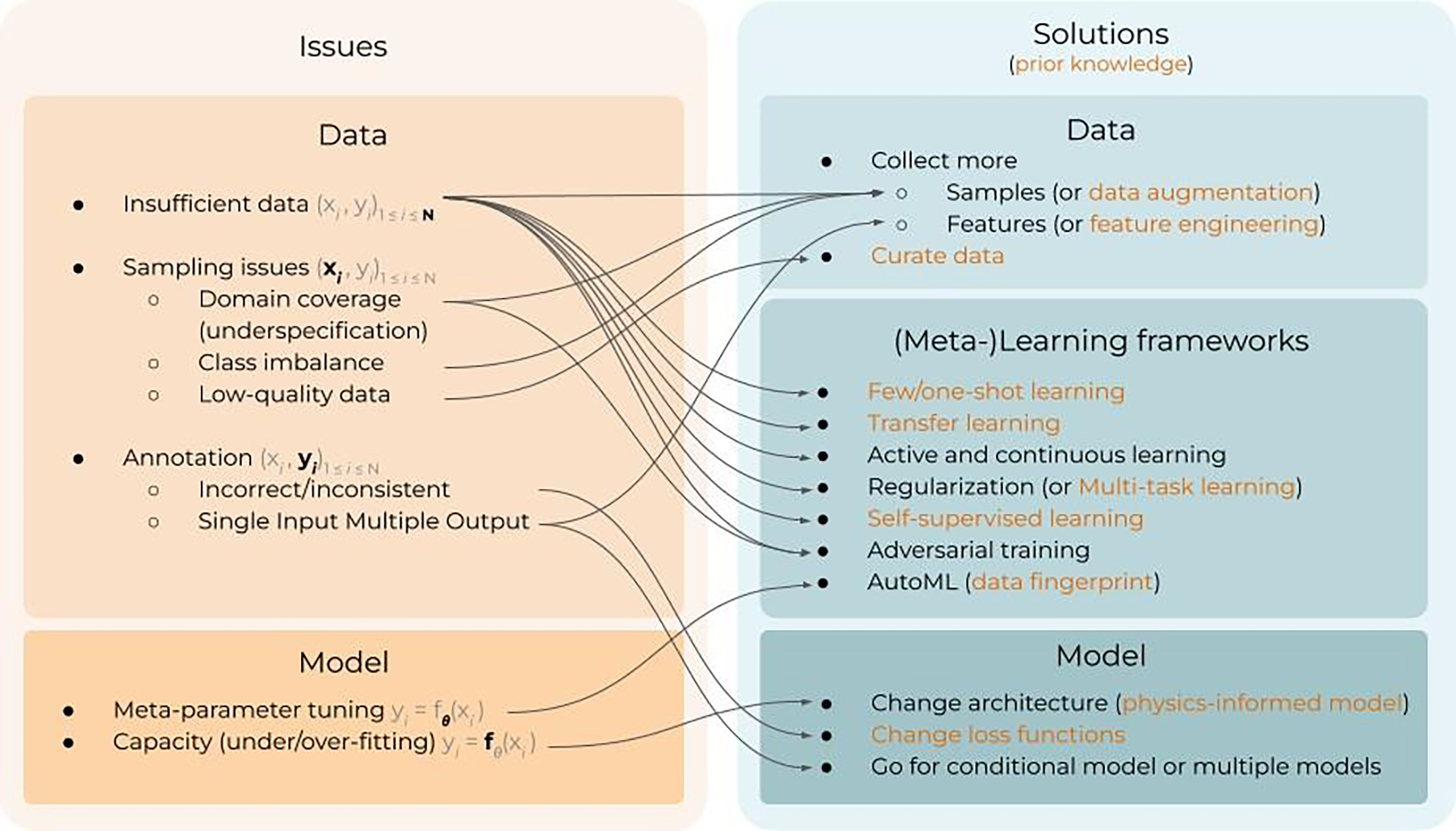

The previous section introduced the different risk factors of ML models for medical applications, distinguishing two categories: data and model issues. In practice, however, data and model issues are often entangled, and identifying the actual risks for a given medical application is not straightforward. To properly identify and fix each risk factor, we must implement strategies that enable us to interpret and/or explain the behavior of ML models, as well as to explore the data and how the model performance depends on it. More importantly, this entanglement means that risks and solutions are not in one-to-one correspondence: a given technique can address several of the issues described in Section 2 and, conversely, a given issue can be fixed (or mitigated) by different techniques. For instance, explanations of the model behavior may reveal non-causal correlations involving confounders, but such correlations can also be revealed by exploring the performance of the model on different datasets or related tasks. Figure 3 presents a schematic view of the concepts described in this section, to help the reader understand how these techniques connect and serve as solutions to the risks presented in Section 2, ensuring a safe and efficient clinical implementation of ML. Section 3.1 covers general concepts and key techniques for interpretability and explainability. These techniques can be used to inspect whether an ML model has learned the underlying problem correctly, thus helping to identify data issues, hidden confounders, etc. Section 3.2 covers key concepts related to the data and the learning process. On the one hand, acting directly on the data distribution to avoid insufficient and low-quality data helps ensure that the ML model encodes and learns the problem correctly.
This includes data curation to detect and fix possible data issues, data augmentation to ensure sufficient domain coverage, and techniques to efficiently incorporate (expert) prior knowledge about the domain. On the other hand, analyzing how the model reacts to different and external datasets (i.e., test data augmentation or stress testing), and estimating its uncertainty, can serve to further quantify its performance and generalization capacity. Lastly, a full section is dedicated to describing and discussing different learning frameworks proposed in the ML community to achieve robust and efficient learning, bringing us one step closer to strong AI models.

Figure 3.

Schematic view of the different concepts presented in this topical review and how they can serve as key solutions to overcome the limitations of current ML models, ultimately ensuring a safe and efficient clinical implementation.

3.1. Interpretability and explainability

Although the terms interpretability and explainability are often used interchangeably (Huff et al 2021, Reyes et al 2020, Luo et al 2019), it is important to stress the difference between the transparency of the model to the end user (i.e., interpretability) and the techniques used to provide insights into the inner workings of black-box models (i.e., explainability). In this section, we provide basic background knowledge about interpretability and explainability, so that the reader can make an informed choice when aiming at the clinical implementation of ML methods. Please note that this is not an exhaustive review of all existing methods for interpretable and explainable ML, but rather an introduction to these topics for the medical community. For extensive technical reviews, we refer to (Arrieta et al 2020, Doshi-Velez and Kim 2017).

3.1.2. Interpretability

Interpretability is a property of models (and sometimes decisions) to be understandable by their users (Guidotti et al 2019, Arrieta et al 2020). Although questions about interpretability have been around for a few decades already (Kodratoff 1994, Adadi and Berrada 2018), the vocabulary and its conceptualization long remained unclear. Until 2015–2016, interpretability was referred to in the ML literature by several different terms (interpretability, understandability, comprehensibility, etc.) (Bibal and Frénay 2016). Furthermore, the problems of providing understandable, trustworthy, or justifiable models were conflated. With the growing use of ML, and of DL in particular, throughout society, the ML literature has had to focus on interpretability.

In fact, interpretability is hard to define because of its subjective nature (Bibal and Frénay 2016). For example, a model can be interpretable for an ML expert, but not for a lay person. Likewise, a model that includes and manipulates information that a physician easily understands can be difficult for a radiotherapy technician or a dosimetrist to understand. Objectively quantifying interpretability is hard and has mostly been done in the ML literature through the complexity of models, disregarding their content. For instance, the bigger a decision tree is (i.e., the more nodes it has), the less interpretable it gets. Similarly, the more non-zero coefficients a linear model has (i.e., the less sparse it is), the less interpretable it is. Some models, especially highly nonlinear ones like neural networks (see Section 2.2.2), are considered black boxes in practically all cases, as they are always structurally complex, even if they manipulate understandable information.
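The two complexity proxies mentioned above are easy to compute. In the sketch below, decision trees are stored as nested Python dictionaries (a representation chosen here for brevity, not a standard format), and the feature names, thresholds, and coefficients are hypothetical. The node count and the number of non-zero coefficients capture only the structural size of a model; they say nothing about whether its content is meaningful to a given user.

```python
def count_nodes(tree):
    """Number of nodes in a binary tree stored as nested dicts; leaves are plain labels."""
    if not isinstance(tree, dict):
        return 1
    return 1 + count_nodes(tree["left"]) + count_nodes(tree["right"])

def count_nonzero(coefficients, tol=0.0):
    """Number of coefficients whose magnitude exceeds tol (lower means sparser)."""
    return sum(1 for c in coefficients if abs(c) > tol)

# Hypothetical trees for a binary toxicity-risk question.
shallow_tree = {"feature": "mean_lung_dose_gy", "threshold": 20.0,
                "left": "low risk", "right": "high risk"}
deeper_tree = {"feature": "mean_lung_dose_gy", "threshold": 20.0,
               "left": {"feature": "age", "threshold": 70,
                        "left": "low risk", "right": "medium risk"},
               "right": {"feature": "v20_percent", "threshold": 35.0,
                         "left": "medium risk", "right": "high risk"}}

print(count_nodes(shallow_tree), count_nodes(deeper_tree))   # 3 7
print(count_nonzero([0.0, 1.2, 0.0, -0.3, 0.0]))            # 2
```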

Although this is controversial (Rudin 2019), most researchers rely on the hypothesis that the more complex the model, the higher its accuracy. For instance, if the underlying relationship between features and outcome is nonlinear, nonlinear models will likely be more accurate than linear ones. Similarly, shallow ML models are often outperformed by deep models (Liang et al 2019a, Chauhan et al 2019). Hence, the price of better accuracy is higher complexity, and thus worse interpretability of ML models (Valdes et al 2016a, Caruana et al 2015). Those against this hypothesis argue for the existence of a set of equally accurate models for a given problem, with different levels of complexity and interpretability (i.e., Rashomon sets) (Fisher et al 2019, Rudin 2019). Thus, the problem is not the absence of accurate and interpretable models, but the difficulty of finding them.
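The Rashomon-set argument suggests a simple selection rule: among all candidate models whose accuracy is within a tolerance ε of the best one, prefer the least complex. The sketch below applies that rule; the candidate names, accuracies, and parameter counts are made-up placeholders rather than results from any study, and both the tolerance `epsilon` and the use of parameter count as the complexity measure are arbitrary choices.

```python
def rashomon_set(models, epsilon):
    """Models whose accuracy lies within epsilon of the best accuracy observed."""
    best = max(m["accuracy"] for m in models)
    return [m for m in models if m["accuracy"] >= best - epsilon]

def simplest(models):
    """Pick the model with the fewest parameters (a crude complexity proxy)."""
    return min(models, key=lambda m: m["n_params"])

# Hypothetical validation results, for illustration only.
candidates = [
    {"name": "deep CNN", "accuracy": 0.86, "n_params": 3_000_000},
    {"name": "gradient boosting", "accuracy": 0.85, "n_params": 20_000},
    {"name": "sparse logistic regression", "accuracy": 0.85, "n_params": 6},
    {"name": "single shallow tree", "accuracy": 0.80, "n_params": 7},
]

near_best = rashomon_set(candidates, epsilon=0.02)
print([m["name"] for m in near_best])
print("selected:", simplest(near_best)["name"])
```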

Several authors are actively working on developing accurate and interpretable ML models (Valdes et al 2016a, Caruana et al 2015, Luna et al 2019). For instance, Valdes et al. (2016) developed an improved version of classical decision trees (based on boosting) for a patient stratification tool. The model achieved high accuracy while remaining rather transparent, since the subpopulations defined by the leaf nodes of the decision trees could easily be interpreted by human experts. Another example is the use of Generalized Additive Models (GAMs), which apply nonlinear transformations to individual variables and then combine them in a generalized linear model. The contribution of each variable can be read from the individual graphs representing the nonlinear transformations (Caruana et al 2015). Yet another example is the recent work of Luna et al., who created a further improved decision tree by exploiting the mathematical connection between individual partitions and gradient boosting. The resulting decision trees were smaller, and hence easier to interpret, as well as more accurate (Luna et al 2019). Despite the promising results obtained by these algorithms, whether they can achieve similar performance on more complicated medical problems remains to be seen.
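The additive structure that makes GAMs readable can be illustrated with the classical backfitting algorithm. The sketch below is a simplification for illustration: it uses a crude piecewise-constant (binned-mean) smoother where practical GAM implementations rely on splines or boosted trees, and it is fitted to synthetic data with a known sine-shaped and quadratic effect. Each column of `F` is the fitted shape function of one variable, i.e., the kind of per-variable graph described above.

```python
import numpy as np

def bin_smoother(x, r, n_bins=10):
    """Piecewise-constant smoother: replace r by its mean within quantile bins of x."""
    edges = np.quantile(x, np.linspace(0.0, 1.0, n_bins + 1))
    idx = np.clip(np.searchsorted(edges, x, side="right") - 1, 0, n_bins - 1)
    means = np.array([r[idx == k].mean() for k in range(n_bins)])
    return means[idx]

def fit_gam(X, y, n_iter=20, n_bins=10):
    """Backfitting for y ~ b0 + sum_j f_j(X[:, j]); returns b0 and the f_j at the data."""
    n, p = X.shape
    b0 = y.mean()
    F = np.zeros((n, p))  # column j holds the shape function f_j evaluated at X[:, j]
    for _ in range(n_iter):
        for j in range(p):
            partial = y - b0 - F.sum(axis=1) + F[:, j]  # residual ignoring feature j
            f = bin_smoother(X[:, j], partial, n_bins)
            F[:, j] = f - f.mean()  # center each f_j so the intercept stays identifiable
    return b0, F

rng = np.random.default_rng(0)
X = rng.uniform(-2.0, 2.0, size=(500, 2))
y = np.sin(X[:, 0]) + X[:, 1] ** 2 + 0.1 * rng.normal(size=500)

b0, F = fit_gam(X, y)
r2 = 1.0 - np.var(y - b0 - F.sum(axis=1)) / np.var(y)
print(f"R^2 of the additive fit: {r2:.3f}")
```

Plotting `F[:, j]` against `X[:, j]` would give the per-variable curves that an expert can inspect.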

The complexity of the model is only one of the multiple factors involved in the concept of interpretability (Guidotti et al 2019). Complexity alone does not suffice, as mathematically complex models can be made understandable through their representation. For instance, what makes decision trees interpretable is not the mathematics behind them, but the fact that a tree representation is easy for humans to follow. After the complexity of the model, the second factor is therefore its possible representations. Third, as previously mentioned, the expertise of the user also plays a major role. Decision trees and their tree representation can be hard to interpret for someone who has never seen a decision tree, while they are easy to interpret for an ML expert.

Finally, the time available to grasp the model is also a factor of interpretability. With an infinite amount of time, all models can be understood. What makes complex models hard to grasp is that they have to be understood in a short period of time: the shorter this period, the more difficult it is to interpret the model. This means that in a clinical environment, where schedules are very tight, a model must be much less complex to be interpretable than in contexts with milder time constraints.

Another way to see the aforementioned factors (i.e., complexity, representation, expertise, and time) is that if one of them is unfavorable, the others have to compensate. For instance, if the time available to grasp the model is very short (e.g., in a medical emergency), then (1) the intrinsic complexity of the model must be low, and/or (2) the representation of the model must make it easy to grasp, and/or (3) the users (in this example, the emergency caregivers) must be trained to be experts in those models. Note that the concept of explainability (i.e., the ability to explain the inner workings of the model) is determined by the same factors.

3.1.3. Explainability

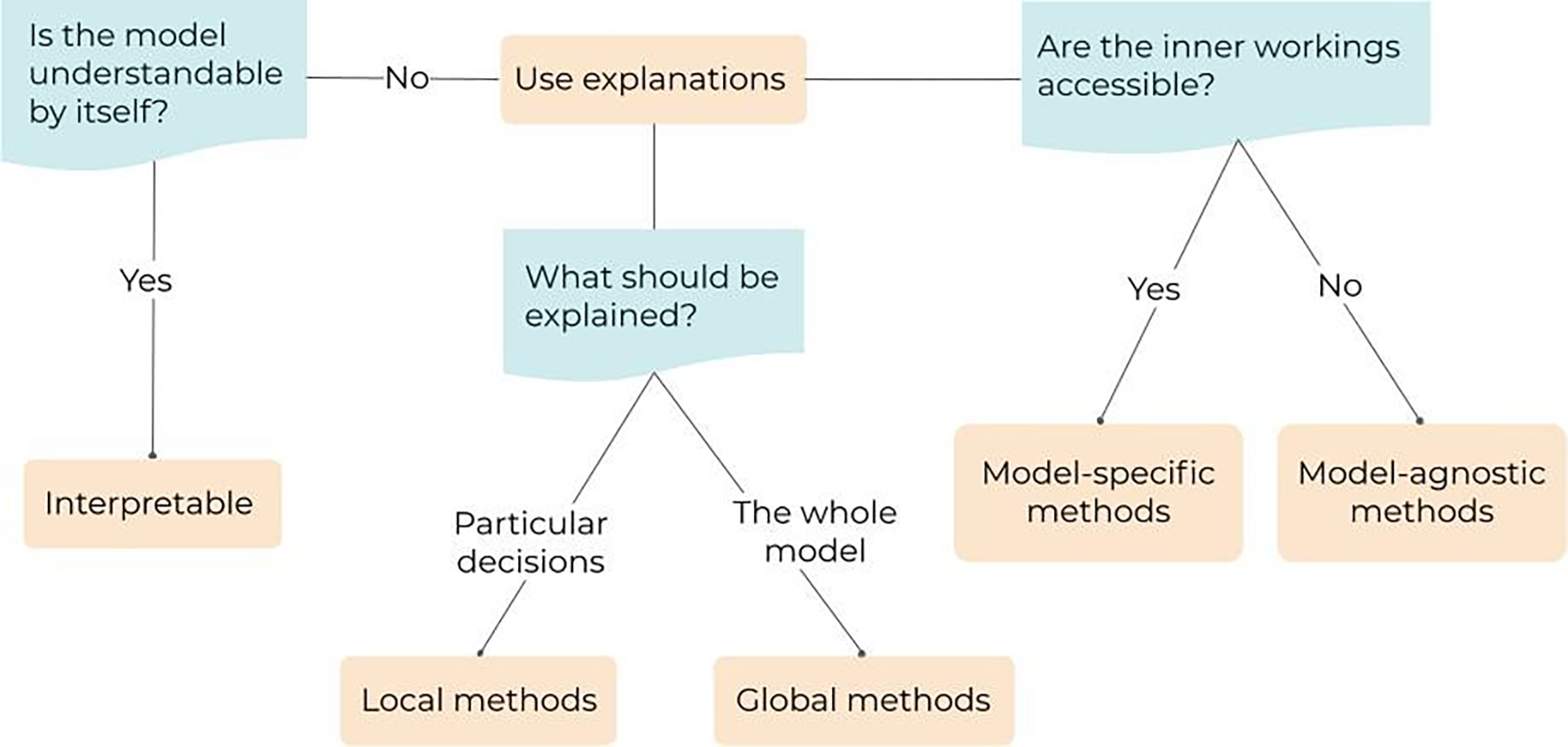

When a model is not interpretable (i.e., it is a black box), but its scrutiny is still important or necessary (e.g., by law, to enable a safe clinical implementation, or simply to increase the trust of medical practitioners), another property is considered: its explainability (Guidotti et al 2019, Arrieta et al 2020). Explainability is the capacity of a model to be explained, even if it is not fully interpretable. The question “is the model understandable by itself?” (figure 4) should therefore be answered first, to avoid unnecessarily applying explanation methods to a model that is already interpretable. If the answer is negative, different approaches can provide explanations, depending on whether the inner workings of the model are accessible (model-specific versus model-agnostic explanations) and on what should be explained (local versus global explanations).
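The decision pipeline of figure 4 can be written down as a small function. The sketch below is only a summary of this section in code form: the function name and arguments are hypothetical, and the method lists are examples taken from the text (with SHAP placed under local model-agnostic methods, since SHAP values are computed per prediction), not an exhaustive catalogue.

```python
EXAMPLE_METHODS = {
    ("model-specific", "local"): ["saliency maps", "Grad-CAM", "attention weights"],
    ("model-specific", "global"): ["out-of-bag feature importance (random forests)"],
    ("model-agnostic", "local"): ["LIME", "SHAP"],
    ("model-agnostic", "global"): ["agnostic feature importance", "aggregated local explanations"],
}

def choose_explanation(understandable, inner_workings_accessible, scope):
    """Follow figure 4: check interpretability first, then access and scope.

    scope is "local" (a particular decision) or "global" (the whole model).
    Returns a (category, example_methods) pair.
    """
    if understandable:
        return "interpretable", []  # no explanation method needed
    if scope not in ("local", "global"):
        raise ValueError("scope must be 'local' or 'global'")
    access = "model-specific" if inner_workings_accessible else "model-agnostic"
    return f"{access} {scope}", EXAMPLE_METHODS[(access, scope)]

# A vendor's closed-source model, where one patient's prediction must be explained.
print(choose_explanation(False, inner_workings_accessible=False, scope="local"))
```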

Figure 4.

Pipeline describing the typology and the selection of the explanation method. First, if the model is already understandable, it is said to be interpretable. Second, if the model is not understandable, two questions need to be answered to select the right explanation technique: (1) are the inner workings of the model accessible? and (2) what needs to be explained (the whole model or particular decisions)?

Model-specific versus model-agnostic explanations

If the inner workings of the model are accessible, this information can be used to provide explanations about the model behavior. In these cases, the way the model is built can provide clues about its decisions. Such explanations are model-specific, as they cannot be used as they are to explain a completely different model. Notice that having access to these elements differs from interpretability in that these elements do not fully explain the model. They are characteristics of the model that can be exploited to gain insights into its inner workings. These clues may not be enough to gain the trust of users or, in certain cases, to satisfy the law, but they are a first step towards making black boxes a bit more transparent. Two examples of model-specific explanations, detailed below, are the feature importance derived from the out-of-bag error in ensembles trained on random subsamples, such as random forests or (stochastic) boosted decision trees, and saliency maps, which can be computed when the gradients of artificial or convolutional neural networks are accessible (Simonyan et al 2013).

Random forests (Breiman 2001) use different subsets of instances (bootstrap samples) to train the different decision trees in the forest. For each tree, the instances that were not used to train it (i.e., that are out of the bag) can be used to compute an error called the out-of-bag error. The importance of a feature is then given by the effect that perturbing (e.g., randomly permuting) its values has on the out-of-bag error: if the error increases when the feature values are perturbed, the feature is important. For instance, a recent study used the out-of-bag error to highlight the most important features of an ML model that detects lung cancer from CT radiomics and/or semantic features (Bashir et al 2019).
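The out-of-bag procedure can be sketched in a few lines. To keep the example short, the sketch below makes two simplifying assumptions that a real random forest does not: each "tree" is a single split (a decision stump) searched over a fixed grid of quantile thresholds, and no random feature subsampling is done at each split. The data are synthetic: only feature 0 carries signal, and 10% of the labels are flipped to mimic noise.

```python
import numpy as np

def majority(labels):
    """Majority class of a 0/1 array (0 if empty)."""
    return int(labels.mean() >= 0.5) if labels.size else 0

def fit_stump(X, y):
    """Single best split (feature, threshold, left label, right label) by training accuracy."""
    best_acc, best = -1.0, None
    for j in range(X.shape[1]):
        for t in np.quantile(X[:, j], np.linspace(0.05, 0.95, 19)):
            mask = X[:, j] <= t
            left, right = majority(y[mask]), majority(y[~mask])
            acc = np.mean(np.where(mask, left, right) == y)
            if acc > best_acc:
                best_acc, best = acc, (j, t, left, right)
    return best

def predict(stump, X):
    j, t, left, right = stump
    return np.where(X[:, j] <= t, left, right)

def oob_permutation_importance(X, y, n_trees=50, seed=0):
    """Average increase in out-of-bag error when each feature is permuted."""
    rng = np.random.default_rng(seed)
    n, p = X.shape
    importance = np.zeros(p)
    for _ in range(n_trees):
        boot = rng.integers(0, n, size=n)        # bootstrap sample (with replacement)
        oob = np.setdiff1d(np.arange(n), boot)   # instances left out of the bag
        stump = fit_stump(X[boot], y[boot])
        base_err = np.mean(predict(stump, X[oob]) != y[oob])
        for j in range(p):
            X_perm = X[oob].copy()
            X_perm[:, j] = rng.permutation(X_perm[:, j])
            importance[j] += np.mean(predict(stump, X_perm) != y[oob]) - base_err
    return importance / n_trees

rng = np.random.default_rng(1)
X = rng.normal(size=(300, 3))
y = ((X[:, 0] > 0) ^ (rng.random(300) < 0.1)).astype(int)  # feature 0 is informative
print(np.round(oob_permutation_importance(X, y), 3))
```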

If the gradients of a model are accessible, they can be used to explain it. For instance, when a convolutional neural network (CNN) predicts an image class, the class score can be back-propagated to the input pixels through the gradients. The magnitude of the gradient of the class score with respect to each pixel indicates how much that pixel influences the prediction. The resulting image, in which pixels are highlighted according to their contribution to the prediction, is called a saliency map (Simonyan et al 2013). Other gradient-based explanation techniques have been developed since then, such as Grad-CAM (Gradient-weighted Class Activation Mapping) and its many variants (Selvaraju et al 2017). Gradient-based techniques have been extensively used in medical applications to explain the predictions of ML models (Singh et al 2020, Huff et al 2021). A popular example is the study by (Zech et al 2018), already mentioned in Section 2.2.2, where a CNN was trained to predict pneumonia from X-ray images (figure 2). By using class activation maps (CAM) (Zhou et al 2016), the authors discovered that the CNN was not looking at areas relevant to the disease in the X-ray images. Other examples include the study by (Diamant et al 2019), where a CNN was trained to predict the treatment outcome of patients with head and neck cancer and Grad-CAM was used to visualize the areas of the CT images found relevant for the prediction, and the study by (Liang et al 2019a), who trained a CNN to predict pneumonitis as a side effect of thoracic radiotherapy and used Grad-CAM to locate the regions of the dose distribution relevant to the prediction.
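A vanilla saliency map is simply the absolute gradient of a class score with respect to the input. The sketch below computes it by hand for a tiny two-layer network with random, hypothetical weights acting on a flattened 4x4 "image"; in practice the gradient would come from an automatic-differentiation framework and, for color images, Simonyan et al (2013) take the maximum over channels. The chain-rule steps are spelled out in the comments.

```python
import numpy as np

rng = np.random.default_rng(0)
d_in, d_hidden = 16, 8                     # flattened 4x4 input, 8 hidden units
W1 = rng.normal(size=(d_hidden, d_in)) / np.sqrt(d_in)
b1 = np.zeros(d_hidden)
w2 = rng.normal(size=d_hidden)

def class_score(x):
    """Score of the class of interest: w2 . tanh(W1 x + b1)."""
    return w2 @ np.tanh(W1 @ x + b1)

def saliency(x):
    """|d score / d x| for every input pixel, by back-propagation."""
    h = np.tanh(W1 @ x + b1)
    grad_pre = w2 * (1.0 - h ** 2)  # derivative w.r.t. the pre-activations W1 x + b1
    grad_x = W1.T @ grad_pre        # chain rule back to the input pixels
    return np.abs(grad_x)

x = rng.normal(size=d_in)
print(np.round(saliency(x).reshape(4, 4), 3))
```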

Another idea is to test whether the activations in a chosen layer relate to predefined concepts, by defining Concept Activation Vectors (CAV) (Kim et al 2018). The idea is similar to saliency maps, except that the sensitivity investigated is that of the activations with regard to predefined concepts, instead of the sensitivity with regard to the input (e.g., the pixels). This strategy is sometimes called explanation through semantics (Reyes et al 2020), since it allows the features learned by the model to be explained to users in terms of human-understandable concepts. Concept vectors have not yet been used in many medical applications, but a good illustrative example is the study from (M et al 2020). The authors applied CAV and an extended version of it, Regression Concept Vectors (RCV), to provide explanations for CNNs trained to diagnose breast cancer from histopathological whole slide images and retinopathy of prematurity from retinal photographs. They used concepts such as the area or the contrast of the image to describe the visual aspect of the learned features.
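Following the description of Kim et al (2018), a CAV is the normal vector of a linear classifier separating the layer activations of concept examples from those of random examples, and the sensitivity of a prediction to the concept is the directional derivative of the class logit along that vector. The sketch below reproduces these steps on synthetic activations: the concept direction, the small nonlinear head behind `head_grad`, and all weights are hypothetical, and the linear classifier is a plain logistic regression trained by gradient descent. The final score is the fraction of inputs whose logit increases along the CAV.

```python
import numpy as np

rng = np.random.default_rng(0)
d = 10                                   # size of the chosen layer's activation vector
concept_dir = np.zeros(d)
concept_dir[0] = 1.0                     # hypothetical: the concept shifts unit 0

concept_acts = rng.normal(size=(100, d)) + 2.0 * concept_dir
random_acts = rng.normal(size=(100, d))

def train_logistic(A, labels, lr=0.1, n_iter=500):
    """Plain gradient-descent logistic regression; returns the weight vector."""
    w, b = np.zeros(A.shape[1]), 0.0
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-(A @ w + b)))
        w -= lr * A.T @ (p - labels) / len(labels)
        b -= lr * np.mean(p - labels)
    return w

A = np.vstack([concept_acts, random_acts])
labels = np.concatenate([np.ones(100), np.zeros(100)])
w = train_logistic(A, labels)
cav = w / np.linalg.norm(w)              # unit normal of the concept/random boundary

# Hypothetical layers on top of the activations, producing a class logit u . tanh(V a).
V = rng.normal(size=(5, d)) / np.sqrt(d)
u = rng.normal(size=5)

def head_grad(a):
    """Gradient of the logit u . tanh(V a) with respect to the activations a."""
    return V.T @ (u * (1.0 - np.tanh(V @ a) ** 2))

inputs = rng.normal(size=(50, d))        # activations of inputs from the class of interest
sensitivities = np.array([head_grad(a) @ cav for a in inputs])
tcav_score = np.mean(sensitivities > 0)
print(f"CAV alignment with concept direction: {cav[0]:.2f}, TCAV score: {tcav_score:.2f}")
```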

In some cases, the black box does not provide any information about its inner workings. This can happen, for instance, because the model is the property of a company that does not want to provide access to the inside of its black box. In such cases, generic methods for explaining black boxes (also called model-agnostic methods) are used. These agnostic methods analyze the decisions made by the black box when particular inputs are provided.

Agnostic feature importance highlights the input features that seem to be the most important ones when making a decision (Fisher et al 2019). One particularly well-known agnostic feature importance technique is SHapley Additive exPlanations (SHAP) (Lundberg and Lee 2017). Recently, SHAP has been used to explain a model trained to predict locoregional relapse for oropharyngeal cancers (Giraud et al 2020), to interpret a model trained to predict 10-year overall survival of breast cancer patients (Jansen et al 2020), and to produce heat maps that visualize the areas of melanoma images most indicative of the disease (Shorfuzzaman 2021).
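SHAP builds on Shapley values from cooperative game theory, which distribute the difference between a prediction and a reference prediction among the input features. For a handful of features they can be computed exactly by enumerating all feature coalitions, as in the sketch below. Two simplifications are assumed here: "absent" features are replaced by a single baseline value (the SHAP library instead averages over background data and uses approximations to avoid the exponential cost), and the risk model `f` is a made-up function with one interaction term.

```python
from itertools import combinations
from math import factorial

def shapley_values(f, x, baseline):
    """Exact Shapley values of f at x; features outside a coalition take baseline values."""
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return f(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for k in range(n):  # coalition sizes 0 .. n-1
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for S in combinations(others, k):
                phi[i] += weight * (value(set(S) | {i}) - value(set(S)))
    return phi

def f(z):
    """Hypothetical risk score: additive in z[0], interaction between z[1] and z[2]."""
    return 0.5 * z[0] + 2.0 * z[1] * z[2]

x, baseline = [1.0, 1.0, 1.0], [0.0, 0.0, 0.0]
phi = shapley_values(f, x, baseline)
print(phi)  # approximately [0.5, 1.0, 1.0]: the interaction is split equally
```

The values always add up to `f(x) - f(baseline)`, which is the additivity property that gives SHAP its name.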

Notice that the term “model-agnostic” has two different meanings in the literature. The first one, used here, considers an explanation model-agnostic because no assumption is made about the inner workings of the black box (Guidotti et al 2019, Molnar 2019). The second meaning is that the explanation technique can be applied to a broad range of different models (Arrieta et al 2020, Das and Rad 2020). Under this distinction, saliency maps are not model-agnostic in the first sense (because they rely on the inner workings of the model through its gradients), but they are in the second (because saliency maps can be computed for all differentiable models).

Local versus global explanations

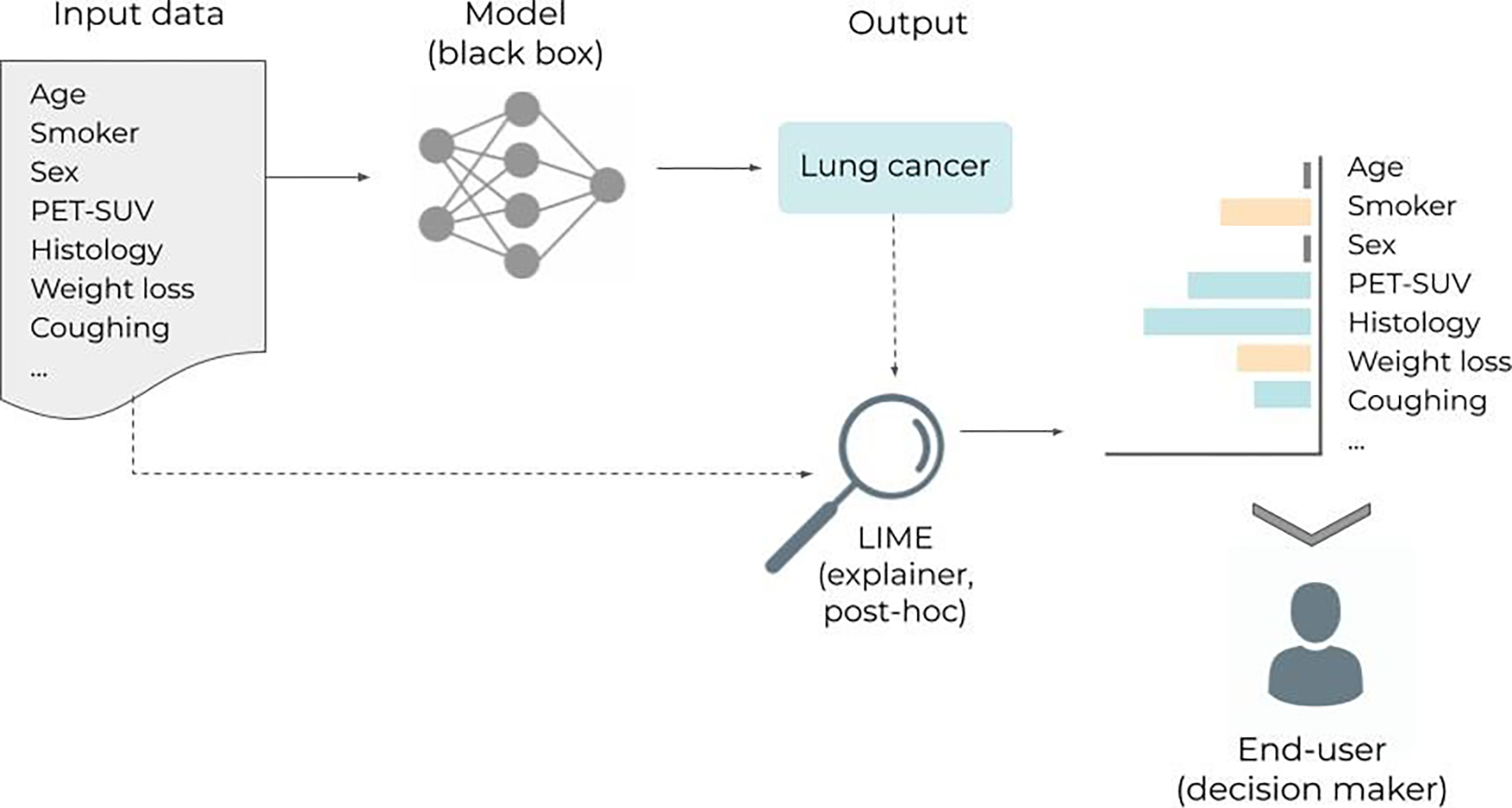

When a local explanation is required, the objective is to provide an explanation that is faithful to the behavior of the black box for a particular decision, and for decisions on very similar input data. Notice that the model-specific/agnostic and global/local categories are complementary. For instance, the flagship method among model-agnostic local explanation methods is Local Interpretable Model-agnostic Explanations (LIME) (Ribeiro et al 2016). The idea of LIME is to learn an interpretable model (e.g., a linear model) from instances obtained by perturbing the feature values of the instance whose decision needs to be explained (figure 5). Perturbing the target instance creates a neighborhood around it, and the black box is queried on this neighborhood. The interpretable model is then trained to reproduce the decisions of the black box for the instances in this neighborhood, hence the local aspect of the explanation. Many variants of LIME have been developed, for instance, making the perturbations in such a way that the neighborhood is realistic (e.g., randomly perturbing the pixels of a face image will not produce another face image; a smarter perturbation technique is needed for that (Ivanovs et al 2021)). Applications of LIME in the medical field remain rare, but an illustrative example is the study by (Palatnik de Sousa et al 2019), who generated explanations of how a CNN detects tumor tissue for lymph node metastasis in patches extracted from histology whole slide images. Another example is the study by (Jansen et al 2020), who also used LIME to interpret a model trained to predict 10-year overall survival of breast cancer patients.
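The LIME steps (perturb, query, weight by proximity, fit an interpretable model) are sketched below. This is a simplified continuous variant written for illustration, not the `lime` package: perturbations are Gaussian around the instance, proximity uses an exponential kernel, and the surrogate is a weighted least-squares linear model. The black box is a hypothetical logistic function in which the third feature plays no role, so its local coefficient should be close to zero.

```python
import numpy as np

def lime_explain(black_box, x, n_samples=2000, scale=0.5, kernel_width=1.0, seed=0):
    """Return local linear coefficients explaining black_box around instance x."""
    rng = np.random.default_rng(seed)
    Z = x + scale * rng.normal(size=(n_samples, x.size))  # 1. perturb the instance
    preds = np.array([black_box(z) for z in Z])           # 2. query the black box
    dist2 = np.sum((Z - x) ** 2, axis=1)
    weights = np.exp(-dist2 / kernel_width ** 2)          # 3. closer samples weigh more
    D = np.hstack([np.ones((n_samples, 1)), Z - x])       # intercept + centered features
    sw = np.sqrt(weights)
    coef, *_ = np.linalg.lstsq(D * sw[:, None], preds * sw, rcond=None)  # 4. weighted LS
    return coef[1:]

def black_box(z):
    """Hypothetical opaque model: a probability-like output that ignores z[2]."""
    return 1.0 / (1.0 + np.exp(-(3.0 * z[0] - 2.0 * z[1])))

x = np.array([0.2, -0.1, 1.0])
print(np.round(lime_explain(black_box, x), 3))
```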

Figure 5.

Inspired by (Ribeiro et al 2016). Workflow illustrating the use of LIME (Local Interpretable Model-agnostic Explanations). The idea of LIME is to learn an interpretable model (e.g., a linear model) to explain individual predictions. In the example, a black-box model receives a set of variables for a new patient (e.g., age, smoker) and classifies the patient as having lung cancer. The LIME model then provides the user with information (i.e., explanations) about the features that contributed most to the prediction. “Age” and “Sex” did not contribute at all, “Smoker” and “Weight-loss” counted against the positive classification, while “PET-SUV”, “Histology”, and “Coughing” contributed to the positive lung cancer classification.

Regarding model-specific local explanations, attention mechanisms are a good example. Attention-based neural networks contain one or several layers designed to focus on the relevant elements of the input for a particular prediction (Bahdanau et al 2014, Vaswani et al 2017). Attention layers were first developed primarily to increase the performance of models, and were afterwards used as a way for the model to explain itself. One particular advantage of attention for explanation is that the explanation is learned during the training phase of the model. This means that no post-hoc explanation technique (i.e., applied after the model is trained), such as LIME, is needed to explain the model in a post-processing phase. Medical applications of attention mechanisms include the classification of breast cancer histopathology images (Yang et al 2020a) and the segmentation of cardiac substructures on MRI (Sun et al 2020), among others (Chen et al 2020a, Bamba et al 2020, Zhang et al 2017). Notice, however, that the use of attention as an explanation is still debated (Jain and Wallace 2019, Wiegreffe and Pinter 2019).
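The attention weights that are read as an explanation are normalized scores computed inside the layer. The sketch below shows scaled dot-product attention, the form used by Vaswani et al (2017) (Bahdanau et al (2014) used an additive scoring function instead); the keys, values, and query are toy values chosen so that the query matches the third input element.

```python
import numpy as np

def attention(Q, K, V):
    """Scaled dot-product attention; returns the output and the attention weights."""
    scores = Q @ K.T / np.sqrt(K.shape[1])
    scores = scores - scores.max(axis=1, keepdims=True)     # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=1, keepdims=True)  # each row sums to 1
    return weights @ V, weights

K = np.eye(4)                     # keys of 4 input elements (e.g., image patches)
V = np.arange(8.0).reshape(4, 2)  # their value vectors
Q = 3.0 * K[[2]]                  # one query resembling element 2

out, w = attention(Q, K, V)
print(np.round(w, 3))  # the largest weight falls on input element 2
```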

In the case of a global explanation, such as agnostic (Fisher et al 2019, Gevrey et al 2003) or specific (Breiman 2001) global feature importance, the behavior of the whole black box is approximated. For instance, a neural network can be co-learned with a decision tree to (i) produce a better decision tree thanks to the neural network and (ii) obtain an interpretable representation of the neural network via the decision tree (Nanfack et al n.d.). Another example is the neural decision tree technique proposed in (Yang et al 2018), where any setting of the weights corresponds to a specific decision tree. Notice that a global explanation can also be obtained by combining several local explanations computed on sufficiently different input instances (Setzu et al 2020). However, combining many interpretable models can make the whole combination uninterpretable (e.g., the combination of decision trees in a random forest), which does not solve the problem of explaining the black box.
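A common model-agnostic way to obtain a global explanation, related to but simpler than the co-learning approaches cited above, is to fit an interpretable surrogate to the black box's own predictions over the data and to report how often the two agree (the surrogate's fidelity). The sketch below uses a one-split decision tree as surrogate and a hypothetical nonlinear function as the black box; both choices are illustrative assumptions.

```python
import numpy as np

def black_box(X):
    """Hypothetical opaque classifier: mostly driven by feature 0, slightly by feature 1."""
    return (np.tanh(4.0 * X[:, 0]) + 0.3 * np.sin(6.0 * X[:, 1]) > 0).astype(int)

def fit_stump(X, y):
    """One-split tree (feature, threshold, left label, right label) maximizing agreement."""
    best_acc, best = -1.0, None
    for j in range(X.shape[1]):
        for t in np.quantile(X[:, j], np.linspace(0.05, 0.95, 19)):
            for left, right in ((0, 1), (1, 0)):
                acc = np.mean(np.where(X[:, j] <= t, left, right) == y)
                if acc > best_acc:
                    best_acc, best = acc, (j, t, left, right)
    return best, best_acc

rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=(1000, 2))
y_bb = black_box(X)  # the surrogate learns the black box's outputs, not true labels
stump, fidelity = fit_stump(X, y_bb)
print(f"surrogate: split on feature {stump[0]} at {stump[1]:.2f}, fidelity = {fidelity:.2f}")
```

A high fidelity only says the surrogate mimics the black box on this data; it says nothing about whether either model is correct.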

New trends and limitations

Today, many conferences, workshops, and journal special issues focus on interpretability and explainability, leading to an ever-growing literature on the subject. In particular, one hot topic, besides post-hoc methods like LIME, is disentangled neural networks (Chen et al 2020b, Luo et al 2019). The idea behind neural network disentanglement is to combine the performance of neural networks with the need for interpretability and explanations. In disentangled neural networks, while the network is optimized to solve the problem, its neurons and filters are also constrained to correspond to concepts that are easily identifiable by humans. Once the network is trained, the activations of the neurons for a given prediction provide important clues about the concepts used to make the decision. Medical applications of disentangled neural networks are still rare, since the field is rather new, but a good example is the work of (Chartsias et al 2019), who explored a factorisation that decomposes the input into spatial anatomical factors and imaging factors. Their model was applied to the analysis of cardiovascular MR and CT images. Another example is the study by (Meng et al 2021), who applied disentangled representations to fetal ultrasound images.

Another hot topic stems from the aforementioned debate on whether attention can serve as an explanation (Jain and Wallace 2019, Wiegreffe and Pinter 2019). While the debate converges towards the idea that attention may not be an explanation, solutions have been developed to address the issue. In particular, effective attention has been proposed as the part of attention that can be considered an explanation (Brunner et al 2019). The idea is therefore to decompose the attention weights into two parts and to use the effective attention part to explain the model.
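The decomposition can be made concrete. In a layer whose output is `A @ T` (attention weights A times value vectors T), any component of a row of A lying in the left null space of T cannot change the output; such a component exists whenever there are more input tokens than value dimensions. Following the idea of effective attention in Brunner et al (2019), the sketch below removes that component by projecting the rows of A onto the column space of T; the matrices are random placeholders. Note that the resulting weights need not be non-negative or sum to one.

```python
import numpy as np

def effective_attention(A, T):
    """Project each row of A onto the column space of T, discarding the part of A
    in the left null space of T (which has no effect on the output A @ T)."""
    P = T @ np.linalg.pinv(T)  # orthogonal projector onto the column space of T
    return A @ P

rng = np.random.default_rng(0)
n_tokens, d_value = 8, 3       # more tokens than value dimensions
scores = rng.normal(size=(n_tokens, n_tokens))
A = np.exp(scores) / np.exp(scores).sum(axis=1, keepdims=True)  # row-stochastic attention
T = rng.normal(size=(n_tokens, d_value))                          # value vectors

A_eff = effective_attention(A, T)
print("same output:", np.allclose(A_eff @ T, A @ T))
print("discarded part (Frobenius norm):", round(float(np.linalg.norm(A - A_eff)), 3))
```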

In general, an important point for discussion is the accuracy of the explanations. For the cases where the approximation of the black box by the explanation is correct, the explanation gives truthful information about how different variables interact to result in a prediction. However, for those cases where the approximation is not correct, algorithms designed to provide explanations about the original black-box model are not a faithful representation of the original model (Jacovi and Goldberg 2020). As such, they provide a false and possibly dangerous sense of confidence. Unfortunately, it is not possible to know beforehand whether the approximation made by the explanation is accurate.

Some authors are also critical of the kind of explanation under study. Most, if not all, explanation techniques assume that an explanation only needs to be faithful to the model (i.e., accurately reflect its reasoning) (Jacovi and Goldberg 2020). However, another important aspect of explanations is their plausibility (i.e., how convincing they are to humans) (Riedl 2019). Indeed, one could accept losing a reasonable amount of faithfulness to make the explanation plausible and, thus, useful for the user.

Finally, beyond its degree of faithfulness and plausibility, an explanation may fail to meet legal requirements (Bibal et al 2021). Indeed, the strength and the type of explanation can also be constrained by law. For instance, a feature importance method can have a reasonable level of faithfulness and plausibility, yet still fail as an explanation with respect to the law.

3.2. Data-model dependency

As a consequence of the intrinsically data-driven nature of ML algorithms, many of the risks associated with their use are related to the data itself and to how it is processed inside the model (see Section 2). Thus, in addition to understanding the behavior of ML models (Section 3.1), acting on the data and analyzing how model performance depends on it is key to a safe and efficient clinical implementation. In the following, we present several lines of action that can help identify and reduce the risk of failure of ML models in the medical context, and ensure their efficient implementation and use.

3.2.2. Data curation and data augmentation

The most straightforward techniques to ensure sufficient quality and quantity of data before training the ML model are data curation and data augmentation. First, data curation can help detect errors in the labels or identify missing and incomplete records, among other issues. Second, data augmentation can increase the variability in the training set, thus helping to better represent the patient population under study (see Section 2).

Although most of the data curation process is currently done with very simple methods (e.g., scripts for data visualization, dictionaries for correct labeling (Schuler et al 2019, Mayo et al 2016), etc.), some groups have recently started to explore ML models specifically for data curation and label cleaning. For instance, Yang et al (2020b) used a 3D Non-local Network with Voting (3DNNV) to standardize anatomical nomenclature in radiotherapy treatments. Another interesting approach is the “label cleaning network” or CleanNet, introduced by Lee et al (2018), although it has only been applied to natural images. Dakka et al (2021) presented yet another approach: they trained multiple ML architectures on the data to be cleansed, with several cross-validation sets. The trained models are then applied back to the same (uncleansed) training dataset to infer the labels, and samples that cannot be consistently classified correctly are considered poor-quality data. They called the method “untrainable data cleansing” and demonstrated its performance on several medical classification problems. Other groups have concentrated their efforts on developing crowd-powered algorithms for large-scale medical image annotation (Heim et al 2018). In addition to data cleaning, pre-processing methods can be used to increase the consistency of the data. For medical images, for instance, it is important to pay attention to the voxel size, the image size, the range of voxel values, the registration between multimodal images, etc. Typical pre-processing techniques are image resampling, cropping and (histogram) normalization. For a comprehensive review of data curation tools and open-access platforms, we refer the reader to Diaz et al (2021) and Willemink et al (2020).
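The “untrainable data cleansing” idea can be sketched in a few lines. The version below is a simplified illustration under our own assumptions, not the implementation of Dakka et al (2021): two toy classifiers (nearest centroid and 3-nearest neighbours, standing in for the multiple ML architectures) are trained on each cross-validation split, applied back to the whole uncleansed dataset, and samples misclassified in more than half of the trials are flagged. All function names and the `threshold` parameter are hypothetical; labels are assumed to be non-negative integers.

```python
import numpy as np

def nearest_centroid_predict(X_train, y_train, X_test):
    classes = np.unique(y_train)
    centroids = np.stack([X_train[y_train == c].mean(axis=0) for c in classes])
    d = ((X_test[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=-1)
    return classes[d.argmin(axis=1)]

def knn_predict(X_train, y_train, X_test, k=3):
    d = ((X_test[:, None, :] - X_train[None, :, :]) ** 2).sum(axis=-1)
    neighbours = np.argsort(d, axis=1)[:, :k]
    # majority vote among the k nearest labels (integer labels assumed)
    return np.array([np.bincount(v).argmax() for v in y_train[neighbours]])

def flag_untrainable(X, y, n_folds=5, n_repeats=3, threshold=0.5, seed=0):
    """Return indices of samples that the models consistently fail to classify."""
    rng = np.random.default_rng(seed)
    models = [nearest_centroid_predict, knn_predict]
    n = len(y)
    errors = np.zeros(n)
    trials = 0
    for _ in range(n_repeats):
        for held_out in np.array_split(rng.permutation(n), n_folds):
            train = np.ones(n, dtype=bool)
            train[held_out] = False
            for model in models:
                pred = model(X[train], y[train], X)  # apply back to the full dataset
                errors += pred != y
                trials += 1
    return np.flatnonzero(errors / trials > threshold)
```

Flagged samples are candidates for manual review rather than automatic deletion, since rare but correct cases can also be hard to learn.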

Data augmentation works particularly well with images as input data. Two types of image data augmentation techniques exist: basic image manipulations and deep learning approaches (Shorten and Khoshgoftaar 2019). Basic image manipulations consist of geometric transformations, such as flipping, translation, random cropping and rotation, and photometric transformations, such as the addition of noise, image mixing and random erasing. Beyond these basic approaches, adversarial training (Moosavi-Dezfooli et al 2015, Bowles et al 2018) and neural style transfer (Gatys et al 2015, Jackson et al 2018) are ML-based strategies that can be used for data augmentation. These techniques use neural networks to transform the original data. In the case of adversarial training based on generative adversarial networks, two networks compete against each other: the first network (generator) generates synthetic images (the augmented data), while the second network (discriminator) tries to distinguish between real and synthetic images. The transformations finally retained to generate the augmented data are thus those that fool the discriminator, yielding synthetic images that look realistic and share the characteristics of the original set. In neural style transfer, the transformations are predefined (e.g., night to day) and a single network is used to convert the original data into the new style (Gawlikowski et al 2021, Ma et al 2019). For a complete review of data augmentation techniques, we refer to the survey by Shorten and Khoshgoftaar (2019). Data augmentation is nowadays used in most medical imaging applications to increase the number of training samples and improve generalization (Nalepa et al 2019, Chlap et al 2021). For instance, Meyer et al (2021) used a data augmentation approach based on Gaussian Mixture Models to increase the variability of a dataset of MR images in terms of intensity and contrast, which improved the generalization of ML models trained to segment MR images from different scanners. In a similar study, Yan et al (2020) used generative adversarial networks (GANs) to generate synthetic data and overcome generalization issues across MR manufacturers. Another example is the study by Zhang et al (2020c), who applied a series of stacked transformations to each image when training the ML model. The idea was to simulate the expected domain shift for a specific medical imaging modality with extensive data augmentation on the source domain, thus improving generalization to the shifted domains. They applied their model to segment different organs in MR and ultrasound images, with promising results.
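As an illustration of the basic image manipulations listed above, the sketch below chains a random flip, a rotation by a multiple of 90 degrees, additive Gaussian noise and random erasing on a square 2D image. It is a minimal example under our own assumptions (noise level, patch size and mean-value filling are hypothetical choices), not a pipeline from the cited studies; real medical pipelines typically also use arbitrary-angle rotations, scaling and elastic deformations.

```python
import numpy as np

def augment(image, rng):
    """Return a randomly augmented copy of a square 2D image (input is not modified)."""
    out = image.copy()
    if rng.random() < 0.5:
        out = np.flip(out, axis=1)                    # geometric: horizontal flip
    out = np.rot90(out, k=int(rng.integers(0, 4)))    # geometric: rotation by k * 90 degrees
    sigma = 0.05 * out.std() + 1e-8
    out = out + rng.normal(0.0, sigma, size=out.shape)  # photometric: additive noise
    h, w = out.shape
    ph, pw = max(1, h // 4), max(1, w // 4)
    y0 = int(rng.integers(0, h - ph + 1))
    x0 = int(rng.integers(0, w - pw + 1))
    out[y0:y0 + ph, x0:x0 + pw] = out.mean()          # random erasing of a square patch
    return out
```

Calling `augment(image, np.random.default_rng(seed))` with a different seed at each epoch yields a new variant of every training image.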

Although data augmentation is typically used to enlarge the training dataset, the same techniques can also be applied during the testing phase to inspect the robustness and generalization of the ML model over a more varied data distribution. This is known as test-time data augmentation (Nalepa et al 2019, Wang et al 2019b, Moshkov et al 2020). For instance, Wang et al (2019b) investigated how test-time augmentation can improve the performance of an ML model for brain tumor segmentation. They augmented the images by 3D rotation, flipping, scaling, and the addition of random noise. With test-time augmentation, their results appeared more spatially consistent. Recently, D’Amour et al (2020) proposed a well-controlled framework, called “stress testing”, to analyze the generalization capacity of ML models. The idea is to apply customized tests designed to reproduce the challenges that the model will encounter when deployed in the actual (clinical) world. In particular, two of the proposed tests (i.e., shifted performance and contrastive evaluation) evaluate the model on instances from a shifted domain. This can easily be done with test-time data augmentation, by changing the resolution, contrast, or noise level of the images. Although the concept of stress testing is rather new, the medical community is being encouraged to apply it before clinically implementing ML models (Eche et al 2021). For instance, Young et al (2021) applied stress testing to ML models trained to diagnose skin lesions and found inconsistent predictions on images captured repeatedly in the same setting or subjected to simple transformations (e.g., rotation).
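A toy version of the shifted-performance idea is sketched below: the same test image is degraded by increasing noise levels and contrast reductions, and the segmentation quality (Dice coefficient) is tracked for each shift. The thresholding "model", the shift parameters and the function names are hypothetical placeholders chosen for illustration; they are not part of the framework of D’Amour et al (2020).

```python
import numpy as np

def dice(pred, ref):
    """Dice similarity coefficient between two binary masks."""
    pred, ref = pred.astype(bool), ref.astype(bool)
    denom = pred.sum() + ref.sum()
    return 1.0 if denom == 0 else 2.0 * np.logical_and(pred, ref).sum() / denom

def threshold_model(image):
    """Hypothetical stand-in for a trained segmentation model."""
    return image > 0.5

def stress_test(model, image, reference, noise_levels=(0.0, 0.1, 0.3),
                contrast_factors=(1.0, 0.6, 0.2), seed=0):
    """Dice of the model output on shifted copies of one test image."""
    rng = np.random.default_rng(seed)
    results = {}
    for sigma in noise_levels:            # simulated noise-level shift
        noisy = image + rng.normal(0.0, sigma, size=image.shape)
        results[("noise", sigma)] = dice(model(noisy), reference)
    for c in contrast_factors:            # simulated contrast shift around the mean intensity
        shifted = (image - image.mean()) * c + image.mean()
        results[("contrast", c)] = dice(model(shifted), reference)
    return results
```

A sharp drop in Dice for a modest shift, as happens here for strong contrast reduction with a fixed threshold, is the kind of fragility that stress testing is meant to reveal before clinical deployment.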

In addition, test-time data augmentation can be used to quantify the uncertainty of the predictions made by the ML model (see Section 3.2.3), as discussed in several works (Gawlikowski et al 2021, Wang et al 2019a, Ayhan and Berens 2018). For instance, in the example above, Wang et al (2019b) used test-time data augmentation to generate uncertainty maps for the segmented brain volumes.
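A minimal sketch of test-time augmentation for uncertainty estimation is given below, assuming a model that outputs voxel-wise probabilities for a square 2D image. Each augmented copy is passed through the model, the prediction is mapped back to the original geometry with the inverse transform, and the mean and standard deviation across copies give the final prediction and an uncertainty map. The transform list (flips and a 90-degree rotation, chosen because they are exactly invertible) and the function names are our own assumptions.

```python
import numpy as np

# (forward transform, inverse transform) pairs for a square 2D image
TRANSFORMS = [
    (lambda x: x, lambda y: y),                                    # identity
    (lambda x: np.flip(x, axis=0), lambda y: np.flip(y, axis=0)),  # vertical flip
    (lambda x: np.flip(x, axis=1), lambda y: np.flip(y, axis=1)),  # horizontal flip
    (lambda x: np.rot90(x, 1), lambda y: np.rot90(y, -1)),         # 90-degree rotation
]

def tta_predict(model, image, transforms=TRANSFORMS):
    """Return (mean probability map, standard deviation map) over augmented copies."""
    preds = np.stack([inverse(model(forward(image))) for forward, inverse in transforms])
    return preds.mean(axis=0), preds.std(axis=0)
```

Voxels where the augmented predictions disagree receive a high standard deviation; a model that is perfectly equivariant to these transforms yields a zero uncertainty map.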

3.2.3. Prior and domain-specific knowledge

The learning capability of ML models critically depends on the information conveyed by the data used to train them. Beyond this obvious statement, discussed in Section 2.1, we can also provide our ML models with additional relevant information, or use it to guide them, so as to improve learning efficiency. Incorporating prior and domain-specific knowledge into ML models can help achieve this goal and yield more robust models. There are several ways to incorporate this knowledge into an ML model (Xie et al 2021, Deng et al 2020, Muralidhar et al 2018a, Dash et al 2022); here we present three common approaches: input data, loss function and hand-crafted features.

Input data

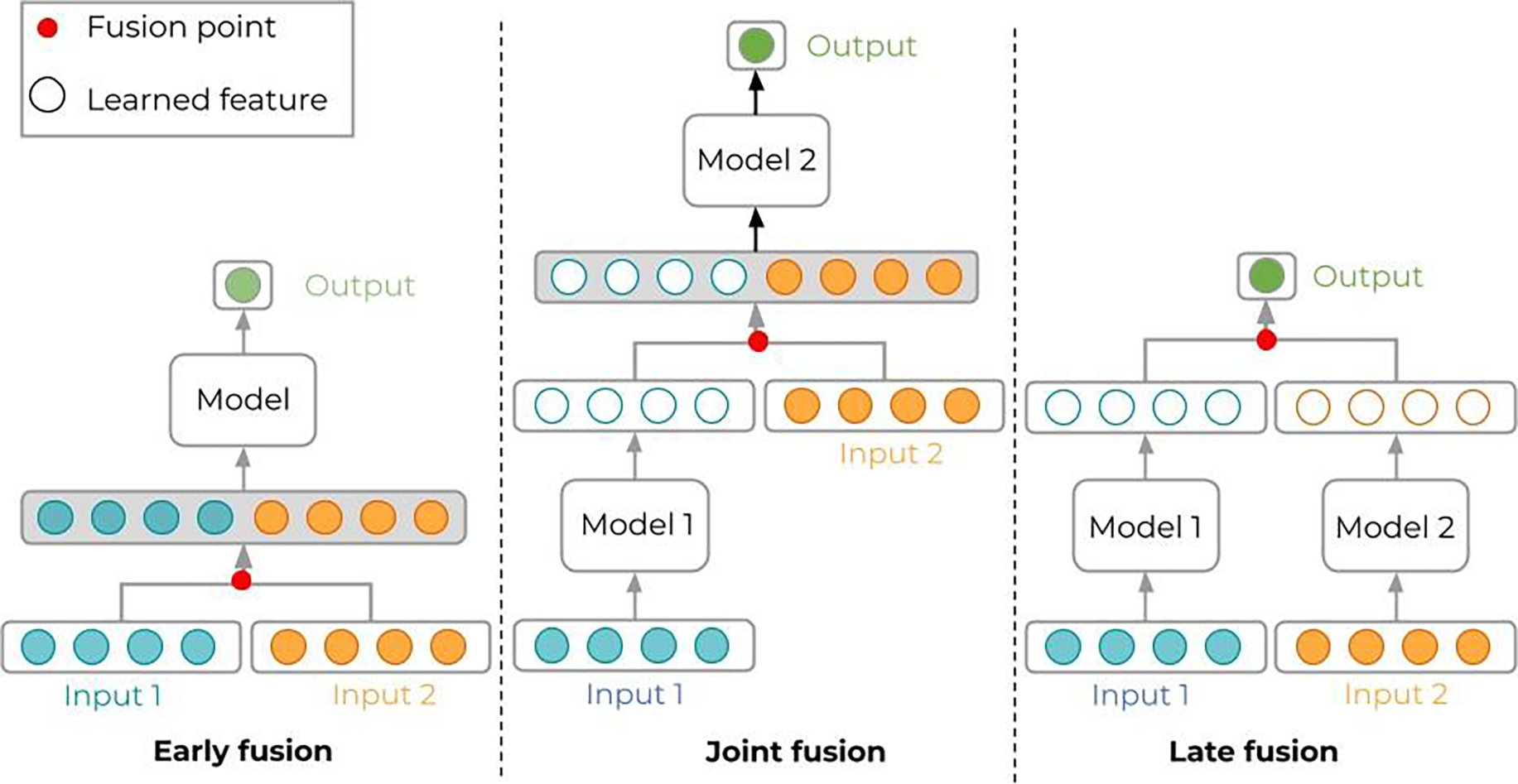

Sometimes, we attempt to train the model with incomplete information. For instance, medical images typically come with more information than what they depict. Certain anatomical features might result from specific diseases or medical procedures (e.g., surgical removal of the tumor) while leaving only subtle visual cues. Similarly, a given radiotherapy dose distribution results from physician and patient choices regarding side effects, treatment protocols, and so on, and direct access to this information as a side channel would ease learning. Training the ML model on the bare images, without this prior and domain-specific information, can therefore result in poor performance. A common strategy to include prior and/or domain knowledge is to modify the input itself. This includes changing the size and/or the format of the input: adding more input channels to CNN models, mixing images and text data as input, etc. When adding more input channels of the same data type (e.g., stacking extra images such as MR or PET on top of CT), no significant changes to the model architecture are needed. However, when using heterogeneous data types (e.g., images, text, scalars, …), there are several options as to where to merge these sources along the data path of the network. We refer here to the early fusion, joint fusion and late fusion strategies (Figure 6). In early fusion, the different input modalities are joined, through concatenation and/or pooling among other strategies, before being fed into a single model. Joint (or intermediate) fusion consists of combining the features learned by the first layers of the network with the other input modalities before feeding this joint data into a final model. Finally, late fusion refers to combining the outputs of multiple models to make a decision (Huang et al. 2020).

Figure 6.

Multi-modal data can be processed in different ways in ML models, depending on how the various modalities are merged. Early fusion (left) is possible if the data types or modalities are not too different. A typical example is stacking multiple registered image modalities, like CT, MR and PET, which are then processed by convolutional layers. Joint fusion (middle) is typical of image data accompanied by simple indicators in vectors or text: convolutional layers (model 1) transform the images into feature vectors, which are then merged (concatenated) with the other indicators to form a longer feature vector processed by the final model. Late fusion (right) pushes joint fusion even further: the output is a simple combination of the outputs of separate models dedicated to each data type; to some extent, late fusion resembles ensemble learning.
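The three fusion strategies differ mainly in where the data streams are combined, which the sketch below reduces to array operations. It is a schematic illustration under our own assumptions: `image_features` is a hypothetical stand-in for the convolutional layers ("model 1"), the clinical indicators are arbitrary scalars, and late fusion is shown as a weighted average of two model outputs, which is only one possible combination rule.

```python
import numpy as np

def early_fusion(ct, mr):
    """Early fusion: stack co-registered images as input channels, shape (2, H, W)."""
    return np.stack([ct, mr], axis=0)

def image_features(image):
    """Hypothetical stand-in for convolutional layers: a few global statistics."""
    return np.array([image.mean(), image.std(), image.min(), image.max()])

def joint_fusion(image, clinical):
    """Joint fusion: concatenate image features with non-image indicators."""
    return np.concatenate([image_features(image), np.asarray(clinical, dtype=float)])

def late_fusion(p_image, p_clinical, weight=0.5):
    """Late fusion: weighted average of the outputs of two separate models."""
    return weight * p_image + (1.0 - weight) * p_clinical
```

In a real network, the array returned by `early_fusion` would feed a single CNN, the vector from `joint_fusion` would feed the final model, and `late_fusion` would combine the predictions of independently trained models.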

There are many examples of incorporating domain knowledge into the input data. For instance, a study of volumetric dose calculation using DL investigated the use of the 3D voxel-based distance from the source, the distance from the central beamline, the radiological depth, and the volume density as full volumetric inputs (Kontaxis et al 2020). Other photon and proton dose calculation studies provided a first-order estimate of the dose as an input to the model (Xing et al 2020, Wu et al 2020). Similarly, recent studies on dose prediction for radiotherapy have explored the use of auxiliary information (e.g., non-modulated beam doses) to improve the robustness of the ML model (Barragán-Montero et al 2019, Hu et al 2021b). Yet another study, on automatic three-dimensional segmentation of organs from CT images, improved the performance of the ML model by providing a two-dimensional contour of the considered organ as input (Trimpl et al 2021). Examples of mixing different data types include adding electronic health records and clinical data, such as text and laboratory results, to the image data (Zhen et al 2020, Shehata et al 2020, Huang et al 2020).
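As an example of such a physics-informed input, the sketch below computes a radiological (water-equivalent) depth map from a relative density volume and stacks it with the density as an extra input channel. It assumes a parallel beam entering at index 0 along one array axis, with no beam divergence; this simplification and the function names are ours, not the implementation of Kontaxis et al (2020).

```python
import numpy as np

def radiological_depth(density, voxel_size_cm, axis=0):
    """Water-equivalent depth (cm) at each voxel centre for a parallel beam along `axis`.

    density: relative (electron) density map, e.g. converted from CT numbers.
    The beam is assumed to enter at index 0 of `axis`, without divergence."""
    path = density * voxel_size_cm                  # water-equivalent thickness of each voxel
    return np.cumsum(path, axis=axis) - 0.5 * path  # cumulative depth to the voxel centre

def add_depth_channel(density, voxel_size_cm):
    """Stack density and radiological depth as two input channels for a CNN."""
    return np.stack([density, radiological_depth(density, voxel_size_cm)], axis=0)
```

A low-density region such as lung shortens the radiological depth of everything behind it, information a network would otherwise have to infer from the raw CT values.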

These studies show that, when given such domain knowledge-focused inputs, the models outperformed those trained on more basic input data alone.

Loss function

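In supervised learning, for some input $x_i$, the loss function $L(y_i, \hat{y}_i)$ measures the mismatch or error between the desired output $y_i$ and the actual output $\hat{y}_i = f_\theta(x_i)$ of the model with its current parametrization $\theta$. Optimal parameters are found by minimizing the loss over all training pairs $(x_i, y_i)$, typically through its sum or mean: $\theta^* = \arg\min_\theta \sum_i L(y_i, f_\theta(x_i))$.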
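As a minimal illustration of this definition, the sketch below uses a linear model $f_\theta(x) = \theta_0 + \theta_1 x$ with the mean squared error as loss and finds $\theta$ by plain gradient descent. The model, the loss, and the hyperparameters `lr` and `n_steps` are hypothetical choices made for the example; actual ML models have many more parameters and are usually trained with stochastic optimizers.

```python
import numpy as np

def mse_loss(y, y_hat):
    """Mean squared error between desired and actual outputs."""
    return np.mean((y - y_hat) ** 2)

def fit_linear(x, y, lr=0.1, n_steps=2000):
    """Minimize the MSE of f_theta(x) = theta[0] + theta[1] * x by gradient descent."""
    theta = np.zeros(2)
    for _ in range(n_steps):
        residual = theta[0] + theta[1] * x - y                 # y_hat - y for all samples
        grad = np.array([2.0 * residual.mean(),                # dL/dtheta_0
                         2.0 * (residual * x).mean()])         # dL/dtheta_1
        theta -= lr * grad
    return theta
```

For noise-free data generated as $y = 0.5 + 2x$, the recovered parameters approach $(0.5, 2)$ and the loss approaches zero.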