Abstract

The aim of the present study was to investigate whether affective videos can elicit ERPs related to emotional processing. Compared with neutral video clips, violent video clips elicited a delayed N1 component of similar amplitude. The most conspicuous finding was enhanced EPN and LPP components for violent relative to neutral video clips. These data indicate that affective videos can be used as stimuli to elicit ERPs and provide new evidence on the processing of affective stimuli, using real-life video clips with better ecological validity.

Keywords: Video, Affective processing, ERPs, EPN, LPP

Introduction

Successful communication usually requires processing emotional information conveyed through different sensory modalities, such as the auditory and visual modalities. It is therefore not surprising that interest in how the human brain processes emotional information is growing (e.g., LaBar and Cabeza 2006; Olofsson et al. 2008; Zinchenko et al. 2017). In particular, compared with other neuroimaging techniques such as functional magnetic resonance imaging (fMRI) and positron emission tomography (PET), event-related potentials (ERPs) can assess neural responses to emotional events with millisecond temporal resolution through the amplitude and latency of ERP components. Rapid processing of emotional stimuli is an important aspect of the emotional response, as it can promote the perception of potentially dangerous events via a fast pathway involving the thalamus and amygdala (e.g., LeDoux 2000; Olofsson et al. 2008). Scalp-recorded ERPs therefore provide a powerful tool for characterizing emotional processing in the human brain (e.g., Britton et al. 2006; Olofsson et al. 2008).

To date, most affective ERP studies have used stimuli from the International Affective Picture System (IAPS), which provides a standardized stimulus set for attention and emotion research (Lang et al. 1999). The IAPS images have been rated by young female and male adults on nine-point scales for valence (from unpleasant to pleasant) and arousal (from low to high). Because the images vary systematically in valence and arousal, the availability of this organized stimulus set has been a major driving force in affective ERP research.

Studies of the neural basis of emotional processing have traced the time course of emotional picture processing through several ERP components. At the early stage of visual perception, the N1 component is larger for unpleasant images than for pleasant and neutral images, which may indicate that unpleasant valence preferentially attracts attention early in the processing stream (Olofsson and Polich 2007; Olofsson et al. 2008). A second component is the early posterior negativity (EPN), an emotion-specific endogenous negative shift over occipito-temporal sites that is elicited by pleasant and unpleasant relative to neutral pictures; it starts around 150 ms after picture onset and peaks between 250 and 350 ms (Conroy and Polich 2007; Aldunate et al. 2018). The EPN has been considered a marker of the earliest stage of selective emotional perception, reflecting the amount of attentional resources allocated to emotionally salient stimuli (Schupp et al. 2004, 2006). The later stage of the affective ERP is dominated by the late positive potential (LPP), a long-lasting positivity with a centro-parietal scalp distribution that is thought to reflect the allocation of attention to emotional stimuli in the service of motivation (Olofsson et al. 2008; Sun et al. 2015).

Notably, previous studies of the temporal course of emotional processing have generally used static pictures, which have relatively low ecological validity and do not ideally represent real-life processes (Foley et al. 2012). Stimuli with higher ecological validity are needed to further reveal the time course of emotional processing. Real-life-like stimulation with videos or virtual-reality environments may be a powerful means of inducing specific cognitive or affective states and may help to investigate dysfunctions in psychiatric and neurological disorders more efficiently. Recently, Erdogdu et al. (2019) developed a method to obtain ERPs and event-related oscillations (EROs) during video watching, in which repeated luminance changes were introduced into short video segments and the ERPs/EROs were time-locked to these distortions. However, this method does not elicit ERPs/EROs related to the video content per se. In addition, one study compared electroencephalographic (EEG) power spectra and EEG sources between men and women during exposure to affective music videos and found gender differences in mean EEG power in all frequency bands that were related to the emotional stimuli and were more evident for negative emotions (Goshvarpour and Goshvarpour 2019). To date, however, no study has directly investigated the neurocognitive dynamics underlying the time course of emotional video processing.

Real-life emotional processing takes place in a dynamic, multi-domain environment, and the dynamic quality of information is important for emotion recognition (Fujimura et al. 2010). Moreover, with the growth of the Internet, short videos have become increasingly popular and an important part of daily life. In the present study, we therefore used dynamic videos to approximate real-life stimuli as closely as possible: videos have higher ecological validity than the static pictures used previously and elicit robust neural responses (e.g., Klasen et al. 2012; Donohue et al. 2013), which allows the complex question of how emotional visual information is processed to be addressed further. In particular, this study focused on ERPs elicited by violent video clips, which can strongly affect daily life, especially the health and development of teenagers.

To date, several studies have not revealed clear differences between the ERP correlates of negative and violent IAPS pictures (Bailey et al. 2012; Kunaharan and Walla 2015). In the IAPS database, violent pictures depict aggressive acts such as assaults with weapons and beatings, whereas nonviolent negative pictures depict drug abuse, disease, and mutilation. Although violent pictures were rated as more threatening than negative pictures, pleasantness ratings did not differ significantly between negative and violent pictures, whereas neutral pictures were rated as more pleasant than violent pictures (Bailey et al. 2012). ERP data showed that violent IAPS pictures, as one type of negative emotional stimulus, elicited a smaller N1 and larger EPN and LPP than neutral pictures, but these three components did not differ between violent and nonviolent negative IAPS pictures (Bailey et al. 2012; Kunaharan and Walla 2015). Taking these considerations into account, we selected these three ERP components, i.e., N1, EPN, and LPP, as indices in the present study. Based on their latencies, the time course of emotional video processing can be divided into three stages, an early stage (100–200 ms), a middle stage (200–350 ms), and a late stage (> 400 ms), indexed by the N1, EPN, and LPP, respectively.

Methods

Participants

Twenty-eight healthy participants (12 female; mean age 25.8 ± 3.9 years) took part in the present experiment. All were right-handed (Edinburgh Handedness Inventory score 89.5 ± 12.9) and had normal or corrected-to-normal vision and normal hearing. All participants signed a consent form and received payment for their participation. The experiment was conducted in accordance with the Declaration of Helsinki and was approved by the Ethics Committee of Liaocheng University, China.

Stimuli and procedure

The stimuli consisted of 30 violent video clips taken from violent movies and 30 everyday-life video clips taken from feature films (i.e., emotionally neutral video stimuli serving as the control condition). To avoid differences in neural responses between people-related and non-people-related content, all videos depicted people, none of whom were familiar to any participant. Each violent clip involved two people, e.g., one person attacking another; similarly, each neutral clip involved two people, e.g., two people communicating peacefully. Each clip lasted 3 s and was shown in its original color. To rule out multisensory processing, all videos were played without sound. Before the experiment, all clips were rated for valence and arousal on 7-point scales by 160 participants who did not take part in the EEG experiment. Mean valence was 1.8 ± 0.8 for violent and 3.4 ± 0.4 for neutral clips (p < 0.1), and mean arousal was 5.2 ± 0.8 and 3.8 ± 0.6, respectively (p < 0.05).

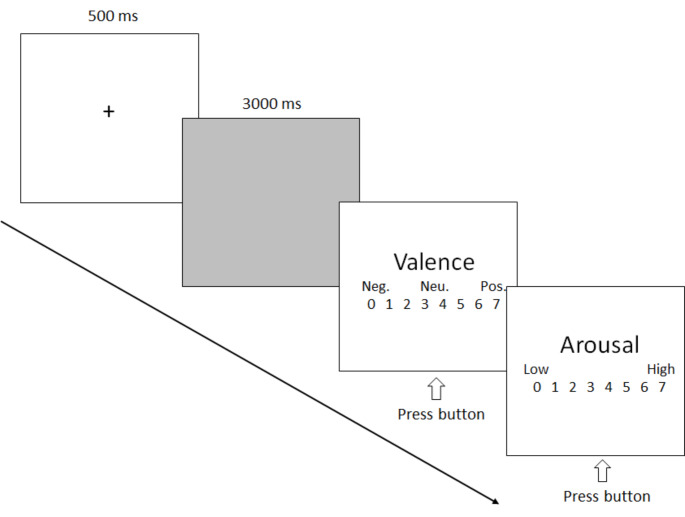

Participants were seated in a dimly lit, sound-attenuated booth about 1 m from a computer screen. They were told that a series of video clips would be presented and that they should attend to each clip for the entire time it was on the screen. As shown in Fig. 1, each trial started with a fixation cross on a blank screen for 500 ms. A video clip was then presented and played continuously for 3 s without shot changes. After the video disappeared, participants rated its arousal and valence on 7-point scales. A variable interval of 10–15 s separated successive trials. Each video was presented twice, yielding 120 trials in total (60 violent and 60 neutral). Trials were presented in random order in four blocks of 30 trials, with a 1-min break between blocks. Before the formal experiment, participants completed a short practice session with video clips that did not appear in the formal experiment.

Fig. 1.

A schematic illustration of the experimental procedure. Neg. = negative; Neu. = neutral; Pos. = positive
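The trial structure described above (60 clips, each shown twice, in four randomized blocks of 30 trials with a 10–15 s inter-trial interval) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' presentation script: we assume the full 120-trial list was shuffled once across the session and that the inter-trial interval was drawn uniformly from 10–15 s. The function name `build_trial_list` is hypothetical.

```python
import random


def build_trial_list(n_violent=30, n_neutral=30, repetitions=2,
                     n_blocks=4, iti_range=(10.0, 15.0), seed=None):
    """Hypothetical trial scheduler for the violent/neutral video design.

    Returns a list of blocks; each block is a list of
    (condition, clip_index, iti_seconds) tuples.
    Assumptions: one shuffle across the whole session and a uniformly
    distributed inter-trial interval (the text only gives 10-15 s).
    """
    rng = random.Random(seed)
    clips = ([("violent", i) for i in range(n_violent)] +
             [("neutral", i) for i in range(n_neutral)])
    trials = clips * repetitions            # 60 clips x 2 = 120 trials
    rng.shuffle(trials)                     # random order across the session
    schedule = [(cond, clip, rng.uniform(*iti_range)) for cond, clip in trials]

    block_size, remainder = divmod(len(schedule), n_blocks)
    if remainder:
        raise ValueError("trials do not split evenly into blocks")
    return [schedule[b * block_size:(b + 1) * block_size]
            for b in range(n_blocks)]


blocks = build_trial_list(seed=1)
print([len(block) for block in blocks])  # [30, 30, 30, 30]
```

Shuffling within each block instead would be an equally plausible reading of "presented randomly"; the text does not say which scheme was used.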

EEG recording

Electroencephalogram (EEG) signals were continuously recorded with a Neurolab® digital amplifier system and a cap of Ag/AgCl electrodes at 64 sites arranged according to the extended international 10–20 system (Synapse Co. Ltd, Suzhou, China, http://www.neurolab.com.cn). The reference electrode was placed on the tip of the nose, and the EEG data were re-referenced offline to the average of the two mastoids for analysis. To record the vertical and horizontal electro-oculogram (EOG), two electrodes were placed above and below the right eye, and two electrodes at the left and right outer canthi. Electrode impedance was kept below 5 kΩ. EEG and EOG signals were amplified with a band-pass of 0.01–100 Hz and sampled at 1000 Hz.
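The offline re-referencing step can be illustrated with a short sketch. Because every channel was recorded against the nose, subtracting the mean of the two mastoid channels from each channel yields data referenced to the averaged mastoids, while the bipolar EOG derivations are reference-free differences. The channel labels `M1`, `M2`, `VEOU`, `VEOL`, `HEOL`, and `HEOR` are hypothetical, since the text does not name the mastoid or EOG channels, and the functions are illustrative rather than the EMSE or Matlab routines used in the study.

```python
def rereference_to_mastoids(data, left="M1", right="M2"):
    """Re-reference nose-referenced EEG to the average of two mastoids.

    `data` maps channel label -> list of samples (µV), all recorded
    against the nose. Channel labels are hypothetical.
    """
    m_left, m_right = data[left], data[right]
    new_ref = [(a + b) / 2.0 for a, b in zip(m_left, m_right)]
    # x_c - (M1 + M2)/2 cancels the nose potential and substitutes the mastoid mean
    return {ch: [x - r for x, r in zip(samples, new_ref)]
            for ch, samples in data.items()}


def bipolar_eog(data, above="VEOU", below="VEOL", left="HEOL", right="HEOR"):
    """Vertical and horizontal EOG as bipolar differences (reference-free)."""
    veog = [a - b for a, b in zip(data[above], data[below])]
    heog = [lft - rgt for lft, rgt in zip(data[left], data[right])]
    return veog, heog


eeg = {"Pz": [10.0, 12.0], "M1": [2.0, 4.0], "M2": [4.0, 0.0]}
print(rereference_to_mastoids(eeg)["Pz"])  # [7.0, 10.0]
```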

Data analysis

Data were analyzed using EMSE software (http://www.cortecsolusions.com) and in-house Matlab routines. Raw EEG data were low-pass filtered at 30 Hz (24 dB) to remove high-frequency noise. Eye movements (vertical and horizontal) were corrected using an independent component analysis (ICA) procedure, and, to eliminate DC or slow-wave artifacts, EEG signals exceeding ± 50 µV in amplitude were rejected. Remaining artifacts exceeding ± 100 µV in amplitude or containing a change of more than 100 µV within 50 ms were rejected. The EEG was then segmented into epochs from 500 ms before to 3000 ms after stimulus onset for all conditions. Individual epochs were subsequently inspected visually, and epochs containing artifacts were discarded manually. Approximately 15% of epochs were excluded from further analysis. Artifact-free epochs (at least 45 each for violent and neutral videos) were averaged separately for each participant and each video type.
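The epoching and amplitude criteria above can be sketched as follows, assuming the 1000 Hz sampling rate from the recording section (one sample per millisecond). We interpret "a change of more than 100 µV within 50 ms" as a peak-to-peak difference greater than 100 µV inside any 50-ms sliding window; this interpretation, the function names, and the rule that an epoch is dropped if any channel fails are our assumptions. The ICA correction and visual inspection steps are not reproduced.

```python
FS = 1000  # sampling rate in Hz, as reported (1 sample = 1 ms)


def segment(channels, onsets, pre_ms=500, post_ms=3000, fs=FS):
    """Cut epochs from -pre_ms to +post_ms around each onset (sample index).

    `channels` is a list of per-channel sample lists. Events whose epoch would
    run past either end of the recording are skipped.
    """
    pre, post = pre_ms * fs // 1000, post_ms * fs // 1000
    n_samples = len(channels[0])
    epochs = []
    for onset in onsets:
        if onset - pre < 0 or onset + post > n_samples:
            continue
        epochs.append([ch[onset - pre:onset + post] for ch in channels])
    return epochs


def is_artifact(trace, abs_limit=100.0, step_limit=100.0, window_ms=50, fs=FS):
    """Flag a single-channel trace that exceeds ±abs_limit µV, or whose
    peak-to-peak range inside any window_ms window exceeds step_limit µV."""
    if any(abs(v) > abs_limit for v in trace):
        return True
    w = window_ms * fs // 1000
    # Naive O(n * w) sliding window; adequate for a sketch.
    for start in range(len(trace) - w + 1):
        window = trace[start:start + w]
        if max(window) - min(window) > step_limit:
            return True
    return False


def reject(epochs):
    """Keep only epochs in which no channel is flagged."""
    return [ep for ep in epochs if not any(is_artifact(ch) for ch in ep)]
```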

Based on the current goal and previous studies (Cuthbert et al. 2000; Olofsson et al. 2008; Zinchenko et al. 2017), we evaluated the N1, EPN, and LPP components over two posterior regions of interest: LOT (left occipito-temporal sites; average of P3, P5, P7, PO5, PO7, and O1) and ROT (right occipito-temporal sites; average of P4, P6, P8, PO6, PO8, and O2). Based on the grand-averaged ERP waveforms, the peak amplitude and latency of the N1 were measured between 120 and 200 ms, and the mean amplitude of the EPN was measured between 200 and 300 ms. For the LPP, mean amplitudes were measured in successive 1-s windows between 1 and 3 s after stimulus onset. These measures were analyzed with two-way repeated-measures ANOVAs with Video type (violent, neutral) and Site (LOT, ROT) as within-subject factors.
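The component measures can be sketched for one participant and condition as follows. The sketch assumes averaged waveforms stored per channel as lists sampled at 1000 Hz with the epoch starting 500 ms before onset, and takes the N1 peak as the most negative point in its window. The paper does not state its peak-picking rule or whether a baseline correction was applied, so these choices and all function names are assumptions; the ROI channel lists are taken from the text.

```python
LOT = ["P3", "P5", "P7", "PO5", "PO7", "O1"]   # left occipito-temporal ROI
ROT = ["P4", "P6", "P8", "PO6", "PO8", "O2"]   # right occipito-temporal ROI
PRE_MS = 500                                   # epoch starts 500 ms before onset
# Assumes fs = 1000 Hz, so one sample corresponds to one millisecond.


def idx(t_ms):
    """Sample index of post-stimulus time t_ms within the epoch."""
    return t_ms + PRE_MS


def roi_average(erp, channels):
    """Average the waveforms of the listed channels sample by sample."""
    waves = [erp[ch] for ch in channels]
    return [sum(vals) / len(vals) for vals in zip(*waves)]


def n1_peak(wave, t0=120, t1=200):
    """Most negative point in [t0, t1] ms -> (amplitude in µV, latency in ms)."""
    seg = wave[idx(t0):idx(t1) + 1]
    i = min(range(len(seg)), key=seg.__getitem__)
    return seg[i], t0 + i


def mean_amplitude(wave, t0, t1):
    """Mean amplitude over [t0, t1) ms, e.g. the EPN window 200-300 ms."""
    seg = wave[idx(t0):idx(t1)]
    return sum(seg) / len(seg)


def lpp_per_second(wave):
    """LPP mean amplitude in the 1-2 s and 2-3 s windows."""
    return [mean_amplitude(wave, 1000, 2000), mean_amplitude(wave, 2000, 3000)]
```

Each measure would then be computed on `roi_average(erp, LOT)` and `roi_average(erp, ROT)` for every participant and video type before entering the ANOVA.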

Source analysis of EPN and LPP components

Computing images of electric neuronal activity from extracranial measurements can provide important information on the time course and localization of brain function. In the present study, we analyzed the cortical sources of the emotion-specific EPN and LPP components (difference waveforms between ERPs to violent and neutral videos) with the academic software sLORETA, which implements the standardized low-resolution brain electromagnetic tomography method. sLORETA was run with a standardized boundary element method volume conductor model, and electrode positions were expressed in Montreal Neurological Institute (MNI) stereotactic coordinates, which have been shown to be valid as a relative head-surface-based positioning system (Fuchs et al. 2002; Pascual-Marqui 2002). sLORETA has been shown to yield images of standardized current density with zero localization error (Wagner et al. 2004).
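For orientation, the sLORETA estimator described by Pascual-Marqui (2002) can be summarized as below. Here Φ is the vector of scalp potentials, K the lead-field matrix given by the volume conductor model, H the average-reference centering matrix, α ≥ 0 a regularization parameter, and ⁺ the Moore–Penrose pseudoinverse. This is a summary of the general method, not a record of the parameters used in the present analysis, which the text does not report.

```latex
% Regularized minimum-norm estimate of the current density
\hat{J} = T\,\Phi, \qquad
T = K^{\mathsf{T}} H \left[ H K K^{\mathsf{T}} H + \alpha H \right]^{+}

% Covariance of the estimate, used to standardize it
S_{\hat{J}} = T\, S_{\Phi}\, T^{\mathsf{T}}
            = K^{\mathsf{T}} H \left[ H K K^{\mathsf{T}} H + \alpha H \right]^{+} H K,
\qquad S_{\Phi} = H K K^{\mathsf{T}} H + \alpha H

% Standardized current density power at voxel l
% ([S_J]_{ll} is the 3 x 3 diagonal block for the three dipole components)
\mathrm{sLORETA}_l = \hat{J}_l^{\mathsf{T}} \left\{ \left[ S_{\hat{J}} \right]_{ll} \right\}^{-1} \hat{J}_l
```

The standardization by the diagonal blocks of the estimate's covariance is what underlies the zero-localization-error property for single point sources under noise-free conditions.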

Results

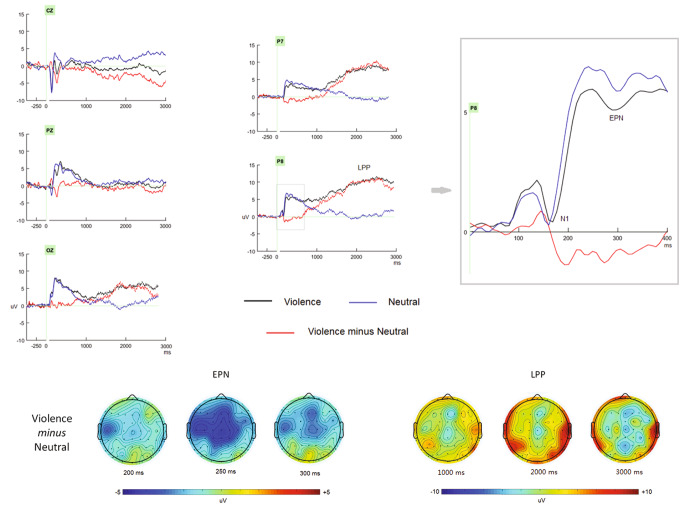

As shown in Fig. 2, the videos elicited clear N1, EPN, and LPP components, which were modulated by emotional valence: violent videos elicited enhanced EPN and LPP components.

Fig. 2.

Grand-averaged ERP waveforms and 2D scalp maps elicited by violent and neutral video clips

The ANOVA showed that although N1 amplitude did not differ between violent (0.6 µV) and neutral (0.7 µV; p > 0.1) videos, N1 peak latency was longer for violent (171 ms) than for neutral (160 ms; p < 0.02) videos.
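Because both factors in the Video type × Site design have two levels, each effect has one numerator degree of freedom, and its F(1, n - 1) equals the square of a one-sample t statistic computed on per-participant contrast scores. The sketch below illustrates this equivalence; it is not the software the authors used (which the text does not specify), the data layout and function names are hypothetical, and p-values are omitted because the Python standard library has no F distribution.

```python
import math
from statistics import mean, stdev


def one_df_F(contrast):
    """F(1, n-1) for a within-subject contrast: the squared one-sample t."""
    n = len(contrast)
    sd = stdev(contrast)
    if sd == 0:
        raise ValueError("contrast has zero variance; F is undefined")
    t = mean(contrast) / (sd / math.sqrt(n))
    return t * t, (1, n - 1)


def rm_anova_2x2(data):
    """`data`: one dict per participant keyed by (video, site), values in µV."""
    video, site, interaction = [], [], []
    for d in data:
        vl, vr = d[("violent", "LOT")], d[("violent", "ROT")]
        nl, nr = d[("neutral", "LOT")], d[("neutral", "ROT")]
        video.append((vl + vr) / 2 - (nl + nr) / 2)   # violent minus neutral
        site.append((vl + nl) / 2 - (vr + nr) / 2)    # LOT minus ROT
        interaction.append((vl - nl) - (vr - nr))     # difference of differences
    return {"Video type": one_df_F(video),
            "Site": one_df_F(site),
            "Video type x Site": one_df_F(interaction)}
```

With the 28 participants reported here, each effect would be tested on (1, 27) degrees of freedom.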

For the EPN, violent videos elicited a significantly more negative deflection in the measurement window (5.6 µV) than neutral videos (6.8 µV; p < 0.05). Notably, the scalp distribution of the EPN (Fig. 2) shows that it was most conspicuous at central electrode sites. To help interpret the violent vs. neutral comparison, we computed each participant's mean valence and arousal ratings for the violent and neutral conditions as predictors and tested whether the ERP differences between the violent and neutral conditions (dependent variables) were better explained by valence or by arousal. The correlation analysis revealed no significant correlation between EPN amplitudes and either valence or arousal ratings (p > 0.1).

For the LPP, mean amplitudes were significantly larger for violent than for neutral videos in each 1-s window (mean amplitudes between 1 and 3 s after stimulus onset: 8.9 µV for violent and 0.4 µV for neutral videos; p < 0.01). Valence ratings correlated negatively with LPP amplitudes (r = -0.45, p < 0.05), that is, the more unpleasant (violent) the rating, the larger the LPP, whereas no evident correlation was found between arousal ratings and the LPP (p > 0.05).
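The correlation analysis can be sketched with per-participant violent-minus-neutral difference scores; this is one reading of the text, which could also mean condition-wise ratings, and the function names are hypothetical. Converting r to t with n - 2 degrees of freedom gives a quick check of the reported LPP result: with 28 participants, r = -0.45 corresponds to t(26) ≈ -2.57, whose absolute value exceeds the two-tailed 0.05 critical value of about 2.056, consistent with the reported p < 0.05.

```python
import math
from statistics import mean


def difference_scores(violent, neutral):
    """Per-participant violent-minus-neutral differences."""
    return [v - n for v, n in zip(violent, neutral)]


def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def r_to_t(r, n):
    """t statistic with n - 2 degrees of freedom for testing r against zero."""
    return r * math.sqrt((n - 2) / (1 - r * r))


print(round(r_to_t(-0.45, 28), 2))  # -2.57
```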

Figure 3 shows the current source densities of the EPN and LPP components estimated with sLORETA. For the EPN, the source analysis revealed a generator in the left occipito-temporal area, with a maximum current density of 8.01/mm³ in Brodmann area 19 (cuneus, occipital lobe) at MNI coordinates x = -25, y = -90, z = 35 mm. For the LPP, the cortical generators were located in frontal-central and occipito-temporal areas, with a maximum current density of 102/mm³ in Brodmann area 6 (superior frontal gyrus, frontal lobe) at MNI coordinates x = 20, y = -5, z = 70 mm.

Fig. 3.

Current density maps from the source analysis of the EPN and LPP components related to violent video clips

Discussion

The aim of the present study was to investigate whether emotional videos can elicit ERPs related to emotional processing and, if so, to determine the nature and time course of these effects. To this end, three ERP components (N1, EPN, and LPP) were assessed. Compared with neutral video clips, violent video clips elicited a delayed N1 of similar amplitude. This finding differs from previous research showing an enhanced N1 for unpleasant pictures and may be related to stimulus type (videos in the present study vs. pictures in previous studies).

The most conspicuous finding was the enhanced EPN and LPP for violent relative to neutral videos. These findings are consistent with recent evidence on the ERP correlates of affective picture processing (Bailey et al. 2011; Olofsson et al. 2008). Converging evidence suggests that affective pictures elicit an endogenous negative shift over occipito-temporal sites, the EPN, which starts around 150 ms after picture onset, peaks around 300 ms, and increases with the arousal level of the emotional pictures (Conroy and Polich 2007). In particular, the EPN amplitude has been reported not to differ between neutral and nonviolent negative pictures but to be enhanced for violent relative to nonviolent negative pictures (Bailey et al. 2012). Note that in the present scalp distribution of amplitudes between 200 and 300 ms after stimulus onset, the EPN was most conspicuous at central electrode sites, resembling the frontal-central N2 component associated with early stimulus discrimination (decoding emotional information) and response selection (Di Russo et al. 2006). However, the present source analysis of the EPN revealed significant activation in a posterior region (the left temporo-occipital area). The EPN has been proposed to index natural selective attention, whereby the evaluation of image features is guided by perceptual qualities that select affectively arousing stimuli for further processing (Dolcos and Cabeza 2002; Schupp et al. 2004). The enhanced EPN for violent videos may therefore reflect greater attentional capture and allocation for violent than for neutral stimuli.

For the LPP, the present findings are consistent with most previous ERP studies using affective pictures, which report an enhanced centro-parietal positive slow deflection for affective relative to neutral pictures. The LPP to affective pictures is widely thought to be associated with the allocation of attention to affectively arousing stimuli and to reflect their late evaluation (Conroy and Polich 2007; Olofsson et al. 2008; Schupp et al. 2004; Bailey et al. 2012). To our knowledge, this is the first investigation of the LPP elicited by real-life video clips. Although each video lasted relatively long (3 s), a reliable LPP was obtained after eliminating direct-current drift and slow-potential artifacts, in line with a previous study that presented affective pictures for 6 s (e.g., Cuthbert et al. 2000). Interestingly, using a non-affective discrimination task, Carretie et al. (2006) localized the LPP to unpleasant arousing stimuli in the left precentral gyrus with LORETA (low-resolution electromagnetic tomography), which is partly in line with the present findings. Given the importance of the frontal and temporal lobes in attention, emotion, and memory, the frontal-central and occipito-temporal generators of the LPP suggest that top-down processes such as emotional evaluation or suppression interact with affective stimulus activation during the slow-wave ERP range, as do memory encoding processes (cf. Carretie et al. 2006; Olofsson et al. 2008).

In sum, the present study examined the feasibility of using videos as stimuli to elicit ERPs and found that emotional video clips can elicit clear emotion-related potentials, as affective pictures do. These data provide new evidence on the processing of affective stimuli, using real-life video clips with better ecological validity.

Acknowledgements

This work was supported by the Basic Research Key Program, Defence Advanced Research Projects of PLA (2019-JCQ-ZNM-02) and National Natural Science Foundation of China (61806210).

Contribution to this work

Chen, Li, and Fang performed the data analysis and drafted the manuscript; Sun designed the study and drafted the manuscript; Zhao revised the manuscript.

Data Availability Statement

Data archiving is not mandated but data will be made available on reasonable request.

Disclosure

All authors declare no competing interests and have nothing to disclose.

Footnotes

Siyu Chen and Xinhong Li contributed equally to this work.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Gang Sun, Email: gsun960@gmail.com.

Lun Zhao, Email: lunzhao6@gmail.com.

References

- Aldunate N, López V, Bosman CA. Early influence of affective context on emotion perception: EPN or Early-N400? Front Neurosci. 2018;12:708. doi: 10.3389/fnins.2018.00708.

- Bailey K, West R, Anderson CA. The association between chronic exposure to video game violence and affective picture processing: an ERP study. Cogn Affect Behav Neurosci. 2011;11:259–276. doi: 10.3758/s13415-011-0029-y.

- Bailey K, West R, Mullaney KM. Neural correlates of processing negative and sexually arousing pictures. PLoS ONE. 2012;7(9):e45522. doi: 10.1371/journal.pone.0045522.

- Britton JC, Taylor SF, Sudheimer KD, Liberzon I. Facial expressions and complex IAPS pictures: common and differential networks. NeuroImage. 2006;31:906–919. doi: 10.1016/j.neuroimage.2005.12.050.

- Carretie L, Hinojosa JA, Albert J, Mercado F. Neural response to sustained affective visual stimulation using an indirect task. Exp Brain Res. 2006;174:630–637. doi: 10.1007/s00221-006-0510-y.

- Conroy MA, Polich J. Affective valence and P300 when stimulus arousal level is controlled. Cognition & Emotion. 2007;21:891–901. doi: 10.1080/02699930600926752.

- Cuthbert BN, Schupp HT, Bradley MM, Birbaumer N, Lang PJ. Brain potentials in affective picture processing: covariation with autonomic arousal and affective report. Biol Psychol. 2000;52:95–111. doi: 10.1016/S0301-0511(99)00044-7.

- Di Russo F, Taddei F, Aprile T, Spinelli D. Neural correlates of fast stimulus discrimination and response selection in top-level fencers. Neurosci Lett. 2006;408:113–118. doi: 10.1016/j.neulet.2006.08.085.

- Dolcos F, Cabeza R. Event-related potentials of emotional memory: encoding pleasant, unpleasant, and neutral pictures. Cogn Affect Behav Neurosci. 2002;2:252–263. doi: 10.3758/CABN.2.3.252.

- Donohue SE, Todisco AE, Woldorff MG. The rapid distraction of attentional resources toward the source of incongruent stimulus input during multisensory conflict. J Cogn Neurosci. 2013;25(4):623–635. doi: 10.1162/jocn_a_00336.

- Erdogdu E, Kurt E, Deniz Duru A, Uslu A, Basar-Eroglu C, Demiralp T. Measurement of cognitive dynamics during video watching through event-related potentials (ERPs) and oscillations (EROs). Cogn Neurodyn. 2019;13:503–512. doi: 10.1007/s11571-019-09544-x.

- Foley E, Rippon G, Thai NJ, Longe O, Senior C. Dynamic facial expressions evoke distinct activation in the face perception network: a connectivity analysis study. J Cogn Neurosci. 2012;24(2):507–520. doi: 10.1162/jocn_a_00120.

- Fuchs M, Kastner J, Wagner M, Hawes S, Ebersole JS. A standardized boundary element method volume conductor model. Clin Neurophysiol. 2002;113:702–712. doi: 10.1016/S1388-2457(02)00030-5.

- Fujimura T, Sato W, Suzuki N. Facial expression arousal level modulates facial mimicry. Int J Psychophysiol. 2010;76(2):88–92. doi: 10.1016/j.ijpsycho.2010.02.008.

- Goshvarpour A, Goshvarpour A. EEG spectral powers and source localization in depressing, sad, and fun music videos focusing on gender differences. Cogn Neurodyn. 2019;13:161–173. doi: 10.1007/s11571-018-9516-y.

- Klasen M, Chen YH, Mathiak K. Multisensory emotions: perception, combination and underlying neural processes. Rev Neurosci. 2012;23(4):381–392. doi: 10.1515/revneuro-2012-0040.

- Kunaharan S, Walla P. ERP differences between violence, erotic, pleasant, unpleasant and neutral images. Front Hum Neurosci. 2015;9. doi: 10.3389/conf.fnhum.2015.219.00035.

- LaBar KS, Cabeza R. Cognitive neuroscience of emotional memory. Nat Rev Neurosci. 2006;7:54–64. doi: 10.1038/nrn1825.

- Lang PJ, Bradley MM, Cuthbert BN. International Affective Picture System (IAPS): instruction manual and affective ratings. Gainesville, FL: The Center for Research in Psychophysiology, University of Florida; 1999.

- LeDoux JE. Emotion circuits in the brain. Annu Rev Neurosci. 2000;23:155–184. doi: 10.1146/annurev.neuro.23.1.155.

- Olofsson JK, Nordin S, Sequeira H, Polich J. Affective picture processing: an integrative review of ERP findings. Biol Psychol. 2008;77:247–265. doi: 10.1016/j.biopsycho.2007.11.006.

- Olofsson JK, Polich J. Affective visual event-related potentials: arousal, repetition, and time-on-task. Biol Psychol. 2007;75(1):101–108. doi: 10.1016/j.biopsycho.2006.12.006.

- Pascual-Marqui RD. Standardized low-resolution brain electromagnetic tomography (sLORETA): technical details. Methods Find Exp Clin Pharmacol. 2002;24:5–12.

- Schupp HT, Cuthbert BN, Bradley MM, Hillman CH, Hamm AO, Lang PJ. Brain processes in emotional perception: motivated attention. Cognition & Emotion. 2004;18:593–611. doi: 10.1080/02699930341000239.

- Schupp HT, Flaisch T, Stockburger J, Junghöfer M. Emotion and attention: event-related brain potential studies. Prog Brain Res. 2006;156:31–51. doi: 10.1016/S0079-6123(06)56002-9.

- Sun D, Chan CC, Fan J, Wu Y, Lee TM. Are happy faces attractive? The roles of early vs. late processing. Front Psychol. 2015;6:1812. doi: 10.3389/fpsyg.2015.01812.

- Wagner M, Fuchs M, Kastner J. Evaluation of sLORETA in the presence of noise and multiple sources. Brain Topogr. 2004;16:277–280. doi: 10.1023/B:BRAT.0000032865.58382.62.

- Zinchenko A, Obermeier C, Kanske P, Schröger E, Kotz SA. Positive emotion impedes emotional but not cognitive conflict processing. Cogn Affect Behav Neurosci. 2017;17(3):665–677. doi: 10.3758/s13415-017-0504-1.
