Abstract

Background

The rhetoric surrounding clinical artificial intelligence (AI) often exaggerates its effect on real-world care. Limited understanding of the factors that influence its implementation can perpetuate this.

Objective

In this qualitative systematic review, we aimed to identify key stakeholders, consolidate their perspectives on clinical AI implementation, and characterize the evidence gaps that future qualitative research should target.

Methods

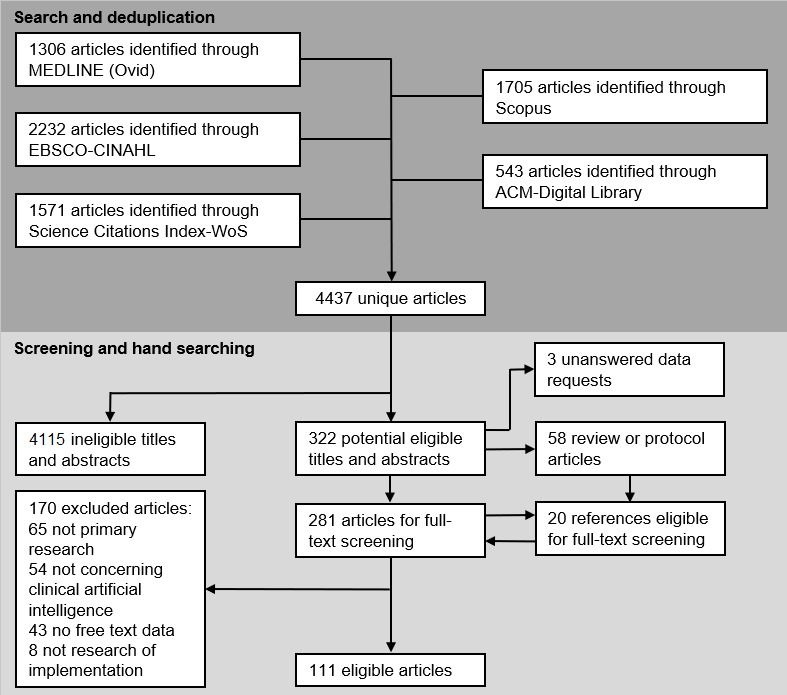

Ovid-MEDLINE, EBSCO-CINAHL, ACM Digital Library, Science Citation Index-Web of Science, and Scopus were searched for primary qualitative studies on individuals’ perspectives on any application of clinical AI worldwide (January 2014-April 2021). The definition of clinical AI included both rule-based and machine learning–enabled (non–rule-based) decision support tools. Reports were not excluded on the basis of language. Two independent reviewers performed title, abstract, and full-text screening, with a third reviewer arbitrating disagreements. Two reviewers scored each study using the Joanna Briggs Institute 10-point checklist for qualitative research. A single reviewer extracted free-text data relevant to clinical AI implementation, noting the stakeholders contributing to each excerpt. The best-fit framework synthesis used the Nonadoption, Abandonment, Scale-up, Spread, and Sustainability (NASSS) framework. To validate the data and improve accessibility, coauthors representing each emergent stakeholder group codeveloped summaries of the factors most relevant to their respective groups.

Results

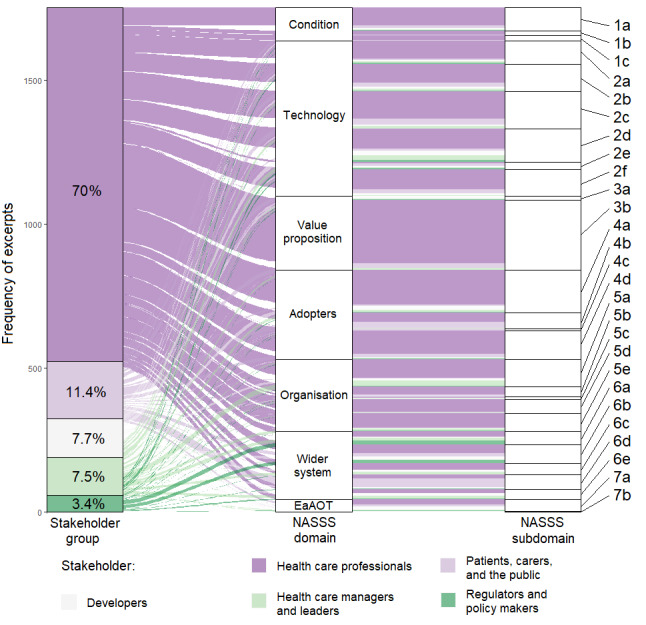

The initial search yielded 4437 deduplicated articles, of which 111 (2.5%) were eligible for inclusion (median Joanna Briggs Institute 10-point checklist for qualitative research score, 8/10). Five distinct stakeholder groups emerged from the data: health care professionals (HCPs); patients, carers, and other members of the public; developers; health care managers and leaders; and regulators or policy makers. These groups contributed 1204 (70%), 196 (11.4%), 133 (7.7%), 129 (7.5%), and 59 (3.4%) of 1721 eligible excerpts, respectively. All stakeholder groups independently identified a breadth of implementation factors, with each group's data mapped to between 17 and 24 of the 27 adapted Nonadoption, Abandonment, Scale-up, Spread, and Sustainability subdomains. Most of the factors that stakeholders found influential in the implementation of rule-based clinical AI also applied to non–rule-based clinical AI, with the exception of intellectual property, regulation, and sociocultural attitudes.

Conclusions

Clinical AI implementation is influenced by many interdependent factors, which are in turn influenced by at least 5 distinct stakeholder groups. This implies that effective research and practice of clinical AI implementation should consider multiple stakeholder perspectives. The current underrepresentation in the literature of perspectives from stakeholders other than HCPs may limit the anticipation and management of the factors that influence successful clinical AI implementation. Future research should not only widen the representation of tools and contexts in qualitative research but also specifically investigate the perspectives of stakeholders other than HCPs and emerging aspects of non–rule-based clinical AI implementation.

Trial Registration

PROSPERO (International Prospective Register of Systematic Reviews) CRD42021256005; https://www.crd.york.ac.uk/prospero/display_record.php?RecordID=256005

International Registered Report Identifier (IRRID)

RR2-10.2196/33145

Keywords: artificial intelligence, systematic review, qualitative research, computerized decision support, qualitative evidence synthesis, implementation

Introduction

Background

Clinical artificial intelligence (AI) is a growing focus in academia, industry, and governments [1-3]. However, patients have benefited in only a few real-world contexts, reflecting a know-do gap called the “AI chasm” [4,5]. There is already evidence of tasks in which clinical AI has surpassed health care professional (HCP) performance [6]. Reporting practices for quantitative measures of efficacy are also improving against evolving standards [7]. The rate-limiting step to patient benefit from clinical AI now seems to be real-world implementation [8]. This necessitates an understanding of how, in real-world use, each technology may interact with the various configurations of policy-, organizational-, and practice-level factors [9,10]. Qualitative methods are best suited to producing evidence-based guidance to anticipate and manage implementation challenges; however, they remain rare in the clinical AI literature [1,11,12].

Prior Work

A previous review broadly synthesized the qualitative clinical AI literature published up to 2013 [13]. Despite broad eligibility criteria, that review synthesized only 16% (9/56) of the eligible qualitative studies, prioritizing higher-quality articles for data extraction. All 9 tools studied were based on electronic health care records and supported various aspects of prescribing. All but 1 of the studies were set in the United States, and all tools applied rule-based decision logic preprogrammed by human experts. The main findings included usability concerns for HCPs, poor integration of the data used by tools with the workflows and platforms in which they were placed, the technical immaturity of tools and their host systems, and adopters' variable perception of the AI tools’ value depending on their own experience [13]. Much of the subsequent clinical AI literature concerns machine learning or non–rule-based tools, which differ from rule-based tools in ways that may limit understanding of the clinical, social, and ethical implications of their implementation [3]. An example of such a tool is a classification algorithm that distinguishes retinal photographs containing signs of diabetic retinopathy from those that do not [14]. The tool “learned” to do this in a relatively unexplainable fashion through exposure to a large quantity of retinal imaging data accompanied by human-expert labels of whether diabetic retinopathy was present. These non–rule-based tools promise broader applicability and higher performance than rule-based tools that automate established human clinical reasoning methods [3]. An example of a rule-based tool is one that applies an a priori decision tree, determined by human clinical experts, to produce individualized management recommendations for patients [15]. Despite the differences in their mechanisms, both tool groups satisfy the Organisation for Economic Co-operation and Development’s definition of AI [16].
It is unclear whether the rule-based majority of the limited qualitative clinical AI evidence base is relevant to the modern focus on non–rule-based clinical AI [17]. However, as only 4 primary qualitative studies were identified across 2 recent syntheses of non–rule-based tools, it appears that broader eligibility criteria will be required to synthesize a meaningful volume of research at present [11,12]. Although primary qualitative clinical AI research is growing, its pace remains relatively slow. If the impact of this important work is to be maximized, clarity is required regarding which perspectives and factors that influence implementation remain inadequately explored [1].
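To make the rule-based versus non–rule-based distinction concrete, the toy Python sketch below contrasts the two mechanisms. It is an illustrative assumption and does not model any tool in the reviewed studies: the inputs (`marker`, `symptomatic`), the cut-off of 7.0, and the output labels are hypothetical, and a one-feature decision stump stands in for the far more complex models (such as the retinal image classifier described above) that non–rule-based tools actually use.

```python
# Toy contrast between rule-based and non-rule-based decision support.
# All names, inputs, and cut-offs are hypothetical illustrations, not clinical guidance.


def rule_based_recommendation(marker: float, symptomatic: bool) -> str:
    """Rule-based: apply an a priori decision tree written in advance by (hypothetical) experts."""
    if symptomatic:
        return "refer"
    if marker >= 7.0:  # hypothetical expert-chosen cut-off
        return "review"
    return "routine care"


def learn_threshold(values: list[float], labels: list[bool]) -> float:
    """Non-rule-based (toy): learn a cut-off from labeled examples instead of writing it by hand.

    Fits a one-feature decision stump by choosing the candidate cut-off that
    misclassifies the fewest training examples (predict positive when value >= cut-off).
    """
    best_cut, best_errors = values[0], len(values) + 1
    for cut in sorted(set(values)):
        errors = sum((v >= cut) != label for v, label in zip(values, labels))
        if errors < best_errors:
            best_cut, best_errors = cut, errors
    return best_cut


if __name__ == "__main__":
    print(rule_based_recommendation(8.2, symptomatic=False))  # review
    # Hypothetical expert-labeled training data: True means the condition was present.
    values = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
    labels = [False, False, False, True, True, True]
    print(learn_threshold(values, labels))  # 4.0
```

In the first function every decision path can be read directly; in the second, the cut-off emerges from the labeled data, which is a greatly simplified version of why non–rule-based tools can be harder to explain.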

Goal of This Study

This qualitative evidence synthesis aimed to identify key stakeholder groups in clinical AI implementation and consolidate their published perspectives. This process aimed to maximize the accessibility and utility of published data for practitioners, supporting their efforts to implement various clinical AI tools and complementing their insight into the unique contexts that they target (Textbox 1). A secondary aim was to improve the impact of future qualitative investigations of clinical AI implementation by recommending evidence-based research priorities.

Textbox 1.

The research question, the eligibility criteria informing the search strategy, and the research databases to which the search strategy was applied on April 30, 2021 (Multimedia Appendix 1).

- Research question: What are the perspectives of stakeholders in clinical artificial intelligence (AI), and how can they inform its implementation?
- Participants: Humans participating in primary research reporting free-text qualitative data
- Phenomena of interest: Individuals’ perspectives on rule-based or non–rule-based clinical AI implementation
- Context: Research from any real-world, simulated, or hypothetical health care setting worldwide, published between January 1, 2014, and April 30, 2021, in any language
- Databases searched: Ovid-MEDLINE, EBSCO-CINAHL, ACM Digital Library, Science Citation Index-Web of Science, and Scopus

Methods

Overview

This qualitative evidence synthesis adhered to an a priori protocol, the Joanna Briggs Institute (JBI) guidance for conduct, and the ENTREQ (Enhancing Transparency in Reporting the Synthesis of Qualitative research) reporting guidance [18-20]. The best-fit framework synthesis method was selected using the RETREAT (Review question-Epistemology-Time or Timescale-Resources-Expertise-Audience and purpose-Type of data) criteria [21,22]. Following a review of implementation frameworks, the Nonadoption, Abandonment, Scale-up, Spread, and Sustainability (NASSS) framework was selected to accommodate the interacting complexity of factors and related stakeholders that shape the implementation of health care technologies at the policy, organizational, and practice levels [10]. The NASSS framework consists of 7 domains, which categorize the factors that can influence implementation: (1) Condition, (2) Technology, (3) Value proposition, (4) Adopters, (5) Organization, (6) Wider context, and (7) Embedding and adaptation over time [10]. In addition to its focus on technological innovations and its value in considering implementation factors across policy and practice levels, NASSS can be used as a determinant or evaluation framework rather than a process model, and it operates at a relatively high level of theoretical abstraction [23]. This means that NASSS can readily accommodate perspectives from various stakeholders, contexts, and tools without imposing excessive assumptions about the mechanisms of implementation, which suits the heterogeneous literature to be synthesized [24].

Search Strategy and Selection Criteria

The research question and eligibility criteria informed a preplanned search strategy (available for all databases in Multimedia Appendix 1) that was designed with an experienced information specialist (FRB), informed by published qualitative and clinical AI search strategies, and executed in 5 databases (Textbox 1) [6,11,13,25,26]. The search strings were designed in Ovid-MEDLINE and translated into EBSCO-CINAHL, ACM Digital Library, Science Citation Index-Web of Science, and Scopus. The exact terms used are available in Multimedia Appendix 1, but each string combined the same 3 distinct concepts (qualitative research, AI, and health care) using the Boolean operator AND. Differing thesaurus terms and search mechanisms between the databases demanded adaptation of the original search string, but each translation aimed to reflect the original Ovid-MEDLINE version as closely as possible and was checked for sensitivity and specificity through pilot searches before the final execution. Studies concerning AI as a treatment, such as chatbots providing talking therapies for mental health conditions, were not eligible, as they represent an emerging minority of clinical AI applications [27]. They also evoke social and technological phenomena that are distinct from those of AI that provides clinical decision support and therefore risk diluting the synthesized findings with nongeneralizable perspectives. The search strategy was reported in line with PRISMA-S (Preferred Reporting Items for Systematic Reviews and Meta-Analyses literature search extension) [28]. Search results were pooled in EndNote (version 9.3.3; Clarivate Analytics) for deduplication and uploaded to Rayyan [29]. The reference lists of any review or protocol studies returned were manually searched before exclusion, along with the reference lists of all eligible studies. Potentially relevant missing data identified in the full-text reviews were pursued with up to 3 emails to the corresponding authors. Examples of such data included eligible protocols published ≥1 year previously without a follow-up report of the study itself, or multimethod studies that appeared to report only quantitative data. Title, abstract, and full-text screening were fully duplicated by 2 independent reviewers (MA and HDJH), with a third reviewer (GM) arbitrating disagreements (Multimedia Appendix 2). Eligible articles without full text in English were translated using an automated translation service (Google Translate) between May and June 2021. The validity of this approach in systematic reviews has been tested empirically, and it is applied routinely in quantitative and qualitative syntheses [30,31].
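The 3-concept structure of each search string (synonyms ORed within a concept, concepts joined with AND) can be sketched in Python as below. This is a hypothetical illustration only: the terms in `CONCEPTS` are invented placeholders rather than the review's strategy (which is in Multimedia Appendix 1), thesaurus headings are omitted, and phrase and truncation syntax differs between databases, so real translations need per-database adaptation.

```python
# Hypothetical illustration of the 3-concept Boolean structure; the review's actual
# search terms are in Multimedia Appendix 1 and are NOT reproduced here.
CONCEPTS = {
    "qualitative research": ["qualitative", "interview*", "focus group*"],
    "artificial intelligence": ["artificial intelligence", "machine learning", "decision support"],
    "health care": ["health*", "clinic*", "patient*"],
}


def quote(term: str) -> str:
    """Wrap multiword terms in double quotes so they are searched as phrases."""
    return f'"{term}"' if " " in term else term


def concept_block(terms: list[str]) -> str:
    """Combine the synonyms for one concept with OR inside parentheses."""
    return "(" + " OR ".join(quote(term) for term in terms) + ")"


def build_query(concepts: dict[str, list[str]]) -> str:
    """Require every concept to be present by joining the concept blocks with AND."""
    return " AND ".join(concept_block(terms) for terms in concepts.values())


if __name__ == "__main__":
    print(build_query(CONCEPTS))
```

Keeping each concept as a separate list mirrors the translation step described above: only the terms inside a block need to change per database, while the AND structure stays fixed.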

Data Analysis

Study characteristics and an overall JBI 10-point checklist for qualitative research score were assigned and discussed by 2 reviewers (MA and HDJH) for 9.9% (11/111) of eligible studies [18]. The remaining 90.1% (100/111) were divided equally between these reviewers for independent extraction of characteristics and assignment of JBI 10-point checklist for qualitative research scores. Free-text data extraction using NVivo (Release 1.2; QSR International) was performed by a single reviewer (HDJH) following consensus exercises with 3 other authors (MA, GM, and FRB). Data were extracted as individual excerpts, each defined as a continuous illustration of a stakeholder’s perspective on clinical AI. The same reviewer (HDJH) assigned each excerpt a JBI 3-tiered level of credibility (Textbox 2) to complement the global appraisal of each study provided by the JBI 10-point checklist for qualitative research [18].

Textbox 2.

Three-tiered Joanna Briggs Institute (JBI) credibility rating applied to each data excerpt, as described in the JBI Reviewers’ Manual, The systematic review of qualitative data [18].

1. Unequivocal: findings accompanied by an illustration that is beyond reasonable doubt and, therefore, not open to challenge
2. Credible: findings accompanied by an illustration lacking clear association with it and, therefore, open to challenge
3. Not supported: when neither 1 nor 2 applies and, most notably, when findings are not supported by the data

All perspectives relating to the phenomena of interest (Textbox 1), whether arising from participant quotations or authors’ narratives, were extracted verbatim from the results and discussion sections. Each excerpt was attributed to the voice of an emergent stakeholder group and to a single NASSS subdomain [10]. When the researcher extracting the data (HDJH) felt that perspectives fell outside the NASSS subdomains, a draft subdomain was added to the framework, to be later reviewed and refined with authors holding varied perspectives, as per the best-fit framework synthesis method [26]. A similar approach was applied to validate the stakeholder groupings that emerged. To permit greater granularity and meaning in the synthesis of such a large volume of data, inductive themes were also created within each NASSS subdomain. The initial data-led titles for these inductive themes were generated by the researcher extracting the data, who made initial revisions as data extraction proceeded. This was followed by several rounds of discussion with the coauthors to review and refine the inductive themes alongside their associated primary data, consolidating themes when appropriate and maximizing the accessibility and accuracy of their titles.
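The coding rule above (every excerpt carries exactly 1 stakeholder group, 1 NASSS subdomain, and 1 JBI credibility level) can be sketched as a small record type plus a tally. This is a hypothetical sketch: the review coded excerpts in NVivo, and the `Excerpt` fields, group labels, and sample records here are illustrative assumptions, not the authors' data model. The tally shows how per-group excerpt counts and the number of subdomains each group reached (reported as 17 to 24 of 27) could be derived.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Excerpt:
    """One coded excerpt (hypothetical record layout; the review coded excerpts in NVivo)."""

    study_id: int     # hypothetical identifier of the source study
    stakeholder: str  # emergent stakeholder group whose voice the excerpt carries
    subdomain: str    # the single adapted NASSS subdomain code, e.g. "2c"
    credibility: str  # JBI level: "unequivocal", "credible", or "not supported"


def summarise(excerpts: list[Excerpt]) -> tuple[Counter, dict[str, int]]:
    """Count excerpts per stakeholder group and how many distinct subdomains each group reached."""
    per_group = Counter(e.stakeholder for e in excerpts)
    reached = defaultdict(set)
    for e in excerpts:
        reached[e.stakeholder].add(e.subdomain)
    return per_group, {group: len(codes) for group, codes in reached.items()}


if __name__ == "__main__":
    sample = [  # made-up excerpts for illustration only
        Excerpt(1, "HCPs", "2c", "unequivocal"),
        Excerpt(1, "HCPs", "4a", "credible"),
        Excerpt(2, "developers", "2d", "unequivocal"),
        Excerpt(2, "HCPs", "2c", "not supported"),
    ]
    counts, subdomains_reached = summarise(sample)
    print(counts["HCPs"], subdomains_reached["HCPs"])  # 3 2
```

Counting distinct subdomains with a set, rather than counting excerpts, is what distinguishes breadth of coverage from volume of data.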

NASSS allows researchers to operationalize theory to make coherent sense of large and highly heterogeneous data, such as those in this study. However, this may limit the accessibility of the analysis for some stakeholders, as it demands some familiarity with theoretical approaches [32]. To remove this barrier, the key implementation factors arising from the NASSS best-fit framework synthesis were delineated by their relevance to the 5 stakeholder groups that arose from the data. Coauthors with lived experience of each emergent stakeholder role were then invited to coproduce a narrative summary of the factors most relevant to their role. The contributors were a senior consultant ophthalmologist delivering and leading local services (SJT), a senior clinical academic working in clinical AI regulation and sitting on a committee advising the national government on regulatory reform (AKD), a clinical scientist working for an international MedTech company (CJK), the founder and managing director of The Healthcare Leadership Academy (JM), and a panel of 4 members of the public experienced in supporting research (reference group). In the first step of this process, the lead reviewer (HDJH) provided a longer draft of findings relating to each stakeholder group’s perspective, which each contributor reviewed before an initial discussion. In these 5 separate coproduction streams, the lead reviewer contributed their oversight of the data to discussions with each stakeholder representative (AKD, CJK, JM, SJT, and reference group), who gave feedback to prioritize and frame the data discussed. The lead reviewer then redrafted the section for further rounds of review and feedback until agreement was reached. This second analytical step validated the findings, increased their accessibility, and aimed to support different stakeholders’ empathy for one another.

To preserve methodological rigor while pursuing broad accessibility, the results were presented at 3 levels of engagement. The first level comprised 5 stakeholder group narratives. The second comprised 63 inductive themes distributed across the 27 subdomains of the adapted NASSS framework. The third and most granular level used an internal referencing system within the Results section to link each assertion of the stakeholder group narratives with its supporting primary data and inductive theme (Multimedia Appendix 3 [33-143]). Notably, insights relevant to a given stakeholder group’s perspective were often contributed by study participants from different stakeholder groups (Figure 1 [19]). This is demonstrated by the selected excerpts within the 5 stakeholder group narratives, each of which is followed by a brief description of the stakeholders who contributed it.

Figure 1.

Sankey diagram illustrating the proportion of 1721 primary study excerpts derived from the voice of each of the 5 emergent stakeholder groups and the domain and subdomain of the adapted Nonadoption, Abandonment, Scale-up, Spread, and Sustainability (NASSS) framework to which each excerpt relates [19]. EaAOT: embedding and adaptation over time.

Results

Overview

From an initial 4437 unique articles, 111 (2.5%) were found to be eligible, of which 2 (1.8%) were written in languages other than English [33,34]; the corresponding authors of 3 (2.7%) further studies containing potentially relevant data [144-146] could not be contacted successfully (Figure 2 [147]). Specific exclusion criteria were recorded for each article excluded at the full-text review stage (Multimedia Appendix 2), although most exclusions (4115/4326, 95.12%) were made at the title and abstract screening stage. The absence of qualitative research methods was the most common reason for these exclusions. The 111 eligible studies yielded 1721 excerpts. When each of these excerpts was assigned a JBI credibility level [18], 1155 (67.11%) were classified as unequivocal, 373 (21.67%) as credible, and 193 (11.21%) as not supported. The excerpts were categorized within the 27 subdomains of the adapted NASSS framework (Table 1). Table 1 also lists the inductive themes within each NASSS subdomain, along with the reference code applied throughout the Results section and additional materials and the number of eligible primary studies that contributed to each.
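The reported percentages can be reproduced from the raw counts; the short Python check below is a sketch for readers auditing the figures. The counts are those reported in this review, the credibility labels follow Textbox 2, and the helper `share` is a hypothetical name introduced here. Rounding is to 2 decimal places for the credibility and exclusion figures and to 1 place for the stakeholder shares, matching how they are reported.

```python
def share(n: int, total: int, places: int = 2) -> float:
    """Return n as a percentage of total, rounded to the given number of decimal places."""
    return round(100 * n / total, places)


# Counts as reported in this review.
CREDIBILITY = {"unequivocal": 1155, "credible": 373, "not supported": 193}
STAKEHOLDERS = {
    "HCPs": 1204,
    "patients, carers, and other members of the public": 196,
    "developers": 133,
    "health care managers and leaders": 129,
    "regulators or policy makers": 59,
}
TOTAL_EXCERPTS = 1721

# Both breakdowns partition the same 1721 excerpts.
assert sum(CREDIBILITY.values()) == TOTAL_EXCERPTS
assert sum(STAKEHOLDERS.values()) == TOTAL_EXCERPTS

if __name__ == "__main__":
    for level, n in CREDIBILITY.items():
        print(f"{level}: {share(n, TOTAL_EXCERPTS)}%")  # 67.11, 21.67, 11.21
    for group, n in STAKEHOLDERS.items():
        print(f"{group}: {share(n, TOTAL_EXCERPTS, 1)}%")  # 70.0, 11.4, 7.7, 7.5, 3.4
    excluded = 4437 - 111  # unique records minus eligible studies
    print(f"title/abstract exclusions: {share(4115, excluded)}%")  # 95.12
```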

Figure 2.

PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses)-style flowchart of the search and eligibility screening [147].

Table 1.

Subdomains of the Nonadoption, Abandonment, Scale-up, Spread, and Sustainability (NASSS) framework used for data analysis with 2 data-led additions to the original subdomain list (n=111) [10].

| NASSS subdomain or theme code | Inductive theme | Papers, n (%) |
|---|---|---|
| 1a. Nature of condition or illness | | |
| 1a.1 | Type or format of care needs | 11 (9.9) |
| 1a.2 | Ambiguous, complicated, or rare decisions | 23 (20.7) |
| 1a.3 | Quality of current care | 18 (16.2) |
| 1a.4 | Decision urgency and impact | 11 (9.9) |
| 1b. Comorbidities | | |
| 1b.1 | Other associated health problems | 5 (4.5) |
| 1b.2 | Aligning patient and health priorities | 6 (5.4) |
| 1c. Sociocultural factors | No subthemes | 13 (11.7) |
| 2a. Material properties | | |
| 2a.1 | Usability of the tool | 28 (25.2) |
| 2a.2 | Lack of emotion | 12 (10.8) |
| 2a.3 | Large amounts of changing data | 14 (12.6) |
| 2b. Knowledge to use it | | |
| 2b.1 | Knowledge required of patients | 24 (21.6) |
| 2b.2 | Enabling users to evaluate tools | 20 (18.0) |
| 2b.3 | Agreeing the scope of use | 19 (17.1) |
| 2c. Knowledge generated by it | | |
| 2c.1 | Communicate meaning effectively | 45 (40.5) |
| 2c.2 | Target a clinical need | 23 (20.7) |
| 2c.3 | Recommend clear action | 25 (22.5) |
| 2d. Supply model | | |
| 2d.1 | Equipment and network requirements | 23 (20.7) |
| 2d.2 | Working across multiple health data systems | 25 (22.5) |
| 2d.3 | Quality of the health data and guidelines used | 33 (29.7) |
| 2e. Who owns the intellectual property? | No subthemes | 14 (12.6) |
| 2f. Care pathway positioning^a | | |
| 2f.1 | Extent of tools’ independence | 23 (20.7) |
| 2f.2 | When and to whom the tool responds | 21 (18.9) |
| 2f.3 | How and where the tool responds | 20 (18.0) |
| 3a. Supply-side value (to developer) | No subthemes | 7 (6.3) |
| 3b. Demand-side value (to patient) | | |
| 3b.1 | Time required for service provision | 27 (24.3) |
| 3b.2 | Patient-centered care | 22 (19.8) |
| 3b.3 | Cost of health care | 17 (15.3) |
| 3b.4 | Impact on outcomes for patients | 28 (25.2) |
| 3b.5 | Educating and prompting HCPs^b | 41 (36.9) |
| 3b.6 | Consistency and authority of care | 33 (29.7) |
| 4a. Staff (role and identity) | | |
| 4a.1 | Appetite and needs differ between staff groups | 33 (29.7) |
| 4a.2 | Tools redefine staff roles | 33 (29.7) |
| 4a.3 | Aligning with staff values | 28 (25.2) |
| 4b. Patient (simple vs complex input) | | |
| 4b.1 | Inconvenience for patients | 10 (9.0) |
| 4b.2 | Patients’ control over their care | 14 (12.6) |
| 4b.3 | Aligning patients’ agendas with tool use | 11 (9.9) |
| 4c. Carers | No subthemes | 4 (3.6) |
| 4d. Relationships^a | | |
| 4d.1 | Patients’ relationships with their HCPs | 30 (27.0) |
| 4d.2 | Users’ relationships with tools | 13 (11.7) |
| 4d.3 | Relationships between health professionals | 21 (18.9) |
| 5a. Capacity to innovate in general | | |
| 5a.1 | Resources needed to deliver the benefits | 29 (26.1) |
| 5a.2 | Leadership | 26 (23.4) |
| 5b. Readiness for this technology | | |
| 5b.1 | Pressure to find a way of improving things | 9 (8.1) |
| 5b.2 | Suitability of hosts’ premises and technology | 15 (13.5) |
| 5c. Nature of adoption or funding decision | No subthemes | 7 (6.3) |
| 5d. Extent of change needed to organizational routines | | |
| 5d.1 | Fitting the tool within current practices | 14 (12.6) |
| 5d.2 | Change to intensity of work for staff | 22 (19.8) |
| 5e. Work needed to plan, implement, and monitor change | | |
| 5e.1 | Training requirements | 17 (15.3) |
| 5e.2 | Effort and resources for tool launch | 23 (20.7) |
| 6a. Political or policy context | | |
| 6a.1 | Different ways to incentivize providers | 10 (9.0) |
| 6a.2 | Importance of government strategy | 8 (7.2) |
| 6a.3 | Policy and practice influence each other more | 15 (13.5) |
| 6b. Regulatory and legal issues | | |
| 6b.1 | Impact on patient groups | 19 (17.1) |
| 6b.2 | Product assurance | 14 (12.6) |
| 6b.3 | Deciding who is responsible | 8 (7.2) |
| 6c. Professional bodies | | |
| 6c.1 | Resistance from professional culture | 20 (18.0) |
| 6c.2 | Lack of understanding between professional groups | 9 (8.1) |
| 6d. Sociocultural context | | |
| 6d.1 | Culture’s effect on tool acceptability | 17 (15.3) |
| 6d.2 | Public reaction to tools varies | 10 (9.0) |
| 6e. Interorganizational networking | No subthemes | 14 (12.6) |
| 7a. Scope for adaptation over time | | |
| 7a.1 | Normalization of technology and decreased resistance | 15 (13.5) |
| 7a.2 | Improvement of technology and its implementation | 11 (9.9) |
| 7b. Organizational resilience | No subthemes | 3 (2.7) |

^a Indicates a subdomain added to the original NASSS framework through application of the best-fit framework synthesis method [21].

^b HCP: health care professional.
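The adapted framework in Table 1 can be captured as a small lookup structure, which also makes the internal reference codes (such as 2c.1) resolvable to their domain. This Python sketch is an illustrative assumption rather than an artifact of the review: `NASSS`, `ADDED`, `THEMES`, and the helper functions are hypothetical names, and treating each "No subthemes" subdomain as a single theme is an assumption that happens to reproduce the 63 inductive themes reported in the Methods.

```python
# Adapted NASSS framework as laid out in Table 1:
# domain number -> (domain name, subdomain codes).
NASSS = {
    1: ("Condition", ["1a", "1b", "1c"]),
    2: ("Technology", ["2a", "2b", "2c", "2d", "2e", "2f"]),
    3: ("Value proposition", ["3a", "3b"]),
    4: ("Adopters", ["4a", "4b", "4c", "4d"]),
    5: ("Organization", ["5a", "5b", "5c", "5d", "5e"]),
    6: ("Wider context", ["6a", "6b", "6c", "6d", "6e"]),
    7: ("Embedding and adaptation over time", ["7a", "7b"]),
}
ADDED = {"2f", "4d"}  # the 2 data-led additions (footnote a of Table 1)

# Inductive themes listed under each subdomain in Table 1; subdomains marked
# "No subthemes" are absent here and (assumption) count as a single theme each.
THEMES = {
    "1a": 4, "1b": 2, "2a": 3, "2b": 3, "2c": 3, "2d": 3, "2f": 3,
    "3b": 6, "4a": 3, "4b": 3, "4d": 3, "5a": 2, "5b": 2, "5d": 2,
    "5e": 2, "6a": 3, "6b": 3, "6c": 2, "6d": 2, "7a": 2,
}


def all_subdomains() -> list[str]:
    """Flatten the framework into its subdomain codes, in table order."""
    return [code for _, codes in NASSS.values() for code in codes]


def domain_of(code: str) -> str:
    """Map a theme code such as '2c.1' or a subdomain code such as '6e' to its domain name."""
    subdomain = code.split(".")[0]
    if subdomain not in all_subdomains():
        raise KeyError(f"unknown NASSS subdomain: {subdomain}")
    return NASSS[int(subdomain[0])][0]


def theme_total() -> int:
    """Total inductive themes, counting each 'No subthemes' subdomain once."""
    return sum(THEMES.get(code, 1) for code in all_subdomains())


if __name__ == "__main__":
    print(len(all_subdomains()), theme_total())  # 27 63
    print(domain_of("2c.1"))  # Technology
```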

Five distinct stakeholder groups emerged through the analysis, each contributing excerpts related to 17 to 24 of the 27 subdomains (Figure 1). Eligible studies (Table 2) represented 23 nations, with the United States, the United Kingdom, Canada, and Australia as the most common host nations, and 25 clinical specialties, with primary care the clearly dominant contributor (Multimedia Appendix 4 [33-143]). Although there was some representation from resource-limited nations, 88.2% (90/102) of the studies focusing on a single nation were set in countries meeting the United Nations Development Programme’s definition of “very high human development” (a human development index between 0.8 and the upper limit of 1.0) [148]. The median human development index of the host nations for these 101 studies was 0.929 (IQR 0.926-0.944). The JBI 10-point checklist for qualitative research scores assigned to each study had a median of 8 (IQR 7-8) [18]. Detailed characteristics, including AI use cases, are available in Multimedia Appendix 4.

Table 2.

Characteristics of 111 eligible studies and the clinical artificial intelligence (AI) studied.

| Characteristic | Studies, n (%) |
|---|---|
| Clinical AI application | |
| Hypothetical | 31 (27.9) |
| Simulated | 24 (21.6) |
| Clinical | 56 (50.5) |
| Clinical AI nature | |
| Rule based | 66 (59.5) |
| Non–rule based | 41 (36.9) |
| NS^a | 4 (3.6) |
| Clinical AI audience | |
| Public | 5 (4.5) |
| Primary care | 45 (40.5) |
| Secondary care | 43 (38.7) |
| Mixed | 3 (2.7) |
| NS | 15 (13.5) |
| Clinical AI input | |
| Numerical or categorical | 83 (74.8) |
| Imaging | 9 (8.1) |
| Mixed | 1 (0.9) |
| Clinical AI task | |
| Triage | 15 (13.5) |
| Diagnosis | 15 (13.5) |
| Prognosis | 10 (9.0) |
| Management | 46 (41.4) |
| NS | 24 (21.6) |
| Research method | |
| Interviews | 54 (48.6) |
| Focus groups | 19 (17.1) |
| Surveys | 12 (10.8) |
| Think aloud exercises | 1 (0.9) |
| Observation | 1 (0.9) |
| Mixed | 24 (21.6) |

^a NS: not specified.

Developers

The developers of clinical AI required both technical and clinical expertise, alongside effective interaction within the multiple professional cultures that stakeholders inhabit (6e and 6c.2). This made cross-disciplinary work a priority, but the immediate demands of clinical duties limited HCPs’ engagement (5a.1). State incentive systems for cross-disciplinary work had the potential to make this collaboration more attractive for developers (6a.2); even without such incentives, developers who prioritized multidisciplinary teams appeared to increase their innovations’ chances of real-world utility (2c.2). Instances in which HCP time had been funded by industry or academia were highly valued (4a.3):

...she [an IT person with a clinical background] really bridges that gap...when IT folks talk directly to the front line, sometimes there’s just the language barrier there.

Unspecified professional [35]

To safeguard clinical AI utility, developers sometimes built in plasticity to accommodate variable host contexts (2a.3). This plasticity was beneficial both for the clinical “reasoning” a tool applied and for where and how it could be applied within different organizations’ or individuals’ practice (2e and 5d.1). The usability and accessibility of clinical AI often had a greater impact on adopter perceptions than its performance did (2a.1 and 2b.1). There were many examples of clinical AI abandonment by adopters who had not fully understood a tool (2b.3 and 5e.1) or by organizations that lacked the capacity or experience to implement it effectively (5e.2). Vendors who invested in training, troubleshooting, and implementation consultancy were often better received:

I’ve learned...that this closing the loop is what makes the sale...sometimes, we’re handed a package with the implementation science done.

Health care manager [36]

The poor interoperability of different systems had inhibited clinical AI scale-up (2d.2), but it appeared to benefit electronic health care record providers, whose market dominance had driven the uptake of their own clinical AI tools (3a.1). Clinical AI developed in-house or by third parties seemed to be at a competitive disadvantage (2d.1). Increasing market competition and political attention may lead to software or regulatory developments that indiscriminately enhance interoperability and disrupt this strategic issue (3a.1 and 7a). Developers were also affected by defensive attitudes from health care organizations and patients, many of whom distrusted industry access to the data on which clinical AI training depends (2d.3 and 2e):

For example, Alibaba is entering the health industry. But hospitals only allow Alibaba to access data of outpatients, not data of inpatients. They [the IT firms] cannot get the core data [continuous data of inpatients] from hospitals.

Policy maker [37]

Health Care Professionals

HCPs’ perspectives on clinical AI varied greatly (4a.1), but they commonly perceived value in clinical AI that facilitated clinical training (3b.5), reduced simple or repetitive tasks (3b.1 and 3b.2), improved patient outcomes (3b.4), or widened individuals’ scope of practice (4a.2). Despite these incentives, HCP adoption was often hampered by inadequate time to embed clinical AI in practice (5d.1), skepticism about its ability to inform clinical decisions (6c.1 and 2c.2), and uncertainty around its mechanics (2b.2). The “black box” effect associated with non–rule-based clinical AI prompted varied responses, placing the burden of improvement either on HCPs to educate themselves or on developers to produce more familiar metrics of efficacy and interpretability (2c.1 and 2b.2):

“When I bring on a test, I usually know what method it is. You tell me AI, and I have conceptually no idea.”... As a result, pathologists wanted to get a basic crash course in using AI...

HCP [38]

HCP culture could strongly influence local clinical AI implementation (6c.1). Professional hierarchies were exposed and challenged through the interplay of clinical AI with professional roles and relationships (4d.3). Some experienced this as a “levelling-up” opportunity, favoring evidence-based over eminence-based medicine and nurturing more collaborative working environments (2d.3 and 3b.6). Others felt that their capabilities were being undervalued and occasionally even feared redundancy (4a.2):

The second benefit was the potential to use the deep learning system’s result to prove their own readings to on-site doctors. Several nurses expressed frustration with their assessments being undervalued or dismissed by physicians.

Authors’ representation of HCPs [39]

In some studies, HCPs felt that care provision improved in both quality and reach (3b.1 and 3b.4). A virtuous cycle of engagement and value perception could develop, depending on where HCPs saw value and need in a given context (2c.2 and 2b.3). This was often the case when clinical AI aligned with familiar ways of working (5d.1), prompted or actioned things that HCPs knew but easily forgot (3b.5), and transferred responsibility gradually and under HCP leadership (2f.1):

...to the physician, the algorithmic sorting constituted an extension of her own, and her experienced colleagues’ expertise...“I consider it a clinical judgement, which we made when we decided upon the thresholds”...

HCP [40]

Health Care Managers and Leaders

Strong leadership at any level within health care organizations supported successful implementation (5a.2). Competing clinical demands and the scale of projects could disincentivize initial resource investment and jeopardize the implementation of clinical AI (5e.2). Resources committed to clinical AI implementation held more than their intrinsic value, as they signaled to adopters that implementation was a priority and encouraged a positive workforce attitude (5b.2). Carefully selecting clinical AI tools that seem likely to relieve workforce pressure in the long run may help managers protect their investment and adopter buy-in despite excessive clinical burdens (3b.1 and 5b.1). Stepwise or cyclical implementation of clinical AI was also advocated as a means of smoothing workflow changes and minimizing distraction from active projects:

I think that if you keep it simple, and maybe in a structured way if you could layer it, so that you know, for 2012 we are focusing on these five issues and in 2013 we’re focusing on these...over time you would introduce better prescribing.

Primary care leader [41]

The significant commitment required for effective implementation underlined the importance of judiciously selecting clinical AI and deciding where, how, and for whom it would be applied (2f and 1a.3). Managers’ knowledge of their staff characteristics (eg, age, training, and contract length) provided a heuristic that roughly informed a context-specific implementation strategy (4a.1). However, co-design with the adopters themselves better supported the alignment of local clinical AI values, staff priorities, and patient needs (4b.3 and 5d.1). In some cases, this process was rushed, and heavy investments achieved little because these aspects were misaligned (2b.3 and 5a):

...due to shortage of capacity and resources in hospitals, business cases were often developed too quickly and procurements were made without adequate understanding of the problems needing to be addressed

Authors’ representation of health care managers [42]

HCPs sometimes developed negative relationships with clinical AI, which limited sustainability if the issues were not identified and addressed (4d.2 and 4a.1). Just as clinical AI flexible enough to fit different local workflows appeared to be better received by adopters, implementation was helped by health care managers who were prepared to be flexible about which part of the workflow was targeted (2f). Clinical AI implementation often revealed preexisting gaps between ideal and real-world care. Managers framed this not only as a problematic creation of necessary work but also as helpful evidence to justify greater resourcing from policy makers or higher leadership (6a.3 and 5a.1). The data also illustrated the need for managers to consider staff well-being, as clinical AI sometimes absorbed the simpler aspects of clinical work, concentrating intellectually or emotionally strenuous tasks within clinician workflows (2a.2, 1a.2, and 5d.2):

The problem with implementing digital technologies is that all too often, we fail to recognise or support the human effort necessary to bring them into use and keep them in use.

Authors’ representation of HCPs [43]

Patients, Carers, and the Public

Concerns about the impact of clinical AI on HCP-patient interactions mainly stemmed from the fear of HCP substitution (4d.1). These concerns seemed strongest in mental health and social care contexts, which were felt to demand a “human touch” (1a.1, 1c, and 2a.2). Patient-facing clinical AI, such as chronic disease self-management tools, was well received if it operated under close HCP oversight (2f.1 and 2f.2). More commonly, clinical AI was used as an adjunct for narrow, simple tasks (2f.1 and 1a.2), with the aim of freeing HCPs’ attention to improve care quality or reach (3b.2). There were also examples of patient-facing clinical AI that appeared to better align patient and HCP agendas ahead of consultations, empowering patients to represent their wishes more effectively (4b.2 and 4c):

It is an advantage when reliable information can be sent to the patient, because GPs [General Practitioners] often have to use time to reassure patients that have read inappropriate information from unreliable sources.

HCP [44]

Research into carers’ perspectives was scarce. The available data suggested that clinical AI could make health care decisions more transparent, helping carers to advocate for patients (4c). This could help anticipate and mitigate some of the patient inconveniences and anxieties reported in association with clinical AI (2b.1 and 4b.1):

One participant stated that the intervention needed to be “patient-centred”. “Including patients in the design phase” and “conducting focus groups for patients” were suggested to improve implementation of the eHealth intervention.

Unspecified participants [45]

Public perception of clinical AI varied widely, and with little personal experience to draw on, hesitancy was common (6d.2 and 6d.1):

...many women, who had a negative or mixed view of the effect of AI in society, were unsure of why they felt this way...

Authors’ representation of public [46]

Popular media were often felt to play a key role in informing the public and in encouraging expectations far removed from real-world health care (6d.1). However, where clinical AI was endorsed by trusted HCPs overseeing their care, these issues did not appear to be problematic (6b.2).

Regulators and Policy Makers

There was a perceived need for ongoing regulation of clinical AI and of the contexts in which it is applied, both in terms of how tools are deployed to new sites (2b.3 and 5f.2) and how they may evolve through everyday practice (2a.3 and 7a.2). To make this evolution safe, stakeholders identified the need for long-term multistakeholder collaboration (6e). However, the data highlighted disincentives for this way of working, suggesting that it may need to be enforced (6a.2 and 6c.2). Stakeholders also raised issues of generalizability and bias for the populations they served, which were context specific and could evolve over time (6b.1). Without ongoing oversight, practitioners could gradually apply clinical AI to specific settings for which it was not appropriately trained or validated (2b.3). This “use case creep” described in the data further supported the perceived need for continual monitoring and evaluation of adopters’ interaction with clinical AI (6b):

...they reported use of the e-algo only when they were confused or had more difficult cases. They did not feel the time required to use the e-algo warranted its use in the cases they perceived as routine or simple.

Authors’ representation of HCPs [15]

Stakeholders often felt that clinical AI increased the speed and strength with which policy and practice influenced each other (6a.3). Many appreciated its improvement of care consistency across contexts and its alignment of practices with guidelines (3b.6 and 2d.3). Others criticized it as an oversimplification (6c.1). Stakeholders also saw an opportunity for policy development to become more dynamic and evidence based (3b.4). Some envisaged this as an automated quality improvement cycle, whereas others anticipated complete overhauls of treatment paradigms (2f.1).

I could easily see us going to that payer and saying, “Well, our risk model...shows your patient population is higher risk. We need to do more intervention, so we need more money.”

Health care manager [36]

Anxiety over who would hold legal responsibility if clinical AI became dominant was common (6b.3). The threat of litigation was felt even by individuals who avoided clinical AI use, as HCPs feared allegations of negligence for not using it (6b.3). Neither industry nor clinical professionals felt well placed to take on legal responsibility for clinical AI outcomes because each felt it understood only part of the whole (2b.2 and 6e). This was mainly presented as an educational issue rather than a consequence of transparency and explainability concerns (2b.2). Such high-stakes uncertainties appeared likely to perpetuate resistance from stakeholders (6c.1), although some data suggested that legislation could prompt adaptations to commercial and clinical practices that would reassure individual adopters (6b.2):

...physicians stated that they were not prepared (would not agree?) to be held criminally responsible if a medical error was made by an AI tool.

Authors’ representation of HCPs [47]

...content vendors clearly state that they do not practice medicine and therefore should not be liable...

Authors’ representation of developer [48]

Discussion

Principal Findings

These data highlight the breadth of the interdependent factors that influence the implementation of clinical AI. They also highlight the influence of at least 5 distinct stakeholder groups over each factor (Figure 1): developers, HCPs, health care managers and leaders, public stakeholders, and regulators and policy makers. It should be emphasized that most individuals belong to more than one stakeholder group simultaneously and that the clinical AI tool and context under consideration will alter the influence of any given implementation factor; thus, firm boundaries and weightings between different stakeholders are inevitably artificial. However, to provide a simplified overview, the common factors related to each stakeholder group’s perspective are summarized in Table 3.

Table 3.

A summary of common factors influencing clinical artificial intelligence (AI) implementation from 5 different stakeholder perspectives.

| Stakeholder group | Common factors influencing clinical AI implementation |
| --- | --- |
| Developers | |
| Health care professionals | |
| Health care managers and leaders | |
| Patients, carers, and the public | |
| Regulators and policy makers | |

The strong representation of HCPs’ perspectives in the literature is an asset. However, the 30.04% (517/1721) of excerpts from all other stakeholder perspectives hold important but underexplored insights across all implementation factors (Figure 1), which should be prioritized in future research. The underrepresentation of certain stakeholders is partly masked by the need to group the least represented stakeholders together to permit meaningful synthesis; the carer perspective, for example, accounted for only 0.35% (6/1721) of excerpts. Failure to reform this clinician-centricity will limit the understanding and management of the inherently multistakeholder process of implementation. Encouragingly, the frequency with which specific factors arose in studies of rule-based and non–rule-based tools seemed largely comparable (Multimedia Appendix 5). This supports using the wider clinical AI evidence base, curated and characterized in this study (Multimedia Appendix 3), to inform non–rule-based tool implementation and to help future tool- and context-specific implementation efforts anticipate and manage a unique constellation of factors and stakeholders. The caveat lies in areas of discussion more dominant for non–rule-based tools, such as intellectual property, regulation, and sociocultural attitudes, where further research specific to non–rule-based clinical AI is required.
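The proportions quoted above follow directly from the excerpt counts. As a minimal sketch, the Python snippet below recomputes them; the per-group counts are those reported in this review's abstract, the carer count is the one given in this paragraph, and the helper name `share` is purely illustrative rather than part of the authors' analysis.

```python
# Minimal sketch: recompute the excerpt shares quoted in this review.
# Per-group excerpt counts as reported in the abstract of this review.
EXCERPTS = {
    "Health care professionals": 1204,
    "Patients, carers, and the public": 196,
    "Developers": 133,
    "Health care managers and leaders": 129,
    "Regulators and policy makers": 59,
}

CARER_EXCERPTS = 6  # carer perspective, as stated in the Discussion

TOTAL = sum(EXCERPTS.values())  # all eligible excerpts


def share(part: int, total: int) -> float:
    """Hypothetical helper: part/total as a percentage, rounded to 2 decimals."""
    return round(100 * part / total, 2)


# Excerpts contributed by every stakeholder group other than HCPs.
non_hcp = TOTAL - EXCERPTS["Health care professionals"]

if __name__ == "__main__":
    print(f"Total excerpts: {TOTAL}")
    print(f"Non-HCP excerpts: {non_hcp} ({share(non_hcp, TOTAL)}%)")
    print(f"Carer excerpts: {CARER_EXCERPTS} ({share(CARER_EXCERPTS, TOTAL)}%)")
    for group, count in EXCERPTS.items():
        print(f"{group}: {count} ({share(count, TOTAL):.1f}%)")
```

The same helper can be applied to other counts in this review, such as the 35 of 61 patient or carer subdomain excerpts that came from outside the public stakeholder group.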

Comparison With Prior Work

This qualitative evidence synthesis has demonstrated that many implementation factors concerning early rule-based clinical AI tools continue to be influential [149]. However, the analysis and presentation of this work have prioritized enabling a varied readership to interpret the data within their own context and experience rather than prescribing factors for a narrow range of clinical AI tools and contexts [24,32]. As a result, this study has consolidated a wider scope of research than previous work, synthesizing findings that can support future implementation practice and research across a wide range of clinical AI tools and contexts. This approach may compromise the depth of support offered by this study relative to other syntheses focused on particular clinical specialties, clinical AI types, or stakeholder groups [11,12]. To maintain rigor while acknowledging the subjective value of eligible data, a systematic, transparent, and empirical approach was adopted. This contrasts with narrative reviews in the literature, which provide valuable insights that draw more directly on the expertise of particular groups and collaborations but may not generalize easily to diverse clinical AI tools [8,150].

Limitations

First, some of this study’s findings are limited by the low representation of certain groups’ perspectives in eligible studies, which necessitated highly abstracted definitions of key stakeholders to facilitate meaningful synthesis. In addition to the example of carers mentioned previously, employees of academic and commercial institutions were both termed “developers.” A related second limitation was the use of databases focused on peer-reviewed literature. This search strategy is likely to have contributed to the low representation of non-HCP stakeholder groups, as peer-reviewed publication is a resource-intensive route to dissemination that rewards other stakeholders less directly than it does HCPs. Potential mitigation steps included adding social media or policy documents, but these were judged infeasible for this study, given the extensive eligible literature returned by the broad search strategy [151]. Instead, a codevelopment step was added to the analysis process to reinforce the limited stakeholder perspectives that did arise from the search with the coauthors’ lived experience. This step also helped mitigate a further source of bias: factors relevant to a given stakeholder group were often described in the primary data by participants from other stakeholder groups. This is reflected in the sources of the sample excerpts throughout the Results section and in the fact that, of the 61 excerpts attributed to the patient (4b) or carer (4c) NASSS subdomains, 57% (35/61) were sourced from stakeholders outside the patients, carers, and public stakeholder group. In addition to mitigating these limitations, the codevelopment step was intended to improve the accessibility of implementation science within clinical AI, where theory-focused dogma often obscures its value for practitioners [32,152].
A third limitation is the likely underrepresentation of non-English language reports, even though English-language limits were applied only implicitly, through database indexing. Search strings devised in other languages, or searches of databases that focus on non-English literature, could examine this potential limitation.

Future Directions

The relatively short list of eligible qualitative studies derived from such broad eligibility criteria emphasizes the need for more primary qualitative research to explore the growing breadth of clinical AI tools and implementation contexts. Future primary qualitative studies should prioritize the perspectives of non-HCP stakeholders. Researchers may wish to combine the relevant data curated here (Multimedia Appendix 3) with a rationally selected theoretical approach to develop their sampling and data collection strategies [153]. Further exploration of implementation factors more pertinent to non–rule-based tools, such as intellectual property, regulation, and sociocultural attitudes, may also improve the literature’s contemporary relevance.

Conclusions

This study has consolidated multistakeholder perspectives on clinical AI implementation in an accessible format that can inform clinical AI development and implementation strategies involving varied tools and contexts. It also demonstrates the need for more qualitative research on clinical AI that better represents the perspectives of the many stakeholders who influence its implementation and that addresses the emerging aspects of non–rule-based clinical AI implementation.

Acknowledgments

The consortium author titled “Technology Enhanced Macular Services Study Reference Group” consists of 4 members of the public: Rashmi Kumar, Rosemary Nicholls, Angela Quilley, and Christine Sinnett. They also contributed to analyzing the data. This study was part of a proposal funded by the National Institute for Health and Care Research doctoral fellowship (NIHR301467). The funder had no role in study design, data collection, data analysis, data interpretation, or manuscript writing.

Abbreviations

- AI

artificial intelligence

- ENTREQ

Enhancing Transparency in Reporting the Synthesis of Qualitative research

- HCP

health care professional

- JBI

Joanna Briggs Institute

- NASSS

Nonadoption, Abandonment, Scale-up, Spread, and Sustainability

- PRISMA-S

Preferred Reporting Items for Systematic Reviews and Meta-Analyses literature search extension

- RETREAT

Review Question-Epistemology-Time or Timescale-Resources-Expertise-Audience and Purpose-Type of Data

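Multimedia Appendix 1. Search strategies.

Multimedia Appendix 2. Excluded studies with potentially eligible abstracts.

Multimedia Appendix 3. Codebook.

Multimedia Appendix 4. Eligible primary articles and their characteristics.

Multimedia Appendix 5. Excerpts by clinical artificial intelligence type.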

Data Availability

All free-text data are available in Multimedia Appendix 3 and curated using the adapted Nonadoption, Abandonment, Scale-up, Spread, and Sustainability framework detailed in Table 1. Eligible studies and their characteristics are also available in Multimedia Appendix 4.

Footnotes

Authors' Contributions: All the authors had full access to all the data in the study and had final responsibility for the decision to submit for publication. Two authors accessed each of the excerpts comprising the data for this study (HDJH and MA). HDJH, GM, FRB, DT, and PAK conceived the study. HDJH, FRB, GM, and PAK developed the search strategy. HDJH, MA, GM, and FRB conducted the screening process. HDJH and MA extracted the study characteristics, and HDJH extracted excerpts. HDJH, SJT, AKD, CJK, and JM analyzed the data. HDJH drafted the report with inputs from SJT, AKD, CJK, JM, CP, DT, PAK, FRB, and GM.

Conflicts of Interest: CJK is an employee of Google and owns stocks as part of the standard compensation package. PAK has acted as a consultant for Google, DeepMind, Roche, Novartis, Apellis, and BitFount and is an equity owner in Big Picture Medical. He has received speaker fees from Heidelberg Engineering, Topcon, Allergan, and Bayer. All other authors declare no competing interests.

References

- 1. Zhang J, Whebell S, Gallifant J, Budhdeo S, Mattie H, Lertvittayakumjorn P, del Pilar Arias Lopez M, Tiangco BJ, Gichoya JW, Ashrafian H, Celi LA, Teo JT. An interactive dashboard to track themes, development maturity, and global equity in clinical artificial intelligence research. Lancet Digital Health. 2022 Apr;4(4):e212–3. doi: 10.1016/s2589-7500(22)00032-2.

- 2. Muehlematter UJ, Daniore P, Vokinger KN. Approval of artificial intelligence and machine learning-based medical devices in the USA and Europe (2015–20): a comparative analysis. Lancet Digital Health. 2021 Mar;3(3):e195–203. doi: 10.1016/s2589-7500(20)30292-2.

- 3. Generating Evidence for Artificial Intelligence Based Medical Devices: A Framework for Training Validation and Evaluation. Geneva: World Health Organization; 2021.

- 4. Yin J, Ngiam KY, Teo HH. Role of artificial intelligence applications in real-life clinical practice: systematic review. J Med Internet Res. 2021 Apr 22;23(4):e25759. doi: 10.2196/25759. https://www.jmir.org/2021/4/e25759/

- 5. Topol EJ. High-performance medicine: the convergence of human and artificial intelligence. Nat Med. 2019 Jan 7;25(1):44–56. doi: 10.1038/s41591-018-0300-7.

- 6. Liu X, Faes L, Kale AU, Wagner SK, Fu DJ, Bruynseels A, Mahendiran T, Moraes G, Shamdas M, Kern C, Ledsam JR, Schmid MK, Balaskas K, Topol EJ, Bachmann LM, Keane PA, Denniston AK. A comparison of deep learning performance against health-care professionals in detecting diseases from medical imaging: a systematic review and meta-analysis. Lancet Digit Health. 2019 Oct;1(6):e271–97. doi: 10.1016/S2589-7500(19)30123-2. https://linkinghub.elsevier.com/retrieve/pii/S2589-7500(19)30123-2

- 7. Liu X, Rivera SC, Moher D, Calvert MJ, Denniston AK, SPIRIT-AI and CONSORT-AI Working Group. Reporting guidelines for clinical trial reports for interventions involving artificial intelligence: the CONSORT-AI Extension. BMJ. 2020 Sep 09;370:m3164. doi: 10.1136/bmj.m3164. http://www.bmj.com/lookup/pmidlookup?view=long&pmid=32909959

- 8. Marwaha JS, Landman AB, Brat GA, Dunn T, Gordon WJ. Deploying digital health tools within large, complex health systems: key considerations for adoption and implementation. NPJ Digit Med. 2022 Jan 27;5(1):13. doi: 10.1038/s41746-022-00557-1.

- 9. Maniatopoulos G, Hunter DJ, Erskine J, Hudson B. Large-scale health system transformation in the United Kingdom. J Health Organ Manag. 2020 Mar 17;34(3):325–44. doi: 10.1108/jhom-05-2019-0144.

- 10. Greenhalgh T, Wherton J, Papoutsi C, Lynch J, Hughes G, A'Court C, Hinder S, Fahy N, Procter R, Shaw S. Beyond adoption: a new framework for theorizing and evaluating nonadoption, abandonment, and challenges to the scale-up, spread, and sustainability of health and care technologies. J Med Internet Res. 2017 Nov 01;19(11):e367. doi: 10.2196/jmir.8775.

- 11. Shinners L, Aggar C, Grace S, Smith S. Exploring healthcare professionals' understanding and experiences of artificial intelligence technology use in the delivery of healthcare: an integrative review. Health Informatics J. 2020 Jun 30;26(2):1225–36. doi: 10.1177/1460458219874641. https://journals.sagepub.com/doi/abs/10.1177/1460458219874641?url_ver=Z39.88-2003&rfr_id=ori:rid:crossref.org&rfr_dat=cr_pub0pubmed

- 12. Young AT, Amara D, Bhattacharya A, Wei ML. Patient and general public attitudes towards clinical artificial intelligence: a mixed methods systematic review. Lancet Digital Health. 2021 Sep;3(9):e599–611. doi: 10.1016/s2589-7500(21)00132-1.

- 13. Miller A, Moon B, Anders S, Walden R, Brown S, Montella D. Integrating computerized clinical decision support systems into clinical work: a meta-synthesis of qualitative research. Int J Med Inform. 2015 Dec;84(12):1009–18. doi: 10.1016/j.ijmedinf.2015.09.005.

- 14. Gulshan V, Peng L, Coram M, Stumpe MC, Wu D, Narayanaswamy A, Venugopalan S, Widner K, Madams T, Cuadros J, Kim R, Raman R, Nelson PC, Mega JL, Webster DR. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA. 2016 Dec 13;316(22):2402–10. doi: 10.1001/jama.2016.17216.

- 15. Knoble SJ, Bhusal MR. Electronic diagnostic algorithms to assist mid-level health care workers in Nepal: a mixed-method exploratory study. Int J Med Inform. 2015 May;84(5):334–40. doi: 10.1016/j.ijmedinf.2015.01.011.

- 16. Recommendation of the Council on Artificial Intelligence. OECD Legal Instruments. [2021-11-04]. https://tinyurl.com/5n6mbe4k

- 17. McCoy LG, Brenna CT, Chen SS, Vold K, Das S. Believing in black boxes: machine learning for healthcare does not need explainability to be evidence-based. J Clin Epidemiol. 2022 Feb;142:252–7. doi: 10.1016/j.jclinepi.2021.11.001.

- 18. Lockwood C, Porritt K, Munn Z, Rittenmeyer L, Salmond S, Bjerrum M, Loveday H, Carrier J, Stannard D. JBI Manual for Evidence Synthesis. Adelaide, Australia: Joanna Briggs Institute; 2020. Chapter 2: Systematic reviews of qualitative evidence.

- 19. Al-Zubaidy M, Hogg HDJ, Maniatopoulos G, Talks J, Teare MD, Keane PA, R Beyer F. Stakeholder perspectives on clinical decision support tools to inform clinical artificial intelligence implementation: protocol for a framework synthesis for qualitative evidence. JMIR Res Protoc. 2022 Apr 01;11(4):e33145. doi: 10.2196/33145. https://www.researchprotocols.org/2022/4/e33145/

- 20. Tong A, Flemming K, McInnes E, Oliver S, Craig J. Enhancing transparency in reporting the synthesis of qualitative research: ENTREQ. BMC Med Res Methodol. 2012 Nov 27;12(1):181. doi: 10.1186/1471-2288-12-181. https://bmcmedresmethodol.biomedcentral.com/articles/10.1186/1471-2288-12-181

- 21. Booth A, Carroll C. How to build up the actionable knowledge base: the role of 'best fit' framework synthesis for studies of improvement in healthcare. BMJ Qual Saf. 2015 Nov 25;24(11):700–8. doi: 10.1136/bmjqs-2014-003642. http://qualitysafety.bmj.com/lookup/pmidlookup?view=long&pmid=26306609

- 22. Booth A, Noyes J, Flemming K, Gerhardus A, Wahlster P, van der Wilt GJ, Mozygemba K, Refolo P, Sacchini D, Tummers M, Rehfuess E. Structured methodology review identified seven (RETREAT) criteria for selecting qualitative evidence synthesis approaches. J Clin Epidemiol. 2018 Jul;99:41–52. doi: 10.1016/j.jclinepi.2018.03.003. https://eprints.whiterose.ac.uk/129465/

- 23. Nilsen P. Making sense of implementation theories, models and frameworks. Implement Sci. 2015 Apr 21;10(1):53. doi: 10.1186/s13012-015-0242-0. https://implementationscience.biomedcentral.com/articles/10.1186/s13012-015-0242-0

- 24. Kislov R, Pope C, Martin GP, Wilson PM. Harnessing the power of theorising in implementation science. Implement Sci. 2019 Dec 11;14(1):103. doi: 10.1186/s13012-019-0957-4. https://implementationscience.biomedcentral.com/articles/10.1186/s13012-019-0957-4

- 25. DeJean D, Giacomini M, Simeonov D, Smith A. Finding qualitative research evidence for health technology assessment. Qual Health Res. 2016 Aug 26;26(10):1307–17. doi: 10.1177/1049732316644429.

- 26. Nagendran M, Chen Y, Lovejoy CA, Gordon AC, Komorowski M, Harvey H, Topol EJ, Ioannidis JP, Collins GS, Maruthappu M. Artificial intelligence versus clinicians: systematic review of design, reporting standards, and claims of deep learning studies. BMJ. 2020 Mar 25;368:m689. doi: 10.1136/bmj.m689. http://www.bmj.com/lookup/pmidlookup?view=long&pmid=32213531

- 27. Rivera SC, Liu X, Chan A, Denniston AK, Calvert MJ, SPIRIT-AI and CONSORT-AI Working Group. Guidelines for clinical trial protocols for interventions involving artificial intelligence: the SPIRIT-AI Extension. BMJ. 2020 Sep 09;370:m3210. doi: 10.1136/bmj.m3210. http://www.bmj.com/lookup/pmidlookup?view=long&pmid=32907797

- 28. Rethlefsen ML, Kirtley S, Waffenschmidt S, Ayala AP, Moher D, Page MJ, Koffel JB, PRISMA-S Group. PRISMA-S: an extension to the PRISMA statement for reporting literature searches in systematic reviews. Syst Rev. 2021 Jan 26;10(1):39. doi: 10.1186/s13643-020-01542-z. https://systematicreviewsjournal.biomedcentral.com/articles/10.1186/s13643-020-01542-z

- 29. Ouzzani M, Hammady H, Fedorowicz Z, Elmagarmid A. Rayyan-a web and mobile app for systematic reviews. Syst Rev. 2016 Dec 05;5(1):210. doi: 10.1186/s13643-016-0384-4. https://systematicreviewsjournal.biomedcentral.com/articles/10.1186/s13643-016-0384-4

- 30. Ng S, Yeatman H, Kelly B, Sankaranarayanan S, Karupaiah T. Identifying barriers and facilitators in the development and implementation of government-led food environment policies: a systematic review. Nutr Rev. 2022 Jul 07;80(8):1896–918. doi: 10.1093/nutrit/nuac016. https://europepmc.org/abstract/MED/35388428

- 31. Jackson JL, Kuriyama A, Anton A, Choi A, Fournier J, Geier A, Jacquerioz F, Kogan D, Scholcoff C, Sun R. The accuracy of Google translate for abstracting data from non–English-language trials for systematic reviews. Ann Intern Med. 2019 Jul 30;171(9):677. doi: 10.7326/m19-0891.

- 32. Rapport F, Smith J, Hutchinson K, Clay-Williams R, Churruca K, Bierbaum M, Braithwaite J. Too much theory and not enough practice? The challenge of implementation science application in healthcare practice. J Eval Clin Pract. 2022 Dec 15;28(6):991–1002. doi: 10.1111/jep.13600.

- 33. Rüppel J. ["Allowing the data to 'speak for themselves'" - the classification of mental disorders and the imaginary of computational psychiatry]. Psychiatr Prax. 2021 Mar 02;48(S 01):S16–20. doi: 10.1055/a-1364-5551.

- 34. Liberati E, Galuppo L, Gorli M, Maraldi M, Ruggiero F, Capobussi M, Banzi R, Kwag K, Scaratti G, Nanni O, Ruggieri P, Polo Friz H, Cimminiello C, Bosio M, Mangia M, Moja L. [Barriers and facilitators to the implementation of computerized decision support systems in Italian hospitals: a grounded theory study]. Recenti Prog Med. 2015 Apr;106(4):180–91. doi: 10.1701/1830.20032.

- 35. Ash JS, Chase D, Baron S, Filios MS, Shiffman RN, Marovich S, Wiesen J, Luensman GB. Clinical decision support for worker health: a five-site qualitative needs assessment in primary care settings. Appl Clin Inform. 2020 Aug 30;11(4):635–43. doi: 10.1055/s-0040-1715895. http://www.thieme-connect.com/DOI/DOI?10.1055/s-0040-1715895

- 36. Benda NC, Das LT, Abramson EL, Blackburn K, Thoman A, Kaushal R, Zhang Y, Ancker JS. "How did you get to this number?" stakeholder needs for implementing predictive analytics: a pre-implementation qualitative study. J Am Med Inform Assoc. 2020 May 01;27(5):709–16. doi: 10.1093/jamia/ocaa021. https://europepmc.org/abstract/MED/32159774

- 37.Sun TQ, Medaglia R. Mapping the challenges of Artificial Intelligence in the public sector: evidence from public healthcare. Gov Inf Q. 2019 Apr;36(2):368–83. doi: 10.1016/j.giq.2018.09.008.

- 38.Cai CJ, Winter S, Steiner D, Wilcox L, Terry M. "Hello AI": uncovering the onboarding needs of medical practitioners for human-AI collaborative decision-making. Proc ACM Human Comput Interact. 2019 Nov 07;3(CSCW):1–24. doi: 10.1145/3359206.

- 39.Beede E, Baylor E, Hersch F, Iurchenko A, Wilcox L, Ruamviboonsuk P, Vardoulakis LM. A human-centered evaluation of a deep learning system deployed in clinics for the detection of diabetic retinopathy. Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems; CHI '20: CHI Conference on Human Factors in Computing Systems; Apr 25 - 30, 2020; Honolulu HI USA. 2020.

- 40.Torenholt R, Langstrup H. Between a logic of disruption and a logic of continuation: negotiating the legitimacy of algorithms used in automated clinical decision-making. Health (London) 2021 Mar 08;:1363459321996741. doi: 10.1177/1363459321996741.

- 41.Clyne B, Cooper JA, Hughes CM, Fahey T, Smith SM, OPTI-SCRIPT study team. A process evaluation of a cluster randomised trial to reduce potentially inappropriate prescribing in older people in primary care (OPTI-SCRIPT study). Trials. 2016 Aug 03;17(1):386. doi: 10.1186/s13063-016-1513-z. https://trialsjournal.biomedcentral.com/articles/10.1186/s13063-016-1513-z

- 42.Mozaffar H, Cresswell KM, Lee L, Williams R, Sheikh A, NIHR ePrescribing Programme Team. Taxonomy of delays in the implementation of hospital computerized physician order entry and clinical decision support systems for prescribing: a longitudinal qualitative study. BMC Med Inform Decis Mak. 2016 Feb 24;16(1):25. doi: 10.1186/s12911-016-0263-x. https://bmcmedinformdecismak.biomedcentral.com/articles/10.1186/s12911-016-0263-x

- 43.Pope C, Turnbull J. Using the concept of hubots to understand the work entailed in using digital technologies in healthcare. J Health Organ Manag. 2017 Aug 21;31(5):556–66. doi: 10.1108/jhom-12-2016-0231.

- 44.Van de Velde S, Kortteisto T, Spitaels D, Jamtvedt G, Roshanov P, Kunnamo I, Aertgeerts B, Vandvik PO, Flottorp S. Development of a tailored intervention with computerized clinical decision support to improve quality of care for patients with knee osteoarthritis: multi-method study. JMIR Res Protoc. 2018 Jun 11;7(6):e154. doi: 10.2196/resprot.9927. https://www.researchprotocols.org/2018/6/e154/

- 45.Jackson BD, Con D, De Cruz P. Design considerations for an eHealth decision support tool in inflammatory bowel disease self-management. Intern Med J. 2018 Jun 12;48(6):674–81. doi: 10.1111/imj.13677.

- 46.Lennox-Chhugani N, Chen Y, Pearson V, Trzcinski B, James J. Women's attitudes to the use of AI image readers: a case study from a national breast screening programme. BMJ Health Care Inform. 2021 Mar 01;28(1):e100293. doi: 10.1136/bmjhci-2020-100293. https://informatics.bmj.com/lookup/pmidlookup?view=long&pmid=33795236

- 47.Laï M, Brian M, Mamzer M. Perceptions of artificial intelligence in healthcare: findings from a qualitative survey study among actors in France. J Transl Med. 2020 Jan 09;18(1):14. doi: 10.1186/s12967-019-02204-y. https://translational-medicine.biomedcentral.com/articles/10.1186/s12967-019-02204-y

- 48.Ash JS, Sittig DF, McMullen CK, Wright A, Bunce A, Mohan V, Cohen DJ, Middleton B. Multiple perspectives on clinical decision support: a qualitative study of fifteen clinical and vendor organizations. BMC Med Inform Decis Mak. 2015 Apr 24;15(1):35. doi: 10.1186/s12911-015-0156-4. https://bmcmedinformdecismak.biomedcentral.com/articles/10.1186/s12911-015-0156-4

- 49.Abdi S, Witte L, Hawley M. Exploring the potential of emerging technologies to meet the care and support needs of older people: a delphi survey. Geriatrics (Basel) 2021 Feb 13;6(1):19. doi: 10.3390/geriatrics6010019. https://www.mdpi.com/resolver?pii=geriatrics6010019

- 50.Abejirinde I-O, Douwes R, Bardají A, Abugnaba-Abanga R, Zweekhorst M, van Roosmalen J, De Brouwere V. Pregnant women's experiences with an integrated diagnostic and decision support device for antenatal care in Ghana. BMC Pregnancy Childbirth. 2018 Jun 05;18(1):209. doi: 10.1186/s12884-018-1853-7. https://bmcpregnancychildbirth.biomedcentral.com/articles/10.1186/s12884-018-1853-7

- 51.Abidi S, Vallis M, Piccinini-Vallis H, Imran SA, Abidi SS. Diabetes-related behavior change knowledge transfer to primary care practitioners and patients: implementation and evaluation of a digital health platform. JMIR Med Inform. 2018 Apr 18;6(2):e25. doi: 10.2196/medinform.9629. https://medinform.jmir.org/2018/2/e25/

- 52.Adams SJ, Tang R, Babyn P. Patient perspectives and priorities regarding artificial intelligence in radiology: opportunities for patient-centered radiology. J Am Coll Radiol. 2020 Aug;17(8):1034–6. doi: 10.1016/j.jacr.2020.01.007.

- 53.Alagiakrishnan K, Wilson P, Sadowski CA, Rolfson D, Ballermann M, Ausford A, Vermeer K, Mohindra K, Romney J, Hayward RS. Physicians' use of computerized clinical decision supports to improve medication management in the elderly - the Seniors Medication Alert and Review Technology intervention. Clin Interv Aging. 2016;11:73–81. doi: 10.2147/CIA.S94126. https://europepmc.org/abstract/MED/26869776

- 54.Alaqra AS, Ciceri E, Fischer-Hubner S, Kane B, Mosconi M, Vicini S. Using PAPAYA for eHealth – Use case analysis and requirements. Proceedings of the Annual IEEE Symposium on Computer-Based Medical Systems; Annual IEEE Symposium on Computer-Based Medical Systems; Jul 28-30, 2020; Rochester, MN, USA. 2020.

- 55.Andrews JA. Applying digital technology to the prediction of depression and anxiety in older adults. White Rose eTheses Online. 2018. [2022-12-22]. https://etheses.whiterose.ac.uk/20620/

- 56.Baysari MT, Del Gigante J, Moran M, Sandaradura I, Li L, Richardson KL, Sandhu A, Lehnbom EC, Westbrook JI, Day RO. Redesign of computerized decision support to improve antimicrobial prescribing. A controlled before-and-after study. Appl Clin Inform. 2017 Sep 13;8(3):949–63. doi: 10.4338/ACI2017040069. https://europepmc.org/abstract/MED/28905978

- 57.Biller-Andorno N, Ferrario A, Joebges S, Krones T, Massini F, Barth P, Arampatzis G, Krauthammer M. AI support for ethical decision-making around resuscitation: proceed with care. J Med Ethics. 2022 Mar;48(3):175–83. doi: 10.1136/medethics-2020-106786.

- 58.Blease C, Kaptchuk TJ, Bernstein MH, Mandl KD, Halamka JD, DesRoches CM. Artificial intelligence and the future of primary care: exploratory qualitative study of UK general practitioners' views. J Med Internet Res. 2019 Mar 20;21(3):e12802. doi: 10.2196/12802. https://www.jmir.org/2019/3/e12802/

- 59.Bourla A, Ferreri F, Ogorzelec L, Peretti C-S, Guinchard C, Mouchabac S. Psychiatrists' attitudes toward disruptive new technologies: mixed-methods study. JMIR Ment Health. 2018 Dec 14;5(4):e10240. doi: 10.2196/10240. https://mental.jmir.org/2018/4/e10240/

- 60.Cameron DH, Zucchero Sarracini C, Rozmovits L, Naglie G, Herrmann N, Molnar F, Jordan J, Byszewski A, Tang-Wai D, Dow J, Frank C, Henry B, Pimlott N, Seitz D, Vrkljan B, Taylor R, Masellis M, Rapoport MJ. Development of a decision-making tool for reporting drivers with mild dementia and mild cognitive impairment to transportation administrators. Int Psychogeriatr. 2017 Sep;29(9):1551–63. doi: 10.1017/S1041610217000242.

- 61.Catho G, Centemero NS, Catho H, Ranzani A, Balmelli C, Landelle C, Zanichelli V, Huttner BD, on behalf of the Q-COMPASS study group. Factors determining the adherence to antimicrobial guidelines and the adoption of computerised decision support systems by physicians: a qualitative study in three European hospitals. Int J Med Inform. 2020 Sep;141:104233. doi: 10.1016/j.ijmedinf.2020.104233. https://linkinghub.elsevier.com/retrieve/pii/S1386-5056(20)30127-1

- 62.Chang W, Liu H-E, Goopy S, Chen L-C, Chen H-J, Han C-Y. Using the five-level Taiwan triage and acuity scale computerized system: factors in decision making by emergency department triage nurses. Clin Nurs Res. 2017 Oct;26(5):651–66. doi: 10.1177/1054773816636360.

- 63.Chirambo GB, Muula AS, Thompson M. Factors affecting sustainability of mHealth decision support tools and mHealth technologies in Malawi. Informatics Med Unlocked. 2019;17:100261. doi: 10.1016/j.imu.2019.100261.

- 64.Chow A, Lye DC, Arah OA. Psychosocial determinants of physicians' acceptance of recommendations by antibiotic computerised decision support systems: a mixed methods study. Int J Antimicrob Agents. 2015 Mar;45(3):295–304. doi: 10.1016/j.ijantimicag.2014.10.009.

- 65.Chrimes D, Kitos NR, Kushniruk A, Mann DM. Usability testing of Avoiding Diabetes Thru Action Plan Targeting (ADAPT) decision support for integrating care-based counseling of pre-diabetes in an electronic health record. Int J Med Inform. 2014 Sep;83(9):636–47. doi: 10.1016/j.ijmedinf.2014.05.002. https://europepmc.org/abstract/MED/24981988

- 66.Collard SS, Regmi PR, Hood KK, Laffel L, Weissberg‐Benchell J, Naranjo D, Barnard‐Kelly K. Exercising with an automated insulin delivery system: qualitative insight into the hopes and expectations of people with type 1 diabetes. Pract Diab. 2020 Feb 06;37(1):19–23. doi: 10.1002/pdi.2255.

- 67.Connell A, Black G, Montgomery H, Martin P, Nightingale C, King D, Karthikesalingam A, Hughes C, Back T, Ayoub K, Suleyman M, Jones G, Cross J, Stanley S, Emerson M, Merrick C, Rees G, Laing C, Raine R. Implementation of a digitally enabled care pathway (part 2): qualitative analysis of experiences of health care professionals. J Med Internet Res. 2019 Jul 15;21(7):e13143. doi: 10.2196/13143. https://www.jmir.org/2019/7/e13143/

- 68.Cresswell K, Callaghan M, Mozaffar H, Sheikh A. NHS Scotland's Decision Support Platform: a formative qualitative evaluation. BMJ Health Care Inform. 2019 May;26(1):e100022. doi: 10.1136/bmjhci-2019-100022. https://informatics.bmj.com/lookup/pmidlookup?view=long&pmid=31160318

- 69.Dalton K, O'Mahony D, Cullinan S, Byrne S. Factors affecting prescriber implementation of computer-generated medication recommendations in the SENATOR trial: a qualitative study. Drugs Aging. 2020 Sep;37(9):703–13. doi: 10.1007/s40266-020-00787-6.

- 70.de Watteville A, Pielmeier U, Graf S, Siegenthaler N, Plockyn B, Andreassen S, Heidegger C-P. Usability study of a new tool for nutritional and glycemic management in adult intensive care: Glucosafe 2. J Clin Monit Comput. 2021 May;35(3):525–35. doi: 10.1007/s10877-020-00502-1.

- 71.Dikomitis L, Green T, Macleod U. Embedding electronic decision-support tools for suspected cancer in primary care: a qualitative study of GPs' experiences. Prim Health Care Res Dev. 2015 Nov;16(6):548–55. doi: 10.1017/S1463423615000109.

- 72.Fan X, Chao D, Zhang Z, Wang D, Li X, Tian F. Utilization of self-diagnosis health chatbots in real-world settings: case study. J Med Internet Res. 2021 Jan 06;23(1):e19928. doi: 10.2196/19928. https://www.jmir.org/2021/1/e19928/

- 73.Flint R, Buchanan D, Jamieson S, Cuschieri A, Botros S, Forbes J, George J. The Safer Prescription of Opioids Tool (SPOT): a novel clinical decision support digital health platform for opioid conversion in palliative and end of life care-a single-centre pilot study. Int J Environ Res Public Health. 2019 May 31;16(11):1926. doi: 10.3390/ijerph16111926. https://www.mdpi.com/resolver?pii=ijerph16111926