Abstract

We represent optimal control functions by neural networks and solve optimal control problems with deep learning techniques. Adjoint sensitivity analysis is applied to train the neural networks embedded in differential equations. This method can be applied not only to classic epidemic control problems but also to epidemic forecasting and the discovery of unknown mechanisms, and the ideas behind it can give new insights into traditional mathematical epidemiological problems.

Keywords: Optimal control, Deep learning, Epidemic models, Adjoint sensitivity analysis

Introduction

Optimal control is a branch of optimization theory that deals with finding a control for a dynamical system over a period of time. It is widely used and plays a critical role in numerous applications in science, engineering and operations research (Betts 2010; Lenhart and Workman 2007). Optimal control also underlies many problems in ecology and epidemiology, such as cost-effectiveness analysis and optimal vaccine distribution (Lenhart and Workman 2007), and many epidemiological problems, such as epidemic forecasting and model discovery, can be regarded as optimal control problems. However, solving optimal control problems efficiently and accurately is challenging. Most real-world optimal control problems have no explicit solution and rely on numerical methods such as direct methods, indirect methods or dynamic programming (Betts 2010). Direct methods transform an optimal control problem into a nonlinear programming problem (NLP), so that well-developed and mature NLP optimizers can be applied at low cost. But direct methods do not use adjoint information such as Pontryagin’s maximum principle (Pontryagin 1962) or the Hamilton–Jacobi–Bellman equation (Bellman 1966), which makes them less accurate. Indirect methods use Pontryagin’s maximum principle (Pontryagin 1962) to transform an optimal control problem into a two-point boundary value problem (TPBVP), but solving the TPBVP depends on a good initial guess. Dynamic programming methods solve a Hamilton–Jacobi–Bellman equation (Bellman 1966), but face the well-known Bellman curse of dimensionality (Bellman 1966). Hence, new approaches that tackle optimal control problems efficiently and accurately still merit further study.

In recent years, deep neural networks (LeCun et al. 2015; Goodfellow et al. 2016), as universal approximators for unknown functions or operators (Hornik et al. 1990; Lu et al. 2021), have shown an unreasonable effectiveness (Sejnowski 2020) in solving traditionally difficult problems, such as pattern recognition, learning unknown mechanisms, image recognition (He et al. 2016), and natural language processing (Devlin et al. 2018). Deep neural networks can even aid mathematicians in discovering new conjectures and theorems (Davies et al. 2021), and physicists in finding new physical laws (Cranmer et al. 2020). To better understand deep learning methods and improve their performance on scientific problems in physics, chemistry, ecology and epidemiology, more and more works couple or embed differential equations and deep neural networks. Such attempts can be traced back at least to the 1990s, when partial differential equations were solved by neural networks (Dissanayake and Phan-Thien 1994; Lagaris et al. 1998). One important idea is to regard a deep neural network (DNN) as a numerical discretization scheme of an ordinary or partial differential equation, which inspires researchers to redesign traditional neural architectures based on discretization methods (Niu et al. 2019; Chen 2019; Lu et al. 2018; Ruthotto and Haber 2020) or to replace the DNN by a continuous differential equation (Chen et al. 2018; De Brouwer et al. 2019; Rubanova et al. 2019). Another revolutionary idea is to train DNNs from the perspective of optimal control theory. This extends the idea of backpropagation (Goodfellow et al. 2016) for functions to include adjoint sensitivity analysis (Cao et al. 2003) for dynamical systems, which opens up differentiable programming (Baydin et al. 2018) and inspires many architectures coupling neural networks and differential equations, such as neural differential equations (Chen et al. 2018) and universal differential equations, which embed neural networks into differential equations (Rackauckas et al. 2020).

Despite the remarkable progress in coupling differential equations and deep neural networks, deep learning methods like universal differential equations (Rackauckas et al. 2020) have not attracted enough attention (Song and Xiao 2022) and are not yet widely used in epidemiological problems such as epidemic control, epidemic forecasting and epidemic model discovery. This may be because coupling transmission models with deep learning requires a strong background in both mathematical epidemiology and deep learning, as well as a basic understanding of differentiable programming and scientific computing, which cannot always be expected in these fields. To fill this gap, we introduce universal differential equations and apply them to solve optimal epidemic control problems, which also gives new insights into traditional mathematical epidemiological problems.

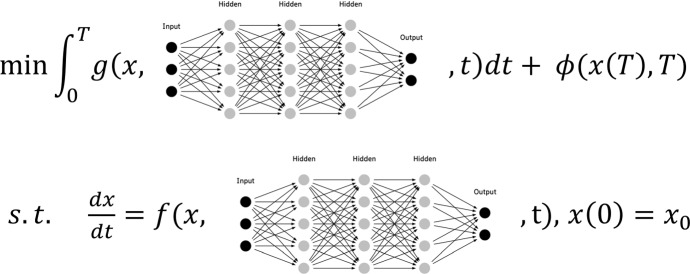

In this paper, we will consider the following optimal control problem in Bolza form (Betts 2010; Lenhart and Workman 2007):

| 1 |

where the functions , , and are assumed to be continuously differentiable. By representing the optimal control function u(t) as a neural network

| 2 |

receiving t and x as inputs (see Fig. 1), the optimal control problem (1) becomes a parameter optimization problem as follows:

| 3 |

which can be solved by modern differentiable programming methods such as automatic differentiation (Baydin et al. 2018; Ma et al. 2021) and adjoint sensitivity analysis (Cao et al. 2003), together with mature first-order optimizers like Adam (Kingma and Ba 2014; Kochenderfer and Wheeler 2019, Chapter 5) or second-order optimizers like L-BFGS (Jorge and Stephen 2006; Kochenderfer and Wheeler 2019, Chapter 6). The last layer of the neural network is defined as

| 4 |

where is sigmoid function. The last layer can be regarded as a hard constraint to satisfy in optimal control problem (1).
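The effect of a sigmoid output layer as a hard bound can be illustrated with a minimal Python sketch. The bound names `U_MIN` and `U_MAX`, their values, and the helper `bounded_output` are assumptions made for illustration only; the sketch does not reproduce the exact form of (4).

```python
import math

def sigmoid(z):
    """Numerically stable logistic sigmoid."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def bounded_output(z, u_min, u_max):
    """Map an unconstrained pre-activation z into the interval [u_min, u_max]."""
    return u_min + (u_max - u_min) * sigmoid(z)

# Hypothetical bounds on a control such as a vaccination rate.
U_MIN, U_MAX = 0.0, 0.8
controls = [bounded_output(z, U_MIN, U_MAX) for z in (-50.0, -1.0, 0.0, 1.0, 50.0)]
```

Whatever value the preceding layers produce, the control stays inside the admissible interval, so no separate projection or penalty term is needed for the bound.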

Fig. 1.

Scheme of deep learning method to solve optimal control problem (1)

To better introduce deep learning techniques, we focus on optimal control problems without path constraints. However, general optimal control problems with additional continuously differentiable path constraints condition ,

| 5 |

can be transformed into optimal control problem (1) by the augmented Lagrangian method (Ito and Kunisch 1990), the penalty method (Jorge and Stephen 2006, Chapter 17), or barrier and relaxed barrier methods (Auslender 1999; Feller and Ebenbauer 2016).

Optimal control is a broad concept and is the core or engine of many real-world problems; even training a deep learning model is, in essence, solving an optimal control problem (Benning et al. 2019). Many data-driven epidemic problems can be regarded as, or transformed into, optimal control problems. When the control function u(t) is fixed as a constant vector, epidemic model calibration is a special case of optimal control problem (1). Moreover, epidemic forecasting and epidemic model discovery can also be treated as optimal control problems. Let u(t) be some unknown epidemic mechanism that needs to be learned by minimizing a loss function

where is observation data, then the objective function in (1) can be represented by Dirac function

The rest of the paper is organized as follows. In Sect. 2, we will introduce deep learning techniques (universal differential equation method) and algorithm to train the neural networks embedded in differential equations. In Sect. 3, we will briefly review traditional methods to solve optimal control problems including direct, indirect and dynamic programming methods, compare deep learning method with these methods, and see how traditional methods can be combined with deep learning method. We will give several examples in Sect. 4 including classic optimal control, epidemic forecasting and model discovery problems. Conclusion and discussion will be in Sect. 5.

Universal differential equation method

A universal differential equation (UDE) (Rackauckas et al. 2020) is a differential equation with universal approximators embedded. “Universal” here refers to universal approximators, which can be neural networks, Gaussian processes, decision trees, Chebyshev polynomials, Fourier series or other parametric or nonparametric approximators. Throughout this paper, we use neural networks as the universal approximator. UDE couples differential equations and deep learning architectures for scientific machine learning; it can extrapolate accurately and efficiently beyond the original, partially observed data and yields differentiable, fully observed fits, all in a time- and data-efficient manner. In this section, we introduce deep neural networks and universal differential equations, and show how to solve the parameter optimization problem (3) with an embedded neural network.

Deep neural network

A deep neural network (DNN), as a universal approximator or feature extractor, is a compositional function that applies linear and nonlinear transformations to its inputs recursively (see the diagram of a deep neural network in Fig. 1). In recent years, many deep neural network architectures have been proposed (Goodfellow et al. 2016), such as the feed-forward neural network (FNN, also called multi-layer perceptron (MLP)), convolutional neural network (CNN), recurrent neural network (RNN), long short-term memory (LSTM) and gated recurrent unit (GRU). Throughout this paper, we only use the simplest feed-forward architecture, which is sufficient for approximating optimal control functions. However, the core ideas and training techniques also extend to more complicated architectures. For example, CNNs can be applied to spatial epidemic models with human or host diffusion.

Let be an L-layer feed-forward neural network, also called as -hidden layer neural network. denotes the number of neurons in the kth layer with and . Denote the weight matrix and bias vector in the kth layer by and , respectively. Then the FNN can be recursively defined as follows:

input layer: .

hidden layers: for .

output layer: ,

where is a matrix or tensor, and is a nonlinear activation function, and can be logistic sigmoid, the hyperbolic tangent (tanh), the rectified linear unit (ReLU, ) and swish function () or other activation functions (see Dubey et al. 2022 for review). Here, activation functions in different layers can be different.
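The recursive definition above can be sketched in a few lines of Python. This is only an illustration under our own assumptions: the layer sizes, the uniform weight initialization, the zero biases and the helper names `init_fnn`, `affine` and `fnn` are hypothetical and are not taken from the paper's implementation.

```python
import math
import random

def init_fnn(sizes, seed=0):
    """Create (weight matrix, bias vector) pairs for consecutive layer sizes."""
    rng = random.Random(seed)
    params = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        scale = 1.0 / math.sqrt(n_in)
        weights = [[rng.uniform(-scale, scale) for _ in range(n_in)]
                   for _ in range(n_out)]
        params.append((weights, [0.0] * n_out))
    return params

def affine(weights, bias, x):
    """Linear transformation W x + b."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]

def fnn(params, x, activation=math.tanh):
    """Input layer, then hidden layers with activation, then a linear output layer."""
    h = list(x)
    for weights, bias in params[:-1]:
        h = [activation(z) for z in affine(weights, bias, h)]
    weights, bias = params[-1]
    return affine(weights, bias, h)

# Two inputs (for example t and x), two hidden layers of 16 neurons, one output.
params = init_fnn([2, 16, 16, 1])
y = fnn(params, [0.5, 1.0])
```

Passing a different `activation` (or a list of activations, with a small change to `fnn`) covers the remark that activation functions may differ between layers.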

The most fundamental property of DNNs is that a single hidden layer neural network can simultaneously and uniformly approximate a function and its partial derivatives, a result often called the universal approximation theorem. Let be d-dimensional nonnegative integers set. For , set , and

We say if for all , where is the space of continuous functions. Then we have the following universal approximation theorem for derivatives using single hidden layer neural networks (Pinkus 1999).

Theorem 1

(Pinkus 1999) Let , and set . Assume and that is not a polynomial. Then a single hidden layer neural network:

is dense in

The universal approximation theorem ensures theoretically that deep neural networks can approximate unknown mappings or operators by directly learning the entire set of nonlinear interactions from data. In practice, deep neural networks readily find good surrogates to fit data or calibrate models. More importantly, a good surrogate does not need to be unique, which supports the practical belief that, for large neural networks, a local minimum is good enough and the global minimum often leads to over-fitting; this belief is still under theoretical investigation.

Universal differential equation

Before UDE, we first introduce neural differential equations (Chen et al. 2018), which inspired the ideas in UDE and can be regarded as a special case of UDE. Neural differential equations are initial value problems with the following form:

| 6 |

where is a deep neural network receiving [u, t] as input and is weight parameter vector. From the perspective of deep learning, neural differential equations are redesigned sequential neural networks based on numerical schemes of differential equation, and they can be regarded as continuous-depth or “infinitely deep” ResNet-like deep learning models. Since the embedded neural network in Neural ODE is a universal approximator, it follows that neural differential equations can learn to approximate any differential equation with sufficient regularity.

However, neural differential equations have not broken away from the category of deep learning and are still purely data-driven methods that include no prior knowledge or known mechanisms. UDE extends the previous data-driven neural ODE approaches to use mechanistic modeling directly, together with universal approximators. UDEs are initial value problems of the following form:

| 7 |

where f is a knowledge based or known mechanistic model and denotes the missing or unknown terms, and are parameters of known mechanisms and neural networks, respectively, which can be estimated simultaneously. UDE is a data-driven, knowledge-based method with stronger explainability than black-box deep neural networks or neural differential equations, since it keeps the known mechanisms from physics, chemistry, ecology or epidemiology. UDE has been shown to generalize well and to be trainable with less sample data (Rackauckas et al. 2020).
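To make the split between known and unknown terms concrete, the following Python sketch builds the right-hand side of a small SIR-type UDE in which recovery is the known mechanism and the force of infection is replaced by a tiny neural network. Everything specific here is an assumption for illustration: the recovery rate `GAMMA`, the fixed network weights, the softplus output and the RK4 integrator are ours and do not reproduce model (7) or the paper's Julia code.

```python
import math

GAMMA = 0.2  # known mechanism: recovery rate (hypothetical value)

# Fixed weights of a one-hidden-layer network standing in for the unknown term.
# In an actual UDE these are the trainable parameters.
W1, B1 = [0.8, -0.5, 0.3], [0.1, 0.0, -0.2]
W2, B2 = [0.6, 0.4, -0.7], 0.2

def nn_force_of_infection(i):
    """Neural surrogate for the unknown force of infection (softplus keeps it positive)."""
    hidden = [math.tanh(w * i + b) for w, b in zip(W1, B1)]
    z = sum(w * h for w, h in zip(W2, hidden)) + B2
    return math.log1p(math.exp(z))

def ude_rhs(state):
    """Known recovery term plus learned infection term."""
    s, i, r = state
    infection = nn_force_of_infection(i) * s  # unknown mechanism, learned
    recovery = GAMMA * i                      # known mechanism
    return [-infection, infection - recovery, recovery]

def rk4_step(f, y, h):
    k1 = f(y)
    k2 = f([a + 0.5 * h * b for a, b in zip(y, k1)])
    k3 = f([a + 0.5 * h * b for a, b in zip(y, k2)])
    k4 = f([a + h * b for a, b in zip(y, k3)])
    return [a + h / 6 * (b + 2 * c + 2 * d + e)
            for a, b, c, d, e in zip(y, k1, k2, k3, k4)]

state = [0.99, 0.01, 0.0]  # fractions S, I, R
for _ in range(500):       # 50 time units with step 0.1
    state = rk4_step(ude_rhs, state, 0.1)
```

Because the known structure is kept, the total population is conserved regardless of the network weights, which is one way in which the mechanistic part constrains the learned part.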

Adjoint sensitivity analysis and training techniques

Now it remains to solve the differential equation constrained parameter optimization problem (3). Let be solution map of the ODE

Then , which transform optimization problem (3) to an unconstrained optimization problem

| 8 |

Except for the solution map , problem (8) is an ordinary unconstrained problem that concerns only the parameter . However, first-order optimizers like Adam (Kingma and Ba 2014; Kochenderfer and Wheeler 2019, Chapter 5) or second-order optimizers like L-BFGS (Jorge and Stephen 2006; Kochenderfer and Wheeler 2019, Chapter 6) cannot be applied directly to the unconstrained optimization problem (8) for large neural networks. The reason is that standard “backpropagation” (Goodfellow et al. 2016) no longer applies, because the loss depends on the parameters through the ODE solution map. To apply modern differentiable programming techniques, we need to evaluate

| 9 |

This is not a trivial task, at least for calculation Assume that is -dimensional, to calculate , we need to solve the following dimensional ODE:

| 10 |

where are dense jacobians also needing a lot of computation resources. In large neural networks ( is large), for every step in the loop of optimization, we will need to solve dimensional ODE, which spends huge computational resources.
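The growth in dimension can be seen on a toy problem. The Python sketch below applies forward sensitivity analysis to a scalar ODE with two parameters, so that the augmented system has 1 + 1·2 = 3 equations; with n states and p parameters it would have n + n·p. The ODE, the parameter values and the helper names are assumptions chosen because a closed-form solution is available for comparison.

```python
import math

THETA1, THETA2, Y0, T, STEPS = 1.5, 0.5, 1.0, 2.0, 400

def augmented_rhs(z):
    """State y (n = 1) plus one sensitivity equation per parameter (n * p = 2)."""
    y, s1, s2 = z
    return [-THETA1 * y + THETA2,  # y'  = f(y, theta)
            -THETA1 * s1 - y,      # s1' = f_y * s1 + f_theta1
            -THETA1 * s2 + 1.0]    # s2' = f_y * s2 + f_theta2

def rk4(f, z, h, steps):
    for _ in range(steps):
        k1 = f(z)
        k2 = f([a + 0.5 * h * b for a, b in zip(z, k1)])
        k3 = f([a + 0.5 * h * b for a, b in zip(z, k2)])
        k4 = f([a + h * b for a, b in zip(z, k3)])
        z = [a + h / 6 * (b + 2 * c + 2 * d + e)
             for a, b, c, d, e in zip(z, k1, k2, k3, k4)]
    return z

y_T, dy_dtheta1, dy_dtheta2 = rk4(augmented_rhs, [Y0, 0.0, 0.0], T / STEPS, STEPS)

# Closed-form values of the same quantities, for comparison.
decay, ratio = math.exp(-THETA1 * T), THETA2 / THETA1
exact_dtheta1 = -THETA2 / THETA1**2 * (1 - decay) - T * (Y0 - ratio) * decay
exact_dtheta2 = (1 - decay) / THETA1
```

For a neural network with thousands of weights, each weight would add n further equations to `augmented_rhs`, which is the cost that the adjoint approach avoids.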

Therefore, the key issue in solving the parameter optimization problem (3) is how to implement “backpropagation” (Goodfellow et al. 2016) for differential equations. Fortunately, this is not a new story in optimal control theory. “Backpropagation” for differential equations dates back to the 1960s, when Pontryagin’s maximum principle (Pontryagin 1962) was developed. From the perspective of optimal control, training neural differential equations can be regarded as an optimal control problem, which extends the idea of backpropagation (Goodfellow et al. 2016) in differentiable programming to include adjoint sensitivity analysis (Cao et al. 2003).

In what follows, we will introduce adjoint sensitivity analysis in detail. With adjoint sensitivity analysis results, first order optimizers like Adam (Kingma and Ba 2014) can be directly applied with no mystery even for large neural networks. For simplicity, we consider the following reformulated differential equation constrained parameter optimization problem:

| 11 |

Here y can be regarded as neural networks (neural differential equations) or dynamics including neural networks (universal differential equations). Note that optimal control problem (3) can be rewritten to (11) by Dirac function

Moreover, if the optimal control problem is data-driven and the loss function is discrete, i.e.,

we can rewrite discrete loss function by Dirac function

Our purpose here is to derive the “backpropagation” of derivatives of to . By introducing adjoint variable , we obtain

Then

Let

| 12 |

We obtain

| 13 |

If the loss function is discrete, i.e.,

then system (12) becomes an impulsive system

| 14 |

We have the following adjoint sensitivity result:

Theorem 2

Let

where and the functions , are continuously differentiable. Then we have

| 15 |

From Theorem 2, to calculate , we only need to solve a 2n-dimensional ODE. This dimension does not depend on the dimension of the parameter , which makes coupling large-scale neural networks with differential equations feasible. Two approaches are available to numerically solve in (15). One is the continuous adjoint method, also called the differentiate-then-discretize approach, which applies a numerical discretization to the adjoint ODE () in (15). The other is the discrete adjoint method, also called the discretize-then-differentiate approach, which first numerically approximates the forward system (y(t)) and then obtains the adjoint of the discretized system. A detailed comparison of these two ways of evaluating adjoint sensitivities is beyond our scope; see Zhang and Sandu (2014) and Ma et al. (2021). The algorithm for training the universal differential equation is shown in Algorithm 1.
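A continuous adjoint computation can be sketched on a scalar example. The Python code below uses one common sign convention (adjoint equation λ' = −g_y − f_y λ with λ(T) = 0, gradient ∫ λ f_θ dt), which may differ from the convention of (15); the test problem y' = −θy with running loss y², the parameter values and all function names are assumptions chosen so that the gradient is known in closed form.

```python
import math

THETA, Y0, T, STEPS = 1.5, 1.0, 1.0, 400

def f(y):        # dynamics y' = f(y, theta)
    return -THETA * y

def f_y(y):      # partial derivative of f with respect to y
    return -THETA

def f_theta(y):  # partial derivative of f with respect to theta
    return -y

def g_y(y):      # derivative of the running loss g(y) = y**2
    return 2.0 * y

def rk4(rhs, z, h, steps):
    for _ in range(steps):
        k1 = rhs(z)
        k2 = rhs([a + 0.5 * h * b for a, b in zip(z, k1)])
        k3 = rhs([a + 0.5 * h * b for a, b in zip(z, k2)])
        k4 = rhs([a + h * b for a, b in zip(z, k3)])
        z = [a + h / 6 * (b + 2 * c + 2 * d + e)
             for a, b, c, d, e in zip(z, k1, k2, k3, k4)]
    return z

# Forward pass: only the state is integrated and only y(T) is kept.
y_T = rk4(lambda z: [f(z[0])], [Y0], T / STEPS, STEPS)[0]

def backward_rhs(z):
    """State, adjoint, and the accumulated gradient integral."""
    y, lam, _ = z
    return [f(y),
            -g_y(y) - f_y(y) * lam,   # adjoint equation
            -lam * f_theta(y)]        # d/dt of the integral of lam * f_theta over [t, T]

# Backward pass: integrate from t = T down to t = 0 with a negative step.
_, lam_0, grad_adjoint = rk4(backward_rhs, [y_T, 0.0, 0.0], -T / STEPS, STEPS)

# Closed-form gradient of the loss integral of y**2 over [0, T].
e2 = math.exp(-2 * THETA * T)
grad_exact = Y0**2 * (2 * THETA * T * e2 - (1 - e2)) / (2 * THETA**2)
```

The backward system has one state and one adjoint component per state variable (2n in total) plus one quadrature per parameter; the quadratures do not feed back into the dynamics, so adding parameters does not enlarge the ODE that has to be solved.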

Comparison and combination with traditional optimal control methods

We briefly review traditional methods for solving optimal control problems, including direct, indirect and dynamic programming methods, compare deep learning methods with them, and show how traditional methods can be combined with deep learning to improve training efficiency and reduce training time. This part can also be regarded as supplementary material for Optimal Control Applied to Biological Models (Lenhart and Workman 2007). All methods mentioned are shown in Fig. 2.

Fig. 2.

Optimal control methods including direct, indirect, dynamic programming and deep learning

Direct method

The core of a direct method is to transform the original optimal control problem (1) into a nonlinear programming problem (NLP) without using adjoint information, so that well-developed and mature NLP optimizers can be applied at low cost. These NLP optimizers include Ipopt (open source, Wächter and Biegler (2006)), SNOPT (limited academic license, Gill et al. (2005)), Knitro (commercial with limited academic license, Byrd et al. (2006)) and so on (see the review by Kronqvist et al. (2019) for more details). Compared with indirect and dynamic programming methods, direct methods do not need adjoint information such as Pontryagin’s maximum principle (Pontryagin 1962) or the Hamilton–Jacobi–Bellman equation (Bellman 1966). This makes direct methods less accurate, but easy to pose and widely used for highly complicated nonlinear optimal control problems in real-life applications.

Direct methods are quite broad and encompass many techniques (Betts 2010), which differ in how the control and state are discretized and how the differential equations are handled. These transcription methods can be divided into two main classes: shooting methods and collocation methods. Shooting methods include direct single shooting (Hicks and Ray 1971) and multiple shooting (Bock and Plitt 1984). Direct single shooting is the simplest method for transcribing an optimal control problem into an NLP. It first parameterizes the control function u(t) by surrogates like polynomials, piecewise polynomials, or simply piecewise constants, then uses embedded numerical ODE solvers to eliminate the differential equations, and applies adjoint sensitivity analysis (Cao et al. 2003) to compute the Jacobians of the continuity and boundary conditions with respect to the parameters. Choose a fixed time grid

and set the control function

where the parameter c is an mN-dimensional vector. Using a robust ODE solver, we obtain x(t, c), which transcribes the optimal control problem into the following NLP:

| 16 |
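As an illustration of direct single shooting, the Python sketch below solves a small linear-quadratic problem of our own choosing (x' = −x + u, x(0) = 1, minimize the integral of x² + u² over [0, 1]) with a piecewise-constant control. The test problem, the grid sizes, the finite-difference gradient and the plain gradient-descent loop are all simplifying assumptions; a practical implementation would use adjoint sensitivities and an NLP optimizer as described above.

```python
N_SEG, T, SUB = 5, 1.0, 20  # control segments, horizon, RK4 substeps per segment

def simulate(c, x0=1.0):
    """Integrate x' = -x + u with piecewise-constant u = c[k] and return the cost."""
    h = T / (N_SEG * SUB)

    def rhs(x, u):
        return -x + u, x * x + u * u  # state derivative, running cost

    x, cost = x0, 0.0
    for u in c:
        for _ in range(SUB):
            k1x, k1j = rhs(x, u)
            k2x, k2j = rhs(x + 0.5 * h * k1x, u)
            k3x, k3j = rhs(x + 0.5 * h * k2x, u)
            k4x, k4j = rhs(x + h * k3x, u)
            x += h / 6 * (k1x + 2 * k2x + 2 * k3x + k4x)
            cost += h / 6 * (k1j + 2 * k2j + 2 * k3j + k4j)
    return cost

def gradient(c, eps=1e-6):
    """Central finite differences; stands in for adjoint sensitivities."""
    grad = []
    for k in range(len(c)):
        up = c[:k] + [c[k] + eps] + c[k + 1:]
        down = c[:k] + [c[k] - eps] + c[k + 1:]
        grad.append((simulate(up) - simulate(down)) / (2 * eps))
    return grad

c = [0.0] * N_SEG            # initial guess: no control
cost_initial = simulate(c)
for _ in range(100):         # plain gradient descent on the NLP variables
    c = [ck - 1.0 * gk for ck, gk in zip(c, gradient(c))]
cost_final = simulate(c)
```

The only decision variables are the N_SEG control values; the state never appears in the NLP because the ODE solver eliminates it.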

Multiple shooting (Bock and Plitt 1984) breaks the time grid into a number of segments and uses single shooting on each segment. The idea behind direct multiple shooting stems from the observation that long integration of the dynamics can be counterproductive when transcribing continuous optimal control problems into NLPs, and that this problem can be tackled by limiting the integration to arbitrarily short time intervals. In contrast to single shooting, multiple shooting solves the ODE separately on each interval ,

which transcribes the optimal control problem into the following NLP:

| 17 |
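The extra ingredient of multiple shooting is the set of continuity (defect) constraints that glue the segments together. The Python sketch below evaluates these defects for the toy dynamics x' = −x + u with a constant control on each segment; the dynamics, the node values and the names `integrate` and `defects` are assumptions for illustration.

```python
def integrate(x, u, length, steps=20):
    """RK4 for x' = -x + u over one segment with constant control u."""
    h = length / steps

    def f(x_):
        return -x_ + u

    for _ in range(steps):
        k1 = f(x)
        k2 = f(x + 0.5 * h * k1)
        k3 = f(x + 0.5 * h * k2)
        k4 = f(x + h * k3)
        x += h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
    return x

def defects(s, c, length):
    """End state of segment i minus the start state s[i + 1] of the next segment."""
    return [integrate(s[i], c[i], length) - s[i + 1] for i in range(len(s) - 1)]

N_SEG, T = 5, 1.0
c = [-0.3] * N_SEG  # one control value per segment

# Node states generated by a single continuous trajectory: all defects vanish.
s_consistent = [1.0]
for i in range(N_SEG - 1):
    s_consistent.append(integrate(s_consistent[-1], c[i], T / N_SEG))

# An arbitrary initial guess for the node states: defects are nonzero, and the
# NLP solver drives them to zero as equality constraints.
s_guess = [1.0, 0.8, 0.6, 0.5, 0.4]
d_consistent = defects(s_consistent, c, T / N_SEG)
d_guess = defects(s_guess, c, T / N_SEG)
```

Each segment can be integrated independently of the others, which shortens the integration horizon per solve and also allows the segments to be processed in parallel.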

In direct collocation methods, both state and control functions are parameterized by surrogates like high-order polynomials, which avoids the adjoint sensitivity analysis of direct shooting methods at the cost of a larger NLP. The collocation points (knots) for these methods are located at the roots of an orthogonal polynomial, for example from the Chebyshev or Legendre family (Darby et al. 2011). In contrast to direct shooting, the state function is approximated by a combination of polynomial basis functions. For time interval , choose collocation points

and set

and the Lagrange basis for example,

Then the optimal control problem can be transcribed into the following sparse NLP:

| 18 |

where the derivatives are those of polynomials and the integral can be handled by methods like Gaussian quadrature. To increase the accuracy of the direct collocation method, one can increase either the number of trajectory segments (h-method) or the order of the polynomial in each segment (p-method). One important reason to use high-order orthogonal polynomials in direct collocation is that they provide accurate approximations that converge exponentially for problems whose solutions are smooth (Rao 2009).
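The role of the Lagrange basis can be illustrated by building the differentiation matrix on Chebyshev nodes and evaluating the collocation residual of a known trajectory. In the Python sketch below, the test dynamics x' = −x, the number of nodes and the function names are assumptions; the differentiation matrix uses the standard barycentric formula for derivatives of Lagrange basis polynomials.

```python
import math

def chebyshev_nodes(n, a, b):
    """Roots of the degree-n Chebyshev polynomial, mapped from [-1, 1] to [a, b]."""
    return [0.5 * (a + b) + 0.5 * (b - a) * math.cos((2 * k + 1) * math.pi / (2 * n))
            for k in range(n)]

def differentiation_matrix(nodes):
    """D[i][j] is the derivative of the j-th Lagrange basis polynomial at node i."""
    n = len(nodes)
    w = [1.0 / math.prod(nodes[j] - nodes[k] for k in range(n) if k != j)
         for j in range(n)]  # barycentric weights
    D = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j:
                D[i][j] = (w[j] / w[i]) / (nodes[i] - nodes[j])
        D[i][i] = -sum(D[i])  # rows sum to zero: constants have zero derivative
    return D

N_NODES = 10
nodes = chebyshev_nodes(N_NODES, 0.0, 1.0)
D = differentiation_matrix(nodes)

# Collocation residual of x' = -x for the exact trajectory x(t) = exp(-t).
x = [math.exp(-t) for t in nodes]
residual = [sum(D[i][j] * x[j] for j in range(N_NODES)) + x[i]
            for i in range(N_NODES)]
max_residual = max(abs(r) for r in residual)
```

In a direct collocation NLP the node values `x` are unknowns and the residuals become equality constraints; the tiny residual obtained here with only ten nodes reflects the fast convergence for smooth solutions mentioned above.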

Indirect method

The essence of indirect methods is to convert the optimal control problem (1) into a fixed-point problem via Pontryagin’s maximum principle (Pontryagin 1962). Indirect methods use the Hamiltonian function

| 19 |

to derive the necessary conditions for optimality, which form a two-point boundary value problem (TPBVP),

| 20 |

where and

| 21 |

Note that for the state function x(t) the boundary condition is given at the initial time, while for the adjoint function it is given at the final time. Thus TPBVP (20) cannot be solved by simply integrating forward with a numerical initial value ODE solver (Rackauckas and Nie 2017). Similar to direct methods, shooting methods or collocation (pseudo-spectral) methods are often applied to solve the TPBVP (Betts 2010). The ideas behind the indirect shooting and indirect collocation methods are similar to those of the direct methods, so we omit the details here. Moreover, the TPBVP can be derived equivalently from Pontryagin’s maximum principle (Pontryagin 1962), the calculus of variations or dynamic programming. We do not give details here since this is not our focus; see Li and Yong (1995, Chapter 4).
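An indirect shooting method can be sketched on a toy linear-quadratic problem. The Python code below assumes x' = −x + u, x(0) = 1 and running cost x² + u² on [0, 1], with Hamiltonian H = x² + u² + λ(−x + u); this sign convention and the test problem are our assumptions and may differ from (19). The unknown initial costate λ(0) is adjusted by the secant method until the terminal condition λ(T) = 0 holds.

```python
T, STEPS = 1.0, 200

def terminal_costate(lam0, x0=1.0):
    """Integrate the state-costate system forward from (x0, lam0); return lambda(T)."""
    h = T / STEPS

    def rhs(x, lam):
        u = -0.5 * lam                 # stationarity: dH/du = 2u + lam = 0
        return -x + u, -2.0 * x + lam  # x' = dH/dlam, lam' = -dH/dx

    x, lam = x0, lam0
    for _ in range(STEPS):
        k1 = rhs(x, lam)
        k2 = rhs(x + 0.5 * h * k1[0], lam + 0.5 * h * k1[1])
        k3 = rhs(x + 0.5 * h * k2[0], lam + 0.5 * h * k2[1])
        k4 = rhs(x + h * k3[0], lam + h * k3[1])
        x += h / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0])
        lam += h / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1])
    return lam

# Secant iteration on the shooting residual lam0 -> lambda(T).
a, b = 0.0, 1.0                        # two initial guesses for lambda(0)
ra, rb = terminal_costate(a), terminal_costate(b)
for _ in range(20):
    if abs(rb) < 1e-12:
        break
    a, b, ra = b, b - rb * (b - a) / (rb - ra), rb
    rb = terminal_costate(b)
lam0 = b
u0 = -0.5 * lam0                       # optimal control at t = 0
```

For this linear problem the residual is affine in λ(0), so the secant method converges in one step from any pair of guesses; for nonlinear dynamics the quality of the initial guesses matters, which is the sensitivity mentioned above.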

Compared with direct methods, indirect methods include adjoint information of optimal control problem (1) and are therefore more accurate. However, deriving the TPBVP by Pontryagin’s maximum principle (Pontryagin 1962) or the Hamilton–Jacobi–Bellman equation (Bellman 1966) makes optimal control problem (1) hard to pose, so it is not easy to solve highly complicated nonlinear optimal control problems in real-life applications. Moreover, solving the TPBVP depends heavily on a good initial guess, which cannot easily be obtained for large optimal control problems.

Dynamic programming method

Dynamic programming (DP) was developed in the 1950s and 1960s, most prominently by Bellman (1966), and now attracts much attention due to the popularity of reinforcement learning (Sutton and Barto 2018). Thanks to the Bellman principle, optimal control problem (1) can be transformed into the following Hamilton–Jacobi–Bellman (HJB) equation

| 22 |

where H is Hamiltonian defined as (19), V(x, t) is the value function

After solving the HJB equation (22), the optimal control function can be obtained from

and the state function is the solution of the following ODE

However, solving the HJB equation (22) is not an easy task and faces the well-known Bellman curse of dimensionality. The easiest way to solve the HJB equation (22) is to use DP to directly discretize the state space, control space and time. Assume that each state dimension has alternative values, each control dimension has alternative values and time [0, T] is divided into N segments, then the computational complexity will be . Due to the curse of dimensionality, simple DP can only be used for low-dimensional optimal control problems. To reduce the computational complexity, methods like Differential Dynamic Programming (DDP) (Jacobson and Mayne 1970) or iterative LQR (iLQR) (Li and Todorov 2004) were proposed to break the problem into a cascade of subproblems. Approximate dynamic programming methods like DDP are fast and have a low memory footprint, making them amenable to embedded implementation. However, DDP and iLQR are less numerically robust and less well suited to handling nonlinearity in the state and constraints.
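Grid-based dynamic programming and its cost can be illustrated with a one-dimensional example. The Python sketch below assumes the toy problem x' = −x + u with running cost x² + u² on [0, 1], an explicit Euler time discretization and linear interpolation of the value function; the grid sizes and bounds are arbitrary choices of ours. The counter `evaluations` records the number of (time, state, control) combinations visited.

```python
N, NX, NU = 50, 71, 31  # time steps, state grid points, control grid points
T = 1.0
dt = T / N
xs = [-0.2 + 1.4 * i / (NX - 1) for i in range(NX)]  # state grid on [-0.2, 1.2]
us = [-1.0 + 1.5 * j / (NU - 1) for j in range(NU)]  # control grid on [-1.0, 0.5]

def interpolate(values, x):
    """Piecewise-linear interpolation on xs, clamped at the grid boundary."""
    x = min(max(x, xs[0]), xs[-1])
    pos = (x - xs[0]) / (xs[1] - xs[0])
    i = min(int(pos), NX - 2)
    w = pos - i
    return (1 - w) * values[i] + w * values[i + 1]

V = [0.0] * NX          # terminal value function (no terminal cost)
evaluations = 0
for _ in range(N):      # backward induction in time
    V_new = []
    for x in xs:
        best = float("inf")
        for u in us:
            x_next = x + dt * (-x + u)  # explicit Euler step
            cost = dt * (x * x + u * u) + interpolate(V, x_next)
            best = min(best, cost)
            evaluations += 1
        V_new.append(best)
    V = V_new

value_at_1 = interpolate(V, 1.0)  # approximate optimal cost from x(0) = 1
```

Here a single state and a single control already require N · NX · NU evaluations; every additional state or control dimension multiplies this count by the corresponding grid size, which is the curse of dimensionality in its simplest form.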

Finally, we list several frequently used open source packages for solving optimal control problems, especially in the Julia language (Bezanson et al. 2017), in Table 1.

Table 1.

Open source packages to solve optimal control problem

| Packages | Methods | Languages |
|---|---|---|
| Casadi (Andersson et al. 2019) | Direct, Indirect | Python, C++, Julia |
| PSOPT (Becerra 2010) | Direct | Python, C++ |
| InfiniteOpt.jl (Pulsipher et al. 2022) | Direct | Julia |
| DifferentialEquations.jl (Rackauckas and Nie 2017) | Indirect | Julia |
| TrajectoryOptimization.jl (Howell et al. 2019) | DP | Julia |

Comparison and combination between different methods

Without relying on adjoint information such as Pontryagin’s maximum principle (Pontryagin 1962) or the Hamilton–Jacobi–Bellman equation (Bellman 1966), direct methods are less accurate but more flexible for highly complicated nonlinear optimal control problems in real-life applications. Indirect methods, by contrast, include adjoint information of the optimal control problem. They are more accurate but hard to pose, since deriving the TPBVP by Pontryagin’s maximum principle (Pontryagin 1962) needs much manual work, especially for pure state constraints. Moreover, solving the TPBVP depends heavily on a good initial guess, which makes indirect methods hard to apply to highly complicated nonlinear optimal control problems in real-life applications. Dynamic programming is easy to pose and accurate, but solving the HJB equation (22) is not an easy task and faces the well-known Bellman curse of dimensionality. Compared with the traditional direct, indirect and dynamic programming methods, deep learning methods can integrate modern differentiable programming tools, and neural networks are believed to enjoy, to some extent, a blessing of dimensionality. The comparison details are shown in Table 2.

Table 2.

Comparison of different methods for solving optimal control problems (DtO: discretize-then-optimize; OtD: optimize-then-discretize)

| Methods | Direct method | Indirect method | HJB method | Deep learning |
|---|---|---|---|---|
| Transcriptions | Nonlinear programming problem (NLP) | Two point boundary value problem (TPBVP) | Dynamic programming | Parameter optimization |
| Trajectory or Parameter Optimization | Trajectory | Trajectory | Trajectory | Parameter |
| u(x, t) or x(u, t) | – | x(u, t) | u(x, t) | x(u, t) |
| OtD or DtO | DtO | OtD | DtO | DtO and OtD |
| Advantages | Mature optimizers, easy to pose, easy to solve | Accurate | Accurate | Accurate, blessing of dimensionality, extendable |
| Disadvantages | Less accurate | Hard to pose, hard to solve, initial guess | Curse of dimensionality | Theory still under exploration, slow |

Although the deep learning method (UDE) differs from the other methods, they can be combined. For optimal control problems with long time horizons or data-driven problems with oscillatory data, multiple shooting can be used to train the neural networks, which helps to escape “flattened out” fits of oscillatory data (Turan and Jäschke 2022). Moreover, multiple shooting can also be combined with neural differential equations to form a differentiable multiple shooting layer (Massaroli et al. 2021).

For data-driven problems, training universal differential equations is computationally expensive, especially solving the differential equations and performing adjoint sensitivity analysis. Collocation methods can be combined to reduce the computation time. By using collocation methods, we directly obtain from observation data points, which avoids solving differential equations at the cost of lower accuracy. Thus, collocation can be used to pretrain the neural networks and obtain good initial parameter values, after which Algorithm 1 can be applied to finish the training.
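The pretraining idea can be sketched as derivative matching: estimate the time derivative of the data directly and fit the right-hand side to it, with no ODE solve in the loop. In the Python sketch below a single scalar parameter stands in for the network weights, and the synthetic noise-free data, the central-difference derivative estimate and the closed-form least-squares fit are all assumptions made for illustration; with real, noisy data a smoothing step would normally come first.

```python
import math

THETA_TRUE, DT = 1.5, 0.05
times = [DT * k for k in range(41)]
data = [math.exp(-THETA_TRUE * t) for t in times]  # synthetic observations of x' = -theta * x

# Estimate derivatives at interior points by central differences (no ODE solve).
x_mid = data[1:-1]
dx_mid = [(data[k + 1] - data[k - 1]) / (2 * DT) for k in range(1, len(data) - 1)]

# Least-squares fit of the model dx = -theta * x to the estimated derivatives.
theta_hat = (-sum(x * dx for x, dx in zip(x_mid, dx_mid))
             / sum(x * x for x in x_mid))
```

The estimate carries the discretization error of the derivative approximation, so it is best treated as an initial value for the full training procedure rather than as a final answer.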

Numerical simulations

In this section, we use the universal differential equation method to solve classic optimal control, epidemic forecasting and epidemic model discovery problems. Among traditional methods for classic optimal control problems, only the direct method is implemented, since it is the most widely used. The deep learning and direct collocation methods are implemented in the open source Julia language 1.8.2 (Bezanson et al. 2017) and run on a Y7000P laptop with an i5-9300HF CPU and 16 GB of RAM. All algorithms and code in this section are available at https://github.com/Song921012/OptEpiDeepL.

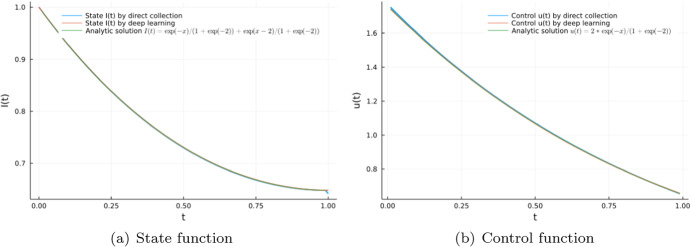

Optimal control problem with analytic solution

To start with, we solve Example 3.2 in Optimal Control Applied to Biological Models (Lenhart and Workman 2007) with a small modification

| 23 |

where I(t) can be regarded as the infected population and u(t) represents the control measures. Optimal control problem (23) admits the analytic solution

and

By representing u(t) as a fully connected neural network with activation function, optimal control problem (23) becomes a parameter optimization problem, which can be trained by Algorithm 1. As Fig. 3 shows, both the direct collocation method and the deep learning method fit the analytic solution well.

Fig. 3.

a State function for optimal control problem (23) by direct collocation and deep learning method; b control function for optimal control problem (23) by direct collocation and deep learning method

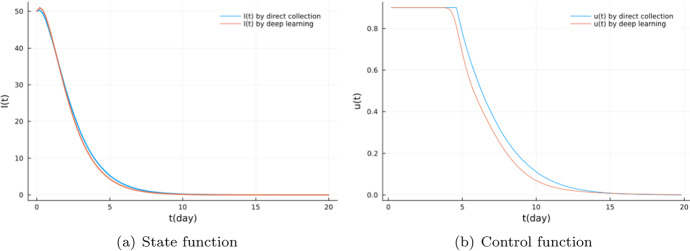

SEIR model based epidemic control

In this example, we will use universal differential equation method to solve the following epidemic control problem

| 24 |

where S(t), E(t), I(t) denote the expected numbers of susceptible, exposed and infected individuals, respectively, and u(t) is the permanent removal rate of S, for example the vaccination rate. The objective of the epidemic control is to use fewer control measures and health resources while keeping total infections low. A is the weight that balances this trade-off. A neural network with two hidden layers is used to represent the control function u(t),

| 25 |

and the training procedure is similar to that for optimal control problem (23). Figure 4 shows that the deep learning technique obtains a more accurate optimal control solution with similar training time.

Fig. 4.

a State function for optimal epidemic control problem (24) by direct collocation and deep learning method; b control function for optimal epidemic control problem (24) by direct collocation and deep learning method

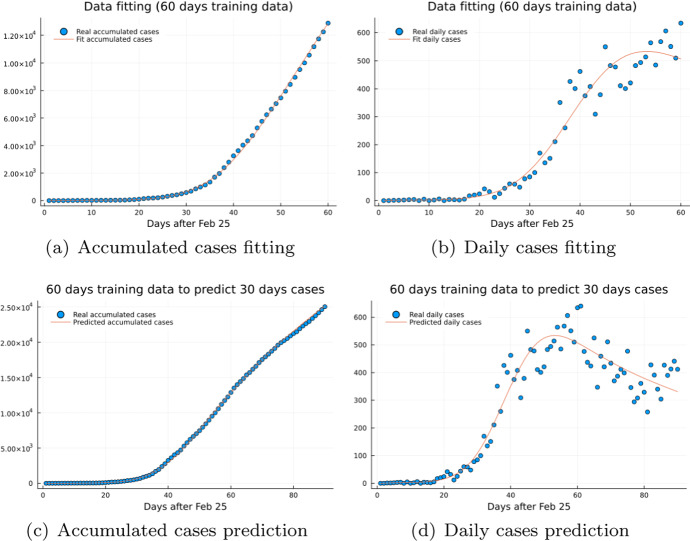

Epidemic forecasting

In this example, we transform the epidemic forecasting problem into an optimal control problem. In particular, we apply the UDE method to the first-wave Covid-19 cases in Ontario, Canada: we use UDE model (3) to fit the case data, train the neural networks embedded in the differential equations, and then forecast the epidemic. Traditional architectures such as LSTM, RNN and GRU (Goodfellow et al. 2016), or neural ordinary differential equations, are often applied indiscriminately to time series data without incorporating domain knowledge or mechanisms; in particular, all of them omit the epidemiology. Here, we use the following universal differential equation model, which encodes part of the epidemiology:

| 26 |

where S(t), I(t) and H(t) denote the numbers of susceptible individuals, infected individuals and accumulated confirmed cases at time t, is the infection period of the infectious disease, and denotes the force of infection. To fit the observation data, we need to solve the following ordinary differential equation constrained optimization problem:

| 27 |

Ontario’s first wave began on Feb 25, 2020 and lasted about 150 days. In Fig. 5, 60 days of Ontario’s first-wave accumulated case data are used to train the neural network in (27), and the trained model is then applied to forecast the epidemic over the following 30 days. Figure 5a, b show that the trained model (26) fits the 60 days of observation data very well, and Fig. 5c, d show that it generalizes well and is able to predict the cases for the following 30 days.
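The fit-then-forecast workflow can be sketched in Python as follows. This is only an illustration under stated assumptions: the S-I-H model structure, the population size `N`, the synthetic "observations", and the one-parameter function `make_foi` (a parametric stand-in for the neural network) are all hypothetical, and a small grid search replaces gradient-based training. The loss is a mean squared error on accumulated cases over the training window, in the spirit of an ODE-constrained least-squares problem.

```python
import math

N = 1.0e6  # hypothetical population size

def simulate(foi, days, tau=5.0, i0=10.0, substeps=10):
    """Daily accumulated cases H for an assumed S-I-H model driven by `foi`."""
    dt = 1.0 / substeps
    S, I, H = N - i0, i0, 0.0
    cumulative = [H]
    for day in range(days):
        for j in range(substeps):
            new_infections = foi(day + j * dt, S, I) * S
            removals = I / tau  # tau plays the role of the infection period
            S, I, H = (S - dt * new_infections,
                       I + dt * (new_infections - removals),
                       H + dt * removals)
        cumulative.append(H)
    return cumulative

def training_loss(foi, observed, train_days):
    """Mean squared error on accumulated cases over the training window only."""
    predicted = simulate(foi, train_days)
    return sum((p - o) ** 2 for p, o in zip(predicted, observed)) / len(predicted)

def make_foi(beta):
    """One-parameter stand-in for the neural-network force of infection."""
    return lambda t, S, I: beta * math.exp(-0.02 * t) * I / N

observed = simulate(make_foi(0.3), 90)  # synthetic data: days 0..90
grid = [0.2, 0.25, 0.3, 0.35]
best_beta = min(grid, key=lambda b: training_loss(make_foi(b), observed, 60))
forecast = simulate(make_foi(best_beta), 90)[61:]  # days 61..90, unseen in training
print("selected beta:", best_beta, "forecast days:", len(forecast))
```

Only the first 60 days enter the loss; the last 30 days of `observed` are held out and can be compared with `forecast` to judge how well the fitted model generalizes.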

Fig. 5.

60 days of Ontario’s first-wave case data are used to train the neural networks in (27) and to predict the case data for a further 30 days. a, b Fitting of accumulated and daily case data. c Real and predicted accumulated cases. d Real and predicted daily cases

Epidemic model discovery

In this example, we generate data from the following SIR epidemic model with behavior change mechanism (Funk 2010; Verelst et al. 2016)

| 28 |

Here, is the force of infection with media impact (Song and Xiao 2018). Our task is to learn the force of infection function from the generated data, which can be done in three steps:

- Step one: setting up the optimal control problem,

| 29 |

- Step two: representing the force of infection function by neural networks and training the resulting universal differential equation:

| 30 |

- Step three: recovering the simplest equation from the neural networks by symbolic regression (Schmidt and Lipson 2009).

From Fig. 6a, we can see that the training data on the time span [0, 1] are enough to train universal differential equation (30), and that the trained model generalizes well on the time span [1, 4]. Moreover, Fig. 6b shows that the neural network fits the force of infection function well.

Fig. 6.

Universal differential equation (4) to learn the force of infection in transmission model (28). a Data fitting and prediction. b Real force of infection and learned force of infection

However, a black-box neural network is not what epidemiologists really need. In epidemiology, researchers care about both learning ability and interpretability. We want to learn the missing mechanisms in transmission models efficiently, and we want those mechanisms represented by the simplest possible equation rather than by a black-box neural network, which is difficult to interpret. Symbolic regression (SR) is a type of regression analysis that searches the space of mathematical expressions to find the model that best fits a given dataset, in terms of both accuracy and simplicity (Schmidt and Lipson 2009). After learning the parameters of the neural networks, we generate data from the neural networks and use the generated data to search for the simplest equation:

Since this paper does not focus on equation-finding methods, we omit the details of symbolic regression here; see Schmidt and Lipson (2009) and Song et al. (2022) for more details.
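The idea behind step three can be conveyed by a deliberately tiny Python sketch. It is not the symbolic regression algorithm of Schmidt and Lipson (2009): the search space is a fixed, hand-written library of four candidate expressions, each with one coefficient fitted by least squares, and candidates are ranked by mean squared error plus a small complexity penalty. The function `learned`, which stands in for the trained neural network, the candidate library, and the complexity values are all assumptions made for illustration.

```python
import math

# Candidate expressions g(I) with an assumed complexity score for each.
CANDIDATES = {
    "I": (lambda x: x, 1),
    "I^2": (lambda x: x * x, 2),
    "I*exp(-I)": (lambda x: x * math.exp(-x), 3),
    "I/(1+I)": (lambda x: x / (1.0 + x), 3),
}

def fit_coefficient(basis, xs, ys):
    """Closed-form least-squares coefficient c for the model y = c * basis(x)."""
    num = sum(basis(x) * y for x, y in zip(xs, ys))
    den = sum(basis(x) ** 2 for x in xs)
    return num / den

def symbolic_search(xs, ys, penalty=1e-5):
    """Return the (name, coefficient) minimizing error plus complexity penalty."""
    best = None
    for name, (basis, complexity) in CANDIDATES.items():
        c = fit_coefficient(basis, xs, ys)
        mse = sum((c * basis(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)
        score = mse + penalty * complexity
        if best is None or score < best[0]:
            best = (score, name, c)
    return best[1], best[2]

# Hypothetical stand-in for the trained network's force of infection.
learned = lambda x: 0.8 * x * math.exp(-x)
xs = [0.1 * k for k in range(21)]   # sample points for I
ys = [learned(x) for x in xs]       # data generated from the "network"
expression, coefficient = symbolic_search(xs, ys)
print(expression, coefficient)
```

Sampling the trained network on a grid and regressing on those samples, rather than on the noisy observations, is what lets the final expression inherit the mechanism the network has learned.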

Conclusion and discussion

Deep neural networks (LeCun et al. 2015; Goodfellow et al. 2016) have been shown to be universal approximators of unknown mappings with the blessing of dimensionality (Hornik et al. 1990; Han et al. 2018), and they show an unreasonable effectiveness (Sejnowski 2020) in pattern recognition, in learning unknown mechanisms and in solving traditionally difficult problems such as image recognition (He et al. 2016) and natural language processing (Devlin et al. 2018). In this paper, we use neural networks to represent control functions and solve optimal control problems by deep learning techniques. The applications are not restricted to classical epidemic control problems: many data-driven epidemiological problems, such as epidemic forecasting and unraveling missing transmission mechanisms, can be regarded as optimal control problems and solved by deep learning techniques. Compared with traditional methods such as direct, indirect and dynamic programming methods, deep learning methods can integrate modern differentiable programming tools and solve large-scale optimal control problems.

We show how to extend the idea of backpropagation (Goodfellow et al. 2016) in differentiable programming to include adjoint sensitivity analysis (Pontryagin 1962; Cao et al. 2003). Thanks to adjoint sensitivity analysis, deep neural networks and dynamical systems can be coupled seamlessly. In this paper, only the control function is represented by neural networks. In fact, a high-dimensional state function, for example in human mobility epidemic models with many patches, can also be approximated by neural networks,

and optimal control problem (1) can be transformed into

| 31 |

where

| 32 |

The approach in (32) of solving ODEs by neural networks is often called physics-informed neural networks (PINNs) (Raissi et al. 2019; Karniadakis et al. 2021). Moreover, neural networks (NNs) are universal approximators not only of continuous functions but also of nonlinear continuous operators on infinite-dimensional spaces, which implies that optimal control problem (1) can also be solved by neural operators (Lu et al. 2021).
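A generic PINN-style loss can be sketched in a few lines of Python. This is not a reconstruction of (31) and (32): the toy ODE x' = -x with x(0) = 1, the collocation grid, the network weights, and the names `pinn_loss` and `make_network` are assumptions chosen for illustration. The loss penalizes the ODE residual of a trial function at collocation points and the mismatch in the initial condition, so no ODE solver is called.

```python
import math

def pinn_loss(x_hat, dx_hat, rhs, x0, times):
    """Mean squared ODE residual at collocation points plus initial-condition penalty."""
    residual = sum((dx_hat(t) - rhs(t, x_hat(t))) ** 2 for t in times) / len(times)
    return residual + (x_hat(0.0) - x0) ** 2

def make_network(w1, b1, w2, b2):
    """One-hidden-layer tanh network x_hat(t) together with its exact time derivative."""
    def x_hat(t):
        return b2 + sum(v * math.tanh(w * t + b) for w, b, v in zip(w1, b1, w2))
    def dx_hat(t):
        return sum(v * w * (1.0 - math.tanh(w * t + b) ** 2)
                   for w, b, v in zip(w1, b1, w2))
    return x_hat, dx_hat

rhs = lambda t, x: -x                   # toy ODE x' = -x with x(0) = 1
times = [0.1 * k for k in range(11)]    # collocation points on [0, 1]

# An untrained network with arbitrary weights violates the ODE and the initial condition.
net, dnet = make_network([1.0, -0.5], [0.0, 0.3], [0.4, 0.2], 0.1)
untrained_loss = pinn_loss(net, dnet, rhs, 1.0, times)

# The exact solution exp(-t) makes both terms of the loss vanish.
exact_loss = pinn_loss(lambda t: math.exp(-t), lambda t: -math.exp(-t), rhs, 1.0, times)
print("untrained:", untrained_loss, "exact:", exact_loss)
```

Training a PINN amounts to minimizing this loss over the network weights; in an optimal control setting the control objective would be added to it, with the residual term acting as a soft version of the ODE constraint.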

This study also has some limitations. First, we did not give many details on solving general optimal control problems with path constraints. The techniques for transforming general optimal control problems into problem (1), such as the augmented Lagrangian method (Ito and Kunisch 1990), the penalty method (Jorge and Stephen 2006, Chapter 17), and the barrier or relaxed barrier method (Auslender 1999; Feller and Ebenbauer 2016), are not included in our study. Second, we did not give theoretical proofs or a complexity analysis showing that deep learning methods can solve optimal control problems with the blessing of dimensionality. These problems will be our future work.

Acknowledgements

S.Y. is financed in part by a grant from the National Natural Science Foundation of China (NSFC, 12101487(PS)) and the China Scholarship Council (No. 202006280442); J.W. is supported by the Canada Research Chair Program (No. 230720); P.S. is supported by the Postdoctoral Fellowship of York University, Toronto, Canada, China Postdoctoral Science Foundation (No. 2020M683445) and the National Natural Science Foundation of China (NSFC, 12101487(PS); NSFC, 12220101001(PS)). The authors would like to thank the referees for their kind help in reviewing the paper.

Contributor Information

Shuangshuang Yin, Email: yinss2014@stu.xjtu.edu.cn.

Jianhong Wu, Email: wujh@yorku.ca.

Pengfei Song, Email: song921012@xjtu.edu.cn.

References

- Andersson JAE, Gillis J, Horn G, Rawlings JB, Diehl M. CasADi—a software framework for nonlinear optimization and optimal control. Math Program Comput. 2019;11(1):1–36.

- Auslender A. Penalty and barrier methods: a unified framework. SIAM J Optim. 1999;10(1):211–230.

- Baydin AG, Pearlmutter BA, Radul AA, Siskind JM. Automatic differentiation in machine learning: a survey. J Mach Learn Res. 2018;18:1–43.

- Becerra VM (2010) Solving complex optimal control problems at no cost with PSOPT. In: 2010 IEEE international symposium on computer-aided control system design, pp 1391–1396

- Bellman R. Dynamic programming. Science. 1966;153(3731):34–37. doi: 10.1126/science.153.3731.34.

- Benning M, Celledoni E, Ehrhardt M, Owren B, Schönlieb C (2019) Deep learning as optimal control problems: models and numerical methods. J Comput Dyn

- Betts JT. Practical methods for optimal control and estimation using nonlinear programming. Philadelphia: SIAM; 2010.

- Bezanson J, Edelman A, Karpinski S, Shah VB. Julia: a fresh approach to numerical computing. SIAM Rev. 2017;59(1):65–98.

- Bock HG, Plitt KJ. A multiple shooting algorithm for direct solution of optimal control problems. IFAC Proc Vol. 1984;17(2):1603–1608.

- Byrd RH, Nocedal J, Waltz RA (2006) KNITRO: an integrated package for nonlinear optimization. In: Large-scale nonlinear optimization. Springer, pp 35–59

- Cao Y, Li S, Petzold L, Serban R. Adjoint sensitivity analysis for differential-algebraic equations: the adjoint DAE system and its numerical solution. SIAM J Sci Comput. 2003;24(3):1076–1089.

- Chen RT, Rubanova Y, Bettencourt J, Duvenaud DK (2018) Neural ordinary differential equations. NeurIPS 31

- Chen X (2019) Ordinary differential equations for deep learning. arXiv:1911.00502

- Cranmer M, Sanchez Gonzalez A, Battaglia P, Xu R, Cranmer K, Spergel D, Ho S. Discovering symbolic models from deep learning with inductive biases. NeurIPS. 2020;33:17429–17442.

- Darby CL, Garg D, Rao AV. Costate estimation using multiple-interval pseudospectral methods. J Spacecr Rockets. 2011;48(5):856–866.

- Davies A, Veličković P, Buesing L, Blackwell S, Zheng D, Tomašev N, Tanburn R, Battaglia P, Blundell C, Juhász A, Lackenby M, Williamson G, Hassabis D, Kohli P. Advancing mathematics by guiding human intuition with AI. Nature. 2021;600(7887):70–74. doi: 10.1038/s41586-021-04086-x.

- De Brouwer E, Simm J, Arany A, Moreau Y (2019) GRU-ODE-Bayes: continuous modeling of sporadically-observed time series. NeurIPS 32

- Devlin J, Chang MW, Lee K, Toutanova K (2018) BERT: pre-training of deep bidirectional transformers for language understanding. arXiv:1810.04805

- Dissanayake M, Phan-Thien N. Neural-network-based approximations for solving partial differential equations. Commun Numer Methods Eng. 1994;10(3):195–201.

- Dubey SR, Singh SK, Chaudhuri BB (2022) Activation functions in deep learning: a comprehensive survey and benchmark. Neurocomputing

- Feller C, Ebenbauer C. Relaxed logarithmic barrier function based model predictive control of linear systems. IEEE Trans Autom Control. 2016;62(3):1223–1238.

- Funk S. Modelling the influence of human behaviour on the spread of infectious diseases: a review. J R Soc Interface. 2010;7(50):1247–1256. doi: 10.1098/rsif.2010.0142.

- Gill PE, Murray W, Saunders MA. SNOPT: an SQP algorithm for large-scale constrained optimization. SIAM Rev. 2005;47(1):99–131.

- Goodfellow I, Bengio Y, Courville A. Deep learning. Cambridge: MIT Press; 2016.

- Han J, Jentzen A, E W. Solving high-dimensional partial differential equations using deep learning. Proc Natl Acad Sci USA. 2018;115(34):8505–8510. doi: 10.1073/pnas.1718942115.

- He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 770–778

- Hicks G, Ray W. Approximation methods for optimal control synthesis. Can J Chem Eng. 1971;49(4):522–528.

- Hornik K, Stinchcombe M, White H. Universal approximation of an unknown mapping and its derivatives using multilayer feedforward networks. Neural Netw. 1990;3(5):551–560.

- Howell TA, Jackson BE, Manchester Z (2019) ALTRO: a fast solver for constrained trajectory optimization. In: 2019 IEEE/RSJ international conference on intelligent robots and systems (IROS). IEEE, pp 7674–7679

- Ito K, Kunisch K. The augmented Lagrangian method for equality and inequality constraints in Hilbert spaces. Math Program. 1990;46(1):341–360.

- Jacobson DH, Mayne DQ. Differential dynamic programming, 24. Amsterdam: Elsevier; 1970.

- Jorge N, Stephen JW. Numerical optimization. New York: Springer; 2006.

- Karniadakis GE, Kevrekidis IG, Lu L, Perdikaris P, Wang S, Yang L. Physics-informed machine learning. Nat Rev Phys. 2021;3(6):422–440.

- Kingma DP, Ba J (2014) Adam: a method for stochastic optimization. arXiv:1412.6980

- Kochenderfer MJ, Wheeler TA. Algorithms for optimization. Cambridge: MIT Press; 2019.

- Kronqvist J, Bernal DE, Lundell A, Grossmann IE. A review and comparison of solvers for convex MINLP. Optim Eng. 2019;20(2):397–455.

- Lagaris IE, Likas A, Fotiadis DI. Artificial neural networks for solving ordinary and partial differential equations. IEEE Trans Neural Netw. 1998;9(5):987–1000. doi: 10.1109/72.712178.

- LeCun Y, Bengio Y, Hinton G. Deep learning. Nature. 2015;521(7553):436–444. doi: 10.1038/nature14539.

- Lenhart S, Workman JT. Optimal control applied to biological models. Cambridge: Chapman and Hall/CRC; 2007.

- Li X, Yong J. Optimal control theory for infinite dimensional systems. New York: Springer; 1995.

- Li W, Todorov E (2004) Iterative linear quadratic regulator design for nonlinear biological movement systems. In: ICINCO

- Lu L, Jin P, Pang G, Zhang Z, Karniadakis GE. Learning nonlinear operators via DeepONet based on the universal approximation theorem of operators. Nat Mach Intell. 2021;3(3):218–229.

- Lu Y, Zhong A, Li Q, Dong B (2018) Beyond finite layer neural networks: bridging deep architectures and numerical differential equations. In: International conference on machine learning, PMLR, pp 3276–3285

- Ma Y, Dixit V, Innes MJ, Guo X, Rackauckas C (2021) A comparison of automatic differentiation and continuous sensitivity analysis for derivatives of differential equation solutions. In: 2021 IEEE high performance extreme computing conference (HPEC). IEEE, pp 1–9

- Massaroli S, Poli M, Sonoda S, Suzuki T, Park J, Yamashita A, Asama H. Differentiable multiple shooting layers. NeurIPS. 2021;34:16532–16544.

- Niu MY, Horesh L, Chuang I (2019) Recurrent neural networks in the eye of differential equations. arXiv:1904.12933

- Pinkus A. Approximation theory of the MLP model in neural networks. Acta Numer. 1999;8:143–195.

- Pontryagin LS. The mathematical theory of optimal processes. New York: Wiley; 1962.

- Pulsipher JL, Zhang W, Hongisto TJ, Zavala VM. A unifying modeling abstraction for infinite-dimensional optimization. Comput Chem Eng. 2022;156:107567.

- Rackauckas C, Nie Q. DifferentialEquations.jl: a performant and feature-rich ecosystem for solving differential equations in Julia. J Open Res Softw. 2017;5(1):15.

- Rackauckas C, Ma Y, Martensen J, Warner C, Zubov K, Supekar R, Skinner D, Ramadhan A, Edelman A (2020) Universal differential equations for scientific machine learning. arXiv:2001.04385

- Raissi M, Perdikaris P, Karniadakis GE. Physics-informed neural networks: a deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. J Comput Phys. 2019;378:686–707.

- Rao AV. A survey of numerical methods for optimal control. Adv Astronaut Sci. 2009;135(1):497–528.

- Rubanova Y, Chen RT, Duvenaud DK (2019) Latent ordinary differential equations for irregularly-sampled time series. NeurIPS 32

- Ruthotto L, Haber E. Deep neural networks motivated by partial differential equations. J Math Imaging Vis. 2020;62(3):352–364.

- Schmidt M, Lipson H. Distilling free-form natural laws from experimental data. Science. 2009;324(5923):81–85. doi: 10.1126/science.1165893.

- Sejnowski TJ. The unreasonable effectiveness of deep learning in artificial intelligence. Proc Natl Acad Sci USA. 2020;117(48):30033–30038. doi: 10.1073/pnas.1907373117.

- Song P, Xiao Y. Global Hopf bifurcation of a delayed equation describing the lag effect of media impact on the spread of infectious disease. J Math Biol. 2018;76(5):1249–1267. doi: 10.1007/s00285-017-1173-y.

- Song P, Xiao Y. Estimating time-varying reproduction number by deep learning techniques. J Appl Anal Comput. 2022;12(3):1077–1089.

- Song P, Xiao Y, Wu J (2022) Methods coupling transmission models and deep learning. Preprint

- Sutton RS, Barto AG. Reinforcement learning. Adaptive computation and machine learning series. 2. Cambridge: MIT Press; 2018.

- Turan EM, Jäschke J. Multiple shooting for training neural differential equations on time series. IEEE Control Syst Lett. 2022;6:1897–1902.

- Verelst F, Willem L, Beutels P. Behavioural change models for infectious disease transmission: a systematic review (2010–2015). J R Soc Interface. 2016;13(125):20160820. doi: 10.1098/rsif.2016.0820.

- Wächter A, Biegler LT. On the implementation of an interior-point filter line-search algorithm for large-scale nonlinear programming. Math Program. 2006;106(1):25–57.

- Zhang H, Sandu A. FATODE: a library for forward, adjoint, and tangent linear integration of ODEs. SIAM J Sci Comput. 2014;36(5):C504–C523.