Abstract

Continuous imaging of cardiac functions is highly desirable for the assessment of long-term cardiovascular health, detection of acute cardiac dysfunction and clinical management of critically ill or surgical patients1–4. However, conventional non-invasive approaches to image the cardiac function cannot provide continuous measurements owing to device bulkiness5–11, and existing wearable cardiac devices can only capture signals on the skin12–16. Here we report a wearable ultrasonic device for continuous, real-time and direct cardiac function assessment. We introduce innovations in device design and material fabrication that improve the mechanical coupling between the device and human skin, allowing the left ventricle to be examined from different views during motion. We also develop a deep learning model that automatically extracts the left ventricular volume from the continuous image recording, yielding waveforms of key cardiac performance indices such as stroke volume, cardiac output and ejection fraction. This technology enables dynamic wearable monitoring of cardiac performance with substantially improved accuracy in various environments.

Subject terms: Echocardiography, Disease prevention, Sensors and biosensors

Innovations in device design, material fabrication and deep learning are described, leading to a wearable ultrasound transducer capable of dynamic cardiac imaging in various environments and under different conditions.

Main

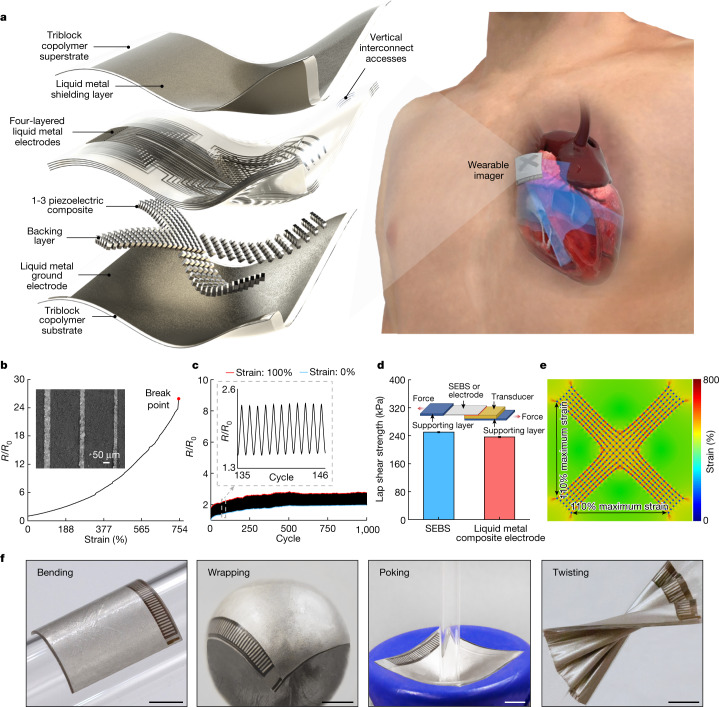

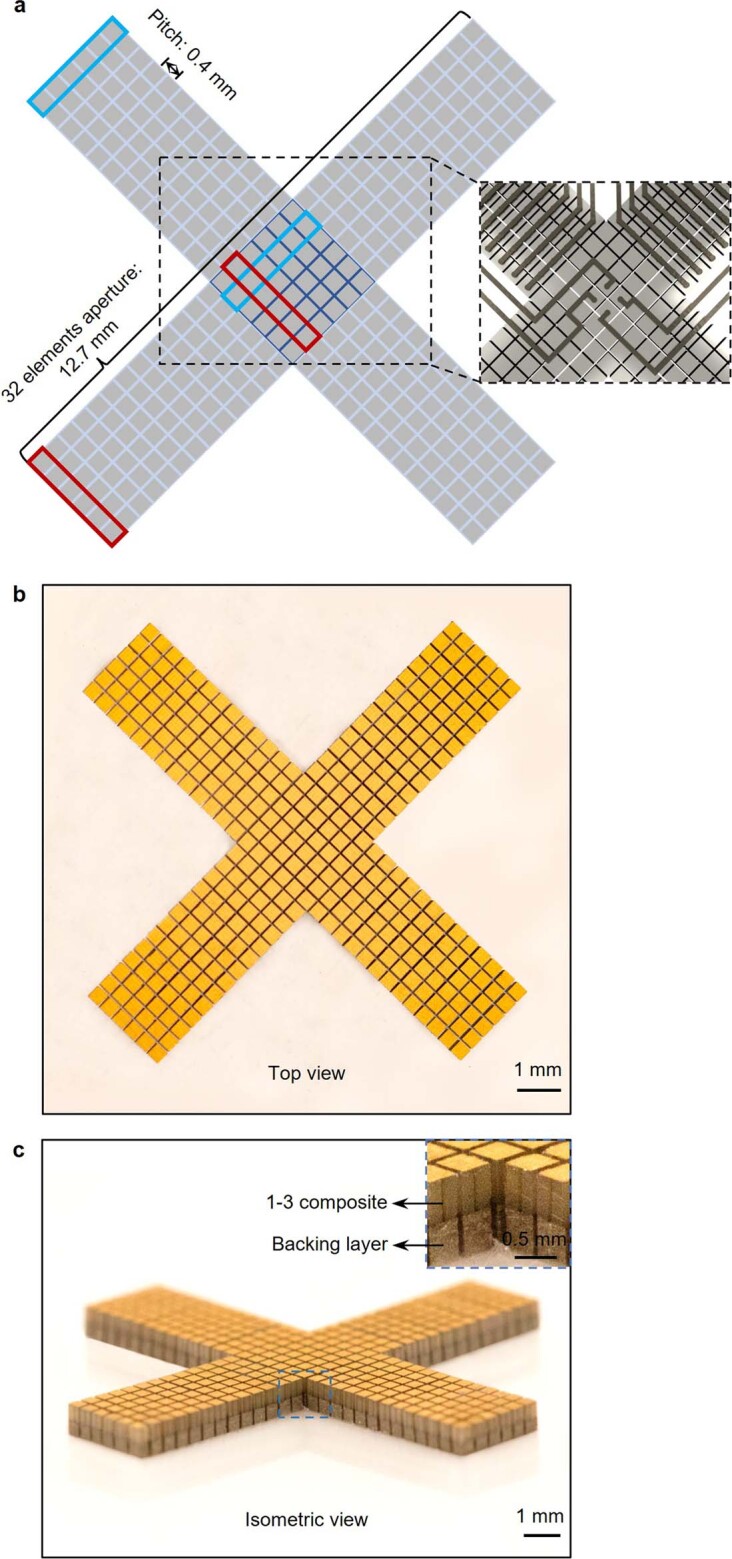

The device features piezoelectric transducer arrays, liquid metal composite electrodes and triblock copolymer encapsulation, as shown by the exploded schematics (Fig. 1a, left, Extended Data Fig. 1 and Supplementary Discussion 3). The device is built on styrene–ethylene–butylene–styrene (SEBS). To provide a comprehensive view of the heart, standard clinical practice is to image it in two orthogonal orientations by rotating the ultrasound probe17. To eliminate the need for manual rotation, we designed the device with an orthogonal configuration (Fig. 1a, right and Supplementary Videos 1 and 2). Each transducer element consisted of an anisotropic 1-3 piezoelectric composite and a silver-epoxy-based backing layer18,19. To balance the penetration depth and spatial resolution, we chose a centre resonant frequency of 3 MHz for deep tissue imaging19 (Supplementary Fig. 1). The array pitch was 0.4 mm (that is, 0.78 ultrasonic wavelengths), which enhances lateral resolutions and reduces grating lobes20.
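The pitch-to-wavelength figure quoted above can be checked with a short calculation. The sketch below assumes a soft-tissue sound speed of 1,540 m s−1, a conventional value that the text does not state, and all function names are illustrative. It also evaluates the textbook condition for an ideal uniform linear array that gives the largest steering angle free of a grating lobe; this is an idealization, not a measured property of the device.

```python
import math

# Assumption: average speed of sound in soft tissue (not stated in the text).
SPEED_OF_SOUND_M_S = 1540.0


def wavelength_mm(frequency_hz, speed_m_s=SPEED_OF_SOUND_M_S):
    """Acoustic wavelength in millimetres."""
    return speed_m_s / frequency_hz * 1e3


def pitch_in_wavelengths(pitch_mm, frequency_hz, speed_m_s=SPEED_OF_SOUND_M_S):
    """Array pitch expressed as a fraction of the wavelength."""
    return pitch_mm / wavelength_mm(frequency_hz, speed_m_s)


def max_steering_angle_deg(pitch_mm, frequency_hz, speed_m_s=SPEED_OF_SOUND_M_S):
    """Largest steering angle with no grating lobe (ideal uniform linear array).

    The first grating lobe sits at sin(theta_g) = sin(theta_s) - wavelength / pitch,
    so it stays out of the field of view while sin(theta_s) < wavelength / pitch - 1.
    """
    ratio = wavelength_mm(frequency_hz, speed_m_s) / pitch_mm
    if ratio < 1.0:
        raise ValueError("pitch exceeds one wavelength: grating lobes without steering")
    return math.degrees(math.asin(min(1.0, ratio - 1.0)))


print(round(pitch_in_wavelengths(0.4, 3e6), 2))  # 0.78
print(max_steering_angle_deg(0.4, 3e6))
```

Under these assumptions, a 0.4-mm pitch at 3 MHz is below one wavelength, so an unsteered beam produces no grating lobe; lobes would only enter the field of view at moderate steering angles.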

Fig. 1. Design and characterization of the wearable cardiac imager.

a, Schematics showing the exploded view of the wearable imager, with key components labelled (left) and its working principle (right). b, Resistance of the liquid metal composite electrode as a function of uniaxial tensile strain. The electrode can be stretched up to about 750% without failure. The y axis is the relative resistance defined as R/R0, in which R0 and R are the measured resistances at 0% strain and a given strain, respectively. The inset is a scanning electron micrograph of the liquid metal composite electrodes with a width as small as about 30 µm. Scale bar, 50 μm. c, Cycling performance of the electrode between 0% and 100% uniaxial tensile strain, showing the robustness of the electrode. The inset shows the zoomed-in features of the graph during cyclic stretching and relaxing of the electrode. d, Lap shear strength of the bonding between transducer elements and SEBS or liquid metal composite electrode. Data are mean and s.d. from n = 3 tests. The inset is a schematic setup of the lap shear test. e, Finite element analysis of the entire device under 110% biaxial stretching. f, Optical images showing the mechanical compliance of the wearable imager when bent on a developable surface, wrapped around a non-developable surface, poked and twisted. Scale bars, 5 mm.

Extended Data Fig. 1. Schematics and optical images of the orthogonal imager.

a, The orthogonal imager consists of four arms, in which six small elements in one column are combined as one long element, and a central part that is shared by the four arms. The blue and red boxes label a long element integrated by six small pieces in each direction. The number of elements in one direction is 32. The pitch between the elements is 0.4 mm. Optical images in top view (b) and isometric view (c) showing the morphology of the orthogonal array. We used an automatic alignment strategy to fabricate the orthogonal array by bonding a large piece of backing layer with a large piece of 1-3 composite and then dicing them together into small elements with designed configurations. Inset in c shows the details of the elements. The 1-3 composite and backing layer have been labelled.

To individually address each element in such a compact array, we made high-density multilayered stretchable electrodes based on a composite of eutectic gallium–indium liquid metal and SEBS21. The composite is highly conductive and easy to pattern (Fig. 1b,c, Supplementary Figs. 2–4 and Methods). Lap shear measurements show that the interfacial bonding strength is about 250 kPa between the transducer element and the SEBS substrate, and about 236 kPa between the transducer element and the composite electrode (Fig. 1d and Supplementary Fig. 5), which are both stronger than typical commercial adhesives22 (Supplementary Table 2). The resulting electrode has a thickness of only about 8 μm (Supplementary Figs. 6 and 7). Electromagnetic shielding, also made of the composite, can mitigate the interference of ambient electromagnetic waves, which reduces the noise in the ultrasound radiofrequency signals and enhances the image quality23 (Supplementary Fig. 8 and Supplementary Discussion 4). The device has excellent electromechanical properties, as determined by its high electromechanical coupling coefficient, low dielectric loss, wide bandwidth and negligible crosstalk (Supplementary Fig. 1 and Methods). The entire device has a low Young’s modulus of 921 kPa, comparable with the human skin modulus24 (Supplementary Fig. 9). The device exhibits a high stretchability of up to approximately 110% (Fig. 1e and Supplementary Fig. 10) and can withstand various deformations (Fig. 1f). Considering that the typical strain on the human skin is within 20% (ref. 19), these mechanical properties allow the wearable imager to maintain intimate contact with the skin over a large area, which is challenging for rigid ultrasound devices25.

Imaging strategies and characterizations

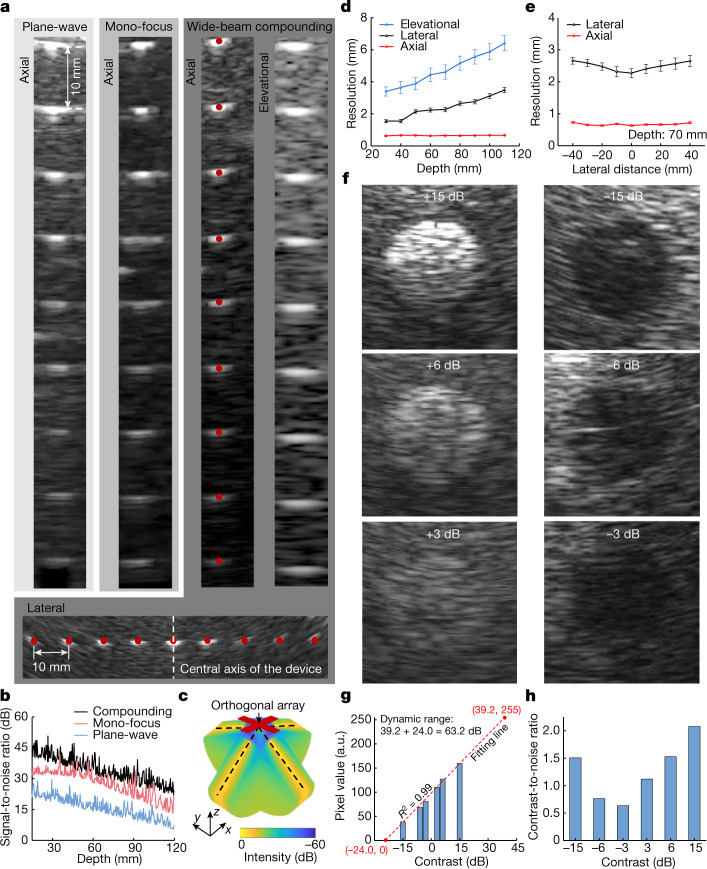

We evaluated the quality of the generated images based on the five most crucial metrics for anatomical imaging: spatial resolutions (axial, lateral and elevational), signal-to-noise ratio, location accuracies (axial and lateral), dynamic range and contrast-to-noise ratio26.

The transmit beamforming strategy is critical for image quality. Therefore, we compared three distinct strategies: plane-wave, mono-focus and wide-beam compounding. Phantoms containing monofilament wires were used for this comparison (Supplementary Fig. 11, position 1). Among the three strategies, the wide-beam compounding implements a sequence of divergent acoustic waves with a series of transmission angles, and the generated images of each transmission are coherently combined to create a compounding image, which has the best quality with an expanded sonographic window27 (Fig. 2a,b and Supplementary Figs. 12–14). We also used a receive beamforming strategy to further improve the image quality (Supplementary Fig. 15 and Methods). The wide-beam compounding achieves a synthetic focusing effect and, therefore, a high acoustic intensity across the entire insonation area (Fig. 2c and Supplementary Fig. 13), which leads to the best signal-to-noise ratio and spatial resolutions (Fig. 2a, third column, Fig. 2b and Supplementary Fig. 12).
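The idea of coherent compounding can be illustrated with a toy example. The sketch below is not the beamformer used in this work: it assumes that each transmission has already been beamformed into a complex (in-phase/quadrature) image, and the function name and data are hypothetical. Summing the complex values before taking the magnitude reinforces echoes whose phases agree across transmission angles and suppresses those whose phases do not.

```python
import cmath
import math


def coherent_compound(frames):
    """Average complex images pixel by pixel, then return the envelope (magnitude)."""
    n = len(frames)
    rows, cols = len(frames[0]), len(frames[0][0])
    return [
        [abs(sum(frame[r][c] for frame in frames)) / n for c in range(cols)]
        for r in range(rows)
    ]


# Hypothetical 1 x 2 image seen from three transmission angles.
# Pixel 0: a scatterer whose echoes line up in phase after beamforming.
# Pixel 1: clutter whose phase rotates by 120 degrees between transmissions.
frames = [
    [[cmath.rect(1.0, 0.0), cmath.rect(1.0, k * 2 * math.pi / 3)]]
    for k in range(3)
]

compounded = coherent_compound(frames)
print(compounded)  # pixel 0 stays near 1, pixel 1 collapses towards 0
```

Averaging the magnitudes instead (incoherent compounding) would leave both pixels at 1, which is why the coherent sum is the step that produces the synthetic focusing effect.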

Fig. 2. B-mode imaging strategies and characterizations.

a, Imaging results on wire (100 µm in diameter) phantoms using different transmit beamforming strategies. The first three columns show the images through plane-wave, mono-focus and wide-beam compounding at different depths, respectively. The fourth column shows the imaging resolution of wide-beam compounding in the elevational direction. The bottom row shows images of laterally distributed wires by the wide-beam compounding, from which the lateral accuracy and spatial resolutions at different lateral distances from the central axis can be obtained. b, Signal-to-noise ratios as a function of the imaging depth under different transmission strategies. c, Simulated acoustic fields of the wide-beam compounding, with enhanced acoustic field across the entire insonation area. d, Elevational, lateral and axial resolutions of the device using wide-beam compounding at different depths. e, Lateral and axial resolutions of the device using wide-beam compounding with different lateral distances from the central axis. Data in d and e are mean and s.d. from five tests (n = 5). f, Imaging inclusions with different contrasts to the matrix. On the basis of these B-mode images, the dynamic range (g) and contrast-to-noise ratio (h) of the device can be quantified.

To quantify the device spatial resolutions using the wide-beam compounding strategy, we measured full widths at half maximum from the point spread function curves28 extracted from the images (Fig. 2a, third and fourth columns and the bottom row and Supplementary Fig. 11, positions 1 and 2). As the depth increases, the elevational resolution deteriorates (Fig. 2d) because the beam becomes more divergent in the elevational direction. Therefore, we integrated six small elements into a long element (Extended Data Fig. 1) to offer better acoustic beam convergence and elevational resolution. The lateral resolution deteriorates only slightly with depth (Fig. 2d) owing to the process of receive beamforming (Methods). The axial resolution remains almost constant with depth (Fig. 2d) because it depends only on the frequency and bandwidth of the transducer array. Similarly, at the same depth, the axial resolution remains consistent with different lateral distances from the central axis of the device, whereas the lateral resolution is the best at the centre, where there is a high overlap of acoustic beams after compounding (Fig. 2e and Methods).
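A full width at half maximum can be read from a sampled point spread function by locating the two half-maximum crossings. The sketch below is a minimal illustration that assumes a single-peaked profile on a linear amplitude scale and uses linear interpolation between samples; the function names and the example profile are hypothetical and do not reproduce the exact procedure in Methods.

```python
def _crossing(x0, y0, x1, y1, level):
    """Linearly interpolate the position at which the profile equals `level`."""
    return x0 + (level - y0) * (x1 - x0) / (y1 - y0)


def fwhm(positions, amplitudes):
    """Full width at half maximum of a single-peaked, sampled profile."""
    peak_index = max(range(len(amplitudes)), key=amplitudes.__getitem__)
    peak = amplitudes[peak_index]
    if peak <= 0:
        raise ValueError("profile must have a positive peak")
    half = peak / 2.0

    # Walk outwards from the peak until the profile drops to half maximum.
    left = peak_index
    while left > 0 and amplitudes[left] > half:
        left -= 1
    right = peak_index
    while right < len(amplitudes) - 1 and amplitudes[right] > half:
        right += 1
    if amplitudes[left] > half or amplitudes[right] > half:
        raise ValueError("profile does not fall to half maximum on both sides")

    x_left = _crossing(positions[left], amplitudes[left],
                       positions[left + 1], amplitudes[left + 1], half)
    x_right = _crossing(positions[right - 1], amplitudes[right - 1],
                        positions[right], amplitudes[right], half)
    return x_right - x_left


# Hypothetical triangular profile sampled every 1 mm.
print(fwhm([0.0, 1.0, 2.0, 3.0, 4.0], [0.0, 1.0, 2.0, 1.0, 0.0]))  # 2.0
```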

Location accuracy is another critical imaging metric. The agreements between the imaging results and the ground truths (the red dots in Fig. 2a) in the axial and lateral directions are 96.01% and 95.90%, respectively, indicating excellent location accuracies (Methods).

Finally, we evaluated the dynamic range and contrast-to-noise ratio of the device using the wide-beam compounding strategy. Phantoms containing cylindrical inclusions with different acoustic impedances were used for the evaluation (Supplementary Fig. 11, position 3). A high acoustic impedance mismatch results in images with high contrast (Fig. 2f). We extracted the average grey values of the inclusion images and performed a linear regression29, and determined the dynamic range to be 63.2 dB (Fig. 2g, Supplementary Fig. 16 and Methods), which is well above the 60-dB threshold typically used in medical diagnosis30.

We selected two regions of interest, one inside and the other outside each inclusion area, to derive the contrast-to-noise ratios31, which range from 0.63 to 2.07 (Fig. 2h and Methods). A higher inclusion contrast leads to a higher contrast-to-noise ratio of the image. The inclusions with the lowest contrast (+3 dB or −3 dB) can be clearly visualized, demonstrating the outstanding sensitivity of this device20. The performance of the wearable imager is comparable with that of the commercial device (Supplementary Figs. 17 and 18, Extended Data Table 1 and Supplementary Discussion 5).
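A contrast-to-noise ratio can be computed from the grey values of the two regions of interest. The sketch below uses one common definition, the absolute difference of the regional means divided by the square root of the summed variances; this is assumed here for illustration and may differ in detail from the formula given in Methods. The grey values are hypothetical.

```python
import math
import statistics


def contrast_to_noise_ratio(inside, outside):
    """CNR = |mean_in - mean_out| / sqrt(var_in + var_out) (assumed definition)."""
    mean_in = statistics.mean(inside)
    mean_out = statistics.mean(outside)
    var_in = statistics.pvariance(inside)
    var_out = statistics.pvariance(outside)
    return abs(mean_in - mean_out) / math.sqrt(var_in + var_out)


# Hypothetical grey values sampled inside and outside an inclusion.
inside_roi = [10, 12, 14]
outside_roi = [4, 6, 8]
cnr = contrast_to_noise_ratio(inside_roi, outside_roi)
print(round(cnr, 3))  # 2.598
```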

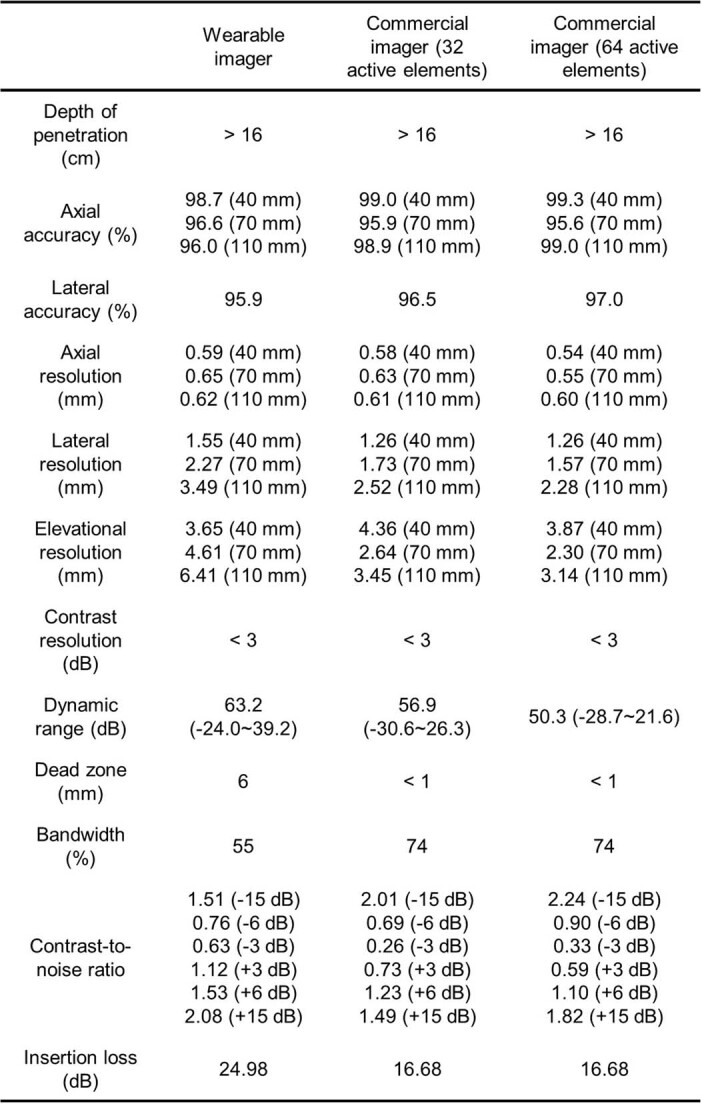

Extended Data Table 1.

Full comparison of the imaging metrics between the wearable imager and a commercial ultrasound imager (Model P4-2v)

The overall performance of the wearable imager is comparable with that of the commercial one. Because the wearable orthogonal array has 32 elements in each direction, we also provided measurements of the commercial imager with only 32 elements activated.

Echocardiography from several views

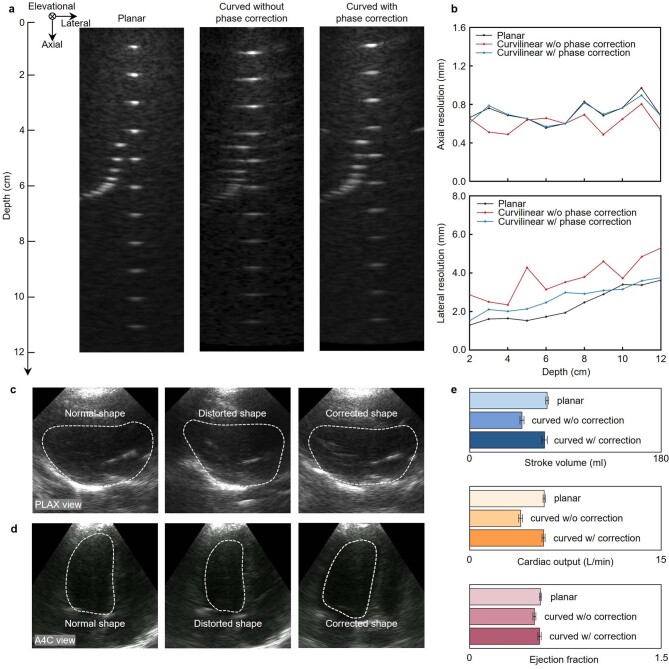

Echocardiography is commonly used to examine the structural integrity and blood-delivery capabilities of the heart. Uniquely for soft devices, the contours of the human chest cause a non-planar distribution of the transducer elements, which leads to phase distortion and therefore image artefacts32. We used a three-dimensional scanner to collect the chest curvature to compensate for element position shifts within the wearable imager and thus correct phase distortion during transmit and receive beamforming (Supplementary Fig. 19, Extended Data Fig. 2 and Supplementary Discussion 6).
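The effect of curvature on beamforming delays can be sketched with simple geometry. The example below does not use scanned chest data: it assumes that the elements lie on a circular arc with a hypothetical 100-mm radius, a sound speed of 1,540 m s−1 and a focus 50 mm deep on the central axis, and all function names are illustrative. The per-element difference between the curved and flat time of flight is the correction that would be applied to delays computed for a planar array.

```python
import math

SPEED_OF_SOUND_M_S = 1540.0  # assumption: soft-tissue average


def flat_positions(n_elements, pitch_m):
    """Element (x, z) coordinates for a planar array centred on x = 0."""
    half = (n_elements - 1) / 2.0
    return [((i - half) * pitch_m, 0.0) for i in range(n_elements)]


def arc_positions(n_elements, pitch_m, radius_m):
    """Elements wrapped on a convex circular arc; z points into the body."""
    half = (n_elements - 1) / 2.0
    positions = []
    for i in range(n_elements):
        phi = (i - half) * pitch_m / radius_m  # arc length divided by radius
        positions.append((radius_m * math.sin(phi),
                          radius_m * (1.0 - math.cos(phi))))
    return positions


def focus_delays_s(positions, focus, speed_m_s=SPEED_OF_SOUND_M_S):
    """One-way time of flight from each element to the focal point."""
    fx, fz = focus
    return [math.hypot(fx - x, fz - z) / speed_m_s for x, z in positions]


focus = (0.0, 0.05)  # hypothetical focus, 50 mm deep on the central axis
flat = focus_delays_s(flat_positions(32, 0.4e-3), focus)
curved = focus_delays_s(arc_positions(32, 0.4e-3, 0.10), focus)

# Correction to add to the delays that a flat-array beamformer would use.
correction = [c - f for c, f in zip(curved, flat)]
print(correction[0], correction[15])
```

In this geometry the outermost elements need the largest correction, which is consistent with the lateral resolution being the metric most affected by uncorrected curvature.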

Extended Data Fig. 2. Characterization of the effects of phase correction on imaging quality.

a, B-mode images of a line phantom obtained in different situations. Left, from a planar surface. Middle, from a curvilinear surface without phase correction. Right, from a curvilinear surface with phase correction. b, The axial and lateral resolutions at different depths under these three situations. No obvious difference in axial resolution was found because it is mainly dependent on the transducer frequency and bandwidth. The lateral resolution of the wearable imager was improved after phase correction. Images collected in the parasternal long-axis (PLAX) view of the heart (c) and the apical four-chamber view (d) when measured by a planar probe (left panel), a curved probe without phase correction (middle panel) and a curved probe with phase correction (right panel). The left ventricular boundaries are labelled by white dashed lines in the images. e, Comparison of measured cardiac indices showing the impact of phase correction. Each measurement is based on the mean of five consecutive cardiac cycles (n = 5). The standard deviations are indicated by the error bars.

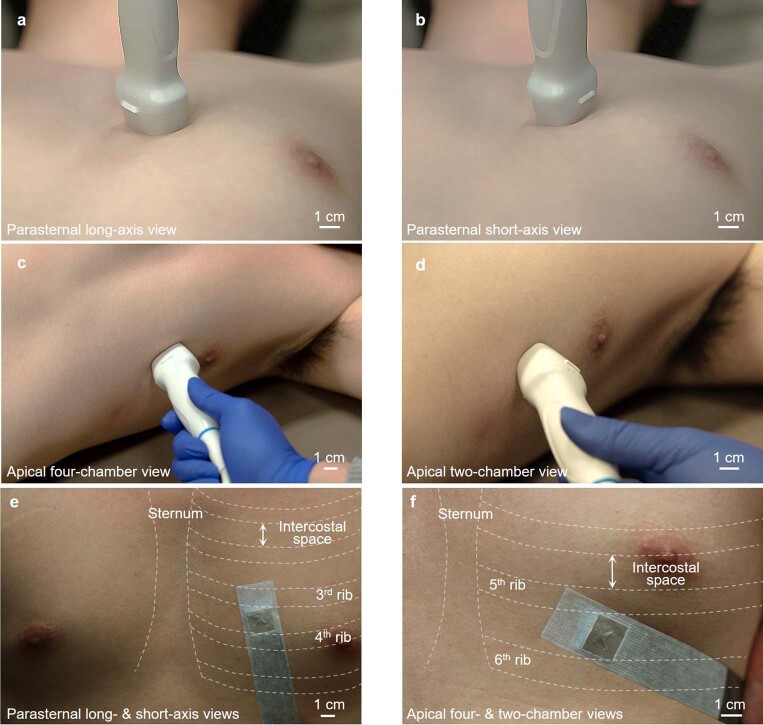

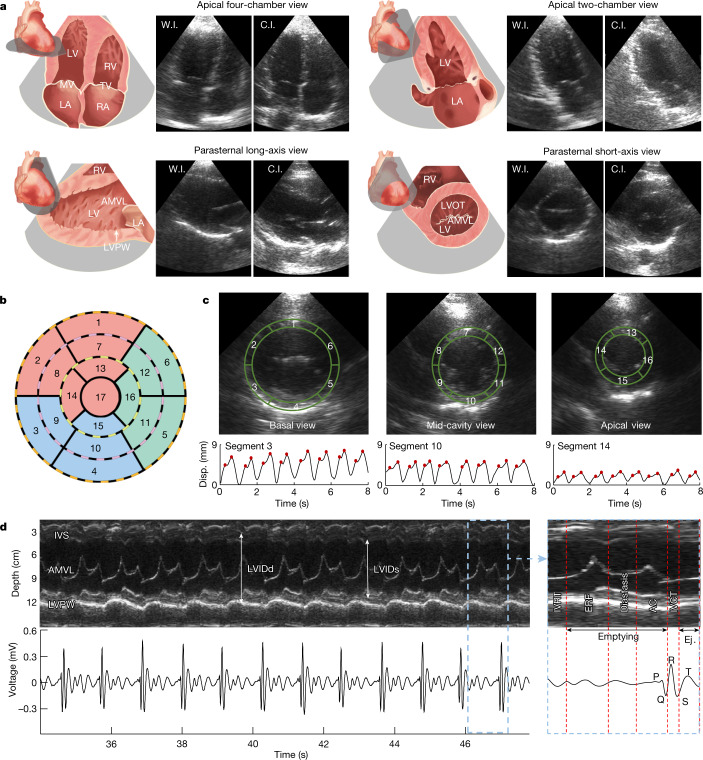

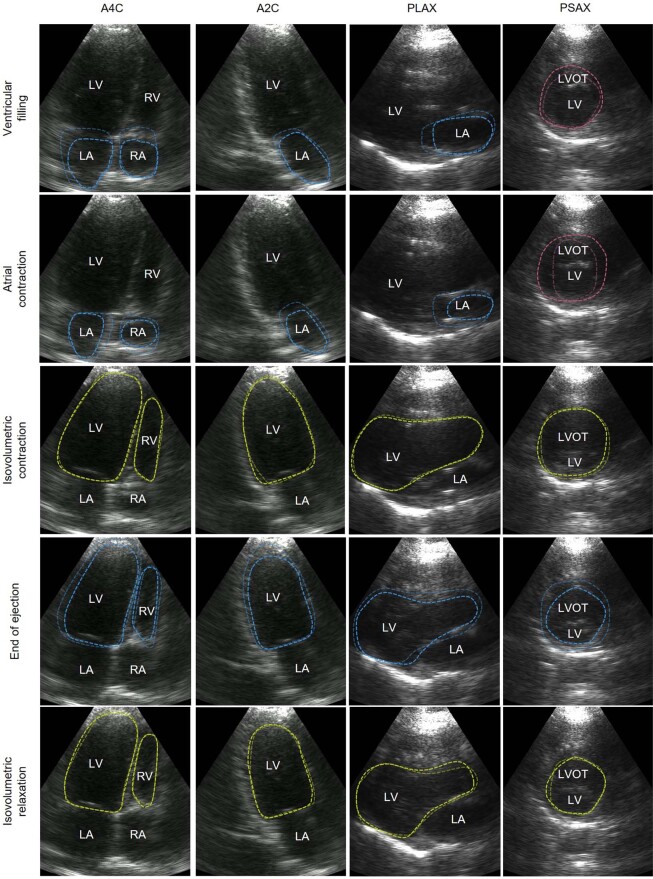

We compared the performance of the wearable device with a commercial device in four primary views of echocardiography, in which critical cardiac features can be identified (Extended Data Fig. 3). Figure 3a shows the schematics and corresponding B-mode images of these four views, including apical four-chamber view, apical two-chamber view, parasternal long-axis view and parasternal short-axis view. The difference between the results from the wearable and commercial devices is negligible. The parasternal short-axis view is particularly useful for evaluating the contractile function of the myocardium based on its motion in the radial direction and its relative thickening, as both are easily seen from this view. During contraction and relaxation, healthy myocardium undergoes strain and the wall thickness changes accordingly: thickening during contraction and thinning during relaxation. The strength of the left ventricle’s contractile function can be directly reflected on the ultrasound image through the magnitude of the myocardial strain. Abnormalities in the contractile function, such as akinesia, can be indicative of ischaemic heart disease and myocardial infarction33.

Extended Data Fig. 3. Optical images showing positions and orientations for ultrasound heart imaging.

a, Parasternal long-axis view. b, Parasternal short-axis view. c, Apical four-chamber view. d, Apical two-chamber view. The orthogonal wearable cardiac imager combines parasternal long-axis and short-axis views (e) and apical four-chamber and apical two-chamber views without rotation (f). The wearable imager can capture two parasternal views from a single position or two apical views from another single position. The sternum and ribs are labelled to indicate intercostal spaces.

Fig. 3. Echocardiography in several standard views.

a, Schematics and B-mode images of cardiac anatomies from the wearable and commercial imagers. The wearable imager was placed in the parasternal position for imaging in the parasternal long-axis and short-axis views and relocated at the apical position for imaging in the apical four-chamber and two-chamber views. b, 17-segment model representation of the left ventricular wall. Each of the concentrically nested rings that make up the circular plot represents the parasternal short-axis view of the myocardial wall from a different level of the left ventricle. c, B-mode images of the left ventricle in basal, mid-cavity and apical views (top row) and corresponding typical displacement for segments 3, 10 and 14, respectively (bottom row). The physical regions of the left ventricular wall represented by each segment of the 17-segment model have been labelled on the corresponding short-axis views. The peaks are marked with red dots. d, M-mode images (upper left) extracted from parasternal long-axis view and corresponding electrocardiogram signals (lower left). A zoomed-in plot shows the different phases of a representative cardiac cycle (right). Primary events include diastole and opening of the mitral valve during the P-wave of the electrocardiogram, opening of the aortic valve and systole during the QRS complex and closure of the aortic valve during the T-wave. AC, atrial contraction; AMVL, anterior mitral valve leaflet; C.I., commercial imager; ERF, early rapid filling; Ej., ejection; IVCT, isovolumetric contraction time; IVRT, isovolumetric relaxation time; IVS, interventricular septum; LA, left atrium; LV, left ventricle; LVIDd, left ventricular internal diameter end diastole; LVIDs, left ventricular internal diameter end systole; LVOT, left ventricular outflow tract; LVPW, left ventricular posterior wall; MV, mitral valve; RA, right atrium; RV, right ventricle; TV, tricuspid valve; W.I., wearable imager.

To better localize the specific segment of the left ventricular wall that is potentially pathological, the 17-segment model can be adopted as in standard clinical practice33 (Fig. 3b). We took the basal, mid-cavity and apical slices of the parasternal short-axis view from the left ventricular wall, and divided them into segments according to the model. Each segment is linked to a certain coronary artery, allowing ischaemia in the coronary arteries to be localized on the basis of akinesia in the corresponding myocardial segment33. We then recorded the displacement waveforms of the myocardium boundaries (Fig. 3c and Supplementary Discussion 6). The two peaks in each cardiac cycle in the displacement curves correspond to the two inflows into the left ventricle during diastole. The wall displacements, as measured in the basal, mid-cavity and apical views, become sequentially smaller owing to the decreasing radius of the myocardium along the conical shape of the left ventricle.

Motion-mode (M-mode) images track activities over time in a one-dimensional target region34,35. We extracted M-mode images from parasternal long-axis view B-mode images (Fig. 3d). Primary targets include the left ventricular chamber, the septum and the mitral/aortic valves. In M-mode, structural information, such as the myocardial thickness and the left ventricular diameter, can be tracked according to the distances between the boundaries of features. Valvular functions, for example, their opening and closing velocities, can be evaluated on the basis of the distance between the leaflet and septal wall (Supplementary Discussion 1). Moreover, we can correlate the mechanical activities in the M-mode images with the electrical activities in the electrocardiogram measured simultaneously during different phases in a cardiac cycle (Fig. 3d and Supplementary Discussions 1 and 6).
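Extracting an M-mode image from a B-mode recording amounts to taking the same scan line from every frame and stacking the lines along a time axis. The sketch below is a minimal illustration with hypothetical data; it assumes that each frame is a list of rows indexed as `frame[depth][lateral]` and that the scan line of interest is a single vertical column, whereas a clinical M-mode line may be oblique.

```python
def extract_m_mode(frames, column):
    """Return an image indexed as [depth][time] from one column of each frame."""
    if not frames:
        raise ValueError("at least one frame is required")
    depth = len(frames[0])
    return [[frame[row][column] for frame in frames] for row in range(depth)]


# Three hypothetical 4 x 3 frames in which a bright "wall" along column 1
# moves one sample deeper in each successive frame.
frames = []
for t in range(3):
    frame = [[0] * 3 for _ in range(4)]
    frame[t + 1][1] = 255
    frames.append(frame)

m_mode = extract_m_mode(frames, column=1)
for row in m_mode:
    print(row)
```

The diagonal trace in the output is the wall trajectory over time; distances between such traces give quantities such as the left ventricular internal diameter.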

Monitoring during motion

Stress echocardiography assesses cardiac responses to stress induced by exercise or pharmacologic agents, which may include new or worsened ischaemia presenting as wall-motion abnormalities, and is crucial in the diagnosis of coronary artery diseases36. Furthermore, subjects with heart failure may sometimes seem asymptomatic at rest, as the heart sacrifices its efficiency to maintain the same cardiac output37,38. Thus, by pushing the heart towards its limits during exercise, the lack of efficiency becomes apparent. However, in current procedures, ultrasound images are obtained only before and after exercise. With the cumbersome apparatus, it is impossible to acquire data during exercise, which may contain invaluable real-time insights when new abnormalities initiate39 (Supplementary Discussion 7). Also, because images are traditionally obtained after exercise, a quick recovery can mask any transient pathologic response during stress and lead to false-negative examinations40. In addition, the end point for terminating the exercise is subjective, which may result in suboptimal testing.

The wearable ultrasonic patch is ideal for overcoming these challenges. The device can be attached to the chest with minimal constraint to the movement of the subject, providing a continuous recording of cardiac activities before, during and after exercise with negligible motion artefacts (Extended Data Fig. 4). This not only captures the real-time responses during the test but also offers objective data to standardize the end point and enhances patient safety throughout the test (Supplementary Discussion 7). We used liquidus silicone as the couplant to achieve images of stable quality instead of water-based ultrasound gels that evaporate over time (Supplementary Figs. 20 and 21 and Supplementary Discussion 8). We observed no skin irritation or allergy after 24 h of continuous wear (Supplementary Fig. 22). The heart rate of the subject remained stable with a constant device temperature of about 32 °C after the device continuously worked for 1 h (Supplementary Fig. 23). In addition, one device was tested on different subjects (Supplementary Fig. 24). The reproducible results indicate the stable and reliable performance of the wearable imager.

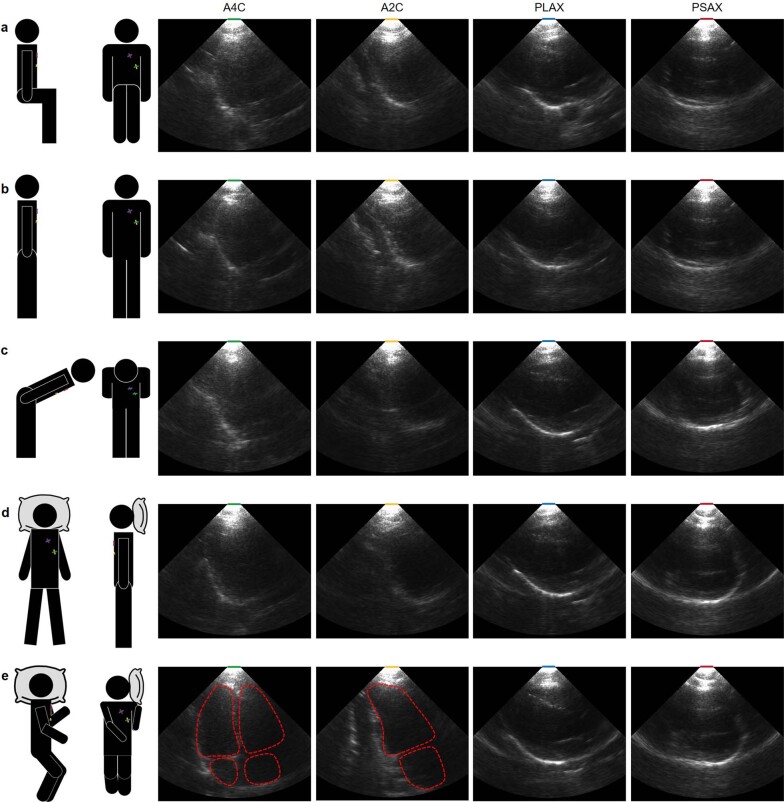

Extended Data Fig. 4. B-mode images collected from a subject with different postures.

The four views collected when the subject is sitting (a), standing (b), bending over (c), lying flat (d) and lying on their side (e). The PLAX and PSAX views retain their quality in different postures, whereas good-quality A4C and A2C views can only be obtained when the subject is lying on their side. A2C, apical two-chamber view; A4C, apical four-chamber view; PLAX, parasternal long-axis view; PSAX, parasternal short-axis view.

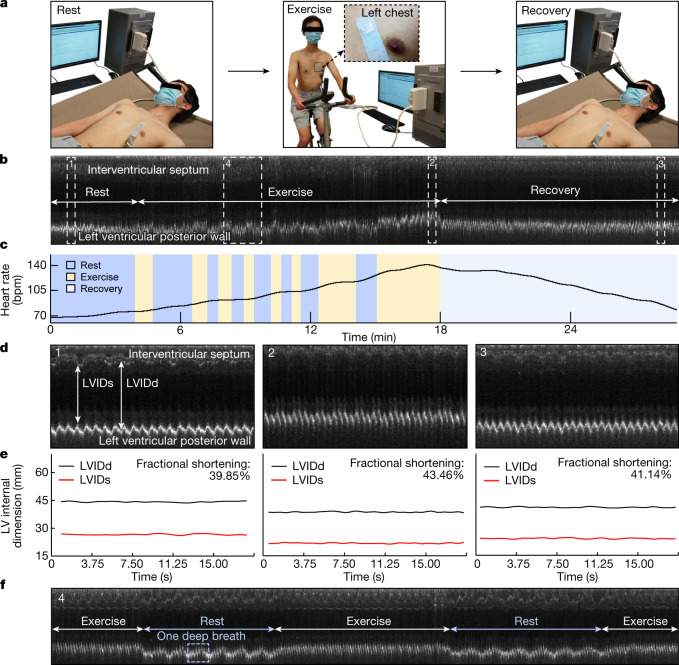

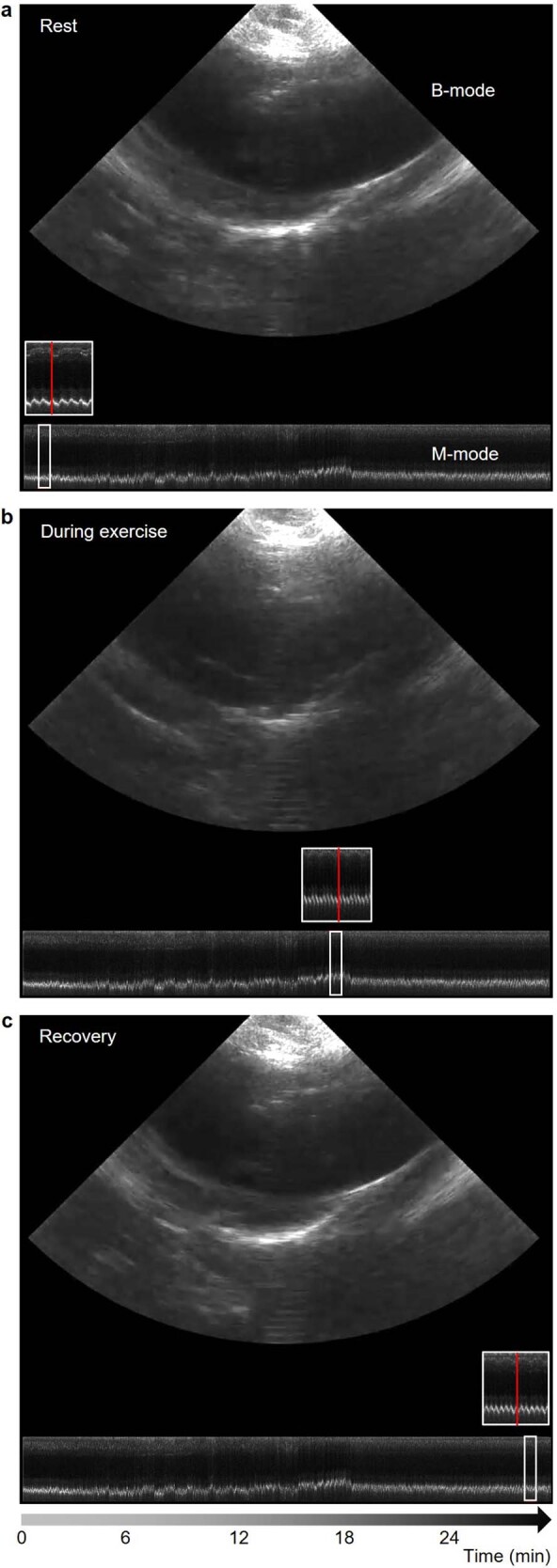

We performed stress echocardiography to demonstrate the performance of the device during exercise (Supplementary Discussion 7). The device was attached to the subject for continuous recording along the parasternal long axis during the entire process, which consisted of three main stages (Fig. 4a). In the rest stage, the subject lay in the supine position. In the exercise stage, the subject exercised on a stationary bike with several intervals of rest until a possible maximal heart rate was reached. In the recovery stage, the subject was placed in the supine position again. The device demonstrated uninterrupted tracking of the left ventricular activities, including the corresponding M-mode echocardiography and synchronized heart-rate waveform (Fig. 4b,c, Extended Data Fig. 5 and Supplementary Video 3). We examined a representative section of each testing stage and extracted the left ventricular internal diameter end systole (LVIDs) and left ventricular internal diameter end diastole (LVIDd) (Fig. 4d). The LVIDs and LVIDd of the subject remained stable during the rest stage (Fig. 4e). In the exercise stage, the interventricular septum and left ventricular posterior wall of the subject moved closer to the skin surface, with the latter moving more than the former, resulting in a decrease in LVIDs and LVIDd. In the recovery stage, the LVIDs and LVIDd slowly returned to normal. The variation in fractional shortening, a measure of the cardiac muscular contractility, reflects the changing demand for blood supply in different stages of stress echocardiography (Fig. 4e). In particular, section 4 in Fig. 4b includes periods of exercise and intervals for rest, when patterns of a deep breath can also be seen from the left ventricular posterior wall motions (Fig. 4f).
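Fractional shortening follows directly from the two diameters: it is the reduction in left ventricular internal diameter from end diastole to end systole, expressed as a percentage of the end-diastolic diameter. The sketch below applies this standard definition to hypothetical diameters that are not taken from the recording.

```python
def fractional_shortening_percent(lvidd_mm, lvids_mm):
    """FS (%) = (LVIDd - LVIDs) / LVIDd x 100."""
    if lvidd_mm <= 0:
        raise ValueError("LVIDd must be positive")
    return (lvidd_mm - lvids_mm) / lvidd_mm * 100.0


# Hypothetical diameters for a single beat.
fs = fractional_shortening_percent(lvidd_mm=48.0, lvids_mm=30.0)
print(fs)  # 37.5
```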

Fig. 4. Monitoring during motion.

a, Three stages of stress echocardiography. In the rest stage, the subject lay supine for around 4 min. In the exercise stage, the subject rode a stationary bike for about 15 min, with intervals for rest. In the recovery stage, the subject lay supine again for about 10 min. The wearable imager was attached to the chest of the subject throughout the entire test, even during the transitions between the stages. b, Continuous M-mode echocardiography extracted from the parasternal long-axis-view B-mode images of the entire process. Key features of the interventricular septum and left ventricular posterior wall are identified. The stages of rest, exercise (with intervals of rest) and recovery are labelled. c, Variations in the heart rate extracted from the M-mode echocardiography. d, Zoomed-in images of sections 1 (rest), 2 (exercise) and 3 (recovery) (dashed boxes) in b. e, Left ventricular internal diameter end diastole (LVIDd) and left ventricular internal diameter end systole (LVIDs) waveforms of the three different sections of the recording and corresponding average fractional shortenings. f, Zoomed-in images of section 4 (dashed box) during exercise with intervals of rest in b. In the first interval, the subject took a rhythmic deep breath six times, whereas during exercise, there seem to be no obvious signs of a deep breath, probably because the subject switched from diaphragmatic (rest) to thoracic (exercise) breathing, which is shallower and usually takes less time.

Extended Data Fig. 5. Continuous cardiac imaging during rest, exercise and recovery.

Representative B-mode and M-mode images during rest (a), exercise (b) and recovery (c). The red line highlights the M-mode section corresponding to the current B-mode frame. More details can be seen in Supplementary Video 3.

Automatic image processing

Cardiovascular diseases are often associated with changes in the pumping capabilities of the heart, which could be measured by stroke volume, cardiac output and ejection fraction. Non-invasive, continuous monitoring of these indices is valuable for the early detection and surveillance of cardiovascular conditions (Supplementary Discussion 9). Critical information embodied in these waveforms may help precisely determine potential risk factors and track the health state41 (Supplementary Discussion 10). On the other hand, processing of the unprecedented image data streams, if done manually, can be overwhelming for clinicians, which potentially introduces interobserver variability or even errors42.

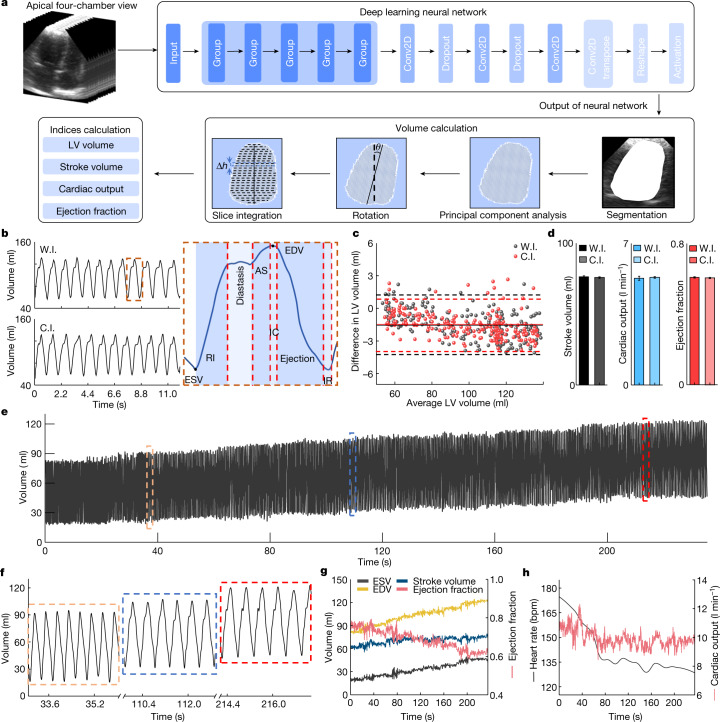

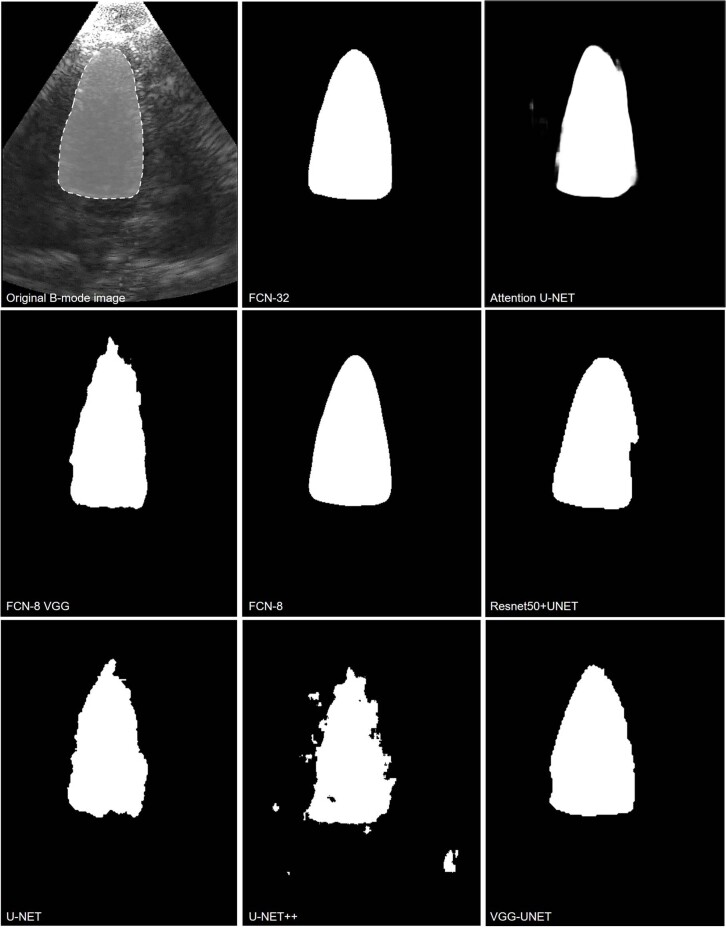

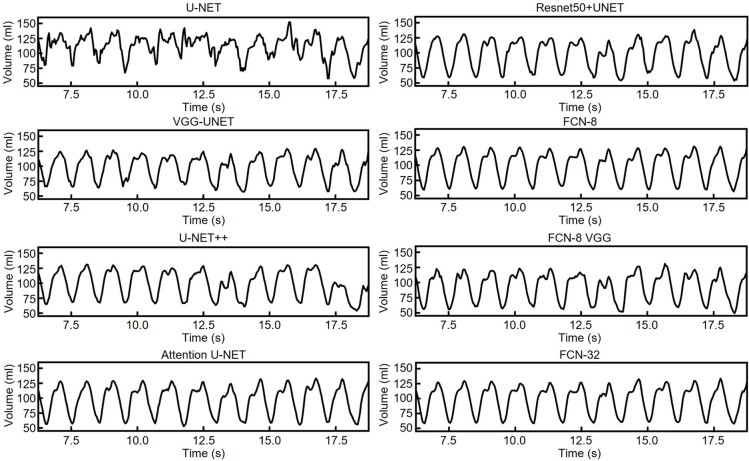

Automatic image processing can overcome these challenges. We applied a deep learning neural network to extract key information (for example, the left ventricular volume in apical four-chamber view) from the continuous stream of images (Fig. 5a, Supplementary Fig. 25 and Supplementary Discussion 11). We evaluated different types of deep learning models43 through the output images and waveforms of the left ventricular volume (Extended Data Figs. 6 and 7, Supplementary Table 3 and Supplementary Video 4). The FCN-32 model outperforms others based on qualitative and quantitative analyses (Supplementary Fig. 26, Supplementary Tables 4 and 5 and Supplementary Discussion 11). We also applied data augmentation to expand the dataset and improve the performance (Supplementary Fig. 27 and Supplementary Discussion 11).
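Segmentation models of this kind are commonly compared by the intersection over union between a predicted mask and a manually labelled mask. The sketch below computes this score for binary masks stored as nested lists; the masks are hypothetical, and the function is an illustration rather than the evaluation code used in this study. Averaging the score over classes or frames gives a mean intersection over union.

```python
def intersection_over_union(predicted, truth):
    """IoU of two binary masks given as equally sized nested lists of 0/1."""
    intersection = 0
    union = 0
    for pred_row, true_row in zip(predicted, truth):
        for p, t in zip(pred_row, true_row):
            intersection += 1 if (p and t) else 0
            union += 1 if (p or t) else 0
    # Two empty masks agree perfectly by convention.
    return 1.0 if union == 0 else intersection / union


# Hypothetical 2 x 2 masks: the prediction covers one extra pixel.
predicted_mask = [[1, 1], [0, 0]]
labelled_mask = [[1, 0], [0, 0]]
print(intersection_over_union(predicted_mask, labelled_mask))  # 0.5
```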

Fig. 5. Automatic image processing by deep learning.

a, Schematic workflow. Pre-processed images are used to train the FCN-32 model. The trained model accepts the unprocessed images and predicts the left ventricular (LV) volume, based on which stroke volume, cardiac output and ejection fraction are derived. b, Left ventricular volume waveform generated by the FCN-32 model from both the wearable imager (W.I.) and the commercial imager (C.I.) (left). Critical features are labelled in one detailed cardiac cycle (right). c, Bland–Altman analysis of the average of (x axis) and the difference between (y axis) the model-generated and manually labelled left ventricular volumes for the wearable (black) and commercial (red) imagers. Dashed lines indicate the 95% confidence interval and about 95% of the data points are within the interval for both imagers. Solid lines indicate mean differences. d, Comparing the stroke volume, cardiac output and ejection fraction extracted from results by the wearable and commercial imagers. Data are mean and s.d. from twelve cardiac cycles (n = 12). e, The model-generated left ventricular volume waveform in the recovery stage. f, Three representative sections of the recording from the initial, middle and end stages of e. End-systolic volume (ESV), end-diastolic volume (EDV), stroke volume and ejection fraction (g) and cardiac output and heart rate waveforms (h) derived from the left ventricular volume waveform. The end-systolic volume and end-diastolic volume gradually recover to the normal range in the end section. The stroke volume increases from about 60 ml to about 70 ml. The ejection fraction decreases from about 80% to about 60%. The cardiac output decreases from about 11 l min−1 to about 9 l min−1, indicating that the decreasing heart rate from about 175 bpm to about 130 bpm overshadowed the increasing stroke volume. AS, atrial systole; IC, isovolumetric contraction; IR, isovolumetric relaxation; RI, rapid inflow.

Extended Data Fig. 6. Segmentation results of the left ventricle with different deep learning models.

By qualitatively evaluating the result, we found no ‘jitteriness’ in Supplementary Video 4. The segmented left ventricle contracts and relaxes as naturally as the B-mode video. The segmentation boundaries are smooth with the highest fidelity. Compared with the original B-mode image, the FCN-32 model has the best agreement among all models used in this study.

Extended Data Fig. 7. Waveforms of the left ventricular volume obtained with different deep learning models.

These waveforms are from segmenting the same B-mode video. Qualitatively, the waveform generated by the FCN-32 model has the best stability and the least noise, and the waveform morphology is more consistent from cycle to cycle. Quantitatively, the comparison of these models is in Supplementary Fig. 26, which shows that the FCN-32 model has the highest mean intersection over union, indicating the best performance in this study.

The output left ventricular volumes for the wearable and commercial imagers show similar waveform morphologies (Fig. 5b, left). From the waveforms, corresponding phases of a cardiac cycle can be identified (Fig. 5b, right and Extended Data Fig. 8). Bland–Altman analysis gives a quantitative comparison between the model-generated and manually labelled left ventricular volumes, indicating a stable and reliable performance of the FCN-32 model44 (Fig. 5c and Supplementary Discussion 11). The mean differences in the left ventricular volume are both approximately −1.5 ml, which is acceptable for standard medical diagnosis45. We then derived stroke volume, cardiac output and ejection fraction from the left ventricular volume waveforms. No marked difference is observed in the averages or standard deviations between the two devices (Fig. 5d). The results verified the comparable performance of the wearable imager to the commercial imager.
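A Bland–Altman comparison reduces to the mean of the paired differences (the bias) and an interval of about 1.96 standard deviations around it, usually called the limits of agreement. The sketch below is a minimal illustration with hypothetical volumes; the values are not study data, and the function name is an assumption.

```python
import statistics


def bland_altman(method_a, method_b):
    """Return (bias, lower limit, upper limit) for paired measurements."""
    differences = [a - b for a, b in zip(method_a, method_b)]
    bias = statistics.mean(differences)
    spread = 1.96 * statistics.stdev(differences)  # sample standard deviation
    return bias, bias - spread, bias + spread


# Hypothetical left ventricular volumes in ml (not study data).
model_generated = [100.0, 110.0, 120.0, 130.0]
manually_labelled = [101.0, 112.0, 119.0, 133.0]

bias, lower, upper = bland_altman(model_generated, manually_labelled)
print(bias, lower, upper)
```

In the plot, each point has the average of the two measurements on the x axis and their difference on the y axis, with the bias and the two limits drawn as horizontal lines.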

Extended Data Fig. 8. Different phases in a cardiac cycle obtained from B-mode imaging.

The rows are B-mode images of A4C, A2C, PLAX and PSAX views in the same phase. The columns are B-mode images of the same view during ventricular filling, atrial contraction, isovolumetric contraction, end of ejection and isovolumetric relaxation. The dashed lines highlight the main features of the current phase. Bluish lines indicate a shrinking volume of the labelled chamber, reddish lines indicate an expanding volume and yellowish lines indicate an unchanged volume. A2C, apical two-chamber view; A4C, apical four-chamber view; LA, left atrium; LV, left ventricle; LVOT, left ventricular outflow tract; RA, right atrium; RV, right ventricle; PLAX, parasternal long-axis view; PSAX, parasternal short-axis view.

The left ventricular volume is constantly changing and generally follows a steady-state pattern at rest in healthy subjects. Therefore, stroke volume, cardiac output and ejection fraction also tend to remain constant. However, cardiac pathologies or ordinary daily activities such as exercise may dynamically change those indices. To validate the performance of the wearable imager under dynamic situations, we extracted the left ventricular volume from recordings in the recovery stage of stress echocardiography (Fig. 5e). The dimensions of the left ventricle cannot be accurately determined when the images are collected in the standing position, owing to anatomical limitations of the human body (Supplementary Fig. 28 and Supplementary Discussion 9). Owing to the deep breathing after exercise, the heart was sometimes blocked by the lungs in the image. We used an image-imputation algorithm to complement the blocked part (Supplementary Fig. 29 and Supplementary Discussion 11). The acquired waveform shows an increasing trend in the left ventricular volume. Figure 5f illustrates three representative sections of the recording taken from the beginning, middle and end of the recovery stage. In the initial section, the diastasis stage is barely noticeable because of the high heart rate. In the middle section, the diastasis stage becomes visible. In the end section, the heart rate decreases notably. The end-diastolic and end-systolic volumes are increasing, because the slowing heartbeat during recovery allows more time for blood to fill the left ventricle46 (Fig. 5g). The stroke volume gradually increases, indicating that the end-diastolic volume increases slightly faster than the end-systolic volume (Fig. 5g). The ejection fraction decreases as heart contraction decreases during the recovery (Fig. 5g). The cardiac output reduces, indicating a larger influence brought about by the decreasing heart rate than the increasing stroke volume (Supplementary Discussion 9).
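The relationships among these indices follow from their standard definitions: stroke volume is the end-diastolic volume minus the end-systolic volume, ejection fraction is stroke volume divided by end-diastolic volume, and cardiac output is stroke volume multiplied by heart rate. The sketch below applies them to one cardiac cycle of a volume waveform, assuming that the end-diastolic and end-systolic volumes are the maximum and minimum of that cycle; the function names and units are illustrative.

```python
def cardiac_indices(edv_ml, esv_ml, heart_rate_bpm):
    """Stroke volume (ml), ejection fraction (%) and cardiac output (l/min)."""
    stroke_volume_ml = edv_ml - esv_ml
    ejection_fraction_pct = 100.0 * stroke_volume_ml / edv_ml
    cardiac_output_l_min = stroke_volume_ml * heart_rate_bpm / 1000.0
    return stroke_volume_ml, ejection_fraction_pct, cardiac_output_l_min


def indices_from_cycle(volumes_ml, heart_rate_bpm):
    """Take EDV and ESV as the extremes of one cycle (assumed convention)."""
    return cardiac_indices(max(volumes_ml), min(volumes_ml), heart_rate_bpm)
```

Because cardiac output is a product, it can fall during recovery even while stroke volume rises, provided the heart rate drops by a larger factor.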

Discussion

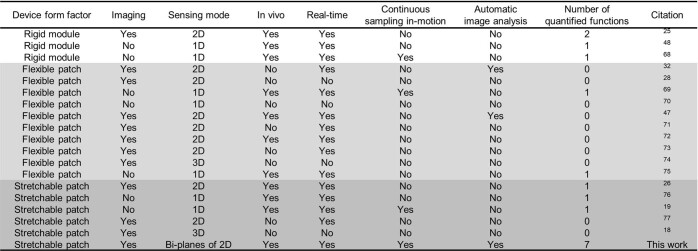

Echocardiography is crucial in the diagnosis of cardiac diseases, but the current implementation in clinics is cumbersome and limits its application in continuous monitoring. Emerging technologies based on wearable rigid modules25 or flexible patches47 lack one or more of the ideal properties of wearable ultrasound technologies (Extended Data Table 2). In this work, we provided uninterrupted frame-by-frame acquisitions of cardiac images even when the subject was undertaking intensive exercise. In addition, the wearable imager with deep learning gave actionable information by automatically and continuously outputting curves of critical cardiac metrics, such as myocardial displacement, stroke volume, ejection fraction and cardiac output, which are highly desirable in critical care, cardiovascular disease management and sports science48. This capability is unprecedented in conventional clinical practice9 and the non-invasiveness can extend potential benefits to the outpatient and athletic populations.

Extended Data Table 2.

Summary of wearable ultrasonic devices for continuous monitoring of deep tissues

‘Number of quantified functions’ means the number of physiological signals quantified from the images. For example, the number of quantified functions in this work is seven because we can extract myocardium displacement, left ventricular internal diameter, fractional shortening, stroke volume, ejection fraction, cardiac output and heart rate from those B-mode images.

The implications of this technology go far beyond imaging the heart, as it can be generalized to image other deep tissues, such as the inferior vena cava, abdominal aorta, spine and liver (Supplementary Fig. 30). For example, as demonstrated in an ultrasound-guided biopsy procedure on a cyst phantom (Supplementary Fig. 31), the two orthogonal imaging sections present the entire biopsy process simultaneously, freeing up one hand of the operator (Supplementary Video 5). The uniquely enabling capability of this technology forgoes the need for an operator to constantly hold the device.

Future work could further improve the spatial resolution (Supplementary Fig. 32). A three-dimensional scanner can only provide the curvature of a static human chest. To accommodate the dynamic chest curvature, advanced imaging algorithms need to be developed to compensate for the phase distortion and thus improve the spatial resolution. In addition, the wearable imager is connected to the back-end system for data processing by a flexible cable (Supplementary Fig. 33), so future work needs to focus on system miniaturization and integration. Furthermore, the FCN-32 neural network can at present only be applied to subjects in the training dataset. Its generalizability could potentially be improved by expanding the training dataset or optimizing the network with few-shot-learning49 or reinforcement-learning50 strategies, which would allow the model to adapt to a larger population.

Methods

Materials

Gallium–indium eutectic liquid metal, toluene, ethyl alcohol, acetone and isopropyl alcohol were purchased from Sigma-Aldrich. SEBS (G1645) was obtained from Kraton. Silicone (Ecoflex 00-30) was bought from Smooth-On as the encapsulation material of the device. Silicone (Silbione) was obtained from Elkem Silicones as the ultrasound couplant. Aquasonic ultrasound transmission gel was bought from Parker Laboratories. 1-3 composite (PZT-5H) was purchased from Del Piezo Specialties. Silver epoxy (Von Roll 3022 E-Solder) was obtained from EIS. Anisotropic conductive film cable was purchased from Elform.

Design and fabrication of the wearable imager

We designed the transducer array in an orthogonal geometry, similar to a Mills cross array (Supplementary Fig. 34), to achieve biplane standard views simultaneously. For the transducers, we chose the 1-3 composite for transmitting and receiving ultrasound waves because it possesses superior electromechanical coupling18. In addition, the acoustic impedance of 1-3 composites is close to that of the skin, maximizing the acoustic energy propagating into human tissues19. The backing layer dampens the ringing effect, broadens the bandwidth and thus improves the spatial resolution18,51.

We used an automatic alignment strategy to fabricate the orthogonal array. The existing method of bonding the backing layer to the 1-3 composite was to first dice many small pieces of backing layer and 1-3 composite and then bond each pair together one by one, using a template to align the small pieces. This method was very inefficient. In this study, we bonded a large piece of backing layer to a large piece of 1-3 composite and then diced them together into small pieces with the designed configurations. The diced array was then automatically aligned on adhesive tape with high uniformity and accurate alignment.

Electrodes based on eutectic gallium–indium liquid metal are fabricated to achieve better stretchability and higher fabrication resolution than existing electrodes based on serpentine-shaped copper thin film. Eutectic gallium–indium alloys are typically patterned through approaches such as stencil lithography52, masked deposition53, inkjet printing54, microcontact printing55 or microfluidic channelling56. Although these approaches are reliable, they are either limited in patterning resolution or require sophisticated photolithography or printing hardware. The sophisticated hardware makes fabrication complicated and time-consuming, which presents a challenge in the development of compact, skin-conformal wearable electronics.

In this study, we exploited a new technology for patterning. We first screen-printed a thin layer of liquid metal on a substrate. A key consideration before screen printing was how to get the liquid metal to wet the substrate. To solve this problem, we dispersed big liquid metal particles into small microparticles using a tip sonicator (Supplementary Fig. 2). When microparticles contacted air, their outermost layer generated an oxide coating, which lowered the surface tension and prevented those microparticles from aggregating. In addition, we used 1.5 wt.% SEBS as a polymer matrix to disperse the liquid metal particles because SEBS could wet well on the liquid metal surface. We also used SEBS as the substrate. Therefore, the SEBS in the matrix and the substrate could merge and cure together after screen printing, allowing the liquid metal layer to adhere to the substrate efficiently and uniformly. Then we used laser ablation to selectively remove the liquid metal from the substrate to form patterned electrodes.

The large number of piezoelectric transducer elements in the array requires many such electrodes to address each element individually. We designed a four-layered top electrode and a common ground electrode. There are SEBS layers between different layers of liquid metal electrodes as insulation. To expose all electrode layers to connect to transducer elements, we used laser ablation to drill vertical interconnect accesses21. Furthermore, we created a stretchable shielding layer using liquid metal and grounded it through a vertical interconnect access, which effectively protected the device from external electromagnetic noises (Supplementary Fig. 8).

Before we attached the electrodes to the transducer array, we spin-coated a toluene–ethanol solution (volume ratio 8:2) on top of the multilayered electrode to soften the liquid-metal-based elastomer, a process known as ‘solvent welding’. The softened SEBS provided a sufficient contact surface, which helped form relatively strong van der Waals interactions between the electrodes and the metal on the transducer surface. After bonding the electrodes to the transducer array, we left the device at room temperature to let the solvent evaporate. The final bonding strength of more than 200 kPa is stronger than that of many commercial adhesives22.

To encapsulate the device, we irrigated the device in a petri dish with uncured silicone elastomer (Ecoflex 00-30, Smooth-On) to fill the gap between the top and bottom electrodes and the kerf among the transducer elements. We then cured the silicone elastomer in an oven for 10 min at 80 °C. As the filling material, it suppresses spurious shear waves from adjacent elements, effectively isolating crosstalk between the elements18,19. That said, we think the main reason for the suppressed spurious shear waves is the epoxy in the 1-3 composite, which limits the lateral vibration of the piezoelectric materials. The Ecoflex filling may have contributed but did not play the chief role because the kerf is narrow, only 100 to 200 μm. We lifted off the glass slide on the top electrode and directly covered the top electrode with a shielding layer. Then we lifted off the glass slide on the bottom electrode to release the entire device. Finally, screen-printing an approximately 50-μm layer of silicone adhesive on the device surface completed the fabrication.

Characterization of the liquid metal electrode

Existing wearable ultrasound arrays can achieve excellent stretchability by serpentine-shaped metal thin films as electrodes19,26. The serpentine geometry, however, severely limits the filling ratio of functional components, precluding the development of systems that require a high integration density or a small pitch. In this study, we chose to use liquid metal as the electrode owing to its large intrinsic stretchability, which makes the high-density electrode possible. The patterned liquid metal electrode had a minimum width of about 30 μm with a groove of about 24 μm (Supplementary Fig. 3), an order of magnitude finer than other stretchable electrodes18,26,57. The liquid metal electrode is ideal for connecting arrays with a small pitch58.

This liquid metal electrode exhibited high conductivity, exceptional stretchability and negligible resistance change under tensile strain (Fig. 1b and Supplementary Fig. 4). The initial resistance at 0% strain was 1.74 Ω (corresponding to a conductivity of around 11,800 S m−1), comparable with reported studies59,60. The resistance gradually increased with strain until the electrode reached the approximately 750% failure strain (Fig. 1b and Supplementary Fig. 4). The relative resistance is a parameter widely used to characterize the change in the resistance of a conductor (that is, the liquid metal electrode in this case) under different strains relative to the initial resistance58–60. The relative resistance is unitless. When the strain was 0%, the initial resistance R0 was 1.74 Ω. When the electrode was under 750% strain, the electrode was broken and the resistance R at the breaking point was measured to be 44.87 Ω. Therefore, the relative resistance (R/R0) at the breaking point was 25.79.

To investigate electrode fatigue, we subjected the electrodes to cyclic tensile strain of 100% (Fig. 1c). During the initial 500 cycles, the electrode resistance gradually increased because stretching the liquid metal exposed new surfaces. These new surfaces oxidized on contact with air, which increased the resistance (Supplementary Fig. 4). After the initial 500 cycles, the liquid metal electrode exhibited stable resistance because few new surfaces were being exposed.

This study is the first to use liquid-metal-based electrodes to connect ultrasound transducer elements. The bonding strength between them directly determines the robustness and endurance of the device, which is especially critical for a wearable patch that is subjected to repeated deformation during use. Therefore, we characterized the bonding strength of the electrode to the transducer element using a lap shear test. The liquid metal electrode was first bonded to the transducer element. The other sides of the electrode and the element were both fixed to stiff supporting layers, which were clamped by the tensile grips of the testing machine because clamping the samples directly would damage them. Uniaxial stretching was then applied to the sample at a strain rate of 0.5 s−1, and the test was stopped when the electrode delaminated from the transducer element. A pure SEBS film was also bonded to a transducer element and tested using the same method. The peak values of the curves were used to represent the lap shear strength (Fig. 1d). The bonding strength between the pure SEBS film and the transducer element was roughly 250 kPa, and that between the electrode and the transducer element was about 236 kPa, both stronger than many commercial adhesives (Supplementary Table 2). The results indicate robust bonding between the electrode and the element, which prevents the electrodes from delaminating under various deformations. This bonding does not impose any limitation on the ultrasound pressures that can be transduced.

Characterization of the transducer elements

The electromechanical coupling coefficient of the transducer elements was calculated to be 0.67, on par with that of commercial probes (0.58–0.69)61. This superior performance was largely owing to the technique for bonding transducer elements and electrodes at room temperature in this study, which protected the piezoelectric material from heat-induced damage and depolarization. The phase angle was >60°, substantially larger than most earlier studies18,62, indicating that most of the dipoles in the element aligned well after bonding63. The large phase angle also demonstrated the exceptional electromechanical coupling performance of the device. Dielectric loss is critical for evaluating the bonding process because it represents the amount of energy consumed by the transducer element at the bonding interface20. The average dielectric loss of the array was 0.026, on par with that of the reported rigid ultrasound probes (0.02–0.04)64–66, indicating negligible energy consumed by this bonding approach (Supplementary Fig. 1b). The response echo was characterized in time and frequency domains (Supplementary Fig. 1c), from which the approximately 35 dB signal-to-noise ratio and roughly 55% bandwidth were derived. The crosstalk values between a pair of adjacent elements and a pair of second nearest neighbours have been characterized (Supplementary Fig. 1d). The average crosstalk was below the standard −30 dB in the field, indicating low mutual interference between elements.

Characterization of the wearable imager

We characterized the wearable imager using a commercial multipurpose phantom with many reflectors of different forms, layouts and acoustic impedances at various locations (CIRS ATS 539, CIRS Inc.) (Supplementary Fig. 11). The collected data are presented in Extended Data Table 1. For most of the tests, the device was first attached to the phantom surface and rotated to ensure the best imaging plane. Raw image data were saved to guarantee minimum information loss caused by the double-to-int8 conversion. Then the raw image data were processed using the ‘scanConversion’ function provided in the k-Wave toolbox to restore the sector-shaped imaging window (restored data). We applied five times upsampling in both vertical and lateral directions. The upsampled data were finally converted to the dB unit using:

| 1 |
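A minimal sketch of a dB conversion is given below. It assumes the common amplitude convention of 20·log10 of each value normalized to the maximum of the image, with a display floor for zero or very small values; the normalization, the floor value and the function name are assumptions and may differ from the expression used in this study.

```python
import math


def to_db(amplitudes, floor_db=-60.0):
    """Convert non-negative envelope amplitudes to dB relative to the peak."""
    peak = max(amplitudes)
    out = []
    for a in amplitudes:
        if a <= 0:
            out.append(floor_db)  # log of zero is undefined, so clamp
        else:
            out.append(max(20.0 * math.log10(a / peak), floor_db))
    return out
```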

The penetration depth was tested with a group of lines of higher acoustic impedance than the surrounding background distributed at different depths in the phantom. The penetration depth is defined as the depth of the deepest line that is differentiable from the background (6 dB higher in pixel value). Because the deepest line available in this study was at a depth of 16 cm and was still recognizable from the background, the penetration depth was determined as >16 cm.

The accuracy is defined as the precision of the measured distance. The accuracy was tested with the vertical and lateral groups of line phantoms. The physical distance between the two nearest pixels in the vertical and lateral directions was calculated as:

| 2 |

| 3 |

We acquired the measured distance between two lines (shown as two bright spots in the image) by counting the number of pixels between the two spots and multiplying them by Δy or Δx, depending on the measurement direction. The measured distances at different depths were compared with the ground truth described in the data sheet. Then the accuracy can be calculated by:

| 4 |
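The distance measurement and accuracy calculation described above can be sketched as follows. The sketch assumes that accuracy is one minus the relative error of the measured distance against the data-sheet value; this form, the function names and the example numbers in the usage note are illustrative assumptions rather than the study's exact expression.

```python
def measured_distance_mm(pixel_count, pixel_spacing_mm):
    """Distance between two bright spots: pixel count times pixel spacing."""
    return pixel_count * pixel_spacing_mm


def accuracy(measured_mm, ground_truth_mm):
    """One minus the relative error (assumed definition)."""
    return 1.0 - abs(measured_mm - ground_truth_mm) / ground_truth_mm
```

For example, 49 pixels at a hypothetical spacing of 0.2 mm give 9.8 mm, which against a 10-mm ground truth corresponds to an accuracy of 0.98.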

The lateral accuracy was presented as the mean accuracy of the four neighbouring pairs of lateral lines at a depth of 50 mm in the phantom.

The spatial resolutions were tested using the lateral and vertical groups of wires. For the resolutions at different depths, the full width at half maximum of the point spread function in the vertical or lateral directions for each wire was calculated. The vertical and lateral resolutions could then be derived by multiplying the number of pixels within the full width at half maximum by Δy or Δx, depending on the measurement direction. The elevational resolutions were tested by rotating the imager to form a 45° angle between the imager aperture and the lines. Then the bright spot in the B-mode images would reveal scatters out of the imaging plane. The same process as calculating the lateral resolutions was applied to obtain the elevational resolutions. The spatial resolutions at different imaging areas were also characterized with the lateral group of wires. Nine wires were located at ±4 cm, ±3 cm, ±2 cm, ±1 cm and 0 cm from the centre. The lateral and axial resolutions of the B-mode images from those wires were calculated with the same method.
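The resolution calculation can be sketched as counting the pixels of a one-dimensional intensity profile through a wire that lie at or above half of the peak value, then multiplying by the pixel spacing. The sketch assumes a linear-scale profile with a single peak; the function names and the example profile are illustrative.

```python
def fwhm_pixels(profile):
    """Number of pixels spanned by the full width at half maximum."""
    half = max(profile) / 2.0
    above = [i for i, v in enumerate(profile) if v >= half]
    return above[-1] - above[0] + 1


def resolution_mm(profile, pixel_spacing_mm):
    """Resolution as the FWHM pixel count times the pixel spacing."""
    return fwhm_pixels(profile) * pixel_spacing_mm
```

If the profile is stored in dB rather than on a linear scale, the half-maximum threshold corresponds to 6 dB below the peak instead of half the peak value.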

Note that the lateral resolution worsens with the depth, mainly because of the receive beamforming (Supplementary Fig. 15). There are two beamformed signals, A and B. The lateral resolution of the A point (x1) is obviously better than that of the B point (x2). The fact that lateral resolution becomes worse with depth is inevitable in all ultrasound imaging, as long as receive beamforming is used.

As for different transmit beamforming methods, the wide-beam compounding is the best because it can achieve a synthetic focusing effect in the entire insonation area. The better the focusing effect, the higher the lateral resolution, which is why the lateral resolution of the wide-beam compounding is better than the other two transmit methods at the same depth. Furthermore, the multiple-angle scan used in the wide-beam compounding can enhance the resolution at high-angle areas. The multiple-angle scan combines transmissions at different angles to achieve a global high signal-to-noise ratio, resulting in improved resolutions.

The elevational resolution can only be characterized when the imaging target is directly beneath the transducer. Targets far from the centre are difficult to image, which makes their elevational resolutions challenging to calculate. To characterize the elevational resolution, the device must be rotated by 45°. In this case, most of the ultrasound waves reflected from off-centre wires cannot return to the device owing to the large incidence angles, so those wires are not captured in the B-mode images. One potential solution is to decrease the rotation angle of the device, which may help capture more laterally distributed wires in the B-mode image. However, a small rotation angle causes the elevational image to merge with the lateral image, which increases the error in calculating the elevational resolution. For these reasons, we only characterized the elevational resolution of imaging targets directly beneath the transducer array.

The contrast resolution, the minimum contrast that can be differentiated by the imaging system, was tested with greyscale objects. The collected B-mode images are shown in Fig. 2. Because the targets with +3 and −3 dB, the lowest contrast available in this study, could still be recognized in the images, the contrast resolution of the wearable imager is determined as <3 dB.

The dynamic range in an ultrasound system refers to the contrast range that can be displayed on the monitor. The contrast between an object and the background is indicated by the average grey value of all pixels in the object in the display. The grey value is linearly proportional to the contrast. The larger the contrast, the larger the grey value. Because the display window was using the data type ‘uint8’ to differentiate the greyscale, the dynamic range was defined as the contrast range with a grey value ranging from 0 to 255.

The object with −15 dB contrast has the lowest average grey value, whereas the object with +15 dB contrast has the highest (Supplementary Fig. 16). In our case, there are six objects with different contrasts to the background in the phantom. The highest grey value obtained from the object of +15 dB contrast was 159.8, whereas the lowest grey value from the object of −15 dB contrast was 38.7. We used a linear fit to extrapolate the contrasts when the corresponding average grey values were equal to 255 and 0, which corresponded to contrasts of 39.2 dB and −24.0 dB, respectively. Then the dynamic range was determined as:

$$\text{Dynamic range} = 39.2\ \text{dB} - (-24.0\ \text{dB}) = 63.2\ \text{dB} \tag{5}$$
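The extrapolation step can be sketched with an ordinary least-squares line through (contrast, grey value) pairs, solved for the contrasts at grey values 0 and 255. Only the two extreme objects have grey values quoted in the text, so the usage note uses just those two points; the function names are illustrative.

```python
def linear_fit(xs, ys):
    """Least-squares slope and intercept of ys against xs."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, my - slope * mx


def dynamic_range(contrasts_db, grey_values, grey_min=0.0, grey_max=255.0):
    """Contrasts (dB) at the grey-scale limits and their difference."""
    slope, intercept = linear_fit(contrasts_db, grey_values)
    low_db = (grey_min - intercept) / slope
    high_db = (grey_max - intercept) / slope
    return low_db, high_db, high_db - low_db
```

Calling `dynamic_range([-15.0, 15.0], [38.7, 159.8])` gives limits of roughly −24.6 dB and 38.6 dB. These differ slightly from the reported −24.0 dB and 39.2 dB, presumably because the reported fit used all six objects rather than only the two extremes.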

The dead zone is defined as the depth of the first line phantom that is not overwhelmed by the initial pulses. The dead zone was tested by imaging a specific set of wire phantoms with different depths right beneath the device (Supplementary Fig. 11, position 4) directly and measuring the line phantoms that were visible in the B-mode image.

The bandwidth of the imager is defined as the ratio between the full width at half maximum in the frequency spectrum and the centre frequency. It was measured by a pulse-echo test. A piece of glass was placed 4 cm away from the device and the reflection waveform was collected with a single transducer. The collected reflection waveform was converted to the frequency spectrum by a fast Fourier transform. The full width at half maximum was read from the frequency spectrum. We obtained the bandwidth using:

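$$\text{Bandwidth} = \frac{\text{full width at half maximum}}{\text{centre frequency}} \times 100\% \tag{6}$$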
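The pulse-echo bandwidth measurement can be sketched as follows: transform the echo with a fast Fourier transform, find the band in which the amplitude spectrum is at least half of its peak, and divide the band width by the centre frequency. The sketch uses a small radix-2 transform to stay within the standard library, takes the centre frequency as the midpoint of the band edges, and tests on a synthetic Gaussian pulse. All of these choices and names are assumptions for illustration, not the processing used in this study.

```python
import cmath
import math


def fft(x):
    """Recursive radix-2 FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return list(x)
    even = fft(x[0::2])
    odd = fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        tw = cmath.exp(-2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + tw
        out[k + n // 2] = even[k] - tw
    return out


def fractional_bandwidth(signal, fs):
    """FWHM of the amplitude spectrum divided by the band-centre frequency."""
    n = len(signal)
    spectrum = [abs(v) for v in fft(signal)[: n // 2]]  # positive frequencies
    half = 0.5 * max(spectrum)
    above = [k for k, a in enumerate(spectrum) if a >= half]
    f_low = above[0] * fs / n
    f_high = above[-1] * fs / n
    return (f_high - f_low) / (0.5 * (f_low + f_high))


def gaussian_pulse(f0, frac_bw, fs, n):
    """Synthetic echo whose amplitude spectrum has the requested FWHM."""
    sigma_f = frac_bw * f0 / (2.0 * math.sqrt(2.0 * math.log(2.0)))
    sigma_t = 1.0 / (2.0 * math.pi * sigma_f)
    pulse = []
    for i in range(n):
        t = (i - n / 2) / fs
        pulse.append(math.exp(-0.5 * (t / sigma_t) ** 2) * math.cos(2.0 * math.pi * f0 * t))
    return pulse
```

A synthetic 3-MHz pulse built with a 55% fractional bandwidth should return a value close to 0.55, limited by the frequency-bin spacing `fs / n`.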

Contrast sensitivity represents the capability of the device to differentiate objects with different brightness contrasts20. The contrast sensitivity was tested with the greyscale objects. The contrast sensitivity is defined as the contrast-to-noise ratio (CNR) of the objects having certain contrasts to the background in the B-mode image:

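$$\text{CNR} = \frac{|\mu_{\text{in}} - \mu_{\text{out}}|}{\sqrt{\sigma_{\text{in}}^{2} + \sigma_{\text{out}}^{2}}} \tag{7}$$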

in which μin and σin are the mean and the standard deviation of pixel intensity within the object, and μout and σout are the mean and the standard deviation of pixel intensity of the background.
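A minimal sketch of the contrast-to-noise ratio calculation is shown below. It assumes the widely used form in which the absolute difference of the two means is divided by the square root of the sum of the two variances, and it uses the population standard deviation over all pixels in each region; the function name and inputs are illustrative.

```python
import math
import statistics


def cnr(object_pixels, background_pixels):
    """Contrast-to-noise ratio between an object and its background."""
    mu_in = statistics.mean(object_pixels)
    mu_out = statistics.mean(background_pixels)
    sigma_in = statistics.pstdev(object_pixels)
    sigma_out = statistics.pstdev(background_pixels)
    return abs(mu_in - mu_out) / math.sqrt(sigma_in ** 2 + sigma_out ** 2)
```

A larger value means that the object stands out more clearly from the background relative to the pixel-level fluctuations in both regions.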

The insertion loss is defined as the energy loss during the transmission and receiving. It was tested in water with a quartz crystal, a function generator with an output impedance of 50 Ω and an oscilloscope (Rigol DS1104). First, the transducer received an excitation in the form of a tone burst of a 3-MHz sine wave from the function generator. Then the same transducer received the echo from the quartz crystal. Given the 1.9-dB energy loss of the transmission into the quartz crystal and the 2.2 × 10−4 dB (mm MHz)−1 attenuation of water, the insertion loss could be calculated as:

| 8 |

Simulation of the acoustic field

The simulation computes the root mean square of the acoustic pressure at each point in the defined simulation field. The root mean square is defined in the equation below and gives an average acoustic pressure over a certain time duration, which is pre-defined in a packaged function of the software. In the equation, x_i is the simulated acoustic pressure at the ith time step and N is the number of time steps in that duration.

$$x_{\text{RMS}} = \sqrt{\frac{1}{N}\sum_{i=1}^{N} x_i^{2}} \tag{9}$$
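The root mean square at a single field point can be sketched directly from its definition: the square root of the mean of the squared pressure samples. The function name is illustrative, and the samples stand for the simulated pressure values over the chosen time window.

```python
import math


def rms(pressure_samples):
    """Root mean square of a sequence of pressure samples."""
    n = len(pressure_samples)
    return math.sqrt(sum(x * x for x in pressure_samples) / n)
```

For a sinusoid sampled over whole periods, this returns the amplitude divided by the square root of two.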

Figure 2c is the simulated root mean square of the transmitted acoustic pressure field by the orthogonal transducers. The simulation was done using the MATLAB UltraSound Toolbox67. Each one-dimensional phased array in the orthogonal transducers gives a sector-shaped acoustic pressure field. The simulation merges two such sector-shaped acoustic pressure fields. The imaging procedure was done with the same parameters as the simulations.

In the simulation, we defined the transducer parameters first: the centre frequency of the transducers as 3 MHz, the width of the transducers as 0.3 mm, the length of the transducers as 2.3 mm, the pitch of the array as 0.4 mm, the number of elements as 32 and the bandwidth of the transducers as 55%. Then we defined wide-beam compounding (Supplementary Fig. 13) as the transmission method: 97 transmission angles, from −37.5° to +37.5°, with a step size of 0.78°. Then the acoustic pressure field was the overall effect of the 97 transmissions. Finally, we defined the computation area: −8 mm to +8 mm in the lateral direction, −6 mm to +6 mm in the elevational direction and 0 mm to 140 mm in the axial direction.
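The stated transmission angles can be checked with a short sketch: 97 evenly spaced angles spanning −37.5° to +37.5° imply a step of 75°/96, which is 0.78125° and rounds to the quoted 0.78°. The function name is illustrative.

```python
def steering_angles(start_deg=-37.5, stop_deg=37.5, count=97):
    """Evenly spaced steering angles and the step between them."""
    step = (stop_deg - start_deg) / (count - 1)
    return [start_deg + i * step for i in range(count)], step
```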

Online content

Any methods, additional references, Nature Portfolio reporting summaries, source data, extended data, supplementary information, acknowledgements, peer review information; details of author contributions and competing interests; and statements of data and code availability are available at 10.1038/s41586-022-05498-z.

Supplementary information

This file contains Supplementary Discussions 1–11, Supplementary Figures 1–34, Supplementary Tables 1–5 and Supplementary References.

Supplementary Video 1: Cardiac long- and short-axis views imaged by an orthogonal array.

Supplementary Video 2: Cardiac apical four- and two-chamber views imaged by an orthogonal array.

Supplementary Video 3: Continuous cardiac imaging during rest, exercise and recovery.

Supplementary Video 4: Left ventricle segmentation results by FCN-32.

Supplementary Video 5: Imaging-guided biopsy on a phantom by an orthogonal array.

Acknowledgements

We thank Z. Wu, R. Chen and W. Zhao for guidance and discussions on experiments. We thank E. Echegaray, M. Kraushaar, X. Guo and Y. Hewei for testing and consultation of echocardiography. This work was supported by the National Institutes of Health (NIH) (1R21EB025521-01, 1R21EB027303-01A1, 3R21EB027303-02S1 and 1R01EB033464-01). The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIH. All bio-experiments were conducted in accordance with the ethical guidelines of the NIH and with the approval of the Institutional Review Board of the University of California, San Diego.

Author contributions

H. Hu, H. Huang, M. Li, X.G. and S. Xu designed the research. H. Hu, H. Huang, M. Li and L.Y. performed the experiments. X.G., R.Q. and M. Li designed and trained the neural network. H. Hu, H. Huang, M. Li and Y.M. performed the data processing and simulations. H. Hu, H. Huang and S. Xu analysed the data. H. Hu, H. Huang, M. Li, R.S.W., R.Q., S. Xiang, J.W. and S. Xu wrote the paper. All authors provided constructive and valuable feedback on the manuscript.

Peer review

Peer review information

Nature thanks David Ouyang, Roger Zemp and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Data availability

All data are available in the manuscript or Supplementary Information.

Code availability

The code that produced the findings of this study is available at https://github.com/UCSD-XuGroup/Wearable-Cardiac-Ultrasound-Imager.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

These authors contributed equally: Hongjie Hu, Hao Huang, Mohan Li, Xiaoxiang Gao

Extended data is available for this paper at 10.1038/s41586-022-05498-z.

Supplementary information The online version contains supplementary material available at 10.1038/s41586-022-05498-z.

References

- 1. Levick, J. R. An Introduction to Cardiovascular Physiology (Butterworth-Heinemann, 1991).

- 2. Yazdanyar A, Newman AB. The burden of cardiovascular disease in the elderly: morbidity, mortality, and costs. Clin. Geriatr. Med. 2009;25:563–577. doi: 10.1016/j.cger.2009.07.007.

- 3. Ouyang D, et al. Video-based AI for beat-to-beat assessment of cardiac function. Nature. 2020;580:252–256. doi: 10.1038/s41586-020-2145-8.

- 4. Jozwiak M, Monnet X, Teboul JL. Monitoring: from cardiac output monitoring to echocardiography. Curr. Opin. Crit. Care. 2015;21:395–401. doi: 10.1097/MCC.0000000000000236.

- 5. Frahm J, Voit D, Uecker M. Real-time magnetic resonance imaging: radial gradient-echo sequences with nonlinear inverse reconstruction. Invest. Radiol. 2019;54:757–766. doi: 10.1097/RLI.0000000000000584.

- 6. Commandeur F, Goeller M, Dey D. Cardiac CT: technological advances in hardware, software, and machine learning applications. Curr. Cardiovasc. Imaging Rep. 2018;11:19. doi: 10.1007/s12410-018-9459-z.

- 7. Angelidis G, et al. SPECT and PET in ischemic heart failure. Heart Fail. Rev. 2017;22:243–261. doi: 10.1007/s10741-017-9594-7.

- 8. Efimov IR, Nikolski VP, Salama G. Optical imaging of the heart. Circ. Res. 2004;95:21–33. doi: 10.1161/01.RES.0000130529.18016.35.

- 9. Gargesha M, Jenkins MW, Wilson DL, Rollins AM. High temporal resolution OCT using image-based retrospective gating. Opt. Express. 2009;17:10786–10799. doi: 10.1364/oe.17.010786.

- 10. Wang RY, et al. High-resolution image reconstruction for portable ultrasound imaging devices. EURASIP J. Adv. Signal Process. 2019;2019:56.

- 11. Baribeau Y, et al. Handheld point-of-care ultrasound probes: the new generation of POCUS. J. Cardiothorac. Vasc. Anesth. 2020;34:3139–3145. doi: 10.1053/j.jvca.2020.07.004.

- 12. Zimetbaum PJ, Josephson ME. Use of the electrocardiogram in acute myocardial infarction. N. Engl. J. Med. 2003;348:933–940. doi: 10.1056/NEJMra022700.

- 13. Alihanka J, Vaahtoranta K, Saarikivi I. A new method for long-term monitoring of the ballistocardiogram, heart rate, and respiration. Am. J. Physiol. 1981;240:R384–R392. doi: 10.1152/ajpregu.1981.240.5.R384.

- 14. García-González, M. A., Argelagós-Palau, A., Fernández-Chimeno, M. & Ramos-Castro, J. in Computing in Cardiology 2013 461–464 (IEEE, 2014).

- 15. Elgendi M. On the analysis of fingertip photoplethysmogram signals. Curr. Cardiol. Rev. 2012;8:14–25. doi: 10.2174/157340312801215782.

- 16. Isaacson D, Mueller JL, Newell JC, Siltanen S. Imaging cardiac activity by the D-bar method for electrical impedance tomography. Physiol. Meas. 2006;27:S43–S50. doi: 10.1088/0967-3334/27/5/S04.

- 17. Schiller NB, et al. Recommendations for quantitation of the left ventricle by two-dimensional echocardiography. J. Am. Soc. Echocardiogr. 1989;2:358–367. doi: 10.1016/s0894-7317(89)80014-8.

- 18. Hu H, et al. Stretchable ultrasonic transducer arrays for three-dimensional imaging on complex surfaces. Sci. Adv. 2018;4:eaar3979. doi: 10.1126/sciadv.aar3979.

- 19. Wang C, et al. Monitoring of the central blood pressure waveform via a conformal ultrasonic device. Nat. Biomed. Eng. 2018;2:687–695. doi: 10.1038/s41551-018-0287-x.

- 20. Shung, K. K. Diagnostic Ultrasound: Imaging and Blood Flow Measurements (CRC, 2005).

- 21. Huang ZL, et al. Three-dimensional integrated stretchable electronics. Nat. Electron. 2018;1:473–480.

- 22. Wu SJ, Yuk H, Wu J, Nabzdyk CS, Zhao X. A multifunctional origami patch for minimally invasive tissue sealing. Adv. Mater. 2021;33:e2007667. doi: 10.1002/adma.202007667.

- 23. Wu H, Shen G, Chen Y. A radiation emission shielding method for high intensity focus ultrasound probes. Biomed. Mater. Eng. 2015;26:S959–S966. doi: 10.3233/BME-151390.

- 24. Chen QP, et al. Ultrasonic inspection of curved structures with a hemispherical-omnidirectional ultrasonic probe via linear scan SAFT imaging. NDT E Int. 2022;129:102650.

- 25. Wang C, et al. Bioadhesive ultrasound for long-term continuous imaging of diverse organs. Science. 2022;377:517–523. doi: 10.1126/science.abo2542.

- 26. Wang C, et al. Continuous monitoring of deep-tissue haemodynamics with stretchable ultrasonic phased arrays. Nat. Biomed. Eng. 2021;5:749–758. doi: 10.1038/s41551-021-00763-4.

- 27. Montaldo G, Tanter M, Bercoff J, Benech N, Fink M. Coherent plane-wave compounding for very high frame rate ultrasonography and transient elastography. IEEE Trans. Ultrason. Ferroelectr. Freq. Control. 2009;56:489–506. doi: 10.1109/TUFFC.2009.1067.

- 28. Ghavami M, Ilkhechi AK, Zemp R. Flexible transparent CMUT arrays for photoacoustic tomography. Opt. Express. 2022;30:15877–15894. doi: 10.1364/OE.455796.

- 29. Xiao Y, Boily M, Hashemi HS, Rivaz H. High-dynamic-range ultrasound: application for imaging tendon pathology. Ultrasound Med. Biol. 2018;44:1525–1532. doi: 10.1016/j.ultrasmedbio.2018.03.004.

- 30. Zander D, et al. Ultrasound image optimization (“knobology”): B-mode. Ultrasound Int. Open. 2020;6:E14–E24. doi: 10.1055/a-1223-1134.

- 31. Kempski KM, Graham MT, Gubbi MR, Palmer T, Lediju Bell MA. Application of the generalized contrast-to-noise ratio to assess photoacoustic image quality. Biomed. Opt. Express. 2020;11:3684–3698. doi: 10.1364/BOE.391026.

- 32. Huang X, Lediju Bell MA, Ding K. Deep learning for ultrasound beamforming in flexible array transducer. IEEE Trans. Med. Imaging. 2021;40:3178–3189. doi: 10.1109/TMI.2021.3087450.

- 33. Cerqueira MD, et al. Standardized myocardial segmentation and nomenclature for tomographic imaging of the heart. A statement for healthcare professionals from the Cardiac Imaging Committee of the Council on Clinical Cardiology of the American Heart Association. Circulation. 2002;105:539–542. doi: 10.1161/hc0402.102975.

- 34. Feigenbaum H. Role of M-mode technique in today’s echocardiography. J. Am. Soc. Echocardiogr. 2010;23:240–257. doi: 10.1016/j.echo.2010.01.015.

- 35. Devereux RB, et al. Standardization of M-mode echocardiographic left ventricular anatomic measurements. J. Am. Coll. Cardiol. 1984;4:1222–1230. doi: 10.1016/s0735-1097(84)80141-2.

- 36. Armstrong WF, Pellikka PA, Ryan T, Crouse L, Zoghbi WA. Stress echocardiography: recommendations for performance and interpretation of stress echocardiography. J. Am. Soc. Echocardiogr. 1998;11:97–104. doi: 10.1016/s0894-7317(98)70132-4.

- 37. Rerych SK, Scholz PM, Newman GE, Sabiston DC, Jr, Jones RH. Cardiac function at rest and during exercise in normals and in patients with coronary heart disease: evaluation by radionuclide angiocardiography. Ann. Surg. 1978;187:449–464. doi: 10.1097/00000658-197805000-00002.

- 38. Little WC, Applegate RJ. Congestive heart failure: systolic and diastolic function. J. Cardiothorac. Vasc. Anesth. 1993;7:2–5. doi: 10.1016/1053-0770(93)90091-x.

- 39. Hill J, Timmis A. Exercise tolerance testing. Br. Med. J. 2002;324:1084–1087. doi: 10.1136/bmj.324.7345.1084.

- 40. Marwick, T. H. in Echocardiography (eds Nihoyannopoulos, P. & Kisslo, J.) 491–519 (Springer, 2018).

- 41. Hammermeister KE, Brooks RC, Warbasse JR. The rate of change of left ventricular volume in man: I. Validation and peak systolic ejection rate in health and disease. Circulation. 1974;49:729–738. doi: 10.1161/01.cir.49.4.729.

- 42. Pellikka PA, et al. Variability in ejection fraction measured by echocardiography, gated single-photon emission computed tomography, and cardiac magnetic resonance in patients with coronary artery disease and left ventricular dysfunction. JAMA Netw. Open. 2018;1:e181456. doi: 10.1001/jamanetworkopen.2018.1456.

- 43. Ghorbanzadeh O, et al. Evaluation of different machine learning methods and deep-learning convolutional neural networks for landslide detection. Remote Sens. 2019;11:196.

- 44. Bland JM, Altman DG. Statistical methods for assessing agreement between two methods of clinical measurement. Lancet. 1986;327:307–310.

- 45. Matheijssen NA, et al. Assessment of left ventricular volume and mass by cine magnetic resonance imaging in patients with anterior myocardial infarction intra-observer and inter-observer variability on contour detection. Int. J. Cardiovasc. Imaging. 1996;12:11–19. doi: 10.1007/BF01798113.

- 46. Fritzsche RG, Switzer TW, Hodgkinson BJ, Coyle EF. Stroke volume decline during prolonged exercise is influenced by the increase in heart rate. J. Appl. Physiol. 1999;86:799–805. doi: 10.1152/jappl.1999.86.3.799.

- 47. Pashaei V, et al. Flexible body-conformal ultrasound patches for image-guided neuromodulation. IEEE Trans. Biomed. Circuits Syst. 2020;14:305–318. doi: 10.1109/TBCAS.2019.2959439.

- 48. Kenny JS, et al. A novel, hands-free ultrasound patch for continuous monitoring of quantitative Doppler in the carotid artery. Sci. Rep. 2021;11:7780. doi: 10.1038/s41598-021-87116-y.

- 49. Sung, F. et al. in Proc. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition 1199–1208 (IEEE, 2018).

- 50. Kaelbling LP, Littman ML, Moore AW. Reinforcement learning: a survey. J. Artif. Intell. Res. 1996;4:237–285.

- 51. Lin MY, Hu HJ, Zhou S, Xu S. Soft wearable devices for deep-tissue sensing. Nat. Rev. Mater. 2022;7:850–869.

- 52. Jeong SH, et al. Liquid alloy printing of microfluidic stretchable electronics. Lab Chip. 2012;12:4657–4664. doi: 10.1039/c2lc40628d.

- 53. Kramer RK, Majidi C, Wood RJ. Masked deposition of gallium-indium alloys for liquid-embedded elastomer conductors. Adv. Funct. Mater. 2013;23:5292–5296.

- 54. Ladd C, So JH, Muth J, Dickey MD. 3D printing of free standing liquid metal microstructures. Adv. Mater. 2013;25:5081–5085. doi: 10.1002/adma.201301400.

- 55. Tabatabai A, Fassler A, Usiak C, Majidi C. Liquid-phase gallium–indium alloy electronics with microcontact printing. Langmuir. 2013;29:6194–6200. doi: 10.1021/la401245d.

- 56. Cheng S, Wu Z. Microfluidic electronics. Lab Chip. 2012;12:2782–2791. doi: 10.1039/c2lc21176a.

- 57. Sempionatto JR, et al. An epidermal patch for the simultaneous monitoring of haemodynamic and metabolic biomarkers. Nat. Biomed. Eng. 2021;5:737–748. doi: 10.1038/s41551-021-00685-1.

- 58. Liu S, Shah DS, Kramer-Bottiglio R. Highly stretchable multilayer electronic circuits using biphasic gallium-indium. Nat. Mater. 2021;20:851–858. doi: 10.1038/s41563-021-00921-8.

- 59. Ma Z, et al. Permeable superelastic liquid-metal fibre mat enables biocompatible and monolithic stretchable electronics. Nat. Mater. 2021;20:859–868. doi: 10.1038/s41563-020-00902-3.

- 60. Lopes PA, Santos BC, de Almeida AT, Tavakoli M. Reversible polymer-gel transition for ultra-stretchable chip-integrated circuits through self-soldering and self-coating and self-healing. Nat. Commun. 2021;12:4666. doi: 10.1038/s41467-021-25008-5.

- 61. Mi XH, Qin L, Liao QW, Wang LK. Electromechanical coupling coefficient and acoustic impedance of 1-1-3 piezoelectric composites. Ceram. Int. 2017;43:7374–7377.

- 62. Wang Z, et al. A flexible ultrasound transducer array with micro-machined bulk PZT. Sensors. 2015;15:2538–2547. doi: 10.3390/s150202538.

- 63. Hong C-H, et al. Lead-free piezoceramics – where to move on? J. Materiomics. 2016;2:1–24.

- 64. Zhu BP, et al. Sol–gel derived PMN–PT thick films for high frequency ultrasound linear array applications. Ceram. Int. 2013;39:8709–8714.

- 65. Li X, et al. 80-MHz intravascular ultrasound transducer using PMN-PT free-standing film. IEEE Trans. Ultrason. Ferroelectr. Freq. Control. 2011;58:2281–2288. doi: 10.1109/TUFFC.2011.2085.

- 66. Zhu B, et al. Lift-off PMN–PT thick film for high-frequency ultrasonic biomicroscopy. J. Am. Ceram. Soc. 2010;93:2929–2931. doi: 10.1111/j.1551-2916.2010.03873.x.

- 67. Shahriari S, Garcia D. Meshfree simulations of ultrasound vector flow imaging using smoothed particle hydrodynamics. Phys. Med. Biol. 2018;63:205011. doi: 10.1088/1361-6560/aae3c3.

Associated Data

Supplementary Materials

This file contains Supplementary Discussions 1–11, Supplementary Figures 1–34, Supplementary Tables 1–5 and Supplementary References.

Cardiac long and short axis views imaged by an orthogonal array.

Cardiac apical four- and two-chamber views imaged by an orthogonal array.

Continuous cardiac imaging during rest, exercise, and recovery.

Left ventricle segmentation results by FCN-32.

Imaging-guided biopsy on a phantom by an orthogonal array.

Data Availability Statement

All data are available in the manuscript or Supplementary Information.

The code that produced the findings of this study is available at https://github.com/UCSD-XuGroup/Wearable-Cardiac-Ultrasound-Imager.