Abstract

Background:

Online cognitive assessments are alternatives to in-clinic assessments.

Objectives:

We evaluated the relationship between online and in-clinic self-reported Everyday Cognition Scale (ECog) scores.

Methods:

In 94 Alzheimer’s Disease Neuroimaging Initiative and Brain Health Registry (ADNI-BHR) participants, we estimated associations between online and in-clinic Everyday Cognition using Bland-Altman plots and regression. In 472 ADNI participants, we estimated reliability of in-clinic Everyday Cognition completed six months apart using Bland-Altman plots and regression.

Results:

Online Everyday Cognition associations: Mean difference was 0.11 (95% limits of agreement: −0.41 to 0.64). In-clinic Everyday Cognition score increased by 0.81 for each online Everyday Cognition score unit increase (R2=0.60). In-clinic Everyday Cognition reliability: Mean difference was 0.01 (95% limits of agreement: −0.61 to 0.62). In-clinic Everyday Cognition score at six months increased by 0.79 for each unit increase in in-clinic Everyday Cognition score at enrollment (R2=0.61).

Conclusion:

Online Everyday Cognition closely corresponded with in-clinic Everyday Cognition, supporting validity of using online cognitive assessments to more efficiently facilitate Alzheimer’s disease research.

Keywords: Everyday cognition, Online research registry

1. INTRODUCTION

Clinical assessment of cognition and functional abilities plays an important role in identifying, diagnosing, and monitoring individuals at risk for cognitive decline, mild cognitive impairment (MCI), and dementia due to Alzheimer’s disease (AD). Problems in everyday function help predict those who will more rapidly decline and convert to dementia [1,2]. The Everyday Cognition Scale (ECog) is a clinical assessment of decline in instrumental activities of daily living that map to six cognitive domains [3]. ECog correlates well with functional and cognitive status, and is associated with clinical diagnosis and AD biomarker status [4,5]. However, many traditional cognitive assessments for clinical research are time consuming and expensive [6] because they are paper and pencil-based and are optimally conducted in a supervised clinical setting with an experienced and certified rater [7], which generates logistical challenges and financial barriers. In-person clinical assessments can deter broad participation in clinical studies and pose special barriers for individuals who do not live near a research clinic or have access to transportation [8]. These barriers reduce diversity in AD research.

One strategy to overcome these barriers is to leverage digital technologies to facilitate screening, assessment, and enrollment [9], such as through establishing online registries [10–12]. The Brain Health Registry (BHR) is an online registry at the University of California San Francisco (UCSF) that captures data remotely using online questionnaires and neuropsychological tests adapted from traditional clinical assessments to longitudinally monitor cognition and functional status [13]. There is emerging evidence for validity of BHR measures [5,14,15]. However, the validity of unsupervised measures obtained remotely online compared to traditional measures obtained in a supervised clinical setting has not been fully established.

In this study, we tested the hypothesis that online ECog closely corresponded with in-clinic ECog in 94 participants enrolled in both the Alzheimer’s Disease Neuroimaging Initiative (ADNI) and Brain Health Registry (BHR). The direct comparison of the traditional in-clinic measure of ECog and the adapted online measure of ECog is a novel step in assessing the validity of online measures of cognition and functional ability. We assessed how well the measure of self-reported ECog collected in BHR, an unsupervised online setting, agrees with and predicts the same measure of self-reported ECog collected in ADNI, a supervised clinical setting. Since the correspondence of in-clinic and online ECog measures could not logically exceed the test-retest reliability of the in-clinic ECog measure, we used this as a benchmark for validity of the online measure by comparing the associations between online ECog and in-clinic ECog to the test-retest reliability of in-clinic ECog.

2. METHODS

This study compared data collected in a supervised clinical setting from the Alzheimer’s Disease Neuroimaging Initiative (ADNI) to data collected remotely online from the Brain Health Registry (BHR). Data were obtained from the Alzheimer’s Disease Neuroimaging Initiative (ADNI) database (adni.loni.usc.edu). The ADNI was launched in 2003 as a public-private partnership, led by Principal Investigator Michael W. Weiner, MD. The primary goal of ADNI has been to test whether serial magnetic resonance imaging (MRI), positron emission tomography (PET), other biological markers, and clinical and neuropsychological assessment can be combined to measure the progression of mild cognitive impairment (MCI) and early Alzheimer’s disease (AD). During the four phases of the ADNI study (ADNI-1, ADNI-GO, ADNI-2, ADNI-3), participants were rolled over from previous phases for continued monitoring, while new participants were added with each phase [16]. Participants were diagnosed as cognitively normal, MCI, or with dementia due to AD according to ADNI inclusion criteria [17].

2.1. Participants:

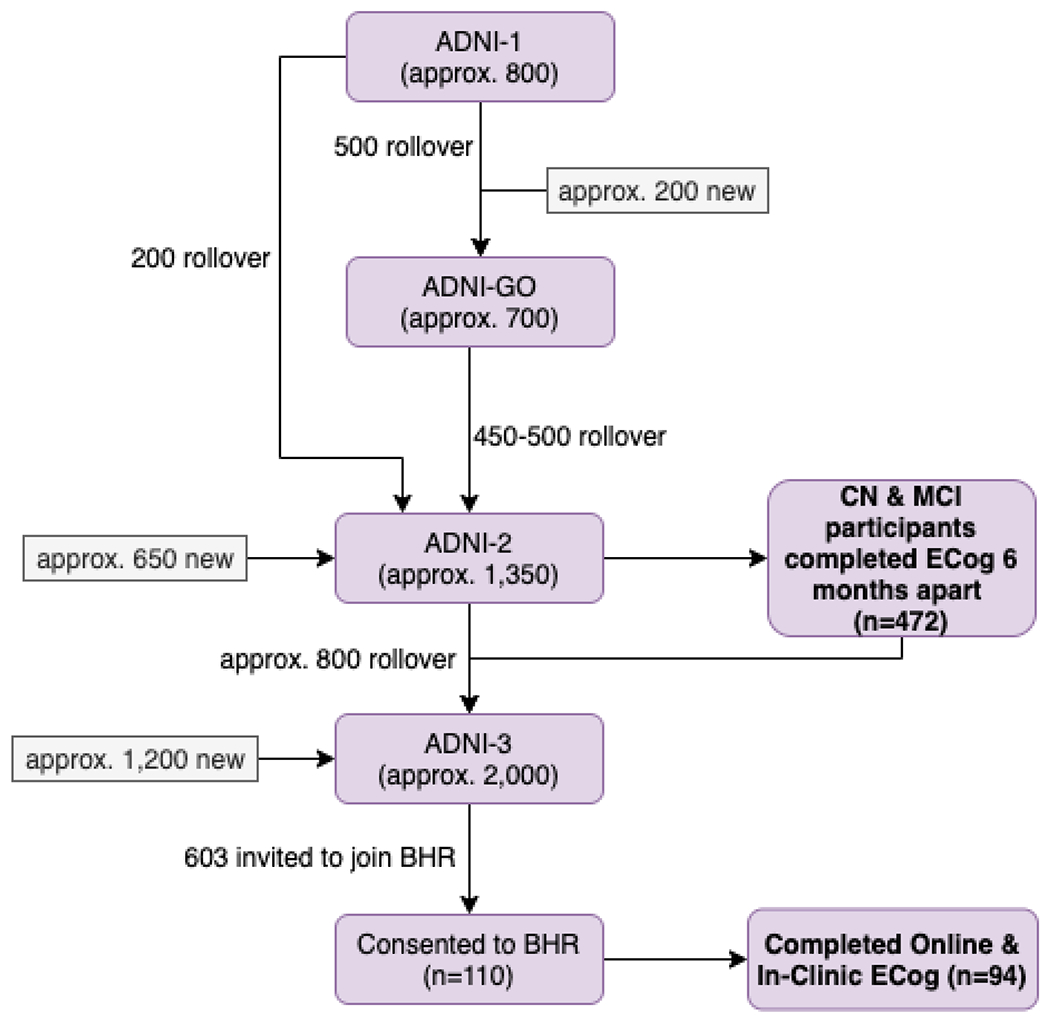

2.1.1. Participants enrolled in both ADNI and the Brain Health Registry to complete the self-reported online Everyday Cognition (n=94)

Existing ADNI-3 participants were invited to enroll in BHR to complete supplemental online assessments. Of the 603 ADNI-3 participants invited to join BHR, 110 consented and enrolled in BHR (referred to as ADNI-BHR participants), and 94 of those completed ECog both in-clinic and online and have evaluable linked data (Figure 1). All 94 participants who completed ECog both in-clinic and online, regardless of cognitive status, were included in this study due to the limited sample size.

Figure 1: Enrollment Flow Chart for ADNI and BHR.

Approximate (approx.) ADNI enrollment numbers were provided from http://adni.loni.usc.edu/study-design [16]. Bold boxes represent study samples used in this analysis.

2.1.2. Participants enrolled in ADNI to estimate test-retest reliability of the self-reported in-clinic Everyday Cognition (n=472)

Of the participants enrolled in ADNI-2 [16], 472 completed two separate in-clinic ECog assessments about six months apart (Figure 1). The average time between assessments was 189 (interquartile range (IQR) 106 to 352) days. An estimate of the test-retest reliability of self-reported in-clinic ECog was obtained from these 472 ADNI participants, of whom 250 were diagnosed as stable cognitively normal, 208 were diagnosed as stable MCI, 11 converted from cognitively normal to MCI, and 3 reverted from MCI to cognitively normal according to ADNI inclusion criteria [17].

2.2. Measurements

2.2.1. Measurements collected in-clinic in ADNI

Participants in ADNI were asked to complete the Everyday Cognition Scale (ECog) in-clinic. The ECog includes 39 items assessing an individual’s capability to perform everyday tasks in comparison to 10 years prior [3]. Ratings use a four-point Likert scale ranging from “better or no change” to “consistently much worse” to report changes in ability to perform tasks in six cognitively relevant domains: Memory, Language, Visuospatial Abilities, Planning, Organization, and Divided Attention [18]. The total ECog score, calculated as the sum of the ratings from all completed items divided by the number of items completed, ranged from 1 to 4 [19].
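The scoring rule described above can be sketched in a few lines of Python. This is an illustration only, not the scoring code used by ADNI or BHR: the function name `ecog_total_score` is hypothetical, and representing an uncompleted item as `None` is an assumption.

```python
from typing import Optional, Sequence


def ecog_total_score(ratings: Sequence[Optional[int]]) -> Optional[float]:
    """Sum of completed item ratings divided by the number of completed items.

    Assumption: an item that was not completed is represented as None.
    """
    completed = [r for r in ratings if r is not None]
    if not completed:
        return None  # no items answered, so no score can be computed
    if any(r < 1 or r > 4 for r in completed):
        raise ValueError("ECog item ratings must be between 1 and 4")
    return sum(completed) / len(completed)


# Hypothetical respondent (not study data): 39 items, two left blank.
example = [1] * 30 + [2] * 5 + [3] * 2 + [None] * 2
print(round(ecog_total_score(example), 2))  # prints 1.24 (46 / 37)
```

Because the denominator is the number of completed items, a respondent who skips items still receives a score on the same 1 to 4 scale.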

Participant age, gender (categorized as male or female), years of education (ranging from 0 to 20 years), self-reported race (categorized as American Indian or Alaskan Native, Asian, Native Hawaiian or other Pacific Islander, Black or African American, White, more than one race, or unknown), and ethnicity (categorized as Hispanic or Latino, not Hispanic or Latino, or unknown) were collected as part of their regular ADNI study visits.

2.2.2. Measurements collected online in the Brain Health Registry

Participants in the Brain Health Registry (BHR) were asked to complete an online adaptation of the ECog Scale in an unsupervised setting, such as a quiet space at home with an internet connection. All 39 of the in-clinic ECog items were used verbatim in the online ECog [14]. The online ECog was scored in the same way as the in-clinic ECog, resulting in a total online ECog score ranging from 1 to 4.

2.3. Statistical Analysis

2.3.1. Associations between self-reported online Everyday Cognition and in-clinic Everyday Cognition in ADNI-BHR participants (n=94)

We tested the hypothesis that online self-reported total ECog score corresponded well with in-clinic self-reported total ECog score using a Bland-Altman plot and linear regression. The nearest date of in-clinic ECog completion in ADNI was matched to the date the online ECog was completed at time of enrollment into BHR. The Bland-Altman plot was constructed and the mean difference was calculated to evaluate the method agreement between online ECog and in-clinic ECog. The 95% limits of agreement were calculated as the mean difference ±1.96 standard deviations of the differences. We would expect 95% of differences between measurements by the two methods to lie between these limits [20].
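As a minimal sketch of the agreement statistics described above, the mean difference and 95% limits of agreement can be computed from paired scores as follows. The function name `bland_altman` and the example values are hypothetical and are not study data; the difference is taken as in-clinic minus online, which is an assumption about the sign convention.

```python
from statistics import mean, stdev


def bland_altman(method_a, method_b, z=1.96):
    """Return (mean difference, lower limit, upper limit) for paired scores."""
    if len(method_a) != len(method_b):
        raise ValueError("paired measurements must have equal length")
    diffs = [a - b for a, b in zip(method_a, method_b)]
    d_bar = mean(diffs)
    sd = stdev(diffs)  # sample standard deviation of the paired differences
    return d_bar, d_bar - z * sd, d_bar + z * sd


# Hypothetical paired total scores (not study data).
in_clinic = [1.2, 1.5, 2.0, 1.1, 1.8, 2.4]
online = [1.1, 1.3, 2.1, 1.0, 1.6, 2.2]
d_bar, lower, upper = bland_altman(in_clinic, online)
print(f"mean difference {d_bar:.2f}, limits of agreement {lower:.2f} to {upper:.2f}")
```

The limits are symmetric around the mean difference by construction, so a nonzero mean difference shifts both limits in the same direction.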

To evaluate how well online ECog could predict in-clinic ECog, a linear regression model was fit with online ECog total score as the sole predictor and in-clinic ECog total score as the outcome. Linearity of the regression model was assessed using diagnostics including a Locally Weighted Scatterplot Smoother (LOWESS) and component plus residual plots [21]. The fit of the linear model was checked by fitting a linear spline as well as a restricted cubic spline to test for departure from linearity. Normality of the error term was assessed using diagnostic graphical methods, such as a kernel density estimate of the residuals (STATA’s kdensity) compared against the normal distribution and the curvature of the normal Q–Q plot. Constant variance was assessed using the shape of the residual versus fitted (RVF) plots. Influential points were identified from a boxplot using an absolute DFBETA statistic cutoff of 0.5. Prediction error was corrected for optimism with 10-fold cross-validation using STATA’s crossfold utility to avoid overfitting.
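To illustrate the idea behind the optimism correction, the sketch below fits a one-predictor linear model and computes an out-of-fold R2 with 10-fold cross-validation. It is not a reimplementation of STATA’s crossfold utility: the pooled definition of R2 used here (one minus the ratio of held-out squared error to total sum of squares), the function names, the random seed, and the simulated scores are all assumptions made for illustration.

```python
import random
from statistics import mean


def fit_line(x, y):
    """Ordinary least squares for y = intercept + slope * x."""
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope


def cross_validated_r2(x, y, k=10, seed=0):
    """Pooled out-of-fold R2: 1 - SSE / SST over all held-out predictions."""
    idx = list(range(len(x)))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]  # k roughly equal folds
    sse = 0.0
    for held_out in folds:
        held = set(held_out)
        train = [i for i in idx if i not in held]
        # Fit on the training folds only, then predict the held-out fold.
        intercept, slope = fit_line([x[i] for i in train], [y[i] for i in train])
        sse += sum((y[i] - (intercept + slope * x[i])) ** 2 for i in held_out)
    y_bar = mean(y)
    sst = sum((yi - y_bar) ** 2 for yi in y)
    return 1 - sse / sst


# Simulated scores (not study data): online score as predictor.
rng = random.Random(1)
online = [rng.uniform(1, 3) for _ in range(94)]
in_clinic = [0.4 + 0.8 * s + rng.gauss(0, 0.25) for s in online]
print(fit_line(online, in_clinic))
print(cross_validated_r2(online, in_clinic))
```

Because each prediction comes from a model that never saw that observation, the resulting R2 is usually a little lower than the in-sample R2, which is the sense in which it is corrected for optimism.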

2.3.2. Test-retest reliability of self-reported in-clinic Everyday Cognition in ADNI participants (n=472)

We assessed the test-retest reliability of self-reported Everyday Cognition collected in a clinic from ADNI participants who completed two separate in-clinic ECog assessments about six months apart using the intraclass correlation coefficient (ICC), a Bland-Altman plot, and linear regression. The Bland-Altman plot and 95% limits of agreement, calculated as the mean difference ±1.96 standard deviations of the differences, were constructed to evaluate the agreement between in-clinic ECog at time of enrollment into ADNI and in-clinic ECog at six months. The linear regression model was fit with in-clinic ECog total score at time of enrollment into ADNI as the sole predictor and in-clinic ECog total score at six months as the outcome. The same methods used to evaluate the first model for linearity, normality, constant variance, and influential points were applied. Prediction error was corrected for optimism with 10-fold cross-validation using STATA’s crossfold utility to avoid overfitting.

We compared the Bland-Altman results assessing the method agreement between online and in-clinic ECog to the Bland-Altman results assessing the repeatability of in-clinic ECog. We evaluated prediction performance of the linear regression models using R2 and compared the R2 result from the estimated test-retest reliability of in-clinic ECog to the R2 result from the prediction of in-clinic ECog using online ECog. Statistical analyses were performed using STATA (version 16.1).

3. RESULTS

3.1. Associations between self-reported online Everyday Cognition and in-clinic Everyday Cognition in ADNI-BHR participants (n=94)

94 participants were enrolled in ADNI-BHR and completed self-reported ECog both online and in-clinic. The median and interquartile range (IQR) of age for this sample was 75 (71 to 80) years, 61% of the sample was female, and 95% identified as white (Table 1). 77% of this sample was diagnosed as cognitively normal and 22% was diagnosed with MCI.

Table 1.

Characteristics of participants

| Variable | ADNI: Completed in-clinic ECog 6 mo. apart (N=472) | ADNI-BHR: Invited (N=603) | ADNI-BHR: Non-consented (N=493) | ADNI-BHR: Consented (N=110) | ADNI-BHR: Completed ECog online & in-clinic (N=94) |
|---|---|---|---|---|---|
| Demographics | | | | | |
| Age years, median (IQR) | 80 (64-97) | 76 (70-82) | 76 (70-82) | 76 (70-81) | 75 (71-80) |
| Education years, median (IQR) | 16 (11-20) | 17 (15-18) | 17 (15-18) | 17 (16-19) | 18 (16-19) |
| Female (%) | 233 (49%) | 316 (52%) | 250 (51%) | 66 (60%) | 57 (61%) |
| Race | | | | | |
| White (%) | 435 (92%) | 542 (90%) | 443 (90%) | 99 (90%) | 89 (95%) |
| Black or African American (%) | 23 (5%) | 31 (5%) | 23 (5%) | 8 (7.3%) | 3 (3%) |
| Asian (%) | 6 (1.4%) | 14 (2.3%) | 12 (2%) | 2 (1.8%) | 1 (1%) |
| More than one race (%) | 5 (1%) | 13 (2.2%) | 12 (2%) | 1 (0.9%) | 1 (1%) |
| American Indian or Alaskan Native (%) | 1 (0.2%) | 1 (0.2%) | 1 (0.2%) | - | - |
| Native Hawaiian or Other Pacific Islander (%) | 1 (0.2%) | - | - | - | - |
| Unknown (%) | 1 (0.2%) | 2 (0.3%) | 2 (0.4%) | - | - |
| Ethnicity | | | | | |
| Hispanic or Latino (%) | 15 (3%) | 26 (4.3%) | 23 (5%) | 3 (2.7%) | 2 (2%) |
| Not Hispanic or Latino (%) | 454 (96%) | 575 (95.4%) | 468 (95%) | 107 (97.3%) | 92 (98%) |
| Unknown (%) | 3 (0.6%) | 2 (0.3%) | 2 (0.4%) | - | - |
| Diagnosis | | | | | |
| Cognitively Normal (%) | 253 (54%) | 357 (59.2%) | 274 (55.6%) | 83 (75.5%) | 72 (77%) |
| Mild Cognitive Impairment (%) | 219 (46%) | 202 (33.5%) | 177 (36%) | 25 (22.7%) | 21 (22%) |
| Dementia (%) | - | 44 (7.3%) | 42 (8.5%) | 2 (1.8%) | 1 (1%) |
| Computer Confidence* | | | | | |
| Usually Needs Help (%) | - | - | - | - | 3 (4%) |
| Depends on Task (%) | - | - | - | - | 34 (43%) |
| Confident (%) | - | - | - | - | 43 (54%) |

*Missing values in the Computer Confidence variable

Abbreviations: IQR, interquartile range; ADNI, Alzheimer’s Disease Neuroimaging Initiative; BHR, Brain Health Registry; mo., months

Data presented as median (IQR) or number (%)

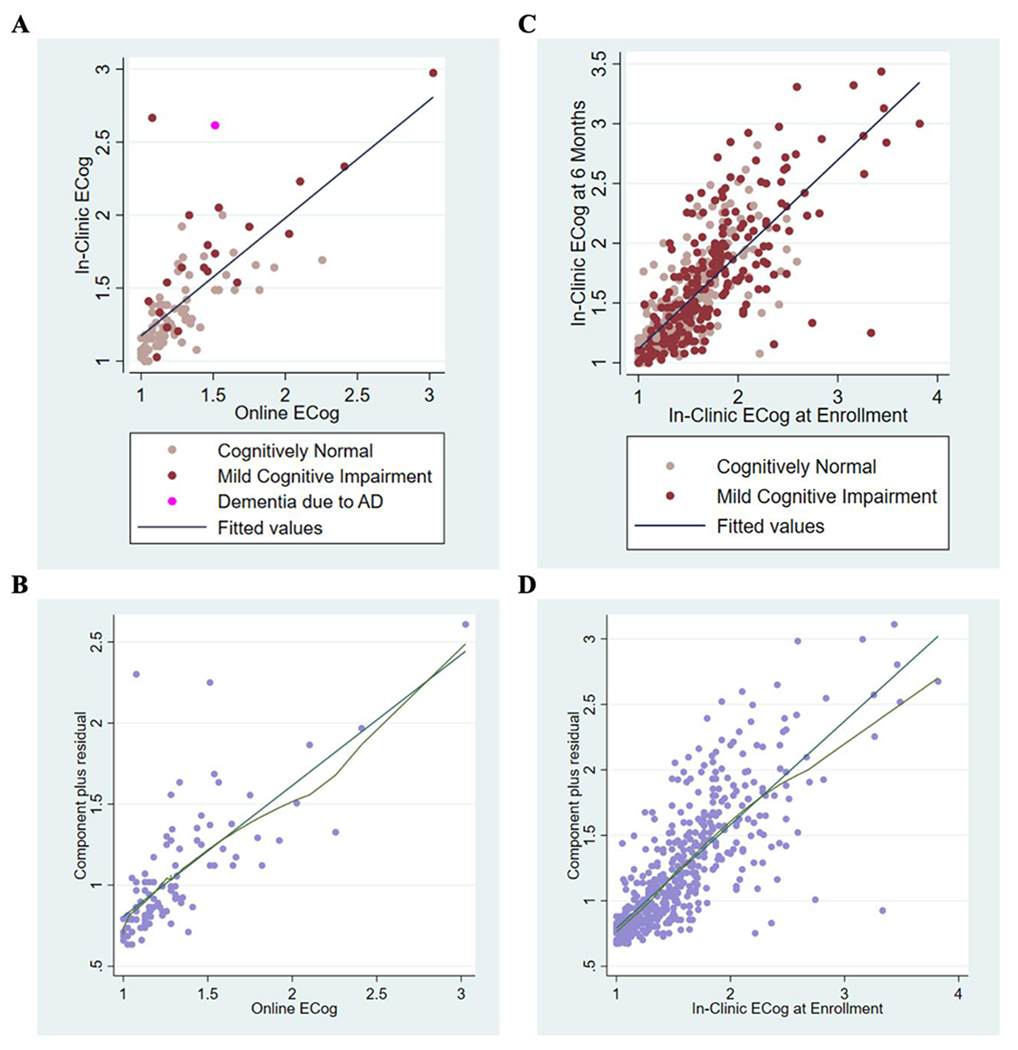

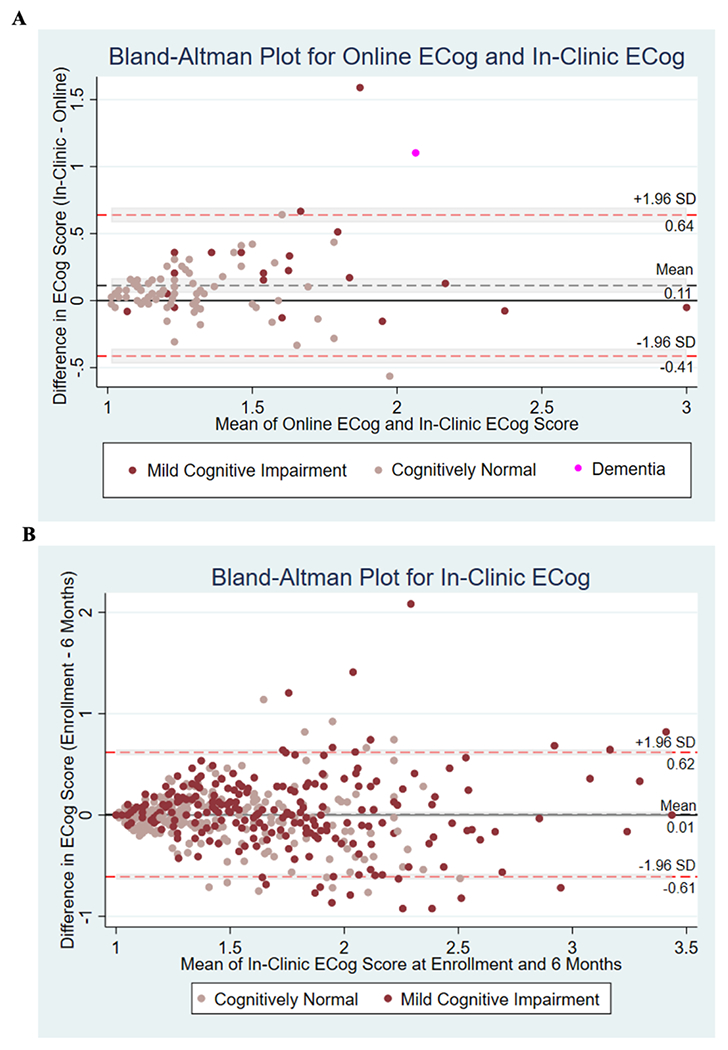

Out of the 94 participants enrolled in ADNI-BHR and included in this analysis, 67 of the participants completed the in-clinic ECog first and 27 of the participants completed the online ECog first. The average absolute time between the online ECog completion and the in-clinic ECog completion was 24.5 (IQR 0 to 112) days. A scatterplot showed a strong association between self-reported online ECog total scores and self-reported in-clinic ECog total scores (Figure 2A). The Bland-Altman plot (Figure 3A) showed that average in-clinic ECog scores were a little higher than online ECog scores (mean difference: 0.11 (95% CI 0.06 to 0.17)) and the 95% limits of agreement were −0.41 (95% CI −0.47 to −0.36) to 0.64 (95% CI 0.58 to 0.69).

Figure 2: Scatterplots and Component Plus Residual Plots of Everyday Cognition Total Scores.

(A) Self-report online Everyday Cognition and in-clinic Everyday Cognition (n=94) (B) LOWESS curve from the CPR plot for linear regression to predict in-clinic Everyday Cognition using self-report online Everyday Cognition (n=94) (C) Repeating self-report in-clinic Everyday Cognition at time of enrollment into ADNI and in-clinic Everyday Cognition at six months (n=472) (D) LOWESS curve from the CPR plot for in-clinic Everyday Cognition linear regression (n=472)

Figure 3: Bland-Altman Plots and 95% Limits of Agreement.

(A) Differences between measures of in-clinic Everyday Cognition and online Everyday Cognition against mean (Bland-Altman) Plot, with the limits of agreement (red dashed line), the mean difference (black dashed line), and shaded confidence intervals. (B) Differences between repeated measures of in-clinic Everyday Cognition against mean (Bland-Altman) Plot, with the limits of agreement (red dashed line), the mean difference (black dashed line), and shaded confidence intervals.

In linear regression analysis, we found that the mean in-clinic ECog total score increased by 0.81 (95% CI 0.65 to 0.97) for each unit increase in online ECog total score. The optimism-corrected R2 using 10-fold cross-validation was estimated as 0.60 (95% CI 0.41 to 0.78) (Table 2). The LOWESS curve from the component plus residual (CPR) plot agreed with the linear fit (Figure 2B), as did the restricted cubic spline model. When two influential points were omitted from the regression model, we found that the mean in-clinic ECog total score increased by 0.89 (95% CI 0.76 to 1.03) for each unit increase in online ECog total score. The adjusted R2 for this model was 0.65.
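The influential-point check can be illustrated with a leave-one-out sketch for a one-predictor model. It uses the scaled DFBETA form found in standard regression texts (the change in slope when an observation is dropped, divided by a leave-one-out standard error); whether this matches the exact computation used in the analysis is an assumption, and the data below are hypothetical rather than study data.

```python
from math import sqrt
from statistics import mean


def ols(x, y):
    """Return (intercept, slope, residual sum of squares) for y ~ x."""
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    intercept = y_bar - slope * x_bar
    rss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    return intercept, slope, rss


def slope_dfbetas(x, y):
    """Scaled change in the slope when each observation is left out in turn."""
    n = len(x)
    x_bar = mean(x)
    sxx = sum((xi - x_bar) ** 2 for xi in x)  # from the full sample
    _, slope_full, _ = ols(x, y)
    out = []
    for i in range(n):
        x_loo, y_loo = x[:i] + x[i + 1:], y[:i] + y[i + 1:]
        _, slope_loo, rss_loo = ols(x_loo, y_loo)
        s_loo = sqrt(rss_loo / (n - 3))  # residual SD without observation i
        out.append((slope_full - slope_loo) / (s_loo / sqrt(sxx)))
    return out


# Hypothetical scores (not study data); the last pair is a deliberate outlier.
x = [1.0, 1.2, 1.5, 1.8, 2.0, 2.3, 2.6, 3.5]
y = [1.1, 1.2, 1.6, 1.7, 2.1, 2.2, 2.7, 1.5]
dfbetas = slope_dfbetas(x, y)
flagged = [i for i, d in enumerate(dfbetas) if abs(d) > 0.5]
print(flagged)
```

Refitting the model without the flagged observations, as was done for the sensitivity estimates reported above, shows how much the slope depends on them.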

Table 2.

Estimated regression coefficients of linear regression models

| Model/Variable | β | SE | p value | 95% CI (β) | 10-fold cross-validated R2 | 95% CI (R2) |
|---|---|---|---|---|---|---|
| Prediction of in-clinic ECog using online ECog model | - | - | - | - | 0.60 | 0.41 - 0.78 |
| Online ECog | 0.81 | 0.08 | < 0.01 | 0.65 - 0.97 | - | - |
| Intercept | 0.37 | 0.11 | 0.001 | 0.15 - 0.59 | - | - |
| Test-retest of in-clinic ECog model | - | - | - | - | 0.61 | 0.53 - 0.69 |
| ECog at Enrollment | 0.79 | 0.03 | < 0.01 | 0.73 - 0.85 | - | - |
| Intercept | 0.32 | 0.05 | < 0.01 | 0.23 - 0.42 | - | - |

Abbreviations: ECog, Everyday Cognition; SE, standard error; CI, confidence interval

3.2. Test-retest reliability of self-reported in-clinic Everyday Cognition in ADNI participants (n=472)

We estimated the test-retest reliability of self-reported in-clinic ECog using the sample of 472 ADNI participants who completed two separate in-clinic ECog assessments approximately six months apart. The median and interquartile range (IQR) of age for this sample was 80 (64 to 97) years, 49% of the sample was female, and 92% identified as white (Table 1). Of this sample, 54% was diagnosed as cognitively normal and 46% was diagnosed with MCI. A scatterplot showed a strong association between self-reported in-clinic ECog total scores at time of enrollment into ADNI-2 and at six months (Figure 2C), and the intraclass correlation (ICC) was estimated to be 0.71 (95% CI 0.62 to 0.79). The Bland-Altman plot (Figure 3B) showed that average in-clinic ECog scores at enrollment were marginally higher than in-clinic ECog scores at six months (mean difference: 0.01 (95% CI −0.02 to 0.03)), although the confidence interval included zero, and the 95% limits of agreement were −0.61 (95% CI −0.64 to −0.58) to 0.62 (95% CI 0.59 to 0.65). This indicated good test-retest reliability of the self-reported in-clinic ECog.

In linear regression analysis, we found that the mean in-clinic ECog total score at six months increased by 0.79 (95% CI 0.73 to 0.85) for each unit increase in in-clinic ECog total score collected at time of enrollment into ADNI. The optimism-corrected R2 using 10-fold cross-validation was estimated as 0.61 (95% CI 0.53 to 0.69) (Table 2). The LOWESS curve from the component plus residual (CPR) plot indicated slight departure from linearity starting around an in-clinic ECog score at enrollment of 2.5 (Figure 2D). This departure from linearity was confirmed by fitting a linear spline with a knot placed at an in-clinic ECog score of 2.5, as well as by fitting the restricted cubic spline. Using a linear spline regression model with a knot placed at an in-clinic ECog score of 2.5, we found that the mean in-clinic ECog total score at six months increased by 0.85 (95% CI 0.78 to 0.92) for each unit increase in in-clinic ECog total score at enrollment. The adjusted R2 was 0.61. When one influential point was omitted from the regression model, we found that the mean in-clinic ECog total score at six months increased by 0.82 (95% CI 0.77 to 0.88) for each unit increase in in-clinic ECog total score at enrollment. The adjusted R2 was 0.63.

4. DISCUSSION

The major finding of this study was that the online ECog closely corresponded with in-clinic ECog and may provide as much information as repeating in-clinic ECog. This is an important finding because it suggests that the remotely administered online ECog may be just as useful as the traditional in-clinic ECog. In the Bland-Altman plots, we found that the mean difference of 0.01 between in-clinic ECog scores completed approximately six months apart indicated better agreement compared to the mean difference of 0.11 between online and in-clinic ECog scores. However, we found that the 95% limits of agreement closely corresponded. For 95% of individuals, a measurement by in-clinic ECog would be between 0.41 units less and 0.64 units greater than a measurement by online ECog. Similarly, for 95% of individuals, a measurement by in-clinic ECog at time of enrollment would be between 0.61 units less and 0.62 units greater than a measurement by in-clinic ECog at six months.

The intraclass correlation (ICC) of the repeated in-clinic ECog was 0.71 (95% CI 0.62 to 0.79), meaning that 71% of the total variability in ECog was due to differences between subjects (true between-subject variability) and 29% was due to within-subject random measurement error or changes over the six-month follow-up. Our results from the prediction of in-clinic ECog using online ECog suggested that the ECog collected in an unsupervised online setting could predict the ECog collected in a supervised clinic setting. We used 10-fold cross-validation to avoid overfitting and give a realistic estimate of the usefulness of the online ECog. The optimism-corrected R2 of 0.60 (95% CI 0.41 to 0.78) showed that online ECog scores explained a moderate proportion, 60%, of the total variability of in-clinic ECog scores. The optimism-corrected R2 of the model used to predict in-clinic ECog using online ECog (R2=0.60, 95% CI 0.41 to 0.78) was similar to the optimism-corrected R2 of the model used to estimate the test-retest reliability of self-reported in-clinic ECog (R2=0.61, 95% CI 0.53 to 0.69). Based on this R2 comparison, online ECog corresponded well with in-clinic ECog and provided almost as much information as repeating the in-clinic ECog. These results support the realistic usefulness and clinical validity of online measures of cognition and functional ability in clinical research.
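The variance interpretation of the ICC given above can be written out explicitly. The expression below assumes a simple one-way decomposition of total variance into between-subject and within-subject components; the specific ICC form used in the analysis is not stated, so this is an illustrative sketch rather than the exact estimator.

```latex
\mathrm{ICC}
  = \frac{\sigma^2_{\text{between}}}{\sigma^2_{\text{between}} + \sigma^2_{\text{within}}}
  = 0.71
\qquad\Longrightarrow\qquad
\frac{\sigma^2_{\text{within}}}{\sigma^2_{\text{between}} + \sigma^2_{\text{within}}}
  = 1 - 0.71 = 0.29
```

Under this decomposition, the within-subject share combines random measurement error with any true change over the six-month interval, and the two cannot be separated with only two assessments per participant.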

There was evidence of cognitive impairment in both the ADNI sample used to estimate the test-retest reliability of self-reported in-clinic ECog (46% (219/472) MCI) and the ADNI-BHR sample used for the prediction of in-clinic ECog using online ECog (23% (22/94) MCI or dementia). Thus, the results from this study extend previous findings that unsupervised online measures [5,14,15] were associated with participant cognition and diagnosis along the CN to MCI continuum. The ADNI-BHR cohort used in this analysis is particularly valuable because its online data are linked to confirmed clinical data, including neuropsychological tests, clinical diagnosis, and AD biomarkers. In the future, the ADNI-BHR cohort can be used to study relationships between additional online and in-clinic variables. Since the cohort is followed longitudinally both in-clinic and online, it provides a unique opportunity to investigate online variables associated with disease progression and cognitive decline.

This study has limitations. First, in the ADNI-BHR cohort there is a selection bias for participants with computer and internet access and literacy, and the cohort overrepresents those identified as White and those with high educational attainment. Only 110 of the 603 invited ADNI participants (18%) consented to enroll in online BHR. Although these cohort characteristics will limit the external generalizability of these findings, the validation of online assessments could nonetheless expand access to alternative screening measures of cognitive and functional status at a much lower cost and encourage broad, diverse participation in AD clinical research studies, such as from those who do not have access to a memory clinic but have access to a computer. It will be crucial to validate online functional measures in a more diverse and highly characterized cohort in the future. Second, the test-retest reliability of in-clinic ECog relied on administrations given approximately six months apart. Since ECog assesses an individual’s capability to perform everyday tasks in comparison to activity levels ten years prior [3], it may be reasonable to estimate the reliability with a six-month interval. Third, the average time interval between ECog administrations differed between the test-retest analysis (approximately six months) and the online versus in-clinic analysis (24.5 days). In addition, the sequence of ECog administrations was not taken into account. We assumed that practice effects, if any, were the same. However, a person completing two assessments 24.5 days apart could perform differently from a person completing two assessments six months apart. In the future, a better estimate of test-retest reliability could be achieved with a design in which the sequence of online and in-clinic assessments is randomly assigned and the timing of assessments is kept consistent.

In conclusion, this study suggests that assessment of cognition and functional abilities collected in an unsupervised online setting provided as much information as repeating the assessment of cognition and functional abilities collected in a supervised clinical setting. We suggest that these results support the validity of leveraging a digital approach to overcome barriers in AD clinical research, by more effectively and broadly facilitating recruitment, screening, and monitoring activities in clinical trials aimed to develop new treatments and prevention strategies for AD and related dementias. The validation of remotely collected online cognitive functional assessments indicative of meaningful clinical measures could expand the methods used to identify, diagnose, and monitor individuals at risk for cognitive decline and have impactful and scalable applications in brain aging and AD clinical research.

5. ACKNOWLEDGEMENTS

The authors would like to acknowledge the participants who participated in the ADNI study, as well as the individuals, including faculty and staff, who contributed to the study planning and operations of the ADNI study. We would also like to acknowledge the contributions of all Brain Health Registry participants and all Brain Health Registry staff members.

6. FUNDING

Data collection and sharing for this project was funded by the Alzheimer’s Disease Neuroimaging Initiative (ADNI) (National Institutes of Health Grant U01 AG024904) and DOD ADNI (Department of Defense award number W81XWH-12-2-0012). ADNI is funded by the National Institute on Aging, the National Institute of Biomedical Imaging and Bioengineering, and through generous contributions from the following: AbbVie, Alzheimer’s Association; Alzheimer’s Drug Discovery Foundation; Araclon Biotech; BioClinica, Inc.; Biogen; Bristol-Myers Squibb Company; CereSpir, Inc.; Cogstate; Eisai Inc.; Elan Pharmaceuticals, Inc.; Eli Lilly and Company; EuroImmun; F. Hoffmann-La Roche Ltd and its affiliated company Genentech, Inc.; Fujirebio; GE Healthcare; IXICO Ltd.; Janssen Alzheimer Immunotherapy Research & Development, LLC.; Johnson & Johnson Pharmaceutical Research & Development LLC.; Lumosity; Lundbeck; Merck & Co., Inc.; Meso Scale Diagnostics, LLC.; NeuroRx Research; Neurotrack Technologies; Novartis Pharmaceuticals Corporation; Pfizer Inc.; Piramal Imaging; Servier; Takeda Pharmaceutical Company; and Transition Therapeutics. The Canadian Institutes of Health Research is providing funds to support ADNI clinical sites in Canada. Private sector contributions are facilitated by the Foundation for the National Institutes of Health (www.fnih.org). The grantee organization is the Northern California Institute for Research and Education, and the study is coordinated by the Alzheimer’s Therapeutic Research Institute at the University of Southern California. ADNI data are disseminated by the Laboratory for Neuro Imaging at the University of Southern California.

Declarations of interest:

Taylor Howell has nothing to disclose. John Neuhaus reports grants from the National Institutes of Health (NIH). M. Maria Glymour reports grants from the National Institutes of Health (NIH) and the Robert Wood Johnson Foundation. Michael W Weiner reports grants from the National Institutes of Health (NIH) (5U19AG024904-14; 1R01AG053798-01A1; R01 MH098062; U24 AG057437-01; 1U2CA060426-01; 1R01AG058676-01A1; and 1RF1AG059009-01) and the Department of Defense (DOD) (W81XWH-15-2-0070; 0W81XWH-12-2-0012; W81XWH-14-1-0462; and W81XWH-13-1-0259); served on advisory boards for Alzheon, Inc., Biogen, Cerecin, Dolby Family Ventures, Eli Lilly, Merck Sharp & Dohme Corp., Nestle/Nestec, and Roche, University of Southern California (USC); provided consulting to Baird Equity Capital, BioClinica, Cerecin, Inc., Cytox, Dolby Family Ventures, Duke University, FUJIFILM-Toyama Chemical (Japan), Garfield Weston, Genentech, Guidepoint Global, Indiana University, Japanese Organization for Medical Device Development, Inc. (JOMDD), Nestle/Nestec, NIH, Peerview Internal Medicine, Roche, T3D Therapeutics, University of Southern California (USC), and Vida Ventures; acted as a lecturer for The Buck Institute for Research on Aging; received funding for academic travel from USC; holds stock options with Alzeca, Alzheon Inc., and Anven; reports research support from the Patient-Centered Outcomes Research Institute (PCORI) (PPRN-1501-26817), California Department of Public Health (CDPH) (16-10054), University of Michigan (18-PAF01312), Siemens (444951-54249), Biogen (174552), Hillblom Foundation (2015-A-011-NET), Alzheimer’s Association (BHR-16-459161), the State of California (18-109929), Johnson & Johnson, Kevin and Connie Shanahan, General Electric (GE), VU Medical Center, Australian Catholic University (HBI-BHR), The Stroke Foundation, and the Veterans Administration. 
Rachel L Nosheny reports grants from the National Institutes of Health (NIH), Alzheimer’s Association, California Department of Public Health, and Genentech, Inc.

7. REFERENCES

- [1].Farias ST, Mungas D, Harvey DJ, Simmons A, Reed BR, DeCarli C. The Measurement of Everyday Cognition (ECog): Development and validation of a short form. Alzheimers Dement 2011;7:593–601. 10.1016/j.jalz.2011.02.007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [2].Farias ST, Cahn-Weiner DA, Harvey DJ, Reed BR, Mungas D, Kramer JH, et al. Longitudinal Changes in Memory and Executive Functioning are Associated with Longitudinal Change in Instrumental Activities of Daily Living in older adults. Clin Neuropsychol 2009;23:446–61. 10.1080/13854040802360558. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [3].Farias ST, Mungas D, Reed BR, Cahn-Weiner D, Jagust W, Baynes K, et al. The Measurement of Everyday Cognition (ECog): Scale Development and Psychometric Properties. Neuropsychology 2008;22:531–44. 10.1037/0894-4105.22.4.531. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [4].Rueda AD, Lau KM, Saito N, Harvey D, Risacher SL, Aisen PS, et al. Self-rated and informant-rated everyday function in comparison to objective markers of Alzheimer’s disease. Alzheimer’s & Dementia 2015;11:1080–9. 10.1016/jjalz.2014.09.002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [5].Nosheny RL, Camacho MR, Jin C, Neuhaus J, Truran D, Flenniken D, et al. Validation of online functional measures in cognitively impaired older adults. Alzheimer’s & Dementia 2020;16:1426–37. 10.1002/alz.12138. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [6].Cummings J, Aisen P, Barton R, Bork J, Doody R, Dwyer J, et al. Re-Engineering Alzheimer Clinical Trials: Global Alzheimer’s Platform Network. J Prev Alzheimers Dis 2016;3:114–20. 10.14283/jpad.2016.93.

- [7].Grill JD, Karlawish J. Addressing the challenges to successful recruitment and retention in Alzheimer’s disease clinical trials. Alzheimer’s Research & Therapy 2010;2:34. 10.1186/alzrt58.

- [8].The Robert Wood Johnson Foundation Commission to Build a Healthier America. Overcoming Obstacles to Health. 2008.

- [9].Vanbelle C, Hayduk R, Hughes L. Innovative Digital Patient Recruitment Strategies in Prodromal Alzheimer’s Disease Trials n.d.:8.

- [10].Reiman EM, Langbaum JBS, Fleisher AS, Caselli RJ, Chen K, Ayutyanont N, et al. Alzheimer’s Prevention Initiative: A Plan to Accelerate the Evaluation of Presymptomatic Treatments. J Alzheimers Dis 2011;26:321–9. 10.3233/JAD-2011-0059.

- [11].About TrialMatch | Alzheimer’s Association n.d. https://www.alz.org/alzheimers-dementia/research_progress/clinical-trials/about-clinical-trials (accessed August 10, 2019).

- [12].Watson JL, Ryan L, Silverberg N, Cahan V, Bernard MA. Obstacles And Opportunities In Alzheimer’s Clinical Trial Recruitment. Health Aff (Millwood) 2014;33:574–9. 10.1377/hlthaff.2013.1314.

- [13].Weiner MW, Nosheny R, Camacho M, Truran-Sacrey D, Mackin RS, Flenniken D, et al. The Brain Health Registry: An internet-based platform for recruitment, assessment, and longitudinal monitoring of participants for neuroscience studies. Alzheimer’s & Dementia 2018;14:1063–76. 10.1016/j.jalz.2018.02.021.

- [14].Nosheny RL, Camacho MR, Insel PS, Flenniken D, Fockler J, Truran D, et al. Online study partner-reported cognitive decline in the Brain Health Registry. Alzheimer’s & Dementia: Translational Research & Clinical Interventions 2018;4:565–74. 10.1016/j.trci.2018.09.008.

- [15].Mackin RS, Insel PS, Truran D, Finley S, Flenniken D, Nosheny R, et al. Unsupervised online neuropsychological test performance for individuals with mild cognitive impairment and dementia: Results from the Brain Health Registry. Alzheimer’s & Dementia: Diagnosis, Assessment & Disease Monitoring 2018;10:573–82. 10.1016/j.dadm.2018.05.005.

- [16].ADNI | Study Design n.d. http://adni.loni.usc.edu/study-design/ (accessed April 29, 2021).

- [17].Weiner M, Petersen R, Clinic M, Shaw LM, Trojanowski J, Toga A. Alzheimer’s Disease Neuroimaging Initiative 3 (ADNI3) Protocol Protocol Short Title: ADNI3 Protocol Number: ATRI-00 n.d.:48.

- [18].Farias ST, Park LQ, Harvey DJ, Simon C, Reed BR, Carmichael O, et al. Everyday Cognition in older adults. J Int Neuropsychol Soc 2013;19. 10.1017/S1355617712001609.

- [19].Farias ST, Mungas D, Harvey DJ, Simmons A, Reed BR, DeCarli C. The Measurement of Everyday Cognition (ECog): Development and validation of a short form. Alzheimers Dement 2011;7:593–601. 10.1016/j.jalz.2011.02.007.

- [20].Bland JM, Altman DG. Applying the right statistics: analyses of measurement studies. Ultrasound in Obstetrics & Gynecology 2003;22:85–93. 10.1002/uog.122.

- [21].Vittinghoff E, Glidden DV, Shiboski SC, McCulloch CE. Regression Methods in Biostatistics: Linear, Logistic, Survival, and Repeated Measures Models. 2nd ed. New York: Springer-Verlag; 2012. 10.1007/978-1-4614-1353-0.